
---
title: TensorLM-webui
emoji: 🐨
colorFrom: purple
colorTo: purple
sdk: gradio
sdk_version: 4.1.0
app_file: hf_launch.py
pinned: true
license: gpl-3.0
---

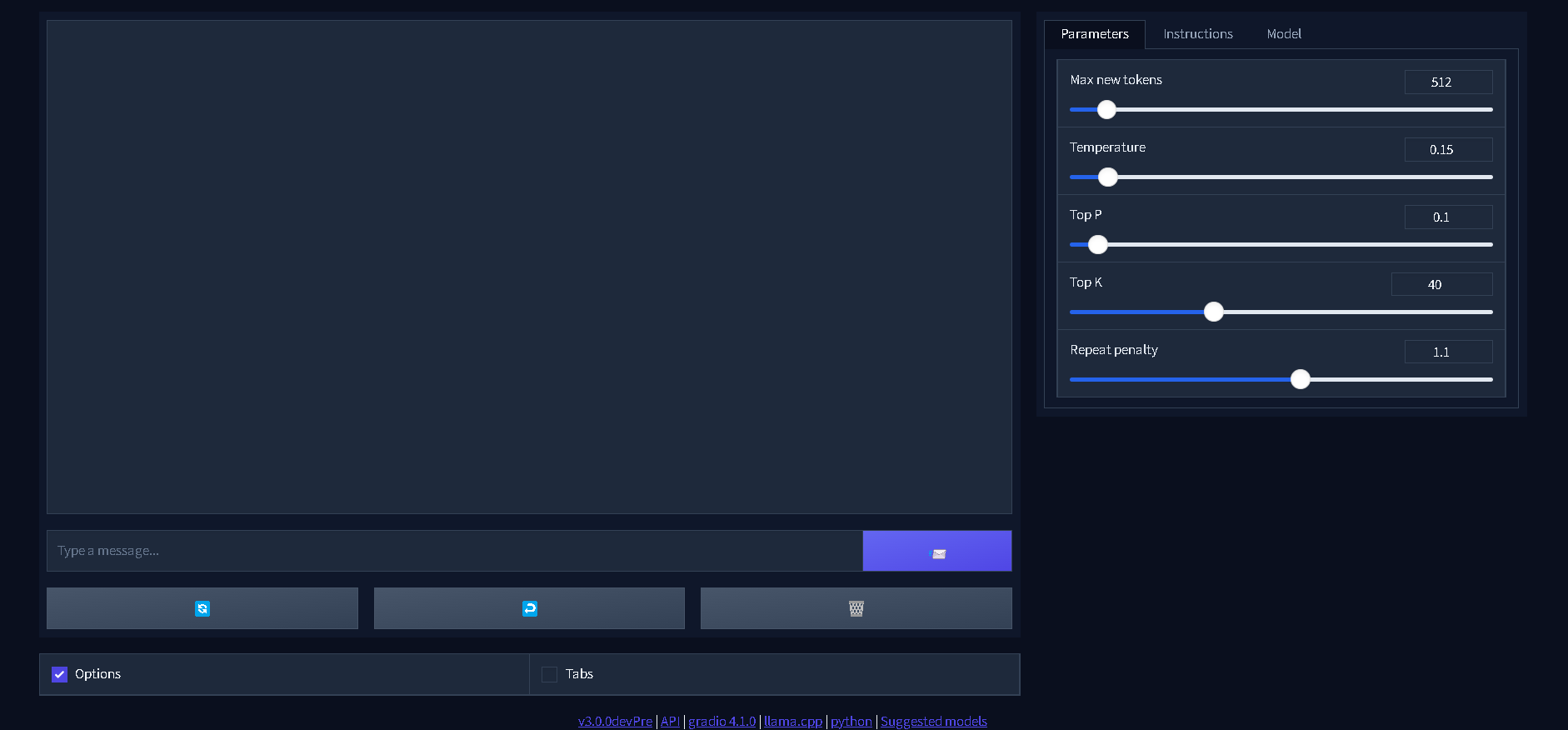

TensorLM - webui for LLM models

This is the Fooocus of text generation: like Fooocus in the world of Stable Diffusion, it offers the same ease of use and the same convenience.

This is a simple and modern Gradio webui for LLaMA-based LLMs in GGML format (.bin).

Navigation:

- Installing

- Presets

- Model downloading

- Args

Fast use

You can use this webui in the Colab cloud service:

Features

- Simple to use

- Comfortable to work with

- Light on system resources

- Beautiful and pleasant interface

Installing

On Windows

>>> Portable one-click package <<<

Step-by-step installation:

- Install Python 3.10.6 and Git

- Run: git clone https://github.com/ehristoforu/TensorLM-webui.git

- Run: cd TensorLM-webui

- Run: update_mode.bat (then enter 1 and 2)

- Run: start.bat

On macOS

Step-by-step installation:

- Install Python 3.10.6 and Git

- Run: git clone https://github.com/ehristoforu/TensorLM-webui.git

- Run: cd TensorLM-webui

- Run: python -m pip install -r requirements.txt

- Run: python webui.py

On Linux

Step-by-step installation:

- Install Python 3.10.6 and Git

- Run: git clone https://github.com/ehristoforu/TensorLM-webui.git

- Run: cd TensorLM-webui

- Run: python -m pip install -r requirements.txt

- Run: python webui.py
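
Before following the steps above, you can confirm the prerequisites with a few lines of Python. This is a minimal sketch and not part of TensorLM-webui: the helper name check_prerequisites is hypothetical, and it only assumes that git should be on PATH and that the guide targets Python 3.10.

```python
import shutil
import sys


def check_prerequisites() -> dict:
    """Report whether the prerequisites named in the install steps look available.

    Hypothetical helper for illustration; it is not shipped with TensorLM-webui.
    """
    return {
        # The guide recommends Python 3.10.6; only major.minor is compared here.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # shutil.which returns the path to git, or None if it is not on PATH.
        "git_on_path": shutil.which("git") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'yes' if ok else 'no'}")
```

Running it as a script prints one yes/no line per prerequisite.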

Presets

This app includes 23 default presets.

Thanks to @mustvlad for the system prompts!

You can create your own custom presets; the instructions are in the presets folder (as an .md file).
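
The actual preset format is described in the instructions file in the presets folder and is not reproduced here. Purely as an illustration, the sketch below assumes a hypothetical layout in which each preset is a <name>.md file whose whole text is the system prompt; the function load_presets and its exclude parameter are invented for this example, so check the real instructions before relying on this format.

```python
from pathlib import Path


def load_presets(folder: str, exclude: tuple = ()) -> dict:
    """Return {preset name: prompt text} for every .md file in `folder`.

    Hypothetical layout: one <name>.md file per preset, whose whole text
    is the system prompt. File stems listed in `exclude` are skipped.
    """
    presets = {}
    for path in sorted(Path(folder).glob("*.md")):
        if path.stem in exclude:
            continue  # e.g. an instructions file kept in the same folder
        # Strip surrounding whitespace so trailing newlines do not leak into prompts.
        presets[path.stem] = path.read_text(encoding="utf-8").strip()
    return presets
```

Because the instructions file also lives in the presets folder, pass its file stem in exclude so it is not treated as a preset.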

Model downloading

With this interface you don't need to scour the Internet for a compatible model: using the "Tabs" checkbox and the "ModelGet" tab, you can choose which model to download from our verified repository on HuggingFace.
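
If you ever want to fetch a model file by hand instead of through the ModelGet tab, Hugging Face serves repository files at URLs of the form https://huggingface.co/<repo_id>/resolve/<revision>/<filename>. The sketch below relies only on the Python standard library; the helper names, the "models" destination folder, and any repository ID you pass in are assumptions for illustration, not the project's verified repository or its real storage location.

```python
import urllib.request
from pathlib import Path
from urllib.parse import quote


def hf_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Direct-download URL for one file in a Hugging Face repository."""
    # quote() percent-encodes spaces etc. but keeps "/" so subfolder paths work.
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{quote(filename)}"


def download_model(repo_id: str, filename: str, dest_dir: str = "models",
                   revision: str = "main") -> Path:
    """Download `filename` into `dest_dir` (a hypothetical folder name)."""
    dest = Path(dest_dir) / Path(filename).name
    dest.parent.mkdir(parents=True, exist_ok=True)
    # urlretrieve writes the response body straight to the destination file.
    urllib.request.urlretrieve(hf_file_url(repo_id, filename, revision), str(dest))
    return dest
```

For example, download_model("some-user/some-ggml-model", "model.bin") would save models/model.bin; both names are placeholders.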

Args

To use args:

- On Windows: open start.bat in Notepad and change the line python webui.py to python webui.py [your args], for example python webui.py --inbrowser

- On macOS & Linux: run python webui.py followed by your args, i.e. python webui.py [your args], for example python webui.py --inbrowser

Args list

- --inbrowser

- --share

- --lowvram

- --debug

- --quiet
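
The flags above are passed on the command line to webui.py. The snippet below is not the project's actual argument handling: it is a minimal argparse sketch, assuming every flag is a simple on/off switch, that shows how such a list is typically declared and parsed. Help strings are left out because the flags' exact behaviour is not described here.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the documented flags as boolean switches (assumed behaviour)."""
    parser = argparse.ArgumentParser(prog="webui.py")
    for flag in ("--inbrowser", "--share", "--lowvram", "--debug", "--quiet"):
        # store_true: the attribute is False unless the flag is given.
        parser.add_argument(flag, action="store_true")
    return parser
```

With this sketch, build_parser().parse_args(["--inbrowser"]) sets inbrowser to True and leaves every other switch False, mirroring python webui.py --inbrowser.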

Forks

There are no forks yet 😔; perhaps you will be the first to significantly improve this application!

Citation

@software{ehristoforu_TensorLM-webui_2024,
  author = {ehristoforu},
  month = apr,
  title = {{TensorLM-webui}},
  url = {https://github.com/ehristoforu/TensorLM-webui},
  year = {2024}
}