
---
title: RetinaGAN
emoji: 😻
colorFrom: gray
colorTo: red
sdk: streamlit
sdk_version: 1.20.0
app_file: app.py
pinned: false
license: mit
---

RetinaGAN

Code Repository for: High-Fidelity Diabetic Retina Fundus Image Synthesis from Freestyle Lesion Maps

About

RetinaGAN is a two-step process for generating photo-realistic retinal fundus images from artificially generated or free-hand drawn semantic lesion maps.
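The two stages can be composed as a simple pipeline. The sketch below assumes each trained network is wrapped as a plain Python callable; `synthesize_fundus`, `lesion_generator` and `image_translator` are hypothetical names for illustration, not the repository's API.

```python
from typing import Callable, TypeVar

LesionMap = TypeVar("LesionMap")
Image = TypeVar("Image")


def synthesize_fundus(
    grade: int,
    seed: int,
    lesion_generator: Callable[[int, int], LesionMap],
    image_translator: Callable[[LesionMap], Image],
) -> tuple[LesionMap, Image]:
    """Hypothetical two-step pipeline.

    Step 1: sample a lesion map for the given DR grade and random seed.
    Step 2: translate that lesion map into a fundus image.
    """
    lesion_map = lesion_generator(grade, seed)
    fundus = image_translator(lesion_map)
    return lesion_map, fundus
```

A free-hand drawn lesion map would skip step 1 and be passed straight to `image_translator`, which is why keeping the two stages as separate callables is convenient.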

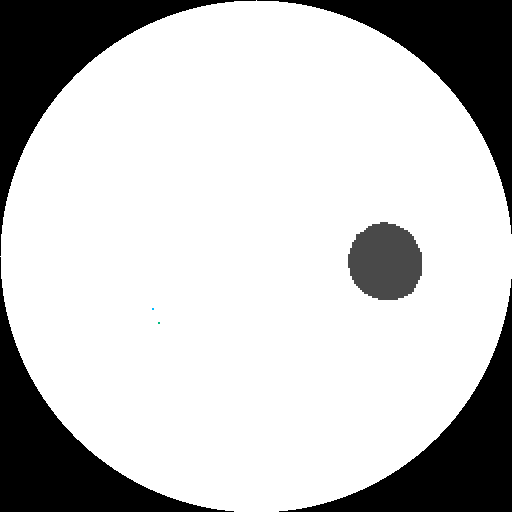

StyleGAN is modified to be conditional in order to synthesize pathological lesion maps for a specified DR grade (i.e., grades 0 to 4). The DR grades are defined by the International Clinical Diabetic Retinopathy (ICDR) disease severity scale: no apparent retinopathy; mild, moderate, and severe Non-Proliferative Diabetic Retinopathy (NPDR); and Proliferative Diabetic Retinopathy (PDR). The output of the network is a binary image with seven channels instead of class colors, to avoid ambiguity.
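A conditional generator needs the DR grade in a numeric form. One common choice, assumed here rather than taken from the paper, is a one-hot vector over the five ICDR grades; `ICDR_GRADES` and `grade_to_condition` are hypothetical names.

```python
# Hypothetical mapping of ICDR grades (0 to 4) to their clinical names.
ICDR_GRADES = {
    0: "No apparent retinopathy",
    1: "Mild NPDR",
    2: "Moderate NPDR",
    3: "Severe NPDR",
    4: "PDR",
}


def grade_to_condition(grade: int) -> list[float]:
    """Return a one-hot vector of length 5 encoding a DR grade.

    Assumption: the conditioning signal is a one-hot vector; the actual
    conditioning mechanism in RetinaGAN may differ.
    """
    if grade not in ICDR_GRADES:
        raise ValueError(f"DR grade must be 0 to 4, got {grade}")
    return [1.0 if g == grade else 0.0 for g in range(len(ICDR_GRADES))]
```

For example, `grade_to_condition(2)` (moderate NPDR) yields `[0.0, 0.0, 1.0, 0.0, 0.0]`.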

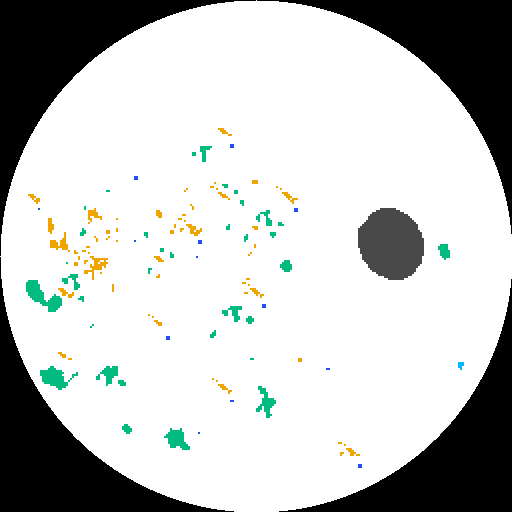

The generated label maps are then passed through SPADE, an image-to-image translation network, which turns them into photo-realistic retinal fundus images. The network takes one-hot encoded label maps as input.
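One-hot encoding a label map means turning an H x W map of class indices into one binary H x W channel per class, with exactly one channel set at each pixel. The sketch below uses plain Python lists for clarity; `one_hot_label_map` is a hypothetical helper, and the number and order of classes expected by the RetinaGAN SPADE model are not specified here.

```python
def one_hot_label_map(label_map: list[list[int]], num_classes: int) -> list[list[list[int]]]:
    """Convert an H x W map of class indices into num_classes binary H x W channels."""
    # Allocate num_classes independent all-zero H x W channels.
    channels = [[[0] * len(row) for row in label_map] for _ in range(num_classes)]
    for y, row in enumerate(label_map):
        for x, cls in enumerate(row):
            if not 0 <= cls < num_classes:
                raise ValueError(f"class index {cls} at ({y}, {x}) is out of range")
            # Mark this pixel in the channel of its class only.
            channels[cls][y][x] = 1
    return channels
```

In practice the same operation is usually done on tensors, but the result has the same layout: channel `c` is 1 exactly where the label map equals `c`.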

Usage

Download the model checkpoints (see here for details) and run the model via Streamlit: start the app with `streamlit run web_demo.py`.

Example Images

Example retinal fundus images synthesised from lesion maps generated by the Conditional StyleGAN. Top row: synthetic lesion maps generated by the Conditional StyleGAN for each DR grade. Other rows: synthetic fundus images generated by SPADE from those maps. Images were generated sequentially with random seeds and are not cherry-picked.

Cite this work

If you find this work useful for your research, please cite:

@article{hou2023high,
  title={High-fidelity diabetic retina fundus image synthesis from freestyle lesion maps},
  author={Hou, Benjamin},
  journal={Biomedical Optics Express},
  volume={14},
  number={2},
  pages={533--549},
  year={2023},
  publisher={Optica Publishing Group}
}