SSD Latent Preview at Half-Size

This decoder turns SSD-1B latents into a half-size preview image without running the pipeline's full VAE decoder.

The maximum supported resolution lies somewhere between 768 and 1024 px.
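For orientation, here is the size arithmetic. SDXL-family VAEs, which SSD-1B uses, downsample each image side by 8, so a 1024 × 1024 image has a 128 × 128 latent. Reading "half-size" in the title as half of the final image side (an assumption), the preview of a 1024 px image would be 512 px. The hypothetical `preview_geometry` helper below computes both and flags sizes above 768 px, the conservative end of the stated limit.

```python
VAE_SCALE_FACTOR = 8       # SDXL-family VAEs downsample each spatial side by 8
PREVIEW_DIVISOR = 2        # assumption: "half-size" means half the final image side
CONSERVATIVE_MAX_PX = 768  # lower end of the card's stated 768-1024 px limit


def preview_geometry(height: int, width: int) -> dict:
    """Latent and preview sizes for an SSD-1B image of height x width pixels (hypothetical helper)."""
    if height % VAE_SCALE_FACTOR or width % VAE_SCALE_FACTOR:
        raise ValueError("height and width must be multiples of 8")
    return {
        "latent": (height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR),
        "preview": (height // PREVIEW_DIVISOR, width // PREVIEW_DIVISOR),
        # False means the size is above 768 px and may exceed the supported limit.
        "within_conservative_limit": max(height, width) <= CONSERVATIVE_MAX_PX,
    }


print(preview_geometry(1024, 1024))
# {'latent': (128, 128), 'preview': (512, 512), 'within_conservative_limit': False}
```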

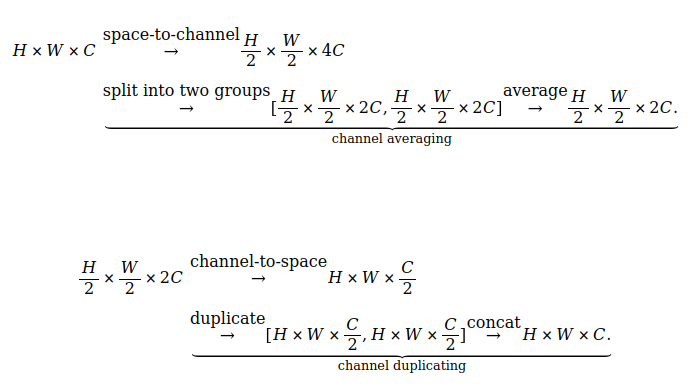

Unlike the original implementation, this model works on a latent representation reshaped from 4 channels to 64 channels.
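The card does not say how the 4-to-64-channel reshape is done. One reshape that yields exactly 64 channels is space-to-depth with a factor of 4 (4 channels × 4 × 4 = 64), which is what `torch.nn.functional.pixel_unshuffle(latents, 4)` computes in PyTorch. The sketch below is a NumPy version under that assumption; `space_to_depth` and `depth_to_space` are hypothetical helper names, not functions from `tea_64_model`.

```python
import numpy as np


def space_to_depth(x: np.ndarray, r: int = 4) -> np.ndarray:
    """(N, C, H, W) -> (N, C*r*r, H/r, W/r), same layout as torch's pixel_unshuffle."""
    n, c, h, w = x.shape
    if h % r or w % r:
        raise ValueError("H and W must be divisible by r")
    x = x.reshape(n, c, h // r, r, w // r, r)  # split each spatial axis into r-sized blocks
    x = x.transpose(0, 1, 3, 5, 2, 4)          # move the in-block offsets next to the channel axis
    return x.reshape(n, c * r * r, h // r, w // r)


def depth_to_space(x: np.ndarray, r: int = 4) -> np.ndarray:
    """Inverse of space_to_depth (the layout of torch's pixel_shuffle)."""
    n, crr, h, w = x.shape
    c = crr // (r * r)
    x = x.reshape(n, c, r, r, h, w)
    x = x.transpose(0, 1, 4, 2, 5, 3)          # interleave the offsets back into H and W
    return x.reshape(n, c, h * r, w * r)


# A 4-channel SDXL latent for a 1024 px image is 128 x 128.
latents = np.random.randn(1, 4, 128, 128).astype(np.float32)
packed = space_to_depth(latents)
print(packed.shape)  # (1, 64, 32, 32)
assert np.array_equal(depth_to_space(packed), latents)
```

The reshape is lossless: every latent value is kept, only the spatial resolution is traded for channels.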

Inference

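import torch
from diffusers import StableDiffusionXLPipeline
from safetensors.torch import load_model
from torchvision import transforms

from tea_64_model import TeaDecoder  # model definition distributed with this decoder


@torch.no_grad()  # inference only, no autograd graph needed
def preview_image(latents, pipe):
    # Undo the VAE scaling that the pipeline keeps on its latents.
    latents = latents / pipe.vae.config.scaling_factor

    # Load the decoder weights and move the model to the GPU.
    tea = TeaDecoder(ch_in=4)
    load_model(tea, './vae_decoder.safetensors')
    tea.to(device='cuda').eval()

    # The decoder runs in float32; map its output from [-1, 1] to [0, 1].
    output = tea(latents.float()) / 2.0 + 0.5

    # Convert the first image in the batch to a PIL image.
    preview = transforms.ToPILImage()(output[0].clamp(0, 1))
    return preview


if __name__ == '__main__':
    pipe = StableDiffusionXLPipeline.from_pretrained('segmind/SSD-1B',
                                                     torch_dtype=torch.float16,
                                                     use_safetensors=True,
                                                     variant='fp16')
    pipe.to('cuda')  # the latents must be on the same device as the decoder

    # output_type='latent' skips the VAE and returns the raw latents.
    latents = pipe('cat playing piano',
                   negative_prompt='bad quality, low quality',
                   num_inference_steps=20,
                   output_type='latent').images

    preview = preview_image(latents, pipe)
    preview.save('cat.png')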
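The last steps of `preview_image` map the decoder output from [-1, 1] to [0, 1], clamp it, and let `ToPILImage` scale it to 8-bit. A NumPy equivalent is handy for checking decoder outputs without torchvision. `to_uint8_image` is a hypothetical helper; it should match the truncating (not rounding) cast that `ToPILImage` applies to float tensors.

```python
import numpy as np


def to_uint8_image(output: np.ndarray) -> np.ndarray:
    """Convert one decoded CHW image in [-1, 1] to an HWC uint8 array."""
    x = output / 2.0 + 0.5          # [-1, 1] -> [0, 1]
    x = np.clip(x, 0.0, 1.0)        # same role as .clamp(0, 1)
    x = (x * 255).astype(np.uint8)  # truncating cast to 8-bit
    return np.transpose(x, (1, 2, 0))  # CHW -> HWC, the layout image libraries expect


pixels = to_uint8_image(np.array([[[-1.0, 0.0, 1.0, 2.0]]]))
print(pixels[0, :, 0].tolist())  # [0, 127, 255, 255]
```

A three-channel result can be passed straight to `PIL.Image.fromarray`.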

Datasets

- Describable Textures Dataset (DTD)

- AngelBottomless/Booru-Parquets

- hastylol/nai3

- jordandavis/fashion_num_people

- mattmdjaga/human_parsing_dataset

- recoilme/portraits_xs

- skytnt/anime-segmentation

- twodgirl/classicism

- twodgirl/vndb