license: mit

license_link: https://huggingface.co/microsoft/Florence-2-base-ft/resolve/main/LICENSE

pipeline_tag: image-text-to-text

tags:

- vision

- ocr

- segmentation

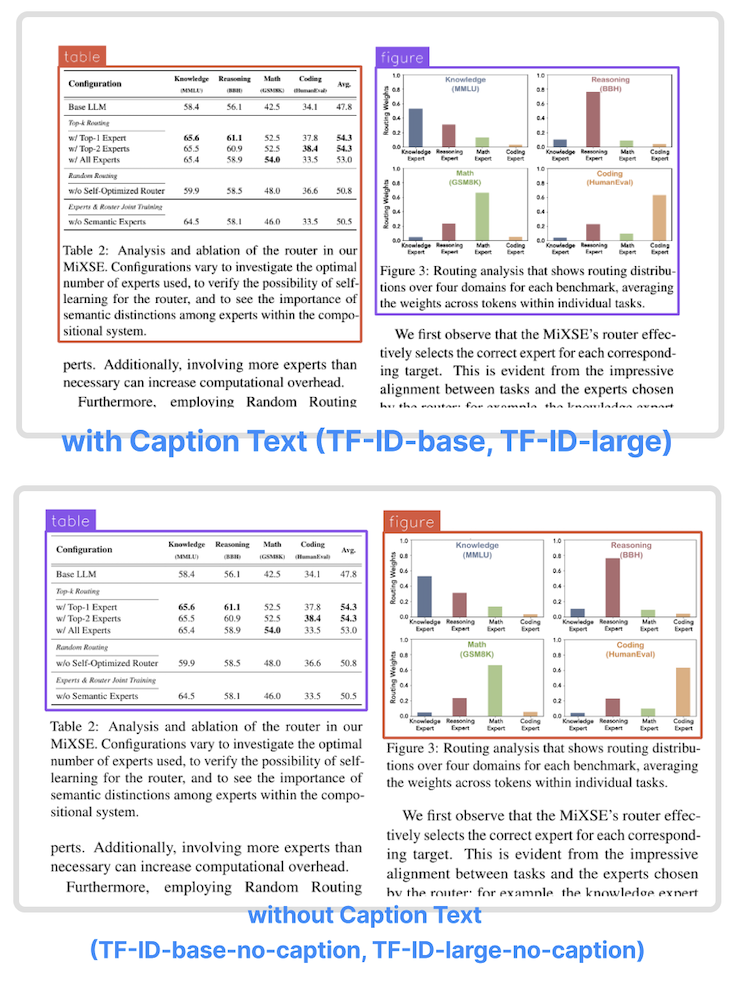

TFT-ID: Table/Figure/Text IDentifier for academic papers

Model Summary

TFT-ID (Table/Figure/Text IDentifier), created by Yifei Hu, is a family of object detection models finetuned to extract tables, figures, and text sections from academic papers.

TFT-ID is finetuned from microsoft/Florence-2 checkpoints.

- The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

- TFT-ID models take an image of a single paper page as the input, and return bounding boxes for all tables, figures, and text sections in the given page.

- The detected text sections contain clean text content that is well suited to downstream OCR workflows. However, TFT-ID itself is not an OCR model: it returns bounding boxes, not transcribed text.

Object Detection results format: {'<OD>': {'bboxes': [[x1, y1, x2, y2], ...], 'labels': ['label1', 'label2', ...]} }
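The result format above can be post-processed with plain Python. The following is a minimal sketch, not part of the TFT-ID code base: the helper names `group_boxes_by_label` and `to_crop_box` are hypothetical, and the label strings and coordinates in `example` are made up for illustration. It groups boxes by label and converts one box into integer crop coordinates clamped to the page size, which is roughly what a downstream OCR step would need.

```python
import math
from collections import defaultdict


def group_boxes_by_label(parsed_answer, task="<OD>"):
    """Return {label: [[x1, y1, x2, y2], ...]} from a parsed result dict."""
    result = parsed_answer[task]
    grouped = defaultdict(list)
    for box, label in zip(result["bboxes"], result["labels"]):
        grouped[label].append(box)
    return dict(grouped)


def to_crop_box(box, width, height):
    """Round a float box outward and clamp it to the image bounds."""
    x1, y1, x2, y2 = box
    left = max(0, math.floor(x1))
    top = max(0, math.floor(y1))
    right = min(width, math.ceil(x2))
    bottom = min(height, math.ceil(y2))
    return (left, top, right, bottom)


# Hypothetical output: label names and coordinates are assumptions.
example = {
    "<OD>": {
        "bboxes": [
            [50.0, 80.0, 550.0, 300.0],
            [50.0, 320.0, 550.0, 700.0],
            [60.0, 720.0, 540.0, 780.0],
        ],
        "labels": ["figure", "text", "text"],
    }
}

grouped = group_boxes_by_label(example)
print(grouped)
print(to_crop_box([10.4, 20.6, 700.2, 99.1], width=600, height=800))
```

The tuple returned by `to_crop_box` has the `(left, upper, right, lower)` layout that `PIL.Image.Image.crop` expects, so each region could be cropped and passed to an OCR engine of your choice.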

Training Code and Dataset

- Dataset: Coming soon.

- Code: github.com/ai8hyf/TF-ID

Benchmarks

We tested the models on paper pages outside the training dataset. The papers are a subset of Hugging Face Daily Papers.

Correct output: the model draws correct bounding boxes for every table, figure, and text section on the given page without missing any content.

| Model | Total Images | Correct Output | Success Rate |
|---|---|---|---|
| TFT-ID-1.0[HF] | 373 | 361 | 96.78% |

Depending on the use case, some "incorrect" outputs may still be usable. For example, the model may draw two bounding boxes for one figure that has two child components.
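If split boxes like these are a problem for your use case, one possible post-processing step is to merge boxes that overlap or sit very close together. The sketch below is a simple heuristic and not something the model or its repository provides: the function names and the `gap` threshold (in pixels) are assumptions, and the function should be applied to the boxes of one label at a time so that, for example, a figure is never merged with a neighboring text section.

```python
def boxes_are_close(a, b, gap=0.0):
    """True if boxes a and b overlap or lie within `gap` pixels of each other."""
    return (
        a[0] <= b[2] + gap
        and b[0] <= a[2] + gap
        and a[1] <= b[3] + gap
        and b[1] <= a[3] + gap
    )


def union_box(a, b):
    """Smallest box that contains both a and b."""
    return [min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3])]


def merge_close_boxes(boxes, gap=0.0):
    """Greedily merge boxes of a single label that overlap or nearly touch."""
    merged = []
    for box in boxes:
        box = list(box)
        changed = True
        while changed:
            changed = False
            for other in merged:
                if boxes_are_close(box, other, gap):
                    # Absorb the neighbor, then re-check against the rest.
                    box = union_box(box, other)
                    merged.remove(other)
                    changed = True
                    break
        merged.append(box)
    return merged


# Two halves of one figure (5 px apart) plus an unrelated figure.
figure_boxes = [[0, 0, 100, 100], [105, 0, 200, 100], [400, 400, 500, 500]]
print(merge_close_boxes(figure_boxes, gap=10))
```

A larger `gap` merges more aggressively and can fuse figures that are genuinely separate, so the threshold would need tuning against your own pages.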

How to Get Started with the Model

Use the code below to get started with the model.

import requests

from PIL import Image

from transformers import AutoProcessor, AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)

processor = AutoProcessor.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)

prompt = "<OD>"

url = "https://huggingface.co/yifeihu/TF-ID-base/resolve/main/arxiv_2305_10853_5.png?download=true"

image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(text=prompt, images=image, return_tensors="pt")

generated_ids = model.generate(
    input_ids=inputs["input_ids"],
    pixel_values=inputs["pixel_values"],
    max_new_tokens=1024,
    do_sample=False,
    num_beams=3,
)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

parsed_answer = processor.post_process_generation(generated_text, task="<OD>", image_size=(image.width, image.height))

print(parsed_answer)

To visualize the results, see the tutorial notebook.
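For a quick look at the detections without the notebook, a rough sketch with Pillow's `ImageDraw` is shown here. It is an illustration under stated assumptions, not the notebook's code: `draw_detections` is a hypothetical helper, the blank page and the boxes are synthetic stand-ins, and in practice you would pass the page image together with `parsed_answer["<OD>"]`.

```python
from PIL import Image, ImageDraw


def draw_detections(image, detections, color=(255, 0, 0), width=3):
    """Return a copy of `image` with each box outlined and labeled."""
    annotated = image.convert("RGB")  # convert() returns a new image
    draw = ImageDraw.Draw(annotated)
    for box, label in zip(detections["bboxes"], detections["labels"]):
        x1, y1, x2, y2 = box
        draw.rectangle([x1, y1, x2, y2], outline=color, width=width)
        # Put the label just inside the top-left corner of the box.
        draw.text((x1 + width + 2, y1 + width + 2), label, fill=color)
    return annotated


# Synthetic stand-ins for a page image and for parsed_answer["<OD>"].
page = Image.new("RGB", (200, 120), "white")
detections = {"bboxes": [[10.0, 10.0, 150.0, 100.0]], "labels": ["figure"]}

annotated = draw_detections(page, detections)
print(annotated.size)
```

The original image is left untouched, so the annotated copy can be saved or displayed separately, for example with `annotated.save(...)`.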

BibTeX and citation info

@misc{TF-ID,
  author = {Yifei Hu},
  title = {TF-ID: Table/Figure IDentifier for academic papers},
  year = {2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/ai8hyf/TF-ID}},
}