---
language:
- ko
datasets:
- kyujinpy/KOpen-platypus
library_name: transformers
pipeline_tag: text-generation
license: cc-by-nc-sa-4.0
---

This model was developed by the LLM research consortium of Media Group Saramgwasup Co., Ltd. (미디어그룹사람과숲) and Markr Co., Ltd. (마커).

The license is cc-by-nc-sa-4.0.

Poly-platypus-ko

Polyglot-ko + KO-platypus2 = Poly-platypus-ko

Model Details

Model Developers: Kyujin Han (kyujinpy)

Input: The model accepts text only.

Output: The model generates text only.

Model Architecture

Poly-platypus-ko is an auto-regressive language model based on the polyglot-ko transformer architecture.
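"Auto-regressive" means the model produces text one token at a time, with each new token conditioned on everything generated so far. The toy sketch below illustrates that loop with greedy decoding. The hand-written `NEXT_TOKEN` table is a hypothetical stand-in for the transformer's next-token distribution and has nothing to do with Poly-platypus-ko's actual weights or vocabulary.

```python
from typing import Dict, List

# Hypothetical stand-in for the model: maps the last token to a probability
# distribution over the next token. A real transformer conditions on the
# whole prefix, not only on the last token.
NEXT_TOKEN: Dict[str, Dict[str, float]] = {
    "<s>": {"안녕": 0.7, "나는": 0.3},
    "안녕": {"하세요": 0.9, "</s>": 0.1},
    "하세요": {"</s>": 0.8, "!": 0.2},
    "나는": {"모델": 1.0},
    "모델": {"</s>": 1.0},
    "!": {"</s>": 1.0},
}


def next_token_distribution(prefix: List[str]) -> Dict[str, float]:
    """Return p(next token | prefix); this toy version only looks at prefix[-1]."""
    return NEXT_TOKEN[prefix[-1]]


def greedy_generate(prompt: List[str], max_new_tokens: int = 10) -> List[str]:
    """Append the most probable next token until </s> or the length limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        dist = next_token_distribution(tokens)
        best = max(dist, key=dist.get)  # greedy: take the argmax token
        tokens.append(best)
        if best == "</s>":
            break
    return tokens


print(greedy_generate(["<s>"]))
```

In `transformers`, the same loop is handled by the model's `generate` method, which can also sample or use beam search instead of the greedy argmax shown here.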

Repo Link

Github KO-platypus2: KO-platypus2

Github Poly-platypus-ko: Poly-platypus-ko

Base Model

Polyglot-ko-12.8b

Fine-tuning method

Same as KO-Platypus2.

Training Dataset

I used the KOpen-platypus dataset (kyujinpy/KOpen-platypus).

Training was done on an A100 40GB GPU in Google Colab.

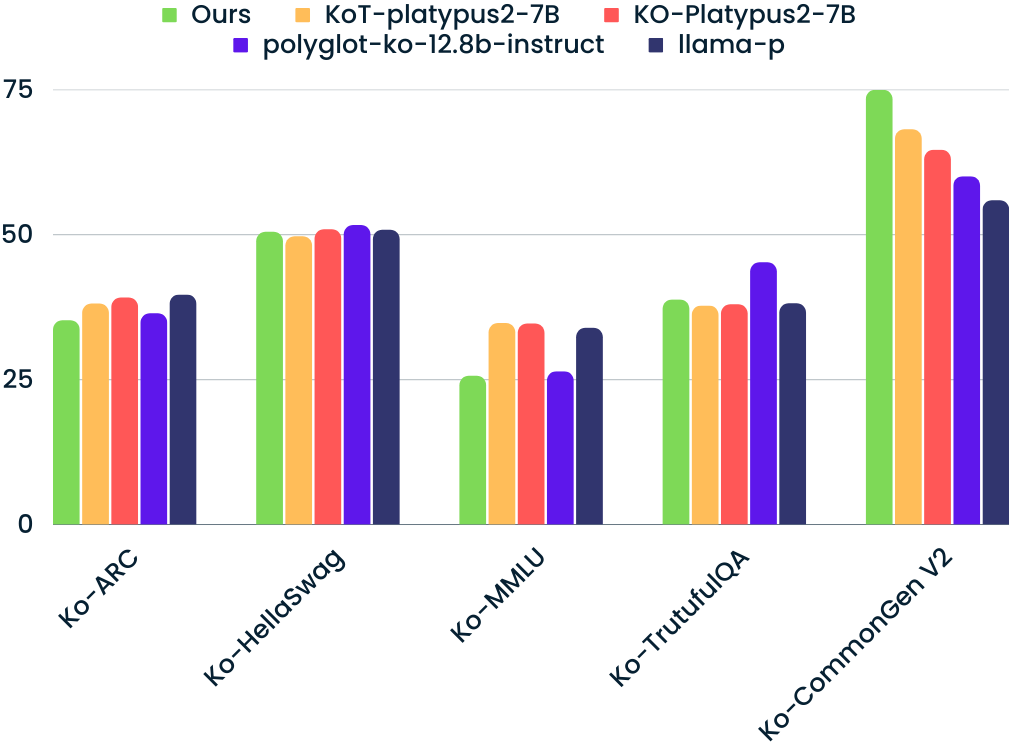
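As a rough check on why a 40 GB A100 is workable for a model of this size, the sketch below estimates the memory needed just to hold the weights at different precisions. It assumes the nominal 12.8 billion parameters implied by the model name and ignores activations, the KV cache, and any optimizer state, so real usage is higher.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}

NUM_PARAMS = 12.8e9  # nominal size taken from the model name (assumption)


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("float32", "float16", "int8"):
    gb = weight_memory_gb(NUM_PARAMS, dtype)
    verdict = "fits" if gb < 40 else "does not fit"
    print(f"{dtype:>8}: {gb:5.1f} GB of weights -> {verdict} in 40 GB")
```

In float32 the weights alone (about 51 GB) would exceed a single 40 GB card, while float16 (about 26 GB) leaves headroom, which is consistent with the loading code using `torch_dtype=torch.float16`.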

Model Benchmark 1

KO-LLM leaderboard

- Results as reported on the Open Ko-LLM Leaderboard.

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
|---|---|---|---|---|---|---|
| Poly-platypus-ko-12.8b (ours) | 44.95 | 35.15 | 50.39 | 25.58 | 38.74 | 74.88 |
| KoT-platypus2-7B | 45.62 | 38.05 | 49.63 | 34.68 | 37.69 | 68.08 |
| KO-platypus2-7B-EX | 45.41 | 39.08 | 50.86 | 34.60 | 37.94 | 64.55 |
| 42MARU/polyglot-ko-12.8b-instruct | 43.89 | 36.35 | 51.59 | 26.38 | 45.16 | 59.98 |
| FINDA-FIT/llama-p | 43.63 | 39.59 | 50.74 | 33.85 | 38.09 | 55.87 |

Compared with the top four models on the leaderboard (updated 10/01).
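The Average column appears to be the unweighted mean of the five task scores: recomputing it from the table reproduces every reported value to within 0.01. The small gap for KoT-platypus2-7B (45.626 recomputed vs. 45.62 reported) is likely because the leaderboard averages unrounded scores. The sketch below performs that check; only the numbers come from the table.

```python
# Scores copied from the leaderboard table above:
# name -> (reported Average,
#          [Ko-ARC, Ko-HellaSwag, Ko-MMLU, Ko-TruthfulQA, Ko-CommonGen V2])
LEADERBOARD = {
    "Poly-platypus-ko-12.8b": (44.95, [35.15, 50.39, 25.58, 38.74, 74.88]),
    "KoT-platypus2-7B": (45.62, [38.05, 49.63, 34.68, 37.69, 68.08]),
    "KO-platypus2-7B-EX": (45.41, [39.08, 50.86, 34.60, 37.94, 64.55]),
    "42MARU/polyglot-ko-12.8b-instruct": (43.89, [36.35, 51.59, 26.38, 45.16, 59.98]),
    "FINDA-FIT/llama-p": (43.63, [39.59, 50.74, 33.85, 38.09, 55.87]),
}


def unweighted_mean(scores):
    """Plain arithmetic mean: every task counts equally."""
    return sum(scores) / len(scores)


for name, (reported, scores) in LEADERBOARD.items():
    recomputed = unweighted_mean(scores)
    print(f"{name:36} reported={reported:.2f} recomputed={recomputed:.3f}")
```

Because each task counts equally, one strong task can offset a weak one: for Poly-platypus-ko-12.8b, the Ko-CommonGen V2 score (74.88) offsets the Ko-MMLU score (25.58).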

Model Benchmark 2

LM Eval Harness - Korean (polyglot branch)

- Used EleutherAI's lm-evaluation-harness
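The tables below report F1 without stating how it is averaged across classes. COPA, HellaSwag, BoolQ and SentiNeg are KoBEST tasks, which are commonly scored with macro-averaged F1; the sketch below assumes that definition (F1 computed per class, then averaged with equal weight). It is a self-contained illustration, not code taken from lm-evaluation-harness.

```python
from typing import Sequence


def f1_for_class(y_true: Sequence[int], y_pred: Sequence[int], cls: int) -> float:
    """F1 of a single class, treating `cls` as the positive label."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives means precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Average the per-class F1 over every label seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_class(y_true, y_pred, c) for c in labels) / len(labels)


# Toy binary example (e.g. BoolQ-style answers, yes=1 / no=0).
gold = [1, 1, 0, 0, 1, 0]
pred = [1, 0, 0, 0, 1, 1]
print(round(macro_f1(gold, pred), 4))  # 0.6667
```

Macro averaging weights each class equally, so a model that always predicts the majority class scores poorly even when its accuracy looks reasonable.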

Question Answering (QA)

COPA (F1)

| Model | 0-shot | 5-shot | 10-shot | 50-shot |
|---|---|---|---|---|
| Polyglot-ko-5.8b | 0.7745 | 0.7676 | 0.7775 | 0.7887 |
| Polyglot-ko-12.8b | 0.7937 | 0.8108 | 0.8037 | 0.8369 |
| Llama-2-Ko-7b 20B | 0.7388 | 0.7626 | 0.7808 | 0.7979 |
| Llama-2-Ko-7b 40B | 0.7436 | 0.7927 | 0.8037 | 0.8259 |
| KO-platypus2-7B-EX | 0.7509 | 0.7899 | 0.8029 | 0.8290 |
| KoT-platypus2-7B | 0.7517 | 0.7868 | 0.8009 | 0.8239 |
| Poly-platypus-ko-12.8b (ours) | 0.7876 | 0.8099 | 0.8008 | 0.8239 |

Natural Language Inference (NLI)

HellaSwag (F1)

| Model | 0-shot | 5-shot | 10-shot | 50-shot |
|---|---|---|---|---|
| Polyglot-ko-5.8b | 0.5976 | 0.5998 | 0.5979 | 0.6208 |
| Polyglot-ko-12.8b | 0.5954 | 0.6306 | 0.6098 | 0.6118 |
| Llama-2-Ko-7b 20B | 0.4518 | 0.4668 | 0.4726 | 0.4828 |
| Llama-2-Ko-7b 40B | 0.4562 | 0.4657 | 0.4698 | 0.4774 |
| KO-platypus2-7B-EX | 0.4571 | 0.4461 | 0.4371 | 0.4525 |
| KoT-platypus2-7B | 0.4432 | 0.4382 | 0.4550 | 0.4534 |
| Poly-platypus-ko-12.8b (ours) | 0.4838 | 0.4858 | 0.5005 | 0.5062 |

Question Answering (QA)

BoolQ (F1)

| Model | 0-shot | 5-shot | 10-shot | 50-shot |
|---|---|---|---|---|
| Polyglot-ko-5.8b | 0.4356 | 0.5698 | 0.5187 | 0.5236 |
| Polyglot-ko-12.8b | 0.4818 | 0.6041 | 0.6289 | 0.6448 |
| Llama-2-Ko-7b 20B | 0.3607 | 0.6797 | 0.6801 | 0.6622 |
| Llama-2-Ko-7b 40B | 0.5786 | 0.6977 | 0.7084 | 0.7144 |
| KO-platypus2-7B-EX | 0.6028 | 0.6979 | 0.7016 | 0.6988 |
| KoT-platypus2-7B | 0.6142 | 0.6757 | 0.6839 | 0.6878 |
| Poly-platypus-ko-12.8b (ours) | 0.4888 | 0.6520 | 0.6568 | 0.6835 |

Classification

SentiNeg (F1)

| Model | 0-shot | 5-shot | 10-shot | 50-shot |
|---|---|---|---|---|
| Polyglot-ko-5.8b | 0.3394 | 0.8841 | 0.8808 | 0.9521 |
| Polyglot-ko-12.8b | 0.9117 | 0.9015 | 0.9345 | 0.9723 |
| Llama-2-Ko-7b 20B | 0.4855 | 0.8295 | 0.8711 | 0.8513 |
| Llama-2-Ko-7b 40B | 0.4594 | 0.7611 | 0.7276 | 0.9370 |
| KO-platypus2-7B-EX | 0.5821 | 0.7653 | 0.7991 | 0.8643 |
| KoT-platypus2-7B | 0.6127 | 0.7199 | 0.7531 | 0.8381 |
| Poly-platypus-ko-12.8b (ours) | 0.8490 | 0.9597 | 0.9723 | 0.9847 |
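To read the four F1 tables side by side, it helps to compare the fine-tuned model with its base model, Polyglot-ko-12.8b. The sketch below only subtracts numbers copied from the tables; a positive value means Poly-platypus-ko-12.8b scored higher than the base model at that shot count.

```python
# F1 scores copied from the tables above, in shot order [0, 5, 10, 50].
SHOTS = [0, 5, 10, 50]
BASE = {  # Polyglot-ko-12.8b
    "COPA": [0.7937, 0.8108, 0.8037, 0.8369],
    "HellaSwag": [0.5954, 0.6306, 0.6098, 0.6118],
    "BoolQ": [0.4818, 0.6041, 0.6289, 0.6448],
    "SentiNeg": [0.9117, 0.9015, 0.9345, 0.9723],
}
OURS = {  # Poly-platypus-ko-12.8b
    "COPA": [0.7876, 0.8099, 0.8008, 0.8239],
    "HellaSwag": [0.4838, 0.4858, 0.5005, 0.5062],
    "BoolQ": [0.4888, 0.6520, 0.6568, 0.6835],
    "SentiNeg": [0.8490, 0.9597, 0.9723, 0.9847],
}


def deltas(task: str) -> list:
    """Fine-tuned minus base F1 for each shot count, rounded to 4 decimals."""
    return [round(o - b, 4) for o, b in zip(OURS[task], BASE[task])]


for task in BASE:
    print(task, dict(zip(SHOTS, deltas(task))))
```

On these numbers, fine-tuning raises BoolQ at every shot count and SentiNeg in the 5-, 10- and 50-shot settings, slightly lowers COPA (by at most 0.013), and lowers HellaSwag by about 0.11 to 0.14.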

Implementation Code

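```python
# KO-Platypus
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "MarkrAI/kyujin-Poly-platypus-ko-12.8b"

# Load the weights in half precision to roughly halve memory use.
# device_map="auto" places layers on the available GPU(s)/CPU and
# requires the `accelerate` package to be installed.
model = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map="auto",
)
tokenizer = AutoTokenizer.from_pretrained(repo)
```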
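This card does not document a prompt format. Platypus-family models are usually prompted with an Alpaca-style `### Instruction:` / `### Response:` layout, so the helper below assumes that template. `PROMPT_TEMPLATE`, `build_prompt` and `extract_response` are hypothetical names, and the template itself is an assumption worth checking against the KO-platypus2 repository before relying on it.

```python
# Hypothetical prompt template (assumption, not confirmed by this model card):
# Alpaca-style instruction/response layout used by Platypus-family models.
PROMPT_TEMPLATE = "### Instruction:\n\n{instruction}\n\n### Response:\n"


def build_prompt(instruction: str) -> str:
    """Wrap a user instruction in the assumed template."""
    return PROMPT_TEMPLATE.format(instruction=instruction.strip())


def extract_response(generated: str) -> str:
    """Return the text after the last '### Response:' marker, if present."""
    marker = "### Response:"
    _, sep, answer = generated.rpartition(marker)
    return answer.strip() if sep else generated.strip()


prompt = build_prompt("한국의 수도는 어디인가요?")
print(prompt)
```

The resulting string can be tokenized with the tokenizer loaded above (`return_tensors="pt"`), moved to the model's device, and passed to the model's `generate` method; the decoded output can then be trimmed with `extract_response`.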

Readme format: kyujinpy/KoT-platypus2-7B