---

license: gpl-3.0

datasets:

- LooksJuicy/ruozhiba

- hfl/ruozhiba_gpt4

language:

- zh

pipeline_tag: text-generation

base_model: google/gemma-2-2b-it

---

# QuantFactory/Gemma-2-2b-Chinese-it-GGUF

This is a quantized version of [stvlynn/Gemma-2-2b-Chinese-it](https://huggingface.co/stvlynn/Gemma-2-2b-Chinese-it), created using llama.cpp.

# Original Model Card

# Gemma-2-2b-Chinese-it (Gemma-2-2b-中文)

## Intro

`Gemma-2-2b-Chinese-it` (`Gemma-2-2b-中文`) was fine-tuned from `Gemma-2-2b-it` on approximately 6.4k rows of the ruozhiba (弱智吧) dataset.

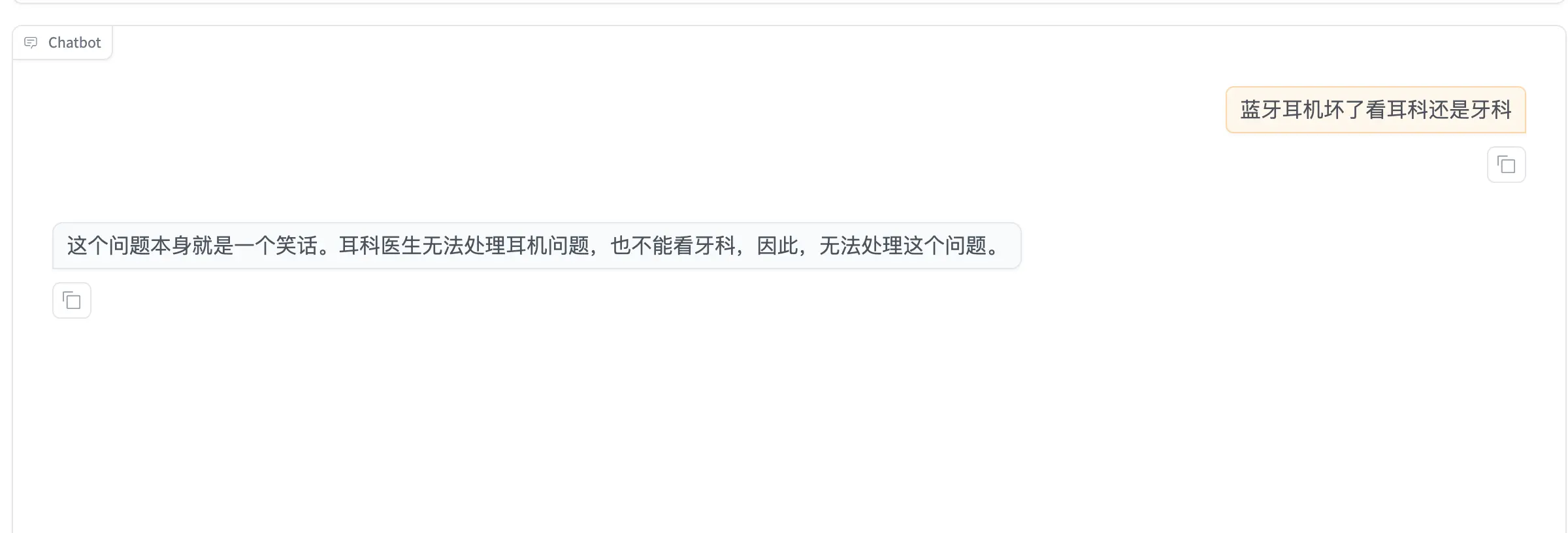

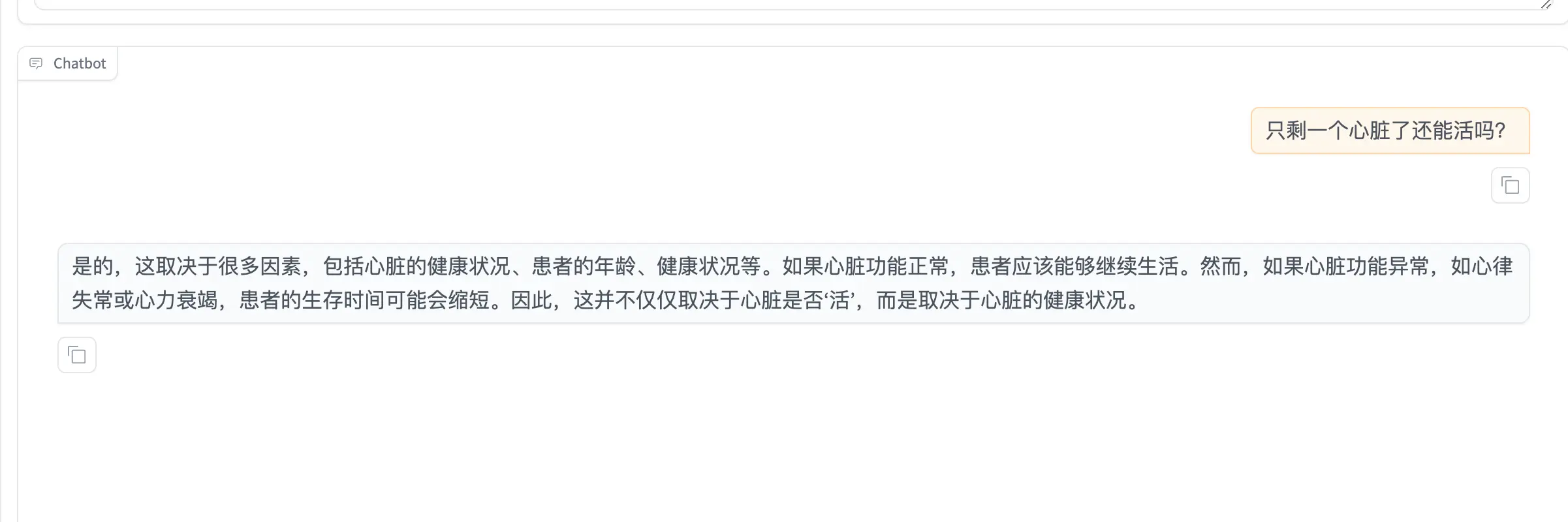

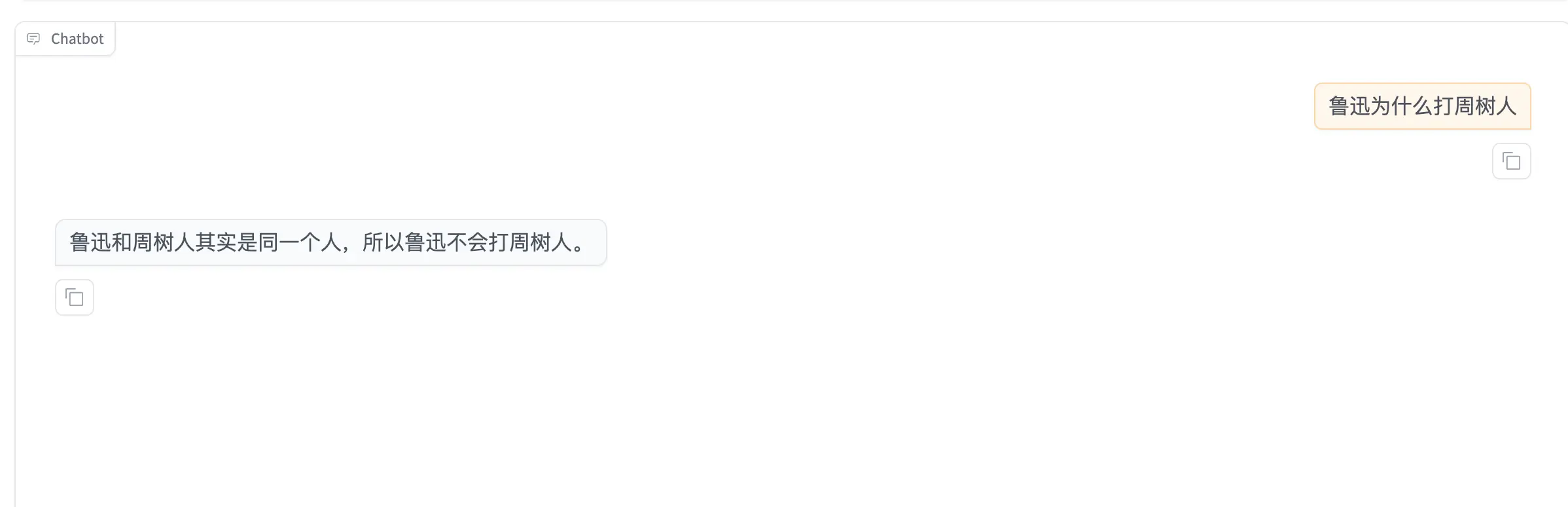

## Demo

## Usage

See Google's documentation for the base model:

[google/gemma-2-2b-it](https://huggingface.co/google/gemma-2-2b-it)
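For the GGUF files in this repository, a minimal sketch of local inference might look like the following. It assumes the `llama-cpp-python` package is installed and that a quantized file has already been downloaded; the helper names `build_gemma_prompt` and `generate` are illustrative and not part of any library. The prompt helper renders messages in the Gemma turn format (`<start_of_turn>` / `<end_of_turn>`), and it leaves out the BOS token on the assumption that llama.cpp adds it during tokenization.

```python
def build_gemma_prompt(messages):
    """Render chat messages in the Gemma turn format.

    `messages` is a list of {"role": ..., "content": ...} dicts.
    Gemma names the assistant role "model", so "assistant" is mapped to it.
    """
    parts = []
    for message in messages:
        role = "model" if message["role"] == "assistant" else message["role"]
        content = message["content"].strip()
        parts.append(f"<start_of_turn>{role}\n{content}<end_of_turn>\n")
    # Open a model turn so generation continues as the assistant.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


def generate(messages, model_path, max_tokens=256):
    """Run one completion against a local GGUF file (path supplied by caller)."""
    # Imported lazily so the prompt helper works without llama-cpp-python.
    from llama_cpp import Llama

    llm = Llama(model_path=model_path, n_ctx=2048)
    output = llm(
        build_gemma_prompt(messages),
        max_tokens=max_tokens,
        stop=["<end_of_turn>"],  # stop at the end of the model's turn
    )
    return output["choices"][0]["text"]
```

Calling `generate([{"role": "user", "content": "你好"}], model_path="path/to/model.gguf")` would return the model's reply as a string; the path here is a placeholder for whichever quantization file you downloaded.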

---

If you have any questions or suggestions, feel free to contact me.

[Twitter @stv_lynn](https://x.com/stv_lynn)

[Telegram @stvlynn](https://t.me/stvlynn)

[email [email protected]](mailto://[email protected])
