---
tags:
- Diffusion
- Data Generation
language: en
task: Data generation for computer vision tasks
datasets: MNIST
metrics:
  epoch: 31
  train_loss:
  - 0.0908
  - 0.0245
  - 0.0209
  - 0.0194
  - 0.0185
  - 0.0178
  - 0.0172
  - 0.0169
  - 0.0167
  - 0.0161
  - 0.0161
  - 0.0159
  - 0.0158
  - 0.0154
  - 0.0155
  - 0.0154
  - 0.0152
  - 0.0151
  - 0.015
  - 0.0152
  - 0.0151
  - 0.0148
  - 0.0148
  - 0.0148
  - 0.0147
  - 0.0146
  - 0.0147
  - 0.0145
  - 0.0146
  - 0.0146
  - 0.0146
license: unknown
model-index:
- name: diffusion-practice-v1
  results:
  - task:
      type: nlp
      name: Data Generation with Diffusion Model
    dataset:
      name: MNIST
      type: mnist
    metrics:
    - type: loss
      value: '0.01'
      name: Loss
      verified: false
---

NLI-FEVER Model

This model is fine-tuned for Natural Language Inference (NLI) tasks using the FEVER dataset.

Model description

Intended uses & limitations

This model is intended for use in NLI tasks, particularly those related to fact-checking and verifying information. It should not be used for tasks it wasn't explicitly trained for.

Training and evaluation data

The model was trained on the FEVER (Fact Extraction and VERification) dataset.

Training procedure

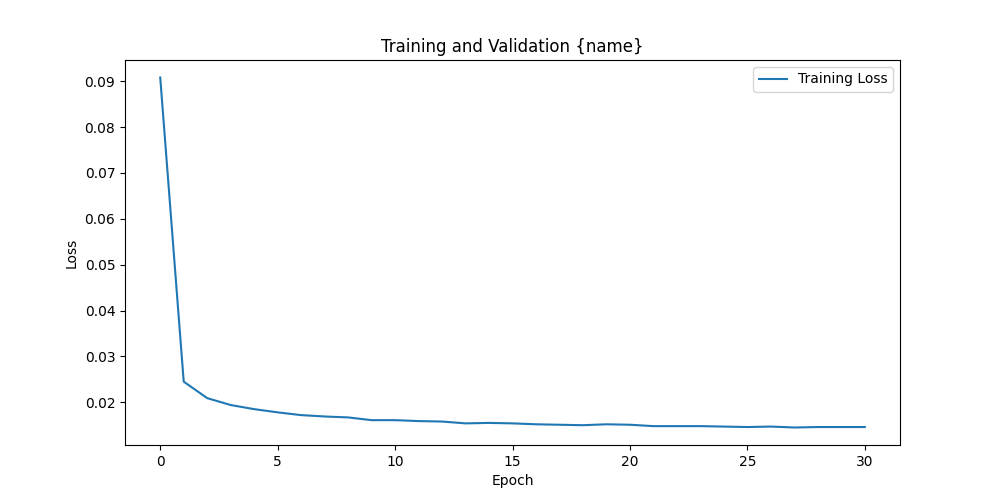

The model was trained for 31 epochs, with the following per-epoch training losses: [0.0908, 0.0245, 0.0209, 0.0194, 0.0185, 0.0178, 0.0172, 0.0169, 0.0167, 0.0161, 0.0161, 0.0159, 0.0158, 0.0154, 0.0155, 0.0154, 0.0152, 0.0151, 0.015, 0.0152, 0.0151, 0.0148, 0.0148, 0.0148, 0.0147, 0.0146, 0.0147, 0.0145, 0.0146, 0.0146, 0.0146].
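As a quick sanity check on the numbers above, the sketch below summarizes the listed loss curve: the epoch with the lowest loss, the relative drop from the first to the last epoch, and whether the last few epochs have plateaued. The helper name `summarize_losses`, the 5-epoch window, and the 0.0005 tolerance are illustrative choices, not values used in training.

```python
# Per-epoch training losses copied from the list above (epochs 1..31)
TRAIN_LOSSES = [
    0.0908, 0.0245, 0.0209, 0.0194, 0.0185, 0.0178, 0.0172, 0.0169,
    0.0167, 0.0161, 0.0161, 0.0159, 0.0158, 0.0154, 0.0155, 0.0154,
    0.0152, 0.0151, 0.015, 0.0152, 0.0151, 0.0148, 0.0148, 0.0148,
    0.0147, 0.0146, 0.0147, 0.0145, 0.0146, 0.0146, 0.0146,
]


def summarize_losses(losses, window=5, tol=5e-4):
    """Return (best_epoch, best_loss, relative_drop, plateaued).

    best_epoch is 1-based. plateaued is True when the spread (max - min)
    of the last `window` losses is within `tol` (an illustrative threshold).
    """
    # Index of the lowest loss (first one wins on ties)
    best_idx = min(range(len(losses)), key=losses.__getitem__)
    # Fractional reduction from the first epoch to the last epoch
    relative_drop = (losses[0] - losses[-1]) / losses[0]
    tail = losses[-window:]
    plateaued = (max(tail) - min(tail)) <= tol
    return best_idx + 1, losses[best_idx], relative_drop, plateaued


best_epoch, best_loss, drop, plateaued = summarize_losses(TRAIN_LOSSES)
print(f"Best epoch: {best_epoch} (loss {best_loss})")
print(f"Relative drop, epoch 1 -> {len(TRAIN_LOSSES)}: {drop:.1%}")
print(f"Plateaued over last 5 epochs: {plateaued}")
```

With the listed values, the lowest loss is 0.0145 at epoch 28. The loss falls by about 84% from epoch 1 to epoch 31, and most of that drop happens between epochs 1 and 2.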

How to use

You can use this model directly with a pipeline for text classification:

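```python
from transformers import pipeline

classifier = pipeline("text-classification", model="YusuphaJuwara/nli-fever")

# Text-pair input: pass the premise as "text" and the hypothesis as "text_pair"
result = classifier({"text": "premise", "text_pair": "hypothesis"})

print(result)
```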
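The `text-classification` pipeline accepts text pairs as `{"text": ..., "text_pair": ...}` dictionaries, or as a list of them for batching. The helper below, `classify_pairs`, is hypothetical and not part of `transformers`. It builds that list from `(premise, hypothesis)` tuples and reduces each prediction to a `(label, score)` tuple. It takes the classifier as an argument, so you can try it without downloading the model. The label names come from the model's config and are not listed in this card.

```python
from typing import Callable, Iterable


def classify_pairs(
    classifier: Callable, pairs: Iterable[tuple[str, str]]
) -> list[tuple[str, float]]:
    """Hypothetical helper: classify (premise, hypothesis) pairs in one call.

    `classifier` is expected to behave like a transformers text-classification
    pipeline: given a list of {"text", "text_pair"} dicts, it returns one
    prediction per pair, either a {"label", "score"} dict or a list of them.
    """
    inputs = [{"text": premise, "text_pair": hypothesis} for premise, hypothesis in pairs]
    outputs = classifier(inputs)
    results = []
    for out in outputs:
        # If a prediction is a list of label scores, keep the highest-scoring one
        top = max(out, key=lambda d: d["score"]) if isinstance(out, list) else out
        results.append((top["label"], float(top["score"])))
    return results
```

With the pipeline created above, a call such as `classify_pairs(classifier, [("premise 1", "hypothesis 1"), ("premise 2", "hypothesis 2")])` returns one `(label, score)` tuple per pair.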

Saved Metrics

This model repository includes a metrics.json file containing detailed training metrics.

You can load these metrics using the following code:

```python
import json

from huggingface_hub import hf_hub_download

# Download metrics.json from the model repository (cached locally after the first call)
metrics_file = hf_hub_download(repo_id="YusuphaJuwara/nli-fever", filename="metrics.json")

with open(metrics_file, "r") as f:
    metrics = json.load(f)

# Now you can access metrics like:
print("Last epoch:", metrics["last_epoch"])
print("Final validation loss:", metrics["val_losses"][-1])
print("Final validation accuracy:", metrics["val_accuracies"][-1])
```

These metrics can be useful for continuing training from the last epoch or for detailed analysis of the training process.
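For the analysis use case, the sketch below selects the epoch with the lowest validation loss from a loaded `metrics` dictionary and reports the epoch to resume from. It assumes that `val_losses` and `val_accuracies` are per-epoch lists of equal length and that `last_epoch` counts completed epochs. Neither convention is documented here, so check them against the actual file. `summarize_metrics` is a hypothetical helper name.

```python
def summarize_metrics(metrics: dict) -> dict:
    """Hypothetical helper: summarize a metrics.json-style dictionary.

    Assumes metrics["val_losses"] and metrics["val_accuracies"] are per-epoch
    lists, and metrics["last_epoch"] is the number of completed epochs.
    """
    val_losses = metrics["val_losses"]
    val_accs = metrics["val_accuracies"]
    # Index of the lowest validation loss (first one wins on ties)
    best_idx = min(range(len(val_losses)), key=val_losses.__getitem__)
    return {
        "best_epoch": best_idx + 1,  # 1-based epoch number
        "best_val_loss": val_losses[best_idx],
        "val_accuracy_at_best": val_accs[best_idx],
        # Assumption: training resumes at the epoch after the last completed one
        "resume_from_epoch": metrics["last_epoch"] + 1,
    }
```

After loading `metrics` as shown above, `print(summarize_metrics(metrics))` prints the summary. If `last_epoch` turns out to be zero-based, adjust `resume_from_epoch` accordingly.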

Training results

Limitations and bias