url

stringlengths 62

66

| repository_url

stringclasses 1

value | labels_url

stringlengths 76

80

| comments_url

stringlengths 71

75

| events_url

stringlengths 69

73

| html_url

stringlengths 50

56

| id

int64 377M

2.15B

| node_id

stringlengths 18

32

| number

int64 1

29.2k

| title

stringlengths 1

487

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 2

classes | assignee

dict | assignees

list | comments

sequence | created_at

int64 1.54k

1.71k

| updated_at

int64 1.54k

1.71k

| closed_at

int64 1.54k

1.71k

⌀ | author_association

stringclasses 4

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

234k

⌀ | reactions

dict | timeline_url

stringlengths 71

75

| state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/11135 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11135/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11135/comments | https://api.github.com/repos/huggingface/transformers/issues/11135/events | https://github.com/huggingface/transformers/pull/11135 | 853,163,982 | MDExOlB1bGxSZXF1ZXN0NjExMjk2NTk4 | 11,135 | Adding FastSpeech2 | {

"login": "huu4ontocord",

"id": 8900094,

"node_id": "MDQ6VXNlcjg5MDAwOTQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/8900094?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/huu4ontocord",

"html_url": "https://github.com/huu4ontocord",

"followers_url": "https://api.github.com/users/huu4ontocord/followers",

"following_url": "https://api.github.com/users/huu4ontocord/following{/other_user}",

"gists_url": "https://api.github.com/users/huu4ontocord/gists{/gist_id}",

"starred_url": "https://api.github.com/users/huu4ontocord/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/huu4ontocord/subscriptions",

"organizations_url": "https://api.github.com/users/huu4ontocord/orgs",

"repos_url": "https://api.github.com/users/huu4ontocord/repos",

"events_url": "https://api.github.com/users/huu4ontocord/events{/privacy}",

"received_events_url": "https://api.github.com/users/huu4ontocord/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hey @ontocord,\r\n\r\nThanks a lot for opening this pull request :-) \r\n\r\nWe are very much open to adding FastSpeech2 to `transformers`! One thing that is quite important to us is that we stick as much as possible to the original implementation of the model. \r\n\r\nIMO, the easiest way to approach this would be to translate [this](TF code) to PyTorch: https://github.com/TensorSpeech/TensorFlowTTS/blob/master/tensorflow_tts/models/fastspeech2.py and add it to our lib.\r\n\r\nLet me know if it would also be ok/interesting for you to stay closer to the official code -> I'm very happy to help you get this PR merged then :-)",

"Happy for you to close this PR in favor of an official implementation. Just as an aside, the G2P (GRU->GRU) code is actually based on the original impementation from the Fastspeech2 paper. But it uses https://github.com/Kyubyong/g2p which is slower than pytorh and based on Numpy. I re-wrote the G2P in pytorch based on the G2P author's notes, and retrained it so it's faster.\r\n\r\nFrom the paper: \r\n\r\n\"To alleviate the mispronunciation problem, we convert the\r\ntext sequence into the phoneme sequence (Arik et al., 2017; Wang et al., 2017; Shen et al., 2018;\r\nSun et al., 2019) with an open-source grapheme-to-phoneme tool5\r\n...\r\n5https://github.com/Kyubyong/g2p\"\r\n\r\nI think this module is really one of the things that keeps the Fastspeech2 model (and tacotron 2 and similar models) from generalizing to more languages. In theory you could just train on character level, but it's harder. DM if you want to discuss work arounds...",

"Hey @ontocord @patrickvonplaten, I was wondering if there has been a followup to this PR. I'd love to see transformer TTS models like FastSpeech2 in this library and would be more than happy to help contribute if possible!",

"I also think we should eventually add models like FastSpeech2 to the library. Gently ping to @anton-l here who was interested in this addition as well.",

"@patrickvonplaten @anton-l Do we only add models with official weights from the paper authors? AFAIK FastSpeech2 has plenty of unofficial implementations with weights, but there is no official repository ([PwC](https://paperswithcode.com/paper/fastspeech-2-fast-and-high-quality-end-to-end)). I think we should reach out to the author (Yi Ren is on GitHub), and if that doesn't work out, consider which implementation/weights we want to port. What do you think?\r\n\r\nAlso if you'd prefer, I'll open a new issue dedicated to this discussion instead of hijacking this PR.",

"I think we should definitely reach out to the original authors! Feel free to contact them :-)",

"Just emailed the first author and cc'd both you and Anton! I'll keep you posted. ",

"I would be interested in working on something more generic than fastspeech2 which needs a g2p module. It’s not truly end to end. \n\n> On Jan 3, 2022, at 7:55 AM, Jake Tae ***@***.***> wrote:\n> \n> \n> Just emailed the first author and cc'd both you and Anton! I'll keep you posted.\n> \n> —\n> Reply to this email directly, view it on GitHub, or unsubscribe.\n> Triage notifications on the go with GitHub Mobile for iOS or Android. \n> You are receiving this because you were mentioned.\n"

] | 1,617 | 1,641 | 1,619 | NONE | null | # What does this PR do?

This is a draft PR for Fastspeech2 which includes melgan and a custom g2p pythorch module. See https://huggingface.co/ontocord/fastspeech2-en

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

@patil-suraj

Models:

- Fastspeech2

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11135/reactions",

"total_count": 2,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 2,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11135/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11135",

"html_url": "https://github.com/huggingface/transformers/pull/11135",

"diff_url": "https://github.com/huggingface/transformers/pull/11135.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11135.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/11134 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11134/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11134/comments | https://api.github.com/repos/huggingface/transformers/issues/11134/events | https://github.com/huggingface/transformers/issues/11134 | 853,135,648 | MDU6SXNzdWU4NTMxMzU2NDg= | 11,134 | Problem with data download | {

"login": "chatzich",

"id": 189659,

"node_id": "MDQ6VXNlcjE4OTY1OQ==",

"avatar_url": "https://avatars.githubusercontent.com/u/189659?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chatzich",

"html_url": "https://github.com/chatzich",

"followers_url": "https://api.github.com/users/chatzich/followers",

"following_url": "https://api.github.com/users/chatzich/following{/other_user}",

"gists_url": "https://api.github.com/users/chatzich/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chatzich/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chatzich/subscriptions",

"organizations_url": "https://api.github.com/users/chatzich/orgs",

"repos_url": "https://api.github.com/users/chatzich/repos",

"events_url": "https://api.github.com/users/chatzich/events{/privacy}",

"received_events_url": "https://api.github.com/users/chatzich/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"@chatzich your question seems similar to this - #2323. ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,621 | 1,621 | NONE | null | Hello can I ask which is the directory that the downloaded stuff is stored? I am trying to bundle these data into a docker image and every time the image is built transformers is downloading the 440M data from the beggining | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11134/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11134/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11133 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11133/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11133/comments | https://api.github.com/repos/huggingface/transformers/issues/11133/events | https://github.com/huggingface/transformers/pull/11133 | 853,071,777 | MDExOlB1bGxSZXF1ZXN0NjExMjE5Mjgw | 11,133 | Typo fix of the name of BertLMHeadModel in BERT doc | {

"login": "forest1988",

"id": 2755894,

"node_id": "MDQ6VXNlcjI3NTU4OTQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/2755894?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/forest1988",

"html_url": "https://github.com/forest1988",

"followers_url": "https://api.github.com/users/forest1988/followers",

"following_url": "https://api.github.com/users/forest1988/following{/other_user}",

"gists_url": "https://api.github.com/users/forest1988/gists{/gist_id}",

"starred_url": "https://api.github.com/users/forest1988/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/forest1988/subscriptions",

"organizations_url": "https://api.github.com/users/forest1988/orgs",

"repos_url": "https://api.github.com/users/forest1988/repos",

"events_url": "https://api.github.com/users/forest1988/events{/privacy}",

"received_events_url": "https://api.github.com/users/forest1988/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,617 | 1,617 | CONTRIBUTOR | null | # What does this PR do?

Typo fix in BERT doc.

I was confused that I couldn't find the implementation and discussion log of `BertModelLMHeadModel,` and found that `BertLMHeadModel` is the correct name.

It was titled `BertModelLMHeadModel` in the BERT doc, and it seems `BertLMHeadModel` is the intended name.

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Documentation: @sgugger

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11133/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11133/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11133",

"html_url": "https://github.com/huggingface/transformers/pull/11133",

"diff_url": "https://github.com/huggingface/transformers/pull/11133.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11133.patch",

"merged_at": 1617884578000

} |

https://api.github.com/repos/huggingface/transformers/issues/11132 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11132/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11132/comments | https://api.github.com/repos/huggingface/transformers/issues/11132/events | https://github.com/huggingface/transformers/issues/11132 | 852,981,758 | MDU6SXNzdWU4NTI5ODE3NTg= | 11,132 | Clear add labels to token classification example | {

"login": "gwc4github",

"id": 3164663,

"node_id": "MDQ6VXNlcjMxNjQ2NjM=",

"avatar_url": "https://avatars.githubusercontent.com/u/3164663?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gwc4github",

"html_url": "https://github.com/gwc4github",

"followers_url": "https://api.github.com/users/gwc4github/followers",

"following_url": "https://api.github.com/users/gwc4github/following{/other_user}",

"gists_url": "https://api.github.com/users/gwc4github/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gwc4github/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gwc4github/subscriptions",

"organizations_url": "https://api.github.com/users/gwc4github/orgs",

"repos_url": "https://api.github.com/users/gwc4github/repos",

"events_url": "https://api.github.com/users/gwc4github/events{/privacy}",

"received_events_url": "https://api.github.com/users/gwc4github/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Have you looked at the [run_ner](https://github.com/huggingface/transformers/blob/master/examples/token-classification/run_ner.py) example and its corresponding [notebook](https://github.com/huggingface/notebooks/blob/master/examples/token_classification.ipynb)?",

"Thanks for getting back to me so quickly @sgugger. \r\nI am using what looks like the same exact version of run_ner with a different notebook. However, I don't see anything in the notebook you provided or run_ner that adds new labels. For example, if you look at the cell 8 of the notebook you linked, you see that it is only using the labels loaded with the model. What if I wanted to find addresses or some other entity type?\r\n\r\nThanks for your help!\r\nGregg\r\n\r\n",

"I am confused, the labels are loaded from the dataset, not the model. If you have another dataset with other labels, the rest of the notebook will work the same way.",

"Sorry, \r\nWhat I want to do is load the Bert model for NER trained from Conll2003 and use transfer learning to add addition training with new, additional data tagged with additional labels.\r\nIn the end, I want to take advantage of the existing training and add my own; teaching it to recognize more entity types.\r\n\r\nI have seen that some people seem to have done this but I haven't found the complete list of steps. ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,621 | 1,621 | NONE | null | I have spent a lot of time looking for a clear example on how to add labels to an existing model. For example, I would like to train Bert to recognize addresses in addition to the B-PER, B-ORG, etc. labels. So I think I would do the following

1. Add B-ADDRESS, B-CITY, B-STATE, etc. to a portion of a data set (like take a small subset of conll2003 or custom data.)

2. Add the labels to the id2label and label2id - BUT, where do I do this? In the config object? Is that all since the model does not expect the new labels?

3. Set the label count variable (in config again?)

4. Train on the new datasets (conll2003 & the new data) using the config file?

So in addition to the questions above, I would think that I could remove the head and do some transfer learning - meaning that I don't have to re-train with the conll2003 data. I should be able to just add training with the new data so that I have Bert+conll2003+my new data but I am only training on my new data. However, I don't see an example of this with HF either.

Sorry if I am just missing it. Here are some of the links I have looked at:

https://huggingface.co/transformers/custom_datasets.html#token-classification-with-w-nut-emerging-entities

https://huggingface.co/transformers/custom_datasets.html#fine-tuning-with-trainer

https://discuss.huggingface.co/t/retrain-reuse-fine-tuned-models-on-different-set-of-labels/346/4 ** GOOD INFO but not complete

https://github.com/huggingface/transformers/tree/master/examples/token-classification

@sgugger was in the thread above so he may be able to help?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11132/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11132/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11131 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11131/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11131/comments | https://api.github.com/repos/huggingface/transformers/issues/11131/events | https://github.com/huggingface/transformers/pull/11131 | 852,974,092 | MDExOlB1bGxSZXF1ZXN0NjExMTQ0OTAw | 11,131 | Update training.rst | {

"login": "TomorrowIsAnOtherDay",

"id": 25046619,

"node_id": "MDQ6VXNlcjI1MDQ2NjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/25046619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/TomorrowIsAnOtherDay",

"html_url": "https://github.com/TomorrowIsAnOtherDay",

"followers_url": "https://api.github.com/users/TomorrowIsAnOtherDay/followers",

"following_url": "https://api.github.com/users/TomorrowIsAnOtherDay/following{/other_user}",

"gists_url": "https://api.github.com/users/TomorrowIsAnOtherDay/gists{/gist_id}",

"starred_url": "https://api.github.com/users/TomorrowIsAnOtherDay/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/TomorrowIsAnOtherDay/subscriptions",

"organizations_url": "https://api.github.com/users/TomorrowIsAnOtherDay/orgs",

"repos_url": "https://api.github.com/users/TomorrowIsAnOtherDay/repos",

"events_url": "https://api.github.com/users/TomorrowIsAnOtherDay/events{/privacy}",

"received_events_url": "https://api.github.com/users/TomorrowIsAnOtherDay/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,621 | 1,621 | NONE | null | # What does this PR do?

fix a typo in tutorial

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11131/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11131/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11131",

"html_url": "https://github.com/huggingface/transformers/pull/11131",

"diff_url": "https://github.com/huggingface/transformers/pull/11131.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11131.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/11130 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11130/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11130/comments | https://api.github.com/repos/huggingface/transformers/issues/11130/events | https://github.com/huggingface/transformers/pull/11130 | 852,965,558 | MDExOlB1bGxSZXF1ZXN0NjExMTM5MDMz | 11,130 | Fix LogitsProcessor documentation | {

"login": "k-tahiro",

"id": 14054951,

"node_id": "MDQ6VXNlcjE0MDU0OTUx",

"avatar_url": "https://avatars.githubusercontent.com/u/14054951?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/k-tahiro",

"html_url": "https://github.com/k-tahiro",

"followers_url": "https://api.github.com/users/k-tahiro/followers",

"following_url": "https://api.github.com/users/k-tahiro/following{/other_user}",

"gists_url": "https://api.github.com/users/k-tahiro/gists{/gist_id}",

"starred_url": "https://api.github.com/users/k-tahiro/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/k-tahiro/subscriptions",

"organizations_url": "https://api.github.com/users/k-tahiro/orgs",

"repos_url": "https://api.github.com/users/k-tahiro/repos",

"events_url": "https://api.github.com/users/k-tahiro/events{/privacy}",

"received_events_url": "https://api.github.com/users/k-tahiro/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,617 | 1,617 | CONTRIBUTOR | null | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes document related to LogitsProcessor

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11130/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11130/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11130",

"html_url": "https://github.com/huggingface/transformers/pull/11130",

"diff_url": "https://github.com/huggingface/transformers/pull/11130.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11130.patch",

"merged_at": 1617952184000

} |

https://api.github.com/repos/huggingface/transformers/issues/11129 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11129/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11129/comments | https://api.github.com/repos/huggingface/transformers/issues/11129/events | https://github.com/huggingface/transformers/issues/11129 | 852,862,577 | MDU6SXNzdWU4NTI4NjI1Nzc= | 11,129 | denoising with sentence permutation, and language sampling | {

"login": "thomas-happify",

"id": 66082334,

"node_id": "MDQ6VXNlcjY2MDgyMzM0",

"avatar_url": "https://avatars.githubusercontent.com/u/66082334?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomas-happify",

"html_url": "https://github.com/thomas-happify",

"followers_url": "https://api.github.com/users/thomas-happify/followers",

"following_url": "https://api.github.com/users/thomas-happify/following{/other_user}",

"gists_url": "https://api.github.com/users/thomas-happify/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomas-happify/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomas-happify/subscriptions",

"organizations_url": "https://api.github.com/users/thomas-happify/orgs",

"repos_url": "https://api.github.com/users/thomas-happify/repos",

"events_url": "https://api.github.com/users/thomas-happify/events{/privacy}",

"received_events_url": "https://api.github.com/users/thomas-happify/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2648621985,

"node_id": "MDU6TGFiZWwyNjQ4NjIxOTg1",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Feature%20request",

"name": "Feature request",

"color": "FBCA04",

"default": false,

"description": "Request for a new feature"

}

] | open | false | null | [] | [] | 1,617 | 1,617 | null | NONE | null | # 🚀 Feature request

<!-- A clear and concise description of the feature proposal.

Please provide a link to the paper and code in case they exist. -->

## Motivation

When training or fine tuning models, data collator provided in huggingface isn't enough.

For example, if we want to further pretrain `mBART` or `XLM-R`, where language sampling or sentence permutation are needed, which is hard to do with huggingface datasets API since it loads all language datasets at first.

Thanks!

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11129/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11129/timeline | null | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11128 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11128/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11128/comments | https://api.github.com/repos/huggingface/transformers/issues/11128/events | https://github.com/huggingface/transformers/pull/11128 | 852,823,550 | MDExOlB1bGxSZXF1ZXN0NjExMDE2MTk3 | 11,128 | Run mlm pad to multiple for fp16 | {

"login": "ak314",

"id": 9784302,

"node_id": "MDQ6VXNlcjk3ODQzMDI=",

"avatar_url": "https://avatars.githubusercontent.com/u/9784302?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ak314",

"html_url": "https://github.com/ak314",

"followers_url": "https://api.github.com/users/ak314/followers",

"following_url": "https://api.github.com/users/ak314/following{/other_user}",

"gists_url": "https://api.github.com/users/ak314/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ak314/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ak314/subscriptions",

"organizations_url": "https://api.github.com/users/ak314/orgs",

"repos_url": "https://api.github.com/users/ak314/repos",

"events_url": "https://api.github.com/users/ak314/events{/privacy}",

"received_events_url": "https://api.github.com/users/ak314/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,618 | 1,617 | CONTRIBUTOR | null | # What does this PR do?

This PR uses padding to a multiple of 8 in the run_mlm.py language modeling example, when fp16 is used. Since the DataCollatorForLanguageModeling did not initially accept the pad_to_multiple_of option, that functionality was added.

Fixes #10627

## Before submitting

- [X] Did you write any new necessary tests?

## Who can review?

@sgugger | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11128/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11128/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11128",

"html_url": "https://github.com/huggingface/transformers/pull/11128",

"diff_url": "https://github.com/huggingface/transformers/pull/11128.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11128.patch",

"merged_at": 1617912769000

} |

https://api.github.com/repos/huggingface/transformers/issues/11127 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11127/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11127/comments | https://api.github.com/repos/huggingface/transformers/issues/11127/events | https://github.com/huggingface/transformers/pull/11127 | 852,743,918 | MDExOlB1bGxSZXF1ZXN0NjEwOTQ3NTk1 | 11,127 | Fix and refactor check_repo | {

"login": "sgugger",

"id": 35901082,

"node_id": "MDQ6VXNlcjM1OTAxMDgy",

"avatar_url": "https://avatars.githubusercontent.com/u/35901082?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sgugger",

"html_url": "https://github.com/sgugger",

"followers_url": "https://api.github.com/users/sgugger/followers",

"following_url": "https://api.github.com/users/sgugger/following{/other_user}",

"gists_url": "https://api.github.com/users/sgugger/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sgugger/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sgugger/subscriptions",

"organizations_url": "https://api.github.com/users/sgugger/orgs",

"repos_url": "https://api.github.com/users/sgugger/repos",

"events_url": "https://api.github.com/users/sgugger/events{/privacy}",

"received_events_url": "https://api.github.com/users/sgugger/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,617 | 1,617 | COLLABORATOR | null | # What does this PR do?

This PR fixes issues that a user may have with `make quality`/`make fixup` when all backends are not installed: in that case `requires_backends` is imported in the main init on top of the dummy objects and the script complains it's not documented.

The PR also refactors the white-list for the model that are not in an Auto-class, which was mostly containing Encoder and Decoder pieces of seq2seq models. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11127/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11127/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11127",

"html_url": "https://github.com/huggingface/transformers/pull/11127",

"diff_url": "https://github.com/huggingface/transformers/pull/11127.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11127.patch",

"merged_at": 1617832581000

} |

https://api.github.com/repos/huggingface/transformers/issues/11126 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11126/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11126/comments | https://api.github.com/repos/huggingface/transformers/issues/11126/events | https://github.com/huggingface/transformers/issues/11126 | 852,707,235 | MDU6SXNzdWU4NTI3MDcyMzU= | 11,126 | Create embeddings vectors for the context parameter of QuestionAnsweringPipeline for reusability. | {

"login": "talhaanwarch",

"id": 37379131,

"node_id": "MDQ6VXNlcjM3Mzc5MTMx",

"avatar_url": "https://avatars.githubusercontent.com/u/37379131?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/talhaanwarch",

"html_url": "https://github.com/talhaanwarch",

"followers_url": "https://api.github.com/users/talhaanwarch/followers",

"following_url": "https://api.github.com/users/talhaanwarch/following{/other_user}",

"gists_url": "https://api.github.com/users/talhaanwarch/gists{/gist_id}",

"starred_url": "https://api.github.com/users/talhaanwarch/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/talhaanwarch/subscriptions",

"organizations_url": "https://api.github.com/users/talhaanwarch/orgs",

"repos_url": "https://api.github.com/users/talhaanwarch/repos",

"events_url": "https://api.github.com/users/talhaanwarch/events{/privacy}",

"received_events_url": "https://api.github.com/users/talhaanwarch/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,621 | 1,621 | NONE | null | Create embeddings vectors for the context parameter of QuestionAnsweringPipeline for reusability.

**Scinario**

For each time we pass question and context to QuestionAnsweringPipeline, the context vector is created. Is there a way to create this context for once and just pass the question to save time and make inference quicker. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11126/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11126/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11125 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11125/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11125/comments | https://api.github.com/repos/huggingface/transformers/issues/11125/events | https://github.com/huggingface/transformers/issues/11125 | 852,637,940 | MDU6SXNzdWU4NTI2Mzc5NDA= | 11,125 | Not very good answers | {

"login": "Sankalp1233",

"id": 38120178,

"node_id": "MDQ6VXNlcjM4MTIwMTc4",

"avatar_url": "https://avatars.githubusercontent.com/u/38120178?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Sankalp1233",

"html_url": "https://github.com/Sankalp1233",

"followers_url": "https://api.github.com/users/Sankalp1233/followers",

"following_url": "https://api.github.com/users/Sankalp1233/following{/other_user}",

"gists_url": "https://api.github.com/users/Sankalp1233/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Sankalp1233/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Sankalp1233/subscriptions",

"organizations_url": "https://api.github.com/users/Sankalp1233/orgs",

"repos_url": "https://api.github.com/users/Sankalp1233/repos",

"events_url": "https://api.github.com/users/Sankalp1233/events{/privacy}",

"received_events_url": "https://api.github.com/users/Sankalp1233/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The text you are providing is probably too long for the model. Most Transformer models accept a sequence length of 512 tokens.\r\n\r\nWhich model did you use?",

"I just used the general q-a pipeline:\r\nfrom google.colab import files\r\nuploaded = files.upload() \r\nfilename = \"New_Spark_Questions.txt\"\r\nnew_file = uploaded[filename].decode(\"utf-8\")\r\n!pip3 install sentencepiece\r\n!pip3 install git+https://github.com/huggingface/transformers\r\nquestion = \"Why should I take part in SPARK?\" \r\nfrom transformers import pipeline\r\nqa = pipeline(\"question-answering\")\r\nanswer = qa(question=question, context=new_file)\r\nprint(f\"Question: {question}\")\r\nprint(f\"Answer: '{answer['answer']}' with score {answer['score']}\")\r\nHow does the q-a pipeline decide the score?\r\nAlso how do we use a model like Bert, XLNet, etc. on a q-a pipeline\r\nDoes the input in the q-a pipeline have to be a dictionary?",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,621 | 1,621 | NONE | null | When I try to feed in this context from a long txt file lets say below:

[New_Spark_Questions.txt](https://github.com/huggingface/transformers/files/6273496/New_Spark_Questions.txt)

and I feed in a question from that txt file: Would police or the FBI ever be able to access DNA or other information collected?

it is not giving me a very good answer: I get Answer: 'help speed up the progress of autism research' with score 0.4306815266609192. How do we see all the cores available, how does the model decide which answer is the best?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11125/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11125/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11124 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11124/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11124/comments | https://api.github.com/repos/huggingface/transformers/issues/11124/events | https://github.com/huggingface/transformers/issues/11124 | 852,636,637 | MDU6SXNzdWU4NTI2MzY2Mzc= | 11,124 | ALBERT pretrained tokenizer loading failed on Google Colab | {

"login": "PeterQiu0516",

"id": 47267715,

"node_id": "MDQ6VXNlcjQ3MjY3NzE1",

"avatar_url": "https://avatars.githubusercontent.com/u/47267715?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/PeterQiu0516",

"html_url": "https://github.com/PeterQiu0516",

"followers_url": "https://api.github.com/users/PeterQiu0516/followers",

"following_url": "https://api.github.com/users/PeterQiu0516/following{/other_user}",

"gists_url": "https://api.github.com/users/PeterQiu0516/gists{/gist_id}",

"starred_url": "https://api.github.com/users/PeterQiu0516/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/PeterQiu0516/subscriptions",

"organizations_url": "https://api.github.com/users/PeterQiu0516/orgs",

"repos_url": "https://api.github.com/users/PeterQiu0516/repos",

"events_url": "https://api.github.com/users/PeterQiu0516/events{/privacy}",

"received_events_url": "https://api.github.com/users/PeterQiu0516/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Have you installed the [sentencepiece](https://github.com/google/sentencepiece) library?",

"> Have you installed the [sentencepiece](https://github.com/google/sentencepiece) library?\r\n\r\nYes.\r\n\r\n```\r\n!pip install transformers\r\n!pip install sentencepiece\r\n```\r\n\r\n```\r\nRequirement already satisfied: transformers in /usr/local/lib/python3.7/dist-packages (4.5.0)\r\nRequirement already satisfied: numpy>=1.17 in /usr/local/lib/python3.7/dist-packages (from transformers) (1.19.5)\r\nRequirement already satisfied: tqdm>=4.27 in /usr/local/lib/python3.7/dist-packages (from transformers) (4.41.1)\r\nRequirement already satisfied: requests in /usr/local/lib/python3.7/dist-packages (from transformers) (2.23.0)\r\nRequirement already satisfied: importlib-metadata; python_version < \"3.8\" in /usr/local/lib/python3.7/dist-packages (from transformers) (3.8.1)\r\nRequirement already satisfied: filelock in /usr/local/lib/python3.7/dist-packages (from transformers) (3.0.12)\r\nRequirement already satisfied: sacremoses in /usr/local/lib/python3.7/dist-packages (from transformers) (0.0.44)\r\nRequirement already satisfied: tokenizers<0.11,>=0.10.1 in /usr/local/lib/python3.7/dist-packages (from transformers) (0.10.2)\r\nRequirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.7/dist-packages (from transformers) (2019.12.20)\r\nRequirement already satisfied: packaging in /usr/local/lib/python3.7/dist-packages (from transformers) (20.9)\r\nRequirement already satisfied: chardet<4,>=3.0.2 in /usr/local/lib/python3.7/dist-packages (from requests->transformers) (3.0.4)\r\nRequirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.7/dist-packages (from requests->transformers) (2020.12.5)\r\nRequirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /usr/local/lib/python3.7/dist-packages (from requests->transformers) (1.24.3)\r\nRequirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.7/dist-packages (from requests->transformers) (2.10)\r\nRequirement already satisfied: zipp>=0.5 in /usr/local/lib/python3.7/dist-packages (from importlib-metadata; python_version < \"3.8\"->transformers) (3.4.1)\r\nRequirement already satisfied: typing-extensions>=3.6.4; python_version < \"3.8\" in /usr/local/lib/python3.7/dist-packages (from importlib-metadata; python_version < \"3.8\"->transformers) (3.7.4.3)\r\nRequirement already satisfied: six in /usr/local/lib/python3.7/dist-packages (from sacremoses->transformers) (1.15.0)\r\nRequirement already satisfied: joblib in /usr/local/lib/python3.7/dist-packages (from sacremoses->transformers) (1.0.1)\r\nRequirement already satisfied: click in /usr/local/lib/python3.7/dist-packages (from sacremoses->transformers) (7.1.2)\r\nRequirement already satisfied: pyparsing>=2.0.2 in /usr/local/lib/python3.7/dist-packages (from packaging->transformers) (2.4.7)\r\nRequirement already satisfied: sentencepiece in /usr/local/lib/python3.7/dist-packages (0.1.95)\r\n```",

"I restart the runtime and it is fixed now. Seems like some strange compatibility issues with colab."

] | 1,617 | 1,617 | 1,617 | NONE | null | I tried the example code for ALBERT model on Google Colab:

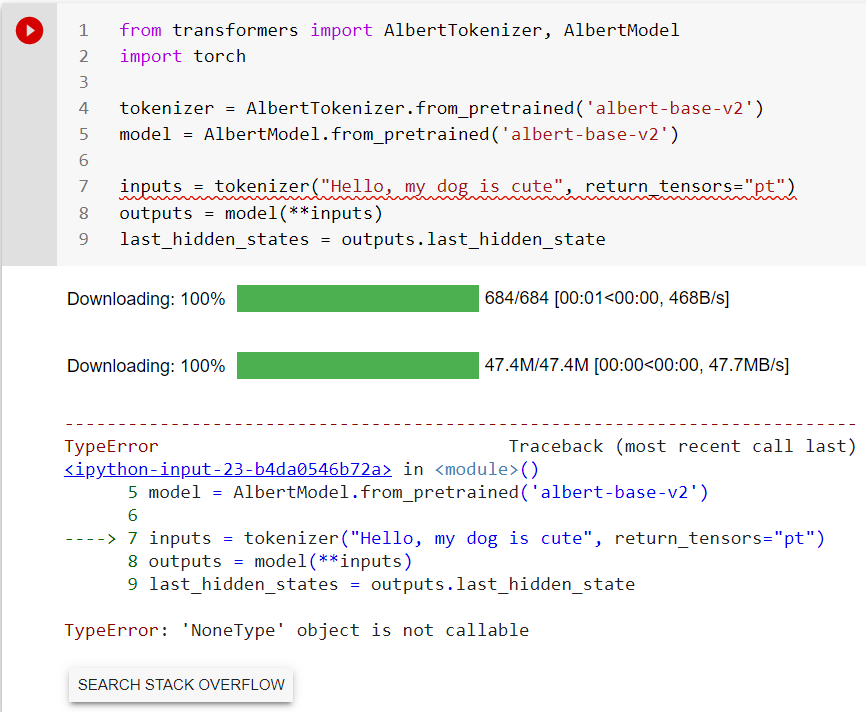

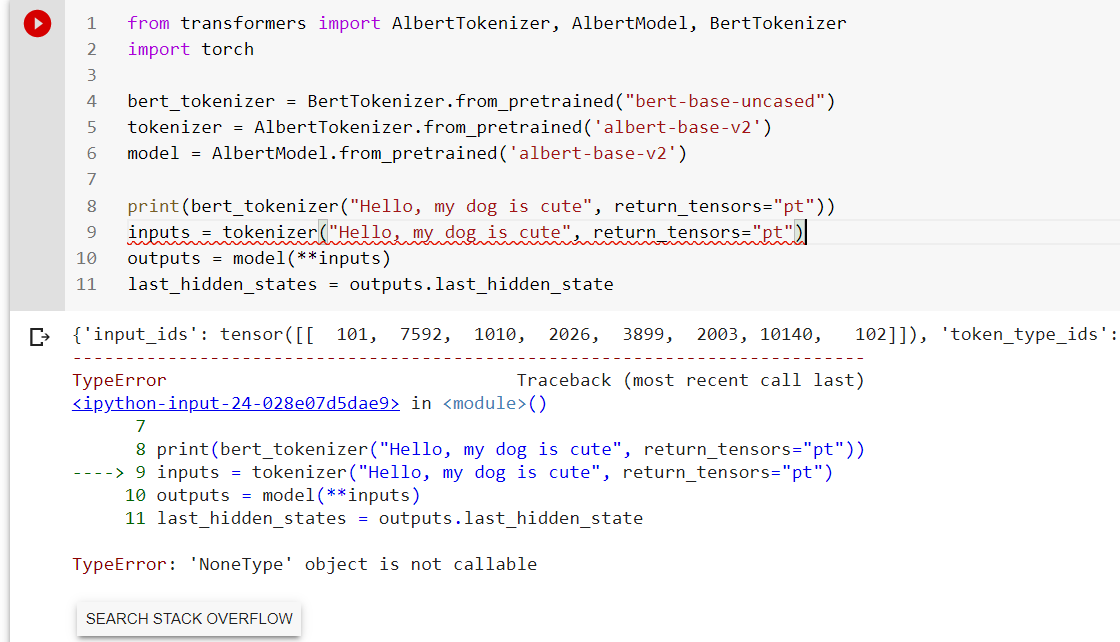

```

from transformers import AlbertTokenizer, AlbertModel

import torch

tokenizer = AlbertTokenizer.from_pretrained('albert-base-v2')

model = AlbertModel.from_pretrained('albert-base-v2')

inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

outputs = model(**inputs)

last_hidden_states = outputs.last_hidden_state

```

Error Message:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-23-b4da0546b72a> in <module>()

5 model = AlbertModel.from_pretrained('albert-base-v2')

6

----> 7 inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

8 outputs = model(**inputs)

9 last_hidden_states = outputs.last_hidden_state

TypeError: 'NoneType' object is not callable

```

It seems that the ALBERT tokenizer failed to load correctly. And I tried BERT's pretrained tokenizer and it could be loaded correctly instead.

BERT tokenizer:

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11124/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11124/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11123 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11123/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11123/comments | https://api.github.com/repos/huggingface/transformers/issues/11123/events | https://github.com/huggingface/transformers/pull/11123 | 852,568,686 | MDExOlB1bGxSZXF1ZXN0NjEwNzk3NTc5 | 11,123 | Adds use_auth_token with pipelines | {

"login": "philschmid",

"id": 32632186,

"node_id": "MDQ6VXNlcjMyNjMyMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/32632186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/philschmid",

"html_url": "https://github.com/philschmid",

"followers_url": "https://api.github.com/users/philschmid/followers",

"following_url": "https://api.github.com/users/philschmid/following{/other_user}",

"gists_url": "https://api.github.com/users/philschmid/gists{/gist_id}",

"starred_url": "https://api.github.com/users/philschmid/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/philschmid/subscriptions",

"organizations_url": "https://api.github.com/users/philschmid/orgs",

"repos_url": "https://api.github.com/users/philschmid/repos",

"events_url": "https://api.github.com/users/philschmid/events{/privacy}",

"received_events_url": "https://api.github.com/users/philschmid/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,619 | 1,617 | MEMBER | null | # What does this PR do?

This PR adds `use_auth_token` as a named parameter to the `pipeline`. Also fixed `AutoConfig.from_pretrained` adding the `model_kwargs` as `**kwargs` to load private model with `use_auth_token`.

**Possible Usage for `pipeline` with `use_auth_token`:**

with model_kwargs

```python

hf_pipeline = pipeline('sentiment-analysis',

model='philschmid/sagemaker-getting-started',

tokenizer='philschmid/sagemaker-getting-started',

model_kwargs={"use_auth_token": "xxx"})

```

as named paramter

```python

hf_pipeline = pipeline('sentiment-analysis',

model='philschmid/sagemaker-getting-started',

tokenizer='philschmid/sagemaker-getting-started',

use_auth_token = "xxx")

```

cc @Narsil | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11123/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11123/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11123",

"html_url": "https://github.com/huggingface/transformers/pull/11123",

"diff_url": "https://github.com/huggingface/transformers/pull/11123.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11123.patch",

"merged_at": 1617820380000

} |

https://api.github.com/repos/huggingface/transformers/issues/11122 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11122/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11122/comments | https://api.github.com/repos/huggingface/transformers/issues/11122/events | https://github.com/huggingface/transformers/pull/11122 | 852,483,434 | MDExOlB1bGxSZXF1ZXN0NjEwNzI4MDcx | 11,122 | fixed max_length in beam_search() and group_beam_search() to use beam… | {

"login": "GeetDsa",

"id": 13940397,

"node_id": "MDQ6VXNlcjEzOTQwMzk3",

"avatar_url": "https://avatars.githubusercontent.com/u/13940397?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/GeetDsa",

"html_url": "https://github.com/GeetDsa",

"followers_url": "https://api.github.com/users/GeetDsa/followers",

"following_url": "https://api.github.com/users/GeetDsa/following{/other_user}",

"gists_url": "https://api.github.com/users/GeetDsa/gists{/gist_id}",

"starred_url": "https://api.github.com/users/GeetDsa/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/GeetDsa/subscriptions",

"organizations_url": "https://api.github.com/users/GeetDsa/orgs",

"repos_url": "https://api.github.com/users/GeetDsa/repos",

"events_url": "https://api.github.com/users/GeetDsa/events{/privacy}",

"received_events_url": "https://api.github.com/users/GeetDsa/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"hi @GeetDsa \r\n\r\nThanks a lot for the PR. I understand the issue and IMO what should be done here is to make sure to pass the same `max_length` to the `BeamScorer` and `beam_search` instead of changing the method.\r\n\r\nThis is because the overall philosophy of `generate` is that whenever some argument is `None` its value should explicitly default to the value specified in `config`. This how all generation methods work.",

"Thanks for the issue & PR @GeetDsa! I agree with @patil-suraj that we should not change the way `max_length` is set in `beam_search`.\r\n\r\nOverall, the problem IMO is actually that `BeamScorer` has a `max_length` attribute... => this shouldn't be the case IMO:\r\n- `BeamHypotheses` has a `max_length` attribute that is unused and can be removed\r\n- `BeamSearchScorer` has a `max_length` attribute that is only used for the function `finalize` => the better approach here would be too pass `max_length` as an argument to `finalize(...)` IMO\r\n\r\nThis solution will then ensure that only one `max_length` is being used and should also help to refactor out `max_length` cc @Narsil longterm.\r\n\r\nDo you want to give it a try @GeetDsa ? :-)",

"> Thanks for the issue & PR @GeetDsa! I agree with @patil-suraj that we should not change the way `max_length` is set in `beam_search`.\r\n> \r\n> Overall, the problem IMO is actually that `BeamScorer` has a `max_length` attribute... => this shouldn't be the case IMO:\r\n> \r\n> * `BeamHypotheses` has a `max_length` attribute that is unused and can be removed\r\n> * `BeamSearchScorer` has a `max_length` attribute that is only used for the function `finalize` => the better approach here would be too pass `max_length` as an argument to `finalize(...)` IMO\r\n> \r\n> This solution will then ensure that only one `max_length` is being used and should also help to refactor out `max_length` cc @Narsil longterm.\r\n> \r\n> Do you want to give it a try @GeetDsa ? :-)\r\n\r\nI can give a try :)\r\n",

"> BeamHypotheses has a max_length attribute that is unused and can be removed\r\n\r\nNice !\r\n\r\n> BeamSearchScorer has a max_length attribute that is only used for the function finalize => the better approach here would be too pass max_length as an argument to finalize(...) IMO\r\n\r\nSeems easier.\r\n@GeetDsa Do you think you could also add a test that reproduces your issue without your fix and that passes with the fix ? That will make backward compatibility easier to test (we're heading towards a direction to remove `max_length` as much as possible while maintaining backward compatbility)",

"I have created a new pull request #11378 ; @Narsil, I think it will be little hard and time consuming for me to implement a test as I am not well-versed with it.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,617 | 1,622 | 1,622 | CONTRIBUTOR | null | …_scorer.max_length

# What does this PR do?

Fixes the issue #11040

`beam_search()` and `group_beam_search()` uses `beam_scorer.max_length` if `max_length` is not explicitly passed.

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes #11040

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11122/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11122/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11122",

"html_url": "https://github.com/huggingface/transformers/pull/11122",

"diff_url": "https://github.com/huggingface/transformers/pull/11122.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11122.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/11121 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11121/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11121/comments | https://api.github.com/repos/huggingface/transformers/issues/11121/events | https://github.com/huggingface/transformers/issues/11121 | 852,460,423 | MDU6SXNzdWU4NTI0NjA0MjM= | 11,121 | Errors in inference API | {

"login": "guplersaxanoid",

"id": 40036742,

"node_id": "MDQ6VXNlcjQwMDM2NzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/40036742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/guplersaxanoid",

"html_url": "https://github.com/guplersaxanoid",

"followers_url": "https://api.github.com/users/guplersaxanoid/followers",

"following_url": "https://api.github.com/users/guplersaxanoid/following{/other_user}",

"gists_url": "https://api.github.com/users/guplersaxanoid/gists{/gist_id}",

"starred_url": "https://api.github.com/users/guplersaxanoid/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/guplersaxanoid/subscriptions",

"organizations_url": "https://api.github.com/users/guplersaxanoid/orgs",

"repos_url": "https://api.github.com/users/guplersaxanoid/repos",

"events_url": "https://api.github.com/users/guplersaxanoid/events{/privacy}",

"received_events_url": "https://api.github.com/users/guplersaxanoid/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Maybe of interest to @Narsil ",

"@DeveloperInProgress .\r\nSorry, there is no exhaustive list of those as of yet (as a number of them are actual exceptions raised by transformers itself)\r\n\r\nWhat I can say, is that Environment and ValueError are simply displayed as is and treated as user error (usually problem in the model configuration or inputs of the model).\r\n\r\nAny other exception is raised as a server error (and looked at regularly).\r\n\r\nAny \"unknown error\" is an error for which we can't find a good message, we try to accompany it (when it's possible) with any warnings that might have been raised earlier by transformers (for instance too long sequences make certain models crash, deep cuda errors are unusable as is, the warning is better).\r\n\r\nDoes that answer your question ?",

"@Narsil gotcha"

] | 1,617 | 1,617 | 1,617 | NONE | null | I understand that the inference API returns a json with "error" field if an error occurs. Where can I find the list of such possible errors? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11121/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11121/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/11120 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11120/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11120/comments | https://api.github.com/repos/huggingface/transformers/issues/11120/events | https://github.com/huggingface/transformers/pull/11120 | 852,413,510 | MDExOlB1bGxSZXF1ZXN0NjEwNjcwMjU0 | 11,120 | Adds a note to resize the token embedding matrix when adding special … | {

"login": "LysandreJik",

"id": 30755778,

"node_id": "MDQ6VXNlcjMwNzU1Nzc4",

"avatar_url": "https://avatars.githubusercontent.com/u/30755778?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/LysandreJik",

"html_url": "https://github.com/LysandreJik",

"followers_url": "https://api.github.com/users/LysandreJik/followers",

"following_url": "https://api.github.com/users/LysandreJik/following{/other_user}",

"gists_url": "https://api.github.com/users/LysandreJik/gists{/gist_id}",

"starred_url": "https://api.github.com/users/LysandreJik/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LysandreJik/subscriptions",

"organizations_url": "https://api.github.com/users/LysandreJik/orgs",

"repos_url": "https://api.github.com/users/LysandreJik/repos",

"events_url": "https://api.github.com/users/LysandreJik/events{/privacy}",

"received_events_url": "https://api.github.com/users/LysandreJik/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,617 | 1,617 | MEMBER | null | …tokens

This was added to the `add_tokens` method, but was forgotten on the `add_special_tokens` method.

See the updated docs: https://191874-155220641-gh.circle-artifacts.com/0/docs/_build/html/internal/tokenization_utils.html?highlight=add_special_tokens#transformers.tokenization_utils_base.SpecialTokensMixin.add_special_tokens

closes https://github.com/huggingface/transformers/issues/11102 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11120/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11120/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11120",

"html_url": "https://github.com/huggingface/transformers/pull/11120",

"diff_url": "https://github.com/huggingface/transformers/pull/11120.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11120.patch",

"merged_at": 1617804405000

} |

https://api.github.com/repos/huggingface/transformers/issues/11119 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11119/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11119/comments | https://api.github.com/repos/huggingface/transformers/issues/11119/events | https://github.com/huggingface/transformers/pull/11119 | 852,397,579 | MDExOlB1bGxSZXF1ZXN0NjEwNjU2NzMw | 11,119 | updated user permissions based on umask | {

"login": "bhavitvyamalik",

"id": 19718818,

"node_id": "MDQ6VXNlcjE5NzE4ODE4",

"avatar_url": "https://avatars.githubusercontent.com/u/19718818?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/bhavitvyamalik",

"html_url": "https://github.com/bhavitvyamalik",

"followers_url": "https://api.github.com/users/bhavitvyamalik/followers",

"following_url": "https://api.github.com/users/bhavitvyamalik/following{/other_user}",

"gists_url": "https://api.github.com/users/bhavitvyamalik/gists{/gist_id}",

"starred_url": "https://api.github.com/users/bhavitvyamalik/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/bhavitvyamalik/subscriptions",

"organizations_url": "https://api.github.com/users/bhavitvyamalik/orgs",

"repos_url": "https://api.github.com/users/bhavitvyamalik/repos",

"events_url": "https://api.github.com/users/bhavitvyamalik/events{/privacy}",

"received_events_url": "https://api.github.com/users/bhavitvyamalik/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Thank you, @bhavitvyamalik. This is excellent\r\n\r\nLet's first review what we are trying to correct.\r\n\r\nLooking under `~/.cache/huggingface/transformers/` I see:\r\n```\r\n-rw------- 1 stas stas 1.1K Oct 16 13:27 00209bab0f0b1af5ef50d4d8a2f8fb0589ec747d29d975f496d377312fc50ea7.688a102406298bdd2190bac9e0c6da7c3ac2bfa26aa40e9e07904fa\r\ne563aeec3\r\n-rw-rw-r-- 1 stas stas 158 Oct 16 13:27 00209bab0f0b1af5ef50d4d8a2f8fb0589ec747d29d975f496d377312fc50ea7.688a102406298bdd2190bac9e0c6da7c3ac2bfa26aa40e9e07904fa\r\ne563aeec3.json\r\n-rwxrwxr-x 1 stas stas 0 Oct 16 13:27 00209bab0f0b1af5ef50d4d8a2f8fb0589ec747d29d975f496d377312fc50ea7.688a102406298bdd2190bac9e0c6da7c3ac2bfa26aa40\r\ne9e07904fae563aeec3.lock*\r\n-rw------- 1 stas stas 4.9M Oct 14 11:56 002911b8e4cea0a107864f5b17f20c10f613d256e92e3c1247d6d174fbf56fe5.bf6ebaf6162cfbfbad2ce1909278a9ea1fbfe9284d318bff8bccddf\r\ndaa104205\r\n-rw-rw-r-- 1 stas stas 130 Oct 14 11:56 002911b8e4cea0a107864f5b17f20c10f613d256e92e3c1247d6d174fbf56fe5.bf6ebaf6162cfbfbad2ce1909278a9ea1fbfe9284d318bff8bccddf\r\ndaa104205.json\r\n```\r\n\r\nSo some files already have the correct perms `-rw-rw-r--`, but the others don't (`-rw-------` missing group/other perms)\r\n\r\nIf I try to get a new cached file:\r\n\r\n```\r\nPYTHONPATH=\"src\" python -c \"from transformers import AutoModel; AutoModel.from_pretrained('sshleifer/student_pegasus_xsum_16_8')\"\r\n```\r\n\r\nwe can see how the tempfile uses user-only perms while downloading it:\r\n```\r\n-rw------- 1 stas stas 246M Apr 7 10:06 tmplse9bwr1\r\n```\r\n\r\nand then your fix, adjusts the perms:\r\n\r\n```\r\n-rw-rw-r-- 1 stas stas 1.7G Apr 7 10:08 6636af980d08a3205d570f287ec5867d09d09c71d8d192861bf72e639a8c42fc.c7a07b57c0fbcb714c5b77aa08bea4f26ee23043f3c28e7c1af1153\r\na4bdfeea5\r\n-rw-rw-r-- 1 stas stas 180 Apr 7 10:08 6636af980d08a3205d570f287ec5867d09d09c71d8d192861bf72e639a8c42fc.c7a07b57c0fbcb714c5b77aa08bea4f26ee23043f3c28e7c1af1153\r\na4bdfeea5.json\r\n-rwxrwxr-x 1 stas stas 0 Apr 7 10:06 6636af980d08a3205d570f287ec5867d09d09c71d8d192861bf72e639a8c42fc.c7a07b57c0fbcb714c5b77aa08bea4f26ee23043f3c28\r\ne7c1af1153a4bdfeea5.lock*\r\n```\r\n\r\nSo this is goodness.",

"There is also a recipe subclassing `NamedTemporaryFile` https://stackoverflow.com/a/44130605/9201239 so it's even more atomic. But I'm not sure how that would work with resumes. I think your way is just fine for now and if we start doing more of that we will use a subclass that fixes perms internally.\r\n",

"That makes sense, moving it from `cached_path` to `get_from_cache`. Let me push your suggested changes. Yeah, even I came across this subclassing `NamedTemporaryFile` when I had to fix this for Datasets but I felt adding more such tempfiles and then using subclassing would be more beneficial.",

"Any plans for asking user what file permission they want for this model?",

"> Any plans for asking user what file permission they want for this model?\r\n\r\nCould you elaborate why would a user need to do that?\r\n\r\nFor shared environment this is a domain of `umask` and may be \"sticky bit\".\r\n",

"When we started working on this feature for Datasets someone suggested this to us:\r\n\r\n> For example, I might start a training run, downloading a dataset. Then, a couple of days later, a collaborator using the same repository might want to use the same dataset on the same shared filesystem, but won't be able to under the default permissions.\r\n> \r\n> Being able to specify directly in the top-level load_dataset() call seems important, but an equally valid option would be to just inherit from the running user's umask (this should probably be the default anyway).\r\n> \r\n> So basically, argument that takes a custom set of permissions, and by default, use the running user's umask!\r\n\r\nSay if someone doesn't want the default running user's umask then they can specify what file permissions they want for that model. Incase they opt for this, we can avoid the umask part and directly `chmod` those permissions for the newly downloaded model. I'm not sure how useful would this be from the context from Transformers library.",

"Thank you for sharing the use case, that was helpful.\r\n\r\nBut this can be solved on the unix side of things. If you want a shared directory you can set it up as such. If you need to share files with other members of the group you put them into the same unix group.\r\n\r\nIMHO, in general programs shouldn't mess with permissions directly. Other than the fix you just did which compensates for the temp facility restrictions.\r\n",

"@LysandreJik, could you please have a look so that we could merge this? Thank you!",

"@stas00 @bhavitvyamalik I must say that I am not familiar with the umask command, but it seems = as @LysandreJik rightfully points out in my feature request https://github.com/huggingface/transformers/issues/12169#issuecomment-861467551 - that this may solve the issue that we were having.\r\n\r\nIn brief (but please read the whole issue if you have the time): we are trying to use a single shared cache directory for all our users to prevent duplicate models. This did not work as we were running into permission errors (due to `-rw-------` as @stas00 shows). Does this PR change the behaviour of created/downloaded files so that they adhere to the permission level of the current directory? Or at least that those files are accessible by all users?\r\n\r\nThanks!",

"I think, yes, this was the point of this PR. The problem is that `tempfile` forces user-only perms, so this PR restored them back to `umask`'s setting.\r\n\r\nOne other thing that helps is setting set group id bit `g+s`, which makes sub-dirs and files create under such dirs inherit the perms of the parent dir.\r\n\r\nSo your setup can be something like:\r\n\r\n```\r\nsudo find /shared/path -type d -execdir chmod g+rwxs {} \\;\r\nsudo find /shared/path -type f -execdir chmod g+rw {} \\;\r\nsudo chgrp -R shared_group_name /shared/path\r\n```\r\n\r\nwhere `/shared/path` is obvious and `shared_group_name` is the group name that all users that should have access belong to.\r\n\r\nFinally, each user having `umask 0002` or `umask 0007` in their `~/.bashrc` will make sure that the files will be read/write-able by the group on creation. `0007` is if you don't want files to be readable by others.\r\n\r\nNote that some unix programs don't respect set gid, e.g. `scp` ignores any sub-folders copied with `scp -r` and will set them to user's `umask` perms and drop setgid. But I don't think you'll be affected by it.",

"Thanks, this looks promising! We currently have a \"hack\" implemented that simply watches for new file changes and on the creation of a new file, changes the permissions. Not ideal, but seeing that some colleagues use older versions of transformers in their experiments, we will have to make do for now."

] | 1,617 | 1,623 | 1,620 | CONTRIBUTOR | null | # What does this PR do?

Fixes [#2065](https://github.com/huggingface/datasets/issues/2065) where cached model's permissions change depending on running user's umask.

## Who can review?

@thomwolf @stas00 please let me know if any other changes are required in this.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11119/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11119/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11119",

"html_url": "https://github.com/huggingface/transformers/pull/11119",

"diff_url": "https://github.com/huggingface/transformers/pull/11119.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/11119.patch",

"merged_at": 1620629129000

} |

https://api.github.com/repos/huggingface/transformers/issues/11118 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/11118/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/11118/comments | https://api.github.com/repos/huggingface/transformers/issues/11118/events | https://github.com/huggingface/transformers/pull/11118 | 852,394,010 | MDExOlB1bGxSZXF1ZXN0NjEwNjUzNzM4 | 11,118 | Some styling of the training table in Notebooks | {

"login": "sgugger",

"id": 35901082,

"node_id": "MDQ6VXNlcjM1OTAxMDgy",

"avatar_url": "https://avatars.githubusercontent.com/u/35901082?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sgugger",

"html_url": "https://github.com/sgugger",

"followers_url": "https://api.github.com/users/sgugger/followers",

"following_url": "https://api.github.com/users/sgugger/following{/other_user}",

"gists_url": "https://api.github.com/users/sgugger/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sgugger/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sgugger/subscriptions",

"organizations_url": "https://api.github.com/users/sgugger/orgs",

"repos_url": "https://api.github.com/users/sgugger/repos",

"events_url": "https://api.github.com/users/sgugger/events{/privacy}",

"received_events_url": "https://api.github.com/users/sgugger/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,617 | 1,617 | 1,617 | COLLABORATOR | null | # What does this PR do?

This PR removes the custom styling of the progress bar, because the default one is actually prettier and it can cause some lag on some browsers (like Safari) to recompute the style at each update of the progress bar.

It also removes the timing metrics which do not make much sense form the table (there are still in the log history). | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/11118/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/11118/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/11118",

"html_url": "https://github.com/huggingface/transformers/pull/11118",

"diff_url": "https://github.com/huggingface/transformers/pull/11118.diff",