| url (string, length 62-66) | repository_url (string, 1 distinct value) | labels_url (string, length 76-80) | comments_url (string, length 71-75) | events_url (string, length 69-73) | html_url (string, length 50-56) | id (int64, 377M-2.15B) | node_id (string, length 18-32) | number (int64, 1-29.2k) | title (string, length 1-487) | user (dict) | labels (list) | state (string, 2 distinct values) | locked (bool, 2 classes) | assignee (dict) | assignees (list) | comments (sequence) | created_at (int64, 1.54k-1.71k) | updated_at (int64, 1.54k-1.71k) | closed_at (int64, 1.54k-1.71k, nullable) | author_association (string, 4 distinct values) | active_lock_reason (string, 2 distinct values) | body (string, length 0-234k, nullable) | reactions (dict) | timeline_url (string, length 71-75) | state_reason (string, 3 distinct values) | draft (bool, 2 classes) | pull_request (dict) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/10230 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10230/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10230/comments | https://api.github.com/repos/huggingface/transformers/issues/10230/events | https://github.com/huggingface/transformers/pull/10230 | 810,036,646 | MDExOlB1bGxSZXF1ZXN0NTc0NzkyNjcx | 10,230 | Making TF GPT2 compliant with XLA and AMP | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,613 | 1,613 | 1,613 | CONTRIBUTOR | null | # What does this PR do?

This PR makes the TF GPT2 model compliant with XLA and AMP. All the slow tests are passing as well. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10230/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10230/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10230",

"html_url": "https://github.com/huggingface/transformers/pull/10230",

"diff_url": "https://github.com/huggingface/transformers/pull/10230.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10230.patch",

"merged_at": 1613637362000

} |

https://api.github.com/repos/huggingface/transformers/issues/10229 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10229/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10229/comments | https://api.github.com/repos/huggingface/transformers/issues/10229/events | https://github.com/huggingface/transformers/pull/10229 | 810,020,839 | MDExOlB1bGxSZXF1ZXN0NTc0Nzc5NTUy | 10,229 | Introduce warmup_ratio training argument | {

"login": "tanmay17061",

"id": 32801726,

"node_id": "MDQ6VXNlcjMyODAxNzI2",

"avatar_url": "https://avatars.githubusercontent.com/u/32801726?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tanmay17061",

"html_url": "https://github.com/tanmay17061",

"followers_url": "https://api.github.com/users/tanmay17061/followers",

"following_url": "https://api.github.com/users/tanmay17061/following{/other_user}",

"gists_url": "https://api.github.com/users/tanmay17061/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tanmay17061/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tanmay17061/subscriptions",

"organizations_url": "https://api.github.com/users/tanmay17061/orgs",

"repos_url": "https://api.github.com/users/tanmay17061/repos",

"events_url": "https://api.github.com/users/tanmay17061/events{/privacy}",

"received_events_url": "https://api.github.com/users/tanmay17061/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"As per current implementation, any non-zero value given for `warmup_steps` will override any effects of `warmup_ratio`. It made sense for me to give higher precedence to `warmup_steps` as it seems to be the more inconvenient argument of the 2 to provide from user perspective. Please let me know if this default behaviour is to be changed.\r\n\r\nPS: this is my first PR, so feel free to correct me. I will be happy to accommodate 😄 ",

"Thanks for the review! I've incorporated the comments. \r\nDo let me know if anything else needs to be addressed.",

"Thanks for your comments! \r\nAgreed, the code looks much more readable now. \r\nDo let me know if there can be any more improvement. \r\n\r\nThanks.",

"It would indeed be better in `TrainingArguments.__post_init__`: the rationale for that is that when instantiating an object with wrong values, we want the error to be raised as soon as possible and as close as possible to the source for easy debugging.\r\n\r\nIn this case, the problem should appear at the line that parses the `TrainingArguments` or when they are created.",

"Thanks! Taken care of it.",

"Thanks for adding this functionality!"

] | 1,613 | 1,613 | 1,613 | CONTRIBUTOR | null | Introduce warmup_ratio training argument in both TrainingArguments and TFTrainingArguments classes (#6673)

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

This PR adds a new argument `warmup_ratio` to both the `TrainingArguments` and `TFTrainingArguments` classes. It specifies the fraction of total training steps over which linear warmup is applied.

This is especially convenient when the user wants to experiment with the `num_train_epochs` or `max_steps` arguments while keeping the warmup ratio constant.
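A minimal sketch of the behaviour discussed in this PR's review comments: a non-zero `warmup_steps` overrides `warmup_ratio`, and an invalid ratio is rejected at construction time in `__post_init__`. `WarmupArgs` and `num_warmup_steps` are hypothetical names for illustration, not the actual `TrainingArguments` code; the `[0, 1]` range check and rounding up with `math.ceil` are assumptions of this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class WarmupArgs:
    """Hypothetical stand-in for the warmup fields of TrainingArguments."""

    warmup_steps: int = 0
    warmup_ratio: float = 0.0

    def __post_init__(self) -> None:
        # Fail at construction time so a bad value surfaces close to its source
        # (assumption: a valid ratio lies in [0, 1]).
        if not 0.0 <= self.warmup_ratio <= 1.0:
            raise ValueError(f"warmup_ratio must be in [0, 1], got {self.warmup_ratio}")

    def num_warmup_steps(self, num_training_steps: int) -> int:
        # A non-zero warmup_steps takes precedence over warmup_ratio.
        if self.warmup_steps > 0:
            return self.warmup_steps
        # Otherwise warm up for the given fraction of all training steps
        # (rounding up is an assumption of this sketch).
        return math.ceil(num_training_steps * self.warmup_ratio)
```

For example, with `warmup_ratio=0.25` and 1000 training steps this yields 250 warmup steps. Doubling the number of training steps (say, by doubling `num_train_epochs`) doubles the warmup length, so the ratio stays constant.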

Fixes #6673

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [x] Was this discussed/approved via a GitHub issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case. [Link to the issue raised](https://github.com/huggingface/transformers/issues/6673).

- [x] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @jplu

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- nlp datasets: [different repo](https://github.com/huggingface/nlp)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

Since this modifies the trainer: @sgugger | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10229/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10229/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10229",

"html_url": "https://github.com/huggingface/transformers/pull/10229",

"diff_url": "https://github.com/huggingface/transformers/pull/10229.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10229.patch",

"merged_at": 1613669013000

} |

https://api.github.com/repos/huggingface/transformers/issues/10228 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10228/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10228/comments | https://api.github.com/repos/huggingface/transformers/issues/10228/events | https://github.com/huggingface/transformers/issues/10228 | 810,013,350 | MDU6SXNzdWU4MTAwMTMzNTA= | 10,228 | Converting original T5 to be used in Transformers | {

"login": "marton-avrios",

"id": 59836119,

"node_id": "MDQ6VXNlcjU5ODM2MTE5",

"avatar_url": "https://avatars.githubusercontent.com/u/59836119?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/marton-avrios",

"html_url": "https://github.com/marton-avrios",

"followers_url": "https://api.github.com/users/marton-avrios/followers",

"following_url": "https://api.github.com/users/marton-avrios/following{/other_user}",

"gists_url": "https://api.github.com/users/marton-avrios/gists{/gist_id}",

"starred_url": "https://api.github.com/users/marton-avrios/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/marton-avrios/subscriptions",

"organizations_url": "https://api.github.com/users/marton-avrios/orgs",

"repos_url": "https://api.github.com/users/marton-avrios/repos",

"events_url": "https://api.github.com/users/marton-avrios/events{/privacy}",

"received_events_url": "https://api.github.com/users/marton-avrios/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi,\r\n\r\nthis file exists, it can be found here: https://github.com/huggingface/transformers/blob/master/src/transformers/models/t5/convert_t5_original_tf_checkpoint_to_pytorch.py\r\n\r\n",

"Thank you! I tried the script and it misses a `config.json` file. Where can I find this?",

"The config.json should be part of the original T5 files, which can be found [here](https://github.com/google-research/text-to-text-transfer-transformer#released-model-checkpoints).\r\n\r\nHowever, I wonder why you want to convert the original checkpoints yourself, because this has already been done by the authors of HuggingFace. You can find all T5 checkpoints on the [hub](https://huggingface.co/models?search=google/t5). ",

"Because I finetuned them on TPU which is not possible in Transformers yet (at least not in TF) and I want to use Transformers for prediction.",

"...I think you linked this issue as location for original T5 files",

"Apologies, updated the URL. The `config.json` file should look something like [this](https://huggingface.co/google/t5-large-ssm-nq/blob/main/config.json), containing all the hyperparameter values. When you fine-tuned T5 on TPUs, do you have a configuration available?",

"Thanks! (you are a lifesaver by the way with these response times :)). I finetuned using the original repo which uses Mesh Tensorflow and it exports checkpoints in the same format as the original published checkpoints. And there is no `config.json` file, not even in the original published checkpoints you linked. For future reference: you can look at the files by going to this url: https://console.cloud.google.com/storage/browser/t5-data/pretrained_models/small if you have a google cloud account.",

"I see that they store configurations in .gin files, like this one: https://console.cloud.google.com/storage/browser/_details/t5-data/pretrained_models/small/operative_config.gin\r\n\r\nWhen opening this on my laptop in Notepad, this looks like this:\r\n\r\n```\r\nimport t5.models.mesh_transformer\r\nimport t5.data.sentencepiece_vocabulary\r\nimport mesh_tensorflow.optimize\r\nimport mesh_tensorflow.transformer.dataset\r\nimport mesh_tensorflow.transformer.learning_rate_schedules\r\nimport mesh_tensorflow.transformer.t2t_vocabulary\r\nimport mesh_tensorflow.transformer.transformer_layers\r\nimport mesh_tensorflow.transformer.utils\r\n\r\n# Macros:\r\n# ==============================================================================\r\nd_ff = 2048\r\nd_kv = 64\r\nd_model = 512\r\ndropout_rate = 0.1\r\ninputs_length = 512\r\nmean_noise_span_length = 3.0\r\nMIXTURE_NAME = 'all_mix'\r\nnoise_density = 0.15\r\nnum_heads = 8\r\nnum_layers = 6\r\ntargets_length = 512\r\ninit_checkpoint = \"gs://t5-data/pretrained_models/small/model.ckpt-1000000\"\r\ntokens_per_batch = 1048576\r\n\r\n# Parameters for AdafactorOptimizer:\r\n# ==============================================================================\r\nAdafactorOptimizer.beta1 = 0.0\r\nAdafactorOptimizer.clipping_threshold = 1.0\r\nAdafactorOptimizer.decay_rate = None\r\nAdafactorOptimizer.epsilon1 = 1e-30\r\nAdafactorOptimizer.epsilon2 = 0.001\r\nAdafactorOptimizer.factored = True\r\nAdafactorOptimizer.min_dim_size_to_factor = 128\r\nAdafactorOptimizer.multiply_by_parameter_scale = True\r\n\r\n# Parameters for Bitransformer:\r\n# ==============================================================================\r\nBitransformer.shared_embedding = True\r\n\r\n# Parameters for denoise:\r\n# ==============================================================================\r\ndenoise.inputs_fn = @preprocessors.noise_span_to_unique_sentinel\r\ndenoise.noise_density = %noise_density\r\ndenoise.noise_mask_fn = 
@preprocessors.random_spans_noise_mask\r\ndenoise.targets_fn = @preprocessors.nonnoise_span_to_unique_sentinel\r\n\r\n# Parameters for decoder/DenseReluDense:\r\n# ==============================================================================\r\ndecoder/DenseReluDense.dropout_rate = %dropout_rate\r\ndecoder/DenseReluDense.hidden_size = %d_ff\r\n\r\n# Parameters for encoder/DenseReluDense:\r\n# ==============================================================================\r\nencoder/DenseReluDense.dropout_rate = %dropout_rate\r\nencoder/DenseReluDense.hidden_size = %d_ff\r\n\r\n# Parameters for decoder/EncDecAttention:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for get_sentencepiece_model_path:\r\n# ==============================================================================\r\nget_sentencepiece_model_path.mixture_or_task_name = %MIXTURE_NAME\r\n\r\n# Parameters for get_variable_dtype:\r\n# ==============================================================================\r\nget_variable_dtype.activation_dtype = 'bfloat16'\r\n\r\n# Parameters for decoder/LayerStack:\r\n# ==============================================================================\r\ndecoder/LayerStack.dropout_rate = %dropout_rate\r\ndecoder/LayerStack.norm_epsilon = 1e-06\r\n\r\n# Parameters for encoder/LayerStack:\r\n# ==============================================================================\r\nencoder/LayerStack.dropout_rate = %dropout_rate\r\nencoder/LayerStack.norm_epsilon = 1e-06\r\n\r\n# Parameters for learning_rate_schedule_noam:\r\n# ==============================================================================\r\nlearning_rate_schedule_noam.linear_decay_fraction = 0.1\r\nlearning_rate_schedule_noam.multiplier = 1.0\r\nlearning_rate_schedule_noam.offset = 0\r\nlearning_rate_schedule_noam.warmup_steps = 10000\r\n\r\n# Parameters for make_bitransformer:\r\n# 
==============================================================================\r\nmake_bitransformer.decoder_name = 'decoder'\r\nmake_bitransformer.encoder_name = 'encoder'\r\n\r\n# Parameters for decoder/make_layer_stack:\r\n# ==============================================================================\r\ndecoder/make_layer_stack.block_scope = True\r\ndecoder/make_layer_stack.layers = \\\r\n [@mesh_tensorflow.transformer.transformer_layers.SelfAttention,\r\n @mesh_tensorflow.transformer.transformer_layers.EncDecAttention,\r\n @mesh_tensorflow.transformer.transformer_layers.DenseReluDense]\r\ndecoder/make_layer_stack.num_layers = %num_layers\r\n\r\n# Parameters for encoder/make_layer_stack:\r\n# ==============================================================================\r\nencoder/make_layer_stack.block_scope = True\r\nencoder/make_layer_stack.layers = \\\r\n [@mesh_tensorflow.transformer.transformer_layers.SelfAttention,\r\n @mesh_tensorflow.transformer.transformer_layers.DenseReluDense]\r\nencoder/make_layer_stack.num_layers = %num_layers\r\n\r\n# Parameters for mesh_train_dataset_fn:\r\n# ==============================================================================\r\nmesh_train_dataset_fn.mixture_or_task_name = %MIXTURE_NAME\r\n\r\n\r\n# Parameters for noise_span_to_unique_sentinel:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for nonnoise_span_to_unique_sentinel:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for pack_dataset:\r\n# ==============================================================================\r\n\r\n\r\n# Parameters for pack_or_pad:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for random_spans_helper:\r\n# 
==============================================================================\r\nrandom_spans_helper.extra_tokens_per_span_inputs = 1\r\nrandom_spans_helper.extra_tokens_per_span_targets = 1\r\nrandom_spans_helper.inputs_length = %inputs_length\r\nrandom_spans_helper.mean_noise_span_length = %mean_noise_span_length\r\nrandom_spans_helper.noise_density = %noise_density\r\n\r\n# Parameters for targets_length/random_spans_helper:\r\n# ==============================================================================\r\ntargets_length/random_spans_helper.extra_tokens_per_span_inputs = 1\r\ntargets_length/random_spans_helper.extra_tokens_per_span_targets = 1\r\ntargets_length/random_spans_helper.inputs_length = %inputs_length\r\ntargets_length/random_spans_helper.mean_noise_span_length = %mean_noise_span_length\r\ntargets_length/random_spans_helper.noise_density = %noise_density\r\n\r\n# Parameters for random_spans_noise_mask:\r\n# ==============================================================================\r\nrandom_spans_noise_mask.mean_noise_span_length = %mean_noise_span_length\r\n\r\n# Parameters for targets_length/random_spans_targets_length:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for random_spans_tokens_length:\r\n# ==============================================================================\r\n# None.\r\n\r\n# Parameters for rate_num_examples:\r\n# ==============================================================================\r\nrate_num_examples.maximum = 1000000.0\r\nrate_num_examples.scale = 1.0\r\nrate_num_examples.temperature = 1.0\r\n\r\n# Parameters for rate_unsupervised:\r\n# ==============================================================================\r\nrate_unsupervised.value = 710000.0\r\n\r\n# Parameters for reduce_concat_tokens:\r\n# ==============================================================================\r\nreduce_concat_tokens.batch_size = 
128\r\nreduce_concat_tokens.feature_key = 'targets'\r\n\r\n# Parameters for run:\r\n# ==============================================================================\r\nrun.autostack = True\r\nrun.batch_size = ('tokens_per_batch', %tokens_per_batch)\r\nrun.dataset_split = 'train'\r\nrun.ensemble_inputs = None\r\nrun.eval_checkpoint_step = None\r\nrun.eval_dataset_fn = None\r\nrun.eval_summary_dir = None\r\nrun.export_path = ''\r\nrun.iterations_per_loop = 100\r\nrun.keep_checkpoint_max = None\r\nrun.layout_rules = \\\r\n 'ensemble:ensemble,batch:batch,d_ff:model,heads:model,vocab:model,experts:batch'\r\nrun.learning_rate_schedule = @learning_rate_schedules.learning_rate_schedule_noam\r\nrun.mesh_shape = @mesh_tensorflow.transformer.utils.tpu_mesh_shape()\r\nrun.mode = 'train' \r\nrun.init_checkpoint = %init_checkpoint\r\nrun.model_type = 'bitransformer'\r\nrun.optimizer = @optimize.AdafactorOptimizer\r\nrun.perplexity_eval_steps = 10\r\nrun.predict_fn = None\r\nrun.save_checkpoints_steps = 2400\r\nrun.sequence_length = {'inputs': %inputs_length, 'targets': %targets_length}\r\nrun.train_dataset_fn = \\\r\n @t5.models.mesh_transformer.mesh_train_dataset_fn\r\nrun.train_steps = 1000000000\r\nrun.variable_filter = None\r\nrun.vocabulary = \\\r\n @t5.data.sentencepiece_vocabulary.SentencePieceVocabulary()\r\n\r\n# Parameters for select_random_chunk:\r\n# ==============================================================================\r\nselect_random_chunk.feature_key = 'targets'\r\nselect_random_chunk.max_length = 65536\r\n\r\n# Parameters for decoder/SelfAttention:\r\n# ==============================================================================\r\ndecoder/SelfAttention.attention_kwargs = None\r\ndecoder/SelfAttention.dropout_rate = %dropout_rate\r\ndecoder/SelfAttention.key_value_size = %d_kv\r\ndecoder/SelfAttention.num_heads = %num_heads\r\ndecoder/SelfAttention.num_memory_heads = 0\r\ndecoder/SelfAttention.relative_attention_num_buckets = 
32\r\ndecoder/SelfAttention.relative_attention_type = 'bias_shared'\r\ndecoder/SelfAttention.shared_kv = False\r\n\r\n# Parameters for encoder/SelfAttention:\r\n# ==============================================================================\r\nencoder/SelfAttention.attention_kwargs = None\r\nencoder/SelfAttention.dropout_rate = %dropout_rate\r\nencoder/SelfAttention.key_value_size = %d_kv\r\nencoder/SelfAttention.num_heads = %num_heads\r\nencoder/SelfAttention.num_memory_heads = 0\r\nencoder/SelfAttention.relative_attention_num_buckets = 32\r\nencoder/SelfAttention.relative_attention_type = 'bias_shared'\r\nencoder/SelfAttention.shared_kv = False\r\n\r\n# Parameters for SentencePieceVocabulary:\r\n# ==============================================================================\r\nSentencePieceVocabulary.extra_ids = 100\r\nSentencePieceVocabulary.sentencepiece_model_file = \\\r\n @t5.models.mesh_transformer.get_sentencepiece_model_path()\r\n\r\n# Parameters for serialize_num_microbatches:\r\n# ==============================================================================\r\nserialize_num_microbatches.tokens_per_microbatch_per_replica = 8192\r\n\r\n# Parameters for split_tokens:\r\n# ==============================================================================\r\nsplit_tokens.feature_key = 'targets'\r\nsplit_tokens.max_tokens_per_segment = @preprocessors.random_spans_tokens_length()\r\nsplit_tokens.min_tokens_per_segment = None\r\n\r\n# Parameters for tpu_estimator_model_fn:\r\n# ==============================================================================\r\ntpu_estimator_model_fn.init_checkpoint = %init_checkpoint\r\ntpu_estimator_model_fn.outer_batch_size = 1\r\ntpu_estimator_model_fn.tpu_summaries = False\r\n\r\n# Parameters for tpu_mesh_shape:\r\n# ==============================================================================\r\ntpu_mesh_shape.ensemble_parallelism = None\r\ntpu_mesh_shape.model_parallelism = 1\r\ntpu_mesh_shape.tpu_topology = '8x8'\r\n\r\n# 
Parameters for decoder/Unitransformer:\r\n# ==============================================================================\r\ndecoder/Unitransformer.d_model = %d_model\r\ndecoder/Unitransformer.ensemble = None\r\ndecoder/Unitransformer.input_full_attention = False\r\ndecoder/Unitransformer.label_smoothing = 0.0\r\ndecoder/Unitransformer.loss_denominator = None\r\ndecoder/Unitransformer.loss_fn = None\r\ndecoder/Unitransformer.loss_on_targets_only = False\r\ndecoder/Unitransformer.max_length = 512\r\ndecoder/Unitransformer.positional_embedding = False\r\ndecoder/Unitransformer.shared_embedding_and_softmax_weights = True\r\ndecoder/Unitransformer.vocab_divisor = 128\r\ndecoder/Unitransformer.z_loss = 0.0001\r\ndecoder/Unitransformer.loss_denominator = 233472\r\n\r\n# Parameters for encoder/Unitransformer:\r\n# ==============================================================================\r\nencoder/Unitransformer.d_model = %d_model\r\nencoder/Unitransformer.ensemble = None\r\nencoder/Unitransformer.input_full_attention = False\r\nencoder/Unitransformer.label_smoothing = 0.0\r\nencoder/Unitransformer.loss_denominator = None\r\nencoder/Unitransformer.loss_fn = None\r\nencoder/Unitransformer.loss_on_targets_only = False\r\nencoder/Unitransformer.max_length = 512\r\nencoder/Unitransformer.positional_embedding = False\r\nencoder/Unitransformer.shared_embedding_and_softmax_weights = True\r\nencoder/Unitransformer.vocab_divisor = 128\r\nencoder/Unitransformer.z_loss = 0.0001\r\n\r\n# Parameters for unsupervised:\r\n# ==============================================================================\r\nunsupervised.preprocessors = \\\r\n [@preprocessors.select_random_chunk,\r\n @preprocessors.reduce_concat_tokens,\r\n @preprocessors.split_tokens,\r\n @preprocessors.denoise]\r\n```\r\n\r\n=> the relevant part here seems to be only the model hyperparameters:\r\n```\r\nd_ff = 2048\r\nd_kv = 64\r\nd_model = 512\r\ndropout_rate = 0.1\r\ninputs_length = 512\r\nmean_noise_span_length = 
3.0\r\nMIXTURE_NAME = 'all_mix'\r\nnoise_density = 0.15\r\nnum_heads = 8\r\nnum_layers = 6\r\ntargets_length = 512\r\ninit_checkpoint = \"gs://t5-data/pretrained_models/small/model.ckpt-1000000\"\r\ntokens_per_batch = 1048576\r\n```\r\nSo maybe you can create a config.json based on those?\r\n\r\n\r\n\r\n> Thanks! (you are a lifesaver by the way with these response times :)).\r\n\r\nAnd happy to hear this :) you're welcome",

"...actually the link you sent for the example config file proved to be extremely useful! Starting from there I've found all related files. Here is everything (including the config file) for T5 Small: https://huggingface.co/t5-small. Also an example workflow for future reference:\r\n```\r\nmkdir t5\r\ngsutil -m cp -r gs://t5-data/pretrained_models/small t5\r\npython ~/transformers/src/transformers/models/t5/convert_t5_original_tf_checkpoint_to_pytorch.py --tf_checkpoint_path t5/small/model.ckpt-1000000 --pytorch_dump_path t5-small-pt --config_file t5/small_config.json\r\n```"

] | 1,613 | 1,613 | 1,613 | NONE | null | I want to use the original T5 checkpoint in the Transformers library. I found multiple answers referring to `convert_t5_original_tf_checkpoint_to_pytorch.py`, which does not seem to exist. Is there any other way? Or where can I find a (currently working) version of that file? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10228/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10228/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10227 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10227/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10227/comments | https://api.github.com/repos/huggingface/transformers/issues/10227/events | https://github.com/huggingface/transformers/issues/10227 | 809,961,223 | MDU6SXNzdWU4MDk5NjEyMjM= | 10,227 | Showing individual token and corresponding score during beam search | {

"login": "monmanuela",

"id": 35655790,

"node_id": "MDQ6VXNlcjM1NjU1Nzkw",

"avatar_url": "https://avatars.githubusercontent.com/u/35655790?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/monmanuela",

"html_url": "https://github.com/monmanuela",

"followers_url": "https://api.github.com/users/monmanuela/followers",

"following_url": "https://api.github.com/users/monmanuela/following{/other_user}",

"gists_url": "https://api.github.com/users/monmanuela/gists{/gist_id}",

"starred_url": "https://api.github.com/users/monmanuela/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/monmanuela/subscriptions",

"organizations_url": "https://api.github.com/users/monmanuela/orgs",

"repos_url": "https://api.github.com/users/monmanuela/repos",

"events_url": "https://api.github.com/users/monmanuela/events{/privacy}",

"received_events_url": "https://api.github.com/users/monmanuela/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hey @monmanuela,\r\n\r\nThanks for checking out the post! We try to keep the repository for github issues and kindly ask you to post these kinds of questions on the [forum](https://discuss.huggingface.co/). Feel free to tag me there (@patrickvonplaten) :-)",

"@patrickvonplaten thanks for your quick reply! Posted a topic on the forum, closing this issue."

] | 1,613 | 1,613 | 1,613 | NONE | null | ## Who can help

@patrickvonplaten

## Information

Hello,

I am using beam search with a pre-trained T5 model for summarization. I would like to visualize the beam search process by showing the tokens with the highest scores and, eventually, the chosen beam, as in this diagram:

(Taken from https://huggingface.co/blog/how-to-generate)

**I am unsure how I can show the tokens and their corresponding scores.**

I followed the discussion https://discuss.huggingface.co/t/announcement-generationoutputs-scores-attentions-and-hidden-states-now-available-as-outputs-to-generate/3094 and https://github.com/huggingface/transformers/pull/9150.

Following the docs, I set `return_dict_in_generate=True` and `output_scores=True` when calling `generate`:

```python
generated_outputs = model_t5summary.generate(
    input_ids=input_ids.to(device),
    attention_mask=features['attention_mask'].to(device),
    max_length=input_ids.shape[-1] + 2,
    return_dict_in_generate=True,
    output_scores=True,
    output_hidden_states=True,
    output_attentions=True,
    no_repeat_ngram_size=2,
    early_stopping=True,
    num_return_sequences=3,
    num_beams=5,
)
```

Now I have an instance of `BeamSearchEncoderDecoderOutput`.

If I understand the docs (https://huggingface.co/transformers/master/internal/generation_utils.html#generate-outputs) correctly, `scores` should provide what I want, but I am unsure how to use it.

Any help/pointers from the community would be greatly appreciated, thank you 🙏 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10227/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10227/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10226 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10226/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10226/comments | https://api.github.com/repos/huggingface/transformers/issues/10226/events | https://github.com/huggingface/transformers/issues/10226 | 809,955,336 | MDU6SXNzdWU4MDk5NTUzMzY= | 10,226 | Trainer.train() is stuck | {

"login": "saichandrapandraju",

"id": 41769919,

"node_id": "MDQ6VXNlcjQxNzY5OTE5",

"avatar_url": "https://avatars.githubusercontent.com/u/41769919?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/saichandrapandraju",

"html_url": "https://github.com/saichandrapandraju",

"followers_url": "https://api.github.com/users/saichandrapandraju/followers",

"following_url": "https://api.github.com/users/saichandrapandraju/following{/other_user}",

"gists_url": "https://api.github.com/users/saichandrapandraju/gists{/gist_id}",

"starred_url": "https://api.github.com/users/saichandrapandraju/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/saichandrapandraju/subscriptions",

"organizations_url": "https://api.github.com/users/saichandrapandraju/orgs",

"repos_url": "https://api.github.com/users/saichandrapandraju/repos",

"events_url": "https://api.github.com/users/saichandrapandraju/events{/privacy}",

"received_events_url": "https://api.github.com/users/saichandrapandraju/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi,\r\n\r\nFor training-related issues, it might be better to ask your question on the [forum](https://discuss.huggingface.co/).\r\n\r\nThe authors of HuggingFace (and community members) are happy to help you there!\r\n"

] | 1,613 | 1,613 | 1,613 | NONE | null | Hi,

I'm training roberta-base with the HF Trainer, but it gets stuck right at the start. Here's my code:

```

train_dataset[0]

{'input_ids': tensor([ 0, 100, 657, ..., 1, 1, 1]),

'attention_mask': tensor([1, 1, 1, ..., 0, 0, 0]),

'labels': tensor(0)}

val_dataset[0]

{'input_ids': tensor([ 0, 11094, 14, ..., 1, 1, 1]),

'attention_mask': tensor([1, 1, 1, ..., 0, 0, 0]),

'labels': tensor(0)}

## simple test

model(train_dataset[:2]['input_ids'], attention_mask = train_dataset[:2]['attention_mask'], labels=train_dataset[:2]['labels'])

SequenceClassifierOutput(loss=tensor(0.6995, grad_fn=<NllLossBackward>), logits=tensor([[ 0.0438, -0.1893],

[ 0.0530, -0.1786]], grad_fn=<AddmmBackward>), hidden_states=None, attentions=None)

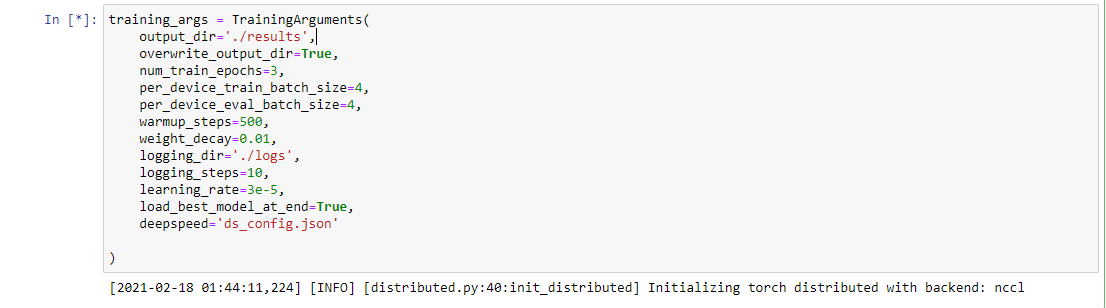

train_args = transformers.TrainingArguments(

output_dir='test_1',

overwrite_output_dir=True,

evaluation_strategy="epoch",

per_device_train_batch_size=8,

per_device_eval_batch_size=8,

learning_rate=3e-5,

weight_decay=0.01,

num_train_epochs=2,

load_best_model_at_end=True,

)

trainer = transformers.Trainer(

model=model,

args=train_args,

train_dataset=train_dataset,

eval_dataset=val_dataset,

tokenizer=tok,

)

trainer.train()

```

I checked the GPU memory consumption, and it stays stuck at:

```

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 450.51.06 Driver Version: 450.51.06 CUDA Version: 11.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla V100-SXM2... On | 00000000:62:00.0 Off | 0 |

| N/A 49C P0 60W / 300W | 1756MiB / 32510MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Tesla V100-SXM2... On | 00000000:8A:00.0 Off | 0 |

| N/A 50C P0 61W / 300W | 1376MiB / 32510MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

```

Please let me know how to proceed. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10226/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10226/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10225 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10225/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10225/comments | https://api.github.com/repos/huggingface/transformers/issues/10225/events | https://github.com/huggingface/transformers/pull/10225 | 809,777,966 | MDExOlB1bGxSZXF1ZXN0NTc0NTgyMTE0 | 10,225 | [Trainer] memory tracker metrics | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"> Thanks for adding this functionality! One general comment I have is on the type of the `stage` argument. Since it has only four possible values from what I can see, it would be better to create an enum for those (to avoid typos and have auto-complete in an IDE).\r\n\r\nOh, let me make it absolutely automatic with `inspect` so it won't need a `stage` argument at all. \r\n\r\nAnd I will collapse the two calls into one in all but `__init__`, so it'll be less noisy.\r\n\r\n",

"So, the API has been simplified to remove the need for naming the stages in the caller, tests added. \r\n\r\nI'm sure we will think of further improvements down the road, please let me know if this is good for the first iteration.\r\n\r\nI'm not sure if anybody else wants to review before we merge this. "

] | 1,613 | 1,613 | 1,613 | CONTRIBUTOR | null | This PR introduced memory usage metrics in Trainer:

* [x] adds `TrainerMemoryTracker` (pytorch only, no-op for tf), which records deltas of the first gpu and cpu of the main process - and records them for `init|train|eval|test` stages - if there is no gpu it reports cpu only.

* [x] adds `--skip_memory_metrics` to disable this new behavior - i.e. by default it'll print the memory metrics

* [x] adds `trainer.metrics_format` which will intelligently reformat the metrics to do the right thing - this is only for logger - moves manual rounding from the scripts into that helper method.

* [x] formats GFlops as GF number, so ` 2285698228224.0`, which is very unreadable and now it will be a nice `2128GF` (similar to `100MB`)

* [x] as a sample changes `run_seq2seq.py` to use `trainer.metrics_format` - can replicate to other scripts in another PR.

* [x] changes the metrics logger in `run_seq2seq.py` to align data, so that it's easy to read the relative numbers e.g. allocated plus peak memory should be in the same column to make a quick read of the situation.

* [x] adds a new file_utils helper function `is_torch_cuda_available` to detect no gpu setups in one call.

* [x] adds a test

* [x] consistently use the strange `train/eval/test` trio - it's very confusing - but at least it's consistent - I proposed to fix this `examples`-wide in https://github.com/huggingface/transformers/issues/10165
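To make the formatting rules in the list above concrete, here is a minimal sketch of how such a formatter might turn raw metrics into the strings shown in the log further down. It is not the actual `trainer.metrics_format` code: `format_metrics` and `log_lines` are hypothetical names, and it assumes memory deltas are stored in bytes, `total_flos` is divided by 2**30 and truncated (which reproduces `2285698228224.0` becoming `2128GF`), and other floats are rounded to 4 digits.

```python
def format_metrics(metrics: dict) -> dict:
    """Return a copy of `metrics` with human-readable values (hypothetical sketch)."""
    formatted = {}
    for key, value in metrics.items():
        if "_mem_" in key:
            # Assumption: memory deltas are raw byte counts; show whole megabytes.
            formatted[key] = f"{int(value / 2**20)}MB"
        elif key == "total_flos":
            # Assumption: divide by 2**30 and truncate, e.g. 2285698228224.0 -> "2128GF".
            formatted[key] = f"{int(value) >> 30}GF"
        elif isinstance(value, float):
            # Round other floats so the log stays short.
            formatted[key] = round(value, 4)
        else:
            formatted[key] = value
    return formatted


def log_lines(split: str, metrics: dict) -> list:
    """Render sorted, column-aligned lines like the '***** train metrics *****' block."""
    formatted = format_metrics(metrics)
    width = max(len(key) for key in formatted)
    lines = [f"***** {split} metrics *****"]
    for key in sorted(formatted):
        # Left-justify keys so all '=' signs line up in one column.
        lines.append(f"  {key:<{width}} = {formatted[key]}")
    return lines


if __name__ == "__main__":
    sample = {
        "epoch": 1.0,
        "total_flos": 2285698228224.0,
        "train_mem_gpu_alloc_delta": 692 * 2**20,
        "train_mem_gpu_peaked_delta": 661 * 2**20,
        "train_runtime": 2.31141,
    }
    print("\n".join(log_lines("train", sample)))
```

Sorting the keys alphabetically is what makes `alloc` appear before `peaked` for each stage, which is why the naming discussion further down cares about alphabetical order.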

Request: please allow me to restore the original refactored metrics-dump logic in `run_seq2seq.py`. The current repetition doesn't help readability; it's just dumping a dict, and there is nothing ML/NLP-specific to understand there IMHO. It would then be easy to replicate this to other examples. Thanks. This is the original (a few of the formatting entries added in this PR would need to be added to it):

https://github.com/huggingface/transformers/blob/e94d63f6cbf5efe288e41d9840a96a5857090617/examples/legacy/seq2seq/finetune_trainer.py#L132-L145

A picture is worth a thousand words:

```

export BS=16; rm -r output_dir; PYTHONPATH=src USE_TF=0 CUDA_VISIBLE_DEVICES=0 python examples/seq2seq/run_seq2seq.py --model_name_or_path t5-small --output_dir output_dir --adam_eps 1e-06 --do_eval --do_train --do_predict --evaluation_strategy=steps --label_smoothing 0.1 --learning_rate 3e-5 --logging_first_step --logging_steps 1000 --max_source_length 128 --max_target_length 128 --num_train_epochs 1 --overwrite_output_dir --per_device_eval_batch_size $BS --per_device_train_batch_size $BS --predict_with_generate --eval_steps 25000 --sortish_sampler --task translation_en_to_ro --val_max_target_length 128 --warmup_steps 500 --max_train_samples 100 --max_val_samples 100 --max_test_samples 100 --dataset_name wmt16-en-ro-pre-processed --source_prefix "translate English to Romanian: "

```

gives:

```

02/16/2021 17:06:39 - INFO - __main__ - ***** train metrics *****

02/16/2021 17:06:39 - INFO - __main__ - epoch = 1.0

02/16/2021 17:06:39 - INFO - __main__ - init_mem_cpu_alloc_delta = 2MB

02/16/2021 17:06:39 - INFO - __main__ - init_mem_cpu_peaked_delta = 0MB

02/16/2021 17:06:39 - INFO - __main__ - init_mem_gpu_alloc_delta = 230MB

02/16/2021 17:06:39 - INFO - __main__ - init_mem_gpu_peaked_delta = 0MB

02/16/2021 17:06:39 - INFO - __main__ - total_flos = 2128GF

02/16/2021 17:06:39 - INFO - __main__ - train_mem_cpu_alloc_delta = 55MB

02/16/2021 17:06:39 - INFO - __main__ - train_mem_cpu_peaked_delta = 0MB

02/16/2021 17:06:39 - INFO - __main__ - train_mem_gpu_alloc_delta = 692MB

02/16/2021 17:06:39 - INFO - __main__ - train_mem_gpu_peaked_delta = 661MB

02/16/2021 17:06:39 - INFO - __main__ - train_runtime = 2.3114

02/16/2021 17:06:39 - INFO - __main__ - train_samples = 100

02/16/2021 17:06:39 - INFO - __main__ - train_samples_per_second = 3.028

02/16/2021 17:06:43 - INFO - __main__ - ***** val metrics *****

02/16/2021 17:13:05 - INFO - __main__ - epoch = 1.0

02/16/2021 17:13:05 - INFO - __main__ - eval_bleu = 24.6502

02/16/2021 17:13:05 - INFO - __main__ - eval_gen_len = 32.9

02/16/2021 17:13:05 - INFO - __main__ - eval_loss = 3.7533

02/16/2021 17:13:05 - INFO - __main__ - eval_mem_cpu_alloc_delta = 0MB

02/16/2021 17:13:05 - INFO - __main__ - eval_mem_cpu_peaked_delta = 0MB

02/16/2021 17:13:05 - INFO - __main__ - eval_mem_gpu_alloc_delta = 0MB

02/16/2021 17:13:05 - INFO - __main__ - eval_mem_gpu_peaked_delta = 510MB

02/16/2021 17:13:05 - INFO - __main__ - eval_runtime = 3.9266

02/16/2021 17:13:05 - INFO - __main__ - eval_samples = 100

02/16/2021 17:13:05 - INFO - __main__ - eval_samples_per_second = 25.467

02/16/2021 17:06:48 - INFO - __main__ - ***** test metrics *****

02/16/2021 17:06:48 - INFO - __main__ - test_bleu = 27.146

02/16/2021 17:06:48 - INFO - __main__ - test_gen_len = 41.37

02/16/2021 17:06:48 - INFO - __main__ - test_loss = 3.6682

02/16/2021 17:06:48 - INFO - __main__ - test_mem_cpu_alloc_delta = 0MB

02/16/2021 17:06:48 - INFO - __main__ - test_mem_cpu_peaked_delta = 0MB

02/16/2021 17:06:48 - INFO - __main__ - test_mem_gpu_alloc_delta = 0MB

02/16/2021 17:06:48 - INFO - __main__ - test_mem_gpu_peaked_delta = 645MB

02/16/2021 17:06:48 - INFO - __main__ - test_runtime = 5.1136

02/16/2021 17:06:48 - INFO - __main__ - test_samples = 100

02/16/2021 17:06:48 - INFO - __main__ - test_samples_per_second = 19.556

```

To understand the memory reports:

- `alloc_delta` is the difference in the used/allocated memory counter between the end and the start of the stage. It can be negative if a function released more memory than it allocated.

- `peaked_delta` is any extra memory that was consumed and then freed, relative to the current allocated memory counter. It is never negative. This is the mysterious cause of OOM, since normally it doesn't register when everything fits into memory.

- So when you look at the metrics of any stage, add up `alloc_delta` + `peaked_delta` to know how much memory was needed to complete that stage. But the two numbers need to be reported separately.
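A minimal pure-Python sketch of the two definitions above, working on a list of sampled counter values (here in MB) rather than on real PyTorch counters. In a PyTorch setting the start/end values would come from something like `torch.cuda.memory_allocated()` and the peak from `torch.cuda.max_memory_allocated()`; the names `StageMemory` and `stage_memory` are hypothetical. It also shows why `alloc_delta + peaked_delta` equals peak minus start, i.e. the memory the stage actually needed.

```python
from typing import NamedTuple, Sequence


class StageMemory(NamedTuple):
    alloc_delta: int   # end - start; negative if the stage freed more than it allocated
    peaked_delta: int  # transient extra memory above the end value; never negative


def stage_memory(readings: Sequence[int]) -> StageMemory:
    """Compute both deltas from allocated-memory readings sampled over one stage.

    readings[0] is the counter at the start of the stage, readings[-1] at the end.
    """
    start, end = readings[0], readings[-1]
    peak = max(readings)
    return StageMemory(alloc_delta=end - start, peaked_delta=max(0, peak - end))


if __name__ == "__main__":
    # A stage that temporarily needs 200MB above its starting point but keeps only 50MB.
    mem = stage_memory([100, 300, 250, 150])
    print(mem)                                 # alloc_delta=50, peaked_delta=150
    print(mem.alloc_delta + mem.peaked_delta)  # 200 = peak - start
```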

We can change the names if you'd like, but if we do, let's make sure that allocated/used shows up before peaked when alphabetically sorted, since they should be read in that order.

It would also be useful to give them the same length so it's less noisy vertically. Perhaps we could add `m` to `alloc`? Then it lines up perfectly:

```

test_mem_cpu_malloc_delta = 0MB

test_mem_cpu_peaked_delta = 0MB

```

Logic behind `init`:

- since Trainer's `__init__` can consume a lot of memory, it's important that we trace it too, but since any of the stages can be skipped, I basically push it into the metrics of whichever stage gets to update metrics first, so it gets tacked on to that group of metrics. In the above example it happens to be `train`.
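The "tack `init` onto whichever stage reports first" rule can be sketched as a small helper. The class and method names are hypothetical and this only mirrors the behavior described above, not the PR's code: if `train` were skipped, `eval` would be the first to report and would carry the `init_*` metrics instead.

```python
class InitMetricsCarrier:
    """Attach the `init` memory metrics to whichever stage reports first (hypothetical)."""

    def __init__(self, init_metrics: dict):
        # Metrics measured around __init__, waiting for the first stage to report.
        self._pending = dict(init_metrics)

    def update_metrics(self, stage_metrics: dict) -> dict:
        merged = dict(stage_metrics)
        if self._pending:
            # The first stage to report gets the init metrics; later stages do not.
            merged.update(self._pending)
            self._pending = {}
        return merged


if __name__ == "__main__":
    carrier = InitMetricsCarrier({"init_mem_gpu_alloc_delta": "230MB"})
    print(carrier.update_metrics({"train_mem_gpu_alloc_delta": "692MB"}))
    print(carrier.update_metrics({"eval_mem_gpu_alloc_delta": "0MB"}))
```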

Logic behind nested calls:

- since eval calls may be intermixed with train calls, we can't handle nested invocations: `torch.cuda.max_memory_allocated` is a single counter, so if a nested eval call resets it, train will report incorrect info. Once PyTorch resolves https://github.com/pytorch/pytorch/issues/16266, it will be possible to make the tracker re-entrant; for now we only track the outer-level `train` / `evaluate` / `predict` functions.
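To see why nested calls are a problem, here is a pure-Python simulation with one process-wide peak counter standing in for `torch.cuda.max_memory_allocated`. `SharedPeakCounter` and `OuterOnlyTracker` are hypothetical names; the tracker simply ignores any `start`/`stop` issued while another stage is active, so an `eval` nested inside `train` cannot reset the peak that `train` is measuring.

```python
class SharedPeakCounter:
    """Stand-in for a single process-wide allocated/peak counter pair (hypothetical)."""

    def __init__(self):
        self.allocated = 0
        self.peak = 0

    def alloc(self, n: int):
        self.allocated += n
        self.peak = max(self.peak, self.allocated)

    def free(self, n: int):
        self.allocated -= n

    def reset_peak(self):
        self.peak = self.allocated


class OuterOnlyTracker:
    """Track memory for the outermost stage only; nested stages are ignored."""

    def __init__(self, counter: SharedPeakCounter):
        self.counter = counter
        self.cur_stage = None
        self.start_alloc = 0
        self.results = {}

    def start(self, stage: str):
        if self.cur_stage is not None:
            return  # nested call: leave the shared peak counter alone
        self.cur_stage = stage
        self.counter.reset_peak()
        self.start_alloc = self.counter.allocated

    def stop(self, stage: str):
        if stage != self.cur_stage:
            return  # matching stop of an ignored nested start
        end = self.counter.allocated
        self.results[stage] = {
            "alloc_delta": end - self.start_alloc,
            "peaked_delta": max(0, self.counter.peak - end),
        }
        self.cur_stage = None


if __name__ == "__main__":
    counter = SharedPeakCounter()
    tracker = OuterOnlyTracker(counter)
    tracker.start("train")
    counter.alloc(100)
    counter.alloc(400)
    counter.free(400)        # train peaked at 500
    tracker.start("eval")    # nested: ignored, so the peak of 500 survives
    counter.alloc(50)
    counter.free(50)
    tracker.stop("eval")
    counter.alloc(20)
    tracker.stop("train")
    print(tracker.results)   # only "train" is reported
```

Had the nested `eval` reset the counter, `train` would have seen a peak of 150 instead of 500 and under-reported its `peaked_delta`.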

With this addition we can already profile and detect regressions for specific training stages. But it doesn't give us the full picture, as there are other allocations outside of the trainer, i.e. in the user's code. It's a start.

Down the road I may write a different version based on pynvml, which gives somewhat different numbers and has its own complications, but it reports the exact GPU memory usage, so you know exactly how much memory is used or left. PyTorch, on the other hand, only reports its own internal allocations.

@patrickvonplaten, this feature should already give us a partial way to track memory regressions, so this could be the low-hanging fruit you and I were discussing.

It should also be possible to extend the tracker to TF, but I don't know anything about TF.

@sgugger, @patil-suraj, @LysandreJik, @patrickvonplaten | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10225/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10225/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10225",

"html_url": "https://github.com/huggingface/transformers/pull/10225",

"diff_url": "https://github.com/huggingface/transformers/pull/10225.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10225.patch",

"merged_at": 1613669252000

} |

https://api.github.com/repos/huggingface/transformers/issues/10224 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10224/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10224/comments | https://api.github.com/repos/huggingface/transformers/issues/10224/events | https://github.com/huggingface/transformers/issues/10224 | 809,726,134 | MDU6SXNzdWU4MDk3MjYxMzQ= | 10,224 | No module named 'tasks' | {

"login": "daniel-z-kaplan",

"id": 48258016,

"node_id": "MDQ6VXNlcjQ4MjU4MDE2",

"avatar_url": "https://avatars.githubusercontent.com/u/48258016?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/daniel-z-kaplan",

"html_url": "https://github.com/daniel-z-kaplan",

"followers_url": "https://api.github.com/users/daniel-z-kaplan/followers",

"following_url": "https://api.github.com/users/daniel-z-kaplan/following{/other_user}",

"gists_url": "https://api.github.com/users/daniel-z-kaplan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/daniel-z-kaplan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/daniel-z-kaplan/subscriptions",

"organizations_url": "https://api.github.com/users/daniel-z-kaplan/orgs",

"repos_url": "https://api.github.com/users/daniel-z-kaplan/repos",

"events_url": "https://api.github.com/users/daniel-z-kaplan/events{/privacy}",

"received_events_url": "https://api.github.com/users/daniel-z-kaplan/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"I think you did not clone the repository properly or are not running the command from the folder `examples/legacy/token-classification`, since that folder does have a task.py file.",

"Ah, I was searching for \"tasks.py\" instead. Just user error, thanks for the fast reply."

] | 1,613 | 1,613 | 1,613 | NONE | null | ## Environment info

- `transformers` version: 4.3.2

- Platform: 5.10.8-200.fc33.x86_64

- Python version: 3.8.3

- PyTorch version (GPU?): 1.6.0 (GPU)

- Tensorflow version (GPU?): 2.4.1 (GPU)

- Using GPU in script?: No.

- Using distributed or parallel set-up in script?: No.

@sgugger, @patil-suraj

## Information

Model I am using (Bert, XLNet ...): allenai/scibert_scivocab_uncased

The problem arises when using:

* [X ] the official example scripts: (give details below)

I'm using the old NER script, since the model I'm using doesn't support Fast Tokenizers.

https://github.com/huggingface/transformers/blob/master/examples/legacy/token-classification/run_ner.py

However, I get an error when the script tries to import "tasks.py". I found a previous GitHub issue about this, which recommended downloading that file, but it no longer exists.

The tasks I am working on is:

* [X ] my own task or dataset: (give details below)

I'm using the bc2gm-corpus dataset.

## To reproduce

Steps to reproduce the behaviour:

1. Download the script from the provided github link.

2. Run it with any real model name, a real directory for data (doesn't need to include data), and an output directory.

I used the following command: `python3 run_ner.py --model_name_or_path allenai/scibert_scivocab_uncased --data_dir bc2gm-corpus/conll --output_dir ./output`

```
  File "run_ner.py", line 323, in <module>
    main()
  File "run_ner.py", line 122, in main
    module = import_module("tasks")
  File "/home/dkaplan/miniconda3/lib/python3.8/importlib/__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "<frozen importlib._bootstrap>", line 1014, in _gcd_import
  File "<frozen importlib._bootstrap>", line 991, in _find_and_load
  File "<frozen importlib._bootstrap>", line 973, in _find_and_load_unlocked
ModuleNotFoundError: No module named 'tasks'
```

## Expected behavior

I expect the NER script to run, finetuning a model on the provided dataset.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10224/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10224/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10223 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10223/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10223/comments | https://api.github.com/repos/huggingface/transformers/issues/10223/events | https://github.com/huggingface/transformers/issues/10223 | 809,714,942 | MDU6SXNzdWU4MDk3MTQ5NDI= | 10,223 | Slow Multi-GPU DDP training with run_clm.py and GPT2 | {

"login": "ktrapeznikov",

"id": 4052002,

"node_id": "MDQ6VXNlcjQwNTIwMDI=",

"avatar_url": "https://avatars.githubusercontent.com/u/4052002?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ktrapeznikov",

"html_url": "https://github.com/ktrapeznikov",

"followers_url": "https://api.github.com/users/ktrapeznikov/followers",

"following_url": "https://api.github.com/users/ktrapeznikov/following{/other_user}",

"gists_url": "https://api.github.com/users/ktrapeznikov/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ktrapeznikov/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ktrapeznikov/subscriptions",

"organizations_url": "https://api.github.com/users/ktrapeznikov/orgs",

"repos_url": "https://api.github.com/users/ktrapeznikov/repos",

"events_url": "https://api.github.com/users/ktrapeznikov/events{/privacy}",

"received_events_url": "https://api.github.com/users/ktrapeznikov/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The answers to those two points is not necessarily yes.\r\n- DDP training is only faster if you have NVLinks between your GPUs, otherwise the slow communication between them can slow down training.\r\n- DDP training takes more space on GPU then a single-process training since there is some gradients caching.\r\n\r\nBoth issues come from PyTorch and not us, the only thing we can check on our side is if there is something in our script that would introduce a CPU-bottleneck, but I doubt this is the reason here (all tokenization happens before the training so there is nothing I could think of there).\r\n\r\nYou should also try regular `DataParalell` which does not have the memory problem IIRC, but I don't remember the comparison in terms of speed. I think @stas00 may have more insight there.",

"Thanks. I’ll give DP a try.",

"What @sgugger said, \r\n\r\nplease see this excellent benchmark: https://github.com/huggingface/transformers/issues/9371#issuecomment-768656711 You can see that a single GPU beats DDP over 2 gpus there if it's not NVLink-connected. I haven't tried it on 3 gpus though. Surely it should be somewhat faster at least. \r\n\r\nDid you check you're feeding the gpus fast enough? - i.e. check their utilization %, it they are under 90% then you probably have an issue with loading - add more dataloader workers.\r\n\r\nAlso please consider using DeepSpeed ZeRO-DP, which should be even faster. https://huggingface.co/blog/zero-deepspeed-fairscale",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,613 | 1,619 | 1,619 | CONTRIBUTOR | null | ## Environment info


- `transformers` version: 4.3.0

- Platform: Linux-3.10.0-1160.11.1.el7.x86_64-x86_64-with-glibc2.10

- Python version: 3.8.6

- PyTorch version (GPU?): 1.7.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: yes (using official `run_clm.py`)

- Using distributed or parallel set-up in script?: using DDP with `run_clm.py`

### Who can help


- gpt2: @patrickvonplaten, @LysandreJik

- trainer, maintained examples: @sgugger

## Information

Model I am using (Bert, XLNet ...): gpt2-medium

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Generate some dummy text data (3000 examples) and save a csv:

```python

import pandas as pd

text = " ".join(100*["Here is some very long text."])

text = 3000*[text]

pd.Series(text).to_frame("text").to_csv("data_temp.csv",index=False)

```

2. Run official [`run_clm.py`](https://github.com/huggingface/transformers/blob/master/examples/language-modeling/run_clm.py) from examples using 3 gpus and DDP:

```shell
CUDA_VISIBLE_DEVICES="1,2,3" python -m torch.distributed.launch --nproc_per_node 3 run_clm.py \
    --model_name_or_path gpt2-medium \
    --do_train \
    --output_dir /proj/semafor/kirill/tmp \
    --per_device_train_batch_size 4 \
    --block_size 128 \
    --train_file data_temp.csv \
    --fp16
```

3. Using 3 GeForce RTX 2080 Ti cards with 11 GB each, tqdm estimates about 1 hour: `48/4101 [00:38<53:51, 1.25it/s`. The memory on each GPU is maxed out: `10782MiB / 11019MiB`

4. Now, if I just run the same script on a single GPU:

```shell
CUDA_VISIBLE_DEVICES="3" python run_clm.py \
    --model_name_or_path gpt2-medium \
    --do_train \
    --output_dir /proj/semafor/kirill/tmp \
    --per_device_train_batch_size 4 \
    --block_size 128 \
    --train_file data_temp.csv \
    --fp16
```

It's actually a little faster: `260/12303 [00:57<44:02, 4.56it/s` and the GPU memory is not maxed out: `9448MiB / 11019MiB`

I can actually double the `--per_device_train_batch_size` from `4 -> 8` and get it down to under 30 mins per epoch: `138/6153 [00:36<26:30, 3.78it/s`
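Converting the tqdm readings above into samples per second makes the comparison explicit. This is a quick sketch that assumes each iteration is one optimizer step over `per_device_train_batch_size` times the number of GPUs blocks (no gradient accumulation): DDP gives 1.25 × 4 × 3 = 15 samples/s, a single GPU with batch size 4 gives 4.56 × 4 ≈ 18.2 samples/s, and with batch size 8 about 30.2 samples/s. The helper names are illustrative only.

```python
def samples_per_second(it_per_s: float, per_device_bs: int, n_gpus: int) -> float:
    # Assumption: each optimizer step consumes per_device_bs blocks on every GPU
    # (no gradient accumulation), so one "it" = per_device_bs * n_gpus samples.
    return it_per_s * per_device_bs * n_gpus


def epoch_minutes(total_steps: int, it_per_s: float) -> float:
    # Wall time implied by the tqdm rate if it stayed constant for the whole epoch.
    return total_steps / it_per_s / 60


# (it/s, per-device batch size, number of GPUs, total steps) copied from the tqdm bars above.
RUNS = {
    "DDP, 3 GPUs, bs 4": (1.25, 4, 3, 4101),
    "1 GPU, bs 4": (4.56, 4, 1, 12303),
    "1 GPU, bs 8": (3.78, 8, 1, 6153),
}

if __name__ == "__main__":
    for name, (rate, bs, gpus, steps) in RUNS.items():
        print(f"{name:<18} {samples_per_second(rate, bs, gpus):6.2f} samples/s "
              f"~{epoch_minutes(steps, rate):5.1f} min/epoch")
```

The implied epoch times (about 55, 45 and 27 minutes) match the tqdm estimates, so the DDP run really is processing fewer samples per second than a single GPU here.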


## Expected behavior

So I expected that:

- DDP training on 3 GPUs would be faster than on a single GPU (it's actually a little slower).

- if I can fit a batch of 8 on one device in single-GPU mode, it would also fit in multi-GPU mode (it doesn't; I get an OOM error). | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10223/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10223/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10222 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10222/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10222/comments | https://api.github.com/repos/huggingface/transformers/issues/10222/events | https://github.com/huggingface/transformers/pull/10222 | 809,682,524 | MDExOlB1bGxSZXF1ZXN0NTc0NTAzODA3 | 10,222 | the change from single mask to multi mask support for pytorch | {

"login": "naveenjafer",

"id": 7025448,

"node_id": "MDQ6VXNlcjcwMjU0NDg=",

"avatar_url": "https://avatars.githubusercontent.com/u/7025448?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/naveenjafer",

"html_url": "https://github.com/naveenjafer",

"followers_url": "https://api.github.com/users/naveenjafer/followers",

"following_url": "https://api.github.com/users/naveenjafer/following{/other_user}",

"gists_url": "https://api.github.com/users/naveenjafer/gists{/gist_id}",

"starred_url": "https://api.github.com/users/naveenjafer/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/naveenjafer/subscriptions",

"organizations_url": "https://api.github.com/users/naveenjafer/orgs",

"repos_url": "https://api.github.com/users/naveenjafer/repos",

"events_url": "https://api.github.com/users/naveenjafer/events{/privacy}",

"received_events_url": "https://api.github.com/users/naveenjafer/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2796628563,

"node_id": "MDU6TGFiZWwyNzk2NjI4NTYz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/WIP",

"name": "WIP",

"color": "234C99",

"default": false,

"description": "Label your PR/Issue with WIP for some long outstanding Issues/PRs that are work in progress"

}

] | open | false | null | [] | [

"@Narsil @LysandreJik How do you suggest we go about with the targets param? At the moment, targets can either be a list of strings or a string. In case of multiple masks, there are 2 ways to go about with it.\r\n\r\n1. Provide a way for the user to define targets for each mask. \r\n2. One single target list that can be uniformly applied across all the positions. \r\n\r\nThe first method would be best implemented by expecting a dict as argument in the keyword param. Something like\r\n{ \"0\" : \"str or list of strings\" , \"2\" : \"str or list of strings\" ... } \r\n\r\nThis way the user can decide to skip explicitly defining candidate keywords in some of the mask positions if needed ( skipped mask 1 in the example above). \r\n\r\n",

"Tough question indeed regarding targets! Switching to a dict sounds a bit non intuitive to me, but I don't see any other choice. I guess eventually the API would be the following:\r\n\r\nGiven a single input string, with a single mask:\r\n- A candidate as a string returns the candidate score for the mask\r\n- A candidate list of strings returns the candidate scores for the mask\r\n\r\nGiven a single input string, with multiple masks:\r\n- A candidate as a string returns the candidate scores for all masks\r\n- A candidate list of strings returns the candidate scores for all masks, on all candidates\r\n- A candidate dict of strings returns the candidate scores for the masks which are concerned by the dictionary keys. Their candidates is the dictionary value linked to that dictionary key.\r\n- A candidate dict of list of strings returns the candidate scores for the masks which are concerned by the dictionary keys. Their candidates are the dictionary values linked to that dictionary key.\r\n\r\nThen there are also lists of input strings, with single masks, and lists of input strings, with multiple masks. This results in a very large amount of possibilities, with different returns, which sounds overwhelming. I'm not too sure that's the best way to handle the issue, I'll give it a bit more thought.",

"@LysandreJik I had a question. From what I understand, one can currently only define a single set of targets, irrespective of how many input texts are given, right? Whether there is a single input text or multiple input texts, even in the base case of a single mask, we can only define a single target or a list of targets that applies to all of them, right? Essentially, it is a many-to-one relation from the input texts to the targets. If that is the case, the targets functionality is currently not designed in a very useful manner, right?",

"Hi, sorry for getting back to you so late on this. I agree with you that we can improve the `targets`. I'm pinging @joeddav as he's the author of the PR that added them.\r\n\r\n@joeddav your input on this PR would be more than welcome! Thank you.",

"Personally, I think the simplest solution would be best: only support `targets` in the single-mask case. If `targets` is passed and there are multiple mask tokens, raise a `ValueError`. It's a pretty narrow use case to need to pass a string with multiple masked tokens while also needing to evaluate possible target tokens for each. In my opinion, that's a complicated and rare use case and we don't need to muddle pipelines code by attempting to support it. It can always be accomplished by using the core modules instead of a pipeline.",
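The single-mask restriction proposed above amounts to one early check. A minimal sketch, assuming the input has already been tokenized to ids; `count_masks_checking_targets` is a hypothetical helper name, not part of the pipeline code.

```python
from typing import List, Optional, Sequence, Union


def count_masks_checking_targets(
    input_ids: Sequence[int],
    mask_token_id: int,
    targets: Optional[Union[str, List[str]]] = None,
) -> int:
    """Count mask tokens, refusing `targets` when there is more than one mask."""
    num_masks = sum(1 for token_id in input_ids if token_id == mask_token_id)
    if targets is not None and num_masks > 1:
        # Multi-mask targets are left to the core modules, as suggested above.
        raise ValueError(
            f"`targets` is only supported with a single mask token, but {num_masks} were found."
        )
    return num_masks
```

For example, `count_masks_checking_targets([101, 103, 102], mask_token_id=103, targets=["cat"])` returns 1, while the same call on an input with two mask ids raises `ValueError`.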

"@joeddav That does make sense to me! The objective of a pipeline should only be to accommodate quick test use cases. Making it cumbersome misses the point altogether. @LysandreJik What do you think? ",

"Yes, I agree with @joeddav as well!",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"> [...] If you think this still needs to be addressed please comment on this thread.\r\n\r\nThis feature would have many applications and would enable comparison of MLMs in cloze tests beyond the restricted setting of targeting words in the intersection of the vocabularies of the models to be compared. There are some open questions about how `top_k` predictions should be made, see issue #3609, so I think it would be good to wait a few more weeks to give everybody time to read the linked paper and discuss ideas.",

"@jowagner Just to clarify it for others who might be following, the paper you are referring to is this one https://arxiv.org/abs/2002.03079 right?",

"> @jowagner Just to clarify it for others who might be following, the paper you are referring to is this one https://arxiv.org/abs/2002.03079 right?\r\n\r\nYes. I hope to read it soon and get a clearer picture of what is needed here. I tend to think that producing `top_k` predictions for multiple masked tokens is outside the scope of the BERT model and really needs an extra model on top of it, e.g. a model that predicts a ranked list of best crystallisation points and can then be used to perform a beam search, fixing one subword unit at a time and producing a k-best list of best crystallisation processes.\r\n\r\n",

"@jowagner I have a question in that case, coming back to the basics of BERT. When some of the words are masked and predictions have to be made for multiple masks during BERT's pre-training step, does BERT not face the same issue? Or are the masks predicted one at a time for each training sentence fed to BERT?",

"Looking at Devlin et al. (2018) again, I don't see the pre-training objective stated, but they certainly try to push as much probability mass as possible to the one completion attested in the training data. BERT is trained to get the top prediction right. Good secondary predictions for individual tokens are only a by-product. Nothing pushes the model to make the k-th predictions consistent across multiple masked subword units for k > 1.\r\n\r\nYes, making predictions can be expected to be harder when there are multiple masked subword units, but that also happens in pre-training and BERT therefore learns to do this. Maybe BERT does this in steps, crystallising only a few decisions in each layer. A way to find out would be to freeze the BERT layers, add MLM heads to each layer, tune these heads and then see how the predictions (and probabilities) change from layer to layer. (This would make a nice paper, or maybe somebody has done this already.)",
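To make the point about k > 1 concrete, here is a toy illustration with made-up numbers. It assumes the per-position predictions are exactly the marginals of a known joint distribution over two masked positions, which a real MLM only approximates. Pairing the k-th best token of each position independently can produce a combination the joint distribution never generates.

```python
from collections import defaultdict

# Toy joint distribution over two masked positions (made-up numbers).
JOINT = {
    ("New", "York"): 0.30,
    ("Los", "Angeles"): 0.28,
    ("New", "Jersey"): 0.22,
    ("Las", "Vegas"): 0.20,
}


def ranked_marginal(joint, position):
    """Tokens at one position, sorted by marginal probability (highest first)."""
    totals = defaultdict(float)
    for tokens, prob in joint.items():
        totals[tokens[position]] += prob
    return sorted(totals, key=totals.get, reverse=True)


def kth_independent(joint, k):
    """Pair the k-th most likely token of each position, ignoring the others."""
    num_positions = len(next(iter(joint)))
    return tuple(ranked_marginal(joint, p)[k - 1] for p in range(num_positions))


def kth_joint(joint, k):
    """The k-th most likely tuple under the joint distribution."""
    return sorted(joint, key=joint.get, reverse=True)[k - 1]


for k in (1, 2, 3):
    pair = kth_independent(JOINT, k)
    print(k, pair, "joint prob:", JOINT.get(pair, 0.0), "| joint k-th:", kth_joint(JOINT, k))
```

For k = 1 and k = 2 both methods agree, but for k = 3 the independent pairing yields ("Las", "Jersey"), which has zero probability under the joint, while the joint's third choice is ("New", "Jersey").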

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"Do we have a final verdict yet on the approach to follow? I believe @mitramir55 had suggested a code proposal in #3609 ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"@LysandreJik Shall I replace this with the implementation suggested earlier in #3609 and raise a PR? Though I don't think we have discussed what scoring would be ideal for the beam search used to sort the predictions.\r\n\r\n",

"@Narsil had good insights about your previous implementation - @Narsil could you let us know what you think of the solution proposed here https://github.com/huggingface/transformers/issues/3609#issuecomment-854005760?",

"The design in https://github.com/huggingface/transformers/issues/3609#issuecomment-854005760 seems very interesting!\r\n\r\nMain comments:\r\n- I would be curious to see proof (and it would probably need to become a test) that doing `n` inferences instead of 1 produces better results (because it should be closer to the real joint probabilities); that's the main interest of this proposed approach.\r\n\r\n- I think it should output the same tokens as the fill-mask pipeline in the degenerate case (when there's only 1 mask).\r\n I don't think it's correct right now (see what I tried below).\r\n\r\n- Because we iteratively do `topk` for each `mask`, the number of candidates grows exponentially if I understand correctly. I would probably add some kind of pruning to limit the number of \"beams\" to topk (I may have overlooked it, but it seems to be currently missing).\r\n\r\n- The proposed code could probably be refactored a bit for clarity, to avoid integer indexing and deep nesting.\r\n\r\n\r\n```python\r\nimport torch\r\nfrom transformers import AutoModelForMaskedLM, AutoTokenizer, pipeline\r\nimport random\r\n\r\n\r\ndef predict_seqs_dict(sequence, model, tokenizer, top_k=5, order=\"left-to-right\"):\r\n\r\n    ids_main = tokenizer.encode(sequence, return_tensors=\"pt\", add_special_tokens=False)\r\n\r\n    ids_ = ids_main.detach().clone()\r\n    position = torch.where(ids_main == tokenizer.mask_token_id)\r\n\r\n    # torch.where returns the mask positions in ascending (left-to-right) order\r\n    positions_list = position[1].numpy().tolist()\r\n\r\n    if order == \"right-to-left\":\r\n        positions_list.reverse()\r\n\r\n    elif order == \"random\":\r\n        random.shuffle(positions_list)\r\n\r\n    predictions_ids = {}\r\n    predictions_detokenized_sents = {}\r\n\r\n    for i in range(len(positions_list)):\r\n        predictions_ids[i] = []\r\n        predictions_detokenized_sents[i] = []\r\n\r\n        # if it was the first prediction,\r\n        # just go on and predict the first predictions\r\n        if i == 0:\r\n            model_logits = model(ids_main)[\"logits\"][0][positions_list[0]]\r\n            top_k_tokens = torch.topk(model_logits, top_k, dim=0).indices.tolist()\r\n\r\n            for j in range(len(top_k_tokens)):\r\n                ids_t_ = ids_.detach().clone()\r\n                ids_t_[0][positions_list[0]] = top_k_tokens[j]\r\n                predictions_ids[i].append(ids_t_)\r\n\r\n                # append the sentences and ids of this masked token\r\n                pred = tokenizer.decode(ids_t_[0])\r\n                predictions_detokenized_sents[i].append(pred)\r\n\r\n        # if we already have some predictions, go on and fill the rest of the masks\r\n        # by continuing the previous predictions\r\n        if i != 0:\r\n            for pred_ids in predictions_ids[i - 1]:\r\n\r\n                # get the logits\r\n                model_logits = model(pred_ids)[\"logits\"][0][positions_list[i]]\r\n                # get the top_k tokens for this prediction and masked token\r\n                top_k_tokens = torch.topk(model_logits, top_k, dim=0).indices.tolist()\r\n\r\n                for top_id in top_k_tokens:\r\n\r\n                    ids_t_i = pred_ids.detach().clone()\r\n                    ids_t_i[0][positions_list[i]] = top_id\r\n\r\n                    pred = tokenizer.decode(ids_t_i[0])\r\n\r\n                    # append the sentences and ids of this masked token\r\n                    predictions_ids[i].append(ids_t_i)\r\n                    predictions_detokenized_sents[i].append(pred)\r\n\r\n    return predictions_detokenized_sents\r\n\r\n\r\nsequence = \"This is some super neat [MASK] !\"\r\ntokenizer = AutoTokenizer.from_pretrained(\"bert-base-uncased\")\r\nmodel = AutoModelForMaskedLM.from_pretrained(\"bert-base-uncased\")\r\n\r\npipe = pipeline(task=\"fill-mask\", tokenizer=tokenizer, model=model)\r\nprint(predict_seqs_dict(sequence, model, tokenizer))\r\nprint(pipe(sequence))\r\n```",
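The pruning point above could be addressed with a standard beam search that keeps at most `beam_width` hypotheses after each mask, instead of expanding every previous prediction. This is a minimal, model-free sketch: `beam_fill`, `score_fn` and `toy_scorer` are hypothetical names, the toy probabilities are made up, and scoring by summed log-probabilities of the tokens chosen so far is only one possible answer to the scoring question raised earlier in the thread.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical scorer: given the current (partially filled) tokens and a mask
# position, return log-probabilities over candidate tokens for that position.
# In practice this would wrap one forward pass of a masked LM.
Scorer = Callable[[Sequence[str], int], Dict[str, float]]


def beam_fill(
    tokens: Sequence[str], mask: str, score_fn: Scorer, beam_width: int = 5
) -> List[Tuple[List[str], float]]:
    """Fill masks left to right, keeping only the `beam_width` best partial fills."""
    positions = [i for i, tok in enumerate(tokens) if tok == mask]
    beams: List[Tuple[List[str], float]] = [(list(tokens), 0.0)]
    for position in positions:
        candidates = []
        for filled, score in beams:
            log_probs = score_fn(filled, position)
            # Expand only the beam_width best tokens for this hypothesis.
            best = sorted(log_probs.items(), key=lambda kv: kv[1], reverse=True)[:beam_width]
            for token, log_prob in best:
                new_filled = list(filled)
                new_filled[position] = token
                # Sum of log-probabilities: a chain-rule style joint score.
                candidates.append((new_filled, score + log_prob))
        # Prune to beam_width hypotheses overall, so work per mask stays bounded.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams


def toy_scorer(filled: Sequence[str], position: int) -> Dict[str, float]:
    # Made-up conditional distribution: the second word depends on the first.
    if position == 0:
        return {"New": math.log(0.6), "Los": math.log(0.4)}
    if filled[0] == "New":
        return {"York": math.log(0.9), "Angeles": math.log(0.1)}
    return {"Angeles": math.log(0.8), "York": math.log(0.2)}


for filled, score in beam_fill(["[MASK]", "[MASK]", "!"], "[MASK]", toy_scorer, beam_width=2):
    print(filled, round(math.exp(score), 3))
```

With a single mask this reduces to ranking that position's candidates by their log-probability, so it should match the fill-mask pipeline's top-k in the degenerate case, provided `score_fn` wraps the same forward pass (including special tokens) the pipeline uses.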

"> This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\r\n\r\nYes, we need more time or help from somebody with time to review the discussion and make recommendations.\r\n\r\nMy thoughts re-reading a few comments, including some of my own:\r\n\r\n- Producing k-best predictions for multiple masked tokens requires choices, i.e. a model, separate from the underlying transformer model. This is where the PR is stalled. A quick way forward would be to support only `k=1` when there are multiple masked tokens for the time being. For `k=1`, it is undisputed that the prediction should be the transformer's top prediction for each token.\r\n- This PR/feature does not directly allow comparison of cloze test predictions of models with different vocabularies. Users would have to probe with contiguous sequences of masked tokens of varying length and somehow decide between the candidate predictions.",
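For the `k=1` fallback suggested above, each mask's prediction is simply the argmax of the logits at that position, all read from a single forward pass. A minimal sketch in plain Python, assuming the logits are available as a (sequence_length x vocab_size) table; `argmax_per_mask` is a hypothetical helper name and the toy logits are made up.

```python
from typing import List, Sequence


def argmax_per_mask(
    logits: Sequence[Sequence[float]], mask_positions: Sequence[int]
) -> List[int]:
    """Return the highest-scoring token id at each masked position.

    All masks are read from the same forward pass, so no extra search model
    is needed when only the top prediction (k=1) is requested.
    """
    predictions = []
    for position in mask_positions:
        row = logits[position]
        predictions.append(max(range(len(row)), key=lambda token_id: row[token_id]))
    return predictions


# Toy logits: 3 positions, vocabulary of 4 token ids (made-up numbers).
toy_logits = [
    [0.1, 0.2, 0.3, 0.4],
    [2.0, 0.5, 0.1, 0.0],
    [0.0, 0.0, 3.0, 1.0],
]
print(argmax_per_mask(toy_logits, [1, 2]))
```

With PyTorch tensors the same selection is a single `argmax(dim=-1)` over the masked rows of the logits.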

"After reading this thread and skimming through #14716, I must confess I am still a little unsure how the scores for multi-masked prompts are computed. Based on my understanding, for a prompt with k masks, it seems like you want to do a beam search over the Cartesian product `mask_1_targets x mask_2_targets x ... x mask_k_targets` and return the top-n most likely tuples maximizing `P(mask_1=token_i_1, mask_2=token_i_2, ..., mask_k=token_i_k)`, i.e.:\r\n\r\n```\r\n{\r\n    T_1=[(token_1_1, ..., token_1_k), score_t_1],\r\n    T_2=[(token_2_1, ..., token_2_k), score_t_2],\r\n    ...\r\n    T_n=[(token_n_1, ..., token_n_k), score_t_n]\r\n}\r\n```\r\n\r\nIs this accurate? Perhaps you could try to clarify the design intent and limitations of the current API in the documentation somewhere. If you intend to eventually support computing the joint probability, I think it would be beneficial to provide a way for consumers to supply a set of per-mask targets and configure the beam search parameters, e.g. beam width. Thanks!",
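The exact version of the ranking described above, scoring every tuple in `mask_1_targets x ... x mask_k_targets` and keeping the top n, can be sketched as below. This is a minimal sketch under stated assumptions: `top_n_tuples` and `toy_joint` are hypothetical names, and `joint_log_prob` is assumed to be some function returning log P(mask_1=t_1, ..., mask_k=t_k), which a masked LM does not provide directly.

```python
import heapq
import itertools
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical joint scorer: log P(mask_1=t_1, ..., mask_k=t_k) for one tuple.
JointScorer = Callable[[Tuple[str, ...]], float]


def top_n_tuples(
    per_mask_targets: Sequence[Sequence[str]],
    joint_log_prob: JointScorer,
    n: int,
) -> List[Tuple[Tuple[str, ...], float]]:
    """Score every tuple in mask_1_targets x ... x mask_k_targets, keep the n best.

    Exact but exhaustive: the number of tuples is the product of the target
    list sizes, so this is only feasible for small per-mask target lists.
    """
    scored = ((combo, joint_log_prob(combo)) for combo in itertools.product(*per_mask_targets))
    return heapq.nlargest(n, scored, key=lambda item: item[1])


# Made-up joint probabilities for two masks with two targets each.
TOY = {
    ("New", "York"): 0.5,
    ("Los", "Angeles"): 0.3,
    ("Los", "York"): 0.15,
    ("New", "Angeles"): 0.05,
}


def toy_joint(combo: Tuple[str, ...]) -> float:
    return math.log(TOY[combo])


print(top_n_tuples([["New", "Los"], ["York", "Angeles"]], toy_joint, n=2))
```

A beam search with a configurable beam width would approximate this result without enumerating the full product, which is why exposing that parameter, as suggested in the comment, matters once the per-mask target lists grow.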