| url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | state_reason | draft | pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/2212

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2212/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2212/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2212/events

|

https://github.com/huggingface/transformers/issues/2212

| 539,526,643 |

MDU6SXNzdWU1Mzk1MjY2NDM=

| 2,212 |

Fine-tuning TF models on Colab TPU

|

{

"login": "NaxAlpha",

"id": 11090613,

"node_id": "MDQ6VXNlcjExMDkwNjEz",

"avatar_url": "https://avatars.githubusercontent.com/u/11090613?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/NaxAlpha",

"html_url": "https://github.com/NaxAlpha",

"followers_url": "https://api.github.com/users/NaxAlpha/followers",

"following_url": "https://api.github.com/users/NaxAlpha/following{/other_user}",

"gists_url": "https://api.github.com/users/NaxAlpha/gists{/gist_id}",

"starred_url": "https://api.github.com/users/NaxAlpha/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/NaxAlpha/subscriptions",

"organizations_url": "https://api.github.com/users/NaxAlpha/orgs",

"repos_url": "https://api.github.com/users/NaxAlpha/repos",

"events_url": "https://api.github.com/users/NaxAlpha/events{/privacy}",

"received_events_url": "https://api.github.com/users/NaxAlpha/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] |

closed

| false | null |

[] |

[

"I've read your code, and I don't see anything strange in it (I hope). The error seems to come from training a (whatever) model on TPUs rather than from Transformers itself.\r\n\r\nHave you seen [this](https://github.com/tensorflow/tensorflow/issues/29896) issue reported in TensorFlow's GitHub? It seems to be the same error, and someone gives indications about how to resolve it.\r\n\r\n> ## Questions & Help\r\n> Hi,\r\n> \r\n> I am trying to fine-tune TF BERT on Imdb dataset on Colab TPU. Here is the full notebook:\r\n> \r\n> https://colab.research.google.com/drive/16ZaJaXXd2R1gRHrmdWDkFh6U_EB0ln0z\r\n> \r\n> Can anyone help me what I am doing wrong?\r\n> Thanks",

"Thanks for the help. Actually, my code was inspired by [this Colab notebook](https://colab.research.google.com/github/CyberZHG/keras-bert/blob/master/demo/tune/keras_bert_classification_tpu.ipynb). That notebook works perfectly, but there is one major difference that might be causing the problem:\r\n\r\n- I am force-installing TensorFlow 2.x in the Transformers notebook because Transformers only works with TF>=2.0, but Colab uses TF 1.15 for TPUs.\r\n\r\nSo I went to GCP and used a TPU with TF 2.x. The error changed to this:\r\n\r\n\r\nBut yes, you are right, this issue might be related to TF in general.",

"In the official docs of GCP [here](https://cloud.google.com/tpu/docs/supported-versions), they show the current list of supported TensorFlow and Cloud TPU versions; _only_ **TensorFlow 1.13, 1.14 and 1.15 are supported** (they don't mention TensorFlow 2.0).\r\nI think it's why you have the problem you've highlighted.\r\n\r\n> Thanks for the help. Actually my code was inspired from [this colab notebook. This](https://colab.research.google.com/github/CyberZHG/keras-bert/blob/master/demo/tune/keras_bert_classification_tpu.ipynb) notebook works perfectly but there is one major difference might be causing the problem:\r\n> \r\n> * I am force installing tensorflow 2.x for transformer notebook because transformer works only for TF>=2.0 but colab uses TF 1.15 on colab for TPUs\r\n> \r\n> So I went to GCP and used a TPU for TF 2.x. The error changed to this:\r\n> \r\n> \r\n> But yes, you are right this issue might be related to TF in general.",

"TPU supports TF 2.1.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,576 | 1,586 | 1,586 |

NONE

| null |

## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

Hi,

I am trying to fine-tune TF BERT on the IMDb dataset on a Colab TPU. Here is the full notebook:

https://colab.research.google.com/drive/16ZaJaXXd2R1gRHrmdWDkFh6U_EB0ln0z

Can anyone help me figure out what I am doing wrong?

Thanks

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2212/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2212/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/2211

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2211/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2211/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2211/events

|

https://github.com/huggingface/transformers/pull/2211

| 539,377,226 |

MDExOlB1bGxSZXF1ZXN0MzU0MzY1NTg5

| 2,211 |

Fast tokenizers

|

{

"login": "n1t0",

"id": 1217986,

"node_id": "MDQ6VXNlcjEyMTc5ODY=",

"avatar_url": "https://avatars.githubusercontent.com/u/1217986?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/n1t0",

"html_url": "https://github.com/n1t0",

"followers_url": "https://api.github.com/users/n1t0/followers",

"following_url": "https://api.github.com/users/n1t0/following{/other_user}",

"gists_url": "https://api.github.com/users/n1t0/gists{/gist_id}",

"starred_url": "https://api.github.com/users/n1t0/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/n1t0/subscriptions",

"organizations_url": "https://api.github.com/users/n1t0/orgs",

"repos_url": "https://api.github.com/users/n1t0/repos",

"events_url": "https://api.github.com/users/n1t0/events{/privacy}",

"received_events_url": "https://api.github.com/users/n1t0/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Can you remind me why you moved those options to initialization vs. at `encode` time?\r\n\r\nIs that a hard requirement of the native implementation?",

"Sure! The native implementation doesn't have `kwargs` so we need to define a static interface with pre-defined function arguments. This means that the configuration of the tokenizer is done by initializing its various parts and attaching them. There would be some unwanted overhead in doing this every time we `encode`.\r\nI think we generally don't need to change the behavior of the tokenizer while using it, so this shouldn't be a problem. Plus, I think it makes the underlying functions clearer, and easier to use.",

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=h1) Report\n> Merging [#2211](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/81db12c3ba0c2067f43c4a63edf5e45f54161042?src=pr&el=desc) will **increase** coverage by `<.01%`.\n> The diff coverage is `74.62%`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #2211 +/- ##\n==========================================\n+ Coverage 73.54% 73.54% +<.01% \n==========================================\n Files 87 87 \n Lines 14789 14919 +130 \n==========================================\n+ Hits 10876 10972 +96 \n- Misses 3913 3947 +34\n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [src/transformers/\\_\\_init\\_\\_.py](https://codecov.io/gh/huggingface/transformers/pull/2211/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9fX2luaXRfXy5weQ==) | `98.79% <100%> (ø)` | :arrow_up: |\n| [src/transformers/tokenization\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2211/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `88.13% <67.01%> (-3.96%)` | :arrow_down: |\n| [src/transformers/tokenization\\_gpt2.py](https://codecov.io/gh/huggingface/transformers/pull/2211/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fZ3B0Mi5weQ==) | `96.21% <93.75%> (-0.37%)` | :arrow_down: |\n| [src/transformers/tokenization\\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/2211/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYmVydC5weQ==) | `96.2% <94.73%> (-0.15%)` | :arrow_down: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=continue).\n> **Legend** - [Click here to learn 
more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=footer). Last update [81db12c...e6ec24f](https://codecov.io/gh/huggingface/transformers/pull/2211?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"Testing the fast tokenizers is not trivial. Doing tokenizer-specific tests is alright, but as of right now the breaking changes make it impossible to implement the fast tokenizers in the common tests pipeline.\r\n\r\nIn order to do so, and to obtain full coverage of the tokenizers, we would have to either:\r\n- Split the current common tests in actual unit tests, rather than integration tests. As the arguments are passed in the `from_pretrained` (for the rust tokenizers) method rather than the `encode` (for the python tokenizers) method, having several chained calls to `encode` with varying arguments implies a big refactor of each test to test the rust tokenizers as well. Splitting those into unit tests would ease this task.\r\n- Copy/Paste the common tests into a separate file (not ideal).\r\n\r\nCurrently there are some integration tests for the rust GPT-2 and rust BERT tokenizers, which may offer sufficient coverage for this PR. We would need the aforementioned refactor to have full coverage, which can be attended to in a future PR. ",

"I really like it. Great work @n1t0 \r\n\r\nI think now we should try to work on the other (python) tokenizers and see if we can find a middle ground behavior where they can both behave rather similarly, in particular for tests.\r\n\r\nAlso an open question: should we keep the \"slow\" python tokenizers that are easy to inspect? Could make sense, maybe renaming them to `BertTokenizerPython` for instance.",

"Ok, I had to rewrite the whole history after the few restructuring PRs that have been merged. \r\n\r\nSince Python 2 has been dropped, I added `tokenizers` as a dependency (it works for Python 3.5+).\r\nWe should now be ready to merge!\r\n\r\nWe should clearly keep the Python tokenizers and deprecate them slowly. In the end, I don't mind keeping them, but I'd like to avoid having to maintain both, especially if their APIs differ.",

"This is ready for the final review!",

"Ok, this is great, merging!"

] | 1,576 | 1,651 | 1,577 |

MEMBER

| null |

I am opening this PR to track the integration of `tokenizers`.

At the moment, we have created two new classes to represent the fast versions of both the GPT2 and Bert tokenizers. There are a few breaking changes compared to the current `GPT2Tokenizer` and `BertTokenizer`:

- `add_special_tokens` is now specified during initialization

- truncation and padding options are also set up during initialization

By default, `encode_batch` pads every sequence to the length of the longest one in the batch, and `encode` does not pad at all. If `pad_to_max_length=True`, everything is padded to `max_length` instead.

If a `max_length` is specified, then everything is truncated according to the provided options. This should work exactly like before.

In order to try these, you must `pip install tokenizers` in your virtual env.
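The padding and truncation rules above can be pictured with a small pure-Python sketch. It does not call the `tokenizers` package. `ToyFastTokenizer`, its whitespace-split vocabulary lookup, the `-1` id for unknown words and the `pad_id=0` default are all hypothetical choices. They only show the following behavior:
- options are fixed at initialization;
- `encode` leaves a single sequence unpadded;
- `encode_batch` pads to the longest sequence;
- `pad_to_max_length=True` pads everything to `max_length`.

```python
from typing import Dict, List, Optional


class ToyFastTokenizer:
    """Hypothetical tokenizer whose truncation/padding options are fixed at init."""

    def __init__(self, vocab: Dict[str, int], max_length: Optional[int] = None,
                 pad_to_max_length: bool = False, pad_id: int = 0):
        if pad_to_max_length and max_length is None:
            raise ValueError("pad_to_max_length=True requires max_length")
        self.vocab = vocab
        self.max_length = max_length
        self.pad_to_max_length = pad_to_max_length
        self.pad_id = pad_id

    def _ids(self, text: str) -> List[int]:
        # Toy lookup: whitespace split, unknown words map to -1.
        ids = [self.vocab.get(word, -1) for word in text.split()]
        # Truncate only when a max_length was configured at init.
        if self.max_length is not None:
            ids = ids[: self.max_length]
        return ids

    def _pad(self, ids: List[int], length: int) -> List[int]:
        return ids + [self.pad_id] * (length - len(ids))

    def encode(self, text: str) -> List[int]:
        ids = self._ids(text)
        # A single sequence is padded only when pad_to_max_length is set.
        if self.pad_to_max_length:
            ids = self._pad(ids, self.max_length)
        return ids

    def encode_batch(self, texts: List[str]) -> List[List[int]]:
        batch = [self._ids(text) for text in texts]
        # Default: pad to the longest sequence; with pad_to_max_length, to max_length.
        if self.pad_to_max_length:
            target = self.max_length
        else:
            target = max((len(ids) for ids in batch), default=0)
        return [self._pad(ids, target) for ids in batch]
```

With this design, changing the padding behavior means building a new tokenizer object rather than passing a keyword to `encode`. This mirrors the init-time configuration described in this PR.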

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2211/reactions",

"total_count": 7,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 4,

"confused": 0,

"heart": 3,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2211/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/2211",

"html_url": "https://github.com/huggingface/transformers/pull/2211",

"diff_url": "https://github.com/huggingface/transformers/pull/2211.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/2211.patch",

"merged_at": 1577438670000

}

|

https://api.github.com/repos/huggingface/transformers/issues/2210

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2210/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2210/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2210/events

|

https://github.com/huggingface/transformers/issues/2210

| 539,373,044 |

MDU6SXNzdWU1MzkzNzMwNDQ=

| 2,210 |

training a new BERT tokenizer model

|

{

"login": "hahmyg",

"id": 3884429,

"node_id": "MDQ6VXNlcjM4ODQ0Mjk=",

"avatar_url": "https://avatars.githubusercontent.com/u/3884429?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/hahmyg",

"html_url": "https://github.com/hahmyg",

"followers_url": "https://api.github.com/users/hahmyg/followers",

"following_url": "https://api.github.com/users/hahmyg/following{/other_user}",

"gists_url": "https://api.github.com/users/hahmyg/gists{/gist_id}",

"starred_url": "https://api.github.com/users/hahmyg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hahmyg/subscriptions",

"organizations_url": "https://api.github.com/users/hahmyg/orgs",

"repos_url": "https://api.github.com/users/hahmyg/repos",

"events_url": "https://api.github.com/users/hahmyg/events{/privacy}",

"received_events_url": "https://api.github.com/users/hahmyg/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false |

{

"login": "n1t0",

"id": 1217986,

"node_id": "MDQ6VXNlcjEyMTc5ODY=",

"avatar_url": "https://avatars.githubusercontent.com/u/1217986?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/n1t0",

"html_url": "https://github.com/n1t0",

"followers_url": "https://api.github.com/users/n1t0/followers",

"following_url": "https://api.github.com/users/n1t0/following{/other_user}",

"gists_url": "https://api.github.com/users/n1t0/gists{/gist_id}",

"starred_url": "https://api.github.com/users/n1t0/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/n1t0/subscriptions",

"organizations_url": "https://api.github.com/users/n1t0/orgs",

"repos_url": "https://api.github.com/users/n1t0/repos",

"events_url": "https://api.github.com/users/n1t0/events{/privacy}",

"received_events_url": "https://api.github.com/users/n1t0/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "n1t0",

"id": 1217986,

"node_id": "MDQ6VXNlcjEyMTc5ODY=",

"avatar_url": "https://avatars.githubusercontent.com/u/1217986?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/n1t0",

"html_url": "https://github.com/n1t0",

"followers_url": "https://api.github.com/users/n1t0/followers",

"following_url": "https://api.github.com/users/n1t0/following{/other_user}",

"gists_url": "https://api.github.com/users/n1t0/gists{/gist_id}",

"starred_url": "https://api.github.com/users/n1t0/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/n1t0/subscriptions",

"organizations_url": "https://api.github.com/users/n1t0/orgs",

"repos_url": "https://api.github.com/users/n1t0/repos",

"events_url": "https://api.github.com/users/n1t0/events{/privacy}",

"received_events_url": "https://api.github.com/users/n1t0/received_events",

"type": "User",

"site_admin": false

}

] |

[

"Follow the sentencepiece GitHub repo or the BERT TensorFlow GitHub repo. You will get some\nfeedback there.\n\nOn Wed, Dec 18, 2019 at 07:52 Younggyun Hahm\nwrote:\n\n> ❓ Questions & Help\n>\n> I would like to train a new BERT model.\n> There are some way to train BERT tokenizer (a.k.a. wordpiece tokenizer) ?\n",

"If you want to see an example of a custom **tokenizer** implementation in the Transformers library, you can look at how the [Japanese Tokenizer](https://github.com/huggingface/transformers/blob/master/transformers/tokenization_bert_japanese.py) was implemented.\r\n\r\nIn general, you can read more about adding a new model to Transformers [here](https://github.com/huggingface/transformers/blob/30968d70afedb1a9815164737cdc3779f2f058fe/templates/adding_a_new_model/README.md).\r\n\r\n> ## Questions & Help\r\n> I would like to train a new BERT model.\r\n> There are some way to train BERT tokenizer (a.k.a. wordpiece tokenizer) ?",

"Checkout the [**`tokenizers`**](https://github.com/huggingface/tokenizers) repo.\r\n\r\nThere's an example of how to train a WordPiece tokenizer: https://github.com/huggingface/tokenizers/blob/master/bindings/python/examples/train_bert_wordpiece.py\r\n\r\n\r\n\r\n",

"Hi @julien-c `tokenizers` package is great, but I found an issue when using the resulting tokenizer later with `transformers`.\r\n\r\nAssume I have this:\r\n```\r\nfrom tokenizers import BertWordPieceTokenizer\r\ninit_tokenizer = BertWordPieceTokenizer(vocab=vocab)\r\ninit_tokenizer.save(\"./my_local_tokenizer\")\r\n```\r\n\r\nWhen I am trying to load the file:\r\n```\r\nfrom transformers import AutoTokenizer\r\ntokenizer = AutoTokenizer.from_pretrained(\"./my_local_tokenizer\")\r\n```\r\nan error is thrown:\r\n```\r\nValueError: Unrecognized model in .... Should have a `model_type` key in its config.json, or contain one of the following strings in its name: ...\r\n```\r\n\r\nSeems format used by tokenizers is a single json file, whereas when I save transformers tokenizer it creates a dir with [config.json, special tokens map.json and vocab.txt].\r\n\r\ntransformers.__version__ = '4.15.0'\r\ntokenizers.__version__ '0.10.3'\r\n\r\nCan you please give me some hints how to fix this? Thx in advance",

"@tkornuta better to open a post on the forum, but tagging @SaulLu for visibility",

"Thanks for the ping julien-c! \r\n\r\n@tkornuta Indeed, for this kind of questions the [forum](https://discuss.huggingface.co/) is the best place to ask them: it also allows other users who would ask the same question as you to benefit from the answer. :relaxed: \r\n\r\nYour use case is indeed very interesting! With your current code, you have a problem because `AutoTokenizer` has no way of knowing which Tokenizer object we want to use to load your tokenizer since you chose to create a new one from scratch.\r\n\r\nSo in particular to instantiate a new `transformers` tokenizer with the Bert-like tokenizer you created with the `tokenizers` library you can do:\r\n\r\n```python\r\nfrom transformers import BertTokenizerFast\r\n\r\nwrapped_tokenizer = BertTokenizerFast(\r\n tokenizer_file=\"./my_local_tokenizer\",\r\n do_lower_case = FILL_ME,\r\n unk_token = FILL_ME,\r\n sep_token = FILL_ME,\r\n pad_token = FILL_ME,\r\n cls_token = FILL_ME,\r\n mask_token = FILL_ME,\r\n tokenize_chinese_chars =FILL_ME,\r\n strip_accents = FILL_ME\r\n)\r\n```\r\nNote: You will have to manually carry over the same parameters (`unk_token`, `strip_accents`, etc) that you used to initialize `BertWordPieceTokenizer` in the initialization of `BertTokenizerFast`.\r\n\r\nI refer you to the [section \"Building a tokenizer, block by block\" of the course ](https://huggingface.co/course/chapter6/9?fw=pt#building-a-wordpiece-tokenizer-from-scratch) where we explained how you can build a tokenizer from scratch with the `tokenizers` library and use it to instantiate a new tokenizer with the `transformers` library. 
We have even treated the example of a Bert type tokenizer in this chapter :smiley:.\r\n\r\nMoreover, if you just want to generate a new vocabulary for BERT tokenizer by re-training it on a new dataset, the easiest way is probably to use the `train_new_from_iterator` method of a fast `transformers` tokenizer which is explained in the [section \"Training a new tokenizer from an old one\" of our course](https://huggingface.co/course/chapter6/2?fw=pt). :blush: \r\n\r\nI hope this can help you!\r\n \r\n\r\n",

"Hi @SaulLu, thanks for the answer! I managed to find the solution here:\r\nhttps://huggingface.co/docs/transformers/fast_tokenizer\r\n\r\n```python\r\nfrom tokenizers import Tokenizer\r\nfrom transformers import PreTrainedTokenizerFast\r\n\r\n# 1st solution: load the HF.tokenizers tokenizer...\r\nloaded_tokenizer = Tokenizer.from_file(decoder_tokenizer_path)\r\n# ...and \"wrap\" it with an HF.transformers tokenizer.\r\ntokenizer = PreTrainedTokenizerFast(tokenizer_object=loaded_tokenizer)\r\n\r\n# 2nd solution: load directly from the tokenizer file.\r\ntokenizer = PreTrainedTokenizerFast(tokenizer_file=decoder_tokenizer_path)\r\n```\r\n\r\nNow I also see that I somehow missed the same information at the bottom of the section you mentioned on building a tokenizer - sorry.\r\n\r\n\r\n",

"Hey @SaulLu, sorry for bothering you, but I'm struggling with yet another problem/question.\r\n\r\nWhen I am loading the tokenizer created in HF.tokenizers, my special tokens are \"gone\", i.e. \r\n```\r\n# Load from tokenizer file\r\ntokenizer = PreTrainedTokenizerFast(tokenizer_file=decoder_tokenizer_path)\r\ntokenizer.pad_token # <- this is None\r\n```\r\n\r\nWithout it, padding fails:\r\n```\r\nencoded = tokenizer.encode(input, padding=True) # <- raises error - lack of pad_token\r\n```\r\n\r\nI can add them to the tokenizer from HF.transformers, e.g. like this:\r\n```\r\ntokenizer.add_special_tokens({'pad_token': '[PAD]'}) # <- this works!\r\n```\r\n\r\nIs there a similar method for setting special tokens on a tokenizer in HF.tokenizers that will enable me to load the tokenizer in HF.transformers?\r\n\r\nI have all tokens in my vocabulary and tried the following:\r\n```\r\n# Pass as arguments to constructor:\r\ninit_tokenizer = BertWordPieceTokenizer(vocab=vocab) \r\n #special_tokens=[\"[UNK]\", \"[CLS]\", \"[SEP]\", \"[PAD]\", \"[MASK]\"]) <- error: wrong keyword\r\n #bos_token = \"[CLS]\", eos_token = \"[SEP]\", unk_token = \"[UNK]\", sep_token = \"[SEP]\", <- wrong keywords\r\n #pad_token = \"[PAD]\", cls_token = \"[CLS]\", mask_token = \"[MASK]\", <- wrong keywords\r\n# Use tokenizer.add_special_tokens() method:\r\n#init_tokenizer.add_special_tokens({'pad_token': '[PAD]'}) <- error: must be a list\r\n#init_tokenizer.add_special_tokens([\"[PAD]\", \"[CLS]\", \"[SEP]\", \"[UNK]\", \"[MASK]\", \"[BOS]\", \"[EOS]\"]) <- doesn't work (means error when calling encode(padding=True))\r\n#init_tokenizer.add_special_tokens(['[PAD]']) # <- doesn't work\r\n\r\n# Set manually.\r\n#init_tokenizer.pad_token = \"[PAD]\" # <- doesn't work\r\ninit_tokenizer.pad_token_id = vocab[\"[PAD]\"] # <- doesn't work\r\n```\r\n\r\nAm I missing something obvious? Thanks in advance!",

"@tkornuta, I'm sorry I missed your second question!\r\n\r\nThe `BertWordPieceTokenizer` class is just a helper class to build a `tokenizers.Tokenizer` object with the architecture proposed by the BERT authors. The `tokenizers` library is used to build tokenizers, and the `transformers` library to wrap these tokenizers by adding useful functionality when we wish to use them with a particular model (like identifying the padding token, the separation token, etc.). \r\n\r\nTo not miss anything, I would like to comment on several of your remarks.\r\n\r\n#### Remark 1\r\n> [@tkornuta] When I am loading the tokenizer created in HF.tokenizers my special tokens are \"gone\", i.e.\r\n\r\nTo carry your special tokens over to your `HF.transformers` tokenizer, I refer you to this section of my previous answer:\r\n> [@SaulLu] So in particular to instantiate a new transformers tokenizer with the Bert-like tokenizer you created with the tokenizers library you can do:\r\n> ```python\r\n> from transformers import BertTokenizerFast\r\n>\r\n> wrapped_tokenizer = BertTokenizerFast(\r\n> tokenizer_file=\"./my_local_tokenizer\",\r\n> do_lower_case = FILL_ME,\r\n> unk_token = FILL_ME,\r\n> sep_token = FILL_ME,\r\n> pad_token = FILL_ME,\r\n> cls_token = FILL_ME,\r\n> mask_token = FILL_ME,\r\n> tokenize_chinese_chars =FILL_ME,\r\n> strip_accents = FILL_ME\r\n> )\r\n>```\r\n> Note: You will have to manually carry over the same parameters (unk_token, strip_accents, etc) that you used to initialize BertWordPieceTokenizer in the initialization of BertTokenizerFast.\r\n\r\n\r\n#### Remark 2\r\n> [@tkornuta] Is there a similar method for setting special tokens to tokenizer in HF.tokenizers that will enable me to load the tokenizer in HF.transformers?\r\n\r\nNothing prevents you from subclassing the `BertWordPieceTokenizer` class in order to define the properties that interest you. On the other hand, there will be no automatic porting of the values of these new properties to the `HF.transformers` tokenizer properties (you have to use the method mentioned above or the method `.add_special_tokens({'pad_token': '[PAD]'})` after having instantiated your `HF.transformers` tokenizer).\r\n\r\nDoes this answer your questions? :relaxed: ",

"Hi @SaulLu, yeah, I was asking about this \"automatic porting of special tokens\". Since I already set them when training the tokenizer in HF.tokenizers, I am really not sure why they couldn't be imported automatically when loading the tokenizer in HF.transformers...\r\n\r\nAnyway, thanks, your answer is super useful. \r\n\r\nAlso, thanks for pointing me to the forum! I will use it next time for sure! :)"

] | 1,576 | 1,643 | 1,578 |

NONE

| null |

## ❓ Questions & Help

I would like to train a new BERT model.

Is there a way to train a BERT tokenizer (a.k.a. WordPiece tokenizer)?
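To show what a trained vocabulary is then used for, here is a minimal pure-Python sketch of BERT-style greedy longest-match-first WordPiece encoding. It handles a single, already pre-tokenized word. It does not train a vocabulary; the `tokenizers` library pointed to in the replies does that. The `wordpiece_tokenize` name, the toy vocabulary and the `max_chars` cutoff are illustrative assumptions.

```python
from typing import List, Set


def wordpiece_tokenize(word: str, vocab: Set[str], unk_token: str = "[UNK]",
                       max_chars: int = 100) -> List[str]:
    """Greedy longest-match-first WordPiece split of one word (hypothetical helper)."""
    # Very long words are mapped straight to the unknown token (illustrative cutoff).
    if len(word) > max_chars:
        return [unk_token]
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is found in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry the ## prefix
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No vocabulary entry matches the remainder: the whole word becomes unknown.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces
```

For example, `wordpiece_tokenize("unaffable", {"un", "##aff", "##able"})` returns `["un", "##aff", "##able"]`. Training a WordPiece vocabulary amounts to choosing which of these pieces end up in `vocab`.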

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2210/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2210/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/2209

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2209/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2209/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2209/events

|

https://github.com/huggingface/transformers/issues/2209

| 539,265,078 |

MDU6SXNzdWU1MzkyNjUwNzg=

| 2,209 |

```glue_convert_examples_to_features``` for sequence labeling tasks

|

{

"login": "antgr",

"id": 2175768,

"node_id": "MDQ6VXNlcjIxNzU3Njg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2175768?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/antgr",

"html_url": "https://github.com/antgr",

"followers_url": "https://api.github.com/users/antgr/followers",

"following_url": "https://api.github.com/users/antgr/following{/other_user}",

"gists_url": "https://api.github.com/users/antgr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/antgr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/antgr/subscriptions",

"organizations_url": "https://api.github.com/users/antgr/orgs",

"repos_url": "https://api.github.com/users/antgr/repos",

"events_url": "https://api.github.com/users/antgr/events{/privacy}",

"received_events_url": "https://api.github.com/users/antgr/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"duplicate to 2208"

] | 1,576 | 1,576 | 1,576 |

NONE

| null |

## 🚀 Feature

<!-- A clear and concise description of the feature proposal. Please provide a link to the paper and code in case they exist. -->

I would like a function like ```glue_convert_examples_to_features``` for sequence labelling tasks.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too. -->

The motivation is that I need much more flexibility for sequence labelling tasks. It's not enough to have a final model, already decided for me, for this task. I just want the features (a sequence of features/embeddings, I guess).

## Additional context

This can be generalized to any dataset with a specific format.

<!-- Add any other context or screenshots about the feature request here. -->
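The token-level variant requested here can be sketched in pure Python. `convert_labeling_example_to_features`, the toy sub-word splitter and the label map are hypothetical, not Transformers APIs. The sketch assumes a common convention: each word's label goes to its first sub-token. Continuation sub-tokens and the `[CLS]`/`[SEP]` positions get `-100`, the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The result has one label per token instead of one per sentence.

```python
from typing import Callable, Dict, List, Tuple

# Label id skipped by the loss (PyTorch CrossEntropyLoss default ignore_index).
IGNORE_INDEX = -100


def convert_labeling_example_to_features(
    words: List[str],
    word_labels: List[str],
    label_map: Dict[str, int],
    subword_tokenize: Callable[[str], List[str]],
    max_length: int,
) -> Tuple[List[str], List[int]]:
    """Hypothetical helper: one label id per sub-token, not one per sentence."""
    if max_length < 2:
        raise ValueError("max_length must leave room for [CLS] and [SEP]")
    tokens: List[str] = []
    label_ids: List[int] = []
    for word, label in zip(words, word_labels):
        pieces = subword_tokenize(word) or ["[UNK]"]
        tokens.extend(pieces)
        # The first sub-token carries the word's label; continuations are ignored.
        label_ids.extend([label_map[label]] + [IGNORE_INDEX] * (len(pieces) - 1))
    # Truncate to leave room for [CLS] and [SEP], which get ignored labels too.
    tokens = ["[CLS]"] + tokens[: max_length - 2] + ["[SEP]"]
    label_ids = [IGNORE_INDEX] + label_ids[: max_length - 2] + [IGNORE_INDEX]
    return tokens, label_ids
```

Consider a splitter that cuts words after four characters. The words `["Hugging", "Face", "rocks"]`, labeled `B-ORG I-ORG O`, become `[CLS] Hugg ##ing Face rock ##s [SEP]` with labels `-100 1 -100 2 0 -100 -100`. This uses `{"O": 0, "B-ORG": 1, "I-ORG": 2}` as the label map.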

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2209/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2209/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/2208

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2208/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2208/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2208/events

|

https://github.com/huggingface/transformers/issues/2208

| 539,259,772 |

MDU6SXNzdWU1MzkyNTk3NzI=

| 2,208 |

```glue_convert_examples_to_features``` for sequence labeling tasks

|

{

"login": "antgr",

"id": 2175768,

"node_id": "MDQ6VXNlcjIxNzU3Njg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2175768?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/antgr",

"html_url": "https://github.com/antgr",

"followers_url": "https://api.github.com/users/antgr/followers",

"following_url": "https://api.github.com/users/antgr/following{/other_user}",

"gists_url": "https://api.github.com/users/antgr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/antgr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/antgr/subscriptions",

"organizations_url": "https://api.github.com/users/antgr/orgs",

"repos_url": "https://api.github.com/users/antgr/repos",

"events_url": "https://api.github.com/users/antgr/events{/privacy}",

"received_events_url": "https://api.github.com/users/antgr/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] |

closed

| false | null |

[] |

[

"Do you mean the one already in Transformers, in [glue.py](https://github.com/huggingface/transformers/blob/d46147294852694d1dc701c72b9053ff2e726265/transformers/data/processors/glue.py) at line 30, or a different function? \r\n\r\n> glue_convert_examples_to_features",

"A different one. Does this proposal make sense?",

"> A different one. Does this proposal make sense?\r\n\r\nDifferent in which way? Please describe the goal and a high-level implementation.",

"Thanks for the reply! First of all, I just want to clarify that I am not sure my suggestion does indeed make sense. I will try to clarify: 1) This implementation ``` def glue_convert_examples_to_features(examples, tokenizer,``` is for GLUE datasets, so it does not cover what I suggest; 2) in line 112 we see that it only supports \"classification\" and \"regression\". The classification is at sentence level; I want classification at token level.\r\n\r\nSo, to my understanding, this function returns one ```features``` tensor that is in 1-1 correspondence with the single label for the sentence. In my case we would like n ```features``` tensors in 1-1 correspondence with the labels for the sentence, where n is the number of tokens in the sentence.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,576 | 1,582 | 1,582 |

NONE

| null |

## 🚀 Feature

<!-- A clear and concise description of the feature proposal. Please provide a link to the paper and code in case they exist. -->

I would like a function like ```glue_convert_examples_to_features``` for sequence labelling tasks.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too. -->

The motivation is that I need much more flexibility for sequence labelling tasks. It's not enough to have a final model for this task whose design has been decided for me. I just want the features (a sequence of features/embeddings, I guess).

## Additional context

This can be generalized to any dataset with a specific format.

<!-- Add any other context or screenshots about the feature request here. -->
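The request is for token-level features, with one label per (sub)token, rather than the single sentence-level label that `glue_convert_examples_to_features` produces. Below is a minimal sketch of what such a converter could look like. It assumes a WordPiece-style tokenizer passed in as a plain callable plus a vocabulary dict. The function name `convert_examples_to_token_features`, the `TokenLabelFeatures` container, the `[CLS]`/`[SEP]`/`[UNK]` tokens, and the convention of labelling only the first sub-word piece (with `-100` everywhere else, matching the default `ignore_index` of PyTorch's `CrossEntropyLoss`) are all illustrative assumptions. None of them is an existing Transformers API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: label id that the loss is told to skip (PyTorch CrossEntropyLoss default)
IGNORE_INDEX = -100


@dataclass
class TokenLabelFeatures:
    input_ids: List[int]
    attention_mask: List[int]
    label_ids: List[int]  # one entry per position, aligned 1-1 with input_ids


def convert_examples_to_token_features(
    examples: List[List[str]],      # each example is a list of words
    labels: List[List[str]],        # one label string per word
    label_map: Dict[str, int],
    tokenize: Callable[[str], List[str]],  # hypothetical word -> sub-word pieces
    vocab: Dict[str, int],          # must contain "[UNK]"
    max_length: int,                # includes [CLS] and [SEP]
    cls_token: str = "[CLS]",
    sep_token: str = "[SEP]",
    pad_id: int = 0,
) -> List[TokenLabelFeatures]:
    features = []
    for words, word_labels in zip(examples, labels):
        tokens: List[str] = []
        label_ids: List[int] = []
        for word, label in zip(words, word_labels):
            pieces = tokenize(word) or ["[UNK]"]
            tokens.extend(pieces)
            # Only the first piece carries the word's label; continuation pieces are ignored
            label_ids.extend([label_map[label]] + [IGNORE_INDEX] * (len(pieces) - 1))

        # Truncate, leaving room for the two special tokens
        tokens = tokens[: max_length - 2]
        label_ids = label_ids[: max_length - 2]
        tokens = [cls_token] + tokens + [sep_token]
        label_ids = [IGNORE_INDEX] + label_ids + [IGNORE_INDEX]

        input_ids = [vocab.get(t, vocab["[UNK]"]) for t in tokens]
        attention_mask = [1] * len(input_ids)

        # Right-pad every field to max_length; padded positions are masked and ignored
        padding = max_length - len(input_ids)
        input_ids += [pad_id] * padding
        attention_mask += [0] * padding
        label_ids += [IGNORE_INDEX] * padding
        features.append(TokenLabelFeatures(input_ids, attention_mask, label_ids))
    return features
```

Labelling only the first piece of each word yields exactly one scored prediction per original word. The resulting `label_ids` are aligned position by position with the model's per-token hidden states, which gives the n-features-to-n-labels correspondence described in the thread.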

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2208/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2208/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/2207

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2207/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2207/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2207/events

|

https://github.com/huggingface/transformers/pull/2207

| 539,253,243 |

MDExOlB1bGxSZXF1ZXN0MzU0MjYxODg0

| 2,207 |

Fix segmentation fault

|

{

"login": "LysandreJik",

"id": 30755778,

"node_id": "MDQ6VXNlcjMwNzU1Nzc4",

"avatar_url": "https://avatars.githubusercontent.com/u/30755778?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/LysandreJik",

"html_url": "https://github.com/LysandreJik",

"followers_url": "https://api.github.com/users/LysandreJik/followers",

"following_url": "https://api.github.com/users/LysandreJik/following{/other_user}",

"gists_url": "https://api.github.com/users/LysandreJik/gists{/gist_id}",

"starred_url": "https://api.github.com/users/LysandreJik/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LysandreJik/subscriptions",

"organizations_url": "https://api.github.com/users/LysandreJik/orgs",

"repos_url": "https://api.github.com/users/LysandreJik/repos",

"events_url": "https://api.github.com/users/LysandreJik/events{/privacy}",

"received_events_url": "https://api.github.com/users/LysandreJik/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=h1) Report\n> Merging [#2207](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/f061606277322a013ec2d96509d3077e865ae875?src=pr&el=desc) will **increase** coverage by `1.13%`.\n> The diff coverage is `83.33%`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #2207 +/- ##\n==========================================\n+ Coverage 80.32% 81.46% +1.13% \n==========================================\n Files 122 122 \n Lines 18342 18345 +3 \n==========================================\n+ Hits 14734 14945 +211 \n+ Misses 3608 3400 -208\n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [transformers/file\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL2ZpbGVfdXRpbHMucHk=) | `70.42% <83.33%> (-1.01%)` | :arrow_down: |\n| [transformers/modeling\\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.51% <0%> (+1.32%)` | :arrow_up: |\n| [transformers/modeling\\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2N0cmwucHk=) | `96.47% <0%> (+2.2%)` | :arrow_up: |\n| [transformers/modeling\\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `74.54% <0%> (+2.32%)` | :arrow_up: |\n| [transformers/modeling\\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `71.76% <0%> (+12.35%)` | :arrow_up: |\n| 
[transformers/tests/modeling\\_tf\\_common\\_test.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `97.41% <0%> (+17.24%)` | :arrow_up: |\n| [transformers/modeling\\_tf\\_pytorch\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2207/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3B5dG9yY2hfdXRpbHMucHk=) | `92.15% <0%> (+83%)` | :arrow_up: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=footer). Last update [f061606...14cc752](https://codecov.io/gh/huggingface/transformers/pull/2207?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"Is this issue related to https://github.com/scipy/scipy/issues/11237, which also started happening yesterday?",

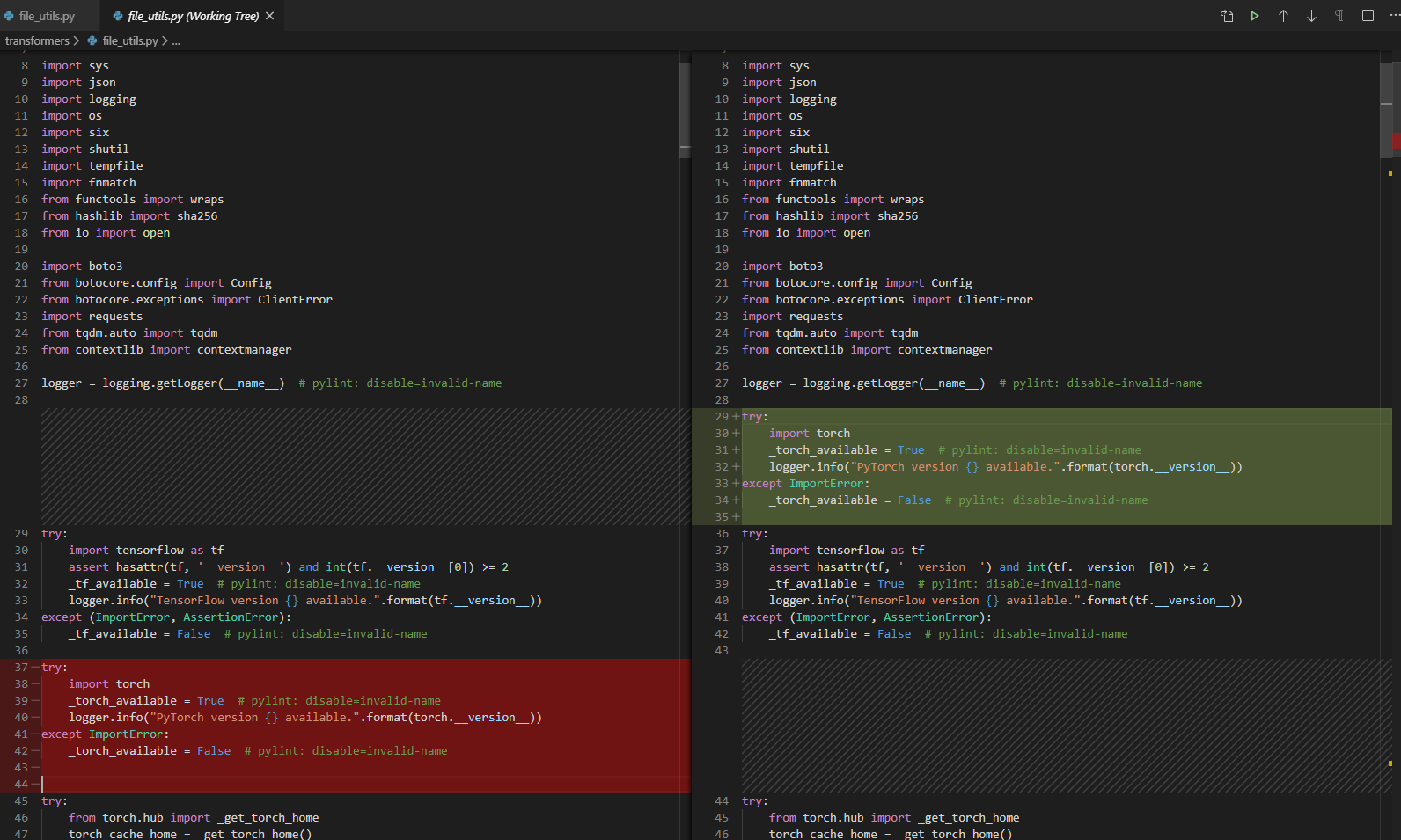

"Indeed, this is related to that issue. I've just tested directly on the CircleCI machine: the segmentation fault happens when importing torch after tensorflow while scipy is installed on the machine.",

"@LysandreJik I get this error on transformers 2.2.2 from PyPI. When will it be updated?\r\n\r\n```\r\n>>> import transformers\r\nSegmentation fault (core dumped)\r\n```\r\n\r\n```\r\nroot@261246f307ae:~/src# python --version\r\nPython 3.6.8\r\n```",

"This is due to an upstream issue related to scipy 1.4.0. Please pin your scipy version to one earlier than 1.4.0 and you should see this segmentation fault resolved.",

"@LysandreJik thank you this issue is destroying everything 🗡 ",

"Did pinning the scipy version fix your issue?",

"@LysandreJik I'm now pinning `scipy==1.3.3`, which is the latest version before the 1.4.x release candidates",

"I confirm that 1.3.3 works, but they have just now pushed `scipy==1.4.1`. We have tested it and it works as well.\r\n\r\n - https://github.com/scipy/scipy/issues/11237#issuecomment-567550894\r\nThank you!",

"Glad you could make it work!"

] | 1,576 | 1,576 | 1,576 |

MEMBER

| null |

Fixes a segmentation fault that started happening last night.

Follows the fix from #2205; the fault could be reproduced using CircleCI SSH access.

~Currently fixing the unforeseen event with Python 2.~ The error with Python 2 was due to `regex` releasing a new version (2019.12.17) that couldn't be built on Python 2.7.
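The discussion in this thread reduces to a version rule. With scipy 1.4.0 installed, importing torch after tensorflow segfaulted. Versions before 1.4.0 (e.g. 1.3.3) and the newly pushed 1.4.1 were reported to work. The snippet below is a small sketch that encodes that rule, for example as a CI sanity check. The helper names are hypothetical. The rule reflects only the versions reported in this thread, and it assumes that the 1.4.0 release candidates behave like 1.4.0.

```python
import re
from typing import Tuple


def scipy_release(version: str) -> Tuple[int, int, int]:
    """Parse 'X.Y[.Z]' and drop any pre-release suffix, e.g. '1.4.0rc1' -> (1, 4, 0)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def is_known_segfault_scipy(version: str) -> bool:
    # Per this thread: 1.3.3 and 1.4.1 work; only the 1.4.0 series was reported to segfault
    return scipy_release(version) == (1, 4, 0)
```

For instance, `is_known_segfault_scipy(scipy.__version__)` could gate a warning before torch and tensorflow are imported together. The actual fix remains the version pin itself (anything other than 1.4.0).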

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2207/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 1

}

|

https://api.github.com/repos/huggingface/transformers/issues/2207/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/2207",

"html_url": "https://github.com/huggingface/transformers/pull/2207",

"diff_url": "https://github.com/huggingface/transformers/pull/2207.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/2207.patch",

"merged_at": 1576616046000

}

|

https://api.github.com/repos/huggingface/transformers/issues/2206

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/2206/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/2206/comments

|

https://api.github.com/repos/huggingface/transformers/issues/2206/events

|

https://github.com/huggingface/transformers/issues/2206

| 539,196,159 |

MDU6SXNzdWU1MzkxOTYxNTk=

| 2,206 |

Transformers Encoder and Decoder Inference

|

{

"login": "anandhperumal",

"id": 12907396,

"node_id": "MDQ6VXNlcjEyOTA3Mzk2",

"avatar_url": "https://avatars.githubusercontent.com/u/12907396?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/anandhperumal",

"html_url": "https://github.com/anandhperumal",

"followers_url": "https://api.github.com/users/anandhperumal/followers",

"following_url": "https://api.github.com/users/anandhperumal/following{/other_user}",

"gists_url": "https://api.github.com/users/anandhperumal/gists{/gist_id}",

"starred_url": "https://api.github.com/users/anandhperumal/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/anandhperumal/subscriptions",

"organizations_url": "https://api.github.com/users/anandhperumal/orgs",

"repos_url": "https://api.github.com/users/anandhperumal/repos",

"events_url": "https://api.github.com/users/anandhperumal/events{/privacy}",

"received_events_url": "https://api.github.com/users/anandhperumal/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"As said in #2117 by @rlouf (an author of Transformers), **at the moment** you can use `PreTrainedEncoderDecoder` with only **BERT** model both as encoder and decoder.\r\n\r\nIn more details, he said: \"_Indeed, as I specified in the article, PreTrainedEncoderDecoder only works with BERT as an encoder and BERT as a decoder. GPT2 shouldn't take too much work to adapt, but we haven't had the time to do it yet. Try PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased', 'bert-base-uncased') should work. Let me know if it doesn't._\".\r\n\r\n> ## Bug\r\n> Model I am using (Bert, XLNet....):\r\n> \r\n> Language I am using the model on (English, Chinese....):\r\n> \r\n> The problem arise when using:\r\n> \r\n> * [X ] the official example scripts: (give details)\r\n> * [ ] my own modified scripts: (give details)\r\n> \r\n> The tasks I am working on is:\r\n> \r\n> * [ ] an official GLUE/SQUaD task: (give the name)\r\n> * [ X] my own task or dataset: (give details)\r\n> \r\n> ## To Reproduce\r\n> Steps to reproduce the behavior:\r\n> \r\n> 1. Error while doing inference.\r\n> \r\n> ```\r\n> from transformers import PreTrainedEncoderDecoder, BertTokenizer\r\n> model = PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased','gpt2')\r\n> tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')\r\n> encoder_input_ids=tokenizer.encode(\"Hi How are you\")\r\n> import torch\r\n> ouput = model(torch.tensor( encoder_input_ids).unsqueeze(0))\r\n> ```\r\n> \r\n> and the error is\r\n> \r\n> ```\r\n> TypeError: forward() missing 1 required positional argument: 'decoder_input_ids'\r\n> ```\r\n> \r\n> During inference why is decoder input is expected ?\r\n> \r\n> Let me know if I'm missing anything?\r\n> \r\n> ## Environment\r\n> OS: ubuntu\r\n> Python version: 3.6\r\n> PyTorch version:1.3.0\r\n> PyTorch Transformers version (or branch):2.2.0\r\n> Using GPU ? Yes\r\n> Distributed of parallel setup ? No\r\n> Any other relevant information:\r\n> \r\n> ## Additional context",

"@TheEdoardo93 it doesn't matter whether it is GPT2 or BERT; both have the same error.\r\nI'm trying to play with GPT2, which is why I pasted my own code.\r\n\r\nUsing BERT as both encoder and decoder:\r\n```\r\n>>> model = PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased','bert-base-uncased')\r\n>>> ouput = model(torch.tensor( encoder_input_ids).unsqueeze(0))\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\n File \"/home/guest_1/.local/lib/python3.6/site-packages/torch/nn/modules/module.py\", line 541, in __call__\r\n result = self.forward(*input, **kwargs)\r\nTypeError: forward() missing 1 required positional argument: 'decoder_input_ids'\r\n```",

"First of all, the authors of Transformers are still working on the implementation of the `PreTrainedEncoderDecoder` object, so it's not a definitive implementation, e.g. some methods are not implemented yet. That said, I've tested your code and found how to work with `PreTrainedEncoderDecoder` **correctly without bugs**. You can see my code below.\r\n\r\nIn brief, your problem occurs because you have not passed _all_ arguments necessary to the `forward` method. By looking at the source code [here](https://github.com/huggingface/transformers/blob/master/transformers/modeling_encoder_decoder.py), you can see that this method accepts **two** parameters: `encoder_input_ids` and `decoder_input_ids`. In your code, you've passed _only one_ parameter, so the Python interpreter binds your `encoder_input_ids` to the `encoder_input_ids` parameter of the `forward` method, but you haven't supplied a value for its `decoder_input_ids` parameter, which is what raises the error.\r\n\r\n```\r\nPython 3.6.9 |Anaconda, Inc.| (default, Jul 30 2019, 19:07:31) \r\n[GCC 7.3.0] on linux\r\nType \"help\", \"copyright\", \"credits\" or \"license\" for more information.\r\n>>> import transformers\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:541: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n _np_qint8 = np.dtype([(\"qint8\", np.int8, 1)])\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:542: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n _np_quint8 = np.dtype([(\"quint8\", np.uint8, 
1)])\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:543: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n _np_qint16 = np.dtype([(\"qint16\", np.int16, 1)])\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:544: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n _np_quint16 = np.dtype([(\"quint16\", np.uint16, 1)])\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:545: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n _np_qint32 = np.dtype([(\"qint32\", np.int32, 1)])\r\n/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/tensorboard/compat/tensorflow_stub/dtypes.py:550: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.\r\n np_resource = np.dtype([(\"resource\", np.ubyte, 1)])\r\n>>> from transformers import PreTrainedEncoderDecoder\r\n>>> from transformers import BertTokenizer\r\n>>> tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')\r\n>>> model = PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased', 'bert-base-uncased')\r\n>>> text='Hi How are you'\r\n>>> import torch\r\n>>> input_ids = torch.tensor(tokenizer.encode(text)).unsqueeze(0)\r\n>>> input_ids\r\ntensor([[ 101, 7632, 2129, 2024, 2017, 102]])\r\n>>> output = model(input_ids) # YOUR PROBLEM IS HERE\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\n File 
\"/home/<user>/anaconda3/envs/huggingface/lib/python3.6/site-packages/torch/nn/modules/module.py\", line 541, in __call__\r\n result = self.forward(*input, **kwargs)\r\nTypeError: forward() missing 1 required positional argument: 'decoder_input_ids'\r\n>>> output = model(input_ids, input_ids) # SOLUTION TO YOUR PROBLEM\r\n>>> output\r\n(tensor([[[ -6.3390, -6.3664, -6.4600, ..., -5.5354, -4.1787, -5.8384],\r\n [ -6.3550, -6.3077, -6.4661, ..., -5.3516, -4.1338, -4.0742],\r\n [ -6.7090, -6.6050, -6.6682, ..., -5.9591, -4.7142, -3.8219],\r\n [ -7.7608, -7.5956, -7.6634, ..., -6.8113, -5.7777, -4.1638],\r\n [ -8.6462, -8.5767, -8.6366, ..., -7.9503, -6.5382, -5.0959],\r\n [-12.8752, -12.3775, -12.2770, ..., -10.0880, -10.7659, -9.0092]]],\r\n grad_fn=<AddBackward0>), tensor([[[ 0.0929, -0.0264, -0.1224, ..., -0.2106, 0.1739, 0.1725],\r\n [ 0.4074, -0.0593, 0.5523, ..., -0.6791, 0.6556, -0.2946],\r\n [-0.2116, -0.6859, -0.4628, ..., 0.1528, 0.5977, -0.9102],\r\n [ 0.3992, -1.3208, -0.0801, ..., -0.3213, 0.2557, -0.5780],\r\n [-0.0757, -1.3394, 0.1816, ..., 0.0746, 0.4032, -0.7080],\r\n [ 0.5989, -0.2841, -0.3490, ..., 0.3042, -0.4368, -0.2097]]],\r\n grad_fn=<NativeLayerNormBackward>), tensor([[-9.3097e-01, -3.3807e-01, -6.2162e-01, 8.4082e-01, 4.4154e-01,\r\n -1.5889e-01, 9.3273e-01, 2.2240e-01, -4.3249e-01, -9.9998e-01,\r\n -2.7810e-01, 8.9449e-01, 9.8638e-01, 6.4763e-02, 9.6649e-01,\r\n -7.7835e-01, -4.4046e-01, -5.9515e-01, 2.7585e-01, -7.4638e-01,\r\n 7.4700e-01, 9.9983e-01, 4.4468e-01, 2.8673e-01, 3.6586e-01,\r\n 9.7642e-01, -8.4343e-01, 9.6599e-01, 9.7235e-01, 7.2667e-01,\r\n -7.5785e-01, 9.2892e-02, -9.9089e-01, -1.7004e-01, -6.8200e-01,\r\n -9.9283e-01, 2.6244e-01, -7.9871e-01, 2.3397e-02, 4.6413e-02,\r\n -9.3371e-01, 2.7699e-01, 9.9995e-01, -3.2671e-01, 2.1108e-01,\r\n -2.0636e-01, -1.0000e+00, 1.9622e-01, -9.3330e-01, 6.8736e-01,\r\n 6.4731e-01, 5.3773e-01, 9.2759e-02, 4.1069e-01, 4.0360e-01,\r\n 1.9002e-01, -1.7049e-01, 7.5259e-03, -2.0453e-01, 
-5.7574e-01,\r\n -5.3062e-01, 3.9367e-01, -7.0627e-01, -9.2865e-01, 6.8820e-01,\r\n 3.2698e-01, -3.3506e-02, -1.2323e-01, -1.5304e-01, -1.8077e-01,\r\n 9.3398e-01, 2.6375e-01, 3.7505e-01, -8.9548e-01, 1.1777e-01,\r\n 2.2054e-01, -6.3351e-01, 1.0000e+00, -6.9228e-01, -9.8653e-01,\r\n 6.9799e-01, 4.0303e-01, 5.2453e-01, 2.3217e-01, -1.2151e-01,\r\n -1.0000e+00, 5.6760e-01, 2.9295e-02, -9.9318e-01, 8.3171e-02,\r\n 5.2939e-01, -2.3176e-01, -1.5694e-01, 4.9278e-01, -4.2614e-01,\r\n -3.8079e-01, -2.6060e-01, -6.9055e-01, -1.7180e-01, -1.9810e-01,\r\n -2.7986e-02, -7.2085e-02, -3.7635e-01, -3.7743e-01, 1.3508e-01,\r\n -4.3892e-01, -6.1321e-01, 1.7726e-01, -3.5434e-01, 6.4734e-01,\r\n 4.0373e-01, -2.8194e-01, 4.5104e-01, -9.7876e-01, 6.1044e-01,\r\n -2.3526e-01, -9.9035e-01, -5.1350e-01, -9.9280e-01, 6.8329e-01,\r\n -2.1623e-01, -1.4641e-01, 9.8273e-01, 3.7345e-01, 4.8171e-01,\r\n -5.6467e-03, -7.3005e-01, -1.0000e+00, -7.2252e-01, -5.1978e-01,\r\n 7.0765e-02, -1.5036e-01, -9.8355e-01, -9.7384e-01, 5.8453e-01,\r\n 9.6710e-01, 1.4193e-01, 9.9981e-01, -2.1194e-01, 9.6675e-01,\r\n 2.3627e-02, -4.1555e-01, 1.9872e-01, -4.0593e-01, 6.5180e-01,\r\n 6.1598e-01, -6.8750e-01, 7.9808e-02, -2.0437e-01, 3.4504e-01,\r\n -6.7176e-01, -1.3692e-01, -2.7750e-01, -9.6740e-01, -3.6698e-01,\r\n 9.6934e-01, -2.5050e-01, -6.9297e-01, 4.8327e-01, -1.4613e-01,\r\n -5.1224e-01, 8.8387e-01, 6.9173e-01, 3.8395e-01, -1.7536e-01,\r\n 3.8873e-01, -4.3011e-03, 6.1876e-01, -8.9292e-01, 3.4243e-02,\r\n 4.5193e-01, -2.4782e-01, -4.7402e-01, -9.8375e-01, -3.1763e-01,\r\n 5.9109e-01, 9.9284e-01, 7.9634e-01, 2.4601e-01, 6.1729e-01,\r\n -1.8376e-01, 6.8750e-01, -9.7083e-01, 9.8624e-01, -2.0573e-01,\r\n 2.0418e-01, 4.1400e-01, 1.9102e-01, -9.1718e-01, -3.5273e-01,\r\n 8.9628e-01, -5.6812e-01, -8.9552e-01, -3.5567e-02, -4.9052e-01,\r\n -4.3559e-01, -6.2323e-01, 5.6863e-01, -2.6201e-01, -3.1324e-01,\r\n -1.2852e-02, 9.4585e-01, 9.8664e-01, 8.3363e-01, -2.4392e-01,\r\n 7.3786e-01, -9.4466e-01, -5.2720e-01, 
-1.6349e-02, 2.4207e-01,\r\n 3.6905e-02, 9.9638e-01, -5.8095e-01, -7.2046e-02, -9.4418e-01,\r\n -9.8921e-01, -1.0289e-01, -9.3301e-01, -5.3531e-02, -6.8719e-01,\r\n 5.3295e-01, 1.6390e-01, 2.3460e-01, 4.3260e-01, -9.9501e-01,\r\n -7.7318e-01, 2.6342e-01, -3.6949e-01, 4.0245e-01, -1.6657e-01,\r\n 4.5766e-01, 7.4537e-01, -5.8549e-01, 8.4632e-01, 9.3526e-01,\r\n -6.4963e-01, -7.8264e-01, 8.5868e-01, -2.9683e-01, 9.0246e-01,\r\n -6.5124e-01, 9.8896e-01, 8.6732e-01, 8.7014e-01, -9.5627e-01,\r\n -4.1195e-01, -9.1043e-01, -4.5438e-01, 5.7729e-02, -3.6862e-01,\r\n 5.7032e-01, 5.5757e-01, 3.0482e-01, 7.0850e-01, -6.6279e-01,\r\n 9.9909e-01, -5.0139e-01, -9.7001e-01, -2.2370e-01, -1.8440e-02,\r\n -9.9107e-01, 7.2208e-01, 2.4379e-01, 6.9083e-02, -3.2313e-01,\r\n -7.3217e-01, -9.7295e-01, 9.2268e-01, 5.0675e-02, 9.9215e-01,\r\n -8.0247e-02, -9.5682e-01, -4.1637e-01, -9.4549e-01, -2.9790e-01,\r\n -1.5625e-01, 2.4707e-01, -1.8468e-01, -9.7276e-01, 4.7428e-01,\r\n 5.6760e-01, 5.5919e-01, -1.9418e-01, 9.9932e-01, 1.0000e+00,\r\n 9.7844e-01, 9.3669e-01, 9.5284e-01, -9.9929e-01, -4.9083e-01,\r\n 9.9999e-01, -9.7835e-01, -1.0000e+00, -9.5292e-01, -6.5736e-01,\r\n 4.1425e-01, -1.0000e+00, -2.7896e-03, 7.0756e-02, -9.4186e-01,\r\n 2.7960e-01, 9.8389e-01, 9.9658e-01, -1.0000e+00, 8.8289e-01,\r\n 9.6828e-01, -6.2958e-01, 9.3367e-01, -3.7519e-01, 9.8027e-01,\r\n 4.2505e-01, 3.0766e-01, -3.2042e-01, 2.7469e-01, -7.8253e-01,\r\n -8.8309e-01, -1.5604e-01, -3.6222e-01, 9.9091e-01, 3.0116e-02,\r\n -7.8697e-01, -9.4496e-01, 2.2050e-01, -8.4521e-02, -4.8378e-01,\r\n -9.7952e-01, -1.3446e-01, 4.2209e-01, 7.8760e-01, 6.4992e-02,\r\n 2.0492e-01, -7.8143e-01, 2.2120e-01, -5.0228e-01, 3.7149e-01,\r\n 6.5244e-01, -9.4897e-01, -6.0978e-01, -4.8976e-03, -4.6856e-01,\r\n -2.8122e-01, -9.6984e-01, 9.8036e-01, -3.5220e-01, 7.4903e-01,\r\n 1.0000e+00, -1.0373e-01, -9.4037e-01, 6.2856e-01, 1.5745e-01,\r\n -1.1596e-01, 1.0000e+00, 7.2891e-01, -9.8543e-01, -5.3814e-01,\r\n 4.0543e-01, -4.9501e-01, -4.8527e-01, 
9.9950e-01, -1.4058e-01,\r\n -2.3799e-01, -1.5841e-01, 9.8467e-01, -9.9180e-01, 9.7240e-01,\r\n -9.5292e-01, -9.8022e-01, 9.8012e-01, 9.5211e-01, -6.6387e-01,\r\n -7.2622e-01, 1.1509e-01, -3.1365e-01, 1.8487e-01, -9.7602e-01,\r\n 7.9482e-01, 5.2428e-01, -1.3540e-01, 9.1377e-01, -9.0275e-01,\r\n -5.2769e-01, 2.8301e-01, -4.9215e-01, 3.4866e-02, 8.3573e-01,\r\n 5.0270e-01, -2.4031e-01, -6.1194e-02, -2.5558e-01, -1.3530e-01,\r\n -9.8688e-01, 2.9877e-01, 1.0000e+00, -5.3199e-02, 4.4522e-01,\r\n -2.4564e-01, 3.8897e-02, -3.7170e-01, 3.6843e-01, 5.1087e-01,\r\n -1.9742e-01, -8.8481e-01, 4.5420e-01, -9.8222e-01, -9.8894e-01,\r\n 8.5417e-01, 1.4674e-01, -3.3154e-01, 9.9999e-01, 4.4333e-01,\r\n 7.1728e-02, 1.6790e-01, 9.6064e-01, 2.3267e-02, 7.2436e-01,\r\n 4.9905e-01, 9.8528e-01, -2.0286e-01, 5.2711e-01, 9.0711e-01,\r\n -5.6147e-01, -3.4452e-01, -6.1113e-01, -8.1268e-02, -9.2887e-01,\r\n 1.0119e-01, -9.7066e-01, 9.7404e-01, 8.2025e-01, 2.9760e-01,\r\n 1.9059e-01, 3.6089e-01, 1.0000e+00, -2.7256e-01, 6.5052e-01,\r\n -6.0092e-01, 9.0897e-01, -9.9819e-01, -9.1409e-01, -3.7810e-01,\r\n 1.2677e-02, -3.9492e-01, -3.0028e-01, 3.4323e-01, -9.7925e-01,\r\n 4.4501e-01, 3.7582e-01, -9.9622e-01, -9.9495e-01, 1.6366e-01,\r\n 9.2522e-01, -1.3063e-02, -9.5314e-01, -7.5003e-01, -6.5409e-01,\r\n 4.1526e-01, -7.6235e-02, -9.6046e-01, 3.2395e-01, -2.7184e-01,\r\n 4.7535e-01, -1.1767e-01, 5.6867e-01, 4.6844e-01, 8.3125e-01,\r\n -2.1505e-01, -2.6495e-01, -4.4479e-02, -8.5166e-01, 8.8927e-01,\r\n -8.9329e-01, -7.7919e-01, -1.5320e-01, 1.0000e+00, -4.3274e-01,\r\n 6.4268e-01, 7.7000e-01, 7.9197e-01, -5.4889e-02, 8.0927e-02,\r\n 7.9722e-01, 2.1034e-01, -1.9189e-01, -4.4749e-01, -8.0585e-01,\r\n -3.5409e-01, 7.0995e-01, 1.2411e-01, 2.0604e-01, 8.3328e-01,\r\n 7.4750e-01, 1.7900e-04, 7.6917e-02, -9.1725e-02, 9.9981e-01,\r\n -2.6801e-01, -8.3787e-02, -4.8642e-01, 1.1836e-01, -3.5603e-01,\r\n -5.8620e-01, 1.0000e+00, 2.2691e-01, 3.2801e-01, -9.9343e-01,\r\n -7.3298e-01, -9.5126e-01, 1.0000e+00, 
8.4895e-01, -8.6216e-01,\r\n 6.9319e-01, 5.5441e-01, -1.1380e-02, 8.6958e-01, -1.2449e-01,\r\n -2.8602e-01, 1.8517e-01, 9.2221e-02, 9.6773e-01, -4.5911e-01,\r\n -9.7611e-01, -6.6894e-01, 3.7154e-01, -9.7862e-01, 9.9949e-01,\r\n -5.5391e-01, -2.0926e-01, -3.9404e-01, -2.3863e-02, 6.3624e-01,\r\n -1.0563e-01, -9.8927e-01, -1.4047e-01, 1.2247e-01, 9.7469e-01,\r\n 2.6847e-01, -6.0451e-01, -9.5354e-01, 4.5191e-01, 6.6822e-01,\r\n -7.2218e-01, -9.6438e-01, 9.7538e-01, -9.9165e-01, 5.6641e-01,\r\n 1.0000e+00, 2.2837e-01, -2.8539e-01, 1.6956e-01, -4.6714e-01,\r\n 2.5561e-01, -2.6744e-01, 7.4301e-01, -9.7890e-01, -2.7469e-01,\r\n -1.4162e-01, 2.7886e-01, -7.0853e-02, -5.8891e-02, 8.2879e-01,\r\n 1.9968e-01, -5.4085e-01, -6.8158e-01, 3.7584e-02, 3.5805e-01,\r\n 8.9092e-01, -1.7879e-01, -8.1491e-02, 5.0655e-02, -7.9140e-02,\r\n -9.5114e-01, -1.4923e-01, -3.5370e-01, -9.9994e-01, 7.4321e-01,\r\n -1.0000e+00, 2.1850e-01, -2.5182e-01, -2.2171e-01, 8.7817e-01,\r\n 2.9648e-01, 3.4926e-01, -8.2534e-01, -3.8831e-01, 7.6622e-01,\r\n 8.0938e-01, -2.1051e-01, -3.0882e-01, -7.6183e-01, 2.2523e-01,\r\n -1.4952e-02, 1.5150e-01, -2.1056e-01, 7.3482e-01, -1.5207e-01,\r\n 1.0000e+00, 1.0631e-01, -7.7462e-01, -9.8438e-01, 1.6242e-01,\r\n -1.6337e-01, 1.0000e+00, -9.4196e-01, -9.7149e-01, 3.9827e-01,\r\n -7.2371e-01, -8.6582e-01, 3.0937e-01, -6.4325e-02, -8.1062e-01,\r\n -8.8436e-01, 9.8219e-01, 9.3543e-01, -5.6058e-01, 4.5004e-01,\r\n -3.2933e-01, -5.5851e-01, -6.9835e-02, 6.0196e-01, 9.9111e-01,\r\n 4.1170e-01, 9.1721e-01, 5.9978e-01, -9.4103e-02, 9.7966e-01,\r\n 1.5322e-01, 5.3662e-01, 4.2338e-02, 1.0000e+00, 2.8920e-01,\r\n -9.3933e-01, 2.4383e-01, -9.8948e-01, -1.5036e-01, -9.7242e-01,\r\n 2.8053e-01, 1.1691e-01, 9.0178e-01, -2.1055e-01, 9.7547e-01,\r\n -5.0734e-01, -8.5119e-03, -5.2189e-01, 1.1963e-01, 4.0313e-01,\r\n -9.4529e-01, -9.8752e-01, -9.8975e-01, 4.5711e-01, -4.0753e-01,\r\n 5.8175e-02, 1.1543e-01, 8.6051e-02, 3.6199e-01, 4.3131e-01,\r\n -1.0000e+00, 9.5818e-01, 4.0499e-01, 
6.9443e-01, 9.7521e-01,\r\n 6.7153e-01, 4.3386e-01, 2.2481e-01, -9.9118e-01, -9.9126e-01,\r\n -3.1248e-01, -1.4604e-01, 7.9951e-01, 6.1145e-01, 9.2726e-01,\r\n 4.0171e-01, -3.9375e-01, -2.0938e-01, -3.2651e-02, -4.1723e-01,\r\n -9.9582e-01, 4.5682e-01, -9.4401e-02, -9.8150e-01, 9.6766e-01,\r\n -5.5518e-01, -8.0481e-02, 4.4743e-01, -6.0429e-01, 9.7261e-01,\r\n 8.6633e-01, 3.7309e-01, 9.4917e-04, 4.6426e-01, 9.1590e-01,\r\n 9.6965e-01, 9.8799e-01, -4.6592e-01, 8.7146e-01, -3.1116e-01,\r\n 5.1496e-01, 6.7961e-01, -9.5609e-01, 1.3302e-03, 3.6581e-01,\r\n -2.3789e-01, 2.6341e-01, -1.2874e-01, -9.8464e-01, 4.8621e-01,\r\n -1.8921e-01, 6.1015e-01, -4.3986e-01, 2.1561e-01, -3.7115e-01,\r\n -1.5832e-02, -6.9704e-01, -7.3403e-01, 5.7310e-01, 5.0895e-01,\r\n 9.4111e-01, 6.9365e-01, 6.9171e-02, -7.3277e-01, -1.1294e-01,\r\n -4.0168e-01, -9.2587e-01, 9.6638e-01, 2.2207e-02, 1.5029e-01,\r\n 2.8954e-01, -8.5994e-02, 7.4631e-01, -1.5933e-01, -3.5710e-01,\r\n -1.6201e-01, -7.1149e-01, 9.0602e-01, -4.2873e-01, -4.6653e-01,\r\n -5.4765e-01, 7.4640e-01, 2.3966e-01, 9.9982e-01, -4.6795e-01,\r\n -6.4802e-01, -4.1201e-01, -3.4984e-01, 3.5475e-01, -5.4668e-01,\r\n -1.0000e+00, 3.6903e-01, -1.7324e-01, 4.3267e-01, -4.7206e-01,\r\n 6.3586e-01, -5.2151e-01, -9.9077e-01, -1.6597e-01, 2.6735e-01,\r\n 4.5069e-01, -4.3034e-01, -5.6321e-01, 5.7792e-01, 8.8123e-02,\r\n 9.4964e-01, 9.2798e-01, -3.3326e-01, 5.1963e-01, 6.0865e-01,\r\n -4.4019e-01, -6.8129e-01, 9.3489e-01]], grad_fn=<TanhBackward>))\r\n>>> len(output)\r\n3\r\n>>> output[0]\r\ntensor([[[ -6.3390, -6.3664, -6.4600, ..., -5.5354, -4.1787, -5.8384],\r\n [ -6.3550, -6.3077, -6.4661, ..., -5.3516, -4.1338, -4.0742],\r\n [ -6.7090, -6.6050, -6.6682, ..., -5.9591, -4.7142, -3.8219],\r\n [ -7.7608, -7.5956, -7.6634, ..., -6.8113, -5.7777, -4.1638],\r\n [ -8.6462, -8.5767, -8.6366, ..., -7.9503, -6.5382, -5.0959],\r\n [-12.8752, -12.3775, -12.2770, ..., -10.0880, -10.7659, -9.0092]]],\r\n grad_fn=<AddBackward0>)\r\n>>> 
output[0].shape\r\ntorch.Size([1, 6, 30522])\r\n>>> output[1]\r\ntensor([[[ 0.0929, -0.0264, -0.1224, ..., -0.2106, 0.1739, 0.1725],\r\n [ 0.4074, -0.0593, 0.5523, ..., -0.6791, 0.6556, -0.2946],\r\n [-0.2116, -0.6859, -0.4628, ..., 0.1528, 0.5977, -0.9102],\r\n [ 0.3992, -1.3208, -0.0801, ..., -0.3213, 0.2557, -0.5780],\r\n [-0.0757, -1.3394, 0.1816, ..., 0.0746, 0.4032, -0.7080],\r\n [ 0.5989, -0.2841, -0.3490, ..., 0.3042, -0.4368, -0.2097]]],\r\n grad_fn=<NativeLayerNormBackward>)\r\n>>> output[1].shape\r\ntorch.Size([1, 6, 768])\r\n>>> output[2]\r\ntensor([[-9.3097e-01, -3.3807e-01, -6.2162e-01, 8.4082e-01, 4.4154e-01,\r\n -1.5889e-01, 9.3273e-01, 2.2240e-01, -4.3249e-01, -9.9998e-01,\r\n -2.7810e-01, 8.9449e-01, 9.8638e-01, 6.4763e-02, 9.6649e-01,\r\n -7.7835e-01, -4.4046e-01, -5.9515e-01, 2.7585e-01, -7.4638e-01,\r\n 7.4700e-01, 9.9983e-01, 4.4468e-01, 2.8673e-01, 3.6586e-01,\r\n 9.7642e-01, -8.4343e-01, 9.6599e-01, 9.7235e-01, 7.2667e-01,\r\n -7.5785e-01, 9.2892e-02, -9.9089e-01, -1.7004e-01, -6.8200e-01,\r\n -9.9283e-01, 2.6244e-01, -7.9871e-01, 2.3397e-02, 4.6413e-02,\r\n -9.3371e-01, 2.7699e-01, 9.9995e-01, -3.2671e-01, 2.1108e-01,\r\n -2.0636e-01, -1.0000e+00, 1.9622e-01, -9.3330e-01, 6.8736e-01,\r\n 6.4731e-01, 5.3773e-01, 9.2759e-02, 4.1069e-01, 4.0360e-01,\r\n 1.9002e-01, -1.7049e-01, 7.5259e-03, -2.0453e-01, -5.7574e-01,\r\n -5.3062e-01, 3.9367e-01, -7.0627e-01, -9.2865e-01, 6.8820e-01,\r\n 3.2698e-01, -3.3506e-02, -1.2323e-01, -1.5304e-01, -1.8077e-01,\r\n 9.3398e-01, 2.6375e-01, 3.7505e-01, -8.9548e-01, 1.1777e-01,\r\n 2.2054e-01, -6.3351e-01, 1.0000e+00, -6.9228e-01, -9.8653e-01,\r\n 6.9799e-01, 4.0303e-01, 5.2453e-01, 2.3217e-01, -1.2151e-01,\r\n -1.0000e+00, 5.6760e-01, 2.9295e-02, -9.9318e-01, 8.3171e-02,\r\n 5.2939e-01, -2.3176e-01, -1.5694e-01, 4.9278e-01, -4.2614e-01,\r\n -3.8079e-01, -2.6060e-01, -6.9055e-01, -1.7180e-01, -1.9810e-01,\r\n -2.7986e-02, -7.2085e-02, -3.7635e-01, -3.7743e-01, 1.3508e-01,\r\n -4.3892e-01, -6.1321e-01, 
1.7726e-01, -3.5434e-01, 6.4734e-01,\r\n 4.0373e-01, -2.8194e-01, 4.5104e-01, -9.7876e-01, 6.1044e-01,\r\n -2.3526e-01, -9.9035e-01, -5.1350e-01, -9.9280e-01, 6.8329e-01,\r\n -2.1623e-01, -1.4641e-01, 9.8273e-01, 3.7345e-01, 4.8171e-01,\r\n -5.6467e-03, -7.3005e-01, -1.0000e+00, -7.2252e-01, -5.1978e-01,\r\n 7.0765e-02, -1.5036e-01, -9.8355e-01, -9.7384e-01, 5.8453e-01,\r\n 9.6710e-01, 1.4193e-01, 9.9981e-01, -2.1194e-01, 9.6675e-01,\r\n 2.3627e-02, -4.1555e-01, 1.9872e-01, -4.0593e-01, 6.5180e-01,\r\n 6.1598e-01, -6.8750e-01, 7.9808e-02, -2.0437e-01, 3.4504e-01,\r\n -6.7176e-01, -1.3692e-01, -2.7750e-01, -9.6740e-01, -3.6698e-01,\r\n 9.6934e-01, -2.5050e-01, -6.9297e-01, 4.8327e-01, -1.4613e-01,\r\n -5.1224e-01, 8.8387e-01, 6.9173e-01, 3.8395e-01, -1.7536e-01,\r\n 3.8873e-01, -4.3011e-03, 6.1876e-01, -8.9292e-01, 3.4243e-02,\r\n 4.5193e-01, -2.4782e-01, -4.7402e-01, -9.8375e-01, -3.1763e-01,\r\n 5.9109e-01, 9.9284e-01, 7.9634e-01, 2.4601e-01, 6.1729e-01,\r\n -1.8376e-01, 6.8750e-01, -9.7083e-01, 9.8624e-01, -2.0573e-01,\r\n 2.0418e-01, 4.1400e-01, 1.9102e-01, -9.1718e-01, -3.5273e-01,\r\n 8.9628e-01, -5.6812e-01, -8.9552e-01, -3.5567e-02, -4.9052e-01,\r\n -4.3559e-01, -6.2323e-01, 5.6863e-01, -2.6201e-01, -3.1324e-01,\r\n -1.2852e-02, 9.4585e-01, 9.8664e-01, 8.3363e-01, -2.4392e-01,\r\n 7.3786e-01, -9.4466e-01, -5.2720e-01, -1.6349e-02, 2.4207e-01,\r\n 3.6905e-02, 9.9638e-01, -5.8095e-01, -7.2046e-02, -9.4418e-01,\r\n -9.8921e-01, -1.0289e-01, -9.3301e-01, -5.3531e-02, -6.8719e-01,\r\n 5.3295e-01, 1.6390e-01, 2.3460e-01, 4.3260e-01, -9.9501e-01,\r\n -7.7318e-01, 2.6342e-01, -3.6949e-01, 4.0245e-01, -1.6657e-01,\r\n 4.5766e-01, 7.4537e-01, -5.8549e-01, 8.4632e-01, 9.3526e-01,\r\n -6.4963e-01, -7.8264e-01, 8.5868e-01, -2.9683e-01, 9.0246e-01,\r\n -6.5124e-01, 9.8896e-01, 8.6732e-01, 8.7014e-01, -9.5627e-01,\r\n -4.1195e-01, -9.1043e-01, -4.5438e-01, 5.7729e-02, -3.6862e-01,\r\n 5.7032e-01, 5.5757e-01, 3.0482e-01, 7.0850e-01, -6.6279e-01,\r\n 9.9909e-01, -5.0139e-01, 
-9.7001e-01, -2.2370e-01, -1.8440e-02,\r\n -9.9107e-01, 7.2208e-01, 2.4379e-01, 6.9083e-02, -3.2313e-01,\r\n -7.3217e-01, -9.7295e-01, 9.2268e-01, 5.0675e-02, 9.9215e-01,\r\n -8.0247e-02, -9.5682e-01, -4.1637e-01, -9.4549e-01, -2.9790e-01,\r\n -1.5625e-01, 2.4707e-01, -1.8468e-01, -9.7276e-01, 4.7428e-01,\r\n 5.6760e-01, 5.5919e-01, -1.9418e-01, 9.9932e-01, 1.0000e+00,\r\n 9.7844e-01, 9.3669e-01, 9.5284e-01, -9.9929e-01, -4.9083e-01,\r\n 9.9999e-01, -9.7835e-01, -1.0000e+00, -9.5292e-01, -6.5736e-01,\r\n 4.1425e-01, -1.0000e+00, -2.7896e-03, 7.0756e-02, -9.4186e-01,\r\n 2.7960e-01, 9.8389e-01, 9.9658e-01, -1.0000e+00, 8.8289e-01,\r\n 9.6828e-01, -6.2958e-01, 9.3367e-01, -3.7519e-01, 9.8027e-01,\r\n 4.2505e-01, 3.0766e-01, -3.2042e-01, 2.7469e-01, -7.8253e-01,\r\n -8.8309e-01, -1.5604e-01, -3.6222e-01, 9.9091e-01, 3.0116e-02,\r\n -7.8697e-01, -9.4496e-01, 2.2050e-01, -8.4521e-02, -4.8378e-01,\r\n -9.7952e-01, -1.3446e-01, 4.2209e-01, 7.8760e-01, 6.4992e-02,\r\n 2.0492e-01, -7.8143e-01, 2.2120e-01, -5.0228e-01, 3.7149e-01,\r\n 6.5244e-01, -9.4897e-01, -6.0978e-01, -4.8976e-03, -4.6856e-01,\r\n -2.8122e-01, -9.6984e-01, 9.8036e-01, -3.5220e-01, 7.4903e-01,\r\n 1.0000e+00, -1.0373e-01, -9.4037e-01, 6.2856e-01, 1.5745e-01,\r\n -1.1596e-01, 1.0000e+00, 7.2891e-01, -9.8543e-01, -5.3814e-01,\r\n 4.0543e-01, -4.9501e-01, -4.8527e-01, 9.9950e-01, -1.4058e-01,\r\n -2.3799e-01, -1.5841e-01, 9.8467e-01, -9.9180e-01, 9.7240e-01,\r\n -9.5292e-01, -9.8022e-01, 9.8012e-01, 9.5211e-01, -6.6387e-01,\r\n -7.2622e-01, 1.1509e-01, -3.1365e-01, 1.8487e-01, -9.7602e-01,\r\n 7.9482e-01, 5.2428e-01, -1.3540e-01, 9.1377e-01, -9.0275e-01,\r\n -5.2769e-01, 2.8301e-01, -4.9215e-01, 3.4866e-02, 8.3573e-01,\r\n 5.0270e-01, -2.4031e-01, -6.1194e-02, -2.5558e-01, -1.3530e-01,\r\n -9.8688e-01, 2.9877e-01, 1.0000e+00, -5.3199e-02, 4.4522e-01,\r\n -2.4564e-01, 3.8897e-02, -3.7170e-01, 3.6843e-01, 5.1087e-01,\r\n -1.9742e-01, -8.8481e-01, 4.5420e-01, -9.8222e-01, -9.8894e-01,\r\n 8.5417e-01, 
1.4674e-01, -3.3154e-01, 9.9999e-01, 4.4333e-01,\r\n 7.1728e-02, 1.6790e-01, 9.6064e-01, 2.3267e-02, 7.2436e-01,\r\n 4.9905e-01, 9.8528e-01, -2.0286e-01, 5.2711e-01, 9.0711e-01,\r\n -5.6147e-01, -3.4452e-01, -6.1113e-01, -8.1268e-02, -9.2887e-01,\r\n 1.0119e-01, -9.7066e-01, 9.7404e-01, 8.2025e-01, 2.9760e-01,\r\n 1.9059e-01, 3.6089e-01, 1.0000e+00, -2.7256e-01, 6.5052e-01,\r\n -6.0092e-01, 9.0897e-01, -9.9819e-01, -9.1409e-01, -3.7810e-01,\r\n 1.2677e-02, -3.9492e-01, -3.0028e-01, 3.4323e-01, -9.7925e-01,\r\n 4.4501e-01, 3.7582e-01, -9.9622e-01, -9.9495e-01, 1.6366e-01,\r\n 9.2522e-01, -1.3063e-02, -9.5314e-01, -7.5003e-01, -6.5409e-01,\r\n 4.1526e-01, -7.6235e-02, -9.6046e-01, 3.2395e-01, -2.7184e-01,\r\n 4.7535e-01, -1.1767e-01, 5.6867e-01, 4.6844e-01, 8.3125e-01,\r\n -2.1505e-01, -2.6495e-01, -4.4479e-02, -8.5166e-01, 8.8927e-01,\r\n -8.9329e-01, -7.7919e-01, -1.5320e-01, 1.0000e+00, -4.3274e-01,\r\n 6.4268e-01, 7.7000e-01, 7.9197e-01, -5.4889e-02, 8.0927e-02,\r\n 7.9722e-01, 2.1034e-01, -1.9189e-01, -4.4749e-01, -8.0585e-01,\r\n -3.5409e-01, 7.0995e-01, 1.2411e-01, 2.0604e-01, 8.3328e-01,\r\n 7.4750e-01, 1.7900e-04, 7.6917e-02, -9.1725e-02, 9.9981e-01,\r\n -2.6801e-01, -8.3787e-02, -4.8642e-01, 1.1836e-01, -3.5603e-01,\r\n -5.8620e-01, 1.0000e+00, 2.2691e-01, 3.2801e-01, -9.9343e-01,\r\n -7.3298e-01, -9.5126e-01, 1.0000e+00, 8.4895e-01, -8.6216e-01,\r\n 6.9319e-01, 5.5441e-01, -1.1380e-02, 8.6958e-01, -1.2449e-01,\r\n -2.8602e-01, 1.8517e-01, 9.2221e-02, 9.6773e-01, -4.5911e-01,\r\n -9.7611e-01, -6.6894e-01, 3.7154e-01, -9.7862e-01, 9.9949e-01,\r\n -5.5391e-01, -2.0926e-01, -3.9404e-01, -2.3863e-02, 6.3624e-01,\r\n -1.0563e-01, -9.8927e-01, -1.4047e-01, 1.2247e-01, 9.7469e-01,\r\n 2.6847e-01, -6.0451e-01, -9.5354e-01, 4.5191e-01, 6.6822e-01,\r\n -7.2218e-01, -9.6438e-01, 9.7538e-01, -9.9165e-01, 5.6641e-01,\r\n 1.0000e+00, 2.2837e-01, -2.8539e-01, 1.6956e-01, -4.6714e-01,\r\n 2.5561e-01, -2.6744e-01, 7.4301e-01, -9.7890e-01, -2.7469e-01,\r\n -1.4162e-01, 
2.7886e-01, -7.0853e-02, -5.8891e-02, 8.2879e-01,\r\n 1.9968e-01, -5.4085e-01, -6.8158e-01, 3.7584e-02, 3.5805e-01,\r\n 8.9092e-01, -1.7879e-01, -8.1491e-02, 5.0655e-02, -7.9140e-02,\r\n -9.5114e-01, -1.4923e-01, -3.5370e-01, -9.9994e-01, 7.4321e-01,\r\n -1.0000e+00, 2.1850e-01, -2.5182e-01, -2.2171e-01, 8.7817e-01,\r\n 2.9648e-01, 3.4926e-01, -8.2534e-01, -3.8831e-01, 7.6622e-01,\r\n 8.0938e-01, -2.1051e-01, -3.0882e-01, -7.6183e-01, 2.2523e-01,\r\n -1.4952e-02, 1.5150e-01, -2.1056e-01, 7.3482e-01, -1.5207e-01,\r\n 1.0000e+00, 1.0631e-01, -7.7462e-01, -9.8438e-01, 1.6242e-01,\r\n -1.6337e-01, 1.0000e+00, -9.4196e-01, -9.7149e-01, 3.9827e-01,\r\n -7.2371e-01, -8.6582e-01, 3.0937e-01, -6.4325e-02, -8.1062e-01,\r\n -8.8436e-01, 9.8219e-01, 9.3543e-01, -5.6058e-01, 4.5004e-01,\r\n -3.2933e-01, -5.5851e-01, -6.9835e-02, 6.0196e-01, 9.9111e-01,\r\n 4.1170e-01, 9.1721e-01, 5.9978e-01, -9.4103e-02, 9.7966e-01,\r\n 1.5322e-01, 5.3662e-01, 4.2338e-02, 1.0000e+00, 2.8920e-01,\r\n -9.3933e-01, 2.4383e-01, -9.8948e-01, -1.5036e-01, -9.7242e-01,\r\n 2.8053e-01, 1.1691e-01, 9.0178e-01, -2.1055e-01, 9.7547e-01,\r\n -5.0734e-01, -8.5119e-03, -5.2189e-01, 1.1963e-01, 4.0313e-01,\r\n -9.4529e-01, -9.8752e-01, -9.8975e-01, 4.5711e-01, -4.0753e-01,\r\n 5.8175e-02, 1.1543e-01, 8.6051e-02, 3.6199e-01, 4.3131e-01,\r\n -1.0000e+00, 9.5818e-01, 4.0499e-01, 6.9443e-01, 9.7521e-01,\r\n 6.7153e-01, 4.3386e-01, 2.2481e-01, -9.9118e-01, -9.9126e-01,\r\n -3.1248e-01, -1.4604e-01, 7.9951e-01, 6.1145e-01, 9.2726e-01,\r\n 4.0171e-01, -3.9375e-01, -2.0938e-01, -3.2651e-02, -4.1723e-01,\r\n -9.9582e-01, 4.5682e-01, -9.4401e-02, -9.8150e-01, 9.6766e-01,\r\n -5.5518e-01, -8.0481e-02, 4.4743e-01, -6.0429e-01, 9.7261e-01,\r\n 8.6633e-01, 3.7309e-01, 9.4917e-04, 4.6426e-01, 9.1590e-01,\r\n 9.6965e-01, 9.8799e-01, -4.6592e-01, 8.7146e-01, -3.1116e-01,\r\n 5.1496e-01, 6.7961e-01, -9.5609e-01, 1.3302e-03, 3.6581e-01,\r\n -2.3789e-01, 2.6341e-01, -1.2874e-01, -9.8464e-01, 4.8621e-01,\r\n -1.8921e-01, 
6.1015e-01, -4.3986e-01, 2.1561e-01, -3.7115e-01,\r\n -1.5832e-02, -6.9704e-01, -7.3403e-01, 5.7310e-01, 5.0895e-01,\r\n 9.4111e-01, 6.9365e-01, 6.9171e-02, -7.3277e-01, -1.1294e-01,\r\n -4.0168e-01, -9.2587e-01, 9.6638e-01, 2.2207e-02, 1.5029e-01,\r\n 2.8954e-01, -8.5994e-02, 7.4631e-01, -1.5933e-01, -3.5710e-01,\r\n -1.6201e-01, -7.1149e-01, 9.0602e-01, -4.2873e-01, -4.6653e-01,\r\n -5.4765e-01, 7.4640e-01, 2.3966e-01, 9.9982e-01, -4.6795e-01,\r\n -6.4802e-01, -4.1201e-01, -3.4984e-01, 3.5475e-01, -5.4668e-01,\r\n -1.0000e+00, 3.6903e-01, -1.7324e-01, 4.3267e-01, -4.7206e-01,\r\n 6.3586e-01, -5.2151e-01, -9.9077e-01, -1.6597e-01, 2.6735e-01,\r\n 4.5069e-01, -4.3034e-01, -5.6321e-01, 5.7792e-01, 8.8123e-02,\r\n 9.4964e-01, 9.2798e-01, -3.3326e-01, 5.1963e-01, 6.0865e-01,\r\n -4.4019e-01, -6.8129e-01, 9.3489e-01]], grad_fn=<TanhBackward>)\r\n>>> output[2].shape\r\ntorch.Size([1, 768])\r\n>>>\r\n```\r\n\r\n> @TheEdoardo93 it doesn't matter whether it is GPT2 or bert. Both has the same error :\r\n> I'm trying to play with GPT2 that's why I pasted my own code.\r\n> \r\n> Using BERT as an Encoder and Decoder\r\n> \r\n> ```\r\n> >>> model = PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased','bert-base-uncased')\r\n> >>> ouput = model(torch.tensor( encoder_input_ids).unsqueeze(0))\r\n> Traceback (most recent call last):\r\n> File \"<stdin>\", line 1, in <module>\r\n> File \"/home/guest_1/.local/lib/python3.6/site-packages/torch/nn/modules/module.py\", line 541, in __call__\r\n> result = self.forward(*input, **kwargs)\r\n> TypeError: forward() missing 1 required positional argument: 'decoder_input_ids'\r\n> ```",

"I got the same issue",

"Did you try my suggestion above? In my environment, it **works as expected**.\r\nMy environment details:\r\n- **OS**: Ubuntu 16.04\r\n- **Python**: 3.6.9\r\n- **Transformers**: 2.2.2 (installed with `pip install transformers`)\r\n- **PyTorch**: 1.3.1\r\n- **TensorFlow**: 2.0\r\n\r\nIf not, can you post your **environment** and a list of **steps** to reproduce the bug?\r\n\r\n> I got the same issue",

"> Said so, I've tested your code and I've revealed how to working with PreTrainedEncoderDecoder correctly without bugs. You can see my code below.\r\n\r\n@TheEdoardo93 it doesn't make sense to give your encoder's input as the decoder's input.\r\n\r\n",

"> > Said so, I've tested your code and I've revealed how to working with PreTrainedEncoderDecoder correctly without bugs. You can see my code below.\r\n> \r\n> @TheEdoardo93 that doesn't make sense your giving your encoders input as a decoder's input.\r\n> Never-mind, I know what's the issue is so closing it.\r\n\r\nSorry, my mistake. Can you share with us what the problem was and how you solved it?",

"@anandhperumal Could you please share how you solved the issue? Did you pass a ```<BOS>``` token as the decoder input?\r\nI'd appreciate it.",

"@anandhperumal can you let us know how you fixed the issue?",

"You can pass a start token to the decoder, just like in a Seq2Seq architecture.\r\n"

] | 1,576 | 1,592 | 1,576 |

NONE

| null |

## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....):

Language I am using the model on (English, Chinese....):

The problem arises when using:

* [x] the official example scripts: (give details)

* [ ] my own modified scripts: (give details)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: (give the name)

* [x] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1. Error while doing inference.

```
import torch
from transformers import PreTrainedEncoderDecoder, BertTokenizer

# Use BERT as both the encoder and the decoder
model = PreTrainedEncoderDecoder.from_pretrained('bert-base-uncased', 'bert-base-uncased')
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

encoder_input_ids = tokenizer.encode("Hi How are you")

# Only the encoder input is passed; this call raises the TypeError shown below
output = model(torch.tensor(encoder_input_ids).unsqueeze(0))
```

and the error is

```
TypeError: forward() missing 1 required positional argument: 'decoder_input_ids'
```

Why is a decoder input expected during inference?

Let me know if I'm missing anything.
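A likely explanation: the decoder of an encoder-decoder model is autoregressive, so even at inference time it needs a prefix of target tokens to condition on, and generation is usually seeded with a single start token. The pure-Python sketch below illustrates greedy decoding under that assumption. `step` is a hypothetical stand-in for one forward pass of the model that returns the next token id; it is not the `transformers` API, and `greedy_decode`, `copy_step`, `START` and `END` are illustrative names only.

```python
from typing import Callable, List

# Hypothetical stand-in for one encoder-decoder forward pass: takes the encoder
# token ids and the decoder ids generated so far, returns the next token id.
StepFn = Callable[[List[int], List[int]], int]


def greedy_decode(step: StepFn, encoder_ids: List[int], start_id: int,
                  end_id: int, max_len: int = 20) -> List[int]:
    """Greedy autoregressive decoding.

    The decoder is never called with an empty prefix: it is seeded with
    start_id, which is why a decoder input is needed even at inference time.
    """
    decoder_ids = [start_id]
    for _ in range(max_len):
        next_id = step(encoder_ids, decoder_ids)  # predict one token
        decoder_ids.append(next_id)               # feed it back as decoder input
        if next_id == end_id:
            break
    return decoder_ids


# Illustrative ids only; in the bert-base-uncased vocabulary [CLS] is 101 and
# [SEP] is 102, which are assumed here to act as start and end tokens.
START, END = 101, 102


def copy_step(encoder_ids: List[int], decoder_ids: List[int]) -> int:
    """Toy 'model' that copies the encoder tokens one by one, then emits END."""
    position = len(decoder_ids) - 1  # tokens produced so far, excluding START
    return encoder_ids[position] if position < len(encoder_ids) else END


if __name__ == "__main__":
    print(greedy_decode(copy_step, [7592, 2129], START, END))  # [101, 7592, 2129, 102]
```

With Transformers 2.2.x, the error message indicates that `forward()` takes `decoder_input_ids` as a required second positional argument, so each decoding step would pass both the encoder tensor and the decoder prefix tensor. Whether `[CLS]` is an appropriate start token depends on how the decoder was trained.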

## Environment

OS: ubuntu

Python version: 3.6

PyTorch version: 1.3.0

PyTorch Transformers version (or branch): 2.2.0

Using GPU? Yes

Distributed or parallel setup? No

Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/2206/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/2206/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/2205

|