url

stringlengths 62

66

| repository_url

stringclasses 1

value | labels_url

stringlengths 76

80

| comments_url

stringlengths 71

75

| events_url

stringlengths 69

73

| html_url

stringlengths 50

56

| id

int64 377M

2.15B

| node_id

stringlengths 18

32

| number

int64 1

29.2k

| title

stringlengths 1

487

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 2

classes | assignee

dict | assignees

list | comments

list | created_at

int64 1.54k

1.71k

| updated_at

int64 1.54k

1.71k

| closed_at

int64 1.54k

1.71k

⌀ | author_association

stringclasses 4

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

234k

⌀ | reactions

dict | timeline_url

stringlengths 71

75

| state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/1810

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1810/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1810/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1810/events

|

https://github.com/huggingface/transformers/issues/1810

| 521,782,106 |

MDU6SXNzdWU1MjE3ODIxMDY=

| 1,810 |

NameError: name 'DUMMY_INPUTS' is not defined - From TF to PyTorch

|

{

"login": "RubensZimbres",

"id": 20270054,

"node_id": "MDQ6VXNlcjIwMjcwMDU0",

"avatar_url": "https://avatars.githubusercontent.com/u/20270054?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/RubensZimbres",

"html_url": "https://github.com/RubensZimbres",

"followers_url": "https://api.github.com/users/RubensZimbres/followers",

"following_url": "https://api.github.com/users/RubensZimbres/following{/other_user}",

"gists_url": "https://api.github.com/users/RubensZimbres/gists{/gist_id}",

"starred_url": "https://api.github.com/users/RubensZimbres/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/RubensZimbres/subscriptions",

"organizations_url": "https://api.github.com/users/RubensZimbres/orgs",

"repos_url": "https://api.github.com/users/RubensZimbres/repos",

"events_url": "https://api.github.com/users/RubensZimbres/events{/privacy}",

"received_events_url": "https://api.github.com/users/RubensZimbres/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi! I believe this is a bug that was fixed on master. Could you try and install from source and tell me if it fixes your issue?\r\n\r\nYou can do so with the following command in your python environment:\r\n\r\n```\r\npip install git+https://github.com/huggingface/transformers\r\n```",

"@LysandreJik Thanks for the hint. I used a workaround. I installed `transformers` using:\r\n\r\n```\r\nconda install -c conda-forge transformers\r\n```\r\n\r\nIn Python 3.7.4, then I added `DUMMY_INPUTS = [[7, 6, 0, 0, 1], [1, 2, 3, 0, 0], [0, 0, 0, 4, 5]]` after variable `logger` in `/opt/anaconda3/lib/python3.7/site-packages/transformers/modeling_tf_utils.py`, because it was missing.\r\n\r\nIt was interesting, because in GCP, transformers were not showing in `python3` , only in `sudo python3`",

"@LysandreJik I updated the install from source but ran into the same issue. The \"solution\" was again as @RubensZimbres mentions: adding `DUMMY_INPUTS = [[7, 6, 0, 0, 1], [1, 2, 3, 0, 0], [0, 0, 0, 4, 5]]` after variable `logger`, however I needed it changed in `/opt/anaconda3/lib/python3.7/site-packages/transformers/modeling_tf_pytorch_utils.py`.\r\n",

"I created a Pull Request at: https://github.com/huggingface/transformers/pull/1847",

"Can't reproduce on master and the latest release (2.2.1).\r\nFeel free to reopen if the issue is still there.\r\n"

] | 1,573 | 1,575 | 1,575 |

NONE

| null |

## 🐛 Bug

I'm using TFBertForSequenceClassification, Tensorflow 2.0.0b0, PyTorch is up-to-date and the code from Hugging Face README.md:

```

import tensorflow as tf

import tensorflow_datasets

from transformers import *

tf.compat.v1.enable_eager_execution()

tokenizer = BertTokenizer.from_pretrained('bert-base-cased')

model = TFBertForSequenceClassification.from_pretrained('bert-base-cased')

data = tensorflow_datasets.load('glue/mrpc')

train_dataset = glue_convert_examples_to_features(data['train'], tokenizer, max_length=128, task='mrpc')

valid_dataset = glue_convert_examples_to_features(data['validation'], tokenizer, max_length=128, task='mrpc')

train_dataset = train_dataset.shuffle(100).batch(32).repeat(2)

valid_dataset = valid_dataset.batch(64)

optimizer = tf.keras.optimizers.Adam(learning_rate=3e-5, epsilon=1e-08, clipnorm=1.0)

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

metric = tf.keras.metrics.SparseCategoricalAccuracy('accuracy')

model.compile(optimizer=optimizer, loss=loss, metrics=[metric])

history = model.fit(train_dataset, epochs=2, steps_per_epoch=115,

validation_data=valid_dataset, validation_steps=7)

model.save_pretrained('/home/rubens_gmail_com/tf')

```

The model trains properly, but after weights are saved as `.h5` and I try to load to `BertForSequenceClassification.from_pretrained`, the following error shows up:

```

pytorch_model = BertForSequenceClassification.from_pretrained('/home/rubens_gmail_com/tf',from_tf=True)

NameError Traceback (most recent call last)

<ipython-input-25-7a878059a298> in <module>

1 pytorch_model = BertForSequenceClassification.from_pretrained('/home/rubens_gmail_com/tf',

----> 2 from_tf=True)

~/.local/lib/python3.7/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

357 try:

358 from transformers import load_tf2_checkpoint_in_pytorch_model

--> 359 model = load_tf2_checkpoint_in_pytorch_model(model, resolved_archive_file, allow_missing_keys=True)

360 except ImportError as e:

361 logger.error("Loading a TensorFlow model in PyTorch, requires both PyTorch and TensorFlow to be installed. Please see "

~/.local/lib/python3.7/site-packages/transformers/modeling_tf_pytorch_utils.py in load_tf2_checkpoint_in_pytorch_model(pt_model, tf_checkpoint_path, tf_inputs, allow_missing_keys)

199

200 if tf_inputs is None:

--> 201 tf_inputs = tf.constant(DUMMY_INPUTS)

202

203 if tf_inputs is not None:

NameError: name 'DUMMY_INPUTS' is not defined

```

If I run:

```

pytorch_model = BertForSequenceClassification.from_pretrained('/home/rubens_gmail_com/tf/tf_model.h5',from_tf=True)

```

I get:

```

UnicodeDecodeError Traceback (most recent call last)

<ipython-input-35-4146e5f25140> in <module>

----> 1 pytorch_model = BertForSequenceClassification.from_pretrained('/home/rubens_gmail_com/tf/tf_model.h5',from_tf=True)

~/.local/lib/python3.7/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

285 cache_dir=cache_dir, return_unused_kwargs=True,

286 force_download=force_download,

--> 287 **kwargs

288 )

289 else:

~/.local/lib/python3.7/site-packages/transformers/configuration_utils.py in from_pretrained(cls, pretrained_model_name_or_path, **kwargs)

152

153 # Load config

--> 154 config = cls.from_json_file(resolved_config_file)

155

156 if hasattr(config, 'pruned_heads'):

~/.local/lib/python3.7/site-packages/transformers/configuration_utils.py in from_json_file(cls, json_file)

184 """Constructs a `BertConfig` from a json file of parameters."""

185 with open(json_file, "r", encoding='utf-8') as reader:

--> 186 text = reader.read()

187 return cls.from_dict(json.loads(text))

188

/opt/anaconda3/lib/python3.7/codecs.py in decode(self, input, final)

320 # decode input (taking the buffer into account)

321 data = self.buffer + input

--> 322 (result, consumed) = self._buffer_decode(data, self.errors, final)

323 # keep undecoded input until the next call

324 self.buffer = data[consumed:]

UnicodeDecodeError: 'utf-8' codec can't decode byte 0x89 in position 0: invalid start byte

```

I'm using Python 3.7.4, 8 x V100 GPU, Anaconda environment on a Debian on GCP. Any ideas on how to overcome this issue ?

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1810/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1810/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1809

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1809/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1809/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1809/events

|

https://github.com/huggingface/transformers/issues/1809

| 521,750,456 |

MDU6SXNzdWU1MjE3NTA0NTY=

| 1,809 |

Why do language modeling heads not have activation functions?

|

{

"login": "langfield",

"id": 35980963,

"node_id": "MDQ6VXNlcjM1OTgwOTYz",

"avatar_url": "https://avatars.githubusercontent.com/u/35980963?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/langfield",

"html_url": "https://github.com/langfield",

"followers_url": "https://api.github.com/users/langfield/followers",

"following_url": "https://api.github.com/users/langfield/following{/other_user}",

"gists_url": "https://api.github.com/users/langfield/gists{/gist_id}",

"starred_url": "https://api.github.com/users/langfield/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/langfield/subscriptions",

"organizations_url": "https://api.github.com/users/langfield/orgs",

"repos_url": "https://api.github.com/users/langfield/repos",

"events_url": "https://api.github.com/users/langfield/events{/privacy}",

"received_events_url": "https://api.github.com/users/langfield/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi, some Transformers have activation in their heads, for instance, Bert.\r\nSee here: https://github.com/huggingface/transformers/blob/master/transformers/modeling_bert.py#L421\r\n\r\nThis is most likely a design choice with a minor effect for deep transformers as they learn to generate the current or next token along several of the output layers.\r\nSee the nice blog post and paper of Lena Voita for some intuition on this: https://lena-voita.github.io/posts/emnlp19_evolution.html",

"Thank you! That link was very helpful."

] | 1,573 | 1,573 | 1,573 |

NONE

| null |

## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

This is more of a question about the transformer architecture in general than anything else. I noticed that, in `modeling_openai.py`, for example, the `self.lm_head()` module is just a linear layer. Why is it sufficient to use a linear transformation here for the language modeling task? Would there by any advantages/disadvantages to throwing a `gelu` or something on the end and then calling the output of that the logits?

```python

class OpenAIGPTLMHeadModel(OpenAIGPTPreTrainedModel):

r"""

**labels**: (`optional`) ``torch.LongTensor`` of shape ``(batch_size, sequence_length)``:

Labels for language modeling.

Note that the labels **are shifted** inside the model, i.e. you can set ``labels = input_ids``

Indices are selected in ``[-1, 0, ..., config.vocab_size]``

All labels set to ``-1`` are ignored (masked), the loss is only

computed for labels in ``[0, ..., config.vocab_size]``

Outputs: `Tuple` comprising various elements depending on the configuration (config) and inputs:

**loss**: (`optional`, returned when ``labels`` is provided) ``torch.FloatTensor`` of shape ``(1,)``:

Language modeling loss.

**prediction_scores**: ``torch.FloatTensor`` of shape ``(batch_size, sequence_length, config.vocab_size)``

Prediction scores of the language modeling head (scores for each vocabulary token before SoftMax).

**hidden_states**: (`optional`, returned when ``config.output_hidden_states=True``)

list of ``torch.FloatTensor`` (one for the output of each layer + the output of the embeddings)

of shape ``(batch_size, sequence_length, hidden_size)``:

Hidden-states of the model at the output of each layer plus the initial embedding outputs.

**attentions**: (`optional`, returned when ``config.output_attentions=True``)

list of ``torch.FloatTensor`` (one for each layer) of shape ``(batch_size, num_heads, sequence_length, sequence_length)``:

Attentions weights after the attention softmax, used to compute the weighted average in the self-attention heads.

Examples::

tokenizer = OpenAIGPTTokenizer.from_pretrained('openai-gpt')

model = OpenAIGPTLMHeadModel.from_pretrained('openai-gpt')

input_ids = torch.tensor(tokenizer.encode("Hello, my dog is cute")).unsqueeze(0) # Batch size 1

outputs = model(input_ids, labels=input_ids)

loss, logits = outputs[:2]

"""

def __init__(self, config):

super(OpenAIGPTLMHeadModel, self).__init__(config)

self.transformer = OpenAIGPTModel(config)

self.lm_head = nn.Linear(config.n_embd, config.vocab_size, bias=False)

self.init_weights()

def get_output_embeddings(self):

return self.lm_head

def forward(self, input_ids=None, attention_mask=None, token_type_ids=None, position_ids=None, head_mask=None, inputs_embeds=None,

labels=None):

transformer_outputs = self.transformer(input_ids,

attention_mask=attention_mask,

token_type_ids=token_type_ids,

position_ids=position_ids,

head_mask=head_mask,

inputs_embeds=inputs_embeds)

hidden_states = transformer_outputs[0]

lm_logits = self.lm_head(hidden_states)

outputs = (lm_logits,) + transformer_outputs[1:]

if labels is not None:

# Shift so that tokens < n predict n

shift_logits = lm_logits[..., :-1, :].contiguous()

shift_labels = labels[..., 1:].contiguous()

# Flatten the tokens

loss_fct = CrossEntropyLoss(ignore_index=-1)

loss = loss_fct(shift_logits.view(-1, shift_logits.size(-1)),

shift_labels.view(-1))

outputs = (loss,) + outputs

return outputs # (loss), lm_logits, (all hidden states), (all attentions)

```

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1809/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1809/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1808

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1808/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1808/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1808/events

|

https://github.com/huggingface/transformers/issues/1808

| 521,734,279 |

MDU6SXNzdWU1MjE3MzQyNzk=

| 1,808 |

XLMForSequenceClassification - help with zero-shot cross-lingual classification

|

{

"login": "rsilveira79",

"id": 11993881,

"node_id": "MDQ6VXNlcjExOTkzODgx",

"avatar_url": "https://avatars.githubusercontent.com/u/11993881?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rsilveira79",

"html_url": "https://github.com/rsilveira79",

"followers_url": "https://api.github.com/users/rsilveira79/followers",

"following_url": "https://api.github.com/users/rsilveira79/following{/other_user}",

"gists_url": "https://api.github.com/users/rsilveira79/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rsilveira79/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rsilveira79/subscriptions",

"organizations_url": "https://api.github.com/users/rsilveira79/orgs",

"repos_url": "https://api.github.com/users/rsilveira79/repos",

"events_url": "https://api.github.com/users/rsilveira79/events{/privacy}",

"received_events_url": "https://api.github.com/users/rsilveira79/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi, we've added some details on the multi-modal models here: https://huggingface.co/transformers/multilingual.html\r\nAnd an XNLI example here: https://github.com/huggingface/transformers/tree/master/examples#xnli"

] | 1,573 | 1,575 | 1,575 |

NONE

| null |

## ❓ Questions & Help

Hi guys

According to XLM description (https://github.com/facebookresearch/XLM?fbclid=IwAR0-ZJpmWmIVfR20fA2KCHgrUU3k0cMUyx2n_V9-9C8g857-nhavrfBnVSI#pretrained-cross-lingual-language-models), we could potentially do XLNI by training in `en` dataset and do inference in other language:

```

XLMs can be used to build cross-lingual classifiers. After fine-tuning an XLM model

on an English training corpus for instance (e.g. of sentiment analysis, natural language

inference), the model is still able to make accurate predictions at test time in other

languages, for which there is very little or no training data. This approach is usually

referred to as "zero-shot cross-lingual classification".

```

I'm doing some tests w/ a dataset here at work, and I'm able to train the model with *XLMForSequenceClassification* and when I test it in `en`, performance looks great!

However, when I try to pass another language and do some inference, performance on other language (the so-called zero shot XLNI) does not look fine.

Here are the configurations I'm using:

```python

config = XLMConfig.from_pretrained('xlm-mlm-tlm-xnli15-1024')

config.num_labels = len(list(label_to_ix.values()))

config.n_layers = 6

tokenizer = XLMTokenizer.from_pretrained('xlm-mlm-tlm-xnli15-1024')

model = XLMForSequenceClassification(config)

```

I trained the forward pass passing the `lang` vector with `en` language:

```python

langs = torch.full(ids.shape, fill_value = language_id, dtype = torch.long).cuda()

output = model.forward(ids.cuda(), token_type_ids=tokens.cuda(), langs=langs, head_mask=None)[0]

```

For inference, I'm passing now other languages, by using following function:

```python

def get_reply(msg, language = 'en'):

features = prepare_features(msg, zero_pad = False)

language_id = tokenizer.lang2id[language]

ids = torch.tensor(features['input_ids']).unsqueeze(0).cuda()

langs = torch.full(ids.shape, fill_value = language_id, dtype = torch.long).cuda()

tokens = torch.tensor(features['token_type_ids']).unsqueeze(0).cuda()

output = model.forward(ids,token_type_ids=tokens, langs=langs)[0]

_, predicted = torch.max(output.data, 1)

return list(label_to_ix.keys())[predicted]

```

BTW, my `prepare_features` function is quite simple, just use the `encode_plus` method and then zero pad for a given sentence size:

```python

def prepare_features(seq_1, zero_pad = False, max_seq_length = 120):

enc_text = tokenizer.encode_plus(seq_1, add_special_tokens=True, max_length=300)

if zero_pad:

while len(enc_text['input_ids']) < max_seq_length:

enc_text['input_ids'].append(0)

enc_text['token_type_ids'].append(0)

return enc_text

```

I've noticed in file (https://github.com/huggingface/transformers/blob/master/transformers/modeling_xlm.py) that the forward loop for `XLMModel` does add `lang` vector as sort of an offset for the embeddings:

```python

tensor = tensor + self.lang_embeddings(langs)

```

I'm wondering if I'm passing the `lang` vector in right format. I choose the model with *tlm* language model on purpose because I was guessing that it benefits of the translation language model of the XLM. Have you guys experiment already with zero-shot text classification? Any clue my inference in other languages is not working?

Any insight will be greatly appreciated!

Cheers,

Roberto

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1808/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1808/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1807

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1807/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1807/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1807/events

|

https://github.com/huggingface/transformers/issues/1807

| 521,619,218 |

MDU6SXNzdWU1MjE2MTkyMTg=

| 1,807 |

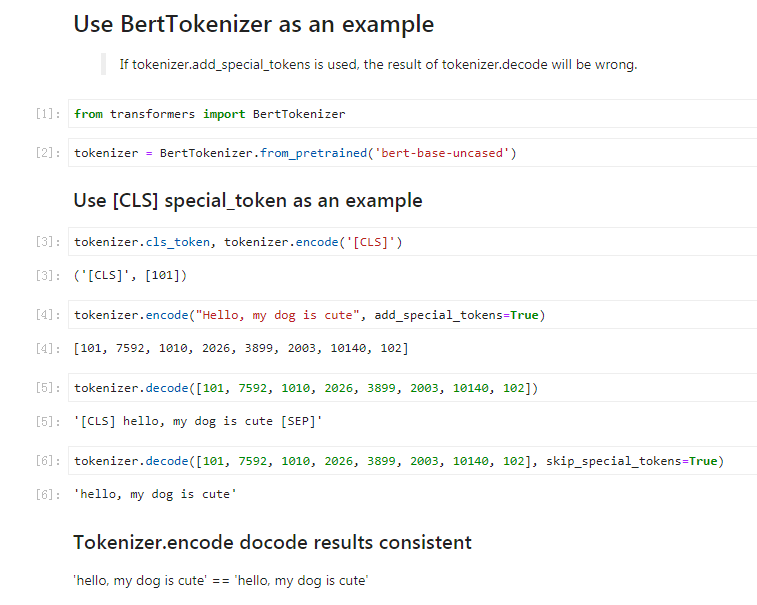

Whether it belongs to the bug of class trainedtokenizer decode?

|

{

"login": "yuanxiaosc",

"id": 16183570,

"node_id": "MDQ6VXNlcjE2MTgzNTcw",

"avatar_url": "https://avatars.githubusercontent.com/u/16183570?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yuanxiaosc",

"html_url": "https://github.com/yuanxiaosc",

"followers_url": "https://api.github.com/users/yuanxiaosc/followers",

"following_url": "https://api.github.com/users/yuanxiaosc/following{/other_user}",

"gists_url": "https://api.github.com/users/yuanxiaosc/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yuanxiaosc/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yuanxiaosc/subscriptions",

"organizations_url": "https://api.github.com/users/yuanxiaosc/orgs",

"repos_url": "https://api.github.com/users/yuanxiaosc/repos",

"events_url": "https://api.github.com/users/yuanxiaosc/events{/privacy}",

"received_events_url": "https://api.github.com/users/yuanxiaosc/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"You're right, this is an error. The PR #1811 aims to fix that issue!",

"It should be fixed now, thanks! Feel free to re-open if the error persists."

] | 1,573 | 1,573 | 1,573 |

NONE

| null |

## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1807/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1807/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1806

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1806/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1806/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1806/events

|

https://github.com/huggingface/transformers/issues/1806

| 521,590,490 |

MDU6SXNzdWU1MjE1OTA0OTA=

| 1,806 |

Extracting First Hidden States

|

{

"login": "brytjy",

"id": 46053996,

"node_id": "MDQ6VXNlcjQ2MDUzOTk2",

"avatar_url": "https://avatars.githubusercontent.com/u/46053996?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/brytjy",

"html_url": "https://github.com/brytjy",

"followers_url": "https://api.github.com/users/brytjy/followers",

"following_url": "https://api.github.com/users/brytjy/following{/other_user}",

"gists_url": "https://api.github.com/users/brytjy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/brytjy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/brytjy/subscriptions",

"organizations_url": "https://api.github.com/users/brytjy/orgs",

"repos_url": "https://api.github.com/users/brytjy/repos",

"events_url": "https://api.github.com/users/brytjy/events{/privacy}",

"received_events_url": "https://api.github.com/users/brytjy/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[] | 1,573 | 1,573 | 1,573 |

NONE

| null |

## ❓ Questions & Help

For example, right now in order to extract the first hidden states of DistilBert model,

tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased')

model = DistilBertModel.from_pretrained('distilbert-base-uncased', **output_hidden_states=True**)

input_ids = torch.tensor(tokenizer.encode("Hello, my dog is cute")).unsqueeze(0) # Batch size 1

outputs = model(input_ids)

**first_hidden_states = outputs[1][0] # The first hidden-state**

However, I can only extract the first layer hidden states after running through the entire model; all 7 layers (which is unnecessary if I only want the first).

Is there a way I could use the model to only extract the hidden states of the first layer and stop there? I am looking to further optimize the inference time of DistilBert.

Thank you :)

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1806/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1806/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1805

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1805/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1805/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1805/events

|

https://github.com/huggingface/transformers/issues/1805

| 521,574,385 |

MDU6SXNzdWU1MjE1NzQzODU=

| 1,805 |

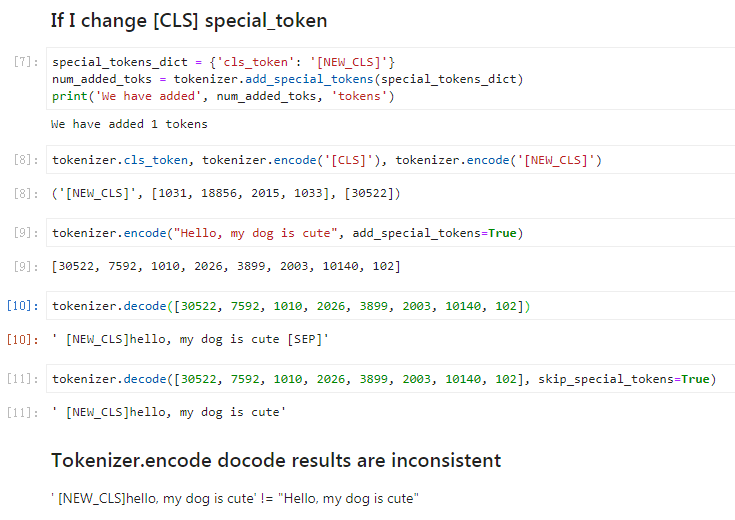

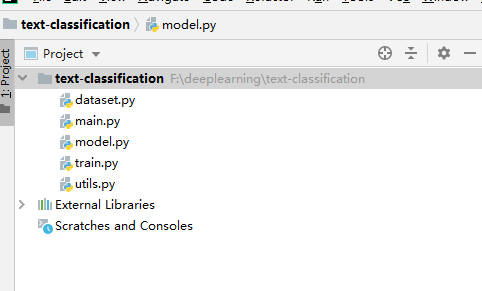

RuntimeError: CUDA error: device-side assert triggered

|

{

"login": "cswangjiawei",

"id": 33107884,

"node_id": "MDQ6VXNlcjMzMTA3ODg0",

"avatar_url": "https://avatars.githubusercontent.com/u/33107884?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cswangjiawei",

"html_url": "https://github.com/cswangjiawei",

"followers_url": "https://api.github.com/users/cswangjiawei/followers",

"following_url": "https://api.github.com/users/cswangjiawei/following{/other_user}",

"gists_url": "https://api.github.com/users/cswangjiawei/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cswangjiawei/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cswangjiawei/subscriptions",

"organizations_url": "https://api.github.com/users/cswangjiawei/orgs",

"repos_url": "https://api.github.com/users/cswangjiawei/repos",

"events_url": "https://api.github.com/users/cswangjiawei/events{/privacy}",

"received_events_url": "https://api.github.com/users/cswangjiawei/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] |

closed

| false | null |

[] |

[

"Are you in a multi-GPU setup ?",

"I did not use multi-GPU setup, I used to `model.cuda()`, it ocuurs \"RuntimeError: CUDA error: device-side assert triggered\", so I changed to `model.cuda(0)`, but the error still occurs.",

"Do you mind showing how you initialize BERT and the code surrounding the error?",

"My project structure is as follows:\r\n\r\nAnd `dataset.py` is as follows:\r\n```\r\n# -*- coding: utf-8 -*-\r\n\"\"\"\r\n@time: 2019/8/6 19:47\r\n@author: wangjiawei\r\n\"\"\"\r\n\r\n\r\nfrom torch.utils.data import Dataset\r\nimport torch\r\nimport csv\r\n\r\n\r\nclass MyDataset(Dataset):\r\n\r\n def __init__(self, data_path, tokenizer):\r\n super(MyDataset, self).__init__()\r\n\r\n self.tokenizer = tokenizer\r\n texts, labels = [], []\r\n with open(data_path, 'r', encoding='utf-8') as csv_file:\r\n reader = csv.reader(csv_file, quotechar='\"')\r\n for idx, line in enumerate(reader):\r\n text = \"\"\r\n for tx in line[1:]:\r\n text += tx\r\n text += \" \"\r\n text = self.tokenizer.tokenize(text)\r\n if len(text) > 512:\r\n text = text[:512]\r\n text = self.tokenizer.encode(text, add_special_tokens=True)\r\n text_id = torch.tensor(text)\r\n texts.append(text_id)\r\n label = int(line[0]) - 1\r\n labels.append(label)\r\n\r\n self.texts = texts\r\n self.labels = labels\r\n self.num_class = len(set(self.labels))\r\n\r\n def __len__(self):\r\n return len(self.labels)\r\n\r\n def __getitem__(self, item):\r\n label = self.labels[item]\r\n text = self.texts[item]\r\n\r\n return {'text': text, 'label': torch.tensor(label).long()}\r\n```\r\n\r\n`main.py` is as follows:\r\n```\r\n# -*- coding: utf-8 -*-\r\n\"\"\"\r\n@time: 2019/7/17 20:37\r\n@author: wangjiawei\r\n\"\"\"\r\n\r\nimport os\r\nimport torch\r\nimport torch.nn as nn\r\nfrom torch.utils.data import DataLoader, random_split\r\nfrom utils import get_default_folder, my_collate_fn\r\nfrom dataset import MyDataset\r\nfrom model import TextClassify\r\nfrom torch.utils.tensorboard import SummaryWriter\r\nimport argparse\r\nimport shutil\r\nimport numpy as np\r\nimport random\r\nimport time\r\nfrom train import train_model, evaluate\r\nfrom transformers import BertModel, BertTokenizer\r\n\r\nseed_num = 123\r\nrandom.seed(seed_num)\r\ntorch.manual_seed(seed_num)\r\nnp.random.seed(seed_num)\r\n\r\nif __name__ == \"__main__\":\r\n parser = argparse.ArgumentParser(\"self attention for Text Categorization\")\r\n parser.add_argument(\"--batch_size\", type=int, default=64)\r\n parser.add_argument(\"--num_epoches\", type=int, default=20)\r\n parser.add_argument(\"--lr\", type=float, default=0.001)\r\n parser.add_argument(\"--kernel_size\", type=int, default=3)\r\n parser.add_argument(\"--word_dim\", type=int, default=768)\r\n parser.add_argument(\"--out_dim\", type=int, default=300)\r\n parser.add_argument(\"--dropout\", default=0.5)\r\n parser.add_argument(\"--es_patience\", type=int, default=3)\r\n parser.add_argument(\"--dataset\", type=str,\r\n choices=[\"agnews\", \"dbpedia\", \"yelp_review\", \"yelp_review_polarity\", \"amazon_review\",\r\n \"amazon_polarity\", \"sogou_news\", \"yahoo_answers\"], default=\"yelp_review\")\r\n parser.add_argument(\"--log_path\", type=str, default=\"tensorboard/classify\")\r\n\r\n args = parser.parse_args()\r\n\r\n input, output = get_default_folder(args.dataset)\r\n train_path = input + os.sep + \"train.csv\"\r\n\r\n if not os.path.exists(output):\r\n os.makedirs(output)\r\n\r\n # with open(input + os.sep + args.vocab_file, 'rb') as f1:\r\n # vocab = pickle.load(f1)\r\n\r\n # emb_begin = time.time()\r\n # pretrain_word_embedding = build_pretrain_embedding(args.embedding_path, vocab, args.word_dim)\r\n # emb_end = time.time()\r\n # emb_min = (emb_end - emb_begin) % 3600 // 60\r\n # print('build pretrain embed cost {}m'.format(emb_min))\r\n\r\n model_class, tokenizer_class, pretrained_weights = BertModel, BertTokenizer, 'bert-base-uncased'\r\n tokenizer = tokenizer_class.from_pretrained(pretrained_weights)\r\n bert = model_class.from_pretrained(pretrained_weights)\r\n\r\n\r\n train_dev_dataset = MyDataset(input + os.sep + \"train.csv\", tokenizer)\r\n len_train_dev_dataset = len(train_dev_dataset)\r\n dev_size = int(len_train_dev_dataset * 0.1)\r\n train_size = len_train_dev_dataset - dev_size\r\n train_dataset, dev_dataset = random_split(train_dev_dataset, [train_size, dev_size])\r\n test_dataset = MyDataset(input + os.sep + \"test.csv\", tokenizer)\r\n\r\n train_dataloader = DataLoader(train_dataset, batch_size=args.batch_size, shuffle=True, collate_fn=my_collate_fn)\r\n dev_dataloader = DataLoader(dev_dataset, batch_size=args.batch_size, shuffle=False, collate_fn=my_collate_fn)\r\n test_dataloader = DataLoader(test_dataset, batch_size=args.batch_size, shuffle=False, collate_fn=my_collate_fn)\r\n\r\n model = TextClassify(bert, args.kernel_size, args.word_dim, args.out_dim, test_dataset.num_class, args.dropout)\r\n\r\n log_path = \"{}_{}\".format(args.log_path, args.dataset)\r\n if os.path.isdir(log_path):\r\n shutil.rmtree(log_path)\r\n os.makedirs(log_path)\r\n writer = SummaryWriter(log_path)\r\n\r\n if torch.cuda.is_available():\r\n model.cuda(0)\r\n\r\n criterion = nn.CrossEntropyLoss()\r\n optimizer = torch.optim.Adam(model.parameters(), lr=args.lr)\r\n best_acc = -1\r\n early_stop = 0\r\n model.train()\r\n batch_num = -1\r\n\r\n train_begin = time.time()\r\n\r\n for epoch in range(args.num_epoches):\r\n epoch_begin = time.time()\r\n print('train {}/{} epoch'.format(epoch + 1, args.num_epoches))\r\n batch_num = train_model(model, optimizer, criterion, batch_num, train_dataloader, writer)\r\n dev_acc = evaluate(model, dev_dataloader)\r\n writer.add_scalar('dev/Accuracy', dev_acc, epoch)\r\n print('dev_acc:', dev_acc)\r\n if dev_acc > best_acc:\r\n early_stop = 0\r\n best_acc = dev_acc\r\n print('new best_acc on dev set:', best_acc)\r\n torch.save(model.state_dict(), \"{}{}\".format(output, 'best.pt'))\r\n else:\r\n early_stop += 1\r\n\r\n epoch_end = time.time()\r\n cost_time = epoch_end - epoch_begin\r\n print('train {}th epoch cost {}m {}s'.format(epoch + 1, int(cost_time / 60), int(cost_time % 60)))\r\n print()\r\n\r\n # Early stopping\r\n if early_stop > args.es_patience:\r\n print(\r\n \"Stop training at epoch {}. The best dev_acc achieved is {}\".format(epoch - args.es_patience, best_acc))\r\n break\r\n\r\n train_end = time.time()\r\n train_cost = train_end - train_begin\r\n hour = int(train_cost / 3600)\r\n min = int((train_cost % 3600) / 60)\r\n second = int(train_cost % 3600 % 60)\r\n print()\r\n print()\r\n print('train end', '-' * 50)\r\n print('train total cost {}h {}m {}s'.format(hour, min, second))\r\n print('-' * 50)\r\n\r\n model_name = \"{}{}\".format(output, 'best.pt')\r\n\r\n model.load_state_dict(torch.load(model_name))\r\n test_acc = evaluate(model, test_dataloader)\r\n print('test acc on test set:', test_acc)\r\n```\r\n\r\n`model.py` is as follows:\r\n```\r\n# -*- coding: utf-8 -*-\r\n\"\"\"\r\n@time: 2019/8/6 19:04\r\n@author: wangjiawei\r\n\"\"\"\r\n\r\nimport torch.nn as nn\r\nimport torch\r\nimport torch.nn.functional as F\r\n\r\n\r\nclass TextClassify(nn.Module):\r\n def __init__(self, bert, kernel_size, word_dim, out_dim, num_class, dropout=0.5):\r\n super(TextClassify, self).__init__()\r\n self.bert = bert\r\n self.cnn = nn.Sequential(nn.Conv1d(word_dim, out_dim, kernel_size=kernel_size, padding=1), nn.ReLU(inplace=True))\r\n self.drop = nn.Dropout(dropout, inplace=True)\r\n self.fc = nn.Linear(out_dim, num_class)\r\n\r\n def forward(self, word_input):\r\n batch_size = word_input.size(0)\r\n represent = self.bert(word_input)[0]\r\n represent = self.drop(represent)\r\n represent = represent.transpose(1, 2)\r\n contexts = self.cnn(represent)\r\n feature = F.max_pool1d(contexts, contexts.size(2)).contiguous().view(batch_size, -1)\r\n feature = self.drop(feature)\r\n feature = self.fc(feature)\r\n return feature\r\n```\r\n\r\n`train.py` is as follows:\r\n```\r\n# -*- coding: utf-8 -*-\r\n\"\"\"\r\n@time: 2019/8/6 20:14\r\n@author: wangjiawei\r\n\"\"\"\r\n\r\n\r\nimport torch\r\n\r\n\r\ndef train_model(model, optimizer, criterion, batch_num, dataloader, writer):\r\n model.train()\r\n\r\n for batch in dataloader:\r\n model.zero_grad()\r\n batch_num += 1\r\n text = batch['text']\r\n label = batch['label']\r\n if torch.cuda.is_available():\r\n text = text.cuda(0)\r\n label = label.cuda(0)\r\n\r\n feature = model(text)\r\n loss = criterion(feature, label)\r\n writer.add_scalar('Train/Loss', loss, batch_num)\r\n loss.backward()\r\n optimizer.step()\r\n\r\n return batch_num\r\n\r\n\r\ndef evaluate(model, dataloader):\r\n model.eval()\r\n correct_num = 0\r\n total_num = 0\r\n\r\n for batch in dataloader:\r\n text = batch['text']\r\n label = batch['label']\r\n if torch.cuda.is_available():\r\n text = text.cuda(0)\r\n label = label.cuda(0)\r\n\r\n with torch.no_grad():\r\n predictions = model(text)\r\n _, preds = torch.max(predictions, 1)\r\n correct_num += torch.sum((preds == label)).item()\r\n total_num += len(label)\r\n\r\n acc = (correct_num / total_num) * 100\r\n return acc\r\n```\r\n\r\n`utils.py` is as follows:\r\n```\r\n# -*- coding: utf-8 -*-\r\n\"\"\"\r\n@time: 2019/8/6 19:54\r\n@author: wangjiawei\r\n\"\"\"\r\n\r\n\r\nimport csv\r\nimport nltk\r\nimport numpy as np\r\nfrom torch.nn.utils.rnn import pad_sequence\r\nimport torch\r\n\r\n\r\ndef load_pretrain_emb(embedding_path, embedd_dim):\r\n embedd_dict = dict()\r\n with open(embedding_path, 'r', encoding=\"utf8\") as file:\r\n for line in file:\r\n line = line.strip()\r\n if len(line) == 0:\r\n continue\r\n tokens = line.split()\r\n if not embedd_dim + 1 == len(tokens):\r\n continue\r\n embedd = np.empty([1, embedd_dim])\r\n embedd[:] = tokens[1:]\r\n first_col = tokens[0]\r\n embedd_dict[first_col] = embedd\r\n return embedd_dict\r\n\r\n\r\ndef build_pretrain_embedding(embedding_path, vocab, embedd_dim=300):\r\n embedd_dict = dict()\r\n if embedding_path is not None:\r\n embedd_dict = load_pretrain_emb(embedding_path, embedd_dim)\r\n alphabet_size = vocab.size()\r\n scale = 0.1\r\n pretrain_emb = np.empty([vocab.size(), embedd_dim])\r\n perfect_match = 0\r\n case_match = 0\r\n not_match = 0\r\n for word, index in vocab.items():\r\n if word in embedd_dict:\r\n pretrain_emb[index, :] = embedd_dict[word]\r\n perfect_match += 1\r\n elif word.lower() in embedd_dict:\r\n pretrain_emb[index, :] = embedd_dict[word.lower()]\r\n case_match += 1\r\n else:\r\n pretrain_emb[index, :] = np.random.uniform(-scale, scale, [1, embedd_dim])\r\n not_match += 1\r\n\r\n pretrain_emb[0, :] = np.zeros((1, embedd_dim))\r\n pretrained_size = len(embedd_dict)\r\n print('pretrained_size:', pretrained_size)\r\n print(\"Embedding:\\n pretrain word:%s, prefect match:%s, case_match:%s, oov:%s, oov%%:%s\" % (\r\n pretrained_size, perfect_match, case_match, not_match, (not_match + 0.) / alphabet_size))\r\n return pretrain_emb\r\n\r\n\r\ndef get_default_folder(dataset):\r\n if dataset == \"agnews\":\r\n input = \"data/ag_news_csv\"\r\n output = \"output/ag_news/\"\r\n elif dataset == \"dbpedia\":\r\n input = \"data/dbpedia_csv\"\r\n output = \"output/dbpedia/\"\r\n elif dataset == \"yelp_review\":\r\n input = \"data/yelp_review_full_csv\"\r\n output = \"output/yelp_review_full/\"\r\n elif dataset == \"yelp_review_polarity\":\r\n input = \"data/yelp_review_polarity_csv\"\r\n output = \"output/yelp_review_polarity/\"\r\n elif dataset == \"amazon_review\":\r\n input = \"data/amazon_review_full_csv\"\r\n output = \"output/amazon_review_full/\"\r\n elif dataset == \"amazon_polarity\":\r\n input = \"data/amazon_review_polarity_csv\"\r\n output = \"output/amazon_review_polarity/\"\r\n elif dataset == \"sogou_news\":\r\n input = \"data/sogou_news_csv\"\r\n output = \"output/sogou_news/\"\r\n elif dataset == \"yahoo_answers\":\r\n input = \"data/yahoo_answers_csv\"\r\n output = \"output/yahoo_answers/\"\r\n return input, output\r\n\r\n\r\ndef my_collate(batch_tensor, key):\r\n if key == 'text':\r\n batch_tensor = pad_sequence(batch_tensor, batch_first=True, padding_value=0)\r\n else:\r\n batch_tensor = torch.stack(batch_tensor)\r\n return batch_tensor\r\n\r\n\r\ndef my_collate_fn(batch):\r\n return {key: my_collate([d[key] for d in batch], key) for key in batch[0]}\r\n\r\n\r\nclass Vocabulary(object):\r\n def __init__(self, filename):\r\n self._id_to_word = []\r\n self._word_to_id = {}\r\n self._pad = -1\r\n self._unk = -1\r\n self.index = 0\r\n\r\n self._id_to_word.append('<PAD>')\r\n self._word_to_id['<PAD>'] = self.index\r\n self._pad = self.index\r\n self.index += 1\r\n self._id_to_word.append('<UNK>')\r\n self._word_to_id['<UNK>'] = self.index\r\n self._unk = self.index\r\n self.index += 1\r\n\r\n word_num = dict()\r\n\r\n with open(filename, 'r', encoding='utf-8') as f1:\r\n reader = csv.reader(f1, quotechar='\"')\r\n for line in reader:\r\n text = \"\"\r\n for tx in line[1:]:\r\n text += tx\r\n text += \" \"\r\n\r\n text = nltk.word_tokenize(text)\r\n for word in text:\r\n if word not in word_num:\r\n word_num[word] = 0\r\n word_num[word] += 1\r\n\r\n for word, num in word_num.items():\r\n if num >= 3:\r\n self._id_to_word.append(word)\r\n self._word_to_id[word] = self.index\r\n self.index += 1\r\n\r\n def unk(self):\r\n return self._unk\r\n\r\n def pad(self):\r\n return self._pad\r\n\r\n def size(self):\r\n return len(self._id_to_word)\r\n\r\n def word_to_id(self, word):\r\n if word in self._word_to_id:\r\n return self._word_to_id[word]\r\n elif word.lower() in self._word_to_id:\r\n return self._word_to_id[word.lower()]\r\n return self.unk()\r\n\r\n def id_to_word(self, cur_id):\r\n return self._id_to_word[cur_id]\r\n\r\n def items(self):\r\n return self._word_to_id.items()\r\n```\r\n",

"when I use Google Cloud, it works. Before I used Google Colab. It is strange.",

"It's probably because your token embeddings size (vocab size) doesn't match with pre-trained model. Do `model.resize_token_embeddings(len(tokenizer))` before training. Please check #1848 and #1849 ",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"I also faced the same issue while encoding 20_newspaper dataset, After further investigation, I found there are some sentences which are not in the English language, for example :\r\n\r\n`'subject romanbmp pa of from pwisemansalmonusdedu cliff replyto pwisemansalmonusdedu cliff distribution usa organization university of south dakota lines maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxaxaxax39f8z51 wwizbhjbhjbhjbhjgiz mgizgizbhjgizm 1tei0lv9f9f9fra 5z46q0475vo4 mu34u34u m34w 084oo aug 0y5180 mc p8v5555555555965hwgv 7uqgy gp452gvbdigiz maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxaxax34t2php2app12a34u34tm11 6um6 mum8 zbjf0kvlvc9vde5e9e5g9vg9v38vc o3o n5 mardi 24y2 g92li6e0q8axaxaxaxaxaxaxax maxaxaxaxaxaxaxas9ne1whjn 1tei4pmf9l3u3 mr hjpm75u4u34u34u nfyn 46uo m5ug 0y4518hr8y3m15556tdy65c8u 47y m7 hsxgjeuaxaxaxaxaxaxaxaxaxaxaxax maxaxaxaxaxaxaxax34t2pchp2a2a2a234u m34u3 zexf3w21fp4 wm3t f9d uzi0mf8axaxaxaxaxaxrxkrldajj mkjznbbwp0nvmkvmkvnrkmkvmhi axaxaxaxax maxaxaxaxaxaxaxks8vc9vfiue949h g9v38v6un5 mg83q3x w5 3t pi0wsr4c362l zkn2axaxaxaxaxaxaxaxaxaxax maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxax39f9fpli6e1t1'`\r\n\r\n**This was causing the model to produce this error If you are facing this issue follow these steps:** \r\n\r\n1. Catch Exception and store the sentences in the new list to see if the sentence is having weird characters \r\n2. Reduce the batch size, If your batch size is too high (if you are using batch encoding)\r\n3. Check if your sentence length is too long for the model to encode, trim the sentence length ( try 200 first then 128 )",

"I am continuously getting the runtime error: CUDA error: device-side assert triggered, I am new to transformer library.\r\n\r\nI am creating the longformer classifier in the below format:\r\n\r\nmodel = LongformerForSequenceClassification.from_pretrained('allenai/longformer-base-4096', gradient_checkpointing = True, num_labels = 5)\r\n\r\nand tokenizer as :\r\n\r\ntokenizer = LongformerTokenizer.from_pretrained('allenai/longformer-base-4096')\r\n\r\nencoded_data_train = tokenizer.batch_encode_plus(\r\ndf[df.data_type=='train'].content.values,\r\nadd_special_tokens = True,\r\nreturn_attention_mask=True,\r\npadding = True,\r\nmax_length = 3800,\r\nreturn_tensors='pt'\r\n)\r\n\r\nCan anyone please help? I am using latest version of transformer library and using google colab GPU for building my classifier.",

"> I also faced the same issue while encoding 20_newspaper dataset, After further investigation, I found there are some sentences which are not in the English language, for example :\r\n> \r\n> `'subject romanbmp pa of from pwisemansalmonusdedu cliff replyto pwisemansalmonusdedu cliff distribution usa organization university of south dakota lines maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxaxaxax39f8z51 wwizbhjbhjbhjbhjgiz mgizgizbhjgizm 1tei0lv9f9f9fra 5z46q0475vo4 mu34u34u m34w 084oo aug 0y5180 mc p8v5555555555965hwgv 7uqgy gp452gvbdigiz maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxaxax34t2php2app12a34u34tm11 6um6 mum8 zbjf0kvlvc9vde5e9e5g9vg9v38vc o3o n5 mardi 24y2 g92li6e0q8axaxaxaxaxaxaxax maxaxaxaxaxaxaxas9ne1whjn 1tei4pmf9l3u3 mr hjpm75u4u34u34u nfyn 46uo m5ug 0y4518hr8y3m15556tdy65c8u 47y m7 hsxgjeuaxaxaxaxaxaxaxaxaxaxaxax maxaxaxaxaxaxaxax34t2pchp2a2a2a234u m34u3 zexf3w21fp4 wm3t f9d uzi0mf8axaxaxaxaxaxrxkrldajj mkjznbbwp0nvmkvmkvnrkmkvmhi axaxaxaxax maxaxaxaxaxaxaxks8vc9vfiue949h g9v38v6un5 mg83q3x w5 3t pi0wsr4c362l zkn2axaxaxaxaxaxaxaxaxaxax maxaxaxaxaxaxaxaxaxaxaxaxaxaxax maxax39f9fpli6e1t1'`\r\n> \r\n> **This was causing the model to produce this error If you are facing this issue follow these steps:**\r\n> \r\n> 1. Catch Exception and store the sentences in the new list to see if the sentence is having weird characters\r\n> \r\n> 2. Reduce the batch size, If your batch size is too high (if you are using batch encoding)\r\n> \r\n> 3. Check if your sentence length is too long for the model to encode, trim the sentence length ( try 200 first then 128 )\r\n\r\nWhat a bizzare dataset. \r\n\r\nSo, this error does occur if you use a an english tokenizer on some random garbage input! \r\n\r\n```python3\r\nfrom langdetect import detect\r\ndef detect_robust(x):\r\n try:\r\n out = detect(x) # 20 news managed to break this\r\n except Exception:\r\n out = 'not-en'\r\n return out\r\n\r\nlang_ = train_df['message'].map(detect_robust)\r\ntrain_df = train_df[lang_=='en']\r\n```\r\n^ this is ridiculously slow, but after the cleaning the error is gone!\r\n"

] | 1,573 | 1,617 | 1,579 |

NONE

| null |

## ❓ Questions & Help

. "RuntimeError: CUDA error: device-side assert triggered" occurs. My model is as follows:

```

class TextClassify(nn.Module):

def __init__(self, bert, kernel_size, word_dim, out_dim, num_class, dropout=0.5):

super(TextClassify, self).__init__()

self.bert = bert

self.cnn = nn.Sequential(nn.Conv1d(word_dim, out_dim, kernel_size=kernel_size, padding=1), nn.ReLU(inplace=True))

self.drop = nn.Dropout(dropout, inplace=True)

self.fc = nn.Linear(out_dim, num_class)

def forward(self, word_input):

batch_size = word_input.size(0)

represent = self.xlnet(word_input)[0]

represent = self.drop(represent)

represent = represent.transpose(1, 2)

contexts = self.cnn(represent)

feature = F.max_pool1d(contexts, contexts.size(2)).contiguous().view(batch_size, -1)

feature = self.drop(feature)

feature = self.fc(feature)

return feature

```

How can I solve it?

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1805/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1805/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1804

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1804/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1804/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1804/events

|

https://github.com/huggingface/transformers/pull/1804

| 521,481,220 |

MDExOlB1bGxSZXF1ZXN0MzM5ODUxNDg5

| 1,804 |

fix multi-gpu eval in torch examples

|

{

"login": "ronakice",

"id": 19197923,

"node_id": "MDQ6VXNlcjE5MTk3OTIz",

"avatar_url": "https://avatars.githubusercontent.com/u/19197923?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ronakice",

"html_url": "https://github.com/ronakice",

"followers_url": "https://api.github.com/users/ronakice/followers",

"following_url": "https://api.github.com/users/ronakice/following{/other_user}",

"gists_url": "https://api.github.com/users/ronakice/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ronakice/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ronakice/subscriptions",

"organizations_url": "https://api.github.com/users/ronakice/orgs",

"repos_url": "https://api.github.com/users/ronakice/repos",

"events_url": "https://api.github.com/users/ronakice/events{/privacy}",

"received_events_url": "https://api.github.com/users/ronakice/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Indeed, good catch, thanks @ronakice "

] | 1,573 | 1,573 | 1,573 |

CONTRIBUTOR

| null |

Although batch_size for eval is updated to include multiple GPUs, DataParallel is missing from the model and hence doesn't use multi-GPUs. This PR allows DataParallel (multi-GPU) model in eval.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1804/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1804/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/1804",

"html_url": "https://github.com/huggingface/transformers/pull/1804",

"diff_url": "https://github.com/huggingface/transformers/pull/1804.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/1804.patch",

"merged_at": 1573766645000

}

|

https://api.github.com/repos/huggingface/transformers/issues/1803

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1803/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1803/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1803/events

|

https://github.com/huggingface/transformers/pull/1803

| 521,400,213 |

MDExOlB1bGxSZXF1ZXN0MzM5Nzg1Njc2

| 1,803 |

fix run_squad.py during fine-tuning xlnet on squad2.0

|

{

"login": "importpandas",

"id": 30891974,

"node_id": "MDQ6VXNlcjMwODkxOTc0",

"avatar_url": "https://avatars.githubusercontent.com/u/30891974?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/importpandas",

"html_url": "https://github.com/importpandas",

"followers_url": "https://api.github.com/users/importpandas/followers",

"following_url": "https://api.github.com/users/importpandas/following{/other_user}",

"gists_url": "https://api.github.com/users/importpandas/gists{/gist_id}",

"starred_url": "https://api.github.com/users/importpandas/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/importpandas/subscriptions",

"organizations_url": "https://api.github.com/users/importpandas/orgs",

"repos_url": "https://api.github.com/users/importpandas/repos",

"events_url": "https://api.github.com/users/importpandas/events{/privacy}",

"received_events_url": "https://api.github.com/users/importpandas/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"This looks good, do you want to add your command and the results you mention in the README of the examples in `examples/README.md`?",

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=h1) Report\n> Merging [#1803](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/8618bf15d6edc8774cedc0aae021d259d89c91fc?src=pr&el=desc) will **increase** coverage by `1.08%`.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #1803 +/- ##\n==========================================\n+ Coverage 78.91% 79.99% +1.08% \n==========================================\n Files 131 131 \n Lines 19450 19450 \n==========================================\n+ Hits 15348 15559 +211 \n+ Misses 4102 3891 -211\n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [transformers/tests/utils.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL3V0aWxzLnB5) | `91.17% <0%> (-2.95%)` | :arrow_down: |\n| [transformers/pipelines.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3BpcGVsaW5lcy5weQ==) | `67.94% <0%> (+0.58%)` | :arrow_up: |\n| [transformers/modeling\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3V0aWxzLnB5) | `94.1% <0%> (+1.07%)` | :arrow_up: |\n| [transformers/modeling\\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.51% <0%> (+1.32%)` | :arrow_up: |\n| [transformers/modeling\\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2N0cmwucHk=) | `96.47% <0%> (+2.2%)` | :arrow_up: |\n| [transformers/modeling\\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `74.54% <0%> (+2.32%)` | :arrow_up: |\n| [transformers/modeling\\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `72.57% <0%> (+12%)` | :arrow_up: |\n| [transformers/tests/modeling\\_tf\\_common\\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `94.39% <0%> (+17.24%)` | :arrow_up: |\n| [transformers/modeling\\_tf\\_pytorch\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1803/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3B5dG9yY2hfdXRpbHMucHk=) | `90.06% <0%> (+80.79%)` | :arrow_up: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=footer). Last update [8618bf1...8a2be93](https://codecov.io/gh/huggingface/transformers/pull/1803?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"ok, I have updated the readme.",

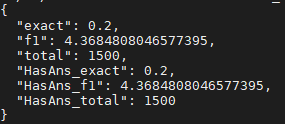

"@importpandas thanks for contributing this fix, works great! My results running this mod:\r\n```\r\n{\r\n \"exact\": 82.07698138633876,\r\n \"f1\": 85.898874470488,\r\n \"total\": 11873,\r\n \"HasAns_exact\": 79.60526315789474,\r\n \"HasAns_f1\": 87.26000954590184,\r\n \"HasAns_total\": 5928,\r\n \"NoAns_exact\": 84.54163162321278,\r\n \"NoAns_f1\": 84.54163162321278,\r\n \"NoAns_total\": 5945,\r\n \"best_exact\": 83.22243746315169,\r\n \"best_exact_thresh\": -11.112004280090332,\r\n \"best_f1\": 86.88541353813282,\r\n \"best_f1_thresh\": -11.112004280090332\r\n}\r\n```\r\nDistributed processing for batch size 48 using gradient accumulation consumes 10970 MiB on each of 2x NVIDIA 1080Ti.\r\n```",

"@ahotrod As for your first results, I will suggest you watch my changes on file `run_squad.py` in this pr. It just added a another input to the model and only a few lines. Without this changing, I got the same results with you, which is nearly to 0 on unanswerable questions. I think Distributed processing isn't responsible for that. When it comes to the running script, it doesn't matter since I had hardly changed it.",

"@importpandas re-run just completed (36 hours) and results are posted above.\r\nWorks great, thanks again!",

"> @importpandas re-run just completed (36 hours) and results are posted above.\r\n> Works great, thanks again!\r\n\r\nokay, pleasure",

"LGTM, merging cc @LysandreJik (the `run_squad` master)"

] | 1,573 | 1,576 | 1,576 |

CONTRIBUTOR

| null |

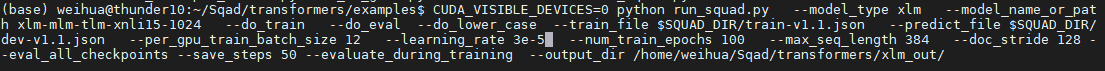

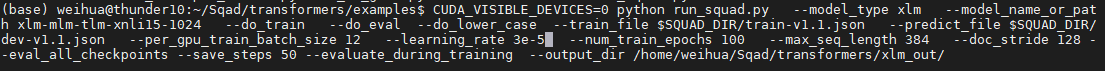

The following is a piece of code in forward function of xlnet model, which obviously is the key point of training the model on unanswerable questions using cls token representations. But the default value of tensor `is_impossible`(using to indicate whether this example is answerable) is none, and we also hadn't passed this tensor into forward function. That's the problem.

```

if cls_index is not None and is_impossible is not None:

# Predict answerability from the representation of CLS and START

cls_logits = self.answer_class(hidden_states, start_positions=start_positions, cls_index=cls_index)

loss_fct_cls = nn.BCEWithLogitsLoss()

cls_loss = loss_fct_cls(cls_logits, is_impossible)

total_loss += cls_loss * 0.5

```

I added the `is_impossible` tensor to TensorDataset and model inputs, and got a reasonable result,

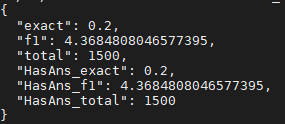

{

"exact": 80.4177545691906,

"f1": 84.07154997729623,

"total": 11873,

"HasAns_exact": 77.59784075573549,

"HasAns_f1": 84.83993323200234,

"HasAns_total": 5928,

"NoAns_exact": 84.0874684608915,

"NoAns_f1": 84.0874684608915,

"NoAns_total": 5945

}

My running command:

```

python run_squad.py \

--model_type xlnet \

--model_name_or_path xlnet-large-cased \

--do_train \

--do_eval \

--version_2_with_negative \

--train_file data/train-v2.0.json \

--predict_file data/dev-v2.0.json \

--learning_rate 3e-5 \

--num_train_epochs 4 \

--max_seq_length 384 \

--doc_stride 128 \

--output_dir ./wwm_cased_finetuned_squad/ \

--per_gpu_eval_batch_size=2 \

--per_gpu_train_batch_size=2 \

--save_steps 5000

```

I run my code with 4 nvidia GTX 1080ti gpus and pytorch==1.2.0.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1803/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1803/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/1803",

"html_url": "https://github.com/huggingface/transformers/pull/1803",

"diff_url": "https://github.com/huggingface/transformers/pull/1803.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/1803.patch",

"merged_at": 1576931928000

}

|

https://api.github.com/repos/huggingface/transformers/issues/1802

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1802/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1802/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1802/events

|

https://github.com/huggingface/transformers/issues/1802

| 521,397,577 |

MDU6SXNzdWU1MjEzOTc1Nzc=

| 1,802 |

pip cannot install transformers with python version 3.8.0

|

{

"login": "Lyther",

"id": 29906124,

"node_id": "MDQ6VXNlcjI5OTA2MTI0",

"avatar_url": "https://avatars.githubusercontent.com/u/29906124?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Lyther",

"html_url": "https://github.com/Lyther",

"followers_url": "https://api.github.com/users/Lyther/followers",

"following_url": "https://api.github.com/users/Lyther/following{/other_user}",

"gists_url": "https://api.github.com/users/Lyther/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Lyther/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Lyther/subscriptions",

"organizations_url": "https://api.github.com/users/Lyther/orgs",

"repos_url": "https://api.github.com/users/Lyther/repos",

"events_url": "https://api.github.com/users/Lyther/events{/privacy}",

"received_events_url": "https://api.github.com/users/Lyther/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] |

closed

| false | null |

[] |

[

"This looks like an error related to Google SentencePiece and in particular this issue: https://github.com/google/sentencepiece/issues/411",

"https://github.com/google/sentencepiece/issues/411#issuecomment-557596691\r\n\r\n```\r\npip install https://github.com/google/sentencepiece/releases/download/v0.1.84/sentencepiece-0.1.84-cp38-cp38-manylinux1_x86_64.whl\r\n```",

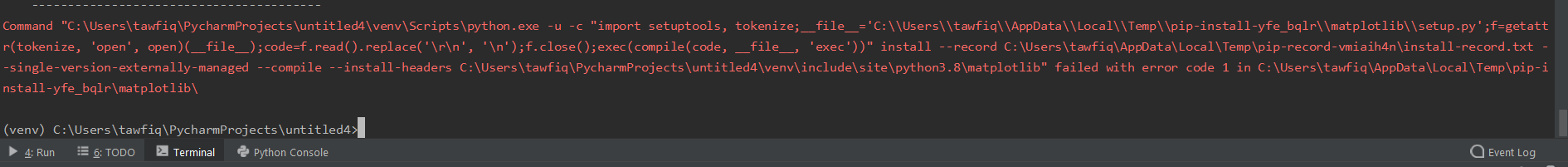

"I've got problem installing matplotlib in python. While installing these error massage is shown. What to do now?\r\n\r\n\r\nCommand \"C:\\Users\\tawfiq\\PycharmProjects\\untitled4\\venv\\Scripts\\python.exe -u -c \"import setuptools, tokenize;__file__='C:\\\\Users\\\\tawfiq\\\\AppData\\\\Local\\\\Temp\\\\pip-install-yfe_bqlr\\\\matplotlib\\\\setup.py';f=getatt\r\nr(tokenize, 'open', open)(__file__);code=f.read().replace('\\r\\n', '\\n');f.close();exec(compile(code, __file__, 'exec'))\" install --record C:\\Users\\tawfiq\\AppData\\Local\\Temp\\pip-record-vmiaih4n\\install-record.txt -\r\n-single-version-externally-managed --compile --install-headers C:\\Users\\tawfiq\\PycharmProjects\\untitled4\\venv\\include\\site\\python3.8\\matplotlib\" failed with error code 1 in C:\\Users\\tawfiq\\AppData\\Local\\Temp\\pip-i\r\nnstall-yfe_bqlr\\matplotlib\\\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"This is still an issue on MacOS: https://github.com/google/sentencepiece/issues/411#issuecomment-578509088",

"> google/sentencepiece#411 (comment)\r\n\r\nThis error comes up: ``` ERROR: sentencepiece-0.1.84-cp38-cp38-manylinux1_x86_64.whl is not a supported wheel on this platform.```\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,573 | 1,589 | 1,589 |

NONE

| null |

## ❓ Questions & Help

The error message looks like this,

` ERROR: Command errored out with exit status 1:

command: 'c:\users\enderaoe\appdata\local\programs\python\python38-32\python.exe' -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'C:\\Users\\Enderaoe\\AppData\\Local\\Temp\\pip-install-g7jzfokt\\sentencepiece\\setup.py'"'"'; __file__='"'"'C:\\Users\\Enderaoe\\AppData\\Local\\Temp\\pip-install-g7jzfokt\\sentencepiece\\setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' egg_info --egg-base 'C:\Users\Enderaoe\AppData\Local\Temp\pip-install-g7jzfokt\sentencepiece\pip-egg-info'

cwd: C:\Users\Enderaoe\AppData\Local\Temp\pip-install-g7jzfokt\sentencepiece\

Complete output (7 lines):

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "C:\Users\Enderaoe\AppData\Local\Temp\pip-install-g7jzfokt\sentencepiece\setup.py", line 29, in <module>

with codecs.open(os.path.join('..', 'VERSION'), 'r', 'utf-8') as f:

File "c:\users\enderaoe\appdata\local\programs\python\python38-32\lib\codecs.py", line 905, in open

file = builtins.open(filename, mode, buffering)

FileNotFoundError: [Errno 2] No such file or directory: '..\\VERSION'

----------------------------------------

ERROR: Command errored out with exit status 1: python setup.py egg_info Check the logs for full command output.`

Should I lower my python version, or there is any other solution?

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/1802/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/1802/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/1801

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/1801/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/1801/comments

|

https://api.github.com/repos/huggingface/transformers/issues/1801/events

|

https://github.com/huggingface/transformers/issues/1801

| 521,335,428 |

MDU6SXNzdWU1MjEzMzU0Mjg=

| 1,801 |

run_glue.py RuntimeError: module must have its parameters and buffers on device cuda:0 (device_ids[0]) but found one of them on device: cuda:3

|

{

"login": "insublee",

"id": 39117829,

"node_id": "MDQ6VXNlcjM5MTE3ODI5",

"avatar_url": "https://avatars.githubusercontent.com/u/39117829?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/insublee",

"html_url": "https://github.com/insublee",

"followers_url": "https://api.github.com/users/insublee/followers",

"following_url": "https://api.github.com/users/insublee/following{/other_user}",

"gists_url": "https://api.github.com/users/insublee/gists{/gist_id}",

"starred_url": "https://api.github.com/users/insublee/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/insublee/subscriptions",

"organizations_url": "https://api.github.com/users/insublee/orgs",

"repos_url": "https://api.github.com/users/insublee/repos",

"events_url": "https://api.github.com/users/insublee/events{/privacy}",

"received_events_url": "https://api.github.com/users/insublee/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] |

closed

| false | null |

[] |

[

"This problem comes out from multiple GPUs usage. The error you have reported says that you have parameters or the buffers of the model in **two different locations**. \r\nSaid this, it's probably related to #1504 issue. Reading comments in the #1504 issue, i saw that @h-sugi suggests 4 days ago to modify the source code in `run_**.py` like this:\r\n\r\nBEFORE: `device = torch.device(\"cuda\" if torch.cuda.is_available() and not args.no_cuda else \"cpu\")`\r\nAFTER: `device = torch.device(\"cuda:0\" if torch.cuda.is_available() and not args.no_cuda else \"cpu\").`\r\n\r\nMoreover, the person who opened the issue @ahotrod says this: _Have had many successful SQuAD fine-tuning runs on PyTorch 1.2.0 with Pytorch-Transformers 1.2.0, maybe even Transformers 2.0.0, and Apex 0.1. New environment built with the latest versions (Pytorch 1.3.0, Transformers 2.1.1) spawns data parallel related error above_\r\n\r\nPlease, keep us updated on this topic!\r\n\r\n> ## Bug\r\n> Model I am using (Bert, XLNet....): Bert\r\n> \r\n> Language I am using the model on (English, Chinese....): English\r\n> \r\n> The problem arise when using:\r\n> \r\n> * [ ] the official example scripts: (give details) : transformers/examples/run_glue.py\r\n> * [ ] my own modified scripts: (give details)\r\n> \r\n> The tasks I am working on is:\r\n> \r\n> * [ ] an official GLUE/SQUaD task: (give the name) : MRPC\r\n> * [ ] my own task or dataset: (give details)\r\n> \r\n> ## To Reproduce\r\n> Steps to reproduce the behavior:\r\n> \r\n> \r\n> I've tested using\r\n> python -m pytest -sv ./transformers/tests/\r\n> python -m pytest -sv ./examples/\r\n> and it works fine without couple of tesks.\r\n> \r\n> \r\n> after test, i downloaded glue datafile via\r\n> https://gist.github.com/W4ngatang/60c2bdb54d156a41194446737ce03e2e\r\n> and tried run_glue.py\r\n> \r\n> pip install -r ./examples/requirements.txt\r\n> export GLUE_DIR=/path/to/glue\r\n> export TASK_NAME=MRPC\r\n> \r\n> \r\n> python ./examples/run_glue.py \r\n> --model_type bert \r\n> --model_name_or_path bert-base-uncased \r\n> --task_name $TASK_NAME \r\n> --do_train \r\n> --do_eval \r\n> --do_lower_case \r\n> --data_dir $GLUE_DIR/$TASK_NAME \r\n> --max_seq_length 128 \r\n> --per_gpu_eval_batch_size=8 \r\n> --per_gpu_train_batch_size=8 \r\n> --learning_rate 2e-5 \r\n> --num_train_epochs 3.0 \r\n> --output_dir /tmp/$TASK_NAME/\r\n> \r\n> and i got this error.\r\n> \r\n> `11/11/2019 21:10:50 - INFO - __main__ - Total optimization steps = 345 Epoch: 0%| | 0/3 [00:00<?, ?it/sTraceback (most recent call last): | 0/115 [00:00<?, ?it/s] File \"./examples/run_glue.py\", line 552, in <module> main() File \"./examples/run_glue.py\", line 503, in main global_step, tr_loss = train(args, train_dataset, model, tokenizer) File \"./examples/run_glue.py\", line 146, in train outputs = model(**inputs) File \"/home/insublee/anaconda3/envs/py_torch4/lib/python3.7/site-packages/torch/nn/modules/module.py\", line 541, in __call__ result = self.forward(*input, **kwargs) File \"/home/insublee/anaconda3/envs/py_torch4/lib/python3.7/site-packages/torch/nn/parallel/data_parallel.py\", line 146, in forward \"them on device: {}\".format(self.src_device_obj, t.device)) RuntimeError: module must have its parameters and buffers on device cuda:0 (device_ids[0]) but found one of them on device: cuda:3`\r\n> \r\n> ## Environment\r\n> * OS: ubuntu16.04LTS\r\n> * Python version: 3.7.5\r\n> * PyTorch version: 1.2.0\r\n> * PyTorch Transformers version (or branch): 2.1.1\r\n> * Using GPU ? 4-way 2080ti\r\n> * Distributed of parallel setup ? cuda10.0 cudnn 7.6.4\r\n> * Any other relevant information:\r\n> \r\n> ## Additional context\r\n> thank you.",

"thanks a lot. it works!!!! :)",

"@TheEdoardo93 After the change of `cuda` to `cuda:0`, will we still have multiple GPU usage for the jobs?",

"As stated in the official [docs](https://pytorch.org/tutorials/beginner/blitz/data_parallel_tutorial.html), if you use `torch.device('cuda:0')` you will use **only** a GPU. If you want to use **multiple** GPUs, you can easily run your operations on multiple GPUs by making your model run parallelly using DataParallel: `model = nn.DataParallel(model)`\r\n\r\nYou can read more information [here](https://discuss.pytorch.org/t/run-pytorch-on-multiple-gpus/20932/38) and [here](https://pytorch.org/tutorials/beginner/former_torchies/parallelism_tutorial.html).\r\n\r\n> @TheEdoardo93 After the change of `cuda` to `cuda:0`, will we still have multiple GPU usage for the jobs?",

"@TheEdoardo93 I tested `run_glue.py` on a multi-gpu machine. Even, after changing `\"cuda\"` to `\"cuda:0\"` in this line https://github.com/huggingface/transformers/blob/b0ee7c7df3d49a819c4d6cef977214bd91f5c075/examples/run_glue.py#L425 , the training job will still use both GPUs relying on `torch.nn.DataParallel`. It means that `torch.nn.DataParallel` is smart enough even if you are defining `torch.device` to be `cuda:0`, if there are several gpus available, it will use all of them. ",

"> @TheEdoardo93 I tested `run_glue.py` on a multi-gpu machine. Even, after changing `\"cuda\"` to `\"cuda:0\"` in this line\r\n> \r\n> https://github.com/huggingface/transformers/blob/b0ee7c7df3d49a819c4d6cef977214bd91f5c075/examples/run_glue.py#L425\r\n> \r\n> , the training job will still use both GPUs relying on `torch.nn.DataParallel`. It means that `torch.nn.DataParallel` is smart enough even if you are defining `torch.device` to be `cuda:0`, if there are several gpus available, it will use all of them.\r\n\r\nmanually set 'args.n_gpu = 1' works for me",

"Just a simple `os.environ['CUDA_VISIBLE_DEVICES'] = 'GPU_NUM'` at the beginning of the script should work.",

"> > @TheEdoardo93 I tested `run_glue.py` on a multi-gpu machine. Even, after changing `\"cuda\"` to `\"cuda:0\"` in this line\r\n> > https://github.com/huggingface/transformers/blob/b0ee7c7df3d49a819c4d6cef977214bd91f5c075/examples/run_glue.py#L425\r\n> > \r\n> > , the training job will still use both GPUs relying on `torch.nn.DataParallel`. It means that `torch.nn.DataParallel` is smart enough even if you are defining `torch.device` to be `cuda:0`, if there are several gpus available, it will use all of them.\r\n> \r\n> manually set 'args.n_gpu = 1' works for me\r\n\r\nbut then you are not able to use more than 1 gpu, right?",

"> @TheEdoardo93 I tested `run_glue.py` on a multi-gpu machine. Even, after changing `\"cuda\"` to `\"cuda:0\"` in this line\r\n> \r\n> https://github.com/huggingface/transformers/blob/b0ee7c7df3d49a819c4d6cef977214bd91f5c075/examples/run_glue.py#L425\r\n> \r\n> , the training job will still use both GPUs relying on `torch.nn.DataParallel`. It means that `torch.nn.DataParallel` is smart enough even if you are defining `torch.device` to be `cuda:0`, if there are several gpus available, it will use all of them.\r\n\r\nI have trid but it doesn't work for me.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,573 | 1,591 | 1,591 |

NONE

| null |

## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....): Bert

Language I am using the model on (English, Chinese....): English

The problem arise when using:

* [ ] the official example scripts: (give details) : transformers/examples/run_glue.py

* [ ] my own modified scripts: (give details)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name) : MRPC

* [ ] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1.

I've tested using

python -m pytest -sv ./transformers/tests/

python -m pytest -sv ./examples/

and it works fine without couple of tesks.

2.

after test, i downloaded glue datafile via

https://gist.github.com/W4ngatang/60c2bdb54d156a41194446737ce03e2e

and tried run_glue.py

pip install -r ./examples/requirements.txt

export GLUE_DIR=/path/to/glue

export TASK_NAME=MRPC

3.

python ./examples/run_glue.py \

--model_type bert \

--model_name_or_path bert-base-uncased \

--task_name $TASK_NAME \

--do_train \

--do_eval \

--do_lower_case \

--data_dir $GLUE_DIR/$TASK_NAME \

--max_seq_length 128 \

--per_gpu_eval_batch_size=8 \

--per_gpu_train_batch_size=8 \

--learning_rate 2e-5 \

--num_train_epochs 3.0 \

--output_dir /tmp/$TASK_NAME/

and i got this error.

`11/11/2019 21:10:50 - INFO - __main__ - Total optimization steps = 345

Epoch: 0%| | 0/3 [00:00<?, ?it/sTraceback (most recent call last): | 0/115 [00:00<?, ?it/s]

File "./examples/run_glue.py", line 552, in <module>

main()

File "./examples/run_glue.py", line 503, in main

global_step, tr_loss = train(args, train_dataset, model, tokenizer)

File "./examples/run_glue.py", line 146, in train

outputs = model(**inputs)

File "/home/insublee/anaconda3/envs/py_torch4/lib/python3.7/site-packages/torch/nn/modules/module.py", line 541, in __call__

result = self.forward(*input, **kwargs)