repo_name | issue_id | text |

|---|---|---|

oauth2-proxy/oauth2-proxy | 1042235784 | Title: Final redirect after code redemption is not original page requested

Question:

username_0: After code redemption, the user is not redirected back to the originally requested page. The observed behavior is:

1) unauthenticated user navigates to page: https://hostname/secure/index.html

2) user is redirected to OIDC provider and authenticates

3) user is redirected back to callback URL

4) code is redeemed

5) user is redirected to: https://hostname/


The expected behavior is:

1) unauthenticated user navigates to page: https://hostname/secure/index.html

2) user is redirected to OIDC provider and authenticates

3) user is redirected back to callback URL

4) code is redeemed

5) user is redirected to: https://hostname/secure/index.html
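One common way an authenticating proxy can send the user back to the page they asked for is to carry that URL through the OAuth `state` parameter and read it back at the callback. The sketch below illustrates that idea under this assumption only; it is not oauth2-proxy's code, and the endpoint URL, client ID and function names are hypothetical.

```python
import secrets
from urllib.parse import urlencode


def build_authorize_url(authorize_endpoint, client_id, callback_url, original_url):
    """Start the login: embed the originally requested URL in `state`."""
    nonce = secrets.token_urlsafe(16)  # URL-safe alphabet, so it never contains ":"
    state = f"{nonce}:{original_url}"
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": callback_url,
        "state": state,
    })
    # The nonce would be kept server-side (e.g. in a cookie) to validate the callback.
    return nonce, f"{authorize_endpoint}?{query}"


def final_redirect(state, expected_nonce, default="/"):
    """At the callback, after code redemption: recover the original URL."""
    nonce, sep, original_url = state.partition(":")
    if not sep or not secrets.compare_digest(nonce, expected_nonce):
        raise ValueError("state does not match this login attempt")
    # A real proxy must also check original_url against allowed domains (open redirect).
    # If the URL was never put into state, only a default such as "/" is left.
    return original_url or default
```

If the original URL is lost before the callback, the proxy has nothing to fall back on except a default such as `/`, which matches the observed behavior above.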


## Possible Solution


## Steps to Reproduce (for bugs)


## Context


## Your Environment


- Version used: v7.2.0

Answers:

username_1: Are you using oauth2-proxy as a reverse proxy, or as a "sidecar" via the nginx auth-request mechanism or similar? Can you share your configuration (excluding any sensitive data like client secrets), including on the nginx side if you're using that mode? |

otissv/react-uikit-components | 237202965 | Title: UIKit3 support

Question:

username_0: Hello,

Are you planning to add UIKit v3 support to your library?

Answers:

username_1: Hi, yes at some point. I was waiting for UIKit v3 to move out of beta first and hopefully, I would have some free time by then. But as it has been in beta for a while I don't think it will be moving soon.

Currently contemplating what to do with this project, as it really needs contributors to help keep it alive, especially going into React 16.

username_2: Otissv, I'm here to help if you need anything.

username_3: Hey Otissv, I just implemented a good bit from 3.0 beta 35 into a React app I have been working on and would love to contribute. I was planning on forking this and starting a beta release to lay the groundwork for when the beta is complete.

username_1: Hi @username_3, as you can probably tell I haven't done any work on this in some time, due to overcommitment and because I don't use this project anymore. If you would like, I am happy to transfer ownership to you.

Would be nice to transfer this over to someone who is actively committed to it.

username_3: @username_1 I can understand that, and would be more than happy to take it over. I will send you an email here shortly in hopes to get some of your notes!

username_3: So everyone is aware, we have started a beta for UIkit 3, which can be found [here](https://github.com/moosebot/react-uikit).

username_4: Is this still in progress?

username_3: Sorry all, I tried to hand this back to username_1, but he forked it, so it won't let me hand it back. The transfer process took a few months and I moved on to other frameworks in the meantime. I'm willing to hand this back or transfer it to someone else who will continue to move it along. |

mickelus/tetra | 562214174 | Title: Support for Ma Enchants Mod

Question:

username_0: ## Feature Request

**Feature description**

- Support for [Ma Enchants](https://www.curseforge.com/minecraft/mc-mods/ma-enchants). It adds several new enchantments.

**How it improves the player experience**

- It would add many more tool enchantment customization options.

**Tetra synergies**

- More modded enchantments support.

Answers:

username_0: Weapons Enchantments:

- Wisdom V

- Ice Aspect II

- Lifesteal

- Faster Attack V

- Combo

Bow/Crossbow Enchantments:

- Detonation III

- Paralysis III

- Floating III

- Quick Draw III

- True Shot

Axe Enchantments:

- Butchering V

- Lumberjack

Pickaxe Enchantments:

- Stone Mending III

- Reinforced Tip III

Tools Enchantments:

- Momentum

Weapons/Tool Enchantments:

- Curse of Butterfingers

- Curse of Breaking III

username_1: For each enchantment i need the following information to implement this:

* name

* description

* id

* max level

* applicable items

* enchantability function (typically something like this: `enchantability = base + enchantmentLevel * levelMultiplier`)
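The linear enchantability function quoted above can be written out directly. This is a small sketch of that formula only; the `base` and `level_multiplier` values in the example loop are hypothetical and not taken from Ma Enchants or Tetra.

```python
def enchantability(base: int, enchantment_level: int, level_multiplier: int) -> int:
    """enchantability = base + enchantmentLevel * levelMultiplier"""
    if enchantment_level < 1:
        raise ValueError("enchantment levels start at 1")
    return base + enchantment_level * level_multiplier


# Hypothetical values for an enchantment with max level V
for level in range(1, 6):
    print(level, enchantability(base=1, enchantment_level=level, level_multiplier=10))
```

With these made-up values each level adds a fixed 10 to the requirement, which is what the linear form implies.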

username_0: How might I find the enchantability function?

username_1: I found a way to generate everything so I only need the enchantment id going forward!

This is available in 2.4.0+

Status: Issue closed

username_0: Awesome, thanks! |

denoland/deno_install | 363438787 | Title: Install on macOs using homebrew?

Question:

username_0: It would be reasonable to give users the opportunity to easily install deno on macOS using a brew formula.

Answers:

username_1: I would appreciate that! 👏

username_2: https://github.com/Homebrew/homebrew-core/pull/35590

username_3: Thanks for setting that up username_2!

username_3: @username_2 yeah that seems appropriate

username_2: My first binary-only PR was closed, though we could add that independently in this repo (so that you could install via `brew install denoland/deno_install/brew.rb`).

Trying to build with brew: https://github.com/Homebrew/homebrew-core/pull/35645

Note: there's an interesting error when testing locally (it builds fine, with many linker warnings, but then a stack-trace test fails). I'll see if their CI can demonstrate the same; either way I'll likely report it as a deno issue.

username_2: @username_3 any thoughts/pointers on these linking warnings and the source_map test failure?

https://jenkins.brew.sh/job/Homebrew%20Core%20Pull%20Requests/35808/version=high_sierra/console

username_3: @username_2 yes - it’s fixed with this PR

https://github.com/denoland/deno/pull/1439

I'll land it soon.

username_2: Great! Are the linker warnings anything to worry about?

username_3: @username_2 merged. If it builds there’s nothing to worry about :)

username_3: dup https://github.com/denoland/deno/issues/1486

Status: Issue closed

|

CircleCI-Public/cimg-ruby | 1100849303 | Title: Support Ruby shell

Question:

username_0:

```yaml
description: >
  Test ruby
steps:
  - run:
      shell: /usr/local/bin/ruby
      command: |
        puts "hello"
```

and the error we're receiving is something like:

`/usr/local/bin/ruby: No such file or directory -- puts 'hello' (LoadError)` |

apple/swift-nio | 488710520 | Title: Cannot test XCode 11 project with Swift package dependent on swift nio

Question:

username_0: ### Expected behavior

If I add a Swift package that depends on swift-nio to my project using the new Xcode 11 Swift package functionality, I expect to be able to build for testing.

### Actual behavior

When I build for testing, I get the following error: `Missing required module ‘CNIOAtomics’`

### Steps to reproduce

1. Create a new Xcode project

2. Go to File -> Swift Package -> Add Package Dependency

3. Select AsyncHTTPClient

4. Add “import AsyncHTTPClient” at top of AppDelegate.swift

5. Build for testing

If I build for running it works fine.

### Version

Xcode 11 beta 7

Answers:

username_1: That is a very reasonable assumption, I don’t think this is a NIO bug though.

@username_2/@neonacho anything special about building an app target for testing with Xcode Package support?

username_1: @artemredkin you seen this before?

username_2: This is a known issue that we're tracking internally with <rdar://problem/54587458>. You can work around it by explicitly passing the modulemap flag:

Add `-Xcc -fmodule-map-file=$(PROJECT_TEMP_ROOT)/GeneratedModuleMaps/macosx/<missing module name>.modulemap` to `OTHER_SWIFT_FLAGS` in the test target.
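When more than one module is reported missing, the flag pair has to be repeated for each module map. A small helper like the hypothetical one below can assemble the `OTHER_SWIFT_FLAGS` value; the platform directory (`macosx` here, `iphonesimulator` in a later comment) is an assumption that depends on the SDK you build for.

```python
def module_map_flags(module_names, platform="macosx"):
    """Build one `-Xcc -fmodule-map-file=...` pair per missing module."""
    flags = []
    for name in module_names:
        path = f"$(PROJECT_TEMP_ROOT)/GeneratedModuleMaps/{platform}/{name}.modulemap"
        # -Xcc passes the next argument through to Clang
        flags += ["-Xcc", f"-fmodule-map-file={path}"]
    return " ".join(flags)


print(module_map_flags(["CNIOAtomics"]))
```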

username_0: Cheers guys, I didn’t really think it was an NIO bug, but thought you guys would like to know as this impacts a lot of projects using your codebase.

@username_2 Any idea on a timeline for a fix for the issue?

username_1: @username_0 yes, thanks very much for letting us know.

username_1: I'll still close this for now; I've started tracking the radar Ankit mentioned.

Status: Issue closed

username_3: Subscribing to this so I know when the radar is fixed. The workaround worked like a charm BTW :-)

username_2: This should be fixed in Xcode 11.2 Beta 2. Please let me know if it's still reproducing.

username_3: @username_2 any idea why building in Xcode 11.2 works, but running `xcodebuild -scheme MyAppTests -project ./MyApp.xcodeproj -destination 'platform=iOS Simulator,name=iPhone 8' build test` does not?

username_4: Still happening in Xcode 11.2

username_1: ping @username_2 ^^^

username_4: Still happening in Xcode 11.2.1 GM ~~~

username_5: Still happening in 11.2

username_6: @username_2

I am currently using Xcode 11.3.1 and tried adding

`$(PROJECT_TEMP_ROOT)/GeneratedModuleMaps/iphonesimulator/RxCocoaRuntime.modulemap` to `OTHER_SWIFT_FLAGS` in the test target.

It still keeps giving the error.

username_1: Thanks @username_6, I updated the Xcode bug report with your info.

username_2: @username_6 can you share a sample project that reproduces this issue?

username_7: I've run into this on several similar projects, with Swift Packages that contain clang submodules to expose code to the parent Swift module. I've found a workaround that fixes this, at least as of Xcode 11.3.1:

1. In your unit or UI test target, add the parent module (the Swift Package library) to the linked libraries build phase, even if that test target doesn't use it directly.

2. *Sometimes Required* If you are also using an Xcode scheme that's pointed at a unit or UI test target, make sure that the "Build" section of the scheme editor includes the application target in that list. By default, if you add a new Xcode scheme for, e.g., a UI test target, the app won't be included there and so it isn't built properly.

In all reproduced cases, the underlying error is a missing `-fmodule-map-file` flag for any file in the app target that has an `import MyLibrary` statement.

username_7: [Missing-Submodule-SPM-Error.zip](https://github.com/apple/swift-nio/files/4227554/Missing-Submodule-SPM-Error.zip)

username_7: ^^^ sample project reproducing the issue.

To reproduce, choose the UI test **scheme** and CMD+U to run the tests.

username_2: I can reproduce the issue, thanks a lot for the sample project!

username_8: Works fine in Xcode 11.4, no need for the workaround anymore

username_8: It seems to work without the workaround in Xcode 11.4 @username_2

username_9: With Xcode 12.0 I still see this compiler error for UI test targets, as already perfectly described in an earlier comment: https://github.com/apple/swift-nio/issues/1128#issuecomment-588481616

I can also still reproduce the compiler error with Xcode 12.0 using the example project, by pressing CMD + U with the UI test scheme from this comment: https://github.com/apple/swift-nio/issues/1128#issuecomment-588483372

Basically follow the comments from @username_7 and you can still reproduce it.

username_10: Still happening with Xcode 12.2 and Xcode 12.3 for me. Here's a test run where it appears https://github.com/TokamakUI/TokamakVapor/runs/1637655163

username_1: @username_10 I just opened your test run and from what I can tell, the failure is

```
[1639/1639] Linking TokamakVaporPackageTests
objc[7127]: CLASS: class 'TokamakVaporTests.TokamakVaporTests' 0x10ab02870 small method list 0x10a932c30 is not in immutable memory
Exited with signal code 6
Error: Process completed with exit code 1.
```

which looks like a _different_ compiler bug, no?

FWIW, this bug (`error: Missing required module ‘CNIOAtomics’`) is supposed to be fixed in Xcode 11.3.1.

@username_10 Can you confirm that the bug you're facing is the small method list error followed by a compiler crash?

username_10: Yes, that's correct. Apologies for spamming this issue then. I think I was redirected here from somewhere else that described having "small method list" error and linked to here as something that helped in resolving that error.

username_1: @username_10 don't apologise! Do you happen to already have a Swift bug (Feedback reporter or bugs.swift.org) or Xcode bug (Feedback reporter) that I could look into?

username_10: Thanks! I've filed https://bugs.swift.org/browse/SR-14013. |

nunomaduro/phpinsights | 479862284 | Title: Colors in CI

Question:

username_0:

| Q                | A   |
| ---------------- | --- |
| Bug report?      | no  |
| Feature request? | yes |
| Library version  | 1.7 |

Hey! Thank you for your work, it's very good!

There is a [dedicated section](https://phpinsights.com/continuous-integration.html#continuous-integration) for CI, which is great, but do you know how to enable colors in the web terminal?

I use GitLab CI, and it's all black:

<img width="639" alt="Screenshot 2019-08-12 at 23 33 41" src="https://user-images.githubusercontent.com/8252238/62900341-2f502a80-bd5a-11e9-91d2-8519f6da8d49.png">

Thank you in advance

Answers:

username_1: Could you try the `--ansi` option?

username_0: OK, I have colors now, but it seems that GitLab does not reset lines on `\r`, so the results are still weird

cf: https://gitlab.com/username_0/todolist-backend-laravel/-/jobs/271203328

I don't think you can do anything more here

Thank you for your help!
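The `\r` problem can be illustrated with a toy model: a terminal moves the cursor back to column 0 on `\r` and overwrites what was there, while a viewer that does not honor `\r` keeps every intermediate state. This is a simplified sketch (it ignores ANSI color codes and other escape sequences) and is not how GitLab's job log renderer actually works; the progress string is a made-up example.

```python
def render_like_terminal(stream: str) -> str:
    """Apply carriage returns the way a terminal would (simplified)."""
    lines, current, cursor = [], [], 0
    for ch in stream:
        if ch == "\r":
            cursor = 0  # back to column 0; later characters overwrite
        elif ch == "\n":
            lines.append("".join(current))
            current, cursor = [], 0
        else:
            if cursor < len(current):
                current[cursor] = ch
            else:
                current.append(ch)
            cursor += 1
    if current:
        lines.append("".join(current))
    return "\n".join(lines)


progress = " 10%\r 50%\r100%\n"
print(render_like_terminal(progress))  # what a terminal ends up showing
print(progress.replace("\r", "\n"))    # a viewer that treats \r as a line break
```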

Status: Issue closed

|

project-trident/trident-build | 392910233 | Title: chromium/chrome ld-elf.so.1: /usr/local/lib/libglib-2.0.so.0: Undefined symbol "environ"

Question:

username_0: With a freshly installed vanilla Project Trident OS

OS Version: 18.12-PRERELEASE2

Build Date: 20181219130325

installed into a new VirtualBox VM, with only one package added (chromium), starting chrome from the command line fails with the undefined symbol error noted in the issue title. The same symptom happens after upgrading to PRERELEASE2 from the previous release (18.12 PRERELEASE): the previously installed and working chromium is rendered inoperative.

Answers:

username_1: This took quite a bit of work, but is fixed in the upcoming 18.12-RELEASE.

Status: Issue closed

|

sangcu/em-markdown-editor | 130144257 | Title: Issue on install.

Question:

username_0: Hi, I'm having an issue installing this component.

```
version: 1.13.15
Installed packages for tooling via npm.
installing em-markdown-editor
The `ember generate <entity-name>` command requires an entity name to be specified. For more details, use `ember help`.
```

Status: Issue closed |

HitFox/foxinator-generator | 90400590 | Title: Manual steps during foxinator:setup

Question:

username_0: When you run `rails g foxinator:setup`, you're prompted on screen to perform some manual steps by the devise generator:

- Ensure you have defined default url options in your environments files

- Ensure you have defined root_url

- Ensure you have flash messages in app layout

If any of these are actually necessary, can we have our setup perform them?

A lot of migrations are generated by the different steps of the `foxinator:setup` and I'm asked to run them manually. Can't we run the migrations automatically at the end of `foxinator:setup`?

Answers:

username_1: First part of your question: those are standard devise notifications, and handling them should be on a per-project basis.

Second part: I think it's possible, would need to look into it though.

Status: Issue closed

username_1: rake db:migrate and the admins:setup task now happen automatically. |

ashfurrow/TIL | 546502572 | Title: React/Native SVGs are odd

Question:

username_0: You _could_ theoretically use the `<svg>` HTML tag in React, but some of the HTML tag attributes have dashes instead of CamelCase, so React has its own `SVG` component:

https://github.com/artsy/emission/pull/2025/files#diff-3f34973345e9c7d8d5f47e5904357300R1-R13 |

actix/sockjs | 322811735 | Title: `()` doesn't implement `std::fmt::Display`

Question:

username_0:

```
error[E0277]: `()` doesn't implement `std::fmt::Display`
  --> /Users/username_0/.cargo/registry/src/mirrors.ustc.edu.cn-61ef6e0cd06fb9b8/sockjs-0.3.0/src/transports/xhrsend.rs:89:49
   |
89 |                                             Err(error::ErrorNotFound(())),
   |                                                 ^^^^^^^^^^^^^^^^^^^^ `()` cannot be formatted with the default formatter; try using `:?` instead if you are using a format string
   |
   = help: the trait `std::fmt::Display` is not implemented for `()`
   = note: required by `actix_web::error::ErrorNotFound`

error[E0277]: `()` doesn't implement `std::fmt::Display`
   --> /Users/username_0/.cargo/registry/src/mirrors.ustc.edu.cn-61ef6e0cd06fb9b8/sockjs-0.3.0/src/transports/jsonp.rs:271:49
    |
271 |                                             Err(error::ErrorNotFound(())),
    |                                                 ^^^^^^^^^^^^^^^^^^^^ `()` cannot be formatted with the default formatter; try using `:?` instead if you are using a format string
    |
    = help: the trait `std::fmt::Display` is not implemented for `()`
    = note: required by `actix_web::error::ErrorNotFound`

error: aborting due to 2 previous errors

For more information about this error, try `rustc --explain E0277`.
error: Could not compile `sockjs`.
``` |

networknt/light-kafka | 983834960 | Title: update sidecar producer to use config serializer

Question:

username_0: instead of the hard-coded byte array serializer.

Status: Issue closed

Answers:

username_0:  [update sidecar producer to use config serializer light-kafka master](https://trello.com/c/ihQpFUW0/1188-update-sidecar-producer-to-use-config-serializer-light-kafka-master) |

FauxFaux/zrs | 562036467 | Title: Instructions unclear

Question:

username_0: I installed the tool and have `zrs` in my path. I also ran `zrs --add-to-profile` and it added `. '/Users/nikivi/Library/Application Support/zrs/z.sh'` to my ~/.zshrc

The instructions make it sound like I can then run `z bar` to change directories, but `z` is not on my path.

What am I missing?

Answers:

username_1: `z` is a function, which is declared in that sourced file.

`zsh`'s built-in `which` is smart enough to know:

```
faux@astoria:~% which z
z: aliased to _z 2>&1
faux@astoria:~% which _z
_z () {
	local output ret
	output="$(zrs "$@")"
	...
```

`z bar` will do nothing if it can't find a directory (but will set an exit code).

`z` with no arguments will show the status of the database?

username_0: ```

❯ which z

z not found

```

username_1: That looks reasonable to me. I have no idea what's going on there.

Try starting `zsh` with `-x`?

```
% zsh -x
...
+/home/faux/.zshrc:193> . /home/faux/.local/share/zrs/z.sh
+/home/faux/.local/share/zrs/z.sh:28> [ -d /home/faux/.z ']'
+/home/faux/.local/share/zrs/z.sh:61> alias 'z=_z 2>&1'
+/home/faux/.local/share/zrs/z.sh:63> [ '' ']'
+/home/faux/.local/share/zrs/z.sh:63> _Z_RESOLVE_SYMLINKS=-P
+/home/faux/.local/share/zrs/z.sh:65> type compctl
+/home/faux/.local/share/zrs/z.sh:67> [ '' ']'
+/home/faux/.local/share/zrs/z.sh:69> [ '' ']'
+/home/faux/.local/share/zrs/z.sh:78> [[ -n '' ]]
+/home/faux/.local/share/zrs/z.sh:79> precmd_functions[$(($#precmd_functions+1))]=_z_precmd
+/home/faux/.local/share/zrs/z.sh:88> compctl -U -K _z_zsh_tab_completion _z
...
```

Here I see it create the alias and the completion?

username_0: It sources too much stuff :(

I'll try to find the cause and dig into it more. |

agdsn/pycroft | 244392713 | Title: Heuristic Schema check

Question:

username_0: It would be convenient for pycroft to refuse to start when the given database does not have the correct schema yet.

The current use case is that the importer has not been run yet, so a heuristic that checks for the existence of all the tables would be sufficient. See e.g. [this SO thread](https://stackoverflow.com/questions/30428639/check-database-schema-matches-sqlalchemy-models-on-application-startup#30653553)
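Such a heuristic only needs to compare the tables the application expects with the tables that actually exist. The sketch below uses Python's built-in `sqlite3` purely so it is self-contained; pycroft itself goes through SQLAlchemy, where the introspection approach from the linked thread would apply instead. The table names are hypothetical.

```python
import sqlite3


def missing_tables(conn: sqlite3.Connection, expected: set[str]) -> list[str]:
    """Return the expected tables that do not exist in the database."""
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'").fetchall()
    present = {name for (name,) in rows}
    return sorted(expected - present)


def require_schema(conn: sqlite3.Connection, expected: set[str]) -> None:
    """Refuse to start if any expected table is absent (e.g. importer not run yet)."""
    missing = missing_tables(conn, expected)
    if missing:
        raise SystemExit(f"database schema incomplete; missing tables: {', '.join(missing)}")


EXPECTED_TABLES = {"user", "room", "membership"}  # hypothetical table names
```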

Answers:

username_1: What about versioning the schema and having a schema_version table with a single row containing the version?
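The versioning alternative can be sketched the same way: store one version number and compare it at startup. Again `sqlite3` is used only to keep the example self-contained; the `schema_version` table name follows the comment above, while `EXPECTED_SCHEMA_VERSION` is a hypothetical constant.

```python
import sqlite3
from typing import Optional

EXPECTED_SCHEMA_VERSION = 3  # hypothetical; bumped with every schema migration


def read_schema_version(conn: sqlite3.Connection) -> Optional[int]:
    """Read the single row of schema_version, or None if it is absent."""
    try:
        row = conn.execute("SELECT version FROM schema_version").fetchone()
    except sqlite3.OperationalError:  # table does not exist yet
        return None
    return row[0] if row else None


def check_schema_version(conn: sqlite3.Connection) -> None:
    found = read_schema_version(conn)
    if found != EXPECTED_SCHEMA_VERSION:
        raise SystemExit(f"schema version {found!r}, expected {EXPECTED_SCHEMA_VERSION}")
```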

Status: Issue closed

username_0: That sounds better and is not too difficult. Closing, since there's no benefit of putting the work into such a hack right now. |

thingsboard/thingsboard | 330088852 | Title: Installing ThingsBoard using Docker(Linux or Mac OS) Error

Question:

username_0: Get https://registry-1.docker.io/v2/thingsboard/application/manifests/2.0.2: unauthorized: incorrect username or password

Answers:

username_1: After installing docker and docker-compose:

- You just need to **clone the TB repository**.

- `cd thingsboard/docker/`

- `nano tb.env` and change the database to **cassandra** if you don't want to use **sql**

- `sudo docker-compose up`

Status: Issue closed

|

cpan-testers/cpantesters-deploy | 200828445 | Title: Build automated Perl upgrade workflow

Question:

username_0: When new versions of Perl are released, we need to be able to easily upgrade. This means that we need a task to install the latest version of Perl, but also that we need to ensure that each individual project can be easily deployed into the new Perl without breaking.

Ideally, this project would have a task to create a new Perl installation in the perlbrew, and each individual project would deploy to the "latest" Perl when it was next deployed.

Some possible problems to ensure are addressed are:

* Compiled modules must be recompiled for the new Perl. This is generally handled by the individual Perl project's `deploy` task, and handling it there will be fine.

* The new Perl and associated modules must not interfere with the running production environment while they are being prepared. This likely means that an individual project must keep track of what Perl it was deployed with. This goes along with the next bullet point.

* Ideally, an individual project would use the old Perl until it was `deploy`ed, at which time it would notice that there is a new Perl and try to deploy to it. This probably means that each individual project must be deployed so that its `#!` line is changed to the path of the Perl it was installed into.

* It should be possible to specify which Perl a project should deploy to in case of incompatibilities or hotfixes or otherwise. This should be done as an option to the `deploy` task.
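The `#!` rewriting described in the list is mechanical. A minimal sketch, assuming scripts start with a Perl shebang (either a direct path or `/usr/bin/env perl`) and that the target interpreter path comes from the `deploy` task's option; the perlbrew path in the example is hypothetical.

```python
def rewrite_shebang(script: str, perl_path: str) -> str:
    """Point a script's #! line at the Perl it was deployed with, keeping any flags."""
    first, newline, rest = script.partition("\n")
    if not first.startswith("#!"):
        return script  # no shebang at all
    parts = first[2:].split()
    if parts and parts[0].endswith("/env"):
        parts = parts[1:]  # "#!/usr/bin/env perl" -> look at "perl"
    if not parts or "perl" not in parts[0].rsplit("/", 1)[-1]:
        return script  # not a Perl shebang; leave untouched
    return "#!" + " ".join([perl_path, *parts[1:]]) + newline + rest


print(rewrite_shebang("#!/usr/bin/env perl\nprint 1;\n",
                      "/opt/perlbrew/perls/perl-5.24.1/bin/perl"))  # hypothetical path
```

Keeping the flags (e.g. `-w`, `-T`) means a redeploy only changes which interpreter runs the script, not how it runs.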

Answers:

username_1: Couldn't we use Docker for each, I mean, one image for each application with its own Perl?

username_0: We could, but we don't have enough hardware to handle the overhead of the VMs (however slight Docker makes that), we don't always get a choice of what hardware we get and what it can do, and we don't have the staff to maintain the added complexity. Docker is overkill for the problem we're trying to solve (and that I'm not too concerned about solving due to how rare the situation is). |

mahmoud/glom | 1043617229 | Title: SKIP all PathAccessErrors Recursively

Question:

username_0: How can I skip all path access errors?

```
input = {
    "firstname": "satish",
    "lastname": "reddy",  # may or may not be present
    "details": {
        "phoneno": "987654321",
        "address": "xxxxx",  # may or may not be present
        "pincode": "xxxxx"  # may or may not be present
    },
    "familydetails": [
        {
            "name": "PersonA",
            "address": "Adress-A",
            "phoneno": "999999999"
        },
        {
            "name": "PersonB",
            "phoneno": "999999999"
        },
        {
            "name": "PersonC"
        }
    ]
}
```

```
output_needed = {
    "Captain": {
        "FirstName": "satish",
        "LastName": "reddy",  # include only if it is present in input
        "PersonalDetails": {
            "MobileNo": "987654321",
            "Address": "xxxxx",  # include only if it is present in input
            "Pincode": "xxxxx"  # include only if it is present in input
        },
        "CaptainFamilyDetails": [
            {
                "Name": "PersonA",
                "Address": "Adress-A",  # include only if it is present in input
                "MobileNo": "999999999"  # include only if it is present in input
            },
            {
                "Name": "PersonB",
                "MobileNo": "999999999"
            },
            {
                "Name": "PersonC"
            }
        ]
    }
}
```

```
spec = {
    "Captain": {
        "FirstName": "firstname",
        [Truncated]
        },
        "CaptainFamilyDetails": ("familydetails", [
            {
                "Name": "name",
                "MobileNo": "phoneno",
                "Address": "address"
            }
        ])
    }
}
```

This `spec` works only when all the required parameters are provided; if some are missing, I get a `PathAccessError`.

I tried `Coalesce` with optional fields, but it is not viable for me: I have 25-30 more parameters to map, and we have no exact knowledge of which parameters are optional.

So how can I skip all path access errors recursively?

Answers:

username_0: @username_1 can you help me with this?

username_1:

```
{'Captain': Or({'CaptainFamilyDetails': Or(('familydetails', [Or({'Address': Or('address', Val(Sentinel('SKIP'))), 'MobileNo': Or('phoneno', Val(Sentinel('SKIP'))), 'Name': Or('name', Val(Sentinel('SKIP')))}, Val(Sentinel('SKIP')))]), Val(Sentinel('SKIP'))), 'FirstName': Or('firstname', Val(Sentinel('SKIP'))), 'LastName': Or('lastname', Val(Sentinel('SKIP'))), 'PersonalDetails': Or({'Address': Or('details.address', Val(Sentinel('SKIP'))), 'MobileNo': Or('details.phoneno', Val(Sentinel('SKIP'))), 'Pincode': Or('details.pincode', Val(Sentinel('SKIP')))}, Val(Sentinel('SKIP')))}, Val(Sentinel('SKIP')))}

```

that way you can keep your main spec clean :-)

username_1:

```
{'Captain': {'PersonalDetails': {}}}

```

if you only want to have certain fields be skipped if missing, then it's probably better to explicitly mark them with `or_skip()` rather than use this meta-spec approach

username_0: Thank you @username_1, I will try the meta-spec approach; if it works, I will close the issue.

username_0: @username_1 can you explain `Auto(([Ref('spec')], tuple))` this please ?

username_1: Sure! Maybe I can get a new snippet out of this :-)

To unpack:

`Auto` -- this switches the [mode](https://glom.readthedocs.io/en/latest/modes.html) back to the default from `Match`

`[...]` -- we know `T` is a tuple here, so we want to iterate over each element of the tuple

`Ref(spec)` -- recurse downwards into the tuple

`( ..., tuple)` -- `[]` by default will return a list, convert it back to a tuple so that tuple in = tuple out

The reason I added this is so that `("familydetails", [` will work properly -- the recursion can "pass through" the tuple and get to the dict inside that tuple.
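For comparison, the same recursive idea (walk the spec and simply leave out any key whose path is missing) can be sketched in plain Python. This is not glom and does not use its API; it is a hypothetical stand-in that only supports the three spec shapes used in this issue: a dotted path string, a dict of sub-specs, and a `(path, [subspec])` tuple for mapping over a list.

```python
_MISSING = object()  # marks "path not found" so the key can be dropped


def get_path(data, path):
    """Follow a dotted path like 'details.phoneno'; return _MISSING if absent."""
    for part in path.split("."):
        if not isinstance(data, dict) or part not in data:
            return _MISSING
        data = data[part]
    return data


def apply_spec(data, spec):
    if isinstance(spec, str):
        return get_path(data, spec)
    if isinstance(spec, tuple):  # ("familydetails", [subspec]): map over a list
        path, (subspec,) = spec
        items = get_path(data, path)
        if items is _MISSING:
            return _MISSING
        results = (apply_spec(item, subspec) for item in items)
        return [r for r in results if r is not _MISSING]
    if isinstance(spec, dict):
        out = {}
        for key, sub in spec.items():
            value = apply_spec(data, sub)
            if value is not _MISSING:  # skip instead of raising
                out[key] = value
        return out
    raise TypeError(f"unsupported spec: {spec!r}")
```

Unlike the glom meta-spec, this keeps empty dicts (e.g. `PersonalDetails: {}`) when every field inside them is missing, which mirrors the `{'Captain': {'PersonalDetails': {}}}` output shown above.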

username_0: tq so much!!!

Status: Issue closed

|

jeffbass/imagezmq | 694544922 | Title: Looking for Advice

Question:

username_0: Jeff, I love your project. I am working on a project that uses multiple RPis with cameras (these could be webcams as well). The hub program starts and waits for the three sender RPis (currently) to send an image. Each image is saved with a sequence number. The hub then sends each sender a timestamp for the next timelapse shot to be taken; this is identical for every sender. The hub then stitches the images into a pano image, saves it with the sequence number, and increments the number for the next cycle. This is all working well, although I had to make custom camera mounts out of foam board, a small block of wood and a short dowel.

The problem I have is that the hub must start before the senders. I want to automate the system, so I was thinking of setting up a watch program on each sender that listens on another port. When the hub is started or restarted, it sends each sender's watch program a new Python configuration file with settings (e.g. resolution) on that separate port. The watch program saves the file as config.py, then starts or restarts the sender program in the background via `subprocess.Popen`. The sender then restarts and reads the new config.py as an import. Still working on this.
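The watch-program step described above (save the received settings as `config.py`, then restart the sender) could look roughly like this. It is a minimal sketch under stated assumptions: receiving the file over the network (plain ZMQ or imagezmq) is left out, and the file name and sender command in the usage comment are hypothetical.

```python
import os
import subprocess
import tempfile


def write_config_atomically(config_text: str, config_path: str) -> None:
    """Write to a temp file, then rename, so the sender never imports a half-written file."""
    directory = os.path.dirname(os.path.abspath(config_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        f.write(config_text)
    os.replace(tmp_path, config_path)  # atomic on the same filesystem


def restart_sender(proc, cmd, timeout=5.0):
    """Stop the running sender (if any) and start a fresh one that re-imports config.py."""
    if proc is not None and proc.poll() is None:
        proc.terminate()
        try:
            proc.wait(timeout=timeout)
        except subprocess.TimeoutExpired:
            proc.kill()
            proc.wait()
    return subprocess.Popen(cmd)


# Hypothetical usage inside the watch program:
# write_config_atomically(received_text, "config.py")
# sender = restart_sender(sender, ["python3", "sender.py"])
```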

Would it be possible to send a text file and return a confirming text message between the hub and sender, rather than a jpeg and text as imagezmq does? Currently I was looking at just using basic zmq commands to the watch program on the senders, but it would be nicer if it were possible with imagezmq. I could not find this feature in the imagezmq code but thought I would drop you a line.

Note: I had to modify the https://github.com/ppwwyyxx/OpenPano C++ code to get it to accept an output file name for the pano jpg/png, since it would only generate a fixed out.jpg file in the same folder. My version is here: https://github.com/username_0/OpenPano. I created curl bash scripts for easier install. I could have used Adrian's OpenCV image stitching, but I prefer the self-contained OpenPano approach, since users would not need the latest and greatest opencv contrib version.

FYI I have attached my camera holder. When the pipano project is ready I will post it on my GitHub repo. It is still a work in progress, and there are lots of issues to work out. For one, the cropping of panos is not consistent, so a timelapse video would need some image stabilization during video editing. If lighting is stable then most of the panos crop pretty consistently, but low light can throw things off easily. I had to build the stands to allow accurate pointing. I don't want to use a pan/tilt because the images would not be synchronized with the same timestamp.

[cam-stand.pdf](https://github.com/username_1/imagezmq/files/5180357/cam-stand.pdf)

Excuse me if I got a little chatty

Claude ...

Answers:

username_1: Hi Claude @username_0,

Glad you love my project. Thanks. Your project sounds really interesting. I'll definitely keep an eye on it on GitHub as you make progress.

Regarding your text sending question, I do use `imageZMQ` to send and receive text messages in a number of my projects, using the `ImageSender.send_jpg` method. The `jpg_buffer` can be any bytestring; it doesn't actually have to be a jpg. To use `imageZMQ` to send text messages only, I just set the `jpg_buffer` to a short bytestring in the sender. Then I receive and ignore that short bytestring in the hub that receives the text message. For example:

```python
import imagezmq

# Sender that sends text messages (runs as its own program)
# in the text sending program, where sender is an imageZMQ.ImageSender instance
sender = imagezmq.ImageSender(connect_to='tcp://hub-address:5555')  # hypothetical hub address
msg_text = 'Some message text.'
hub_reply = sender.send_jpg(msg_text, b'0')  # set jpg_buffer parameter to a single byte

# Receiver that receives the text messages (runs as a separate program)
# in the text receiving program, where hub is an imageZMQ.ImageHub instance
hub = imagezmq.ImageHub()
msg_text, buf = hub.recv_jpg()  # buf contains b'0', but just don't bother to use it
# or, another way to receive and not use the jpg buffer:
# msg_text, _ = hub.recv_jpg()
hub.send_reply(b'OK')  # in REQ/REP mode the sender waits for this reply
```

This code works well for me in a number of my projects. I do sometimes have to use the `str.encode` and `bytes.decode` methods to avoid errors about text versus bytestrings in my message text:

```python
msg_text_in_bytes = b'Some message text.'  # example bytes, as received over ZMQ

# convert bytes to Python 3 string:
msg_text = msg_text_in_bytes.decode('utf-8')  # decode from bytes to Python 3 string

# or, converting from Python 3 string to bytes:
msg_text_in_bytes = msg_text.encode()  # convert Python 3 string to bytes to make ZMQ happy
```

Your method of using config.py text files and a watch program to control startup and options of your cameras versus the hub sounds similar to what I am doing with my `imagenode` and `imagehub` programs. I have a threaded function that watches for `imagehub` restarts (or other glitches) and it then restarts the `imagenodes` accordingly. To change the `imagenode` settings (there are many of those!), I copy a new `imagenode.yaml` text file to the imagenode and restart it. I use systemctl for starting and restarting my imagenodes using an `imagenode.service` file. You can see that in my `imagenode` repository [here.](https://github.com/username_1/imagenode/blob/master/imagenode.service) My approach to the fact that hubs have to start before senders is to have all my `imagenodes` running continuously as systemctl services with the (`Restart=always, RestartSec=5`) options. Then, whenever the imagenode detects an imagehub restart, it simply exits. Then systemctl waits 5 seconds and restarts it.
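The exit-and-let-systemd-restart pattern described above can be sketched as a small watchdog: remember when the hub last replied, and exit when it has been silent too long, so that `Restart=always` with `RestartSec=5` brings the node back. This is a hypothetical illustration, not the code in `imagenode`; the class name, the timeout value and the exit code are assumptions.

```python
import sys
import time


class HubWatchdog:
    """Decides when the hub looks restarted or unreachable (no reply for timeout_s)."""

    def __init__(self, timeout_s: float, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_reply = clock()

    def mark_reply(self) -> None:
        """Call after every successful reply from the hub."""
        self.last_reply = self.clock()

    def hub_is_stale(self) -> bool:
        return self.clock() - self.last_reply > self.timeout_s


def exit_if_stale(watchdog: HubWatchdog) -> None:
    # Exiting is enough: with Restart=always and RestartSec=5 in the
    # .service file, systemd starts the node again 5 seconds later.
    if watchdog.hub_is_stale():
        sys.exit(1)
```

Injecting `clock` keeps the timing logic testable without waiting in real time; a background thread in the node would call `exit_if_stale` periodically.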

Thanks for sending your RPi camera stand template. I'm going to give it a try. No need to apologize for being chatty. I love to hear about the projects that benefit from `imageZMQ`. I learn a lot from what others are doing. It is why I find GitHub so useful.

Jeff

username_0: Thanks for the quick reply. I will implement it per your sample code. I was hoping I could use text instead of jpg data, and now I know. I like the systemd service. I have used it on several other projects, and the systemd unit file can be pretty simple: just [Unit], [Service], and [Install] sections and a related bash script if required. Attached is a small image of my 3-camera pano board. These are on old RPi 3s with no built-in Wi-Fi, but they work fine and can be moved around. Thing1 is a sender and hub, but I might move the hub to one of my RPi 4s with a mounted HD; stitching would be faster. I can also use a wireless ad-hoc network setup if in a remote location away from home Wi-Fi.

username_1: I love your DIY hardware build. But I haven't seen those RPi cases before. It looks like the case holds both the RPi and the PiCamera in the same case? Where did you get that?

username_0: I bought mine a few years ago, but I found a similar RPi 3 case on Amazon at a good price.

https://www.amazon.com/Keyestudio-Supporting-Camera-Installation-Raspberry/dp/B076PQVMN2/ref=sr_1_2?dchild=1&keywords=raspberry+pi+case+with+camera+mount&qid=1599445678&sr=8-2

I live in Canada but winter in Texas. That will be delayed this year due to COVID issues in the US; I will probably go down in spring or summer next year. Hope you are doing well.

RPi 4 case with built-in camera mount:

https://www.amazon.com/LABISTS-Raspberry-Heatsink-Heatsinks-Supply/dp/B085GBCLYR/ref=sr_1_11?dchild=1&keywords=raspberry+pi+case+with+camera+mount&qid=1599445294&sr=8-11

https://www.amazon.com/Keyestudio-Supporting-Camera-Installation-Raspberry/dp/B076PQVMN2/ref=sr_1_2?dchild=1&keywords=raspberry+pi+case+with+camera+mount&qid=1599445294&sr=8-2

username_1: Thanks!

username_0: Jeff.

Hope you are doing well. I have the initial version of my timelapse panorama project up.

https://github.com/username_0/panopi

I got panosend.py to auto start and stop by using a panowatch.py program (it runs in the background via panowatch.sh) that uses ZMQ port 5556 for communications. I'm using an RPi 4 for panohub stitching (much faster than my older machines). There are still a few items to implement. I plan to read the panosend.yaml settings from panohub.yaml (eliminating panosend.yaml). I will also automatically change the hub IP address in the panosend YAML settings that are sent to the panosend RPis when panohub is started, and I will use the logging library rather than print statements. So far it seems to be working OK. It needs zeroconf, since I am getting IP addresses from host names via sockets.

This helps if DHCP changes IP addresses. As far as I can tell, RPis use zeroconf out of the box.

I made installation easy using curl scripts. The documentation needs work, and I plan to make a YouTube video on the project.

Stay Safe

Regards Claude ...

username_0: Just an update. I now read the panosend YAML settings from the panohub.yaml file in the panohub folder. The panosend_settings are read and ZMQ_PANOHUB_IP is updated dynamically. This avoids having to manually edit the panohub.yaml settings, and it also adjusts automatically if DHCP makes changes or you move the hub to another machine. I did this recently, and all I had to do was install panohub on the new machine and start panohub.py; everything just worked, since panowatch was running on all the panosend RPis. The new RPi 4 hub can stitch three 720p images in a few seconds.
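The dynamic hub-IP step described above can be sketched as two small functions: resolve a host name to an IPv4 address, then return a copy of the sender settings with `ZMQ_PANOHUB_IP` filled in. This is a minimal sketch under assumptions, not panopi's actual code: the settings are assumed to be already loaded from panohub.yaml into a plain dict, and the helper names `resolve_hub_ip` and `with_hub_ip` are hypothetical.

```python
import socket


def resolve_hub_ip(hub_host):
    """Resolve a hub host name (e.g. an mDNS name like 'panohub.local') to IPv4.

    socket.gethostbyname uses the OS resolver, so zeroconf/mDNS names resolve
    only if the system supports them. A dotted IPv4 string is returned as-is.
    """
    return socket.gethostbyname(hub_host)


def with_hub_ip(panosend_settings, hub_ip, key="ZMQ_PANOHUB_IP"):
    """Return a copy of the (hypothetical) sender settings dict with the hub IP set."""
    updated = dict(panosend_settings)  # leave the caller's dict untouched
    updated[key] = hub_ip
    return updated


if __name__ == "__main__":
    # Hypothetical settings, as if already parsed from panohub.yaml
    settings = {"ZMQ_PANOHUB_IP": "0.0.0.0", "IMAGE_WIDTH": 1280}
    print(with_hub_ip(settings, resolve_hub_ip("127.0.0.1")))
```

One caution, if the hub resolves its own name: on Debian-based systems `socket.gethostbyname(socket.gethostname())` can return a loopback address such as 127.0.1.1 from /etc/hosts, so resolving the `.local` mDNS name is usually the safer choice.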

I will relocate the setup to a more interesting scene and make a pano video from the pano images. I am going to do a pan-tilt camera image stitching setup. I have a remote pan-tilt camera setup in Texas running pi-timolo that sends images up to my Google Drive, but it would be a lot nicer to send one pano image instead of multiple pan timelapse images. I will also add the feature to my robot, since it can rotate as well as pan the camera. It would be possible to get a 360+ degree view, or at least two 180+ degree panos if robot rotation is a problem for stitching.

There are lots of possible enhancements, but I may just try to keep it simpler and do multiple simpler projects. My PI-TIMOLO and SPEED CAMERA projects grew in complexity through feature creep, but lots of people like these projects.

Thanks for your assistance

Stay Safe

Claude .....

username_1: Hi Claude,

Thanks for these updates. It sounds like you are making great progress. I am following your work with interest because I plan to add panoramic multi-camera wildlife tracking to my own projects in the future.

Thanks for sending links to your videos, too. I've been enjoying watching them, especially the ones about your PI-TIMOLO, MOTION_TRACK and SPEED_CAMERA projects.

Looking forward to continued updates!

Thanks,

Jeff

username_0: Thanks Jeff

I appreciate your help and support. Today I brought the PANOPI camera setup upstairs and put it outside on the window sill. I used a powered hub and 1 ft USB cables for a clean setup that is easy to move.

The new version reads all settings from panohub.yaml. This is my first project using YAML files. I had to put my thinking cap on to figure out how to dynamically edit the *ZMQ_PANOHUB_IP* variable in the panosend_settings and transmit the stream to panowatch.py. This means you can easily change hub machines and not have to worry about editing the ZMQ IP address.

I am thinking of adding a motion tracking feature on one of the senders that would trigger the pano image sequence. I also added image resolution rounding so picamera does not generate a warning. I also updated my PI-TIMOLO to use image resolution rounding, and implemented a change to reduce CPU usage when only timelapse mode is used, per a user's request.
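The resolution rounding mentioned above can be sketched in a few lines. As I understand picamera's documentation, unencoded captures round the width up to a multiple of 32 and the height up to a multiple of 16, so pre-rounding the requested size avoids the warning. This is a hypothetical helper, not PI-TIMOLO's code; whether that project rounds up or down is not stated here, and this sketch rounds up to match the camera's own behavior.

```python
def round_resolution(width, height):
    """Round a requested capture size up to the camera's 32x16 block size."""
    # -(-n // m) is integer ceiling division without importing math
    rounded_width = -(-width // 32) * 32
    rounded_height = -(-height // 16) * 16
    return rounded_width, rounded_height
```

For example, 1920 x 1080 becomes 1920 x 1088, while 1280 x 720 and the planned 1920 x 720 are already aligned and pass through unchanged.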

I had fun with this project. I want to do a how-to YouTube video and also generate a PANO video from the full-size pano images. It looks like the cropping is consistent if there is good lighting and there are good stitch points.

I want to try different resolutions. 1080p stitches well because its higher resolution lets the overlap be narrower. Tomorrow I am going to try 1920 x 720, a combination of 1080p width and 720p height, or I may try the maximum camera width with 720p height.

I had a slight issue with two of the panosend cameras. The images were not very good, and I had to clean the camera lenses. I used a blunted toothpick (cut with scissors) with a lens cleaning cloth over it. I might try a bit of lens cleaner solution next time. Canned air did not work, but physical cleaning worked OK. I will try another cleaning tomorrow and do some research to see if anyone has a different way. The camera's pinhole lens opening is pretty small, and I would not want to damage a perfectly good camera module, but my cleaning method improved things a lot, as confirmed by my wife.

Looking at the images, I think my speed camera needs cleaning too. It seems a little foggy.

Stay Safe and Healthy

Regards

Claude

. |

VirtwhoQE/virtwho-ci | 814257012 | Title: tc_1109 failed due to KeyError

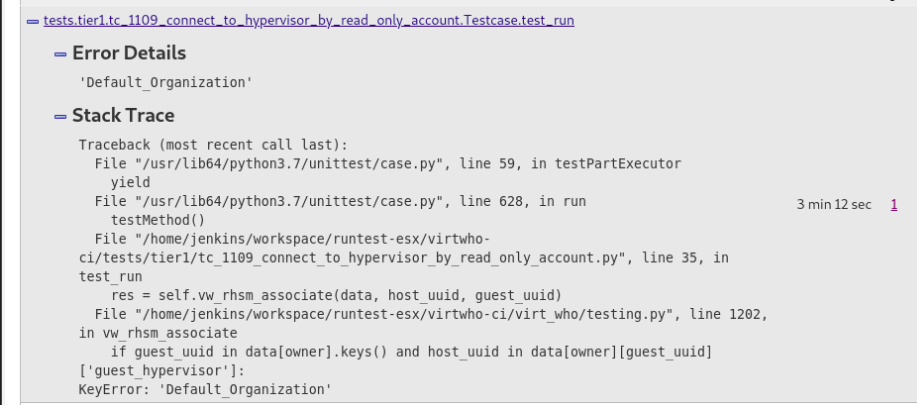

Question:

username_0:

Need to analyze whether something is wrong in:

` if guest_uuid in data[owner].keys() and host_uuid in data[owner][guest_uuid]['guest_hypervisor']: `
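The `KeyError` comes from the direct indexing in that condition: `data[owner]` fails if the owner is missing, and `['guest_hypervisor']` fails if the guest entry lacks that key. Below is a sketch of a fail-soft version, assuming the nested layout `{owner: {guest_uuid: {'guest_hypervisor': ...}}}` inferred from the condition itself; the function name is hypothetical. Because the thread traced the failure to an environment setting, returning `False` could hide that misconfiguration, so logging the missing key may be the better choice in the test suite.

```python
def guest_on_host(data, owner, guest_uuid, host_uuid):
    """Null-safe version of the membership check on the assumed nested dict."""
    # Each .get() falls back to an empty container instead of raising KeyError
    guest = data.get(owner, {}).get(guest_uuid, {})
    return host_uuid in guest.get('guest_hypervisor', ())
```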

Answers:

username_0: It is an environment issue: the `Propagate to children` option needs to be checked when setting up the read-only account.

username_0: Checked, and the test passed, so I will close this issue.

Status: Issue closed

|

CircuitSetup/Split-Single-Phase-Energy-Meter | 445608658 | Title: Caps on schematic?

Question:

username_0: Looking at the ATM90E32 datasheet for DVDD: it notes that DVDD should be decoupled with 10 uF and 0.1 uF capacitors. Where is the 10 uF?

Also, the datasheet notes that VDD18 only needs a 10 uF cap. Why is a 0.1 uF tied here? I'm thinking DVDD and VDD18 are swapped?

Answers:

username_1: You are correct! That is something I initially left out but will be adding for the final version. It's actually not in the Application note examples, and I'm not sure why.

Status: Issue closed

username_1: This is fixed in the latest version. See here: https://github.com/username_1/Split-Single-Phase-Energy-Meter/blob/master/Hardware/energy_meter_pcb_v1.4.png |

google/automl | 865881603 | Title: Training fails: RuntimeError: Key _CHECKPOINTABLE_OBJECT_GRAPH not found in checkpoint

Question:

username_0: Hi, I'm trying to retrain efficientdet-d1 on a custom dataset.

However, when I run main.py as follows, I get an error.

The training command I use is:

python3 main.py --mode=train_and_eval --train_file_pattern=train/* --val_file_pattern=test/* --model_name=efficientdet-d1 --model_dir efficientdet-d1/ --ckpt=efficientdet-d1 --train_batch_size=2 --eval_batch_size=2 --num_epochs=10 --num_examples_per_epoch=5000 --eval_samples=100 --hparams=configs/default.yaml

I run the script on Ubuntu 18.04 with Python 3.6.9 and TensorFlow 2.5.0-dev20201224.

The error:

Traceback (most recent call last):

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1375, in _do_call

return fn(*args)

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1360, in _run_fn

target_list, run_metadata)

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1453, in _call_tf_sessionrun

run_metadata)

tensorflow.python.framework.errors_impl.NotFoundError: 2 root error(s) found.

(0) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[{{node save/RestoreV2}}]]

(1) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[{{node save/RestoreV2}}]]

[[save/RestoreV2/_4891]]

0 successful operations.

0 derived errors ignored.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/training/saver.py", line 1303, in restore

{self.saver_def.filename_tensor_name: save_path})

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 968, in run

run_metadata_ptr)

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1191, in _run

feed_dict_tensor, options, run_metadata)

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1369, in _do_run

run_metadata)

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1394, in _do_call

raise type(e)(node_def, op, message)

tensorflow.python.framework.errors_impl.NotFoundError: 2 root error(s) found.

(0) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[node save/RestoreV2 (defined at /home/acaris/.local/lib/python3.6/site-packages/tensorflow_estimator/python/estimator/estimator.py:1510) ]]

(1) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[node save/RestoreV2 (defined at /home/acaris/.local/lib/python3.6/site-packages/tensorflow_estimator/python/estimator/estimator.py:1510) ]]

[[save/RestoreV2/_4891]]

0 successful operations.

0 derived errors ignored.

...

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/acaris/.local/lib/python3.6/site-packages/tensorflow/python/training/py_checkpoint_reader.py", line 70, in get_tensor

self, compat.as_bytes(tensor_str))

RuntimeError: Key _CHECKPOINTABLE_OBJECT_GRAPH not found in checkpoint

During handling of the above exception, another exception occurred:

[Truncated]

...

tensorflow.python.framework.errors_impl.NotFoundError: Restoring from checkpoint failed. This is most likely due to a Variable name or other graph key that is missing from the checkpoint. Please ensure that you have not altered the graph expected based on the checkpoint. Original error:

2 root error(s) found.

(0) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[node save/RestoreV2 (defined at /home/acaris/.local/lib/python3.6/site-packages/tensorflow_estimator/python/estimator/estimator.py:1510) ]]

(1) Not found: Key box_net/box-0-bn-3/beta/Momentum not found in checkpoint

[[node save/RestoreV2 (defined at /home/acaris/.local/lib/python3.6/site-packages/tensorflow_estimator/python/estimator/estimator.py:1510) ]]

[[save/RestoreV2/_4891]]

0 successful operations.

0 derived errors ignored.

Original stack trace for 'save/RestoreV2':

File "main.py", line 402, in <module>

Looks like a mismatch of some sort. Any idea how to fix this?

Answers:

username_1: Hi, based on your command line, setting "--model_dir=efficientdet-d1 --ckpt=efficientdet-d1" is problematic. Because the efficientdet-d1 folder is not empty, training skips --ckpt and tries to load all variables from efficientdet-d1, causing this issue.

Please use a different value for model_dir such as efficientdet-d1-finetune. Let me know if it still doesn't work.
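A small pre-flight check can catch this before training starts. The sketch below is a hypothetical helper, not part of automl's main.py: it warns when `model_dir` is the same folder as `--ckpt`, or when `model_dir` already contains a `checkpoint` state file (the file name `tf.train.Saver` writes by default). That file is used here as a proxy for the "folder is not empty" condition the maintainer describes.

```python
import os


def ckpt_warning(model_dir, ckpt):
    """Return a warning string if --ckpt is likely to be ignored, else None."""
    same_dir = os.path.abspath(model_dir) == os.path.abspath(ckpt)
    # A "checkpoint" state file means training would resume from model_dir
    has_state = os.path.isfile(os.path.join(model_dir, "checkpoint"))
    if same_dir or has_state:
        return (f"model_dir {model_dir!r} already holds a checkpoint; training "
                f"restores from it, so --ckpt={ckpt!r} may be skipped. "
                "Use a fresh directory such as 'efficientdet-d1-finetune'.")
    return None
```

Run against the command in the question, `ckpt_warning("efficientdet-d1", "efficientdet-d1")` returns a warning, while a not-yet-created `efficientdet-d1-finetune` returns `None`.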

Status: Issue closed

username_2: what about this case too?

python model_inspect.py --runmode=saved_model --model_name=efficientdet-d3 --ckpt_path=efficientdet-lite3 --saved_model_dir=lite3_savedmodel

I am getting:

2 root error(s) found.

(0) Not found: Key efficientnet-b3/blocks_0/conv2d/kernel not found in checkpoint

[[node save/RestoreV2 (defined at /home/chaahm/automl_newest/efficientdet/inference.py:217) ]]

[[save/RestoreV2/_301]]

(1) Not found: Key efficientnet-b3/blocks_0/conv2d/kernel not found in checkpoint

[[node save/RestoreV2 (defined at /home/chaahm/automl_newest/efficientdet/inference.py:217) ]] |

dotnet/AspNetCore.Docs | 785797645 | Title: 'DbContextOptionsBuilder' does not contain a definition for 'UseInMemoryDatabase'

Question:

username_0: In the section "Register the database context", while updating the class `Startup.cs`, I am getting an error on the line `opt.UseInMemoryDatabase("TodoList"));`: it can't find the method `UseInMemoryDatabase`.

The error message is

"Error CS1061 'DbContextOptionsBuilder' does not contain a definition for 'UseInMemoryDatabase' and no accessible extension method 'UseInMemoryDatabase' accepting a first argument of type 'DbContextOptionsBuilder' could be found (are you missing a using directive or an assembly reference?)"

What am I missing here?

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 583ae3e7-5915-f22b-e54c-6c100cf2a7da

* Version Independent ID: 95f2549e-29f9-e31a-b4d0-432b971643ff

* Content: [Tutorial: Create a web API with ASP.NET Core](https://docs.microsoft.com/en-us/aspnet/core/tutorials/first-web-api?view=aspnetcore-5.0&tabs=visual-studio)

* Content Source: [aspnetcore/tutorials/first-web-api.md](https://github.com/dotnet/AspNetCore.Docs/blob/master/aspnetcore/tutorials/first-web-api.md)

* Product: **aspnet-core**

* Technology: **aspnetcore-tutorials**

* GitHub Login: @username_2

* Microsoft Alias: **riande**

Status: Issue closed |

StefanScherer/windows-docker-machine | 1097073693 | Title: Apple Silicon Support

Question:

username_0: Hi,

Both Parallels and VMware now support M1 chips. Is it possible for `windows-docker-machine` to work on M1 machines?

Status: Issue closed

Answers:

username_1: I don't think this will work. Parallels demonstrated that they can run Windows ARM64, but not x86_64. The same goes for VMware: it doesn't run x86_64 ISO files on M1. |

red/red | 267407056 | Title: UNSETting some of the OP!s wreaks havoc on console

Question:

username_0: ```

Answers:

username_1: Same with `unset '<` or `unset '>` except `[]` does not crash the console.

username_0: Just remembered, `unset '=` behavior looks identical to `unset 'system` ;)

username_2: The core words used by the console code are not protected for now, that will come with the introduction of `protect/unprotect` functions in 0.8.0. I don't see what we can do in the meantime, besides avoiding redefining those core words.

Status: Issue closed

|

CosmosOS/Cosmos | 430126792 | Title: Issues with StreamReader / Writer

Question:

username_0: I have a performance test program to test how large files are handled in COSMOS.

https://pastebin.com/PdcUYT54

This performance test consists of:

Creating 144 x 128 coordinate pairs, from 0,0 to 143,127, and writing them to a file line by line.

Reading those coordinates into an array of point objects and then displaying the X and Y values of the points on the screen.
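For reference, the two steps of the test can be sketched in Python (not the C# Cosmos program itself, whose pastebin source isn't reproduced here). The one-`x,y`-pair-per-line file format and the x-outer, y-inner write order are assumptions. The sketch is useful as a baseline: on a working file system, the read-back should yield exactly 144 x 128 = 18,432 points, ending at (143, 127).

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: int
    y: int


def write_coordinates(path, width=144, height=128):
    """Write every pair from 0,0 to (width-1),(height-1), one 'x,y' per line."""
    with open(path, "w") as f:
        for x in range(width):
            for y in range(height):
                f.write(f"{x},{y}\n")


def read_points(path):
    """Read the file back into a list of Point objects."""
    points = []
    with open(path) as f:
        for line in f:
            x_str, y_str = line.strip().split(",")
            points.append(Point(int(x_str), int(y_str)))
    return points
```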

Thing is, the whole test doesn't progress past the reading part.

Why?

It works a bit like this:

The program stalls while writing to the disk, and when I break it and look into the vmdk in VMware, I get the following result:

What I tried next was to write the file myself on Windows and then just read its contents and display them on the screen.

The problem is that after a certain number of lines, I get garbage on the screen consisting of random characters and the <?> character.

Answers:

username_1: I think @username_2 was working on some fixes for file system bugs triggered by writing or reading larger files. The issue might be caused by those bugs.

username_1: Can you check if https://github.com/CosmosOS/Cosmos/commit/b9307acfdfa7f92b46093612fac761752763588b changed anything?

username_0: @username_1 I was about to. Will try it out.

username_0: @username_1 the problem is still there with the latest commit, albeit in a different form (no garbage characters, just empty ones). Video and test file supplied:

https://files.gitter.im/CosmosOS/Cosmos/G3pY/bandicam-2019-05-12-13-12-11-250.avi

https://files.gitter.im/CosmosOS/Cosmos/lcuC/lcet10.txt

username_0: The StreamReader reads approximately 4245 characters before displaying empty lines.

username_1: Is it possible for you to run the code as a test, and enable cosmos debug in a few files so we have some debug log to work off?

username_0: Since I don't know how to use the testrunner... Here's the code:

```csharp
using System;
using System.IO;

// Read the test file line by line, pausing every 20 lines
StreamReader myreader = new StreamReader(@"0:\lcet10.txt");
int lncount = 0;
int chrlength = 0;
while (!myreader.EndOfStream)
{
    string tmp = myreader.ReadLine();
    chrlength += tmp.Length;  // running total of characters read so far
    Console.WriteLine(tmp);
    lncount++;
    Console.WriteLine("Chr:" + chrlength);
    if (lncount == 20)
    {
        Console.WriteLine("Length: " + chrlength);
        Console.ReadKey();  // wait for a key press before the next page
        lncount = 0;
    }
}
myreader.Close();
```

username_2: I'm looking in to this.

username_2: I'm not seeing an issue in the latest master. Did I miss something?

username_0: It still doesn't work with the latest master... try reading it to the end?

username_2: Are you using your own disk image or the one provided with the UK?

username_0: The one provided, just added the file there with VMWare.

username_0: Could this be an issue with memory overflow?

username_2: Can you send me your disk image? I'll see if I can duplicate that way.

username_0: https://we.tl/t-UIdoCwMqLX

Here it is.

Status: Issue closed

username_0: It works now! |

ChurchCRM/CRM | 493573764 | Title: Improper 3rd normal form

Question:

username_0: The person classification field needs to be split, conceptually. As configured by default, it contains two different classification schemes, both of which are necessary. However, a person might be a member and an attender, OR a member and a non-attender.

We need to be able to separate members from attenders. I don't know which would be easier: allowing multiple classification records per person, or splitting the field into separate attendance and membership fields.
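The second option (separate fields) can be sketched with an in-memory SQLite table, where membership and attendance are independent columns so any combination can be stored. The table and column names below are hypothetical and do not reflect ChurchCRM's actual schema. The other option, a person-to-classification link table with multiple rows per person, is equally normalized and more flexible if more schemes are added later.

```python
import sqlite3


def create_person_table(conn):
    # Hypothetical schema: two independent attributes instead of one mixed field
    conn.execute("""
        CREATE TABLE person (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            membership TEXT NOT NULL CHECK (membership IN ('member', 'non-member')),
            attendance TEXT NOT NULL CHECK (attendance IN ('attender', 'non-attender'))
        )
    """)


def members_not_attending(conn):
    """Names of members who are not attending, which one mixed field can't express."""
    rows = conn.execute(
        "SELECT name FROM person "
        "WHERE membership = 'member' AND attendance = 'non-attender' ORDER BY name"
    )
    return [name for (name,) in rows]
```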

Collected Value Title | Data
----------------------|----------------
Page Name |/churchcrm/Menu.php
Screen Size |540x960
Window Size |704x1280
Page Size |1749x1280
Platform Information | Linux host2030.hostmonster.com 4.14.135-214.ELK.el7.x86_64 #1 SMP Wed Jul 31 01:57:22 CDT 2019 x86_64
PHP Version | 7.3.9
SQL Version | 5.7.23-23
ChurchCRM Version |3.2.3
Reporting Browser |Mozilla/5.0 (Macintosh; Intel Mac OS X 10.14; rv:69.0) Gecko/20100101 Firefox/69.0
Prerequisite Status |Missing Prerequisites: ["Mcrypt"]
Integrity check status |{"status":"success"}

Answers:

username_1: For these sorts of cases, we usually recommend implementing a "Custom Field" to populate as required to meet your specific needs. Please have a look at this wiki page and evaluate: https://github.com/ChurchCRM/CRM/wiki/Custom-Fields

Please let us know how you get on.

username_1: Closing due to lack of activity. Feel free to update us on progress if you think this still needs to stay open.

Status: Issue closed

|

hyb1996-guest/AutoJsIssueReport | 291911855 | Title: [163]java.lang.RuntimeException: ImageReaderContext is not initialized

Question:

username_0: Description:

---

java.lang.RuntimeException: ImageReaderContext is not initialized

at android.media.ImageReader.nativeImageSetup(Native Method)

at android.media.ImageReader.acquireNextSurfaceImage(ImageReader.java:327)

at android.media.ImageReader.acquireNextImageNoThrowISE(ImageReader.java:308)

at android.media.ImageReader.acquireLatestImage(ImageReader.java:283)

at com.stardust.autojs.runtime.api.image.ScreenCapturer$2.onImageAvailable(ScreenCapturer.java:93)

at android.media.ImageReader$ListenerHandler.handleMessage(ImageReader.java:648)

at android.os.Handler.dispatchMessage(Handler.java:111)

at android.os.Looper.loop(Looper.java:207)

at com.stardust.autojs.runtime.api.Loopers$2.run(Loopers.java:57)

at java.lang.Thread.run(Thread.java:818)

at com.stardust.lang.ThreadCompat.run(ThreadCompat.java:61)

Device info:

---

<table>

<tr><td>App version</td><td>2.0.16 Beta2</td></tr>

<tr><td>App version code</td><td>163</td></tr>

<tr><td>Android build version</td><td>1495809106</td></tr>

<tr><td>Android release version</td><td>6.0</td></tr>

<tr><td>Android SDK version</td><td>23</td></tr>

<tr><td>Android build ID</td><td>Flyme 6.1.0.0Y</td></tr>

<tr><td>Device brand</td><td>Meizu</td></tr>

<tr><td>Device manufacturer</td><td>Meizu</td></tr>

<tr><td>Device name</td><td>M1E</td></tr>

<tr><td>Device model</td><td>M1 E</td></tr>

<tr><td>Device product name</td><td>meizu_M1E</td></tr>

<tr><td>Device hardware name</td><td>mt6755</td></tr>

<tr><td>ABIs</td><td>[arm64-v8a, armeabi-v7a, armeabi]</td></tr>

<tr><td>ABIs (32bit)</td><td>[armeabi-v7a, armeabi]</td></tr>

<tr><td>ABIs (64bit)</td><td>[arm64-v8a]</td></tr>

</table> |

TNThieding/exif | 625010134 | Title: Removing all known EXIF values

Question:

username_0: Hey, great package 👍. I'm using it to remove all EXIF values and save a new file. I'm checking each attribute to be removed, and I thought it would be useful to have a method in your _image.py to erase all of the most common EXIF data, so anyone could just call it and get a clean image in cases like mine.

Do you want me to do a Pull request with those changes?

Thank you!

Answers:

username_1: That sounds like a good idea! I'd gladly welcome a pull request. Active development and CI/CD are through GitLab (at https://gitlab.com/username_1/exif/), so it would be great if you could submit the changes there. If you'd prefer to make the pull request on GitHub, you can do that too, and I'll copy it into GitLab myself.

username_0: Sorry, I don't have a GitLab account; it is much quicker for me to do it here.

Pull request sent.

username_1: Thank you, @username_0. I'll continue our discussion on the pull request and I can move it to GitLab afterwards.

username_1: Released it in v0.11.0 of the package.

Status: Issue closed

|

llhuii/dive-into-k8s | 913226760 | Title: kubectl apply VS replace

Question:

username_0: 1. `apply` uses the patch method

2. `replace` uses the update method

3. `replace --force` first deletes the object and then creates it

Patch supports the following strategies (see https://kubernetes.io/zh/docs/tasks/manage-kubernetes-objects/update-api-object-kubectl-patch/):

1. json: JSON Patch

```json
[
  {
    "op": "replace",
    "path": "/users/0/email",
    "value": "<EMAIL>"
  }
]
```

2. merge: JSON Merge Patch

```json
{
  "a": "z",
  "c": {
    "f": null
  }
}
```

3. strategic: strategic merge patch

```json

```

For the first two, see https://erosb.github.io/post/json-patch-vs-merge-patch/

// To be updated
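JSON Patch (RFC 6902) is a list of operations addressed by JSON Pointer paths, while JSON Merge Patch (RFC 7386) is a partial document merged into the target, where `null` means "delete this member". Strategic merge patch is Kubernetes-specific: it also consults the object's schema, for example merging lists such as `containers` by a key like `name` instead of replacing them. Below is a minimal Python sketch of the RFC 7386 algorithm, applied to the `"f": null` merge-patch example; it is an illustration, not kubectl's implementation.

```python
import copy


def json_merge_patch(target, patch):
    """Apply an RFC 7386 JSON Merge Patch to target and return a new document."""
    if not isinstance(patch, dict):
        # A non-object patch (including a list) replaces the target wholesale
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in a merge patch deletes the member
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result
```

Because lists are replaced wholesale and `null` always means delete, a merge patch can neither append to a list nor set a field to `null`; the first limitation is what strategic merge patch addresses.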

Answers:

username_0: Reference: https://blog.atomist.com/kubernetes-apply-replace-patch/ |

pmattd/big-data-generator | 600307828 | Title: Generator for creating timestamps

Question:

username_0: Current timestamp, and random timestamps between upper and lower limits, in order (work out how many files will be created and distribute the timestamps over the files).
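The ordered variant described in the issue (sorted random timestamps spread over the output files) can be sketched as follows. The function names are hypothetical, and the even split of contiguous chunks is one assumed way to "distribute the timestamps over the files". Per the follow-up, the project ended up using unordered random timestamps instead.

```python
import random
from datetime import datetime, timedelta


def sorted_random_timestamps(lower, upper, count, seed=None):
    """Return `count` random datetimes in [lower, upper], in ascending order."""
    rng = random.Random(seed)
    span = (upper - lower).total_seconds()
    offsets = sorted(rng.uniform(0, span) for _ in range(count))
    return [lower + timedelta(seconds=s) for s in offsets]


def distribute(items, num_files):
    """Split an ordered list into num_files contiguous chunks, sizes differing by at most 1."""
    base, extra = divmod(len(items), num_files)
    chunks, start = [], 0
    for i in range(num_files):
        size = base + (1 if i < extra else 0)
        chunks.append(items[start:start + size])
        start += size
    return chunks
```

Keeping the chunks contiguous means each file covers its own time window, so timestamps stay in order across files as well as within them.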

Answers:

username_0: Did it without keeping order: just random timestamps.

Status: Issue closed

|

Evrey/SHC_AIV | 294613485 | Title: Nizar lacks a brain when starting with low goods

Question:

username_0: So there I just put your Nizar against a Richard Lionheart on a balanced 1 vs 1 testing map with all necessary resources available, and 2k starting gold for both. Now, I was excited to see who would win (Richard's catapult/trebuchet spam vs helpless Nizar, or Nizar's assassins vs Richard's helpless always-closed gate), but to my surprise, the game was decided after just 3 game years, and ended after exactly 12 game years, with Nizar being defeated. I noticed the reason, but still tried again and restarted the game, and watched as the events repeated exactly the same way as in the first game. Below are the end statistics of the first game.

Just by looking at the statistics, it becomes obvious that Nizar didn't quite get up and running at the start, producing 0 food throughout the entirety of the game.

So enough blabbering, what was happening there? Nizar spent all of his starting wood and gold on building wheat farms, bakeries, breweries, mercenary post, engineers guild, houses, trading post, granary, and even woodcutter huts and an iron mine - but he didn't build a mill, essentially stalling all of his bread production.

A couple years into the game, his start food was gone and his popularity started dropping as he was out of gold, still had no mill, and had not gotten to build hops farms and inns either, and positive fear factor alone wasn't enough to keep up his popularity. 5 years into the game, Nizar then was down to 4 workers (3 wheat farmers, 1 woodcutter) and still had no mill.

Shortly after, 6 years into the game in total, Richard's first attack smashed Nizar's front wall and some of his buildings, and with Nizar still down at 0 popularity, 4 workers, and 0 mills, it didn't take too long afterwards until Richard had flattened all of Nizar's buildings in and around his castle, and killed his Lord with some pitch-ditch-induced delay.

All in all, this greatly reminded me of vanilla Wazir's poor performance with low starting goods, as can be seen in detail on that AI battle video: https://youtu.be/Tu4BQgsD_kE Wazir had the same issue there, didn't place his mill, ran out of food, popularity drop, game over.

His initial starting wood was spent on other buildings (e.g. farms, houses, trading post, possibly even an iron mine) before it was the first mill's turn on the AIV's build order, and when the mill finally would have been built, Wazir (or now, Nizar) is out of wood already and the mill will be skipped for the time being.

Later, even with a bit of gold left to trade, or woodcutters bringing in more wood, the AI will usually reach lower thresholds first, e.g. 10 wood for a bakery, and build that first, being back to square one... and of course, initial soldier recruiting will make short work of any remaining start gold reserves or early income from a single iron mine or selling a bit of surplus wheat.

When food runs out before all those 10-wood buildings and other stuff has been built, popularity will drop, and the AI has no gold left to buy food (or, for Wazir, doesn't buy food in general) or set negative taxes to try and counter this...

This behaviour can be less obvious when starting with many goods and gold, as more start wood increases chances for a successful mill placement, and lots of gold gives the AI more time/chances to buy wood (and/or food) and eventually hopefully get going with their mill, or at least get going enough other industry to then have a steady income large enough to fund food and wood for a mill later. Also, ironically, on maps that lack resources so that the AI just cannot build particularly much external industry right away, this issue is less present.

At this point in time you might be full of rage about my wall of text, and about the incompetence of Crusader's AI behaviour, yet I would argue this should certainly be fixable by slightly modifying the castle's build order. Key is setting the first mill very early in the build order, so that it has good chances to be built before that iron mine and other less relevant external industry, plus leaving a number of steps AFTER the mill during which no bakeries or other wooden buildings get placed in the AIV, but rather walls, a first tower, a moat or whatever else, to "buy some time" for the mill to be built even when the starting wood wasn't sufficient for building it right away and the AI checks through the next steps if anything else can be built with less than 20 wood.
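The effect described above can be illustrated with a toy model: one greedy pass over a build queue with a fixed wood budget, where anything unaffordable is skipped. The wood costs below are illustrative assumptions (check the game's actual values), and the real AIV logic re-checks the queue over time rather than in one pass, so this only shows why queue position decides whether the mill gets built.

```python
# Illustrative wood costs (assumed values, for the sketch only)
WOOD_COST = {"wheat farm": 15, "mill": 20, "bakery": 10, "house": 6}


def run_build_queue(queue, wood):
    """One greedy pass: build what the remaining wood allows, skip the rest.

    Returns (built, skipped, wood_left).
    """
    built, skipped = [], []
    for building in queue:
        cost = WOOD_COST[building]
        if cost <= wood:
            wood -= cost
            built.append(building)
        else:
            skipped.append(building)
    return built, skipped, wood
```

With 50 starting wood, the queue `["wheat farm", "wheat farm", "bakery", "house", "mill"]` builds everything except the mill (4 wood left), while moving `"mill"` to the front builds the mill and both farms and skips the cheaper bakery and house instead, which is the reordering proposed in this report.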

Answers:

username_1: Yupp, I know about this pathetic bug. It affects **every** AI lord making bread. Your solution for the problem is really neat. I think I'd make the first mill of every bread baker the very first building in the queue, even before the stock pile extension, as wood always comes first. Perhaps no re-ordering of other buildings will be needed this way.

Lookin' at my current gaming and hobby schedule, it is very unlikely that I'll fix this within the next months. Not impossible, but very unlikely. So, if you are faster, then you're welcome to help, otherwise be patient. =D

Nice bug report as always, btw.!

username_0: Yeah, I know you're working hard to make AEI great :) Yet I am not sure I can find the time to deal with the issue in a timely manner either... having the idea of changing the build order is one thing; adjusting it on all 8 castles, then testing if it works and, if necessary, fine-tuning the build order to make sure it works fine is another thing... anyway, maybe I can fit it in sometime...

Well, I suppose it may not take that much longer than writing long bug reports after all xD With an ingame speed of 125 multiplied by a factor of "theoretical 16", AI battles run quite fast compared to regular 90 x 1 speed.

And I see you finally saw the December post for Wolf that I made and then forgot about it... :D Good.

Status: Issue closed

username_1: [This commit](https://github.com/username_1/SHC_AIV/commit/0861c910da920754472919a924b2a6068883536e) should fix it. I did what I threatened to do: I made the windmills the very first building in the queue, even before the stockpile addons. |

mjordan/islandora_workbench | 816757543 | Title: Taxonomy terms with namespaces causing errors

Question:

username_0: I know I'm missing something here, but I think I'm following the documentation correctly, specifically the Taxonomy Reference Fields section of https://username_1.github.io/islandora_workbench_docs/fields/#content-type-specific-fields. I'm happy to contribute to documentation if that turns out to be worthwhile.

When trying to ingest a record with a taxonomy term that uses a namespace (in my csv, it's "person:<NAME>"), I'm getting a couple of warnings during ``--check``, and then an actual error when running Workbench. Here are the ``--check`` warnings:

WARNING - CSV field "field_subjects_name" in row 3 contains a term ("<NAME>") that is not in the referenced vocabulary ("person"). That term will be created.

WARNING - Vocabulary "family" referenced in CSV field "field_subjects_name" may not be enabled in the "Terms in vocabulary" View (please confirm it is) or may contains no terms.

Then the info and warning that come from a real job:

```
INFO - {'vid': [{'target_id': 'person', 'target_type': 'taxonomy_vocabulary'}], 'status': [{'value': True}], 'name': [{'value': '<NAME>'}], 'description': [{'value': '', 'format': None}], 'weight': [{'value': 0}], 'parent': [{'target_id': None}], 'default_langcode': [{'value': True}], 'path': [{'alias': None, 'pid': None, 'langcode': 'en'}]}
WARNING - Term '<NAME>' not created, HTTP response code was 422.
```
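A 422 (Unprocessable Entity) from Drupal typically carries a validation message in the response body, which the warning above does not show. The sketch below rebuilds a payload shaped like the INFO log and POSTs it with only the standard library so that body can be read. The endpoint `/taxonomy/term?_format=json`, Basic auth, and both function names are assumptions; adjust them to your site's REST configuration.

```python
import base64
import json
import urllib.error
import urllib.request


def build_term_payload(vid, name):
    """Build a term-creation payload with the same shape as the INFO log entry."""
    return {
        "vid": [{"target_id": vid, "target_type": "taxonomy_vocabulary"}],
        "status": [{"value": True}],
        "name": [{"value": name}],
        "description": [{"value": "", "format": None}],
        "weight": [{"value": 0}],
        "parent": [{"target_id": None}],
        "default_langcode": [{"value": True}],
        "path": [{"alias": None, "pid": None, "langcode": "en"}],
    }


def post_term(host, username, password, payload):
    """POST a term and return (status_code, body_text).

    Assumes the core REST resource for taxonomy terms is enabled at
    /taxonomy/term?_format=json and accepts Basic auth.
    """
    url = host.rstrip("/") + "/taxonomy/term?_format=json"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, response.read().decode()
    except urllib.error.HTTPError as err:
        # For a 422 the body usually names the field that failed validation.
        return err.code, err.read().decode()
```

Usage against a local instance would look like `post_term("http://localhost:8000", "admin", "<PASSWORD>", build_term_payload("person", "Jane Doe"))`; printing the returned body is the point of the exercise.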

...and the error:

```
Traceback (most recent call last):
  File "./workbench", line 963, in <module>
    create()
  File "./workbench", line 165, in create
    if config['subdelimiter'] in row[custom_field]:
TypeError: argument of type 'bool' is not iterable
```
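The traceback means `row[custom_field]` held a bool instead of the cell's string when line 165 ran its `in` test. Why it became a bool is not established in this thread; one plausible assumption is that a failed term lookup or creation left `False` in the row. A minimal, hypothetical guard (the function name and the `|` default subdelimiter are assumptions) looks like this:

```python
def has_subdelimiter(value, subdelimiter="|"):
    """Return True if a CSV cell contains the subdelimiter.

    Mirrors the test on line 165 of ./workbench but refuses non-string values,
    since using `in` on a bool raises TypeError.
    """
    if not isinstance(value, str):
        return False
    return subdelimiter in value


# Reproduce the original failure: a bool where the cell's string should be.
try:
    _ = "|" in False
except TypeError as err:
    print("TypeError:", err)

print(has_subdelimiter("person:Jane Doe|person:John Roe"))  # True
print(has_subdelimiter(False))                               # False
```

A guard like this only hides the symptom; the underlying question is still why the value stopped being a string.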

I'm not sure why Workbench ``--check`` comments on the "family" vocabulary, because the csv doesn't reference it. The only thing I can think of is that ``field_subjects_name`` is not the right field to be using, but it seems right according to the documentation.

Here's the csv (sorry, the relevant term is in the last field): https://gist.github.com/username_0/ae8b52c02b2bfd3467e3c15df7087b19

And then the config is:

```yaml
task: create
host: "http://localhost:8000"
username: admin
password: <PASSWORD>
input_dir: testBatch_02_clean
input_csv: barneberg_forTesting_002_as-imageObjects.csv
allow_adding_terms: true
```

Answers:

username_1: That misleading logging is a known issue (#194 to be specific). Drupal's Views REST response returns the same result for vocabularies that are empty as it does for ones that aren't enabled in the View, hence the vague wording of the warning. I need to filter out vocabularies that are referenced by the field being checked but are not namespaced for new terms. That would eliminate most occurances of that warning message while leaving it present when it's important that the user checks that vocabulary. (I think, I logged the issue but haven't gotten to resolving it yet.)

The 422 errors I can't explain. Another user has reported them when trying to create terms before, but I have not been able to replicate or track down what is going on. Which baffles me. Coincidentally (?) they saw them with the Person vocabulary as well. I have not seen that Python `bool` error before though. Can you share your config and CSV with me so I can try to replicate what you are seeing?

username_0: thanks @username_1, let me know if I can provide any more info.

username_1: Will do. I'm going to dig into this over the weekend.

username_1: @username_0 can you export the configuration of your Person vocabulary for me and paste it into a comment here? To to that, go to `/admin/config/development/configuration/single/export` and choose "Taxonomy vocabulary" in the "Configuration type" list, and then "Person (person)" in the "Configuration name" list.

username_0: Here's the Person vocabulary configuration:

```yaml
uuid: 516861d0-dcbe-40ce-b1b3-cbca5b89695e
langcode: en
status: true
dependencies: { }
_core:
  default_config_hash: GAHBiBARA1hdVQoJq1P1oyK47vGxwTr3vyiPsnwqD44
name: Person
vid: person
description: 'An individual of the human species. '
weight: 0
```

username_1: @username_0 haven't forgotten about this, just swamped with day job and am brain dead in evenings. Will dig in over the weekend.

username_1: To try to replicate this problem, I created 1000 nodes with new Person terms as subjects (933 new terms total) without a failure. My CSV file was pretty simple however, with only an id, title, and field_subject field.

I then spun up a new Islandora 8 box and tried to ingest the same CSV and all of the new terms failed to be created.

Can you check `/admin/reports/status` to see what version of Drupal you're running? The version where the terms are failing for me is 8.8.5. The version where I can't get the terms to fail is 8.9.12.

username_1: Following up on my comment ^ from last night, I just installed the current version of the Islandora Playbook, installed the Islandora Workbench Integration module, and used Workbench to ingest (without any HTTP 422 taxonomy terms) the same set of nodes with new `person:` values in `field_subject`. That Islandora is running Drupal 9.2.0-dev.

I'm really hoping the failures are specific to the earlier version of Drupal, since otherwise I can't seem to replicate the problem. The only time I have seen it was when I used Workbench against Drupal 8.8.5.

Would you be interested in trying to ingest your example CSV (or a subset of it since the Playbook doesn't have all your fields) into my new instance to see what happens? It's on the public web; I could provide details to you in Slack.

username_0: I'm running 8.8.5. I'm definitely open to ingesting a modified CSV into your new instance, and will keep an eye on slack for the details. Thanks!

username_1: OK to close this?

username_0: Yes! :)

Status: Issue closed

|

ant-design/ant-design | 161016419 | Title: Tooltip does not disappear normally when the Button is disabled.

Question:

username_0:

Screenshot above. Elsewhere, a Tooltip disappears automatically once the pointer leaves the component, but on a disabled Button it no longer disappears. Test code:

```jsx
<Tooltip title="Tooltip after disabling">
  <Button disabled>Disabled button</Button>
</Tooltip>
```

Originally I was actually using `<Tooltip title="Edit"><Button type="ghost" shape="circle" icon="edit" /></Tooltip>`; I only rewrote it in the form above later, while testing.

Answers:

username_1: #1816

Status: Issue closed

|

flutter/flutter-intellij | 537405222 | Title: "Wrap with Center" and "Wrap with Padding" are not active in Flutter Outline Tool panel

Question:

username_0: ## Steps to Reproduce

I tried to wrap a Row widget with a Center (or Padding) widget using the button in the Flutter Outline tool panel, but the button is not active.

## Version info

```
C:\src\flutter\bin\flutter.bat doctor --verbose
[√] Flutter (Channel stable, v1.9.1+hotfix.6, on Microsoft Windows [Version 10.0.18363.476], locale en-US)
    • Flutter version 1.9.1+hotfix.6 at C:\src\flutter
    • Framework revision 68587a0916 (3 months ago), 2019-09-13 19:46:58 -0700
    • Engine revision b863200c37
    • Dart version 2.5.0

[√] Android toolchain - develop for Android devices (Android SDK version 29.0.2)
    • Android SDK at C:\Users\xxx\AppData\Local\Android\sdk
    • Android NDK location not configured (optional; useful for native profiling support)
    • Platform android-29, build-tools 29.0.2
    • Java binary at: C:\Program Files\Android\Android Studio\jre\bin\java
    • Java version OpenJDK Runtime Environment (build 1.8.0_202-release-1483-b03)
    • All Android licenses accepted.

[√] Android Studio (version 3.5)
    • Android Studio at C:\Program Files\Android\Android Studio
    • Flutter plugin version 42.1.1
    • Dart plugin version 191.8593
    • Java version OpenJDK Runtime Environment (build 1.8.0_202-release-1483-b03)

[√] Connected device (1 available)
    • Google Pixel 2 • 192.168.226.102:5555 • android-x86 • Android 9 (API 28)

• No issues found!
Process finished with exit code 0
```

Answers:

username_1: You need to define

`factory MyWidget.forDesignTime() `

inside of your DicePage widget.

see [Live Widget Preview](https://medium.com/flutter/flutter-outline-hot-reload-and-the-implementation-of-a-live-widget-preview-69abd39aa3bb)

Status: Issue closed

|

dotnet/docs | 1149219539 | Title: Not really an issue - Spanish Version

Question:

username_0: Hi!

Is there some kind of collaboration to translate these pages?

I'm learning, and I'd like to help translate these pages.

I'm from Argentina, and one of the biggest barriers to accessing this type of content in Latin America is the language.

---

#### Document details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 24c8353f-69e7-9068-9b18-df0198a1b122

* Version Independent ID: 823031fe-c8a4-69cd-2b1a-9faacd282aab

* Content: [Classes and objects - C# Fundamentals tutorial](https://docs.microsoft.com/es-mx/dotnet/csharp/fundamentals/tutorials/classes)

* Content Source: [docs/csharp/fundamentals/tutorials/classes.md](https://github.com/dotnet/docs/blob/main/docs/csharp/fundamentals/tutorials/classes.md)

* Product: **dotnet-csharp**

* Technology: **csharp-fundamentals**

* GitHub Login: @username_1

* Microsoft Alias: **wiwagn**

Answers:

username_1: Hi @username_0

I'm adding @username_2 and @juricgit to this thread. They work on localization and community contributors, respectively. They can help point you to how to get involved.

username_2: @username_0 Thank you for your offer. We are looking into this missing translation.

[FEEDBACK 563566](https://ceapex.visualstudio.com/CEINTL/_workitems/edit/563566)

username_2: The page is localized. |

ScoopInstaller/Main | 1040161413 | Title: lynx: SSL error: Can't find common name in certificate

Question:

username_0: ## Bug Report

**Package Name:** lynx

### Current Behaviour

Websites using HTTPS do not open at all.

### Expected Behaviour

All websites should open.

### Additional context/output

The dependency `cacert` might be the cause of the problem.

```

❯ lynx

Looking up lynx.invisible-island.net

Making HTTPS connection to lynx.invisible-island.net

lynx: Can't access startfile https://lynx.invisible-island.net/

```
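The title reports "Can't find common name in certificate". One way to tell whether the server certificate really lacks a common name, rather than lynx's OpenSSL build failing to read it, is to inspect the certificate with a different TLS client. A minimal diagnostic sketch using Python's standard `ssl` module follows; it is an aside for troubleshooting, unrelated to the Scoop manifest itself, and both function names are illustrative.

```python
import socket
import ssl


def certificate_names(cert):
    """Return (common_names, dns_alt_names) from an ssl getpeercert() dict."""
    common_names = [
        value
        for rdn in cert.get("subject", ())
        for key, value in rdn
        if key == "commonName"
    ]
    alt_names = [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]
    return common_names, alt_names


def fetch_certificate(host, port=443, timeout=10.0):
    """Connect with normal certificate verification and return the peer certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

For example, `print(certificate_names(fetch_certificate("lynx.invisible-island.net")))` would show whether that host's certificate carries a CN and which DNS names it lists in subjectAltName.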

### Possible Solution

<!--- Only if you have suggestions on a fix for the bug -->

### System details

**Windows version:** 10.0.19043.1288

**OS arch (32 or 64 bit):** 64

**PowerShell version:** 7.1.5

**Additional software:** none

Answers:

username_0: It has been like that for quite some time - https://github.com/ScoopInstaller/Main/pull/512#pullrequestreview-308197679 - I'm not sure it will work in stable either.

The actual problem might be this, as mentioned on the Lynx homepage:

The manifest currently downloads OpenSSL 1.1.1, but that version does not seem to be supported. And OpenSSL 1.1.0 is hard to find anywhere.

username_1: You're right. Just tested the stable release with OpenSSL 1.1.1l and it doesn't work. Looks like there are security vulnerabilities with version 1.1.0 so that's why it's hard to find - I don't recommend we go looking for it and introduce vulnerabilities for scoop users! So, it looks like it's up to lynx to add support for 1.1.1 which was released 11 September 2018. Or better yet the latest version 3.0.0 which was recently released (7 September 2021).

username_0: Maybe we can ask the author what's going on - would you be willing to file a report in Lynx? (Ref: https://lists.gnu.org/archive/html/lynx-dev)

username_1: It looks like someone asked a similar question just over a year ago: https://lists.gnu.org/archive/html/lynx-dev/2020-09/msg00005.html

And this was the author's reply: https://lists.gnu.org/archive/html/lynx-dev/2020-09/msg00006.html