repo_name | issue_id | text |

|---|---|---|

prisma/migrate | 730209386 | Title: Error: Generic error: The datasource db already exists in this Schema. It is not possible to create it once more.

Question:

username_0:

## Bug description

Since I updated my Prisma versions, I've been having problems migrating an existing schema that had clearly been working before.

## How to reproduce

run

```sh

$ prisma migrate save --experimental

```

throws

```sh

yarn dev:migrate

yarn run v1.22.4

$ prisma migrate save --experimental && prisma migrate up --experimental

Environment variables loaded from prisma/.env

Prisma Schema loaded from prisma/schema.prisma

Error: Generic error: The datasource db already exists in this Schema. It is not possible to create it once more.

error Command failed with exit code 1.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

```


## Expected behavior

The migration should complete successfully without errors.

## Prisma information


## Environment & setup

```sh

❯ prisma -v

Environment variables loaded from prisma/.env

@prisma/cli : 2.9.0

@prisma/client : 2.9.0

Current platform : debian-openssl-1.1.x

Query Engine : query-engine 369b3694b7edb869fad14827a33ad3f3f49bbc20 (at ../../../../../opt/node/lib/node_modules/@prisma/cli/query-engine-debian-openssl-1.1.x)

Migration Engine : migration-engine-cli 369b3694b7edb869fad14827a33ad3f3f49bbc20 (at ../../../../../opt/node/lib/node_modules/@prisma/cli/migration-engine-debian-openssl-1.1.x)

Introspection Engine : introspection-core 369b3694b7edb869fad14827a33ad3f3f49bbc20 (at ../../../../../opt/node/lib/node_modules/@prisma/cli/introspection-engine-debian-openssl-1.1.x)

Format Binary : prisma-fmt 369b3694b7edb869fad14827a33ad3f3f49bbc20 (at ../../../../../opt/node/lib/node_modules/@prisma/cli/prisma-fmt-debian-openssl-1.1.x)

Studio : 0.296.0

Preview Features : transactionApi

```

- OS: Linux (Ubuntu 20.04)

- Database: PostgreSQL

- Node.js version: v14.8.0

- Prisma version: 2.9.0

Answers:

username_0: ### Work Around

I worked around it by deleting the `_Migration` table in my DB and the `migrations` folder in the Prisma directory, but I still don't know why it stopped migrating automatically.

username_1: Thanks for taking the time to report this issue!

We've recently released a Prisma Migrate Preview ([2.13.0 release notes](https://github.com/prisma/prisma/releases/tag/2.13.0)), which has quite a few changes compared to the previous experimental version. We believe this issue is no longer relevant in this new version, so we are closing this.

We would encourage you to try out Prisma Migrate Preview. If you come across this or any other issue in the preview version, please feel free to open a [new issue in prisma/prisma](https://github.com/prisma/prisma/issues/new/choose).

For general feedback on Prisma Migrate Preview, feel free to chime in [on this issue](https://github.com/prisma/prisma/issues/4531).

Status: Issue closed

|

vapor/redis | 482823542 | Title: Promises for commands in flight do not return when the connection is dropped

Question:

username_0: Hey @username_1. I am using v3.4.0 (the Vapor 3.0/Redis NIO implementation) and noticed that when requests are in flight and the connection gets dropped, the promise is not getting fulfilled with an error (or anything for that matter), so I never know it happened. The system just hangs.

Easiest way to reproduce is to do a long "brpop" command and kill the connection. You'll see that no error is thrown and no response is received. See below

```swift

print("DEBUG: sending brpop request")

redisClient.send(RedisData.array(["brpop", "queue_key", "5"].map { RedisData(bulk: $0) })).do { data in

print("DEBUG: received data \(data)")

}.catch { error in

print("DEBUG: received error \(error)")

}

```

If I wait the 5 seconds and let the timeout expire, I will get "nil" back as expected. If I kill the connection before that, I get nothing back.

As a workaround, I am going to create a delayed task that will fulfill the promise with an error if I get nothing back by the time it runs, and then cancel it if I do get something back. This feels hacky/expensive but will get the job done for now.

I don't know the NIO code very well, but is there a way to clear out pending promises with an error when the connection closes?

Answers:

username_0: I got a chance to look at this. I was able to resolve this at the RedisClient level. I did the following

- In RedisClient -> Send
  - Store the promise when it is created
    - Note I used a dictionary since the array "removeObject" is now a pain in Swift
  - Remove the promise when it is fulfilled (future.always)
- In RedisClient -> Init -> channel.closeFuture.always
  - Iterate through the stored promises and send a "ChannelError"
  - Empty the storage object

Diff below

```

bash-3.2$ git diff

diff --git a/Sources/Redis/Client/RedisClient.swift b/Sources/Redis/Client/RedisClient.swift

index 177277a..901ec86 100644

--- a/Sources/Redis/Client/RedisClient.swift

+++ b/Sources/Redis/Client/RedisClient.swift

@@ -20,7 +20,10 @@ public final class RedisClient: DatabaseConnection, BasicWorker {

/// The channel

private let channel: Channel

-

+

+ /// Stores the inflight promises so they can be fulfilled when the channel drops

+ var inflightPromises: [String:EventLoopPromise<RedisData>] = [:]

+

/// Creates a new Redis client on the provided data source and sink.

init(queue: RedisCommandHandler, channel: Channel) {

self.queue = queue

@@ -28,6 +31,12 @@ public final class RedisClient: DatabaseConnection, BasicWorker {

self.extend = [:]

self.isClosed = false

channel.closeFuture.always {

+ // send closed error for the promises that have not been fulfilled

+ for promise in self.inflightPromises.values {

+ promise.fail(error: ChannelError.ioOnClosedChannel)

+ }

+ self.inflightPromises.removeAll()

+

self.isClosed = true

}

}

@@ -55,6 +64,13 @@ public final class RedisClient: DatabaseConnection, BasicWorker {

// create a new promise to fulfill later

let promise = eventLoop.newPromise(RedisData.self)

+ // logic to store in-flight requests

+ let key = UUID().uuidString

+ self.inflightPromises[key] = promise

+ promise.futureResult.always {

+ self.inflightPromises.removeValue(forKey: key)

+ }

+

// write the message and the promise to the channel, which the `RequestResponseHandler` will capture

return self.channel.writeAndFlush((message, promise))

.flatMap { return promise.futureResult }

```
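The bookkeeping in the diff above can be sketched independently of Vapor. The following is a minimal illustration using Python's `asyncio`, not the Redis client's code: the `InflightTracker` class and its method names are made up for this example, and an `asyncio.Future` stands in for the NIO promise.

```python
import asyncio
import uuid


class InflightTracker:
    """Hypothetical helper: remembers pending futures so they can be failed on close."""

    def __init__(self):
        self._inflight = {}

    def register(self, future):
        # A random key plays the role of the UUID string used in the diff.
        key = uuid.uuid4().hex
        self._inflight[key] = future
        # Forget the future as soon as it completes, whatever the outcome.
        future.add_done_callback(lambda _f: self._inflight.pop(key, None))

    def fail_all(self, error):
        # Take a snapshot and clear first, because done-callbacks also touch the dict.
        pending = list(self._inflight.values())
        self._inflight.clear()
        for future in pending:
            if not future.done():
                future.set_exception(error)


async def demo():
    loop = asyncio.get_running_loop()
    tracker = InflightTracker()
    reply = loop.create_future()  # stands in for the promise of a long "brpop"
    tracker.register(reply)
    # Simulate the connection dropping before any reply arrives.
    tracker.fail_all(ConnectionError("connection closed"))
    try:
        await reply
    except ConnectionError as exc:
        return f"failed: {exc}"
    return "completed"


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The point of the pattern is that the caller always gets an answer: either the real reply or an error raised when the connection goes away, so nothing hangs.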

@username_1 If this solution is acceptable, I'll create a PR accordingly. Let me know what you think

username_0: @username_1 I created a PR for this. Use it if you desire. I needed to fix it for my needs regardless.

username_1: @username_0 Sorry for the extremely long delay in response - August has been way too busy for me.

I left a comment on the PR of where the code can live. The primary thing is the handler is missing a good implementation for either `channelInactive` or something else to respond to the channel now closing - which is honestly a problem upstream w/ SwiftNIO Extras as well

username_0: @username_1 That was what I was looking for! I knew the in-flight promises were stored somewhere already. I just needed to go a level deeper in the code. Let me know if my modified code is correct. And no problem being busy. It happens to all of us. |

MasoniteFramework/core | 455003897 | Title: Explicit Python3 and Pip3 in Makefile

Question:

username_0: Just looked at the Makefile and realized something: all the python and pip commands are of the python 2 and pip 2 convention. Due to some weirdness in the Python community, `python` and `pip` are not `python3` and `pip3`. For instance, MacOS' default python installation is 2.7.

Solution: replace all instances of `python` and `pip` with `python3` and `pip3`.

Answers:

username_1: Those commands assume that you have activated a virtual environment for Python 3.

username_0: Not all people use virtual environments. Once again, my coworkers are not that fond of them. There are also people who don't know to use a virtual environment.

username_2: This would really only be for Masonite core development / contributing. Not all people use Masonite, but also not all applications use `python3` aliases. It depends on how you installed Python.

So the solution here is really to be inside a virtual environment when using the command. Either solution will break for some people: it will break either for those who don't use a virtual environment or for those who don't have the `python3` alias.

Status: Issue closed

|

typestack/class-transformer | 671931857 | Title: Is it possible to do deserialization from string to class without writting @transform

Question:

username_0: **I was trying to...**

Let's imagine that I have a class like this:

```

export default class SecureString {

private readonly value: string;

private readonly encodedValue: string;

constructor(original: string) {

this.value = transformValue(original);

this.encodedValue = transformEncodedValue(original);

}

}

```

and I want to use it in another class:

```

export default class Test {

password: SecureString;

}

```

Then, if I try `plainToClass(Test, {password: 'foo'})`, it will not transform password into the SecureString class.

```

const transformed = plainToClass(Test, {password: 'foo'});

console.log(transformed);

// prints: Test { password: 'foo' }

```

I can set transform decorator:

```

export default class Test {

@Transform((value) => new SecureString(value))

password: SecureString;

}

```

But then I have to write this boilerplate for each SecureString field.

How can I write my code without this boilerplate?

I also tried adding Transform to the fields in SecureString:

```

export default class SecureString {

@Transform((original: string) => transformValue(original), { toClassOnly: true })

private readonly value: string;

@Transform((original: string) => transformEncodedValue(original), { toClassOnly: true })

private readonly encodedValue: string;

constructor(original: string) {

this.value = transformValue(original);

this.encodedValue = transformEncodedValue(original);

}

}

```

But the result is the same.

**The problem:**

Deserialise a string into a class that is nested in another class.

Answers:

username_0: The best option I have now is to create a custom decorator:

```

export function ToSecureString() {

return Transform((value) => new SecureString(value));

}

```

username_1: As you mentioned in your second reply, you need a custom decorator for this.

Status: Issue closed

|

bgruening/docker-galaxy-stable | 217612700 | Title: Unable to find config file './dependency_resolvers_conf.xml'

Question:

username_0: Hi Bjoern,

I just accidentally upgraded to a newer version of your image.

<img width="803" alt="screen shot 2017-03-28 at 12 01 50 pm" src="https://cloud.githubusercontent.com/assets/2761597/24415102/5c7389ce-13ae-11e7-8af7-6864b8486a85.png">

It seems like the galaxy containers won't start -- and it seems like the error is:

```

galaxy.tools.deps DEBUG 2017-03-28 15:12:24,621 Unable to find config file './dependency_resolvers_conf.xml'

```

The documentation about [dependency resolvers](https://docs.galaxyproject.org/en/master/admin/dependency_resolvers.html) doesn't seem to suggest that I would *need* to edit anything or add such a file. The documentation about this repo doesn't seem to suggest I would need to add one either. However, other [flavors](https://github.com/fasrc/fasrc-galaxy) of docker-galaxy seem to have dependency resolver files, but when I add one to the export mount point, I still get the same error. How do I fix this?

Answers:

username_0: @username_1 might have ideas.

username_1: hmmm… i don't see where we copied that in, but it's in the repo. maybe it's a default config. have you checked that the config file's readable by the galaxy user? that file may also not be needed by current versions of the container/galaxy. i'm not an expert in galaxy config, by any means.

username_2: This is not an error; it's more of a Galaxy warning message. At startup of a fresh container, Galaxy should look for the *.sample file, not this file.

@username_0 look for another error. We have some reports of problems related to the storage backend in Docker; try changing it. The image is working and starting on Travis, Quay.io and Dockerhub.

username_0: Thanks @username_2,

It looks like when I tried to add my own dependency resolver file, I get this new error as well:

```

Traceback (most recent call last):

File "lib/galaxy/webapps/galaxy/buildapp.py", line 55, in paste_app_factory

app = galaxy.app.UniverseApplication( global_conf=global_conf, **kwargs )

File "lib/galaxy/app.py", line 98, in __init__

self._configure_toolbox()

File "lib/galaxy/config.py", line 927, in _configure_toolbox

self.reload_toolbox()

File "lib/galaxy/config.py", line 911, in reload_toolbox

self.toolbox = tools.ToolBox( tool_configs, self.config.tool_path, self )

File "lib/galaxy/tools/__init__.py", line 192, in __init__

tool_conf_watcher=tool_conf_watcher

File "lib/galaxy/tools/toolbox/base.py", line 1068, in __init__

self._init_dependency_manager()

File "lib/galaxy/tools/toolbox/base.py", line 1081, in _init_dependency_manager

self.dependency_manager = build_dependency_manager( self.app.config )

File "lib/galaxy/tools/deps/__init__.py", line 41, in build_dependency_manager

dependency_manager = DependencyManager( **dependency_manager_kwds )

File "lib/galaxy/tools/deps/__init__.py", line 84, in __init__

self.dependency_resolvers = self.__build_dependency_resolvers( conf_file )

File "lib/galaxy/tools/deps/__init__.py", line 193, in __build_dependency_resolvers

return self.__default_dependency_resolvers()

File "lib/galaxy/tools/deps/__init__.py", line 202, in __default_dependency_resolvers

CondaDependencyResolver(self),

File "lib/galaxy/tools/deps/resolvers/conda.py", line 62, in __init__

self._setup_mapping(dependency_manager, **kwds)

File "lib/galaxy/tools/deps/resolvers/__init__.py", line 83, in _setup_mapping

mappings.extend(MappableDependencyResolver._mapping_file_to_list(mapping_file))

File "lib/galaxy/tools/deps/resolvers/__init__.py", line 88, in _mapping_file_to_list

with open(mapping_file, "r") as f:

IOError: [Errno 2] No such file or directory: './config/local_conda_mapping.yml.sample'

```

Does that give you any clues?

I'm getting two warnings from tools which recur:

```

galaxy.tools WARNING 2017-03-28 16:08:33,205 Tool toolshed.g2.bx.psu.edu/repos/devteam/bamtools_filter/bamFilter/0.0.2: a when tag has not been defined for 'rule_configuration (rules_selector) --> false', assuming empty inputs.

galaxy.tools.parameters.dynamic_options WARNING 2017-03-28 16:08:33,528 Data table named 'gff_gene_annotations' is required by tool but not configured

```

I see this error near the top of the logs as well:

```

==> /home/galaxy/logs/uwsgi.log <==

return self.__default_dependency_resolvers()

File "lib/galaxy/tools/deps/__init__.py", line 202, in __default_dependency_resolvers

CondaDependencyResolver(self),

File "lib/galaxy/tools/deps/resolvers/conda.py", line 62, in __init__

self._setup_mapping(dependency_manager, **kwds)

File "lib/galaxy/tools/deps/resolvers/__init__.py", line 83, in _setup_mapping

mappings.extend(MappableDependencyResolver._mapping_file_to_list(mapping_file))

File "lib/galaxy/tools/deps/resolvers/__init__.py", line 88, in _mapping_file_to_list

with open(mapping_file, "r") as f:

IOError: [Errno 2] No such file or directory: './config/local_conda_mapping.yml.sample'

```

Do any of those look particularly suspicious?

username_0: I've switched back to an older version (2 months ago) and it runs, everything else held equal.

username_2: You are running it with an export directory?

username_0: yes.

username_2: Jupp, follow the instructions :)

`IOError: [Errno 2] No such file or directory: './config/local_conda_mapping.yml.sample'` this file is new and you need to update it.

username_0: Thanks Bjoern!

Status: Issue closed

|

rust-random/rand | 684588943 | Title: rand_distr no_std

Question:

username_0: Can you please update rand_distr on crates.io? The version on GitHub has a "std" feature and can be used without it, but the version on crates.io doesn't have any features.

Answers:

username_1: Possibly. We were going to wait until `rand` 0.8 was out, but that could still be a while (unknown). With [this commit](https://github.com/rust-random/rand/commit/0c9e2944055610561eef74247614319301cca1f6) `rand_distr`'s tests pass, so I guess so? @username_2?

There's still a changelog to write.

username_2: I suppose we could just release `rand_distr` 0.3, and release 0.4 after rand 0.8.

@username_1 What changelog is yet to be written? I think `CHANGELOG.md` is up to date.

username_1: Oh, it might be (the changelog). :+1:

*Maybe* I'll get to this later today.

username_1: There were a couple of things missed in the changelog. Done.

@username_2 there is no PR because this must be on a [new branch](https://github.com/rust-random/rand/tree/rand_distr). Can you review from here? Once done either of us can make the release.

Then the changes to the changelog should be merged back into master, but not all the changes to `Cargo.toml`...

username_2: @username_1 Looks good to me!

username_1: Published. @username_0 can you test?

username_2: @username_1 I opened #1024 for merging the updated changelog into master.

Status: Issue closed

username_0: Sorry for the absence. Yeah, it seems to work fine with no_std. Thanks for the help. |

alextoind/serial | 655383081 | Title: Inconsistency between Unix and Windows implementations

Question:

username_0: During #22 it came out that some functions behave differently between Unix and Windows.

The library interface should be exactly the same for both the implementations.

Status: Issue closed

Answers:

username_0: Still missing the refactoring of `reconfigurePort()`.


Status: Issue closed

|

latex3/latex2e | 713580947 | Title: \bigskip, \medskip, \and \smallskip

Question:

username_0: ## Brief outline of the enhancement

**LaTeX2e generally cannot add new features without an extreme amount of care to accommodate backwards compatibility. Please do not be offended if your request is closed for being infeasible.**

## Minimal example showing the current behaviour

```latex

\RequirePackage{latexbug} % <--should be always the first line (see CONTRIBUTING)!

\documentclass{article}

\renewcommand\bigskip[1][1]{\vspace{#1\bigskipamount}}

% instead of \def\bigskip{\vspace\bigskipamount}

\begin{document}

foo

\bigskip

bar

\bigskip[2]

baz

\end{document}

```

It would be nice if the three macros accepted an optional argument, which would be easier to write than

`\vspace{2\bigskipamount}`. The same goes for the other two macros.

Answers:

username_1: The problem with this is that it is a trailing optional argument, so

```

\bigskip

[put figure here]

\bigskip

```

would suddenly blow up, and that isn't a totally obscure scenario.

username_2: Also, the meaning of the integer parameter is not very intuitive.

A better solution would be a user-shorthand macro/command with a mandatory argument, something like `\varbigskip {<scale>}`.

Status: Issue closed

username_3: Note also the definition would need to be more complicated than the suggested

```

\renewcommand\bigskip[1][1]{\vspace{#1\bigskipamount}}

```

as that loses the stretch and shrink components and would make `\bigskip` a fixed length

username_2: Sure, that is a silly nuisance factor!

You will need a little arithmetic to 'preserve the glue'! |

cisco/openh264 | 190356741 | Title: Decoding in VLC

Question:

username_0: Hi all,

I built the codec for macOS and linked it to a VLC plugin project (this project: https://github.com/ASTIM/OpenH264-VLC-Plugin).

With this, and using the VLC parameter --demux=h264, I can correctly play a raw h264 file.

The problem is decoding the h264 stream provided via RTSP by my Axis M1125. For every NAL I get error number 16. The following is the SDP provided by the Axis.

How can I decode this? What kind of NALs does the OpenH264 codec expect?

Thanks

```

v=0

o=- 3253925629317495365 1 IN IP4 172.16.16.129

s=Session streamed with GStreamer

i=rtsp-server

t=0 0

a=tool:GStreamer

a=type:broadcast

a=range:npt=now-

a=control:rtsp://172.16.16.129:554/axis-media/media.amp?videocodec=h264

m=video 0 RTP/AVP 96

c=IN IP4 0.0.0.0

b=AS:50000

a=rtpmap:96 H264/90000

a=fmtp:96 packetization-mode=1;profile-level-id=4d0029;sprop-parameter-sets=Z00AKeKQDwBE/LgLcBAQGkHiRFQ=,aO48gA==

a=control:rtsp://172.16.16.129:554/axis-media/media.amp/stream=0?videocodec=h264

a=framerate:30.000000

a=transform:-1.000000,0.000000,0.000000;0.000000,-1.000000,0.000000;0.000000,0.000000,1.000000

```

Answers:

username_0: I apologize,

At the end of the day I discovered that it is enough to open the URL with `&spsppsenabled=yes`.

What I do not understand is why I get a lot of errors during buffering:

many `dsDataErrorConcealed` and 0x22 ...

username_1: VLC likely doesn't see (or doesn't support) sprop-parameter-sets. You could emulate it by decoding the parameter sets and inserting them before any data - it comprises a PPS and an SPS.
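As a rough sketch of that suggestion, the snippet below base64-decodes the `sprop-parameter-sets` value from the SDP above and prefixes each NAL unit with a start code so the result can be fed to the decoder ahead of the stream data. It assumes the decoder wants an Annex B byte stream (start-code-prefixed NAL units); the function names are illustrative only and not part of VLC or OpenH264.

```python
import base64

# Annex B start code placed in front of every NAL unit (assumed input format).
ANNEX_B_START_CODE = b"\x00\x00\x00\x01"

# Value copied from the a=fmtp line of the SDP in the question.
SPROP = "Z00AKeKQDwBE/LgLcBAQGkHiRFQ=,aO48gA=="


def sprop_to_annex_b(sprop_parameter_sets):
    """Decode the comma-separated base64 parameter sets into start-code-prefixed bytes."""
    nal_units = [base64.b64decode(part) for part in sprop_parameter_sets.split(",")]
    return b"".join(ANNEX_B_START_CODE + nal for nal in nal_units)


def nal_unit_types(sprop_parameter_sets):
    """Return the nal_unit_type (low 5 bits of the first byte) of each parameter set."""
    return [base64.b64decode(part)[0] & 0x1F for part in sprop_parameter_sets.split(",")]


if __name__ == "__main__":
    print(nal_unit_types(SPROP))        # 7 is an SPS, 8 is a PPS
    print(sprop_to_annex_b(SPROP).hex())
```

For this SDP the two entries decode to an SPS followed by a PPS, which is what the decoder needs to see before the first slice.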

username_0: Thank you for the answer. I'm new to h264, so I will check those parameters and let you know.

username_2: It seems that your question has been resolved, so I am closing this issue. If you still have questions, please feel free to submit a new issue. Thanks!

Status: Issue closed

|

backend-br/vagas | 1036699622 | Title: Java at Base2

Question:

username_0: <h1>

<a id="user-content-id" class="anchor" href="#id" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>ID</strong>

</h1>

<ul>

<li>434611590</li>

</ul>

<h1>

<a id="user-content-descrição-da-vaga" class="anchor" href="#descri%C3%A7%C3%A3o-da-vaga" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Job description</strong>

</h1>

<ul>

<li>Java</li>

</ul>

<h1>

<a id="user-content-requisitos-da-vaga-obrigatórios" class="anchor" href="#requisitos-da-vaga-obrigat%C3%B3rios" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Mandatory job requirements</strong>

</h1>

<ul>

<li>

<ul>

<li>Experience with Java 8 or later</li>

</ul>

</li>

</ul>

<ul>

<li>Strong experience in multi-tier/enterprise Java development</li>

<li>Strong systemic view (software development lifecycle)</li>

<li>Oracle Database 11 or later</li>

<li>Experience with SQL (standard or Oracle PL/SQL)</li>

<li>JPA/Hibernate</li>

<li>JUnit</li>

<li>SonarQube</li>

<li>Design Patterns (MVC, VO, DAO, BO, Factory, Singleton, etc.)</li>

<li>Eclipse (IDE)</li>

<li>Experience with REST and SOAP APIs</li>

<li>Concern for code quality and functionality</li>

</ul>

<h1>

<a id="user-content-requisitos-desejáveis" class="anchor" href="#requisitos-desej%C3%A1veis" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Desirable requirements</strong>

</h1>

<ul>

<li>

<ul>

<li>Knowledge of the CRM domain</li>

</ul>

</li>

</ul>

<ul>

<li>XHTML/HTML5/CSS3/Javascript</li>

<li>JSP/Servlet/JSF</li>

<li>Spring MVC/SpringBoot</li>

<li>Apache Tomcat 6 or later</li>

<li>OpenShift platform</li>

<li>DevOps culture</li>

<li>Docker (containerization)</li>

<li>Maven/Git/GitFlow</li>

<li>Scrum/XP/Agile methodologies</li>

<li>Knowledge of cloud computing (AWS)</li>

</ul>

<h1>

<a id="user-content-atribuições" class="anchor" href="#atribui%C3%A7%C3%B5es" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Responsibilities</strong>

</h1>

[Truncated]

<a id="user-content-como-se-candidatar" class="anchor" href="#como-se-candidatar" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>How to apply</strong>

</h1>

<ul>

<li>Apply through the website <a href="http://www.base2.com.br" rel="nofollow">www.base2.com.br</a> or vagas.base2.com.br</li>

</ul>

<h1>

<a id="user-content-tempo-médio-de-feedbacks" class="anchor" href="#tempo-m%C3%A9dio-de-feedbacks" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Average feedback time</strong>

</h1>

<p>We usually send feedback within 7 days after each process.</p>

<h1>

<a id="user-content-labels" class="anchor" href="#labels" aria-hidden="true"><span aria-hidden="true" class="octicon octicon-link"></span></a><strong>Labels</strong>

</h1>

<ul>

<li>

<p>Mid-level, Senior</p>

</li>

<li>

<p>CLT</p>

</li>

</ul> |

makojs/mako | 239963965 | Title: Switch to ES6 modules

Question:

username_0: _From @username_0 on November 28, 2016 7:38_

The more time goes on, the more I'd like to investigate using ES6 modules, rather than CommonJS. This would drastically affect the internals of this plugin, but ultimately it is the right direction to go in the long run.

I was previously thinking that I'd implement #17 by analyzing the CommonJS outputs, but kept running into blockers due to its less-than-static nature. I recently discovered that rollup/webpack only perform tree-shaking on ES6 modules, since they are static by definition. (This drastically reduces the complexity.)

All in all, I'm not sure what the right approach here is. The general use-case has been relying on code published to npm, which is generally pre-compiled to CommonJS. I also don't want to become too opinionated by bundling babel directly here to support the ES6/CommonJS divide.

It looks like rollup relies on `jsnext:main` in a `package.json` to reference a file that uses `import` and `export`, but I'm worried about an already fractured build process that uses npm code that assumes ES6 because of node 4+. But maybe I can piggy-back on the efforts of rollup and others.

_Copied from original issue: makojs/js#99_

Answers:

username_0: _From @darsain on November 28, 2016 14:16_

Apart from the removal of unused code, the other awesome thing these tools do is inline all module definitions. In other words, instead of mocking the `require()` module system in the build, they inline the exported stuff and thus remove the CommonJS module system tax, which speeds up load times considerably.

So I'd be pretty excited to have this in mako as well :) But personally I'm unfamiliar with how that works in practice in combination with existing stuff in npm, since I haven't really used rollup or webpack yet.

Also, I'm not aware of any consensus yet on how ES modules are going to work in node. I know there has been a ton of back and forth between people who want to make it seamless and those who want custom extensions like `.mjs`, and other horrible solutions. I'm afraid this might lead to some clashes later on, but can't give any specifics. Haven't kept up with it 😢.

|

go-gitea/gitea | 743300562 | Title: Invalid clone URL for SSH in empty repo view if SSH port is not 22

Question:

username_0: - Gitea version (or commit ref): 1.12.5

- Git version: 2.25.1

- Operating system: Ubuntu 20.04

running gitea with docker compose (see below)

- Database (use `[x]`):

- [ ] PostgreSQL

- [ ] MySQL

- [ ] MSSQL

- [x] SQLite

- Can you reproduce the bug at https://try.gitea.io:

- [ ] Yes (provide example URL)

- [x] No (no port number is required)

- Log gist:

`docker-compose.yml`:

```yml

version: "3"

networks:

gitea:

external: false

services:

gitea:

image: gitea/gitea:1

container_name: gitea

environment:

- USER_UID=1000

- USER_GID=1000

- SSH_DOMAIN=localhost:3022

restart: always

networks:

- gitea

volumes:

- ./gitea:/data

- /etc/timezone:/etc/timezone:ro

- /etc/localtime:/etc/localtime:ro

ports:

- "3000:3000"

- "3022:22"

```


## Description

I run Gitea as a container and forward port 3022 to the container's port 22. I've found out that clone URLs provided for my repos are malformed:

```

kacper@kngl:~/git/gitea$ touch README.md

kacper@kngl:~/git/gitea$ git init

Initialized empty Git repository in /home/kacper/git/gitea/.git/

kacper@kngl:~/git/gitea[master]$ git add README.md

kacper@kngl:~/git/gitea[master]$ git commit -m "first commit"

[master (root-commit) 801f90b] first commit

1 file changed, 0 insertions(+), 0 deletions(-)

create mode 100644 README.md

kacper@kngl:~/git/gitea[master]$ git remote add origin git@localhost:3022:Kangel/example.git

kacper@kngl:~/git/gitea[master]$ git push -u origin master

ssh: connect to host localhost port 22: Connection refused

```

[Truncated]

However, if I modify the URL, it works just fine:

```

kacper@kngl:~/git/gitea[master]$ git remote remove origin

kacper@kngl:~/git/gitea[master]$ git remote add origin ssh://git@localhost:3022/Kangel/example.git

kacper@kngl:~/git/gitea[master]$ git push -u origin master

Enumerating objects: 3, done.

Counting objects: 100% (3/3), done.

Writing objects: 100% (3/3), 218 bytes | 218.00 KiB/s, done.

Total 3 (delta 0), reused 0 (delta 0)

remote: . Processing 1 references

remote: Processed 1 references in total

To ssh://localhost:3022/Kangel/example.git

* [new branch] master -> master

Branch 'master' set up to track remote branch 'master' from 'origin'.

```
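The difference between the two remotes above can be sketched in a few lines of Python. This is only an illustration of the URL rules, not Gitea's own code: `ssh_clone_url` is a hypothetical helper. It assumes that the scp-like `user@host:path` form has no place for a port, so a non-default port needs the explicit `ssh://` form.

```python
def ssh_clone_url(user: str, host: str, port: int, repo_path: str) -> str:
    """Build an SSH clone URL for a repository (hypothetical helper)."""
    if port == 22:
        # scp-like syntax: everything after the first colon is treated as
        # the path, so this form cannot carry a port number.
        return f"{user}@{host}:{repo_path}"
    # A non-default port needs the explicit ssh:// URL form.
    return f"ssh://{user}@{host}:{port}/{repo_path}"


print(ssh_clone_url("git", "localhost", 22, "Kangel/example.git"))
print(ssh_clone_url("git", "localhost", 3022, "Kangel/example.git"))
```

With the port kept out of the host name and passed separately, the second call produces the URL form that worked in the session above.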

## Screenshots

Status: Issue closed

Answers:

username_1: You've put a port into the domain name. Please see https://docs.gitea.io/en-us/config-cheat-sheet/#server-server for the setting that controls which SSH port is shown; with that set, the clone URL will be formatted properly. |

numba/numba | 155504371 | Title: LoweringError when creating typed numpy arrays in generators

Question:

username_0: ```

import numba
import numpy

@numba.jit
def test_works():
    a = numpy.empty(3)
    yield 15
test_works()

@numba.jit
def test_fails():
    a = numpy.empty(3, dtype='float64')
    yield 15
test_fails()

```

The first function compiles fine. However, the second raises a TypeError:

```

TypeError Traceback (most recent call last)

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/lowering.py in lower_block(self, block)

195 try:

--> 196 self.lower_inst(inst)

197 except LoweringError:

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/objmode.py in lower_inst(self, inst)

64 if isinstance(inst, ir.Assign):

---> 65 value = self.lower_assign(inst)

66 self.storevar(value, inst.target.name)

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/objmode.py in lower_assign(self, inst)

156 elif isinstance(value, ir.Yield):

--> 157 return self.lower_yield(value)

158 elif isinstance(value, ir.Arg):

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/objmode.py in lower_yield(self, inst)

186 y = generators.LowerYield(self, yp, yp.live_vars | yp.weak_live_vars)

--> 187 y.lower_yield_suspend()

188 # Yield to caller

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/generators.py in lower_yield_suspend(self)

320 if self.context.enable_nrt:

--> 321 self.lower.incref(ty, val)

322

TypeError: incref() takes 2 positional arguments but 3 were given

During handling of the above exception, another exception occurred:

LoweringError Traceback (most recent call last)

<ipython-input-13-2fbb685864c8> in <module>()

12 a = numpy.empty(3, dtype='float64')

13 yield 15

---> 14 test_fails()

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/dispatcher.py in _compile_for_args(self, *args, **kws)

286 else:

287 real_args.append(self.typeof_pyval(a))

--> 288 return self.compile(tuple(real_args))

289

290 def inspect_llvm(self, signature=None):

[Truncated]

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/lowering.py in lower_function_body(self)

181 bb = self.blkmap[offset]

182 self.builder.position_at_end(bb)

--> 183 self.lower_block(block)

184

185 self.post_lower()

/Users/ndcn0236/miniconda3/lib/python3.4/site-packages/numba/lowering.py in lower_block(self, block)

199 except Exception as e:

200 msg = "Internal error:\n%s: %s" % (type(e).__name__, e)

--> 201 raise LoweringError(msg, inst.loc)

202

203 def create_cpython_wrapper(self, release_gil=False):

LoweringError: Failed at object (object mode backend)

Internal error:

TypeError: incref() takes 2 positional arguments but 3 were given

File "<ipython-input-13-2fbb685864c8>", line 13

```

Answers:

username_1: Seems to be fixed in master already.

Status: Issue closed

username_0: You're right, it does indeed work with the newest version. |

ElektraInitiative/libelektra | 455581503 | Title: Prioritization of LCDproc features

Question:

username_0: 1. having the possibility to start LCDproc without any installation/mounting (built-in spec)

2. small binary size

Answers:

username_1: There obviously isn't a general answer.

4 is basically just a lazy way to monitor changes to 2 and 3.

For OpenWRT it probably would be 231 most of the time

For my other systems it will be 321 except for my development workstation, where it is 14.

All these numbers assume that saving one byte in 2 costs one byte in 3. Even on OpenWRT I'd still sacrifice one byte on 2 to save say ten bytes on 3.

In the end there is rarely a true conflict among 2, 3 and 4, and 1 is just a question of having some kind of convenience hack for development builds. (E.g., at the moment we just pass a non-standard configuration file on the command line if it isn't installed into the system yet.)

username_2: IMO installed size would include a separate specification file, if it has to be present, while binary size would not include that. The memory footprint is probably also not proportional (at least not in any obvious way) to the binary size. A load of strings will increase both similarly, a load of `keyNew` calls will increase memory usage much more than binary size (because of the key unescaping logic).

username_1: Yes, of course all these things have to be considered. Still I think comparing binary sizes gave us a lot of useful information. Once the obvious issues are solved, I might move to more advanced benchmarks, that involve actually running the code or building binary packages for various platforms.

username_0: Priorities should be fulfilled now.

Status: Issue closed

|

werf/werf | 847304687 | Title: werf helm dep update fails when secret-values are used

Question:

username_0: When the `.helm/secret-values.yaml` file is present, the command `werf helm dep update .helm` fails. Without `.helm/secret-values.yaml` it works fine.

```

❯ werf helm dep update .helm

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x22099f6]

goroutine 1 [running]:

github.com/werf/werf/pkg/deploy/secrets_manager.(*SecretsManager).GetYamlEncoder(0x0, 0x2e57920, 0xc0008275f0, 0xc000050144, 0x18, 0x0, 0x0, 0x0)

/home/ubuntu/actions-runner/_work/werf/werf/pkg/deploy/secrets_manager/secret_manager.go:27 +0x26

github.com/werf/werf/pkg/deploy/helm/chart_extender/helpers/secrets.(*SecretsRuntimeData).DecodeAndLoadSecrets(0xc000cdaba0, 0x2e57920, 0xc0008275f0, 0xc00065f900, 0xa, 0x10, 0x0, 0x0, 0x0, 0x7ffff29d7bd6, ...)

/home/ubuntu/actions-runner/_work/werf/werf/pkg/deploy/helm/chart_extender/helpers/secrets/secrets_runtime_data.go:31 +0x66e

github.com/werf/werf/pkg/deploy/helm/chart_extender.(*WerfChartStub).ChartLoaded(0xc000114cc0, 0xc00065f900, 0xa, 0x10, 0xa, 0x10)

/home/ubuntu/actions-runner/_work/werf/werf/pkg/deploy/helm/chart_extender/werf_chart_stub.go:73 +0x170

helm.sh/helm/v3/pkg/chart/loader.LoadFiles(0xc00065ed80, 0xa, 0x10, 0x2e7b940, 0xc000114cc0, 0xc000664620, 0x0, 0x0, 0x0)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/pkg/chart/loader/load.go:195 +0x1f6d

helm.sh/helm/v3/pkg/chart/loader.LoadDirWithOptions(0x7ffff29d7bd6, 0x5, 0x2e7b940, 0xc000114cc0, 0xc000664620, 0x0, 0xc00014dac8, 0x40c4c6)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/pkg/chart/loader/directory.go:63 +0x107

helm.sh/helm/v3/pkg/chart/loader.LoadDir(...)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/pkg/chart/loader/directory.go:48

helm.sh/helm/v3/pkg/downloader.(*Manager).loadChartDir(0xc0004afa70, 0x25d9a80, 0xc00061dcc0, 0xc000680000)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/pkg/downloader/manager.go:228 +0x13a

helm.sh/helm/v3/pkg/downloader.(*Manager).Update(0xc0004afa70, 0xc0004afa70, 0x2)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/pkg/downloader/manager.go:154 +0x40

helm.sh/helm/v3/cmd/helm.newDependencyUpdateCmd.func1(0xc000615080, 0xc00068cdc0, 0x1, 0x1, 0x0, 0x0)

/home/ubuntu/go/pkg/mod/github.com/werf/helm/[email protected]/cmd/helm/dependency_update.go:74 +0x1d1

github.com/werf/werf/cmd/werf/helm.NewCmd.func2(0xc000615080, 0xc00068cdc0, 0x1, 0x1, 0x0, 0x0)

/home/ubuntu/actions-runner/_work/werf/werf/cmd/werf/helm/helm.go:162 +0x630

github.com/spf13/cobra.(*Command).execute(0xc000615080, 0xc00068cda0, 0x1, 0x1, 0xc000615080, 0xc00068cda0)

/home/ubuntu/go/pkg/mod/github.com/spf13/[email protected]/command.go:850 +0x453

github.com/spf13/cobra.(*Command).ExecuteC(0xc0000f6000, 0x0, 0xc0000cac30, 0x405b1f)

/home/ubuntu/go/pkg/mod/github.com/spf13/[email protected]/command.go:958 +0x349

github.com/spf13/cobra.(*Command).Execute(...)

/home/ubuntu/go/pkg/mod/github.com/spf13/[email protected]/command.go:895

main.main()

/home/ubuntu/actions-runner/_work/werf/werf/cmd/werf/main.go:64 +0x119

```

```

❯ werf version

v1.2.10+fix21

```

Answers:

username_1: Reproduced, will fix ASAP.

Status: Issue closed

|

adobe/jsonschema2md | 279028750 | Title: Documentation should list where a property has been declared

Question:

username_0: If a property has been declared in a referenced schema, it should be included in full in the current document's documentation, with a note explaining that it is referenced from another schema.

For instance, for `Image`:

```markdown

## modify_date

`modify_date` is referenced from [Asset](asset.md#modify_date)

```

Status: Issue closed |

Azure/autorest-clientruntime-for-java | 441492447 | Title: Class AppServiceMSICredentials doesn't provide a way to pass clientID to call the local MSI endpoint

Question:

username_0: The class AppServiceMSICredentials does not include a definition for passing a clientID of the User-Assigned MSI.

This is a necessary parameter when working with a User-Assigned MSI. See [this documentation](https://docs.microsoft.com/en-us/azure/app-service/overview-managed-identity#using-the-rest-protocol):

Parameter name | In | Description

-- | -- | --

resource | Query | The AAD resource URI of the resource for which a token should be obtained. This could be one of the Azure services that support Azure AD authentication or any other resource URI.

api-version | Query | The version of the token API to be used. "2017-09-01" is currently the only version supported.

secret | Header | The value of the MSI_SECRET environment variable. This header is used to help mitigate server-side request forgery (SSRF) attacks.

**clientid** | **Query** | **(Optional) The ID of the user-assigned identity to be used. If omitted, the system-assigned identity is used.**

Currently using the Java SDK to call the MSI endpoint results in a 400 bad request as it doesn't include the clientid in the query string.

Probably something like this?

```

@Beta
public AppServiceMSICredentials withClientId(String clientId) {
    this.clientId = clientId;
    this.objectId = null;
    this.identityId = null;
    return this;
}

```
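To make the parameter table above concrete, here is a minimal Python sketch of how the request to the local MSI endpoint could be assembled. It is not the SDK's implementation: `build_msi_token_request` is a hypothetical helper and the endpoint URL and secret in the usage lines are placeholders. It assumes `clientid` is simply added to the query string when a user-assigned identity is wanted.

```python
from urllib.parse import urlencode


def build_msi_token_request(endpoint, secret, resource, client_id=None):
    """Return (url, headers) for a token request (hypothetical helper)."""
    # Query parameters taken from the table above.
    params = {"resource": resource, "api-version": "2017-09-01"}
    if client_id is not None:
        # Optional: selects the user-assigned identity; when omitted,
        # the system-assigned identity is used.
        params["clientid"] = client_id
    url = f"{endpoint}?{urlencode(params)}"
    # The MSI_SECRET value travels in the "secret" header.
    headers = {"secret": secret}
    return url, headers


# Placeholder values, for illustration only.
url, headers = build_msi_token_request(
    "http://127.0.0.1:41741/MSI/token/",
    "placeholder-secret",
    "https://vault.azure.net",
    client_id="00000000-0000-0000-0000-000000000000",
)
print(url)
```

In a real App Service environment the endpoint and secret would come from the environment rather than from literals.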

Answers:

username_0: This works when I call explicitly via curl in Kudu. We need a way for the clientid to be passed via Java SDK

username_0: Created a new one here:

https://github.com/Azure/azure-sdk-for-java/issues/4024

Status: Issue closed

|

episerver/EPiServer.Forms.Samples | 251991972 | Title: Add UK datetime format to the forms date picker

Question:

username_0: If you have a look in EpiserverFormsSamples.js, around line 7 there is no support for a UK date format in the DateFormats object.

The following line of code needs adding:

"en-gb": {

"pickerFormat": "dd/mm/yy",

"friendlyFormat": "dd/MM/yyyy"

} |

Pathoschild/Wikimedia-contrib | 321533198 | Title: All tools broken

Question:

username_0: `Fatal error: Invalid serialization data for DateTime object in /mnt/nfs/labstore-secondary-tools-project/meta/git/wikimedia-contrib/tool-labs/backend/modules/Cacher.php on line 153`

This message is appearing on all of your tools since yesterday. For example: https://tools.wmflabs.org/meta/userpages/MarcoAurelio

Any idea what's going on?

Thank you!

Status: Issue closed

Answers:

username_1: Fixed. The errors were caused by a cache file containing invalid data somehow; I cleared the cache and everything should be fine now. Thanks for reporting it! |

filerd002/BattleTank | 316524893 | Title: Unifying game elements, part 1

Question:

username_0: So: practically all the elements that take part in the game have functions such as ``Update()`` or ``Draw()``. It would be good to gather these into an interface, which would make it simpler to manage all the objects that take part in the game.
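The idea can be sketched roughly as follows. The project itself is written in C#; this is a language-neutral illustration in Python, and `GameElement`, `Tank`, `Bullet` and `run_frame` are hypothetical names rather than classes from the repository.

```python
from abc import ABC, abstractmethod


class GameElement(ABC):
    """Common interface for everything that takes part in the game."""

    @abstractmethod
    def update(self, elapsed_seconds: float) -> None:
        """Advance the element's state by the elapsed time."""

    @abstractmethod
    def draw(self) -> None:
        """Render the element."""


class Tank(GameElement):
    def __init__(self) -> None:
        self.x = 0.0
        self.draw_calls = 0

    def update(self, elapsed_seconds: float) -> None:
        self.x += 10.0 * elapsed_seconds  # move at 10 units per second

    def draw(self) -> None:
        self.draw_calls += 1  # stand-in for real rendering


class Bullet(GameElement):
    def __init__(self) -> None:
        self.x = 0.0
        self.draw_calls = 0

    def update(self, elapsed_seconds: float) -> None:
        self.x += 100.0 * elapsed_seconds  # bullets move faster

    def draw(self) -> None:
        self.draw_calls += 1


def run_frame(elements: list[GameElement], elapsed_seconds: float) -> None:
    """Update, then draw, every element without knowing its concrete type."""
    for element in elements:
        element.update(elapsed_seconds)
    for element in elements:
        element.draw()


run_frame([Tank(), Bullet()], 0.5)
```

The game loop then only needs one list of elements, and new element types can be added without touching the loop.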

Answers:

username_0: It might be worth looking into this class built into XNA: https://docs.microsoft.com/en-us/previous-versions/windows/xna/bb196397(v=xnagamestudio.41) |

mbeyeler/opencv-machine-learning | 375296521 | Title: HTML:Bankfraud-LV detected by Avast AV; download/connection aborted

Question:

username_0: Avast AV detects HTML:Bankfraud-LV & aborts download/connection. Here's the relevant line from the Avast log file:

10/29/2018 10:20:07 PM https://codeload.github.com/username_1/opencv-machine-learning/zip/master|>opencv-machine-learning-master\notebooks\data\chapter7\BG.tar.gz|>BG.tar|>BG\2004\09\1095786133.25239_75.txt|>PartNo_0#2719639900 [L] HTML:Bankfraud-LV [Trj] (0)

Answers:

username_1: Lol what the... Is this for real?

username_1: Alright, so this is from the chapter on [classifying spam emails](https://github.com/username_1/opencv-machine-learning/blob/2513edab423a4f067ebcc4c867203db1064c35c7/notebooks/07.02-Classifying-Emails-Using-Naive-Bayes.ipynb), which uses the publicly available [Enron-Spam dataset](http://nlp.cs.aueb.gr/software_and_datasets/Enron-Spam/index.html) from Athens University. This dataset contains a number of innocuous emails (classified as 'ham') and just a bunch of spam.

The file in question is an example of a spam email (from the 'BG' folder) pretending to be from CitiBank. So in a sense, Avast is right that this is a phishing email trying to commit bank fraud 😄 but as a text file it certainly does not contain a Trojan.

I know that's easy to say, so maybe if you don't trust it, the easiest way out is not to download the data for Chapter 7. You could download the data directly from the original source if you trust them ([nlp.cs.aueb.gr](http://nlp.cs.aueb.gr/software_and_datasets/Enron-Spam/index.html)) or you could use any other email dataset instead (e.g., Athens University has another popular one that's called the [Ling-Spam corpus](http://www.aueb.gr/users/ion/data/lingspam_public.tar.gz)).

The content of the text file in question is printed below.

Cheers,

Michael

```

Return-Path: <<EMAIL>>

Delivered-To: <EMAIL>

Received: (qmail 16009 invoked by uid 115); 21 Sep 2004 15:57:50 -0000

Received: from <EMAIL> by churchill by uid 64011 with qmail-scanner-1.22

(clamdscan: 0.75-1. spamassassin: 2.63. Clear:RC:0(192.168.127.12):.

Processed in 0.44637 secs); 21 Sep 2004 15:57:50 -0000

Received: from hrbg-66-33-237-222-nonpppoe.dsl.hrbg.epix.net (192.168.127.12)

by churchill.factcomp.com with SMTP; 21 Sep 2004 15:57:50 -0000

X-Message-Info: 9hGWsd261aRE/mzVydeHYhYPycntP8Kd

Received: from <EMAIL>ly ([120.186.99.115])

by cou76-mail.moyer.adsorb.destitute.cable.rogers.com

(InterMail vM.5.01.05.12 159-633-015-445-824-04571071) with ESMTP

id <<EMAIL>>

for <<EMAIL>>; Tue, 21 Sep 2004 10:51:47 -0600

Message-ID: <333460z0xomp$1316d6m0$3641a4c0@phylogeny>

Reply-To: "<NAME>" <<EMAIL>>

From: "<NAME>" <<EMAIL>>

To: <<EMAIL>>

Subject: ATTN: Security Update from Citibank MsgID# 92379245

Date: Tue, 21 Sep 2004 21:51:47 +0500

MIME-Version: 1.0

Content-Type: multipart/alternative;

boundary="--419776125423611"

----419776125423611

Content-Type: text/html;

Content-Transfer-Encoding: quoted-printable

<html>

<head>

<title>Untitled Document</title>

<meta http-equiv=3D"Content-Type" content=3D"text/html; charset=3Diso-8859=

-1">

</head>

<body bgcolor=3D"#FFFFFF" text=3D"#000000">

CITIBANK(R)

<p>Dear Citibank Customer:</p>

<p>Recently there have been a large number computer terrorist attacks over=

our

database server. In order to safeguard your account, we require that you=

update

your Citibank ATM/Debit card PIN. </p>

<p>This update is requested of you as a precautionary measure against frau=

d. Please

note that we have no particular indications that your details have been =

compromised

[Truncated]

etails

at hand.</p>

<p>To securely update your Citibank ATM/Debit card PIN please go to:</p>

<p><a href=3D"http://219.138.133.5/customer/">Customer Verification

Form</a></p>

<p>Please note that this update applies to your Citibank ATM/Debit card - =

which

is linked directly to your checking account, not Citibank credit cards.<=

/p>

<p>Thank you for your prompt attention to this matter and thank you for us=

ing

Citibank!</p>

<p>Regards,</p>

<p>Customer Support MsgID# 92379245</p>

<p>(C)2004 Citibank. Citibank, N.A., Citibank, F.S.B., <br>

Citibank (West), FSB. Member FDIC.Citibank and Arc <br>

Design is a registered service mark of Citicorp.</p>

</body>

</html>

```

Status: Issue closed

|

Atlantiss/NetherwingBugtracker | 394641091 | Title: [NPC] Troll Roof Stalkers detected from ground floor of buildings

Question:

username_0: [//]: # (Enclose links to things related to the bug using http://wowhead.com or any other TBC database.)

[//]: # (You can use screenshot ingame to visual the issue.)

[//]: # (Write your tickets according to the format:)

[//]: # ([Quest][Azuremyst Isle] Red Snapper - Very Tasty!)

[//]: # ([NPC] Magistrix Erona)

[//]: # ([Spell][Mage] Fireball)

[//]: # ([Npc][Drop] Ghostclaw Lynx)

[//]: # ([Web] Armory doesnt work)

[//]: # (To find server revision type `.server info` command in the game chat.)

**Description**:

Level 70 players going about their business in Orgrimmar will hear unstealthing sounds over and over again as the stealthed Troll Roof Stalkers can be easily detected through the roofs from the ground floor of multiple buildings.

There are several buildings that can be used to recreate this. Inside the Auction House was where I first noticed it. Going up the tower to the Orgrimmar Flight Master will result in you hearing the Stalker on a nearby roof unstealth multiple times as your character's direction faces them and turns away repeatedly due to the spiral ramp. I find Kaja's Boomstick Imports next to the Auction House to be the most severe, as both the second floor ramp and the roof are obstructing your vision of the Troll Roof Stalker above, yet he still becomes unstealthed.

**Current behaviour**:

Troll Roof Stalkers can be detected by level 70 players from inside many buildings.

**Expected behaviour**:

I feel that a Stalker on the roof wouldn't be easily detected by people on the ground floor.

**Server Revision**:

2515

Answers:

username_1: I reported the same thing a few years ago. http://tbc.internal.atlantiss.eu/showthread.php?tid=1353&highlight=sound

https://www.youtube.com/watch?v=SZYCX7uq50Y

It's pretty annoying.

username_2: Is their stealth "level" just really low? You can see them from really far away, compared to, for example, finding a stealthed rogue, even one of the same faction.

Not only do I feel you really shouldn't be able to find a stealthed person so far above you, there are also implications for PvP in this report that was "closed". I still believe you shouldn't be able to see a stealthed person above you.

https://gfycat.com/BountifulRemoteCygnet

#2791

username_2: What I'm asking is: is the trolls' stealth really that bad, and are you generally able to detect stealthed NPC mobs more easily than players? Because this is where you can detect a same-faction level 70 rogue without MOD

With MOD

And about how far you can detect the trolls

Either way, I think there is definitely something going on with how you can detect stealthed people from way above you, but I think the problem is that their stealth level is too low

username_3: rev.3472 not an issue anymore.

Can be closed.

Status: Issue closed

|

beardofedu/example-repository | 527302103 | Title: There is a bug!

Question:

username_0: **Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior:

1. Go to '...'

2. Click on '....'

3. Scroll down to '....'

4. See error

**Expected behavior**

A clear and concise description of what you expected to happen.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Desktop (please complete the following information):**

- OS: [e.g. iOS]

- Browser [e.g. chrome, safari]

- Version [e.g. 22]

**Smartphone (please complete the following information):**

- Device: [e.g. iPhone6]

- OS: [e.g. iOS8.1]

- Browser [e.g. stock browser, safari]

- Version [e.g. 22]

**Additional context**

Add any other context about the problem here.

Status: Issue closed |

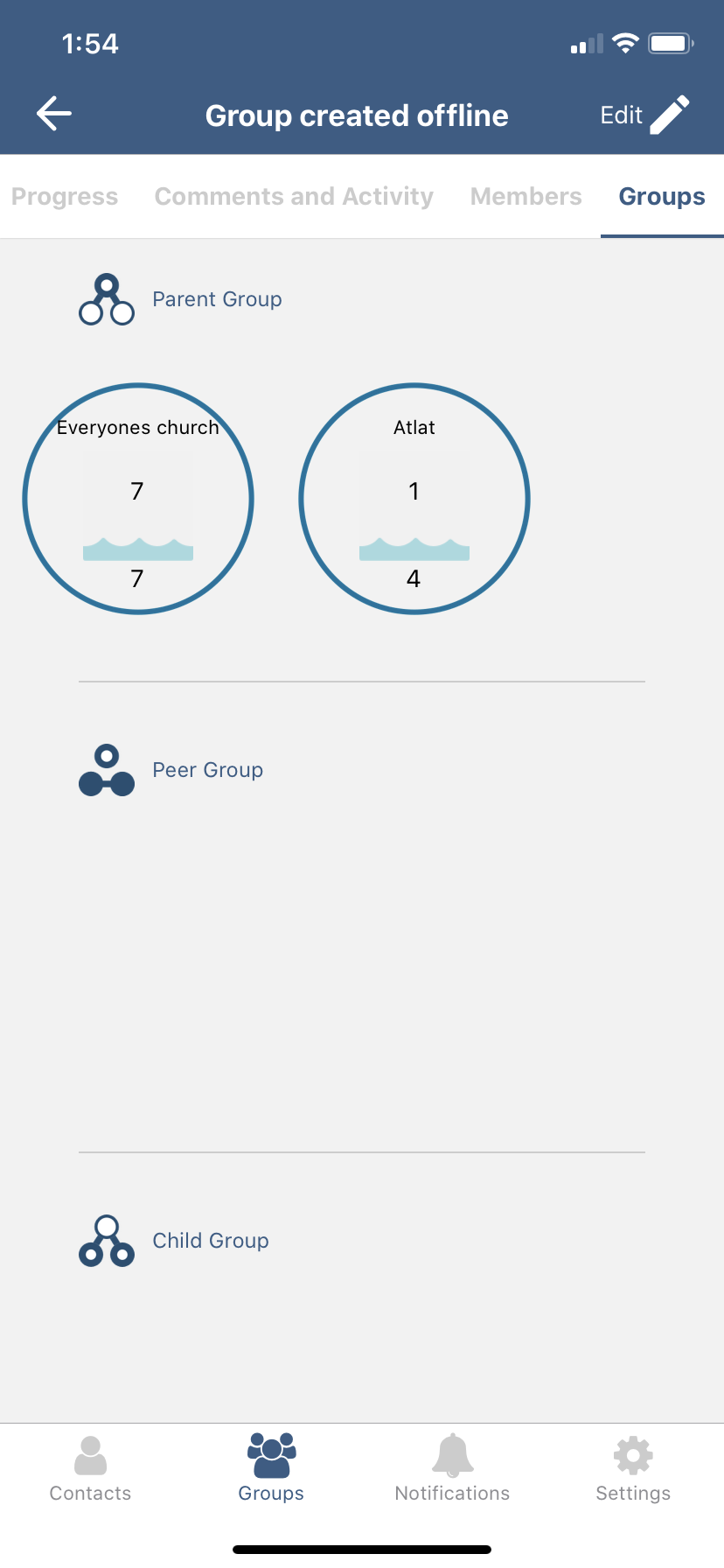

DiscipleTools/disciple-tools-mobile-app | 666450436 | Title: Unrequested data change: removed group from a parent/peer/child group field

Question:

username_0: When there are 2 or more groups listed in a parent/peer/child group field, the first group stays but the others are removed when group details are changed (setting the church start date).

The same type of issue happens for a contact.

Answers:

username_1: Confirmed on iOS 13.5.1

username_1: Hi @raschdiaz , we need to prioritize this issue for Sprint 24 in order to perform a release this weekend. Thx!

username_2: This is applicable for all the "Connections" type fields.

username_1: Note: **_Step # 4_** of the `Steps to reproduce` is the **_key_**. You must Add a Group to `Parent`, `Peer`, or `Child` Groups, and then click to navigate to `Group Details`, and `Edit`, and `Save`.

Status: Issue closed

username_0: @username_1 @raschdiaz this issue happened again in the current 1.7 version of the app when I was updating a record on my instance. I will send you the details privately. |

SqixOW/Aim_Docs_Translator | 686947309 | Title: Translation request

Question:

username_0: https://www.dropbox.com/s/fkvf1vi03mb5u7u/Aimer7%20Routine%20Addendum.pdf?dl=0

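This is said to be a supplement (or update?) to the aimer7 routine by someone named tammas. Skimming the Reddit comments, many people said that at this point it is better to start with this than with the original aimer7 routine.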

https://docs.google.com/spreadsheets/d/1hAzqdi-dl5_RrURx9gnFBo9-MOghurleY3eAALht5Kk/edit#gid=0

You may already know about this one, but it is a document that collects aim-related guides. For your reference. Thank you.

Answers:

username_0: Ah, the tammas routine link I posted above seems to be an older version. https://www.dropbox.com/s/w316s768shjissf/Tammas%27%20Routine%20Addendum.pdf?dl=0

This one appears to be the latest version!

Status: Issue closed

username_0: https://www.dropbox.com/s/fkvf1vi03mb5u7u/Aimer7%20Routine%20Addendum.pdf?dl=0

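This is said to be a supplement (or update?) to the aimer7 routine by someone named tammas. Skimming the Reddit comments, many people said that at this point it is better to start with this than with the original aimer7 routine.

https://docs.google.com/spreadsheets/d/1hAzqdi-dl5_RrURx9gnFBo9-MOghurleY3eAALht5Kk/edit#gid=0

You may already know about this one, but it is a document that collects aim-related guides. For your reference. Thank you.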

username_1: Added to the queue.

Status: Issue closed

|

plaid/plaid-python | 1178106929 | Title: asset_report_get raising TypeError when asset report has warnings

Question:

username_0: We recently upgraded from plaid-python 7.x (non OpenAPI version) to plaid-python `9.1.0`

Since the upgrade we are seeing a case where `client.asset_report_get` throws the following error:

```

TypeError: __init__() missing 1 required positional argument: 'error'

```

It looks like if an asset report contains any warnings in the `warnings` field, then the client fails to deserialize the response from the API correctly.

I cannot easily provide a way to reproduce but I'll try to provide as much info as possible.

In our application we listen for the assets webhook:

```py

{

"asset_report_id": "xxx--xxx--xxx",

"webhook_code": "PRODUCT_READY",

"webhook_type": "ASSETS"

}

```

during the handling of that webhook, we use `client.asset_report_get` to get the contents of the report and determine if we need to apply filtering.

We saw an example where after receiving a `PRODUCT_READY` notification for a report, when we tried to get the report we got the `TypeError` mentioned above. I can see the response that came back from the plaid API looked like this:

```py

{

report: {

asset_report_id: 'xxxx',

client_report_id: None,

date_generated: '2022-03-23T11:53:28Z',

days_requested: 19,

items: [...],

user: {}

},

request_id: 'qh5EJhxemMpgEsS',

warnings: [

{

cause: {"display_message":"'This financial institution is not currently responding to requests. We apologize for the inconvenience.'","error_code":"'INSTITUTION_NOT_RESPONDING'","error_message":"'this institution is not currently responding to this request. please try again soon'","error_type":"'INSTITUTION_ERROR'","item_id":"'xxxxxx'"},

warning_code: 'OWNERS_UNAVAILABLE',

warning_type: 'ASSET_REPORT_WARNING'

}

]

}

```

The stack trace was this:

```

Traceback (most recent call last):

File "/usr/local/lib/python3.9/code.py", line 90, in runcode

exec(code, self.locals)

File "<console>", line 1, in <module>

File "/<redacted>/venv/lib/python3.9/site-packages/plaid/api_client.py", line 769, in __call__

return self.callable(self, *args, **kwargs)

File "/<redacted>/venv/lib/python3.9/site-packages/plaid/api/plaid_api.py", line 1308, in __asset_report_get

return self.call_with_http_info(**kwargs)

File "/<redacted>/venv/lib/python3.9/site-packages/plaid/api_client.py", line 831, in call_with_http_info

[Truncated]

The call to `deserialize_model` that failed had the following args

```

check_type=True

configuration=<plaid.configuration.Configuration object at 0x7f5733d00790>

kw_args={_check_type: True, _configuration: <plaid.configuration.Configuration object at 0x7f5733d00790>, _path_to_item: ['received_data', 'warnings', 0, 'cause'], _spec_property_naming: True, display_message: 'This financial institution is not currently responding to requests. We apologize for the inconvenience.', error_code: 'INSTITUTION_NOT_RESPONDING', error_message: 'this institution is not currently responding to this request. please try again soon', error_type: 'INSTITUTION_ERROR', item_id: 'v66OZpaA48TRXb1vveBrT450YdENeBUmPRMAO'}

model_class=<class 'plaid.model.cause.Cause'>

model_data={display_message: 'This financial institution is not currently responding to requests. We apologize for the inconvenience.', error_code: 'INSTITUTION_NOT_RESPONDING', error_message: 'this institution is not currently responding to this request. please try again soon', error_type: 'INSTITUTION_ERROR', item_id: 'v66OZpaA48TRXb1vveBrT450YdENeBUmPRMAO'}

path_to_item=['received_data', 'warnings', 0, 'cause']

spec_property_naming=True

```

Answers:

username_1: Thank you for the super detailed error report, it was very helpful! It looks like the root cause here is probably that our OpenAPI file is misrepresenting the schema of the `cause` object -- it specifies it as an `item_id` and a nested `error` object when your copy and paste of the response data shows that the `error` object is actually "flattened" in the response, rather than nested. I'm working on a fix now. Do you guys need a hotfix urgently, or is it ok if we wait for the next regularly scheduled release in ~3 weeks?
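The mismatch described above can be reproduced with a small, self-contained Python sketch. The `Cause` class here is a hypothetical stand-in for a model that requires a nested `error` argument, not the real `plaid.model.cause.Cause`, and `nest_cause` is an illustrative helper, not part of plaid-python.

```python
class Cause:
    """Hypothetical model that expects `item_id` plus a nested `error`."""

    def __init__(self, item_id, error, **kwargs):
        self.item_id = item_id
        self.error = error


# Field names as they appear, flattened, in the response quoted in the report.
ERROR_FIELDS = ("display_message", "error_code", "error_message", "error_type")


def nest_cause(flat):
    """Move the flattened error fields under an `error` key (illustrative)."""
    nested = {k: v for k, v in flat.items() if k not in ERROR_FIELDS}
    nested["error"] = {k: flat[k] for k in ERROR_FIELDS if k in flat}
    return nested


flat_cause = {
    "display_message": "This financial institution is not currently responding to requests.",
    "error_code": "INSTITUTION_NOT_RESPONDING",
    "error_message": "this institution is not currently responding to this request.",
    "error_type": "INSTITUTION_ERROR",
    "item_id": "xxxxxx",
}

try:
    # The flattened payload has no `error` key, so the required argument is missing.
    Cause(**flat_cause)
except TypeError as exc:
    print("flattened payload rejected:", exc)

# After nesting, the same data satisfies the model's signature.
cause = Cause(**nest_cause(flat_cause))
print(cause.error["error_code"])
```

This only shows why a schema that expects a nested object fails on flattened data; the actual fix belongs in the OpenAPI file, as noted above.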

username_0: I don't want to be too pushy, if we could get a fix that would be ideal, but we could probably wait too. This is a bit off topic to the original issue but the reason we decided to upgrade to the OpenAPI based client is because we were migrating to the Account Select V2 feature which according to [the docs](https://plaid.com/docs/link/account-select-v2-migration-guide/#automatic-migration) - "we will automatically migrate all of your customizations to Account Select v2"

We made all the preparations and enabled it for all of our plaid link customizations in production but we had missed the one [recommendation which was to upgrade our python client to at least `8.3.0`](https://plaid.com/docs/link/account-select-v2-migration-guide/#minimum-version-for-account-select-update-mode) (we were on 7.x).

We noticed after enabling account select v2 with the old python client, that in the plaid link flow, users who were redirected back to our application after authenticating with an OAuth institution would be presented with an account selection screen within plaid link after they had already been presented with an account selection screen on the bank side. We reached out to plaid support and they confirmed that a user who goes through the OAuth flow shouldn't see a second account selection step in plaid link. Their recommendation was to update our plaid python client.

To be honest I don't really understand this recommendation given that the flow is entirely frontend and even though we're on an old python client, we're targeting the latest Plaid API. Unless there is something different about how the newer clients call out to create the plaid link token or the API is doing something differently based on the client version in the request headers.

Again - this is off topic but if you know of any workarounds to get the desired plaid link frontend experience using the outdated client, then we'd be happy to try that out while you get a release out on your regular schedule!

username_1: The only reason we recommend upgrading the client library is to enable use of the `update.account_selection_enabled` parameter when initializing Link for Update Mode, in order to use Update Mode to pick up new accounts detected by the `NEW_ACCOUNTS_AVAILABLE` webhook. This parameter is not supported in older client libraries. If you aren't using this, you can continue to use older client libraries.

While it is true that we can sometimes skip the Plaid Link account selection pane after OAuth account selection, the business logic around this is quite complicated and we do not always skip the pane -- it depends on things like whether you are using multi-select or single-select, whether you use account filtering, and how the specific institution that's being connected to implements their OAuth account selection options. The fact that the pane isn't being skipped is likely expected and shouldn't have anything to do with the client library. |

habx/apollo-multi-endpoint-link | 991056611 | Title: Missing dependencies

Question:

username_0: Looks like tscov is missing from dev dependencies

Adding this and running

npm run type:coverage

shows error

Error: ENOENT: no such file or directory, stat '/home/username_0/projects/git/www/apollo-multi-endpoint-link/@habx/config-ci-front/typescript/library.json'

Answers:

username_1: Hi @username_0,

We didn't intend to manage it. [I removed it](https://github.com/habx/apollo-multi-endpoint-link/pull/195), but if you find a way to fix it, PRs are welcome :)

Status: Issue closed

|

YoEight/eventstore-rs | 598593146 | Title: Questions about connection's state

Question:

username_0: Hello (again),

I'm trying to set up a pool of `eventstore::Connection` by creating an adapter for the crate [`l337`](https://docs.rs/l337/0.4.1/l337/) and I have some questions about how to do certain things with this library (`eventstore`).

I hope my questions and remarks will be relevant, it's been just a few weeks since I started to play with Rust so be lenient :slightly_smiling_face:

First of all, I find it pretty strange that `ConnectionBuilder::single_node_connection()` and `ConnectionBuilder::cluster_nodes_through_gossip_connection()` directly return a `Connection` and not a `Result<Connection, _>`.

They are async functions so it suggests that they are trying to contact the EventStore server, which may be unreachable so it would make more sense to get a `Result`.

For the need of the adapter, I need a way to know if the connection is healthy.

I currently check this by making this request: `connection.read_all().max_count(0).execute().await`.

Do you think there is a better (more performant) way to check if the connection is (still) alive?

Wouldn't it be possible to get the connection state in a synchronous fashion? Maybe that's a separate subject from my previous question.

I'm thinking of a state that we could check at any time (a method on `Connection`?), which would be updated when the server stops responding, for example, or when a request fails.

Can you be informed in any way when the connection with the server is lost, so that such a state could be updated?

There is one last thing I wanted to mention. It doesn't have much to do with the rest, but I noticed some logs like `HEARTBEAT TIMEOUT` or `Persistent subscription lost connection` in the EventStore server when calling `connection.shutdown().await`, which makes me think of a connection that was not closed properly.

Here are the logs in question :

```

# The first request is made

[00001,05,21:53:11.511] External TCP connection accepted: [Normal, 172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}].

[00001,26,21:53:11.511] Connection '"external-normal"' ({b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}) identified by client. Client connection name: '"ES-37f46d53-dd9b-4c66-bddb-b7030049fd24"', Client version: V2.

# The `.shutdown().await` is called, followed by `sleep(Duration::from_secs(10))`, but the following logs appear only 5 seconds later

[00001,14,21:53:16.524] Closing connection '"external-normal"":ES-37f46d53-dd9b-4c66-bddb-b7030049fd24"' [172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}] cleanly." Reason: HEARTBEAT TIMEOUT at msgNum 3"

[00001,14,21:53:16.525] ES "TcpConnection" closed [21:53:16.525: N172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}]:Received bytes: 134, Sent bytes: 204

[00001,14,21:53:16.525] ES "TcpConnection" closed [21:53:16.526: N172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}]:Send calls: 4, callbacks: 4

[00001,14,21:53:16.525] ES "TcpConnection" closed [21:53:16.526: N172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}]:Receive calls: 4, callbacks: 3

[00001,14,21:53:16.525] ES "TcpConnection" closed [21:53:16.526: N172.17.0.1:47828, L172.17.0.2:1113, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}]:Close reason: [Success] "HEARTBEAT TIMEOUT at msgNum 3"

[00001,14,21:53:16.525] Connection '"external-normal"":ES-37f46d53-dd9b-4c66-bddb-b7030049fd24"' [172.17.0.1:47828, {b3d1959c-4a64-42d9-baa3-aac9d7f13bbf}] closed: Success.

[00001,20,21:53:16.528] Persistent subscription lost connection from 172.17.0.1:47828

```

Maybe it's completely normal, but I'm not sure :thinking:

Thank you (again) for taking the time to answer my questions.

Answers:

username_1: While I don't exclude the possibility that something could have been left open, it's improbable. When shutting down, the state machine blocks any new commands and prevents existing ones from sending new packages. It completes every pending command (such as persistent subscriptions) before closing the TCP connection. I would investigate your scenario.

username_1: Next time, please consider the [Discord] server for questions too.

[Discord]: https://discord.gg/x7q37jJ

username_0: Thank you for your detailed answers.

I still have some questions but I'll go to the Discord server and ask them as you advise :wink:

Status: Issue closed

|

andrewmcdan/open-hardware-monitor | 64167970 | Title: Open Hardware Monitor 0.5.1 Beta not foundet my HDD's ???

Question:

username_0: ```

What is the expected output? What do you see instead?

I don't see my drives. I have two SAMSUNG 830 Series SSDs plus one regular 1 TB HDD

(SATA 2). My complete hardware is listed here ---> http://www.sysprofile.de/id21540

What version of the product are you using? On what operating system?

Open Hardware Monitor 0.5.1 Beta / Windows 7 SP 1

Please provide any additional information below.

I have Open Hardware Monitor 0.5.1 Beta and HWiNFO 4.09-1810 installed. HWiNFO

finds my drives but Open Hardware Monitor does not. Why?

Please attach a Report created with "File / Save Report...".

See my Snapshot !!!

```

Original issue reported on code.google.com by `<EMAIL>` on 13 Dec 2012 at 6:01

Attachments:

- [Bild 2.jpg](https://storage.googleapis.com/google-code-attachments/open-hardware-monitor/issue-421/comment-0/Bild 2.jpg)<issue_closed>

Status: Issue closed |

servicecatalog/development | 278487007 | Title: Get VOUsageLicense object to assign subscription

Question:

username_0: Hi,

I am trying to assign a subscription to all the users of the 'XYZ' org, using the addRevokeUser method. I am able to remove the subscription by sending a VOUser list, but I am not able to get a VOUsageLicense object to pass to this method. How can I get one? Please suggest.

Answers:

username_1: Hi @username_0

Not sure if I fully got your intention here. Note that subscriptions can only be assigned to existing users of the respective customer. Thus, the precondition in your case is that the 'XYZ' org is a customer of the supplier organization providing the service. Otherwise, you should look at the AccountService API to register a customer as administrator of the given supplier organization; see AccountService#registerCustomer and AccountService#registerKnownCustomer.

For adding all users, your code should look something like the following.

`

IdentityService id = connectAsSubscriptionAdmin();

// Collect usage licenses

List<VOUsageLicense> licences = new ArrayList<VOUsageLicense>();

List<VOUserDetails> users = id.getUsersForOrganization();

for (VOUserDetails user : users) {

    VOUsageLicense usageLicence = new VOUsageLicense();

    usageLicence.setUser(user);

    licences.add(usageLicence);

}

// Add users

SubscriptionService subService= ...;

subService.addRevokeUser(subscriptionId, licences, null);

`

Status: Issue closed

|

juj/fbcp-ili9341 | 473696841 | Title: #define ALL_TASKS_SHOULD_DMA

Question:

username_0: /home/pi/fbcp-ili9341/spi.cpp: In function ‘void RunSPITask(SPITask*)’:

/home/pi/fbcp-ili9341/spi.cpp:281:24: error: ‘SPIDMATransfer’ was not declared in this scope

SPIDMATransfer(task);

^

/home/pi/fbcp-ili9341/spi.cpp:288:26: error: ‘WaitForDMAFinished’ was not declared in this scope

WaitForDMAFinished();

Answers:

username_1: the two functions are declared in https://github.com/username_1/fbcp-ili9341/blob/aa01d12cd78cd15fd655a96b4811eb9437da136a/dma.h#L127-L135

conditional to `USE_DMA_TRANSFERS` being defined. `spi.cpp` does include that file: https://github.com/username_1/fbcp-ili9341/blob/aa01d12cd78cd15fd655a96b4811eb9437da136a/spi.cpp#L13

so this suggests that you were having a build where `ALL_TASKS_SHOULD_DMA` was defined, but `USE_DMA_TRANSFERS` was not. Pushed a commit to verify that code should not be getting compiled with such mismatching directives. Run a `git pull` and double check build flags?

username_0: Got it, thank you username_1

Status: Issue closed

|

thymikee/jest-preset-angular | 428907174 | Title: InlineHtmlStripStylesTransformer is not strict enough

Question:

username_0: ## Current Behavior

_Any_ object literals have their property assignments transformed thus causing source code such as:

```ts

console.log({

styles: ['styles.css']

})

```

to print out

```ts

{

styles: []

}

```

## Expected Behavior

Only object literals passed to `@angular/core`'s `@Component` decorator should have their property assignments transformed.

Answers:

username_0: Unless someone else wants to tackle this, I can try and make a PR.

username_1: PR is welcome 👍😀

username_2: Yeah, this caveat is known and discussed

* in the [transformer itself](username_8/jest-preset-angular/src/InlineHtmlStripStylesTransformer.ts#L11-L14)

* the [original PR]()

* this [follow-up PR](username_8/jest-preset-angular/pull/211), caused by

* [this issue](https://github.com/username_8/jest-preset-angular/issues/210).

The problem is that, theoretically, someone might rename the annotation, or pass into the annotation an object that is not declared inside the annotation itself.

The AST transformer does not know whether a decorator is actually *the* Angular Component decorator, or whether an object with `styles` and `templateUrl` is actually a component configuration. It only knows how pieces of code relate to each other and how they are named.

Consider these examples:

```ts

import { Component } from '@angular/core';

// classic and easy

@Component({ templateUrl: './file.html' }) export class MyFirstComp { }

// difficult

const MyAnnotation = Component;

@MyAnnotation({ templateUrl: './file.html' }) export class MySecondComp { }

// also difficult

const componentConfig = { styles: [], templateUrl: './file.html' };

@Component(componentConfig) export class MyThirdComp { }

// also difficult

const partialComponentConfig = { templateUrl: './)

@Component({...partialComponentConfig, styles: []}) export class MyFourthComp { }

```

We should discuss approaches and their caveats before implementing it.

username_3: Would it be an expectation to even support the second case?

```ts

const MyAnnotation = Component;

@MyAnnotation({ templateUrl: './file.html' }) export class MySecondComp { }

```

For the others we are guaranteed to be in `@Component`

username_2: I agree, the second example is not very important.

The third and fourth are the problematic ones.

@username_3 The AST transformer is written in TypeScript, but it works with AST objects. AST is a representation of the code text, not of the evaluated objects.

So this situation

```ts

const componentConfig = { styles: [], templateUrl: './file.html' };

@Component(componentConfig) export class MyThirdComp { }

```

is definitely a problem, because in this example each statement is an independent AST node. References are resolved when the JavaScript is evaluated, not when the AST transformer runs. The `styles` assignment is not inside the decorator, so we would have to search for the `componentConfig` variable in the whole file, and maybe even in other files if it is imported.

username_0: @username_2 I see the issues. That is fair.

Let's make it not as strict as looking for where the object literal is.

I think that checking whether the file imports `Component` from `@angular/core` will be safe enough. The difficult cases are still covered, since they all have to import from `@angular/core`.
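A file-level guard of this kind could be sketched roughly as below. This is only an illustration under stated assumptions: `importsAngularComponent` is a hypothetical helper name, not something that exists in jest-preset-angular, and it inspects the raw source text with a regular expression rather than the TypeScript AST that the real transformer works on. It only recognises named imports (`import { Component } from '@angular/core'`, optionally with an `as` alias) and would miss namespace imports such as `import * as ng from '@angular/core'`.

```ts
// Hypothetical helper (an assumption for illustration, not part of jest-preset-angular).
// Returns true when the source text contains a named import of `Component`
// from '@angular/core'. Text-level approximation only: it does not parse the file.
function importsAngularComponent(sourceText: string): boolean {
  // A fresh RegExp per call avoids sharing `lastIndex` state between calls.
  const namedImport = /import\s*\{([^}]*)\}\s*from\s*['"]@angular\/core['"]/g;
  let match: RegExpExecArray | null;
  while ((match = namedImport.exec(sourceText)) !== null) {
    const importedNames = match[1]
      .split(',')
      // `Component as Cmp` still imports Component, so keep the part before `as`.
      .map((specifier) => specifier.trim().split(/\s+as\s+/)[0]);
    if (importedNames.indexOf('Component') !== -1) {
      return true;
    }
  }
  return false;
}
```

With a guard like this, a file containing only `console.log({ styles: ['styles.css'] })` would be skipped, while all four decorator examples above would still be processed, because each of them needs the import. A file that imports `Component` and also contains an unrelated object literal with `styles` would still be transformed, so this narrows the problem rather than removing it.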

Then we can exclude files which do not include:

```ts