repo_name | issue_id | text |

|---|---|---|

rust-lang/rust | 153161641 | Title: Missing context from Error Handling section of The Book

Question:

username_0: In the [Adding Functionality section](https://doc.rust-lang.org/book/error-handling.html#adding-functionality) of the Error Handling guide, a line with `args.flag_quiet` is referenced. This is not a standard method, and after some googling I found [this post](http://blog.burntsushi.net/rust-error-handling/). It would seem a few sections regarding the creation of a custom `Args` struct were removed or left out by mistake. Either way, I'm not sure whether those sections should be re-added or whether this section needs to be reworked without that struct.<issue_closed>

Status: Issue closed |

MisaelGC/invie-GitHub | 338132885 | Title: Feature Request = prices

Question:

username_0: ## How can I reproduce the problem?

There is no pricing page; I would love to know the price of the acoustic guitar

## In which version of invie does it occur?

Right now there is no pricing page |

solgenomics/sgn | 926277417 | Title: queries involving locations don't work in the wizard

Question:

username_0: Expected Behavior <!-- Describe the desired or expected behavior here. -->

--------------------------------------------------------------------------

For Bugs:

---------

### Environment

<!-- Where did you encounter the error. -->

#### Steps to Reproduce

<!-- Provide an example, or an unambiguous set of steps to reproduce -->

<!-- this bug. Include code to reproduce, if relevant. --><issue_closed>

Status: Issue closed |

cocos2d/cocos2d-x | 175694762 | Title: schedule::update dt is not accurate

Question:

username_0: - cocos2d-x version: 3.9

- devices tested on: Android Huawei

When the FPS is lower than 60, the reported dt is 0.016, when in fact it may be 0.02 or more.

```

self.nScheduleID = schedule:scheduleScriptFunc(function(dt)

self:onTick(dt)

end, 1/60, false)

```

Answers:

username_1: could you post a complete snippet... something that I can copy & paste and reproduce the issue? Thanks. |

theopolis/uefi-firmware-parser | 41663757 | Title: More powerful auto-checking

Question:

username_0: A script that uses the firmware-type detector. When no type is found and brute-forcing is an option, have the type-checker auto-suggest to the script invoker (via stdout/stderr) they should try bruteforce/scanning.

Answers:

username_0: This occurs by default for the `AutoChecker` class when a volume is found, since volume content usually involves 'stacked' volumes.

Status: Issue closed

|

pcbuilderapp/pcbuilder | 203743643 | Title: Price history date filtering

Question:

username_0: 6.8 Price history can be filtered by using a date range

6.8.1 Quality attribute

Performance

6.8.2 Description

The backend server should provide a date range, on which price history can be filtered in order to reduce performance strain on the server, when large sets of pricing history are accumulated.

6.8.3 Indicator

When retrieving the pricing history of a given product, it should be limited by a start and an end date.

6.8.4 Measurement

When showing pricing history data, screen loading times are measured and deemed acceptable if below 1 second.

6.8.5 Norm

Performance can be guaranteed even when large sets of pricing data are accumulated.
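The indicator in 6.8.3 can be sketched as follows. This is a minimal illustration in Python, not the project's backend code: the `PricePoint` type, the `filter_price_history` function and the sample prices are all hypothetical, and a real backend would more likely apply the range in the database query than in memory.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PricePoint:
    """One recorded price for a product (hypothetical type)."""
    day: date
    price: float


def filter_price_history(history, start, end):
    """Keep only the points whose date lies in [start, end], inclusive."""
    if start > end:
        raise ValueError("start must not be after end")
    return [point for point in history if start <= point.day <= end]


# Made-up sample data for illustration only.
history = [
    PricePoint(date(2017, 1, 5), 199.0),
    PricePoint(date(2017, 1, 20), 189.0),
    PricePoint(date(2017, 2, 3), 179.0),
]

january = filter_price_history(history, date(2017, 1, 1), date(2017, 1, 31))
print([point.price for point in january])
```

Limiting the result to a start and an end date keeps the response size bounded no matter how much history has accumulated, which is the point of the performance norm.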

Status: Issue closed

Answers:

username_1: The backend supports selecting price histories by date range; the frontend gets the price history of the last month by default. |

lomik/zapwriter | 357251875 | Title: What does this do and why does it exist?

Question:

username_0: $subject.

The answers to these questions are not very clear from the readme or the git history.

Answers:

username_1: PRs with readme are welcome ;)

username_1: This is just a reusable helper for my own public and private projects. I have no intention or desire to document and sell it.

username_0: That's fine. Maybe I can phrase my question differently. What was missing from zap that made you write this? I can see _what_ this thing is doing, but I can only guess at _why_ it's doing it.

username_1: The primary goals were:

1. File output with rotation support

2. A custom encoder ("mixed"). At the time, it was necessary to maintain backward compatibility in the private projects

3. A flexible output manager that could solve the following problems:

* Write the full log to a file with level "info" and to stderr with level "error"

* Ability to record part of the logs into a separate file, for example the access log of an HTTP server. And also to solve feature requests like this https://github.com/username_1/go-carbon/issues/154

Zap could not do any of the above at the time of writing this library.

username_0: Thanks for taking the time to explain this to me.

Status: Issue closed

|

rancher/rancher | 186672400 | Title: kubernetes & other environments are showing cow icon instead of their own

Question:

username_0: **Rancher Version:** master 11/01

**Steps to Reproduce:**

1. Create Kubernetes, Mesos and Cattle environments

2. Go to the Cattle environment and add a host

3. Go to manage environments and add an environment

**Results:** All icons are cows until I refresh screen

**Expected:** Should not be cows

Answers:

username_1: Switching environments was the key piece here..

username_0: Version - master 11/10

Verified fixed

Status: Issue closed

|

dubocr/homebridge-tahoma | 740663019 | Title: Joule-effect electricity consumption sensor

Question:

username_0: Hello, I have a Somfy Joule-effect meter and it is not recognized among my accessories. Have you made it compatible?

Thanks

Answers:

username_1: Thank you for using Homebridge TaHoma plugin.

Please follow these steps to obtain better support:

1. Execute the failing operations from the official app (TaHoma/Cozytouch/etc.), then execute the same operation from HomeKit

2. Report your config by browsing [https://dev.duboc.pro/tahoma](https://dev.duboc.pro/tahoma)

3. Search issues with title corresponding to your device widget name (see picture below). If no opened issue, rename your issue with this widget name.

4. Provide your bridge last 4 digits (number visible as SETUP-XXXX-XXXX-XXXX at step 2.)

Thank you.

username_1: Hello,

This meter is not yet compatible, but I could look into it using yours. Could you log in at https://dev.duboc.pro/tahoma and send me the last digits of your bridge so that I can look at this device's parameters?

Thanks

username_2: Same here, I also have this module. I was planning to remove it from the electrical panel for good, but if you are going to integrate it, I'm happy to keep it and help you with its development...

username_0: Hello,

Thanks, but I can't log in at https://dev.duboc.pro/tahoma; none of my usernames work.

Do you have a solution?

Thanks

username_1: Hello @username_0,

Don't you have a TaHoma box (or equivalent)?

username_0: Yes, the energeasyconnect box. I tried to log in with the same credentials, but the error message "Bad credentials" is displayed.

username_1: Have you tried selecting the "Rexel" endpoint?

Which service are you using in the Homebridge plugin?

Thanks

username_2: On my side, with my new TaHoma Switch, the Joule-effect sensor is not yet recognized/supported. Strangely, though, I see traces of it in Homebridge: either an IO device (1234#7) in the logs, or else I had three icons, GMDE_Zone1, GMDE_Zone2 and Boost, which I excluded in the config.

I think that, for the Switch, we have to wait until the sensor is supported.

username_0: Now I get a 500 server error

username_1: Thanks, I have fixed it, and I was still able to retrieve the config.

username_0: All good, the connection is OK, bridge 4055

username_2: @username_0 do you have a TaHoma? With my new Switch the Joule-effect sensor is not recognized; according to Somfy, support should arrive by the end of the year!

username_0: @username_2 no, I have an Energeasy Connect DIN-rail box from Rexel |

JuliaImages/Images.jl | 313934688 | Title: convert Image to Array{Float64}?

Question:

username_0: ```

MethodError: Cannot `convert` an object of type ColorTypes.RGB{FixedPointNumbers.Normed{UInt8,8}} to an object of type Float64

This may have arisen from a call to the constructor Float64(...),

since type constructors fall back to convert methods.

Stacktrace:

[1] _collect(::Array{ColorTypes.RGB{FixedPointNumbers.Normed{UInt8,8}},2}, ::Base.Generator{Array{ColorTypes.RGB{FixedPointNumbers.Normed{UInt8,8}},2},Type{Float64}}, ::Base.EltypeUnknown, ::Base.HasShape) at ./array.jl:488

[2] map(::Type{T} where T, ::Array{ColorTypes.RGB{FixedPointNumbers.Normed{UInt8,8}},2}) at ./abstractarray.jl:1868

[3] include_string(::String, ::String) at ./loading.jl:522

```

I also tried `map(Float64, img)` and `Float64.(img)` with the same error. Also, if there could be an example of how to convert an array to an Image that would be great!

Many thanks for the help!

Answers:

username_1: One way to create a multidimensional array of `Float64` where the third dimension corresponds to the red, green and blue channels is as follows:

```julia

using TestImages, Colors

img = testimage("mandrill")

I = Float64.(cat(3,red(img),green(img),blue(img)))

```

Another option is to create a view using `channelview`.

```julia

using TestImages, Colors, Images

img = testimage("mandrill")

mat = channelview(img)

```

However, this creates a view with dimensions `3 x 512 x 512` where the first dimension is used to index into the red, green and blue channels.

Status: Issue closed

username_0: Awesome thanks! My code to read in a video is now working and you really helped my understanding, thanks so much!

```julia

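function readVideo(videoPath, nframes=100)

    vid = VideoIO.openvideo(videoPath)

    # Read one frame up front to learn the frame size.
    img = read(vid)

    # Preallocate room for nframes frames of that size.
    frames = zeros(ColorTypes.RGB{FixedPointNumbers.Normed{UInt8,8}}, nframes, size(img)...)

    i = 1

    while !eof(vid)

        read!(vid, img)

        frames[i,:,:] = img

        # Stop once nframes frames are stored; size(frames, 1) always equals
        # nframes, so comparing against it would break after the first frame.
        if i >= nframes

            break

        end

        i += 1

    end

    return frames

end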

frames = readVideo(videopath);

```

username_2: As a side note, the main upside of `channelview` is that in general it does not need to copy or change your data; it simply reinterprets the memory in most practical cases. This is why it makes sense to have the color channel in the first dimension: R, G and B are then right next to each other in memory and can simply be grouped. Check out this article if you'd like to know more: https://evizero.github.io/Augmentor.jl/images/ |

ooade/NextSimpleStarter | 428591924 | Title: Change to using Material Web Component

Question:

username_0: @username_3 Feel free to send in a PR :grinning:

Thanks :)

Answers:

username_1: Add a description naw 😥

username_2: When?

username_3: @username_0 do you need any help porting? I know Material very well and would be happy to give back

username_0: @username_3 Feel free to send in a PR :grinning:

Thanks :)

username_3: @username_0 great - I'm exploring the repo - I'll make a small draft PR and update 1 component to the latest material UI and we can discuss the results

username_0: Sure. Good to know 👌

username_3: @username_0 so when I said I know Material well - I actually know React Material [https://material-ui.com](https://material-ui.com), not Material Components Web - I briefly looked into that project and they look similar, but I'd be more comfortable with the React version.

So can we go with React Material UI instead? It does seem like it's more suited for NextJS...

username_0: Cool @username_3. I love Material UI, as well. The latest releases make a whole lot of sense.

Status: Issue closed

|

intel-analytics/analytics-zoo | 803110251 | Title: jenkins: UT failed on mac

Question:

username_0: PEP8_SCRIPT_PATH /private/var/jenkins_home/workspace/ZOO-NB-UnitTests-2.1-PYTHON-MAC/pyzoo/dev/../dev/pep8-1.7.0.py

PEP8 checks failed.

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:120:101: E501 line too long (101 > 100 characters)

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:131:101: E501 line too long (111 > 100 characters)

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:133:101: E501 line too long (117 > 100 characters)

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:135:101: E501 line too long (115 > 100 characters)

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:137:101: E501 line too long (106 > 100 characters)

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:139:19: E128 continuation line under-indented for visual indent

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:140:19: E128 continuation line under-indented for visual indent

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:141:19: E128 continuation line under-indented for visual indent

./zoo/examples/orca/learn/horovod/pytorch_estimator.py:142:19: E128 continuation line under-indented for visual indent

jenkins link

http://10.239.47.210:18888/view/ZOO-NB/job/ZOO-NB-UnitTests-2.1-PYTHON-MAC/211/console

Answers:

username_0: @username_1 [3458](https://github.com/intel-analytics/analytics-zoo/pull/3458)

username_1: It has been fixed in https://github.com/intel-analytics/analytics-zoo/pull/3474

Status: Issue closed

|

bcherny/frontend-interview-questions | 242432266 | Title: isBalanced: blog post issue in examples

Question:

username_0: [Source test](https://github.com/username_1/frontend-interview-questions/blob/master/coding-easy/isBalanced.js) on GitHub is OK, but the [blog post](https://performancejs.com/post/hde6d32/The-Best-List-of-Frontend-JavaScript-Interview-Questions) has an issue:

```javascript

isBalanced('{}{}') // false

```

Should be `true`.
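For reference, a minimal sketch of a bracket-balance check, written here in Python and not taken from the repository's JavaScript. The name `is_balanced` and the set of bracket pairs are assumptions for illustration. It shows why `'{}{}'` is balanced: each opener is closed before the next one begins, so the stack is empty at the end.

```python
def is_balanced(text: str) -> bool:
    """Return True when every bracket in `text` is closed in the right order."""
    pairs = {")": "(", "]": "[", "}": "{"}
    openers = set(pairs.values())
    stack = []
    for ch in text:
        if ch in openers:
            stack.append(ch)
        elif ch in pairs:
            # A closer must match the most recently opened bracket.
            if not stack or stack.pop() != pairs[ch]:
                return False
    # Any opener left on the stack was never closed.
    return not stack


print(is_balanced("{}{}"))  # True
print(is_balanced("{{}"))   # False
```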

Status: Issue closed

Answers:

username_1: Updated - thanks @username_0. |

rust-lang/rfcs | 54966123 | Title: Switch rustdoc to Common Markdown

Question:

username_0: <a href="https://github.com/brson"><img src="https://avatars.githubusercontent.com/u/147214?v=3" align="left" width="96" height="96" hspace="10"></img></a> **Issue by [brson](https://github.com/brson)**

_Wednesday Sep 03, 2014 at 22:27 GMT_

_For earlier discussion, see https://github.com/rust-lang/rust/issues/16978_

_This issue was labelled with: A-rustdoc, E-easy, I-wishlist in the Rust repository_

----

Assuming this takes off it's probably best to be on the right side of history: http://standardmarkdown.com/. Probably easiest to just make hoedown conformant.

Status: Issue closed

Answers:

username_1: This has been implemented behind a flag, and rust-lang/rust#44229 is tracking the plan to enable it by default. |

mojombo/chronic | 104117091 | Title: project URL 404s

Question:

username_0: ```

$ curl --write-out %{http_code} --silent --output /dev/null http://injekt.github.com/chronic

404

```

Answers:

username_1: This no longer seems to happen. Might have been an issue with github at that time, as they were dealing with redirects to the aliased project name. "injekt" was renamed/aliased to "leejarvis" at some point.

If you want to use the `curl` command now, you'll have to follow redirects, using the `-L` flag, like so:

curl -L --write-out %{http_code} --silent --output /dev/null http://injekt.github.com/chronic |

monarch-initiative/mondo | 638067781 | Title: [Merge] MONDO:0011543 BRCA3

Question:

username_0: **Mondo term (ID and Label)**

MONDO:0011543 BRCA3

**Reason for deprecation**

OMIM merged these

**Term to be merged with**

MONDO:0016419 hereditary breast carcinoma

Answers:

username_1: Don't merge these, they are different concepts

OMIM has a description of the putative BRCA3 concept:

https://omim.org/entry/114480

From my reading of this, BRCA3 should indeed be obsoleted. Cite 114480 as rationale. Use a consider link to point to MONDO:0016419

Status: Issue closed

|

ropensci/refsplitr | 849904789 | Title: Error in `$<-.data.frame`(`*tmp*`, "EM", value = c(NA_character_, NA_character_ : replacement has 2 rows, data has 1

Question:

username_0: I installed refsplitr package as follows:

install.packages("remotes")

remotes::install_github("username_1/refnet2")

---

For the sake of simplicity, I've given my data the same name as in your example.

I ran the example code and, because of errors, I cleaned the NAs as follows.

dat1 <- dat1 %>% filter(!is.na(AU))

dat1 <- dat1 %>% filter(!is.na(AF))

dat1 <- dat1 %>% filter(!is.na(EM))

However, when I run the code below:

dat2 <- authors_clean(references = dat1)

I got the following error.

Error in `$<-.data.frame`(`*tmp*`, "EM", value = c(NA_character_, NA_character_ : replacement has 2 rows, data has 1

Do you have an idea for a solution?

Best regards.

Answers:

username_0: I may have found the problem.

I am trying it now.

I changed all `;` to `,` in the EM column.

The code is still running and has not given an error.

Because my data is big, it will possibly take an hour.

I will post the result.

username_1: From an author email to EMB: " I changed all semicolons (;) to comma (,) in EM collumn, error is disappeared"

Status: Issue closed

|

mschlenstedt/Loxberry | 981797765 | Title: Support for Raspberry Compute Module

Question:

username_0: Currently not supported. A new kernel and various changes in /boot are required.

https://www.loxforum.com/forum/projektforen/loxberry/allgemeines-aa/316021-loxberry-webseite-funktioniert-nicht-auf-dem-raspberry-pi-compute-module-4 |

gchq/CyberChef | 509315222 | Title: Inventory notification

Question:

username_0: Your tool/software has been inventoried on [*Rawsec's CyberSecurity Inventory*](https://inventory.rawsec.ml/).

https://inventory.rawsec.ml/tools.html#CyberChef

### What is Rawsec's CyberSecurity Inventory?

An inventory of tools and resources about CyberSecurity. This inventory aims to help people to find everything related to CyberSecurity.

More details about features [here](https://inventory.rawsec.ml/features.html).

Note: the inventory is a FLOSS (Free, Libre and Open-Source Software) project.

### Why should you care about being inventoried?

Mainly because this gives visibility to your tool and improves its referencing.

### Badges

The badge shows your community that you are inventoried. It looks good, but it also shows that you care about your project and that your tool is referenced.

Feel free to claim your badge here: http://inventory.rawsec.ml/features.html#badges, it looks like that [](https://inventory.rawsec.ml/), but there are several styles available.

### Want to thank us?

If you want to thank us, you can help make our open project better known by tweeting about it! For example: [](https://twitter.com/intent/tweet?text=We%20have%20been%20inventoried%20on%20%23Rawsec%20CyberSecurity%20Inventory%21%20Check%20their%20awesome%20inventory%20of%20tools%20and%20resources%20about%20CyberSecurity%3A%20https%3A//inventory.rawsec.ml/%21%20Thx%20@rawsec_cyber)

### So what?

That's all, this message is just to notify you if you care. Else you can close this issue.<issue_closed>

Status: Issue closed |

nervetattoo/banner-card | 450075048 | Title: Enhancement - Tap to execute script entities

Question:

username_0: I have a script to activate the nightlight hue scene and have added the script as an entity to the banner-card. It would be nice if I could execute the script by tapping that part of the card; currently it opens more info which has an execute button.

Alternatively, is it possible to add tap_action customization options?

Answers:

username_1: I want config to be simple and lightweight, with sane defaults over deep customisation options, so I don't like the action/tap_action config support I'm seeing elsewhere. For scripts, I guess one wouldn't add a script to this card if running it was dangerous, so there shouldn't be much harm in just supporting that with a button somehow. Will a single button be enough? I mean, is there any metadata about the script that is needed to make this usable?

username_0: I can't speak for others, but mine is just a service call to turn on the

light with a specific Hue scene so a button would be fine.

Status: Issue closed

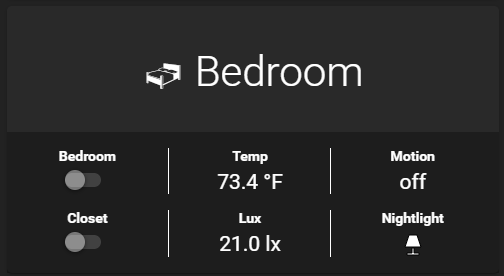

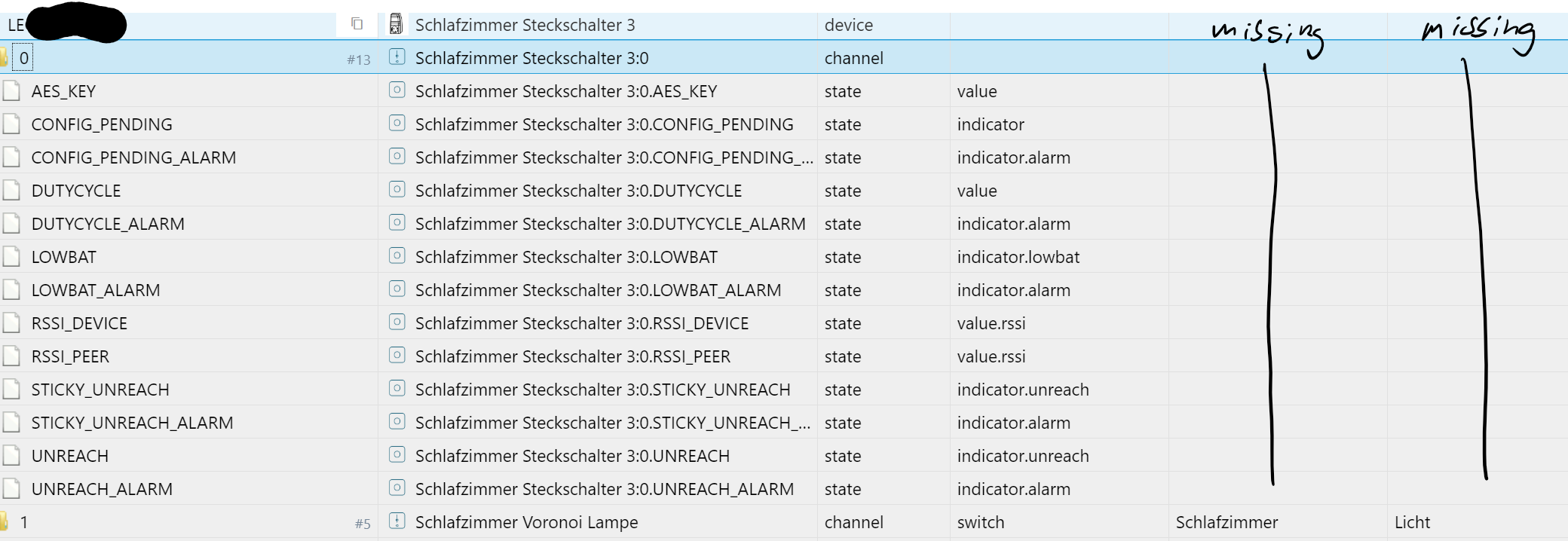

username_0: ```

- type: custom:banner-card

  heading: "\U0001F6CF Bedroom"

  entities:

    - light.bedroom

    - entity: sensor.aqara_temperature

      name: Temp

    - entity: binary_sensor.aqara_motion

      name: Motion

    - light.closet

    - entity: sensor.lumi_lumi_sensor_motion_aq2_02f39828_1_1024

      name: Lux

    - entity: script.bedroom_nightlight_hue_scene

      name: Nightlight

      value: mdi:lamp

      action:

        service: homeassistant.turn_on

```

Renders

Clicking on Nightlight renders this pop-up instead of executing the service. I can manually execute homeassistant.turn_on for script.bedroom_nightlight_hue_scene and it executes the script, as does the execute button in the pop-up.

username_1: How strange. I configured a similar thing with a script for myself to test and it works like a charm.

And I assume all of the toggles etc work on the card?

username_1: Hang on; Are you clicking the `Nightlight` heading, or the icon? The heading is supposed to open the popover, only the icon button triggers the action

username_0: I tried both, and all other toggles are functioning.

username_0: FYI, the issue was that I'd migrated to HACS and was unable to use the new functionality until Banner-Card was installed via HACS after their updates this past weekend. Clicking the icon executes the script successfully. |

department-of-veterans-affairs/va.gov-team | 830775069 | Title: User transitions to Cerner

Question:

username_0: ### **Background**

As VAMCs transition from VISTA to Cerner, Veterans within those facilities will no longer have access to MHV features, including secure messaging. When it comes to the mobile app, this means that there will be Veterans who one day have access to secure messaging, and do not the next once their facility flips the Cerner switch.

### **User Story**

As a Veteran, I want to see an error message when my facility transitions to Cerner so that I know I need to go to a different portal to manage my health.

### **Acceptance Criteria**

# When a user's facility switches to the Cerner portal, the mobile app displays an error state giving the Veteran context and directing them to use the Cerner portal.

Answers:

username_1: A use case we may need to consider here is if a user is registered at multiple facilities, they might have the switch flipped off for some facilities but still be able to use messaging for other facilities.

username_2: @username_1 I've started to brainstorm around this in your [User Flows and Unhappy Paths mural](https://app.mural.co/t/adhoccorporateworkspace2583/m/adhoccorporateworkspace2583/1614017071435/8061f2093dc4679b4b1da3ab3f20423c8f1c8f66?wid=0-1617728321726). I have it on the agenda for our next check-in. |

MicrosoftDocs/azure-docs | 724613250 | Title: Link to A3 download is incorrect

Question:

username_0: The link takes me to the wrong URL

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 71485a19-f1d1-c034-f41f-5aa91d7e3e71

* Version Independent ID: 8b441303-97d5-0c87-1d8f-b5c017fc6da8

* Content: [Azure Cosmos DB PDF query cheat sheets](https://docs.microsoft.com/en-us/azure/cosmos-db/query-cheat-sheet)

* Content Source: [articles/cosmos-db/query-cheat-sheet.md](https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/cosmos-db/query-cheat-sheet.md)

* Service: **cosmos-db**

* GitHub Login: @SnehaGunda

* Microsoft Alias: **sngun**

Answers:

username_1: @username_0 Thank you for the feedback. This is being assigned to the content author to evaluate and update as appropriate.

username_2: @username_0 The link downloads the A3 pages to your download folder. Please elaborate regarding the wrong URL.

username_0: The link here:

https://docs.microsoft.com/en-us/azure/cosmos-db/query-cheat-sheet#oversized-cheat-sheets

Specifically this one:

Has this href:

https://go.microsoft.com/fwlink/?linkid=870413

Which in turn takes me to:

username_0: Just checked with some of my colleagues, they're getting the correct PDF in Chrome. Looks like this is somehow my issue?

Status: Issue closed

|

RevenueCat/purchases-ios | 745468046 | Title: Offerings/purchaserInfo no longer returned from cache via main thread

Question:

username_0: **Describe the bug**

A small change in 3.7.4 now has `purchaserInfo` and `offerings` returning from cache on DispatchQueue.main async instead of the main thread. I understand apps shouldn't depend on a main thread return here, but it'd be nice if it could when possible, and it seems like it can without disrupting current behavior.

1. Environment

1. Platform: iOS

2. SDK version: 3.7.4

3. OS version: 14.1

4. Xcode version: 12.1

5. How widespread is the issue. Percentage of devices affected. 100%

3. Steps to reproduce, with a description of expected vs. actual behavior

Call `Purchases.shared.purchaserInfo` when a cached value is present, and now it returns just a bit slower on async DispatchQueue.main

4. **Other information** (e.g. stacktraces, related issues, suggestions how to fix, links for us to have context, eg. stackoverflow, etc.)

Relevant change was in this commit: https://github.com/RevenueCat/purchases-ios/commit/38d16aa9d59b5204f22f7cbdac627d3699b3f623

Specifically, `purchaserInfoWithCompletionBlock` and `offeringsWithCompletionBlock` now asynchronously request application background state before returning a cached value. Previously, this application state was requested after cache return. Since application state seems to only be necessary when updating a stale cache or no cache, proposed fix would be to shift the background state request after cache return and to the else-block when no cache is present.

Answers:

username_1: I'm not sure I understand the issue here: `DispatchQueue.main` is a queue that's directly associated with the main thread by the OS (as documented here https://developer.apple.com/documentation/dispatch/dispatchqueue/1781006-main), so the return will still be on the main thread.

As for the slowness, yes, it does unfortunately return slightly slower than before. This is because of some new app state checks, that unfortunately need to run on the main thread (because they're done on `UIApplication`), so there's a bit more back and forth between a worker thread and the main thread.

Those checks are tied to the app state, because the state is also used to determine whether a refresh is needed, so there's no clean way of disassociating them.

username_0: Sorry I probably didn't make it too clear - yup I was primarily highlighting how previously it was returned synchronously on the main thread, you are right that right now it's dispatched asynchronously to the main thread as well. I understand you have the app state checks in place, but it doesn't seem the app state check is strictly necessary for the cache return (it seems like it is for a cache refresh).

For example, [L425](https://github.com/RevenueCat/purchases-ios/blob/4190aceade7ea7aaaecf6dd3cb54bd57f43f2c5e/Purchases/Public/RCPurchases.m#L425) for `purchaserInfoWithCompletionBlock` seems like it could be after L429 instead of where it is right now (and I guess before L436 since it is necessary there too).

Anyway this is a small change that we observed in our UI animations - not critical, but assuming I'm reading this code correctly, could be an easy perf improvement with no change to the existing functionality.

username_1: @username_0 great catch, you're correct and we could improve those. I'll add it to the backlog. Thanks for pointing this out!

username_1: addressed in https://github.com/RevenueCat/purchases-ios/pull/433

Status: Issue closed

|

digital-asset/daml | 1053690557 | Title: Check types on exercise/fetch by template for interface choices

Question:

username_0: Both in Daml and over the ledger API you can exercise a choice from an interface by template id, e.g.,

```

exercise (cid : ContractId InterfaceImpl) InterfaceChoice

```

However, currently we only check that `cid` corresponds to a template that implements the interface, we do not check that it is `InterfaceImpl`. So someone can pass a cid of a different template that implements the interface and things will succeed.

This is at best confusing and at worst a potential attack vector, so we need to fix this.

This is slightly tricky since we desugar such a choice as

```

instance IsToken t => HasExercise t GetRich (ContractId Token) where

exercise cid = GHC.Types.primitive @"UExerciseInterface" (toTokenContractId cid)

```

This means that we generate the same call regardless of whether we call by interface or by template.

To fix this, we should be able to change the primitives for exerciseInterface and fetchInterface to accept an optional typerep and validate against that.

Another option would be to accept a boolean predicate on the template payload that we evaluate between the fetch & the exercise. However, if we want to make failures of that catchable we have to record a fetch in the case of failure to track transaction inputs which is somewhat tricky.

So for now, the typerep solution seems like the better option.
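The typerep idea can be modelled in a few lines. The sketch below is a toy model in Python, not Daml or the engine's actual primitive: the `LEDGER` table, the `exercise_interface` function and the `expected_template` parameter are all hypothetical stand-ins for "an optional typerep passed to the primitive".

```python
class WronglyTypedContract(Exception):
    """Raised when a contract id does not have the template the caller expects."""


# Hypothetical ledger: contract id -> (template name, interfaces it implements).
LEDGER = {
    "cid-1": ("InterfaceImpl", {"Token"}),
    "cid-2": ("OtherImpl", {"Token"}),
}


def exercise_interface(cid, interface, expected_template=None):
    """Exercise an interface choice, optionally validating the concrete template."""
    template, interfaces = LEDGER[cid]
    # Existing check: the template must implement the interface.
    if interface not in interfaces:
        raise WronglyTypedContract(f"{template} does not implement {interface}")
    # Proposed check: when exercising by template id, the caller also passes
    # the expected template (the "typerep") and it must match.
    if expected_template is not None and template != expected_template:
        raise WronglyTypedContract(f"expected {expected_template}, got {template}")
    return f"exercised {interface} choice on {template}"


# By interface: any implementing template is accepted.
print(exercise_interface("cid-2", "Token"))

# By template: a cid of a different implementing template is now rejected.
try:
    exercise_interface("cid-2", "Token", expected_template="InterfaceImpl")
except WronglyTypedContract as err:
    print("rejected:", err)
```

Keeping the extra argument optional means the same primitive still serves both call paths; only the by-template path supplies a typerep.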

Answers:

username_1: This is done! Thanks to @akrmn for merging the final PR into ghc :)

Status: Issue closed

username_1: There's even a test: https://github.com/digital-asset/daml/blob/main/compiler/damlc/tests/daml-test-files/InterfaceTypeRepCheck.daml |

File-New-Project/EarTrumpet | 155603967 | Title: VMware Workstation machines only have a single entry

Question:

username_0: EarTrumpet can control only one of the running VMware Workstation virtual machines.

Answers:

username_1: Do multiple machines show up in the classic sound mixer? VMware might be piping all audio through a single broker process

username_0: It did show up as multiple entries on Windows 7. I don't control the volume of all machines either, just one of them.

username_1: Can you repro this on Windows 10 with the classic sound mixer?

username_0: No, they are separate entries there.

Status: Issue closed

|

kyrylo/airbrake-rust | 230488411 | Title: Notification retry feature idea

Question:

username_0: Hi @kyrylo

I'm using Airbrake-Rust in an environment with really bad network connection, so I'm in a desperate need of a new feature to reliably send error notifications. I'm thinking about adding a "retry" feature with some configurable timeouts and number of maximum retries.

Before implementing something this big I would like to get your feedback on the idea and discuss the implementation details.

A: We will definitely need better error handling in the lib to determine if a notice was sent successfully or not. I've tried to add a simple boilerplate Error type with the error_chain! macro, and replace every unwrap() in SyncSender::send() with a try!(). It works fine, except for the serde_json::from_str call, which has some extra requirements which I couldn't handle, but I will look into it a bit more.

Do you mind changing the notify_sync function to return a Result<Value> instead of a Value? I hope it's not a problem; after all, if a developer wants to block the app while the notification is being sent, they would probably want to know whether it was successful (and also wouldn't want the program to die on an unexpected response, of course).

B: Where should I put the retry logic? I think AsyncSender could have the same retry functionality, because it basically includes a SyncSender in a separate thread, so that thread can also do the retry thing, even if we discard the final results after a given number of failures. So maybe we can have a simple wrapper type for SyncSender which contains the retry logic and the related params. Then the AsyncSender worker should decide if a simple SyncSender or a new Retry Sender should be created based on the config params. In this case the Notifier would have 3 senders, Sync, RetrySync and Async.

The other option is to not separate this, and make the SyncSender smarter by adding the retry logic to the send() function. I mean it gets the whole config in the constructor, so it can easily decide itself if any retries are needed. This way the whole structure can be the same, and we only have to add some new optional config params.

Both methods are backward compatible with the API.
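
The second option above (retry logic inside `send()` itself) can be sketched roughly as follows. This is only a language-neutral illustration written in Python, not the Airbrake-Rust implementation: the names `send_with_retry`, `max_retries` and `timeout_s` are hypothetical, and the exponential backoff between attempts is an assumption that the proposal does not specify.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def send_with_retry(
    send: Callable[[], T],
    max_retries: int = 3,
    timeout_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call send() once, then retry up to max_retries times on failure.

    All names here are hypothetical. The wait doubles after every failed
    attempt (an assumed policy). With max_retries=0 this behaves exactly
    like a plain send(), which keeps the existing behaviour as the default.
    """
    attempt = 0
    while True:
        try:
            return send()
        except Exception:
            if attempt >= max_retries:
                # Out of retries: surface the last error to the caller.
                raise
            sleep(timeout_s * (2 ** attempt))
            attempt += 1
```

Because the retry parameters would come from the config that the sender already receives, an async sender wrapping such a function would not need to know that retries exist.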

Answers:

username_0: I've managed to get the error_chain feature working, so you can check out a working prototype in my repo on branch: retry_prototype

I went with the second option, making the send() function in SyncSender smarter to handle retries, so AsyncSender doesn't have to know anything about the new retry functionality, it just passes the config.

If it looks good on your side, I will split this change into separate, fully fledged pull requests, including Readme updates and everything else. I just wanted to get your opinion first. |

kyverno/kyverno | 1133805110 | Title: [VerifyImage] Support more certificate-extensions from cosign

Question:

username_0: **Is your feature request related to a problem? Please describe.**

Currently, when using the "verifyImages" check, it is possible to check the "subject" and "issuer" of the certificate. When the keyless cosign signature was created within GitHub Actions, the "subject" is typically the value from `job_workflow_ref`. As already described in sigstore/fulcio#305, this is insufficient when the sign operation is placed in a reusable GitHub workflow and is "only" called from a specific repository. The PR sigstore/fulcio#306 added more extracted claims (https://github.com/sigstore/fulcio/blob/main/docs/oid-info.md) to the certificate, which can be verified afterwards.

**Describe the solution you'd like**

With the "verifyImages" check from Kyverno it should be possible to verify additional claims from the cosign-certificate as described above. Maybe something like this would be possible:

```yaml
verifyImages:
- image: "ghcr.io/username_0/my-awesome-product:*"
  subject: "..."
  issuer: "https://token.actions.githubusercontent.com"
  additionalClaims:
    1.3.6.1.4.1.57264.1.4: release
    1.3.6.1.4.1.57264.1.5: username_0/my-awesome-product
  roots: |-
    ...
```

Maybe the internal extension-names should be replaced with more user-friendly names.

**Describe alternatives you've considered**

**Additional context**

Without this, it would not be possible to verify that the image is really created by the repo-owner, as an attacker could also use the public reusable-workflow and would create a valid signature-cert with the exact same issuer and subject.
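
As a rough illustration of the check being requested, the sketch below compares the extension values found in a certificate against the required claims. It is not Kyverno or cosign code: the function name `missing_claims` is hypothetical, and it assumes the certificate extensions have already been extracted into a plain mapping from OID string to value.

```python
from typing import Dict, Mapping


def missing_claims(
    extensions: Mapping[str, str], required: Mapping[str, str]
) -> Dict[str, str]:
    """Return the required claims that are absent from, or differ in, the certificate.

    `extensions` is assumed to map OID strings to already-decoded values.
    An empty result means every required claim matched.
    """
    return {
        oid: wanted
        for oid, wanted in required.items()
        if extensions.get(oid) != wanted
    }


# Values taken from the proposal above; the "attacker" certificate is made up.
required = {
    "1.3.6.1.4.1.57264.1.4": "release",
    "1.3.6.1.4.1.57264.1.5": "username_0/my-awesome-product",
}
attacker_cert = {
    "1.3.6.1.4.1.57264.1.4": "release",
    "1.3.6.1.4.1.57264.1.5": "attacker/fork",
}
mismatches = missing_claims(attacker_cert, required)
```

With identical issuer and subject, only the repository claim differs here, which is the case the subject and issuer checks alone cannot catch.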

Answers:

username_1: Thanks, @username_0 - for opening this issue.

I'm just wondering how can we extract these new extensions in kyverno, from the certs? For the subject and issuer we are using the cosign's `signature` pkg - https://github.com/kyverno/kyverno/blob/main/pkg/cosign/cosign.go#L366-L367

username_0: You are right, I missed the fact that this is where cosign's territory begins. 😆

I think we should cross-post the issue at cosign, so that the extraction logic there can be expanded.

@username_1 Should I do that?

username_1: Yes, that would be nice, thanks :)

username_0: @username_1 @username_2 I've updated my proposal above for the additionalClaims validation. If it's OK with you, I will try to start a PR in a few days.

username_1: Thanks, @username_0, for your help! Please go ahead.

username_2: Thanks @username_0! I saw Cosign 1.6.0 is out so would be great to update and get this in.

username_0: I've been working on this issue for the last few days and have had problems debugging my code locally (via VS Code).

1. I executed the `deploy-controller-debug.sh` against a kind-cluster with my local IP and Port 8000 as `--serverIP`.

2. I started the main.go from VS Code in debug-mode with the `--kubeconfig` and `--serverIP` arguments. (Kyverno logs are fine and the webhook is registered)

3. The webhook can not be accessed locally or from the Kubernetes-API-Server at the `serverIP` endpoint with e.g. `/mutate` path. With HTTP I always get 404, with HTTPS, the server seems to send a HTTP-Message anyway.

Is there any step missing to debug Kyverno locally?

username_3: @username_0 Since you already have a kind cluster running, I would suggest building the Kyverno image locally using `make docker-build-kyverno-local` and deploying it as a pod using the `config/install.md` operator yaml file.

username_0: @username_3 I've tried that too, but then I can't debug, right? The only way to get information out of my code would then be to insert log statements?

username_3: Right, then we have to check the pod logs to see the debug statements.

username_0: Thanks @realshuting and @username_3. I will give it a try.

Status: Issue closed

|

stanvanrooy/instauto | 704081138 | Title: API No longer logging in

Question:

username_0: I get the following error when trying to run the login code flow:

`Traceback (most recent call last):

File "insta.py", line 158, in <module>

main()

File "insta.py", line 48, in main

getsubnums()

File "insta.py", line 55, in getsubnums

client.login()

File "/home/user/anaconda3/lib/python3.8/site-packages/instauto/api/actions/authentication.py", line 64, in login

self.state.logged_in_account_data = LoggedInAccountData(**resp.json()['logged_in_user'])

KeyError: 'logged_in_user'

`

Answers:

username_0: And it seems I have discovered what 100+ requests in a second will do

Status: Issue closed

username_1: This happens sometimes when logging in from a saved file. Could you share the code you use to initiate the client?

username_0: It's all good! I had a while loop around the login flow, and it sent over 100 login requests to Instagram. It works fine after waiting 2 minutes. |

fluentmigrator/fluentmigrator | 560943603 | Title: Obsolete way to run migration on demand

Question:

username_0: Currently, FluentMigrator scans for migrations on startup when it is used in an ASP.NET Core project.

I found a way to run migrations on demand, for example by calling an action.

Right now I do that with RunnerContext, which is obsolete.

Is there any way to do that at other times, not only at startup when configuring services?

Answers:

username_1: @username_0 Thanks for your patience - was on vacation last week.

Artur, can you please post a code sample of what you're currently doing? My understanding is you want to remove all Obsolete compiler warnings from your solution. The best way, IMHO, for me to help you with that is to see a code example representative of what your solution is doing. The other option is to follow the example in FluentMigrator samples directory and build an "In-Process Runner".

username_0: @username_1 Yep, sure

```C#
internal virtual void MigrateToLatestDatabaseVersion(string connectionString)
{
    var assembly = Assembly.GetExecutingAssembly();
    var logAnnouncer = new TextWriterAnnouncer(s => _logger.Debug(s));
    var migrationContext = new RunnerContext(logAnnouncer)
    {
        Namespace = "Logic.Dal.Migrations"
    };
    var options = new MigrationOptions();
    var factory = new SqlServer2012ProcessorFactory();
    using (var processor = factory.Create(connectionString, logAnnouncer, options))
    {
        var runner = new MigrationRunner(assembly, migrationContext, processor);
        runner.MigrateUp();
    }
}
```

`TextWriterAnnouncer` is marked as `Obsolete`, and so are others such as `RunnerContext`, `SqlServer2012ProcessorFactory`, and `MigrationRunner` with the constructor used here.

username_1: Got it. I am releasing 3.2.2, hopefully tonight, which contains some better warnings than just Obsolete.

TextWriterAnnouncer still exists, but the general Announcer abstraction has been replaced with the ability to log anything you want, and each logger can thus have its own output stream (so you can log to a database, the Windows event log, a file, a cloud service like Datadog, whatever).

Please look at: https://github.com/fluentmigrator/fluentmigrator/blob/e82aafa20e6dbe3cefa221303fe23cf8bf59fffd/samples/FluentMigrator.Example.Migrator/Program.DependencyInjection.cs

In particular, https://github.com/fluentmigrator/fluentmigrator/blob/e82aafa20e6dbe3cefa221303fe23cf8bf59fffd/samples/FluentMigrator.Example.Migrator/Program.DependencyInjection.cs#L31-L32

username_1: @username_0 See this push just now: https://github.com/fluentmigrator/fluentmigrator/commit/69e1a267b7278d02f794d0a94d32054243f6f56c

username_0: Thank you very much, that was helpful 😃

Status: Issue closed

|

ant-design/pro-components | 1158270017 | Title: 🐛[BUG] ProFormList form items cannot be right-aligned based on label

Question:

username_0: Before asking, please read:

https://github.com/ryanhanwu/How-To-Ask-Questions-The-Smart-Way/blob/main/README-zh_CN.md

### 🐛 Bug description

Form items wrapped in ProFormList cannot have their labels right-aligned.

<img width="605" alt="image" src="https://user-images.githubusercontent.com/8169783/156552164-7356e88b-b1e4-4a9e-afde-6b6d816017d4.png">

<!--

Describe the bug in detail so that everyone can understand it

-->

### 📷 Steps to reproduce

https://codesandbox.io/s/jin-e-forked-knvchd?file=/App.tsx

https://codesandbox.io/s/biao-qian-yu-biao-dan-xiang-bu-ju-forked-juh3kt?file=/App.tsx:443-698

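### 🏞 Expected result

ProFormList should not affect the alignment of the form.

<!--

Describe the result you originally expected to see

-->

### 💻 Reproduction code

<!--

Provide reproducible code, a repository, or an online example

-->

### © Version information

- ProComponents version: [e.g. 4.0.0]

- umi version

- Browser environment

- Development environment [e.g. mac OS]

### 🚑 Other information

<!--

Other information, such as screenshots, can be pasted here

-->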

Answers:

username_1: This really can't be implemented. Wrap a Row inside ProFormList and implement it manually.

The ProFormList block has to be kept separate from the form.

Status: Issue closed

username_0: In the end I got it working by passing a layout configuration to every ProFormText and ProFormCheckBox. It's a bit odd, but it works.

https://codesandbox.io/s/jin-e-forked-xdk03o?file=/App.tsx |

MicrosoftDocs/azure-devops-docs | 599402292 | Title: Which role is needed to manage approvals / checks

Question:

username_0: There is no documentation on which role is needed for managing approvals and checks on the environment. I assume this should be administrator?

I also hope that, over time, we will see a new role that allows setting approvers and checks without granting the ability to set security permissions.

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 77d95db6-9983-7346-d0eb-4b7443e4e252

* Version Independent ID: 0a22cccc-318d-592f-d1ab-09ec01d88087

* Content: [Environment - Azure Pipelines](https://docs.microsoft.com/en-us/azure/devops/pipelines/process/environments?view=azure-devops#feedback)

* Content Source: [docs/pipelines/process/environments.md](https://github.com/MicrosoftDocs/azure-devops-docs/blob/master/docs/pipelines/process/environments.md)

* Product: **devops**

* Technology: **devops-cicd-process**

* GitHub Login: @username_6

* Microsoft Alias: **jukullam**

Answers:

username_1: Thanks for your question. Here are a couple of options you might consider:

- [Azure DevOps Support Bot](https://azuredevopsvirtualagent.azurewebsites.net/)

- [Azure DevOps on Stack Overflow](https://stackoverflow.com/questions/tagged/azure-devops)

Also, thanks for the suggestion! The product teams aren’t always watching this repository, so to make sure it gets in front of them for triage, please [submit your idea](https://developercommunity.visualstudio.com/content/idea/post.html?space=21) here.

Status: Issue closed

username_0: This is not a support request, this is feedback that your description of the roles is lacking. You do not describe which role on an environment is needed to manage approvals and checks.

username_2: Reopening for testing PRMerger priority label assignment

username_1: @username_0 -- thank you for clarifying.

@username_2 -- this issue is alive again; please don't close it.

username_2: #pri3

Status: Issue closed

username_3: @username_2 I think this is to remain open. Is that correct, @username_1?

username_3: Woops, page was stale when I last commented. Pls ignore.

username_4: This issue hasn't been updated in more than 180 days, so we've closed it. If you feel the issue is still relevant and needs fixed, please reopen it and we'll take another look. We appreciate your feedback and apologize for any inconvenience.

Status: Issue closed

username_0: Nevermind I guess..

username_5: @username_6 Can you take a look at this one?

username_6: @username_0 You can manage approvals and checks with the creator, administrator and user roles. The reader role cannot manage approvals and checks. Updating the docs now.

Status: Issue closed

|

ioBroker/ioBroker.iot | 506171827 | Title: [GHOME] Crashing after Thermostat setting Value / License Bug ?

Question:

username_0: Here is my log output:

`

iot.0 | 2019-10-12 12:51:17.556 | info | (12173) Terminated (NO_ERROR): Without reason

-- | -- | -- | --

iot.0 | 2019-10-12 12:51:17.555 | info | (12173) terminating

iot.0 | 2019-10-12 12:51:17.549 | error | at processImmediate [as _immediateCallback] (timers.js:745:5)

iot.0 | 2019-10-12 12:51:17.549 | error | at tryOnImmediate (timers.js:768:5)

iot.0 | 2019-10-12 12:51:17.549 | error | at runCallback (timers.js:810:20)

iot.0 | 2019-10-12 12:51:17.549 | error | at Immediate.setImmediate [as _onImmediate] (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2432:41)

iot.0 | 2019-10-12 12:51:17.549 | error | at GoogleHome.getStates (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2430:26)

iot.0 | 2019-10-12 12:51:17.549 | error | at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5306:24)

iot.0 | 2019-10-12 12:51:17.549 | error | (12173) TypeError: Cannot read property 'startsWith' of undefined

iot.0 | 2019-10-12 12:51:17.549 | error | (12173) uncaught exception: Cannot read property 'startsWith' of undefined

`

After setting the temperature of a thermostat, I got the feedback that this mode would not be supported by the device

here my settings:

After restarting the adapter, I got this:

`[GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}`

I have a valid .pro license, how could this be fixed?

Answers:

username_1: Which js-controller version?

username_1: If it is js-controller 2.0, this can happen if the id is not a string. I will prevent this error in js-controller, but the question remains: why is the id not a string?

username_0: js controller is 2.0.25

Which id string do you mean?

username_2: Can you reinstall from my github and restart

https://github.com/username_2/ioBroker.iot

Please upload your devices in the forum

https://forum.iobroker.net/topic/24061/google-home-assistant-iobroker-einrichten-nutzen/

username_0: I already installed the latest 1.1.9 and the issue is still present.

This is right after trying to set the temp:

`

| | |

-- | -- | -- | --

iot.0 | 2019-10-12 18:33:32.668 | info | (31639) Terminated (NO_ERROR): Without reason

iot.0 | 2019-10-12 18:33:32.668 | info | (31639) terminating

iot.0 | 2019-10-12 18:33:32.623 | error | (31639) TypeError: Cannot read property 'startsWith' of undefined at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5306:24) at GoogleHome.getStates

iot.0 | 2019-10-12 18:33:32.623 | error | (31639) uncaught exception: Cannot read property 'startsWith' of undefined

iot.0 | 2019-10-12 18:33:09.494 | warn | (31639) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 | 2019-10-12 18:33:09.494 | warn | (31639) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 | 2019-10-12 18:33:09.493 | error | (31639) [GHOME] Url Key error. Google Request and Response are working. But device states are not reported automatically: {"error":"Email not found or URL key not found"}

`

What do you mean by uploading my devices? Simply post a list?

username_1: Is this the whole log? Please also provide some log lines from before the error.

username_2: Please reinstall the latest version from GitHub.

Then put the instance in debug mode (Instanzen -> Expertmode -> Log Level -> debug).

It is described in post #1: you can press the export arrow (arrow down) in Objects to export a JSON of your object.

username_0: @username_1 This is a log with a setpoint triggered at 19:19:

`iot.0 2019-10-12 19:20:27.277 warn (19608) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 19:20:27.277 warn (19608) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 19:20:27.276 error (19608) [GHOME] Url Key error. Google Request and Response are working. But device states are not reported automatically: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 19:20:27.130 info (19608) Connection changed: connect

iot.0 2019-10-12 19:20:26.952 info (19608) hm-rpc.2.INT0000001.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.947 info (19608) hm-rpc.2.INT0000001.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.942 info (19608) hm-rpc.2.INT0000011.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.938 info (19608) hm-rpc.2.INT0000004.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.934 info (19608) hm-rpc.2.INT0000004.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.930 info (19608) hm-rpc.2.INT0000002.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.925 info (19608) hm-rpc.2.INT0000002.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.921 info (19608) hm-rpc.2.INT0000007.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.917 info (19608) hm-rpc.2.INT0000010.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.913 info (19608) hm-rpc.2.INT0000010.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.908 info (19608) hm-rpc.2.INT0000008.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.904 info (19608) hm-rpc.2.INT0000006.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.900 info (19608) hm-rpc.2.INT0000006.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.896 info (19608) hm-rpc.2.INT0000005.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.891 info (19608) hm-rpc.2.INT0000005.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.887 info (19608) hm-rpc.0.XXXXX is auto added with type socket.

iot.0 2019-10-12 19:20:26.883 info (19608) hm-rpc.0.XXXXX is auto added with type socket.

iot.0 2019-10-12 19:20:26.879 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.859 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.854 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.850 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.846 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.840 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.821 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.817 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.813 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.791 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.786 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.782 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.778 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.773 info (19608) hm-rpc.0.XXXXX is auto added with type dimmer.

iot.0 2019-10-12 19:20:26.769 info (19608) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 19:20:26.765 info (19608) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 19:20:26.761 info (19608) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 19:20:26.757 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.752 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.748 info (19608) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 19:20:26.565 info (19608) hm-rpc.2.INT0000001.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.558 info (19608) hm-rpc.2.INT0000001.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.534 info (19608) hm-rpc.2.INT0000011.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.511 info (19608) hm-rpc.2.INT0000004.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.504 info (19608) hm-rpc.2.INT0000004.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.481 info (19608) hm-rpc.2.INT0000002.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.473 info (19608) hm-rpc.2.INT0000002.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.450 info (19608) hm-rpc.2.INT0000007.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.423 info (19608) hm-rpc.2.INT0000010.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.415 info (19608) hm-rpc.2.INT0000010.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.392 info (19608) hm-rpc.2.INT0000008.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.369 info (19608) hm-rpc.2.INT0000006.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.362 info (19608) hm-rpc.2.INT0000006.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.333 info (19608) hm-rpc.2.INT0000005.2 is auto added with type info.

iot.0 2019-10-12 19:20:26.324 info (19608) hm-rpc.2.INT0000005.1 is auto added with type thermostat.

iot.0 2019-10-12 19:20:26.290 info (19608) hm-rpc.0.XXXXX is auto added with type socket.

iot.0 2019-10-12 19:20:26.275 info (19608) hm-rpc.0.XXXXX is auto added with type socket.

iot.0 2019-10-12 19:20:26.259 info (19608) hm-rpc.0.XXXXX is auto added with type light.

[Truncated]

iot.0 2019-10-12 18:45:38.383 info (4714) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 18:45:38.369 info (4714) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 18:45:38.351 info (4714) Cannot auto convert hm-rpc.0.XXXXX. Type motion is not available, yet. If you need the state please add him manually

iot.0 2019-10-12 18:45:38.324 info (4714) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 18:45:38.305 info (4714) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 18:45:38.283 info (4714) hm-rpc.0.XXXXX is auto added with type light.

iot.0 2019-10-12 18:45:37.452 info (4714) Connecting with a18wym7vjdl22g.iot.eu-west-1.amazonaws.com

iot.0 2019-10-12 18:45:37.425 info (4714) starting. Version 1.1.9 in /opt/iobroker/node_modules/iobroker.iot, node: v8.16.1

iot.0 2019-10-12 18:45:04.775 info (32050) Connection lost

iot.0 2019-10-12 18:45:04.775 info (32050) Connection changed: disconnect

iot.0 2019-10-12 18:45:04.772 info (32050) Terminated (NO_ERROR): Without reason

iot.0 2019-10-12 18:45:04.771 info (32050) terminating

iot.0 2019-10-12 18:45:04.726 error (32050) TypeError: Cannot read property 'startsWith' of undefined at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5306:24) at GoogleHome.getStates

iot.0 2019-10-12 18:45:04.726 error (32050) uncaught exception: Cannot read property 'startsWith' of undefined

iot.0 2019-10-12 18:44:19.838 debug (32050) system.adapter.admin.0: logging false

iot.0 2019-10-12 18:34:07.809 warn (32050) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 18:34:07.808 warn (32050) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 18:34:07.807 error (32050) [GHOME] Url Key error. Google Request and Response are working. But device states are not reported automatically: {"error":"Email not found or URL key not found"}

iot.0 2019-10-12 18:34:07.678 info (32050) Connection changed: connect

`

username_0: The adapter is already in debug mode. I just reinstalled it as you asked. I don't understand what you mean by the export thing. The linked post does not describe anything.

username_2: Thanks for the log, but I added additional debug messages in the latest GitHub version. Can you check that you really reinstalled from GitHub?

Press the down arrow and upload the JSON in the forum.

username_0: Maybe this will help :

`

iot.0 | 2019-10-12 19:47:47.971 | info | (31337) Connecting with a18wym7vjdl22g.iot.eu-west-1.amazonaws.com

-- | -- | -- | --

iot.0 | 2019-10-12 19:47:47.945 | info | (31337) starting. Version 1.1.9 in /opt/iobroker/node_modules/iobroker.iot, node: v8.16.1

host.ioBroker | 2019-10-12 19:47:45.308 | info | instance system.adapter.iot.0 started with pid 31337

chromecast.0 | 2019-10-12 19:47:28.459 | info | (17271) Reconnecting Küche to 192.168.178.33:42229

chromecast.0 | 2019-10-12 19:47:28.459 | info | (17271) Received keep alive for disconnected device Küche

host.ioBroker | 2019-10-12 19:47:15.287 | info | Restart adapter system.adapter.iot.0 because enabled

host.ioBroker | 2019-10-12 19:47:15.287 | info | instance system.adapter.iot.0 terminated with code 0 (NO_ERROR)

host.ioBroker | 2019-10-12 19:47:15.287 | error | Caught by controller[0]: at processImmediate [as _immediateCallback] (timers.js:745:5)

host.ioBroker | 2019-10-12 19:47:15.287 | error | Caught by controller[0]: at tryOnImmediate (timers.js:768:5)

host.ioBroker | 2019-10-12 19:47:15.286 | error | Caught by controller[0]: at runCallback (timers.js:810:20)

host.ioBroker | 2019-10-12 19:47:15.286 | error | Caught by controller[0]: at Immediate.setImmediate [as _onImmediate] (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2432:41)

host.ioBroker | 2019-10-12 19:47:15.286 | error | Caught by controller[0]: at GoogleHome.getStates (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2430:26)

host.ioBroker | 2019-10-12 19:47:15.286 | error | Caught by controller[0]: at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5306:24)

host.ioBroker | 2019-10-12 19:47:15.285 | error | Caught by controller[0]: TypeError: Cannot read property 'startsWith' of undefined

iot.0 | 2019-10-12 19:47:15.266 | info | (19608) Terminated (NO_ERROR): Without reason

iot.0 | 2019-10-12 19:47:15.265 | info | (19608) terminating

iot.0 | 2019-10-12 19:47:15.259 | error | at processImmediate [as _immediateCallback] (timers.js:745:5)

iot.0 | 2019-10-12 19:47:15.259 | error | at tryOnImmediate (timers.js:768:5)

iot.0 | 2019-10-12 19:47:15.259 | error | at runCallback (timers.js:810:20)

iot.0 | 2019-10-12 19:47:15.259 | error | at Immediate.setImmediate [as _onImmediate] (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2432:41)

iot.0 | 2019-10-12 19:47:15.259 | error | at GoogleHome.getStates (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2430:26)

iot.0 | 2019-10-12 19:47:15.259 | error | at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5306:24)

iot.0 | 2019-10-12 19:47:15.259 | error | (19608) TypeError: Cannot read property 'startsWith' of undefined

iot.0 | 2019-10-12 19:47:15.259 | error | (19608) uncaught exception: Cannot read property 'startsWith' of undefined

`

I'll send you the json as a PM in forum

username_2: The issue is that the GoogleHome lib is calling getForeignState with an undefined id.

We will fix this on both sides.

username_1: js-controller "id sanitizing" will be fixed in 2.0.26, probably Sunday night or Monday.

username_3: Hi, is this error fixed already?

I'm getting the same error (js-controller 2.1.1, iot v1.1.9) if I try to use a thermostat via Google Home.

username_4: Must be fixed with 1.1.11

username_0: I still have the issue that the license won't be recognised.

`[GHOME] Url Key error. Google Request and Response are working. But device states are not reported automatically: {"error":"Email not found or URL key not found"} `

The thermostats are currently not recognised and not controllable. I changed them to the virtual groups; maybe this is the issue?

I got many of these:

`

Missing name, type or treat for: hm-rpc.2.INT0000002.2.STATE. Not added to GoogleHome

`

username_2: Try to delete iot.0.certs

username_2: Remove INT0000002 manually and let it recreate from hm rpc Adapter

username_0: It seems to be an issue with my grouping: every device must have a room and a function assigned, whereas I had assigned only some functions that I wanted to filter by.

username_2: The channel you want to add needs room and func. Not only the state

username_0: The license error is still persistent:

`

iot.0 | 2020-01-04 09:28:14.982 | warn | (28867) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Invalid URL-KEY"}

iot.0 | 2020-01-04 09:28:14.981 | warn | (28867) [GHOME] Invalid URL key. Status update is disabled you can set states but not receive state updates: {"error":"Invalid URL-KEY"}

iot.0 | 2020-01-04 09:28:14.980 | error | (28867) [GHOME] Url Key error. Google Request and Response are working. But device states are not reported automatically: {"error":"Invalid URL-KEY"}

`

username_2: Reset credentials in iot options and delete iot.0.certs

username_0: `

st.ioBroker | 2020-01-04 10:10:53.530 | info | Restart adapter system.adapter.iot.0 because enabled

host.ioBroker | 2020-01-04 10:10:53.530 | info | instance system.adapter.iot.0 terminated with code 0 (NO_ERROR)

host.ioBroker | 2020-01-04 10:10:53.530 | error | Caught by controller[0]: at processImmediate (timers.js:658:5)

host.ioBroker | 2020-01-04 10:10:53.529 | error | Caught by controller[0]: at tryOnImmediate (timers.js:676:5)

host.ioBroker | 2020-01-04 10:10:53.529 | error | Caught by controller[0]: at runCallback (timers.js:705:18)

host.ioBroker | 2020-01-04 10:10:53.529 | error | Caught by controller[0]: at Immediate.setImmediate (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2063:41)

host.ioBroker | 2020-01-04 10:10:53.529 | error | Caught by controller[0]: at GoogleHome.getStates (/opt/iobroker/node_modules/iobroker.iot/lib/GoogleHome.js:2061:26)

host.ioBroker | 2020-01-04 10:10:53.529 | error | Caught by controller[0]: at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5561:24)

host.ioBroker | 2020-01-04 10:10:53.528 | error | Caught by controller[0]: TypeError: Cannot read property 'startsWith' of undefined

iot.0 | 2020-01-04 10:10:53.013 | info | (1146) Connection lost

iot.0 | 2020-01-04 10:10:53.012 | info | (1146) Connection changed: disconnect

iot.0 | 2020-01-04 10:10:53.009 | info | (1146) Terminated (NO_ERROR): Without reason

iot.0 | 2020-01-04 10:10:53.008 | info | (1146) terminating

iot.0 | 2020-01-04 10:10:52.963 | error | (1146) TypeError: Cannot read property 'startsWith' of undefined at Adapter.getForeignState (/opt/iobroker/node_modules/iobroker.js-controller/lib/adapter.js:5561:24) at GoogleHome.getStates (

iot.0 | 2020-01-04 10:10:52.962 | error | (1146) uncaught exception: Cannot read property 'startsWith' of undefined

iot.0 | 2020-01-04 10:10:45.498 | error | (1146) [GHOME] invalid protocol version: {"error":"Unsupported version"}

`

username_0: Okay, got it. Somehow it was related to my old subscription (3-year Pro plan); after purchasing the extra year of cloud remote access, it's working now. |

mrdoob/three.js | 101401235 | Title: MorphAnimMesh and MorphBlendMesh

Question:

username_0: I found two similar classes for playing morph animations. MorphBlendMesh can run multiple animations; MorphAnimMesh probably only one.

I haven't found any docs, nor any example that uses MorphAnimMesh (does it work?). They have different interfaces (update/updateAnimation, (setAnimationFPS + playAnimation)/(playAnimation))...

I'm confused. Is MorphAnimMesh deprecated?

Answers:

username_1: I have similar problems with MorphAnimMesh. My app, which worked with R72, no longer works with R73 and R74 (THREE.MorphAnimMesh is not a constructor???)

username_2: Is THREE.MorphAnimMesh deprecated??? I think we need to document that.

username_1: I strongly advise choosing between the following strategies:

1. You may still use THREE.MorphAnimMesh. Since it is no longer in the core, you have to include the suitable file located here: https://github.com/username_4/three.js/tree/dev/examples/js/THREE.MorphAnimMesh.js (please download the very latest THREE release: R79)

2. You throw THREE.MorphAnimMesh overboard and adopt the new approach to animation in THREE. Unfortunately, this requires a lot of rework in your app, and the new animation support in THREE is poorly illustrated. For a basic understanding, I advise looking at this app: morphtargets horse (browse the examples in R79)

3. (I personally chose this one): Drop THREE.MorphAnimMesh and use http://www.createjs.com/tweenjs instead. From experience, TWEEN.JS is easy to understand and can be integrated with THREE without difficulty.

Hope this helps

username_3: @username_0 can this issue be closed?

Status: Issue closed

username_5: I've run into this issue when porting my app to ES6 and using modules. I need to use `SceneLoader` as there is no replacement for this yet, even though it was removed from `three` entirely.

I've got `SceneLoader` ported to ES6 (simply doing `import * as THREE from 'three'` and then `export { SceneLoader }`) but it's complaining that `MorphAnimMesh`, which `SceneLoader` uses, is not present in `three` anymore.

The block of code in question is

```

else if ( objJSON.morph ) {

object = new THREE.MorphAnimMesh( geometry, material );

if ( objJSON.duration !== undefined ) {

object.duration = objJSON.duration;

}

if ( objJSON.time !== undefined ) {

object.time = objJSON.time;

}

if ( objJSON.mirroredLoop !== undefined ) {

object.mirroredLoop = objJSON.mirroredLoop;

}

if ( material.morphNormals ) {

geometry.computeMorphNormals();

}

```

I'm tempted to simply comment it out and see what happens but if I could get a solution that would be great.
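
Rather than commenting the branch out, one option might be a fallback that uses `MorphAnimMesh` when it is available and a plain `Mesh` otherwise. The sketch below is an assumption, not the loader's actual code: `createMorphObject` is a hypothetical helper, and `THREE` is passed in as a parameter so the example does not depend on any particular three.js release.

```javascript
// Hypothetical helper: build the object for a "morph" entry of the scene JSON.
// `THREE` is whatever namespace the loader uses; `MorphAnimMesh` may be missing from it.
function createMorphObject(THREE, geometry, material, objJSON) {
    // Prefer MorphAnimMesh if it has been included separately, otherwise fall back to Mesh.
    const Ctor = typeof THREE.MorphAnimMesh === 'function' ? THREE.MorphAnimMesh : THREE.Mesh;
    const object = new Ctor(geometry, material);

    // Copy the optional animation settings only when the JSON defines them.
    for (const key of ['duration', 'time', 'mirroredLoop']) {
        if (objJSON[key] !== undefined) {
            object[key] = objJSON[key];
        }
    }
    return object;
}
```

With the plain `Mesh` fallback the object still loads, but the copied `duration`, `time` and `mirroredLoop` values are just stored on it; nothing would animate the morph targets unless the app drives them itself.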

username_4: Oh? `ObjectLoader` is supposed to be the replacement of `SceneLoader`.

username_5: It doesn't work the same way though, I tried it during my upgrade and just couldn't get it to work the same way :( I've made a stackoverflow post about it somewhere. It's fine though, `SceneLoader` still works, just had to do these little changes for my ES6 migration. |

HandyFriends/HandyFriends | 747883550 | Title: Code log in & sign up screen

Question:

username_0: Time commitment: 1-2 hours

Required user story:

- User logs in (or signs up) to access menu of various services they can get. Use Google Firebase

Optional:

- Code the following functionality: App remembers the user for certain period of time (automatic logout only after certain period of time)<issue_closed>

Status: Issue closed |

wireservice/csvkit | 197514566 | Title: Test the tutorial

Question:

username_0: So much has changed

Answers:

username_1: * `csvformat -M "\r" examples/dummy.csv` prints `a,b,c\r1,2,3\r`. Did not expect literal `\r`.

* In `csvsql.rst` multiple commands error with `sqlalchemy.exc.ArgumentError: Column must be constructed with a non-blank name or assign a non-blank .name before adding to a Table.`. Some others error with `Row 0 has 4 values, but Table only has 3 columns.`

* `csvstat examples/realdata/FY09_EDU_Recipients_by_State.csv` has lots of agate warnings.

* Other `csvstat` commands don't have expected output. See #714

* `in2csv examples/test.xls` errors with `UnicodeDecodeError: 'ascii' codec can't decode byte 0xce in position 9: ordinal not in range(128)`

* `curl https://api.github.com/repos/wireservice/csvkit/issues\?state\=open | in2csv -f json -v` fails with `UnicodeDecodeError: 'ascii' codec can't decode byte 0xe2 in position 1249: ordinal not in range(128)`

* `curl "http://oakland.crimespotting.org/crime-data?format=json&type=robbery&count=10" | in2csv -f geojson` needs to be cut/replaced, since that API no longer returns a useful response.

username_1: Looked at the tutorial now. Besides #711, there's:

* `csvsql -i sqlite joined.csv` and `csvsql --db sqlite:///leso.db --insert joined.csv` and `csvsql --query "select county,item_name from joined where quantity > 5;" joined.csv` warn `DuplicateColumnWarning: Column name "fips" already exists in Table. Column will be renamed to "fips_2".`

username_0: Is that last issue you reported meant to be checked off? If so, what was the fix?

username_0: I can't replicate your csvsql issue. Can you confirm with latest agate?

username_0: Can not confirm `csvstat` issue either.

username_0: #714 I'm not going to fix for 1.0. That can be resolved in the next minor release.

username_0: Nevermind, scratch my comments from a moment ago. I've replicated your bugs.

username_0: Okay I've broken out the issues I can replicate into separate tickets. (Some for 1.0, some not.)

Status: Issue closed

username_1: * `curl "http://oakland.crimespotting.org/crime-data?format=json&type=robbery&count=10" | in2csv -f geojson` I just removed. I don't think we absolutely need a GeoJSON example. The API wasn't returning any features to transform.

* If you mean the `DuplicateColumnWarning` line, that occurred because I had two `fips` columns at some point while testing, but that was before you merged a csvjoin fix.

username_0: Got it, great! |

nuxodin/ie11CustomProperties | 658105116 | Title: !important not working

Question:

username_0: On version 4.1.0, I encountered the following behaviour:

My code looks like `color: var(--white) !important;`, where `--white` is defined as `--white: #ffffff`. The `!important` is not applied.

Answers:

username_1: Is this library being maintained?

username_0: Don't think so. No response whatsoever.

username_2: Sorry, I have no free resources at the moment.

username_3: @username_0 @username_1 - can you please test the proposed fix in https://github.com/username_2/ie11CustomProperties/pull/63/files

If you could post back here with any feedback on that it'd be appreciated! |

lukasroegner/homebridge-dyson-pure-cool | 764425620 | Title: API error with TP02

Question:

username_0: Getting these error messages:

[12/12/2020, 13:25:56] [DysonPureCoolPlatform] Error while signing in. Status Code: 429

[12/12/2020, 13:25:56] [DysonPureCoolPlatform] API could not be reached. Retry is disabled.

I've triple-checked the email/username/countrycode/serial.

Any idea what 429 represents?

Answers:

username_1: Same problem. The TP02 doesn't work with the plugin, but my DP04 works fine.

username_2: As stated several times in the issues:

- Make sure you have installed the latest version of the plugin

- Make sure the country code is correct

- Make sure your credentials are correct

- Check whether the name of the device in the Dyson app contains any special characters

If you provide more information (sanitized config), I can take a deeper look.
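
On the status code itself: in HTTP, 429 is the standard "Too Many Requests" response, which usually means the client is being rate limited. Whether the Dyson API uses it strictly that way is an assumption. The sketch below shows how a client could decide when to retry and how long to wait; `shouldRetry` and `backoffDelayMs` are hypothetical helpers, not part of this plugin.

```javascript
// Hypothetical helper: retry on rate limiting (429) and on server errors (5xx) only.
function shouldRetry(statusCode) {
    return statusCode === 429 || (statusCode >= 500 && statusCode < 600);
}

// Hypothetical helper: exponential backoff, doubling per attempt and capped at maxMs.
// `attempt` starts at 0 for the first retry.
function backoffDelayMs(attempt, baseMs = 1000, maxMs = 60000) {
    return Math.min(maxMs, baseMs * 2 ** attempt);
}
```

Repeated sign-in attempts with wrong credentials or a wrong device name can themselves trigger rate limiting, so waiting a while before trying again is worth considering.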

username_1: It's OK now. The device name works fine with another Homebridge plugin, but with this one I had to remove the `DYSON-` before and the `-475` after the serial.

Status: Issue closed

username_3: Can you please show me a screenshot of how you set everything up?

I'm not able to get my TP02 working.

username_3: Looks like the plugin does not support special characters in the password.

I now use a password without any special characters, only letters and numbers.

Now the login works. |

dresden-elektronik/deconz-rest-plugin | 723508854 | Title: deCONZ 2.05.85 crashes with Segmentation fault

Question:

username_0: <!--