repo_name

stringlengths 4

136

| issue_id

stringlengths 5

10

| text

stringlengths 37

4.84M

|

|---|---|---|

spatie/laravel-medialibrary | 322532418 | Title: return default file, if there is none

Question:

username_0: Hi,

Is it possible to return a default file (an image for example), if the media collection is empty?

I have a model with following config:

```php

public function registerMediaCollections()

{

$this->addMediaCollection('image')

->singleFile()

->registerMediaConversions(function (Media $media) {

$this->addMediaConversion('resized')

->fit(Manipulations::FIT_FILL, 256, 256)

->background('white')

->nonQueued();

});

}

```

and I access the image with following code:

```php

$model->getFirstMediaUrl('image', 'resized');

```

Works great.

I was thinking about a way to return a default image when there is no image in the collection.

It's quite useful, for example when you use a seeder and don't mind to seed the images, or when you have a model where the image is required for your html template but you don't want to force the user to upload its own.

I know I can manually check and use a default one with:

```php

$model->getFirstMediaUrl('image', 'resized') ?? asset('path/to/img');

```

But I was thinking about a better way, like defining a default file in `registerMediaCollections` method.

Answers:

username_1: Sounds like a solid request! Feel free to send a PR that implements this.

The fallback should be some sort of `Media` null object, could be tricky to pull through but we'll see what it looks like when implemented.

username_0: I've got an idea about how this could be implemented:

We set the default file for each collection (or maybe for each conversion as well) like this:

```PHP

public function registerMediaCollections()

{

$this->addMediaCollection('image')

->singleFile()

->defaultFile('path/to/file/which/will/be/returned/as/it/is')

// This library won't touch the default file, it just get a file name

// as string and return it exactly as it is

// It doesn't care about file type, or if it exists or not

// ->defaultFile('images/user/default.png')

->registerMediaConversions(function (Media $media) {

$this->addMediaConversion('resized')

->fit(Manipulations::FIT_FILL, 256, 256)

->background('white')

->nonQueued();

});

}

```

Then there will be a new set of methods to retrieve file:

`->getMediaOrDefault()`

`->getFirstOrDefaultMedia()`

`->getFirstOrDefaultMediaUrl()`

So, we can use this methods in our frontend code to get the media (or the default one if there is none), and we can continue to use current methods (`getMedia`, `getFirstMedia`, `getFirstMediaUrl`) in our admin panel because we don't need the default file in there.

I know it's not complete and can get better, just wanted to start.

username_1: Maybe we should stick with URL's and paths instead of actual default media.

```php

$this

->addMediaCollection('image')

->defaultUrl('/url/to/image.jpg')

->defaultPath('/path/to/image.jpg');

```

I don't know, we could return a dummy `Media` object but that sounds complicated. Pinging @username_2

I'd rather not add to the public API for this, if you're setting a default, it should be used in the existing methods.

username_2: I'd also steer away from dummy media, that could become complicated real fast.

What @username_1 suggests I his code example seem pretty good. I'd slightly rename the methods

```php

$this

->addMediaCollection('image')

->withFallbackUrl('/url/to/image.jpg')

->withFallbackPath('/path/to/image.jpg');

```

username_0: Sounds cool.

There is only one more thing that should be considered.

There should be a way to tell whenever you want the fallback to be returned if there is no media. It shouldn't be the default behavior and even if it is, there should be a way to change it, something like:

```

$model->getMediaWithFallback()

$model->getFirstMediaWithFallback()

$model->getFirstMediaUrlWithFallback()

```

username_2: I don't want to add new methods on the model for now. Users can easily do that in their own app.

Let's start with adding `withFallbackUrl` and `withFallbackPath`.

username_0: OK, looking forward to it.

username_0: For anyone looking here for a [temporary] solution, add this method to your model (or use it as a trait):

```PHP

public function getFirstOrDefaultMediaUrl(string $collectionName = 'default', string $conversionName = ''): string

{

$url = $this->getFirstMediaUrl($collectionName, $conversionName);

return $url ? $url : $this::$defaultImage ?? '';

}

```

And define a static property inside your model (You can skip this if you don't mind have a default image):

```PHP

protected static $defaultImage = '/images/default/event.png';

```

And access the first media url, or the default one as simple as:

```PHP

$model->getFirstOrDefaultMediaUrl('collection');

```

username_2: Closing this issue for now, we'll continue the conversation in the PR you'll potentially submit.

Status: Issue closed

|

bokeh/bokeh | 109697090 | Title: Feature request: Constrain zoom out to fixed range/domain

Question:

username_0: On Twitter @username_2 asked about being able to constrain the zoom tool to within a fixed range/domain on a plot. It sounds like a reasonable feature to me.

Answers:

username_1: I was just looking at #1450 which asks for some similar improvements.

username_1: Are these constraints a feature on a the WheelZoomTool or on the axis?

username_0: I'd imagine it'd be on the zoom tool where the python spelling would be like:

```python

from bokeh.models import WheelZoomTool

wheelzoom = WheelZoomTool(x_range=(0,100), y_range(0,100))

### or

wheelzoom = WheelZoomTool(x_min=0, x_max=100, y_min=0, y_max=100)

```

Then on the BokehJS side, you just kill the zoom event if you're at the limit of the zoom range.

username_2: Folks! really glad to see this being discussed. Wonder if in the absence of constraints on the zoom the a fraction of the bounding box extent could be used to infer max/min zoom.

username_3: +1 this would be pretty useful. Too easy to zoom/scroll away from the plot entirely, or zoom in crazy far. Thanks for thinking about it.

username_0: A PR was recently merged to the BoxZoomTool where the zoom was killed if the zoom box was smaller than 5 pixels in height/width - assuming the user didn't intend the zoom and tried to "close" the box. I think it is a fairly opinionated feature, but feels very natural in terms of UX.

I'm becoming convinced that @username_2 's recommendation of adding zoom constraints based on the data ranges (perhaps a 1x data range buffer around the default range) would be a beneficial feature.

I can't immediately think of a use case where zooming way beyond the plot data is valuable to the user.

To make this optional, we could add a zoom/pan_limit bool flag to the plotObject to make this optional.

It is kind of a big change. @username_5 @username_4 Do either you of have feedback on adding a scroll constraint in terms of UX and cases where it'd be a problem?

also ref issue #2427, #2565,

username_1: Would this proposal have any effect on the limits of the PanZoomTool? If this does turn out to be a big change, is this the place to introduce aspect ratio on the zooming too?

username_4: I'm glad there is discussion on this we should implement some solution soon. Just so that it is on the radar: the complicating case is when there are multiple ranges.

>

username_2: @username_4 Also really glad to see this being discussed! What about just using a factor of the maximum range in both x and y?

username_5: For me, if you're data is updating based on your ranges e.g. in a map or

in any kind of remote data source, then you definitely want to zoom

outside the current plot data.

Having said that having optional/configurable constraints would be super

helpful.

To my mind its particularly helpful when you are zooming out beyond plot

data that you can't see yet (because it needs to load from a server) as

it constrains that action appropriately when the user has no other

option for feedback.

username_6: +1 on the ability to limit zoom. Accidentally zooming way out can be disorienting to the user.

username_5: I'm currently working on this.

username_3: Thanks Sarah! :) That's great to hear. :)

Status: Issue closed

username_2: This is excellent! |

facebook/infer | 267314800 | Title: infer-capture doesn't like compile_commands.json that uses "arguments" key

Question:

username_0: Some tools, notably https://github.com/rizsotto/scan-build (a Python reimplementation of Clang's `scan-build` command) produces JSON files like the example below that includes `arguments`.

`infer capture` fails on such input files because `parse_json` doesn't know about `arguments`: https://github.com/facebook/infer/blob/3df1e52928b966582445f9a51e32bf22543111d1/infer/src/integration/CompilationDatabase.ml#L57-L82

Sample run:

```

$ infer capture --debug --compilation-database ~/work/mono/compile_commands.json

Capturing using compilation database...

Uncaught exception:

(Failure "Json file doesn't have the expected format")

Raised at file "pervasives.ml", line 32, characters 17-33

Called from file "integration/CompilationDatabase.ml" (inlined), line 49, characters 4-59

Called from file "integration/CompilationDatabase.ml", line 76, characters 20-40

Called from file "list.ml", line 77, characters 12-15

Called from file "list.ml", line 77, characters 12-15

Called from file "src/core_list0.ml" (inlined), line 405, characters 16-30

Called from file "integration/CompilationDatabase.ml", line 87, characters 2-50

Called from file "backend/infer.ml", line 234, characters 29-73

Called from file "backend/infer.ml", line 510, characters 2-21

Called from file "backend/infer.ml", line 566, characters 32-45

```

This is using a version of `infer` from Homebrew:

```

$ infer --version

Infer version v0.12.1

Copyright 2009 - present Facebook. All Rights Reserved.

```

Example `compile_commands.json`:

```json

[

{

"arguments": [

"cc",

"-c",

"-DHAVE_CONFIG_H",

"-I.",

"-I../..",

"-I../..",

"-I../../mono",

"-I../../libgc/include",

"-I../../mono/eglib",

"-I../../mono/eglib",

"-fvisibility=hidden",

"-D_THREAD_SAFE",

"-DGC_MACOSX_THREADS",

"-DUSE_MMAP",

"-DUSE_MUNMAP",

"-g",

"-Wall",

"-Wunused",

"-Wmissing-prototypes",

"-Wmissing-declarations",

"-Wstrict-prototypes",

[Truncated]

"-Wno-attributes",

"-Wno-format-zero-length",

"-Qunused-arguments",

"-Wno-unused-function",

"-Wno-tautological-compare",

"-Wno-parentheses-equality",

"-Wno-self-assign",

"-Wno-return-stack-address",

"-Wno-constant-logical-operand",

"-Wno-zero-length-array",

"-Werror-implicit-function-declaration",

"-o",

"libmonoruntimesgen_la-opcodes.o",

"opcodes.c"

],

"directory": "/Users/user/mono/mono/metadata",

"file": "opcodes.c"

}

]

```

Answers:

username_1: Thanks for the report. It would make sense to support `"arguments"` too. |

AtomLinter/linter-php | 119082021 | Title: [Feature Request] Support Laravel Blade

Question:

username_0: Is it feasible to include Laravel Blade support in the php linter or would that be better off in a own project?

Answers:

username_1: Are Laravel Blade templates valid for _plain_ PHP? This linter simply runs `php -l` on the files...

username_0: Nope but you can use vanilla php inside .blade files

Status: Issue closed

username_1: That would be better off for its own project then as you would have to implement (or use) some parser to split out the PHP code from the rest of the file before passing it on to `php -l`.

Feel free to clone this and plug in whatever you need to get a working version for what you are intending, and if you have any problems as in #linter on Atom's Slack instance and we will do what we can to help. |

noiseconformist/noisethoughts | 350243951 | Title: wires might be an issue!

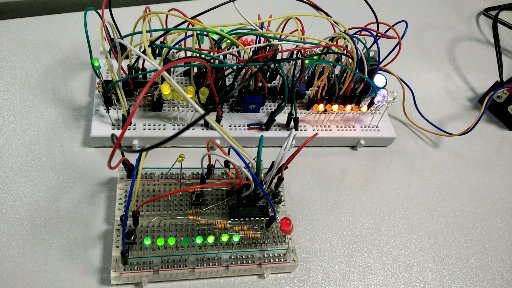

Question:

username_0: Right - this looks like a rat's nest!

Clean that up PLS!

Status: Issue closed

Answers:

username_1: ok, we leave that for now!

username_0: will this be re-opened then?

username_1: Right - this looks like a rat's nest!

xxx

Clean that up PLS!

username_1: yes - now you can test!

username_0: testing tests!

username_0: ok, check this out! Weird, huh?

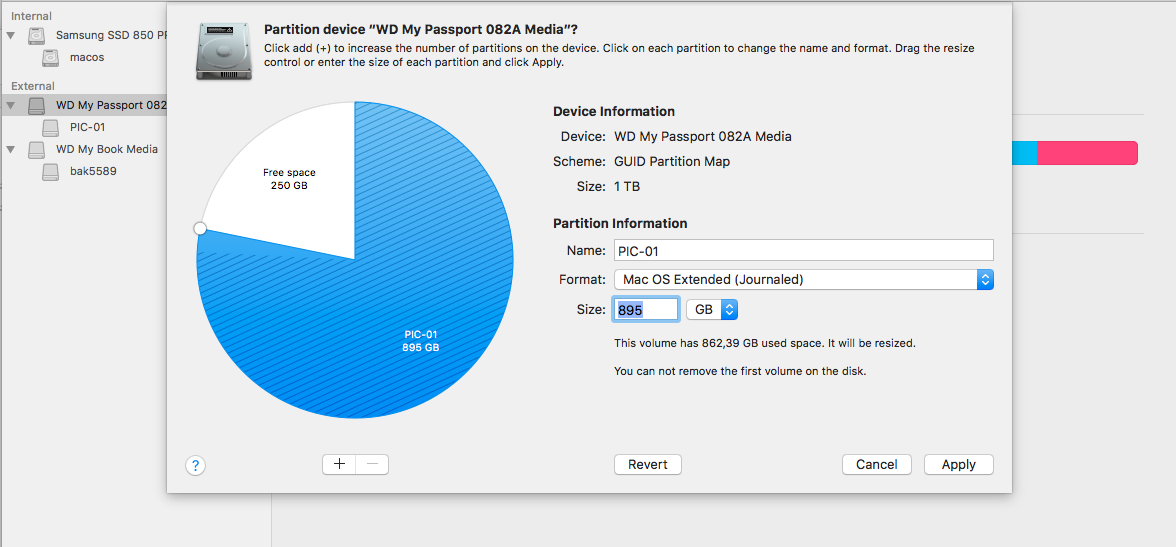

username_1: so, 895M + 250M = 1T ?? really?

username_0: looks like that ... |

jdillard/sphinx-sitemap | 333397039 | Title: Only references pages that are new or have changed since the last build

Question:

username_0: **Issue**

The builder currently doesn't access to the [saved environment](http://www.sphinx-doc.org/en/master/glossary.html#term-environment), so it can only see pages that are new or have changed since the last build, possibly leading to an incomplete sitemap.

**Work around**

To get around this you can manually clean the build directory or use the [-E flag](http://www.sphinx-doc.org/en/master/man/sphinx-build.html) to not use the saved environment and rebuild completely as part of your deployment process.

**Possible fix**

The saved environment is pickled after the parsing stage, so accessing it may make it possible to always produce a full sitemap. |

ubports/ubuntu-touch | 454594158 | Title: Blank OSK on the lock screen

Question:

username_0: - Device: BQ E4.5 (krillin)

- Channel: edge

- Build: Ubuntu 16.04 (2019-06-11)

### Steps to reproduce

Boot the device, go to the lock screen

### Expected behavior

OSK shows and you can enter the PIN / Password to unlock the device

### Actual behavior

OSK is blank, so you can't type anything

### Logfiles and additional information

- maliit-server.log https://paste.ubuntu.com/p/zksFnHF34k/

- unity8.log https://paste.ubuntu.com/p/PhCbJJCFG4/

- syslog is full of this kind of messages https://paste.ubuntu.com/p/J5H2jbtngv/ (I don't know if that's relevant but just in case)

- I can unlock the screen plugging an external keyboard

Answers:

username_1: I can confirm having the same issue with today's update on Meizu MX4 (arale).

Status: Issue closed

username_2: I've updated the changelog of keyboard-component in `xenial_-_edge` and the images are rebuilding now. This should be fixed by upgrading after that is finished. https://ci.ubports.com/job/xenial-hybris-edge-rootfs-armhf/316/ |

facebook/fresco | 310711120 | Title: I want to play GIF once and manually in my RN application

Question:

username_0: ### Description

I add a GIF to my RN application as described here(https://facebook.github.io/react-native/docs/image.html#gif-and-webp-support-on-android)

I want to click on the GIF cover - the 1st image of the GIF - and than it should play only once.

once it complete the image should be the last image of the GIF.

Actual Behavior

- on Android & iOS once the screen is rendered the GIF plays, I can't control it.

- play once - on iOS the gif is played in a loop without stopping

### Reproduction

Add gif as describe in here(https://facebook.github.io/react-native/docs/image.html#gif-and-webp-support-on-android)

### Solution

### Additional Information

* Fresco version: com.facebook.fresco:animated-base-support:1.3.0

* Fresco version: com.facebook.fresco:animated-gif:1.3.0

* OS 1: Windows 8.1

* OS 2: iOS - macOS High Sierra 10.13.3

* Node: 8.9.3

* npm: 5.6.0

* react: 16.2.0

* react-native: 0.52.0

Answers:

username_1: This is something that needs to be fixed / implemented on the React Native side -- please report this issue there.

Status: Issue closed

|

everypolitician/everypolitician | 145412562 | Title: Investigate why Czechia only has 19 Groups mapped to Wikidata

Question:

username_0: `bin/wikidata_info.rb` is reporting 19 Groups matched:

But we have 57 rows in the mapping file:

```wc -l sources/manual/group_wikidata.csv

58 sources/manual/group_wikidata.csv

```

Answers:

username_0: Closed by https://github.com/everypolitician/everypolitician-data/pull/11835

Status: Issue closed

|

Drakma/VacantSky | 197501895 | Title: Unable to get Chorus Fruit

Question:

username_0: I killed the Ender Dragon a couple of times now and went through the portals to the Outer Islands where normally you can find Chorus Fruit plants, but so far I haven't found any. Is Chorus fruit supposed to spawn on the tiny island you end up on after going through the portal with the ender pearl?

I also visited a bunch of End Cities and Ships and didn't find any.

Maybe I'm missing something where to find them? Or are they nowhere to be found? |

stfc/PSyclone | 813227014 | Title: Add field access details for gokernel based on stencil/access mode declaration

Question:

username_0: ATM if a field is accessed in a gocean kernel, the variable usage analysis adds the variable with index ``[1]`` (which was done to mark that this is an array access).

When implementing better testing for loop fusion, we need additional details about the access of fields in a kernel, which we can achieve only based on the meta-data (consistency of meta-data and code is a different issue). The suggestion is to add access as follows:

- if a field ``A`` is written in a kernel, add a write-access to ``A(i,j)``. This is the basic assumption for a gokernel that there are no 'stencil writes' to a field.

- if a field ``B`` is read in a kernel, add several read access, based on the stencil information. E.g. assume the stencil is ``010,112,003``, we would then add (assuming that the first line - 010 - represents ``j-1``, if it should be ``j+1``, swap all plusses and minuses :grin:):

- ``B(i,j-1)``

- ``B(i-1,j)``

- ``B(i,j)``

- ``B(i+1,j)`` and ``B(i+2,j)``

- ``B(i+1,j+1)``, ``B(i+2,j+2)`` and ``B(i+3,j+3)``

These array accesses would represent the stencil information.

Once we have the individual accesses, we can use the same code for dependency analysis in NEMO and gocean.

@username_2, @username_1, @sergisiso - feedback please :) (anyone else as well, but I think you three are the people involved in gocean).

Answers:

username_1: Hi @username_0. Yes that logic looks right to me. I think the top row is j+1 and the bottom j-1 as we treat it as a graph with the centre being 0,0. At least, that is how I read the documentation. So please swap the j +'s and -'s as you suspected, unless @username_2 and @sergisiso say I wrong. :-)

username_2: I think @username_1 is right about the indexing and I think @username_0's suggestion as to how to interpret the meta-data to get read/write information is good.

username_0: Thanks, changing from question to improvement 👍

username_2: #1133 is merged. Can this be closed now @username_0?

username_0: Thanks a lot, @username_2

Status: Issue closed

|

MicrosoftDocs/visualstudio-docs | 849769026 | Title: Additional Step To Enable Custom Nesting

Question:

username_0: To make custom nesting work in non web projects I had to add the following to the csproj:

```

<ItemGroup>

<ProjectCapability Include="DynamicFileNesting" />

</ItemGroup>

```

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 2d25dd44-f173-dd83-3afc-d6608613d450

* Version Independent ID: e121cedc-5cbe-a399-e2a8-5d192aaea770

* Content: [File nesting rules for Solution Explorer - Visual Studio](https://docs.microsoft.com/en-us/visualstudio/ide/file-nesting-solution-explorer?view=vs-2019)

* Content Source: [docs/ide/file-nesting-solution-explorer.md](https://github.com/MicrosoftDocs/visualstudio-docs/blob/master/docs/ide/file-nesting-solution-explorer.md)

* Product: **visual-studio-windows**

* Technology: **vs-ide-general**

* GitHub Login: @username_1

* Microsoft Alias: **angelpe**

Answers:

username_1: How can I help?

username_2: I think what he asks is that should be mentioned in the Docs. Appropriate section that this could be added in the .NET SDK (w.r.t. `Microsoft.NET.Sdk`) pages |

domasmas/esf | 211992792 | Title: Improve esf.Website gulp build

Question:

username_0: - fix release file references in Index.cshtml

- move non-system.js js files to system.js configuration and reference them from the main module

- remove unnecesary dependency jquery

- make sure release build is independent from dev build

- exclude building thrird party libaries from dev build

- clean wwwroot folder on invoking MsBuild clean on Esf.Website<issue_closed>

Status: Issue closed |

bevyengine/bevy | 796957575 | Title: Use `bevy-glsl-to-spirv` only on the supported targets, default to `shaderc` otherwise.

Question:

username_0: The current configuration uses `bevy-glsl-to-spirv` by default, while selectively enabling `shaderc` for specific targets.

I think this is the wrong way around.

`bevy-glsl-to-spirv` provides precompiled binaries to help with builds on the officially supported targets. By design, it can only work for specifically-supported build targets.

`shaderc` has additional build-time requirements and complexity, but it supports a much wider range of compilation targets.

It doesn't make sense to me that `bevy-glsl-to-spirv` is the blanket default, and `shaderc` is on a special whitelist.

This results in compilation failures whenever anyone tries building bevy on a new target (`aarch64-unknown-linux-gnu`? `x86_64-unknown-linux-musl`? etc.), leading to issues like #898. I've seen many instances of similar reports; someone tries to compile bevy on their raspberry pi or whatever, only to hit the `bevy-glsl-to-spirv` error.

With `shaderc`, such platforms should be able to work (and would have likely worked out of the box for users attempting them for the first time).

Can we flip the configuration around? Make `bevy-glsl-to-spirv` conditionally-enabled on the platforms it supports, and default to `shaderc` otherwise?

This would keep builds exactly the same for any existing users of bevy, but it will help make bevy work on new targets more seamlessly.

Answers:

username_1: Ooh i think that is a very good call. Thats the best (short term) solution to the problem I've heard to-date. I'm sold.

username_0: @username_1 where can I find a list of the exact targets that are supported with `bevy-glsl-to-spirv`?

I will go do this and make a PR, just want to make sure I don't miss anything.

username_1: You can see them outlined here: https://github.com/username_1/glsl-to-spirv/blob/master/Cargo.toml

There is also some nuance after this pr: https://github.com/username_1/glsl-to-spirv/pull/7, which adds the option to build non-precompiled windows platforms from source (we will publish a new glsl-to-spirv version before the next release).

I _think_ for the given "from source" windows platforms in glsl-to-spirv we will want to continue using glsl-to-spirv. The dependencies are already included in the VS C++ Build Tools, which is much more natural than the Ninja + Python requirement for shaderc+windows.

@GrygrFlzr what are your thoughts?

username_0: Yes, if building `bevy-glsl-to-spirv` is supported, and it is simpler (less dependencies) than building `shaderc`, seems logical that it should be preferred on that platform.

username_0: BTW, while we are at it, should I make a feature flag to opt into `shaderc` even on platforms where `bevy-glsl-to-spirv` is supported (and normally used)? I don't see why we should limit users' options. Maybe they have reasons to prefer `shaderc`.

username_0: Also, what prevents the "building from source" functionality of `bevy-glsl-to-spirv` from being enabled for even more targets?

username_1: hmm yeah bevy-glsl-to-spirv only requires cmake and cc, which is preferable to Cmake + Git + Python + (on windows: Ninja). the only reason i can think of to use shaderc over bevy-glsl-to-spirv is the fact that it is well tested and proven to work on the platforms we currently use it on.

We just need to go through the process of validating bevy-glsl-to-spirv on iOS and Apple Silicon MacOS.

username_1: I vaguely remember some aspect of Cargo disallowing this functionality last time I looked in to it. Feel free to give it a whack though.

username_0: So, officially-supported platforms aside, if I understand you correctly, the only thing blocking support for unofficial platforms (like `aarch64-unknown-linux-gnu` or `x86_64-unknown-linux-musl`) for `bevy-glsl-to-spirv` with the new source-building configuration, is that it is not explicitly whitelisted / validated? I.e it is an artificially-imposed limitation?

If that is the case, then I'd want to reconsider this proposal of switching the default to `shaderc` in order to increase platform support (for not officially-supported targets). Should we not just lift the limitation from `bevy-glsl-to-spirv`, and let users use that?

username_1: Yup. Its a matter of validation / whitelisting. If we can prove bevy-glsl-to-spirv works on the relevant platforms then i think it makes sense to use that everywhere.

username_0: Well, my goal was to provide a "sane default" / fallback, so that people trying bevy on new platforms that haven't been tried, have a chance of it just working.

What's better for that? `shaderc` or `bevy-glsl-to-spirv`? That should be the default, and the more limited one should be on a whitelist.

username_1: We can't know which one to pick until we test the bevy-glsl-to-spirv changes on the relevant platforms.

If it works as expected, I think it's reasonable to assume it will work elsewhere. It would then be preferable to use glsl-to-spirv by default and opt in to shaderc, because then we can share the same code path across platforms.

However if it doesn't work then shaderc should probably be the default because we know it works well across many platforms

username_0: OK, now i understand you. :smile: I didn't understand you before, sorry.

I will try to compile `bevy-glsl-to-spirv` on some extra targets, whatever I have access to, to see if it works.

Those findings will inform the decision.

username_2: Temporary workaround is described here][https://discord.com/channels/691052431525675048/742884593551802431/812100952277516299](https://discord.com/channels/691052431525675048/742884593551802431/812100952277516299). |

EOL/tramea | 116116429 | Title: [8] Fix trusted image count file: the count needs to match the webpage.

Question:

username_0: Figure out where the count comes from on the web-page; re-write the script to use that same logic (probably re-written to handle counts in bulk/batches).

Answers:

username_1: read count from solr, branch -> https://github.com/EOL/eol/compare/trusted_images_count

Status: Issue closed

|

sile-typesetter/sile | 733794986 | Title: `luarocks install` on rockspec file does not work

Question:

username_0: When I try to install the required Lua packages as indicated in https://github.com/sile-typesetter/sile#from-source, it fails:

```

$ sudo luarocks install sile-dev-1.rockspec

Error: File not found: ...

```

Answers:

username_1: The file [does exist](https://github.com/sile-typesetter/sile/blob/master/sile-dev-1.rockspec) at the root of the repository. I suspect you tried running that command from somewhere else. You need to be `cd`'ed into the source directory (either your `git clone` or the extract of a source tarball) before running that command.

username_0: I am in the `.rockspec` file's directory. I suspect that the error message is rather related to this line of said file:

```

source = {

url = "..."

}

```

but having no knowledge of luarocks, I can't know for sure.

username_1: Sorry about that, the docs are what is at fault here. You're missing a key argument ;-)

```

$ sudo luarocks install --deps-only sile-dev-1.rockspec

```

username_0: It works! Thank you.

Status: Issue closed

|

zephyrproject-rtos/test_results | 1110159257 | Title:

tests-ci : filesystem: littlefs: custom test Timeout

Question:

username_0: **Describe the bug**

custom test is Timeout on v2.7.99-3293-g93b0ea978293 on mimxrt1060_evk

see logs for details

**To Reproduce**

1.

```

scripts/twister --device-testing --device-serial /dev/ttyACM0 -p mimxrt1060_evk --sub-test filesystem.littlefs

```

2. See error

**Expected behavior**

test pass

**Impact**

**Logs and console output**

```

*** Booting Zephyr OS build v2.7.99-3293-g93b0ea978293 ***

Running test suite littlefs_test

===================================================================

START - test_util_path_init_base

PASS - test_util_path_init_base in 0.1 seconds

===================================================================

START - test_util_path_init_overrun

PASS - test_util_path_init_overrun in 0.1 seconds

===================================================================

START - test_util_path_init_extended

PASS - test_util_path_init_extended in 0.1 seconds

===================================================================

START - test_util_path_extend

PASS - test_util_path_extend in 0.1 seconds

===================================================================

START - test_util_path_extend_up

PASS - test_util_path_extend_up in 0.0 seconds

===================================================================

START - test_util_path_extend_overrun

PASS - test_util_path_extend_overrun in 0.0 seconds

===================================================================

START - test_lfs_basic

clearing partition /sml

Erasing 65536 (0x10000) bytes

Wiped flash area 4 for /sml

mounting /sml

I: LittleFS version 2.4, disk version 2.0

I: FS at IS25WP064:0x630000 is 16 0x1000-byte blocks with 512 cycle

I: sizes: rd 16 ; pr 16 ; ca 64 ; la 32

E: WEST_TOPDIR/modules/fs/littlefs/lfs.c:1077: Corrupted dir pair at {0x0, 0x1}

W: can't mount (LFS -84); formatting

I: /sml mounted

checking clean statvfs of /sml

/sml: bsize 16 ; frsize 4096 ; blocks 16 ; bfree 14

creating and writing file

opening and verifying file

seek and tell in file

truncate in file

unlink hello

sync goodbye

[Truncated]

Erasing 983040 (0xf0000) bytes

Wiped flash area 5 for /med

I: LittleFS version 2.4, disk version 2.0

I: FS at IS25WP064:0x640000 is 240 0x1000-byte blocks with 512 cycle

I: sizes: rd 64 ; pr 64 ; ca 256 ; la 64

E: WEST_TOPDIR/modules/fs/littlefs/lfs.c:1077: Corrupted dir pair at {0x0, 0x1}

W: can't mount (LFS -84); formatting

I: /med mounted

/med: bsize 64 ; frsize 4096 ; blocks 240 ; bfree 238

I: /med unmounted

clearing partition /lrg

Erasing 3145728 (0x300000) bytes

```

**Environment (please complete the following information):**

- OS: (e.g. Linux )

- Toolchain (e.g Zephyr SDK)

- Commit SHA or Version used: v2.7.99-3293-g93b0ea978293

Answers:

username_0: Also fails on mimxrt1060_evk for v2.7.99-3293-g93b0ea978293

username_0: Also fails on mimxrt1170_evk_cm7 for v2.7.99-3293-g93b0ea978293

username_0: Also fails on lpcxpresso54114_m4 for v3.0.0-rc1

username_0: Also fails on lpcxpresso55s28 for v3.0.0-rc1

username_0: Also fails on mimxrt1015_evk for v3.0.0-rc1

username_0: Also fails on mimxrt1060_evk for v3.0.0-rc1

Status: Issue closed

|

Conchylicultor/DeepQA | 193467999 | Title: initialize_all_variables is deprecated.

Question:

username_0: # Description

Runing the current code against tensorflow 0.12.0-rc0.

```

initialize_all_variables (from tensorflow.python.ops.variables) is deprecated.

```

# Solution

```

I tensorflow/core/common_runtime/gpu/gpu_device.cc:916] 0: Y [26/579]

I tensorflow/core/common_runtime/gpu/gpu_device.cc:975] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GRID K520, pci bus id: 0000:00:03.0)

WARNING:tensorflow:From /home/ubuntu/git/chatbot_butterfly/chatbot/chatbot.py:173 in main.: initialize_all_variables (from tensorflow.python.ops.

variables) is deprecated and will be removed after 2017-03-02.

Instructions for updating:

Use `tf.global_variables_initializer` instead.

Training: 0%| | 3/4030 [00:00<22:14, 3.02it/s]I tensorflow/core/common_runtime/gpu/pool_allocator.cc:247] PoolAllocator: After 1768 get requests,

put_count=1460 evicted_count=1000 eviction_rate=0.684932 and unsatisfied allocation rate=0.79638

```

Answers:

username_1: Hello! See pull request here https://github.com/username_2/DeepQA/pull/26

Hope it helps.

username_0: Moment ago, I changed back to 0.11. As it turns out, **model.ckpt** is not generated with 0.12.

Check out #23. So, if we really want to use 0.12, we should better fix this problem first.

username_1: Looks like we need to migrate some stuff to tensorflow's 12 API.

But i don't have any warnings...

@username_0

username_2: initialize_all_variables is just a wrapper around global_variables_initializer: https://github.com/tensorflow/tensorflow/blob/20c3d37ecc9bef0e106002b9d01914efd548e66b/tensorflow/python/ops/variables.py#L1170

There have been change with `scalar_summary` > `summary.scalar` as well. As explain in #26, this change is too recent to change the program, but I'll certainly fix that in the future.

Status: Issue closed

username_2: It should be solved with #52

username_3: I had same problem before I've solved using:

try:

#code

except:

pass |

grxy/react-ad-block-detect | 489529322 | Title: no longer works

Question:

username_0: I am wondering if their is an update for this as I was not able to get it to work I tested in Google, Opera and IE

Answers:

username_1: @username_0 Do you mind providing specific versions of these browsers?

Also, it looks like the `adblockdetect` dependency doesn't have an update, so fixing this may require looking into an alternative detection lib or writing custom detection logic.

username_0: @username_1 does not trigger on Opera browsers (I am yet to test firefox)

username_2: Shit not working stop advertising it if you don't want to update the package

username_1: @username_0 @username_2 Unfortunately, this project is no longer at the top of my radar. You're welcome to send a PR to fix the issue if you'd like. |

OpenAPITools/openapi-generator | 603534514 | Title: [REQ] appDescription for Ruby?

Question:

username_0: ### Is your feature request related to a problem? Please describe.

I'd like the ability to have a customized section in my README, but still keep generated code on the README page.

## Describe the solution you'd like

I'd like to see `appDescription` get used, which AFAIK isn't used on the Ruby README files.

## Describe alternatives you've considered

Alternatives may be ignore the README and customizing it for my needs, but I'd lose out on the generated aspect by doing that.

## Additional context |

MicrosoftDocs/azure-docs | 681873482 | Title: Missing caveat that AAD cannot be enabled on an existing cluster

Question:

username_0: Hi all,

Documentation does not make it clear that you cannot add AAD integration to an existing cluster which was not created with AAD integration.

Is it possible to add this as a caveat/warning, as I am pretty sure that was present on the previous version we did call this out (to prevent any confusion)?

Thanks!

Adam

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: bc86c3af-f6c7-4c48-e157-54abbc5958ff

* Version Independent ID: 3611b478-6df6-f5cd-563d-2fff6cfec9ef

* Content: [Integrate Azure Active Directory with Azure Kubernetes Service (legacy) - Azure Kubernetes Service](https://docs.microsoft.com/en-us/azure/aks/azure-ad-integration-cli)

* Content Source: [articles/aks/azure-ad-integration-cli.md](https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/aks/azure-ad-integration-cli.md)

* Service: **container-service**

* GitHub Login: @username_1

* Microsoft Alias: **thomasge**

Answers:

username_1: thanks for your feedback. Yes, it should be called out in our limitation section. Just made a PR.

You may want to consider our new managed AAD integration experience. It allows you to enable AAD integration on existing RBAC enabled cluster. https://aka.ms/aks/managed-aad

username_1: going ahead closing this one. Feel free to keep commenting if you have further feedback. Doc change should go live latest in 24 hours.

#please-close

Status: Issue closed

username_3: Thanks @username_1 for the doc update. :)

username_0: Thank you @username_1 !

Should we add the same caveat here for managed AAD:

https://docs.microsoft.com/en-us/azure/aks/managed-aad

If so, shall I raise a new issue on that page instead?

username_1: @username_0

Enabling managed AAD Integration on existing cluster with RBAC enabled is supported. Do you have any issues doing it? |

Erriez/R421A08-rs485-8ch-relay-board | 1106789955 | Title: Is it possible to change the board baudrate?

Question:

username_0: Hi username_1 and thanks for you work

unfortunately this board is poorly documented, do you know if there is any way to change baudrate/parity for the board?

Answers:

username_1: Hi @username_0. Thanks for using my library. I could not change the baudrate. Maybe someone else knows this. |

robotframework/RIDE | 83843309 | Title: Navigation to variable definition

Question:

username_0: It should be possible to go to variable defintion from it's usage.

This is not trivial since variable may be defined from command line, in

variable file, in resource file, in same suite or as a user keyword argument

Answers:

username_0: Too abstract an issue. Waiting for a more concrete improvement idea.

Status: Issue closed

|

hotosm/tasking-manager | 584590908 | Title: Unfork iD

Question:

username_0: For the iD integration in Tasking Manager we've slightly [forked iD](https://github.com/hotosm/iD). Let's get things upstream, so we can ideally rely directly on the main iD version.

* [ ] Scope iD's CSS to its container (https://github.com/openstreetmap/iD/issues/7437) and respective changes in the Tasking Manager CSS.

Answers:

username_0: @username_1 is there anything else we would need to get upstream in order to rely on the standard iD directly?

username_1: @username_0 currently the https://github.com/openstreetmap/iD/ is not published as a package on npm, so we should suggest them to do it.

I'll do a PR with a small change to define the minimized file as the main file on the package.json.

username_2: I'm not sure why iD has never been published this way, but I don't see an immediate reason why it shouldn't be.

Let me know what else would improve the embedding experience!

Status: Issue closed

|

WormBase/wormbase-pipeline | 812241904 | Title: WS280 7: PolyA tail position warning

Question:

username_0: During the build, there is a warning about some polyA features. Are these issues which we need to do something about?

AF239999: low feature is past end of sequence

AF239999: polyA feature is past end of sequence

AF273835: polyA feature is past end of sequence

AF456360: polyA feature is past end of sequence

It is accurate that these should be flagged, and Stavros curated them, so there will hopefully not be any warnings next time run.<issue_closed>

Status: Issue closed |

parcel-bundler/parcel | 450103662 | Title: Remove svg's ref attribute at build

Question:

username_0: # 🐛 bug report

`parcel build`'s minifer erases `ref` attribute for vue.js of `<svg>`.

## 🎛 Configuration (.babelrc, package.json, cli command)

test.html:

```html

<!DOCTYPE html>

<html>

<body>

<p ref="p">p</p>

<svg ref="svg">svg</svg>

</body>

</html>

```

Run `parcel build test.html` with v1.12.3 and initial config.

## 🤔 Expected Behavior

dist/test.html:

```html

<!DOCTYPE html><html><body> <p ref="p">p</p> <svg ref="svg">svg</svg> </body></html>

```

## 😯 Current Behavior

dist/test.html:

```html

<!DOCTYPE html><html><body> <p ref="p">p</p> <svg>svg</svg> </body></html>

```

## 🌍 Your Environment

| Software | Version(s) |

| ---------------- | ---------- |

| Parcel | 1.12.3 |

| Node | v10.15.1 |

| npm | 6.7.0 |

| Operating System | macOS 10.14.4 |

Answers:

username_1: A `.htmlnanorc` file with

```

{

"minifySvg": false

}

```

should fix this.

Instead of completely disabling svg minification, you could also try figuring out which plugin is responsible for this: https://github.com/svg/svgo/blob/master/README.md#what-it-can-do

username_0: Thanks!

I got the desired result, after I put the `.htmlnanorc` file, remove the `.cache` directory and rebuild.

Status: Issue closed

|

usnistgov/mobile-threat-catalogue | 185375043 | Title: Modify CEL-3

Question:

username_0: General Comment

------------------------------------------

CEL-3

**Type of Comment**:

T

**Proposed Change**:

Add Ruxcon research to exploit examples for this threat:

http://www.theregister.co.uk/2016/10/23/every_lte_call_text_can_be_intercepted_blacked_out_hacker_finds/

**Justification**:

Adds further context to the relevance of this threat.<issue_closed>

Status: Issue closed |

keboola/gelf-server | 252712514 | Title: Warning: json_decode() expects parameter 1 to be string, array given in /src/vendor/keboola/gelf-server/src/AbstractServer.php on line 68

Question:

username_0: Using pygelf 0.2.6

```

# pygelf

logger.addHandler(GelfTcpHandler(host=os.getenv('KBC_LOGGER_ADDR'), port=os.getenv('KBC_LOGGER_PORT'), debug=False, **fields))

logging.info('A sample info message')

logging.warning('A sample warn message')

logging.critical('A sample emergency message')

```

this is the passed logging event

```json

{

"timestamp": 1503602965.1555362,

"host": "1ce587f5895d",

"_some": {

"structured": "data"

},

"short_message": "A sample emergency message",

"level": 2,

"full_message": null,

"version": "1.1",

"source": "1ce587f5895d"

}

```

looks like it's expecting the `_some` field to be stringified JSON

Status: Issue closed

Answers:

username_1: fixed in 83ff3fc272583fb0ef275dabc7e0480d21d9bb34 |

RhetTbull/osxphotos | 549573699 | Title: bug: get_last_library_path does not always return last library

Question:

username_0: In some cases, get_last_library_path will fail if Photos hasn't already been opened. I don't know if this is an issue with permissions or if /Library/Containers/com.apple.Photos/Data/Library/Preferences/com.apple.Photos.plist is missing.

Also, it will always fail on a "clean install" account where Photos has never been opened.

In these cases, should it return ~/Pictures/Photos Library.photoslibrary?

Answers:

username_1: I can't actually reproduce my initial error with this but my first thought was it expects the `~/Pictures/Photos Library.photoslibrary` to exist, which did exist already when the error first occurred. IMHO I actually think the use of the last opened library is somewhat inconsistent because the result of osxphotos depends on the use of the app. I would suggest to always default to the user standard library (as does OSX and access to iCloud is only possible in this lib) and non-standard locations need to be parsed with the --db argument on the CLI.

username_1: Another example where this going to fail if you trash the last opened lib btw. I just think this behaviour is more hassle than it is useful.

username_0: I agree the behavior of opening last library could be ambiguous and lead to unexpected behavior. I'll change the code to require the library be explicitly specified. Ideally, I'd use the system library as the default as you suggest but I've not been able to find a way to reliably do this. On Catalina, the system library seems to be specified in ~/Library/Containers/com.apple.photolibraryd/Data/Library/Preferences/com.apple.photolibraryd.plist (used by osxphotos.utils.get_system_library_path) but this doesn't work on older OS versions. I could default to ~/Pictures/Photos Library.photoslibrary but the user can change/rename the system library and I don't know if this naming convention holds across different languages.

username_0: Another option is to have the CLI print a list of all available libraries (e.g. as is done by `osxphotos list`) if the user does not specify one explicitly on the command line.

username_1: Sounds good to me. If `--db` not parsed, why not do:

1. try to determine system lib. If that fails, ...

2. try to list used libs (with a blocking prompt?). If that fails, ...

3. require `--db` to be parsed.

I am using Catalina too (and High Sierra on my old machine) and I thought the `~/Pictures/Photos Library.photoslibrary` was universal.

username_0: I've been playing with this. I've got the following behavior prototyped:

1. --db can be passed before or after the command e.g. `osxphotos --db ~/Pictures/Foo.photoslibrary info` or `osxphotos info --db ~Pictures/Foo.photoslibrary`

2. The path to the photos library can be specified as an argument after the command without using --db: `osxphotos info ~/Pictures/Foo.photoslibrary` This seems more natural than using an option, which by definition should be optional.

3. If neither 1 or 2 is specified, osxphotos will:

1. first try to get the last library opened in Photos

2. failing 1, will try to get system photos library

3. failing 2, will look for "~/Pictures/Photos Library.photoslibrary" (need to understand if this works under non-English installs)

4. failing 3, will list all available libraries and prompt user to select one

Rationale is that this will likely "just work" for the average user and "do what I mean". Downside is that it could lead to non-deterministic behavior.

username_0: Version 0.22.0 implements this new behavior with one exception to above -- if a database path isn't passed either as argument or --db option, osxphotos will list available databases but does not block with a prompt

Status: Issue closed

username_0: In some cases, get_last_library_path will fail if Photos hasn't already been opened. I don't know if this is an issue with permissions or if /Library/Containers/com.apple.Photos/Data/Library/Preferences/com.apple.Photos.plist is missing.

Also, it will always fail on a "clean install" account where Photos has never been opened.

In these cases, should it return ~/Pictures/Photos Library.photoslibrary?

username_1: Cool, works well. How come you ditched the search for a "default" library? The behaviour listed in 3) make sense except for 3.i to keep it deterministic.

username_0: @username_1 Mainly just to make it more clear which library is being operated on. I can't reliably find the system library and you found at least one case where default library didn't work. If you think it makes sense to keep search for default library I'm happy to put it back in. Another option might be to add a "--system-lib" and "--last-lib" options so you could explicitly say "look for the last library I opened". For 99% of users, they probably have only one library but I expect people using this module might be more likely to be in the 1% with more than one library. I'm open to suggestions on how to make this easier to use/more intuitive!

username_0: What about if --db or photo_library not specified, attempt to find default library then print a prompt saying something like: "Found Photos library at ~/Pictures/Photos Library.photoslibrary -- use this one (Y/n)?" If user says Y, use it, if N, then list the other libraries? What I'd like to avoid is user operating on the wrong library by accident.

username_1: Yes I agree that would be useful although I would think that if a system library can be found, it should still operate on it without prompt (with a warning to stderr maybe). That would make it easier for the 90% of (Catalina) users.

username_0: I just pushed a new update that implements # 3 above: looks for last library, then system library, then ~/Pictures/Photos Library.photosdb. As you suggested, it will print out a statement to stderr listing the library being used

username_0: osxphotos now works around this issue as described above.

Status: Issue closed

|

seung-lab/neuroglancer | 467400281 | Title: Test: <EMAIL> | gesw5y3wg

Question:

username_0: | Email | Name | Type |

|---|---|---|

| <EMAIL> | Test | Bug and Suggestion |

Description: yuke56j4wh66h

<details>

<summary>State URL</summary>

https://seun-report2githubissue-dot-neuromancer-seung-import.appspot.com/#!%7B%22layout%22:%224panel%22%7D

</details>

Status: Issue closed

Answers:

username_1: This was me testing the PR. Closing. |

paulsoiya/teamj | 65557666 | Title: Exception Handling on Invailid Inputs

Question:

username_0: Sign Up screen needs to let users know passwords don't match.

Game/Stats input needs handling on non ints in text fields. Should just need a warning label.

Status: Issue closed

Answers:

username_0: This should be complete with Elliott's commit for the Validation for StatsController commit : <PASSWORD> |

ontodev/robot | 250682421 | Title: --annotate-inferred-axioms flagging assertions as inferred

Question:

username_0: Using the following command (after converting our `eco-edit.owl` file to RDF/XML):

```

robot reason --input eco.owl --reasoner elk --create-new-ontology false \

--annotate-inferred-axioms true --output reasoner-test.owl

```

Flags both asserted and inferred subclass statements as inferred (so all of them end up being annotated with is_inferred true). Am I missing something?

Answers:

username_0: Nevermind, I just added the `--exclude-duplicate-axioms true` flag and it works fine. Thanks!

Status: Issue closed

|

shu223/watchOS-2-Sampler | 97391735 | Title: Fails to build on device.

Question:

username_0: Hey! So I changed all the teams in all targets to mine, changed identifiers from com.shh.xxx.xxx to com.dv.xxx.xxx, Added app group entitlements to parent app and extension, changed that in Audio controller code.

But I'm getting this mysterious error.

error: WatchKit App doesn't contain any WatchKit Extensions whose WKAppBundleIdentifier matches "com.dv.sampler.watchos2.watchkitapp". Verify that the value of WKAppBundleIdentifier in your WatchKit Extension's Info.plist matches the value of CFBundleIdentifier in your WatchKit App's Info.plist.

I tried doing just what it wants, also renaming and any combinations of those. Nothing helps.

This is on Xcode 7 beta 4. Regards

Answers:

username_1: username_0, I'm not sure if Xcode tried to fix it wrong, or if I did, but in the info.plist for the extension you need to expand the NSExtension value and then NSExtensionAttributes. There you should find WKAppBundleIdentifier that needs to be updated to reflect the Bundle Identifier of the watch app. I figured it out from this http://stackoverflow.com/questions/30203079/watchkit-extension-bundle-identifiers question. It's been a few months, but hopefully it helps someone.

username_2: @username_1 thanks for that piece of info. It helped me further! |

neinteractiveliterature/intercode | 302935876 | Title: Event Proposals Page, event mistakenly shows "ulimited" capacity

Question:

username_0: Proposal for "Bound in Blood" submitted 2018-03-06 13:46.

Buckets configured for

- 0 Male

- 3 Female

- 8 Flex

- Unlimited NPC

For this proposal on the Event Proposals Page, capacity is listed as "Unlimited". It should be 11.

So, can "NPC" bet set with an attribute that says "do not count this towards game capacity"? What if some other bucket with similar meaning is set with "unlimited"?

Status: Issue closed

Answers:

username_1: This is going to be fixed by #633, which is next up on the queue after product sales. Closing this ticket in favor of that. |

MicrosoftDocs/powerquery-docs | 811131703 | Title: Date Error? Salesforce connector

Question:

username_0: On the following site: https://docs.microsoft.com/en-us/power-query/connectors/salesforceobjects

In the enabling OAuth section (https://docs.microsoft.com/en-us/power-query/connectors/salesforceobjects#enabling-oauth-authentication-in-power-bi-desktop)

We use the date December 2021. Is this supposed to read December 2020?

Thanks

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 8c78a664-32c4-68c5-97ec-99d5fa4e5aab

* Version Independent ID: 75152c2f-8bed-c5df-5fa3-2470920f6dde

* Content: [Power Query Salesforce Objects connector](https://docs.microsoft.com/en-us/power-query/connectors/salesforceobjects)

* Content Source: [powerquery-docs/Connectors/SalesforceObjects.md](https://github.com/MicrosoftDocs/powerquery-docs/blob/master/powerquery-docs/Connectors/SalesforceObjects.md)

* Service: **powerquery**

* GitHub Login: @username_1

* Microsoft Alias: **bezhan**

Answers:

username_1: Hi @username_0 -

This date in the documentation has been corrected to 2020.

Status: Issue closed

|

dtag-dev-sec/dtag-dev-sec.github.io | 337534376 | Title: 2015-03-17-concept.markdown - stale link?

Question:

username_0: The background section of the page [2015-03-17-concept.markdown](

https://github.com/dtag-dev-sec/dtag-dev-sec.github.io/blob/master/_posts/2015-03-17-concept.markdown#background) provides two links: [Sicherheitstacho](http://sicherheitstacho.eu/) / [Securitydashboard](http://securitydashboard.eu/).

However, the link "Securitydashboard" results in ERR_NAME_NOT_RESOLVED. Accessed: 2018-07-02.

Answers:

username_1: mhmh..

Domain: securitydashboard.eu

Status: AVAILABLE

username_2: <NAME>, thx,

Markus?

Best regards

Markus

username_3: Now the domain is owned by another person, not by the Telekom anymore.

So I think its really time to remove the link...

I opened a pull request (#9) removing the link, maybe this brings the attention of @dtag-dev-sec / @t3chn0m4g3 or @vorband to this.

username_4: Hello all,

many thanks for the hint! :pray: Originally we wanted to use this domain too, but in the end we only used sicherheitstacho.eu. However, it's planned to offer an english domain in the future :+1:

Cheers

Simon

username_4: Hello all,

many thanks for the hint! :pray: Originally we wanted to use this domain too, but in the end we only used sicherheitstacho.eu. However, it's planned to offer an english domain in the future :+1:

Cheers

Simon

Status: Issue closed

|

kubesphere/kubekey | 627374132 | Title: Failed to install K8s in CentOs 7.6 on QingCloud

Question:

username_0: See the failed logs:

```

[ks-allinone] Downloading image: calico/pod2daemon-flexvol:v3.13.0

INFO[23:51:06 CST] Generating etcd certs

ERRO[23:51:06 CST] Failed to generate etcd certs: Failed to exec command: sudo -E /bin/sh -c "mkdir -p /etc/ssl/etcd/ssl && /bin/bash -x /tmp/kubekey/make-ssl-etcd.sh -f /tmp/kubekey/openssl.conf -d /etc/ssl/etcd/ssl": Process exited with status 127 node=192.168.0.2

WARN[23:51:06 CST] Task failed ...

WARN[23:51:06 CST] error: interrupted by error

Error: Failed to generate etcd certs: interrupted by error

Usage:

kk create cluster [flags]

Flags:

--all deploy kubernetes and kubesphere

--debug debug info (default true)

-f, --file string configuration file name

-h, --help help for cluster

```

Answers:

username_0: Is this issue resolved by this [PR](https://github.com/kubesphere/kubekey/pull/50)?

username_1: @username_0

No, you should execute `sudo -E /bin/sh -c "mkdir -p /etc/ssl/etcd/ssl && /bin/bash -x /tmp/kubekey/make-ssl-etcd.sh -f /tmp/kubekey/openssl.conf -d /etc/ssl/etcd/ssl"` in node 192.168.0.2 .

username_1: @username_0

I think this issues is caused by that this machine is missing `openssl`.

You can execute` yum install openssl` to install openssl.

I will add it to requirements.

Status: Issue closed

username_0: You are right, thanks |

macvim-dev/macvim | 1015031412 | Title: Not showing new window in OS X 10.9

Question:

username_0: I can run Macvim.app in the current (8.2.3455 (172)) version, but there is no new window with empty file (no matter what setup I use). I can select the recent file, but the new window with text doesn't appear. Drag'n'drop doesn't work either.

Last version I used without issues was a very ancient one. When I try to user a newer version, it breaks even the older one into the same non-working behaviour, so to get back to the working state is not easy.

When I try to run the native app (/Applications/MacVim.app/Contents/MacOS/MacVim) from the shell, it runs non-graphical vim. After I quit with :q it says:

2021-10-04 12:54:44.989 MacVim[34079:507] -[MMWindow setTitlebarAppearsTransparent:]: unrecognized selector sent to instance 0x7fb5dad3d8e0, but I don't return to shell before I quit the graphical interface.

If I try to select e. g. a file from Recents, it opens in terminal, but with very limited possibilities of work, no graphical window.

Answers:

username_1: Do you know what "ancient one" means? a few months / a year / a few years ago?

username_0: I am sorry, I added those extra pieces into the original post, it might have been missed. So once again:

1. I did try to manually remove all .vimrc and files found in ~/Library (everything found which had *macvim* in name/directory).

2. IMHO the last version working on OS X 10.9 is 163 released on 12 Apr 2020.

I tried to compile recent git version and it fails on XIB file for preferences compilation. The file is for X Code 8.0 and up, there is only XCode 6.2 on 10.9. I don't really understand UI Designer being mostly just a console guy. :-) Sorry.

username_1: Is there a reason why you need to build MacVim from scratch? Because we moved from NIB to XIB it could be hard for you to build locally on 10.9

username_0: No real reason. I just wanted to give it a try, with an opportunity to try a bit of debugging. But it's really of a very little use for me, I usually program in plain C. |

storybookjs/storybook | 1042399034 | Title: `@storybook/[email protected]` has lots of incorrect peer dependencies

Question:

username_0: **Describe the bug**

This package should support latest `react`, `react-dom` and other `@storybook` packages.

**To Reproduce**

Really isn't necessary, run `yarn install` locally on your `@storybook/[email protected]` package.

**System**

Please paste the results of `npx sb@next info` here.

```

System:

OS: macOS 11.6

CPU: (16) x64 Intel(R) Core(TM) i9-9980HK CPU @ 2.40GHz

Binaries:

Node: 16.11.1 - /usr/local/bin/node

Yarn: 1.22.17 - /usr/local/bin/yarn

npm: 8.0.0 - /usr/local/bin/npm

Browsers:

Chrome: 95.0.4638.69

Firefox: 90.0.2

Safari: 15.0

npmPackages:

@storybook/addon-a11y: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-actions: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-controls: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-essentials: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addons: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/builder-webpack5: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/components: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/core-events: ^6.4.0-beta.25 => 6.4.0-beta.25

@storybook/manager-webpack5: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/react: ^6.4.0-beta.25 => 6.4.0-beta.25

@storybook/theming: ^6.4.0-beta.25 => 6.4.0-beta.25

```

**Additional context**

```

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "@storybook/addons@^5.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "@storybook/react@^5.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "react@^16.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "react-dom@^16.0.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/[email protected]" has incorrect peer dependency "react@^16.8.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/[email protected]" has incorrect peer dependency "react-dom@^16.8.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/rect > @reach/[email protected]" has incorrect peer dependency "react@^16.4.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/rect > @reach/[email protected]" has incorrect peer dependency "react-dom@^16.4.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/[email protected]" has incorrect peer dependency "[email protected] || 16.x || 16.4.0-alpha.0911da3".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/[email protected]" has incorrect peer dependency "[email protected] || 16.x || 16.4.0-alpha.0911da3".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/router > [email protected]" has incorrect peer dependency "react@^0.14.0 || ^15.0.0 || ^16.0.0".

warning " > [email protected]" has incorrect peer dependency "@storybook/addon-actions@^4.0.0||^5.0.0||5.2.0-beta.13".

```

Answers:

username_1: Thanks for the issue @username_0. That's on the way out because many of the APIs are now apart of Storybook.

If you're looking to build a new addon, I'd start with our [official addon kit template](https://github.com/storybookjs/addon-kit).

username_0: Hey @username_1, sounds like I need to talk to the author of `@react-theming/storybook-addon` and have them update to use the new APIs then?

username_2: Thanks, guys! I am aware of this new API and I am happy that many of the useful abilities of storybook-addon finally come to Storybook itself. But at the same time the new API isn't just a replacemnt of the original package but rather a brand new API and in most cases much simplified. So this way while it's recomended to create new addons with the new API, it's not easy to migrate existing ones.

Also I beleive there're can't be strict restriction to use the original package when it's nessary. So probably it can still exist alongside with the new API.

Regarding to the question of @react-theming/storybook-addon I'd happy to discuss what could we do to improve it. Let's continue it in the projects issues.

username_2: **Describe the bug**

This package should support latest `react`, `react-dom` and other `@storybook` packages.

**To Reproduce**

Really isn't necessary, run `yarn install` locally on your `@storybook/[email protected]` package.

**System**

Please paste the results of `npx sb@next info` here.

```

System:

OS: macOS 11.6

CPU: (16) x64 Intel(R) Core(TM) i9-9980HK CPU @ 2.40GHz

Binaries:

Node: 16.11.1 - /usr/local/bin/node

Yarn: 1.22.17 - /usr/local/bin/yarn

npm: 8.0.0 - /usr/local/bin/npm

Browsers:

Chrome: 95.0.4638.69

Firefox: 90.0.2

Safari: 15.0

npmPackages:

@storybook/addon-a11y: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-actions: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-controls: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addon-essentials: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/addons: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/builder-webpack5: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/components: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/core-events: ^6.4.0-beta.25 => 6.4.0-beta.25

@storybook/manager-webpack5: 6.4.0-beta.25 => 6.4.0-beta.25

@storybook/react: ^6.4.0-beta.25 => 6.4.0-beta.25

@storybook/theming: ^6.4.0-beta.25 => 6.4.0-beta.25

```

**Additional context**

```

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "@storybook/addons@^5.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "@storybook/react@^5.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "react@^16.0.0".

warning "@react-theming/storybook-addon > @storybook/[email protected]" has incorrect peer dependency "react-dom@^16.0.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/[email protected]" has incorrect peer dependency "react@^16.8.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/[email protected]" has incorrect peer dependency "react-dom@^16.8.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/rect > @reach/[email protected]" has incorrect peer dependency "react@^16.4.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @reach/rect > @reach/[email protected]" has incorrect peer dependency "react-dom@^16.4.0".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/[email protected]" has incorrect peer dependency "[email protected] || 16.x || 16.4.0-alpha.0911da3".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/[email protected]" has incorrect peer dependency "[email protected] || 16.x || 16.4.0-alpha.0911da3".

warning "@react-theming/storybook-addon > @storybook/addon-devkit > @storybook/addons > @storybook/api > @reach/router > [email protected]" has incorrect peer dependency "react@^0.14.0 || ^15.0.0 || ^16.0.0".

warning " > [email protected]" has incorrect peer dependency "@storybook/addon-actions@^4.0.0||^5.0.0||5.2.0-beta.13".

```

Status: Issue closed

username_1: Thanks Oleg! I totally get that migrating can be tricky so I appreciate you taking the time to respond. Maybe @username_0 would be interested in helping out?

username_0: Thanks @username_1 & @username_2 I'm happy to help where I can. |

stwe/DatatablesBundle | 182547352 | Title: refresh datatable

Question:

username_0: Hello, thanks to this bundle. I have a question. Is there any way to refresh a datatable without refresh de complete page. For example, I want to delete some fields via ajax and reload the table with ajax to.

Answers:

username_1: You can follow my example project. You can solve this using an ActionButton. The associated controller should not be a problem.

Status: Issue closed

|

scrapy/scrapy | 160209337 | Title: Some of the errors occurred in the Chinese web crawling

Question:

username_0: The html source is :

<dd>

<a href="5367496.html" =style="" style="">书友群</a>

</dd>

<dd>

<a href="5367497.html" =style="" style="">十月份打赏清单。</a>

</dd>

<dt>《修真聊天群》九洲一号群</dt>

<dd>

<a class="firefinder-match" href="5367498.html" =style="" style="">第一章 黄山真君和九洲一号群</a>

</dd>

<dd>

<a class="firefinder-match" href="5367499.html" =style="" style="">第二章 且待本尊算上一卦</a>

</dd>

my spider is :

def parse(self, response):

# a = '第'.encode('Unicode')\"h1title\"

arr = response.xpath("//dd//a[contains(.,\"第\")]").extract()

print arr[0]

I just want the a tag with the word "第" in the beginning.

but it not work , but in the fixfox firexpath i can find right.

some error is :

2016-06-14 20:14:11 [scrapy] ERROR: Spider error processing <GET http://www.23us.cc/html/101/101573/> (referer: None)

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/Twisted-16.2.0-py2.7-linux-x86_64.egg/twisted/internet/defer.py", line 588, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/home/chenhao/Desktop/ssss/sinaweibo/sinaweibo/spiders/xiao.py", line 18, in parse

arr = response.xpath("//dd//a[contains(.,\"第\")]").extract()

File "/usr/local/lib/python2.7/dist-packages/scrapy/http/response/text.py", line 115, in xpath

return self.selector.xpath(query)

File "/usr/local/lib/python2.7/dist-packages/parsel/selector.py", line 179, in xpath

smart_strings=self._lxml_smart_strings)

File "lxml.etree.pyx", line 1507, in lxml.etree._Element.xpath (src/lxml/lxml.etree.c:52198)

File "xpath.pxi", line 295, in lxml.etree.XPathElementEvaluator.__call__ (src/lxml/lxml.etree.c:151999)

File "apihelpers.pxi", line 1393, in lxml.etree._utf8 (src/lxml/lxml.etree.c:27125)

ValueError: All strings must be XML compatible: Unicode or ASCII, no NULL bytes or control characters

Answers:

username_1: Can you try with a `u` prefix on the XPath selector? i.e.

```

response.xpath(u"//dd//a[contains(.,\"第\")]").extract()

```

Status: Issue closed

username_1: ```

username_0: Oh, my God, it's been a long time for me to find the mistake. Thank you so much. |

spring-projects/spring-security | 340470001 | Title: Return Type Of RectiveUserDetailService

Question:

username_0: https://github.com/spring-projects/spring-security/issues

package com.username_0.springsessionreactivetest;

import org.springframework.security.core.userdetails.ReactiveUserDetailsService;

import org.springframework.security.core.userdetails.UserDetails;

import org.springframework.security.core.userdetails.UsernameNotFoundException;

import reactor.core.publisher.Mono;

import java.util.ArrayList;

import java.util.List;

import java.util.Objects;

public class CustomReactiveUserDetailsService extends ReactiveUserDetailsService {

public static final String USERNAME_NOT_FOUND_MESSAGE = "Username %s not found.";

@Override

public Mono<? extends UserDetails> findByUsername(String username) {

return users.stream()

.filter(user -> Objects.equals(username, user.getEmail()) || Objects.equals(username, String.valueOf(user.getId())))

.findFirst()

.map(CustomUserDetails::new)

.map(Mono::just)

.orElseThrow(() -> new UsernameNotFoundException(String.format(USERNAME_NOT_FOUND_MESSAGE, username)));

}

public static final List<User> users = new ArrayList<>();

static {

users.add(User.getInstance().id("1").firstName("Ankur").lastName("Pathak").addRole(Role.ROLE_ADMIN).email("<EMAIL>").password("<PASSWORD>"));

users.add(User.getInstance().id("2").firstName("Amar").lastName("Mule").addRole(Role.ROLE_ADMIN).email("<EMAIL>").password("<PASSWORD>"));

}

}

Return type should be Mono<? extend UserDetail> to allow broader returns by inheritance. In absence of' this we will have to have ugly casting or hack of some type. This will also support the principal

of preferring Composition over Inheritance.

Answers:

username_0: In absence of above we have to do some hack of this type:

@Override

public Mono<UserDetails> findByUsername(String username) {

return users.stream()

.filter(user -> Objects.equals(username, user.getEmail()) || Objects.equals(username, String.valueOf(user.getId())))

.findFirst()

.map(CustomUserDetails::new)

.map(x-> (UserDetails)x)

.map(Mono::just)

.orElseThrow(() -> new UsernameNotFoundException(String.format(USERNAME_NOT_FOUND_MESSAGE, username)));

}

username_1: Even if we wanted to, we cannot change the code as this is a non passive change. Additionally, it is generally bad practice to return a value with a wildcard since it forces programmers using the code to deal with wildcards. This is the same reason that `Optional.orElseThrow` doesn't use a wildcard.

You can rewrite your code like this:

```java

public Mono<UserDetails> findByUsername(String username) {

return Flux.fromIterable(users)

.filter(user -> Objects.equals(username, user.getEmail()) || Objects.equals(username, String.valueOf(user.getId())))

.next()

.switchIfEmpty(Mono.defer(() -> Mono.error(new UsernameNotFoundException(String.format(USERNAME_NOT_FOUND_MESSAGE, username)))))

.map(CustomUserDetails::new);

}

```

You probably want to consider using a different data structure as well since this is O(n) vs a Map which would be O(1).

Status: Issue closed

|

pothosware/PothosCore | 760305981 | Title: Cannot convert Object of type long long to long

Question:

username_0: "long long" declaration: https://github.com/pothosware/PothosCore/blob/master/lib/Object/Builtin/ConvertIntegers.cpp#L9

Macro: https://github.com/pothosware/PothosCore/blob/master/lib/Object/Builtin/ConvertCommonImpl.hpp#L152

"long" typedef: https://github.com/pothosware/PothosCore/blob/master/lib/Object/Builtin/ConvertCommonImpl.hpp#L26

In theory, with this, conversion should be supported between "long long" and "long", but it errors out.

Answers:

username_1: like this fails for you?

```

POTHOS_TEST_BLOCK("/object/tests", test_long_long_to_long)

{

Pothos::Object obj((long long)1234);

auto out = obj.convert<long>();

printf("out = %d\n", (int)out);

}

```

Status: Issue closed

username_0: Closing issue. Taking another look at the error message, it's from Object::extract(), where the type has to be exact. |

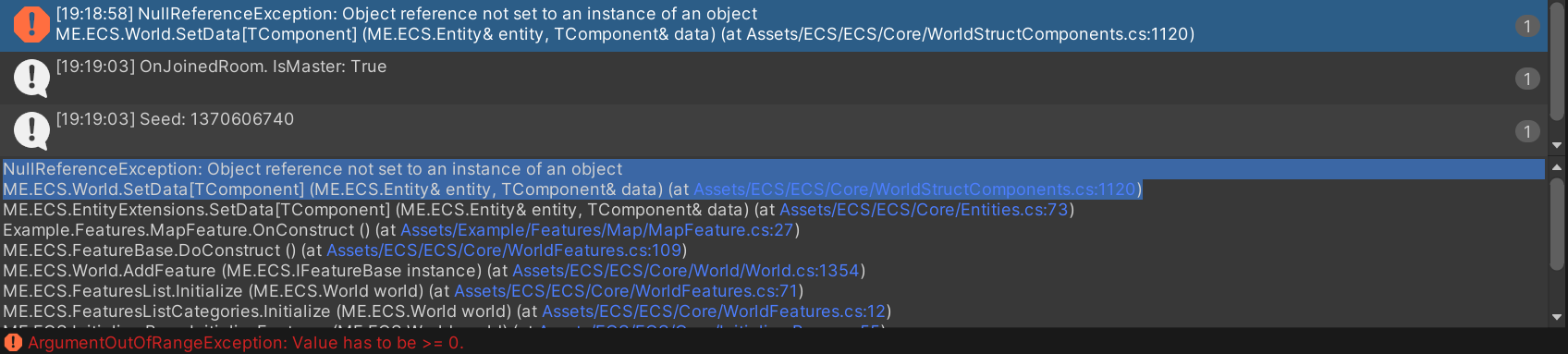

chromealex/ecs.example | 710216634 | Title: Error when hit "Play"

Question:

username_0:

Is there a workaround?

Answers:

username_1: Yeah. You are using windows os, there is a bug in Generator.cs. You can

copy-paste it from the main repo.

Thanks;)

Status: Issue closed

|

sanity-io/sanity-plugin-mux-input | 987556252 | Title: put duration in better place in graphQL

Question:

username_0: When querying my video content with graphql (gatsby) with the following query:

```

allSanityVideos {

edges {

node {

video {

asset {

playbackId

thumbTime

assetId

status

}

_rawAsset(resolveReferences: {maxDepth: 2})

}

_id

}

}

}

```

I am getting the duration only via _rawAsset and not via video -> asset structure. See the result below

```

{

"data": {

"allSanityVideos": {

"edges": [

{

"node": {

"video": null,

"_id": "drafts.d970338c-1ebb-4f88-b9b4-cec9615c7e63"

}

},

{

"node": {

"video": {

"asset": {

"playbackId": "cxsHvFHCpnedi02nL8kNrmvzzHYuSx84LyoA4Nh00Lv6s",

"thumbTime": null,

"assetId": "BSt8QSrjyqUhrEGLnMKBNqGrq8wfBmhwwZH8LyRe74A",

"status": "ready"

},

"_rawAsset": {

"_id": "296f5350-2cf9-40ea-9264-733f3af3ba7d",

"_type": "mux.videoAsset",

"_rev": "cWhOHnBK4b0ef4hteFMZbG",

"_createdAt": "2021-09-03T01:09:50Z",

"_updatedAt": "2021-09-03T01:09:57Z",

"assetId": "BSt8QSrjyqUhrEGLnMKBNqGrq8wfBmhwwZH8LyRe74A",

"data": {

"aspect_ratio": "16:9",

"created_at": "1630631394",

"duration": 1.368033,

"id": "BSt8QSrjyqUhrEGLnMKBNqGrq8wfBmhwwZH8LyRe74A",

"master_access": "none",

"max_stored_frame_rate": 29.97,

"max_stored_resolution": "SD",

"mp4_support": "none",

"passthrough": "296f5350-2cf9-40ea-9264-733f3af3ba7d",

[Truncated]

"status": "ready",

"uploadId": "qOpRpZwWIaWniLFIolTiOL5kduC65GPjGLth8xdrPA00",

"id": "-51e61cef-8d54-5622-821d-20e7758f6d6a",

"children": [],

"internal": {

"type": "SanityMuxVideoAsset",