repo_name | issue_id | text |

|---|---|---|

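mtjo/MacStroke | 957709184 | Title: Launch at startup does not work

Question:

username_0: I checked the auto-launch option in Preferences, but after restarting, the app did not launch automatically, and the checkbox in the settings panel had become unchecked.

macOS 10.15.7

Answers:

username_1: I am on 11.5.1 and it worked when I tested it just now. If the setting still does not take effect, try the following steps: System Preferences -> Users & Groups -> select the current user -> Login Items -> remove macstroke from the list, then set it again (or remove it and re-add macstroke).

username_0: It is a company machine, and Users & Groups is greyed out and cannot be clicked. Could that be related?

username_1: Yes, setting launch at startup requires administrator privileges.

username_0: But other apps I installed, such as mos and clashx, can launch at startup.

username_0: I will try it on my home computer tonight.

username_1: You could try deleting the app and reinstalling it. I have upgraded all the way from 10.13 to the current version without ever hitting this problem, and nobody else has reported anything similar.

username_0: Found the cause. I downloaded the zip, unzipped it, and ran the app directly from there. It should be dragged into the Applications folder; after that it works.

Great software, thumbs up.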

Status: Issue closed

|

iulo/iulo.github.io | 354278724 | Title: Writing a CLI tool

Question:

username_0: [commander](https://github.com/tj/commander.js) is used to implement the basic commands

[Inquirer](https://github.com/SBoudrias/Inquirer.js/) implements interactive prompts in the CLI

Answers:

username_0: ## Release-related tools

[standard-version](https://github.com/conventional-changelog/standard-version) |

nhagem/AcornNet | 142506855 | Title: Need to verify data files discarded

Question:

username_0: Need to make sure it really is unit 1 dying 100% of the time. Verify by spot checking.

Answers:

username_0: Something is definitely janky. Spot checking suggests that the script is correctly picking up sessions with >=90% "FF" in connection, BUT, there are also false positives - example, "Unit3,10-Oct-2014,06:53:14.425" is pulled as bad, but there are no "FF" connections in it at all.

Holdover from running at >=0%? Does the file re-write?

username_0: File doesn't re-write, so every time you run the script, you have to delete the file or it just adds to it. Otherwise it's doing alright. Missing Unit 6 bad sessions due to a different issue with clean.py, not clean2.py. This script should be fine once that issue is fixed; re-check at that point.
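
A minimal sketch of the two behaviours discussed above, assuming each session is a list of connection codes and that a session counts as bad when at least 90% of its codes are "FF". The function names, the data layout, the first sample session, and the output file name are all hypothetical; the real clean2.py is not shown in this thread.

```python
import os
import tempfile

def ff_fraction(codes):
    """Return the share of connection codes equal to "FF" (0.0 for an empty session)."""
    if not codes:
        return 0.0
    return sum(1 for code in codes if code == "FF") / len(codes)

def find_bad_sessions(sessions, threshold=0.9):
    """Return the names of sessions whose "FF" share is at least `threshold`."""
    return [name for name, codes in sessions.items()
            if ff_fraction(codes) >= threshold]

def write_bad_sessions(path, names):
    """Overwrite `path` with one session name per line.

    Mode "w" truncates the file first; mode "a" would keep rows from earlier runs.
    """
    with open(path, "w") as handle:
        for name in names:
            handle.write(name + "\n")

# Hypothetical sample data: session name -> connection codes
sessions = {
    "Unit1,10-Oct-2014,06:00:00.000": ["FF"] * 9 + ["01"],
    "Unit3,10-Oct-2014,06:53:14.425": ["01", "02", "03"],
}
bad = find_bad_sessions(sessions)

out_path = os.path.join(tempfile.mkdtemp(), "bad_sessions.txt")
write_bad_sessions(out_path, bad)
write_bad_sessions(out_path, bad)  # a second run replaces the file instead of appending
```

With a threshold of 0 every session would qualify, including ones with no "FF" at all, so rows left over from such a run in an append-only file would look like false positives.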

username_0: Fixed!

Status: Issue closed

|

nanovms/ops | 428952509 | Title: ability to list package contents

Question:

username_0: you can simply untar it and tree the dir but it'd be nice to have this rolled up into a single command, some things to think about though:

* some interpreters like ruby/python have a lot of crap so a simple listing might not be best - maybe use paging? idk

* we have plans to hash everything inside the pkg at some point for integrity && verification - maybe that belongs in output as well
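
The untar-and-list idea, together with the per-file hashing mentioned in the second point, could look roughly like the sketch below. It assumes a package is a plain tar archive and builds a tiny in-memory archive as a stand-in; `list_package` and `build_demo_package` are hypothetical names, not part of ops.

```python
import hashlib
import io
import tarfile

def list_package(tar_bytes):
    """Return sorted (path, sha256 hex digest) pairs for regular files in a tar archive."""
    entries = []
    with tarfile.open(fileobj=io.BytesIO(tar_bytes), mode="r:*") as archive:
        for member in archive.getmembers():
            if not member.isfile():
                continue  # skip directories, symlinks, and other non-regular entries
            data = archive.extractfile(member).read()
            entries.append((member.name, hashlib.sha256(data).hexdigest()))
    return sorted(entries)

def build_demo_package():
    """Build a tiny in-memory tar archive standing in for a real package."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for name, payload in [("pkg/bin/app", b"binary"), ("pkg/README", b"hello")]:
            info = tarfile.TarInfo(name)
            info.size = len(payload)
            archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()

listing = list_package(build_demo_package())
for path, digest in listing:
    print(digest[:12], path)
```

Printing one line per file keeps the output friendly to a pager such as `less`, which is one way to cope with interpreters that ship thousands of files.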

Answers:

username_1: `ops package show` and `ops package show | less` would cover both of the cases you mention (paging handled by less or some other Unix command).

One thing I notice is that `package` is becoming a theme so moving it to a sub command would be cool if that sort of thing is acceptable: `ops package list <pkg>`, `ops package show <pkg>`, `ops package get <pkg>`.

username_0: i'm in favor of this although @username_2 is going to be doing work in this area in the coming days

username_2: @username_0 should we close these with #308 checked in ?

Status: Issue closed

|

DCurrent/openbor | 843935295 | Title: Blockodds strange behaviour

Question:

username_0: ```

// Run random chance against blockodds. If it

// passes, AI will block.

if ((rand32()&ent->modeldata.blockodds) == 1)

{

return 1;

}

```

## Debugging

But that is not how it works. No matter what value I use, there are only two outcomes:

- 1 - Blocks EVERYTHING, including **during a combo** (the entity receives the first hit and, even if its pain animation isn't over yet, it automatically blocks the second hit, breaking out of the pain animation)

- Any other value - never blocks at all

### Reproduce

Just set _blockodds 1_ in the character header.

If you set "blockodds" to any number other than "1", the entity doesn't block at all.

If you set "blockodds 1" and use "nopassiveblock 1", the engine doesn't block at all.

### Expected behavior

The character should attempt to block with a probability based on the value of blockodds. If the first attack has already hit and the pain animation has not yet ended, the character should not be able to block the following attacks.
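
The expected behaviour described above can be sketched as follows. This is not engine code: `should_block`, the 0-100 chance scale, and the `in_pain` flag are hypothetical names chosen only to illustrate a probability roll combined with a state check.

```python
import random

def should_block(block_chance_percent, in_pain, rng=random):
    """Decide whether an entity blocks an incoming attack.

    block_chance_percent: hypothetical 0-100 chance, not the engine's real blockodds scale.
    in_pain: True while the pain animation from an earlier hit is still playing.
    """
    if in_pain:
        return False  # already hit: cannot break out of pain into a block
    return rng.random() < block_chance_percent / 100

rng = random.Random(1234)  # fixed seed so the demo is repeatable
trials = 10_000
blocked = sum(should_block(25, in_pain=False, rng=rng) for _ in range(trials))
print(f"blocked {blocked} of {trials} attacks at 25%")
```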

### Screenshots

### Version

Please provide the SPECIFIC version in which the issue first appeared. This is very important; it is nearly impossible for us to pore through the entire code base to find singular issues without a starting point.

- Windows

- Build 6330, 6315, 6392. But it was always like that if I remember well, so in version 4432 too.

Answers:

username_1: I'll look into this. I think it's actually two separate issues.

- The formula for odds is set up wrong, that's probably a simple fix.

- No matter what the odds are, the engine should not have the native ability to block if the entity is in any state other than idle. Most likely there's a missing flag check.

username_0: @username_1 thanks. I remember some people reporting that some entities can block even if they are on the ground - going right from the fall animation into the block, skipping the whole rise process.

username_2: Hey guys! Thanks to your reports and comments I was able to find where the problem is.

Here are the fixes:

Blockodds rate fix

````

if ((rand32()&ent->modeldata.blockodds) == 0)

{

return 1;

}

````
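
To see why the original `== 1` test and the `== 0` version behave so differently, the sketch below counts exactly how often each comparison passes for a few blockodds values. It assumes `rand32()` yields uniformly random bits, which the thread does not state, and `hit_probability` is a hypothetical helper written for this illustration only.

```python
def hit_probability(mask, target, bits=16):
    """Exact probability that (r & mask) == target for r uniform over `bits` random bits."""
    total = 1 << bits
    hits = sum(1 for r in range(total) if (r & mask) == target)
    return hits / total

for blockodds in (1, 2, 3, 4, 10, 100):
    old = hit_probability(blockodds, 1)  # original test: == 1
    new = hit_probability(blockodds, 0)  # version from the fix: == 0
    print(f"blockodds={blockodds:>3}  '== 1': {old:.4f}  '== 0': {new:.4f}")
```

Under that assumption the bitwise AND acts as a mask rather than a range: `== 1` can never pass when blockodds is even, and `== 0` passes with probability 1 / 2^(number of set bits in blockodds), so the chance depends on the bit pattern of the value rather than scaling smoothly with it.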

Block state fix, check if the "blocking instance" is gone or not before all other tasks

````

int check_blocking_eligible(entity *ent, entity *other, s_collision_attack *attack)

{

if (!ent->blocking)

{

return 0;

}

````

In the videos below you can see some tests:

Blockodds rate fix

https://www.youtube.com/watch?v=6cgTytt4iOM

Block state fix

https://www.youtube.com/watch?v=IHQbTMw3yRs

I'm doing more tests to see if everything is ok in a test build. |

nathanvda/cocoon | 169000067 | Title: Associations only saving the last one added

Question:

username_0: Hi! I see you've got a slew of issues already, but I was hoping you might have some insight into what I am (probably) doing wrong in using it.

I have an app in which objects have a lot of named, self-referential (and normal) associations. For example, the Character model has a `best_friends` association (pointing to at least 1 Character), a `siblings` association (pointing to at least 1 Character), a `hometowns` association (pointing to at least 1 Location), etc. In the `Character#edit` page, I allow users to create these associations using Cocoon, and observe the following:

* Saving associations one at a time works as expected, even if creating multiple associations as long as each one only has 1 (e.g. creating one `best_friends` and one `siblings` works, but creating two `siblings` relations only saves the latter one).

* Removing a relation and adding a new one in-line (e.g. deleting any `best_friends` associations and adding a new one) works, but of course only adds the last one.

Just to give you a sense of context, here's what the UI looks like adding relations:

(Ugly I know, but just trying to get it working right now! :) )

Here's the relevant code:

CharactersController

```

...

def edit

@content = Character.find(params[:id])

end

...

```

relevant edit.html.erb (in the Content class, which Character inherits from)

```

<% content.class.attribute_categories.each do |category, data| %>

<div id="<%= category %>" class="row">

<% data[:attributes].each do |attribute| %>

<div class="col s10 m8 l4">

<% value = content.send(attribute) %>

<% if value.is_a?(ActiveRecord::Associations::CollectionProxy) %>

<% through_class = content.class.reflect_on_association(attribute).options[:through].to_s %>

<%= render 'content/form/relation_input', f: f, attribute: attribute, relation: through_class %>

<% else %>

<%= render 'content/form/text_input', f: f, attribute: attribute %>

<% end %>

</div>

<% end %>

</div>

<% end %>

```

content/form/_relation_input.html.erb (rendered in the case of associations, like this question):

```

<div>

<%= f.label attribute, attribute.humanize.capitalize %>

</div>

<div id="<%= relation %>">

<%= f.fields_for relation do |builder| %>

<%= render 'content/form/groupship_fields', f: builder, attribute: attribute.singularize, parent: f.object %>

<% end %>

<div class="links">

<% color = f.object.send(attribute).build.class.color %>

<%= link_to_add_association "add #{attribute.to_s.singularize}", f, relation,

class: "btn #{color}",

partial: 'content/form/groupship_fields',

render_options: { locals: {

attribute: attribute.singularize,

parent: f.object

}} %>

[Truncated]

<% end %>

</div>

```

And, of course, back to the CharacterController#update that handles processing:

```

def update

@content = Character.find(params[:id])

if @content.update_attributes(content_params)

successful_response(@content, t(:update_success, model_name: humanized_model_name))

else

failed_response('edit', :unprocessable_entity)

end

end

```

If there's any insight you can give into what I might be doing wrong, it'd be much appreciated. I assume I'm just messing up something small to make it only process the last of each association sent.

Please let me know if there's any other information you need to take a look. The relevant code (though not simplified) is all in https://github.com/indentlabs/Indent/tree/master/app/views/content if you want to look at the source directly.

Answers:

username_1: First thing: check what is actually posted to the controller? Sometimes due to an error in your html (e.g. using id's instead of classes) it will only send one of a repeating group. But from what I could see, that _seems_ ok (still check to be safe).

I saw in your code (thanks for sharing it) that you use a handy shortcut to define your relations: `relate :siblings, with: :siblingships`, and in the concern you only add the `accepts_nested_attributes_for` for the connecting relation. IMHO you also have to add it for the actual relation you are editing. A bit weird that it partially works, so not sure if that is the case.

Also, you might have a problem: all your "related" classes are in the folder `content_groupers`, but you do not prefix the class with `ContentGroupers`, so I am not sure the Rails autoloading will actually find the class correctly (but maybe you solved that some other way; I didn't look too far).

username_0: Thanks for the insight. Upon inspecting what is actually posted to the controller, you may be right: there's only one ID being passed through: when adding two `mothers` (in addition to one existing one), the relevant posted params look like:

```

"motherships_attributes"=>

{"0"=>{"id"=>"1", "_destroy"=>"false"},

"1470283435380"=>{"mother_id"=>"2", "_destroy"=>"false"},

"1470283438992"=>{"mother_id"=>"", "_destroy"=>"false"}},

```

And after wiping them all (successfully, in one submit), trying to add 3 at the same time:

```

"motherships_attributes"=>

{"1470285441878"=>{"mother_id"=>"3", "_destroy"=>"false"},

"1470285445040"=>{"mother_id"=>"", "_destroy"=>"false"},

"1470285447940"=>{"mother_id"=>"", "_destroy"=>"false"}},

```

Pretty sure those empty `mother_id` params are the culprit here. Do you see anything in the HTML that might be the reason?
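
As a quick way to spot the problem rows, the posted hash can be scanned for entries whose foreign key is blank. The sketch below does that in Python on a copy of the second request's parameters; `blank_rows` is a hypothetical helper, and this only diagnoses the payload, it does not change how Rails or Cocoon process it.

```python
def blank_rows(nested_attributes, key):
    """Return the row keys whose `key` value is missing or an empty string."""
    return [row_key for row_key, row in nested_attributes.items()
            if not row.get(key)]

# Parameters copied from the second request above
motherships_attributes = {
    "1470285441878": {"mother_id": "3", "_destroy": "false"},
    "1470285445040": {"mother_id": "", "_destroy": "false"},
    "1470285447940": {"mother_id": "", "_destroy": "false"},
}

missing = blank_rows(motherships_attributes, "mother_id")
print(f"{len(missing)} of {len(motherships_attributes)} rows have no mother_id: {missing}")
```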

Also FWIW on the `relate` shortcut, I tried adding in the actual relation as well, but it didn't seem to make a difference here. I'll take a look around whether I need it in the long run though, thanks for the heads up.

And yep! Adding `config.autoload_paths += Dir[Rails.root.join('app', 'models', '{*/}')]` to `config/application.rb` _should_ be recursively autoloading everything in the models directory, at least.

Thanks again for your help. I'll keep digging at the HTML, but anything you can offer would be much appreciated. And thanks for Cocoon!

username_1: It is a little over my head, just gave it a quick cursory read, but please note that all views are server-side-rendered, so every time you press `link_to_add_association` it just inserts a pre-rendered partial. If you need to set a specific value upon insertion, you will have to use js and the callbacks.

Also, not sure but you seem to make it harder on yourself. To be clear:

* you do not have to specify an id for new elements (cocoon does that: if the id does not exist in the database, rails assumes it is a new element)

* you do not have to specify a parent-id for a nested element, since the hash is nested, it is clear to what rails must add it

(I will read it in more detail later, if needed)

Status: Issue closed

username_0: Just an FYI, the complications seemed to stem from the autocomplete field I was using (rails-jquery-autocomplete) that required me to specify the ID. I swapped it out for a native rails `f.select` and everything works perfectly now!

Thank you for all your help! :) |

diffplug/spotless | 1071686099 | Title: Updating spotless version - cannot add a configuration as a configuration with that name already exists.

Question:

username_0: If you are submitting a **bug**, please include the following:

- [X] summary of problem

- [X] gradle or maven version

- [X] spotless version

- [X] operating system and version

- [X] copy-paste your full Spotless configuration block(s), and a link to a public git repo that reproduces the problem if possible

- [X] copy-paste the full content of any console errors emitted by `gradlew spotless[Apply/Check] --stacktrace`

I am trying to update spotless from 5.x to 6.x and get the below exception. The project uses `org.gradle.parallel=true` and throws this exception on a machine with 16 cores, presumably causing more parallelism than other machines may cause, so may be hard to repro locally. But hopefully based on the stack trace we can find a location to verify. Perhaps this should use ConcurrentHashMap / computeIfAbsent?

https://github.com/diffplug/spotless/blob/main/plugin-gradle/src/main/java/com/diffplug/gradle/spotless/GradleProvisioner.java#L54

Executing the task individually succeeds; it only fails when executing on the whole project, probably due to a synchronization issue.
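
The check-then-create race suspected here, and the `computeIfAbsent`-style remedy suggested above, can be illustrated in isolation. The sketch below is a Python analogue with hypothetical names (`DedupingCache`, `make_configuration`); it is not Spotless or Gradle code and is not necessarily the fix that was eventually shipped.

```python
import threading

class DedupingCache:
    """Thread-safe get-or-create cache, similar in spirit to Java's computeIfAbsent."""

    def __init__(self):
        self._lock = threading.Lock()
        self._values = {}

    def get_or_create(self, key, factory):
        # Holding the lock across check and create closes the window in which
        # two threads both see the key as missing and both try to create it.
        with self._lock:
            if key not in self._values:
                self._values[key] = factory(key)
            return self._values[key]

created = []  # records every factory call

def make_configuration(name):
    """Hypothetical stand-in for creating a named configuration."""
    created.append(name)
    return f"configuration:{name}"

cache = DedupingCache()
threads = [
    threading.Thread(target=cache.get_or_create,
                     args=("spotless865452459", make_configuration))
    for _ in range(16)
]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
```

However many threads ask for the same name, the factory runs once, so a second "create" for an existing name never happens.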

`Gradle`: 7.3.1

`spotless-gradle-plugin`: 6.0.2

OS: Amazon Linux 2

Repro: https://github.com/username_0/opentelemetry-java-instrumentation/tree/gradle-7.3.1

Run `./gradlew spotlessApply` (may not repro on all machines). Many spotless tasks run and succeed but the error happens and fails the build

Spotless config:

```

You need to add a repository containing the '[com.google.googlejavaformat:google-java-format:1.12.0]' artifact in 'testing/agent-for-testing/build.gradle'.

E.g.: 'repositories { mavenCentral() }'

org.gradle.api.InvalidUserDataException: Cannot add a configuration with name 'spotless865452459' as a configuration with that name already exists.

at org.gradle.api.internal.DefaultNamedDomainObjectCollection.assertCanAdd(DefaultNamedDomainObjectCollection.java:213)

at org.gradle.api.internal.AbstractNamedDomainObjectContainer.create(AbstractNamedDomainObjectContainer.java:77)

at org.gradle.api.internal.AbstractValidatingNamedDomainObjectContainer.create(AbstractValidatingNamedDomainObjectContainer.java:47)

at org.gradle.api.internal.AbstractNamedDomainObjectContainer.create(AbstractNamedDomainObjectContainer.java:56)

at com.diffplug.gradle.spotless.GradleProvisioner.lambda$forProject$1(GradleProvisioner.java:73)

at com.diffplug.gradle.spotless.GradleProvisioner$DedupingProvisioner.provisionWithTransitives(GradleProvisioner.java:61)

at com.diffplug.spotless.JarState.provisionWithTransitives(JarState.java:68)

at com.diffplug.spotless.JarState.from(JarState.java:57)

at com.diffplug.spotless.JarState.from(JarState.java:52)

at com.diffplug.spotless.java.GoogleJavaFormatStep$State.<init>(GoogleJavaFormatStep.java:139)

at com.diffplug.spotless.java.GoogleJavaFormatStep.lambda$create$1(GoogleJavaFormatStep.java:92)

at com.diffplug.spotless.FormatterStepImpl.calculateState(FormatterStepImpl.java:56)

at com.diffplug.spotless.LazyForwardingEquality.state(LazyForwardingEquality.java:56)

at com.diffplug.spotless.LazyForwardingEquality.toBytes(LazyForwardingEquality.java:85)

at com.diffplug.spotless.LazyForwardingEquality.hashCode(LazyForwardingEquality.java:102)

```

Answers:

username_1: Thanks very much for the example, it reproduces fine on my Macbook Air. Importantly, I'm seeing two failures. The first exception is this:

```

E.g.: 'repositories { mavenCentral() }'

org.gradle.api.artifacts.ResolveException: Could not resolve all dependencies for configuration ':testing:agent-for-testing:spotless865452459'.

at org.gradle.api.internal.artifacts.ivyservice.ErrorHandlingConfigurationResolver.wrapException(ErrorHandlingConfigurationResolver.java:105)

at org.gradle.api.internal.artifacts.ivyservice.ErrorHandlingConfigurationResolver.resolveGraph(ErrorHandlingConfigurationResolver.java:76)

...

Caused by: java.util.ConcurrentModificationException

at java.base/java.util.TreeMap$PrivateEntryIterator.nextEntry(TreeMap.java:1208)

at java.base/java.util.TreeMap$KeyIterator.next(TreeMap.java:1262)

at org.gradle.api.internal.collections.FilteredCollection$FilteringIterator.findNext(FilteredCollection.java:121)

at org.gradle.api.internal.collections.FilteredCollection$FilteringIterator.next(FilteredCollection.java:140)

at org.gradle.api.internal.DefaultDomainObjectCollection$IteratorImpl.next(DefaultDomainObjectCollection.java:475)

at org.gradle.api.internal.artifacts.ivyservice.moduleconverter.DefaultRootComponentMetadataBuilder.getRootComponentMetadata(DefaultRootComponentMetadataBuilder.java:95)

at org.gradle.api.internal.artifacts.ivyservice.moduleconverter.DefaultRootComponentMetadataBuilder.lambda$buildRootComponentMetadata$0(DefaultRootComponentMetadataBuilder.java:86)

at org.gradle.api.internal.project.DefaultProjectStateRegistry$ProjectStateImpl.fromMutableState(DefaultProjectStateRegistry.java:393)

at org.gradle.api.internal.artifacts.ivyservice.moduleconverter.DefaultRootComponentMetadataBuilder.buildRootComponentMetadata(DefaultRootComponentMetadataBuilder.java:84)

at org.gradle.api.internal.artifacts.ivyservice.moduleconverter.DefaultRootComponentMetadataBuilder.toRootComponentMetaData(DefaultRootComponentMetadataBuilder.java:70)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.toRootComponentMetaData(DefaultConfiguration.java:1197)

at org.gradle.api.internal.artifacts.ivyservice.resolveengine.DefaultArtifactDependencyResolver$DefaultResolveContextToComponentResolver.resolve(DefaultArtifactDependencyResolver.java:227)

at org.gradle.api.internal.artifacts.ivyservice.resolveengine.graph.builder.DependencyGraphBuilder.resolve(DependencyGraphBuilder.java:139)

at org.gradle.api.internal.artifacts.ivyservice.resolveengine.DefaultArtifactDependencyResolver.resolve(DefaultArtifactDependencyResolver.java:145)

at org.gradle.api.internal.artifacts.ivyservice.DefaultConfigurationResolver.resolveGraph(DefaultConfigurationResolver.java:186)

at org.gradle.api.internal.artifacts.ivyservice.ShortCircuitEmptyConfigurationResolver.resolveGraph(ShortCircuitEmptyConfigurationResolver.java:85)

at org.gradle.api.internal.artifacts.ivyservice.ErrorHandlingConfigurationResolver.resolveGraph(ErrorHandlingConfigurationResolver.java:74)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$1.call(DefaultConfiguration.java:644)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$1.call(DefaultConfiguration.java:635)

at org.gradle.internal.operations.DefaultBuildOperationRunner$CallableBuildOperationWorker.execute(DefaultBuildOperationRunner.java:204)

at org.gradle.internal.operations.DefaultBuildOperationRunner$CallableBuildOperationWorker.execute(DefaultBuildOperationRunner.java:199)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:66)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:157)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.call(DefaultBuildOperationRunner.java:53)

at org.gradle.internal.operations.DefaultBuildOperationExecutor.call(DefaultBuildOperationExecutor.java:73)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.resolveGraphIfRequired(DefaultConfiguration.java:635)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.lambda$resolveExclusively$4(DefaultConfiguration.java:615)

...

```

And *then* I get the exception you linked above, "a configuration with that name already exists". I think it's the first `ConcurrentModificationException` which is the root problem, I'm digging in on that now...

Status: Issue closed

username_1: Fixed in `plugin-gradle/6.0.3`, please confirm.

username_1: If you are submitting a **bug**, please include the following:

- [X] summary of problem

- [X] gradle or maven version

- [X] spotless version

- [X] operating system and version

- [X] copy-paste your full Spotless configuration block(s), and a link to a public git repo that reproduces the problem if possible

- [X] copy-paste the full content of any console errors emitted by `gradlew spotless[Apply/Check] --stacktrace`

I am trying to update spotless from 5.x to 6.x and get the below exception. The project uses `org.gradle.parallel=true` and throws this exception on a machine with 16 cores, presumably causing more parallelism than other machines may cause, so may be hard to repro locally. But hopefully based on the stack trace we can find a location to verify. Perhaps this should use ConcurrentHashMap / computeIfAbsent?

https://github.com/diffplug/spotless/blob/main/plugin-gradle/src/main/java/com/diffplug/gradle/spotless/GradleProvisioner.java#L54

Executing the task individually succeeds; it only fails when executing on the whole project, probably due to a synchronization issue.

`Gradle`: 7.3.1

`spotless-gradle-plugin`: 6.0.2

OS: Amazon Linux 2 (AWS m5.4xlarge)

Repro: https://github.com/username_0/opentelemetry-java-instrumentation/tree/spotless-repro

Run `./gradlew spotlessApply` (may not repro on all machines). Many spotless tasks run and succeed but the error happens and fails the build

Spotless config:

```

You need to add a repository containing the '[com.google.googlejavaformat:google-java-format:1.12.0]' artifact in 'testing/agent-for-testing/build.gradle'.

E.g.: 'repositories { mavenCentral() }'

org.gradle.api.InvalidUserDataException: Cannot add a configuration with name 'spotless865452459' as a configuration with that name already exists.

at org.gradle.api.internal.DefaultNamedDomainObjectCollection.assertCanAdd(DefaultNamedDomainObjectCollection.java:213)

at org.gradle.api.internal.AbstractNamedDomainObjectContainer.create(AbstractNamedDomainObjectContainer.java:77)

at org.gradle.api.internal.AbstractValidatingNamedDomainObjectContainer.create(AbstractValidatingNamedDomainObjectContainer.java:47)

at org.gradle.api.internal.AbstractNamedDomainObjectContainer.create(AbstractNamedDomainObjectContainer.java:56)

at com.diffplug.gradle.spotless.GradleProvisioner.lambda$forProject$1(GradleProvisioner.java:73)

at com.diffplug.gradle.spotless.GradleProvisioner$DedupingProvisioner.provisionWithTransitives(GradleProvisioner.java:61)

at com.diffplug.spotless.JarState.provisionWithTransitives(JarState.java:68)

at com.diffplug.spotless.JarState.from(JarState.java:57)

at com.diffplug.spotless.JarState.from(JarState.java:52)

at com.diffplug.spotless.java.GoogleJavaFormatStep$State.<init>(GoogleJavaFormatStep.java:139)

at com.diffplug.spotless.java.GoogleJavaFormatStep.lambda$create$1(GoogleJavaFormatStep.java:92)

at com.diffplug.spotless.FormatterStepImpl.calculateState(FormatterStepImpl.java:56)

at com.diffplug.spotless.LazyForwardingEquality.state(LazyForwardingEquality.java:56)

at com.diffplug.spotless.LazyForwardingEquality.toBytes(LazyForwardingEquality.java:85)

at com.diffplug.spotless.LazyForwardingEquality.hashCode(LazyForwardingEquality.java:102)

```

username_2: I think the fix for this issue is causing #1024

username_1: @username_2 definitely suspicious looking, but I don't think so, see explanation at #1024.

username_0: Hi @username_1 - thanks for the quick fix. I am trying 6.0.4, and while the error I had isn't showing anymore I get a deprecation warning (which currently we have set to fail the build but for now can workaround by disabling it)

```

Resolution of the configuration :conventions:spotless865452459 was attempted from a context different than the project context. Have a look at the documentation to understand why this is a problem and how it can be resolved. This behaviour has been deprecated and is scheduled to be removed in Gradle 8.0. See https://docs.gradle.org/7.3.1/userguide/viewing_debugging_dependencies.html#sub:resolving-unsafe-configuration-resolution-errors for more details.

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.resolveToStateOrLater(DefaultConfiguration.java:595)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.access$1900(DefaultConfiguration.java:152)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$SelectedArtifactsProvider.getValue(DefaultConfiguration.java:1344)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$SelectedArtifactsProvider.getValue(DefaultConfiguration.java:1334)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$ConfigurationFileCollection.getSelectedArtifacts(DefaultConfiguration.java:1406)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration$ConfigurationFileCollection.visitContents(DefaultConfiguration.java:1393)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.visitContents(DefaultConfiguration.java:498)

at org.gradle.api.internal.file.AbstractFileCollection.getFiles(AbstractFileCollection.java:130)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration_Decorated.getFiles(Unknown Source)

at org.gradle.api.internal.artifacts.configurations.DefaultConfiguration.resolve(DefaultConfiguration.java:488)

at com.diffplug.gradle.spotless.GradleProvisioner.lambda$forProject$1(GradleProvisioner.java:83)

at com.diffplug.gradle.spotless.GradleProvisioner$DedupingProvisioner.provisionWithTransitives(GradleProvisioner.java:61)

at com.diffplug.spotless.JarState.provisionWithTransitives(JarState.java:68)

at com.diffplug.spotless.JarState.from(JarState.java:57)

at com.diffplug.spotless.JarState.from(JarState.java:52)

at com.diffplug.spotless.java.GoogleJavaFormatStep$State.<init>(GoogleJavaFormatStep.java:139)

at com.diffplug.spotless.java.GoogleJavaFormatStep.lambda$create$1(GoogleJavaFormatStep.java:92)

at com.diffplug.spotless.FormatterStepImpl.calculateState(FormatterStepImpl.java:56)

at com.diffplug.spotless.LazyForwardingEquality.state(LazyForwardingEquality.java:56)

at com.diffplug.spotless.LazyForwardingEquality.toBytes(LazyForwardingEquality.java:85)

at com.diffplug.spotless.LazyForwardingEquality.hashCode(LazyForwardingEquality.java:102)

at java.base/java.util.ArrayList.hashCodeRange(ArrayList.java:595)

at java.base/java.util.ArrayList.hashCode(ArrayList.java:582)

at com.diffplug.gradle.spotless.FormatExtension.setupTask(FormatExtension.java:768)

at com.diffplug.gradle.spotless.JavaExtension.setupTask(JavaExtension.java:226)

at com.diffplug.gradle.spotless.SpotlessExtensionImpl.lambda$createFormatTasks$6(SpotlessExtensionImpl.java:100)

at org.gradle.api.internal.DefaultMutationGuard$2.execute(DefaultMutationGuard.java:44)

at org.gradle.api.internal.DefaultMutationGuard$2.execute(DefaultMutationGuard.java:44)

at org.gradle.configuration.internal.DefaultUserCodeApplicationContext$CurrentApplication$1.execute(DefaultUserCodeApplicationContext.java:123)

at org.gradle.api.internal.DefaultCollectionCallbackActionDecorator$BuildOperationEmittingAction$1.run(DefaultCollectionCallbackActionDecorator.java:110)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:29)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:26)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:66)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:157)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.run(DefaultBuildOperationRunner.java:47)

at org.gradle.internal.operations.DefaultBuildOperationExecutor.run(DefaultBuildOperationExecutor.java:68)

at org.gradle.api.internal.DefaultCollectionCallbackActionDecorator$BuildOperationEmittingAction.execute(DefaultCollectionCallbackActionDecorator.java:107)

at org.gradle.internal.ImmutableActionSet$SetWithManyActions.execute(ImmutableActionSet.java:329)

at org.gradle.api.internal.DefaultDomainObjectCollection.doAdd(DefaultDomainObjectCollection.java:260)

at org.gradle.api.internal.DefaultNamedDomainObjectCollection.doAdd(DefaultNamedDomainObjectCollection.java:113)

at org.gradle.api.internal.DefaultDomainObjectCollection.add(DefaultDomainObjectCollection.java:254)

at org.gradle.api.internal.DefaultNamedDomainObjectCollection$AbstractDomainObjectCreatingProvider.tryCreate(DefaultNamedDomainObjectCollection.java:944)

at org.gradle.api.internal.tasks.DefaultTaskContainer$TaskCreatingProvider.access$1401(DefaultTaskContainer.java:654)

at org.gradle.api.internal.tasks.DefaultTaskContainer$TaskCreatingProvider$1.run(DefaultTaskContainer.java:680)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:29)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:26)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:66)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:157)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.run(DefaultBuildOperationRunner.java:47)

at org.gradle.internal.operations.DefaultBuildOperationExecutor.run(DefaultBuildOperationExecutor.java:68)

at org.gradle.api.internal.tasks.DefaultTaskContainer$TaskCreatingProvider.tryCreate(DefaultTaskContainer.java:676)

at org.gradle.api.internal.DefaultNamedDomainObjectCollection$AbstractDomainObjectCreatingProvider.calculateOwnValue(DefaultNamedDomainObjectCollection.java:929)

[Truncated]

at com.diffplug.gradle.spotless.SpotlessPlugin.lambda$configureCleanTask$2(SpotlessPlugin.java:59)

at org.gradle.configuration.internal.DefaultUserCodeApplicationContext$CurrentApplication$1.execute(DefaultUserCodeApplicationContext.java:123)

at org.gradle.api.internal.DefaultCollectionCallbackActionDecorator$BuildOperationEmittingAction$1.run(DefaultCollectionCallbackActionDecorator.java:110)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:29)

at org.gradle.internal.operations.DefaultBuildOperationRunner$1.execute(DefaultBuildOperationRunner.java:26)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:66)

at org.gradle.internal.operations.DefaultBuildOperationRunner$2.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:157)

at org.gradle.internal.operations.DefaultBuildOperationRunner.execute(DefaultBuildOperationRunner.java:59)

at org.gradle.internal.operations.DefaultBuildOperationRunner.run(DefaultBuildOperationRunner.java:47)

at org.gradle.internal.operations.DefaultBuildOperationExecutor.run(DefaultBuildOperationExecutor.java:68)

at org.gradle.api.internal.DefaultCollectionCallbackActionDecorator$BuildOperationEmittingAction.execute(DefaultCollectionCallbackActionDecorator.java:107)

at org.gradle.api.internal.DefaultMutationGuard$2.execute(DefaultMutationGuard.java:44)

at org.gradle.api.internal.DefaultMutationGuard$2.execute(DefaultMutationGuard.java:44)

at org.gradle.api.internal.collections.CollectionFilter$1.execute(CollectionFilter.java:59)

at org.gradle.internal.ImmutableActionSet$SetWithManyActions.execute(ImmutableActionSet.java:329)

at org.gradle.api.internal.DefaultDomainObjectCollection.doAdd(DefaultDomainObjectCollection.java:260)

at org.gradle.api.internal.DefaultNamedDomainObjectCollection.doAdd(DefaultNamedDomainObjectCollection.java:113)

at org.gradle.api.internal.DefaultDomainObjectCollection.add(DefaultDomainObjectCollection.java:254)

```

username_1: For the sake of keeping things organized, I'm gonna close this out and follow-up the warning at #1028

Status: Issue closed

|

bianyun12/project_20 | 759296575 | Title: Security vulnerability alert

Question:

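username_0: Vulnerability type: email SMTP credential leak

Severity: high

Vulnerability location: https://github.com/bianyun12/project_20/blob/4865cc45c6468ec195f25fce98029975d383c2fa/project_day20/run.py

Impact: anyone can send and receive mail with the SMTP account and password, and can then use the mailbox to reset passwords on other platforms

Fix: reset the SMTP password and check whether the mailbox has leaked sensitive information (do not just change the code; the old version remains visible in the repository history)

This scan result was provided by [ 码小六 ] https://github.com/4x99/code6 (stars welcome) |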

steamclock/bluejay | 284321399 | Title: Add L2CAP channels support

Question:

username_0: I would like to start a discussion about what this API should look like.

It may be something like

```

extension Bluejay {

func openL2CAPChannel(_ psm: CBL2CAPPSM, completion: @escaping (Result<CBL2CAPChannel, Error>) -> Void)

}

``` |

docToolchain/docToolchain | 836476874 | Title: help needed: where is the CSS defined which is used in documents, created by doctoolchain?

Question:

username_0: **Is your feature request related to a problem? Please describe.**

I like the html files generated by doctoolchain.

That's why I wanted to use the https://github.com/docToolchain/arc42-template-project as template for a microsite for documentation, which uses the doctoolchain technology

But I don't like the CSS styles in the files in this website template, especially the toc. The toc style has `position: fixed` and no scrollbars, so it can't be used for a large toc. I can't find a way to solve the issue.

On the other hand, I would like to just use the style that doctoolchain generates by default. But I can find the CSS only in the generated HTML documents. I can't find where and how these CSS parts are defined. Maybe they are hard-coded in doctoolchain, which would be fine.

My idea was that there might be some CSS files which I could just copy into the website template and then adapt a bit.

**Describe the solution you'd like**

I would like to find in the documentation where and how the CSS style is defined. And maybe also how to apply this style to pages that (like the website template) are created with doctoolchain.

Answers:

username_1: thanx for your report.

it turns out that the arc42-template-project was defined as a long lasting project but soon will be replaced with the new docToolchain approach:

```

curl -Lo dtcw doctoolchain.github.io/dtcw

chmod +x dtcw

./dtcw installTemplate

./dtcw generateSite

```

(there is also a powershell dtcw.ps1 script)

as a result, you will have a repository with _only_ the adoc files plus a config and the doctoolchain-wrapper dtcw.

To be honest, I also don't like the default styles.

So, there is a

./dtcw copyThemes

command which copies all templates to your project. You will find the styles in /src/site/content/assets (I hope I remembered this correctly).

Change the files you need to change and delete all other files. docToolchain will overwrite the internal files with your changed ones.

The goal is to then create an external theme from these changed files which can be configured.

Does this help?

username_0: I downloaded dtcw.ps1, changed access

It looks like the correct commands are

```

./dtcw downloadTemplate

./dtcw generateSite

```

I used an empty folder and it worked. I got a ready site. Very simple if you know how to do it!

Some remarks:

- It looks like the CSS is the same as used by https://github.com/docToolchain/arc42-template-project

- The site contains some content (for example HomePage content, some content from the About) which is not defined in the src folder. At first it was not clear how.

- Then I followed your instruction `./dtcw copyThemes` , and now I get an idea how this works. Now I get something similar to the https://github.com/docToolchain/arc42-template-project

- when building the site using generateSite, sometimes I get

"There is insufficient memory for the Java Runtime Environment to continue."

I don't get this error with other Java applications.

- It's one thing to be able to quickly create a site from a template. It's another thing to be able to maintain that site. Furthermore, it's not clear to me yet how simple or complicated that can get. So far I see problems with finding better CSS and also with creating the navigation in an easier way, for example for wiki pages.

I think, I will investigate, and I will also have a look on Antora. Antora describes a separation of content and UI, and navigation can be controlled with asciidoc lists. This is what I currently miss in the project template. How well do doctoolchain and Antora fit together?

username_1: Yes, Antora might be a good alternative. I don't use it because I think there is value in a landing page and blog on the site, which I get with jBake out of the box.

And Antora is "only" a static site generator. You get some more features with docToolchain.

But you can also use both together. Set up an Antora repository, copy the docToolchain wrapper to it, and you can use docToolchain for tasks like exportExcel.

username_0: For my database-documentation-generator I decided to use Antora: https://datahandwerk.github.io/

- Antora default UI can be used nearly "out of the box" and the result is fine

- Antora navigation concept is fine to generate navigation depending on database structure

On the other hand - and compared to docToolchain

- It is more elaborate to understand and set up Antora than docToolchain

- xref are very powerful, but they are a one-way street and only work within Antora

- the sources of the documentation must be in a certain structure

- It is not possible to use any content outside the Antora structure as sources. Or only with great difficulty or effort.

- That's why "code-as-docs" is rather not possible, since code is usually not distributed in folders in an Antora-appropriate form

I wrote a blog post in German: http://datahandwerk.aisberg.de/2021-04-20-docs-code-mit-asciidoc-und-antora/

username_1: thanx for this valuable feedback and your blog post! |

srobo/tasks | 1116538020 | Title: Organise prizes for the competition

Question:

username_0: The prizes for the various award categories need organisation.

### Original

[comp/prizes/main](https://github.com/srobo/recurring-tasks/blob/master/comp/prizes/main.yaml)

### Dependencies

* #857 Acquire trophies

* #858 Acquire edible prizes

* #860 Distribute certificates

* #867 Organise the SR2022 post-finals sequence

* #868 Have a rehearsal of the post-finals sequence |

bastiW/event-ticket-plus-shortcode | 537115755 | Title: Update to work with latest release of event ticket plus.

Question:

username_0: Hi there!

Have you any plan to update the code to work with the latest release of event ticket plus?

Answers:

username_1: No, as I don’t maintain this project anymore. If you are willing to contribute, I can make you admin for this project. |

data-carpentry-for-agriculture/trial-lesson | 570187308 | Title: Reordering sequence

Question:

username_0: - [ ] Brittani and Aolin do a reordering email draft or slack message

- [ ] Group review the reorder theory

- [ ] divide and conquer the implementation of reordering

Answers:

username_0: Brittani laid out the order in Slack, here's the composited reshuffling for group consideration and commentary: https://docs.google.com/document/d/1wNjoRIHxDDo987jRUpKiI-ohcTeEAtynJkG5PzKNqAk/edit#

username_1: Reorg up, closing.

Status: Issue closed

|

sindresorhus/is-html | 267551673 | Title: json string containing html returns true

Answers:

username_1: Well, that's still valid HTML. If you change the extension from `.json` to `.html`, the HTML part of it will render. HTML can be almost anything, so not really possible to do a better check than this except for checking anything it can't be or use machine learning. I'm happy to accept PRs to improve the detection though.

Status: Issue closed

|

contradictioned/areweideyet | 113194227 | Title: VS Code

Question:

username_0: VS Code totally has syntax highlighting for Rust out of the box.

Since it is based on the same core as Atom, I'd likely classify it in the same category as Atom, so text editors.

Answers:

username_1: Do you know if there is more that is supported by VS Code?

Like, can the atom plugins be used there?

Because if it only highlights .rs files, I'd tend not to include it (yet).

And for fairness reasons maybe put it into a list like "syntax highlighting supported" together with geany...

username_2: There is now a plugin for Rust: https://marketplace.visualstudio.com/items/saviorisdead.RustyCode. It completes code using Racer and formats code using rustfmt.

username_1: It already works fine. I think that's enough to include it ;)

Status: Issue closed

|

kotct/dot | 254458961 | Title: CI: Symbol's function definition is void: assert

Question:

username_0: In CI, we're getting errors now with `assert` not being defined. We should definitely have that defined, and I think it's probably something up with byte-compilation or how we're loading `cl` to do assertions.

For reference, here is the [first build where this showed up, Travis build #139](https://travis-ci.org/kotct/dot/builds/268922848).<issue_closed>

Status: Issue closed |

PaddlePaddle/Paddle | 360313922 | Title: Long idle time after loading a checkpoint, before the first training step

Question:

username_0: ```python

def _train_by_any_executor(self, event_handler, exe, num_epochs, reader):
    if self.checkpoint_cfg:
        epochs = [
            epoch_id for epoch_id in range(num_epochs)
            if epoch_id >= self.checkpoint_cfg.epoch_id
        ]
    else:
        epochs = [epoch_id for epoch_id in range(num_epochs)]

    for epoch_id in epochs:
        event_handler(BeginEpochEvent(epoch_id))
        for step_id, data in enumerate(reader()):
            if self.__stop:
                if self.checkpoint_cfg:
                    self._clean_checkpoint()
                return

            if self.checkpoint_cfg and self.checkpoint_cfg.load_serial \
                    and self.checkpoint_cfg.step_id >= step_id and self.checkpoint_cfg.epoch_id == epoch_id:
                continue

            begin_event = BeginStepEvent(epoch_id, step_id)
            event_handler(begin_event)
            if begin_event.fetch_metrics:
                metrics = exe.run(feed=data,
                                  fetch_list=[
                                      var.name
                                      for var in self.train_func_outputs
                                  ])
            else:
                metrics = exe.run(feed=data, fetch_list=[])

            if self.checkpoint_cfg:
                self._save_checkpoint(epoch_id, step_id)
            event_handler(EndStepEvent(epoch_id, step_id, metrics))
        event_handler(EndEpochEvent(epoch_id))
    if self.checkpoint_cfg:
        self._clean_checkpoint()

```

` if self.checkpoint_cfg and self.checkpoint_cfg.load_serial \

and self.checkpoint_cfg.step_id >= step_id and self.checkpoint_cfg.epoch_id == epoch_id:

continue`

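This section hurts performance badly when reading samples is slow and the total amount of training data is large (for example, when reading one batch takes 1 second and there are 20,000 batches). If a checkpoint has step_id 5000, doesn't loading it mean reading data idly for 5000 seconds before training actually starts?

With shuffling enabled, reusing some of an epoch's data for training a second time shouldn't be much of a problem, should it?

I was wondering what was going on: for a whole afternoon, event_handler produced no output at all.

I suggest adding a parameter to discard the checkpoint's step_id.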
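A minimal sketch of the requested option, assuming a hypothetical helper `resume_steps` and a hypothetical flag `ignore_step_id`; neither is part of Paddle's API. It isolates the skip condition from the loop quoted above: by default the steps up to the checkpoint's `step_id` are read but not trained on, while with the flag set the epoch restarts from its first batch and nothing is read idly.

```python
def resume_steps(reader, epoch_id, ckpt_epoch_id, ckpt_step_id,
                 ignore_step_id=False):
    """Yield (step_id, data) for the steps of one epoch that should be trained.

    `ignore_step_id` is a hypothetical flag, not an existing Paddle parameter.
    """
    for step_id, data in enumerate(reader()):
        already_done = (epoch_id == ckpt_epoch_id and step_id <= ckpt_step_id)
        if already_done and not ignore_step_id:
            # The batch has already been read here; only the training step is skipped.
            continue
        yield step_id, data


def toy_reader():
    # Stand-in for a slow data reader (illustrative only).
    return iter(["b0", "b1", "b2", "b3", "b4"])


if __name__ == "__main__":
    print(list(resume_steps(toy_reader, 0, 0, 2)))
    print(list(resume_steps(toy_reader, 0, 0, 2, ignore_step_id=True)))
```

The trade-off is the one raised in the question: with the flag set, some batches of the resumed epoch are trained on twice, which is likely acceptable when the reader shuffles.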

Answers:

username_1: Thank you for the suggestion. May I ask whether you are using Paddle v2 or Paddle Fluid?

username_0: Fluid

username_2: Thank you for the suggestion. I will follow up on the optimization. |

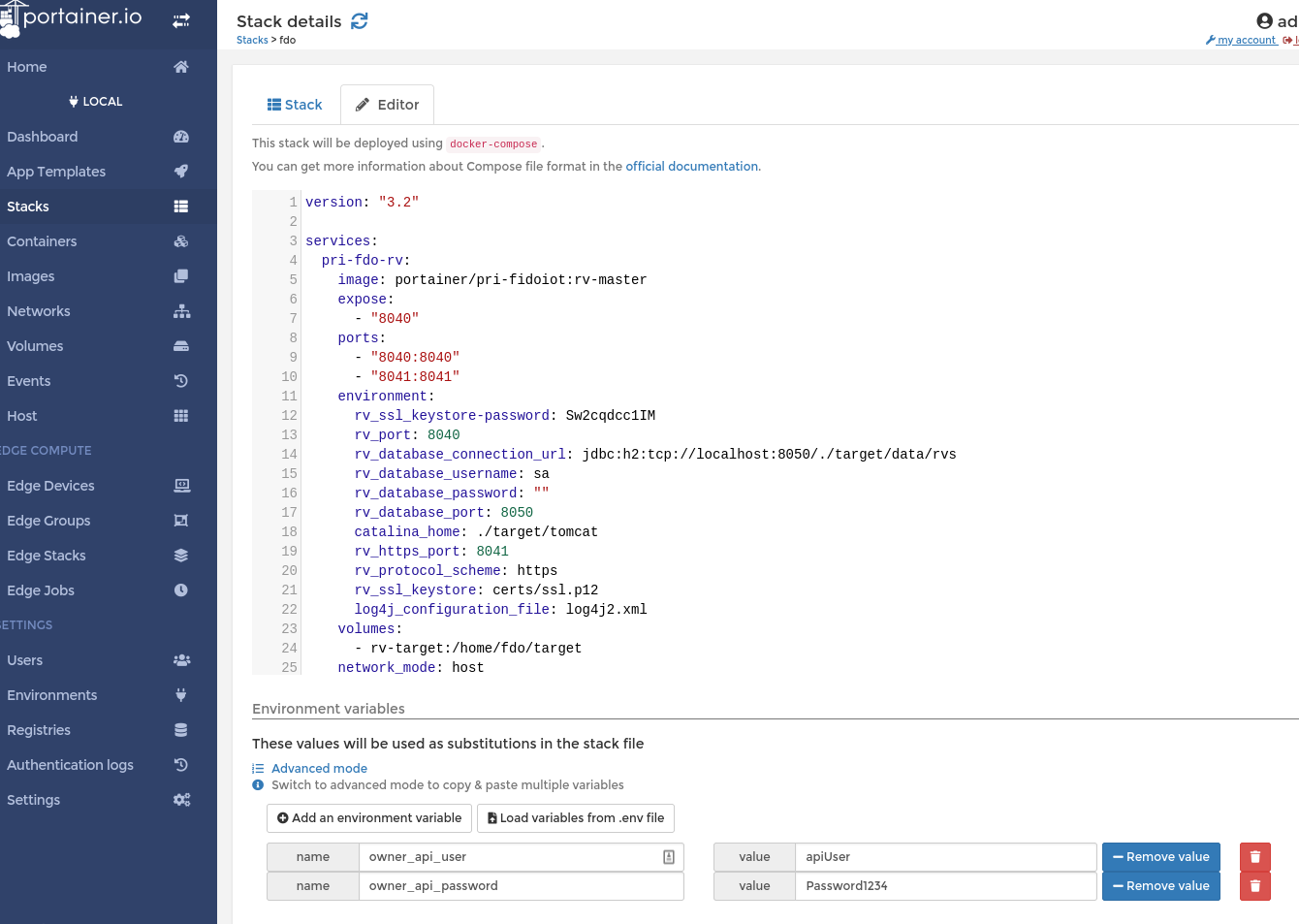

portainer/portainer | 1127751491 | Title: Search box in the stacks templates and make editor larger

Question:

username_0: It would be so much easier to use if this template editor had a search feature. Currently the browser search doesn't search the text box, so the editor would need its own search if we want to be able to search through larger templates.

Also, I find the editor too small even on a large monitor. Could we move the variables to the top and make them collapsible so that the editor can fill more of the browser window?

And finally the two tables for name/value pairs in the variables section could be just one table with column titles (name and value).

|

coronalabs/corona | 990418045 | Title: App not building as before (only changed the build version): java.lang.OutOfMemoryError or java heap space error

Question:

username_0: **Attach your build.settings**

settings =

{

window =

{

defaultMode = "normal",

defaultViewWidth = 720,

defaultViewHeight = 720,

resizable = true,

enableCloseButton = true,

enableMinimizeButton = true,

suspendWhenMinimized = true,

titleText = {

-- The "default" text will be used if the system is using a language and/or

-- country code not defined below. This serves as a fallback mechanism.

default = "Aterramentos (Omniscience 42)",

-- This text is used on English language systems in the United States.

-- Note that the country code must be separated by a dash (-).

["en-us"] = "Aterramentos (Omniscience 42)",

-- This text is used on English language systems in the United Kingdom.

-- Note that the country code must be separated by a dash (-).

["en-gb"] = "Aterramentos (Omniscience 42)",

-- This text is used for all other English language systems.

["en"] = "Aterramentos (Omniscience 42)",

-- This text is used for all French language systems.

["fr"] = "Aterramentos (Omniscience 42)",

-- This text is used for all Spanish language systems.

["es"] = "Aterramentos (Omniscience 42)",

},

},

orientation =

{

default ="portrait",

content = "portrait",

supported =

{

"portrait",

},

},

android =

{

usesExpansionFile = false,

useGoogleServicesJson = false,

largeHeap = true,

usesPermissions =

{

"android.permission.VIBRATE",

"android.permission.INTERNET",

"com.android.vending.CHECK_LICENSE",

"android.permission.WRITE_EXTERNAL_STORAGE",

-- Permission to retrieve current location from the GPS.

"android.permission.ACCESS_FINE_LOCATION",

-- Permission to retrieve current location from WiFi or cellular service.

"android.permission.ACCESS_COARSE_LOCATION",

},

},

plugins =

{

["CoronaProvider.native.popup.quickLook"] =

[Truncated]

{

publisherId = "tech.scotth",

marketplaceId="1316qz",

},

["plugin.notifications.v2"] =

{

publisherId = "com.coronalabs"

},

--["plugin.pasteboard"] =

--{

-- publisherId = "com.coronalabs",

--},

--["plugin.voiceToText"] =

--{

-- publisherId="tech.scotth",

-- marketplaceId = "<EMAIL>",

--},

},

}

Status: Issue closed

Answers:

username_0: My application demands a lot of processes to happen at the same time (it's not a game).

Builds 3649 and 3654 were tested initially.

It worked properly on build 2021.3642.

Building on: Windows 7-10

Building for: Android

Regarding this:

Full build log

Enable full build log:

On Windows:

reg ADD "HKEY_CURRENT_USER\Software\Ansca Corona\Corona Simulator\Preferences" /f /v debugBuildProcess /d 5

Didn't manage to do that. I'm not very good with those registry things ://

I got this error when I had a ".bin" file larger than 40 MB inside the project:

Execution failed for task ':App:signReleaseBundle'.

A failure occurred while executing com.android.build.gradle.internal.tasks.FinalizeBundleTask$BundleToolRunnable

java.lang.OutOfMemoryError (no error message)

I got this error when I had a ".mp4" file larger than 40 MB inside the project:

Execution failed for task ':App:signReleaseBundle'.

A failure occurred while executing com.android.build.gradle.internal.tasks.FinalizeBundleTask$BundleToolRunnable

Java heap space

Attach your build.settings

settings =

{

window =

{

defaultMode = "normal",

defaultViewWidth = 720,

defaultViewHeight = 720,

resizable = true,

enableCloseButton = true,

enableMinimizeButton = true,

suspendWhenMinimized = true,

titleText =

{

-- The "default" text will be used if the system is using a language and/or

-- country code not defined below. This serves as a fallback mechanism.

default = "Aterramentos (Omniscience 42)",

-- This text is used on English language systems in the United States.

-- Note that the country code must be separated by a dash (-).

["en-us"] = "Aterramentos (Omniscience 42)",

-- This text is used on English language systems in the United Kingdom.

-- Note that the country code must be separated by a dash (-).

["en-gb"] = "Aterramentos (Omniscience 42)",

-- This text is used for all other English language systems.

["en"] = "Aterramentos (Omniscience 42)",

-- This text is used for all French language systems.

["fr"] = "Aterramentos (Omniscience 42)",

-- This text is used for all Spanish language systems.

["es"] = "Aterramentos (Omniscience 42)",

},

},

[Truncated]

},

},

plugins =

{

["CoronaProvider.native.popup.quickLook"] =

{

publisherId = "com.coronalabs",

supportedPlatforms = { iphone = true },

},

["plugin.zip"] =

{

publisherId = "com.coronalabs"

},

["plugin.notifications.v2"] =

{

publisherId = "com.coronalabs"

},

}

} |

FlorentF9/DeepTemporalClustering | 663340696 | Title: variable time step

Question:

username_0: Hi in the documentation for the DTC object, found in DeepTemporalClustering.py, it is indicated that the timesteps param can be variable. However when I instantiate as follows:

# Instantiate model

dtc = DTC(n_clusters=3,

input_dim=X_train.shape[-1],

timesteps=None,

n_filters=50,

kernel_size=10,

strides=1,

pool_size=None,

n_units=[50, 1],

alpha=1,

dist_metric='eucl',

cluster_init='kmeans',

heatmap=False)

I get an error. There is an assert which raises a TypeError. Should I be using 0 instead of None?

Answers:

username_1: I assume the error is thrown by the pooling layer. You can use variable series length by setting `timesteps=None` (because the architecture is fully convolutional and recurrent), however you can't set `pool_size=None`.

username_0: They don't work when I assign timesteps=None

Could you show an example of how you would instantiate the DTC object for a variable-length dataset?

username_1: You are right, I have to take a look at this when I have time. I keep this issue open for now.

username_1: I took a quick look. Actually I was stuck with the variable timestep because of two things:

* Decoder: it needs to know the dimension of its input and I found no way around this. If you put a `None` there, then how is it supposed to know the length of the sequence to reconstruct?

* TSClusteringLayer: to initialize the cluster center weights, the dimension is needed and it depends on the timesteps. I don't see how we can define cluster centers without fixing their length with this architecture.

In a nutshell, I think the timesteps cannot be variable in DTC. But if anyone has an idea about it, please tell! |

davide-casiraghi/ci-global-calendar | 535436266 | Title: Field disappearing on save action when some required fields are missing in EVENT CREATE view

Question:

username_0: **Describe the bug**

Some fields are disappearing on save action when some required fields are missing in EVENT CREATE view.

These fields are:

- Teachers

- Venue

- Organizers

**To Reproduce**

Steps to reproduce the behavior:

1. Login

2. Click on Manager > New event

3. Fill in all the fields except a required one, for instance the description

4. Save

5. See fields disappearing

**Expected behavior**

The field value stays<issue_closed>

Status: Issue closed |

HarishTeens/rpsgames | 529337629 | Title: Chat UI not updated

Question:

username_0: When the "joiner" joins the game, the "creator" chat is not updated and its opponent name is not displayed in the chatbox. In other words: only the "joiner" can see its opponent name in the chatbox.

https://github.com/username_1/rpsgames/pull/7/files?file-filters%5B%5D=.js#r344139625

May I fix it? If that's OK, I'll also use this occasion to do a bit of code refactoring and variable renaming.

Answers:

username_1: Hey @username_0 , sorry for the late reply , yes you can fix it. Go ahead |

pivotal/skenario | 620972024 | Title: Run simulation in-memory

Question:

username_0: Currently stock movements are written to an Sqlite database. And simulation results are returned from queries on the database. This is nice and flexible, but requires a lot of IO. And disk storage.

To make the simulation run much faster, we should store all the relevant data in memory. We don't necessarily need to store every single stock movement either, if we know what metrics we want to collect up front. (If the simulation is deterministic end-to-end then we can always rerun to get more detailed metrics).

Answers:

username_0: From 4.5 seconds to 200 milliseconds!

```

2020/06/08 15:11:10 "POST http://localhost:3000/run HTTP/1.1" from [::1]:43940 - 200 834337B in 237.825889ms

2020/06/08 15:11:18 "POST http://localhost:3000/run HTTP/1.1" from [::1]:43940 - 200 834113B in 4.533779147s

```

username_1: I think we could make it even faster if we get rid of the database completely.

Our response to client should include TallyLine, ResponseTimes, RequestsPerSecond.

Here is a suggested solution how to calculate them.

TallyLine:

type TallyLine struct {

OccursAt int64

StockName string

KindStocked string

Tally int64

}

There is a completed movements list - completed.

Sort completed by occursAt field

Create a map:

Key -> StockName

Value -> Tally (the number of entities in stock)

For completed {

If KindStocked in (request, desired, replica){

Update map -> map.put(StockName, +/- 1)

Add to result new TallyLine

}

}

ResponseTimes:

type ResponseTime struct {

ArrivedAt int64

CompletedAt int64

ResponseTime int64

}

Create a map reqToMovements:

Key -> requestEntity

Value -> list(movements)

Create a result map result

arriveAt

completedAt

For req : reqToMovements.keySet() {

For mov : reqToMovements.get(req){

arriveAt = min(arriveAt, mov.occursAt)

completedAt = max(completedAt, mov.occursAt)

}

result.put(req, ResponseTime{arriveAt, completedAt , completedAt-arriveAt})

}

RequestsPerSecond:

type RPS struct {

Second int64

Requests int64

}

Create a map timeToCount :

Key -> occursAt

Value -> numberOfRequest

For mov : completed{

If mov.kind == “arrive_at_routing” {

timeToCount.put(mov.occursAt, +1)

}

}
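The three calculations suggested above could be sketched in Python roughly as follows. This is only an illustration under stated assumptions: the `Movement` fields (`entity`, `from_stock`, `to_stock` and so on) are hypothetical names, not skenario's actual types, and the tally is assumed to decrease in the source stock and increase in the target stock for each movement.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Movement:
    # Hypothetical shape of a completed movement; field names are assumptions.
    occurs_at: int
    kind: str
    entity: str
    kind_stocked: str
    from_stock: str
    to_stock: str


def tally_lines(completed):
    """Running tally per stock as (occurs_at, stock_name, kind_stocked, tally)."""
    tallies = defaultdict(int)
    lines = []
    for mov in sorted(completed, key=lambda m: m.occurs_at):
        if mov.kind_stocked not in ("request", "desired", "replica"):
            continue
        tallies[mov.from_stock] -= 1
        tallies[mov.to_stock] += 1
        for stock in (mov.from_stock, mov.to_stock):
            lines.append((mov.occurs_at, stock, mov.kind_stocked, tallies[stock]))
    return lines


def response_times(completed):
    """Map each request entity to (arrived_at, completed_at, response_time)."""
    spans = {}
    for mov in completed:
        if mov.kind_stocked != "request":
            continue
        first, last = spans.get(mov.entity, (mov.occurs_at, mov.occurs_at))
        spans[mov.entity] = (min(first, mov.occurs_at), max(last, mov.occurs_at))
    return {e: (a, c, c - a) for e, (a, c) in spans.items()}


def requests_per_second(completed):
    """Count arrivals at routing per occurs_at value."""
    counts = defaultdict(int)
    for mov in completed:
        if mov.kind == "arrive_at_routing":
            counts[mov.occurs_at] += 1
    return dict(counts)
```

As in the pseudocode, requests are counted per `occurs_at` value; if that timestamp is finer than one second, it would first need to be bucketed into seconds.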

Status: Issue closed

|

kubernetes-sigs/kind | 531012089 | Title: deployment keeps not ready

Question:

username_0: Debug Mode: false

Server:

Containers: 1

Running: 1

Paused: 0

Stopped: 0

Images: 50

Server Version: 19.03.5

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: b34a5c8af56e510852c35414db4c1f4fa6172339

runc version: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 4.9.184-linuxkit

Operating System: Docker Desktop

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 1.952GiB

Name: docker-desktop

ID: QGQZ:K3HP:GQMO:NCF4:QTSV:MLRL:CGLI:RRKQ:PQPJ:77M4:NT2W:IOPA

Docker Root Dir: /var/lib/docker

Debug Mode: true

File Descriptors: 35

Goroutines: 47

System Time: 2019-12-02T09:40:48.1635519Z

EventsListeners: 1

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Registry Mirrors:

https://hub-mirror.c.163.com/

Live Restore Enabled: false

Product License: Community Engine

- OS (e.g. from `/etc/os-release`):

windows 10 with docker desktop

Status: Issue closed

Answers:

username_0: It runs normally after I deleted all the previous kube configs |

kubernetes/minikube | 796738188 | Title: minikube is not starting

Question:

username_0: <!--- Please include the "minikube start" command you used in your reproduction steps --->

**Steps to reproduce the issue:**

C:\Program Files\Kubernetes\Minikube>minikube start --driver=ssh

* minikube v1.17.1 on Microsoft Windows 10 Pro 10.0.18363 Build 18363

* Using the ssh driver based on user configuration

* Starting control plane node minikube in cluster minikube

! StartHost failed, but will try again: config: please provide an IP address

* Failed to start ssh bare metal machine. Running "minikube delete" may fix it: config: please provide an IP address

X Exiting due to GUEST_PROVISION: Failed to start host: config: please provide an IP address

<!--- TIP: Add the "--alsologtostderr" flag to the command-line for more logs --->

**Full output of failed command:**

**Full output of `minikube start` command used, if not already included:**

**Optional: Full output of `minikube logs` command:**

<details>

</details>

Answers:

username_1: Same as #10266, can we provide some better driver documentation or make it more clear somehow ?

https://minikube.sigs.k8s.io/docs/drivers/ssh/

When you supply your own VM, you _will_ need to provide the connection parameters for it (at a minimum, the IP)

Currently there are also some issues with the default SSH keys, so you need to provide those explicitly: https://github.com/kubernetes/minikube/issues/10289#issuecomment-769212722

username_0: thank you so much for helping me.

Status: Issue closed

|

zhanglei1949/Game-21 | 66618701 | Title: comment for your codes

Question:

username_0: 1. Your code style is very good, with explanations. You use a good way of writing functions for this program. The names of your functions are understandable. Import some functions.

2. You check your input, but some bugs still occur. If you enter more than one letter, it asks you more than one question.

3. The game can only run once; you can't make it run twice or more.

Answers:

username_1: Ok,I have improved it. |

firasdib/Regex101 | 166501539 | Title: Why not use local font in regex101?

Question:

username_0: Hi,

There is no doubt that regex101 is the best regular expression test site I have encountered.

But most of us have a problem: we cannot visit fonts.googleapis.com.

As a result, we can hardly open regex101 to use it.

So I hope regex101 can use local fonts and CSS, so that we can easily visit and use regex101.

Thank you very much.

Answers:

username_1: To describe the issue in slightly more detail:

When google fonts is blocked for some reason, the initialization process for regex101 hangs waiting for the fonts to load forever.

The problem is that the initialization process only ever waits for the first font to be loaded, and instead of failing with a timeout it hangs forever (so fallback fonts are never used or considered). While regex101 works fine with different fonts, if the first one is not available, you will never finish loading.

Local CSS is perhaps not the right way to fix this, and perhaps a global timeout on initialization might be useful, or at the very least consider fallback fonts.

username_2: Would it be a good idea to switch to [jQuery CDN](https://code.jquery.com/)? [This post](http://edjiang.com/post/97299595332/how-googles-cdn-prevents-your-site-from-loading) suggests it as a solution.

username_3: @username_2 not so much, i can remember the times when jQuery site was hacked. So google or local is much more preferred over another CDN

username_4: How about checking whether `$` is defined after attempting to load from Google APIs? If it cannot be loaded from the server, try to use the local version. I have the same problem in my designs from time to time, and this is the solution that I use.

P.S. @username_0 I knew Internet blocking and censorship was a thing in China, but your government also blocks access to Google APIs and jQuery? What do they think with themselves? :|

username_5: v2 does not have jquery as a dependency, and does not make use of any CDN, so this will not be a problem in the future. For now we should just try to work around it.

Status: Issue closed

|

handsontable/docs | 405603669 | Title: Add more examples to the documentation

Question:

username_0: @wojciechczerniak commented on [Tue Oct 16 2018](https://github.com/handsontable/angular-handsontable/issues/126)

### Description

We should improve our documentation by adding most requested examples:

- [ ] Custom ID, Class, Style and other attributes

- [x] Binding settings with an object

- [x] Binding settings with an attributes

- [ ] Binding callbacks/hooks #122 #123

- [x] Custom Context Menu

- [x] Custom Editor

- [x] Custom Renderer

- [ ] Custom Validator (https://github.com/handsontable/angular-handsontable/issues/126#issuecomment-437011533)

- [x] Language change

- [ ] Integration with state manager

- [x] Referencing the Handsontable instance

- [ ] Interacting with Handsontable in your app (ie. checkbox, button)

Bonus:

- [ ] Examples are broken in Firefox & IE #136

---

@Calidus commented on [Thu Nov 08 2018](https://github.com/handsontable/angular-handsontable/issues/126#issuecomment-437011533)

Validation could also use some examples.<issue_closed>

Status: Issue closed |

nss-day-cohort-22/movie-history-kindhearted-toads | 277175607 | Title: User Login

Question:

username_0: __Given__ that a user *is not* logged into the app

__When__ they load the app

__Then__ the user is offered an affordance to login/register an account

__Given__ that a user *is* logged into the app

__When__ they load the app

__Then__ the user is presented with the home screen |

shlomif/fc-solve | 191136828 | Title: depth_dbm_fc_solver / etc. should validate the input board as containing all the cards and exactly once

Question:

username_0: Success!

--------

Foundations: H-6 C-9 D-5 S-7

Freecells: 8H 9S

: KS

: KH QS JH

: TC 9D

:

:

:

:

:

==

Column 2 -> Column 7

--------

Foundations: H-T C-3 D-5 S-7

Freecells: 4C 5C

: KS

: KH QS JH

: TC 9D

:

:

:

:

:

==

Column 2 -> Column 7

--------

Foundations: H-T C-3 D-5 S-7

Freecells: 4C 5C

: KS

: KC QD

: QH JC

: TD

: 9S

: 9D 8C 7D

: 7C

: 6C

==

Column 7 -> Column 5

--------

Foundations: H-4 C-Q D-J S-0

Freecells: 2S 3S

: KS

: KC

: KH

: QD

:

:

:

:

==

Column 3 -> Column 1

--------

Foundations: H-/ C-c D-- S-s

Freecells: 2C 6C

: KD

: JS

: TS

: TD

: TC

: 9D

: 8D

: 5C

==

END

handle_and_destroy_instance_solution end

```

or worse, it exits with an obscure exception. It should <b>validate</b> the board for extra/missing cards and not start the solving process if the board does not validate. With a regression test!
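The check asked for here could look roughly like the following Python sketch. It is not fc-solve's implementation: the function name `validate_board` is hypothetical, and the parsing assumes the board layout shown in the output above (`Foundations:` with `suit-rank` tokens, `Freecells:`, and columns starting with `:`), with `0` meaning an empty foundation and `-` meaning an empty freecell.

```python
from collections import Counter

RANKS = "A23456789TJQK"
SUITS = "HCDS"


def validate_board(text):
    """Return a list of problems; an empty list means every card appears once."""
    seen = Counter()
    problems = []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Foundations:"):
            for token in line.split()[1:]:
                suit, _, top = token.partition("-")
                if (len(suit) != 1 or suit not in SUITS
                        or len(top) != 1 or top not in "0" + RANKS):
                    problems.append("bad foundation: " + token)
                    continue
                # A foundation showing rank R holds every card from the ace up to R.
                count = 0 if top == "0" else RANKS.index(top) + 1
                for rank in RANKS[:count]:
                    seen[rank + suit] += 1
        elif line.startswith("Freecells:") or line.startswith(":"):
            for token in line.split(":", 1)[1].split():
                if token == "-":
                    continue  # assumed marker for an empty freecell
                if len(token) == 2 and token[0] in RANKS and token[1] in SUITS:
                    seen[token] += 1
                else:
                    problems.append("bad card: " + token)
    for suit in SUITS:
        for rank in RANKS:
            n = seen[rank + suit]
            if n != 1:
                problems.append(f"{rank}{suit} appears {n} times")
    return problems
```

A solver front end could call this before starting and refuse to run when the returned list is non-empty; the same function would serve as the basis of a regression test.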

Answers:

username_0: Fixed in commit ad0157c6360b02a93fe48b1fd5af2baa8c871b15 . Closing

Status: Issue closed

|

react-component/color-picker | 274952980 | Title: Page scrolls to top when opening color picker

Question:

username_0: Captured a gif of the issue from the [samples page](http://react-component.github.io/color-picker/examples/simple.html)

Answers:

username_1: I found that this bug is caused by ColorPickerPanel.focus(); the source code is in

Going a step further, it is caused by rc-trigger's container, because its top and left position values are always zero.

username_2: Is there any solution found?

username_3: Please deactivate the auto-scroll option

username_4: I played around with this for a while, and wasn't able to create a scenario where this functionality was actually needed. Opened a PR to remove it.

username_1: @username_4 When a color picker is at the edge of a web page and you click it to open the color panel, focus lets the color panel scroll into view. Without it, you may see only part of the color panel.

username_4: @username_1 I understand the idea, but the picker automatically displays above or below, depending on the location. Can you show me an example where the focus/scroll is required?

Here are the top and bottom examples from the PR I opened:

When the input is at the top of the screen:

When the input is at the bottom of the screen:

username_2: @username_3 How do I do that? Any suggestions?

username_3: @username_2 No, I'm just waiting for them to remove the auto-scroll functionality when clicking.

username_4: @username_5 Any chance of merging #58 ?

Status: Issue closed

username_5: bump v0.2.5 |

pyg-team/pytorch_geometric | 1118868434 | Title: Caught AttributeError in DataLoader worker process 0.

Question:

username_0: ### 🐛 Describe the bug

When I run the code:

val_dataloader = torch_geometric.loader.DataLoader(

    val_dataset,

    batch_size=hparams.batch_size,

    num_workers=hparams.num_workers)

for idx,batch in enumerate(val_dataloader):

    print(idx,batch)

I get the following error and don't know how to fix it:

Traceback (most recent call last):

File "/home/aiyicen/00_Script/ares_release/ares/train.py", line 103, in <module>

main()

File "/home/aiyicen/00_Script/ares_release/ares/train.py", line 85, in main

for idx,batch in enumerate(val_dataloader):

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 345, in __next__

data = self._next_data()

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 856, in _next_data

return self._process_data(data)

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 881, in _process_data

data.reraise()

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/_utils.py", line 395, in reraise

raise self.exc_type(msg)

AttributeError: Caught AttributeError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/utils/data/_utils/worker.py", line 178, in _worker_loop

data = fetcher.fetch(index)

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 47, in fetch

return self.collate_fn(data)

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch_geometric/loader/dataloader.py", line 18, in __call__

return Batch.from_data_list(batch, self.follow_batch,

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch_geometric/data/batch.py", line 68, in from_data_list

batch, slice_dict, inc_dict = collate(

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch_geometric/data/collate.py", line 32, in collate

out = cls(_base_cls=data_list[0].__class__) # Dynamic inheritance.

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/torch_geometric/data/batch.py", line 40, in __call__

return super(DynamicInheritance, new_cls).__call__(*args, **kwargs)

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/e3nn-0.1.0-py3.8-linux-x86_64.egg/e3nn/point/data_helpers.py", line 186, in __init__

edge_index, edge_attr = neighbor_list_and_relative_vec(

File "/home/aiyicen/anaconda3/envs/ares/lib/python3.8/site-packages/e3nn-0.1.0-py3.8-linux-x86_64.egg/e3nn/point/data_helpers.py", line 28, in neighbor_list_and_relative_vec

N, _ = pos.shape

AttributeError: 'NoneType' object has no attribute 'shape'

### Environment

torch 1.5.0+cu101

torch-cluster 1.5.7

torch-geometric 2.0.4

torch-scatter 2.0.5

torch-sparse 0.6.7

torch-spline-conv 1.2.0

torchmetrics 0.7.0

torchvision 0.6.0+cu101

cudatoolkit 10.1.243

OS: Ubuntu 20.04.3 LTS

GeForce RTX 3090

I installed PyTorch and PyG from wheels using pip:

pip install torch-1.5.0+cu101-cp38-cp38-linux_x86_64.whl

pip install torch_cluster-1.5.7-cp38-cp38-linux_x86_64.whl

pip install torch_scatter-2.0.5-cp38-cp38-linux_x86_64.whl

pip install torch_sparse-0.6.7-cp38-cp38-linux_x86_64.whl

pip install torch_spline_conv-1.2.0-cp38-cp38-linux_x86_64.whl

pip install torchvision-0.6.0+cu101-cp38-cp38-linux_x86_64.whl

pip install torch_geometric

Answers:

username_1: Do you have a minimal example to reproduce this error? Which example are you running? It looks like somewhere in the code, `data.pos` is referenced, but this attribute does not (yet) exist.
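One possible reading of the traceback, offered as a sketch and not as a confirmed diagnosis: the line `out = cls(_base_cls=data_list[0].__class__)` in `collate.py` appears to construct the batch object with no data arguments, and the sample class's `__init__` then reaches `pos.shape` while `pos` is still `None`. The pure-Python toy below imitates that sequence without importing torch or e3nn. `Points`, `StrictNeighbors`, `TolerantNeighbors`, and `toy_collate` are hypothetical stand-ins, not the real library classes.

```python
class Points:
    """Tiny stand-in for a tensor: holds rows and exposes `.shape`."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.shape = (len(self.rows), 3)


class StrictNeighbors:
    """Hypothetical sample type whose constructor requires `pos`."""

    def __init__(self, pos=None):
        n, _ = pos.shape  # AttributeError when pos is None
        self.pos = pos
        self.num_nodes = n


class TolerantNeighbors:
    """Same idea, but the constructor tolerates being called with no data."""

    def __init__(self, pos=None):
        self.pos = pos
        self.num_nodes = 0 if pos is None else pos.shape[0]


def toy_collate(samples):
    """Create an empty batch of the sample's class first, then fill it in."""
    batch = samples[0].__class__()  # no arguments, so pos is None here
    rows = [row for sample in samples for row in sample.pos.rows]
    batch.pos = Points(rows)
    batch.num_nodes = batch.pos.shape[0]
    return batch
```

With this toy, every `StrictNeighbors` sample can be built and printed individually, yet `toy_collate` raises `AttributeError: 'NoneType' object has no attribute 'shape'`, while the `TolerantNeighbors` variant collates without error. If the real cause is the same, it would explain a dataset that iterates cleanly on its own but fails as soon as a loader tries to batch it.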

username_0: I loaded the dataset from LMDB data with atom3d.datasets.load_dataset, then created the dataloader with torch_geometric.data.DataLoader. My code is as follows:

import atom3d.datasets as da

val_dataset = da.load_dataset(hparams.val_dataset, hparams.filetype,

                              transform=transform)

val_dataloader = torch_geometric.data.DataLoader(

    val_dataset,

    batch_size=hparams.batch_size,

    num_workers=hparams.num_workers)

When I run:

for i,data in enumerate(val_dataloader.dataset):

    print(i,data)

I get this result, and pos is not empty:

0 DataNeighbors(x=[552, 3], edge_index=[2, 27600], edge_attr=[27600, 3], pos=[552, 3], Rs_in=[1], label=[1], id='('1q9a_bps_res4_newfrags', 'S_000681_minimize_008')', file_path='/scratch/users/psuriana/ares_psuriana/data/val')

1 DataNeighbors(x=[552, 3], edge_index=[2, 27600], edge_attr=[27600, 3], pos=[552, 3], Rs_in=[1], label=[1], id='('1q9a_bps_res4_newfrags', 'S_001016_minimize_001')', file_path='/scratch/users/psuriana/ares_psuriana/data/val')

2 DataNeighbors(x=[552, 3], edge_index=[2, 27600], edge_attr=[27600, 3], pos=[552, 3], Rs_in=[1], label=[1], id='('1q9a_bps_res4_newfrags', 'S_001067_minimize_002')', file_path='/scratch/users/psuriana/ares_psuriana/data/val')

3 DataNeighbors(x=[552, 3], edge_index=[2, 27600], edge_attr=[27600, 3], pos=[552, 3], Rs_in=[1], label=[1],

......

18 DataNeighbors(x=[345, 3], edge_index=[2, 17250], edge_attr=[17250, 3], pos=[345, 3], Rs_in=[1], label=[1], id='('1kka_bps_res4_newfrags', 'S_001963_minimize_003')', file_path='/scratch/users/psuriana/ares_psuriana/data/val')

19 DataNeighbors(x=[345, 3], edge_index=[2, 17250], edge_attr=[17250, 3], pos=[345, 3], Rs_in=[1], label=[1], id='('1kka_bps_res4_newfrags', 'S_003526_minimize_003')', file_path='/scratch/users/psuriana/ares_psuriana/data/val')

But when I try to iterate over val_dataloader and print each batch, I get the error:

for idx,batch in enumerate(val_dataloader):

    print(idx,batch)

username_1: Sorry, I have problems reproducing this. Which dataset are you using and what are the values of each attribute in `hparams`?

username_0: I ran train.py with the ares_release/data/lmdbs dataset from https://zenodo.org/record/5088971#.Yf3RQepByuU. I passed the lmdbs/train and lmdbs/val datasets as input and used the default hparams. The code I ran is as follows:

import argparse as ap

import logging

import os

import pathlib

import sys

import atom3d.datasets as da

import dotenv as de

import pytorch_lightning as pl

import pytorch_lightning.loggers as log

import torch_geometric

import wandb

from torch.utils.data import DataLoader

import sys

sys.path.append(r'/home/aiyicen/00_Script/ares_release/ares/')

import data as d

import model as m

root_dir = pathlib.Path(__file__).parent.parent.absolute()

de.load_dotenv(os.path.join(root_dir, '.env'))

logger = logging.getLogger("lightning")

wandb.init(project="ares")

def main():

    parser = ap.ArgumentParser()

    # add PROGRAM level args

    parser.add_argument('train_dataset', type=str, default='/home/aiyicen/00_Script/ares_release/data/lmdbs/train')

    # parser.add_argument('--train_dataset', type=str, default='/home/aiyicen/00_Script/ares_release/data/pdbs/S_000028_476.pdb')

    parser.add_argument('--val_dataset', type=str,default='/home/aiyicen/00_Script/ares_release/data/lmdbs/val')

    # parser.add_argument('--val_dataset', type=str,default='/home/aiyicen/00_Script/ares_release/data/pdbs/S_000041_026.pdb')

    parser.add_argument('-f', '--filetype', type=str, default='lmdb',

                        choices=['lmdb', 'pdb', 'silent'])

    parser.add_argument('--batch_size', type=int, default=1)

    parser.add_argument('--label_dir', type=str, default=None)

    parser.add_argument('--num_workers', type=int, default=20)

    # add model specific args

    parser = m.ARESModel.add_model_specific_args(parser)

    # add trainer args

    parser = pl.Trainer.add_argparse_args(parser)

    hparams = parser.parse_args()

    dict_args = vars(hparams)

    transform = d.create_transform(True, hparams.label_dir, hparams.filetype)

    # DATA PREP

    logger.info(f"Dataset of type {hparams.filetype}")

    logger.info(f"Creating dataloaders...")

    train_dataset = da.load_dataset(hparams.train_dataset, hparams.filetype,

                                    transform=transform)

    train_dataloader = torch_geometric.loader.DataLoader(

        train_dataset,

        batch_size=hparams.batch_size,

        num_workers=hparams.num_workers,

        shuffle=True)

    val_dataset = da.load_dataset(hparams.val_dataset, hparams.filetype,

                                  transform=transform)

    val_dataloader = torch_geometric.data.DataLoader(

        val_dataset,

        batch_size=hparams.batch_size,

        num_workers=hparams.num_workers)

    for i,data in enumerate(val_dataloader.dataset):

        print(i,data)

if __name__ == "__main__":

    logging.basicConfig(stream=sys.stdout,

                        format='%(asctime)s %(levelname)s %(process)d: ' +

                        '%(message)s',

                        level=logging.INFO)

    main()

username_1: Thanks @username_0. Can you say something about what your transform looks like? |

guzzle/psr7 | 125477083 | Title: Relative URI > Can't parse_url if colon is present

Question: