repo_name (string, lengths 4 to 136) | issue_id (string, lengths 5 to 10) | text (string, lengths 37 to 4.84M) |

|---|---|---|

paritytech/substrate | 453487000 | Title: state_queryStorage is too verbose

Question:

username_0: The `state_queryStorage` RPC method enumerates storage entries that were changed between the given blocks. However, the resulting list size is proportional to the number of blocks not the number of changes. It would be great if we could omit the storage entries that were unchanged.

cc @svyatonik

Answers:

username_1: @username_0 Wouldn't it be better to introduce a different RPC method for that then? like `state_diffStorage(blockA, blockB)`?

AFAIR the point of `queryStorage` was to be able to trace all changes that happened since given block.

username_0: What I was trying to convey is that it encodes "no changes" as an empty array, whereas the entry could simply be omitted.

username_1: Oh, is it? Then yeah, we could simplify that. I think we don't do that if changes trie is disabled, so probably the behaviour differs there.
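
A minimal sketch of the simplification discussed above, written in Python for illustration. It assumes the RPC result is a list of per-block entries shaped like `{"block": ..., "changes": [[key, value], ...]}`; that shape, the sample values, and the helper name `drop_empty_change_sets` are assumptions, not taken from the Substrate source.

```python
from typing import Any, Dict, List


def drop_empty_change_sets(result: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only the per-block entries that report at least one change."""
    return [entry for entry in result if entry.get("changes")]


# Hypothetical response: three blocks were queried, only one touched the key.
response = [
    {"block": "0x01", "changes": []},
    {"block": "0x02", "changes": [["0xkey", "0xvalue"]]},
    {"block": "0x03", "changes": []},
]

# Only the entry for block 0x02 survives the filter.
print(drop_empty_change_sets(response))
```

With this kind of filtering the result size would track the number of changes rather than the number of blocks, which is what the original request asks for.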

Status: Issue closed

|

PyCQA/isort | 718503704 | Title: tests/unit/test_pylama_isort.py::TestLinter::test_run fails from the PyPI tarball

Question:

username_0:

```

E AssertionError: assert not [{'col': 0, 'lnum': 0, 'text': 'Incorrectly sorted imports.', 'type': 'ISORT'}]

E + where [{'col': 0, 'lnum': 0, 'text': 'Incorrectly sorted imports.', 'type': 'ISORT'}] = <bound method Linter.run of <isort.pylama_isort.Linter object at 0x7fbcd56e1d30>>('/isort-5.6.1/isort/api.py')

E + where <bound method Linter.run of <isort.pylama_isort.Linter object at 0x7fbcd56e1d30>> = <isort.pylama_isort.Linter object at 0x7fbcd56e1d30>.run

E + where <isort.pylama_isort.Linter object at 0x7fbcd56e1d30> = <tests.unit.test_pylama_isort.TestLinter object at 0x7fbcd57c85e0>.instance

E + and '/isort-5.6.1/isort/api.py' = <function join at 0x7fbcd6656550>('/isort-5.6.1/isort', 'api.py')

E + where <function join at 0x7fbcd6656550> = <module 'posixpath' from '/usr/local/lib/python3.8/posixpath.py'>.join

E + where <module 'posixpath' from '/usr/local/lib/python3.8/posixpath.py'> = os.path

tests/unit/test_pylama_isort.py:15: AssertionError

=========================== short test summary info ============================

FAILED tests/unit/test_pylama_isort.py::TestLinter::test_run - AssertionError...

============================== 1 failed in 0.09s ===============================

ERROR: /isort-5.6.1/isort/api.py Imports are incorrectly sorted and/or formatted.

```

Reproducer Dockerfile:

```

FROM python:3.8

RUN wget https://files.pythonhosted.org/packages/source/i/isort/isort-5.6.1.tar.gz && \

tar xf isort-5.6.1.tar.gz && \

pip install pytest pylama

WORKDIR isort-5.6.1

RUN pytest -s -vv tests/unit/test_pylama_isort.py::TestLinter::test_run

```

Status: Issue closed

Answers:

username_1: I believe this is due to the test as it was written being too reliant on living within the exact source tree. If that's the cause the release of 5.6.2 should resolve this.

Thanks!

~Timothy

username_0: Works fine with 5.6.2, thanks! |

canjs/can-connect | 173294753 | Title: Syntax error being swallowed by CanJS

Question:

username_0: I have the following code.

```

connect([

connectConstructor,

connectMap,

connectDataParse,

connectStore

], {

Map: Player,

getData: function (params) {

var playerStats = PlayerStats.get({

options: params.options,

id: params.id,

year: params.year

});

var playerStat186 = PlayerStat186.get({

options: params.options,

id: params.id

});

return Promise.all([playerStats, playerStat186]);

},

parseInstanceData: function (data) {

const personalStats = data[1];

const personalStat186 = data[2];

return {

**playerId: xxx.playerId,**

name: personalStats.name

};

}

});

```

The bold line, above, is invalid with xxx not being defined. When I run the code, JS stops at the error, but no message appears on the console.

Answers:

username_1: What does this have to do with can-connect? can-connect isn't preventing errors surely.

username_0: parseInstance is being called by can-connect, is it not?

username_1: Ah, so you are saying that errors in parseInstanceData do not bubble to the Promise when calling .get() or .getList()?

Status: Issue closed

username_0: Yes, the error is being captured and returned in the higher level catch. Issue closed.
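
The behaviour described here (an error that is captured by the asynchronous wrapper and only shows up when someone asks for it) is not specific to JavaScript promises. As a hedged illustration in Python, not can-connect code, the sketch below uses `concurrent.futures`; the function name mirrors the report and the sample data is made up.

```python
from concurrent.futures import ThreadPoolExecutor


def parse_instance_data(data):
    # `xxx` is deliberately undefined, mirroring the bold line in the report.
    return {"playerId": xxx["playerId"], "name": data[0]["name"]}  # noqa: F821


with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(parse_instance_data, [{"name": "Ada"}])

# Nothing has been printed so far: the NameError is stored on the future
# and only becomes visible when the caller inspects it.
error = future.exception()
print(type(error).__name__, error)
```

The equivalent step in the original code is attaching a `.catch()` to the promise returned by `.get()` or `.getList()`.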

username_1: So is this not a bug? Did you just forget to handle the .catch() in the promise?

username_0: That is correct. |

lexbor/lexbor | 991971912 | Title: Regression: head and body selection broken on Git master

Question:

username_0: Head and body selection is broken on Git, but works in 2.1.0. This is a regression introduced in 0c4010b10a922a45513d67beec0ddd29c2951f85.

Reproduction example:

```c

#include <lexbor/html/html.h>

void serialize(lxb_dom_element_t* node, lxb_dom_document_t* document) {

lexbor_str_t* html_str = lexbor_str_create();

lxb_html_serialize_tree_str(lxb_dom_interface_node(node), html_str);

printf("%s\n", html_str->data);

lexbor_str_destroy(html_str, document->text, true);

}

int main(void) {

static const lxb_char_t html[] = "<!doctype html><html><head></head><body></body></html>";

lxb_html_document_t* html_document = lxb_html_document_create();

lxb_html_document_parse(html_document, html, sizeof(html) - 1);

lxb_dom_document_t* document = &html_document->dom_document;

printf("HEAD:\n");

lxb_dom_element_t* head = lxb_dom_interface_element(lxb_html_document_head_element(html_document));

serialize(head, document);

printf("\nBODY:\n");

lxb_dom_element_t* body = lxb_dom_interface_element(lxb_html_document_body_element(html_document));

serialize(body, document);

return 0;

}

```

Expected result:

```

HEAD:

<head></head>

BODY:

<body></body>

```

Result after 0c4010b10a922a45513d67beec0ddd29c2951f85:

```

HEAD:

[1] 368728 segmentation fault (core dumped) ./test

```

Head is empty and body crashes. If you comment out the lines where the head is selected and serialized, it won't crash anymore, but you will get the head instead of the body:

```

BODY:

<head></head>

```

Answers:

username_1: @username_0

Something I am doing wrong:

```HTML

HEAD:

<head></head>

BODY:

<body></body>

```

Current GitHub code.

username_0: Make sure you are also using the correct version of the library at runtime. When I set `LIBRARY_PATH` and `LD_LIBRARY_PATH` to the CMake build dir, I can reproduce the bug. As soon as I unset `LD_LIBRARY_PATH`, the bug is gone, because the 2.1.0 release installed via apt is used.

username_0: I figured out what's going on here: Turns out, this happens if you have the old headers in your `CPATH` during compilation, but are linking against the new version. Are there some ABI incompatibilities between the versions?

username_2: In my view, this library was given a major version number too early. The conventional naming scheme is:

* first release : 0.0.0 (in the form major_version.minor_version.micro_version)

* before a release :

- if only fixed bugs : micro_version++

- if API is added : minor_version++ and micro_version set to 0

- if API or ABI is broken : major_version++ and minor_version and micro_version set to 0

Note that setting a major version is an important step. It means that the API must be stable during the development. Imho, the current lexbor version should have been something like 0.*.*, like 0.2.4

I don't know if it is possible to restart the versioning from the beginning, but I think it is too late.
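
The bump rules listed above can be sketched in a few lines of Python. This is only an illustration of the scheme as described in this comment; the function name `bump` and the change labels `"fix"`, `"feature"`, and `"breaking"` are made up for the example.

```python
from typing import Tuple

Version = Tuple[int, int, int]  # (major, minor, micro)


def bump(version: Version, change: str) -> Version:
    """Return the next version for a given kind of change."""
    major, minor, micro = version
    if change == "fix":        # only bugs fixed
        return (major, minor, micro + 1)
    if change == "feature":    # API added, nothing broken
        return (major, minor + 1, 0)
    if change == "breaking":   # API or ABI broken
        return (major + 1, 0, 0)
    raise ValueError(f"unknown change kind: {change!r}")


print(bump((0, 2, 4), "fix"))       # (0, 2, 5)
print(bump((0, 2, 4), "feature"))   # (0, 3, 0)
print(bump((0, 2, 4), "breaking"))  # (1, 0, 0)
```

Under this scheme, a header/library mismatch like the one reported in this thread would be expected only across a major version bump.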

username_0: Sorry to nag, but would it be possible to make a new release soon? The develop branch has various fixes for issues with the current stable release for which no workarounds exist, as well as some issues for which workarounds exist that I would like to get rid of.

username_1: @username_0

If within a week I do not publish the parser of the CSS properties, then there will be a release.

If I publish a property parser, then there will be releases too :)

Anyway, give me a week.

username_0: How's the release coming along? :see_no_evil:

username_1: @username_0

Unfortunately, everything is dragging on. I rewrote a sizable part of the CSS parser, and this also affected the selectors.

I would love to make a release with these changes.

username_0: What are the implications of the rewrite? |

mdgriffith/style-elements | 235450091 | Title: How to compose across modules?

Question:

username_0: I have two modules, Header and Dashboard, and I can compose their HTML msg-es in my Main, by using `Html.map DashboardMsg` etc.

What I want is: change the signature of my Dashboard.view/Header.view etc to return Element.Element instead of Html.Html, but how do I compose them in my Main.view?

What works so far is returning Html from Dashboard.view by calling Element.render, and then calling Element.html to re-wrap it into an Element so my overall layout works.

Answers:

username_0: Another alternative is to send a tagger from Main.view to Dashboard.view.

username_1: This is definitely an oversight. I've put this on the [map for `v3.1`](https://github.com/username_1/style-elements/issues/14)

Status: Issue closed

username_0: Cool, will close this one. The tagger approach works for me. |

private-octopus/picoquic | 375279138 | Title: Bug on server-side logging of version negotiation

Question:

username_0: <NAME> [11:11 PM]

What’s with this version negotiation packet being logged by picoquic?

```

7b5f578f641c1b8: Sending packet type: 1 (version negotiation), S0,

7b5f578f641c1b8: <1067263059ae891d>, <07b5f578f641c1b8>

7b5f578f641c1b8: versions: 551067, 263059ae, 891d07b5, f578f641, c1b85043, 51315043, 5130ff00,

```

May be partially a logging error, but our client (f5_test) is parsing the version list as:

```

versions: 0x50435131, 0x50435130, 0xff00000f,

```
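
The two excerpts above are consistent with the same bytes being read from two different offsets. The Python sketch below is a reconstruction from the logs only, not picoquic code: it assumes the tail of the packet is one padding byte (`00`, an assumption), one length byte (`55`), the two 8-byte connection IDs shown in the log, and then the version list.

```python
from typing import List


def read_versions(payload: bytes, offset: int) -> List[int]:
    """Read consecutive big-endian 32-bit values starting at `offset`."""
    return [
        int.from_bytes(payload[i:i + 4], "big")
        for i in range(offset, len(payload) - 3, 4)
    ]


# Bytes rebuilt from the log lines above; the leading 00 byte is an assumption.
tail = bytes.fromhex(
    "0055"              # assumed padding byte + connection ID length byte
    "1067263059ae891d"  # first connection ID from the log
    "07b5f578f641c1b8"  # second connection ID from the log
    "50435131"
    "50435130"
    "ff00000f"
)

# Reading after the connection IDs gives the list the client parsed.
print([hex(v) for v in read_versions(tail, 18)])
# Reading from the start reproduces the list printed by the server log.
print([hex(v) for v in read_versions(tail, 0)])
```

If this reconstruction is right, the packet on the wire was fine and only the logging started at the wrong offset, which matches the "partially a logging error" guess.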

Status: Issue closed

Answers:

username_0: Fixed by PR #382 |

JamitLabs/ProjLint | 346573877 | Title: Write Getting Started Documentation for Contributors

Question:

username_0: Similar to [this](https://github.com/realm/SwiftLint/blob/master/CONTRIBUTING.md) document on SwiftLint, ProjLint should have a starting point for contributors with explanations what to conform to in order to introduce a new rule. Also it could include a checklist of actions to take before a new rule can be merged:

- [ ] Make sure SwiftLint and ProjLint are both installed and passing.

- [ ] Make sure to write extensive tests for your new rule.

- [ ] Document rule in Rules.md including options and example config(s).

- [ ] Add Changelog entry under section "Added".

Status: Issue closed |

AugurProject/augur | 419680049 | Title: Error when user has one pending open order

Question:

username_0:

to repro:

user has no open orders, then user creates pending order, then goes to portfolio page.

order confirmation comes in and UI gets error.

After refresh the user's order shows up in `Open Orders` section.

The issue is building userOpenOrders in the market selector after the pending order gets removed and before the open order is actually retrieved from augur-node.

Answers:

username_0: @username_1 let's chat on a solution to this issue. It might be an issue with all. I put in a PR with a quick fix, we might need to think about it more.

Status: Issue closed

username_0:

to repro:

user has one open order on a market, then goes to portfolio page to cancel it. Once the cancel transaction is confirmed and the pending order is removed the UI explodes.

Issue is that filtered data doesn't get updated when the market collection has been updated. In the screenshot below the markets and marketObj have been updated by container.

`marketObj` is empty object

Status: Issue closed

|

godotengine/godot | 298105887 | Title: ProjectSettings.load_resource_pack odd behavior (breaks virtual filesystem a bit)

Question:

username_0: <!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:** 3.0 Release

**OS/device including version:** Windows

**Issue description:**

When loading resource packs using ProjectSettings.load_resource_pack, one would assume the content from the resource pack is added to the virtual filesystem. This does work, but it also breaks other things. I present three cases below.

In each of the following cases the pck has a folder structure of Content/ModTest and two files in that folder, modtest.png and project.json, modtest is a picture of Earth-Chan and project.json is some text data.

The game itself has two folders inside the Content folder, BaseContent and TestContent.

**1st case: Directly loading resource using load():** This works exactly as expected, no issues about it.

**2nd case: Iterating a directory using Directory.list_dir**: It doesn't work, only the files and folders from the newly loaded pck file seem to be found, this is my method:

`func test_pck_issue():
    var dir = Directory.new()
    if dir.open("res://Content") == OK:
        dir.list_dir_begin(true)
        var directory_name = dir.get_next()
        while (directory_name != ""):
            if dir.current_is_dir():
                print(directory_name)
            directory_name = dir.get_next()`

In this case only ModTest is found, this doesn't seem right to me.

Output when ProjectSettings.load_resource_pack is used:

`ModTest`

Output when it's not used:

`BaseContent

TestContent`

**3rd case: trying to find files using the file browser**: This only seems to find the game's base content, but none of the newly loaded pck content.

I have also tried removing the project configuration from the package, it doesn't change anything.

Answers:

username_0: username_1 said in the discord that while load_resource_pack is undocumented it uses PackedData::add_path which is supposed to be additive, so it seems like it is indeed a bug.

username_0: I figured out what's causing this, the problem is that the ProjectSettings singleton sets DirAccess::make_default<DirAccessPack>(DirAccess::ACCESS_RESOURCES);

Because it makes all newly created DirAccess instances use DirAccessPack it only loads from resource packs, and since we are running in the editor we don't really have a resource pack.

load() doesn't use DirAccess, that's why it has no issues loading from both

I am not sure how this can be tackled, if it even can, if I knew I would fix it myself.

username_1: Might be useful to add that it works in export mode, where all access to files is through Packs; where it breaks is if you try to use both Packs and the filesystem, ie. in the editor.

username_0: I originally proposed to use a hybrid of all three if we are using a mix, but I am not sure if there's a better way.

username_2: If `load` works with pck in debug mode, it can be used for testing while the other is used on release with conditionals, or `load_resource_pack` could _optionally_ behave differently in debug mode.

username_0: @username_2 `load` actually works with multiple sources regardless of being in debug or in release mode, because `load` does not use `DirAccess`; it does it directly instead. So even if you mix filesystem resources and packages it still works, as long as you supply it the proper path.

The problem comes when mixing them both: once a package is loaded, `Directory` can't find any resource that was outside the pck because `Directory` stops using `DirAccess` and starts using `DirAccessPack`, which only looks at files in pcks.
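
To make the "hybrid" idea from earlier in the thread concrete, here is a rough Python sketch of a directory listing that merges entries from loaded packs with entries on the real filesystem. It is not Godot code: the function name `list_subdirs_hybrid`, the flat list of packed paths, and the use of a temporary directory as the project root are all assumptions for illustration.

```python
import os
import tempfile
from typing import Iterable, List


def list_subdirs_hybrid(res_dir: str, packed_paths: Iterable[str], project_root: str) -> List[str]:
    """Union of sub-directory names found in packs and on disk under `res_dir`."""
    prefix = res_dir.rstrip("/") + "/"
    names = set()
    # Directories implied by file paths inside loaded packs.
    for path in packed_paths:
        if path.startswith(prefix):
            head, sep, _ = path[len(prefix):].partition("/")
            if sep:
                names.add(head)
    # Directories present on the real filesystem.
    disk_dir = os.path.join(project_root, res_dir[len("res://"):])
    if os.path.isdir(disk_dir):
        names.update(entry.name for entry in os.scandir(disk_dir) if entry.is_dir())
    return sorted(names)


with tempfile.TemporaryDirectory() as root:
    for name in ("BaseContent", "TestContent"):
        os.makedirs(os.path.join(root, "Content", name))
    packed = ["res://Content/ModTest/modtest.png", "res://Content/ModTest/project.json"]
    print(list_subdirs_hybrid("res://Content", packed, root))
    # ['BaseContent', 'ModTest', 'TestContent']
```

This is the listing one would expect from the second case in the report: both the game's own folders and the folder added by the pack.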

username_0: I have changed the title to a more descriptive one

username_3: @username_0 Can you still reproduce this bug in Godot 3.2.1 or [3.2.2beta4](https://godotengine.org/article/dev-snapshot-godot-3-2-2-beta-4)?

username_0: Yes, this is still an issue, the engine's design still has this problem.

username_4: Still a problem with Godot 3.2.3 and 3.2.2 from what I found. I made a minimal test case to report it as bug, before I found this issue.

```gd

func _ready():

var packer = PCKPacker.new();

packer.pck_start("res://pack.pck");

packer.flush(true);

ProjectSettings.load_resource_pack("pack.pck")

var dir = Directory.new()

assert(dir.open("res://dir") == OK, "Could not open the folder 'dir'.")

``` |

kefniark/Fatina | 312421940 | Title: Timing accuracy

Question:

username_0: ## Description

For the moment, the library is based on the deltatime provided by `requestAnimationFrame`.

Some posts seem to say that this dt could be slightly inaccurate, and they recommend using:

* Browser: `performance.now()`

* Node: `process.hrtime()`

https://www.stefanjudis.com/today-i-learned/measuring-execution-time-more-precisely-in-the-browser-and-node-js/

Need to test in different browsers how big the difference is and whether it's still relevant.

Answers:

username_0: Based on first tests,

chrome 65:

```

update [perf: 250 ms. | raf: 0 ms.]

update [perf: 238.401 ms. | raf: 666.694 ms.]

update [perf: 19.2 ms. | raf: 33.334 ms.]

update [perf: 15.401 ms. | raf: 16.667 ms.]

update [perf: 14.401 ms. | raf: 16.672 ms.]

update [perf: 13.601 ms. | raf: 16.653 ms.]

update [perf: 19.6 ms. | raf: 16.672 ms.]

update [perf: 15.301 ms. | raf: 16.659 ms.]

update [perf: 13.501 ms. | raf: 16.655 ms.]

update [perf: 17 ms. | raf: 16.67 ms.]

update [perf: 16.901 ms. | raf: 16.67 ms.]

update [perf: 18.8 ms. | raf: 16.69 ms.]

update [perf: 14.2 ms. | raf: 16.677 ms.]

```

edge:

```

update [perf: 63.901 ms. | raf: 63.89 ms.]

update [perf: 41.4 ms. | raf: 41.342 ms.]

update [perf: 668.5 ms. | raf: 667.666 ms.]

update [perf: 355.101 ms. | raf: 355.317 ms.]

update [perf: 446 ms. | raf: 446.066 ms.]

update [perf: 213.101 ms. | raf: 213.179 ms.]

update [perf: 249.4 ms. | raf: 249.221 ms.]

update [perf: 167.7 ms. | raf: 167.87 ms.]

update [perf: 149.401 ms. | raf: 149.367 ms.]

update [perf: 154.701 ms. | raf: 154.665 ms.]

update [perf: 127.8 ms. | raf: 127.976 ms.]

update [perf: 109.8 ms. | raf: 109.992 ms.]

update [perf: 116.5 ms. | raf: 116.524 ms.]

update [perf: 90.801 ms. | raf: 90.585 ms.]

update [perf: 109.1 ms. | raf: 109.204 ms.]

```
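
As a rough way to compare the two clocks, the Python sketch below parses the last ten Chrome samples from the log above (skipping the first three start-up frames) and prints the mean and spread of each column. Which samples to treat as steady state is a judgment call made here, not something stated in the issue.

```python
import re
from statistics import mean, pstdev

# Last ten Chrome 65 samples copied from the log above.
CHROME_LOG = """\
update [perf: 15.401 ms. | raf: 16.667 ms.]
update [perf: 14.401 ms. | raf: 16.672 ms.]
update [perf: 13.601 ms. | raf: 16.653 ms.]
update [perf: 19.6 ms. | raf: 16.672 ms.]
update [perf: 15.301 ms. | raf: 16.659 ms.]
update [perf: 13.501 ms. | raf: 16.655 ms.]
update [perf: 17 ms. | raf: 16.67 ms.]
update [perf: 16.901 ms. | raf: 16.67 ms.]
update [perf: 18.8 ms. | raf: 16.69 ms.]
update [perf: 14.2 ms. | raf: 16.677 ms.]
"""

PATTERN = re.compile(r"perf: ([\d.]+) ms\. \| raf: ([\d.]+) ms\.")


def parse(log: str):
    """Return a list of (perf_delta_ms, raf_delta_ms) pairs."""
    return [(float(p), float(r)) for p, r in PATTERN.findall(log)]


samples = parse(CHROME_LOG)
perf = [p for p, _ in samples]
raf = [r for _, r in samples]

print(f"perf: mean={mean(perf):.3f} ms, stdev={pstdev(perf):.3f} ms")
print(f"raf:  mean={mean(raf):.3f} ms, stdev={pstdev(raf):.3f} ms")
```

In this small sample the `requestAnimationFrame` deltas cluster tightly around one 60 Hz frame, while the `performance.now()` deltas scatter more from frame to frame.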

Status: Issue closed

|

etcd-io/etcd | 397379418 | Title: Multiple endpoints in ETCDCTL_ENDPOINTS lead to rejected connection from "172.16.58.3:5678" (error "EOF", ServerName "") spam in server log

Question:

username_0: We have a Cluster of 3 etcd nodes and use it from various shell scripts running in systemd services which all use etcdctl. Two nodes run on Debian stretch, the third on Centos 7. All nodes use etcd downloaded directly from Github.

etcdctl version: 3.3.10

API version: 3.3

etcd Version: 3.3.10

Git SHA: 27fc7e2

Go Version: go1.10.4

Go OS/Arch: linux/amd64

A lot of the systemd services check some system parameter (e.g. an IP or whether a service is running) and write data about it to etcd. All of the scripts use leases to ensure no outdated information remains in etcd. Some use put, some use txn with a check whether the key exists followed by put.

etcdctl is configured via environment variables (IPs changed for privacy)

`

ETCDCTL_API=3

ETCDCTL_CACERT=/etc/etcd/client_certs/ca.crt

ETCDCTL_CERT=/etc/etcd/client_certs/client.crt

ETCDCTL_DIAL_TIMEOUT=3s

ETCDCTL_ENDPOINTS=https://172.16.58.3:2379,https://172.16.17.32:2379,https://1.2.3.6:2379

ETCDCTL_KEY=/etc/etcd/client_keys/client.key

`

etcd is started by a systemd unit we wrote (adjusted heavily)

`

[Unit]

Description=etcd

Documentation=https://github.com/coreos/etcd

Conflicts=etcd2.service

[Service]

Type=notify

Restart=always

RestartSec=5s

LimitNOFILE=40000

TimeoutStartSec=60s

User=etcd

Group=etcd

ExecStart=/opt/etcd/etcd \

--name node1 \

--data-dir /var/lib/etcd \

--listen-client-urls https://172.16.58.3:2379,https://127.0.0.1:2379 \

--cert-file=/etc/etcd/server_certs/server_client.crt \

--key-file=/etc/etcd/server_keys/server_client.key \

--client-cert-auth \

--trusted-ca-file=/etc/etcd/server_certs/ca.crt \

--advertise-client-urls https://172.16.58.3:2379 \

--listen-peer-urls https://172.16.58.3:2380 \

--peer-cert-file=/etc/etcd/server_certs/server_peer.crt \

--peer-key-file=/etc/etcd/server_keys/server_peer.key \

--peer-client-cert-auth \

--peer-trusted-ca-file=/etc/etcd/server_certs/ca.crt \

--initial-advertise-peer-urls https://172.16.58.3:2380 \

--initial-cluster node1=https://1.2.3.4:2380,node2=https://1.2.3.5:2380,node3=https://1.2.3.6:2380 \

--initial-cluster-token "<PASSWORD>" \

--initial-cluster-state new \

--auto-compaction-mode=revision \

--auto-compaction-retention=1000

[Truncated]

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: updating state and picker called by balancer: CONNECTING, 0xc4202d2a20

INFO: 2019/01/09 14:42:15 balancerWrapper: handle subconn state change: 0xc420198750, CONNECTING

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: updating state and picker called by balancer: CONNECTING, 0xc4202d2a20

INFO: 2019/01/09 14:42:15 balancerWrapper: handle subconn state change: 0xc420198700, READY

INFO: 2019/01/09 14:42:15 clientv3/balancer: pin "1.2.3.5:2379"

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: updating state and picker called by balancer: READY, 0xc4202d2a20

INFO: 2019/01/09 14:42:15 balancerWrapper: got update addr from Notify: [{1.2.3.5:2379 <nil>}]

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: removing subconn

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: removing subconn

INFO: 2019/01/09 14:42:15 balancerWrapper: handle subconn state change: 0xc4201986b0, SHUTDOWN

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: updating state and picker called by balancer: READY, 0xc4202d2a20

INFO: 2019/01/09 14:42:15 balancerWrapper: handle subconn state change: 0xc420198750, SHUTDOWN

INFO: 2019/01/09 14:42:15 ccBalancerWrapper: updating state and picker called by balancer: READY, 0xc4202d2a20

/internet/elected_router

node1

`

It looks like etcdctl connects to all three endpoints and only uses one connection; presumably it races them and uses the first one that responds. I suspect the EOF errors in the logs are caused by those two other connection attempts.

Sadly there seems to be no way to suppress the logging of TLS handshake failures like this.
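
The suspected behaviour can be simulated without any network access. The Python sketch below is not etcd or gRPC code; it only models the hypothesis above, with made-up endpoint names and latencies: all endpoints are dialed at once, the first to answer wins, and the remaining attempts are abandoned.

```python
import asyncio
from typing import Dict, Tuple


async def dial(endpoint: str, latency: float) -> str:
    """Stand-in for a TLS handshake that takes `latency` seconds."""
    await asyncio.sleep(latency)
    return endpoint


async def race(latencies: Dict[str, float]) -> Tuple[str, int]:
    """Dial every endpoint, keep the first that completes, cancel the rest."""
    tasks = [asyncio.create_task(dial(ep, lat)) for ep, lat in latencies.items()]
    done, pending = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
    for task in pending:
        task.cancel()  # the abandoned attempts
    await asyncio.gather(*pending, return_exceptions=True)
    return next(iter(done)).result(), len(pending)


# Hypothetical endpoints and latencies, chosen only for the demonstration.
winner, abandoned = asyncio.run(race({"node1": 0.05, "node2": 0.01, "node3": 0.09}))
print(f"pinned {winner}, abandoned {abandoned} other attempts")
```

If etcdctl behaves this way, each invocation would leave two half-finished handshakes behind, which would fit one pinned endpoint in the client log and EOF messages on the other servers.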

Answers:

username_0: The closed issue (for lack of information from submitter) #10040 seems to show a similar problem.

username_1: This is a duplicate of https://github.com/etcd-io/etcd/issues/9949

Status: Issue closed

username_2: yeah closing lets track #9949 |

flutter/flutter | 571902612 | Title: Can't get sign in with Google

Question:

username_0: D/EGL_emulation(20914): eglMakeCurrent: 0xe4748060: ver 2 0 (tinfo 0xd9145fb0)

D/eglCodecCommon(20914): setVertexArrayObject: set vao to 0 (0) 1 0

I/OpenGLRenderer(20914): Davey! duration=1158ms; Flags=1, IntendedVsync=48083836367320, Vsync=48083869700652, OldestInputEvent=9223372036854775807, NewestInputEvent=0, HandleInputStart=48083883689500, AnimationStart=48083883739290, PerformTraversalsStart=48083883763590, DrawStart=48084959298150, SyncQueued=48084960766380, SyncStart=48084962160370, IssueDrawCommandsStart=48084962515300, SwapBuffers=48084976209690, FrameCompleted=48084996475790, DequeueBufferDuration=17378000, QueueBufferDuration=216000,

I/Choreographer(20914): Skipped 65 frames! The application may be doing too much work on its main thread.

D/EGL_emulation(20914): eglMakeCurrent: 0xe4749f80: ver 2 0 (tinfo 0xd915e180)

D/eglCodecCommon(20914): setVertexArrayObject: set vao to 0 (0) 1 0

W/ActivityThread(20914): handleWindowVisibility: no activity for token android.os.BinderProxy@<PASSWORD>

D/EGL_emulation(20914): eglMakeCurrent: 0xe4748060: ver 2 0 (tinfo 0xd9145fb0)

D/EGL_emulation(20914): eglMakeCurrent: 0xe4748060: ver 2 0 (tinfo 0xd9145fb0)

E/flutter (20914): [ERROR:flutter/lib/ui/ui_dart_state.cc(157)] Unhandled Exception: PlatformException(sign_in_failed, com.google.android.gms.common.api.ApiException: 10: , null)

E/flutter (20914): #0 StandardMethodCodec.decodeEnvelope (package:flutter/src/services/message_codecs.dart:569:7)

E/flutter (20914): #1 MethodChannel._invokeMethod (package:flutter/src/services/platform_channel.dart:156:18)

E/flutter (20914): <asynchronous suspension>

E/flutter (20914): #2 MethodChannel.invokeMethod (package:flutter/src/services/platform_channel.dart:329:12)

E/flutter (20914): #3 MethodChannel.invokeMapMethod (package:flutter/src/services/platform_channel.dart:356:48)

E/flutter (20914): #4 MethodChannelGoogleSignIn.signIn (package:google_sign_in_platform_interface/src/method_channel_google_sign_in.dart:45:10)

E/flutter (20914): #5 GoogleSignIn._callMethod (package:google_sign_in/google_sign_in.dart:230:42)

E/flutter (20914): <asynchronous suspension>

E/flutter (20914): #6 GoogleSignIn._addMethodCall (package:google_sign_in/google_sign_in.dart:285:18)

E/flutter (20914): #7 GoogleSignIn.signIn (package:google_sign_in/google_sign_in.dart:356:9)

E/flutter (20914): #8 _LoginPageState._signInWithGoogle (package:the_hunter_app/login/login.dart:314:64)

E/flutter (20914): #9 _LoginPageState.build.<anonymous closure> (package:the_hunter_app/login/login.dart:237:27)

E/flutter (20914): #10 _InkResponseState._handleTap (package:flutter/src/material/ink_well.dart:705:14)

E/flutter (20914): #11 _InkResponseState.build.<anonymous closure> (package:flutter/src/material/ink_well.dart:788:36)

E/flutter (20914): #12 GestureRecognizer.invokeCallback (package:flutter/src/gestures/recognizer.dart:182:24)

E/flutter (20914): #13 TapGestureRecognizer.handleTapUp (package:flutter/src/gestures/tap.dart:486:11)

E/flutter (20914): #14 BaseTapGestureRecognizer._checkUp (package:flutter/src/gestures/tap.dart:264:5)

E/flutter (20914): #15 BaseTapGestureRecognizer.handlePrimaryPointer (package:flutter/src/gestures/tap.dart:199:7)

E/flutter (20914): #16 PrimaryPointerGestureRecognizer.handleEvent (package:flutter/src/gestures/recognizer.dart:470:9)

E/flutter (20914): #17 PointerRouter._dispatch (package:flutter/src/gestures/pointer_router.dart:76:12)

E/flutter (20914): #18 PointerRouter._dispatchEventToRoutes.<anonymous closure> (package:flutter/src/gestures/pointer_router.dart:117:9)

E/flutter (20914): #19 _LinkedHashMapMixin.forEach (dart:collection-patch/compact_hash.dart:379:8)

E/flutter (20914): #20 PointerRouter._dispatchEventToRoutes (package:flutter/src/gestures/pointer_router.dart:115:18)

E/flutter (20914): #21 PointerRouter.route (package:flutter/src/gestures/pointer_router.dart:101:7)

E/flutter (20914): #22 GestureBinding.handleEvent (package:flutter/src/gestures/binding.dart:218:19)

E/flutter (20914): #23 GestureBinding.dispatchEvent (package:flutter/src/gestures/binding.dart:198:22)

E/flutter (20914): #24 GestureBinding._handlePointerEvent (package:flutter/src/gestures/binding.dart:156:7)

E/flutter (20914): #25 GestureBinding._flushPointerEventQueue (package:flutter/src/gestures/binding.dart:102:7)

E/flutter (20914): #26 GestureBinding._handlePointerDataPacket (package:flutter/src/gestures/binding.dart:86:7)

E/flutter (20914): #27 _rootRunUnary (dart:async/zone.dart:1138:13)

E/flutter (20914): #28 _CustomZone.runUnary (dart:async/zone.dart:1031:19)

E/flutter (20914): #29 _CustomZone.runUnaryGuarded (dart:async/zone.dart:933:7)

E/flutter (20914): #30 _invoke1 (dart:ui/hooks.dart:274:10)

E/flutter (20914): #31 _dispatchPointerDataPacket (dart:ui/hooks.dart:183:5)

E/flutter (20914):

D/EGL_emulation(20914): eglMakeCurrent: 0xe4748060: ver 2 0 (tinfo 0xd9145fb0)

Here is the error message that appears when I run the function in my app.

May I know how to fix it?

Answers:

username_1: Hi @username_0

You need to add `SHA` key in your firebase console [Stackoverflow Solution](https://stackoverflow.com/a/54696963)

username_1: Hi @username_0

Without additional information, we are unfortunately not sure how to resolve this issue. We are therefore

reluctantly going to close this bug for now. Please don't hesitate to comment on the bug if you have any

more information for us; we will reopen it right away!

Thanks for your contribution.

Status: Issue closed

|

TurboPack/Abbrevia | 292507694 | Title: Error creating new cab using TAbCabArchive

Question:

username_0: When creating a new cab file with TAbCabArchive a EAbFCIAddFileError is thrown with message "FCI cannot add file". This is due to FCICreate not having been called. Here is simple code to reproduce the issue:

`fn := 'somefile.cab'; // use whatever filename you want`

`cab := TAbCabArchive.Create(fn, fmCreate);`

`cab.AddFiles('*.*', faAnyFile); // add whatever files you want (exception here)`

`cab.Save;`

To work around the issue I can add a call to Load, which will make a call to FCICreate, but this deviates from how other archives are created (such as TAbGzipArchive).

`fn := 'somefile.cab';`

`cab := TAbCabArchive.Create(fn, fmCreate);`

`cab.Load; // forces call to FCICreate`

`cab.AddFiles('*.*', faAnyFile);`

`cab.Save;`

Answers:

username_0: One proposed solution is to add a call to CreateCabFile in TAbCabArchive.Add. I don't know if this is the correct solution but it works.

```

if FFCIContext = nil then

CreateCabFile;

```

username_1: In which line should I add this?

Status: Issue closed

username_1: Fixed.

Status: Issue closed

|

github-vet/rangeloop-pointer-findings | 771546862 | Title: mattbostock/opentsdb-promql-frontend: vendor/k8s.io/client-go/1.5/pkg/apis/extensions/types.generated.go; 5 LoC

Question:

username_0: [Click here to see the code in its original context.](https://github.com/mattbostock/opentsdb-promql-frontend/blob/41074fdf1db049ea45636df02fa7cf2913bbbf10/vendor/k8s.io/client-go/1.5/pkg/apis/extensions/types.generated.go#L16618-L16622)

<details>

<summary>Click here to show the 5 line(s) of Go which triggered the analyzer.</summary>

```go

for _, yyv1346 := range v {

z.EncSendContainerState(codecSelfer_containerArrayElem1234)

yy1347 := &yyv1346

yy1347.CodecEncodeSelf(e)

}

```

</details>

Leave a reaction on this issue to contribute to the project by classifying this instance as a **Bug** :-1:, **Mitigated** :+1:, or **Desirable Behavior** :rocket:

See the descriptions of the classifications [here](https://github.com/github-vet/rangeclosure-findings#how-can-i-help) for more information.

commit ID: 41074fdf1db049ea45636df02fa7cf2913bbbf10

Status: Issue closed |

jdbi/jdbi | 711685168 | Title: KotlinMapper: Handling of @PropagateNull on the class level does not use the registered mapper prefix

Question:

username_0: for example, if I have a class:

@PropagateNull("id")

data class TestClass(val id: Long, ...)

If I register a KotlinMapper instance with some prefix, e.g. "aa", the prefix is not used when testing the column for null, so I need to use @PropagateNull("aa_id"). That is not great, as one class can be mapped in different contexts with different prefixes.

Answers:

username_1: This isn't specific to the KotlinMapper, I'm getting this with a ConstructorMapper as well.

username_2: I just encountered this problem as well, which is unfortunate since I feel like `@PropagateNull` would be the perfect solution to our use case. |

stevekrouse/WoofJS | 231829935 | Title: More explicitly tell users not to use their names or identifying information

Question:

username_0: In addition to "Pick a username" can we tell users to not use any identifying information like Scratch does? I wasn't able to see where in pull request https://github.com/username_0/WoofJS/pull/286 we did that. Can you take this on @joebeachjoebeach?

Status: Issue closed |

aws/aws-cli | 542316991 | Title: --cli-input-json doesn't work with "http://" and "https://" protocol

Question:

username_0: From what I learned, in `aws ... --cli-input-json <value>` the \<value\> requires a protocol (usually `file://`), but when I try to use `http://` it doesn't work. I find it useful to fetch JSON from a remote server. When I enter an incorrect URL it fails with the correct HTTP error (e.g. `received non 200 status code of 403`), but when a correct URL is given, this happens:

- AWS CLI version: `aws-cli/1.16.300 Python/3.7.5 Darwin/19.2.0 botocore/1.13.36`

- Command executed: `aws ecs --region eu-west-1 register-task-definition --cli-input-json http://localhost:8080/taskdef --debug`

- Output:

```

2019-12-25 11:17:10,655 - MainThread - awscli.clidriver - DEBUG - CLI version: aws-cli/1.16.300 Python/3.7.5 Darwin/19.2.0 botocore/1.13.36

2019-12-25 11:17:10,655 - MainThread - awscli.clidriver - DEBUG - Arguments entered to CLI: ['ecs', '--region', 'eu-west-1', 'register-task-definition', '--cli-input-json', 'localhost:8080/taskdef', '--debug']

2019-12-25 11:17:10,656 - MainThread - botocore.hooks - DEBUG - Event session-initialized: calling handler <function add_scalar_parsers at 0x1034dca70>

2019-12-25 11:17:10,656 - MainThread - botocore.hooks - DEBUG - Event session-initialized: calling handler <function register_uri_param_handler at 0x102ef0050>

2019-12-25 11:17:10,656 - MainThread - botocore.hooks - DEBUG - Event session-initialized: calling handler <function inject_assume_role_provider_cache at 0x102f1c440>

2019-12-25 11:17:10,664 - MainThread - botocore.hooks - DEBUG - Event session-initialized: calling handler <function attach_history_handler at 0x103384950>

2019-12-25 11:17:10,665 - MainThread - botocore.loaders - DEBUG - Loading JSON file: /usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/data/ecs/2014-11-13/service-2.json

2019-12-25 11:17:10,672 - MainThread - botocore.hooks - DEBUG - Event building-command-table.ecs: calling handler <function inject_commands at 0x1034254d0>

2019-12-25 11:17:10,672 - MainThread - botocore.hooks - DEBUG - Event building-command-table.ecs: calling handler <function add_waiters at 0x1034ec680>

2019-12-25 11:17:10,685 - MainThread - botocore.loaders - DEBUG - Loading JSON file: /usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/data/ecs/2014-11-13/waiters-2.json

2019-12-25 11:17:10,686 - MainThread - awscli.clidriver - DEBUG - OrderedDict([('family', <awscli.arguments.CLIArgument object at 0x1047a4b90>), ('task-role-arn', <awscli.arguments.CLIArgument object at 0x1047a4d50>), ('execution-role-arn', <awscli.arguments.CLIArgument object at 0x1047a4d90>), ('network-mode', <awscli.arguments.CLIArgument object at 0x1047a4dd0>), ('container-definitions', <awscli.arguments.ListArgument object at 0x1047a4c90>), ('volumes', <awscli.arguments.ListArgument object at 0x1047a4e10>), ('placement-constraints', <awscli.arguments.ListArgument object at 0x1047a4c10>), ('requires-compatibilities', <awscli.arguments.ListArgument object at 0x1047a4e50>), ('cpu', <awscli.arguments.CLIArgument object at 0x1047a4f50>), ('memory', <awscli.arguments.CLIArgument object at 0x1047a4fd0>), ('tags', <awscli.arguments.ListArgument object at 0x1047aa810>), ('pid-mode', <awscli.arguments.CLIArgument object at 0x1047aa850>), ('ipc-mode', <awscli.arguments.CLIArgument object at 0x1047aa950>), ('proxy-configuration', <awscli.arguments.CLIArgument object at 0x1047aa690>), ('inference-accelerators', <awscli.arguments.ListArgument object at 0x1047aa890>)])

2019-12-25 11:17:10,686 - MainThread - botocore.hooks - DEBUG - Event building-argument-table.ecs.register-task-definition: calling handler <function add_streaming_output_arg at 0x1034dce60>

2019-12-25 11:17:10,686 - MainThread - botocore.hooks - DEBUG - Event building-argument-table.ecs.register-task-definition: calling handler <function add_cli_input_json at 0x102f25b00>

2019-12-25 11:17:10,687 - MainThread - botocore.hooks - DEBUG - Event building-argument-table.ecs.register-task-definition: calling handler <function unify_paging_params at 0x10345bef0>

2019-12-25 11:17:10,704 - MainThread - botocore.loaders - DEBUG - Loading JSON file: /usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/data/ecs/2014-11-13/paginators-1.json

2019-12-25 11:17:10,704 - MainThread - botocore.hooks - DEBUG - Event building-argument-table.ecs.register-task-definition: calling handler <function add_generate_skeleton at 0x10343b710>

2019-12-25 11:17:10,705 - MainThread - botocore.hooks - DEBUG - Event before-building-argument-table-parser.ecs.register-task-definition: calling handler <bound method OverrideRequiredArgsArgument.override_required_args of <awscli.customizations.cliinputjson.CliInputJSONArgument object at 0x1047aa9d0>>

2019-12-25 11:17:10,705 - MainThread - botocore.hooks - DEBUG - Event before-building-argument-table-parser.ecs.register-task-definition: calling handler <bound method GenerateCliSkeletonArgument.override_required_args of <awscli.customizations.generatecliskeleton.GenerateCliSkeletonArgument object at 0x1047aaf50>>

2019-12-25 11:17:10,706 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.family: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,706 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.task-role-arn: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,707 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.execution-role-arn: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,707 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.network-mode: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,707 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.container-definitions: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,707 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.volumes: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,707 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.placement-constraints: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.requires-compatibilities: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.cpu: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.memory: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.tags: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.pid-mode: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,708 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.ipc-mode: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,709 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.proxy-configuration: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,709 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.inference-accelerators: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,709 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.cli-input-json: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:10,710 - MainThread - urllib3.connectionpool - DEBUG - Starting new HTTPS connection (1): localhost:8080

2019-12-25 11:17:11,033 - MainThread - urllib3.connectionpool - DEBUG - http:localhost:8080 "GET /taskdef HTTP/1.1" 200 1068

2019-12-25 11:17:11,038 - MainThread - botocore.hooks - DEBUG - Event load-cli-arg.ecs.register-task-definition.generate-cli-skeleton: calling handler <awscli.paramfile.URIArgumentHandler object at 0x102ee3850>

2019-12-25 11:17:11,039 - MainThread - botocore.hooks - DEBUG - Event calling-command.ecs.register-task-definition: calling handler <bound method CliInputJSONArgument.add_to_call_parameters of <awscli.customizations.cliinputjson.CliInputJSONArgument object at 0x1047aa9d0>>

2019-12-25 11:17:11,039 - MainThread - awscli.clidriver - DEBUG - Exception caught in main()

Traceback (most recent call last):

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/awscli/customizations/cliinputjson.py", line 72, in add_to_call_parameters

input_data = json.loads(retrieved_json)

File "/usr/local/opt/python/Frameworks/Python.framework/Versions/3.7/lib/python3.7/json/__init__.py", line 348, in loads

return _default_decoder.decode(s)

File "/usr/local/opt/python/Frameworks/Python.framework/Versions/3.7/lib/python3.7/json/decoder.py", line 337, in decode

obj, end = self.raw_decode(s, idx=_w(s, 0).end())

File "/usr/local/opt/python/Frameworks/Python.framework/Versions/3.7/lib/python3.7/json/decoder.py", line 355, in raw_decode

raise JSONDecodeError("Expecting value", s, err.value) from None

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

During handling of the above exception, another exception occurred:

[Truncated]

parsed_globals=parsed_globals

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/awscli/clidriver.py", line 607, in _emit_first_non_none_response

name, **kwargs)

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/session.py", line 677, in emit_first_non_none_response

responses = self._events.emit(event_name, **kwargs)

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/hooks.py", line 356, in emit

return self._emitter.emit(aliased_event_name, **kwargs)

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/hooks.py", line 228, in emit

return self._emit(event_name, kwargs)

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/botocore/hooks.py", line 211, in _emit

response = handler(**kwargs)

File "/usr/local/Cellar/awscli/1.16.300/libexec/lib/python3.7/site-packages/awscli/customizations/cliinputjson.py", line 76, in add_to_call_parameters

% (e, retrieved_json))

awscli.argprocess.ParamError: Error parsing parameter 'cli-input-json': Invalid JSON: Expecting value: line 1 column 1 (char 0)

JSON received: http://localhost:8080/taskdef

2019-12-25 11:17:11,046 - MainThread - awscli.clidriver - DEBUG - Exiting with rc 255

Error parsing parameter 'cli-input-json': Invalid JSON: Expecting value: line 1 column 1 (char 0)

JSON received: http://localhost:8080/taskdef

```

Answers:

username_0: My guess is that the call in `cliinputjson.py:65` is wrong: `retrieved_json = get_paramfile(input_json, LOCAL_PREFIX_MAP)`.

username_1: It looks like we used to support `http://` and `https://` as protocols for `--cli-input-json`, but around two years ago we removed that ability in this PR: https://github.com/aws/aws-cli/pull/3402. I'm still looking into whether this was intentional. In the meantime, could you elaborate on how you were planning to use the remote protocol with `--cli-input-json`?

username_0: From my point of view, #3402 introduced this behaviour and it is a bug. The user is supposed to be able to intentionally disable resolving of strings that include the http protocol, but by default it should work. Part of `cliinputjson.py` (the validation part) is able to resolve the http protocol when it is enabled by `cli_follow_urlparam`, but when it gets to `get_paramfile`, this function ignores `cli_follow_urlparam` and considers only the `LOCAL_PREFIX_MAP` passed as a parameter. I think remote prefixes should be added inside this function in the same way, based on `cli_follow_urlparam`.

Our use case for consuming input JSON over HTTP is generating ECS task definitions. We have implemented an AWS Lambda that generates ready-to-use task definitions for all our services with only a few override parameters. Currently we have to fetch the JSON using `curl` and send it to `--cli-input-json`. If this bug is fixed, we will use similar AWS Lambda JSON generators for other use cases.

username_2: Hi @username_0,

I see that you are experiencing this with the v1 client. I should note that this behavior goes away in the v2 client:

https://docs.aws.amazon.com/cli/latest/userguide/cliv2-migration.html#cliv2-migration-paramfile

So, the pattern of fetching the file with `curl` or `wget` is the preferred approach.
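
For anyone who wants to script the fetch-then-pass pattern rather than use `curl` by hand, here is a minimal sketch. It is only an illustration: the helper names `fetch_json_text` and `build_register_command` are hypothetical, and the region and subcommand are simply the ones from the report above. It downloads the document, checks that it parses as JSON, writes it to a temporary file, and builds an argument list that uses the `file://` prefix.

```python
import json
import tempfile
import urllib.request
from typing import List


def fetch_json_text(url: str) -> str:
    """Download the document at `url` and check that it parses as JSON."""
    with urllib.request.urlopen(url) as response:
        text = response.read().decode("utf-8")
    json.loads(text)  # raises ValueError early if the body is not JSON
    return text


def build_register_command(json_text: str, region: str) -> List[str]:
    """Write the JSON to a temporary file and build the CLI argument list.

    The file is deliberately not deleted here, because the CLI still has to
    read it; the caller is responsible for cleaning it up afterwards.
    """
    with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as handle:
        handle.write(json_text)
    return [
        "aws", "ecs", "--region", region,
        "register-task-definition",
        "--cli-input-json", "file://" + handle.name,
    ]
```

The resulting list could then be handed to `subprocess.run(..., check=True)`, with the URL from the report (`http://localhost:8080/taskdef`) passed to `fetch_json_text` first.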

Status: Issue closed

username_0: Thanks @username_2 I missed that. I'm closing this issue then. |

pcm-dpc/COVID-19 | 592560414 | Title: Web App

Question:

username_0: Hello,

using your [JSON](https://github.com/pcm-dpc/COVID-19/tree/master/dati-json) data I built a web app for browsing Italy's data at both the national and regional level.

[International data](https://github.com/NovelCOVID/API) is also included, so every country can be browsed.

For the United States, the data can also be browsed at the state level.

### Public interest

A responsive web app that is easy to use on both smartphones and tablets.

http://username_0.github.io/covid-19/

Answers:

username_1: Thank you very much for the contribution, @username_0

Status: Issue closed

|

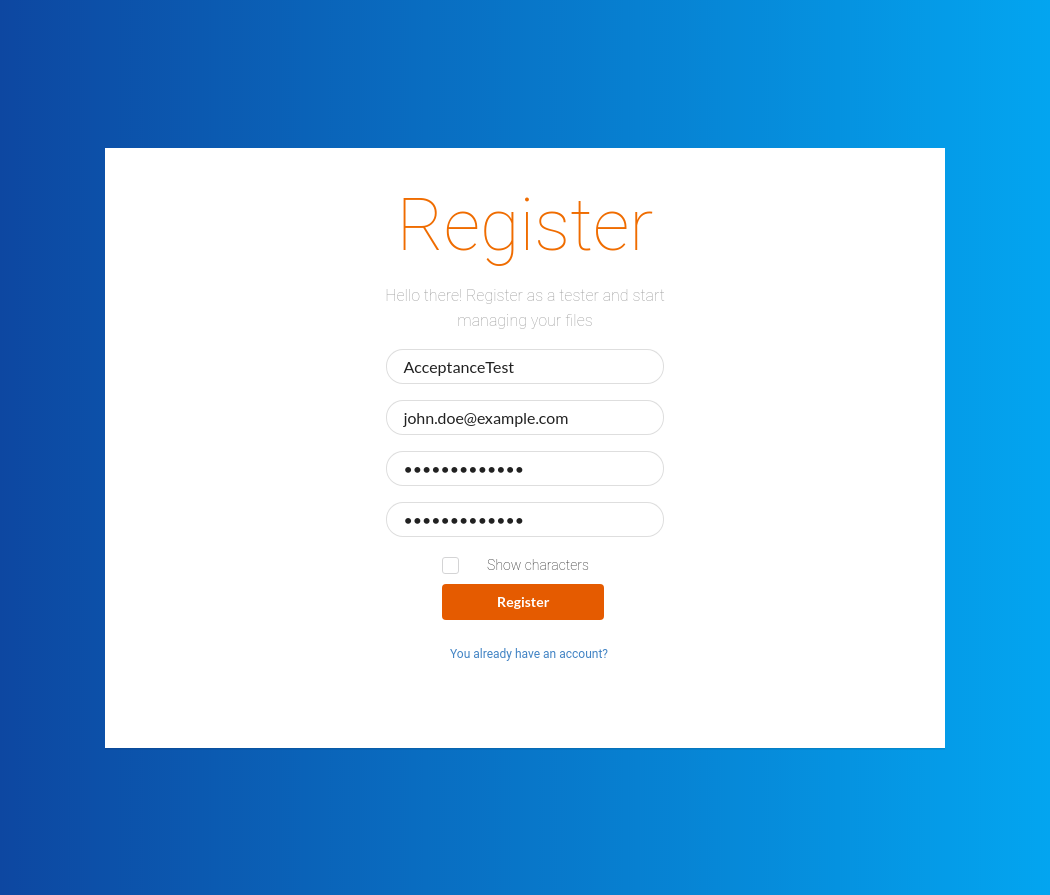

Codeception/Codeception | 250600197 | Title: Acceptance test for React app button click doesn't work

Question:

username_0:

The registration button works in a real browser.

Answers:

username_1: It looks like your button is making a request to the wrong port:

```

[Selenium browser Logs]

...

11:10:24.121 SEVERE - http://localhost:8080/tester/register - Failed to load resource: net::ERR_CONNECTION_REFUSED

11:10:24.144 SEVERE - http://localhost:8080/tester/register - Failed to load resource: net::ERR_CONNECTION_REFUSED

```

Status: Issue closed

|

RasaHQ/rasa | 862836671 | Title: Crypto dependency is not installed on 1.10.x

Question:

username_0: <!-- THIS INFORMATION IS MANDATORY - YOUR ISSUE WILL BE CLOSED IF IT IS MISSING. If you don't know your Rasa version, use `rasa --version`.

Please format any code or console output with three ticks ``` above and below.

If you are asking a usage question (e.g. "How do I do xyz") please post your question on https://forum.rasa.com instead -->

**Rasa version**: 1.10.x

**Rasa SDK version** (if used & relevant):

**Rasa X version** (if used & relevant):

**Python version**: 3.7

**Operating system** (windows, osx, ...): linux

**Issue**:

This fix https://github.com/RasaHQ/rasa/commit/d7731faa0172824b07bd47b20d861dab03a68beb should also be included on the 1.10.x branch of Rasa; the missing algorithm error also happens there.

**Error (including full traceback)**:

```

```

**Command or request that led to error**:

```

```

**Content of configuration file (config.yml)** (if relevant):

```yml

```

**Content of domain file (domain.yml)** (if relevant):

```yml

```

Answers:

username_0: Adding `cryptography = ">=3.2.1"` to the `[tool.poetry.dependencies]` section at pyproject.toml solved the issue

username_1: Thanks for the issue, @degiz will get back to you about it soon!

###### You may find help in the [docs](https://rasa.com/docs/) and the [forum,](https://forum.rasa.com/) too 🤗

Status: Issue closed

|

Azure/azure-cli | 434224083 | Title: az webapp --help failure

Question:

username_0: ### **This is an autogenerated template. Please review and update as needed.**

## Describe the bug

**Command Name**

`az webapp`

**Errors:**

:open_mouth:

```

The command failed with an unexpected error. Here is the traceback:

cannot import name 'SnapshotRecoveryRequest'Traceback (most recent call last):

File "/opt/az/lib/python3.6/site-packages/knack/cli.py", line 206, in invoke

cmd_result = self.invocation.execute(args)

File "/opt/az/lib/python3.6/site-packages/azure/cli/core/commands/__init__.py", line 273, in execute

parsed_args = self.parser.parse_args(args)

File "/opt/az/lib/python3.6/site-packages/knack/parser.py", line 256, in parse_args

return super(CLICommandParser, self).parse_args(args)

File "/opt/az/lib/python3.6/argparse.py", line 1730, in parse_args

args, argv = self.parse_known_args(args, namespace)

File "/opt/az/lib/python3.6/argparse.py", line 1762, in parse_known_args

namespace, args = self._parse_known_args(args, namespace)

File "/opt/az/lib/python3.6/argparse.py", line 1950, in _parse_known_args

positionals_end_index = consume_positionals(start_index)

File "/opt/az/lib/python3.6/argparse.py", line 1927, in consume_positionals

take_action(action, args)

File "/opt/az/lib/python3.6/argparse.py", line 1836, in take_action

action(self, namespace, argument_values, option_string)

File "/opt/az/lib/python3.6/argparse.py", line 1133, in __call__

subnamespace, arg_strings = parser.parse_known_args(arg_strings, None)

File "/opt/az/lib/python3.6/argparse.py", line 1762, in parse_known_args

namespace, args = self._parse_known_args(args, namespace)

File "/opt/az/lib/python3.6/argparse.py", line 1968, in _parse_known_args

start_index = consume_optional(start_index)

File "/opt/az/lib/python3.6/argparse.py", line 1908, in consume_optional

take_action(action, args, option_string)

File "/opt/az/lib/python3.6/argparse.py", line 1836, in take_action

action(self, namespace, argument_values, option_string)

File "/opt/az/lib/python3.6/argparse.py", line 1020, in __call__

parser.print_help()

File "/opt/az/lib/python3.6/argparse.py", line 2362, in print_help

self._print_message(self.format_help(), file)

File "/opt/az/lib/python3.6/site-packages/azure/cli/core/parser.py", line 156, in format_help

super(AzCliCommandParser, self).format_help()

File "/opt/az/lib/python3.6/site-packages/knack/parser.py", line 246, in format_help

is_group)

File "/opt/az/lib/python3.6/site-packages/azure/cli/core/_help.py", line 148, in show_help

super(AzCliHelp, self).show_help(cli_name, nouns, parser, is_group)

File "/opt/az/lib/python3.6/site-packages/knack/help.py", line 664, in show_help

else self.group_help_cls(self, delimiters, parser)

File "/opt/az/lib/python3.6/site-packages/knack/help.py", line 219, in __init__

child.load(options)

File "/opt/az/lib/python3.6/site-packages/azure/cli/core/_help.py", line 242, in load

loader.versioned_load(self, options)

File "/opt/az/lib/python3.6/site-packages/azure/cli/core/_help_loaders.py", line 153, in versioned_load

super(CliHelpFile, help_obj).load(parser) # pylint:disable=bad-super-call

File "/opt/az/lib/python3.6/site-packages/knack/help.py", line 163, in load

description = getattr(options, 'description', None)

[Truncated]

azure-cli 2.0.62

Extensions:

aks-preview 0.3.0

front-door 0.1.1

storage-preview 0.1.6

webapp 0.2.6

Python location '/opt/az/bin/python3'

Extensions directory '/home/username_0/.azure/cliextensions'

Python (Linux) 3.6.5 (default, Apr 4 2019, 22:51:52)

[GCC 5.4.0 20160609]

```

## Additional Context

Add any other context about the problem here.

<!-- Please do not remove these markdown comments -->

<!--auto-generated-->

Answers:

username_1: CC: @Nking92

@username_0 it looks like you have an older version of the webapp extension installed. Can you remove it and retry? Run:

1. `az extension list` to confirm which extensions are installed

2. `az extension remove -n webapp`

and then retry.

username_0: Sure thing, on it, will report back when done!

username_1: The error here is that you had an older version of the webapp extension installed, and the core CLI had some SDK updates that caused the error you are seeing. Is there a specific command from the extension you are trying to use? Some of the extension commands did get moved to core.

This command, however, is still an extension command. If you need it, please install the latest extension with `az extension add -n webapp`. Thank you.

Status: Issue closed

|

fullcalendar/fullcalendar | 474791060 | Title: show events of child resources on main resource if child are collapsed

Question:

username_0: Hi,

Maybe there is already a solution to this and I can't find it, so please excuse me if this is a duplicate.

What I want to do is show the events of child resources on the main resource when the resource is collapsed. I think that could be problematic, since the event container is a child of the resource tr, and that gets display:none.

On the data side I managed to associate every event with both its child and its master resource, so at the moment the event is displayed twice in my calendar.

I hope you can understand what I'm trying to do.

Answers:

username_1: @username_2 Is this function removed in V4? I am unable to find it.

username_2: what function? `eventRender`? still exists

username_1: Sorry i meant dateToCoord |

spring-projects/spring-boot | 197854956 | Title: Some logs are dismissed during context creation

Question:

username_0: The default logging system is used (Logback).

For instance, the warning logged [here](https://github.com/spring-projects/spring-boot/blob/v1.4.3.RELEASE/spring-boot/src/main/java/org/springframework/boot/env/SpringApplicationJsonEnvironmentPostProcessor.java#L95) is dismissed.

This behavior appears because at the time the warning is logged, the Logback context contains a [filter](https://github.com/spring-projects/spring-boot/blob/v1.4.3.RELEASE/spring-boot/src/main/java/org/springframework/boot/logging/logback/LogbackLoggingSystem.java#L102) that denies everything.

This issue is similar to #7758 but it's with Logback.

Here's a quick and dirty demo:

```java

package demo;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.CommandLineRunner;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.annotation.Bean;

@SpringBootApplication

public class DemoApplication {

private static final Logger LOGGER = LoggerFactory.getLogger(DemoApplication.class);

@Value("${demo.value:empty}")

private String demoValue;

@Bean

public CommandLineRunner commandLineRunner() {

return new CommandLineRunner() {

@Override

public void run(String... args) throws Exception {

LOGGER.warn("value = {}", demoValue);

}

};

}

public static void main(String[] args) throws Exception {

// Valid JSON:

// System.setProperty("SPRING_APPLICATION_JSON", "{\"demo\":{\"value\":\"demo\"}}");

// Invalid JSON:

System.setProperty("SPRING_APPLICATION_JSON", "{");

SpringApplication.run(DemoApplication.class, args);

}

}

```

```xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>demo</groupId>

<artifactId>demo</artifactId>

<version>0.0.1-SNAPSHOT</version>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>1.4.3.RELEASE</version>

</parent>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

</dependencies>

</project>

```

Answers:

username_1: I am not sure what we can do about it. It's a chicken and egg problem. When that code runs, the `Environment` has not been prepared yet. We want the environment to contribute to the initialization of the logging system (pattern, loggers, etc).

The filter is there on purpose to avoid an early init of the logging system. |

ElemeFE/react-amap | 520823409 | Title: Is your use of jsfiddle meant only for overseas users, or what does it mean?

Question:

username_0: <!--

请确保阅读下面的内容并勾选。没有勾选的 Issue 将被关闭。

-->

+ [ ] 我已经搜索过 issue,没有类似的问题,或者类似的问题仍然没有解决方案。

+ [ ] 我已经搜索过[文档](https://elemefe.github.io/react-amap/articles/start),并且仍然没有找到解决方案。

+ [ ] 我写了个问题重现的例子,链接或者代码将会贴在下面。

<!--

请确保阅读上面的内容并勾选。没有勾选的 Issue 将被关闭。

-->

#### Reproduce Example Link or Code Fragment

#### What is Expected?

#### What is actually happening?<issue_closed>

Status: Issue closed |

bluss/matrixmultiply | 386834699 | Title: Use optimal kernel parameters (architectures, matrix layouts)

Question:

username_0: I am trying to figure out which kernel parameters are optimal for different architectures.

For example, it looks like blis is using 8x4 for Sandy Bridge, but 8x6 for Haswell. Why? What led them to this setup? Specifically, because operations usually work on 4 doubles at a time, how does the 6 fit in there? Is Haswell able to execute a `_mm256` and a `_mm` operation separately *at the same time*?

Furthermore, if we have non-square kernels like for dgemm, is there a scenario where choosing 4x8 over 8x4 is better?

Answers:

username_1: You must also include src/archparams.rs in this

username_0: Another interesting bit is the choice of 8x6 over 6x8 (or 8x4 over 4x8 for Sandy Bridge, which is our current implementation), which prefers column- vs row-storage in the C matrix. This then ties in with my question here: https://github.com/username_1/matrixmultiply/issues/31

username_2: I also keep some [extra links that I didn't have time to sort](https://github.com/numforge/laser/blob/723299ea439bd10ffae0fda0601d5b153892303f/research/matrix_multiplication_optimisation_resources.md).

Anyway, in terms of performance I have a generic multi-threaded BLAS (float32, float64, int32, int64) that reaches 97~102% of OpenBLAS performance on my Broadwell (AVX2 and FMA) laptop, depending on whether it runs multithreaded or serial and on float32 or float64:

- [float32 bench](https://github.com/numforge/laser/blob/723299ea439bd10ffae0fda0601d5b153892303f/benchmarks/gemm/gemm_bench_float32.nim#L187-L260)

- [float64 bench](https://github.com/numforge/laser/blob/723299ea439bd10ffae0fda0601d5b153892303f/benchmarks/gemm/gemm_bench_float64.nim#L186-L258)

## Kernel parameters

- mr * nr: 6 * 16.

- Why 16?: One dimension must be a multiple of the vector size. With AVX it's 8. Also, since the CPU can issue 2 fused multiply-adds in parallel (instruction-level parallelism), 16 is an easy choice.

- Why 6?: We have 16 general-purpose registers on SSE2~AVX2. We need to keep a pointer to packed A, packed B and the loop index over kc. So with 6 I can unroll twice, using 12 registers, and have 4 left for bookkeeping.

- Why not 16x6?: C will be an MxN matrix, and very often C is a contiguous matrix that has been allocated. As I use row-major by default, 6x16 avoids transposing during the C update step and allows me to specialize when C has a unit stride along the N dimension.
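
The register arithmetic behind "6 with a vector width of 8 uses 12 registers" can be sketched as follows. This is only an illustration under stated assumptions: the function name `accumulator_registers` is made up, and it assumes one register per vector of C along the vectorized dimension and a file of 16 registers, as in the bullets above.

```python
import math


def accumulator_registers(vector_dim: int, other_dim: int, lanes: int) -> int:
    """Registers needed to hold a vector_dim x other_dim tile of C.

    `vector_dim` is the tile dimension that is packed into SIMD registers,
    `lanes` is the number of elements per register (assumption: 8 float32
    or 4 float64 for 256-bit vectors).
    """
    return math.ceil(vector_dim / lanes) * other_dim


def spare_registers(vector_dim: int, other_dim: int, lanes: int, total: int = 16) -> int:
    """Registers left over for loads, broadcasts and bookkeeping."""
    return total - accumulator_registers(vector_dim, other_dim, lanes)
```

With these assumptions, `accumulator_registers(16, 6, 8)` gives the 12 registers of the 6x16 float32 tile and leaves 4 spare. The same arithmetic also gives 12 for an 8x6 float64 tile with 4 lanes, which may be part of why a 6 shows up in the Haswell parameters, though that is a guess rather than a statement about how BLIS was designed.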

## Panel parameters

Proper tuning of mc and kc is very important as well.

Currently I use an arbitrary 768 bytes for mc and 2048 bytes for kc. In my testing I lost up to 35% performance with other values.

There are various constraints for both; the Goto paper goes into them in depth:

- micropanel of packed B of size kc * nr should remain in L1 cache, and so take less than half of it to not be evicted by other panels.

- Panel of packed A of size kc * mc should take a considerable part of the L2 cache, but still stay addressable by the TLB.

I had an algorithm to choose them, but as I can only query cache but no TLB information at the moment, I removed it and decided to tune manually.
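
A rough sketch of how the two cache constraints above could be turned into starting values for kc and mc. The cache sizes, the "half of L1" and "half of L2" budgets, and the function name `panel_sizes` are all assumptions for illustration; the TLB constraint is ignored entirely, so the result is at best a starting point for manual tuning.

```python
def panel_sizes(l1_bytes: int, l2_bytes: int, mr: int, nr: int, elem_bytes: int):
    """Return (mc, kc) derived from cache sizes alone.

    kc: largest value such that a kc x nr micropanel of packed B
        fits in half of the L1 cache.
    mc: largest multiple of mr such that a kc x mc panel of packed A
        fits in half of the L2 cache (the "half" is an assumption).
    """
    kc = (l1_bytes // 2) // (nr * elem_bytes)
    mc = (l2_bytes // 2) // (kc * elem_bytes)
    mc -= mc % mr  # keep mc a multiple of the microkernel height
    return mc, kc
```

For example, with an assumed 32 KiB L1, 256 KiB L2 and a 6x16 float32 kernel, `panel_sizes(32 * 1024, 256 * 1024, 6, 16, 4)` returns `(126, 256)`. These numbers differ from the hand-tuned values mentioned above, which is consistent with the point that cache sizes alone are not enough.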

username_2: I forgot to add: as mentioned in the paper [Automating the last mile](https://arxiv.org/pdf/1611.08035.pdf), you need to choose your SIMD instructions for the C updates.

You can go for shuffle/permute or broadcast, and balance them to a varying degree.

To find the best mix, you need to check from which ports those instructions can be issued. Also interleave them with FMAs to hide data-fetching latencies.

In my code I use full broadcast and no shuffle/permute, but mainly because it was simpler to reason about; I didn't test other configurations.

username_1: @username_2 Wow, cool to hear from you! Thanks for the links to the papers and for sharing your knowledge!

username_1: Issue #59 allows tweaking the NC, MC, KC variables easily at compile-time which is one small step and a model for further compile time tweakability.

username_3: Another idea, which libxsmm uses, is an autotuner such as https://opentuner.org/. OpenTuner automatically searches for the best parameters for the architecture the code is compiled on. |

argoproj/argo-cd | 987015144 | Title: Tooltips run up into top bar

Question:

username_0: If you are trying to resolve an environment-specific issue or have a one-off question about the edge case that does not require a feature then please consider asking a question in argocd slack [channel](https://argoproj.github.io/community/join-slack).

Checklist:

* [ ] I've searched in the docs and FAQ for my answer: https://bit.ly/argocd-faq.

* [x] I've included steps to reproduce the bug.

* [x] I've pasted the output of `argocd version`.

**Describe the bug**

The tooltips on application tiles will persist even when the tile is not visible. It will run up over the top bar.

**To Reproduce**

See video for visual example but basically hover over anything with a tooltip on the application tiles and then scroll up

**Expected behavior**

Would expect tooltips to disappear once their associated component is out of view.

**Screenshots**

https://user-images.githubusercontent.com/50851526/131893632-daa52375-f48f-49cf-8671-3089f1dddf34.mp4

**Version**

v2.2.0+9025318

Answers:

username_1: Can I work on this bug @mayzhang2000

username_2: Sure, Thank you @username_1 !

Status: Issue closed

|

ibm-openbmc/dev | 423479565 | Title: Redfish: Code Update - Support new RedfishHttpPushUri

Question:

username_0: Per https://github.com/DMTF/Redfish/pull/3296, a new URI property to push the software image at, RedfishHttpPushUri, is on its way. This is to track the work to move over to this new property. Should be minimal effort, seems like mostly a conformance thing.

Answers:

username_1: refresh

username_0: This was replaced by MultipartHttpPushUri so closing out.

Status: Issue closed

|

Colin-Jay/BlogsComment | 553012058 | Title: Test2 - Colin-Jay's Blogs

Question:

username_0: https://colin-jay.cn/2020/01/22/Test2/

Answers:

username_0: Welcome to visit.

username_1: # Bowing down to the mighty Xu

Status: Issue closed

username_0: https://colin-jay.cn/2020/01/22/Test2/ |

mholt/PapaParse | 674379326 | Title: Feature: Flatten in Json to CSV

Question:

username_0: Hi,

Can we have an option in `config` to flatten the JSON before converting it to CSV? Yes, I can use a lib for that, but I thought it would be nice to have it built in, making this library an all-around JSON-to-CSV (and vice versa) converter.

Eg.

JSON

```

[

{

"Id": "1",

"Account": {

"Id": "A1"

},

"Contact": {

"Id": "C1"

}

},

{

"Id": "2",

"Account": {

"Id": "A1"

},

"Contact": {

"Id": "C2"

}

}

]

```

Flatten

```
[
  {
    "Id": "1",
    "Account.Id": "A1",
    "Contact.Id": "C1"
  },
  {
    "Id": "2",
    "Account.Id": "A1",
    "Contact.Id": "C2"
  }
]
```

CSV

```
"Id","Account.Id","Contact.Id"
"1","A1","C1"
"2","A1","C2"
```

Answers:

username_1: Hi,

Thanks for your suggestion, but I think we should reject it. PapaParse is a library for parsing and unparsing CSV files; it is not designed to transform data. It is up to the user of the library to pass the data in the right format.

I understand that this feature would be great for your use case, but we cannot add every transform function our users may require. Otherwise we would become a bloated library, and that would certainly carry a performance penalty.

Thanks for your understanding!
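
For readers who want the behaviour described in the question, here is a minimal sketch of flattening the rows in user code before handing them to the library. The `flatten` helper is hypothetical (it is not part of PapaParse), and it assumes nested values are plain objects; arrays are left untouched.

```javascript
// Hypothetical helper, not part of PapaParse: turns nested objects into
// a single-level object whose keys are dot-separated paths.
function flatten(obj, prefix = '', out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const path = prefix ? `${prefix}.${key}` : key;
    if (value !== null && typeof value === 'object' && !Array.isArray(value)) {
      // Recurse into nested plain objects, extending the key path.
      flatten(value, path, out);
    } else {
      out[path] = value;
    }
  }
  return out;
}

const rows = [
  { Id: '1', Account: { Id: 'A1' }, Contact: { Id: 'C1' } },
  { Id: '2', Account: { Id: 'A1' }, Contact: { Id: 'C2' } },
];

// Each row now has the keys "Id", "Account.Id" and "Contact.Id".
const flatRows = rows.map((row) => flatten(row));

// With PapaParse loaded, the flattened rows could then be converted:
// const csv = Papa.unparse(flatRows);
```

Keeping the transform outside the library means the key separator and the handling of arrays stay under the caller's control.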

Status: Issue closed

|

sysrepo/sysrepo | 260244742 | Title: How to use xpath expressions such as starts-with, ends-with or wildcards in sysrepo?

Question:

username_0: I want to delete all IP address nodes that start with fe80. I was able to use `contains` without any issues, but it also deletes IP addresses such as 12:fe80:1 if present.

I tried the following, but I am getting "validation failed" messages.

/ietf:interfaces/interface/inet-ip:ipv6/address[contains(.,'fe80')] ->not the thing i want.

/ietf:interfaces/interface/inet-ip:ipv6/address[ip='fe80')] ->not working

Regards,

Swathin

Answers:

username_1: Hi Swathin,

for example, the expression

```

/ietf:interfaces/interface/inet-ip:ipv6/address[starts-with(ip, 'fe80')]

```

should do what you want, simple enough. The `ip` node gets implicitly converted to `string`. As long as it is a leaf/leaf-list, it is performed exactly as you would expect, its value is used.

Generally, you can use patterns as well; the predicate would then look like this

```
[re-match(ip, 'fe80.*')]
```

The trailing `.*` is required because this function implicitly anchors the pattern at both the beginning and the end ([RFC ref](https://tools.ietf.org/html/rfc7950#section-10.2.1)).
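
As a rough analogy only (plain JavaScript string methods, not sysrepo or libyang calls), the sketch below shows why `contains` matches too much, and why a fully anchored pattern needs `.*` to allow characters after the prefix. The sample addresses are made up for illustration.

```javascript
// Made-up sample values; '12:fe80:1' is the false positive from the question.
const addresses = ['fe80::1', '12:fe80:1', 'fe80::abcd'];

// Analogue of contains(., 'fe80'): matches anywhere in the string.
const containsFe80 = addresses.filter((ip) => ip.includes('fe80'));

// Analogue of starts-with(ip, 'fe80'): matches only at the beginning.
const startsWithFe80 = addresses.filter((ip) => ip.startsWith('fe80'));

// Analogue of re-match: the pattern is anchored at both ends, so the
// whole value has to match it.
const anchored = (pattern) => new RegExp(`^(?:${pattern})$`);
const reMatchWithWildcard = addresses.filter((ip) => anchored('fe80.*').test(ip));
const reMatchWithoutDot = addresses.filter((ip) => anchored('fe80*').test(ip));
```

Here `containsFe80` keeps all three addresses, `startsWithFe80` and `reMatchWithWildcard` keep only the two that begin with `fe80`, and `reMatchWithoutDot` keeps none, because `0*` only allows further zeros.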

Regards,

Michal

Status: Issue closed

username_0: Thanks Michal for the quick response. This is exactly what i want.

Regards,

Swathin

username_1: Also, `@ip` refers to attributes; under normal circumstances you will never need to use this in YANG.

username_0: Is there any way to suppress the callback functions for an internal datastore operation? I tried using the session in the init callback function, but it makes the changes in the startup datastore.

Regards,

Swathin

username_0: I tried with @ as in normal xpath, but it was throwing validation failed error.

Regards,

Swathin

username_1: Hi Swathin,

firstly, you are not talking about the original issue anymore, please do not mix issues next time.

Secondly, normal XPath and YANG XPath differ minimally and `@` refers to attributes in both.

Finally, regarding your issue, I do not know what you mean by internal datastore or what callback you want to suppress. Please say what exactly you are doing, what behavior you see, and what would you like to happen instead (preferably in a new issue).

Regards,

Michal

username_0: Hi Michal,

I will start a new issue/thread for the new issue that I am facing. Thanks for the quick response.

Regards,

Swathin |

sinonjs/sinon | 178219707 | Title: Matcher being called when setting up stub behavior

Question:

username_0: * Sinon version : 1.17.6

* Environment : Node

* Other libraries you are using: `chai`, `sinon-chai`

**How to reproduce**

I am setting up two behaviors for a stub using a custom matcher:

```js
fs.readFile = sinon.stub();

fs.readFile
  .withArgs(sinon.match(function (fileName) { return endsWith(fileName, 'suffixa'); }))
  .yields(null, 'contents A');

fs.readFile
  .withArgs(sinon.match(function (fileName) { return endsWith(fileName, 'suffixb'); }))
  .yields(null, 'contents B');
```

For some reason, when that second `.withArgs` is called, that first matcher's function is invoked and is passed the matcher object. This is before the stub itself is ever called.

Answers:

username_1: Could you provide a code example that shows how this fails?

We have an issue template for good reasons.

username_0: That was my code example that fails. Here it is slightly reworded with some blanks filled in:

```es6
const { match, stub } = require('sinon');

const readFile = stub();

readFile
  .withArgs(match(fileName => endsWith(fileName, 'suffixA')))
  .yields(null, 'contents A');

readFile
  .withArgs(match(fileName => endsWith(fileName, 'suffixB')))
  .yields(null, 'contents B');

function endsWith(str, suffix) {
  return str.indexOf(suffix) + suffix.length === str.length;
}
```

Error:

```

return str.indexOf(suffix) + suffix.length === str.length;

^

TypeError: str.indexOf is not a function

at endsWith (/Users/jpage/Code/sinon-1154-repro/repro.js:14:14)

at Object.readFile.withArgs.fileName [as test] (/Users/jpage/Code/sinon-1154-repro/repro.js:6:31)

at deepEqual (/Users/jpage/Code/sinon-1154-repro/node_modules/sinon/lib/sinon/util/core.js:194:26)

at Object.deepEqual (/Users/jpage/Code/sinon-1154-repro/node_modules/sinon/lib/sinon/util/core.js:243:26)

at Function.matches (/Users/jpage/Code/sinon-1154-repro/node_modules/sinon/lib/sinon/spy.js:287:27)

at matchingFake (/Users/jpage/Code/sinon-1154-repro/node_modules/sinon/lib/sinon/spy.js:50:30)

at Function.withArgs (/Users/jpage/Code/sinon-1154-repro/node_modules/sinon/lib/sinon/spy.js:249:33)

at Object.<anonymous> (/Users/jpage/Code/sinon-1154-repro/repro.js:10:4)

at Module._compile (module.js:556:32)

at Object.Module._extensions..js (module.js:565:10)

```

Again, what's happening is the matcher function is being called during setup, even though the stub itself wasn't actually called.

username_0: Just reproduced with that code using the reported version and the latest, `1.17.6`, as well.

username_1: Right, that is confusing indeed!

I think you've uncovered a bug that we introduced when we merged https://github.com/sinonjs/sinon/pull/873

@username_2 you worked on this a while back, do you think we need a case in `deepEqual.use`, that examines if both `a` and `b` are matchers, and compares them, instead of trying to execute one to match the other?

https://github.com/sinonjs/sinon/blob/master/lib/sinon/util/core/deep-equal.js#L97-L99
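
To make the proposed extra case concrete, here is a simplified sketch. Everything in it is a hypothetical stand-in: `makeMatcher`, `isMatcher` and `matcherAwareEqual` are not sinon's real internals, and comparing two matchers by identity is only one possible rule.

```javascript
// Hypothetical stand-in for a sinon matcher object.
function makeMatcher(test) {
  return { isMatcher: true, test };
}

function isMatcher(value) {
  return Boolean(value) && value.isMatcher === true && typeof value.test === 'function';
}

// Simplified comparison; only the matcher-related branches are sketched.
function matcherAwareEqual(a, b) {
  if (isMatcher(a) && isMatcher(b)) {
    // Both sides are matchers: compare them directly and never run
    // one matcher's test function against the other matcher object.
    return a === b;
  }
  if (isMatcher(a)) {
    return a.test(b);
  }
  return a === b;
}

const argsA = makeMatcher((fileName) => fileName.endsWith('suffixa'));
const argsB = makeMatcher((fileName) => fileName.endsWith('suffixb'));

// Neither of the first two calls invokes a test function, so no TypeError.
const sameMatcher = matcherAwareEqual(argsA, argsA);
const differentMatchers = matcherAwareEqual(argsA, argsB);
const matchesString = matcherAwareEqual(argsA, 'file.suffixa');
```

With this extra branch, registering a second `withArgs` behavior would compare the two matcher objects without passing one of them to the other's predicate.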

username_2: @username_0 What is `str` in this instance? Can you provide a failing test case? I'm looking at the code and I don't see why it's failing, but I'll look into it.

username_0: I'm not 100% sure; str resembles a matcher object to me, but I don't know for sure, only that it's not a string and it's not expected that that `withArgs` is even calling the matcher function.

username_1: It's a matcher, the second call to `withArgs` passes it along, and it ends up in `deepEqual.use` at https://github.com/sinonjs/sinon/blob/master/lib/sinon/util/core/deep-equal.js#L97-L99

username_1: I've restructured the example a bit, to try and reduce it further.

Here's my version

```javascript
const { match, stub } = require('sinon');

const readFile = stub();

function endsWith(str, suffix) {
  return str.indexOf(suffix) + suffix.length === str.length;
}

function suffixA(fileName) {
  return endsWith(fileName, 'suffixa');
}

function suffixB(fileName) {
  return endsWith(fileName, 'suffixb');
}

const argsA = match(suffixA);
const argsB = match(suffixB);

readFile
  .withArgs(argsA);

readFile
  .withArgs(argsB);
```

Running that, gives the same error

```

return str.indexOf(suffix) + suffix.length === str.length;

^

TypeError: str.indexOf is not a function

```

username_1: Anyway, it is near midnight here, I should go to bed

Status: Issue closed

|

middleman/middleman | 223893615 | Title: Build error using liquid

Question:

username_0: I'm using middleman 4.0 with the liquid template engine. Running the middleman server everything is fine and I get no errors. As soon as I want to build the website I get this error:

`Template local 'data' tried to overwrite an existing context value. Please rename the key when passing to 'locals'`.

To be honest, I don't know where the error could be, since I'm using everything as shipped, with no extra config except `set :liquid, :layout_engine => :liquid` in config.rb. I only have an index.html.liquid file with some includes.

I hope somebody can help me out on this one. Thank you!

Answers:

username_1: @username_3 @username_0

Verified this.

It seems that plain Liquid usage is producing this error

http://www.rubydoc.info/github/middleman/middleman/Middleman/Renderers/Liquid

```
def manipulate_resource_list(resources)
  return resources unless app.extensions[:data]

  resources.each do |resource|
    next if resource.file_descriptor.nil?
    next unless resource.file_descriptor[:full_path].to_s =~ %r{\.liquid$}

    # Convert data object into a hash for liquid
    resource.add_metadata locals: {
      data: stringify_recursive(app.extensions[:data].data_store.to_h)
    }
  end
end
```

`data` is referenced here.

I have tested with and without the data folder, and it produces this issue either way.

username_1: @username_3

Looking into Liquid more, I think there is a definite issue here: if you want to include partials from another directory, it will fail.

Should be an easy fix, but just wondered if you have some magic for this.

```
# File 'middleman-core/lib/middleman-core/renderers/liquid.rb', line 14
def read_template_file(template_path)
  file = app.files.find(:source, "_#{template_path}.liquid")
  raise ::Liquid::FileSystemError, "No such template '#{template_path}'" unless file

  file.read
end
```

username_2: I have run into this and can confirm it happens out of the box.

### Steps to reproduce:

* `middleman init`

* Add 'liquid' to Gemfile and `bundle install`

* Rename `source/index.html.erb` to `source/index.html.liquid`

* `middleman build`

Produces the error:

<details>

<summary>

uncaught throw "Template local `data` tried to overwrite an existing context value. Please rename the key when passing to `locals`"</summary>

```