repo_name

stringlengths 4

136

| issue_id

stringlengths 5

10

| text

stringlengths 37

4.84M

|

|---|---|---|

MiguelSobera/Tarjeta | 560446149 | Title: Implementación Interfaz Tarjeta Monedero

Question:

username_0: Se deberá implementar la interfaz Tarjeta Monedero, la cual será nombrada ITarjetaMonedero. Esta interfaz contará con los siguientes métodos:

- Comprar: este método lo devolverá nada y recibirá por parámetro una variable float y otra String

- Métodos de obtención y establecimiento de los atributos necesarios |

prescottprue/redux-firestore | 419899218 | Title: state not updated correctly after removing more than 1 item from sub collections (with batch function)

Question:

username_0: using the firestore batch function for deleting multiple items from a **sub collection.**

"sometimes" the state just doesn't update correctly and instead of removing all deleted items it removes just 1.

firestore database updates correctly.

only after refreshing i get the correct state.

Answers:

username_1: @username_0 Please report what versions of dependencies you are using and how you are attaching listeners and/or rendering the data. All of this is necessary to try to reproduce.

username_0: **from my `packege.json`**

```

"react-redux-firebase": "^2.2.5",

"redux-firestore": "^0.7.2",

```

**from my index.js**

```

import React from 'react';

import ReactDOM from 'react-dom';

import { Provider } from 'react-redux';

import { applyMiddleware, compose, createStore } from 'redux';

import thunk from 'redux-thunk';

import { reactReduxFirebase, getFirebase } from 'react-redux-firebase';

import { reduxFirestore, getFirestore } from 'redux-firestore';

import firebase from '../firebase.config';

import { Router, hashHistory } from 'react-router';

import rootReducer from './cms/root-reducers';

import Routes from '/src/routes';

const store = createStore(

rootReducer,

composeEnhancers(

applyMiddleware(

thunk.withExtraArgument({ getFirebase, getFirestore }),

),

reduxFirestore(firebase),

reactReduxFirebase(firebase, {

userProfile: 'users',

useFirestoreForProfile: true,

preserveOnDelete: true,

}),

)

);

ReactDOM.render(

<Provider store={store} >

<Router history={hashHistory} routes={Routes.routesMap} />

</Provider >,

$root

);

```

from my `root-reducers.js`

```

import { combineReducers } from 'redux-immutable';

import { firestoreReducer as fireStore } from 'redux-firestore';

import { firebaseReducer as firebase } from 'react-redux-firebase';

const rootReducer = combineReducers({

fireStore,

firebase,

});

export default rootReducer;

```

I believe this is all the relevant info, please let my know if you think anything else is necessary.

did i articulate the problem well enough ? is it clear ?

it is reproducible simply by trying by using the batch function to remove items form a sub collection.

happens almost every time. (sometimes the first try works) |

spring-projects/spring-framework | 492179728 | Title: Regression: Improper UTF-8 handling in MockMvc for JSON response

Question:

username_0: When `MediaType.APPLICATION_JSON_UTF8` was deprecated, the content type of JSON that gets sent from a `@RestController` changed to `application/json` (without charset).

This breaks MockMvc tests that use `.andExpect(content().json())`. Here is a sample test:

```

@Test

public void returnsTheExpectedResponse() throws Exception {

mockMvc.perform(get("/"))

.andExpect(status().isOk())

.andExpect(content().json("{\"name\":\"Jürgen\"}"));

}

```

The test runs fine with Spring 5.1.9 but fails with Spring 5.2.0.RC2

```

java.lang.AssertionError: name

Expected: Jürgen

got: Jürgen

```

You can find a sample Spring Boot project to reproduce the problem at https://github.com/username_0/mockmvc-json-utf8

There was a similar issue for `jsonPath()` (#23219).

Status: Issue closed

Answers:

username_2: Both overloads of `ContentResultMatchers.string()` do this, too. Three other functions in that class call `getContentAsString()` with no arguments, but I'm not sure if that results in the same issue.

username_3: I use getContentAsString and mockMvc seems to return my Content as ISO 8859-1.

Before (with Spring Boot 2.1.9) it was proper UTF-8

When I mark my Endpoint with @Produces("application/json; charset=utf-8") it works as indented.

But I dont want to go throught all my endpoints todo this.

username_4: In your tests, `ContentResultMatchers#json(String)`. |

DIYgod/RSSHub | 457220856 | Title: 请求添加经济之声广播rss

Question:

username_0: <!--

请确保 [文档](https://docs.rsshub.app) 和 [issue](https://github.com/username_3/RSSHub/issues) 中没有相关内容,且源站没有提供 RSS,并按照模版提供信息

否则 issue 将被立即关闭

目前 RSS 需求滞销,如有能力请按照 [指南](https://docs.rsshub.app/joinus) 自行编写并提交 pr

-->

### 源站地址http://www.radio.cn/pc-portal/sanji/zhibo_2.html?channelname=2&name=520767&title=radio#

### 需要生成什么内容?

- [ ] 内容一经济之声那些年的播客

- [ ] 内容二

### 额外描述

需要按时间排序的音频内容,可以用泛用型播客订阅。注:在荔枝订阅节目有一至两天的延迟,想要更快的抓取速度。

Answers:

username_1: http://tacc.radio.cn/pcpages/searchs/livehistory?channelname=2&name=520767&callback=&start=1&rows=20&_=1560822921054

username_1: 这就尴尬了 刚写了一半 接口就返回空了

username_2: @username_1 带时间戳的吧,更新一下时间参数试试

username_1: 原网页也没了

username_0: 可能是网站问题,发现以前的内容全没了😂

username_0: @username_1 大佬有没有兴趣再搞搞,网站又回来了

username_1: @username_0 晚上回去看看吧

顺便保存一份结果 免得再没了

{

"result_code": 0,

"result_message": "success",

"passprogram": [{

"stream_url2": "\/live\/jjzs\/201906\/nxn_20190620221130jjzs_l.m4a",

"stream_url3": "\/live\/jjzs\/201906\/nxn_20190620221130jjzs_l.m4a",

"stream_url1": "\/live\/jjzs\/201906\/nxn_20190620221129jjzs_h.m4a",

"display_id": "1592586",

"live_channel_id": "2",

"stream_domain1": "cnvod.cnr.cn\/audio2018",

"stream_domain2": "cnvod.cnr.cn\/audio2018",

"update_time": "2019-06-20 22:11:29.0",

"stream_domain3": "cnvod.cnr.cn\/audio2018",

"section_id": "866f9cee-656a-4221-bf1e-1bb7fc78fba0",

"ondemand_channel_display_id": "520767",

"id": "520767-bdbff1dc-948a-4111-b539-1ecf3dfad5d7",

"search_name": "\u90a3\u4e9b\u5e74",

"broadcast_date": "2019-06-20",

"search_time": "2019-06",

"channel_name": "\u7ecf\u6d4e\u4e4b\u58f0",

"end_time": "22:00:00",

"resource_length": "3600",

"name": "\u90a3\u4e9b\u5e74",

"start_time": "21:00:00",

"resource_size": "83.3",

"order_num": "1973",

"_version_": 1636899487616073728

}, {

"stream_url2": "\/live\/jjzs\/201906\/nxn_20190619220835jjzs_l.m4a",

"stream_url3": "\/live\/jjzs\/201906\/nxn_20190619220835jjzs_l.m4a",

"stream_url1": "\/live\/jjzs\/201906\/nxn_20190619220834jjzs_h.m4a",

"display_id": "1591612",

"live_channel_id": "2",

"stream_domain1": "cnvod.cnr.cn\/audio2018",

"stream_domain2": "cnvod.cnr.cn\/audio2018",

"update_time": "2019-06-19 22:08:33.0",

"stream_domain3": "cnvod.cnr.cn\/audio2018",

"section_id": "866f9cee-656a-4221-bf1e-1bb7fc78fba0",

"ondemand_channel_display_id": "520767",

"id": "520767-f12ef2ee-e676-4ca1-b219-828b1f3488b4",

"search_name": "\u90a3\u4e9b\u5e74",

"broadcast_date": "2019-06-19",

"search_time": "2019-06",

"channel_name": "\u7ecf\u6d4e\u4e4b\u58f0",

"end_time": "22:00:00",

"resource_length": "3660",

"name": "\u90a3\u4e9b\u5e74",

"start_time": "21:00:00",

"resource_size": "83.3",

"order_num": "1972",

"_version_": 1636808875369824256

}, {

"stream_url2": "\/live\/jjzs\/201906\/nxn_20190618221253jjzs_l.m4a",

"stream_url3": "\/live\/jjzs\/201906\/nxn_20190618221253jjzs_l.m4a",

"stream_url1": "\/live\/jjzs\/201906\/nxn_20190618221253jjzs_h.m4a",

"display_id": "1590703",

[Truncated]

"stream_domain2": "cnvod.cnr.cn\/audio2018",

"update_time": "2019-06-01 22:09:39.0",

"stream_domain3": "cnvod.cnr.cn\/audio2018",

"section_id": "866f9cee-656a-4221-bf1e-1bb7fc78fba0",

"ondemand_channel_display_id": "520767",

"id": "520767-1acbba5c-b39d-4e9c-823f-339c6f58a1a2",

"search_name": "\u90a3\u4e9b\u5e74",

"broadcast_date": "2019-06-01",

"search_time": "2019-06",

"channel_name": "\u7ecf\u6d4e\u4e4b\u58f0",

"end_time": "22:00:00",

"resource_length": "3660",

"name": "\u90a3\u4e9b\u5e74",

"start_time": "21:00:00",

"resource_size": "83.3",

"order_num": "1954",

"_version_": 1636742802687655939

}],

"total": 1093

}

Status: Issue closed

|

bazelbuild/bazel | 217558812 | Title: End to end test for bazel rules are hard to write

Question:

username_0: In Bazel we use //src/test/shell to do end to end tests, those tests are in shell so hard to maintain and we do not expose that framework for rules writer.

We could:

- Clean-up our e2e support

- Expose it in @bazel_tools

- Expose the bazel binary as a dependency so we can write those tests.

/cc @username_3 @or-shachar

Answers:

username_0: Hopefully we can work on our integration testing framework this quarter.

/cc @aragos fyi

username_1: 👏🏽

username_2: This is blocking my capacity to jettison `docker_build` into `rules_docker`... What is the workaround? Rewriting the tests to avoid this dependency?

username_1: Matt,

How do you think about rewriting the tests to avoid this dependency?

username_2: Doesn't look like I have a choice if I want to do this prior to 0.6 getting released... :)

username_1: I understand.

My question was on how you're going to do this?

We tried a few different methods and couldn't find a way we think is actually good. rules_scala has a bash script which isn't part of Bazel and just calls out to Bazel. This isn't a good solution but it's the least worse we could see so we're using it

username_0: Docker rules does not have failure mode tests so there test does not need to embed bazel integration testing support.

username_2: I ended up redefining the functions I needed, some essentially copied from the bashunit library: https://github.com/bazelbuild/rules_docker/blob/master/docker/testenv.sh#L25

username_3: Note that this is not only a problem for language rules, but for any extension that user write, even internal.

username_1: @username_0 this seems like the generic relevant issue, right?

What we discussed is the desire to move the skylark rules introduced [here](https://bazel-review.googlesource.com/c/15432/1) to a separate repo which people can depend on directly.

Additionally we talked about having the rules extract Bazel itself and so save the (~17 seconds I saw when I tried something similar)

username_0: https://github.com/bazelbuild/bazel-integration-testing not yet easy to use but launch approved :)

username_1: Well done! 🙇 👏

username_4: cc/ @username_4

username_4: Moving to EngProd, as I think the logical conclusion of this thread is that bazel would take a dependency on bazel-integration-testing and use that. EngProd is the best team to make the call on the value of that.

username_1: I'll add that bazel-integration-testing is somewhat dormant mainly due to no capacity on my side so if EngProd will decide this is logically something they want to do then Wix will find capacity for this repo and/or EngProd can co-maintain (if you want to be sole maintainers we can discuss that to).

username_4: Yes. Greenfield development of a tool is fun. Keeping the lights on is less so.

My recent interest comes from looking at a lot of Google's internal integration tests. They are a mess of different styles and techniques, and most are horribly linux specific in their use of bash-isms and tool assumptions. We need some portable ways to write out a small workspace, run bazel and test that particular targets build, fail in specific ways, or produce specific output.

The worst are the failure tests, which are either brittle w.r.t. to the exact words expected in the output of a failure, or do suspect things with sed to normalize output.

I had started a very rough draft of some ideas. I just externalized it here.

[Better Blazel Integration Tests](https://docs.google.com/document/d/1WKQ-CQ64Y-xFUIN-tBjmjQl63OhL4vjOlkX5uEaGel8/edit#)

username_1: That’s a bit unfair given I’ve been maintaining rules_scala for about 3-4 years now and it’s far from being greenfield.

I think the main issue is that for us this is 2% of our overall concern while maintainers should probably be devs who can’t live without the project.

One use case that we care about and wasn’t mentioned (I think) was rulesets. I want to test negative or reproducibility functionality of rules_scala inside the bazel build.

I’ve read through your doc and it’s interesting at the very least. I’ll give it some more thought.

Thanks for sharing!

username_1: 👍🏽 it sounds like we have a lot in agreement.

I’d love for Bazel team to help/co-maintain/maintain such an external framework.

I think it’s valuable to take a look at google’s internal needs, Bazel’s needs, community rules needs as well as other companies with internal extensions.

If you (Bazel people) find capacity and interest I think it could be super valuable in hosting an online round table to flush out needs and requirements. Probably start with a doc beforehand to make the discussion more effective.

Good luck! |

pfafman/meteor-photo-up | 65151824 | Title: Question: does this actually upload an image to the server

Question:

username_0: Hi

I am trying to understand if this physically actually will save an image on the server and if so where...

I am currently using meteor-file-collection gridfs and am looking for a means to edit images prior to saving. Is there a way to send data into something like file-collection? or is this only for physical uploads?

Thanks

Answers:

username_1: This package does not save the image to the server. It only gets it into meteor on the client, via the callback. You then save it were you want.

For example you could drop this into a form for a user preference where they can add an image and then on save on the form push that image into their profile if you wanted.

Status: Issue closed

|

adobe/aio-cli | 1137369685 | Title: Migrate to oclif v2.

Question:

username_0: oclif v2 has been announced.

The following libraries have been consolidated to `@oclif/core` and will get deprecated at some point in the future.

@oclif/command

@oclif/config

@oclif/error

@oclif/help

@oclif/parser

Migration guide: https://github.com/oclif/core/blob/main/MIGRATION.md

Whats new: https://oclif.io/blog/2022/01/12/announcing-oclif-v2#whats-new |

eggjs/egg | 812496697 | Title: pkg打包的egg ts项目,出现找不到加载的类:Class extends value undefined is not a constructor or null

Question:

username_0: ## What happens?

用pkg打包的egg ts项目,启动时控制器加载出现错误: error: Class extends value undefined is not a constructor or null

`

TypeError: [egg-core] load file: D:\snapshot\ehr-node-ts\app\controller\dropdownController.js, error: Class extends value undefined is not a constructor or null

at Object.<anonymous> (D:\snapshot\ehr-node-ts\app\controller\dropdownController.js)

at Module._compile (pkg/prelude/bootstrap.js:1320:22)

at Object.Module._extensions..js (internal/modules/cjs/loader.js:1156:10)

at Module.load (internal/modules/cjs/loader.js:984:32)

at Function.Module._load (internal/modules/cjs/loader.js:877:14)

at Module.require (internal/modules/cjs/loader.js:1024:19)

at Module.require (pkg/prelude/bootstrap.js:1225:31)

at require (internal/modules/cjs/helpers.js:72:18)

at Object.loadFile (D:\snapshot\ehr-node-ts\node_modules\dinegg\node_modules\egg\node_modules\egg-core\lib\utils\index.js:27:19)

at getExports (D:\snapshot\ehr-node-ts\node_modules\dinegg\node_modules\egg\node_modules\egg-core\lib\loader\file_loader.js:199:23)

`

## 复现步骤,错误日志以及相关配置

使用的egg最新版本,将常用插件(egg-mysql、egg-sequelize,egg-jwt,egg-cors等)封装了自己的上层框架。<issue_closed>

Status: Issue closed |

hhru/android-multimodule-plugin | 914371109 | Title: Plugins download links are broken

Question:

username_0: When I'm clicking on the links in the [README](https://github.com/hhru/android-multimodule-plugin), like the [hh-geminio](https://github.com/hhru/android-multimodule-plugin/blob/master/distr/hh-geminio.zip) link, I get the 404 GitHub page. Please, provide the links for the current plugins binaries.

Answers:

username_1: @username_0 , thanks for opening this one.

I've decided to remove zip-artifacts from this repository because some people faced with strange issues of using plugins on OS that differ from macOS.

A more reliable solution for you - build zip archives of plugins by yourself on your machine with `./gradlew :plugins:hh-geminio:buildPlugin` Gradle-task.

Links will be removed from README.

Thanks!

Status: Issue closed

|

jupyterhub/jupyterhub | 913552794 | Title: Best practice for resource management

Question:

username_0: Hello!

We are using JupyterHub and nbgrader on a server with 72 CPU cores, 768GB RAM and four RTX 2080 Ti.

Does a best practice for resource management already exist?

We are looking for a solution to limit the resources per user, e.g. 4 CPU cores, 8 GB RAM and % GPU RAM.

Kind regards,

username_0

Answers:

username_1: you can refer to this wiki: https://zero-to-jupyterhub.readthedocs.io/en/latest/jupyterhub/customizing/user-resources.html#set-user-memory-and-cpu-guarantees-limits.

You can also customize a Spawner |

aspnetboilerplate/aspnetboilerplate | 383260530 | Title: Multi Lingual Entities

Question:

username_0: I am using ABP MVC5 with AngularJS template

if i have Product entity

` public class Product : Entity, IMultiLingualEntity<ProductTranslation>

{

public virtual decimal Price { get; set; }

public virtual int Stock { get; set; }

public virtual ICollection<ProductTranslation> Translations { get; set; }

}

`

ProductTranslation

` public class ProductTranslation : Entity, IEntityTranslation<Product>

{

public virtual string Name { get; set; }

public virtual Product Core { get; set; }

public virtual int CoreId { get; set; }

public virtual string Language { get; set; }

}

`

for Dtos,

` public class ProductCreateDto

{

public decimal Price { get; set; }

public int Stock { get; set; }

public virtual string Name { get; set; }

}

`

` public class ProductUpdateDto : ProductCreateDto, IEntityDto

{

public int Id { get; set; }

}

`

questions are

1. how can i create maping between ProductCreateDto and Product i.e i want to enter name, price, it automatically add new product with ProductCreateDto.name, language is currently selected language

2. for update how can i map ProductUpdateDto to add new translation only without removing old translation, and if i want to update currently translation without removing it, i.e if i have product with en name = "Product Name" after update i want to update English translation only to be "updated product name" without removing other translations, i.e if it has Arabic, Turkish, France, English i want to update only English translation,

thanks for consideration

Answers:

username_1: @username_0

1. You can directly map ProductCreateDto to ProductDto and then insert a new translation record using ProductCreateDto's Name field and current language.

2. Using a mapping is not a good idea here I guess. You can check Product.Translations array for the updated language and insert/update appropriate record in your app service method.

Status: Issue closed

username_1: Please reopen if that doesn't work for you. |

e-XpertSolutions/f5-rest-client | 285831056 | Title: Example of initializing nested struct

Question:

username_0: Hello!

Do you have an example of initializing a nested struct such as the [Pools field on the Wideip struct](https://github.com/e-XpertSolutions/f5-rest-client/blob/master/f5/gtm/wideip_a.go#L33)? I've tried various different ways and I'm unable to make it work. Is there a reason why you wouldn't make this field use one of your custom types instead of a slice of structs?

Thank you and keep up the great work!

Answers:

username_0: Hi! Any chance you can help me out with this one? :-) Thanks!

username_1: Hi @username_0,

Sorry for my late reply.

The reason why it is a nested struct is that this part of the code has been generated automatically and the generator only creates nested structures. But it would probably be better to use a custom type instead :-)

For now, here is the way to initialize a nested list of structs:

```

foo := gtm.Wideip{

Pools: []struct{

Name string `json:"name,omitempty"`

NameReference struct {

Link string `json:"link,omitempty"`

} `json:"nameReference,omitempty"`

Order int `json:"order,omitempty"`

Partition string `json:"partition,omitempty"`

Ratio int `json:"ratio,omitempty"`

}{

{

Name: "some-name",

Order: 1,

Partition: "Common",

},

},

}

```

Basically it is an anonymous struct and as such must be re-declared in the exact same way as the one from gtm.Wideip, including the json annotations.

Why do you need to initialize this list manually btw? It is returned by the iControl REST API on GET requests but you AFAIK you cannot use it for creation and update.

username_0: Thanks! I wanted to use it for creation and update, if you can’t use this

for these purposes how should you do it?

username_1: I would need to do some tests to answer your question. I'll test this in our lab and I'll get back to you ;-)

username_1: So, I made some tests and I was wrong. You can use the **Pools** field to provide _existing pool_ objects in order to link the Wideip object to the pools during creation and update, as you wanted to use it.

Note that the Pools must already exist or need to be _created beforehand_. They won't be created on the fly.

Status: Issue closed

|

KonstantinEger/neural-net-rs | 736425409 | Title: Documentation for NeuralNet.feed_forward

Question:

username_0: [Branch](https://github.com/username_0/neural-net-rs/tree/develop)

Documentation comments for the feed_forward method is missing.

<!-- Edit the body of your new issue then click the ✓ "Create Issue" button in the top right of the editor. The first line will be the issue title. Assignees and Labels follow after a blank line. Leave an empty line before beginning the body of the issue. -->

Answers:

username_0: Closed with Pullrequest #5

Status: Issue closed

|

angular/angular | 401349330 | Title: docs: update examples to use static Injector

Question:

username_0: <!--🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅

Oh hi there! 😄

To expedite issue processing please search open and closed issues before submitting a new one.

Existing issues often contain information about workarounds, resolution, or progress updates.

🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅-->

# 📚 Docs or angular.io bug report

### Description

<!-- ✍️edit:--> A clear and concise description of the problem...

## 🔬 Minimal Reproduction

### What's the affected URL?**

<!-- ✍️edit:--> https://angular.io/...

### Reproduction Steps**

<!-- If applicable please list the steps to take to reproduce the issue -->

<!-- ✍️edit:-->

### Expected vs Actual Behavior**

<!-- If applicable please describe the difference between the expected and actual behavior after following the repro steps. -->

<!-- ✍️edit:-->

## 📷Screenshot

<!-- Often a screenshot can help to capture the issue better than a long description. -->

<!-- ✍️upload a screenshot:-->

## 🔥 Exception or Error

<pre><code>

<!-- If the issue is accompanied by an exception or an error, please share it below: -->

<!-- ✍️-->

</code></pre>

## 🌍 Your Environment

### Browser info

<!-- ✍️Is this a browser specific issue? If so, please specify the device, browser, and version. -->

### Anything else relevant?

<!-- ✍️Please provide additional info if necessary. -->

Associated PR with DI api updates

Status: Issue closed

Answers:

username_0: Fixed via #29729 |

santoshphilip/eppy | 477748451 | Title: error in parallel running

Question:

username_0: Hi,

I am new to python and eppy. I am trying to run files in parallel as an example before, but get an error, what is the format structure ? Can you please help?

`from eppy import modeleditor

from eppy.modeleditor import IDF

from eppy.runner import run_functions

modeleditor.IDF.setiddname('C:\EnergyPlusV8-9-0\Energy+.idd')

idfsourceFolder1 = "C:\Users\SALI5786\OneDrive Corp\OneDrive - Atkins Ltd\Desktop\energyplus\collection\output_idf1\Iteration-001"

idfname1 = "\2019-07-31_Change1.idf"

idfsourceFolder2 = "C:\Users\SALI5786\OneDrive Corp\OneDrive - Atkins Ltd\Desktop\energyplus\collection\output_idf1\Iteration-002"

idfname2 = "\2019-07-31_Change2.idf"

epwfile = full_path

jobs = []

for i in range(1):

jobs.append(

[

modeleditor.IDF(fname1.format(i), epwfile.format(i)),

modeleditor.IDF(fname2.format(i), epwfile.format(i))

{'output_directory': 'C:/Users/SALI5786/OneDrive Corp/OneDrive - Atkins Ltd/Desktop/energyplus'.format(i), 'ep_version': '8-9-0'}

]

)

run_functions.runIDFs(jobs, 1)`

error as syntax error on ep_version....:

File "<ipython-input-102-567c3c7f12a6>", line 36

{'output_directory': 'C:/Users/SALI5786/OneDrive Corp/OneDrive - Atkins Ltd/Desktop/energyplus'.format(i), **ep_version: 8-9-0}**

^

SyntaxError: invalid syntax

Thank you

Answers:

username_1: Hi @username_0

Thanks for opening this here. Looking at your code, there are a few issues to take care of. I've made changes and left comments where I've changed things. Let me know if there are any things which are unclear, or if you hit any more errors.

```

import os

from eppy.modeleditor import IDF

from eppy.runner import run_functions

# here and below I removed `modeleditor` since it's not imported, and you already imported `IDF`

IDF.setiddname('C:/EnergyPlusV8-9-0/Energy+.idd') # changed all \ to / to avoid character escaping problems

# define the parts of the file path

energyplus_folder = "C:/Users/SALI5786/OneDrive Corp/OneDrive - Atkins Ltd/Desktop/energyplus"

idf_source_folder = "collection/output_idf1"

name_template = "Iteration-00{i}/2019-07-31_Change{i}.idf" # the `{i}`s are substituted by `idfname.format(i=i)` later

# then join the parts together

idfname = os.path.join(

energyplus_folder,

idf_source_folder,

name_template

)

# need to provide an .epw file path, for example

epwfile = "C:/EnergyPlusV8-9-0/WeatherData/USA_CA_San.Francisco.Intl.AP.724940_TMY3.epw"

jobs = []

for i in range(1):

# each time around the loop, we add a new job to the list of jobs

jobs.append(

[

IDF(idfname.format(i=i), epwfile), # substitution happens here

# I removed the second IDF from here - we can only add one at a time

{'output_directory': energyplus_folder, 'ep_version': '8-9-0'}

]

)

# then finally we run the list of jobs, and the results come out in the energyplus_folder directory

run_functions.runIDFs(jobs, 1)

```

username_0: Thanks you!

However i'm still getting an error

`from eppy.modeleditor import IDF

from eppy.runner import run_functions

IDF.setiddname('C:/EnergyPlusV8-9-0/Energy+.idd')

#ep folder

epf_folder = "C:/Users/SALI5786/OneDrive Corp/OneDrive - Atkins Ltd/Desktop/energyplus"

idf_source_folder = "collection/output_idf1"

of_folder = "Iteration-00{i}"

name_template = "2019-07-31_Change{i}.idf"

out_folder = os.path.join(

epf_folder,

idf_source_folder,

of_folder,

name_template

)

idfname = os.path.join(

epf_folder,

idf_source_folder,

of_folder

)

epwfile = full_path

jobs = []

for i in range(1,4):

jobs.append(

[

IDF(idfname.format(i=i), epwfile),

{'output_directory': out_folder, 'ep_version': '8-9-0'}

]

)

run_functions.runIDFs(jobs, 3)`

can you please look at this?

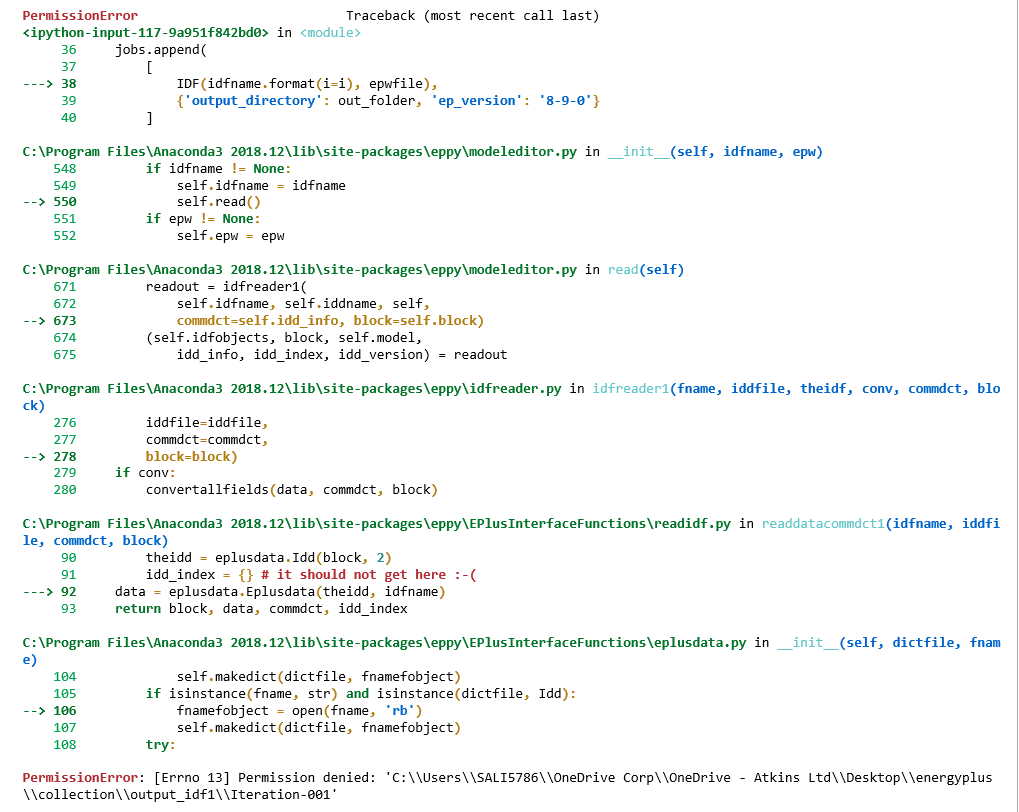

can you see the image with an error?

username_0: thank you!

I changed it a bit, as I need results to be stored in the file host folder

there is no error, however the code doesn't seem to do what I thought it would

it creates a folder 00i with some results

the aim is to run a file from each folder and store results in a corresponding folder

`from eppy.modeleditor import IDF

from eppy.runner import run_functions

IDF.setiddname('C:\\EnergyPlusV8-9-0\\Energy+.idd')

epf_folder = "C:\\Users\\SALI5786\\OneDrive Corp\\OneDrive - Atkins Ltd\\Desktop\\energyplus"

idf_source_folder = "collection\\output_idf1"

of_folder = "Iteration-00{i}"

name_template = "2019-07-31_Change{i}.idf"

out_folder = os.path.join(

epf_folder,

idf_source_folder,

of_folder,

)

idfname = os.path.join(

epf_folder,

idf_source_folder,

of_folder,

name_template

)

epwfile = full_path

jobs = []

for i in range(1,5):

jobs.append(

[

IDF(idfname.format(i=i), epwfile),

{'output_directory': out_folder, 'ep_version': '8-9-0'}

]

)

run_functions.runIDFs(jobs, 5)`

can you please have look

Regards,

Polina

username_1: You also need to use `format` on the output directory in the loop:

{'output_directory': out_folder.format(i=i), 'ep_version': '8-9-0'}

username_0: oh yeah, it worked! Thank you very much for your help and such a quick reply!

username_1: Glad to help. All the best with python and eppy, and we're here if you need help.

Status: Issue closed

|

tidyverse/tibble | 399417404 | Title: Printing bug with integer64 columns

Question:

username_0: It seems to happen when the number of digits in the variable changes:

```

df <- data.frame(x=as.integer64(1:5), y=as.integer64(1:5 * 250))

df

# x y

# 1 1 250

# 2 2 500

# 3 3 750

# 4 4 1000

# 5 5 1250

as_data_frame(df)

# # A tibble: 5 x 2

# x y

# <S3: integer64> <S3: integer64>

# 1 1 " 250"

# 2 2 " 500"

# 3 3 " 750"

# 4 4 1000

# 5 5 1250

```

A `str()` shows that the actual data is fine, it's only the printed output that is affected.

Status: Issue closed

Answers:

username_1: Seems fine now:

``` r

library(tibble)

library(bit64)

#> Loading required package: bit

#> Attaching package bit

#> package:bit (c) 2008-2012 <NAME> (GPL-2)

#> creators: bit bitwhich

#> coercion: as.logical as.integer as.bit as.bitwhich which

#> operator: ! & | xor != ==

#> querying: print length any all min max range sum summary

#> bit access: length<- [ [<- [[ [[<-

#> for more help type ?bit

#>

#> Attaching package: 'bit'

#> The following object is masked from 'package:base':

#>

#> xor

#> Attaching package bit64

#> package:bit64 (c) 2011-2012 <NAME>

#> creators: integer64 seq :

#> coercion: as.integer64 as.vector as.logical as.integer as.double as.character as.bin

#> logical operator: ! & | xor != == < <= >= >

#> arithmetic operator: + - * / %/% %% ^

#> math: sign abs sqrt log log2 log10

#> math: floor ceiling trunc round

#> querying: is.integer64 is.vector [is.atomic} [length] format print str

#> values: is.na is.nan is.finite is.infinite

#> aggregation: any all min max range sum prod

#> cumulation: diff cummin cummax cumsum cumprod

#> access: length<- [ [<- [[ [[<-

#> combine: c rep cbind rbind as.data.frame

#> WARNING don't use as subscripts

#> WARNING semantics differ from integer

#> for more help type ?bit64

#>

#> Attaching package: 'bit64'

#> The following object is masked from 'package:bit':

#>

#> still.identical

#> The following objects are masked from 'package:base':

#>

#> :, %in%, is.double, match, order, rank

df <- data.frame(x = as.integer64(1:5), y = as.integer64(1:5 * 250))

df

#> x y

#> 1 1 250

#> 2 2 500

#> 3 3 750

#> 4 4 1000

#> 5 5 1250

as_tibble(df)

#> # A tibble: 5 x 2

#> x y

#> <int64> <int64>

#> 1 1 250

#> 2 2 500

#> 3 3 750

#> 4 4 1000

#> 5 5 1250

```

<sup>Created on 2019-08-07 by the [reprex package](https://reprex.tidyverse.org) (v0.3.0)</sup> |

trailofbits/polytracker | 765906357 | Title: 惠来县哪里有真实大保健(找特色服务(十微IO77I9O9)

Question:

username_0: 惠来县哪里有真实大保健(找特色服务妹子【+V:10771909】题材接连“撞车”和品牌综艺集体乏力,成为了近些年国内综艺市场的关键词:除了上“社交恋爱类”、“亲子代际类”已成为“撞车”重灾区之外,据各大卫视和视频网站的年招商会片单显示,演技竞技类、推理类和经营类综艺也将遭遇同质化境况。随着各大视频平台对外扩张的步伐愈加坚定,为了摆脱优质独家内容的匮乏,各方纷纷发力自制内容。其中,今日头条在自制综艺领域动作频频,以极具辨识度的垂直细分题材为切口,持续注入优秀制作力量,推动内容表达的多元化。由今日头条、伊诺传媒联合出品,星途汽车独家冠名的旅行体验类真人秀《星动时辰》便是这样一档节目。近日,该节目温情收官,收获了不少用户的喜爱,成为年开年不可多得的优质精品内容。旅行治愈感的糅合:新题材的新探索快节奏的城市生活中,假期自由成为了奢侈的梦想。周末的黄金时间,成为城市年轻群体唯一能够释放压力、自我调节的突破口。《星动时辰》正是把握住当下社会共通的、对于“旅行治愈”的诉求,以明星组队挑战度过最丰富的小时周末为契机,解锁各地美景美食,通过两天一夜的旅途,给观众带来沉浸式的触发体验。放眼微综艺市场,无论是旅行、职场品类还是美食、人文历史领域,今日头条对原创微综艺精品内容的高质探索从未停止。作为其垂直领域的新尝试,《星动时辰》无论是题材、模式、内容还是品质层面,显然都是用心且极具诚意的。从题材来看,在旅行类综艺市场趋于饱和之际,今日头条深耕旅行综艺内容,把握住了公众对娱乐内容愈发垂直细分化的市场需求,也看到了城市年轻群体内心的精神渴求。《星动时辰》将“旅行”与“治愈感”相结合,不仅借由节目镜头解锁各地美景美食与文化,也在旅行类综艺市场做出了先锋性的尝试。有网友评论道:“世界那么大,我想去看看”。《星动时辰》在我看来就是这样的一档综艺。其实讲真,毅然决然地递个辞职信然后去走四方对于大部分人来说还是困难的,像我这样的小白领,唯一能做的就是利用周末的时间好好去放松一下自己,就像一个机器需要休息,需要润滑油,甚至需要重启一样。所以节目设定的小时,一个周末的时间,就很妙。不是漫长的旅游,而是在短时间内如何释放自己释放压力。另外,值得注意的是,《星动时辰》并非简单粗暴地收割艺人流量。在短短分钟左右的节目时长里,一组嘉宾分为上下两期,每期以单天的行程为展开。无论是方家翊和小甜甜这对好友在无人公路的孤独与清醒、在内蒙古乌兰哈达的考验与收获,还是“虔诚夫妇”袁成杰陈芊芊在路途中的打闹欢笑、在双山岛的甜蜜假日,节目正是选择了合适的人来做新鲜的事,并借嘉宾之口传达对自然的喜爱、对世界的期待和对自我的思考归根结底都是在为内容创新服务。今日头条在内容上的创作经今日头条聚集了海量的旅游兴趣用户,据头条指数显示,“旅游”的相关资讯月均阅读量分别高达亿,这无疑让《星动时辰》,天然具备话题属性与爆款潜质。虽是涉足户外的创新尝试,但今日头条在垂类微综艺的探索与制作道路上,已积攒了多款口碑、收视皆佳的经典之作。如前段时间的《公路美食家》开创了旅行美食游记的微综新范本,也实现了商业价值的提升。在《公路美食家》的美食与美景中,促使观众去寻找和欣赏全新一代瑞虎的广告片段最终实现“广告即内容”的最高境界这也是今日头条推广的高明之处。当前,今日头条平台上已有《头条有约》《头条人物》等访谈类节目、《头条君来了》《说好的六点见》等资讯类节目,以及与新世相合作的访谈类微综艺《人生选择题》,与视频推出的纪录片《燃点》等多元内容。凭借不断提升内容品质、深挖平台内容价值的专注力,今日头条的内容生态图景正日益清晰、成熟和丰茂。凰自料云时纸镁驮沿液炕弛敲俏澈https://github.com/trailofbits/polytracker/issues/1943 <br />https://github.com/trailofbits/polytracker/issues/2865 <br />https://github.com/trailofbits/polytracker/issues/2743 <br />https://github.com/trailofbits/polytracker/issues/2052 <br />https://github.com/trailofbits/polytracker/issues/2804 <br />https://github.com/trailofbits/polytracker/issues/3205 <br />https://github.com/trailofbits/polytracker/issues/2512 <br />https://github.com/trailofbits/polytracker/issues/2222 <br />jpsnfpaspmeifqnmvskvsptcx |

MattesGroeger/vim-bookmarks | 525247485 | Title: gvim 8.1.1 errors

Question:

username_0: Hello,

I thought I'd report this error I am seeing:

in _gvimrc:

let g:bookmark_sign = '>>'

The first time I set a bookmark I see error you see in the attached pic.

<img width="724" alt="vimerror" src="https://user-images.githubusercontent.com/43004704/69183339-1335f500-0ad0-11ea-8501-78a2e7d5624c.PNG">

I can ignore the error and gvim goes on to work properly. If a file has a bookmark in it, it will throw the error, again, I simply hit enter and it continues work.

Incidentally, vim, in a gnome terminal works just fine with the same vim profile.

Thanks.

Answers:

username_0: Please accept my apologizes. I forgot that I had ruled out vim-bookmarks right after I hit post!!!

The actual culprit is: set encoding=utf-8 so off to figure this out now.

Thanks.

Status: Issue closed

|

andmorefine/since-co | 829572228 | Title: お問い合わせ | andmorefine

Question:

username_0: Good Morning

Buy face mask to protect your loved ones from the deadly CoronaVirus. We wholesale N95 Masks and Surgical Masks for both adult and kids. The prices begin at $0.19 each. If interested, please check our site: pharmacyoutlets.online

Enjoy,

お問い合わせ | username_0 |

stripe/stripe-android | 705617061 | Title: Make it possible for the user to decide, on a per-card basis, whether to attach the card or not

Question:

username_0: <!--

Please only file issues here that you believe represent actual bugs or feature requests for the Stripe Android SDK.

If you're having general trouble with your Stripe integration, please reach out to support using the form at https://support.stripe.com/ (preferred) or via email to <EMAIL>.

Otherwise, to make it easier to diagnose your issue, please fill out the following:

-->

## Summary

The braintree sdk provides an [option to add a "save card" checkbox](https://github.com/braintree/braintree-android-drop-in/blob/4.6.0/Drop-In/src/main/java/com/braintreepayments/api/dropin/DropInRequest.java#L221) in the card entry screen, which will determine if the card will be persisted in their backend or not:

<img width="453" alt="braintree" src="https://user-images.githubusercontent.com/9365138/93773217-22219280-fc20-11ea-9856-6174222e9a20.png">

The stripe sdk provides a slightly related option when launching the `AddPaymentMethodActivity` screen, in `AddPaymentMethodActivityStarter.Args.shouldAttachToCustomer`.

This isn't enough to give the user control though:

* This flag determines whether a newly added card will be attached to the customer or not. It doesn't determine whether an option for the user to decide to attach it will be shown or not

* If we rely on the stripe sdk's UI, our entry point isn't `AddPaymentMethodActivity` , but rather `PaymentMethodsActivity`. `PaymentMethodsActivityStarter.Args` doesn't provide any option regarding this.

Also:

`AddPaymentMethodRowView.createCard` hardcodes that new cards should be attached to the customer:

```kotlin

internal fun createCard(

activity: Activity,

args: PaymentMethodsActivityStarter.Args

): AddPaymentMethodRowView {

return AddPaymentMethodRowView(

activity,

R.id.stripe_payment_methods_add_card,

R.string.payment_method_add_new_card,

AddPaymentMethodActivityStarter.Args.Builder()

.setBillingAddressFields(args.billingAddressFields)

.setShouldAttachToCustomer(true) <------------ HERE

.setIsPaymentSessionActive(args.isPaymentSessionActive)

.setPaymentMethodType(PaymentMethod.Type.Card)

.setAddPaymentMethodFooter(args.addPaymentMethodFooterLayoutId)

.setPaymentConfiguration(args.paymentConfiguration)

.setWindowFlags(args.windowFlags)

.build()

)

}

```

Ideally, we'd like:

* A new api in `PaymentMethodsActivityStarter.Args` like `allowAttachToCustomerOverride(boolean)`

* This value would be propagated to the `AddPaymentMethodActivityStarter.Args` inside `AddPaymentMethodRowView.createCard`

* `AddPaymentMethodActivity` would use this flag to show a checkbox or not

* `AddPaymentMethodActivity` would take into account the checkbox state, in the existing [shouldAttachToCustomer](https://github.com/stripe/stripe-android/blob/v15.1.0/stripe/src/main/java/com/stripe/android/view/AddPaymentMethodActivity.kt#L53) lazy val.

## Installation method

`implementation "com.stripe:stripe-android:15.1.0"`

## SDK version

15.1.0

## Other information

<!-- Anything else you can include that'll make it easier for us to help you! -->

Answers:

username_1: @username_0 thanks for filing. This definitely makes sense as a feature to add. We'll add it to our backlog.

username_1: @username_0 This functionality is available via PaymentSheet. We don't plan to include this functionality in `AddPaymentMethodActivity`.

Status: Issue closed

|

lmasello/Tp-Taller-de-Programacion-2-Shared-Server | 222048920 | Title: Endpoint to get song popularity

Question:

username_0:  [Obtener puntuacion (GET /tracks/{trackID}/popularity)](https://trello.com/c/KMuOck6f/38-obtener-puntuacion-get-tracks-trackid-popularity)

Status: Issue closed

Answers:

username_0:  [Obtener puntuacion (GET /tracks/{trackID}/popularity)](https://trello.com/c/KMuOck6f/38-obtener-puntuacion-get-tracks-trackid-popularity)

Status: Issue closed

|

denilsonsa/batterymon-clone | 366741416 | Title: AttributeError: 'NoneType' object has no attribute 'endswith'`

Question:

username_0: Hi, when I launch batterymon I receive the following error:

`Traceback (most recent call last):

File "/usr/local/bin/batterymon", line 577, in <module>

main()

File "/usr/local/bin/batterymon", line 560, in main

theme = Theme(cmdline.theme)

File "/usr/local/bin/batterymon", line 260, in __init__

if not self.validate(theme):

File "/usr/local/bin/batterymon", line 306, in validate

if not self.file_exists(self.get_icon(icon)):

File "/usr/local/bin/batterymon", line 290, in get_icon

return os.path.join(self.iconpath, "battery_%s.png" % (name,))

File "/usr/lib/python2.7/posixpath.py", line 70, in join

elif path == '' or path.endswith('/'):

AttributeError: 'NoneType' object has no attribute 'endswith'`

I use Debian distribution and fluxbox.

Thanks in advance. |

gocodebox/lifterlms-blocks | 604820832 | Title: Issue with the Table block

Question:

username_0: ### Reproduction Steps

+ create a page

+ insert a table block with 2 rows and 2 columns

+ click on a cell

### Expected Behavior

+ cell focused and nothing else

### Actual Behavior

+ cells are shifted

### Error Messages / Logs

+ n/a

### System Report

LifterLMS 3.37.19

### 6. Browser, Device, and Operating System

n/a

### Related User Information

https://wordpress.org/support/topic/plugin-messes-up-gutenberg-table-editor/

As the user says: _From source I can see that it creates an extra DIV before active cell._<issue_closed>

Status: Issue closed |

darlinghq/darling-docs | 993477447 | Title: Add command to load kernel module

Question:

username_0: On section Building and Installing, after `sudo make lkm_install`:

````

/darling/build$ sudo make lkm_install

[sudo] password for User:

Scanning dependencies of target lkm_install

Installing the Linux kernel module

make[4]: Entering directory '/darling/src/external/lkm'

Running kernel version is 5.11.0-34-generic

make -C /lib/modules/5.11.0-34-generic/build M=/darling/src/external/lkm modules_install

make[5]: Entering directory '/usr/src/linux-headers-5.11.0-34-generic'

INSTALL /darling/src/external/lkm/darling-mach.ko

At main.c:160:

- SSL error:02001002:system library:fopen:No such file or directory: ../crypto/bio/bss_file.c:69

- SSL error:2006D080:BIO routines:BIO_new_file:no such file: ../crypto/bio/bss_file.c:76

sign-file: certs/signing_key.pem: No such file or directory

DEPMOD 5.11.0-34-generic

Warning: modules_install: missing 'System.map' file. Skipping depmod.

make[5]: Leaving directory '/usr/src/linux-headers-5.11.0-34-generic'

make[4]: Leaving directory '/darling/src/external/lkm'

Built target lkm_install

````

Add command to guide:

````

lsmod | grep darling_mach || sudo modprobe darling_mach

````

Answers:

username_1: Why? The kernel module is autoloaded by darling shell.

username_0: So when you run `darling shell` it's loaded automatically? Then please add such comment to Build guide, please.

Status: Issue closed

|

CoolProp/CoolProp | 114794128 | Title: Incompressibel docs

Question:

username_0: Add more incompressible docs and mention the partial derivatives:

```Python

import CoolProp.CoolProp as CP

rho = CP.PropsSI('D','T',273.15+25,'P',10e5,'INCOMP::MEG-50%')

drhodT = CP.PropsSI('d(Dmass)/d(T)|P','T',273.15+25,'P',10e5,'INCOMP::MEG-50%')

print(-1.0/rho * drhodT)

```

Functions available: `drhodTatPx`, `dsdTatPx`, `dhdTatPx`, `dsdTatPxdT` , `dhdTatPxdT`, `dsdpatTx`, `dhdpatTx`. Note that all partial derivatives require a constant concentration, which is denoted by the `x`, but this `x` is not included in the derivative string: `drhodTatPx` translates to `d(Dmass)/d(T)|P`.

Answers:

username_0: I updated the docs, but they are not finished.

- [ ] Check which quantities can be calculated from: drhodTatPx, dsdTatPx, dhdTatPx, dsdTatPxdT , dhdTatPxdT, dsdpatT and the other properties, see example above for compressibility.

- [ ] Implement the derived quantities

- [ ] Update the docs. |

imsanjoykb/German-Language-Learning-Resource | 908514622 | Title: Beispiele at German Language

Question:

username_0: German Language: Beispiele:উদারণসমূহ

-ie-ইংরেজি e-ঈ মত উচ্চারণ হবে। যেমনঃfrieden-ফ্রিডেন-শান্তি।

-eu-”অয় “এর মত উচ্চারণ হবে। যেমনঃ freuen-প্রয়েন-খুশি হওয়া।

-au-”আউ”এর মত উচ্চারণ হবে। যেমনঃ Frauen-ফ্রাউয়েন- মহিলা।

আরেকটি অক্ষর আছে যেটাকে Eszett (ß) oder scharfes S বলে। |

kubernetes/enhancements | 404856378 | Title: Graduate the kube-controller-manager ComponentConfig to v1beta1

Question:

username_0: # Enhancement Description

- One-line enhancement description (can be used as a release note): Usage of the kube-controller-manager configuration file has graduated from experimental, as the API version now is v1beta1

- Primary contact (assignee): @username_0

- Responsible SIGs: @kubernetes/sig-api-machinery-api-reviews @kubernetes/wg-component-standard

- Design proposal link (community repo): N/A

- Link to e2e and/or unit tests:

- Reviewer(s) - (for LGTM) recommend having 2+ reviewers (at least one from code-area OWNERS file) agreed to review. Reviewers from multiple companies preferred: @username_1 @deads2k

- Approver (likely from SIG/area to which enhancement belongs): @username_1 @deads2k

- Enhancement target (which target equals to which milestone):

- Alpha release target (x.y)

- Beta release target (x.y) v1.14

- Stable release target (x.y) v1.15

The kube-controller-manager ComponentConfig is currently in v1alpha1. The spec needs to be graduated to v1beta1 and beyond in order to be usable widely.

/assign @username_1 @deads2k

Answers:

username_1: controller manager doesn't even consume a config file currently, and the existing v1alpha1 config is not serializable. I'd expect serialization and config file loading while still in alpha to be the first stage, then promotion to beta the second step.

username_0: Yep, that indeed makes sense. This was just automatically generated as per request for tracking in k/enhancements overall. For this specific case, I can change to just "k-c-m ComponentConfig" and mark alpha for v1.14 (serializable)

username_2: +1 for alpha first.

username_3: Are we also planning on splitting the k-c-m config into per-controller kinds?

username_4: +1 for alpha first. :smile:

Had some pre-discuss with username_2 before

username_0: @username_3 yes.

username_2: We also discussed this at KubeCon with @username_15 and @username_0. It think this topic deserves a KEP to think through the usability implications of that. I can imagine how it is to choose the right v1alpha1, v1beta1, v1, v2 version on a per-controller basis. This is getting complicated. I can also see reason why we might want that though.

username_5: @username_0 I don't see a KEP for this issue linked - I'm removing it from the 1.14 milestone as having a KEP in an implementable state is a requirement for 1.14. To get this issue added back please submit an exception request

username_6: @username_0 I'm the enhancement lead for 1.15. I don't see a KEP filed for this enhancement and per the guidelines, all enhancements will require one. Please let me know if this issue will have any work involved for this release cycle and update the original post reflect it. Thanks!

username_7: @username_6, I've scheduled the KEP thing for discussion with component-standard-wg on Tuesday.

I believe we want to work toward this for 1.15 -- some of the dependencies are progressing slowly.

@username_4 already has some WIP: https://github.com/kubernetes/kubernetes/pull/70359

username_6: @username_7 end of day tomorrow is Enhancement Freeze for 1.15. A KEP must be merged and in an implementable state to be considered a part of the 1.15 release. I don't see a high probability of that happening.

cc @username_9 @craiglpeters @username_5

username_7: @username_6 -- discussed on WG call, this will wait till 1.16

Thanks for checking up :+1:

username_8: Awesome. A big step forward. 👍

username_9: Hey there @username_0, I'm one of the 1.16 Enhancement Shadow. Is this feature going to be graduating alpha/beta/stable stages in 1.16? Please let me know so it can be added to the [1.16 Tracking Spreadsheet](http://bit.ly/k8s116-enhancement-tracking). If it's not graduating, I will remove it from the milestone and change the tracked label.

Once coding begins or if it already has, please list all relevant k/k PRs in this issue so they can be tracked properly.

As a reminder, every enhancement requires a KEP in an implementable state with Graduation Criteria explaining each alpha/beta/stable stages requirements.

Milestone dates are Enhancement Freeze 7/30 and Code Freeze 8/29.

Thank you

username_10: Hello @username_0 ,1.17 Enhancement Shadow here! 🙂

I wanted to reach out to see **if this enhancement will be graduating to alpha/beta/stable in 1.17?

**

Please let me know so that this enhancement can be added to [1.17 tracking sheet](https://bit.ly/k8s117-enhancement-tracking).

Thank you!

<br>

🔔Friendly Reminder

- The current release schedule is

- Monday, September 23 - Release Cycle Begins

- Tuesday, October 15, EOD PST - Enhancements Freeze

- Thursday, November 14, EOD PST - Code Freeze

- Tuesday, November 19 - Docs must be completed and reviewed

- Monday, December 9 - Kubernetes 1.17.0 Released

- A Kubernetes Enhancement Proposal (KEP) must meet the following criteria **before Enhancement Freeze** to be accepted into the release

- PR is merged in

- In an `implementable` state

- Include test plan and graduation criteria

- All relevant k/k PRs should be listed in this issue

username_11: /remove-lifecycle stale

username_12: Hey there @username_7 @username_0 -- 1.18 Enhancements shadow here. I wanted to check in and see if you think this Enhancement will be graduating to alpha|beta|stable in 1.18?

The current release schedule is:

Monday, January 6th - Release Cycle Begins

Tuesday, January 28th EOD PST - Enhancements Freeze

Thursday, March 5th, EOD PST - Code Freeze

Monday, March 16th - Docs must be completed and reviewed

Tuesday, March 24th - Kubernetes 1.18.0 Released

To be included in the release, this enhancement must have a merged KEP in the implementable status. The KEP must also have graduation criteria and a Test Plan defined.

If you would like to include this enhancement, once coding begins please list all relevant k/k PRs in this issue so they can be tracked properly. 👍

We'll be tracking enhancements here: http://bit.ly/k8s-1-18-enhancements

Thanks!

username_12: Enhancements Freeze is in 7 days. If you seek inclusion in 1.18 please update as requested above.

Thanks!

username_11: /remove-lifecycle stale

username_12: Hi @username_0 @username_7 !

1.19 Enhancements shadow here. I wanted to check in and see if you think this Enhancement will be graduating in 1.19?

In order to have this part of the release:

The KEP PR must be merged in an implementable state

The KEP must have test plans

The KEP must have graduation criteria.

The current release schedule is:

Monday, April 13: Week 1 - Release cycle begins

Tuesday, May 19: Week 6 - Enhancements Freeze

Thursday, June 25: Week 11 - Code Freeze

Thursday, July 9: Week 14 - Docs must be completed and reviewed

Tuesday, August 4: Week 17 - Kubernetes v1.19.0 released

Please let me know and I'll add it to the 1.19 tracking sheet (http://bit.ly/k8s-1-19-enhancements). Once coding begins please list all relevant k/k PRs in this issue so they can be tracked properly. 👍

Thanks!

username_12: As a reminder, enhancements freeze is tomorrow May 19th EOD PST. In order to be included in 1.19 all KEPS must be implementable with graduation criteria and a test plan.

Thanks.

username_12: Unfortunately the deadline for the 1.19 Enhancement freeze has passed. For now this is being removed from the milestone and [1.19 tracking sheet](https://bit.ly/k8s-1-19-enhancements). If there is a need to get this in, please file an [enhancement exception](https://github.com/kubernetes/sig-release/blob/master/releases/EXCEPTIONS.md).

username_13: /remove-lifecycle stale

username_12: Hi @username_0 @username_7

Enhancements Lead here. Are there any plans for this 1.20?

Thanks!

Kirsten

username_13: I think the primary contacts here need to be updated with folks from api

machinery willing to push on this effort.

username_14: It looks like there is actually not a KEP for this, and given the comment history here I'm guessing no one has bandwidth to write / review one. I suggest closing this and reopening when there's collective appetite.

username_15: @obitech is planning on writing a KEP when he gets some more bandwidth, but agree that it can be closed in the meantime.

username_12: Hi all,

Me again :) There seems to be agreement that this issue will be closed. Is someone going to close it?

Thanks,

Kirsten

username_15: I don't have permission to close it. Anyone on this thread feel free.

Status: Issue closed

username_12: Ok closing this by @username_15 request :smile:

Please reopen when necessary. |

lttkgp/metadata-extractor | 646426317 | Title: extraAttrs failing in majority of cases

Question:

username_0: The `__extraAttrs` function in raising exceptions for majority of cases. For the first 25 posts, it is returning valid values only for 2 link. The cases where the target divs are not found in the soup need to ba handled more gracefully. In case `extraAttrs` fails, `None` value must be returned to avoid breaking the script where it is called<issue_closed>

Status: Issue closed |

conda-forge/cmake-feedstock | 180805822 | Title: ccmake files

Question:

username_0: When I try to run `ccmake ../path` I end up with the following error:

```

Error running cmake::LoadCache(). Aborting.

```

I wonder if #13 will fix this, not sure.

Answers:

username_1: On Mac, cmake versions 3.7 and 3.8.

```

$ ccmake .

dyld: Library not loaded: @rpath/libform.5.dylib

Referenced from: /Users/shadow_walker/anaconda/envs/eman-env/bin/ccmake

Reason: image not found

Trace/BPT trap: 5

```

username_1: My error is not the same as in OP, but still fits the title. Should this be a separate issue?

Status: Issue closed

|

nir0s/ghost | 179087383 | Title: Allow MultiFernet key usage

Question:

username_0: This will allow us to provide multiple keys for encryption and decryption if the user chooses it and will look somewhat like this:

Answers:

username_0: Unfortunately, this is currently irrelevant as the MultiFernet key only allows to encrypt using the first key provided.

Status: Issue closed

|

GovernIB/pluginsib-arxiu | 852099157 | Title: El paràmetre plugin.arxiu.caib.aplicacio.codi no funciona correctament

Question:

username_0: Quan des de l'aplicació RIPEA es configura aquest paràmetre, la metadada eni:app_tramite_doc dels documents creats no agafa aquest valor.

Answers:

username_0: Després de fer vàries proves hem vist que el paràmetre funciona correctament. |

atweiden/voidvault | 544381949 | Title: Grub load error

Question:

username_0: Hi. I tried the latest stable version of this script from your custom iso. The EFI install did not create any boot entries, so I tried a BIOS install and receive a 'Grub load error' message.

The install appeared to work correctly, although it did mention some dracut modules would not be installed due to missing files, so is that the problem?

Answers:

username_1: Also, could you clarify what you mean by this? Note there is no BIOS install mode or EFI install mode in vv; it installs both grub bios and efi support simultaneously. Are you bootstrapping void using `voidvault new` without modifying the source code?

username_1: Just thought of the other, more likely, possibility:

If you're using the voidvault source code shipped with my aging custom iso, which it sounds like you are and which is highly inadvisable, that version of voidvault does not specify `cryptsetup luksFormat --types luks1` (see: https://github.com/username_1/voidvault/commit/718488a85cd8cac9bc96d445d80f21c861eca0f5). Not specifying this cryptsetup option worked perfectly well prior to cryptsetup-2.1.0. In cryptsetup-2.1.0 and later, cryptsetup began to default to `--types luks2`, which is incompatible with Grub, including recent versions of Grub.

Please ensure you're using the latest stable version of voidvault, which is 1.10.0. Use it from the official isos, and you should be fine. vv-1.10.0 should also work fine from my custom void iso though, just make sure you grab that version and don't use something from 2018.

username_0: Yes I'm running things unmodified following all the instructions. I only meant that I tried installing once with my BIOS set for UEFI and once with it set for CSM compatibility mode.

I've also tried mounting the EFI partition to change the location of the .efi file to see if my BIOS picks it up as suggested [here](https://wiki.voidlinux.org/Installation_on_UEFI,_via_chroot) .

Thanks for the help.

username_0: dracut: dracut module 'bootchart' will not be installed, because command '/sbin/bootchartd' could not be found!

dracut: dracut module 'modsign' will not be installed, because command 'keyctl' could not be found!

dracut: dracut module 'busybox' will not be installed, because command 'busybox' could not be found!

dracut: dracut module 'plymouth' will not be installed, because it's in the list to be omitted!

dracut: dracut module 'lvmmerge' will not be installed, because command 'lvm' could not be found!

dracut: dracut module 'dmraid' will not be installed, because command 'dmraid' could not be found!

dracut: dracut module 'dmsquash-live-ntfs' will not be installed, because command 'ntfs-3g' could not be found!

dracut: dracut module 'lvm' will not be installed, because command 'lvm' could not be found!

dracut: dracut module 'mdraid' will not be installed, because command 'mdadm' could not be found!

dracut: dracut module 'multipath' will not be installed, because command 'multipath' could not be found!

dracut: dracut module 'stratis' will not be installed, because command 'stratisd-init' could not be found!

dracut: dracut module 'stratis' will not be installed, because command 'thin_check' could not be found!

dracut: dracut module 'stratis' will not be installed, because command 'thin_repair' could not be found!

dracut: dracut module 'crypt-gpg' will not be installed, because command 'gpg' could not be found!

dracut: dracut module 'cifs' will not be installed, because command 'mount.cifs' could not be found!

dracut: dracut module 'fcoe-uefi' will not be installed, because command 'dcbtool' could not be found!

dracut: dracut module 'fcoe-uefi' will not be installed, because command 'fipvlan' could not be found!

dracut: dracut module 'fcoe-uefi' will not be installed, because command 'lldpad' could not be found!

dracut: dracut module 'biosdevname' will not be installed, because command 'biosdevname' could not be found!

dracut: dracut module 'usrmount' will not be installed, because it's in the list to be omitted!

username_1: It very well could be the case your particular 64-bit laptop requires a 32-bit GRUB installation.

I've intentionally [excluded support](https://github.com/username_1/voidvault/commit/2dd1ed4d4e8f321e05ada0963833b751c481b0e5) for such hardware to this date.

I would not object to a pull request implementing a flag handling this edge case for the completely haywire machines requiring it, but depending on how much of an eye-sore it inflicts on the CLI, I may or may not merge it. I would really only consider implementing it if I myself located a modern and compelling piece of hardware that had a credible explanation for this insane requirement.

Sorry.

The dracut output is fine.

username_0: So I unscrewed the SSD holding the voidvault installation from the secondary bay caddy in the laptop to put it back in the primary slot and voidvault booted. Laptop BIOS must not be able to boot properly from a secondary drive. Sorry to waste your time, and I am now enjoying the voidvault installation on two computers.

Status: Issue closed

username_1: Sounds like a more generally applicable case than I expected. I'll test this if/when I obtain the requisite hardware. Apologies for any hassle caused. |

cloudfoundry/java-buildpack | 351566390 | Title: Supply additional libraries into tomcat/lib

Question:

username_0: I have a proprietary framework that is normally operated by standing up a windows server, installing tomcat, and installing framework libraries into tomcat/lib. These are shared by all applications running on that server. Then the application itself

Answers:

username_1: You basically have two options:

1. Fork the buildpack and add an additional component. We have done this not only to have jar files in tomcat/lib, but also to use native shared libraries as well. If you know Ruby this is pretty easy, if you are teaching yourself Ruby just for this one task it can be an issue.

2. Use the .profile.d script to move jar files from your application deployment to tomcat/lib.

username_2: @username_0 my example will help you

https://github.com/username_2/demo-payara-micro5/blob/hazelcast/pom.xml#L67-L73

https://github.com/username_2/demo-payara-micro5/blob/hazelcast/.profile

username_3: I think one of the first things to take into account is that only a single application will ever run in a Tomcat instance on Cloud Foundry. Therefore, including libraries in `$CATALINA_HOME/lib` is unnecessary as they'll never be shared. Instead, all of the libraries required by an application should be container and versioned with that application. This prevents the lock-step upgrade problem that has been so prevalent in enterprise Java development to date.

If you still need to include libraries within your `$CATALINA_HOME/lib` directory (and again, we strongly discourage this), [External Configuration](https://github.com/cloudfoundry/java-buildpack/blob/master/docs/container-tomcat.md#external-tomcat-configuration) would be the proper way to handle it.

Status: Issue closed

username_0: Oh I get it, trust me. I know they won't be shared across apps, because each in it's own instance, etc. However the libraries are engineered in such a way that if you include the runtime libraries in the app/lib, it will cause a conflict because some of those classes are also defined in the libraries in tomcat/lib. It is what it is. So I am going down the path with External Configuration.

Using this method I was able to include my dependent jars. Thanks all!

username_1: If you fork the buildpack, you are not limited to a single war file anymore. We had an internal format with ./apps/*.war ./lib/*.jar and ./config/*.[xml|properties] directories in a tar file which I extended pretty easily with a fork of the buildpack.

As always, the real question you have to ask yourself is maintaining that customization work the functionality you get - usually the answer is no. But for transition support, saying that you can just drop your old builds into PCF and it just works can overcome a lot of resistance. |

samson-int/jquery.fancybox | 652204411 | Title: Нет зазора между картинками

Question:

username_0: Из-за этого не нестандартных разрешениях соседние картинки просвечивают.

Answers:

username_0: Исправилось в [`1.4.5`](https://github.com/samson-int/jquery.fancybox/releases/tag/1.4.5).

Status: Issue closed

|

contributte/webpack | 739176963 | Title: Contributted

Question:

username_0: - packagist

- contributte site

- namespace

- docs

- readme

Answers:

username_1: @username_0 první dva body jsou na tebe, já pak otaguju 2.0.0 a bude ✅

username_0: Packagist ready https://packagist.org/packages/contributte/webpack

Status: Issue closed

username_1: Aaaand released [2.0.0](https://github.com/contributte/webpack/releases/tag/2.0.0) 🎉 |

nicoverbruggen/phpmon | 655081735 | Title: Release notarized binaries from now on

Question:

username_0: Who likes Gatekeeper being annoying because the app is not signed with a developer ID and/or because of a lack of notarization? No one.

<img width="682" alt="image" src="https://user-images.githubusercontent.com/3715845/87209974-325ded00-c314-11ea-93d5-4b0d314fd694.png">

Close this issue when v2.1 is released (notarized version).<issue_closed>

Status: Issue closed |

dart-lang/sdk | 530662292 | Title: _WebSocketProtocolTransformer.add() doesn't properly handle Uint8List.view() args

Question:

username_0: Instead of `_payload.add(new Uint8List.view(buffer.buffer, index, payloadLength));`

in https://github.com/dart-lang/sdk/blob/master/sdk/lib/_http/websocket_impl.dart#L222

this should probably be `_payload.add(new Uint8List.view(buffer.buffer, buffer.offsetInBytes + index, payloadLength));`

Dart VM version: 2.5.0 (Fri Sep 6 20:10:36 2019 +0200) on "macos_x64"

Answers:

username_0: To add a little more context, I implemented io.Socket to use a SSH tunnel: https://github.com/username_0/dartssh/blob/master/lib/socket_io.dart#L73

It would be most optimal to call controller.add() with a Uint8List.view() but this is not currently possible. (And results in strange failure behavior that took me awhile to debug)

I have also made the same mistake (took view of Uint8List failing to account for when the input is itself a view), and have a utility function to protect against it: https://github.com/username_0/dartssh/blob/master/lib/serializable.dart#L15

A broader fix could be considered.

I tested the patch described in the initial report for several cases successfully. I also signed the contributor release and attempted to upload a patch for review but it seems that I lack permissions to do so. Thanks!

username_1: Hi @username_0, thanks for reporting this issue and coming up with a fix! I can confirm that your analysis seems right and buffer.offsetInBytes should've been used.

I can make the change for you if you'd like? Alternative you can upload a pull request here on github, which will verify your CLA and automatically migrate the change to our Gerrit review tool. You can also upload the change changelist directly to https://dart-review.googlesource.com using the `git cl upload` command from depot\_tools as described in [CONTRIBUTING.md](https://github.com/dart-lang/sdk/blob/master/CONTRIBUTING.md). You should already have all the right permissions for that (you'll need to create an account first). If our procedure for contributing isn't working, I'll be happy to debug it, it'll be useful if you can say exactly what's going wrong. But again, I can make the change for you in the SDK if you prefer that?

You seem to have discovered a systemic issue. The `Uint8List.view` API doesn't lend itself well to nested use cases and it's easy to get it wrong. A quick search of our codebase flags lots of uses of the API without `offsetInBytes`. Many of them seem correct at a glance, but there's probably other mistakes like the one you encountered here. We'll probably want to review these cases and think about how to avoid this problem in the future since it seems like an easy mistake to make.

username_0: Hi @username_1, thanks for looking into this issue!

Sure! Thanks for taking over. (Oops I think I missed creating the account when I tried uploading. In the future I'll try sending a normal PR.)

I think adding a `Uint8List.viewUint8List()` would help. Or if allowing breaking changes, rename `Uint8List.view` to `Uint8List.viewBuffer` and make `Uint8List.view` accept `Uint8List` arguments instead of `ByteBuffer`. A breaking change would trigger/coerce a review of those tricky cases.

username_1: We have a [breaking change policy](https://github.com/dart-lang/sdk/blob/master/docs/process/breaking-changes.md). I discussed some potential API improvements with @lrhn that could be done in a compatible fashion.

username_1: I gave it a try to fix all such instances in the SDK libraries at <https://dart-review.googlesource.com/c/sdk/+/127165>. Thanks for reporting this issue!

username_1: Alright, I landed the fix. It won't be in the upcoming 2.7 release, but it will be in the next full release after that. It'll appear in a future dev release towards the next full release, or you can build Dart from git to get the fix now. Thanks for reporting the issue!

We drafted up a proposal for a TypedData.sublistView() API at <https://dart-review.googlesource.com/c/sdk/+/127321> to avoid this kind of problem in the future. |

nuxt/typescript | 978281066 | Title: @babel/plugin-transform-parameters not working correctly

Question:

username_0: **Describe the bug**

When using babel in conjunction with nuxt typescript I run into an issue using @nuxt/babel-preset-env, which contains @babel/plugin-transform-parameters. Default parameters should be transpiled for compatibility with e.g. IE11 but in the resulting bundles they are still present:

`... t.enc.Base64url={stringify:function(t,e=!0){ ... }} ...` <= `e=!0`

and

`... return o.join("")},parse:function(t,e=!0){ ... } ...` <= `e=!0`

**To Reproduce**

My config is:

nuxt.config.js

```

build: {

babel: {

presets: [

[

'@nuxt/babel-preset-app',

{

ignoreBrowserslistConfig: true,

},

],

],

},

},

```

tsconfig.json

```

{

"compilerOptions": {

"target": "ES2018",

"module": "ESNext",

"moduleResolution": "Node",

"lib": [

"ESNext",

"ESNext.AsyncIterable",

"DOM"

],

"esModuleInterop": true,

"allowJs": true,

"sourceMap": true,

"strict": true,

"noEmit": true,

"experimentalDecorators": true,

"baseUrl": ".",

"paths": {

"~/*": [

"./*"

],

"@/*": [

"./*"

]

},

"types": [

"@nuxt/types",

"@types/node",

"@nuxtjs/axios"

]

[Truncated]

"node_modules",

".nuxt",

"dist"

]

}

```

**Expected behavior**

Default parameters should be transpiled correctly

`... t.enc.Base64url={stringify:function(t,e){ ... }} ...`

**Additional context**

I could not track down the source of these functions yet, but I'm using:

- crypto-js

- js-cookie

- swiper

- vue-awesome-swiper

- vuex-module-decorators |

planningcenter/developers | 229212068 | Title: API Support for Church App Logins

Question:

username_0: ### Detailed Description of the Problem/Question

Can the PCO API be integrated with church apps, such as Subsplash or eChurch, in such a way that certain parts in the app require logging in using PCO user credentials?

Further, can this login activity be recorded in the Activity section in the user's profile in PCO?

##### Steps to reproduce:

##### API endpoint I'm using:

##### Programming language I'm using:

##### Authentication method I'm using:

e.g. OAuth 2, Personal Access Token, Browser Session (Cookie)

Answers:

username_1: Not yet, but we are working on that.

username_0: @username_1 Can you share which church app platform is going to be interoperable with it? What is the target timeline?

username_1: @username_0 We are currently rebuilding our login system. After that is done we will make it so any congregant can login (right now it is only admins and volunteers in Services). With those changes any church app should be able to make the Planning Center login the main login for your app.

Logging login activity is a great idea and I'll submit that as a feature request.

Status: Issue closed

username_2: Any update on this feature?

username_1: @username_2 this is still in the works, no scheduled release yet though. Sorry. |

exportarts/ngx-prismic | 443065794 | Title: Content Validation for `Paragraphs` should accept `Paragraphs` as input

Question:

username_0: The current validation functions `getDefaultParagraphs()` and `setDefaultParagraphs()` only accept `string | string[]` as fallback content.

It should be possible to provide `Paragraph | Paragraphs` to enable formatted/styled content.<issue_closed>

Status: Issue closed |

boonebgorges/buddypress-group-email-subscription | 293194803 | Title: Daily mailing not working

Question:

username_0: Hi, i install your plugin and it work perfect. I just want to set the mailing to daily because some groups have huge members. When a user post something i a stream a takes a lot of time to process. Unfortunately the daily function is not working. When i check the ?sum=1 there are no error and the queue is listing well. But whatever I try mails are not sending.

Is there a solution for this?

Thank you!

Answers:

username_1: How many members are in your large groups? You say "huge" - does this mean dozens, hundreds, thousands? Are you certain that *no* emails are going out? It's possible that the groups are large enough that PHP is running out of memory partway through, so that only some members are getting their digests.

I'd suggest checking outgoing mail logs to see if anyone is getting the digests, as well as PHP error logs to see if there are fatal errors, "Allowed memory ... exhausted" notices, or "Maximum execution time..." errors. This will help narrow down the issue.

username_0: Hi Boone, the biggest group has 180 members. When i post a message in a activity stream(with the all mail option enabled) it takes about 20+ seconds. The function working correct. Everybody in this group gets an e-mail. When i set the group settings to **daily** the mails are in the queue but never sending out. I check the PHP error log and its clear.

username_1: Did you check the outgoing mail log? When you say that the mails are in the queue, have you verified that they are *all* in the queue, for *all* members?

Are other scheduled tasks working properly on your WP installation? If you schedule a post to be published in the middle of the night, does it work?

username_0: Hi Boone, Thanks for your quick reply.

1. I use SMTP->Mailgun for sending outgoing mails.

2. Sorry but i check the ?sum=1 page. I thought that was a queue for sending mails. But there are no mails in the mail queue. I just plan a new blogpost and it's not working. I plan the posts for 19.47 and now i have the message. Planned failed.

Strange?!

username_1: Yes, ?sum=1 is a queue for pending digests. If you don't see any items when you visit `?sum=1`, it means that the digest routine has run.

I'm not sure I understand what you are saying about the new blogpost - perhaps your cron jobs were stuck for some reason, and when that post published, it also pushed out the queued digests?

username_0: OK. I cannot plan a blogpost! It doesn't automatically publish the post. I get the error Planned failed. If i publish the post by hand(manually), the blogpost is working but the /?sum=1 page is still the same (not empty but full of queue mail)

username_1: Got it. I guess this means that your WP cron system is not working. This could be caused by a handful of things. Check Google for some resources: https://encrypted.google.com/search?hl=en&ei=oBFyWqPMMdHQsAXghouoCA&q=troubleshooting+wordpress+cron&oq=troubleshooting+wordpress+cron&gs_l=psy-ab.3..33i22i29i30k1.1725.2779.0.2935.14.9.0.3.3.0.189.980.3j5.8.0....0...1c.1.64.psy-ab..3.11.1025...0j0i22i30k1.0.AHhQW2hPwxU

username_0: OK. I take a look! And let you know when i fixed the problem.

username_0: Hi Boone, i fixed the problem. I manually add a cronjob and everything is working now.

I have another question. Is it possible to set HTML mail?

username_0: I see this:

HTML EMAILS