repo_name | issue_id | text |
|---|---|---|

Monty15/Capstone | 284806249 | Title: Viewport meta tag

Question:

username_0: https://github.com/Monty15/Capstone/blob/master/index.html#L4-L9

Remember to include the `viewport` meta tag to properly control your website's viewport width.

```html

<meta name="viewport" content="width=device-width, initial-scale=1.0">

```

💡 This is particularly important for any mobile responsive website. |

vladbalmos/mitzasql | 741618854 | Title: Database names with dashes cause error 1064 (42000)

Question:

username_0: **Describe the bug**

When selecting a database with a dash "-" in the name the following error is displayed.

1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near '-test' at line 1

**To Reproduce**

Steps to reproduce the behavior:

1. Create a database with name "wordpress-test"

2. Open Mitzasql and navigate to this database

3. When trying to enter the db you can see the error.

4. See error

**Expected behavior**

Tables inside the database would be displayed.

**Screenshots**

https://i.imgur.com/LsCfzAQ.png

**Desktop (please complete the following information):**

- OS: Debian Buster

- Python version 3.7.3
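The 1064 error above is what MySQL reports when an identifier containing a dash is used unquoted. A minimal sketch of backtick-quoting such a name, assuming the database name is interpolated into a `USE` statement; the helper `quote_identifier` is hypothetical and not taken from mitzasql:

```python
def quote_identifier(name: str) -> str:
    """Wrap a MySQL identifier in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


# Hypothetical statement; the query mitzasql actually issues may differ.
statement = "USE " + quote_identifier("wordpress-test")
print(statement)
```

Quoting every identifier, rather than only those with unusual characters, keeps the code path uniform and also covers names that collide with reserved words.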

Answers:

username_1: Good find, thanks!

Status: Issue closed

username_0: Works like a charm. Thanks! |

utPLSQL/utPLSQL | 446432444 | Title: Add JSON object comparision

Question:

username_0: Since JSON is gaining popularity, and since Oracle 12.2 brought proper JSON support to the database, I thought it would be a good idea to implement a JSON comparison matcher.

I've done some initial work for cursor equality and will be adding more methods.

Status: Issue closed |

zalando/patroni | 778628705 | Title: Available implementations: """ + ', '.join(sorted(set(available_implementations)))) patroni.exceptions.PatroniFatalException: 'Can not find suitable configuration of distributed configuration store\nAvailable implementations: kubernetes, raft'

Question:

username_0: Hi, I get the same error:

2021-01-05 13:15:11,134 INFO: Failed to import patroni.dcs.consul

2021-01-05 13:15:11,139 INFO: Failed to import patroni.dcs.etcd

2021-01-05 13:15:11,142 INFO: Failed to import patroni.dcs.etcd3

2021-01-05 13:15:11,149 INFO: Failed to import patroni.dcs.exhibitor

2021-01-05 13:15:11,162 INFO: Failed to import patroni.dcs.zookeeper

Traceback (most recent call last):

File "patroni.py", line 6, in

main()

File "/home/kingbase/patroni/patroni/__init__.py", line 170, in main

return patroni_main()

File "/home/kingbase/patroni/patroni/__init__.py", line 138, in patroni_main

abstract_main(Patroni, schema)

File "/home/kingbase/patroni/patroni/daemon.py", line 98, in abstract_main

controller = cls(config)

File "/home/kingbase/patroni/patroni/__init__.py", line 29, in __init__

self.dcs = get_dcs(self.config)

File "/home/kingbase/patroni/patroni/dcs/__init__.py", line 107, in get_dcs

Available implementations: """ + ', '.join(sorted(set(available_implementations))))

patroni.exceptions.PatroniFatalException: 'Can not find suitable configuration of distributed configuration store\nAvailable implementations: kubernetes, raft'

My environment is as follows:

Python 3.6

pip3 20.3.3

pip3 list

Package Version

boto 2.49.0

certifi 2020.12.5

chardet 4.0.0

click 7.1.2

dnspython 2.0.0

flake8 3.8.4

idna 2.10

importlib-metadata 3.3.0

kazoo 2.8.0

mccabe 0.6.1

patroni 2.5

pip 20.3.3

prettytable 2.0.0

psutil 5.8.0

psycopg2 2.5.4

pycodestyle 2.6.0

pyflakes 2.2.0

pysyncobj 0.3.7

python-consul 1.1.0

python-dateutil 2.8.1

python-etcd 0.4.5

PyYAML 5.3.1

requests 2.25.1

setuptools 28.8.0

six 1.15.0

typing-extensions 3.7.4.3

urllib3 1.26.2

wcwidth 0.2.5

wheel 0.36.2

ydiff 1.2

[Truncated]

#timezone: 'PRC'

#lc_messages: 'C'

#lc_monetary: 'C'

#lc_numeric: 'C'

#lc_time: 'C'

#full_page_writes: on

#synchronous_commit: on

#wal_log_hints: on

#synchronous_standby_names: ''

#max_replication_slots: 10

create_replica_methods:

- basebackup

basebackup:

max-rate: '100M'

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

Answers:

username_1: **python-etcd** module in order to use Etcd as DCS

username_0: May I run it from source?

If I use Python 2.7, it works!

username_1: It doesn't matter how you run it, `python-etcd` module must be installed if you want to run Patroni with etcd.

username_0: python-etcd 0.4.5 is in the pip3 list. I am sure I installed it.

username_1: Magic doesn't exist.

username_0: python-etcd 0.4.5 is in the pip3 list. I am sure I installed it.

It is in the list:

Package Version

boto 2.49.0

certifi 2020.12.5

chardet 4.0.0

click 7.1.2

dnspython 2.0.0

flake8 3.8.4

idna 2.10

importlib-metadata 3.3.0

kazoo 2.8.0

mccabe 0.6.1

patroni 2.5

pip 20.3.3

prettytable 2.0.0

psutil 5.8.0

psycopg2 2.5.4

pycodestyle 2.6.0

pyflakes 2.2.0

pysyncobj 0.3.7

python-consul 1.1.0

python-dateutil 2.8.1

**### python-etcd 0.4.5**

PyYAML 5.3.1

requests 2.25.1

setuptools 28.8.0

six 1.15.0

typing-extensions 3.7.4.3

urllib3 1.26.2

wcwidth 0.2.5

wheel 0.36.2

ydiff 1.2

zipp 3.4.0

username_1: I totally don't understand what you are doing. The only purpose of release.sh is publishing Patroni on PyPI.

username_0: I need to change some code for our database, which is based on PostgreSQL, so I need to build the package and publish it to some users.

username_1: Perfect, then you should be qualified enough to figure out what is wrong with _your system_ and why python can't find/load certain modules.

The recommended way of installing Patroni is either from PyPI (pip install patroni[$EXTRAS]) or via PGDG packages (apt-get/yum install). We can't be responsible for something that you do on your own.
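One way to see why a DCS module fails to load is to import its dependency under the same interpreter that runs Patroni and print the underlying exception. A minimal sketch, assuming the failures are ordinary Python import errors; the helper name `check_imports` is illustrative, and `etcd`, `consul` and `kazoo` are the import names of the python-etcd, python-consul and kazoo packages:

```python
import importlib
import sys


def check_imports(module_names):
    """Return {module name: None if it imports, else the error text}."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except Exception as exc:  # a module can fail for reasons other than ImportError
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # The interpreter path shows which Python (and which site-packages) is in use.
    print("interpreter:", sys.executable)
    for name, error in check_imports(["etcd", "consul", "kazoo"]).items():
        print(name, "->", error or "ok")
```

If `pip3 list` shows a package but the import still fails here, the interpreter path printed first is the thing to compare against the one `pip3` belongs to.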

Status: Issue closed

|

chargebee/chargebee-dotnet | 422252320 | Title: Upgrade from 2.6.7 to 2.6.9 -> Timestamp errors

Question:

username_0: Hi,

I've updated from version 2.6.7 -> 2.6.9.

Everything compiled fine and everything seemed fine after a few tests.

Then I received a webhook from ChargeBee and everything went down...

When creating a new webhook event from an io stream, I get this error:

`OccurredAt = 'WebhookEvent.OccurredAt' threw an exception of type 'System.TypeInitializationException'`

This is how I create the webhookEvent object:

` Public Sub ProcessRequest(context As HttpContext) Implements IHttpHandler.ProcessRequest

Dim Response As String = Nothing

Dim ResponseCode As HttpStatusCode = HttpStatusCode.OK

Try

' Input

Using StreamReader As New IO.StreamReader(context.Request.InputStream)

InputData = StreamReader.ReadToEnd()

End Using

If String.IsNullOrWhiteSpace(InputData) Then Throw New ArgumentNullException("The data cannot be null.")

' Create the webhook event

WebhookEvent = New ChargeBee.Models.Event(InputData)

[...]

Catch ex as Exception

End Try

[...]

End Sub

`

I checked and the timestamp is fine in the IO stream (1552915864).

All the modules are up to date, everything else is working fine, I just can't make the webhook object creation work.

If I use 2.6.7, this works flawlessly, in fact it's been working flawlessly all the way back with version 1.x.x.

Please fix for the next release

Answers:

username_1: Stumbled upon same issue. Using v2.8.0 and it still fails for any date.

username_2: Still broken in 2.8.5

username_3: Still broken in 2.8.7

username_4: **Update**

I've developed a small fix for the conversion between Unix TimeStamp and UTC DateTime.

I couldn't find how to provide a solution to the maintainers, so I pushed the fix to my personal space. https://github.com/username_4/chargebee-dotnet/tree/bugfix/patch-timestamp-datetime-conversion

Hope it helps

username_4: **Update**

I've managed to fix this and now on my way to fork and provide the solution I've found to work.

username_4: ### **[SOLVED]**

**TL;DR;**

Be sure to call **ChargeBee.Api.ApiConfig.Configure** before accessing DateTime or Timestamp fields to avoid unreasonable Exceptions, even when unit testing or offline.

**Summary:**

Accessing DateTime and Timestamp fields fails because the timeout for an HttpClientAdapter can't be zero.

Adding one sentence to @username_3 [comment ](https://github.com/chargebee/chargebee-dotnet/issues/18#issuecomment-737283847) makes it work.

```

ChargeBee.Api.ApiConfig.Configure("fake-sitename", "fake-apikey");

var cbEvent = new Event(jsonProvidedAsString);

var termEnd = cbEvent.Content.Subscription.CurrentTermEnd;

```

ChargeBee Api needs to be initialised before accessing its model. Otherwise it will throw exception when creating **HttpClient** on **ApiUtil** constructor. The same class contains **ConvertFromTimestamp** and **ConverttoTimestamp** methods triggering unexpected errors

https://github.com/chargebee/chargebee-dotnet/blob/1d6934b645c31e9b3e17cc0fbbbf34d582334f0c/ChargeBee/Api/ApiUtil.cs#L21

ApiConfig.ConnectionTimeout is zero until `ChargeBee.Api.ApiConfig(<string>,<string>)` is run, being first assigned here:

https://github.com/chargebee/chargebee-dotnet/blob/1d6934b645c31e9b3e17cc0fbbbf34d582334f0c/ChargeBee/Api/ApiConfig.cs#L45

Thereby, if **ApiConfig.Configure** is never invoked it would always raise an exception because the timeout for an HttpClientAdapter can't be zero.

Thank you @username_3 for showing how to reproduce, and @username_0, @username_1, @username_2 for also reporting.

username_4: #27 addresses this.

username_5: This has been addressed in v2.10.0

Status: Issue closed

|

bazelbuild/bazel | 166594096 | Title: Easy to mistakenly install bazel without specifying "--user"

Question:

username_0: When installing Bazel for the first time, I made the fairly obvious mistake of omitting the "--user" flag. The error I got back suggested I should use "sudo," which I did. Since I don't seem to be the only person to make this mistake (cf the title of #962), perhaps if the installer is about to error out due to insufficient permissions, it would be better to say something like:

`If you are just installing this for yourself, use --user, but if you would like it to be accessible to everyone on the machine, ensure you have access to...`

Answers:

username_1: The error I get does not mention sudo, it says:

```

The Bazel installer must have write access to /usr/local/bin!

Usage: bazel-0.3.0-jdk7-installer-linux-x86_64.sh [options]

```

and then prints the usage string.

What version of the installer are you using?

username_0: My mistake, I simply interpreted the lack of permissions as "I need to use sudo." I then avoided looking a few lines down to see the help text that referenced "--user".

Again, it's a bit of an embarrassing mistake, I only filed the issue because I found someone else who made the same mistake. So I'm just suggesting a slight change to the message.

Status: Issue closed

|

xmake-io/xmake | 796521346 | Title: Rebuild local Qt-dependent package with project Qt version?

Question:

username_0: I have Qt installed on Ubuntu via apt, as well as an older version installed via their web installer. I also have a Qt-dependent package in a local xrepo repo.

We can set the version of Qt to build the project with:

```

xmake f --qt=~/Qt/5.11.3

```

But the package is unaware of the change so continues to use the system-installed Qt. This causes version mismatch errors.

Is there or could there be a solution to this? Some way of using the currently set `qmake` in the package?

Answers:

username_1: What build system does this package use? xmake? cmake or autoconf?

username_0: It just uses `qmake` and `make`, here's what it looks like at the moment:

```lua

package("qscintilla")

set_homepage("https://www.riverbankcomputing.com/software/qscintilla/intro")

set_description("QScintilla is a port to Qt of <NAME>'s Scintilla C++ editor control.")

set_urls("https://www.riverbankcomputing.com/static/Downloads/QScintilla/$(version)/QScintilla-$(version).zip")

add_versions("2.11.6", "ddd0945d90bbf9394e0d4a41cfeb5bd7c1a6b918c827aa90d4396ea3da0be9a9")

on_install("linux", function(package)

os.execv("qmake", { }, { curdir = "./Qt4Qt5"})

os.execv("make", { "-j8" }, { curdir = "./Qt4Qt5" })

os.cp("./Qt4Qt5/libqscintilla2_qt5.so.15.0.0", path.join(package:installdir("lib"), "libqscintilla2_qt5.so"))

os.cp("./Qt4Qt5/libqscintilla2_qt5.so.15.0.0", path.join(package:installdir("lib"), "libqscintilla2_qt5.so.15.0.0"))

os.cp("./Qt4Qt5/libqscintilla2_qt5.so.15.0.0", path.join(package:installdir("lib"), "libqscintilla2_qt5.so.15"))

os.cp("./Qt4Qt5/Qsci/*.h", package:installdir("include/Qsci"))

end)

```

username_1: ```lua

on_install("linux", function(package)

local qtdir = get_config("qt")

end)

```

username_0: Thanks, that resolves the correct path, but `os.execv` doesn't like it:

```lua

local qtdir = get_config("qt")

os.execv(qtdir .. "/gcc_64/bin/qmake", { }, { curdir = "./Qt4Qt5"})

```

`xmake f -cvD --qt=~/Qt/5.11.3` error:

```bash

error: @programdir/core/sandbox/modules/os.lua:387: execv(~/Qt/5.11.3/gcc_64/bin/qmake ) failed(255)

stack traceback:

[C]: in function 'error'

[@programdir/core/base/os.lua:787]: in function 'raise'

[@programdir/core/sandbox/modules/os.lua:387]: in function 'execv'

[./xmake-pkgs/packages/q/qscintilla/xmake.lua:12]: in function 'script'

[...gramdir/actions/require/impl/actions/../utils/filter.lua:125]: in function 'call'

[@programdir/actions/require/impl/actions/install.lua:168]:

[C]: in function 'trycall'

[@programdir/core/sandbox/modules/try.lua:121]: in function 'try'

[@programdir/actions/require/impl/actions/install.lua:127]: in function 'action_install'

[@programdir/actions/require/impl/package.lua:831]: in function 'jobfunc'

[@programdir/modules/private/async/runjobs.lua:193]:

[C]: in function 'trycall'

[@programdir/core/sandbox/modules/try.lua:121]: in function 'try'

[@programdir/modules/private/async/runjobs.lua:186]: in function 'cotask'

[@programdir/core/base/scheduler.lua:317]:

=> install qscintilla 2.11.6 .. failed

error: @programdir/modules/private/async/runjobs.lua:217: @programdir/actions/require/impl/actions/install.lua:256: install failed!

stack traceback:

[C]: in function 'error'

[@programdir/core/base/os.lua:787]: in function 'raise'

[@programdir/actions/require/impl/actions/install.lua:256]: in function 'catch'

[@programdir/core/sandbox/modules/try.lua:127]: in function 'try'

[@programdir/actions/require/impl/actions/install.lua:127]: in function 'action_install'

[@programdir/actions/require/impl/package.lua:831]: in function 'jobfunc'

[@programdir/modules/private/async/runjobs.lua:193]:

[C]: in function 'trycall'

[@programdir/core/sandbox/modules/try.lua:121]: in function 'try'

[@programdir/modules/private/async/runjobs.lua:186]: in function 'cotask'

[@programdir/core/base/scheduler.lua:317]:

stack traceback:

[C]: in function 'error'

@programdir/core/base/os.lua:787: in function 'raise'

@programdir/modules/private/async/runjobs.lua:217: in function 'catch'

@programdir/core/sandbox/modules/try.lua:127: in function 'try'

@programdir/modules/private/async/runjobs.lua:186: in function 'cotask'

@programdir/core/base/scheduler.lua:317: in function <@programdir/core/base/scheduler.lua:315>

```

username_1: For a path like `~/xx`, you need to use path.translate or path.absolute on the qmake path.
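The failure is consistent with `~` being expanded by the shell rather than by the operating system: a program launched directly receives the path literally. The same behaviour can be illustrated outside xmake; the Python sketch below is only an analogy, and in an xmake script the fix remains `path.translate` or `path.absolute`:

```python
import os

raw = "~/Qt/5.11.3/gcc_64/bin/qmake"
expanded = os.path.expanduser(raw)

# Without a shell, "~" is just an ordinary character in a relative path.
print(os.path.isabs(raw))
# After expansion the path starts at the user's home directory.
print(expanded)
```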

username_0: Yay, that's it - thanks!

Status: Issue closed

|

nornir-automation/nornir | 336520004 | Title: Closing NAPALM connections automatically

Question:

username_0: When using NAPALM plugins connections stay open and can cause scripts to hang while waiting for the connections to timeout.

Forcing the connections to close using a task helps with this.

```python

task.host.connections["napalm"].close()

```

Maybe this could be done automatically? It'll require to find a way to know which connections are not useful anymore.

Answers:

username_1: How would we know that you didn't want to use the connection any more (i.e. in a subsequent task)?

The process is probably to have the code writer to explicitly close the connection in some way.

username_0: Yep that's complicated. I opened the issue because @username_2 wanted to track it.

I don't see any simple way to do this. Maybe proposing a task closing all NAPALM connections could be an answer to this.

username_2: I thought we could look into creating a “close” task so it’s easier for the user to explicitly close the connections or maybe do it automatically via the garbage collector by implementing some code in the __del__ magic method.

username_3: I think the close task, or perhaps something like a context manager for Nornir could make sense. There might be scenarios where users don't want the connections to be terminated. (I've seen Huawei switches where a local script running on them stops running if the ssh connection dies).

We could have some task which just iterates all of the hosts' connection dictionaries and calls a disconnect function. The main issue is perhaps that all of the connection plugins be consistent and support the same close method.
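The two ideas in this thread, a task that closes every connection and a context manager around a run, can be sketched together. This is a minimal illustration under stated assumptions, not Nornir's API: `FakeConnection` and `FakeHost` are stand-ins, and the only thing assumed of a real connection plugin is a `close()` method.

```python
from contextlib import contextmanager


class FakeConnection:
    """Stand-in for a connection plugin; only close() is assumed."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


class FakeHost:
    """Stand-in for a host holding a dict of named connections."""

    def __init__(self, name, connections):
        self.name = name
        self.connections = connections


def close_all_connections(hosts):
    """Call close() on every connection of every host and forget it."""
    for host in hosts:
        for conn_name in list(host.connections):
            host.connections.pop(conn_name).close()


@contextmanager
def managed_hosts(hosts):
    """Yield the hosts and close their connections on exit, even on error."""
    try:
        yield hosts
    finally:
        close_all_connections(hosts)


conn = FakeConnection()
hosts = [FakeHost("sw1", {"napalm": conn})]
with managed_hosts(hosts):
    pass  # tasks would run here
print(conn.closed, hosts[0].connections)
```

Keeping the close step explicit, or scoped to a `with` block, leaves the decision with the script author, which matters for the cases mentioned above where a connection must stay open.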

username_2: For reference, some work that will enable this is being done in #189

username_4: @username_2

I think this is fixed with #195 and could be closed? |

freedomofpress/securedrop-ux | 408375462 | Title: Online/Offline UI Differences

Question:

username_0: ### Problem

When a journalist views the Client in offline mode, what does that look like?

### Considerations

- How will parts of the UI requiring network connectivity be shown to communicate their non-functionality in Offline Mode?

- Will something outright go away, or be greyed-out?

- Where might there be suggestions or other affordances made to nudge a user to Sign In?

- When accessing the client for the first time in Offline mode, what might that experience look like?

### Acceptance Criteria

- Clickable wireframe demonstrating interactivity

- All state changes clearly itemized/outlined

- Spec'd wireframe, or multiple comments here?

- Get on the needs testing punchlist

---

This is a sub-task within #18 and #31 and #17

Answers:

username_0: First Rev (submitted on Gitter, 07 Feb): CLICK ON TOP BAR to toggle back and forth between offline and online. Delete functionality for a Source Account also goes away, as does the drop-down menu on the Messages pane. https://invis.io/X4QEP5Y9EAT#/345671069_0_New_-_DR_On-_OFFLINE

username_0: ### Feedback (cut-and-pasted from Gitter):

<NAME>

@eloquence

Feb 07 17:12

I'm a fan! I like avoiding the standard "grayed out UI controls" pattern which can be very frustrating for users, and this also takes up less space

<NAME> Alter

@username_0

Feb 07 17:15

Oh—fwiw, clarification with the above—in the wireframe, the paper airplane dealie is the "Send" button. I believe the existing Client has a proper button with the word "Send" on it

@eloquence Yay! Ya... the "omg, why won't this friggin' work?!" frustration quite sux.

<NAME>

@username_1

Feb 07 17:20

hey @username_0 this looks awesome

<NAME>

@username_0

Feb 07 17:24

Coolio!

<NAME>

@username_1

Feb 07 17:24

trying to find the image of what will be on the ... menu

<NAME>

@username_0

Feb 07 17:33

@username_1 You mean the content in that menu?

I think @eloquence will eventually do that menu as its own Issue/Story

"Export All Messages & Files" and "Delete Source" are the only two items therein, for now.

^ ...or probz "Export Source." We never did much testing on that menu.

username_0: (crap, sorry that @'d everyone!)

username_1: @username_0 - Hey just saw this and noticed that the conversation in gitter was left open-ended. I noticed while toggling back and forth between offline and online mode that you get a `. . .` options menu next to the date when online, so I was wondering if you had created any wireframes for this menu. I assume it'll include a way to logout? Is there anything else?

username_0: @username_1 Nope, logging-out is handled from the user avatar having a dropdown-ish type thing. The dot-dot-dot menu you cite currently only has "Export All Submissions" and "Delete Account" on it, as of now. Older wireframes should show more functionality on it; I also don't really know what users may want in it, so could easily pack it with a dozen account-level options like "Export all files, delete all local files" etc... but wanna keep it to just those two account-level things, for now.

Anything pertaining to the Journalist's authentication or connectivity happens in the top bar.

username_0: I'd forgotten this ticket still existed!

**Explorations**, here: [» Invision Mox (semi-interactive) «](https://invis.io/BSRX8TUN8K3)

**Final direction**, in Zeplin (ignore "Empty" pane content): [Public Screen](https://scene.zeplin.io/project/5c807ea562f734bd2756b243/screen/5cd35ec0df6a8967aedc0d95)

Status: Issue closed

username_0: Closing, cuz this is done? @eloquence feel free to reopen if you disagree. Wishing this had gone into a review, but there is only so much team bandwidth and time... :) |

DoctorVanGogh/ExtendedStorage | 247234384 | Title: Add toggle for user/real storage settings

Question:

username_0: implemented in [branch](/username_0/ExtendedStorage/tree/feature/settings-debug)

Status: Issue closed

Answers:

username_0: implemented in [branch](/username_0/ExtendedStorage/tree/feature/settings-debug)

Status: Issue closed

|

moby/moby | 758436900 | Title: IPv6 options refinement: --ipv6 behaving better out of the box (concerns both options and default behavior regarding IPv6 in custom networks)

Question:

username_0: I am just an outsider and not really a code contributor to docker/moby myself, so I just hope to get the discussion going. Basically I'm saying, just take my suggestions as a vague input but I shouldn't be the person to make any of the final calls, in the end I'm just an end-user as many others. Hopefully, this ticket will help the right people to come to the right conclusions!

Answers:

username_1: I have two related suggestions:

- In addition to setting `--fixed-cidr-ipv6` to a private subnet when using ipv6 NAT, I suspect that `default-address-pools` should default to having a private subnet available too (maybe even *without* using ipv6 NAT), so you can create a custom network with `--ipv6` and have it assigned an address without having to manually manage this, just like with ipv4.

- It might be useful if some option would be added (maybe even enabled by default) to get ipv6 enabled on user-created networks by default. It seems that you need to manually specify `--ipv6` to `network create` right now, it would be good if this could be made the default (distribution default or system default).

username_0: I like those ideas! In particular, I agree that IPv6 should be enabled by default on new networks when it's enabled for the daemon, with a new option to override that. I think the point of finally having this pretty "auto-magical" IPv6 NAT that works almost like IPv4 should be that everyone needs fewer manual tweaks to get it to "just work" on existing setups, including those with custom networks, so that seems like a really good idea to me.

username_0: Any updates? I'm a bit concerned this might take another five years to get looked at, at which point people will probably be stung badly if these options are changed. Now seems like the best time if any to still integrate this.

username_2: Just to add to the quorum. Having `--ip6tables` as a non-experimental flag would certainly help me sell the feature to my company. We are currently using `docker-ipv6nat` https://github.com/robbertkl/docker-ipv6nat/issues/65.

To add something to this discussion (I don't know if this should be a separate ticket or not), docker-compose default created network should also be IPv6 enabled when `--ipv6` and `--ip6tables` are set. As it stands the difference of behaviour between docker and docker-compose is not very intuitive. A docker container using the default network is IPv6 enabled, but a container started by docker-compose isn't.

username_3: I was recently wondering about this, myself.

I'd like to do IPv6 NAT on my Docker swarms, without hacking [robertkl/docker-ipv6nat to work](https://github.com/robbertkl/docker-ipv6nat/issues/12) -- if that hack still works at all |

juju/python-libjuju | 1118809392 | Title: TypeError: unsupported format string passed to NoneType.__format__

Question:

username_0: In the function "_print_status_apps" in juju/status.py, the piece of code below should check whether app.workload_version or app.charm_channel is None.

https://github.com/juju/python-libjuju/blob/cbeae7063f8a19dc1e1ed3638f8025735f6d9bc0/juju/status.py#L116

If a charm is deployed locally rather than from the charm store, app.workload_version and app.charm_channel may be None.

Traceback (most recent call last):

File "./lib/test_lib_juju.py", line 26, in <module>

main()

File "./lib/test_lib_juju.py", line 7, in main

juju_status = juju_get_status("bcache", keep_relations_info=True, jsfy=False, format_status=True)

File "~lib/lib_juju.py", line 57, in juju_get_status

juju_status = loop.run(get_juju_status(model_name, keep_relations_info, jsfy, format_status))

File "~venv/lib/python3.8/site-packages/juju/jasyncio.py", line 118, in run

raise task.exception()

File "~lib/lib_juju.py", line 108, in get_juju_status

juju_status = await formatted_status(model)

File "~venv/lib/python3.8/site-packages/juju/status.py", line 61, in formatted_status

result_str += _print_status_apps(result_status)

File "~venv/lib/python3.8/site-packages/juju/status.py", line 116, in _print_status_apps

result_str += limits.format(

TypeError: unsupported format string passed to NoneType.__format__

Task was destroyed but it is pending!
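The error can be reproduced without Juju: formatting `None` with a non-empty format spec raises this `TypeError`. A minimal sketch, assuming a row template similar in spirit to the one used in `_print_status_apps`; the template `row` and the helper `safe` are illustrative and are not the library's code:

```python
def safe(value):
    """Map None to an empty string so format specs such as '<12' still apply."""
    return "" if value is None else value


row = "{:<12} {:<10} {:<8}"

try:
    row.format("bcache", None, None)
except TypeError as exc:
    print("fails:", exc)

# With the guard, missing fields render as blank columns.
print(row.format("bcache", safe(None), safe(None)))
```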

Answers:

username_1: Changes from #622 should fix this. Please reopen if needed.

Status: Issue closed

|

CosmicMind/Material | 144433209 | Title: Depth Does Not Change on Pulse

Question:

username_0: When a view pulses (and scales), the shadow remains the same and does not present a change of depth. Scale I presume is there to simulate the view getting closer to the fingertip.

Status: Issue closed

Answers:

username_1: The shadow slightly spreads, due to the scale effect. I believe you could add an animation by subclassing the pulse view and adding animations to the touch handlers that spreads the shadow, and contracts it. I am not going to make this a priority right now, but we are planning some nice additions with 3D touch and such, and that is when this issue will be considered at a high priority.

Feel free to continue the dialog on this, though for cleanup reasons, I will close the issue. |

PistonDevelopers/conrod | 35726002 | Title: Widget Ideas

Question:

username_0: Here is a space to collect Widget Type ideas.

This list is taken directly from the [ofxUI](https://github.com/rezaali/ofxUI) readme as a basis for ideas. Any more ideas are welcome!

- Buttons (push, state, toggle, image, label)

- Button Matrices

- Dropdown Menus

- Labels

- Sliders (rotary, range, vertical, horizontal)

- Number Dials (aka Spinners)

- 2D Pads

- Text Input Areas

- Image Sliders

- Image Buttons

- Image Color Sampler

- Value Plotters

- Moving Graphs

- Waveform & Spectrum Graphs

- Radio Toggles

- Text Areas

- Sortable List

Status: Issue closed

Answers:

username_0: Closing in favour of posting unique issues for drafting/discussing/requesting each widget. |

google/yapf | 1084716435 | Title: [Question]yapfignore not working in pyproject.toml

Question:

username_0: Thank you for reading this issue.

I'm currently working on a Django project and I want to use `yapf` for formatting.

However, when I try to use `pyproject.toml` for excluding files, it does not work.

```toml

[tool.yapfignore]

ignore_patterns = [

'**/migrations]*.py',

'manage.py',

'venv/*',

]

```

On the other hand, what makes it interesting is that the command line behaves differently and successfully ignores the files I want.

```sh

yapf --recursive --exclude '**/migrations/*.py' --exclude 'manage.py' --exclude 'venv/*' .

```

I still cannot figure out what would be the problem with this discrepancy.

At the moment, part of my dependency list is as follows:

```txt

pylint==2.12.2

pylint-django==2.4.4

pylint-plugin-utils==0.6

yapf==0.31.0

```

and my current Python version is `3.9.9`.

If there is any problem with the way I make this issue post, please don't hesitate to point it out!

Thank you in advance!

Answers:

username_0: It still doesn't work, so I had a workaround, `.yapfignore`.

```

**/migrations/*.py

manage.py

venv/*

```

I have to admit that the project would be a bit more complex, but I think `pyproject.toml` is currently not working for excluding files and directories. Any suggestions?

username_1: Hi @username_0

Related to https://github.com/google/yapf/issues/955 , I think yapf version is the problem.

The latest version of yapf (0.32.0) was released on 26 Dec (ref: https://pypi.org/project/yapf/#history).

On my local PC, the yapf ignore feature worked correctly. Please check.

Status: Issue closed

username_0: @username_1

Thank you for your reply! I appreciate your kindness.

Oh, I have missed the issue https://github.com/google/yapf/issues/955

I have checked and now it worked.

Now this issue is resolved so I close it. |

JuliaTime/TimeZones.jl | 733844499 | Title: Parsing a datetime string including "GMT" stopped working in v1.5.0

Question:

username_0: `TimeZones.ZonedDateTime` used to work prior to release 1.5.0 when GMT was included. An error referring to legacy timezones is now returned. However, I'm not aware that such legacy timezones can be specified when parsing a string.

```julia

julia> dt_string_1 = "Sat, 31 Oct 2020 11:31:54 GMT"

"Sat, 31 Oct 2020 11:31:54 GMT"

julia> TimeZones.ZonedDateTime(dt_string_1, "e, d u Y H:M:S Z")

ERROR: ArgumentError: Unable to parse string "Sat, 31 Oct 2020 11:31:54 GMT" using format dateformat"e, d u Y H:M:S Z". The time zone "GMT" is of class `TimeZones.Class(:LEGACY)` which is currently not allowed by the mask: `TimeZones.Class(:FIXED) | TimeZones.Class(:STANDARD)`

Stacktrace:

[1] TimeZone(::SubString{String}, ::TimeZones.Class) at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\types\timezone.jl:65

[2] TimeZone at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\types\timezone.jl:46 [inlined]

[3] ZonedDateTime(::Int64, ::Int64, ::Int64, ::Int64, ::Int64, ::Int64, ::Int64, ::SubString{String}) at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\types\zoneddatetime.jl:132

[4] parse at C:\Users\jerem\AppData\Local\Programs\Julia-1.5.2\share\julia\stdlib\v1.5\Dates\src\parse.jl:285 [inlined]

[5] ZonedDateTime(::String, ::DateFormat{Symbol("e, d u Y H:M:S Z"),Tuple{Dates.DatePart{'e'},Dates.Delim{String,2},Dates.DatePart{'d'},Dates.Delim{Char,1},Dates.DatePart{'u'},Dates.Delim{Char,1},Dates.DatePart{'Y'},Dates.Delim{Char,1},Dates.DatePart{'H'},Dates.Delim{Char,1},Dates.DatePart{'M'},Dates.Delim{Char,1},Dates.DatePart{'S'},Dates.Delim{Char,1},Dates.DatePart{'Z'}}}) at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\parse.jl:88

[6] ZonedDateTime(::String, ::String; locale::String) at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\parse.jl:101

[7] ZonedDateTime(::String, ::String) at C:\Users\jerem\.julia\packages\TimeZones\fr1IP\src\parse.jl:101

[8] top-level scope at REPL[96]:1

```

Base function works when specifying the presence of Z, although the notion of timezone is ignored. This seems a viable approach for my use case. I'm just wondering whether it wouldn't be more convenient to keep supporting the parsing of GMT in `ZonedDateTime`?

```

julia> Dates.DateTime(dt_string_1, "e, d u Y H:M:S Z")

2020-10-31T11:31:54

```

Status: Issue closed |

Tunous/Dawn | 654393250 | Title: Highlighted text permanently shown

Question:

username_0: After long pressing and dragging to select text, a magnification bubble pops up and remains there until I restart the app.

Answers:

username_1: This might be ROM-related, too. Can you post the exact ROM version you are on?

username_0: My ROM is stable MIUI 12.

MIUI Global 12.0.1 Stable 172.16.58.3(QFKEUXM) |

tensorflow/tensorflow | 387369750 | Title: [ppc64le] //tensorflow/lite/experimental/micro unit test fail.

Question:

username_0: You can assign this issue to me, as I'm about ready to submit a PR to fix this.

**System information**

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): N/A

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux ppc64le Ubuntu 16.04

- Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device: N/A

- TensorFlow installed from (source or binary): source

- TensorFlow version (use command below): commit <PASSWORD> from Dec 4th, 2018

- Python version: 2.7

- Bazel version (if compiling from source): 0.15.0

- GCC/Compiler version (if compiling from source): gcc version 5.4.0 20160609 (Ubuntu/IBM 5.4.0-6ubuntu1~16.04.10)

- CUDA/cuDNN version: N/A

- GPU model and memory: N/A

**Describe the current behavior**

All tensorflow/lite/experimental/micro unit tests fail

See: https://powerci.osuosl.org/job/TensorFlow_PPC64LE_CPU_Build_and_Test/31/testReport/

**Describe the expected behavior**

All unit tests pass

**Code to reproduce the issue**

Unit test invoked by Jenkins:

`./tensorflow/tools/ci_build/ci_build.sh cpu --dockerfile tensorflow/tools/ci_build/Dockerfile.cpu.ppc64le ./tensorflow/tools/ci_build/linux/ppc64le/cpu/run_py2.sh`

`./tensorflow/tools/ci_build/linux/ppc64le/cpu/run_py2.sh` can be modified to just run `//tensorflow/lite/experimental/micro/...`

Also the getting started section here recreates two of the issues:

https://github.com/tensorflow/tensorflow/tree/master/tensorflow/lite/experimental/micro

**Other info / logs**

Running the getting started example: make -f tensorflow/lite/experimental/micro/tools/make/Makefile test_micro_speech

The warning below is flagged, and when the test runs it segfaults. As I understand it, the warning is raised because you're not allowed to pass a string constant in this case. (It works on x86, however.)

```

g++ -O3 -DNDEBUG --std=c++11 -g -DTF_LITE_STATIC_MEMORY -I. -Itensorflow/lite/experimental/micro/tools/make/../../../../../ -Itensorflow/lite/experimental/micro/tools/make/../../../../../../ -Itensorflow/lite/experimental/micro/tools/make/downloads/ -Itensorflow/lite/experimental/micro/tools/make/downloads/gemmlowp -Itensorflow/lite/experimental/micro/tools/make/downloads/flatbuffers/include -I -I/usr/local/include -c tensorflow/lite/experimental/micro/examples/micro_speech/micro_speech_test.cc -o tensorflow/lite/experimental/micro/tools/make/gen/linux_ppc64le/obj/tensorflow/lite/experimental/micro/examples/micro_speech/micro_speech_test.o

In file included from tensorflow/lite/experimental/micro/examples/micro_speech/micro_speech_test.cc:22:0:

tensorflow/lite/experimental/micro/examples/micro_speech/micro_speech_test.cc: In function 'int main(int, char**)':

./tensorflow/lite/experimental/micro/testing/micro_test.h:95:51: warning: ISO C++ forbids converting a string constant to 'va_list {aka char*}' [-Wwrite-strings]

micro_test::reporter->Report("Testing %s", #name); \

^

tensorflow/lite/experimental/micro/examples/micro_speech/micro_speech_test.cc:28:1: note: in expansion of macro 'TF_LITE_MICRO_TEST'

TF_LITE_MICRO_TEST(TestInvoke) {

^~~~~~~~~~~~~~~~~~

```

Fixing it and running the example: make -f tensorflow/lite/experimental/micro/tools/make/Makefile test identifies the same problem in another place:

```

g++ -O3 -DNDEBUG --std=c++11 -g -DTF_LITE_STATIC_MEMORY -I. -Itensorflow/lite/experimental/micro/tools/make/../../../../../ -Itensorflow/lite/experimental/micro/tools/make/../../../../../../ -Itensorflow/lite/experimental/micro/tools/make/downloads/ -Itensorflow/lite/experimental/micro/tools/make/downloads/gemmlowp -Itensorflow/lite/experimental/micro/tools/make/downloads/flatbuffers/include -I -I/usr/local/include -c tensorflow/lite/experimental/micro/micro_error_reporter_test.cc -o tensorflow/lite/experimental/micro/tools/make/gen/linux_ppc64le/obj/tensorflow/lite/experimental/micro/micro_error_reporter_test.o

tensorflow/lite/experimental/micro/micro_error_reporter_test.cc: In function 'int main(int, char**)':

tensorflow/lite/experimental/micro/micro_error_reporter_test.cc:24:56: warning: ISO C++ forbids converting a string constant to 'va_list {aka char*}' [-Wwrite-strings]

error_reporter->Report("~~~%s~~~", "ALL TESTS PASSED");

^

[Truncated]

LD_LIBRARY_PATH=/usr/local/cuda/extras/CUPTI/lib64:/usr/local/nvidia/lib:/usr/local/nvidia/lib64 \

OMP_NUM_THREADS=1 \

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin \

PWD=/proc/self/cwd \

PYTHON_BIN_PATH=/usr/bin/python2 \

PYTHON_LIB_PATH=/usr/local/lib/python2.7/dist-packages \

TF_CUDA_CLANG=0 \

TF_CUDA_COMPUTE_CAPABILITIES=3.7 \

TF_CUDA_VERSION=9.2 \

TF_CUDNN_VERSION=7 \

TF_NCCL_VERSION='' \

TF_NEED_CUDA=1 \

TF_NEED_OPENCL_SYCL=0 \

TF_NEED_ROCM=0 \

external/local_config_cuda/crosstool/clang/bin/crosstool_wrapper_driver_is_not_gcc -o bazel-out/ppc-opt/bin/tensorflow/lite/experimental/micro/examples/micro_speech/feature_provider_test_binary -Wl,-no-as-needed -pie -Wl,-z,relro,-z,now '-Wl,--build-id=md5' '-Wl,--hash-style=gnu' -no-canonical-prefixes -fno-canonical-system-headers -B/usr/bin -Wl,--gc-sections -Wl,@bazel-out/ppc-opt/bin/tensorflow/lite/experimental/micro/examples/micro_speech/feature_provider_test_binary-2.params)

/usr/bin/ld: bazel-out/ppc-opt/bin/tensorflow/lite/experimental/micro/examples/micro_speech/libpreprocessor_reference.a(preprocessor.o): undefined reference to symbol 'cos@@GLIBC_2.17'

//lib/powerpc64le-linux-gnu/libm.so.6: error adding symbols: DSO missing from command line

```

Passing the link option "-lm" resolves this error. The makefile already does this.

Answers:

username_1: Thanks for your PR on this (and sorry for the slow response).

username_0: closing old issues I opened. This issue is resolved.

Status: Issue closed

|

jenkinsci/office-365-connector-plugin | 637152225 | Title: "View Build" option is broken in Microsoft Teams via Jenkins

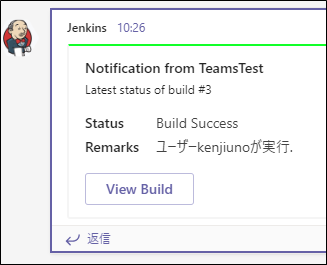

Question:

username_0: Hi Team,

Could you please help in resolving the following issue?

In our Jenkins pipeline, we have added a Notification Webhook for the "Office 365 Connector". When a build is notified to Microsoft Teams, there is a "View Build" button which has the URL below:

http://<JENKINS_URL>/jenkins/job/<JOB_NAME>/job/<JOB>/50/display/redirect which is redirecting to: http://<JENKINS_URL>/jenkins/blue/organizations/jenkins/<JOB_NAME>%2F<JOB>/detail/<JOB>/50/ and throwing 400 error

but the actual URL has "pipeline" at the end.

http://<JENKINS_URL>/jenkins/blue/organizations/jenkins/<JOB_NAME>%2F<JOB>/detail/<JOB>/50/pipeline

How can we resolve this? Where can this URL be modified? Please help.

Thanks and Regards,

<NAME>

Answers:

username_1: I don't use Blue Ocean, so the plugin may not work with it. If you provide all the details so that I can reproduce the problem, maybe I will be able to help or fix it.

username_0: Thanks for the reply. This issue is resolved. I disabled one plugin, "Display URL for Blue Ocean", and the result is now fine. I think that plugin is causing some confusion with the Teams URL.

Thanks once again. We can close this

username_2: Ideally, that link should be customizable, with the default being what it is now.

We're currently getting hit by this too.

username_1: Not sure what you mean.

username_3: We experience this issue as well: when clicking 'View Build' the url 'https://teams.microsoft.com/null' is opened instead of the Build detail page within jenkins.

username_4: Problem noticed yesterday. Yesterday system status:

```

The connector configuration was old (outlook in URL).

jdk: 8

Windows

```

I thought we should update the JDK version and connector. So the current status:

```

Jenkins: 2.277.4

Plugin: 4.15.0

jdk: OpenJDK 11.0.2

Windows

All other plugins are updated.

The connector URL looks like: https://[name].webhook.office.com/webhookb2/[some GUID]/JenkinsCI/[some GUID]

```

But the problem still exists.

username_5: We have a similar issue with the "View Pull Request" Button. It points to "_https://teams.microsoft.com/null_".

Can I set the URL manually, or is this a known bug?

Thanks!

username_6: I have manually created URLs that show the same behavior. Teams on my mobile works, but not on desktop. Also, not everybody experienced the issue at once. I'd assume it is a Teams issue.

username_5: Thanks for your reply, that's interesting. Indeed it also works for my mobile version of Teams...

username_1: Have you tried regenerating the hook URL? I did, and some of the problems were solved.

username_7: We have the same problem too. I've regenerated the hook URL, but the same problem still occurs.

username_8: Yes. Multiple times. Issue persists. The webhook seems to be fine since, as others have mentioned, if you click the button on the mobile Teams app it seems to work fine.

username_9: Just jumping in the conversation. Same problem here (MSTeams Connector)

I also have a HTTP Post Request that uses a custom Payload and had to use markdown with a static URL and a variable since the default option didn't work either (custom Connector)

username_1: Then maybe you regenerated a different hook? For the regenerated URL the problems are gone. For the old one I see the problem on both desktop and mobile.

username_1: OK, I found one more interesting thing: when updating connection configuration (without updating url) desktop Teams is fixed, mobile on team card is fixed but activity card on mobile still displays that message.

username_9: No URL was changed, I've even tried to make a new one with the same configuration, just to see if there was any mistake made in the past, but no luck. Same result.

I actually don't have any error on the webhook configuration.

username_10: Same problem here, but no problem on mobile.

username_1: Guys, have you followed these instructions: https://docs.microsoft.com/pl-pl/microsoftteams/office-365-custom-connectors ?

This provides guideline that solves problem for me

username_11: Yeah, same problem here too, but mobile works.

-> https://teams.microsoft.com/null

username_12: I'm wondering if this problem is caused by connector plugin, or not.

I have tested some URL patterns against section "Jenkins URL" in Jenkins configuration page.

Results:

URL | Reachable

:--|:-:

`http://192.168.2.181:8484/` | No

`http://dd7.local:8484/` | Yes

`http://dd7:8484/` | No

## Pattern 1: `http://192.168.2.181:8484/`

build something.

`View Build` leads to `https://teams.microsoft.com/null`

## Pattern 2: `http://dd7.local:8484/`

build something.

`View Build` leads to `http://dd7.local:8484/job/TeamsTest/2/display/redirect`

## Pattern 3: `http://dd7:8484/`

build something.

`View Build` leads to `https://teams.microsoft.com/null`

username_11: I think I found a way to solve this problem.

If it's not a secure website (e.g. https), it seems to go to a teams.microsoft.com/null page.

Therefore, I think IP should be set up as an external network and apply SSL.

username_8: I have SSL setup, https://<IP Address>:8443

How do I setup the IP as an external network? |

getsentry/sentry-javascript | 403459096 | Title: Morgan and @sentry/node with the Express Handler not working well together

Question:

username_0: <!-- Requirements: please go through this checklist before opening a new issue -->

- [x] Review the documentation: https://docs.sentry.io/

- [x] Search for existing issues: https://github.com/getsentry/sentry-javascript/issues

- [x] Use the latest release: https://github.com/getsentry/sentry-javascript/releases

## Package + Version

- [x] `@sentry/node`

### Version:

```

4.5.3

```

## Description

When using the morgan logging middleware alongside `app.use(Sentry.Handlers.errorHandler() as express.ErrorRequestHandler)`, whenever an async controller throws an Error, no further requests can be made, and the following stack trace is shown:

```

GET /api/grin/stats 500 54.544 ms - -

Error: connect ECONNREFUSED 172.16.17.32:8080

at Object._errnoException (util.js:1022:11)

at _exceptionWithHostPort (util.js:1044:20)

at TCPConnectWrap.afterConnect [as oncomplete] (net.js:1198:14)

TypeError: this.app.get is not a function

at IncomingMessage.ip (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/request.js:350:24)

at getip (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/morgan/index.js:466:14)

at logger (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/morgan/index.js:107:26)

at Layer.handle [as handle_request] (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/layer.js:95:5)

at trim_prefix (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/index.js:317:13)

at /Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/index.js:284:7

at Function.process_params (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/index.js:335:12)

at next (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/index.js:275:10)

at jsonParser (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/body-parser/lib/types/json.js:110:7)

at Layer.handle [as handle_request] (/Users/username_0/js/src/github.com/LuxorLabs/luxor-mining-v3/node_modules/express/lib/router/layer.js:95:5)

```

This does not happen if I comment out `app.use(morgan('dev'))`, or if I comment out `app.use(Sentry.Handlers.errorHandler() as express.ErrorRequestHandler)`... I just can't use both at the same time, and I'd like to do that.

Appreciate any guidance here.

Answers:

username_1: We are experiencing weird things after updating from 4.5.0 -> 4.5.3, the context is different but the underlying `this.app.get` error is the same.

username_0: @username_1 does downgrading to 4.5.0 fix the issue?

username_1: @username_0 yeah it looks like 4.5.0 works as expected

username_2: Seeing the same thing with bunyan (2.0.2) and express-bunyan-logger (1.3.2). Downgrading to 4.5.0 fixes it.

username_3: Also seeing this. https://github.com/getsentry/sentry-javascript/issues/1859 looks like the same issue

username_4: Same issue with Feathers and Winston

https://github.com/feathersjs/feathers/issues/1183

username_5: I'm not using anything like Winston or Morgan, but I'm also experiencing this issue.

Related bits:

```

Sentry.init({

beforeSend(event, hint) {

const {

originalException,

} = hint

const {

message,

} = originalException

if (message.match(/NotAuthenticated/i)) {

return null

}

return event

},

dsn: env.SENTRY_DSN,

})

// ...

app.use(Sentry.Handlers.requestHandler())

// ...

app.use((error, request, response, next) => {

if (!error.stack.match(/NotAuthenticated/i)) {

err(error.stack)

}

next(error)

})

app.use(Sentry.Handlers.errorHandler())

app.use(express.errorHandler())

```

Will check if downgrading fixes the issue.

username_6: Fixed in `4.5.4` – https://github.com/getsentry/sentry-javascript/releases/tag/4.5.4

Sorry for the inconvenience :)

Status: Issue closed

username_7: The issue still exists in `"@sentry/node": "^4.6.2"` when used as Express middleware

```

TypeError: req.emit is not a function

at Socket.socketOnTimeout (_http_server.js:427:48)

at Socket.emit (events.js:197:13)

at Socket.EventEmitter.emit (domain.js:446:20)

at Socket._onTimeout (net.js:447:8)

at listOnTimeout (timers.js:327:15)

at processTimers (timers.js:271:5)

```

username_6: Really?... can you provide a repro please? Every scenario I tested worked just fine.

username_7: I think sentry tries to attach something to res object asynchronously, but the connection is already closed which crashes the api.

```

const api = express();

sentry.init({ dsn: process.env.SENTRY, environment: process.env.DOMAIN });

api.use(sentry.Handlers.requestHandler());

....

api.use(sentry.Handlers.errorHandler());

api.use((err, req, res, next) => {

let { message, statusCode } = err;

if (err.name === 'UnauthorizedError') {

statusCode = 401;

message = 'Token is invalid.';

}

res.status(statusCode || 400).json({ error: message });

});

```

username_6: Works just fine with your repro ¯\_(ツ)_/¯

username_7: not sure, that was the logic before we removed it. Might be something related to our own controllers. |

lipis/flag-icons | 1165239735 | Title: Reorder flags with the Unknown flag first on the list

Question:

username_0: My suggestion is that you should reorder the flag classes by placing the unknown flag (`xx`) before all other flags.

In terms of CSS precedence, if `.fi-fr` is placed on the same element as `.fi-xx`, the unknown flag will override the French flag because the French flag is declared earlier than the Unknown flag. Declaring the Unknown flag first means that every other flag will override it if they reside on the same element.

### So why would you want to declare both flags on the same element?

In my use case, I have a system that stores the 2-letter country code against items in a database. This allows me to display the relevant flag-icon on the page by adding the flag class to the span element (forgive me, I am using Vue):

```vue

<span class="fi" :class="[`fi-${countryCode}`]"></span>

```

Unfortunately, the data may contain invalid 2-letter country codes. (eg. `vb`). To combat this, I would like it to display the "Unknown" flag as a fallback flag.

```vue

<span class="fi fi-xx" :class="[`fi-${countryCode}`]"></span>

```

For a country code of `vb`, this would be translated to `class="fi fi-xx fi-vb"`. Since `.fi-vb` doesn't exist, this gets ignored and `.fi-xx` will produce the "Unknown" flag.

For a country code of `fr`, this would be translated to `class="fi fi-xx fi-fr"`. If `.fi-fr` was declared after `.fi-xx` then the French flag would override the "Unknown" flag and page would display the French flag.

Unfortunately, with `.fi-xx` declared last, the above example will show the "Unknown" flag instead.
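The ordering argument above can be sketched as a small simulation. This is only an illustrative model, not the library's stylesheet: it assumes every flag rule is a single-class selector of equal specificity, so the matching rule declared last wins. The `winning_flag` helper and the two three-flag orderings are hypothetical.

```python
def winning_flag(stylesheet_order, element_classes):
    """Return the flag class whose rule wins on an element.

    Simplified cascade model (an assumption): all rules have equal
    specificity, so the matching rule declared last in the stylesheet wins.
    """
    matching = [cls for cls in stylesheet_order if cls in element_classes]
    return matching[-1] if matching else None


# Hypothetical, shortened stylesheets: only the declaration order matters here.
CURRENT_ORDER = ["fi-fr", "fi-gb", "fi-xx"]   # unknown flag declared last
PROPOSED_ORDER = ["fi-xx", "fi-fr", "fi-gb"]  # unknown flag declared first

print(winning_flag(CURRENT_ORDER, {"fi", "fi-xx", "fi-fr"}))
print(winning_flag(PROPOSED_ORDER, {"fi", "fi-xx", "fi-fr"}))
print(winning_flag(PROPOSED_ORDER, {"fi", "fi-xx", "fi-vb"}))
```

Under this model, only the proposed ordering lets a valid country code override the fallback, while an invalid code such as `vb` still falls back to `fi-xx`.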

Please accept this proposal as I think this would be a benefit for a lot of people using your library. |

Sage-Bionetworks/ChallengeWorkflowTemplates | 580913878 | Title: get_submission.cwl makes assumptions about output from challengeutils downloadsubmission

Question:

username_0: This line: https://github.com/Sage-Bionetworks/ChallengeWorkflowTemplates/blob/master/get_submission.cwl#L34

The workflow assumes that this is exactly where the file will be written and how it will be named. But `challengeutils downloadsubmission` already provides this information as part of its output.

I think this fix is as simple as replacing that line with something like:

```

$(JSON.parse(self[0].contents)['file_path'])

```

Answers:

username_1: @username_0, Thanks for reporting. Did you get an error when running your workflow? This is because `challengeutils` itself renames the submission file:

https://github.com/Sage-Bionetworks/challengeutils/blob/master/challengeutils/__main__.py#L86

username_0: Interesting, lines like that seem to work otherwise: https://github.com/Sage-Bionetworks/ChallengeWorkflowTemplates/blob/master/get_submission.cwl#L41

username_1: Ahh, i forgot that CWL requires the File output to be in a dictionary format like so:

```

"results": {

"location": "file:///Users/thomasyu/sage_projects/dream/ChallengeWorkflowTemplates/results.json",

"basename": "results.json",

"class": "File",

"checksum": "sha1$af6758eac5c5bf4eefbd1ac836982ffa8b621051",

"size": 222,

"path": "/Users/thomasyu/sage_projects/dream/ChallengeWorkflowTemplates/results.json"

}

```

Therefore the line in `outputEval` would have to mimic that structure above.

username_1: Closing as I don't think there is a way to create the correct output blob to match what is required from a `File` type output

Status: Issue closed

|

vcd94xt10z/zionphp | 412513484 | Title: Modulo test

Question:

username_0: Module for testing web services. Features:

- register a flow of URL calls with or without data, the expected HTTP status, etc.;

- add a simple button to run the tests and display the result;

Example

POST http://teste.com/usuario/salvar

Status 201 without response body

PUT http://teste.com/usuario/1

Status 204 without response body

GET http://teste.com/usuario/1

Status 200 with response body

DELETE http://teste.com/usuario/1

Status 202 without response body<issue_closed>

Status: Issue closed |

godotengine/godot | 354752076 | Title: Tile selector: add option to resize tile entries

Question:

username_0: It is weird that even with a `list` mode, images are still horribly big.

I mean tiles like those in the GridMap object; we should be able to resize them, for example:

Option to disable icons to get more space would be useful too.

Answers:

username_1: Maybe an EditorSetting could be added to allow choosing the maximum icon size, but it would scale the texture uniformly. Your example looks horrible imo :P

username_2: example looks terrible, please don't

Status: Issue closed

|

PlantGenIE/PlantGenIE | 1101178537 | Title: Display expression values

Question:

username_0: What value should be used in the expression tools? Is it TPM+2 or TPM+1?

Currently we have TPM+1 in all our tools to avoid special cases as follows.

```

log2(TPM+0.9)=-0.15 ∴ TPM=0

log2(TPM+1)=0 ∴ TPM=0

log2(TPM+2)=1 ∴ TPM=0

```
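The effect of the pseudocount on a gene with zero expression can be checked with a short sketch. The function name `log2_expression` is hypothetical and is not part of any PlantGenIE tool; it only reproduces the arithmetic in the block above.

```python
import math


def log2_expression(tpm: float, pseudocount: float = 1.0) -> float:
    """Return log2(TPM + pseudocount); the default pseudocount of 1 maps TPM = 0 to 0."""
    return math.log2(tpm + pseudocount)


# For TPM = 0, only a pseudocount of 1 gives a displayed value of exactly 0.
for pseudocount in (0.9, 1.0, 2.0):
    print(pseudocount, round(log2_expression(0.0, pseudocount), 2))
```

A pseudocount below 1 produces negative values for unexpressed genes, and a pseudocount of 2 shifts them up to 1, which is why TPM+1 avoids the special cases.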

Answers:

username_0: Yes, we display log2(TPM + 1) in all our expression tools. exHeatmap allows users to download expression values, but those are also the same log2(TPM + 1) values, referred to as the absolute expression value. |

mapgears/ol3-google-maps | 154990007 | Title: Problem with getStroke

Question:

username_0: Hello,

When I load my OLGM map for the first time, this error appears in the console:

"ol3gm.js:244 Uncaught TypeError: d.getStroke is not a function"

and the points of the polygon on the layer are much bigger than normal.

What is the problem?

How can I fix it?

Thank you

Answers:

username_1: the same problem

username_2: Would you please provide a small demo featuring the issue, maybe with JSFiddle ?

username_1: The problem is in the style function. When I use a style object, the problem is solved. Why can't I use a style function?

username_2: Style functions are not supported, as documented in the limitations: https://github.com/mapgears/ol3-google-maps/blob/master/LIMITATIONS.md#style-functions

There's currently no plan to support them either.

username_0: Sorry, but I neither use a style object nor manipulate the style.

The problem is in this function, but I don't understand why (ol3gm.js, line 239).

function fa(a, b) {

var c = null,

d = C(a);

if (d) {

var c = {},

e = d.getStroke();

if (e) {

var f = e.getColor();

f && (c.strokeColor = x(f), f = B(f), null !== f && (c.strokeOpacity = f));

(e = e.getWidth()) && (c.strokeWeight = e)

}

if (e = d.getFill())

if (e = e.getColor()) c.fillColor = x(e), e = B(e), null !== e && (c.fillOpacity = e);

if (f = d.getImage()) {

d = {};

e = {};

if (f instanceof ol.style.Circle) {

e.path = google.maps.SymbolPath.CIRCLE;

var h = f.getStroke();

if (h) {

var k = h.getColor();

k && (e.strokeColor = x(k));

e.strokeWeight = h.getWidth()

}

if (h = f.getFill())

if (h = h.getColor()) e.fillColor =

x(h), h = B(h), e.fillOpacity = null !== h ? h : 1;

(f = f.getRadius()) && (e.scale = f)

} else f instanceof ol.style.Icon && ((h = f.getSrc()) && (e.url = h), h = f.getScale(), (k = f.getAnchor()) && (e.anchor = void 0 !== h ? new google.maps.Point(k[0] * h, k[1] * h) : new google.maps.Point(k[0], k[1])), (k = f.getOrigin()) && (e.origin = new google.maps.Point(k[0], k[1])), f = f.getSize()) && (e.size = new google.maps.Size(f[0], f[1]), void 0 !== h && (e.scaledSize = new google.maps.Size(f[0] * h, f[1] * h)));

Object.keys(d).length ? c.icon = d : Object.keys(e).length && (c.icon =

e)

}

0 === Object.keys(c).length ? c.visible = !1 : void 0 !== b && (c.zIndex = 2 * b)

}

return c

};

username_2: As I said, you can't use style functions with OLGM. It's not currently supported. See the limitations.

username_0: Ok, thank tou for your answer but this is a bug because the code is inside ol3gm.

Can you suggest me a workaround, please?

thank you

username_2: This is not a bug. You must not use StyleFunctions with OLGM as it is stated in the limitations. There is no other workaround than "don't use StyleFunctions" with OLGM. I'm sorry that I can't be of more help regarding this for now.

If you absolutely need this, then you're welcome to create a Pull Request adding this support or you can contact info at mapgears dot com if you wish to fund the development of this.

To my knowledge, I think it's impossible to support style functions with OLGM.

Status: Issue closed

username_2: I'm closing this. See the limitations: https://github.com/mapgears/ol3-google-maps/blob/master/LIMITATIONS.md#style-functions |

RasaHQ/rasa | 604214539 | Title: Makefile:43: recipe for target 'install' failed

Question:

username_0: While trying to install dependencies using the `make install` command, I am getting the following error.

```

kamaldeep@kamaldeepsingh:~/git/testing/rasa1$ curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/master/get-poetry.py | python

Retrieving Poetry metadata

Latest version already installed.

kamaldeep@kamaldeepsingh:~/git/testing/rasa1$ make install

poetry run python -m pip install -U 'pip<20'

The virtual environment found in /home/kamaldeep/.cache/pypoetry/virtualenvs/rasa-LHgLSZoI-py3.6 seems to be broken.

Recreating virtualenv rasa-LHgLSZoI-py3.6 in /home/kamaldeep/.cache/pypoetry/virtualenvs/rasa-LHgLSZoI-py3.6

[CalledProcessError]

Command '['/home/kamaldeep/.cache/pypoetry/virtualenvs/rasa-LHgLSZoI-py3.6/bin/python', '-Im', 'ensurepip', '--upgrade', '--default-pip']' returned non-zero exit status 1.

Makefile:43: recipe for target 'install' failed

make: *** [install] Error 1

```

Seems like there is some issue in the creation of the Virtual environment. Please help with the same.

Status: Issue closed


|

dotse/bbk | 587993286 | Title: Don't report 99 MBit on a 100-200Mbit connection as 'BAD'.

Question:

username_0: There needs to be some sort of limit based on a percentage of the connection speed to determine the rating, not just some hard limits.

Answers:

username_1: Have you checked the Readme? https://frontend.bredbandskollen.se/download/README.txt

'If the subscription can be identified, the download result will be evaluated as

"GOOD", "ACCEPTABLE", or "BAD". The result is considered BAD if the speed is

below the lower end of the expected speed range as stated by the service

provider. It is considered ACCEPTABLE if it is above the lower end, but still

clearly below the middle, of the expected speed range. If the result is better,

it is considered GOOD.

E.g. if the expected range is 150-250 Mbit/s, a result below 150 is BAD; a

result between 150 and 190 is ACCEPTABLE, and a result above 190 is GOOD.'

The "limit based in percentage" you're looking for is the 40% target that decides what is needed to get a GOOD result: 250 - 150 = 100 Mbit. 40% of 100 Mbit is 40 Mbit. 150 + 40 = 190 Mbit.
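The rule quoted from the README can be sketched as follows. This is a minimal illustration, not bbk's actual implementation: the function name and parameters are hypothetical, and the treatment of results that fall exactly on a boundary is an assumption, since the README does not specify it.

```python
def classify_download(speed_mbit: float, low_mbit: float, high_mbit: float,
                      good_fraction: float = 0.4) -> str:
    """Classify a measured speed against the ISP's expected range.

    Hypothetical sketch of the README rule: below the low end is BAD;
    at least 40% of the way from the low end to the high end is GOOD
    (boundary handling assumed); anything in between is ACCEPTABLE.
    """
    if speed_mbit < low_mbit:
        return "BAD"
    good_threshold = low_mbit + good_fraction * (high_mbit - low_mbit)
    if speed_mbit < good_threshold:
        return "ACCEPTABLE"
    return "GOOD"


# README example: expected range 150-250 Mbit/s, so GOOD starts at 190.
print(classify_download(170, 150, 250))
# The case from this issue: 99 Mbit/s on a 100-200 Mbit/s subscription.
print(classify_download(99, 100, 200))
```

With these rules a 99 Mbit/s result on a 100-200 Mbit/s subscription is rated BAD because the cutoff is the lower end of the range itself, with no tolerance below it.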

username_0: Exactly and that's wrong in so many ways. 99,9% is NEVER a "bad" speed which implies it's unacceptable and hence makes people call customer support.

username_2: We get the connection details from the ISP, when that is possible.

If your ISP says your connection should be 100-200 Mbit/s and you don't reach the lower limit, we do consider that bad.

username_0: And that is flawed, since the agreements usually say "up to" and there might be other circumstances that lower the speed. To actually know the connection quality you would have to have iperf server instances at the ISPs.

This creates confusion which creates unnecessary support calls for those working in the field.

username_3: I just randomly found this issue, and I'd just like to say this makes no sense.

If the ISP considers 99 Mbit/s an acceptable speed on the connection that they sell, they should then sell the connection as a 99-200 Mbit/s connection, not a 100-200 Mbit/s connection.

If the ISP promises 100-200 Mbit/s, then anything below 100 Mbit/s is unacceptable. (Assuming, for now, that the measurement server is well enough connected to the ISP's network to be a valid measurement of the connection speed itself.)

Imagine, if I went to the supermarket, and I bought a box of milk that is specified to be 100-200 cl, and then I come home and see I only got 99 cl. In this case, the amount of milk that I received is out of spec, even if it's only 1% out of spec. |

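febobo/JS-EveryDay-Question | 425260959 | Title: JS Question of the Day: What front-end optimization techniques are there?

Question:

username_0: Question for 2019-01-18:

What front-end optimization techniques are there?

- Merge and compress static assets (JS, CSS, images)

- Reduce the number of requests

- Gzip compression

- Reduce bandwidth usage

- CDN

- Serve from nearby nodes, reduce DNS lookups

- On-demand loading

- lazyload

- Fewer requests

- Skeleton screens

- Reduce blank-screen time

- Web caching

- Cache AJAX data

- Reduce repaints and reflows

- Batch DOM style updates

- Page structure

- Put stylesheets at the top and scripts at the bottom, and flush the document output as early as possible |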

openenclave/openenclave | 843626477 | Title: Ability to make an ECALL from kernel mode

Question:

username_0: Currently another team in Microsoft is using OP-TEE directly, rather than building on Open Enclave SDK, because OE does not currently support the ability to make an ECALL from kernel mode. This forces them to jump through lots of extra hoops to write the enclave that OE would have solved for them, and limits their ability to work on other TEEs in the future.

Currently in OE, one would have to first call up into user mode and have user mode make the ECALL, which loses extra perf (on Arm at least) and causes extra complexity and this approach isn't obvious either.

Hence this is a feature request to support making an ECALL directly from kernel mode, which I am filing on the other team's behalf.

Answers:

username_0: This is peripherally related to issue #3804, but that is just for attestation, and that request came from a different team.

username_1: On SGX, user mode context is required to make an ecall.

username_1: Capture scenario in design doc. |

AlvaroLarumbe/espalet-android | 202401501 | Title: When clicking on the refresh button there is no feedback to the user

Question:

username_0: It would be good to show a spinner icon, or at least make the images blink once.

Answers:

username_1: Look at this: https://github.com/username_1/espalet-android/commit/b1030608674f4b0bbdb25932a19c5c86215feae3#diff-350204edff7826e85e2fac268b3e263cR61

username_0: Enough

Status: Issue closed

|

invenia/Intervals.jl | 524508865 | Title: Range indexing fails

Question:

username_0: ```

julia> using Dates, TimeZones, Intervals

julia> st = HE(ZonedDateTime(2014,1,1, tz"America/Winnipeg"))

AnchoredInterval{-1 hour,ZonedDateTime}(ZonedDateTime(2014, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true))

julia> r = st:Hour(1):(st + Day(1))

AnchoredInterval{-1 hour,ZonedDateTime}(ZonedDateTime(2014, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true)):1 hour:AnchoredInterval{-1 hour,ZonedDateTime}(ZonedDateTime(2014, 1, 2, tz"America/Winnipeg"), Inclusivity(false, true))

julia> collect(r)[1:2]

2-element Array{AnchoredInterval{-1 hour,ZonedDateTime},1}:

AnchoredInterval{-1 hour,ZonedDateTime}(ZonedDateTime(2014, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true))

AnchoredInterval{-1 hour,ZonedDateTime}(ZonedDateTime(2014, 1, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true))

julia> r[1:2]

Error showing value of type StepRangeLen{AnchoredInterval{-1 hour,ZonedDateTime},AnchoredInterval{-1 hour,ZonedDateTime},Hour}:

ERROR: MethodError: Cannot `convert` an object of type Hour to an object of type ZonedDateTime

Closest candidates are:

convert(::Type{T}, ::Interval{T}) where T at /Users/ericdavies/.julia/packages/Intervals/52k6l/src/interval.jl:124

convert(::Type{T}, ::AnchoredInterval{P,T}) where {P, T} at /Users/ericdavies/.julia/packages/Intervals/52k6l/src/anchoredinterval.jl:174

convert(::Type{T}, ::T) where T at essentials.jl:167

...

Stacktrace:

[1] AnchoredInterval{-1 hour,ZonedDateTime}(::Hour) at /Users/ericdavies/.julia/packages/Intervals/52k6l/src/anchoredinterval.jl:79

[2] step(::StepRangeLen{AnchoredInterval{-1 hour,ZonedDateTime},AnchoredInterval{-1 hour,ZonedDateTime},Hour}) at ./range.jl:501

[3] show(::IOContext{REPL.Terminals.TTYTerminal}, ::StepRangeLen{AnchoredInterval{-1 hour,ZonedDateTime},AnchoredInterval{-1 hour,ZonedDateTime},Hour}) at ./range.jl:712

[4] show(::IOContext{REPL.Terminals.TTYTerminal}, ::MIME{Symbol("text/plain")}, ::StepRangeLen{AnchoredInterval{-1 hour,ZonedDateTime},AnchoredInterval{-1 hour,ZonedDateTime},Hour}) at ./show.jl:7

[5] display(::REPL.REPLDisplay{REPL.LineEditREPL}, ::MIME{Symbol("text/plain")}, ::StepRangeLen{AnchoredInterval{-1 hour,ZonedDateTime},AnchoredInterval{-1 hour,ZonedDateTime},Hour}) at /Users/ericdavies/.julia/packages/OhMyREPL/GFHgr/src/output_prompt_overwrite.jl:6

[6] display(::REPL.REPLDisplay, ::Any) at /Users/ericdavies/repos/julia1p2/usr/share/julia/stdlib/v1.2/REPL/src/REPL.jl:136

[7] display(::Any) at ./multimedia.jl:323

```

Range indexing appears to be taking a `StepRange` and making a `StepRangeLen`, which we don't handle effectively.

I think this might be a bug in `step(::StepRangeLen)`.

Answers:

username_0: I think that bug is there, but we can also avoid it by never making a `StepRangeLen`. We can prevent that by defining the traits `OrderStyle` and `ArithmeticStyle` to be the same as `AbstractTime`. We should also add `RangeStepStyle` while we're at it.

username_0: See https://github.com/JuliaLang/julia/blob/master/base/range.jl and https://github.com/JuliaLang/julia/blob/master/base/traits.jl

username_1: As of [Julia 1.5.0-DEV.207](https://github.com/JuliaLang/julia/pull/34563) (7d92a3aaed) this issue appears fixed:

```julia

julia> r[1:2]

AnchoredInterval{Hour(-1),ZonedDateTime}(ZonedDateTime(2014, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true)):Hour(1):AnchoredInterval{Hour(-1),ZonedDateTime}(ZonedDateTime(2014, 1, 1, 1, tz"America/Winnipeg"), Inclusivity(false, true))

```

Do we want to try and fix this on earlier versions of Julia?

username_0: We should figure out the correct traits. If that fixes it for older versions of Julia, that would be a bonus.

username_1: Another example of this issue but on Intervals 1.3 you just see a deprecation warning:

```julia

julia> using Intervals

julia> x = AnchoredInterval{-1}(1):1:AnchoredInterval{-1}(5)

AnchoredInterval{-1,Int64,Open,Closed}(1):1:AnchoredInterval{-1,Int64,Open,Closed}(5)

julia> y = x[1:4];

julia> y

┌ Warning: `convert(T, interval::AnchoredInterval{P,T})` is deprecated for intervals which are not closed with coinciding endpoints. Use `anchor(interval)` instead.

│ caller = AnchoredInterval at anchoredinterval.jl:95 [inlined]

└ @ Core ~/.julia/dev/Intervals/src/anchoredinterval.jl:95

┌ Warning: `convert(T, interval::AnchoredInterval{P,T})` is deprecated for intervals which are not closed with coinciding endpoints. Use `anchor(interval)` instead.

│ caller = AnchoredInterval at anchoredinterval.jl:95 [inlined]

└ @ Core ~/.julia/dev/Intervals/src/anchoredinterval.jl:95

AnchoredInterval{-1,Int64,Open,Closed}(1):AnchoredInterval{-1,Int64,Open,Closed}(1):AnchoredInterval{-1,Int64,Open,Closed}(4)

```

username_1: I attempted to use traits to fix this problem but that didn't actually seem to be the source of the failure. I instead implemented the fix in #132 and made a [new issue for implementing traits](#133).

Status: Issue closed

|

reuixiy/hugo-theme-meme | 1025410447 | Title: Extended shortcodes support

Question:

username_0: https://hugoloveit.com/zh-cn/theme-documentation-extended-shortcodes/

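The LoveIt theme's admonition looks very nice. If it isn't difficult, I'd love to see this feature added XD

Answers:

username_1: I wrote a few myself: https://github.com/username_1/shortcodes

Demo: https://oi-io.me/tech/hugo-shortcodes/#note

username_0: @username_1

Very cool!

I'll try it out after work, thanks!

It would be great if this could be PR'd into meme. @reuixiy |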

geocollections/turvas | 577375294 | Title: Observation point map view

Question:

username_0: The observation point map view displays the points of the area, which is convenient when exploring the area, but the specific selected point needs to be distinguished, either with a larger marker or in some similar way, and it should also have a permanent label on the map.

The permanent label could always be shown when there is only one active point on the map, i.e. also in the sample detail view.

Status: Issue closed |

NVIDIA/VideoProcessingFramework | 639325937 | Title: Single GPU decoding some video

Question:

username_0: Hi, @username_1 :

I looked at the example SampleDecodeMultiThread.py and tested decoding on our GPU (a single V100), which reached 500 FPS.

But I want a single GPU to decode multiple videos simultaneously.

I found that the videos were decoded serially:

one video takes 6 s to decode; two videos take 12 s.

How can two video decoding processes use GPU decoding in parallel?

Answers:

username_1: Hi @username_0

To the best of my knowledge, this happens because of the GIL.

You can run multiple sessions in parallel threads and/or sub-processes, but you won't see much (or any) of a performance gain.

Please look at the similar issue here:

https://github.com/NVIDIA/VideoProcessingFramework/issues/62#issuecomment-641767610

I'm out of my depth here (I don't have much Python development expertise), so if you can try the mentioned approach and come back with your results, that would be really great.
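
For reference, the sub-process approach mentioned above can be sketched roughly as follows. This is only a minimal sketch under stated assumptions: `decode_video` and `decode_all` are hypothetical names, and `decode_video` is a stand-in that merely counts pretend frames, not a VideoProcessingFramework call. Whether separate processes actually improve throughput on one GPU is exactly what this thread leaves open.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def decode_video(path):
    """Hypothetical stand-in for a per-video decode loop.

    In real code the decoder object would be created here, inside the
    worker, so that each worker owns its own decoding session.
    """
    frames = 0
    for _ in range(1000):  # pretend each iteration decodes one frame
        frames += 1
    return path, frames


def decode_all(paths, executor_cls=ProcessPoolExecutor):
    """Run decode_video for every path, one worker per video.

    Pass ThreadPoolExecutor as executor_cls to compare against threads,
    which all share a single interpreter and therefore a single GIL.
    """
    with executor_cls(max_workers=max(1, len(paths))) as pool:
        # map() returns results in the same order as the input paths
        return list(pool.map(decode_video, paths))
```

When run as a script, `decode_all(["a.mp4", "b.mp4"])` should be called under an `if __name__ == "__main__":` guard so that worker processes can be started safely on platforms that spawn rather than fork. Timing both executor classes on the same inputs is one way to check whether processes help in a given setup.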

username_0: thx @username_1

#62 gave me the perfect solution!

Status: Issue closed

username_2: @username_0 Hi, I just tried the multiprocessing method and the result is the same as with the multithreaded method.

Have you achieved any improvement? Could you share a code snippet for that?

username_0: @username_2 Sorry, I have given up on this research.

username_2: Thanks for your reply.

I just want to know: did you achieve the ideal twofold improvement by using multiprocessing rather than multithreading?

username_0: No, only multithreading. |

Azure/blobxfer | 309790896 | Title: AttributeError: 'NoneType' object has no attribute 'primary_endpoint'

Question:

username_0: Hi,

I'm trying to use the High Level API to upload files to Azure Blob, and I started from [this page](https://blobxfer.readthedocs.io/en/latest/80-blobxfer-python-library/) of the documentation. I think I'm missing something, because I don't understand why I'm getting this error, which is thrown by `create_destination_id`.

Here is the complete trace:

```

Traceback (most recent call last):

File ".../upload.py", line 61, in <module>

uploader.start()

File "...\venv\lib\site-packages\blobxfer\operations\upload.py", line 1167, in start

self._run()

File "...\venv\lib\site-packages\blobxfer\operations\upload.py", line 1079, in _run

create_destination_id(ase._client, ase.container, ase.name)

File "...\venv\lib\site-packages\blobxfer\operations\upload.py", line 185, in create_destination_id

return ';'.join((client.primary_endpoint, container, name))

AttributeError: 'NoneType' object has no attribute 'primary_endpoint'

```

These are the blobxfer parameters printed before the execution:

```

============================================

Azure blobxfer parameters

============================================

blobxfer version: 1.1.1

platform: Windows-10-10.0.16299-SP0

components: CPython=3.6.4 azstor.blob=1.0.0 azstor.file=1.0.0 crypt=2.2.2 req=2.18.4

transfer direction: local -> Azure

workers: disk=1 xfer=1 md5=1 crypto=0

log file: blobxfer.log

resume file: None

timeout: connect=30.0 read=30.0

mode: block

skip on: fs_match=False lmt_ge=False md5=True

delete extraneous: False

overwrite: True

recursive: True

rename single: False

access tier: hot

chunk size bytes: 4194304

one shot bytes: 33554432

strip components: 0

store properties: attr=True md5=True

rsa public key: None

local source paths: ..\sourcefolder

============================================

```

The code is as follows, where `config` is a YAML file structured similarly to the sample configuration YAML [here](https://blobxfer.readthedocs.io/en/latest/sample_config.yaml).

```

upload_options = config['upload'][0]['options']

concurrency_dict = config['concurrency']

skip_on_options = upload_options.pop('skip_on', None)

store_file_properties = upload_options.pop('store_file_properties', None)

endpoint = "core.windows.net"

concurrency = blobxfer.api.ConcurrencyOptions(**concurrency_dict)

[Truncated]

endpoint)

source = blobxfer.api.LocalSourcePath()

source.add_path('../sourcefolder')

destination = blobxfer.api.AzureDestinationPath()