repo_name | issue_id | text

|---|---|---|

katebliz/bonhamlab | 176918674 | Title: Hooray!

Question:

username_0: @username_1 - You don't know how happy it made me to get the alert that you had started using github! Yay!

Answers:

username_1:

Slowly but surely one becomes the mighty Charizard ;)

Status: Issue closed

|

jeremymailen/kotlinter-gradle | 515575805 | Title: .editorconfig disabled_rules for import-ordering is ignored

Question:

username_0: Since version "2.1.2", disabled_rules in .editorconfig for import-ordering has no effect.

```

[*.{java, kt}]

disabled_rules=import-ordering

```

`[import-ordering] Imports must be ordered in lexicographic order without any empty lines in-between`

Answers:

username_1: Thank you for the report and your patience @username_0.

You need to remove the space in the file selector and it will work: `[*.{java,kt}]`. Actually, the same quirk exists in prior releases too.

I agree it's annoying and confusing, but it's a result of the editorconfig implementation in `ktlint` and will need a fix there.
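Why the space matters can be sketched as follows. This assumes, without having checked ktlint's source, that the `{a,b}` group in a section header is split on commas without trimming whitespace. `expand_braces` and `section_matches` are hypothetical helpers built on Python's `fnmatch`; they are not ktlint's implementation.

```python
import fnmatch


def expand_braces(section):
    """Expand one {a,b,...} group into separate glob patterns.

    Assumption: alternatives are split on ',' and NOT stripped, which is
    the behaviour this sketch uses to reproduce the quirk.
    """
    start = section.find("{")
    end = section.find("}", start + 1)
    if start == -1 or end == -1:
        return [section]
    prefix, body, suffix = section[:start], section[start + 1:end], section[end + 1:]
    return [prefix + alt + suffix for alt in body.split(",")]


def section_matches(section, filename):
    """True if the file name matches any pattern expanded from the section header."""
    return any(fnmatch.fnmatchcase(filename, p) for p in expand_braces(section))


print(expand_braces("*.{java, kt}"))               # ['*.java', '*. kt']
print(section_matches("*.{java, kt}", "Main.kt"))  # False: ' kt' keeps its leading space
print(section_matches("*.{java,kt}", "Main.kt"))   # True
```

Under that assumption, `.kt` files silently fall outside the section, so `disabled_rules` never applies to them.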

Status: Issue closed

|

yarnpkg/berry | 547185931 | Title: [Bug] Fetch step of yarn install with pnp is really slow

Question:

username_0: - [ ] I'd be willing to implement a fix

**Describe the bug**

I have gone through the process of switching to PNP for a React app. When I ran `yarn install`, it recommended I try out v2 of Yarn. When I ran `yarn install` with v2, none of my deps were in the cache, so they were all downloaded. The result of this step was:

```

➤ YN0000: ┌ Fetch step

Many lines of:

➤ YN0013: │ type@npm:2.0.0 can't be found in the cache and will be fetched from the remote registry

➤ YN0000: └ Completed in 11.9m

```

**To Reproduce**

With a `package.json` with a large number of dependencies:

```

yarn --pnp

yarn policies set-version berry

yarn install

```

**Environment if relevant (please complete the following information):**

- OS: Linux

- Node version v13.5.0

- Yarn version 2.0.0-rc1

Answers:

username_1: We have a fix in master that should help; can you try running `yarn cache clear --all && yarn set version from sources && yarn`?

Note that cold installs will still be slower than with v1, because we have to transform the fetched packages from tgz into zip (and even though we're doing it through wasm, it still has a cost). Hopefully registries will eventually catch up and offer to download packages as zip out of the box 🙂

(Note that we need zip because otherwise we would need to uncompress the whole archive when accessing a single file at runtime, which would be very wasteful for most Node projects.)
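The tgz-versus-zip point can be illustrated with Python's standard `zipfile` and `tarfile` modules. This is only an illustration of the two formats, not how Yarn stores or reads its cache; `build_archives`, `read_from_zip`, and `read_from_tgz` are hypothetical helpers, and the file names are made up.

```python
import io
import tarfile
import zipfile


def build_archives(files):
    """Pack the same files once as a zip and once as a gzipped tarball (.tgz)."""
    zip_buf = io.BytesIO()
    with zipfile.ZipFile(zip_buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in files.items():
            zf.writestr(name, data)  # each member is compressed on its own
    tgz_buf = io.BytesIO()
    with tarfile.open(fileobj=tgz_buf, mode="w:gz") as tf:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tf.addfile(info, io.BytesIO(data))  # all members share one gzip stream
    return zip_buf.getvalue(), tgz_buf.getvalue()


def read_from_zip(blob, name):
    # The zip central directory records each member's offset,
    # so only the requested member has to be inflated.
    with zipfile.ZipFile(io.BytesIO(blob)) as zf:
        return zf.read(name)


def read_from_tgz(blob, name):
    # tarfile has to walk the member headers sequentially through the single
    # gzip stream (decompressing as it goes) before it can return this member.
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:gz") as tf:
        return tf.extractfile(name).read()


files = {f"package/lib/file{i}.js": f"module.exports = {i};\n".encode() for i in range(200)}
zip_blob, tgz_blob = build_archives(files)
print(read_from_zip(zip_blob, "package/lib/file150.js"))
print(read_from_tgz(tgz_blob, "package/lib/file150.js"))
```

Both calls return the same bytes. The difference is how much of the archive has to be decompressed to get there, and that cost is what repeats on every runtime file access.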

username_2: Had the same issue; installing latest from master makes the fetch step much faster (1m vs 5m+)

Thanks for the fix!

username_0: I can confirm that the time to fetch my dependencies has come down to 2.1m from 11.9m. Thanks!

username_1: Awesome! I'll close this issue then 🎉

Status: Issue closed

|

ctimmerm/axios-mock-adapter | 258281338 | Title: Need Clarification for use with componentDidMount

Question:

username_0: Maybe I am just a bit illiterate when it comes to testing, but I was hoping to get some clarification and/or help on writing tests for calls made by `axios` from within the `componentDidMount` function for React components.

Here is a simple `componentDidMount` function which uses `axios`:

```js

componentDidMount() {
  axios.get(`http://www.reddit.com/r/${this.props.subreddit}.json`)
    .then((response) => {
      const posts = response.data.data.children.map(obj => obj.data)
      this.setState({ posts })
    })
    .catch((error) => {
      this.setState({ errors: this.state.errors.concat(['GET Request Failed'])})
    })
}

```

and here is the setup and spec for the functionality:

```js

it('returns data from API as expected', () => {
  let mock = new MockAdapter(axios)
  mock.onGet('http://www.reddit.com/r/test.json')
    .reply(
      200,
      {
        data: {
          children: [
            { data: { id: 1, title: 'A Post' } },
            { data: { id: 2, title: 'Another Post' } }
          ]
        }
      }
    )

  let posts = mount(<AjaxDemo subreddit="test" />).update().state().posts
  expect(posts).to.eql([{id: 1, title: 'A Post'},{id: 2, title: 'Another Post'}])
})

```

The problem I run into is that within the spec, `state` never changes.

As far as I understand it, when the `AjaxDemo` component gets mounted, the `axios` call it makes will receive a response from `mock`, and update state according to the response from `mock`. This would mean that `state` should have been set, because the `axios` promise resolved and assigned an array of values to `state.posts`. Is there something I'm missing here? I feel like it's going to be embarrassingly obvious, or maybe there is a better way to split this testing up - maybe it's leaning too much into the integration test arena.

Any help on this would be much appreciated!

An extra note:

I tried running this code with

```js

mount(<AjaxDemo subreddit="test" />).update().state().posts

```

as well as

```js

mount(<AjaxDemo subreddit="test" />).state().posts

```

and the call to `update()` did nothing noticeable.

Answers:

username_1: The problem is probably that:

* you mount the component

* you fire off a request, which is asynchronous

* you check the state before the request promise resolves
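The sequence of events above is not specific to JavaScript. Below is a minimal Python `asyncio` analogue (not axios, enzyme, or React): `AjaxDemo`, `mock_fetch`, and `spec` are hypothetical stand-ins. Reading the state synchronously right after "mounting" sees the empty list. Yielding to the event loop once, which is roughly what wrapping the assertion in `setImmediate` achieves in mocha, lets the mocked request finish first.

```python
import asyncio


class AjaxDemo:
    """Hypothetical stand-in for the React component: 'mounting' starts a request."""

    def __init__(self, fetch):
        self.state = {"posts": []}
        # Like componentDidMount: the request is scheduled, not awaited.
        self._request = asyncio.create_task(self._load(fetch))

    async def _load(self, fetch):
        response = await fetch()
        self.state["posts"] = [child["data"] for child in response["data"]["children"]]


async def mock_fetch():
    # Plays the role of the mocked axios.get reply.
    return {"data": {"children": [{"data": {"id": 1, "title": "A Post"}},
                                  {"data": {"id": 2, "title": "Another Post"}}]}}


async def spec():
    component = AjaxDemo(mock_fetch)
    checked_too_early = list(component.state["posts"])  # request has not run yet
    await asyncio.sleep(0)  # give the event loop one turn so the request task can finish
    return checked_too_early, component.state["posts"]


too_early, after_yield = asyncio.run(spec())
print(too_early)    # []
print(after_yield)  # both posts
```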

I'll close this issue since it's not related to axios-mock-adapter.

Status: Issue closed

username_2: @username_1 would be really nice if a solution was provided instead of a list of problems...

It's nice to check the response and all, but what about the component, its state, and the output of response-based elements? Don't we want to test "deeper" than just the response?

Appreciate your work, just really would like to know how to test deeper myself.

username_1: It was not a list of problems, but a sequence of events that explains the problem.

It is not a problem that is related to axios-mock-adapter and the way you handle it depends on the testing framework and other libraries you're using, and how you structure your code.

If you're using mocha, the simplest solution might be to wrap your assertion in `setImmediate` or `setTimeout`.

```js

it('does something', function(done) {
  // ... your test code ...
  setImmediate(function() {
    expect(/* ... */);
    done();
  });
});

```

username_0: @username_2 Thanks for the tips! I actually rethought my approach after the correct albeit blunt response from @username_1 and just forgot to come back and provide the solution I came up with. I understand that this isn't an issue with the functionality of `axios-mock-adapter`, but figured since it's likely a *very* common use case that it would be something that should be discussed here for others to be able to skip the beating-head-against-wall phase of figuring it out.

## Separation of Concerns

I ended up writing the methods that make use of `axios` completely decoupled from the React code, then stubbed out those methods when testing how the React component will respond to the data.

### Build a Method to Make the AJAX Call

Let's say I write a method called `myApiGet` that uses `axios.get`. I'll test that method on its own using `axios-mock-adapter` to make sure that it does what I expect it to with whatever data is returned as a result of the `axios` call. For example I might test to ensure that `myApiGet` returns an object that contains the HTTP response code along with the expected data.

### Use the Predictable AJAX Call Method in `componentDidMount`

For the React components, I stub out what's expected to be returned by `myApiGet` using `sinon`, and use this stubbed response to test the behavior of the component.

### Bennies

This seems to be the best approach to separate concerns that I could come up with and offers the following benefits:

* The method that uses `axios` can be reused throughout the codebase and can be expected to return data in a predictable format

* The code in the component is tested against the expected behavior of the method that uses `axios`, and thus only needs to be tested for what it does with the data returned

### Conclusion

What I was running into was that if I wrote the `axios` calls into `componentDidMount`, I needed to test that the call was not only returning the data as expected but also that the component is rendering the data as anticipated all within the same spec, and it just seems messy. The way I understand it:

1. A React component should do one thing well: render data in the view layer.

2. The methods used for AJAX calls should do one thing well: return data in a predictable format.
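The two-layer split described above can be sketched in Python, with `unittest.mock` standing in for sinon and axios-mock-adapter. `my_api_get` mirrors the hypothetical `myApiGet` from the comment; `render_titles` is a made-up stand-in for the component's rendering logic, and the response shape (`status`, `body`) is an assumption for illustration.

```python
from unittest import mock


def my_api_get(http_get, subreddit):
    """Hypothetical analogue of myApiGet: wraps the HTTP call and always returns
    {'status': ..., 'posts': [...]}, so callers can rely on that shape."""
    response = http_get(f"http://www.reddit.com/r/{subreddit}.json")
    posts = [child["data"] for child in response["body"]["data"]["children"]]
    return {"status": response["status"], "posts": posts}


def render_titles(api_get, subreddit):
    """Hypothetical view layer: it only knows the shape my_api_get returns."""
    result = api_get(subreddit)
    return [post["title"] for post in result["posts"]]


# 1. Test the AJAX wrapper on its own against a fake HTTP layer.
fake_http = mock.Mock(return_value={
    "status": 200,
    "body": {"data": {"children": [{"data": {"id": 1, "title": "A Post"}}]}},
})
assert my_api_get(fake_http, "test") == {"status": 200, "posts": [{"id": 1, "title": "A Post"}]}
fake_http.assert_called_once_with("http://www.reddit.com/r/test.json")

# 2. Test the view against a stub of the wrapper (sinon's role in the comment).
stub_api_get = mock.Mock(return_value={"status": 200, "posts": [{"id": 2, "title": "Another Post"}]})
print(render_titles(stub_api_get, "test"))  # ['Another Post']
```

Neither test has to wait on an asynchronous request, which is what made the original single spec awkward.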

Once again, this isn't necessarily an issue with the functionality of `axios-mock-adapter`, but hopefully this will help others dealing with similar problems.

[Here](https://github.com/airbnb/enzyme/issues/346#issuecomment-347741622) is a thread where we discussed how to deal with rendering data that should be available from `componentDidMount`. |

xws-bench/battles | 133430909 | Title: Computer:51 Human:149

Question:

username_0: Bossk*Calculation*Outlaw_Tech*Recon_Specialist*K4_Security_Droid*Heavy_Laser_Cannon.Graz_the_Hunter.Binayre_Pirate.Binayre_Pirate.VSNight_Beast*Twin_Ion_Engine_Mk._II.Backstabber.Dark_Curse.Scourge*Decoy.Omicron_Group_Pilot*Sensor_Jammer.<br>

http://bit.ly/20RMUXp<br> |

yiisoft/yii2 | 437550664 | Title: "session_set_cookie_params()" does not handle session lifetimes correctly

Question:

username_0: ### What steps will reproduce the problem?

session_set_cookie_params php function does not handle session lifetimes correctly.

https://www.php.net/manual/en/function.session-set-cookie-params.php#100657

`web.config`

```php

'session' => [
    'class' => 'yii\web\DbSession',
    'db' => 'db',
    'sessionTable' => 'sessions',
    'cookieParams' => [
        'secure' => false,
        'httponly' => false,
        'lifetime' => time() + (365 * 24 * 60 * 60), #one year
    ],
    'timeout' => 365 * 24 * 60 * 60, #one year
],

```

### What is the expected result?

The cookie should be set with the configured timeout.

### What do you get instead?

The cookie timeout is not set (the cookie is deleted when the browser is closed).

### Additional info

| Q | A

| ---------------- | ---

| Yii version | 2.0.18

Answers:

username_0: Hi,

Please set this config in the `web.config` file:

```php

'session' => [
    'class' => 'yii\web\Session',
    'cookieParams' => [
        'domain' => '.example.com',
    ],
],

```

After running this, the domain `.example.com` is not set!

I traced this problem and finally found it in [Session.php:404](https://github.com/yiisoft/yii2/blob/331d9971857d8c95cf1bf12b1a2f9f8fa83f1125/framework/web/Session.php#L404)

```php

session_set_cookie_params($data['lifetime'], $data['path'], $data['domain'], $data['secure'], $data['httponly']);

```

`session_set_cookie_params` returns false.

username_1: Can you check if the session is started by the time `session_set_cookie_params()` is called?

Status: Issue closed

username_1: I've checked, and for the clean basic application it works well, setting the lifetime properly. By the way, you have an error there: the lifetime is a number of seconds, not a timestamp, so there's no need to add `time()`.

username_0: Yes, I edited the first post.

I finally found the problem.

`session_set_cookie_params` returns false if the settings below are set in php-fpm.

```

session.cookie_lifetime

session.cookie_path

session.cookie_domain

```

username_1: fpm? What do you mean? How to reproduce it?

username_0: Yes, php-fpm.

In my last test for this problem, I created a test.php inside Yii's index.php.

test.php

```php

session_set_cookie_params(1000,'/','.example.com');

session_start();

```

After running `test.php`, `session_set_cookie_params` returns `false`, so this is not a Yii2 bug.

I searched the settings (PHP, php-fpm, and so on) on the server and found the setting below in `mysite.conf` (the php-fpm config):

```

php_admin_value[session.cookie_lifetime] = 1500

```

If this PHP setting is set with the `php_admin_value` flag in php-fpm, `session_set_cookie_params` returns false.

username_1: Ah, right. That's PHP behavior defined for `php_admin_value`: if it's used, the application can't change the value later. |

spujadas/elk-docker | 125416784 | Title: Add beats output routing

Question:

username_0: With the beats input plugin, if you send the data directly to Elasticsearch, it works as expected with the sample dashboards. If you configure beats to send data to the Logstash endpoint, the dashboards do not work, because there is no output routing to the correct index names.

Adding:

```

output {
  elasticsearch {
    hosts => "localhost:9200"
    sniffing => true
    manage_template => false
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
    document_type => "%{[@metadata][type]}"
  }
}

```

to `02-beats-input.conf` makes it work, although the name of that file suggests that an output shouldn't be added there.

Ref: https://www.elastic.co/guide/en/beats/libbeat/1.0.1/getting-started.html#logstash-installation
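The difference in index names can be sketched in Python. `beat_index` and `default_index` are hypothetical helpers that mimic how the two `index` settings (the `%{[@metadata][beat]}-%{+YYYY.MM.dd}` pattern above and the plugin's default `logstash-%{+YYYY.MM.dd}`) would resolve for one event, assuming the date part is taken from the event's `@timestamp` in UTC.

```python
from datetime import datetime, timezone


def beat_index(event):
    """Mimic index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}" for a single event."""
    ts = event["@timestamp"].astimezone(timezone.utc)
    return f"{event['@metadata']['beat']}-{ts:%Y.%m.%d}"


def default_index(event):
    """Mimic the plugin default, "logstash-%{+YYYY.MM.dd}"."""
    ts = event["@timestamp"].astimezone(timezone.utc)
    return f"logstash-{ts:%Y.%m.%d}"


event = {
    "@timestamp": datetime(2016, 1, 7, 19, 33, 23, tzinfo=timezone.utc),
    "@metadata": {"beat": "filebeat"},
}
print(beat_index(event))     # filebeat-2016.01.07
print(default_index(event))  # logstash-2016.01.07
```

Only the first name matches the `filebeat-*` index pattern that beat-specific dashboards would look for.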

Answers:

username_1: Sorry, not quite sure what the exact issue is here, it really should work out-of-the-box. :confused:

The output filter/routing for Logstash is in `30-output.conf`, which contains a minimal configuration item:

```
output {
  elasticsearch { hosts => ["localhost"] }
  stdout { codec => rubydebug }
}
```

So implicitly, in the `elasticsearch` section, the default values are used for non-specified configuration options (as per https://www.elastic.co/guide/en/logstash/current/plugins-outputs-elasticsearch.html), e.g. `"logstash-%{+YYYY.MM.dd}"` for `index`.

Using the vanilla `sebp/elk` image on a clean VM and having Filebeat push logs from an instance of nginx to Logstash's Beat input plugin on port 5044 (see the [example](http://elk-docker.readthedocs.org/#forwarding-logs-filebeat) in the documentation of the image) produces an entry like this when browsing to a page served by nginx:

```json
{
  "_index": "logstash-2016.01.07",
  "_type": "nginx-access",
  "_id": "AVIdlGrkng6MqhVcdOZ3",
  "_score": null,
  "_source": {
    "message": "XX.XX.XX.XX - - [07/Jan/2016:19:33:19 +0000] \"GET / HTTP/1.1\" 304 0 \"-\" \"Mozilla/5.0 (Windows NT 6.3; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0\" \"-\"",
    "@version": "1",
    "@timestamp": "2016-01-07T19:33:23.023Z",
    "beat": {
      "hostname": "ac29184dfcf0",
      "name": "ac29184dfcf0"
    },
    "count": 1,
    "fields": null,
    "input_type": "log",
    "offset": 0,
    "source": "/var/log/nginx/access.log",
    "type": "nginx-access",
    "host": "ac29184dfcf0",
    "clientip": "XX.XX.XX.XX",
    "ident": "-",
    "auth": "-",
    "timestamp": "07/Jan/2016:19:33:19 +0000",
    "verb": "GET",
    "request": "/",
    "httpversion": "1.1",
    "response": "304",
    "bytes": "0",
    "agent": "\"Mozilla/5.0 (Windows NT 6.3; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0\""
  },
  "fields": {
    "@timestamp": [
      1452195203023
    ]
  },
  "sort": [
    1452195203023
  ]
}
```

… which looks fine to me.

Using the piece of configuration you suggested (which I added to `30-output.conf` where it would belong), the same operation creates entries such as this:

```
{
  "_index": "filebeat-2016.01.07",
  [Truncated]
    "verb": "GET",
    "request": "/",
    "httpversion": "1.1",
    "response": "304",
    "bytes": "0",
    "agent": "\"Mozilla/5.0 (Windows NT 6.3; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0\""
  },
  "fields": {
    "@timestamp": [
      1452194980787
    ]
  },
  "sort": [
    1452194980787
  ]
}
```

… so essentially the same thing, except for the `_index` field which has a different prefix (default `logstash` vs explicitly set `filebeat`).

Having said that, there may be something wrong that I'm not seeing or I may be misunderstanding the issue: if so could you please provide steps to reproduce the issue you're having? Cheers.

username_0: The issue is that if you configure the beat to send directly to Elasticsearch, the information ends up in filebeat-XXXX.XX.XX or topbeat-XXXX.XX.XX indexes. If you send it through the Logstash beats plugin as configured, the information ends up in the logstash-* index. It seems like it should be the same in either case.

Additionally, the beats project provides a bunch of pre-made dashboards which only work with the information in the XXXbeat-XXXX.XX.XX format.

https://www.elastic.co/guide/en/beats/libbeat/current/getting-started.html#load-kibana-dashboards

username_1: Right, got it this time. I thought the issue was about the plugin not working rather than about inconsistent behaviours and predefined dashboards not playing properly.

Will update in a sec.

Status: Issue closed

|

fjordllc/bootcamp | 1074202912 | Title: Uncaught TypeError: Cannot assign to read only property 'solana' of object '#<Window>'

Question:

username_0: View details in Rollbar: [https://rollbar.com/username_0/Bootcamp/items/767/](https://rollbar.com/username_0/Bootcamp/items/767/)

```

TypeError: Cannot assign to read only property 'solana' of object '#<Window>'

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 45, in Object.dispenseInjectMessage

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 45, in [anonymous]

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 39, in HTMLDocument.<anonymous>

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 39, in n.dispatch

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 39, in n.send

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 39, in n.sync

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 45, in new <anonymous>

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 45, in Module.2202

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 1, in r

File "chrome-extension://afbcbjpbpfadlkmhmclhkeeodmamcflc/injects/solana.js", line 1, in [anonymous]

```

Status: Issue closed |

getsentry/sentry-webpack-plugin | 534550571 | Title: Assets Accessible at Multiple Origins

Question:

username_0: Hi,

The Sentry docs talk about assets accessible at multiple origins, but I can't find the parameter to use. Is it doable with this webpack plugin?

In my use case, I have the same file which is fetched with different origins.

Ex: file://www/app.js, https://mysite.fr/subsite1/app.js, https://mysite.fr/subsite2/app.js.

When an error is raised, one issue is created per file URL, but I'd like them to be grouped, as it's the same file.

Have a good day,

DR

Answers:

username_1: It's not possible through the plugin, but you can use our custom grouping settings to achieve that - https://docs.sentry.io/data-management/event-grouping/server-side-fingerprinting/

Status: Issue closed

|

Ymagis/ClairMeta | 674018725 | Title: Report Mode Feature Request: -report/-report_out_file to generate more detailed output.

Question:

username_0: Hi,

ClairMeta checks for quite a lot of issues. It's very impressive.

And I am especially pleased we now have an open source tool to QC DCPs for problems (one that's easy to install and use compared to others).

I would like to see the tool updated to generate a QC-Report type result. For example..

```

python3 -m clairmeta.cli check -type dcp -qc_report DCP_DIR

clairmeta version: X.XX

base CHECK: PASS

cpl CHECK: PASS

am CHECK: PASS

isdcf_dcnc CHECK: WARNING

WARNING:check_dcnc_compliance

ContentTitle must have 12 parts, 11 found

Field Studio not found in ContentTitle

atmos CHECK: NOT_APPLICABLE

.

.

----------------------

QC acceptance: PASS

```

This is especially important for accepting DCPs into some circuits/cinemas: having a tool to check DCPs before they are submitted by random content producers who are making ads or small film festival content. It's common for production companies without DCP experience to take on such work, leave the creation of the DCP to the last minute, and just grab whatever software they can find to make one. It would be good to have a free tool that those accepting DCPs can point them at in their asset delivery requirements.

i.e. "When submitting your DCP, please supply a ClairMeta QC_REPORT as part of your submission." Obviously, they are not going to do this until it passes the QC tool.

The tool, should produce a type of report as listed above, and if a specific check_plugin has issues, it will list them and result in a WARNING or FAIL based on the result of the issue.
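A report in the format proposed above could be rendered along these lines. This is a sketch, not ClairMeta's API: `Status`, `module_status`, and `qc_report` are hypothetical, each module's result is assumed to be a list of `(severity, message)` pairs (or `None` when the module does not apply), and the acceptance rule (warnings alone still pass) is inferred from the example output.

```python
from enum import IntEnum


class Status(IntEnum):
    # Ordered by severity, so max() returns the worst outcome.
    NOT_APPLICABLE = 0
    PASS = 1
    WARNING = 2
    FAIL = 3


def module_status(issues):
    """issues: list of (Status, message) for one module; None if the module did not apply."""
    if issues is None:
        return Status.NOT_APPLICABLE
    return max((severity for severity, _ in issues), default=Status.PASS)


def qc_report(version, modules):
    """Render every module's result, not only the failing ones."""
    lines = [f"clairmeta version: {version}"]
    worst = Status.PASS
    for name, issues in modules.items():
        status = module_status(issues)
        worst = max(worst, status)
        lines.append(f"{name} CHECK: {status.name}")
        for _, message in issues or []:
            lines.append(f"    {message}")
    lines.append("-" * 22)
    # Assumption from the example above: warnings alone do not fail acceptance.
    lines.append(f"QC acceptance: {'FAIL' if worst == Status.FAIL else 'PASS'}")
    return "\n".join(lines)


report = qc_report("X.XX", {
    "base": [],
    "isdcf_dcnc": [(Status.WARNING, "ContentTitle must have 12 parts, 11 found"),
                   (Status.WARNING, "Field Studio not found in ContentTitle")],
    "atmos": None,
})
print(report)
```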

The ClairMeta version should be part of the report, as extra features may come down the line that an older version of the software does not yet implement.

I have not yet looked too deeply into the code, but it appears this type of feature may not be that difficult to add to the implementation.

I will admit the tool in its current form is still very useful and does achieve this outcome, but it does not have the gravitas or feel of being as powerful and complete as it is. Naming all the checks in the report would be far more visceral and would carry more weight in showing how useful this tool really is to the general community that utilises DCPs.

Please comment on this addition and "use model" of the tool. I am keen to hear others feedback.

Answers:

username_0: I expect many of us have had time to check out the SMPTE RDD 52 document, now made freely downloadable on

[https://ieeexplore.ieee.org/document/9161348](https://ieeexplore.ieee.org/document/9161348)

This is great to see, as it's refining to a detailed level what is acceptable for wider-release DCP creation. It's also great for film festivals that don't want to spend as much money on repacking DCPs: specifying that a DCP must pass a ClairMeta check before it can be accepted puts the cost of making a good DCP back on the film maker.

My first reaction was to add a reasonable number of RDD 52 specifics to ClairMeta, but after reading it I would assume (though I have not looked over all the code) that many of them are already checked by ClairMeta.

This brings us back to this discussion. Currently ClairMeta only reports/prints something if a specific test fails. There is no real way to see, from its output, which checks have been performed. This is a considerable flaw to me: if a DCP is in an archive, for example, along with a ClairMeta report created when it was archived, there is no way to see which tests were applied at that time, just that it was OK. That's not very useful.

Clairmeta will evolve and more tests will likely be added.

After some consideration, I think it is quite important that we add, at the very least, a VERBOSE option that prints out each test applied and whether it passed or failed.

This then comes to how we incorporate RDD 52 tests. Do we add it as a module, or add tests to the specific parts of the code that deal with RDD 52-specific tests, i.e. in subtitles, picture, etc.? I would assume we add them to the existing sections of the code. Where checks that mirror the RDD 52 requirements already exist, we modify the reported error to reference the RDD 52 document.

username_1: Thanks for opening the discussion @username_0.

For context, when we made ClairMeta at Ymagis, we already had another companion application for mastering related things and that tool was responsible for generating this kind of report. For that we used a simple HTML / CSS page and template expansion to fill in the report. We chose not to add it to ClairMeta source code because of the added dependencies and complexity that's not really wanted by everyone.

That's to say it should already be relatively easy to implement, as almost all the information is already present in the generated report (using the Python API). One thing missing, probably a one-liner, is adding the checks that succeeded.

Note that you can easily add each check's description and reference (notice that most checks have a reference section in their docstring citing the related standard / specification) by reading the docstring programmatically from Python (in fact that's what we did).
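Reading a check's description and reference from its docstring might look like this with `inspect.getdoc`. The `Reference:` section layout and `check_demo_framerate` are hypothetical; ClairMeta's actual docstring format may differ, so the split marker would need adjusting to match it.

```python
import inspect


def check_demo_framerate(asset):
    """Picture edit rate must be one of the allowed values.

    Reference:
        Placeholder specification reference (hypothetical)
    """


def describe_check(func):
    """Split a check's docstring into (description, reference)."""
    doc = inspect.getdoc(func) or ""  # getdoc also strips the common indentation
    description, _, reference = doc.partition("Reference:")
    return description.strip(), " ".join(reference.split())


print(describe_check(check_demo_framerate))
```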

I may look at it further when I have more time, but for now I won't be able to help with making this report. However I can help by adding any missing piece of information that's needed to generate it.

PS: I haven't had time to look at RDD 52 yet, but yes, we will add those missing checks in their respective modules and document where they come from in the docstring, as already done.

username_0: I am, and we all should be, greatly appreciative to Ymagis for offering this to the public.

Considering Ymagis had a vision and a specific use model for the tool, I think the best way to approach this is to add an argument flag that enables this functionality, so that its default use stays the same (apart from upgrades, additions, and fixes).

Today I had a reasonable look at the code, mainly `dcp_check_base.py`. On this topic, after looking through it:

I noticed 543 checks in the resulting list `self.check_executions`; the code then looks through the list for the type of result and adds the failures to `self.check_report`, which has the keys ERROR, WARNING, INFO, and SILENT.

```python
def make_report(self):
    """ Check report generation. """
    self.check_report = {
        'ERROR': [],
        'WARNING': [],
        'INFO': [],
        'SILENT': []
    }

    # <-- only failed checks are added to self.check_report
    for c in self.find_check_failed():
        level = self.find_check_criticality(c.name)
        self.check_report[level].append((c.name, c.msg))

    check_unique = set([c.name for c in self.check_executions])
    self.check_elapsed = {}
    self.total_time = 0
    self.total_check = len(check_unique)

    for name in check_unique:
        execs = [c.seconds_elapsed
                 for c in self.check_executions if c.name == name]
        elapsed = sum(execs)
        self.total_time += elapsed
        self.check_elapsed[name] = elapsed
```

This appears to go through and populate `self.check_report`.

I find this a little confusing: why not add all PASSED checks to INFO?

And what is SILENT (suppressed) for? (I imagine those not performed due to the checking profile supplied?)

Could you expand on the purpose here? What is the INFO list supposed to represent?

In the end, why not just expose `self.check_executions` in the API?

I can understand that this portion of the code was mainly written for passing to the `dump_report()` function.

My reaction after reviewing this code is to add a `-report` flag:

Add passed checks to `self.check_report['INFO']` in `make_report(self)`,

Update `dump_report()` to check for the `-report` flag and, if present, add output for all the items found in `self.check_report['INFO']`.
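The proposed change to `make_report` can be sketched as a standalone function. The `CheckExecution` record with a `passed` field, the `include_passed` switch (standing in for a `-report` flag), and the `check_example` name are all hypothetical; ClairMeta's real objects may be shaped differently.

```python
from collections import namedtuple

# Hypothetical record; ClairMeta's real check execution object may differ.
CheckExecution = namedtuple("CheckExecution", ["name", "msg", "passed"])


def make_report(executions, criticality, include_passed=False):
    """Group check executions by level.

    criticality: maps a check name to 'ERROR', 'WARNING', 'INFO' or 'SILENT'.
    include_passed: what a '-report' flag could switch on.
    """
    report = {"ERROR": [], "WARNING": [], "INFO": [], "SILENT": []}
    for c in executions:
        if not c.passed:
            report[criticality(c.name)].append((c.name, c.msg))
        elif include_passed:
            report["INFO"].append((c.name, "PASS"))  # record successful checks too
    return report


executions = [
    CheckExecution("check_dcnc_compliance", "ContentTitle must have 12 parts, 11 found", False),
    CheckExecution("check_example", "", True),
]
levels = {"check_dcnc_compliance": "WARNING"}
report = make_report(executions, lambda name: levels.get(name, "ERROR"), include_passed=True)
print(report["INFO"])  # [('check_example', 'PASS')]
```

Without the flag the output is the same as today, so the default use stays unchanged.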

I'll create a new issue regarding CPL errors I also find confusing.

username_1: That could be the easiest and most flexible way, yes; this list already provides more information, e.g. check processing times. We could add even more information, e.g. which asset the check was performed on (if applicable), the docstring of the check, etc.

username_1: Yes, these correspond to checks that were bypassed using the profile `bypass` key.

username_1: #167 is addressing part of the issue by providing a more complete report object, from where you should be able to build a complete report. Let me know if that address your immediate concerns.

username_1: Should we close this ?

Status: Issue closed

username_0: Yes,

You have addressed this from my perspective.

I am still working on the online tool. The interactive version is done, apart from the bugs likely still to be discovered.

I am currently working on making an HTML-based emailable version that can be exported from the online interactive tool.

Regenerating the interactive graphs as images that can be embedded into an Email. Based on the draft one I sent you a while ago for Clairmeta, but now adding CPL-structure, Video-bitrate, audio waveforms, loudness analysis images.

Once I am happy with that I will implement the latest Clairmeta version with recent upgrades and fixes.

Rami, please go to https://admin.d-cine.net and request an account under Research user. I need to test that part of the website too. I need to make it as easy as possible to manage/automate, considering it's free to use.

Thanks,

James |

sorgerlab/indra | 366398879 | Title: Add more detail to knowledge sources documentation

Question:

username_0: Now that we have 19 documented knowledge sources, it might make sense to add another intermediate level to the module documentation that separates groups of knowledge sources like:

- general purpose reading systems

- biology-specific reading systems

- standard pathway databases

- custom knowledge bases

See https://indra.readthedocs.io/en/latest/modules/sources/index.html#

Answers:

username_0:  [Add an additional level to the indra.sources module documentation](https://trello.com/c/cwBptZqM/30-add-an-additional-level-to-the-indrasources-module-documentation)

username_1: High-level description:

```
INDRA can draw content from many sources, some specifically for biological
content, and some for more generic causal knowledge, some are from machine
readers and some are from human-curated or data driven databases.
```

Sound good?

username_0: Yes, sounds perfect

Status: Issue closed

username_0: Done in #695 |

DenisStrokatov/Denis | 298179456 | Title: Zadanie4

Question:

username_0:

```java
package main.java;

// Task 4: list the ways to change `chislo` kopecks into 3- and 5-kopeck coins,
// then into 3-, 5- and 7-kopeck coins, showing any amount left unchanged.
public class Zadanie4 {
    public static void main(String[] args) {
        int chislo = 83; // amount to change, in kopecks
        int i, k;

        System.out.printf("change into 3 and 5 kopecks:\n");
        i = 0;
        // i = number of 5-kopeck coins; the rest goes into 3-kopeck coins
        while (chislo - 5*i >= 0 && chislo >= 5) {
            System.out.printf("5 kop*%d, 3 kop*%d, not changed %d kop\n ", i, (chislo - 5*i) / 3, (chislo - 5*i) % 3);
            i = i + 1;
        }
        if (chislo == 4 || chislo == 3) {
            System.out.printf("5 kop*0, 3 kop*1, not changed %d kop\n ", chislo - 3);
        }
        if (chislo < 3) {
            System.out.printf("change of %d kopecks is not possible\n ", chislo);
        }

        System.out.printf("\n change into 3, 5 and 7 kopecks:\n");
        // k = number of 7-kopeck coins, i = number of 5-kopeck coins
        for (k = 0; k <= chislo / 7; k++) {
            i = 0;
            while (chislo - 7*k - 5*i >= 0 && chislo >= 7) {
                System.out.printf("7kop*%d, 5 kop*%d, 3 kop*%d, not changed %d kop\n ", k, i, (chislo - 7*k - 5*i) / 3, (chislo - 7*k - 5*i) % 3);
                i = i + 1;
            }
        }
        // amounts below 7 kopecks are handled separately
        i = 0;
        if (chislo == 6 || chislo == 5) {
            while (chislo - 5*i >= 0) {
                System.out.printf("7kop*0, 5 kop*%d, 3 kop*%d, not changed %d kop\n ", i, (chislo - 5*i) / 3, (chislo - 5*i) % 3);
                i = i + 1;
            }
        }
        if (chislo == 4 || chislo == 3) {
            System.out.printf("7kop*0, 5 kop*0, 3 kop*1, not changed %d kop\n ", chislo - 3);
        }
        if (chislo < 3) {
            System.out.printf("change of %d kopecks is not possible\n ", chislo);
        }
    }
}
```

|

jadrake75/odata-filter-parser | 120807541 | Title: Can likely optimize the concat recursive call

Question:

username_0: Should be able to improve the handling of the array slicing (`arguments` is not an array, but can use `Array.prototype` methods) via something like this (courtesy of kminio):

```js
Array.prototype.slice.call(arguments, 1)
```

|

RocketMan1988/EDMC-Passport-System | 963276778 | Title: Missing DSSA carriers

Question:

username_0: https://inara.cz/station/183920/

https://inara.cz/galaxy-station/211128/

Answers:

username_1: I'll look into this. Thank you for the ping. 👍

username_0: This may have been fixed on 21 August by -- my count went up when I rescanned journals. Though I didn't specifically test each report here

https://discord.com/channels/164411426939600896/749701520865493012/878793109557903440 (EDCD)

Status: Issue closed

|

miragejs/discuss | 557703037 | Title: server.create() fails for models which are named as a plural

Question:

username_0: Boilerplate example: https://github.com/username_0/ember-cli-mirage-boilerplate

When an ember-data model is named as plural, for example `settings`, calling `server.create('settings')` or `server.createList('settings')` fails with the error:

```

Promise rejected during "server.create singular model fails": Mirage: You called server.create('settings') but no model or factory was found. Make sure you're passing in the singularized version of the model or factory name.

```

Instead, if we use `server.create('setting')`, this works, but this is not the actual model name.

Examples can be seen here:

**Model:**

https://github.com/username_0/ember-cli-mirage-boilerplate/blob/master/app/models/settings.js

**Mirage Factory:**

https://github.com/username_0/ember-cli-mirage-boilerplate/blob/master/mirage/factories/settings.js

**Example failure test:**

https://github.com/username_0/ember-cli-mirage-boilerplate/blob/master/tests/integration/components/settings-test.js

# Expected behaviour

Even if a model name is actually plural, `server.create()` should not require an incorrect, singularized version of the model name to be passed to it.

Answers:

username_1: FYI: Transferred this to our Discuss repo, our new home for more open-ended conversations about Mirage!

If things become more concrete + actionable we can create a tracking issue in the main repo.

username_2: Um, so maybe a silly question, but how should we handle this for an integration test? The issue we are having is that the `custom-inflector-rules.js` initializer does not run during an integration test. Are we making a fundamental mistake by interacting with the Mirage server in integration tests?

username_1: There's some info in the docs about integration tests: https://www.ember-cli-mirage.com/docs/testing/integration-and-unit-tests

You can absolutely use Mirage there. In this case, you could just import and run your initializer alongside `setupMirage`. I might make a separate function that does both (`setupMirageForIntegration` or something like that) just so you don't forget! |

jjhelmus/nmrglue | 250559289 | Title: Sorry, but still: "Integers not floats should be used for indexing and slicing arrays"

Question:

username_0: Although this was announced as solved, I still get the error with numpy 1.9.2 and nmrglue recently installed from source via "git clone https://github.com/username_1/nmrglue".

```python
import nmrglue as ng

dic, data = ng.bruker.read_pdata('../Data/3/pdata/1')
print(data.shape)
print(data)
thres = data.std() * 2
peakList = ng.analysis.peakpick.pick( data, pthres = thres )
```

Output:

```
(1024, 2048)
[[ 14099.25 15660.5 15820.25 ..., 441.5 13453.5 17852.25]
 [ 22354.75 24070.25 16389. ..., 2944. -547.75 7666. ]
 [ 17501.5 21159. 3769.25 ..., 866.5 -10845.5 -4526. ]
 ...,
 [ 1695.5 -15082.25 -16300.75 ..., 1921. 17331. 22866.25]
 [ -6796.25 -16138.25 -11376.75 ..., 4678.75 24169.25 22745.75]
 [ -512.5 -2591.5 1098.5 ..., 2588.5 24818.75 23191. ]]
```

```
Traceback (most recent call last):
  File "findPeaks.py", line 9, in <module>
    peakList = ng.analysis.peakpick.pick( data, pthres = thres )
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 215, in pick
    ls_classes)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 383, in guess_params_slice
    r = extract_1d(region, rlocation, axis)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 398, in extract_1d
    return np.atleast_1d(np.squeeze(data[s]))
TypeError: slice indices must be integers or None or have an __index__ method
```
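For context, this `TypeError` is the generic message Python gives whenever a slice bound is a float rather than an integer. The sketch below reproduces it on a plain list and shows one defensive way to build a slice from bounds that may arrive as whole-number floats. `safe_slice` is a hypothetical helper for illustration only, not part of nmrglue; in this thread the actual fix turned out to be upgrading SciPy.

```python
import operator


def safe_slice(start, stop, step=None):
    """Build a slice from bounds that may be whole-number floats (hypothetical helper).

    Python rejects float slice bounds with
    "slice indices must be integers or None or have an __index__ method".
    """
    def to_index(x):
        if x is None:
            return None
        try:
            # Accepts int, bool, NumPy integers: anything implementing __index__
            return operator.index(x)
        except TypeError:
            pass
        if isinstance(x, float) and x.is_integer():
            return int(x)  # 3.0 -> 3
        # Refuse 2.5 and friends instead of silently truncating them
        raise TypeError("non-integral slice bound: %r" % (x,))

    return slice(to_index(start), to_index(stop), to_index(step))


row = list(range(10))

try:
    row[2.0:5.0]  # float bounds raise the same TypeError as in the traceback
except TypeError as exc:
    print("TypeError:", exc)

print(row[safe_slice(2.0, 5.0)])  # [2, 3, 4]
```

Converting only whole-number floats, rather than truncating everything with `int()`, makes a genuinely wrong bound such as 2.5 fail loudly instead of silently selecting different data.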

Answers:

username_0: I also get an error with the script from

https://github.com/username_1/nmrglue/wiki/Find-and-fit-peaks-in-a-spectrum

```
Traceback (most recent call last):
  File "fitPeaks.py", line 25, in <module>
    peaks = ng.peakpick.pick(data, 25000)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 222, in pick
    c_ndil)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 291, in clusters
    return [labeled_array[i] for i in locations]
IndexError: only integers, slices (`:`), ellipsis (`...`), numpy.newaxis (`None`) and integer or boolean arrays are valid indices
```

Status: Issue closed


username_1: @username_0 Can you provide the data file that you are looking at which is causing this error? I tried to replicate the issue with the data from the wiki example and was unable to cause the error.

username_0: Here it is, split into 4 parts because of GitHub's upload limits.

[Data4.zip](https://github.com/username_1/nmrglue/files/1228812/Data4.zip)

Concatenate them with `cat Data*.zip > data.zip`.

username_0: next:

[Data3.zip](https://github.com/username_1/nmrglue/files/1228820/Data3.zip)

username_0: next:

[Data2.zip](https://github.com/username_1/nmrglue/files/1228822/Data2.zip)

username_0: And the last one:

[Data1.zip](https://github.com/username_1/nmrglue/files/1228823/Data1.zip)

username_0: I tested concatenating them, and it did not work.

I don't know how else to get the original 28 MB Data.zip to you.

username_1: @username_0 Can you upload the file to dropbox or a similar file sharing site?

username_0: To send you a download link and password, I need your e-mail address.

username_1: username_1 [at] gmail works.

username_1: I got the data file and ran the following script without any error:

``` python

import nmrglue as ng

dic, data = ng.bruker.read_pdata('../Data/3/pdata/1')

print data.shape

print data

thres = data.std() * 2

peakList = ng.analysis.peakpick.pick( data, pthres = thres )

```

Can you verify that you have the latest version of nmrglue and provide details about what NumPy and Python version you are using?

username_0:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 215, in pick
    ls_classes)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 383, in guess_params_slice
    r = extract_1d(region, rlocation, axis)
  File "/usr/lib/python2.7/site-packages/nmrglue/analysis/peakpick.py", line 398, in extract_1d
    return np.atleast_1d(np.squeeze(data[s]))
TypeError: slice indices must be integers or None or have an __index__ method
```

username_0: Same error on CentOS 7 (64-bit) with Python 2.7.5, NumPy 1.12 and nmrglue 0.7_dev (i.e. from the git sources).

username_1: I've tried to replicate this on my own system with the same version of python and numpy without any luck. The peak picking does not raise an error.

I'm at a bit of a loss as to what else to try. Perhaps the SciPy version is causing the issue; which version of SciPy is installed?

Also, what is the output from:

``` Python

import nmrglue as ng

dic, data = ng.bruker.read_pdata('../Data/3/pdata/1')

thres = data.std() * 2

ploc, pseg = ng.analysis.peakpick.find_all_connected(data, thres, True, False)

print(thres)

print(ploc)

print(pseg)

```

`find_all_connected` is being run inside the peak picker to generate the region limits. If it gives odd results that could explain the error.

username_0: Thank you very much for your patience and support. You were right to point at the SciPy version!

```python
import nmrglue as ng

dic, data = ng.bruker.read_pdata('../Data/3/pdata/1')
thres = data.std() * 2
ploc, pseg = ng.analysis.peakpick.find_all_connected(data, thres, True, False)
```

works without problems on Mac OS X (Python 2.7.12 from Xcode, NumPy and SciPy 0.17 installed via Homebrew), as well as on Fedora 24 (Python 2.7.13, NumPy 1.11, SciPy 0.16.1).

In the end, the problem was solved by upgrading SciPy (originally version 0.14.1 on Fedora 23) with "pip install --upgrade scipy" (as root)!

Status: Issue closed

username_1: Great to hear you found a fix. |

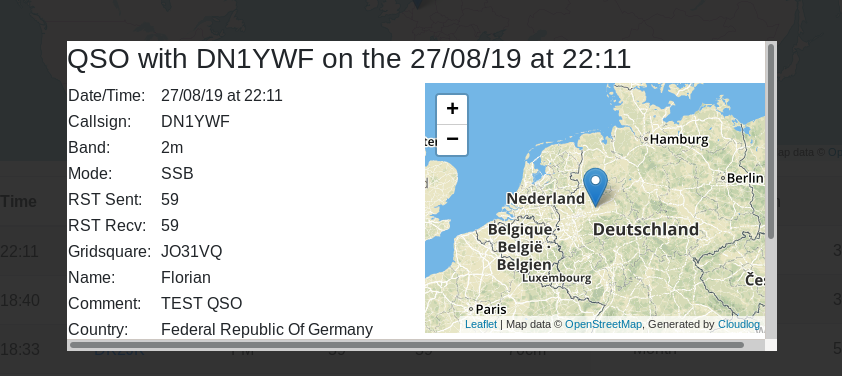

magicbug/Cloudlog | 486048669 | Title: Have a use for the field OPERATOR

Question:

username_0: We are currently evaluating Cloudlog for our club station. It would be useful to record who personally operated the club callsign for each QSO. From what I read in the ADIF specs, there is an OPERATOR field that should allow for that.

We imported an .adif file and the field does get filled, so what is missing is a way to set it on the input side: QSOs logged through the Cloudlog GUI (i.e. the web interface) do not populate this field.

Or would that require separate accounts for anyone who wants to log QSOs for the club station?

Answers:

username_1: @username_0 The code uses the logged-in user's callsign for the OPERATOR field, so give everyone an account. At the moment the privileges don't really do anything, so I'd set everyone to admin for now.

I need to expose the OPERATOR field on the QSO popup windows so that it can be seen.

What I'm planning to do is add a role that removes the admin features and just lets an "Operator" add QSOs.

username_1: In Cloudlog, the station callsign is the logbook callsign (2M0SQL, for argument's sake). It is defined in Station Profiles, and you can then select it in the "General" section of the QSO panel.

username_1: Yes, I guess a global club username for everyone to use is an option; I just never saw it like that.

username_1: I'll get it added :)

username_1: Done

username_1: When you create an account for a user, the "Callsign" you fill in is what will be used for the OPERATOR field.


username_0: I see. But how is station callsign set then?!

username_0: I see. That means we need an account for everyone who wants to log for our club callsign. So let's turn this into a feature request (FR): make the COL_OPERATOR field visible :)

username_0: Not a problem. I would find a separate account for each operator more convenient.

But I just discovered that OPERATOR is also not included when exporting. Could you please consider this too?

username_0: Mni tnx.

username_0: Cool. Will test later on as I am horizontally polarized :-)

username_0: Am I missing something on the QSO details popup window?

Should include the operator as per 47d9efe218986142bd8af1c4d5d78b0db8f19eac right?

username_0: Well, forget what I wrote. I didn't realize there are vertical scrollbars ... *facepalm*

username_1: This is now always taken from the user account and won't be shown in the panel except when editing a QSO to correct an error.

Status: Issue closed

|

openMF/web-self-service-app | 298121094 | Title: Create a template for Issues and Pull Requests for the repository

Question:

username_0: Adding a template for issues and pull requests will help the contributors share all the required important information which will be helpful to the community as a whole.

Answers:

username_0: @username_3 Can I work on this?

username_1: @username_3 @santoshconflux Can I work on this?

username_2: @username_1 , it is done already #110

Status: Issue closed

|

napalm-automation/napalm-ansible | 230539043 | Title: route_to on junos - missing parameters

Question:

username_0: I'm trying to use the 'route_to' filter on get_facts and I'm failing:

```
tasks:
  - name: get facts from device
    napalm_get_facts:
      provider: "{{ junos_provider }}"
      filter: 'route_to'
    register: result
```

```

$ ansible-playbook -i ./inventory/ ./getdata.yaml

PLAY [fetch info] ****************************************************************************************************************************************************************************

TASK [get facts from device] *****************************************************************************************************************************************************************

fatal: [10.69.0.208]: FAILED! => {"changed": false, "failed": true, "msg": "[route_to] cannot retrieve device data: Unknown protocol: destination"}

to retry, use: --limit @/home/ubuntu/2d-automation/ansible/getdata.retry

PLAY RECAP ***********************************************************************************************************************************************************************************

10.69.0.208 : ok=0 changed=0 unreachable=0 failed=1

```

Looking at the code in [napalm-junos](https://github.com/napalm-automation/napalm-junos/blob/develop/napalm_junos/junos.py#L1104) the function expects two parameters, but I don't think there is a way to pass them.

Answers:

username_1: Hi, I have implemented an `args` argument you can use to pass parameters to the filter. Would you mind trying it? I already did and it seemed to work, but it would be nice if you could double-check it as well:

https://github.com/napalm-automation/napalm-ansible/pull/57/files#diff-bf4dee70d5c4bdf0ee8d92a29ed373c0R118

```
(.venv) ➜ ansible git:(master) ✗ ansible-playbook --limit rtr00 playbook_hello_world.yaml
.

# Print gathered facts ****************************************************************************************************
* rtr00 - changed=False ----------------------------------------------------
{
    "facts": {
        "fqdn": "localhost",
        "hostname": "localhost",
        "interface_list": [
            "Ethernet1",
            "Ethernet2",
            "Management1"
        ],
        "model": "vEOS",
        "os_version": "4.17.5M-4414219.4175M",
        "serial_number": "",
        "uptime": 1083,
        "vendor": "Arista"
    },
    "interfaces": {
        "Ethernet1": {
            "description": "",
            "is_enabled": true,
            "is_up": true,
            "last_flapped": 1495885906.2295532,
            "mac_address": "08:00:27:26:EF:68",
            "speed": 0
        },
        "Ethernet2": {
            "description": "",
            "is_enabled": true,
            "is_up": true,
            "last_flapped": 1495885906.2296872,
            "mac_address": "08:00:27:83:BE:A5",
            "speed": 0
        },
        "Management1": {
            "description": "",
            "is_enabled": true,
            "is_up": true,
            "last_flapped": 1495885922.2773018,
            "mac_address": "08:00:27:47:87:83",
            "speed": 1000
        }
    },
    "route_to": {
        "8.8.8.8/32": [
            {
                "age": 0,
                "current_active": true,
                "inactive_reason": "",
                "last_active": true,
                "next_hop": "10.0.2.1",
                "outgoing_interface": "Management1",
                "preference": 1,
                "protocol": "static",
                "protocol_attributes": {},
                "routing_table": "default",
                "selected_next_hop": true
            }
        ]
    }
}

# STATS *******************************************************************************************************************
rtr00 : ok=2 changed=0 failed=0 unreachable=0
```

username_0: I can confirm that it works for me too.

I also realised that I can't use it: if I look for a specific prefix (like 8.8.8.0/24), I get this:

```

fatal: [10.69.0.208]: FAILED! => {"changed": false, "failed": true, "msg": "[route_to] cannot retrieve device data: failed to detect a valid IP address from u'10.69.0.177:500:172.16.58.3'"}

to retry, use: --limit @/home/ubuntu/2d-automation/ansible/getdata.retry

```

So it looks like it expects only an IPv4 prefix, and when it gets a VPNv4 one, it fails to parse it.

username_1: Interesting. How does that work with EOS? Which command would you run to actually see that route?

username_0: I don't have quick access to check right now, but I suspect this is Junos-specific. It's the same on the CLI: 'show route _destination_' shows all tables, including VPNv4. On the other platforms I have worked with, you always have to specify that you want 'vrf/vpnv4' routes.

On Junos the workaround is to specify the 'table', but since napalm-junos currently doesn't accept 'table' as a parameter, I think the only option is to use junos_get_table from Juniper (and craft the required table definition manually).

username_1: Oh, it's Junos! Sorry, I got confused and thought it was EOS. I suggest you open an issue on `napalm-base` for further discussion, as I think it might be useful to discuss adding a "table" parameter. In the meantime, I will just merge this.

Status: Issue closed

username_1: Solving this via #57 |

globaldothealth/list | 1076085612 | Title: Add Omicron Travel History Dataset to G.h

Question:

username_0: @Mougk and collaborators have compiled a dataset of travel history associated with verified Omicron cases, which is updated regularly: https://docs.google.com/spreadsheets/d/1LHfVWlUfIFp-4NiAoyI4xIrq3v56juuK2-KrNquwtmo/edit#gid=0

While not a line list per se, these data are valuable, unique, and time-sensitive to make available to the global research and epidemiological community. We should find a simple and fast way to host these data via G.h in a downloadable format similar to our core LL db. A simple option could be to write a script to ingest/geocode the data and host it on our GitHub as a .CSV with some accompanying documentation on methodology, sources, data dictionary, authors etc. We can then create a button and/or top-level nav on our homepage linking to this GitHub page and/or a WordPress page similar to our Data Deep Dives. Suggestions/ideas on the best MVP approach are welcome!

Answers:

username_1: I think it's best to put data files in another repo, I'll create covid19-omicron and put up a WIP script there.

username_1: WIP at https://github.com/globaldothealth/covid19-omicron, there are some issues with geocoding, looking into that.

username_0: Thanks @username_1 ! Good idea and great progress

username_0: @username_1 copying Slack notes from @Mougk:

"Could you add more details on the LTLA dataset from UK gov and a direct link to the data description? And please add a data dictionary to the travel dataset and the LTLA dataset (similar to the Denmark README)."

username_1: @username_0 done!

username_0: @username_1 I think we can close this issue now and add updates as needed?

Status: Issue closed

|

sisoputnfrba/foro | 280562540 | Title: [MASTER] Problem running a thread's function

Question:

username_0: Hi!!

I'm having a small mess in master. It doesn't look very hard, but I've been at it for a couple of hours and can't solve it:

In the local reduction stage, master creates a single thread to connect to a node (the node on which the reduction will run). The problem appears when the thread function starts executing: it basically blows up because everything the thread receives is null, BUUUUUT in the function _conectarse_a_worker_reduc_local()_, when the deserialized data is written to the log (right before creating the thread and passing it the deserialized structure), everything is fine!!!!

So I don't understand how everything goes from normal to null once the thread starts executing.

Maybe it's something silly I'm not seeing.... any ideas?

```c
int conectarse_a_worker_reduc_local(){
    t_conexion_rlocal info_nodo = deserializar_info_nodo_rlocal_mock(); //deserializar_info_nodo_rlocal();

    log_trace(log_master, "MAIN MASTER: Recibi esto: {\ncant_archivos: %d \n id_nodo: %d\n ip: %s\n puerto: %d\n ruta_archivo_rlocal: %s\n tamanio_ruta_archivo_rlocal: %d\n primera_ruta: %s\n segunda_ruta: %s\n tercera_ruta: %s\n}",
        info_nodo.cant_archivos_transformados,
        info_nodo.id_nodo,
        info_nodo.ip,
        info_nodo.puerto,
        info_nodo.ruta_archivo_rlocal,
        info_nodo.tamanio_ruta_archivo_rlocal,
        list_get(info_nodo.rutas_archivos_transformados, 0),
        list_get(info_nodo.rutas_archivos_transformados, 1),
        list_get(info_nodo.rutas_archivos_transformados, 2)
    );

    log_trace(log_master, "master crea un hilo para conectarse a un worker en la ip %s y puerto %d en la etapa de reduccion local", info_nodo.ip, info_nodo.puerto);

    pthread_t hilo;
    pthread_attr_t attr;
    int detachstate;
    pthread_attr_init(&attr);
    pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_DETACHED);

    if(pthread_create(&hilo, &attr, ordenar_rlocal_a_worker, (void*) &info_nodo) != 0){
        log_error(log_master, "no se pudo iniciar un hilo para conectarse a un worker en la ip %s y puerto %d en la etapa de reduccion local", info_nodo.ip, info_nodo.puerto);
        destroyer_info_nodo_rlocal((void*)&info_nodo);
        t_info_tarea info_tarea_fallida = {.id_job = nro_job, .id_nodo = info_nodo.id_nodo, .bloque_archivo = -1};
        notificar_resultado_etapa(ERROR_EN_REDUCCION_LOCAL, info_tarea_fallida);
        return -1;
    }

    pthread_attr_destroy(&attr);
    return 0;
}
```

In the function _deserializar_info_nodo_rlocal_mock()_ I have the following:

```c

t_conexion_rlocal deserializar_info_nodo_rlocal_mock() {

t_conexion_rlocal nodo;

nodo.id_nodo = 1;

nodo.ip = string_new();

string_append(&nodo.ip, "127.0.0.1");

[Truncated]

==6962== HEAP SUMMARY:

==6962== in use at exit: 974 bytes in 27 blocks

==6962== total heap usage: 163 allocs, 136 frees, 8,035 bytes allocated

==6962==

==6962== LEAK SUMMARY:

==6962== definitely lost: 40 bytes in 3 blocks

==6962== indirectly lost: 63 bytes in 6 blocks

==6962== possibly lost: 136 bytes in 1 blocks

==6962== still reachable: 735 bytes in 17 blocks

==6962== suppressed: 0 bytes in 0 blocks

==6962== Rerun with --leak-check=full to see details of leaked memory

==6962==

==6962== For counts of detected and suppressed errors, rerun with: -v

==6962== ERROR SUMMARY: 5 errors from 5 contexts (suppressed: 0 from 0)

Terminado (killed)

```

(By the way, I also don't understand why it shows *[INFO] 14:14:23:544 Master/(6962:6963): La primera ruta es (null)* twice, but oh well...)

Help!!!!!!!!!!!

Answers:

username_1: Hi!

Note that you have `Invalid read of size` errors at these lines:

- `ordenar_rlocal_a_worker (funciones_master.c:629)`

- `ordenar_rlocal_a_worker (funciones_master.c:633)`

- `ordenar_rlocal_a_worker (funciones_master.c:648)`

Which lines are those?

username_0: It looks like the invalid reads are because of that, right?

Also, I'm mentioning this because it might help you find the problem or understand what's going on:

I tried adding a sleep (_yes, just for testing, I know it has to be removed_ :satisfied:) in the function _conectarse_a_worker_reduc_local()_ here:

```c
...
if(pthread_create(&hilo, &attr, ordenar_rlocal_a_worker, (void*) &info_nodo) != 0){
    log_error(log_master, "no se pudo iniciar un hilo para conectarse a un worker en la ip %s y puerto %d en la etapa de reduccion local", info_nodo.ip, info_nodo.puerto);
    destroyer_info_nodo_rlocal((void*)&info_nodo);
    t_info_tarea info_tarea_fallida = {.id_job = nro_job, .id_nodo = info_nodo.id_nodo, .bloque_archivo = -1};
    notificar_resultado_etapa(ERROR_EN_REDUCCION_LOCAL, info_tarea_fallida);
    return -1;
}

sleep(1000000);

pthread_attr_destroy(&attr);
return 0;
...
```

And everything works just fine, WTF :scream:.

The only thing I can think of is that maybe all the pointers in _info_nodo_ are on the stack of the function _conectarse_a_worker_reduc_local()_ and those are what get passed to the thread, so when _conectarse_a_worker_reduc_local()_ returns and those references are released, what the thread holds is all empty... could that be it, or am I way off?

username_1: I have a slight feeling the problem is here:

`t_conexion_rlocal info_nodo = deserializar_info_nodo_rlocal_mock();`

Threads share memory, especially the memory you pass them as an argument. But what happens if that memory isn't dynamically allocated? First, it is stored on the stack. Second, if that data gets overwritten by something else in the main thread, it is overwritten for the other thread too. I think what you have here is _the classic race condition_ 😛

username_0: Ah, OK! That's more or less where I got stuck... How can I make each thread's info_nodo independent, so that if the main thread finishes it doesn't mess up the others? Is there a way to pass the thread an independent copy? Could one solution be that, instead of the main thread calling _deserializar_info_nodo_rlocal_mock()_, each thread does it when it starts running? (Then I'd have to add a semaphore to synchronize reading from the socket.)

username_2: There's a function called Destroyer_info_nodo_rlocal or something like that which you run after creating the thread. I think your problem is that you're passing the address of infoNodo to the thread and right after that you destroy it. That's why, when your thread tries to read it, you get a null pointer exception. Check whether the problem comes from there, and if anything comes up, come back.

username_0: While debugging, it looked to me like it never got there, since it only enters that if when _pthread_create(..)_ returns != 0.

username_1: Check what chris said; although I don't think the mess comes from there in this case, it's another potential problem.

@username_0 if only we had an OS feature to reserve memory outside the stack, which could be shared between threads, with only the "reference" to that memory kept on the stack, something like `memory allocation` 😛

Jokes aside, `malloc` is your hero. Take advantage of pointers to pass memory to the thread 😉

username_0: Sure, the way I asked made it look like I didn't use malloc anywhere in the assignment haha. I asked it that way because in the function _deserializar_info_nodo_rlocal_mock()_ I'm allocating memory like crazy and it still doesn't work, so I thought the problem didn't come from there.

Anyway, thinking a bit about what you told me, I just tried the following in _conectarse_a_worker_reduc_local()_ to make sure I'm passing a new memory location to the thread (which I thought I was already doing because of all the mallocs in _deserializar_info_nodo_rlocal_mock()_):

```c
...
t_conexion_rlocal* nuevo_info_nodo = (t_conexion_rlocal*)malloc(tamanio_info_nodo(info_nodo));
memcpy(nuevo_info_nodo, &info_nodo, tamanio_info_nodo(info_nodo));

if(pthread_create(&hilo, &attr, ordenar_rlocal_a_worker, (void*) nuevo_info_nodo) != 0){
...
```

```c
int tamanio_info_nodo(t_conexion_rlocal info_nodo) {
    int tamanio = 0;
    tamanio += sizeof(info_nodo.id_nodo);
    tamanio += sizeof(info_nodo.cant_archivos_transformados);
    tamanio += sizeof(info_nodo.puerto);
    tamanio += sizeof(info_nodo.tamanio_ruta_archivo_rlocal);
    tamanio += strlen(info_nodo.ip);
    tamanio += strlen(info_nodo.ruta_archivo_rlocal);

    int i;
    for(i = 0; i < info_nodo.cant_archivos_transformados; i++) {
        tamanio += strlen((char*) list_get(info_nodo.rutas_archivos_transformados, i));
    }

    return tamanio;
}
```

And now it's getting there: when it reaches the thread, at least it says the first path in the list is not null... after that it hangs and I still don't know why... but I think it's on the right track. I'll let you know!

Status: Issue closed

|

Sandia-OpenSHMEM/SOS | 665445112 | Title: find-debuginfo reports no build ID note found error during rpmbuild

Question:

username_0: rpmbuild fails with the following error (not reproducible in Travis)

```

extracting debug info from /home/rahmanmd/rpmbuild/BUILDROOT/sandia-openshmem-1.4.5-1.el7.x86_64/usr/shmem/lib64/libsma.so.0.0.0

*** ERROR: No build ID note found in /home/rahmanmd/rpmbuild/BUILDROOT/sandia-openshmem-1.4.5-1.el7.x86_64/usr/shmem/lib64/libsma.so.0.0.0

error: Bad exit status from /var/tmp/rpm-tmp.CwP5x4 (%install)

```

A possible workaround is to stop building the debuginfo package by adding the following in the .spec file:

```

%define debug_package %{nil}

```

This work-around is validated to work without the above error.

Answers:

username_0: @username_1 Do you have any positive or negative opinion on the workaround? It seems to be ok to build without debuginfo for the RPM, but not sure what would be the implications, if any?

username_1: The spec file is there to support OPA fabric software folks. You could check with them if they are ok with this solution.

username_0: Resolved by #962

Status: Issue closed

|

icshwi/e3 | 287833461 | Title: Add option to set output path with prepare_module

Question:

username_0: I think prepare_module should be improved by providing an option (e.g. -d) to set the module's destination path. Currently everything is extracted under the e3/tool directory.

Status: Issue closed

Answers:

username_1: Not in prepare_module.bash, because we are dropping it. However, it was implemented in cbb1baa in the new template generator. |

NG-ZORRO/ng-zorro-antd | 313962236 | Title: nz-tree can not used in reactiveform

Question:

username_0: ## Version

0.7.0-beta.4

## Environment

windows 10 x64, chrome 65.0.3325.181, ng-zorro 0.7.0-beta.4

## Reproduction link

[https://stackblitz.com/edit/ng-zorro-antd-setup-evun5b](https://stackblitz.com/edit/ng-zorro-antd-setup-evun5b)

## Steps to reproduce

```
<form nz-form [formGroup]="form">
  <nz-form-item>
    <nz-tree [(ngModel)]="menus">
    </nz-tree>
  </nz-form-item>
</form>
```

Just put an nz-tree component in a reactive form and bind data with ngModel, and you get this error:

```
ngModel cannot be used to register form controls with a parent formGroup directive. Try using formGroup's partner directive "formControlName" instead.
```

But if I use formControlName instead of ngModel, it doesn't display anything!

## What is expected?

Being able to bind data to nz-tree with formControlName, or some other way of binding data to nz-tree that works inside a reactive form.

## What is actually happening?

nz-tree currently cannot be used in a reactive form.

## Other?

<!-- generated by ng-zorro-issue-helper. DO NOT REMOVE -->

Answers:

username_1: @username_0 This is not an nz-tree error: `If ngModel is used within a form tag, either the name attribute must be set or the form control must be defined as 'standalone' in ngModelOptions. `. You should use `[ngModelOptions]="{standalone: true}"`.

Status: Issue closed

|

lbryio/lbry-desktop | 485887888 | Title: Clicking enter in tag input (without typing) adds blank tag

Question:

username_0: Thanks to MH for spotting this one.

If you are on the customize/publish page that has the inputs for tag search and don't type anything, pressing Enter adds a blank tag.

Answers:

username_1: A blank tag is also added if you type only spaces (I doubt people would want to follow space-only tags), and a tag with leading spaces escapes the duplicate check and is added to the tags as well.

Maybe the input should be trimmed and checked for zero length before being added.
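The trim-then-check suggestion can be sketched as follows. This is a minimal Python illustration under the assumption that tags live in a simple list of strings; `add_tag` is a hypothetical helper, not the app's actual code.

```python
def add_tag(tags, raw):
    """Add a user-entered tag to `tags` unless it is blank or a duplicate.

    Returns True if the tag was added, False if it was ignored.
    """
    name = raw.strip()   # drop leading/trailing whitespace first
    if not name:         # Enter on an empty or whitespace-only input
        return False
    if name in tags:     # duplicate check runs on the trimmed value
        return False
    tags.append(name)
    return True


tags = []
for typed in ["", "   ", "music", "  music"]:
    add_tag(tags, typed)
print(tags)  # ['music']
```

Trimming before the duplicate check is what closes the leading-spaces loophole described above.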

Status: Issue closed

|

ulaval/modul | 500961198 | Title: proposal: Upgrade Portal and how it works

Question:

username_0: <!--

Content can be written in English or in French

-->

**Description of the need**

Portal is at version 1.1.1; it should be migrated to the latest version (currently 2.1.6).

The ecosystem around the Portal mixin and plugin also needs a refactor.

**Summary of the proposed solution**

* A new modul-portal component that would include `<portal>` and `<transition>`.
* A new set of events emitted by this component, following Transition's JavaScript hooks: https://fr.vuejs.org/v2/guide/transitions.html#hooks-JavaScript
* Removal of setTimeout and TransitionDuration.
* Removal of the Portal mixin.
* Adjustment of the components that use the Portal mixin (MDialog, MModal, MOverlay, MPopper, MSidebar and MToast).

Answers:

username_1: Vue 3 is coming with its own built-in Portal. We should also evaluate that option before doing a Portal refactor in Vue 2. https://vueschool.io/articles/vuejs-tutorials/portal-a-new-feature-in-vue-3/

Status: Issue closed

|

lfkeitel/aptmirror-go | 290244777 | Title: Clean up old files

Question:

username_0: If the upstream removes an old version or otherwise changes files, the downloaded copies need to be cleaned up. A simple way would be to have a map of filenames to bool. If a file is in the map (i.e. it's in the Release file or is a piece of metadata), it should be kept; anything else is removed. A simple directory walker can be used to remove files and empty directories. |

jellekitz/Json | 161128203 | Title: Add autofocus

Question:

username_0: You have received feedback from **username_0**

on:

```html

<input type="search" placeholder="Zoek foto's..." id="zoekterm">

```

URL: https://github.com/jellekitz/Json/blob/master/index.html

Feedback: Autofocus gives a much better UXD (user experience design). |

pulumi/docs | 452160205 | Title: Anchors are dropped from redirects

Question:

username_0: With the move to Hugo, the Node.js API docs have moved from `reference/pkg/nodejs/@pulumi/` to `reference/pkg/nodejs/pulumi/` as Hugo strips the `@` when generating the site.

We have Hugo aliases in place that redirect from the old locations to the new locations. However, these are based on [meta refresh](https://www.w3.org/TR/WCAG20-TECHS/H76.html), which does not pass along any anchors that may be specified in the source URL. This is problematic as many of our API docs rely on anchors when linking to specific identifiers.

The fix is to use real 301 redirects instead of these client-side redirects, as browsers will pass along the anchor when 301 redirects are encountered.
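The reason a 301 preserves the anchor is that the fragment (`#...`) is never sent to the server. Per RFC 7231, section 7.1.2, when a `Location` header has no fragment, the browser re-attaches the fragment from the original request URL. The Python sketch below models both halves. `redirect_location` plays the server, which only ever sees the path. `follow_redirect` plays the browser. The `www.example.com/docs/...` URL and the helper names are hypothetical. Only the `@pulumi` to `pulumi` path change comes from the issue.

```python
from urllib.parse import urljoin, urlsplit

OLD = "/reference/pkg/nodejs/@pulumi/"
NEW = "/reference/pkg/nodejs/pulumi/"


def redirect_location(path):
    """Server side: map an old path to its 301 Location, or None.

    The fragment is not part of the HTTP request, so it cannot appear here.
    """
    i = path.find(OLD)
    if i == -1:
        return None
    return path[:i] + NEW + path[i + len(OLD):]


def follow_redirect(request_url, location):
    """Browser side: resolve Location and inherit the request's fragment
    when Location has none (RFC 7231, section 7.1.2)."""
    target = urljoin(request_url, location)
    fragment = urlsplit(request_url).fragment
    if fragment and not urlsplit(target).fragment:
        target += "#" + fragment
    return target


url = "https://www.example.com/docs/reference/pkg/nodejs/@pulumi/aws/s3/#Bucket"
location = redirect_location(urlsplit(url).path)
print(follow_redirect(url, location))
# https://www.example.com/docs/reference/pkg/nodejs/pulumi/aws/s3/#Bucket
```

A meta-refresh page, by contrast, is a new document that navigates to a hard-coded URL, so nothing re-attaches the fragment.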

Status: Issue closed |

nxtbgthng/OAuth2Client | 71554459 | Title: Multiple user : Same account type

Question:

username_0: How do I configure multiple users for the same account type? For example, a single user may use multiple Dropbox accounts.

Answers:

username_1: I ran into the same issue. Unfortunately, this library lacks both a manual refresh and support for several account types on the same host (i.e., you must know the specific info PRIOR to setting the client config). My solution is to write my own OAuth2 client. :/

username_2: There is no real API for this, but it still can be done.

Since account types are just strings, you can make up arbitrary ones. When I faced the same problem, I used `"github-username_2"` as the account type. |

rbarrois/python-semanticversion | 675592073 | Title: Docs say that named components patch must be both an integer and a tuple of strings

Question:

username_0: This text is present in both `README.rst` and `docs/index.rst`:

```rst

In that case, ``major``, ``minor`` and ``patch`` are mandatory, and must be integers.

``prerelease`` and ``patch``, if provided, must be tuples of strings:

```

I think the second `patch` reference must be to some other argument instead...

Answers:

username_1: Good catch! The second should indeed be ``build``. Fixing it now :)
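With the fix, the rule reads as follows. `major`, `minor` and `patch` are mandatory integers. `prerelease` and `build`, if provided, are tuples of strings. The Python sketch below encodes only that corrected rule, so the two kinds of component are easy to tell apart. `check_components` is a hypothetical helper. It does not call the `semantic_version` library.

```python
def check_components(major, minor, patch, prerelease=None, build=None):
    """Validate named components per the corrected docs and render them.

    major/minor/patch: mandatory integers.
    prerelease/build: optional tuples of strings.
    """
    for name, value in (("major", major), ("minor", minor), ("patch", patch)):
        # bool is a subclass of int in Python, so exclude it explicitly
        if not isinstance(value, int) or isinstance(value, bool):
            raise TypeError(f"{name} must be an integer")
    for name, value in (("prerelease", prerelease), ("build", build)):
        if value is None:
            continue
        if not isinstance(value, tuple) or not all(isinstance(p, str) for p in value):
            raise TypeError(f"{name} must be a tuple of strings")
    text = f"{major}.{minor}.{patch}"
    if prerelease:
        text += "-" + ".".join(prerelease)
    if build:
        text += "+" + ".".join(build)
    return text


print(check_components(0, 1, 2, prerelease=("alpha", "2"), build=("23", "5")))
# 0.1.2-alpha.2+23.5
```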

Status: Issue closed

username_0: Wow, that was quick. Thanks @username_1! |

open-sdg/sdg-translations | 457535204 | Title: Use dashes instead of dots, for global_targets and global_indicators

Question:

username_0: This would unfortunately be a large breaking change, but it may be necessary. The "keys" for our global_targets and global_indicators translations contain dots, e.g., "1.2.1". This is problematic for any platform that tries to "drill down" using dot-delimited syntax. For example, "global_indicators.1.2.1.title" would not work. If we switch to dashes instead of dots, as in "global_indicators.1-2-1.title", it would work.
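The failure mode is easy to reproduce. A dot-path resolver splits on every dot, so "1.2.1" is read as three separate keys. The Python sketch below uses nested dicts as a stand-in for the translation files. `drill_down` and `dashed_keys` are hypothetical helpers, not part of sdg-translations.

```python
def drill_down(data: dict, path: str):
    """Resolve a dot-delimited path such as 'a.b.c' one key at a time."""
    node = data
    for key in path.split("."):
        node = node[key]  # raises KeyError if a segment is missing
    return node


def dashed_keys(section: dict) -> dict:
    """Rename keys like '1.2.1' to '1-2-1' so they survive dot-splitting."""
    return {key.replace(".", "-"): value for key, value in section.items()}


translations = {"global_indicators": {"1.2.1": {"title": "Example title"}}}
try:
    drill_down(translations, "global_indicators.1.2.1.title")
except KeyError as missing:
    print("lookup failed at", missing)  # lookup failed at '1'

dashed = {"global_indicators": dashed_keys(translations["global_indicators"])}
print(drill_down(dashed, "global_indicators.1-2-1.title"))  # Example title
```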

Since we have not yet reached version 1, this is probably our best time to make this change.

Status: Issue closed |

sigp/lighthouse | 671723347 | Title: Ensure lcli generates correct pre-genesis ENR

Question:

username_0: ## Steps to resolve

Ensure that `generate_bootnode_enr.rs` creates the `EnrForkId` in a way that is faithful to the specification before genesis is known.
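For reference, here is a sketch of what "faithful to the specification" appears to mean, based on my reading of the phase0 networking spec. Please verify it against the consensus-specs before relying on it. Before genesis, `genesis_validators_root` is taken as 32 zero bytes. `fork_digest` is `compute_fork_digest(GENESIS_FORK_VERSION, that_zero_root)`, `next_fork_version` is `GENESIS_FORK_VERSION`, and `next_fork_epoch` is `FAR_FUTURE_EPOCH`. The sketch is in Python only because the spec itself is written that way. Lighthouse's Rust types and functions are not modeled, and `pre_genesis_enr_fork_id` is a hypothetical name.

```python
import hashlib
from dataclasses import dataclass

FAR_FUTURE_EPOCH = 2**64 - 1  # spec constant
ZERO_ROOT = b"\x00" * 32      # stands in for the not-yet-known genesis_validators_root


def compute_fork_digest(fork_version: bytes, genesis_validators_root: bytes) -> bytes:
    """First 4 bytes of hash_tree_root(ForkData(fork_version, root)).

    ForkData has two fields, so its SSZ root is sha256 over two 32-byte
    leaves: the 4-byte version right-padded with zeros, then the root.
    """
    assert len(fork_version) == 4 and len(genesis_validators_root) == 32
    leaf0 = fork_version + b"\x00" * 28
    return hashlib.sha256(leaf0 + genesis_validators_root).digest()[:4]


@dataclass(frozen=True)
class EnrForkId:
    fork_digest: bytes
    next_fork_version: bytes
    next_fork_epoch: int


def pre_genesis_enr_fork_id(genesis_fork_version: bytes) -> EnrForkId:
    """ENR fork ID to advertise while the genesis state is still unknown."""
    return EnrForkId(
        fork_digest=compute_fork_digest(genesis_fork_version, ZERO_ROOT),
        next_fork_version=genesis_fork_version,  # no scheduled fork yet
        next_fork_epoch=FAR_FUTURE_EPOCH,
    )
```

Once genesis is known, the digest must be recomputed with the real `genesis_validators_root`, so a bootnode ENR generated this way is only valid for the pre-genesis period.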

Answers:

username_0: @username_1 perhaps you'd be interested in this one?

username_1: Yep, I'll take this one! |

MeasuringPolyphony/mp_editor | 774010433 | Title: After loading MEI Parts file not all staves load into input editor screen

Question:

username_0: However, when you click "Continue to score editor", the music is there. I tried this with several parts files, and the same thing happened with all of them. Which parts appear when you click on the stave bounding boxes seems somewhat random. I'm attaching one example MEI parts file where this occurred. I'm also unsure whether this is related to the general issue with the broken MP editor that we are having at the moment.

[je_sui_aussi_MENSURAL_parts copy.txt](https://github.com/MeasuringPolyphony/mp_editor/files/5736877/je_sui_aussi_MENSURAL_parts.copy.txt)

https://github.com/MeasuringPolyphony/mp_editor/issues/78

Status: Issue closed |

firebase/quickstart-unity | 446343014 | Title: JNI DETECTED ERROR IN APPLICATION: can't call java.lang.Object com.google.firebase.database.DataSnapshot.getValue()

Question:

username_0: ## Please fill in the following fields:

Unity Version: 2017.4.27

Firebase Unity SDK version: 6.0.0

Firebase plugins in use (Auth, Database, etc.): Auth, Database, Analytics, RemoteConfig, Messaging

Additional SDKs you are using (Facebook, AdMob, etc.): AdMob, Unity IAP

Platform you are using the Unity editor on (Mac, Windows, or Linux): Windows, Mac

Platform you are targeting (iOS, Android, and/or desktop): iOS, Android

## Please describe the issue here:

(Please list the full steps to reproduce the issue. Include device logs, Unity logs, and stack traces if available.)

Building with the new 6.0.0 version (it was previously working under 4.5.2).

The app automatically signs into the account and starts making database requests (maybe TMI: IAP is also doing its purchase initialization in the background). The app hangs most of the time. Sometimes, after restarting the app, things work (no hang). Curiously, the app usually does not hang while ADB is connected: you have to disconnect it and then start the app. Even more curiously, it hangs 100% of the time when starting from a fresh install (with the ADB cable unplugged), which is the case for someone installing from the store for the first time.

## Please answer the following, if applicable:

Have you been able to reproduce this issue with just the Firebase Unity quickstarts (this GitHub project)?

N/A. I've been using Firebase for about 2 years now.

CRASH LOG

(Filename: /Users/builduser/buildslave/unity/build/artifacts/generated/Android/runtime/DebugBindings.gen.cpp Line: 51)

05-20 15:03:05.401 10865-10921/? A/aimadiction.co: java_vm_ext.cc:542] JNI DETECTED ERROR IN APPLICATION: can't call java.lang.Object com.google.firebase.database.DataSnapshot.getValue() on null object

java_vm_ext.cc:542] in call to CallObjectMethodV

java_vm_ext.cc:542] from boolean com.unity3d.player.UnityPlayer.nativeRender()

java_vm_ext.cc:542] "UnityMain" prio=5 tid=26 Runnable

java_vm_ext.cc:542] | group="main" sCount=0 dsCount=0 flags=0 obj=0x12c81758 self=0x72b4c37800

java_vm_ext.cc:542] | sysTid=10921 nice=0 cgrp=default sched=0/0 handle=0x72a32df4f0

java_vm_ext.cc:542] | state=R schedstat=( 4103897740 298315453 10985 ) utm=355 stm=54 core=5 HZ=100

java_vm_ext.cc:542] | stack=0x72a31dc000-0x72a31de000 stackSize=1041KB

java_vm_ext.cc:542] | held mutexes= "mutator lock"(shared held)

java_vm_ext.cc:542] native: #00 pc 00000000003c7324 /system/lib64/libart.so (art::DumpNativeStack(std::__1::basic_ostream<char, std::__1::char_traits<char>>&, int, BacktraceMap*, char const*, art::ArtMethod*, void*, bool)+220)

java_vm_ext.cc:542] native: #01 pc 0000000000495dc0 /system/lib64/libart.so (art::Thread::DumpStack(std::__1::basic_ostream<char, std::__1::char_traits<char>>&, bool, BacktraceMap*, bool) const+352)

java_vm_ext.cc:542] native: #02 pc 00000000002e85ac /system/lib64/libart.so (art::JavaVMExt::JniAbort(char const*, char const*)+972)

java_vm_ext.cc:542] native: #03 pc 00000000002e89cc /system/lib64/libart.so (art::JavaVMExt::JniAbortV(char const*, char const*, std::__va_list)+108)

java_vm_ext.cc:542] native: #04 pc 00000000000fd5f8 /system/lib64/libart.so (art::(anonymous namespace)::ScopedCheck::AbortF(char const*, ...)+144)

java_vm_ext.cc:542] native: #05 pc 0000000000101458 /system/lib64/libart.so (art::(anonymous namespace)::ScopedCheck::CheckMethodAndSig(art::ScopedObjectAccess&, _jobject*, _jclass*, _jmethodID*, art::Primitive::Type, art::InvokeType)+1584)

java_vm_ext.cc:542] native: #06 pc 00000000000ffcb4 /system/lib64/libart.so (art::(anonymous namespace)::CheckJNI::CallMethodV(char const*, _JNIEnv*, _jobject*, _jclass*, _jmethodID*, std::__va_list, art::Primitive::Type, art::InvokeType)+756)

java_vm_ext.cc:542] native: #07 pc 00000000000ec7d4 /system/lib64/libart.so (art::(anonymous namespace)::CheckJNI::CallObjectMethodV(_JNIEnv*, _jobject*, _jmethodID*, std::__va_list)+84)

java_vm_ext.cc:542] native: #08 pc 000000000010f218 /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libFirebaseCppApp-6.0.0.so (_JNIEnv::CallObjectMethod(_jobject*, _jmethodID*, ...)+92)

java_vm_ext.cc:542] native: #09 pc 000000000014172c /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libFirebaseCppApp-6.0.0.so (firebase::database::internal::DataSnapshotInternal::GetValue() const+32)

java_vm_ext.cc:542] native: #10 pc 000000000013daa4 /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libFirebaseCppApp-6.0.0.so (Firebase_Database_CSharp_InternalDataSnapshot_value+16)

java_vm_ext.cc:542] native: #11 pc 0000000001609abc /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libil2cpp.so (???)

java_vm_ext.cc:542] native: #12 pc 00000000016076e8 /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libil2cpp.so (???)

java_vm_ext.cc:542] native: #13 pc 0000000001607080 /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libil2cpp.so (???)

java_vm_ext.cc:542] native: #14 pc 0000000000863488 /data/app/bigtale.claimadiction.com-vkJFoDZIc4Oc4lbnRvUatA==/lib/arm64/libil2cpp.so (???)