
introlab/rtabmap | 708779965 | Title: Add color to costmap/occupancy grid

Question:

username_0: Hi, I was reading #526 and saw that "the resulting points in obstacle and ground can have colors". Where should I edit the code to get something like this? I want to differentiate between obstacles, so I may want a different color for each obstacle cell in the map, and this should be corrected every time it is necessary. The fastest way would be to add this color encoding directly to every obstacle cell (without creating another costmap) and to store this information in the database. This would mean creating a new list for each signature (alongside empty, obstacle and ground), as long as the obstacle list, where each element holds the corresponding color value (so that the system is able to recreate it).

Answers:

username_1: For example, with this [demo](http://wiki.ros.org/rtabmap_ros/#Robot_mapping_with_RVIZ), if you modify the launch file as follows:

- Set Grid/FromDepth to true [here](https://github.com/introlab/rtabmap_ros/blob/58dd3134be6f2ccc81f998df4e258354f0071e44/launch/demo/demo_robot_mapping.launch#L53)

- Add:

```xml

<param name="Grid/3D" type="string" value="true"/>

<param name="Grid/RayTracing" type="string" value="false"/>

<param name="Grid/MaxObstacleHeight" type="string" value="2"/>

```

Launch the demo and add /rtabmap/cloud_obstacles to rviz:

```bash

roslaunch rtabmap_ros demo_robot_mapping.launch rviz:=true rtabmapviz:=false

rosbag play --clock demo_mapping.bag

```

The obstacles have the color of the RGB image. When visualizing individual local grids in DatabaseViewer:

The colors are added implicitly here when creating the cloud to segment from RGB-D or Stereo data contained in the node: https://github.com/introlab/rtabmap/blob/e8e3649f249382a790968ecbfa1dd9f32606d7d4/corelib/src/OccupancyGrid.cpp#L368-L369

As pointed out in the referred [post](https://github.com/introlab/rtabmap/issues/526#issuecomment-611011740), the `obstacles` [here](https://github.com/introlab/rtabmap/blob/cdff33b1c2bd04ea6a203824b18de631ba06f72d/corelib/src/Memory.cpp#L5102) would contain an RGB channel if both `Grid/FromDepth` and `Grid/3D` are true. Before making any modifications: how are the obstacle semantics computed in your approach? Is it image-based or point-cloud-based segmentation? For image-based segmentation, one could feed the segmented image to rtabmap instead of the RGB image, but loop closure detection won't work anymore (which can be OK if you are using a lidar for localization, though). A deeper modification, using the multi-camera approach already in rtabmap, would be to feed the segmented image along with the RGB images (as if it were a two-camera setup), but we would need to add parameters to rtabmap telling it to do loop closure detection with the first camera and grid segmentation with the second camera.

cheers,

Mathieu

username_0: Actually, I'd like to color/create a 2D map. Each cell would be colored based either on the kind of obstacle it represents or on whether there is a feature above it.

For example, if I have a feature at world coordinates (3,3,3), the 2D map at that point will be "colored" or marked. The same would happen if I decided to use segmentation (which I don't have right now), but either way I'm interested in creating a 2D map. Most probably, I'll use features. My idea was to end up with a map, like the costmap that holds probability values, where each cell has a value (between 0 and 100) representing the number of features in that column. If I'm correct, we already have the features in the base frame, so the work would just be to create another costmap layer, correct?

username_0: I thought about this over the last few days: the best approach for me would be a matrix where each cell can hold an "array" of values. Much like a 2D map, but instead of each cell having a single value like [this](https://github.com/introlab/rtabmap/blob/e8e3649f249382a790968ecbfa1dd9f32606d7d4/corelib/src/OccupancyGrid.cpp#L647), it would hold a list of values (ints would be fine). The rest of the structure would stay the same (centering, resolution, etc.).

This way, if I query (0.1, 0.1), for example, I get [1, 2, 5, 7] as the result rather than a single value.

I think that this would be possible if I can:

- add my custom structure in createLocalMap

- understand how to edit the update code so that the same cell seen from two viewpoints is merged correctly

- write my caller/publisher following my custom structure (L647 and following) [easiest part]
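The structure described above (a grid with the same resolution and origin conventions as the occupancy grid, holding several values per cell, where the same cell seen from two viewpoints is merged) can be sketched in plain Python. This is a hypothetical, self-contained illustration: `MultiValueGrid` and its methods are not part of rtabmap, and rtabmap's own probabilistic update in `createLocalMap` is not modelled. Labels are stored as sets, which is an assumption chosen so that merging is idempotent.

```python
import math
from collections import defaultdict


class MultiValueGrid:
    """Hypothetical grid layer: each cell holds a set of integer labels.

    Same conventions as an occupancy grid: `resolution` is the cell size in
    metres and (origin_x, origin_y) are the world coordinates of cell (0, 0).
    """

    def __init__(self, resolution, origin_x=0.0, origin_y=0.0):
        self.resolution = resolution
        self.origin_x = origin_x
        self.origin_y = origin_y
        # Sparse storage: only cells that received at least one label exist.
        self.cells = defaultdict(set)

    def world_to_cell(self, x, y):
        # floor() keeps negative coordinates in the correct cell.
        return (math.floor((x - self.origin_x) / self.resolution),
                math.floor((y - self.origin_y) / self.resolution))

    def add(self, x, y, label):
        self.cells[self.world_to_cell(x, y)].add(label)

    def merge(self, other):
        """Fold another grid (e.g. a local grid from a new viewpoint) into this one.

        Because cells are sets, a label seen in the same cell from two
        viewpoints is stored only once.
        """
        if (other.resolution, other.origin_x, other.origin_y) != (
                self.resolution, self.origin_x, self.origin_y):
            raise ValueError("grids must share resolution and origin")
        for cell, labels in other.cells.items():
            self.cells[cell] |= labels

    def query(self, x, y):
        """Return the sorted labels of the cell containing (x, y)."""
        return sorted(self.cells.get(self.world_to_cell(x, y), ()))


# Two viewpoints observing the cell that contains (0.1, 0.1) at 5 cm resolution.
first = MultiValueGrid(0.05)
first.add(0.10, 0.10, 1)
first.add(0.10, 0.10, 2)
second = MultiValueGrid(0.05)
second.add(0.11, 0.12, 2)  # same cell, label already known
second.add(0.11, 0.12, 5)
second.add(0.12, 0.11, 7)
first.merge(second)
print(first.query(0.1, 0.1))  # [1, 2, 5, 7]
```

If duplicates should count (one entry per observation rather than per label), swapping each cell's `set` for a `collections.Counter` would be the natural change.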

username_1: This is quite specific to your application. One way would be to subscribe to the data you want and reconstruct a map layer the way you want. For example, subscribe to /rtabmap/mapData to get the features, transform them into the map frame and project them onto the occupancy grid map. The problem is that when a loop closure happens, the whole occupancy grid has to be regenerated, so you would need to update your structure too.
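The approach suggested above (keep each node's features in its own frame and re-project them with the current poses) can be sketched as follows. The point is that the layer is a pure function of the local data and the poses, so after a loop closure it is simply rebuilt from the optimized poses. This is a hypothetical 2D simplification: poses are assumed to be `(x, y, yaw)` tuples and features `(x, y, z)` points, which is not the actual content of `/rtabmap/mapData`; the per-column count is clipped to 0-100 as in the layer proposed earlier in the thread.

```python
import math
from collections import Counter


def local_to_map(pose, point):
    """Transform a point from a node's local frame into the map frame.

    `pose` is an assumed 2D pose (x, y, yaw) of the node in the map frame and
    `point` is (x, y, z) in the node frame. Only x and y matter for a 2D layer.
    """
    px, py, yaw = pose
    lx, ly, _z = point
    c, s = math.cos(yaw), math.sin(yaw)
    return px + c * lx - s * ly, py + s * lx + c * ly


def build_feature_layer(local_features, poses, resolution, max_value=100):
    """Count features per map cell (i.e. per column), clipped to max_value.

    local_features: {node_id: [(x, y, z), ...]} in each node's own frame.
    poses: {node_id: (x, y, yaw)} from the latest (optimized) graph.
    """
    counts = Counter()
    for node_id, points in local_features.items():
        pose = poses.get(node_id)
        if pose is None:  # node no longer in the graph
            continue
        for point in points:
            mx, my = local_to_map(pose, point)
            counts[(math.floor(mx / resolution), math.floor(my / resolution))] += 1
    return {cell: min(n, max_value) for cell, n in counts.items()}


features = {1: [(1.5, 0.5, 0.3), (1.5, 0.5, 1.2)], 2: [(0.5, 0.25, 0.8)]}
before = {1: (0.0, 0.0, 0.0), 2: (2.0, 0.0, 0.0)}
# After a loop closure the optimizer rotates node 2 by 90 degrees.
after = {1: (0.0, 0.0, 0.0), 2: (2.0, 0.0, math.pi / 2)}
print(build_feature_layer(features, before, 1.0))  # {(1, 0): 2, (2, 0): 1}
print(build_feature_layer(features, after, 1.0))   # {(1, 0): 3}
```

A real implementation would use full 3D transforms, but the design choice is the same: never edit the projected layer in place, only regenerate it from per-node data.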

username_0: Yup, I know, but to avoid unnecessary overhead and information flow I'd like to integrate this within your system. Each time a new node is added you use [this](https://github.com/introlab/rtabmap/blob/cdff33b1c2bd04ea6a203824b18de631ba06f72d/corelib/src/Memory.cpp#L764) method, right? If it returns true, the node has been added correctly. So I could update my structure directly there, adding information as nodes are added to the graph. Then, when a loop closure is detected, I can edit that information directly. That means that [here](https://github.com/introlab/rtabmap/blob/bedc771fa4acde22c1497811a88de50c42a248e8/corelib/src/Rtabmap.cpp#L2962) I will have the new poses, and I can then edit my information (moving it around and possibly deleting it) by placing my code [here](https://github.com/introlab/rtabmap/blob/bedc771fa4acde22c1497811a88de50c42a248e8/corelib/src/Rtabmap.cpp#L3064) in a custom else branch (so it won't be updated if the loop closure is rejected). Any comments, suggestions or thoughts about this? Is this correct?

microsoft/PowerToys | 681022755 | Title: [Issue] Setting reset

Question:

username_0: After updating to a newer version of PowerToys, some settings got reset, like the background of the shortcut guide.

Answers:

username_1: Verified that opacity didn't carry over from a 0.19 to a 0.20 install. We (chances are, me) did touch some JSON code, and this could be one of the settings that didn't get the attribute flag set correctly. When upgrading from 0.20 to 0.20, the settings did save.

Sadly, this will be a won't-fix, as fixing it would then break any 0.20 install. We're also building in more unit tests to verify that this type of breaking change won't happen in the future.

Status: Issue closed


veerendra2/funmotd | 626421251 | Title: Change weights variable name

Question:

username_0: https://github.com/username_0/funmotd/blob/master/funmotd/__init__.py#L95

```

$ funmotd --help

usage: funmotd [-h] [-l] [-e MODIFY MODIFY] [-n NSFW] [-v]

Displays TV Show and Movie Quotes as 'motd' on Terminal

optional arguments:

-h, --help show this help message and exit

-l View Available TV Show/Movies & Configuration

-e MODIFY MODIFY Modify Weights <<<<<<<<<<<<<<<<<<<<<<<<

-n NSFW Enable/Disable NSFW Quotes

-v Version and author information

```
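Since `-e` takes two values, argparse derives the help placeholder from the destination name and repeats it (`MODIFY MODIFY`). A tuple `metavar` names each value separately. A minimal sketch: funmotd's actual parser isn't shown in this issue, so the `NAME`/`WEIGHT` placeholders and the help string below are assumptions.

```python
import argparse

parser = argparse.ArgumentParser(
    prog="funmotd",
    description="Displays TV Show and Movie Quotes as 'motd' on Terminal",
)
# With nargs=2 and no metavar, argparse uses dest.upper() for both values
# ("-e MODIFY MODIFY"). A tuple metavar gives each value its own name.
parser.add_argument(
    "-e",
    dest="modify",
    nargs=2,
    metavar=("NAME", "WEIGHT"),
    help="Modify the weight of a TV show/movie",
)

args = parser.parse_args(["-e", "Friends", "5"])
print(args.modify)  # ['Friends', '5']
parser.print_help()
```

The parsed value is still stored under `args.modify`, so only the help text changes; renaming `dest` as well would be a separate decision.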

umijs/qiankun | 601783354 | Title: Question about building (packaging)

Question:

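username_0: <!--

Thank you for reporting this issue. To help us resolve it efficiently, please provide the following information:

-->

## What happens?

I ran the examples from umi-plugin-qiankun locally and everything worked. Now I want to build the sub-app and load it from the main app, but the sub-app's resources keep returning 404.

<!-- Clearly describe the problem you encountered. -->

## Minimal reproducible repository

https://github.com/umijs/umi-plugin-qiankun

<!-- To save everyone's time, issues without reproduction steps will be closed and reopened once the steps are provided -->

<!-- https://github.com/YOUR_REPOSITORY_URL -->

## Reproduction steps, error logs and relevant configuration

1. Clone the repository above and install the dependencies

2. Go to the examples directory

3. Build app1, then `cd dist` and start a local server with `http-server -p 8001 --cors`

4. `cd master`, then run `npm start` to start the master app and visit /app1

5. The console reports 404 errors for the resources

I think it should load localhost:8001/app1/xx.js; I don't know why the **app1** prefix is missing.

<!-- Please provide reproduction steps, error logs and relevant configuration -->

<!-- Try not pinning versions and reinstalling the dependencies first -->

## Environment

- **qiankun version**: 1.4.5

- **Browser version**: Chrome 81.0.4044.113 (Official Build) (64-bit)

- **Operating system**: macOS 10.14.6 (18G95)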

Answers:

username_1: Set entry to `localhost:8001/app1/index.html`.
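One plausible explanation for the missing **app1** prefix, assuming the sub-app's assets are referenced by relative paths and resolved against the entry URL: standard relative-URL resolution (RFC 3986) drops the last path segment of the base unless it ends with `/`. The original entry value isn't shown in the report, so `http://localhost:8001/app1` below is an assumption; Python's `urllib.parse.urljoin` follows the same resolution rules and makes the effect easy to see.

```python
from urllib.parse import urljoin

# Entry without a trailing slash: "app1" is treated like a file name and replaced.
print(urljoin("http://localhost:8001/app1", "xx.js"))
# -> http://localhost:8001/xx.js

# Entry pointing at the HTML file inside the app1 directory keeps the prefix.
print(urljoin("http://localhost:8001/app1/index.html", "xx.js"))
# -> http://localhost:8001/app1/xx.js

# A trailing slash on the directory has the same effect.
print(urljoin("http://localhost:8001/app1/", "xx.js"))
# -> http://localhost:8001/app1/xx.js
```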

Status: Issue closed


cityofaustin/atd-data-tech | 1158635397 | Title: Investigate inclusion of Microsoft Projects data in dashboard

Question:

username_0: Whaaa... MPXJ??

@dianamartin - what is the process for getting a license? We would want one for Fernando, too.

Answers:

username_1: The Microsoft Project file (.mpp) I received from Fernando cannot be read by Power BI, but Power BI does support loading data from Microsoft Project Online. I currently don't have a license, but @dianamartin says I can get one. I'm not exactly sure what the difference is between the desktop and online versions of Project, but I hope Fernando could move over to the online one easily?

[Docs about loading Project Online files into Power BI.](https://docs.microsoft.com/en-us/power-bi/connect-data/desktop-project-online-connect-to-data)

[Gantt charts in Power BI tutorial](https://www.youtube.com/watch?v=cdUg8LfarCg)

username_1: Also wanted to point out the MPXJ library that should allow us to read in the data and export over to CSV which could potentially allow us to cut MS Project out of the process entirely.

[MPXJ](https://pypi.org/project/mpxj/)


username_1: Sorry, I should have CC'd you, but Scott got me an MS Project license. I assume Fernando already has one since he's been working in MS Project.

DestinyItemManager/DIM | 1024511274 | Title: Max Power not calculated correctly

Question:

username_0: ### DIM Version

Version 6.86.0 (release), built on 10/10/2021, 9:08:06 PM

### Browser Details

Version 94.0.4606.81 (Official Build) (64-bit)

### OS Details

Windows 10

### Describe the bug

The maximum power score on my Titan and Hunter is being calculated incorrectly, while it is correct on my Warlock. DIM was reporting my max power as `1342 1/8`, but when I applied the maximize power function, I noticed my Titan's in-game power was over 1343, even though DIM still reported `1342 1/8`.

When I hover over the details to see how the max power is calculated, there is a `modifiers -7` entry in the popover, and the item details popup shows my energy weapon at `1320` instead of its actual power level of `1329`.
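The numbers in the report are consistent with each other if max power is the plain average of the 8 equipped slots (3 weapons and 5 armor pieces). That is an assumption here, and it leaves out the artifact bonus and the `modifiers -7` entry. Only the sum of the slots matters, so the missing per-slot values aren't needed: correcting the energy weapon from 1320 to 1329 adds 9/8 to the average.

```python
from fractions import Fraction

# Assumption: max power is the plain average of the 8 equipped slots.
SLOTS = 8

reported = 1342 + Fraction(1, 8)             # DIM's reported max power
slot_total = reported * SLOTS                # sum of the 8 slot power levels
corrected_total = slot_total - 1320 + 1329   # use the energy weapon's real power
corrected = corrected_total / SLOTS

print(slot_total, corrected)  # 10737 5373/4  (i.e. 1343 1/4)
```

A result of `1343 1/4` matches the observation that the in-game power was over 1343, which points at the energy weapon's displayed power as the source of the discrepancy.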

### Logs

_No response_

Status: Issue closed

Swarbricklab-code/BrCa_cell_atlas | 1038347718 | Title: missing h5 files from Zenodo

Question:

username_0: Hi,

I would like to have a look at your spatial data, however the h5 files are missing.

Could you please add them?

Thank you

Answers:

username_1: Apologies for the slow response.

Unfortunately we can't add `h5` files to the Zenodo repo without breaking the doi link to the paper. However, `h5` files are available on request. Please reach out if you still need these files.

You may also be able to create a Seurat spatial object from scratch using the publicly available data using the suggestions in this thread: https://github.com/satijalab/seurat/issues/2790

username_2: Hi,

I would have exactly the same request.

I'm wondering if there is a way to recover the h5 files somewhere?

Thanks!

username_1: Hi. I have searched high and low, but only found the `.h5` files for two samples and it appears that we did not consistently archive the `.h5` files. I think the rationale was that they were redundant because they contain exactly the same information as their MEX format equivalents. I'm curious if there are use cases where only HDF5 format will do?

In the meantime, you can load the counts and images via either Seurat's [Load10X_Spatial()](https://satijalab.org/seurat/reference/load10x_spatial) or Scanpy's [sc.read_visium()](https://scanpy.readthedocs.io/en/stable/generated/scanpy.read_visium.html).

If you really, really need `.h5` files in HDF5 format then you might be able to recreate them via `pandas.HDFStore` (see [this tutorial](https://riptutorial.com/pandas/example/9812/using-hdfstore), for example) and following the file hierarchy described here: https://support.10xgenomics.com/single-cell-atac/software/pipelines/latest/advanced/h5_matrices

Status: Issue closed

username_2: You can't load counts with [Load10X_Spatial()](https://satijalab.org/seurat/reference/load10x_spatial) without the h5 files.

I'm almost sure the same applies to Scanpy's function, but I can't tell 100% since I'm not a Scanpy user... because both rely on a strict directory layout.

Having the h5 files for two samples is fine.

My bet is that you have the two TNBCs (1142243F and 1160920F) processed by an independent lab... and that is exactly what I'm looking for. So I'm still interested in grabbing these two if possible.

Thank you

username_1: You're right -- `Load10X_Spatial()` requires a `.h5` file and this is probably the easiest way to load Visium data into Seurat.

Without the `.h5` files I think you can still get the data into Seurat doing something like the following:

```

my_object <- CreateSeuratObject(

counts = Read10X( data.dir = '/path/to/directory/containing/matrix.mtx/etc'),

assay = 'Spatial'

)

my_image <- Read10X_Image( image.dir = 'path/to/spatial/images' )

my_image <- image[Cells(x = my_object)]

DefaultAssay(object = my_image) <- 'Spatial'

my_object[['Slice1']] <- my_image

```

This approach is adapted from the code for `Load10X_Spatial()` here: https://github.com/satijalab/seurat/blob/master/R/preprocessing.R

I'll send the two `h5` files that we do have via email.

username_2: You were absolutely right.

I think there is a mistake here, but the overall idea is good:

```

my_image <- Read10X_Image( image.dir = 'path/to/spatial/images' )

my_image <- image[Cells(x = my_object)]

```

I ended up with :

```

print(as.character(dirname(file_tisspos)))

# "/home/docker/LR/LR/bench//spatial/BreastVisiumNoH5/spatial/1160920F_spatial"

print(as.character(dirname(file_features)))

# "/home/docker/LR/LR/bench//spatial/BreastVisiumNoH5/filtered_count_matrices//1160920F_filtered_count_matrix"

X <- Read10X_Image(

as.character(dirname(file_tisspos)),

image.name = "tissue_lowres_image.png",

filter.matrix = TRUE

)

# gene.column = 2 by default, which caused an error; set it to 1 for the latest version with gzipped files

my_object <- CreateSeuratObject(

counts = Read10X( data.dir = as.character(dirname(file_features)) , gene.column = 1),

assay = 'Spatial'

)

my_image <- Read10X_Image( image.dir = as.character(dirname(file_tisspos)))

image <- my_image[Cells(x = my_object)]

DefaultAssay(object = image) <- 'Spatial'

my_object[['Slice1']] <- image

```

Just note that the .gz files in /path/to/directory/containing/matrix.mtx are not really gzipped. They have the extension but are just plain files...
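A reader that trusts the `.gz` extension will fail on such files. A quick way to check is the gzip magic number: every gzip stream starts with the bytes `1f 8b` (RFC 1952). A minimal sketch; the function names are hypothetical and the paths would be the files in the directory passed to `Read10X`.

```python
import gzip
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream (RFC 1952)


def is_really_gzipped(path):
    """Return True if the file starts with the gzip magic number."""
    with Path(path).open("rb") as f:
        return f.read(2) == GZIP_MAGIC


def open_maybe_gzipped(path):
    """Open a text file whether or not its '.gz' extension is truthful."""
    if is_really_gzipped(path):
        return gzip.open(path, "rt", encoding="utf-8")
    return Path(path).open("r", encoding="utf-8")
```

Running `is_really_gzipped` over the files before loading makes it clear whether to gzip them properly or rename them without the extension.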

Thanks for your help, and the files. :)

username_1: You're welcome, and thanks for the feedback on loading the Zenodo data.

I'll make a note in the readme pointing people to this code snippet.

I'll also make a feature request for the Seurat developers to make a wrapper function that does basically this.

picqer/exact-php-client | 448899888 | Title: Change orderstatus for existing salesorder

Question:

username_0: I want to change the order status of a sales order to 20 after I retrieve it from the administration with status 12. I use the following PHP code. I get no error, but the change is not made.

What am i doing wrong?

```php
try
{
    $l_salesorders = new \Picqer\Financials\Exact\SalesOrder($connection);
    $result = $l_salesorders->filter("Status eq 12");
    foreach ($result as $salesorder)
    {
        echo 'salesorder: ' . $salesorder->Description . ' ordernummer ' . $salesorder->OrderNumber . ' status ' . $salesorder->Status . ' ' . $salesorder->DeliveryStatus . '<br>';
        echo 'bijwerken<br>';
        $salesorder->Status = '20';
        $l_save_result = $salesorder->save();
        echo 'save resultaat ' . $l_save_result->Status . ' ' . $l_save_result->DeliveryStatus . '<br>';
    }
}
catch (\Exception $e)
{
    echo get_class($e) . ' : ' . $e->getMessage();
}
```

Answers:

username_1: If you check the [SalesOrders API documentation](https://start.exactonline.nl/docs/HlpRestAPIResourcesDetails.aspx?name=SalesOrderSalesOrders), you'll see it's not possible to update (PUT) the `Status` property.

As far as I know, the `Status` of a SalesOrder is a dynamic representation of the linked SalesOrderLines (and PurchaseOrderLines), delivery data, invoices, and more. Even in the Exact Online front-end, it's impossible to directly set a specific status for a SalesOrder.

username_0: OK. Thanks.

Now I see how the documentation works. You only see the attributes that can be changed when you press the PUT/POST buttons.

I already suspected it wasn't possible.

username_2: @username_0 this means your issue is solved and can be closed?

Status: Issue closed


DriveTimeInc/cordova-plugin-cookie-persistence | 305728090 | Title: Method to Cause Cookies to be Persisted

Question:

username_0: From @username_1 in #3

If you can expose a method to call the storage logic from JS at any time, we can call it to refresh the cookie details whenever we make an HTTPS call to the server. That way we will not need to worry about the app exiting or closing, as the developer can manage the cookie refresh as the cookies are loaded from the web server.

Answers:

username_0: Are you thinking a method like:

```

void storeCookies() {

//retrieve cookies from view

//store cookies in txt file

}

```

Or where the app would supply the cookies to store?

```

void storeCookies(cookies) {

//store cookies in txt file

}

```

I'd think we'd want the first to maintain control.

Might also be worth adding a `clearCookies()` method that would wipe the persisted cookies.

username_1: Let me test the updated Android code first, as I thought there was a technical issue with exiting the app. It may not be necessary (for Android) to interact with JavaScript at all if the code works as expected...

username_1: Log data from the Android simulator:

```
V/FA: Session started, time: 854861
D/FA: Logging event (FE): session_start(_s), Bundle[{firebase_event_origin(_o)=auto, firebase_screen_class(_sc)=MainActivity, firebase_screen_id(_si)=394842885034794583}]
V/FA: Connecting to remote service
D/FA: Connected to remote service
V/FA: Processing queued up service tasks: 1
V/FA: Inactivity, disconnecting from the service
D/CordovaActivity: Paused the activity.
W/com.facebook.appevents.AppEventsLogger: deactivateApp events are being logged automatically. There's no need to call deactivateApp, this is safe to remove.
D/CookiePersistenceCordovaPlugin: Paused the activity.
D/CookiePersistenceCordovaPlugin: Paused the activity.
D/CookiePersistenceCordovaPlugin: Write File - Contents: magical_device=c29<PASSWORD>; csrf_token=<PASSWORD>; magical=ee2242767a8f446eb29dbb292e606edf-18337; sess_user=c8bd3fc49baa0ae933bee23ea2a53f76-18337; magical_device=c2933b5e1d59497b881abfd9e7688421-18337; magicaltourstop=1; magical-friendProfileLikeCount=406
D/CookiePersistenceCordovaPlugin: storeCookies - Complete
V/FA: Recording user engagement, ms: 19030
V/FA: Connecting to remote service
V/FA: Activity paused, time: 863894
D/FA: Logging event (FE): user_engagement(_e), Bundle[{firebase_event_origin(_o)=auto, engagement_time_msec(_et)=19030, firebase_screen_class(_sc)=MainActivity, firebase_screen_id(_si)=394842885034794583}]
V/FA: Connection attempt already in progress
[ 03-16 08:47:26.675 1697: 2045 D/ ]
HostConnection::get() New Host Connection established 0x83523940, tid 2045
D/EGL_emulation: eglMakeCurrent: 0xaa805fc0: ver 2 0 (tinfo 0xaa8038b0)
D/CordovaActivity: Stopped the activity.
D/FA: Connected to remote service
V/FA: Processing queued up service tasks: 2
```

username_1: Perfect !

username_1: Will test on a Samsung Galaxy S8 now

username_0: That looks great. Yeah, it's debatable whether this is needed.

username_1: I have tested on my S8, and the cookies do not seem to be passed to the web server: I have to log in again. To test, I clear all data, including the cookies, then start the app, log in, use the hardware button to show all apps, close the app and start it again. I would expect to see the app in the logged-in state; however, it asks me to log in again.

If I log in again, the app behaves oddly and does not render data correctly. I'll get some info from the server logs and see what the application server received.

username_0: Look for `Cookie File Content: <COOKIE STRING>` in the Android log. Also see if one of these exists: `UpdateCookies - Complete` or `UpdateCookies - Failed`

username_1: The app server is receiving the cookies from the saved session, so it might be a bug in our code... checking.

One thing to consider is duplicate cookies. If restarting the app adds the same cookie again, the developer will need to check for that in the JS code...

username_1: Another idea is to have an option/config that indicates which cookies to store and restore, as it may not be appropriate to restore all the cookies on resume...
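Both concerns (duplicate cookies on restart and an allowlist of cookies to persist) can be handled when the stored cookie string is read back. A minimal sketch, assuming the persisted format is the `name=value; name=value` string seen in the `Write File - Contents` log line; `parse_cookie_string` and `to_cookie_string` are hypothetical helpers, not part of cordova-plugin-cookie-persistence, and a real implementation would live in the plugin's native code.

```python
def parse_cookie_string(cookie_string, allowed=None):
    """Parse a persisted 'name=value; name2=value2' string into a dict.

    - A later duplicate overwrites an earlier one, so a cookie written twice
      (like magical_device in the Write File log line) is restored once.
    - If `allowed` is given, only cookie names in it are kept.
    """
    cookies = {}
    for part in cookie_string.split(";"):
        name, sep, value = part.strip().partition("=")
        if not sep or not name:
            continue  # skip empty or malformed fragments
        if allowed is not None and name not in allowed:
            continue
        cookies[name] = value
    return cookies


def to_cookie_string(cookies):
    """Serialize the dict back to the same 'name=value; ...' format."""
    return "; ".join(f"{name}={value}" for name, value in cookies.items())


# Example data only (not real session values).
stored = "sess_user=abc; magical_device=old; csrf_token=xyz; magical_device=new"
restored = parse_cookie_string(stored, allowed={"sess_user", "magical_device"})
print(to_cookie_string(restored))  # sess_user=abc; magical_device=new
```

`partition("=")` splits only on the first `=`, so values that themselves contain `=` (common in base64 tokens) survive intact.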

username_1: I have done a set of tests and it seems to be working correctly. I'll get another developer to repeat what I am doing: start the app, log in, use the hardware button to show all apps, close the app, and restart it from the desktop...

Status: Issue closed


opendistro-for-elasticsearch/sql | 589441390 | Title: Aggregating over nested field doesn't work for default format

Question:

username_0: Test data: https://github.com/opendistro-for-elasticsearch/sql/issues/397#issuecomment-604719065

Query:

```

POST _opendistro/_sql

{

"query":

"""

SELECT

e.name AS employeeName,

COUNT(p) AS cnt

FROM employees_nested AS e,

e.projects AS p

WHERE p.name LIKE '%security%'

GROUP BY e.id, e.name

"""

}

{

"error": {

"reason": "There was internal problem at backend",

"details": "Aggregation type nested is not yet implemented",

"type": "SqlFeatureNotImplementedException"

},

"status": 500

}

```

Log:

```

[2020-03-27T15:19:21,742][WARN ][c.a.o.s.e.f.PrettyFormatRestExecutor] [186590df9563.ant.amazon.com] Error happened in pretty formatter

com.amazon.opendistroforelasticsearch.sql.exception.SqlFeatureNotImplementedException: Aggregation type nested is not yet implemented

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.addNumericAggregation(SelectResultSet.java:659) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.getAggsData(SelectResultSet.java:616) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.getAggsData(SelectResultSet.java:612) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.populateRows(SelectResultSet.java:584) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.extractData(SelectResultSet.java:535) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.SelectResultSet.<init>(SelectResultSet.java:108) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.Protocol.loadResultSet(Protocol.java:83) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.Protocol.<init>(Protocol.java:65) ~[opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.PrettyFormatRestExecutor.execute(PrettyFormatRestExecutor.java:71) [opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.format.PrettyFormatRestExecutor.execute(PrettyFormatRestExecutor.java:47) [opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.AsyncRestExecutor.doExecuteWithTimeMeasured(AsyncRestExecutor.java:161) [opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.executor.AsyncRestExecutor.lambda$async$1(AsyncRestExecutor.java:121) [opendistro_sql-1.6.0.0.jar:1.6.0.0]

at com.amazon.opendistroforelasticsearch.sql.utils.LogUtils.lambda$withCurrentContext$0(LogUtils.java:72) [opendistro_sql-1.6.0.0.jar:1.6.0.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:633) [elasticsearch-7.6.1.jar:7.6.1]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) [?:?]

at java.lang.Thread.run(Thread.java:830) [?:?]

```

cdhart/cdhart-html | 688123666 | Title: colorschemez August 28 2020 at 05:14AM

Question:

username_0:

> undesired eggshell blue\
> seamless celery\
> boding darkish pink [pic.twitter.com/e5gNudkoT9](https://t.co/e5gNudkoT9)
>
> colorschemer (@colorschemez), [August 28, 2020](https://twitter.com/colorschemez/status/1299289322852478981?ref_src=twsrc%5Etfw)

August 28, 2020 at 05:14AM

via Twitter

traveller59/spconv | 668343882 | Title: Build failure: recipe for target hash_table.cu.o failed

Question:

username_0: I am trying to build this on an Ubuntu 18.04 machine, but it fails. The build output is pasted below. The error I see is: "recipe for target 'src/cuhash/CMakeFiles/cuhash.dir/hash_table.cu.o' failed".

Any idea about the cause/fix?

Thanks!

```
running bdist_wheel
running build
running build_py
creating build
creating build/lib.linux-x86_64-3.8
creating build/lib.linux-x86_64-3.8/spconv
copying spconv/modules.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/identity.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/test_utils.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/tables.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/__init__.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/ops.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/functional.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/pool.py -> build/lib.linux-x86_64-3.8/spconv
copying spconv/conv.py -> build/lib.linux-x86_64-3.8/spconv
creating build/lib.linux-x86_64-3.8/spconv/utils
copying spconv/utils/__init__.py -> build/lib.linux-x86_64-3.8/spconv/utils
running build_ext
-- The CXX compiler identification is GNU 7.5.0
-- The CUDA compiler identification is NVIDIA 9.1.85
-- Check for working CXX compiler: /usr/bin/c++
-- Check for working CXX compiler: /usr/bin/c++ -- works
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Check for working CUDA compiler: /usr/bin/nvcc
-- Check for working CUDA compiler: /usr/bin/nvcc -- works
-- Detecting CUDA compiler ABI info
-- Detecting CUDA compiler ABI info - done
-- Looking for C++ include pthread.h
-- Looking for C++ include pthread.h - found
-- Looking for pthread_create
-- Looking for pthread_create - not found
-- Looking for pthread_create in pthreads
-- Looking for pthread_create in pthreads - not found
-- Looking for pthread_create in pthread
-- Looking for pthread_create in pthread - found
-- Found Threads: TRUE
-- Found CUDA: /usr (found version "9.1")
-- Caffe2: CUDA detected: 9.1
-- Caffe2: CUDA nvcc is: /usr/bin/nvcc
-- Caffe2: CUDA toolkit directory: /usr
-- Caffe2: Header version is: 9.1
-- Found CUDNN: /usr/local/cuda/lib64/libcudnn.so
-- Found cuDNN: v8.0.2 (include: /usr/local/cuda/include, library: /usr/local/cuda/lib64/libcudnn.so)
-- Autodetected CUDA architecture(s): 6.1
-- Added CUDA NVCC flags for: -gencode;arch=compute_61,code=sm_61
-- Found Torch: /home/username_0/anaconda3/envs/instseg/lib/python3.8/site-packages/torch/lib/libtorch.so
-- Autodetected CUDA architecture(s): 6.1
-- Found PythonInterp: /home/username_0/anaconda3/envs/instseg/bin/python3.8 (found suitable version "3.8.3", minimum required is "3.8")
-- Found PythonLibs: /home/username_0/anaconda3/envs/instseg/lib/libpython3.8.so
-- Performing Test HAS_CPP14_FLAG
-- Performing Test HAS_CPP14_FLAG - Success
-- pybind11 v2.5.0
-- Found OpenMP_CXX: -fopenmp (found version "4.5")
[Truncated]
-- Build files have been written to: /home/username_0/projects/detect3d/instseg/spconv/build/temp.linux-x86_64-3.8
Scanning dependencies of target spconv_nms
[ 4%] Building CUDA object src/utils/CMakeFiles/spconv_nms.dir/nms.cu.o
Scanning dependencies of target cuhash
[ 8%] Building CUDA object src/cuhash/CMakeFiles/cuhash.dir/hash_functions.cu.o
[ 12%] Building CXX object src/cuhash/CMakeFiles/cuhash.dir/hash_table.cpp.o
[ 16%] Building CUDA object src/cuhash/CMakeFiles/cuhash.dir/hash_table.cu.o
[ 20%] Building CXX object src/cuhash/CMakeFiles/cuhash.dir/hash_functions.cpp.o
src/cuhash/CMakeFiles/cuhash.dir/build.make:88: recipe for target 'src/cuhash/CMakeFiles/cuhash.dir/hash_table.cu.o' failed
[ 24%] Linking CUDA static library libspconv_nms.a
[ 24%] Built target spconv_nms
Scanning dependencies of target spconv_utils
[ 28%] Building CXX object src/utils/CMakeFiles/spconv_utils.dir/all.cc.o
CMakeFiles/Makefile2:108: recipe for target 'src/cuhash/CMakeFiles/cuhash.dir/all' failed
[ 32%] Linking CUDA device code CMakeFiles/spconv_utils.dir/cmake_device_link.o
[ 36%] Linking CXX shared library ../../../lib.linux-x86_64-3.8/spconv/spconv_utils.cpython-38-x86_64-linux-gnu.so
[ 36%] Built target spconv_utils
Makefile:129: recipe for target 'all' failed
Release
|||||CMAKE ARGS||||| ['-DCMAKE_PREFIX_PATH=/home/username_0/anaconda3/envs/instseg/lib/python3.8/site-packages/torch', '-DPYBIND11_PYTHON_VERSION=3.8', '-DSPCONV_BuildTests=OFF', '-DPYTORCH_VERSION=10600', '-DCMAKE_CUDA_FLAGS="--expt-relaxed-constexpr" -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__', '-DCMAKE_LIBRARY_OUTPUT_DIRECTORY=/home/username_0/projects/detect3d/instseg/spconv/build/lib.linux-x86_64-3.8/spconv', '-DCMAKE_BUILD_TYPE=Release']
```

Homebrew/install | 760411807 | Title: Install script fails on ubuntu 20.04 on AWS

Question:

username_0: # Please note we will close your issue without comment if you delete, do not read or do not fill out the issue checklist below and provide ALL the requested information. If you repeatedly fail to use the issue template, we will block you from ever submitting issues to Homebrew again.

- [ ] your problem was from running the official `install` or `uninstall` script?

- [ ] after installation: ran `brew config` and `brew doctor` and included their output with your issue? If you couldn't install: provided your OS version with the output of your issue?

<!-- To help us debug your issue, please complete these sections: -->

I deployed an Ubuntu 20.04 instance on AWS; these instances have one sudoer user called _ubuntu_, which has no password.

I tried running:

```

ubuntu@ip-172-31-17-172:~$ /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)"

==> Select the Homebrew installation directory

- Enter your password to install to /home/linuxbrew/.linuxbrew (recommended)

- Press Control-D to install to /home/ubuntu/.linuxbrew

- Press Control-C to cancel installation

[sudo] password for ubuntu:

Sorry, try again.

```

As the user does not have any password, I was unable to install to _/home/linuxbrew/.linuxbrew_.

Installing to /home/ubuntu/ succeeds but as you guys know there are many other issues with installing into this location.

The last commit that works is 08ee636817bb5113de259ce2d72e2628a8e8909b.

Answers:

username_1: Adding here that this change has also completely broken installs on Crostini / Chromebooks.

username_2: I suspect the changes with the INTERACTIVE check are causing this https://github.com/Homebrew/install/commit/342253e5bad2a86d82e08823cce0a57e8a4cf3d9#diff-043df5bdbf6639d7a77e1d44c5226fd7371e5259a1e4df3a0dd5d64c30dca44f

username_3: @username_2 Yes, that will be the case. We'll review PRs to address this. Note we do not support non-interactive installs outside of `/home/linuxbrew/.linuxbrew`.

username_2: Are you sure? The comments specifically mention `~/.linuxbrew`

```
# On Linux, it installs to /home/linuxbrew/.linuxbrew if you have sudo access
# and ~/.linuxbrew otherwise.
```

But this change: https://github.com/Homebrew/install/commit/6f37ca94af073c2971efbed8aa293322aa171f26 seems to have broken that behaviour

The if statement is now true whenever NONINTERACTIVE is set, meaning it will always go to /home/linuxbrew:

```
if [[ -n "${NONINTERACTIVE-}" ]] ||
   [[ -w "$HOMEBREW_PREFIX_DEFAULT" ]] ||
   [[ -w "/home/linuxbrew" ]] ||
   [[ -w "/home" ]]; then
```

I believe that should be

```
if [[ -n "${NONINTERACTIVE-}" ]] &&
   [[ -w "$HOMEBREW_PREFIX_DEFAULT" ]] &&
   [[ -w "/home/linuxbrew" ]] &&
   [[ -w "/home" ]]; then
```

I made a PR for it, if you agree https://github.com/Homebrew/install/pull/508
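To make the `||` versus `&&` argument concrete, here is a small Python model of the condition. It is only an illustration, assuming each `[[ ... ]]` test can be reduced to a boolean; the function names are hypothetical and none of this is code from install.sh.

```python
# Hypothetical model of the prefix check: each argument stands for one of the
# shell tests ([[ -n "${NONINTERACTIVE-}" ]] and the three [[ -w ... ]] tests).


def takes_default_branch_or(noninteractive: bool, w_prefix: bool,
                            w_linuxbrew: bool, w_home: bool) -> bool:
    # Current script: an `||` chain, so any single true test is enough.
    return noninteractive or w_prefix or w_linuxbrew or w_home


def takes_default_branch_and(noninteractive: bool, w_prefix: bool,
                             w_linuxbrew: bool, w_home: bool) -> bool:
    # Proposed change: an `&&` chain, so every test must be true.
    return noninteractive and w_prefix and w_linuxbrew and w_home


if __name__ == "__main__":
    # Non-interactive run on a machine where none of the paths are writable.
    case = (True, False, False, False)
    print("||:", takes_default_branch_or(*case))   # ||: True
    print("&&:", takes_default_branch_and(*case))  # &&: False
```

With `||`, setting NONINTERACTIVE alone is enough to select the default prefix. With `&&`, the branch is taken only when all four tests are true, so a run without NONINTERACTIVE would never take it.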

username_3: I'm sure that that's the intention, yes.

username_2: I don't mean to sound harsh, just trying to understand here but why?

What is the upside that I'm currently missing that justifies the breaking of existing workflows. Is there a security risk in allowing it to go to `~/.linuxbrew` ?

username_3: No security risk but most binary packages will not work and `brew doctor` will tell you not to file issues. We don't "support" it, as a result, and most users should avoid it.

username_2: I don't understand. Why would binary packages not work? Do they hardcode /home/linuxbrew in the compiled code ?

username_3: Yup, exactly.

username_2: Mike,

Ok, understood. Thanks for taking the time to explain.

What is the proper way to dispute that decision? I understand why we wouldn't want users to file tickets for binary packages not working. But not allowing an alternate home folder seems a bit overkill.

The result is that instead of having a homebrew that doesn't work for _some_ binary packages, we now have a homebrew that simply doesn't work _at all_ for some users, even if they're not using binaries.

Would you accept a pull request that adds a parameter to the install script to specify a home folder? That would give users a way to work around the brick wall they are facing at the moment.

username_3: No, sorry.

username_2: OK, clear communication. Thank you.

username_4: Try setting up a sudo password.

username_1: I think setting a sudo password might make sense for the Ubuntu issue, but I feel the Homebrew script should work out of the box on a Chrome OS Crostini install, especially since it worked before; it's just the new dialog system that breaks it. Perhaps I should open the Chromebook issue as a new, separate ticket.

username_4: Chrome OS? He has problem with sudo on Ubuntu.

username_1: Ya sorry my second comment was saying the exact same change is breaking Crostini as well.

username_5:

```
Reading package lists... Done

Building dependency tree

Reading state information... Done

Note, selecting 'zlib1g-dev' instead of 'libz-dev'

bzip2 is already the newest version (1.0.8-2).

ca-certificates is already the newest version (20210119~20.04.1).

ca-certificates set to manually installed.

tzdata is already the newest version (2021a-0ubuntu0.20.04).

tzdata set to manually installed.

The following additional packages will be installed:

binutils binutils-common binutils-x86-64-linux-gnu cpp cpp-9 g++-9 gcc gcc-9 gcc-9-base git-man libasan5 libasn1-8-heimdal libatomic1 libbinutils

libbsd0 libc-dev-bin libc6 libc6-dev libcbor0.6 libcc1-0 libcrypt-dev libctf-nobfd0 libctf0 libcurl3-gnutls libcurl4 libedit2 liberror-perl

libfido2-1 libgcc-9-dev libgdbm-compat4 libgdbm6 libgomp1 libgssapi3-heimdal libhcrypto4-heimdal libheimbase1-heimdal libheimntlm0-heimdal

libhx509-5-heimdal libisl22 libitm1 libkrb5-26-heimdal libldap-2.4-2 libldap-common liblsan0 libmagic-mgc libmagic1 libmpc3 libmpfr6 libnghttp2-14

libperl5.30 libquadmath0 libroken18-heimdal librtmp1 libsasl2-2 libsasl2-modules-db libsigsegv2 libssh-4 libstdc++-9-dev libtsan0 libubsan1

libwind0-heimdal linux-libc-dev perl perl-modules-5.30

Suggested packages:

binutils-doc cpp-doc gcc-9-locales g++-multilib g++-9-multilib gcc-9-doc gawk-doc gcc-multilib manpages-dev autoconf automake libtool flex bison

gdb gcc-doc gcc-9-multilib gettext-base git-daemon-run | git-daemon-sysvinit git-doc git-el git-email git-gui gitk gitweb git-cvs git-mediawiki

git-svn glibc-doc gdbm-l10n libstdc++-9-doc make-doc keychain libpam-ssh monkeysphere ssh-askpass ed diffutils-doc perl-doc

libterm-readline-gnu-perl | libterm-readline-perl-perl libb-debug-perl liblocale-codes-perl

Recommended packages:

manpages manpages-dev libsasl2-modules xauth

The following NEW packages will be installed:

binutils binutils-common binutils-x86-64-linux-gnu cpp cpp-9 curl file fonts-dejavu-core g++ g++-9 gawk gcc gcc-9 gcc-9-base git git-man less

libasan5 libasn1-8-heimdal libatomic1 libbinutils libbsd0 libc-dev-bin libc6-dev libcbor0.6 libcc1-0 libcrypt-dev libctf-nobfd0 libctf0

libcurl3-gnutls libcurl4 libedit2 liberror-perl libfido2-1 libgcc-9-dev libgdbm-compat4 libgdbm6 libgomp1 libgssapi3-heimdal libhcrypto4-heimdal

libheimbase1-heimdal libheimntlm0-heimdal libhx509-5-heimdal libisl22 libitm1 libkrb5-26-heimdal libldap-2.4-2 libldap-common liblsan0 libmagic-mgc

libmagic1 libmpc3 libmpfr6 libnghttp2-14 libperl5.30 libquadmath0 libroken18-heimdal librtmp1 libsasl2-2 libsasl2-modules-db libsigsegv2 libssh-4

libstdc++-9-dev libtsan0 libubsan1 libwind0-heimdal linux-libc-dev locales make netbase openssh-client patch perl perl-modules-5.30 sudo

uuid-runtime zlib1g-dev

The following packages will be upgraded:

libc6

1 upgraded, 77 newly installed, 0 to remove and 15 not upgraded.

Need to get 63.3 MB of archives.

After this operation, 295 MB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 libc6 amd64 2.31-0ubuntu9.2 [2715 kB]

Get:2 http://ppa.launchpad.net/git-core/ppa/ubuntu focal/main amd64 git-man all 1:2.31.1-0ppa1~ubuntu20.04.1 [1847 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 uuid-runtime amd64 2.34-0.1ubuntu9.1 [35.1 kB]

Get:4 http://ppa.launchpad.net/git-core/ppa/ubuntu focal/main amd64 git amd64 1:2.31.1-0ppa1~ubuntu20.04.1 [5439 kB]

Get:5 http://archive.ubuntu.com/ubuntu focal/main amd64 libmpfr6 amd64 4.0.2-1 [240 kB]

Get:6 http://archive.ubuntu.com/ubuntu focal/main amd64 libsigsegv2 amd64 2.12-2 [13.9 kB]

Get:7 http://archive.ubuntu.com/ubuntu focal/main amd64 gawk amd64 1:5.0.1+dfsg-1 [418 kB]

Get:8 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 perl-modules-5.30 all 5.30.0-9ubuntu0.2 [2738 kB]

Get:9 http://archive.ubuntu.com/ubuntu focal/main amd64 libgdbm6 amd64 1.18.1-5 [27.4 kB]

Get:10 http://archive.ubuntu.com/ubuntu focal/main amd64 libgdbm-compat4 amd64 1.18.1-5 [6244 B]

Get:11 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 libperl5.30 amd64 5.30.0-9ubuntu0.2 [3952 kB]

Get:12 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 perl amd64 5.30.0-9ubuntu0.2 [224 kB]

Get:13 http://archive.ubuntu.com/ubuntu focal/main amd64 libmagic-mgc amd64 1:5.38-4 [218 kB]

Get:14 http://archive.ubuntu.com/ubuntu focal/main amd64 libmagic1 amd64 1:5.38-4 [75.9 kB]

Get:15 http://archive.ubuntu.com/ubuntu focal/main amd64 file amd64 1:5.38-4 [23.3 kB]

Get:16 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 less amd64 551-1ubuntu0.1 [123 kB]

Get:17 http://archive.ubuntu.com/ubuntu focal/main amd64 libbsd0 amd64 0.10.0-1 [45.4 kB]

Get:18 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 locales all 2.31-0ubuntu9.2 [3872 kB]

Get:19 http://archive.ubuntu.com/ubuntu focal/main amd64 netbase all 6.1 [13.1 kB]

Get:20 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 sudo amd64 1.8.31-1ubuntu1.2 [514 kB]

Get:21 http://archive.ubuntu.com/ubuntu focal/main amd64 libcbor0.6 amd64 0.6.0-0ubuntu1 [21.1 kB]

Get:22 http://archive.ubuntu.com/ubuntu focal/main amd64 libedit2 amd64 3.1-20191231-1 [87.0 kB]

Get:23 http://archive.ubuntu.com/ubuntu focal/main amd64 libfido2-1 amd64 1.3.1-1ubuntu2 [47.9 kB]

Get:24 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 openssh-client amd64 1:8.2p1-4ubuntu0.2 [671 kB]

[Truncated]

https://docs.brew.sh/Analytics

No analytics data has been sent yet (or will be during this `install` run).

==> Homebrew is run entirely by unpaid volunteers. Please consider donating:

https://github.com/Homebrew/brew#donations

==> Next steps:

- Add Homebrew to your PATH in /home/ubuntu/.profile:

echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"' >> /home/ubuntu/.profile

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

- Run `brew help` to get started

- Further documentation:

https://docs.brew.sh

- Install the Homebrew dependencies if you have sudo access:

sudo apt-get install build-essential

See https://docs.brew.sh/linux for more information

- We recommend that you install GCC:

brew install gcc

```

</details>

username_1: @username_5 Awesome! That does appear to fix the issue with installing Homebrew on Chromebooks! Thanks so much for the tip!

username_6: The install script does not account for password-less `sudo`...

Using `CI=1` does indeed work, but this shouldn't be broken out-of-the-box.

username_7: This has been open for quite some time now and some of the discussion seems to be a little misguided.

One of the main problems seems to be the entanglement of the `NONINTERACTIVE` flag with installation in `~/.linuxbrew` vs. `/home/linuxbrew/.linuxbrew`.

The other problem is that `sudo` makes it kind of hard to detect password-less `sudo` functionality.
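One possible way to detect password-less `sudo`, sketched in Python under the assumption that running `sudo -n true` is acceptable: `-n` tells sudo to fail rather than prompt when a password is needed. The helper name is hypothetical, this is not what install.sh does, and a success can also mean the user's sudo credentials are still cached from an earlier prompt.

```python
import shutil
import subprocess


def has_passwordless_sudo(timeout: float = 5.0) -> bool:
    """Return True if `sudo -n true` succeeds without asking for a password.

    `-n` (non-interactive) makes sudo exit with an error instead of
    prompting when a password would be required.
    """
    if shutil.which("sudo") is None:
        return False  # sudo is not installed at all
    try:
        result = subprocess.run(
            ["sudo", "-n", "true"],
            stdin=subprocess.DEVNULL,   # never wait on a terminal prompt
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("password-less sudo:", has_passwordless_sudo())
```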

I see 3 main, non-exclusive parts to solving that:

1. report the problem to all the upstreams that ship a password-less sudoers configuration and reference this solution: https://askubuntu.com/a/1211226 (add `Defaults verifypw = any` to the sudoers file)

2. report the problem to upstream `sudo` and request an amended `sudo -v`

3. Go the extra mile inside the install.sh script and try to detect the situation

- e.g. when the user hits `<CTRL-D>` at the sudo prompt, ask if they really meant `CI=1` |

cybozu-go/neco | 500144163 | Title: [neco-apps] Wait for gatekeeper policy to be created to stabilize test

Question:

username_0: ## What

When a gatekeeper policy is applied, there is a slight delay before the policy is created.

This makes the gatekeeper test in neco-apps unstable, so the test needs to be changed to wait until the policy has been created.

https://circleci.com/gh/cybozu-go/neco-apps/3682

## How

https://github.com/open-policy-agent/gatekeeper/blob/cb9c40032948de1de1170be7be37c24c244964e2/test/bats/test.bats#L48
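A minimal sketch of the "wait until the policy is created" step, written in Python as a generic polling helper. The helper name, timeout, and interval are assumptions; in a real test the predicate would query the cluster for the constraint (for example via `kubectl get`), which is replaced by a hypothetical stand-in here.

```python
import time
from typing import Callable


def wait_until(predicate: Callable[[], bool], timeout: float = 60.0,
               interval: float = 1.0) -> bool:
    """Poll `predicate` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    calls = {"n": 0}

    def policy_exists() -> bool:
        # Hypothetical stand-in: a real check would ask the cluster whether
        # the constraint resource exists yet.
        calls["n"] += 1
        return calls["n"] >= 3

    print(wait_until(policy_exists, timeout=5.0, interval=0.1))  # True
```

Only once `wait_until` returns True would the test go on to assert that the policy rejects a violating resource.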

## Checklist

- [ ] Finish implementation of the issue

- [ ] Test all functions

- [ ] Have enough logs to trace activities

- [ ] Notify developers of necessary actions<issue_closed>

Status: Issue closed |

dotnet/dotnet-api-docs | 387959712 | Title: Dead link

Question:

username_0: Look, EF docs at this level are almost nonexistent. And here you are, tempting me with a link to a "Metadata Workspace Overview." I practically broke my finger clicking that link, and it leads to

``` text
We're no longer updating this content regularly. Check the Microsoft Product Lifecycle for information about how this product, service, technology, or API is supported.
```

Why do you hate me? What did I do to you?

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 50fce505-544f-66d2-5322-1f9b7901c775

* Version Independent ID: 7125aaf3-74fa-f1d2-1489-f555648cc5a8

* Content: [DataSpace Enum (System.Data.Metadata.Edm)](https://docs.microsoft.com/en-us/dotnet/api/system.data.metadata.edm.dataspace?view=netframework-4.7.2)

* Content Source: [xml/System.Data.Metadata.Edm/DataSpace.xml](https://github.com/dotnet/dotnet-api-docs/blob/master/xml/System.Data.Metadata.Edm/DataSpace.xml)

* Product: **dotnet-api**

* GitHub Login: @douglaslMS

* Microsoft Alias: **douglasl**

Answers:

username_1: Thanks for your feedback @username_0. We're facing some redirections issues as we retire the MSDN content.

I'll update that link to go to https://docs.microsoft.com/en-us/previous-versions/bb399600(v=vs.90) for now.

@username_2 do you have any suggestions on a better link inside the EF docs?

username_2: @username_1 I don't have a better suggestion.

Things are complicated because several versions of EF exist.

That particular page about MetadataWorkspace seems to be from a very old EF documentation set and not part of the set we ported to https://docs.microsoft.com/ef/ef6.

In fact, the source of the link is the API reference docs from the legacy version of EF that ships in .NET Framework (aka EF4).

The EF6 API reference at https://docs.microsoft.com/dotnet/api/system.data.entity.core.metadata.edm.dataspace?view=entity-framework-6.2.0 doesn't have the link.

Any time I run into one of those loose pages, I add to a list I am tracking at https://github.com/aspnet/EntityFramework.Docs/issues/668. It may make sense to consolidate at least some of those into the EF6 docs.

Let me know if you have any thoughts.

username_2: I think it makes sense to point customers doing active development on EF4 to at least upgrade to EF6. That gives them great compatibility, lots of improvements, and a path to .NET Core 3.0. So if something like this can be done, that would be great.

username_0: Sorry, there was no indication of which version it covered (I'm on 6.x and thought the docs were recent enough). I'm here because I'm in the process of rewriting the query tree via an interceptor, and omg, the lack of docs on the subject... The ultimate goal is more complex row-level filtering (aka where tenantId = derp is for wimps). There isn't much documentation on the subject. Whether interception is in Core or in 6.x, if they are designed similarly, the goal is rewriting the query tree.

username_2: @username_0 in case you haven't found it, @rowanmiller has an example of a soft delete query interceptor at https://github.com/rowanmiller/Demo-TechEd2014/tree/master/FakeEstate.ListingManager/Models/EFHelpers.

The command trees from EF are indeed a complex area that never got a lot of documentation, mainly because it was designed only to be used in extremely advanced scenarios and we didn't see much demand. However, a good understanding of LINQ expression trees should give you a good head start.

EF Core doesn't have interception features like EF6's, but you can use service replacement and intercept diagnostic events to accomplish similar tasks. Instead of having its own expression trees, EF Core uses LINQ expressions and Relinq query models.

username_0: I've pretty much looked everywhere and have seen everything online that's available. I'm old hat with Expressions, so I was able to pick up enough to get simple rewriting working. Now I'm stuck trying to figure out what the heck is up with TypeUsage and how to get from Type => DbExpressionBuilder.Parameter.

I am in an extremely advanced scenario, trying to rewrite queries to include row level filtering (and I don't mean these simple scenarios that every single example or filtering framework supports). I'm not that far off; once I get my parameter I'm pretty much done.

username_0: NVM that last bit, I figured out the beauty of binding in order to get variables.

Status: Issue closed

|

ContinuumIO/anaconda-issues | 140323195 | Title: Intel MKL FATAL ERROR: Cannot load libmkl_avx.so or libmkl_def.so.

Question:

username_0: _From @username_1 on February 10, 2016 2:20_

Seeing this on Travis CI (Linux) and in Docker containers during install. ( https://travis-ci.org/username_1/nanshe/builds/108187039#L749 )

_Copied from original issue: conda/conda#2048_

Answers:

username_0: _From @bilderbuchi on February 10, 2016 12:22_

I just encountered a similar/equivalent one (on Windows): `Intel MKL FATAL ERROR: Cannot load mkl_avx.dll or mkl_def.dll.`

username_0: _From @username_1 on February 10, 2016 15:34_

FWIW, this problem does not occur on Mac. So, it is only Linux and Windows.

username_0: _From @bilderbuchi on February 10, 2016 15:11_

[Here](https://github.com/astropy/ci-helpers/pull/67#issue-132452942) is another instance of this error, apparently. It provides a repro procedure (which doesn't repro on my side, though), and hints that maybe scipy 0.17 is the culprit, and downgrading to 0.15 could circumvent it?

username_0: _From @msarahan on February 10, 2016 13:50_

Pinging @ilanschnell

username_0: _From @username_1 on February 10, 2016 15:39_

It appears that the libraries are in the packages.

@bilderbuchi, are you using Windows natively or in a VM? If the latter, what are you using for virtualization? If the former, do you know what architecture you have?

username_0: _From @bilderbuchi on February 11, 2016 6:59_

natively, 64bit, Windows 8.1.

username_0: _From @desilinguist on February 11, 2016 18:15_

Yes, I am having the same issue on my RHEL box. It works fine on my Mac. It seems like `scipy` is the culprit, but all the paths seem to be set correctly when I run `show_config()`, and all the libraries seem to be there under `$PREFIX/lib`.

username_0: _From @username_1 on February 11, 2016 18:17_

Sorry, by architecture, @bilderbuchi, I was meaning what kind of processor are you using?

username_0: _From @bilderbuchi on February 12, 2016 7:45_

An Intel(R) Xeon(R) CPU E3-1225 V2 @ 3.20GHz

username_0: Does this issue still exist? Regardless, going to kick it over to anaconda-issues. Pretty sure it's packaging-related.

username_1: This was a problem on Linux. I spun up a Docker container and installed `numpy`, `scipy`, and `mkl`. I tried importing `numpy` and `scipy`, which did not fail, indicating this is probably fixed. I also tried `numpy.dot`, which uses the BLAS if available, and imported/used a few functions from `scipy.linalg.blas`, which only use the BLAS. These seem to work and give results. So I believe this is fixed and can be closed.

Status: Issue closed

username_0: Cool!

username_2: I have some Travis builds failing with the same error: https://travis-ci.org/colour-science/colour/builds/118175098

username_2: @username_0: Is it possible to re-open this issue, please? Either it is not fixed, or there is no clear step-by-step information on how to correct the problem.

username_1: Why do you need to pin the patch number for NumPy in your builds?

username_2: @username_1: It is a good point, back then I think we had specific requirements (especially for Scipy). I will try a build without specifying any version. Thanks!

username_2: Seems like that was it. Out of curiosity, is there any specific reason why this problem happened? Cheers,

username_1: There were some issues with the first MKL package released, as you have seen; in this case, missing libraries on Linux. I expect (though I have not checked) that NumPy 1.10.1 was pinned to a certain version of the MKL package. However, this was fixed in a later version of the MKL package, and I believe the next NumPy package (I think 1.10.2) changed its pinning to this new version.

username_2: Excellent makes sense! Thanks for the help, appreciated :+1:

username_3:

```
...
mkl 11.3.1 0
mkl-service 1.1.2 py27_0
...
numpy 1.10.4 py27_1
...
scikit-learn 0.17.1 np110py27_0
...
scipy 0.17.0 np110py27_2
```

username_4: I had the same problem, despite using the latest available packages. Turns out the solution was easier than I thought: for whatever reason, Anaconda installed the MKL-enabled versions of the numpy/scipy stack but did not actually install `mkl` itself. I have seen this when building Docker images based on the [Jupyter minimal notebook stack](https://github.com/jupyter/docker-stacks/tree/master/minimal-notebook).

A simple `conda install --yes mkl mkl-service` solved it for me.

username_5: Updating via

`conda install mkl`

solved it for me. It seems to have updated several modules, including mkl, mkl-service and numpy.

username_6:

```
Intel MKL FATAL ERROR: Cannot load libmkl_def.so.
```

Is there any workaround or suggested action?

username_7: I got also the following error:

python: symbol lookup error: /home/username/anaconda2/lib/libmkl_core.so: undefined symbol: mkl_blas_dtrsm

username_7: I finally solved this problem using two steps for my deep learning applications with Keras/Theano. Notice that I am using Ubuntu 14.04.

First, I removed mkl with the following two commands.

$ [sudo] conda install nomkl numpy scipy scikit-learn numexpr

$ [sudo] conda remove mkl mkl-service

Although mkl was removed from my Anaconda Python 3, `LinearRegression.fit` in sklearn still raised an error related to scipy. While searching the web, I found people suggesting to remove the conda scipy and install it via pip instead. So I applied this solution to my case: I removed the conda scipy and installed scipy with pip as follows:

$ [sudo] conda remove scipy

$ [sudo] pip install scipy

Removing scipy from conda also removed the packages that depend on it, such as sklearn. Hence, I installed sklearn again using pip:

$ [sudo] pip install scikit-learn

Now everything works perfectly. I am very fine without invoking mkl.

username_6: Following the instructions of @username_7 worked for me.

Thanks @username_7 . 👍

username_8: Also had to use @username_7 's solution on a fresh anaconda install on Ubuntu 16.04. The other upvoted methods didn't work for me. I'm hoping to be able to use MKL in the future.

username_9: I came across the same error while building `cvxopt` with `mkl:11.3.3` and `mkl:2017.0.0`, separately. I got around it by linking `libmkl_rt.so` and now no `LD_PRELOAD` trick is required. The linking order used by me was: `-lmkl_rt -lmkl_core -lmkl_intel_lp64 -lmkl_sequential`

username_10: @username_7's solution does indeed work, and I don't know why.

**Thanks mate!**

username_11: This occurs with Python 3.5 but not with Python 3.6.

username_12: I am using Anaconda 2 on CentOS 7 and also ran into this issue. Following @username_7's solution, the problem disappeared. Thanks!

username_13: I am using Anaconda3-4.3.0 on Ubuntu 16.04, ran into this issue as well, and solved it by following @username_7's solution.

Thanks!

username_14: For me the problem was resolved by removing numpy from `~/.local/...`. If any `pip install --user <package>` command dragged numpy into `~/.local/lib/pythonX.Y/site-packages/numpy/`, Python will always use *that* numpy, and `conda list` will not show it, so you may think you don't have it installed. `pip uninstall numpy` fixed it. I think that explains many of the issues, for instance switching to 3.6 helping, since the numpy for 3.5 won't be found anymore. Similar issues possibly exist for scipy; I didn't check.
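A small Python sketch for checking whether numpy is being picked up from the per-user site-packages (`~/.local/...`) as described above. It uses `importlib.util.find_spec` to locate numpy without importing it and `site.getusersitepackages()` for the user site path; the helper name `is_under_user_site` is hypothetical.

```python
import importlib.util
import site
from pathlib import Path


def is_under_user_site(module_file: str, user_site: str) -> bool:
    """Return True if `module_file` lives inside the per-user site-packages."""
    try:
        Path(module_file).resolve().relative_to(Path(user_site).resolve())
        return True
    except ValueError:
        return False


if __name__ == "__main__":
    # Locate numpy without executing it (so a broken MKL load cannot crash this).
    spec = importlib.util.find_spec("numpy")
    if spec is None or spec.origin is None:
        print("numpy is not importable from this interpreter")
    else:
        print("numpy would be loaded from:", spec.origin)
        if is_under_user_site(spec.origin, site.getusersitepackages()):
            print("numpy comes from the user site (~/.local); "
                  "`pip uninstall numpy` would remove that copy")
```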

username_15: For me (on Ubuntu 14.04, python 3.6.1) it started working after installing numexpr (conda install numexpr). No paths specified.

username_16: For me, problems started appearing after installing `Python 2.7` with a corresponding `numpy` alongside the `root` env. Creating a new env with Python 3 and numpy helped; none of the suggestions above did.

username_17: This issue still exists.

My environment configs are:

**VM: {guest_os: Ubuntu 14.04 64-bit, anaconda2: 4.4.0}**

I can confirm that both mkl and mkl-service are installed and reside in '/opt/anaconda2/lib/'.

However, when I run *python -c "import gensim"*, the exact error message is:

**Intel MKL FATAL ERROR: Cannot load libmkl_avx.so or libmkl_def.so**

Using *LD_PRELOAD=...* does not help me out either.

Using *LD_DEBUG=symbols python -c 'import gensim'* does give me details, and I found 4 places:

<pre>

1. 9582: symbol=mkl_dft_avx_xs_f32_1df; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_avx.so [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=python [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/opt/anaconda2/bin/../lib/libpython2.7.so.1.0 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libpthread.so.0 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libdl.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libutil.so.1 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libm.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libc.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib64/ld-linux-x86-64.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_avx.so [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libdl.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libc.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib64/ld-linux-x86-64.so.2 [0]

**9582: /opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_avx.so**: error: symbol lookup error: undefined symbol: mkl_sparse_optimize_bsr_trsm_i8 (fatal)

2. 9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/opt/anaconda2/bin/../lib/libpython2.7.so.1.0 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libpthread.so.0 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libdl.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libutil.so.1 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libm.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libc.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib64/ld-linux-x86-64.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_def.so [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libdl.so.2 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib/x86_64-linux-gnu/libc.so.6 [0]

9582: symbol=mkl_sparse_optimize_bsr_trsm_i8; lookup in file=/lib64/ld-linux-x86-64.so.2 [0]

** 9582: /opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_def.so**: error: symbol lookup error: undefined symbol: mkl_sparse_optimize_bsr_trsm_i8 (fatal)

</pre>

It reveals that the installed scipy is referencing MKL libs. But the installed MKL coming along with anaconda is located at **'/opt/anaconda2/lib/libmkl_{core,avx,def}.so'**.

As for the solutions mentioned above, I believe neither uninstalling mkl nor installing nomkl solves this issue.

If I understand correctly, *nomkl* installs the non-MKL versions of MKL-linked packages such as scipy and numpy. Therefore, installing nomkl gives up MKL's performance benefits, which the MKL versions of numpy/scipy/etc. were supposed to provide, right?

I tried to find which file references these .so files with **grep -r lmkl_avx** and **grep -r lmkl_def**, but no results were returned.

I also installed the Intel MKL package downloaded from Intel's site. A similar error log appears, but in addition I found:

<pre>

9517: symbol=COIProcessLoadSinkLibraryFromFile; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_intel_lp64.so [0]

9517: symbol=COIProcessLoadSinkLibraryFromFile; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_intel_thread.so [0]

9517: symbol=COIProcessLoadSinkLibraryFromFile; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libmkl_core.so [0]

9517: symbol=COIProcessLoadSinkLibraryFromFile; lookup in file=/opt/anaconda2/lib/python2.7/site-packages/scipy/special/../../../../libiomp5.so [0]

........

</pre>

The problem, I guess, is how to correctly link **/opt/anaconda2/lib/libmkl_{core|avx|def}.so** for scipy or gensim, either by hardcoding the paths or dynamically at link time (*-ld*).

I will keep working on it and post a solution if no one else solves it.

username_17: In my *problematic* Ubuntu vbox, this issue is solved by using:

<pre>

LD_PRELOAD=/opt/anaconda2/lib/libmkl_core.so:/opt/anaconda2/lib/libmkl_sequential.so python -c 'import gensim'

</pre>

Also, on another Ubuntu VirtualBox VM as well as a CentOS VM, after installing the same versions of Anaconda and gensim as on my *problematic* Ubuntu, I can run **python -c "import gensim"** without any additional settings. So the problem seems to be specific to that system.

username_18: On my Scientific Linux box, I fixed this issue by pinning mkl to version 11.3.3:

```conda install mkl=11.3.3```

username_19: Nothing seems to work for me here. I am getting this from a VirtualEnv set up for django. Any help is appreciated,

username_19: This did the trick when everything else failed -

sudo cp /home/akshay/anaconda2/pkgs/mkl-2017.0.1-0/lib/* /usr/lib

username_20: Running the following before any other imports solved a similar problem for me:

`sys.setdlopenflags(sys.getdlopenflags() | ctypes.RTLD_GLOBAL)`
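The same workaround written out as a self-contained Python snippet with the imports it needs. The flag change must happen before numpy/scipy are imported, and `sys.setdlopenflags` exists only on Unix-like systems. The explanation in the comments (that it lets MKL's dynamically loaded libraries such as libmkl_avx.so resolve symbols from libraries loaded earlier) is an assumption consistent with the undefined-symbol errors reported above, not something confirmed in this thread.

```python
import ctypes
import sys

# Extension modules imported after this point (and the shared libraries they
# pull in) are loaded with RTLD_GLOBAL, so their symbols become available when
# resolving libraries that are dlopen()ed later. Presumably this is what lets
# MKL's CPU-specific libraries find symbols from libmkl_core.so.
# Unix-only: sys.getdlopenflags/setdlopenflags do not exist on Windows.
sys.setdlopenflags(sys.getdlopenflags() | ctypes.RTLD_GLOBAL)

# Import the MKL-linked packages only after changing the flags, e.g.:
# import numpy
# import scipy
```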

username_21: I was having this error inside a conda environment. My problem was resolved by executing `conda install mkl` inside the environment.

username_22: I had the "Intel MKL FATAL ERROR: Error on loading function mkl_blas_avx_xdcopy". It was resolved by executing `conda update mkl`.

username_23: This issue is still persisting for me

username_24: I solved it by adding these two lines to the beginning of my code:

```python
import mkl
mkl.get_max_threads()
``` |

tensorflow/tensorflow | 916873047 | Title: How to use TF.data.Dataset for relational database like nuScenes

Question:

username_0: **System information**

- TensorFlow version (you are using): tensorflow==2.5.0 (pip installed, Ubuntu 20.04, CUDA 11.)

**Describe the feature and the current behavior/state.**

tf.data.Dataset is excellent for implementing data pipelines, but relational datasets like nuScenes (https://www.nuscenes.org/nuscenes#data-format) are currently difficult to implement with it. Please provide guidance on the steps to take, with examples.
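One common pattern, sketched here in plain Python under stated assumptions, is to resolve the relational tables into flat records first and then feed those records to tf.data. The table and field names below are a simplified, hypothetical subset of a nuScenes-style schema (rows linked by `token` foreign keys), not the devkit's actual API.

```python
from typing import Dict, Iterator, List


def index_by_token(rows: List[dict]) -> Dict[str, dict]:
    """Build a token -> row lookup so foreign keys resolve in O(1)."""
    return {row["token"]: row for row in rows}


def flat_examples(samples: List[dict], sample_data: List[dict],
                  ego_poses: List[dict]) -> Iterator[dict]:
    """Yield one flat record per sample_data row, joined with its sample and pose.

    Field names are a hypothetical, simplified nuScenes-style subset.
    """
    sample_by_token = index_by_token(samples)
    pose_by_token = index_by_token(ego_poses)
    for sd in sample_data:
        sample = sample_by_token[sd["sample_token"]]  # foreign key -> sample
        pose = pose_by_token[sd["ego_pose_token"]]    # foreign key -> ego_pose
        yield {
            "filename": sd["filename"],
            "timestamp": sample["timestamp"],
            "translation": pose["translation"],
        }
```

A generator like this could then be wrapped with `tf.data.Dataset.from_generator` (passing an `output_signature` of `tf.TensorSpec`s), with image loading and batching expressed as `Dataset.map` and `Dataset.batch` steps. Building the token indexes once up front keeps each join a dictionary lookup.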

**Will this change the current api? How?**

**Who will benefit with this feature?**

Anyone working with a relational dataset like nuScenes could use tf.data.Dataset and also get better performance in distributed training. |

python-visualization/folium | 254425120 | Title: On The Fly Marker return geolocation?

Question:

username_0: I am new to Folium and saw the feature for on-the-fly placement of markers. Is it possible to return those values in Folium?

What I am trying to do: if a person creates a region defined by markers, return those values and then run a query against that particular area. Is that possible?
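If the marker coordinates can be collected on the Python side (which, per the answer, folium does not do out of the box), the query step could be a point-in-polygon filter. Here is a minimal ray-casting sketch in Python; the function name is hypothetical, and it treats latitude/longitude as planar coordinates, which is only an approximation suited to small regions away from the poles and the antimeridian.

```python
from typing import List, Tuple

LatLon = Tuple[float, float]


def point_in_polygon(point: LatLon, polygon: List[LatLon]) -> bool:
    """Ray-casting test: is `point` inside the polygon given by its vertices?

    Coordinates are (lat, lon) pairs treated as planar x/y values.
    """
    lat, lon = point
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the line of constant latitude through the point count.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude where this edge crosses that line (lat1 != lat2 here).
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:
                inside = not inside  # each crossing to the east flips the state
    return inside
```

For example, `[p for p in points if point_in_polygon(p, region)]` keeps only the points inside the region drawn with markers.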

Answers:

username_1: That is possible but not implemented. Take a look at ipyleaflet which I believe has that feature. |

primefaces/primefaces | 70106341 | Title: When modal is true, close icon doesn't apply focus with tab key on Dialog

Question:

username_0: When modal is true, close icon doesn't apply focus with tab key on Dialog.

Status: Issue closed

Answers:

username_1: A workaround:

jQuery(document).ready(function() {

var $uiIconClose = $('.ui-icon-close');

if ($uiIconClose[0]) {

$uiIconClose.attr('tabindex', 0);

}

});

username_1: Oh, and to close it when you have focus and hit enter:

```
$uiIconClose.keydown(function (e) {
    var key = e.which;
    if (key == 13) // the enter key code
    {
        $uiIconClose.click();
        return true;
    }
});
``` |

laravelista/comments | 895669006 | Title: error in redirect

Question:

username_0: whaen i try to create a new comment he try to redirect my to /home

i try to change it but sill redirect my to /home

Answers:

username_1: When the comment is created it tries to redirect you to the previous URL appended with a hash which points the browser to the newly created comment:

```php
return Redirect::to(URL::previous() . '#comment-' . $comment->getKey());
``` |

ValveSoftware/steam-for-linux | 180517096 | Title: Steam messages slow down the computer display

Question:

username_0: #### Your system information

* Steam client version: Latest stable as of today

* Distribution (e.g. Ubuntu): Ubuntu GNOME 16.10

* Opted into Steam client beta?: [Yes/No] No

* Have you checked for system updates?: [Yes/No] Yes

#### Please describe your issue in as much detail as possible:

Steam chat message notifications are very slow to appear and slow down the whole computer.

#### Steps for reproducing this issue:

1. Wait to receive a message from a friend in chat.

Status: Issue closed |

forelleblau/ioBroker.forecastsolar | 499957859 | Title: Consider fixing the issues found by the adapter checker

Question:

username_0: I am an automatic service that looks for possible errors in ioBroker and creates an issue for it. The link below leads directly to the test:

https://adapter-check.iobroker.in/?q=https://raw.githubusercontent.com/forelleblau/ioBroker.forecastsolar

- [ ] [E112] extIcon must be the same as an icon but with github path

- [ ] [E201] Bluefox was not found in the collaborators on NPM!. Please execute in adapter directory: "npm owner add bluefox iobroker.forecastsolar"

- [ ] [E300] Not found on travis. Please setup travis

I have also found warnings that may be fixed if possible.

- [ ] [W400] Cannot find "forecastsolar" in latest repository

Thanks,

your automatic adapter checker.

P.S.: There is a community on GitHub that supports the maintenance and further development of adapters. There you will find many experienced developers who are always ready to assist anyone. New developers are always welcome there. For more information, visit: https://github.com/iobroker-community-adapters/info

Answers:

username_0: Do you need help fixing the bugs? |

alexhude/alfredworkflow-zsh-calculator | 194824815 | Title: Alfred 2 Compatibility

Question:

username_0: I tried to import this workflow with my copy of Alfred 2 but I get this error:

Would be cool to add Alfred 2 support.

Thanks!

Answers:

username_1: I have added an Alfred 2 version. However, I would recommend switching to Alfred 3, since it is a lot more powerful. Also, it is unlikely I will support Alfred 2 workflows for long.

Status: Issue closed

username_0: I'll definitely consider upgrading 👍 Thanks! |

DeanCording/node-red-contrib-ecolect | 460972121 | Title: I've solved all your problems, just check your pull request

Question:

username_0: ### I've solved all your problems, just check your pull request

Answers:

username_1: It looks like this project is abandoned! :(

username_2: Sorry, I haven't abandoned it, I just have some more pressing matters to attend to at the moment.

Thanks,

Dean

Status: Issue closed

|

uniVocity/univocity-parsers | 306666951 | Title: Trim quoted columns

Question:

username_0: In a CSV export, the column value is quoted with spaces; e.g. `" 1"`.

During import, reading it with `record.getInt` fails with a `NumberFormatException`, even though the default whitespace-trimming setting is enabled.

It looks like the quoted input is not trimmed, and the setting only trims whitespace surrounding it. It seems counter-intuitive that I would need to do my own conversion logic to handle this case.

Answers:

username_1: Thanks for the suggestion, I'm not even sure how we got this far without this as it can obviously affect a lot of people.

I'll add an option to trim spaces inside quotes in version 2.6.2, i.e., very soon.

Status: Issue closed

username_1: All done, now you can use:

* `trimQuotedValues`

* `setIgnoreLeadingWhitespacesInQuotes`

* `setIgnoreTrailingWhitespacesInQuotes`
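To illustrate what the quote-trimming options change, here is a minimal Python sketch of parsing a single field. It is not univocity's implementation: `parse_field` and its keyword arguments are hypothetical stand-ins for `setIgnoreLeadingWhitespacesInQuotes` and `setIgnoreTrailingWhitespacesInQuotes`, and it ignores delimiters and multi-line fields.

```python
def parse_field(raw, ignore_leading_in_quotes=False, ignore_trailing_in_quotes=False):
    """Parse one CSV field (hypothetical illustration of the trimming options)."""
    # Default behaviour: whitespace *around* the field (outside quotes) is trimmed.
    field = raw.strip()
    if len(field) >= 2 and field[0] == '"' and field[-1] == '"':
        # Remove the enclosing quotes and un-escape doubled quotes ("" -> ").
        value = field[1:-1].replace('""', '"')
        # Whitespace *inside* the quotes is only trimmed when explicitly requested.
        if ignore_leading_in_quotes:
            value = value.lstrip()
        if ignore_trailing_in_quotes:
            value = value.rstrip()
        return value
    return field
```

With both flags off, `" 1"` keeps its leading space, which is why a strict integer conversion such as Java's `Integer.parseInt` rejects it.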

I've released a 2.6.2-SNAPSHOT version which includes these. Let me know if you find any issues.

Thank you for using our parsers!

username_1: Version 2.6.2 released. Thanks again for the suggestion |

pytorch/pytorch | 577516332 | Title: Query padding mask for nn.MultiheadAttention

Question:

username_0: nn.MultiheadAttention already have key_padding_mask, which is fine and easy to use, it would be useful to have query_padding_mask argument.

My attention alignments look like this, and I do not like them. This probably decreases model performance.

I could probably build a length mask with the attn_mask argument, but I'm not sure how.

Answers:

username_1: Hi,

Could you please use the template provided to explain in more details what you want and why?

Thanks

username_0: @username_1

## 🚀 Feature

A length (padding) mask argument for the query in nn.MultiheadAttention.

## Motivation

It's easy to use key_padding_mask for the key argument, but it is not obvious how to do the same for the query.

## Pitch

It would be useful to have a padding mask for the query, analogous to the one for the key argument.

## Alternatives

I could probably build a length mask with the attn_mask argument, but I'm not sure how.
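A plain-Python sketch (single head, no batching; function names are hypothetical and this is not PyTorch's implementation) of why a query mask does not fit the same mechanism, and of a common workaround. A key padding mask removes *columns* of the score matrix, so every query row still has keys to attend to. Masking every key for a padded query instead leaves a row of all `-inf`, whose softmax is undefined (PyTorch typically yields NaN there). The usual alternative is to let padded queries attend normally and then zero out, or exclude from the loss, their output rows.

```python
import math

NEG_INF = float("-inf")


def softmax(row):
    """Softmax over one row of scores; an all -inf row has no valid result."""
    m = max(row)
    if m == NEG_INF:
        # Every key is masked: the normalizer would be 0, so the result is NaN.
        return [float("nan")] * len(row)
    exps = [math.exp(x - m) for x in row]  # exp(-inf) == 0.0 for masked keys
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, key_padding, query_padding):
    """Single-head scaled dot-product attention on lists of vectors.

    key_padding[j] / query_padding[i] are True at padded positions.
    Returns (outputs, weights); padded query rows come back as zeros.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    outputs, weights = [], []
    for i, qi in enumerate(q):
        # Key padding: masked keys get -inf, i.e. a whole *column* is removed.
        scores = [
            NEG_INF if key_padding[j] else scale * sum(a * b for a, b in zip(qi, kj))
            for j, kj in enumerate(k)
        ]
        w = softmax(scores)
        if query_padding[i]:
            # Query padding: drop the whole *row* after the softmax instead of
            # masking all of its keys beforehand (which would produce NaN).
            w = [0.0] * len(k)
        weights.append(w)
        outputs.append(
            [sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))]
        )
    return outputs, weights
```

In PyTorch the same idea is usually a single `masked_fill` on the attention output (or a mask applied to the loss), after reshaping the query padding mask to match the output layout.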

## Additional context

Image of the attention alignments with a key padding mask but without a query padding mask.

|

corona-warn-app/cwa-documentation | 812668678 | Title: How do you save an entry in the contact diary (Kontakttagebuch)?

Question:

username_0: On my Android phone, no save button appears when I try to add a new person to the contact diary (Kontakttagebuch).

Answers:

username_1: Hi @username_0,

maybe this helps: https://www.coronawarn.app/de/blog/2020-12-28-corona-warn-app-version-1-10/

If you have more questions, please, let us know.

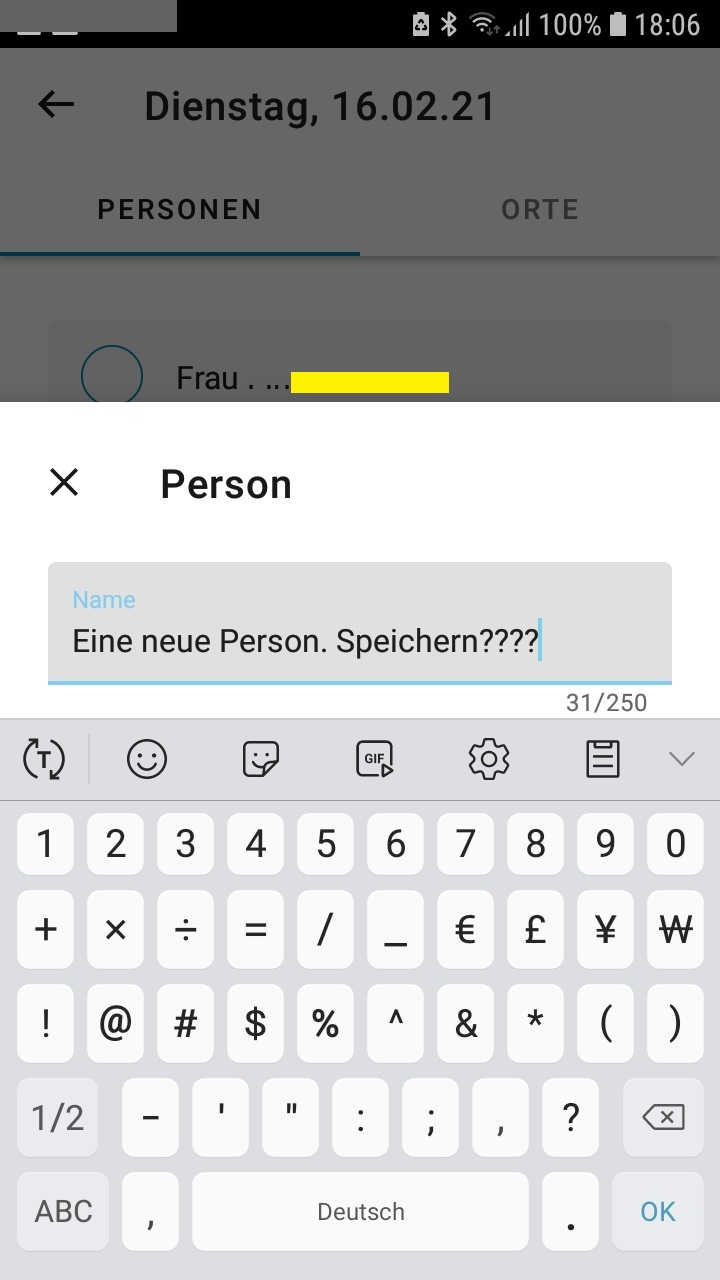

username_0: Thanks for the link. However, it is different on my phone: In the screenshot in the link, the "Speichern" button is just above the keyboard, but on my phone it looks like this: (yellow ribbon added over older entry for privacy reasons)

On my phone, the "Speichern" button does not appear.

username_2: @username_0

What happens when you press "OK"?

username_0: Thanks, pressing the (tiny) OK button works, but it isn't obvious enough for me.

username_2: I really don't know what the behavior on Android should be. @username_1, maybe transfer this to the Android repo for further investigation?

username_3: For Android German the keyboard shows "OK", for English it shows "Done". That may depend on exactly which Android keyboard is enabled though.

The screen shots and instructions on https://www.coronawarn.app/de/blog/2020-12-28-corona-warn-app-version-1-10/ seem to be from iOS, whereas the screen shots on https://www.coronawarn.app/en/blog/2020-12-28-corona-warn-app-version-1-10/ look like they are from Android.

username_4: For me, the keyboard shows a blue checkmark instead of an OK button (Android 10). Maybe this needs an additional explanatory sentence in the blogpost.

@username_0 If you are satisfied with the answers, you can close the issue :)

----

Corona-Warn-App Open Source Team

Status: Issue closed

username_3: The layout on Android differs from the layout on iOS shown in https://www.coronawarn.app/de/blog/2020-12-28-corona-warn-app-version-1-10/

Here is an example on a Google Pixel 3a phone with Android 11:

Google Pixel phones use the Gboard keyboard which offers the tick button ✅ to complete an entry.

Samsung phones use the Samsung keyboard with 🆗 (German) and Done (English).

username_3: It is now more obvious how to save the data for a person in CWA 1.14. In addition to the person's name, the data stored has new fields for telephone number and e-mail address. To store a new or edited person, there is a separate button, labelled "Speichern" in German or "Save" in English.

The screenshot below is again from a Google Pixel 3a phone with Android 11:

|

mozilla/application-services-bug-mirror | 540612882 | Title: Firefox sync error message "Your email was just returned"

Question:

username_0: User Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:72.0) Gecko/20100101 Firefox/72.0

Steps to reproduce:

Try to sync with Firefox Sync.

Actual results:

I can't sync with Firefox Sync.

Expected results:

I should be able to sync with my email account.

<EMAIL>

---

🐞 Issue is synchronized with Bugzilla [Bug 1605249](https://bugzilla.mozilla.org/show_bug.cgi?id=1605249) |

Nocommas555/TurtlePatformer | 818817252 | Title: create a basic sprite renderer class

Question:

username_0: It should include:

- animation handling

- custom data format that includes positional data about the center of the sprite to scale it

- z-layering support

Answers:

username_0: - A custom data format is no longer needed, because we switched the graphics library from turtle to tkinter

username_0: animations and z-layering are done in the first iteration of our engine

username_0: The class is done. Loading screen not implemented, but the picture loader that caches info for multiple objects is.

Status: Issue closed

|

geneontology/go-annotation | 851033241 | Title: incorrect IDA annotation of 'plasmalogen synthase activity' to the Arabidopsis LPEAT1 and LPEAT2 genes

Question:

username_0: The GO term 'plasmalogen synthase activity' describes the attachment of an acyl-CoA to 1-O-alk-1-enyl-glycero-3-phosphocholine.

There is no experimental evidence suggesting that the Arabidopsis genes LPEAT1 and LPEAT2 have such enzyme activity. The cited paper (PMID:19445718) does not support it. The substrates tested in the paper (in the Methods section) included 1-palmitoyl-2-hydroxy-sn-glycero-3-phosphocholine, 1-oleoyl-2-hydroxy-sn-glycero-3-phosphocholine, and other lipids. None of them are 1-O-alk-1-enyl-glycero-3-phosphocholines.

Here is a screen shot of the incorrect GO annotation to LPEAT1:

<img width="1612" alt="Screen Shot 2021-03-30 at 9 34 16 PM" src="https://user-images.githubusercontent.com/36932254/113092196-5f07ac80-91a2-11eb-8895-1e2b05966496.png">

Answers:

username_1: Hi @username_2

Should we close this ticket? Or will you come back to close it when it's done locally?

username_2: Hi Pascale, <NAME> and <NAME> are going to take care of the fixes - Emmanuel will close the ticket when done.

username_3: Hi Peifen, Alan and Pascale,

We have updated the GO annotations for LPEAT1 and LPEAT2 and added the correct reactions to the corresponding UniProt entries.

Best, Damien

username_3: The UniProtKB/Swiss-Prot updates of LPEAT1 and LPEAT2 should be frozen on May 12 (Freeze 2021_04) and publicly released on July 28 (Release 2021_04). For GOA, I do not have the release calendar.

Damien

username_1: Thanks !!

Status: Issue closed

|

fh1ch/node-bacstack | 275078866 | Title: writeProperty throws Exception when writing null to presentValue

Question: