repo_name | issue_id | text |

|---|---|---|

OpenHFT/Zero-Allocation-Hashing | 579899839 | Title: Fatal crash on Samsung Galaxy J5 (SM-J530F)

Question:

username_0: Hi,

This happens only on one Android device, the Samsung Galaxy J5 (SM-J530F), though other devices may be affected.

We get a fatal crash that always happens when trying to hash a byte array (the contents do not seem to matter) using xxHash:

```kotlin

LongHashFunction

.xx().hashBytes(value)

```

Taken from LogCat:

```

--------- beginning of crash

2020-03-12 12:07:08.966 16010-16280/? A/libc: Fatal signal 7 (SIGBUS), code 1, fault addr 0x1338520c in tid 16280 (.pl/...), pid 16010 ()

2020-03-12 12:07:09.051 16283-16283/? A/DEBUG: *** *** *** *** *** *** *** *** *** *** *** *** *** *** *** ***

2020-03-12 12:07:09.051 16283-16283/? A/DEBUG: Build fingerprint: 'samsung/j5y17ltexx/j5y17lte:8.1.0/M1AJQ/J530FXXU3BRJ2:user/release-keys'

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: Revision: '7'

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: ABI: 'arm'

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: pid: 16010, tid: 16280, name: .pl/... >>> com.erfg.music <<<

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: signal 7 (SIGBUS), code 1 (BUS_ADRALN), fault addr 0x1338520c

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: r0 1338520c r1 0000000c r2 ca9e95cc r3 0000000c

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: r4 6f31be58 r5 00000004 r6 00000000 r7 ca9e98c8

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: r8 00000000 r9 cb5f2c00 sl ca9e96c8 fp ca9e9654

2020-03-12 12:07:09.052 16283-16283/? A/DEBUG: ip eae9fced sp ca9e95a8 lr eae9fcf7 pc eae9fcfa cpsr 600d0030

2020-03-12 12:07:09.185 16283-16283/? A/DEBUG: backtrace:

2020-03-12 12:07:09.185 16283-16283/? A/DEBUG: #00 pc 00310cfa /system/lib/libart.so (art::Unsafe_getLong(_JNIEnv*, _jobject*, _jobject*, long long)+13)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #01 pc 005db08f /system/framework/arm/boot.oat (offset 0x1cb000) (sun.misc.Unsafe.getLong [DEDUPED]+110)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #02 pc 0040c575 /system/lib/libart.so (art_quick_invoke_stub_internal+68)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #03 pc 004116e5 /system/lib/libart.so (art_quick_invoke_stub+228)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #04 pc 000b0227 /system/lib/libart.so (art::ArtMethod::Invoke(art::Thread*, unsigned int*, unsigned int, art::JValue*, char const*)+138)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #05 pc 00204005 /system/lib/libart.so (art::interpreter::ArtInterpreterToCompiledCodeBridge(art::Thread*, art::ArtMethod*, art::ShadowFrame*, unsigned short, art::JValue*)+224)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #06 pc 001ff54d /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+588)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #07 pc 003f8c87 /system/lib/libart.so (MterpInvokeVirtualQuick+598)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #08 pc 00402714 /system/lib/libart.so (ExecuteMterpImpl+29972)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #09 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #10 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #11 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #12 pc 003f8c87 /system/lib/libart.so (MterpInvokeVirtualQuick+598)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #13 pc 00402714 /system/lib/libart.so (ExecuteMterpImpl+29972)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #14 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #15 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #16 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #17 pc 003f8c87 /system/lib/libart.so (MterpInvokeVirtualQuick+598)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #18 pc 00402714 /system/lib/libart.so (ExecuteMterpImpl+29972)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #19 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #20 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #21 pc 00200159 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb1ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+444)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #22 pc 003f8fa5 /system/lib/libart.so (MterpInvokeVirtualQuickRange+472)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #23 pc 00402794 /system/lib/libart.so (ExecuteMterpImpl+30100)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #24 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #25 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #26 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #27 pc 003f8c87 /system/lib/libart.so (MterpInvokeVirtualQuick+598)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #28 pc 00402714 /system/lib/libart.so (ExecuteMterpImpl+29972)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #29 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.186 16283-16283/? A/DEBUG: #30 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #31 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #32 pc 003f77b9 /system/lib/libart.so (MterpInvokeStatic+184)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #33 pc 003feb14 /system/lib/libart.so (ExecuteMterpImpl+14612)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #34 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

[Truncated]

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #53 pc 003feb94 /system/lib/libart.so (ExecuteMterpImpl+14740)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #54 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #55 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.187 16283-16283/? A/DEBUG: #56 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #57 pc 003f7391 /system/lib/libart.so (MterpInvokeInterface+1080)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #58 pc 003feb94 /system/lib/libart.so (ExecuteMterpImpl+14740)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #59 pc 001e6bc1 /system/lib/libart.so (art::interpreter::Execute(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame&, art::JValue, bool)+340)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #60 pc 001eb36f /system/lib/libart.so (art::interpreter::ArtInterpreterToInterpreterBridge(art::Thread*, art::DexFile::CodeItem const*, art::ShadowFrame*, art::JValue*)+142)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #61 pc 001ff535 /system/lib/libart.so (_ZN3art11interpreter6DoCallILb0ELb0EEEbPNS_9ArtMethodEPNS_6ThreadERNS_11ShadowFrameEPKNS_11InstructionEtPNS_6JValueE+564)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #62 pc 003f7391 /system/lib/libart.so (MterpInvokeInterface+1080)

2020-03-12 12:07:09.188 16283-16283/? A/DEBUG: #63 pc 003feb94 /system/lib/libart.so (ExecuteMterpImpl+14740)

2020-03-12 12:07:11.258 2748-2748/? E//system/bin/tombstoned: Tombstone written to: /data/tombstones/tombstone_07

2020-03-12 12:07:11.266 2693-2693/? E/audit: type=1701 audit(1584011231.251:1220): auid=4294967295 uid=10219 gid=10219 ses=4294967295 subj=u:r:untrusted_app:s0:c512,c768 pid=16280 comm=".pl/..." exe="/system/bin/app_process32" sig=7

2020-03-12 12:07:11.301 2962-16284/? E/ActivityManager: Found activity ActivityRecord{dc4c01c u0 com.efgd.music/.MainActivity t-1 f} in proc activity list using null instead of expected ProcessRecord{ca03534 16010:com.efgd.music/u0a219}

2020-03-12 12:07:11.398 3338-3338/? E/SKBD: bbw getInstance start

2020-03-12 12:07:11.398 3338-3338/? E/SKBD: bbw sendSIPInformation state: 6 isAbstractKeyboardView : true

2020-03-12 12:07:11.404 3338-16293/? E/SKBD: bbw sending null keyboardInfo as SIP is closed

2020-03-12 12:07:11.419 5224-5254/? E/PBSessionCacheImpl: sessionId[22976978907188413] not persisted.

```

Answers:

username_1: @username_2 I remember you looked at some other Android-related issues, would you be able to check this one out?

Thanks

username_2: Do hash functions other than xx() produce the same crash? Is `value` null? What is the length of `value`? Does hashBytes(new byte[0-16]) always produce the same crash?

username_0: I have no physical access to this device right now, since all our work is remote due to the spread of COVID. All the information I have comes from the QA team in my company, so my options are limited.

We are using xxHash to generate an HMAC for requests, so the value is mostly a UTF-8 encoded string around 200-300 bytes long. It can never be null (it's Kotlin, and the value is based on a non-nullable string). If you need more data, I will try to get hold of this device somehow.

username_2: How does this stack trace relate to the hash function?

username_0: That's the weird part. None of my code is on the stack. But if I remove the call to the hash function, everything else runs just fine. Add to this that it happens on only one device, and I think this might be a framework issue. And it's Samsung, which has a long history of breaking the Android framework in many ways.

I know that this might be impossible to fix, but I hoped somebody might have an idea.

username_2: Sorry, I have no idea.

We need more info, so it would be best to get hold of the device for debugging and to test some other hash methods.

username_2: @username_0 can you try catching exceptions when calling the hash method:

```java

LongHashFunction h = null;

long v= 0;

try {

h = LongHashFunction.xx();

} catch (Throwable e) { throw new Exception(e); }

try {

v = h.hashBytes(value);

} catch (Throwable e) { throw new Exception(e); }

```

username_0: I tried to catch the exception, but it's a fatal crash, so nothing was caught. It crashes the process entirely, bypassing even global exception handlers.
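The tombstone reports `BUS_ADRALN` inside `art::Unsafe_getLong`, and the fault address `0x1338520c` is a multiple of 4 but not of 8, which is consistent with a 64-bit read from an address that is not 8-byte aligned. A minimal Java sketch of that check, plus one way to read a `long` from a byte array without `sun.misc.Unsafe`, follows. The class and method names are hypothetical, this is not the library's code, and whether it avoids the crash on the affected device is untested.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class AlignedLongRead {

    // True when the address is a multiple of 8, i.e. naturally aligned for a 64-bit value.
    static boolean isAlignedForLong(long address) {
        return (address & 7L) == 0L;
    }

    // Reads 8 bytes starting at offset as a little-endian long, using only
    // java.nio instead of sun.misc.Unsafe.
    static long readLongLittleEndian(byte[] data, int offset) {
        return ByteBuffer.wrap(data, offset, Long.BYTES)
                .order(ByteOrder.LITTLE_ENDIAN)
                .getLong();
    }

    public static void main(String[] args) {
        // Fault address taken from the tombstone in this thread.
        System.out.println(isAlignedForLong(0x1338520cL));
        byte[] data = {0x0c, 0x52, 0x38, 0x13, 0, 0, 0, 0};
        System.out.println(Long.toHexString(readLongLittleEndian(data, 0)));
    }
}
```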

I will try more as soon as I get the device. |

fedspendingtransparency/usaspending-api | 985846576 | Title: REST GET request that returns award details using agency codes

Question:

username_0: As far as I know, the only way to get award details via an agency identifier is to call `https://api.usaspending.gov/api/v2/bulk_download/awards/`. It would be nice if a JSON response were returned. I looked through the documentation for quite some time, but it is very possible I missed something. If I did not miss the call I need, would it be possible to return this data as JSON? I am more than happy to contribute if the team does not consider this a high-priority task or does not have the time. Thank you. |

jOOQ/jOOQ | 245057401 | Title: Add Clock Configuration.clock()

Question:

username_0: Some jOOQ features depend on a clock. Currently, the optimistic locking feature is hard-wired to a call to `System.currentTimeMillis()`, which cannot be overridden by users for testing purposes.

With a `Configuration.clock()`, we could allow users to override this behaviour. (see https://github.com/jOOQ/jOOQ/issues/6445).

Another feature that could depend on overrideable timestamps is the transaction timestamp feature (not yet implemented: https://github.com/jOOQ/jOOQ/issues/5096)
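A minimal sketch of the idea, using the standard `java.time.Clock` rather than any jOOQ API: a component receives its clock from outside instead of calling `System.currentTimeMillis()` directly, so a test can pass a fixed one. `VersionStamper` is a hypothetical name; how `Configuration.clock()` would actually be wired in is not specified here.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.ZoneOffset;

final class VersionStamper {

    private final Clock clock;

    // The clock is injected, so callers decide where "now" comes from.
    VersionStamper(Clock clock) {
        this.clock = clock;
    }

    // Replaces a hard-wired System.currentTimeMillis() call.
    long nextTimestampMillis() {
        return clock.millis();
    }

    public static void main(String[] args) {
        // Production-style usage: the real system clock.
        VersionStamper live = new VersionStamper(Clock.systemUTC());
        System.out.println(live.nextTimestampMillis());

        // Test-style usage: a clock frozen at a known instant.
        Clock frozen = Clock.fixed(Instant.ofEpochMilli(1_000L), ZoneOffset.UTC);
        VersionStamper test = new VersionStamper(frozen);
        System.out.println(test.nextTimestampMillis());
    }
}
```

With the fixed clock, repeated calls return the same value, which makes timestamp-dependent behaviour deterministic in tests.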

Status: Issue closed |

Azure/azure-cli | 899928463 | Title: Is it possible to add a new baked script to the list of commands?

Question:

username_0: Is it possible to add a new baked script to the list of commands?

If I have a list of commands I want to run, it would be very helpful to keep them in that list and execute them later for diagnostics. The reason for having a list is that we don't want support engineers to be able to run arbitrary commands on the VM, only those in the allowed list.

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: f04b196d-7d11-7213-f037-f12b85b19c52

* Version Independent ID: adc05d8e-b13b-134b-5f61-42aaf23666df

* Content: [az vm run-command](https://docs.microsoft.com/en-us/cli/azure/vm/run-command?view=azure-cli-latest)

* Content Source: [latest/docs-ref-autogen/vm/run-command.yml](https://github.com/MicrosoftDocs/azure-docs-cli/blob/master/latest/docs-ref-autogen/vm/run-command.yml)

* Service: **virtual-machines**

* GitHub Login: @rloutlaw

* Microsoft Alias: **routlaw**

Answers:

username_1: Compute

username_2: @username_0 Could you help to explain what `baked script` is? What features does it need to support?

username_0: It means that, as the owner of the VM, I can define a couple of .sh scripts (e.g. a.sh, b.sh, ...) which are deployed on the VM, and then run CLI commands like this:

`az vm run-command invoke a.sh`

`az vm run-command invoke b.sh`.

This makes it easy to run recurring shell commands one at a time. It also reduces the chance of human error when support engineers are working on the VMs.
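One way to approximate this today is a thin wrapper that only builds `az vm run-command invoke` calls for scripts on an allow-list. The sketch below is an illustration under assumptions, not an Azure CLI feature: the class name, the `/opt/diagnostics` directory, and the script names are hypothetical, and it relies on `RunShellScript` executing the text passed to `--scripts` on the VM.

```java
import java.util.List;
import java.util.Set;

final class BakedScriptCommand {

    // Hypothetical allow-list of scripts already deployed on the VM.
    private static final Set<String> ALLOWED = Set.of("a.sh", "b.sh");

    // Hypothetical directory on the VM where the release pipeline puts the scripts.
    private static final String SCRIPT_DIR = "/opt/diagnostics";

    // Builds the CLI argument list, rejecting anything that is not on the allow-list.
    static List<String> build(String resourceGroup, String vmName, String script) {
        if (!ALLOWED.contains(script)) {
            throw new IllegalArgumentException("Script not in allowed list: " + script);
        }
        return List.of(
                "az", "vm", "run-command", "invoke",
                "-g", resourceGroup,
                "-n", vmName,
                "--command-id", "RunShellScript",
                "--scripts", "bash " + SCRIPT_DIR + "/" + script);
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", build("MyResourceGroup", "MyVm", "a.sh")));
    }
}
```

The returned list can be handed to `ProcessBuilder`. Note that such a wrapper only constrains callers who go through it; it does not by itself stop someone with direct CLI access from running other commands.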

username_2: @username_0 In fact, the `--scripts` parameter of `az vm run-command invoke` already accepts a script file.

For example:

```sh

az vm run-command invoke -g MyResourceGroup -n MyVm --command-id RunShellScript --scripts @scripts_file

```

May I ask what the difference is between this `baked script` and `az vm run-command invoke -g MyResourceGroup -n MyVm --command-id RunShellScript --scripts @scripts_file`?

username_0: I assume `script_file` has to be on the local machine, instead of the remote VM.

My scenario is that a developer authors a set of script files that gather diagnostic information and checks them into the source repo. These scripts eventually get deployed to the VMs via the release pipeline. They are reviewed and well written, so they are trusted and of high quality.

Then some day an issue is reported on the service, and the SRE runs these scripts on the remote VM to gather diagnostic information, instead of invoking ad-hoc scripts of relatively low quality and higher risk of damage. |

EarthMC/Issue-Tracker | 436465507 | Title: When 1.14?

Question:

username_0: I get it's probably gonna be a while until all the plugins work with 1.14, but I thought I might as well check.

Answers:

username_1: Knowing how long plugins take to update, I'd give it 2 weeks to 3 months

username_2: or a year

username_3: probably a year

username_4: likely a year

Status: Issue closed

|

frontendbr/forum | 163265542 | Title: Where can I find freelance work?

Question:

username_0: I'm just starting out in the field and would like tips on getting freelance jobs.

Which sites should I sign up for, or who should I message?

Do I need a portfolio?

And since I'm 16, am I legally allowed to freelance?

Thanks in advance!!!

Answers:

username_1: It isn't illegal, but you may run into difficulties if you can't issue an NF (nota fiscal, an invoice). To issue an NF you need to be at least a MEI (Micro Empreendedor Individual), and to be a MEI you need to be of legal age or emancipated. At 16 I got emancipated and was able to register as a MEI and issue NFs without problems.

However, I'd like to give you a tip based on my experience: freelancing isn't worth it, at least for someone at the start of their career (which seems to be your case). If you can find an internship or a steady job with a mentor more experienced than you, that would be great! You will be able to learn a lot and will feel a very good learning curve.

Freelancing without experience can be bad for you, bad for your client, and bad for other developers.

username_2: I second what the friend above said, but if you don't want to get emancipated, you can register it in the name of a relative who doesn't work, have them sign your work card as an apprentice, and that's it. You would have to read up on it a lot, though. I believe you can pick up small jobs in your city and things like that.

A company is unlikely to hire an underage apprentice for this field, or so I believe, especially depending on where you live (in the countryside, for instance). I assume that at 16 you still live with your parents, with relatively no cost of living. I would make the most of this time to specialize. Personal problems (debt, supporting yourself, a family to support) slow down your development, so take advantage of being free of that burden and having plenty of time, and throw yourself into your studies.

username_0: @username_1 and @username_2, thank you very much for the tips!!!!

I have already thought about taking an internship at a company, but the issue is the time lost commuting, and since I'm still in high school, there would be no time left to study. Hence the idea of taking freelance jobs: I can balance my time between studying, gaining experience, and earning a little extra money.

username_3: I agree with @username_1. Many clients are demanding about product quality and especially about deadlines. If you are an inexperienced developer, that can hurt you as a professional, as well as the other professionals who lost a freelance opportunity because of it. I think an internship would be better; it doesn't take up that much time, not least because an internship lasts at most 6 hours a day.

username_4: @username_0 "Having a portfolio" always helps, but I believe any developer's portfolio these days is GitHub.

So take part in open source projects, create your own, and show your work with demo sites or on CodePen (which works very well for front end).

As for where to look for work, there are several places to get freelance jobs. I personally use [Upwork](http://www.upwork.com). Since it works through bids and recommendations, at first I adopted the strategy of charging lower prices to build a history on the site, and nowadays I get contracts at hourly rates above $35 USD, which is relatively high if you live here in Brazil.

Oh, but for Upwork English is necessary: there is always an interview, sometimes by chat and sometimes by Skype call (even with the camera on).

username_5: @username_4 good tip about Upwork; I used it a lot back when it was oDesk.

@username_0 there is this repo on GitHub that contains a list of companies offering remote work: https://github.com/lerrua/remote-jobs-brazil

username_0: @username_5 @username_4 @username_3 thanks for the help!!!

I have been studying front end for nine months and I think it's time to gain a bit more experience. And @username_4, I'll try to invest more time in open source projects.

username_4: @username_0 if you want a tip on where to start: https://github.com/username_4/react-hot-redux-firebase-starter. I need a little help there. I've already sorted out the issues and I think it would be easy to send a few PRs to close them.

username_6: As most people have already said, I'll reinforce that since you don't yet carry many responsibilities, you should make the most of it to study and dedicate yourself.

Use GitHub a lot, maybe write a blog, and run experiments to build up a portfolio. That will be much more worthwhile. Beyond that, I recommend getting out there: go to events and talk to people in the community. Networking is very important for anyone who wants freelance work, since it often comes through recommendations =)

Status: Issue closed

|

pachyderm/pachyderm | 187513576 | Title: Pachctl doc links are broken

Question:

username_0: http://docs.pachyderm.io/en/latest/pachctl/pachctl.html

The links to each command are broken

Answers:

username_1: pachctl.md is autogenerated. All the links need ../pachctl/ in front of them. I think this needs to be added to `make assets` or whatever we use to autogenerate the pachctl docs. I could obviously change the links in the md file, but that doesn't solve the problem and I probably shouldn't be the one to futz with the `make` file.

username_0: It's more than just that prefix. The links right now point to `somecommand.md` when they should point to `somecommand.html`

There is a problem with how we're rendering markdown on RTD.

This was working in the past because we had a manually updated `doc/pachctl/pachctl.rst` file that mimicked the auto-generated one.

[I've opened an issue on RTD](https://github.com/rtfd/readthedocs.org/issues/2520) so we'll see what they say. Hopefully I'm just doing something silly. If not, we'll probably just run with the manual curation of the rst file.
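The two fixes discussed above (adding the `../pachctl/` prefix and pointing at `.html` instead of `.md`) could be applied as a post-processing step on each generated link target. A minimal sketch, assuming link targets are plain relative file names such as `somecommand.md`; the class and method names are hypothetical and this is not part of the actual `make` tooling:

```java
final class PachctlLinkFixer {

    private static final String PREFIX = "../pachctl/";

    // Rewrites one link target: swaps a trailing ".md" for ".html"
    // and adds the directory prefix if it is not already there.
    static String fixLink(String target) {
        String html = target.endsWith(".md")
                ? target.substring(0, target.length() - ".md".length()) + ".html"
                : target;
        return html.startsWith(PREFIX) ? html : PREFIX + html;
    }

    public static void main(String[] args) {
        System.out.println(fixLink("somecommand.md"));
    }
}
```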

username_1: adding an rst file for now. I'll touch up the rst to include 1-liner cmd summaries.

Status: Issue closed

|

mgsx-dev/gdx-gltf | 895695892 | Title: Why is the texture incomplete when the camera moves far away?

Question:

username_0: I downloaded a model from Sketchfab. The model's texture is incomplete when the camera moves far away, but it looks fine when the camera is close to the model. I'm not writing any code, just using the release jar.

Answers:

username_1: which model? which jar release? which texture?

username_0: I used both jars: "gdx-gltf-demo-desktop-2.0.0-preview.jar" and "gdx-gltf-demo-desktop-1.0.0-patch1.jar".

The models I have tested are:

1. https://sketchfab.com/3d-models/forest-warrior-527429928e0349168aa7c29e14a8c1c8 , the textures for hair and cloth go wrong.

2. https://sketchfab.com/3d-models/motelsign-post-apo-170f5144a7544fabbf203199a72e3cc8 , the texture for wood goes wrong.

Moving the camera far away leads to the problem; if you look at the model up close, it's OK.

I'm using an AMD graphics card now, and the monitor sometimes flickers. I will test on Intel integrated graphics and report again.

username_0: I've imported the models into Blender, and they also look bad when the camera is far away on this computer (Windows, AMD WX4100 graphics card).

But the models work well in Blender on another computer (Linux, Intel integrated graphics).

I don't know what happened. I think your code and jar are probably working well, and perhaps my computer's environment is the problem.

Status: Issue closed

username_1: Yeah, it sounds like an issue with your driver; maybe try upgrading it on your machine.

I'm closing now since it's not an issue with the library. |

zkSNACKs/WalletWasabi | 931680517 | Title: Start Wasabi with the OS

Question:

username_0: ### General Description

We want to provide an option to put Wasabi into the Startup folder so that it runs when the OS starts (mainly on Windows and macOS).

Part of Wasabi2.0 PREVIEW version.

Complexity | Priority |

--- | --- |

5 | 2 |

@username_2, @username_0, @username_3 and @username_1 should look into this and find a solution.

Answers:

username_1: I modified the description a bit - nothing cardinal.

username_2: I have started researching this a bit. I'm not too familiar with WiX, though, so I've read some basic documentation, and I can work on making this happen on Windows. Does that sound good, or does @username_3 have a better idea of how to move this forward?

username_3: I found the same answer as @username_2, we need to place the WW shortcut icon into the `Startup` folder.

I found a code snippet for this, and I will test it later with a dummy project to see whether it works.

username_2: @username_3 This may be a good start: https://github.com/username_2/WalletWasabi/tree/feature/2021-06-29-wix

username_3: I made a little project for testing.

```

Console.WriteLine("Hello World!");

string pathToExe = Assembly.GetExecutingAssembly().Location;

pathToExe = pathToExe.Remove(pathToExe.Length - 3);

pathToExe += "exe";

RegistryKey rkApp = Registry.CurrentUser.OpenSubKey("SOFTWARE\\Microsoft\\Windows\\CurrentVersion\\Run", true);

rkApp.SetValue("FinalApp", pathToExe.Trim());

Thread.Sleep(2000);

Console.WriteLine("Done.");

```

With this we can write to the registry only for the current user, and we don't even have to copy anything to the shortcut folder.

Note: This is for Windows only!

username_3: With a little modification we could do something like this:

```

string keyName = @"Software\Microsoft\Windows\CurrentVersion\Run";

using (RegistryKey key = Registry.CurrentUser.OpenSubKey(keyName, true))

{

if (key == null)

{

// Key doesn't exist. Create it.

}

else

{

key.DeleteValue("MyApp");

}

}

```

Maybe in the Options of the UI we could have a boolean to control this.

username_2: I, for one, would say that it's nicer to use the startup folder. One can just simply open a folder and knows what's inside. But I say that as a poweruser probably.

username_2: @username_1 A decision should be made whether the startup feature is supposed to be an install-only one or whether WW settings should contain a checkbox for this as proposed by Adam's #5956.

username_1: Yes.

Status: Issue closed

username_3: Windows: #5960

macOS: #6041

Linux: #6080 |

bincrafters/community | 563482989 | Title: [question][qt] why does the qt package not append the bin folder to PATH

Question:

username_0: I have tried to use the `qt 5.14.1` conan package and noticed that the only env variable it creates is `CMAKE_PREFIX_PATH`. This works perfectly when the project is built with cmake.

But this doesn't work if the same qt binaries need to be used elsewhere, e.g. for test automation. I tried generating a conan virtual environment, and again it provides only `CMAKE_PREFIX_PATH`, which contains the root folder of the package. I can use that, but I also expected the package to append the qt `bin` folder to the `PATH` variable. Is there any reason for not doing so?

Answers:

username_1: Hi. Conan is able to add the bin folder to the PATH without it being set explicitly in package_info. You can use tools.RunEnvironment, the run_environment argument of self.run, or the conan virtual environment generator.

username_0: @username_1 I have just installed qt 5.14.1 on both osx and windows with virtual environment generated:

`conan install qt/5.14.1@bincrafters/stable -g=virtualenv --build=missing`

I received the generated virtual env files, but when I open the `environment.sh.env` file, it mentions qt only in the `CMAKE_PREFIX_PATH` variable. Also, with the env activated, the `qmake` binary is not found. Could you please let me know what I am doing wrong?

username_1: I was not being precise enough: you need to use [virtualrunenv generator](https://docs.conan.io/en/latest/mastering/virtualenv.html#virtualrunenv-generator)

username_0: @username_1 yes, that does work. Thanks for quick support

Status: Issue closed

|

Azure/autorest.java | 461045169 | Title: Import cut off half way during generation of network v2019_02_01

Question:

username_0: Import statement is cut off half way in SubnetImpl. No type depends on this import.

```java

import com.microsoft.azure.management.network.v2019_02_01.InterfaceEndpoint;

import com.microsoft.azure.management.network.v2019_02_01.NetworkSecurityGroup;

import com.microsoft.azure.management.network.v2019_02_01.;

import com.microsoft.azure.management.network.v2019_02_01.RouteTable;

import com.microsoft.azure.management.network.v2019_02_01.ServiceEndpointPolicy;

```

Answers:

username_1: Please reopen if this is still an issue for v4 generator.

Status: Issue closed

|

TheBusyBiscuit/builds | 1086080273 | Title: LuckyPandas not showing up on the build page

Question:

username_0:

## :round_pushpin: Description (REQUIRED)

LuckyPandas isn't showing up on the builds page for some reason

## :bookmark_tabs: Steps to reproduce the Issue (REQUIRED)

1. Open your preferred web browser (Google Chrome, Microsoft Edge, Mozilla Firefox or any other web browser)

2. Go to the URL https://thebusybiscuit.github.io/builds/

3. Search for j3fftw1

4. Then you will see there is no LuckyPandas

## :bulb: Expected behavior (REQUIRED)

When going to the builds page, I expect LuckyPandas to show up with multiple builds.

Answers:

username_1: Turns out that commit 87c5a1c88a7ddd653f8efcb779c10d1fd6d3844b was pushed by the builds program at the exact same time that pull request #145 was merged, making it override the PR :LUL:

Never happened before, but just make a new PR and it will make it this time

Status: Issue closed

|

dt222cc/1dv450-dt222cc | 137542888 | Title: If statements and exceptions

Question:

username_0: Doing an assignment inside an if statement, as you do many times in your controllers (for example [here](https://github.com/username_1/1dv450-username_1/blob/master/2-api/app/controllers/api/v1/events_controller.rb#L65)), saves a line of code. But I think it makes the code harder to understand.

I prefer using `find` instead of `find_by_id`. Sure, it will throw an exception for an invalid id. But having one function that rescues, for example, all `RecordNotFound` exceptions reduces both the complexity and the number of lines of code. An example of what I mean is [here](https://github.com/thajo/1dv450_demo/blob/master/app/controllers/teams_controller.rb#L6).

Answers:

username_1: Noted

Status: Issue closed

|

LiveSplit/livesplit-core | 314794503 | Title: C API Segment History Iterator seems to be broken

Question:

username_0: When trying to figure out #120, I updated livesplit-core in LiveSplit, and it seems to panic on `Option::unwrap()` in `SegmentHistoryIter::next`, which doesn't seem to make sense. So far I couldn't figure out what is going on there, especially with Debug Mode not working (#121).

Status: Issue closed |

truedread/netflix-1080p | 471874829 | Title: Unexpected Error(opera&win) 1.14&1.15

Question:

username_0: I get error code O7111-1003, and when I look at the console I see:

```

chrome-extension://nmfikcpmiiikoaokjcpjngkbandapkcp/get_manifest.js net::ERR_FILE_NOT_FOUND

(anonymous) @ content_script.js:43

```

Before that I had 1.13 installed, and that worked perfectly.

Status: Issue closed

Answers:

username_1: Update to the latest version.

username_0: I already tried the latest version; it does not work with Opera.

Status: Issue closed

username_1: Closing as major changes have been made that have probably fixed this. |

dyatchenko/ServiceBrokerListener | 438598384 | Title: Why 'insert' trigger three TableChangedEvent?

Question:

username_0: I use the example code, but when I insert data in SSMS, the TableChanged event fires three times, in this order: `NotificationTypes.None`, `NotificationTypes.Update`, `NotificationTypes.Insert`.

I want to monitor update, insert, and delete events. When I delete or update data in SSMS it works perfectly; only the insert fires three times...

`var listener = new SqlDependencyEx(connectionString, "DatabaseName", "TableName");

listener.TableChanged += (o, e) =>

{

//logic code

};

listener.Start();

`

Thanks for any reply! |

Nervengift/kvvliveapi | 347482511 | Title: 'kvvliveapi' has no attribute 'search_by_name'

Question:

username_0:

```

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

AttributeError: module 'kvvliveapi' has no attribute 'search_by_name'

```

- Tested on Python 3.6 and Python 3.7.

- Installed via pip, version 0.1.1
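
One way to narrow down an `AttributeError` like this is to list what the installed module actually exposes. The following is a minimal sketch that assumes nothing about kvvliveapi itself: the helper names `public_names` and `has_attribute` are made up for illustration, and the standard-library `json` module stands in for the module being debugged.

```python
import importlib


def public_names(module_name):
    """Return the sorted public attribute names of an importable module."""
    module = importlib.import_module(module_name)
    return sorted(name for name in dir(module) if not name.startswith("_"))


def has_attribute(module_name, attribute):
    """Report whether the installed module exposes the given attribute."""
    return attribute in public_names(module_name)


if __name__ == "__main__":
    # `json` is only a stand-in; replace it with the module under inspection.
    print(has_attribute("json", "loads"))
    print(has_attribute("json", "search_by_name"))
```

If the expected name is missing from the list, the installed release may simply predate it, which would point to a packaging or version mismatch rather than a usage error.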

Status: Issue closed |

jhipster/jhipster-registry | 265004849 | Title: JHipster registry docker image doesn't work on OpenShift

Question:

username_0: <!--

- Please follow the issue template below for bug reports and feature requests.

- If you have a support request rather than a bug, please use [Stack Overflow](http://stackoverflow.com/questions/tagged/jhipster) with the JHipster tag.

- For bug reports it is mandatory to run the command `jhipster info` in your project's root folder, and paste the result here.

- Tickets opened without any of these pieces of information will be **closed** without any explanation.

-->

##### **Overview of the issue**

The JHipster Registry Docker image (on Docker Hub) needs root access.

It needs to write to the /target directory for logs.

This directory is not created in the Dockerfile.

<!-- Explain the bug or feature request, if an error is being thrown a stack trace helps -->

##### **Motivation for or Use Case**

I need to maintain an override of image :

`FROM jhipster/jhipster-registry:v3.1.2

RUN mkdir /target && chmod g+rwx /target

`

It's bad practice for a Docker image to need root...

<!-- Explain why this is a bug or a new feature for you -->

##### **Reproduce the error**

Just deploy jhipster/jhipster-registry on Minishift.

You'll get a "Could not write to /target/...." error

<!-- For bug reports, an unambiguous set of steps to reproduce the error -->

##### **Related issues**

<!-- Has a similar issue been reported before? Please search both closed & open issues -->

##### **Suggest a Fix**

Just add

RUN mkdir /target && chmod g+rwx /target

In the https://hub.docker.com/r/jhipster/jhipster-registry/~/dockerfile/

<!-- For bug reports, if you can't fix the bug yourself, perhaps you can point to what might be

causing the problem (line of code or commit) -->

##### **JHipster Registry Version(s)**

latest : v3.1.2

<!--

Which version of JHipster Registry are you using, is it a regression?

-->

##### **Browsers and Operating System**

<!-- What OS are you on? is this a problem with all browsers or only IE8? -->

- [X] Checking this box is mandatory (this is just to show you read everything)

Answers:

username_1: Did you use our Openshift sub-generator? I'm pretty sure it works, it's made by Red Hat

username_0: Yes, I use it.

And when using it (without touching anything else), the JHipster Registry refuses to start.

username_0: The JHipster Registry part is not running with the jhipster service account, which has the anyuid right.

But if we modify the Dockerfile as I suggested, all would be fine!

username_0: The problem comes from this commit : https://github.com/jhipster/jhipster-registry/commit/085439b9c33c5a84746d39c473f1ec8c10b78a63#diff-285a2da9b966f929edc1374c522a0449

So this is a regression; it used to work with 3.1.1.

username_2: Creating the `target` folder should be OK

@username_0 : do you want to PR ?

username_0: Hi, I don't know where the Dockerfile shown at https://hub.docker.com/r/jhipster/jhipster-registry/~/dockerfile/ lives.

It's not the same as the one in https://github.com/jhipster/jhipster-registry/blob/master/src/main/docker/Dockerfile

username_2: It's this file https://github.com/jhipster/jhipster-registry/blob/master/Dockerfile in the root folder

username_0: Ah, ok, btw why two versions?

I'll do the pull request

username_2: The Dockerfile inside `src/main/docker` is used in development only.

For Docker Hub, it's the `Dockerfile` in the root folder which is used

username_0: PR done.

There's still another problem: environment variables like ${GIT_URI} are not evaluated in this version of the Dockerfiles (you have to create an entrypoint), but I will open a new issue if I have time...

username_2: Thanks for PR, I will have a look when I have time

And yes, if you have another issue, plz open a new one, so it's clear

username_1: Thanks @username_0 for the bug report, the explanation, and the PR! You rock :-)

Status: Issue closed

username_0: No, the bug is not fixed...

```

docker run jhipster/jhipster-registry:master /bin/sh -c 'ls -la /target'

ls: /target: No such file or directory

```

Apparently this Dockerfile is used to compile and build the image, not to run it.

username_3: @username_0 Yes, the lines were not added in the right place. The correct fix is: https://github.com/jhipster/jhipster-registry/pull/192

Status: Issue closed

username_0: I confirm it works!

Thanks! |

bitfocus/companion-module-renewedvision-propresenter | 968749234 | Title: Is there any other way to do this ???

Question:

username_0: 1. Next Previous item in Current PlayList or Library.

2. Next Previous Background in current Media List.

3. Next previous Media List in Media.

4. Custom Action how to ???

how can I this ???

Answers:

username_1: Hello!

We can only send commands to Pro7 that Pro7 understands in its remote protocol.

There are no commands in the remote protocol for the items you listed in 1, 2, and 3.

You can, however, send MIDI notes to Pro7 to achieve much of what you want (using the MIDI relay Companion module, with the MIDI-relay listener program installed and running on the machine with Pro7).

The custom action does NO magic: you cannot make up new features/actions that Pro7 does not already do.

The reason I added it to the module is so that there is a way to make buttons that support any NEW features that get added to the Pro7 remote protocol *before* the Companion module is updated to support them.

It took me a couple of weeks to update the module to add triggering macros and looks; all that time, anyone could have used a custom action to trigger macros and looks (if they had asked me what custom action to type there).

It's really a support option for me to help people if they want a new feature in a rush before the Companion app is updated.

Status: Issue closed

|

8bitPit/Niagara-Issues | 799818187 | Title: Sort Favourites by most used (Like Alphabets)

Question:

username_0: I am suggesting that an option be added to sort favourites by most used. I understand there is a feature that adds suggested apps below the favourites, but this is not enough. The first problem is that it takes a while to produce suggestions. The bigger issue is that if you have a lot of favourites, there are no suggestions at all. I used to have an app called "app swap"; it was a simple concept that sorted your drawer by most used. It was genius.

Likewise I would love to see my favorites sorted by most used because right now I have to manually do this and it's extremely time consuming and annoying. The suggestions feature as stated above doesn't show suggestions with lots of favourites so what's the point?

Status: Issue closed

Answers:

username_1: I'm sorry, but we don't have plans to make the maximum number of suggestions customizable: https://github.com/username_1/Niagara-Issues/issues/454. We believe the most efficient way to set up Niagara Launcher is by having 8 favorites and accessing the rest of the apps via the alphabet (its sub-sections are sorted by usage) and with swipe actions: https://help.niagaralauncher.app/article/38-folders. |

GoogleCloudPlatform/flink-on-k8s-operator | 598791603 | Title: chart installed failed in flink-operator-v1beta1

Question:

username_0: version: flink-operator-v1beta1

I try to install the flinkoperator with helm3 and get the following errors:

helm3 install flinkoperator ./flink-operator/

Error: parse error at (flink-operator/templates/flink-operator.yaml:2756): undefined variable "$clusterName"

I changed `$clusterName` to "clusterName" manually and tried again:

helm3 install flinkoperator ./flink-operator/

Error: unable to build kubernetes objects from release manifest: [unable to recognize "": no matches for kind "Certificate" in version "certmanager.k8s.io/v1alpha1", unable to recognize "": no matches for kind "Issuer" in version "certmanager.k8s.io/v1alpha1"]

Answers:

username_1: Please try the Helm install again, following the instruction `helm repo add flink-operator-repo https://googlecloudplatform.github.io/flink-on-k8s-operator/`. Just update to the new release hosted by the Helm server.

Status: Issue closed

username_0: It works now, thanks. |

syndesisio/syndesis | 300260403 | Title: Order of items in FTP connection configuration form

Question:

username_0: I think items in FTP configuration form should be listed/sorted in more user friendly way. see picture.

Answers:

username_1: @seanforyou23 how is the ordering enforced, just by the ordering of parameters in connector?

username_1: @sunilmeddr fyi

username_2: So yeah, the forms should be rendered in whatever order the fields come back from the backend. We've a [client-side hack](https://github.com/syndesisio/syndesis/blob/master/app/ui/src/app/connections/common/configuration/configuration.service.ts#L35-L42) at the moment that we can use to specify an exact order, and then there's an open issue to either add support at the backend for an additional attribute or an `index` property to fields: #1204

username_3: @username_2 @username_1 can you tell me in which order you want them to be displayed? I'm working on some connector-related work, so I can take it.

username_4: I think we implemented this.

Status: Issue closed

|

Dwarf-Therapist/Dwarf-Therapist | 648079297 | Title: Missing goal/dream in 47.04 (attaining rank in society)

Question:

username_0: I've got a dwarf with the goal: "She dreams of attaining rank in society."

This isn't recognised by Dwarf Therapist and doesn't appear in the personality tooltip for that dwarf.

It looks like it just needs to be added to the [goals] list in [/resources/game_data.ini](https://github.com/Dwarf-Therapist/Dwarf-Therapist/blob/v41.1.7/resources/game_data.ini#L1392) but I don't know how to find out its ID number.

Answers:

username_0: I managed to find out its ID by trial-and-error modifications to game_data.ini. The following works:

14\id=13

14\name="rank"

14\desc="dreams of attaining rank in society"
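
The three lines above follow an `index\field=value` layout. As a rough sketch of how such entries group together, assuming only the layout visible in the snippet (the helper `parse_array_entries` is hypothetical and not part of Dwarf Therapist):

```python
def parse_array_entries(text):
    """Group lines shaped like `14\\id=13` into {14: {"id": "13"}}."""
    entries = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if "=" not in line:
            continue  # skip blanks and anything that is not key=value
        key, value = line.split("=", 1)
        if "\\" not in key:
            continue  # not an indexed array entry
        index, field = key.split("\\", 1)
        if not index.isdigit():
            continue
        entries.setdefault(int(index), {})[field] = value.strip().strip('"')
    return entries


# Sample copied from the comment above; raw string keeps the backslashes.
SAMPLE = r'''
14\id=13
14\name="rank"
14\desc="dreams of attaining rank in society"
'''

goal = parse_array_entries(SAMPLE)[14]
print(goal["id"], goal["name"])
```

Grouping by index like this makes it easier to spot a gap or a duplicated index before editing the file by hand.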

username_1: There are actually two new goals according to dfhack: https://github.com/DFHack/df-structures/blob/b67b3fa4b02f834dc76a2e1eae21136e0be089f7/df.units.xml#L198-L199

The best way to find new values is looking at them using dfhack (either command line or gui/gm-editor).

username_2: This should be fixed now. I've added the three missing goals in PR #224, along with the ability to group dwarves by their goal.

Status: Issue closed

|

OpenEmu/OpenEmu | 1046649875 | Title: Flickering screen when in a full screen game and using eGPU

Question:

username_0: # Summary of issue

When I'm using my [Blackmagic eGPU](https://www.apple.com/shop/product/HM8Y2VC/A/blackmagic-egpu), the screen flickers most of the time. I can occasionally get the screen not to flicker while using the eGPU, but it is not clear what does that. All it takes to fully fix it is to switch my HDMI cable directly into my mac instead of the eGPU.

## Notes:

* Does not appear to happen while the in-game menu (with the pause, reset, save state functions, etc) is showing up

* Does not appear to be something that the OS built in screen-capturing can capture. I had to record my screen with a video camera for the above video. Not sure how the rendering stack works, but I thought this might be relevant.

# Steps to reproduce

1. Use a Mac Mini with a Blackmagic eGPU

2. Plug monitor HDMI cable into eGPU port

3. Launch a game (works on multiple emulators)

4. Make the game full screen

5. Wait for the in-game menu to fade away

6. Observe flickering

# Expected Behavior

Games work without flickering while using eGPU HDMI port in full screen

# Observed Behavior

Games flicker while using eGPU HDMI port in full screen

# Debugging Information

- OpenEmu Version: v2.3.3

- macOS Version: 11.6

- Mac Mini 2018 (no built in GPU) |

sw6y15/HKEvent | 398926214 | Title: Could I replace anchors parameter in tiny-yolo-voc.cfg?

Question:

username_0: Hi, could I replace anchors parameters in tiny-yolo-voc.cfg by the output of `darknet.exe detector calc_anchors` command?

as well as in the ObjectDetection.cs of sample code in section 4?

The output of `darknet.exe detector calc_anchors` command is quite different from default value in tiny-yolo-voc.cfg. Is that related to the pixel resolution of fruit images?

Answers:

username_1: I have the same concern, when running `darknet.exe detector calc_anchors`, output value seems very large compared to the default value for voc.

username_2: Yep. **calc_anchors** uses the k-means method to cluster the bounding boxes in your training data. For more strategies, you can refer to this [link](https://github.com/AlexeyAB/darknet#how-to-improve-object-detection)

username_2: That's the correct step. Replace the anchors with the ones calculated by calc_anchors.
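
To make the clustering step concrete, here is a small pure-Python sketch of k-means over box sizes with `1 - IoU` as the distance. It is not darknet's implementation: the function names, the deterministic initialisation, and the sample boxes are all assumptions for illustration. The `rescale` helper reflects one possible explanation for the size difference that is not confirmed in this thread: YOLOv2-style cfg files are commonly described as storing anchors in grid-cell units, so pixel-sized clusters would need dividing by the network stride (32 is assumed here).

```python
def iou_wh(box, anchor):
    """IoU of two boxes given as (width, height), both placed at the origin."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iterations=50):
    """Cluster (width, height) pairs, assigning each box to its best-IoU anchor."""
    ordered = sorted(boxes, key=lambda b: b[0] * b[1])
    # Deterministic start: k boxes spread evenly across the area-sorted list.
    anchors = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda j: iou_wh(box, anchors[j]))
            groups[best].append(box)
        updated = []
        for j, group in enumerate(groups):
            if group:  # new anchor is the mean width and height of its group
                mean_w = sum(b[0] for b in group) / len(group)
                mean_h = sum(b[1] for b in group) / len(group)
                updated.append((mean_w, mean_h))
            else:      # keep an anchor that attracted no boxes
                updated.append(anchors[j])
        if updated == anchors:
            break
        anchors = updated
    return sorted(anchors, key=lambda a: a[0] * a[1])


def rescale(anchors, factor):
    """Divide anchor sizes by a factor, e.g. an assumed stride of 32."""
    return [(w / factor, h / factor) for w, h in anchors]


# Made-up box sizes in pixels: three small objects and three large ones.
boxes = [(30, 40), (32, 38), (28, 42), (200, 220), (210, 200), (190, 230)]
pixel_anchors = kmeans_anchors(boxes, k=2)
print(pixel_anchors)
print(rescale(pixel_anchors, 32))
```

Whether rescaling applies depends on the darknet version and on the `-width`/`-height` values passed to `calc_anchors`, so it is worth checking the units before pasting the numbers into the cfg.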

Status: Issue closed

|

influxdata/helm-charts | 926872091 | Title: Telegraf: no way to specify loadBalancerIP in service

Question:

username_0: Currently the telegraf chart does not provide a way to specify a static IP address for a LoadBalancer-type service, which is a basic need if you have to run it as a LoadBalancer.

The service should accept an additional value, e.g. extraSpec, that allows setting any custom field in the service spec.

link: https://github.com/influxdata/helm-charts/blob/master/charts/telegraf/templates/service.yaml

eg.

```

{{- if .Values.service.loadBalancerIP }}

loadBalancerIP: {{ .Values.service.loadBalancerIP }}

{{- end }}

``` |

dart-lang/language | 573802539 | Title: For loop specification needs total rewrite

Question:

username_0: Section `\ref{forLoop}` in the language specification needs to be completely rewritten: It specifies a highly incomplete set of static analysis rules, and it only specifies the dynamic semantics for one form of the statement (in particular, it does not cover the case where the iteration variable has a declared type).

Furthermore, it uses a very unusual approach to specify that there is a fresh iteration variable for each iteration of the loop, involving substitutions of variable names on the entire body of the loop.

It may work better to specify this kind of for loop in terms of a desugaring step where the fresh variable for each iteration is achieved by introducing regular local variable declaration in a nested block, and relying on the standard semantics of such declarations.

Answers:

username_1: Hear, hear!

The variable issue for `for (D id = e; test; inc) b` is currently rewritten into something which does an `if (first) ...` to separate the initialization from the increment.

That's important only in the case where the `inc` contains a closure capturing the loop variable.

That's something like

```dart

bool first = true;

D tmp = e;

while (true) {

D id = tmp;

if (first) {

first = false;

} else {

inc; // Can capture id.

}

if (!test) break;

{ b } // Can refer to capture of id, must be same variable as id.

tmp = id;

}

```

This ensures that the loop variable captured in `inc` is the loop variable of the following iteration, and there is only one variable per iteration.

(It also means that you can have a final loop variable, but then you can't modify it in the `inc` part).
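
The effect of this desugaring can be imitated outside Dart. The sketch below is a Python analogy, not Dart semantics: a one-element list stands in for the loop variable so that closures can capture it, and all function names are invented for illustration. It contrasts a fresh variable per iteration with a single shared variable.

```python
def run_fresh_variable_for(init, test, inc, body):
    """Imitate the desugaring: a new variable cell for every iteration."""
    first = True
    tmp = init
    while True:
        cell = [tmp]       # D id = tmp;  (fresh variable each iteration)
        if first:
            first = False
        else:
            inc(cell)      # inc acts on the variable of the new iteration
        if not test(cell):
            break
        body(cell)
        tmp = cell[0]      # tmp = id;


def run_shared_variable_for(init, test, inc, body):
    """Contrast: one variable cell shared by every iteration."""
    cell = [init]
    while test(cell):
        body(cell)
        inc(cell)


def increment(cell):
    cell[0] += 1


def collect(runner):
    """Run `for (i = 0; i < 3; i++)` with a body that captures the variable."""
    closures = []
    runner(
        0,
        lambda cell: cell[0] < 3,
        increment,
        lambda cell: closures.append(lambda: cell[0]),
    )
    # Read the captured variables only after the loop has finished.
    return [read() for read in closures]


fresh = collect(run_fresh_variable_for)
shared = collect(run_shared_variable_for)
print(fresh, shared)  # prints [0, 1, 2] [3, 3, 3]
```

With a fresh cell per iteration each closure keeps its own value, while the shared cell leaves every closure observing the final value.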

This is not a particularly *efficient* desugaring. If we know that the `inc` does not capture the variable, we can desugar to the simpler:

```dart

D tmp = e;

while (true) {

D id = tmp;

if (!test) break;

{ b }

tmp = id;

inc[tmp/id];

}

```

If `test` and/or `b` does not capture the `id` variable either, we can simplify even further, like (no capture in test):

```dart

D tmp = e;

while (test[tmp/id]) {

D id = tmp;

{ b }

tmp = id;

inc[tmp/id];

}

```

or (no capture at all):

```dart

D id = e;

while (test) {

{ b }

inc;

}

```

The issue here is that we *have* to do this optimization in order to get an efficient for loop, with the default implementation being quite inefficient. |

HERA-Team/hera_cal | 424552652 | Title: Spectral Structure in Omnical Solutions due to lack of Firstcal Offset Solving

Question:

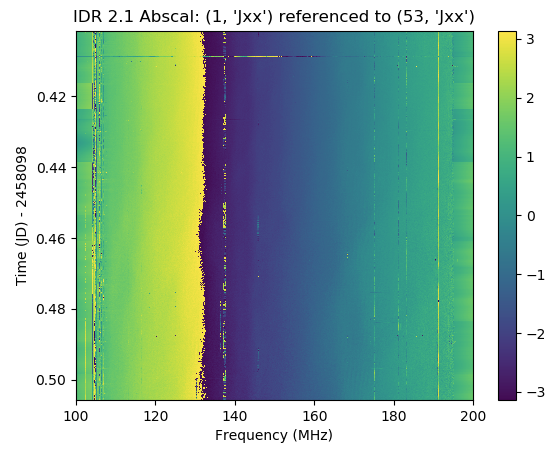

username_0: In our most recent attempt at running IDR2.2, I found considerable and worrisome new spectral structure introduced into our pipeline relative to IDR2.1.

Looking at `abscal` phases over a couple of hours, we found:

We did not observe anything like this in IDR2.1:

I believe this is traceable to issues in `redcal` and in particular to `firstcal`. In IDR2.1, we employed an iterative algorithm in `firstcal` to identify antennas that were rotated by 180 degrees, effectively introducing a pi phase offset. This step was removed in the recent `firstcal` overhaul because we didn't think it was necessary. I now believe that it is (which is why I'm writing all this up!).

Digging into `redcal` results from this failed IDR2.2 candidate, we found evidence for complicated spectral structure:

Now compare this to IDR2.1:

I believe that this is due to bad `logcal` or `omnical` solutions that result from `firstcal` not getting close enough to the truth. We see something similar in simulations. For example, here is a simulated 19 element array where the gains are perfectly characterized by a single delay and offset (and all have amplitude of 1):

Using `redcal` on the current master branch, we get a very similar pathology:

The error in phase and delay is expected, since both of those terms are affected by degeneracies, and can be fixed later in `abscal`. However, the spectral structure is not expected. Moreover, if we force the phase offsets (phi) to be small (rather than letting them range from 0 to 2pi), the effect goes away. It therefore appears necessary to add the ability for `firstcal` to solve for phase offsets in addition to delays.

This is what I'm doing in #453.

Answers:

username_0: As I noted in #453, this problem is basically fixed with the new iterative firstcal offset solving.

Here's the result:

<img width="608" alt="Screen Shot 2019-03-23 at 4 55 13 PM" src="https://user-images.githubusercontent.com/5281139/54873101-c6f1b400-4d8c-11e9-9f2f-c2c39b933050.png">

Looks just like IDR2.1!

username_0: One more update:

I have verified that this vastly improves the abscal result. Here's the same comparison again, but now for a single run of abscal.

Status: Issue closed

|

PaddlePaddle/Paddle | 509609738 | Title: Nested loop over hidden inside DynamicRNN

Question:

username_0: - paddle 1.5, python3.6

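I ran into a problem with a custom seq2seq decoding procedure:

I want to do the following: given an m*n matrix X, combine each of the m **independent, homogeneous** n-dimensional vectors in X with the DynamicRNN hidden state S, using **shared parameters**. This yields m results, from which m probabilities are then computed.

I tried two approaches:

The first replicates hidden; the second uses a nested loop inside DynamicRNN. Both fail with the following error: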

Exception: /paddle/paddle/fluid/memory/detail/meta_cache.cc:33 Assertion `desc->check_guards()` failed.

Traceback (most recent call last):

File "./train.py", line 187, in <module>

cli()

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click/core.py", line 764, in __call__

return self.main(*args, **kwargs)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click/core.py", line 717, in main

rv = self.invoke(ctx)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click/core.py", line 1137, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click/core.py", line 956, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click/core.py", line 555, in invoke

return callback(*args, **kwargs)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/click_config/__init__.py", line 45, in wrapper

return fn(**kwargs_to_forward)

File "./train.py", line 151, in train

return_numpy=False)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/paddle/fluid/executor.py", line 650, in run

use_program_cache=use_program_cache)

File "/home/wangxin/tools/py367gcc48_paddle15/lib/python3.6/site-packages/paddle/fluid/executor.py", line 748, in _run

exe.run(program.desc, scope, 0, True, True, fetch_var_name)

RuntimeError: Exception encounter.

Exception: /paddle/paddle/fluid/memory/detail/meta_cache.cc:33 Assertion `desc->check_guards()` failed.

terminate called after throwing an instance of 'std::runtime_error'

what(): Exception encounter.

train_old.sh: line 6: 28038 Aborted

- Replicating hidden inside DynamicRNN:

Defined outside DynamicRNN:

```

param = self.helper.create_parameter(

attr=self.helper.param_attr, shape=[256, 1],

dtype="float32", is_bias=False)

```

Inside DynamicRNN:

```

state = fluid.layers.fc(input=state, size=256)

state = fluid.layers.squeeze(state, axes=[0])

z1 = fluid.layers.elementwise_mul(X, state)

z2 = fluid.layers.mul(z1, param)

z3 = fluid.layers.squeeze(z2, axes=[-1])

prob = fluid.layers.softmax(input=z3, axis=-1)

prob = fluid.layers.reshape(prob, shape=[-1, 58])

```

- Nested loop inside DynamicRNN:

```

y_array = fluid.layers.create_array('float32')

i = fluid.layers.fill_constant(shape=[1], dtype='int64', value=0)

i.stop_gradient = True

limit = fluid.layers.fill_constant(shape=[1], dtype='int64', value=58)

limit.stop_gradient = True

cond = fluid.layers.less_than(x=i, y=limit)

while_op = fluid.layers.While(cond=cond)

with while_op.block():

activation = fluid.layers.gather(X, i)

activation = fluid.layers.reshape(activation, shape=[-1, 256])

activation = fluid.layers.fc(input=activation, size=64,

param_attr='pre_y_pr', bias_attr='pre_y_bs')

z = fluid.layers.elementwise_mul(activation, state)

y = fluid.layers.fc(input=z, size=1,

param_attr='y_pr', bias_attr='y_bs')

fluid.layers.array_write(y, i=i, array=y_array)

fluid.layers.increment(x=i, value=1, in_place=True)

fluid.layers.less_than(x=i, y=limit, cond=cond)

y_array, y_array_index = fluid.layers.tensor_array_to_tensor(y_array)

y_array = fluid.layers.reshape(y_array, shape=[-1, 58])

y_array = fluid.layers.softmax(input=y_array, axis=-1)

prob = fluid.layers.softmax(input=y_array, axis=-1)

```

Both versions fail with the same error. What is causing it?

Answers:

username_0: I tried defining it outside DynamicRNN.

```

param0 = self.helper.create_parameter(

attr=self.helper.param_attr, shape=[256],

dtype="float32", is_bias=False)

param = self.helper.create_parameter(

attr=self.helper.param_attr, shape=[256, 1],

dtype="float32", is_bias=False)

z1 = fluid.layers.elementwise_mul(X, param0)

z2 = fluid.layers.mul(z1, param)

z3 = fluid.layers.concat(z2, axis=-1)

z3 = fluid.layers.squeeze(z2, axes=[-1])

prob = fluid.layers.softmax(input=z3, axis=-1)

```

This code runs. But using state from inside DynamicRNN as the input fails. What is the reason?

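username_1: What do m and n stand for in the m*n matrix X? The posted code contains `z1 = fluid.layers.elementwise_mul(X, state)`, which can be a problem inside DynamicRNN, because the batch_size of `state` (shape `[batch_size, hidden_size]`) shrinks dynamically. This is a special design of DynamicRNN for variable-length data: in a batch of unequal-length samples, those that have reached their length are dropped. An elementwise multiplication between a fixed-size X and state can therefore fail.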
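
The shrinking batch described above can be illustrated without Paddle. This is a plain-Python sketch of the idea only, under the assumption that a sequence leaves the batch once its last step has been processed; `active_batch_sizes` and `mismatched_steps` are illustrative names, not Paddle APIs.

```python
def active_batch_sizes(lengths):
    """For each time step, count the sequences that still have data."""
    if not lengths:
        return []
    return [sum(1 for n in lengths if n > step) for step in range(max(lengths))]


def mismatched_steps(lengths, fixed_rows):
    """Time steps at which a fixed-size operand no longer matches the batch."""
    return [
        step
        for step, size in enumerate(active_batch_sizes(lengths))
        if size != fixed_rows
    ]


lengths = [4, 2, 3]  # three sequences of unequal length
print(active_batch_sizes(lengths))
print(mismatched_steps(lengths, fixed_rows=3))
```

Any operand whose leading dimension is fixed to the initial batch size would stop lining up with `state` from the first mismatched step onwards.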

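username_0: m is the number of candidates (for example, strings), and n is the dimension of each candidate's vector representation. Each of the m candidate vectors needs to be combined with state, possibly but not only via elementwise_mul. Is there a way to have several different elements operate on state within a single time step?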

username_0: If DynamicRNN is designed this way, does the batch size inside while_op change too? Is there any hope that while_op can achieve the intended behaviour?

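username_1: If you have no particular performance requirements, consider dropping variable-length data and the DynamicRNN interface and using padded data instead. For seq2seq in that setup, you can refer to https://github.com/PaddlePaddle/book/blob/for_paddle1.6/08.machine_translation/README.cn.md |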

CaoZ/JD-Coin | 340857816 | Title: The login browser does not pop up

Question:

username_0: After installing the requirements I ran main.py directly, but no browser popped up for login.

Answers:

username_1: Hello, does this happen every time? Which OS are you on? Could you provide the full log?

username_0: (base) C:\Users\11034\Desktop\JD-Coin-browser>pip install -r requirements.txt

Requirement already satisfied: requests in c:\programdata\anaconda3\lib\site-packages (from -r requirements.txt (line 1)) (2.18.4)

Requirement already satisfied: PyQT5 in c:\programdata\anaconda3\lib\site-packages (from -r requirements.txt (line 2)) (5.11.2)

Requirement already satisfied: pyquery in c:\programdata\anaconda3\lib\site-packages (from -r requirements.txt (line 3)) (1.4.0)

Requirement already satisfied: chardet<3.1.0,>=3.0.2 in c:\programdata\anaconda3\lib\site-packages (from requests->-r requirements.txt (line 1)) (3.0.4)

Requirement already satisfied: idna<2.7,>=2.5 in c:\programdata\anaconda3\lib\site-packages (from requests->-r requirements.txt (line 1)) (2.6)

Requirement already satisfied: urllib3<1.23,>=1.21.1 in c:\programdata\anaconda3\lib\site-packages (from requests->-r requirements.txt (line 1)) (1.22)

Requirement already satisfied: certifi>=2017.4.17 in c:\programdata\anaconda3\lib\site-packages (from requests->-r requirements.txt (line 1)) (2018.4.16)

Requirement already satisfied: PyQt5_sip<4.20,>=4.19.11 in c:\programdata\anaconda3\lib\site-packages (from PyQT5->-r requirements.txt (line 2)) (4.19.12)

Requirement already satisfied: cssselect>0.7.9 in c:\programdata\anaconda3\lib\site-packages (from pyquery->-r requirements.txt (line 3)) (1.0.3)

Requirement already satisfied: lxml>=2.1 in c:\programdata\anaconda3\lib\site-packages (from pyquery->-r requirements.txt (line 3)) (4.2.1)

(base) C:\Users\11034\Desktop\JD-Coin-browser>python app/main.py

2018-07-13 11:02:38,504 root[config] INFO: 使用配置文件 "config.json".

2018-07-13 11:02:38,504 root[config] WARNING: 配置文件不存在, 使用默认配置文件 "config.default.json".

2018-07-13 11:02:38,504 root[config] INFO: 用户名/密码未找到, 自动登录功能将不可用.

2018-07-13 11:02:38,692 jobs[daka] INFO: Job Start: 京东客户端钢镚打卡

2018-07-13 11:02:38,973 jobs[daka] INFO: 登录状态: False

2018-07-13 11:02:38,973 jobs[daka] INFO: 进行登录...

[14532:4932:0713/110240.678:ERROR:instance.cc(49)] Unable to locate service manifest for proxy_resolver

[14532:4932:0713/110240.678:ERROR:service_manager.cc(930)] Failed to resolve service name: proxy_resolver

[2032:692:0713/110241.983:ERROR:adm_helpers.cc(73)] Failed to query stereo recording.

[2032:11536:0713/110242.739:ERROR:BudgetService.cpp(160)] Unable to connect to the Mojo BudgetService.

[14532:4932:0713/110248.716:ERROR:instance.cc(49)] Unable to locate service manifest for proxy_resolver

[14532:4932:0713/110248.716:ERROR:service_manager.cc(930)] Failed to resolve service name: proxy_resolver

username_0: win10

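username_0: After that, the line I posted earlier keeps repeating.

username_1: What if you use plain Python instead of Anaconda? Could it be caused by the libs that Anaconda installs?

username_0: I don't know. Earlier I had shadowsocks running in PAC mode and got that output; now that I've turned shadowsocks off, it shows this: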

[10124:6948:0716/055115.170:ERROR:adm_helpers.cc(73)] Failed to query stereo recording.

[10124:15616:0716/055115.834:ERROR:BudgetService.cpp(160)] Unable to connect to the Mojo BudgetService.

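username_0: Does this mean I can only log in through the config file rather than the browser? emmm

username_2: I ran into the same problem. I'm on a Mac with Python 3.6.4. Is there a solution?

username_3: Exactly the same problem, with a 3.6 environment installed through Anaconda.

username_4: Mac, 3.6.5 installed via pyenv, same problem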

2018-07-17 20:51:24,376 jobs[daka] INFO: 登录状态: False

2018-07-17 20:51:24,377 jobs[daka] INFO: 进行登录...

[45427:86019:0717/205125.660066:ERROR:adm_helpers.cc(73)] Failed to query stereo recording.

2018-07-17 20:51:25.838 QtWebEngineProcess[45427:7054525] NSColorList could not parse color list file /System/Library/Colors/System.clr

2018-07-17 20:51:25.838 QtWebEngineProcess[45427:7054525] Couldn't set selectedTextBackgroundColor from default ()

2018-07-17 20:51:25.838 QtWebEngineProcess[45427:7054525] Couldn't set selectedTextColor from default ()

[45427:775:0717/205125.980140:ERROR:BudgetService.cpp(160)] Unable to connect to the Mojo BudgetService.

[45427:86019:0717/205205.516720:ERROR:stunport.cc(88)] Binding request timed out from 0.0.0.x:50771 (any)

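username_5: Mac, same problem

username_6: I'm on Win10 with the 64-bit Python 3.7 downloaded from the official site, same problem

username_7: Mac, same problem 😩 I tried a proxy and that didn't help either

username_8: It's probably a Python version issue. I had no problem with Python 3.6.3 before; after upgrading yesterday it no longer opens.

username_9: I moved self.show(), self.raise_(), and self.activateWindow() from MobileBrowser.__init__ under browser into get_cookies(url), and the window now appears as expected. Python 3.6.6

username_10: PyQT5==5.10.1

A new PyQt5 version has been released; it probably hasn't been adapted to yet.

username_11: I no longer log in with PyQt; I log in with a headless browser instead.

username_12: PyQt5 5.11.2 also fails to show the login window; PyQT5==5.10.1 works.

Status: Issue closed

username_1: Thanks everyone, the issue has been fixed~

username_13: With PyQt5 5.11.3 the browser doesn't pop up either; it only works after changing to PyQT5==5.10.1 |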

spring-projects/spring-integration | 264730557 | Title: Channel name being passed instead of actual channel

Question:

username_0: I have had trouble getting access to the actual gatherResultChannel using just the name, unlike the replyChannel (which is the object itself).

https://github.com/spring-projects/spring-integration/blob/00807d47e2880e780b1430c3aba3a588490572da/spring-integration-core/src/main/java/org/springframework/integration/scattergather/ScatterGatherHandler.java#L140

Wouldn't it be better to just do:

```

Message<?> scatterMessage = getMessageBuilderFactory()

.fromMessage(requestMessage)

.setHeader(GATHER_RESULT_CHANNEL, gatherResultChannel)

.setReplyChannel(this.gatherChannel)

.build();

```

so the actual channel is accessible to each subscriber to put their result into?

Answers:

username_1: This is an internal channel for bridging logic from the gatherer to the output:

```

PollableChannel gatherResultChannel = new QueueChannel();

Object gatherResultChannelName = this.replyChannelRegistry.channelToChannelName(gatherResultChannel);

Message<?> scatterMessage = getMessageBuilderFactory()

.fromMessage(requestMessage)

.setHeader(GATHER_RESULT_CHANNEL, gatherResultChannelName)

.setReplyChannel(this.gatherChannel)

.build();

this.messagingTemplate.send(this.scatterChannel, scatterMessage);

Message<?> gatherResult = gatherResultChannel.receive(this.gatherTimeout);

if (gatherResult != null) {

return gatherResult;

}

```

Not sure why someone would need to deal with that...

You can restore it into the object via the `HeaderChannelRegistry`, though.

The conversion to a string is intentional: it avoids serialization issues during network communication and spares the end developer the `header-channels-to-string` step: https://docs.spring.io/spring-integration/docs/4.3.12.RELEASE/reference/html/messaging-transformation-chapter.html#header-channel-registry

username_1: Closed as "Works as Designed" after reporter reaction to the answer.

Status: Issue closed

|

AlexsLemonade/resources-portal | 853578012 | Title: Allow bulk add of resources

Question:

username_0: ### Problem or idea

It is frustrating for users to fill out the form repeatedly when they are adding multiple resources. We could:

- provide a template spreadsheet/CSV that they can populate with their data and upload.

Considerations:

- How will linking with grants/teams work?

- How will specifying requirements work?

### Solution or next step

Consider this for future iterations and spec out the details (requirements) for the feature then. |

MSRDL/TSA | 333861154 | Title: Initial Benchmarking Results

Question:

username_0: I have benchmarking results for 4 baseline algorithms and have compared them with SLS. I computed both the Mean Average Precision and the AUC score for all the algorithms on a single dataset. Please find the observations attached here: [Initial Results](https://github.com/MSRDL/TSA/files/2117138/benchmarking.pdf)

Status: Issue closed

Answers:

username_1: Let's redo the benchmarking on new algorithms |

symfony/symfony | 482714759 | Title: [phpunit-bridge] Too few arguments to function PHPUnit\Runner\TestSuiteSorter::reorderTestsInSuite()

Question:

username_0: **Symfony version affected**: 4.3.2

**Description**

I'm trying to run a single test with `php bin/phpunit tests/path/to/test/file.php`.

I get an error:

```

PHP Fatal error: Uncaught ArgumentCountError: Too few arguments to function PHPUnit\Runner\TestSuiteSorter::reorderTestsInSuite(), 3 passed in /code/vendor/phpunit/phpunit/src/TextUI/TestRunner.php on line 180 and at least 4 expected in /code/bin/.phpunit/phpunit-8/src/Runner/TestSuiteSorter.php:126

Stack trace:

#0 /code/vendor/phpunit/phpunit/src/TextUI/TestRunner.php(180): PHPUnit\Runner\TestSuiteSorter->reorderTestsInSuite(Object(PHPUnit\Framework\TestSuite), 0, true)

#1 /code/bin/.phpunit/phpunit-8/src/TextUI/Command.php(201): PHPUnit\TextUI\TestRunner->doRun(Object(PHPUnit\Framework\TestSuite), Array, true)

#2 /code/bin/.phpunit/phpunit-8/src/TextUI/Command.php(160): PHPUnit\TextUI\Command->run(Array, true)

#3 /code/bin/.phpunit/phpunit-8/phpunit(17): PHPUnit\TextUI\Command::main()

#4 /code/vendor/symfony/phpunit-bridge/bin/simple-phpunit.php(259): include('/code/bin/.phpu...')

#5 /code/vendor/symfony/phpunit-bridge/bin/simple-phpunit(13): require('/code/vendor/sy...')

#6 /code/bin/phpunit(13): require('/code/vendor/sy.. in /code/bin/.phpunit/phpunit-8/src/Runner/TestSuiteSorter.php on line 126

```

**Additional context**

```

$ composer show symfony/phpunit-bridge

name : symfony/phpunit-bridge

descrip. : Symfony PHPUnit Bridge

keywords :

versions : * v4.3.3

```

```

$ php bin/phpunit --version

#!/usr/bin/env php

PHPUnit 8.3.4 by <NAME> and contributors.

```

`phpunit.xml`:

```xml

<?xml version="1.0" encoding="UTF-8"?>

<phpunit xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:noNamespaceSchemaLocation="https://schema.phpunit.de/8.3/phpunit.xsd"

bootstrap="config/bootstrap.php"

executionOrder="depends,defects"

forceCoversAnnotation="true"

beStrictAboutCoversAnnotation="true"

beStrictAboutOutputDuringTests="true"

beStrictAboutTodoAnnotatedTests="true"

verbose="true">

<testsuites>

<testsuite name="default">

<directory suffix="Test.php">tests</directory>

</testsuite>

</testsuites>

<filter>

<whitelist processUncoveredFilesFromWhitelist="true">

<directory suffix=".php">src</directory>

</whitelist>

</filter>

</phpunit>

```

Thanks in advance!

Status: Issue closed |

Azure/autorest | 193640685 | Title: Latest 1.0.0 AutoRest does not ifdef System.Security.Permissions

Question:

username_0: In the latest 1.0.0 version of AutoRest, custom exceptions do not properly wrap the using for System.Security.Permissions, which is not supported in CoreCLR (portable). In previous versions, this generated code looked like this:

```

#if !PORTABLE

using System.Security.Permissions;

#endif

```

Now it is simply this:

`using System.Security.Permissions;`

Which results in the following exception when building for portable/CoreCLR:

```

The type or namespace name 'Permissions' does not exist in the namespace 'System.Security' (are you missing an assembly reference?)

```

Answers:

username_1: Ach!

This turns out to be a side effect of the C# simplifier. I will look into this today.

G

username_1: @username_0 you should be able to disable the C# simplifier step with `-DisableSimplifier`... could you try that?

Status: Issue closed

|

kubernetes/kubernetes | 701790359 | Title: api/core/v1: The default PV Reclaim Policy is different with the document

Question:

username_0:

**What would you like to be added**:

Change the default PV Reclaim Policy in the codebase or the document

**Why is this needed**:

It is easy to misunderstand the behavior when we just read the documentation and use the api package.

### The main issue

The default PV Reclaim Policy documented [here](https://kubernetes.io/docs/tasks/administer-cluster/change-pv-reclaim-policy/#why-change-reclaim-policy-of-a-persistentvolume) is `Delete`, but the actual default Reclaim Policy in use is `Retain`, which is defined [here](https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/api/core/v1/types.go#L386). It seems that the documentation is no longer correct?

Answers:

username_0: /sig docs

username_1: This should refer to dynamic-provisioning

https://kubernetes.io/blog/2017/03/dynamic-provisioning-and-storage-classes-kubernetes/

username_0: Still `Delete` not `Retain`?

username_2: According to the comment here: https://github.com/kubernetes/kubernetes/blob/e36e68f5f6964a3e612b36bfea0bb17c8f05e083/staging/src/k8s.io/api/core/v1/types.go#L343-L349

The default policy is `Retain` when a PV is created manually and `Delete` when it is dynamically provisioned.

/remove-kind feature
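
The rule in the comment above can be sketched as a small function. This is a simplified model for illustration only, not the Kubernetes implementation; the function name and the dictionary layout are assumptions, while `persistentVolumeReclaimPolicy` is the field name used in the PV spec:

```python
def effective_reclaim_policy(pv, dynamically_provisioned):
    """Return the reclaim policy a PV ends up with under the rule quoted above.

    pv is a plain dict shaped like a PersistentVolume manifest (hypothetical
    input for this sketch). An explicitly set policy always wins; otherwise
    the default depends on how the volume was created.
    """
    explicit = pv.get("spec", {}).get("persistentVolumeReclaimPolicy")
    if explicit:
        return explicit
    # Default: Delete for dynamically provisioned volumes, Retain for manual ones.
    return "Delete" if dynamically_provisioned else "Retain"


if __name__ == "__main__":
    manual_pv = {"spec": {}}
    print(effective_reclaim_policy(manual_pv, dynamically_provisioned=False))
    print(effective_reclaim_policy(manual_pv, dynamically_provisioned=True))
```

Read this way, the two documentation pages do not conflict: one describes dynamically provisioned volumes and the other describes the API default for manually created ones.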

username_0: OK, the docs here https://kubernetes.io/docs/concepts/storage/persistent-volumes/#delete are correct. Thanks for your time, @username_2.

Status: Issue closed

|

kapsakcj/nanoporeWorkflow | 640607328 | Title: remove git-lfs requirement & alter test data format

Question:

username_0: Currently, simply downloading the scripts with `git clone` results in errors due to git-lfs. This is frustrating, but I think it is important to keep test data in the repo somehow.

One possible workaround is to split the 3 fast5 files (each ~240-362 MB in size) into individual fast5 files. Right now there are a total of 9576 reads within these 3 fast5s, and we may be able to split them so that there is 1 fast5 file per read. File sizes would be MUCH smaller and would likely avoid having to use git-lfs.

I think the GitHub limit is 100MB/file, 100GB/repository.

SciComp has a module `fast5/2.0.1` for running `ont_fast5_api` which should allow us to split the fast5s. https://github.com/nanoporetech/ont_fast5_api#multi_to_single_fast5
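
Before and after splitting, it may help to confirm which files in the test data actually exceed the per-file limit. A minimal sketch using only the standard library; the 100 MB threshold is the figure guessed above and is an assumption, as is the helper name:

```python
import os

# Assumption: 100 MB per file, the limit guessed in the post above.
LIMIT_BYTES = 100 * 1000 * 1000


def files_over_limit(root, limit_bytes=LIMIT_BYTES):
    """Return (path, size) pairs for files under root larger than limit_bytes, biggest first."""
    oversized = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Do not descend into git's own object store.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # skip symlinks, which may be broken
            size = os.path.getsize(path)
            if size > limit_bytes:
                oversized.append((path, size))
    return sorted(oversized, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for path, size in files_over_limit("."):
        print(f"{size / 1e6:8.1f} MB  {path}")
```

Run from the repository root, this lists any remaining files that would still need git-lfs; an empty result suggests the single-read files all fit under the threshold.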

Answers:

username_0: It was pretty easy to split the batch fast5s into single-read fast5s

```

ml fast5/2.0.1

cd github/nanoporeWorkflow/t/data

multi_to_single_fast5 --input_path SalmonellaLitchfield.FAST5 --save_path single-read-fast5s/ -t 16

```

I haven't committed/pushed these files to GitHub yet; still in progress on M3.

username_0: This commit added the single-read fast5s. Still need to remove multi-read fast5s and overhaul TravisCI tests.

https://github.com/username_0/nanoporeWorkflow/commit/c7def8f5d3bbd2056ed67109eb225e72cb646e5a |

bnicenboim/eeguana | 709562761 | Title: More control over plot_components()

Question:

username_0: R version 4.0.2 (2020-06-22)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 10 x64 (build 19041)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252 LC_CTYPE=English_United States.1252 LC_MONETARY=English_United States.1252 LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] stringr_1.4.0 data.table_1.13.0 ggplot2_3.3.2 dplyr_1.0.2 eeguana_0.1.4.9000

loaded via a namespace (and not attached):

[1] Rcpp_1.0.5 prettyunits_1.1.1 ps_1.3.4 assertthat_0.2.1 rprojroot_1.3-2 digest_0.6.25 utf8_1.1.4 R6_2.4.1

[9] cellranger_1.1.0 backports_1.1.10 pillar_1.4.6 rlang_0.4.7 curl_4.3 readxl_1.3.1 rstudioapi_0.11 car_3.0-9

[17] callr_3.4.4 desc_1.2.0 labeling_0.3 devtools_2.3.2 foreign_0.8-80 bit_4.0.4 munsell_0.5.0 MBA_0.0-9

[25] compiler_4.0.2 pkgconfig_2.0.3 pkgbuild_1.1.0 tidyselect_1.1.0 tibble_3.0.3 rio_0.5.16 fansi_0.4.1 crayon_1.3.4

[33] withr_2.3.0 grid_4.0.2 gtable_0.3.0 lifecycle_0.2.0 magrittr_1.5 scales_1.1.1 zip_2.1.1 cli_2.0.2