| repo_name | issue_id | text |
|---|---|---|

cyberway/cyberway | 483177787 | Title: Replaying of mainnet fails on nodeosd version 2.0.0

Question:

username_0: **Steps to reproduce**

1. Configure the node to connect to mainnet.

2. Start a resync.

**Actual result**

Resync fails on block 121492 with error:

```
error 2019-08-21T02:58:11.318 nodeos producer_plugin.cpp:319 on_incoming_block ] 3030000 block_validate_exception: Block exception
receipt does not match
{"producer_receipt":{"status":"executed","cpu_usage_us":10186,"net_usage_words":31,"ram_kbytes":1,"storage_kbytes":1,"trx":[1,{"signatures":["<KEY>","<KEY>"],"compression":"none","packed_context_free_data":"","packed_trx":"1bf95a5d90da2a0819b700000000000002001a76f1e80a706400000000a84c77d501304b4279f82a98b3000000804d9731ad57304b4279f82a98b37076e0b8179476fc4472652d63796265727761792d73696c6e79692d692d6e6164657a686e79692d626c6f6b636865696e2d313536353938343639323334372d3135363632343237353834393210270000000080ab8e470000e04725b7e9ad0100000000000070640000e04725b7e9ad100000000000007064304b4279f82a98b300"}]},"validator_receipt":{"status":"executed","cpu_usage_us":10186,"net_usage_words":31,"ram_kbytes":1,"storage_kbytes":2,"trx":[1,{"signatures":["SIG_K1_KgdX195w<KEY>","SIG_K1_<KEY>"],"compression":"none","packed_context_free_data":"","packed_trx":"1bf95a5d90da2a0819b700000000000002001a76f1e80a706400000000a84c77d501304b4279f82a98b3000000804d9731ad57304b4279f82a98b37076e0b8179476fc4472652d63796265727761792d73696c6e79692d692d6e6164657a686e79692d626c6f6b636865696e2d313536353938343639323334372d3135363632343237353834393210270000000080ab8e470000e04725b7e9ad0100000000000070640000e04725b7e9ad100000000000007064304b4279f82a98b300"}]}}

nodeos controller.cpp:1284 apply_block
{}
nodeos controller.cpp:1314 apply_block
rethrow
{}
nodeos controller.cpp:1357 push_block
stacktrace:
0# 0x0000000000E6300C in /opt/cyberway/bin/nodeos
1# 0x00000000005D0EDE in /opt/cyberway/bin/nodeos
2# 0x0000000000AA5AE7 in /opt/cyberway/bin/nodeos
3# 0x0000000000ABC835 in /opt/cyberway/bin/nodeos
4# 0x0000000000A9B393 in /opt/cyberway/bin/nodeos
5# 0x0000000000A1A43B in /opt/cyberway/bin/nodeos
6# 0x00000000005D430E in /opt/cyberway/bin/nodeos
7# 0x0000000000882D3B in /opt/cyberway/bin/nodeos
8# 0x00000000006FDEEB in /opt/cyberway/bin/nodeos
9# 0x00000000007287F5 in /opt/cyberway/bin/nodeos
10# 0x00000000006EF68B in /opt/cyberway/bin/nodeos
11# 0x000000000071D6D1 in /opt/cyberway/bin/nodeos
12# 0x000000000047FE4D in /opt/cyberway/bin/nodeos
13# 0x0000000000473D7F in /opt/cyberway/bin/nodeos
14# __libc_start_main in /lib/x86_64-linux-gnu/libc.so.6
15# 0x000000000047104A in /opt/cyberway/bin/nodeos
```

**Expected result**

No errors.
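To narrow the mismatch down, the two receipts in the log can be compared field by field. The sketch below is a hypothetical helper, not part of nodeos; it diffs only the scalar fields copied from the log and leaves out the `trx` payloads, whose signatures are redacted.

```python
def diff_receipts(producer: dict, validator: dict) -> dict:
    """Return {field: (producer_value, validator_value)} for fields that differ."""
    keys = producer.keys() | validator.keys()
    return {
        key: (producer.get(key), validator.get(key))
        for key in sorted(keys)
        if producer.get(key) != validator.get(key)
    }


# Scalar fields copied from the "receipt does not match" log entry
# (the signed "trx" payloads are omitted).
producer_receipt = {
    "status": "executed",
    "cpu_usage_us": 10186,
    "net_usage_words": 31,
    "ram_kbytes": 1,
    "storage_kbytes": 1,
}
validator_receipt = {
    "status": "executed",
    "cpu_usage_us": 10186,
    "net_usage_words": 31,
    "ram_kbytes": 1,
    "storage_kbytes": 2,
}

print(diff_receipts(producer_receipt, validator_receipt))
# {'storage_kbytes': (1, 2)}
```

Only `storage_kbytes` differs (1 for the producer, 2 for the validator), which hints that the replaying node accounts storage differently for this transaction; that reading is an inference from the log, not a confirmed cause.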

Status: Issue closed |

CartoDB/mobile-sdk | 1074318995 | Title: handling cross tile polygons labels

Question:

username_0: In my custom version of OpenMapTiles I have landuse polygons that can have a name.

I have an issue rendering those labels when a polygon is split across two tiles: the label is rendered twice. What I would like is a single label in the middle of the polygon. I could try using a minimum text distance, but that will not always work, depending on the polygon size.

You can see the tile boundaries in OpenStreetMap (thin white lines).

<img width="1284" alt="Screenshot 2021-12-08 at 11 57 36" src="https://user-images.githubusercontent.com/655344/145197377-7bd0a32c-ce2f-4194-955f-011106cbcbe9.png">

Answers:

username_1: This is problematic with tiles, unfortunately. That is also the main reason why there are layers like 'water_name', 'transportation_name' in vector tiles in addition to 'water' and 'transportation'.

If the polygon feature includes an id (or perhaps you can use the 'name' field as the id), the label should be rendered only once, but it may jump around when zooming or panning the map.
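Conceptually, deduplicating by id works because the fragments of one polygon in neighboring tiles carry the same id, so a renderer can keep the first label and drop the rest. The Python sketch below only illustrates that idea; it is not the CARTO Mobile SDK's implementation, and `Fragment` and `labels_to_render` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Fragment:
    """One piece of a labelled polygon as it appears in a single tile (hypothetical)."""
    tile: Tuple[int, int, int]  # (zoom, x, y)
    feature_id: Optional[int]
    name: str


def labels_to_render(fragments: List[Fragment]) -> List[Fragment]:
    """Keep one label per feature, using the name as a fallback id."""
    seen = set()
    kept = []
    for frag in fragments:
        # Fragments of the same polygon in different tiles share this key.
        key = frag.feature_id if frag.feature_id is not None else ("name", frag.name)
        if key in seen:
            continue  # another tile already produced a label for this feature
        seen.add(key)
        kept.append(frag)
    return kept
```

Which fragment survives depends on the order in which tiles are processed, one plausible reason a label can jump around while panning or zooming. Falling back to `name` also merges distinct polygons that happen to share a name.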

username_0: @username_1 OK, you confirm what I was thinking. I did not really want to add another layer just for those names, as it would make the tiles bigger, but I get it.

About your point on ids: indeed, landcover and landuse don't have ids (for size reasons, I would guess).

Everything you say makes sense, and I understand you can't do much more. I'll stick with this for now. Thanks for the explanation!

Status: Issue closed

|

dotnet/efcore | 1003949121 | Title: Can't generate a migration using HiLo

Question:

username_0: When I generate migrations, I get this error for all the primary keys that are configured to use HiLo.

### Include provider and version information

EF Core version: 6.0.0-rtm.21471.18

Database provider: Microsoft.EntityFrameworkCore.SqlServer

Target framework: .NET 6.0

Operating system: Windows 10 Pro

IDE: Visual Studio 2019 16.3

Answers:

username_1: Thanks, I can see the bug and have submitted #26136 to fix this for 6.0.

username_0: Thank you @username_1

Status: Issue closed


username_1: As a workaround, you can replace SqlServerModelBuilderExtensions with SqlServerPropertyBuilderExtensions on all property configurations.

Status: Issue closed

|

neillturner/kitchen-puppet | 97048356 | Title: Kitchen: Message: Failed to complete #converge action: [undefined local variable or method `modules_path' for

Question:

username_0: It seems the 0.0.29 release is broken:

```
E, [2015-07-24T12:50:03.996708 #25439] ERROR -- Kitchen: Class: Kitchen::ActionFailed
E, [2015-07-24T12:50:03.996737 #25439] ERROR -- Kitchen: Message: Failed to complete #converge action: [undefined local variable or method `modules_path' for #<Kitchen::Provisioner::PuppetApply:0x007f913e1752d8>]
E, [2015-07-24T12:50:03.996765 #25439] ERROR -- Kitchen: ---Nested Exception---
E, [2015-07-24T12:50:03.996839 #25439] ERROR -- Kitchen: Class: NameError
E, [2015-07-24T12:50:03.996889 #25439] ERROR -- Kitchen: Message: undefined local variable or method `modules_path' for #<Kitchen::Provisioner::PuppetApply:0x007f913e1752d8>
E, [2015-07-24T12:50:03.996921 #25439] ERROR -- Kitchen: ------Backtrace-------
E, [2015-07-24T12:50:03.996949 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/kitchen-puppet-0.0.29/lib/kitchen/provisioner/puppet_apply.rb:535:in `modules'
E, [2015-07-24T12:50:03.996977 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/kitchen-puppet-0.0.29/lib/kitchen/provisioner/puppet_apply.rb:814:in `prepare_modules'
E, [2015-07-24T12:50:03.997006 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/kitchen-puppet-0.0.29/lib/kitchen/provisioner/puppet_apply.rb:361:in `create_sandbox'
E, [2015-07-24T12:50:03.997032 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/provisioner/base.rb:61:in `call'
E, [2015-07-24T12:50:03.997061 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:366:in `block in converge_action'
E, [2015-07-24T12:50:03.997089 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:488:in `call'
E, [2015-07-24T12:50:03.997117 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:488:in `synchronize_or_call'
E, [2015-07-24T12:50:03.997144 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:453:in `block in action'
E, [2015-07-24T12:50:03.997169 #25439] ERROR -- Kitchen: /opt/rbenv/versions/2.1.6/lib/ruby/2.1.0/benchmark.rb:279:in `measure'
E, [2015-07-24T12:50:03.997196 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:452:in `action'
E, [2015-07-24T12:50:03.997224 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:362:in `converge_action'
E, [2015-07-24T12:50:03.997254 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:341:in `block in transition_to'
E, [2015-07-24T12:50:03.997330 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:340:in `each'
E, [2015-07-24T12:50:03.997363 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:340:in `transition_to'
E, [2015-07-24T12:50:03.997390 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:160:in `verify'
E, [2015-07-24T12:50:03.997416 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:189:in `block in test'
E, [2015-07-24T12:50:03.997442 #25439] ERROR -- Kitchen: /opt/rbenv/versions/2.1.6/lib/ruby/2.1.0/benchmark.rb:279:in `measure'
E, [2015-07-24T12:50:03.997470 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/instance.rb:185:in `test'
E, [2015-07-24T12:50:03.997504 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/command.rb:176:in `public_send'
E, [2015-07-24T12:50:03.997531 #25439] ERROR -- Kitchen: /my/user/home/.bundle/gems/test-kitchen-1.4.1/lib/kitchen/command.rb:176:in `block (2 levels) in run_action'
```

Answers:

username_1: I guess we should not merge pull requests (https://github.com/username_3/kitchen-puppet/pull/58) without RSpec tests anymore.

username_2: I completely missed that there weren't any tests.

Sorry about this regression; I should have reviewed that better. I'll revert that PR so master is clean, and ask @username_3 if we can pull the 0.0.29 release.

Apologies for any inconvenience this caused

username_3: I didn't have time to test; I just reviewed the code. I will yank the gem. :-)

username_2: Thanks @username_3. Could you also revert the PR? #58

Turns out I don't have revert perms :)

username_3: reverted.

username_0: thanks guys!

username_3: Closing, as the code is reverted. Not sure what I'd do about the contribution.

Status: Issue closed

|

fluttercommunity/flutter_sms | 623971475 | Title: Documentation improvements

Question:

username_0: Gentlefolk.

Can I ask for some documentation improvements?

1) What is the difference between sendSMS and launchSMS?

2) What actions are required to support dual SIM cards?

Answers:

username_1: 1. There should be a doc comment that explains it for each method

2. No support at this time but PRs are welcome

3. General log messages

Status: Issue closed

username_1: sendSMS is for using the URL scheme, and launchSMS is for opening the native dialog. |

SecurityInnovation/Smart-Contract-CTF | 314363552 | Title: Top-left menu link to SI homepage is broken

Question:

username_0: The top-left corner link to the SI home page is a broken anchor link: it starts with a ``#`` and needs to be absolute.

Currently links to: ``http://blockchain-ctf.securityinnovation.com/#/https://securityinnovation.com``

Rendered code:

```<a target="_blank" class="pure-menu-link" href="#/https://securityinnovation.com">Security Innovation</a>```
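Python's `urllib.parse.urljoin` resolves relative references roughly the way a browser does (RFC 3986), so it can show where each form of the `href` ends up when resolved against the CTF page from the report.

```python
from urllib.parse import urljoin

BASE = "http://blockchain-ctf.securityinnovation.com/"

# Current href: a fragment-only reference, so it stays on the current page.
broken = urljoin(BASE, "#/https://securityinnovation.com")

# Absolute href: a different scheme and host replace the base URL entirely.
fixed = urljoin(BASE, "https://securityinnovation.com")

print(broken)  # http://blockchain-ctf.securityinnovation.com/#/https://securityinnovation.com
print(fixed)   # https://securityinnovation.com
```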

Answers:

username_1: Fixed in commit 70d441221f2a81f16cad790ef5685e82d25beaf0

Status: Issue closed

|

kubernetes/kubernetes | 920409863 | Title: Container Lifecycle Hooks exit on non-0 exit code

Question:

username_0: Hi,

I don't understand why Container Lifecycle Hooks are instantly killed by Kubernetes (exit code 137) when they run a command that returns a non-zero exit code. Sometimes a non-zero exit code is expected behavior, and I don't understand why this is not possible with Kubernetes.

Answers:

username_1: /kind support

/sig node

username_2: https://kubernetes.io/docs/tasks/configure-pod-container/attach-handler-lifecycle-event/#discussion.

The Container's state will only change to Running when the postStart hook is successfully executed, so I don't understand what your question is?

username_2: /triage needs-information

username_3: Kubernetes does not use issues on this repo for support requests. If you have a question on how to use Kubernetes or to debug a specific issue, please visit our [forums](https://discuss.kubernetes.io/).

It looks like we have some documentation for this already as linked by @username_2 but I will also mark this with a docs tag.

/kind documentation

/close |

snapframework/snap-server | 52068779 | Title: Allow custom formats for the Access logs; like JSON.

Question:

username_0: I have been reading through the code and I am pretty sure that I need to override the logA method in order to get custom logging support. I [asked a question on SO about it just in case][1].

But I don't think that I can currently override this logA functionality without changing the code inside of Snap Server. If this is correct then, for this issue, it would be great if I could inject a method into the snap server configuration that would intercept the access log generation and let me write my own access log lines in a custom format.

[1]: http://stackoverflow.com/questions/27496925/haskell-snap-framework-configuring-custom-logging
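The request boils down to making the access-log formatter a function supplied through configuration instead of a hard-coded one. The sketch below shows that idea in Python, not Snap's Haskell API: `ServerConfig`, `AccessLogEntry`, `common_log_line`, and `json_log_line` are hypothetical names, and the default only loosely follows the Common Log Format.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class AccessLogEntry:
    """Hypothetical per-request data a server could hand to the log handler."""
    client: str
    method: str
    path: str
    status: int
    size: int


def common_log_line(entry: AccessLogEntry) -> str:
    # Simplified Common Log Format (timestamp and ident fields omitted).
    return f'{entry.client} "{entry.method} {entry.path}" {entry.status} {entry.size}'


def json_log_line(entry: AccessLogEntry) -> str:
    # Custom format: one JSON object per line, easy to ship to Logstash/Kibana.
    return json.dumps({"client": entry.client, "method": entry.method,
                       "path": entry.path, "status": entry.status,
                       "size": entry.size}, sort_keys=True)


@dataclass
class ServerConfig:
    # The injected handler; defaults to the built-in format.
    access_log_handler: Callable[[AccessLogEntry], str] = common_log_line


def log_access(config: ServerConfig, entry: AccessLogEntry) -> str:
    """The server calls whatever handler the configuration provides."""
    return config.access_log_handler(entry)
```

Keeping the default handler means existing users see no change, while `ServerConfig(access_log_handler=json_log_line)` switches the output format without touching the server code.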

Answers:

username_0: @username_1 I'm currently working on this code in a branch because I really really want and need this functionality because it would allow me to output all of my logs in JSON format. If I were to write this code such that it could be merged into both 0.9-stable and master would you accept both PR's?

You can see my initial attempts here: https://github.com/snapframework/snap-server/compare/0.9-stable...username_0:issue/62-custom-access-and-error-log-handlers

username_0: Please have a look at my initial attempts and let me know if I am going in the right direction. I'd like feedback now before I spin my wheels too fast in one direction. :D

username_0: I have tested this branch against my Snap application My Reminders and it is working:

So my branch seems to be workable to accomplish the goal of logging your access and error logs in a custom format.

You can see an example of me using this branch in the My Reminders code here: https://bitbucket.org/atlassianlabs/my-reminders/branch/issue/MR-7-json-logs-for-my-reminders#diff

username_0: Ping. These PRs have been open for a week now without comment. Sorry that I'm pinging so soon, but:

1. I suspect that there may still be more work to do on these PRs, especially if you want me to use the OutputStream code.

1. The sooner this code gets released, the sooner I can hook up better Logstash / Kibana logging to my services, which I think they desperately need.

Cheers and sorry if I'm commenting too frequently! Just tell me to stop. :smile: I won't mind!

username_1: I was on vacation all last week and didn't get to it on the weekend because I have a houseguest. I'll take a look today.

username_0: Wow, I am such a pest. I am really sorry. Thank you so much for looking at this so soon. In my defence I guess I'm just excited about submitting changes back to snap.

username_1: Believe me, I'm quite happy you sent the patches :)

username_0: control your log file output format. The two functions are setAccessLogHandler and setErrorLogHandler. These methods let you control how the access and error log lines are rendered, respectively. This is useful if you wish to modify the log line output; for example, if you wish to log in a custom data format (like JSON), now you can. For more information, please read the docs.

What do you think? I was trying for short and to the point.

username_0: @username_1 Also, I have bumped the snap-server version to 0.9.6 because I have only made additive changes. Not sure if anybody else has made breaking changes that would require a bump to 0.10.0? At any rate, you can see that my additions to 0.9-stable still build against snap 0.14-stable: https://bitbucket.org/snippets/username_0/rkpK7

Cheers!

username_1: See my comment on the changeset, we will need a major bump for this.

Your initial wording sounds OK to me, but the best way to handle this will be to open a pull request on snap-website; we can discuss the minutiae there.

username_0: I have responded: https://github.com/snapframework/snap-server/pull/71/files#r31794779

I hope that changing the Config instance is not enough to prevent a backport. After all, it implements Monoid, and even the docs recommend creating it with the Monoid instance. |

coleifer/huey | 525804822 | Title: Cancel a long running task?

Question:

username_0: Hi @username_1

Please advise how to correctly cancel a long-running task.

There is a `CancelExecution` exception, but it only works in pre-execute hooks.

I could kill the worker, but if the task has retries, it will start again.

Answers:

username_1: There's no way to do that, unfortunately. You can't easily interrupt a running thread, and it adds a lot of complexity -- should an interrupted task be marked as an error? Should it be retried? What about subsequent executions?

The solution is to modify your task to track its time and cancel itself if it has the potential to run for a long time. You can do this with socket timeouts if it's making a slow network call or, if there is a loop, by performing a check in the loop body.
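A minimal sketch of the self-cancelling loop, assuming the work is a loop over items: the function checks a deadline with `time.monotonic()` on every iteration and stops on its own. `process_with_deadline` and `TaskTimedOut` are hypothetical names; in huey the body would live inside a function decorated with `@huey.task()`. Raising means huey treats the run as failed, so with retries configured it may run again; returning early instead avoids that, which is exactly the "error or retry?" choice the answer mentions.

```python
import time
from typing import Callable, Iterable, List


class TaskTimedOut(Exception):
    """Raised by the task itself when it exceeds its time budget (hypothetical)."""


def process_with_deadline(items: Iterable, handle: Callable, max_seconds: float,
                          clock: Callable[[], float] = time.monotonic) -> List:
    deadline = clock() + max_seconds
    results = []
    for item in items:
        # Check the budget inside the loop body, before starting more work.
        if clock() > deadline:
            raise TaskTimedOut(f"stopped after {len(results)} items")
        results.append(handle(item))
    return results
```

For a slow network call, the equivalent is a timeout on the call itself, for example `urllib.request.urlopen(url, timeout=10)`.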

Status: Issue closed

|

CRAlpha/react-native-wkwebview | 195732031 | Title: clearCacheForSingleFile is not work .and How set cache mode???

Question:

username_0: The user plays three websites, e.g. 'http://xxx.com/gamex.html'.

I want to remove one cached page, so I use clearCacheForSingleFile like this:

clearCacheForSingleFile('http://xxx.com/gamex.html');

It does not work. What should I do? Please help.

Status: Issue closed |

greenplum-db/gpdb | 264481240 | Title: Incorrect result with CUBE

Question:

username_0: On GPDB:

```
postgres=# select x,y,count(*), grouping(x), grouping(y),grouping(x,y) from generate_series(1,1) x, generate_series(1,1) y group by cube(x,y);
 x | y | count | grouping | grouping | grouping
---+---+-------+----------+----------+----------
 1 | 1 |     1 |        0 |        0 |        0
   | 1 |     1 |        1 |        0 |        2
   |   |     1 |        1 |        0 |        2
 1 |   |     1 |        0 |        1 |        1
(4 rows)
```

PostgreSQL produces a different result:

```
postgres=# select x,y,count(*), grouping(x), grouping(y),grouping(x,y) from generate_series(1,1) x, generate_series(1,1) y group by cube(x,y);
 x | y | count | grouping | grouping | grouping
---+---+-------+----------+----------+----------
   |   |     1 |        1 |        1 |        3
 1 | 1 |     1 |        0 |        0 |        0
 1 |   |     1 |        0 |        1 |        1
   | 1 |     1 |        1 |        0 |        2
(4 rows)
```

Note the different values in the last column. I believe PostgreSQL got this right, and the GPDB result is incorrect.
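`GROUPING(x, y)` is a bit mask: each argument contributes one bit, the rightmost argument is the least-significant bit, and a bit is 1 when that column is *not* part of the grouping set that produced the row. The short Python sketch below enumerates the grouping sets of `CUBE(x, y)` and computes the expected values.

```python
from itertools import combinations


def cube(columns):
    """All grouping sets produced by CUBE(columns), from the full set down to ()."""
    return [set(c) for n in range(len(columns), -1, -1)
            for c in combinations(columns, n)]


def grouping(args, grouping_set):
    """GROUPING(args...): the rightmost argument is the least-significant bit."""
    mask = 0
    for col in args:
        mask = (mask << 1) | (0 if col in grouping_set else 1)
    return mask


for gs in cube(["x", "y"]):
    print(sorted(gs), grouping(["x"], gs), grouping(["y"], gs), grouping(["x", "y"], gs))
# ['x', 'y'] 0 0 0
# ['x'] 0 1 1
# ['y'] 1 0 2
# [] 1 1 3
```

The `()` grouping set, where both columns are NULL, must therefore give 1, 1 and 3, as PostgreSQL returns; GPDB returns 1, 0 and 2 for that row.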

Answers:

username_1: @dhanashreek89 @hsyuan Can we please get eyes on this? It's a wrong-results bug.

@schubert for awareness

username_2: Still exists on latest master.

username_0: This was fixed on master with the PostgreSQL merge.

Status: Issue closed

|

ScottIsAFool/Bex | 106560736 | Title: Location info not retrived on activity

Question:

username_0: Hi,

I have a problem with your library: I have added the ReadActivityLocation scope to the login and MapPoints to the request, but the location in the MapPoint object has all values (latitude, longitude, etc.) set to 0.

Thanks in advance!

Answers:

username_1: Are you able to provide a quick repro? Or some sample code that you're using so I can give it a try?

username_0: Thanks for your response.

To try it, log in with all scopes:

App.BexClient.CreateAuthenticationUrl(new List<Scope>

{

Scope.ActivityHistory,

Scope.ActivityLocation,

Scope.Devices,

Scope.Profile,Scope.offline_access

});

Then you can retrieve activities with:

ActivitiesRequest ar1 = new ActivitiesRequest();

ar1.MaxItemsReturned = 25;

ar1.ActivityTypes = new string[] { "Run" };

ar1.ActivityFieldsToInclude = new ActivityFields[] { ActivityFields.Details, ActivityFields.MapPoints };

ActivitiesResponse ar = await App.BexClient.GetActivitiesAsync(ar1);

Then you can see that the MapPoints in ar.RunActivities have all Latitude and Longitude values set to 0.

Thanks again!

username_0: Hi,

Have you reproduced the problem? Can I do anything to help?

username_1: Hi, sorry, not yet. Will try and look at it tonight or tomorrow (been a bit busy this week)

username_0: No problem, it's only to help you :D

username_0: Any news?

username_1: I've had a look, and the run data I'm getting back isn't even including any of the location data. Looking around, it looks like this is a fault of the MS Health API. I think when they fix it on their end, it will just start working for you. |

egoist/ideas | 552767782 | Title: My goal for open source in 2020

Question:

username_0: ## My goal for 2020: Build an application framework

- Support both React and Vue (perhaps Svelte too)
- File-system based routing, like many PHP frameworks and Next.js / Nuxt.js. There will be two kinds of pages:
  - Component pages: `pages/**/*.{vue,js,jsx,ts,tsx}`
  - Server pages: `pages/**/*.{json,html,xml,etc}.{js,ts}`, used as HTTP handlers to output other types of content, like an API response or an RSS feed.
- Support multiple rendering modes:
  - SSR: by default, your app is server-rendered and rehydrated on the client side
  - SSG: it can also be statically generated if you can't deploy it as a Node.js app
  - SPA: like SSG, but server-side rendering is disabled, so you don't need to write SSR-compatible code
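The two kinds of pages can be told apart from the file name alone. The sketch below is one hypothetical way to do it, not an existing framework API: a `.js`/`.ts` file whose name carries an inner content-type extension (such as `feed.xml.js`) is treated as a server page. The extension sets (an assumed subset of "etc") and the rule that `index` maps to its directory are assumptions.

```python
from pathlib import PurePosixPath

COMPONENT_EXTS = {".vue", ".js", ".jsx", ".ts", ".tsx"}
SERVER_HANDLER_EXTS = {".js", ".ts"}
SERVER_CONTENT_EXTS = {".json", ".html", ".xml"}  # assumed subset of "etc"


def classify_page(path: str):
    """Map a file under pages/ to (kind, route), or None if it is not a page."""
    rel = PurePosixPath(path).relative_to("pages")
    handler_ext = rel.suffix
    inner_ext = PurePosixPath(rel.stem).suffix
    if handler_ext in SERVER_HANDLER_EXTS and inner_ext in SERVER_CONTENT_EXTS:
        # e.g. pages/feed.xml.js -> server page served at /feed.xml
        return "server", "/" + str(rel.with_suffix(""))
    if handler_ext in COMPONENT_EXTS:
        route = rel.with_suffix("")
        if route.name == "index":
            route = route.parent  # pages/blog/index.vue -> /blog
        route_str = str(route)
        return "component", "/" if route_str == "." else "/" + route_str
    return None
```

Checking the server pattern first matters, because `.js` and `.ts` are valid component extensions too.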

[__You can support me to make this idea become reality__](https://github.com/sponsors/username_0). I'm confident that this will benefit a lot of developers (including myself), and your support will definitely accelerate it! |

ironjan/klausurtool-ror | 158652839 | Title: Hide exams that are not in a folder

Question:

username_0: ## How does this problem happen?

1. First edit an exam so that it is not shown in the TOC, i.e. it's not yet in the folder

2. Then go to the exam search and search for that exam

## What does happen?

The exam is listed as part of that folder.

## What do you expect to happen instead?

The exam should not be listed as part of that folder. |

Tarskin/HappyTools | 323642507 | Title: Scientific notation numbers in processed data files

Question:

username_0: HappyTools attempts to cast numbers into floats with a (power-)user-defined precision; however, the intensity may contain a number in scientific notation, which causes HappyTools to crash.
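Python's built-in `float()` already accepts scientific notation (`float("1.5e3")` is `1500.0`), so one robust approach is to parse with `float()` and apply the user-defined precision only when formatting. A minimal sketch; `parse_intensity` and `format_intensity` are hypothetical helpers, not HappyTools functions.

```python
def parse_intensity(raw: str) -> float:
    """Parse an intensity value, including scientific notation such as '3.2E+05'."""
    return float(raw.strip())


def format_intensity(value: float, precision: int) -> str:
    """Render with a fixed number of decimals (the user-defined precision)."""
    return f"{value:.{precision}f}"


print(format_intensity(parse_intensity("3.2E+05"), 2))  # 320000.00
```

Separating parsing from formatting keeps the precision setting a display concern, so it cannot reject valid input.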

Answers:

username_0: The latest build (b180516a) has fixed this bug.

Status: Issue closed

|

DIPSAS/DIPS.Xamarin.UI | 1096079200 | Title: [Enhancement] Sheet could intercept gesture when scrollable content reaches the top

Question:

username_0: ## Summary

If the content of the sheet is scrollable and the user scrolls to the top, the sheet view could intercept the gesture and start moving downwards. The user then gets the feel of scrolling the whole sheet away. This would be similar to the sheet views in Apple Maps.

## API Changes

Should be able to toggle this behaviour with a bool property. Could use the existing `InterceptDragGesture`.

## Intended Use Case

Sheet views are often used to show scrollable content. Allowing the user to smoothly scroll the sheet away when finished would be beneficial. |

sensu/sensu-go | 380296286 | Title: Determine how to handle top-level endpoints in RBAC

Question:

username_0: We need to figure out how to handle permissions for top-level endpoints (e.g. /health). I'm not quite sure how Kubernetes does it.

Child of #2243

Answers:

username_0: We currently have a single "top-level" endpoint (/health), which does not require authentication, so I'm closing this issue!

Status: Issue closed

|

tomwojcik/starlette-context | 664620110 | Title: PluginUUIDBase's force_new_uuid option seems broken

Question:

username_0: When using `PluginUUIDBase`'s `force_new_uuid` option set to `True`, I always get the same `uuid`, which is the **opposite** of what I expect.

It looks like a new `uuid` is generated when `self.value is None`, and this works fine for the first request, but subsequent requests seem to be using the same id.

I suspect the code that needs to be fixed is missing a `self.value = None` before trying anything else here:

https://github.com/username_1/starlette-context/blob/2f80262bbf4c00fb501c22ac04a5c1c408187fe6/starlette_context/plugins/plugin_uuid.py#L34

Answers:

username_0: Thinking about this, why is `self.value` being used anyway? I don't understand why this is being stored on the plugin instance. A normal local variable seems like it would be enough, would match the intent better, and would have avoided this bug.
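The difference between caching the value on the plugin instance and using a local variable can be reproduced without Starlette. In the sketch below, `StatefulPlugin` and `StatelessPlugin` are hypothetical stand-ins, not starlette-context code; the point is that one plugin instance serves every request, so state stored on `self` carries over from one request to the next.

```python
import uuid


class StatefulPlugin:
    """Buggy pattern: the generated value is cached on the shared instance."""

    def __init__(self):
        self.value = None

    def process_request(self) -> str:
        if self.value is None:  # only true for the very first request
            self.value = uuid.uuid4().hex
        return self.value


class StatelessPlugin:
    """Fixed pattern: a local variable, so every request gets a fresh uuid."""

    def process_request(self) -> str:
        value = uuid.uuid4().hex
        return value


buggy, fixed = StatefulPlugin(), StatelessPlugin()
print(buggy.process_request() == buggy.process_request())  # True: same uuid reused
print(fixed.process_request() == fixed.process_request())  # False: new uuid each time
```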

username_1: Hey, thanks for opening this ticket. Indeed that's a bug. I'll fix this within a few days.

username_1: 0.2.3 is published. Please try again and let me know if it's all good now.

username_0: Looks good from here!

Thank you for the quick fix.

FTR my workaround was:

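```python
# Fix https://github.com/username_1/starlette-context/issues/15
# Import path assumed from the plugin_uuid.py module linked above.
from starlette_context.plugins.plugin_uuid import PluginUUIDBase

# Keep a reference to the original (buggy) method.
__original_extract_value_from_header_by_key = (
    PluginUUIDBase.extract_value_from_header_by_key)


async def __patched_extract_value_from_header_by_key(self, request):
    # Reset the value cached on the plugin instance so a new uuid is
    # generated for every request instead of reusing the previous one.
    self.value = None
    return await __original_extract_value_from_header_by_key(self, request)


PluginUUIDBase.extract_value_from_header_by_key = (
    __patched_extract_value_from_header_by_key
)
```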

After **removing** this from my code and upgrading to `0.2.3`, all my tests still pass.

:fireworks: For me it looks good!

Status: Issue closed

username_1: Awesome! Thanks for checking that out. |

apache/airflow | 596276385 | Title: Create guide for Dataproc operators

Question:

username_0: **Description**

Hello,

A guide that describes how to use Dataproc service operators would be useful.

We have an example DAG for this service, so the guide should not be a big challenge.

If anyone is interested in this task, I am willing to provide all the necessary tips and information.

Other guides are available:

https://airflow.readthedocs.io/en/latest/howto/operator/index.html

Best regards,

Kamil

**Use case / motivation**

N/A

**Related Issues**

N/A

Answers:

username_1: Happy to pick this up. Worked with the service a bit before.

username_0: @username_1 I assigned you to this task.

Status: Issue closed

username_2: solved in https://github.com/apache/airflow/pull/9037 |

2amigos/yii2-usuario | 243181971 | Title: Provide the ability for a user to delete its very own account

Question:

username_0: Specs:

- Should be configurable at the Module level

- The action should fire events so developers could programmatically develop their very own action log systems.

Example actions to be taken on before or after delete events:

a) On before delete: the user instance that is about to remove itself from the system is passed to the event object, so the developer can easily stop the deletion or clone the user's data to different tables (for example a CRM system, so the team can follow up on the reasons why the user wishes to leave the system).

b) Another scenario would be to notify the sysadmin about the user's action.

Status: Issue closed |

jcabi/jcabi-heroku-maven-plugin | 53771716 | Title: Copyright section is outdated

Question:

username_0: Copyright sections still feature 2014 as a year.

Answers:

username_1: we'll find someone to do this task, soon

username_1: @username_2 it's in your hands now, please proceed

username_1: @username_0 thanks for reporting! I topped up your account for 15 mins, transaction 49997556

username_2: @username_1 @username_0 I created PR #5 but haven't seen activity to get it reviewed. Anything I should do?

username_3: @username_2 we'll find a reviewer soon, thanks for the PR!

username_2: @username_0 PR #5 has been merged. Please close the issue.

Status: Issue closed

username_1: @username_2 thanks, paid, **30 mins** to your account, payment ID is `AP-5VJ27404GD162805S`

+30 added to your rating, current score is: [+130](http://www.netbout.com/b/35107?open=rating) |

withspectrum/spectrum | 311687088 | Title: Handling spam communities

Question:

username_0: <!--

FILL OUT THE FORM BELOW OR THE ISSUE WILL BE AUTO-CLOSED

**Issue Type (check one)**

- [ ] Bug Report

- [ ] Feature Idea

- [x] Technical Discussion

- [ ] Question (these will be auto-closed, please ask them on Spectrum instead https://spectrum.chat/spectrum/open)

**Description (type any text below)** -->

Our SEO is getting good. Which means people will start spinning up things like this: https://spectrum.chat/cbd-oil?thread=2d09eb88-434a-4c0d-8181-a47667ee5ef3 to try and get Google karma for the links.

How do we want to handle spam communities + threads like this?

Answers:

username_1: Good question, I wonder what other user-generated content sites are doing about that

username_0: Probably nothing, as a lot of other UGC sites are ad-driven, so pageviews === good.

I'm not sure there's anything here to do immediately, except watch for patterns here. If it starts to scale people will trust our content/platform less in general.

username_2: [These](https://webmasters.googleblog.com/2017/01/protect-your-site-from-user-generated.html) might be a few best practices, but there should be an efficient way to handle spam at scale.

username_0: Quick wins from that post (thanks @username_2!)

- rate limit # of threads that can be posted in a given time period

- rate limit # of messages that can be posted in a given time period

- add nofollow to links posted in chat messages

Bigger tasks that we need anyways:

- community option to approve all incoming threads before they are published

- implement additional spam detection systems like akismet (https://github.com/cedx/akismet.js) - we'll just need to handle false positive cases so people don't lose content they post if it is legitimate

- add ability to block user from spectrum at a system level

Biggest task that would be nice to have:

- internal admin page that collects all inbound toxicity + spam reports, with quick actions for deleting + blocking content
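The first two quick wins (rate limiting threads and messages) could take the form of a per-user sliding window. A minimal in-memory sketch, assuming a single process; `SlidingWindowLimiter` is a hypothetical name, and a real deployment would keep the timestamps in shared storage.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` actions per user within `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        timestamps = self.events[user_id]
        # Drop actions that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False  # over the limit: reject this thread/message
        timestamps.append(now)
        return True


# e.g. at most 3 new threads per user per hour
threads = SlidingWindowLimiter(limit=3, window_seconds=3600)
```

Using one limiter instance for threads and another for messages lets each quick win have its own limit and window.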

username_1: I think we already do this?

username_1: This ties into #2451, right?

username_0: do we? unsure

username_0: Good call on #2451 - will build on top of that

username_0: First draft to work on this going out in #2758

Status: Issue closed

|

jhaals/yopass | 356886212 | Title: HTTPS

Question:

username_0: Hi,

I'm trying to run yopass on port 443, but setting it up as below doesn't work. It does work on port 1337. Could you advise, please?

docker run --name memcached_yopass -d memcached

docker run -p 443:443 -v /local/certs/:/certs \

--link memcached_yopass:memcache -d username_1/yopass -memcached=memcache:11211 -tls.key=/certs/my.key -tls.cert=/certs/my.crt

docker run -p 443:443 --link memcached_yopass:memcache -d username_1/yopass -memcached=memcache:11211

Status: Issue closed

Answers:

username_1: Hi @username_0

You can either map the correct port using docker with `-p 443:1337`, see https://docs.docker.com/config/containers/container-networking/

Or tell yopass to listen on port 443 with `-port 443`

username_0: Thank you username_1! That is perfect! |

FFCK/compet-ffck | 278504935 | Title: Editing Cloud registrations at level 1

Question:

username_0: With a level-1 login (regional sports manager), the Cloud registrations of all events can be modified via right-click, which should not be possible. The direct-access buttons are correctly locked.

|

pytorch/pytorch | 402366366 | Title: Unexpected result with tensor::Tensor::eq_ in C++

Question:

username_0: tensor([0, 0, 0], dtype=torch.int32)

```

## Environment

This happens on macOS and Linux, tested with the latest download from the PyTorch website.

Answers:

username_1: This has been fixed in https://github.com/pytorch/pytorch/pull/15479, and is available in the nightly version of LibTorch.

Below is the output I get with the nightly version:

```
x: 1
2
3
[ Variable[CPUIntType]{3} ]
y: 0
0
0
[ Variable[CPUIntType]{3} ]
0
0
0
[ Variable[CPUIntType]{3} ]
```

Status: Issue closed

|

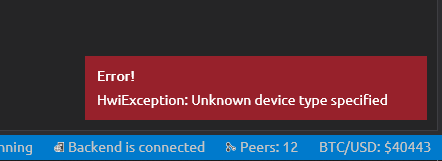

zkSNACKs/WalletWasabi | 802773013 | Title: Ledger Nano X - Unknown Device/Hardware

Question:

username_0: When I try to load the Ledger Nano X via Wasabi, it shows as Unknown. Clicking to load it anyway brings up an unknown hardware error. I've attached images below:

Currently running Windows 10 but I've tried on several devices and a Macbook and it's the same issue. Using the latest Wasabi Wallet, version 1.1.12.3

Answers:

username_1: Unfortunately, like you said, Nano X is not officially supported. I'll close this issue.

Status: Issue closed

|

fig02/Better-OoT | 438899186 | Title: It would be nice to have a Toggle for all the Default changes

Question:

username_0: A lot of these features are always applied without the option to toggle them off. Most people who use the app now will always want them. But giving the option to disable them can open up some interesting uses for BOoT.

For example, OoT Online will only support version 1.0. So in other words, Master Quest is not supported in OoT Online. However, using BOoT, you can patch 1.0 to have the MQ dungeons. Circumventing this problem entirely! But a lot of casual players might want to play mostly vanilla, and still have the option to skip owls, or have D-Pad Boots, or a few cosmetic changes, etc.

A single toggle for all of the default features would open up the app to many more people.

Of course, ideally, every tiny little feature would have a toggle, but that gets a bit tedious. A single toggle for all of them is a quick fix. Although, if you did want to do that, you could make some sort of "Advanced" tab for disabling default features one at a time. People love customizing their experience.

Answers:

username_1: Hi

Thank you for your suggestion.

This tool was designed specifically for speedrunning, and the settings options are perfect for what it was designed for. I definitely see how it could be opened up to serve more purposes, but I will not be the one to implement that since this fits me and my community's needs already.

Sorry and thank you for understanding

Status: Issue closed

|

elastic/elasticsearch-php | 286693124 | Title: Getting blank hits on Search

Question:

username_0: ### Hi, I am getting empty results when searching a document.

Searching from the browser works fine, but the same search through the elasticsearch-php client does not.

## Request:

```php
$params = [
    'index' => 'response_packets_index_v2',
    'type' => 'documents',
    'body' => [
        'query' => [
            'match' => [
                'list_name' => 'number'
            ]
        ]
    ]
];

$results = $client->search($params);
```

## Response:

{"took":2,"timed_out":false,"_shards":{"total":5,"successful":5,"skipped":0,"failed":0},"hits":{"total":0,"max_score":null,"hits":[]}}

### System details

- Operating System Ubuntu 16.04

- PHP Version 7.1

- ES-PHP client version 5.0

- Elasticsearch version 5.0

Answers:

username_1: Are you connecting to the same host? Without specifying the parameters for `ClientBuilder` it connects to localhost.

username_0: @username_1 I found a difference between bulk indexing and single-document indexing.

Searching documents indexed one at a time works smoothly, but searching documents indexed in bulk does not.

username_2: @username_0 The URL you posted is searching the `sms_api_response_packets_index_v2` index, but is not specifying a doc type. Your PHP search is specifying the `response_packets_index_v2` index and the `documents` doc type.

Can you try the PHP search against the same index as your URL query string (`sms_api_response_packets_index_v2`) and without a doctype?

username_0: @username_2 I was searching with the index and doc type, but it did not work.

I found a solution in the way I do the bulk mapping and bulk creation, and now it's working fine.

Actually, maybe I was doing something wrong while creating the index.

## Wrong way indexing example

```

for ($i = 0; $i <= $count; $i++) {
    $params['body'][] = [
        'index' => [
            '_index' => 'response_packets_index_v5',
            '_type' => 'response_packets_v5',
            'routing' => 'company',
        ]
    ];

    $params['body'][] = [
        'my_field' => $documentData[$i]
    ];
}

return $client->bulk($params);

```

## Right way indexing example, which works (removing "my_field" from the body param)

```

for ($i = 0; $i <= $count; $i++) {
    $params['body'][] = [
        'index' => [
            '_index' => 'sms_api_response_packets_index_v5',
            '_type' => 'sms_api_response_packets_v5',
            //'id' => 'my_id',
            'routing' => 'company',
        ]
    ];

    $params['body'][] = $documentData[$i];
}

return $client->bulk($params);

```

So I just want to know: what is the difference between these two types of bulk indexing?

username_2: Depends on what's inside of `$documentData`. The first example will nest all of `$documentData[$i]` inside of the `'my_field'` field inside the JSON. So you probably created documents that looked like this:

```json

{

"my_field" : {

"foo" : "bar",

"title" : "the title here",

"price" : 123

}

}

```

whereas the second example would put the `$documentData` directly at the top level, like this:

```json

{

"foo" : "bar",

"title" : "the title here",

"price" : 123

}

```

Which is probably why your search wasn't working... the document field structure didn't match what the query was searching :)
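Elasticsearch addresses a field inside an inner object by its dotted path, so with the first bulk example the field is `my_field.list_name`, not `list_name`, and a `match` on `list_name` finds nothing. A small Python helper that lists the paths each document shape produces (`field_paths` is hypothetical, not part of any client library):

```python
def field_paths(source, prefix=""):
    """Flatten a document _source into the dotted field paths a query must use."""
    paths = {}
    for key, value in source.items():
        path = prefix + key
        if isinstance(value, dict):
            # Inner objects contribute "parent.child" paths.
            paths.update(field_paths(value, prefix=path + "."))
        else:
            paths[path] = value
    return paths


if __name__ == "__main__":
    doc = {"foo": "bar", "title": "the title here", "price": 123}
    print(field_paths({"my_field": doc}))  # shape produced by the first bulk example
    print(field_paths(doc))                # shape produced by the second bulk example
```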

username_0: @username_2 So I want to search the data below using PHP syntax. Can you give me an example?

```

{

"_index": "esponse_packets_index_v2",

"_type": "documents",

"_id": "0",

"_score": 1,

"_source": {

"my_field": {

"id": 1,

"workflow_id": "943",

"workflow_name": "Diwali",

"list_name": "number",

"list_id": "798",

"operator": "-",

"circle": "-",

"country": "-",

"sender_id": "MANISH",

"submit_date": "2017-11-06 14:09:56",

"dlrdatetime": "2017-11-06 14:10:06",

"split_count": 1,

"error_code": "Waiting",

"error_text": "-",

"currency_used": "0.2000",

"text_type": "text",

"error_code_status": null,

"origin_type": "1",

"api_response_id": 1,

"response": null,

"parent_id": null,

"is_test": 0,

"link": null,

"type": 2,

"message_text": "Hi This is text message",

"status": null,

"is_link_api": 0,

"winner_branch": null,

"instance_id": "724e540394481746",

"created_at": "2017-11-06 14:10:06",

"updated_at": "2017-11-06 14:10:06",

"branch_id": 0

}

}

```

Sample code, which is not working:

```

$searchParams = [
    'index' => 'response_packets_index_v2',
    'type' => 'api_response_packets_v2',
    'body' => [
        'query' => [
            'bool' => [
                'filter' => [
                    'range' => [
                        'created_at' => [
                            'gte' => '2017-11-06 14:09:00',
                            'lte' => '2017-11-06 14:12:56'
                        ]
                    ]
                ],
                'must' => [
                    'match' => ['error_code' => 'Waiting']
                ]
            ]
        ]
    ]
];

return $client->search($searchParams);

```
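A sketch of the same search expressed as Python dicts (they mirror the PHP arrays one-to-one), assuming the documents were indexed with the nested `my_field` wrapper shown above, so each field is referenced by its `my_field.` path. It also assumes `created_at` is mapped as a `date` whose format accepts values like `2017-11-06 14:09:00`; with default dynamic mapping that string may instead be indexed as text, which would make the range filter unreliable. The document above has `_type` `documents`, not `api_response_packets_v2`, so the sketch leaves the type out. `build_search` is a hypothetical helper:

```python
import json


def build_search(index, error_code, start, end, prefix="my_field."):
    """Return search params (index + body) with fields referenced by full path."""
    return {
        "index": index,
        "body": {
            "query": {
                "bool": {
                    # filter: not scored, only keeps docs inside the time window
                    "filter": [
                        {"range": {prefix + "created_at": {"gte": start, "lte": end}}}
                    ],
                    # must: scored full-text match on the error code
                    "must": [
                        {"match": {prefix + "error_code": error_code}}
                    ],
                }
            }
        },
    }


if __name__ == "__main__":
    params = build_search("response_packets_index_v2", "Waiting",
                          "2017-11-06 14:09:00", "2017-11-06 14:12:56")
    print(json.dumps(params, indent=2))
```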

username_3: My search has a timeout error.

Status: Issue closed

username_4: Outdated issue. @username_0 if this is still an issue I'll reopen it.

username_5: Yes, it's still an issue for me. I am trying to search through about 100,000 records in an index I created; when I tried the same method with only 18,000 records, it worked fine.

Note: the data set is the same on both ends. |

gboeing/osmnx | 665194279 | Title: osmnx.utils_geo.bbox_from_point(point, dist=1000, project_utm=False, return_crs=False) too slow for "big" datasets

Question:

username_0: Hi! First of all, I have to say this package is amazing and super complete, really useful :)

**"Issue"?**

I am using it to find POIs within a 1 km radius of the points in a 55k-row dataset I am working with.

I wanted to obtain the bounding box from the lat/long of all my points (55k), and it took 1 h 27 min with the `bbox_from_point(dist=1000, project_utm=False, return_crs=False)` function.

This was my implementation:

`trash_df["bbox"] = trash_df.swifter.apply(lambda column: ox.utils_geo.bbox_from_point((column.latitude, column.longitude), dist=1000), axis=1)`

**Describe the solution you'd like to propose**

So I made this fn:

```

def getBbox(lat, lon, radius=1):
    import math

    R = 6371

    w = lon - math.degrees(radius/R/math.cos(math.radians(lat)))
    e = lon + math.degrees(radius/R/math.cos(math.radians(lat)))
    s = lat - math.degrees(radius/R)
    n = lat + math.degrees(radius/R)

    return (n, s, e, w)

```

It takes 2 seconds, and the results are equal up to the 3rd-4th decimal place.

Answers:

username_1: Thanks @username_0. The current implementation of `utils_geo.bbox_from_point` is only really designed for one-off use and, as you noted, isn't very efficient because it: 1) projects the passed-in point to the local UTM projection, 2) buffers it, 3) projects back to lat-lng, and 4) gets the bounds of the buffer. A streamlined solution like you propose might be useful.

A few questions/comments first:

1. If `R=6371`, it looks like you're working in units of kilometers, right? Why not meters?

2. If `R` represents the radius of the earth, what does the variable `radius` mean? Is that the distance for the bounding box? If so, a more obvious variable name would be good.

3. The 3rd decimal place in decimal degrees corresponds to roughly 100 meters. If creating a bbox 1000 meters in each direction from the center point, this is a pretty significant discrepancy in the results.

4. I'm not sure how you derived the formulae for the bounding box, but it doesn't look quite right to me, and I think may account for the previous point. I might instead implement something like this:

```python

import math

def get_bbox(point, dist=500):
    earth_radius = 6371000  # meters
    lat, lng = point
    delta_lat = (dist / earth_radius) * (180 / math.pi)
    delta_lng = (dist / earth_radius) * (180 / math.pi) / math.cos(lat * math.pi / 180)
    north = lat + delta_lat
    south = lat - delta_lat
    east = lng + delta_lng
    west = lng - delta_lng
    return north, south, east, west

```

I suspect this will yield nearly identical results to the current implementation of `utils_geo.bbox_from_point`, but be much faster.
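A sketch of applying the closed-form formula to many points with no projection step, assuming plain `(lat, lng)` tuples rather than a DataFrame. The function names are hypothetical (not osmnx API); `get_bbox_km` reproduces the issue's `getBbox` so the meter-based and kilometer-based versions can be compared:

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; spherical approximation


def bbox_from_point_fast(lat, lng, dist=1000):
    """(north, south, east, west) in degrees for a box `dist` meters from
    (lat, lng) in each direction. Not valid near the poles (cos(lat) -> 0)."""
    delta_lat = math.degrees(dist / EARTH_RADIUS_M)
    delta_lng = delta_lat / math.cos(math.radians(lat))  # meridians converge
    return lat + delta_lat, lat - delta_lat, lng + delta_lng, lng - delta_lng


def bboxes_from_points(points, dist=1000):
    """Apply the closed-form box to every (lat, lng) pair."""
    return [bbox_from_point_fast(lat, lng, dist) for lat, lng in points]


def get_bbox_km(lat, lon, radius=1):
    """The km-based formula from the issue, kept for comparison."""
    R = 6371
    w = lon - math.degrees(radius / R / math.cos(math.radians(lat)))
    e = lon + math.degrees(radius / R / math.cos(math.radians(lat)))
    s = lat - math.degrees(radius / R)
    n = lat + math.degrees(radius / R)
    return (n, s, e, w)


if __name__ == "__main__":
    pts = [(37.79, -122.41), (48.85, 2.35), (-33.87, 151.21)]
    for (lat, lng), box in zip(pts, bboxes_from_points(pts)):
        ref = get_bbox_km(lat, lng)
        # Same formula in different units: equal up to floating-point rounding.
        assert all(math.isclose(a, b, rel_tol=1e-12) for a, b in zip(box, ref))
    print("ok")
```

Both versions treat the Earth as a sphere, so they share the same small error relative to a projected buffer; the speedup comes from skipping the per-point projection.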

username_1: I just did some quick tests, and I believe our two solutions are actually mathematically equivalent. They seem to yield results within approximately 1 meter of the current `utils_geo.bbox_from_point` implementation.

username_1: See proposed enhancement in #541.

Status: Issue closed

|

HaxeFoundation/intellij-haxe | 500556702 | Title: NPE in HaxeExpressionEvaluator.

Question:

username_0: Randomly ran into this while editing. Not sure how to repro.

```

In file: file:///home/sni/sandbox/uwongke/poptropica-openfl/src/game/creators/scene/RaceSegmentCreator.hx

java.lang.NullPointerException

at com.intellij.plugins.haxe.model.type.HaxeExpressionEvaluator.handle(HaxeExpressionEvaluator.java:81)

at com.intellij.plugins.haxe.model.type.HaxeExpressionEvaluator._handle(HaxeExpressionEvaluator.java:207)

at com.intellij.plugins.haxe.model.type.HaxeExpressionEvaluator.handle(HaxeExpressionEvaluator.java:68)

at com.intellij.plugins.haxe.model.type.HaxeExpressionEvaluator.evaluate(HaxeExpressionEvaluator.java:59)

at com.intellij.plugins.haxe.model.type.HaxeTypeResolver.evaluateFunction(HaxeTypeResolver.java:515)

at com.intellij.plugins.haxe.model.type.HaxeTypeResolver.getPsiElementType(HaxeTypeResolver.java:492)

at com.intellij.plugins.haxe.model.type.HaxeTypeResolver.getPsiElementType(HaxeTypeResolver.java:443)

at com.intellij.plugins.haxe.ide.annotator.AssignExpressionChecker.check(HaxeSemanticAnnotator.java:923)

at com.intellij.plugins.haxe.ide.annotator.HaxeSemanticAnnotator.analyzeSingle(HaxeSemanticAnnotator.java:82)

at com.intellij.plugins.haxe.ide.annotator.HaxeSemanticAnnotator.annotate(HaxeSemanticAnnotator.java:61)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.runAnnotators(DefaultHighlightVisitor.java:121)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.visit(DefaultHighlightVisitor.java:86)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.runVisitors(GeneralHighlightingPass.java:351)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$collectHighlights$5(GeneralHighlightingPass.java:284)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:311)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:314)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.analyze(DefaultHighlightVisitor.java:70)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:314)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectHighlights(GeneralHighlightingPass.java:281)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectInformationWithProgress(GeneralHighlightingPass.java:225)

at com.intellij.codeInsight.daemon.impl.ProgressableTextEditorHighlightingPass.doCollectInformation(ProgressableTextEditorHighlightingPass.java:84)

at com.intellij.codeHighlighting.TextEditorHighlightingPass.collectInformation(TextEditorHighlightingPass.java:55)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$null$1(PassExecutorService.java:429)

at com.intellij.openapi.application.impl.ApplicationImpl.tryRunReadAction(ApplicationImpl.java:1106)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$doRun$2(PassExecutorService.java:422)

at com.intellij.openapi.progress.impl.CoreProgressManager.registerIndicatorAndRun(CoreProgressManager.java:591)

at com.intellij.openapi.progress.impl.CoreProgressManager.executeProcessUnderProgress(CoreProgressManager.java:537)

at com.intellij.openapi.progress.impl.ProgressManagerImpl.executeProcessUnderProgress(ProgressManagerImpl.java:59)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.doRun(PassExecutorService.java:421)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$run$0(PassExecutorService.java:397)

at com.intellij.openapi.application.impl.ReadMostlyRWLock.executeByImpatientReader(ReadMostlyRWLock.java:164)

at com.intellij.openapi.application.impl.ApplicationImpl.executeByImpatientReader(ApplicationImpl.java:204)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.run(PassExecutorService.java:395)

at com.intellij.concurrency.JobLauncherImpl$VoidForkJoinTask$1.exec(JobLauncherImpl.java:161)

at java.base/java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:290)

at java.base/java.util.concurrent.ForkJoinPool$WorkQueue.topLevelExec(ForkJoinPool.java:1020)

at java.base/java.util.concurrent.ForkJoinPool.scan(ForkJoinPool.java:1656)

at java.base/java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1594)

at java.base/java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:177)

```

Status: Issue closed

Answers:

username_0: It's actually throwing the NPE while trying to log an error message.

bfc47e79d0e36ff562cafad2ee7d8aad7b3244ac should fix it.

username_0: Fixed in #959 |

mpc-msri/EzPC | 783873520 | Title: [squeeze] Generic squeeze not supported

Question:

username_0: We need to add general support for [tf.squeeze](https://www.tensorflow.org/api_docs/python/tf/squeeze)

A squeeze of a tensor of shape [1, 2, 1, 3, 1, 1] should result in a tensor of shape [2,3].

Squeeze also has an axis argument that lets us collapse only the specified dims. Add support for that too.
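A minimal Python sketch of the shape rule described above. `squeeze_shape` is hypothetical, not an EzPC or TensorFlow function; the axis handling follows the `tf.squeeze` documentation (axes in `[-rank, rank)`, and squeezing a dimension whose size is not 1 is an error):

```python
def squeeze_shape(shape, axis=None):
    """Output shape of a tf.squeeze-style op.

    axis=None (or []) drops every size-1 dimension; otherwise only the listed
    axes are dropped (negative values count from the end), each must be size 1.
    """
    rank = len(shape)
    if isinstance(axis, int):
        axis = [axis]
    if not axis:
        return [d for d in shape if d != 1]
    drop = set()
    for a in axis:
        if not -rank <= a < rank:
            raise ValueError(f"axis {a} is out of range for rank {rank}")
        a %= rank  # normalize negative axes
        if shape[a] != 1:
            raise ValueError(f"cannot squeeze axis {a} of size {shape[a]}")
        drop.add(a)
    return [d for i, d in enumerate(shape) if i not in drop]


if __name__ == "__main__":
    print(squeeze_shape([1, 2, 1, 3, 1, 1]))               # all size-1 dims
    print(squeeze_shape([1, 2, 1, 3, 1, 1], axis=[0, -1]))  # only first and last
```

Because squeezing only removes size-1 dimensions, the row-major order of the elements does not change, so generated code could treat any squeeze as a reshape of the flat buffer rather than needing one library function per rank combination.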

Currently we only support squeeze of 4D->2D, 4D->3D, 3D->2D in Library_common.ezpc. We should generate squeeze code instead of using library functions. |

ubc-vision/image-matching-benchmark | 907065087 | Title: version of joblib?

Question:

username_0: I have installed joblib==1.0.1 under Python 3.6, but when I run run.py with Python 3.6, the `ImportError` is still there.

```
Validating method 1/1: "sp_sg"
['phototourism', 'pragueparks', 'googleurban']
Running: phototourism, stereo track
Running: phototourism, multiview track
Running: pragueparks, stereo track
Running: pragueparks, multiview track
Running: googleurban, stereo track
Running: googleurban, multiview track
Validating key "config_phototourism_stereo"
Validating key "config_phototourism_multiview"
Validating key "config_pragueparks_stereo"
Validating key "config_pragueparks_multiview"
Validating key "config_googleurban_stereo"
Validating key "config_googleurban_multiview"
Working on sp_sg: phototourism/reichstag
-- File feature already exists
-- Computing match
WARNING: ./jobs/9efa086bd2840045bf66679f8d8ad4a21a0d23201b45937cb4c741cf8aa6e00d already exists!
Traceback (most recent call last):
  File "compute_match.py", line 19, in <module>
    from joblib import Parallel, delayed
ImportError: No module named joblib
Traceback (most recent call last):
  File "run.py", line 280, in <module>
    main(cfg)
  File "run.py", line 219, in main
    job_dict)
  File "run.py", line 47, in create_eval_jobs
    job = create_and_queue_jobs(cmd_list, cfg, dep_str)
  File "/cephfs/person/dihehuang/imc-2021-submit/image-matching-benchmark/utils/queue_helper.py", line 310, in create_and_queue_jobs
    cpu=cpu)
  File "/cephfs/person/dihehuang/imc-2021-submit/image-matching-benchmark/utils/queue_helper.py", line 161, in queue_job
    raise RuntimeError('Subprocess error!')
RuntimeError: Subprocess error!
```

Answers:

username_1: That's probably an error in the job itself, not joblib. Check logs/9efa086bd2840045bf66679f8d8ad4a21a0d23201b45937cb4c741cf8aa6e00d.log or, easier, just re-run the pipeline with the `--run_mode=interactive` flag to figure out why it's crashing.

username_0: I have set `--run_mode=interactive`, but there is no log file in ./logs.

When I add `sys.path.append('/usr/local/lib/python3.6/site-packages')`, this occurs:

```
Traceback (most recent call last):
  File "compute_match.py", line 20, in <module>
    from joblib import Parallel, delayed
  File "/usr/local/lib/python3.6/site-packages/joblib/__init__.py", line 113, in <module>
    from .memory import Memory, MemorizedResult, register_store_backend
  File "/usr/local/lib/python3.6/site-packages/joblib/memory.py", line 274
    raise new_exc from exc
                     ^
SyntaxError: invalid syntax
Traceback (most recent call last):
  File "run.py", line 281, in <module>
    main(cfg)
  File "run.py", line 220, in main
    job_dict)
  File "run.py", line 48, in create_eval_jobs
    job = create_and_queue_jobs(cmd_list, cfg, dep_str)
  File "/cephfs/person/dihehuang/imc-2021-submit/image-matching-benchmark/utils/queue_helper.py", line 310, in create_and_queue_jobs
    cpu=cpu)
  File "/cephfs/person/dihehuang/imc-2021-submit/image-matching-benchmark/utils/queue_helper.py", line 161, in queue_job
    raise RuntimeError('Subprocess error!')
RuntimeError: Subprocess error!
```

username_1: Oh, I see your error message was indeed related to joblib, my bad. I presume this also happens when you import the library from the REPL? It's not a benchmark issue, and it's impossible for us to diagnose it. I would try installing joblib in a new conda environment and if that works, install the rest of the dependencies while ignoring library versions.

(Also: the logs only show up if you use `--run_mode=batch`; if you set it to interactive, you see error messages in the terminal.)
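Both tracebacks are consistent with `compute_match.py` being launched by a different interpreter than the Python 3.6 that joblib was installed into: `raise new_exc from exc` is Python 3-only syntax, so a `SyntaxError` on that line usually means Python 2 is parsing it. A minimal check, assuming you run it with the same command the benchmark uses to launch its jobs (`interpreter_report` is a hypothetical helper, not part of the benchmark); it avoids f-strings and `importlib.util` so it also runs under Python 2:

```python
import sys


def interpreter_report(module="joblib"):
    """Return (executable, version, import status) for the running interpreter."""
    try:
        __import__(module)
        status = "ok"
    except ImportError:
        status = "not installed for this interpreter"
    except SyntaxError:
        # e.g. Python 2 picking up a Python 3-only package via sys.path
        status = "found, but its source needs a newer Python"
    return sys.executable, "%d.%d.%d" % tuple(sys.version_info[:3]), status


if __name__ == "__main__":
    print(interpreter_report())
```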

Status: Issue closed

|

grycap/scar | 366503977 | Title: Your website is 404

Question:

username_0: You link to https://grycap.github.io/scar/ but it's currently 404.

Answers:

username_1: Hi @username_0. Thank you for noticing this. We want to rely on the readthedocs documentation page (https://scar.readthedocs.io/en/latest/) instead of the old website in order to better keep up with the changes in the framework. This is why we decommissioned the web page last week.

We want to update the CNCF entry (https://landscape.cncf.io/selected=scar) so that the website attribute links to the readthedocs site.

Should we do another PR to introduce this change?

Thanks.

Status: Issue closed

username_0: Updated here, thanks: https://github.com/cncf/landscape/commit/b6aff71abaf0c594e9df602d01809a352fa211a2 |

githubschool/github-games-tnayak16 | 629235386 | Title: Game broken

Question:

username_0: When attempting to access this at https://githubschool.github.io/github-games-tnayak16/, I am getting a 404. This could be caused by a couple of things:

- GitHub pages needs to be enabled on master. You can fix this in the repository settings.

- the index.html file is incorrectly named inde.html. We will fix this together in class.

Can you please fix the first bullet @tnayak16? |

kubernetes/kubernetes | 145047000 | Title: kubernetes-e2e-gce-autoscaling has been timing out for two weeks

Question:

username_0: [Example failure](https://storage.cloud.google.com/kubernetes-jenkins/logs/kubernetes-e2e-gce-autoscaling/3337)

Should this be on the critical builds page?

Answers:

username_0: The job is disabled. Someone can feel free to pick it up anytime.

Status: Issue closed

|

Sage/sageone_br_nfe_documentacao_api | 174820634 | Title: How do I get the authorization?

Question:

username_0: I am calling the URL to get the authorization, and it is redirecting me to the https://app.br.sageone.com/login site.

I am following the documentation at https://developers.sageone.com/docs/de/v2#authentication-overview:

Calling via GET:

https://www.sageone.com/oauth2/auth?response_type=code&client_id=xxxxx&redirect_uri=https://app.sisdanca.com.br/auth/callback

Where xxxxx is my generated client ID.
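A minimal Python sketch of assembling that GET URL with the standard library, so that `redirect_uri` is percent-encoded (in the URL above it is passed unencoded). The endpoint and parameter names are copied from the request above; `build_auth_url` is a hypothetical helper, and `xxxxx` stays a placeholder for the client ID:

```python
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://www.sageone.com/oauth2/auth"


def build_auth_url(client_id, redirect_uri):
    """Authorization-code request URL; urlencode escapes ':' and '/' in the URI."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
    })
    return AUTH_ENDPOINT + "?" + query


if __name__ == "__main__":
    print(build_auth_url("xxxxx", "https://app.sisdanca.com.br/auth/callback"))
```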

Answers:

username_0: And how do I do that

Status: Issue closed

username_1: I managed to get the code for generating the token to work with, but I had to put a page on the internet to capture the code.

How do I do this in the development environment? On localhost?

username_0: Sorry. You can close it.

Status: Issue closed

username_1: No problem ;)

Status: Issue closed

|

mortezakz/DocumentWrangling | 107002752 | Title: Unintentional Author Name Deletion

Question:

username_0: When author names appear within the context of the abstract (as opposed to before the abstract), they are unintentionally deleted. We need a way to detect when these names are used in sentences to avoid deleting them. |

swagger-api/swagger-codegen | 218553392 | Title: Basic Authorization and OAuth is not separated in template

Question:

username_0: I have defined this in my RestService Swagger annotation:

```java

@ApiOperation(

value = "Get a UserConfiguration resource."

,authorizations = @Authorization(value = "basic")

)

```

I expected the generated client to contain code for Basic Authorization only; there is no reason for me to have Apache Oltu included as a dependency. I have looked at the template, and there is no switch like `if (isBasic) ... else if (isOAuth)`. |

icsharpcode/ILSpy | 474181134 | Title: foreach expected but while used instead

Question:

username_0: ILSpy version 5.0.0.4970-preview3

method decompiled with **while** but **foreach** expected

```

protected CompositeDevices(SerializationInfo info, StreamingContext context)
    : base(info, context)
{
    SerializationInfoEnumerator enumerator = info.GetEnumerator();
    while (enumerator.MoveNext())
    {
        string name = enumerator.Current.Name;
        if (name != null && name == "AdditionalParameters")
        {
            AdditionalParameters = (Dictionary<string, string>)info.GetValue("AdditionalParameters", typeof(Dictionary<string, string>));
        }
    }
    base.CollectionChanged += OnCollectionChanged;
}

```

Please also refer to the very similar method `CompositeDevices(IEnumerable<CompositeDevice> col)`, which decompiled to foreach!

target: #1601

Answers:

username_1: `SerializationInfoEnumerator` implements `IEnumerator` but not `IDisposable`, so the compiler doesn't generate a using statement / try-finally block.

I think currently our foreach pattern only tries to match using statements.

username_0: I did a little investigation into how competitors handle this case.

.NET Reflector as well as Telerik use

`while (enumerator.MoveNext())`

but JetBrains and dnSpy use

`foreach (SerializationEntry serializationEntry in info)`

So, to summarize:

1) it's possible

2) it's a "nice to have & see" feature, not an error

Feel free to close this issue.

username_2: See the relevant test case at https://github.com/icsharpcode/ILSpy/blob/ddf4053a45a52609023047be326419b1ec8980df/ICSharpCode.Decompiler.Tests/TestCases/Pretty/Loops.cs#L358-L366 |

quic/aimet | 937859536 | Title: AttributeError: 'Parameter' object has no attribute 'modules'

Question:

username_0: Hello,

I am trying to compress a Faster-RCNN detection model with channel pruning.

However, in the winnowing stage, called with the function `aimet_torch.winnow.winnow.winnow_model` I get the following exception:

````

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/winnow/winnow.py", line 70, in winnow_model

in_place, verbose)

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/winnow/mask_propagation_winnower.py", line 98, in __init__

self._graph = ConnectedGraph(self._model, (dummy_input,))

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/meta/connectedgraph.py", line 202, in __init__

self._construct_graph(model, model_input)

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/meta/connectedgraph.py", line 312, in _construct_graph

self._parse_trace_graph(model, model_input, module_tensor_tuples_map)

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/meta/connectedgraph.py", line 361, in _parse_trace_graph

if not is_leaf_module(subgraph_model):

File "/usr/local/lib/python3.6/dist-packages/aimet_torch/utils.py", line 199, in is_leaf_module

module_list = list(module.modules())

AttributeError: 'Parameter' object has no attribute 'modules'

````

Apparently, one of the parameters of the models (the weight of the first convolution in the ResNet stem) is interpreted as a module and enters the `is_leaf_module` in line 361 in `aimet_torch/meta/connectedgraph.py`.

Here are my versions of Aimet and torch:

````

Aimet==1.10.0.0.100.0.486

AimetCommon==1.10.0.0.100.0.486

AimetTorch==1.10.0.0.100.0.486

torch==1.3.1+cu100

````

How can I solve this?

Thank you,

D

Answers:

username_1: @username_0 Thank you for reporting this. @username_2 @quic-sundarr Could you please take a look at this.

username_2: Hi @username_0 ,

For context, in this section of code, we are attempting to build our own internal representation of the Pytorch graph which is what we refer to as Connected Graph. As a part of this, we make use of Pytorch's jit trace to provide us a graph to parse.

In the graph, we typically see various types of nodes giving us module and connectivity information, including GetAttr nodes which define modules (which could in turn contain submodules), and Callmethod nodes which show how the GetAttr nodes are being called.

It looks like in your case, the subgraph_model in the stack trace came from a GetAttr node but holds a parameter instead of a torch.nn.Module. It is unclear what would lead to this; it would help if you could provide the definition of the module that contains the parameter, so we can attempt to reproduce the issue.

Also, if you wanted to try getting past the issue, I would first try adding a check after subgraph_model has been defined a few lines above, to continue on to the next node if subgraph_model is not of type torch.nn.Module.

username_0: Hi,

and thank you for your answer.

The model I am winnowing is a ResNet18 from `torchvision.models.resnet18()`. It should be a pretty clean model definition, which is why I was thinking the problem is an incompatibility between the AIMET version and the torch version. Could you please confirm that the versions I reported are compatible with each other?

Also, the input to that winnow_model function is as follows:

````

model=ResNet (from torchvision)

input_shape=[1,3,600,1000]

list_of_modules_to_winnow=[(Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False), [0, 2, 3, 4, 5, 6, 11, 12, 15, 17, 19, 21, 23, 24, 25, 26, 27, 31, 33, 34, 35, 38, 39, 41, 43, 45, 53, 55, 56, 59, 60, 63])]

reshape=False

````

The parameter mistakenly interpreted as a module is an `nn.Parameter` with shape (64, 3, 7, 7), so it appears to be the weight of the first ResNet convolution (the stem).

Thanks for help,

D

username_0: Hello,

Closing this, as upgrading the torch version fixed it.

````

Aimet==1.10.0.0.100.0.486

AimetCommon==1.10.0.0.100.0.486

AimetTorch==1.10.0.0.100.0.486

torch==1.4.0+cu100

torchvision==0.5.0+cu100

````

Best,

D

Status: Issue closed

username_2: Thanks for trying out the pytorch upgrade @username_0, and apologies for not responding sooner regarding the versions.

It looks like you are also using an old version of AIMET, and so I would suggest upgrading to our latest 1.16.2 release. That way if you run into any more issues, it will be easier for us to debug. |

koppor/jabref | 188920402 | Title: Improve shared database

Question:

username_0: - [ ] Manage connections: http://discourse.jabref.org/t/managing-shared-database-connections/289

- [ ] Command line access: http://discourse.jabref.org/t/command-line-access-to-sql-databases/288

Answers:

username_1: Good questions. It wouldn't be too bad for testing if we had a shared database we could test on. We'll talk about that in the next devcall. Meanwhile, set up your own remote database for testing.

@username_4: You should be able to move the synchronization work to a background task (JabRef already offers infrastructure for this, thanks to @username_2). If there are parts of the task that would block the user from working on the main table, JabRef should display some kind of waiting animation, especially when there is a huge number of entries (10,000 or so; yes, we really have users with this many entries). If there is an infinite loop, it is probably a bug that needs fixing. Maybe @username_0 can specify further.

@username_3 I believe the task would be to extend JabRef's existing CLI to work with shared databases.

username_2: I think the best thing to do here is to go for the same approach as for the pdf indexer. Have one background task with a queue of database queries. You can checkout the [IndexingTaskManager](https://github.com/JabRef/jabref/blob/7eb7b86203d7c149d3b48df8e0845d35aff1597c/src/main/java/org/jabref/logic/pdf/search/indexing/IndexingTaskManager.java) to get an idea of how to do that.
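A minimal Python sketch of that pattern, a single background worker draining a FIFO queue, under the assumption that each database query can be wrapped as a zero-argument callable. `BackgroundTaskQueue` is a hypothetical name; it is not JabRef's `IndexingTaskManager` API, only an illustration of the same idea:

```python
import queue
import threading


class BackgroundTaskQueue:
    """Runs submitted tasks one at a time, in submission order, on a single
    daemon worker thread, so the caller (e.g. the GUI thread) never blocks."""

    def __init__(self):
        self._tasks = queue.Queue()
        self.errors = []  # exceptions raised by tasks, kept for reporting
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, task):
        """Enqueue a zero-argument callable and return immediately."""
        self._tasks.put(task)

    def wait_until_idle(self):
        """Block until every task submitted so far has finished."""
        self._tasks.join()

    def _run(self):
        while True:
            task = self._tasks.get()
            try:
                task()
            except Exception as exc:  # keep the worker alive after a failure
                self.errors.append(exc)
            finally:
                self._tasks.task_done()


if __name__ == "__main__":
    sync = BackgroundTaskQueue()
    sync.submit(lambda: print("pull remote changes"))
    sync.submit(lambda: print("push local edits"))
    sync.wait_until_idle()
```

With a single worker, queries run in submission order, so a later sync step cannot overtake an earlier one; the trade-off is that one slow query delays everything queued behind it.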

username_3: Hi, thank you for your answer.

According to the instructions, we should automate the process of retrieving entries. Is the task to import a LaTeX document with all the entries in it, or is simple command-line output sufficient?

Regarding the export, what exactly does the user want to export? A LaTeX document? Or simple output on the command line?

username_1: I don't think that you understood the instructions right...

JabRef works with `.bib` files, not with LaTeX documents. It can already sync entries with a remote database via the GUI. Locally those entries are stored in a `.bib` file, remotely in a database. Syncing/importing/exporting already works in the GUI and does not have to be improved (well, actually it could be improved, but that's not the concern of this issue).

This issue applies to improving

* the management of multiple databases (to switch between multiple databases, to store credentials, to switch between different profiles, etc.)

* making the already existing functionality of the gui accessible via the cli (syncing a `.bib` file with a remote database, running cleanup-formatters, etc.)

* improving the responsiveness of the gui while synchronizing a remote database with your local `.bib` file

username_0: Did you try to connect to https://www.elephantsql.com/? This should work instantly. Please try it and report back.

username_0: Yes, we do not know the root cause. We should observe that interaction with a shared database is slow.

username_4: Yes, we did and it works. (We've already sent the question 1., right before we've figured out what the elephantsql does. Sorry for that.)

Thanks for the answers.

username_5: It seems we are unable to run JabRef from the `.bat` file. When typing `JabRef.bat` (or `JabRef`, without the extension, on Ubuntu) in a terminal, we get the following error:

```
Error occurred during initialization of boot layer
java.lang.module.FindException: Module org.jabref not found
```

It is possible that we missed a step, since we tried this directly in the `build/scripts/` folder in IntelliJ. Could we get some help on how to run JabRef in CLI mode?

username_6: @username_5 generally speaking I'd suggest asking these kinds of questions in the Gitter, you are likelier to get a quick response there.

I don't know the goals of your course, but if you want a quick fix that might allow you to proceed until someone gets back to you with a better method, I'd suggest using `gradlew run` with `--args` instead (e.g., `./gradlew run --args="-v"`).

I think @username_2 did some CLI-related improvements, do you have a better workflow?

username_2: Hi!

You mention you are using IntelliJ. If you also use the IntelliJ build system, you can create a run configuration to test the CLI.

I have never used self-generated binaries explicitly, but you could check whether the pre-built binaries work for you. If they do, there may be a problem with your build. |

BurntSushi/rust-csv | 123931180 | Title: Doc or impl bug "If `quote` is `None`, then no quoting will be used."

Question:

username_0: https://github.com/username_1/rust-csv/blob/e1552706c162d594f7d454b69bece07fd544ff49/src/reader.rs#L418 says

```
/// If `quote` is `None`, then no quoting will be used.
```

but `quote` is a `u8`, not an `Option<u8>`, so it doesn't look like I can turn off quoting support.

Status: Issue closed |

BSData/robotech-rpg-tactics | 162590779 | Title: BattleScribe 2.0

Question:

username_0: Hi guys.

BattleScribe 2.0 is coming and it contains some major changes to Data Editor and the data format.

While it should happily upgrade 1.15.x format data files to the new format, you never know what sneaky bugs might be lurking...

It would help a lot if you could run your data through it and make sure nothing gets broken so we have no nasty surprises on release day (whenever that might be...).

**Alpha Downloads:**

Desktop: https://github.com/BattleScribe/Desktop-Alphas/issues/1

Android: https://github.com/BattleScribe/Android-Alphas/issues/1

iOS: https://github.com/BattleScribe/iOS-Alphas/issues/1

If you find any issues, please do let me know: https://github.com/BattleScribe/Desktop-Alphas/issues |

uber/motif | 592817712 | Title: ScopeFactory with single unused dependency ignores the given creatable instance

Question:

username_0: When `ScopeFactory` is used with a scope that has a single unused dependency, the generated code seems to ignore the dependency-source instance that was passed in and tries to create the instance directly.

**Library version**: 0.3.3-SNAPSHOT

**Repro steps or stacktrace**:

Scope

```java
@Scope
public interface TestScope extends Creatable<TestScope.Dependencies> {

    interface Dependencies {
        String blah();
    }
}
```

Creating an instance of the scope

```java
TestScope.Dependencies dependencySource =
        new TestScope.Dependencies() {
            @Override
            public String blah() {
                return "something";
            }
        };

ScopeFactory.create(TestScope.class, dependencySource);
```

Generated code

```java
@ScopeImpl(
    children = {},
    scope = TestScope.class,
    dependencies = TestScope.Dependencies.class
)
public class TestScopeImpl implements TestScope {
  private final TestScope.Dependencies dependencies;

  public TestScopeImpl(TestScope.Dependencies dependencies) {
    this.dependencies = dependencies;
  }

  public TestScopeImpl() {
    this(new TestScope.Dependencies() {});
  }

  TestScope testScope() {
    return this;
  }
}
```

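Notice `this(new TestScope.Dependencies() {});` in the no-argument constructor: it creates an empty `Dependencies` instance instead of using the one passed to `ScopeFactory.create`.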

Answers:

username_1: Hi! I was wondering if there are any updates on this issue. |

angular-hispano/angular | 863289342 | Title: Translate: guide/interpolation.md

Question:

username_0: 📚Traducir: <!-- ✍️ editar: --> interpolation.md

<!--🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅

Translation of the official Angular documentation into Spanish

🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅-->

## File name:

<!-- ✍️ edit: --> interpolation.md

## Path of the file within the Angular project

<!-- ✍️ edit: --> https://github.com/angular-hispano/angular/blob/master/aio/content/guide/interpolation.md

Answers:

username_1: Closed as a duplicate of https://github.com/angular-hispano/angular/issues/124. @username_0, please work on that issue.

Status: Issue closed

|

szjug/szjug.github.io | 816247498 | Title: Is this JUG still active?

Question:

username_0: Hi there, I just want to ask whether szjug is still operating. Are there any upcoming events?

Answers:

username_1: Yes, there is a WeChat group.

But because of COVID, there have been no in-person meetings in 2020 and 2021 so far.

username_0: May I ask how to join the WeChat group?

username_1:

username_1: Scan the code above.

The code changes over time, so there is no point in putting it on the site.

Any ideas are welcome. |

openshifttips/web | 508559179 | Title: Basic release server script to view the different channels and the releases.

Question:

username_0: I'm not sure whether or where you would like to use this, or whether you'd add it to your tips. I named the script `ocr`.

* requires `jq`

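```
#!/bin/bash
# Interactive viewer for OpenShift upgrade channels (requires curl and jq).

PS3='Please enter your choice: '
options=("prerelease-4.1" "stable-4.1" "candidate-4.2" "fast-4.2" "stable-4.2")

# Fetch the upgrade graph for the selected channel and print its nodes,
# sorted numerically by version ("-rc" is stripped before splitting on ".").
_Command () {
    echo "Showing upgrade channel: ${channel}"
    curl -sH 'Accept: application/json' "https://api.openshift.com/api/upgrades_info/v1/graph?channel=${channel}" \
        | jq -S '.nodes | sort_by(.version | sub("-rc";"") | split(".") | map(tonumber)) | .[]'
}

select opt in "${options[@]}"
do
    # select leaves opt empty on an invalid choice; re-prompt instead of querying an empty channel
    if [[ -z "${opt}" ]]; then
        echo "Invalid choice: ${REPLY}"
        continue
    fi
    channel="${opt}"
    _Command
    break
done
```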
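The least obvious part of the script is the `sort_by` key in the jq filter. As a rough illustration only, here is a Python mirror of the key it computes; `jq_version_key` is a hypothetical helper, not part of the script, and it assumes versions of the form `X.Y.Z` or `X.Y.Z-rc.N`.

```python
def jq_version_key(version):
    """Python mirror of the jq key: sub("-rc";"") | split(".") | map(tonumber)."""
    # jq's sub() replaces only the first match, hence count=1.
    stripped = version.replace("-rc", "", 1)
    return [int(part) for part in stripped.split(".")]


versions = ["4.2.0", "4.1.20", "4.2.0-rc.3", "4.1.18"]
print(sorted(versions, key=jq_version_key))
# ['4.1.18', '4.1.20', '4.2.0', '4.2.0-rc.3']
```

Both jq and Python compare these arrays element by element, with a shorter prefix sorting first, so a release candidate such as `4.2.0-rc.3` ends up after the final `4.2.0` release.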

Status: Issue closed

Answers:

username_1: I've merged the PR, thanks again! |

sbraz/pymediainfo | 148879850 | Title: ValueError in MediaInfo.parse

Question:

username_0: It breaks on the line `lib.MediaInfoA_Open(handle, filename.encode("utf8"), 0)` with the message "Procedure probably called with too many arguments (4 bytes in excess)". I have just downloaded the latest version (0.7.84) of MediaInfo.

A quick look at the MediaInfo sources shows that the DLL API has changed: `MediaInfoA_Open` now takes only two arguments, so removing the last argument from the call makes it work.

Answers:

username_1: I cannot reproduce this with 0.8.4, but it's true that the function seems to take only 2 arguments: https://github.com/MediaArea/MediaInfoLib/blob/master/Source/MediaInfoDLL/MediaInfoDLL.cpp#L379

I cannot remember where the third argument came from.

I've pushed the change, thanks for the report.

Status: Issue closed

|

InfamousBanana/my-repository | 425912590 | Title: New screenshot added to gallery

Question:

username_0: <img src="https://locker.ifttt.com/v2/17179621/1553687130856-c88666e11aa88311/e8197fbf8ddcb95db22e1b13649354f12ebf9b1ba885f1e93265fbab87b5d7ea/a5439477-35d7-48ab-b9f6-196bd191d47c.png?sharing_key=87f4d199d5ad320e8932dbd0409e6e4b"><br>


via Android https://ift.tt/2uvdOrV<br>


March 27, 2019 at 12:45PM |

Azure/azure-event-hubs-for-kafka | 439399517 | Title: V1 SaslHandshake+SaslAuthenticate not working

Question:

username_0: Description
===========

[V1 SaslHandshake](https://kafka.apache.org/protocol.html#The_Messages_SaslHandshake) appears to be broken. As a result, clients that attempt to use V1 (most native Go clients and a few others) cannot authenticate.

[Confluent Kafka Go](https://github.com/confluentinc/confluent-kafka-go) always seems to use V0, as do the Java clients.

I've got a [working auth flow](https://github.com/username_0/kafka) and a [fix for Sarama](https://github.com/username_0/sarama/commit/6509b6f9a616196089617e28f70ea2e21e8406ae):

- https://github.com/username_0/kafka

- https://github.com/username_0/sarama/commit/6509b6f9a616196089617e28f70ea2e21e8406ae

How to reproduce
================

Attempt to connect using [Sarama Kafka client](https://github.com/Shopify/sarama).

Has it worked previously?
=========================

It has not worked previously, as far as I know.

Checklist
=========

Please provide the following information:

- [x] Verified that port 9093 is not blocked by firewall

- [x] Verified the namespace is either Standard or Dedicated tier (i.e. it is not Basic tier, which isn't supported)

- [x] Sample you're having trouble with: [https://github.com/username_0/kafka](https://github.com/username_0/kafka)

- [x] Apache Kafka version: `Azure Event Hubs for Kafka`

- [x] Kafka client configuration: N/A

- [x] Namespace and EventHub/topic name: `would prefer to keep this private but can provide if required`

- [x] Consumer or producer failure `both--auth failure`

- [x] If consumer, partition ID, group ID `N/A`

- [x] Timestamp in UTC `attempts made around 2019-05-02 02:00 to 03:00-ish`

- [x] Client ID `testClient`

- [x] Provide all logs N/A

- [x] Standalone repro `willing to send`

- [x] Operating system: `Windows 10 and Ubuntu WSL (up-to-date)`

- [x] Critical issue (critical with workaround)

Answers:

username_1: Thanks for reporting the issue, @username_0. SaslHandshake version 1 is currently not supported. We will update the service so that the ApiVersions response doesn't include version 1 for the SaslHandshake API until v1 is fully supported.

username_0: Thank you @username_1!

FWIW, there are a few other, more minor issues with the ApiVersions response. For example, it doesn't report being compatible with SaslAuthenticate, only with SaslHandshake. That doesn't seem to trip up clients, though.

I assume that means I'll have to submit a PR to Sarama so that it performs a more reliable ApiVersions check (right now it decides based on the user-specified Kafka version), but that's not on your team!
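A more reliable check would base the SASL flow on what the broker actually advertises in its ApiVersions response rather than on a configured Kafka version. The sketch below illustrates that negotiation in Python (Sarama itself is Go); `CLIENT_SUPPORTED`, `common_range`, and `choose_sasl_flow` are hypothetical names, while API keys 17 (SaslHandshake) and 36 (SaslAuthenticate) come from the Kafka protocol guide. It assumes the usual protocol semantics: handshake v1 carries the SASL token exchange in SaslAuthenticate requests, and v0 sends raw SASL bytes after the handshake.

```python
# Kafka protocol API keys, as listed in the Apache Kafka protocol guide.
SASL_HANDSHAKE = 17
SASL_AUTHENTICATE = 36

# Hypothetical: the version range this client implements for each API.
CLIENT_SUPPORTED = {
    SASL_HANDSHAKE: (0, 1),
    SASL_AUTHENTICATE: (0, 0),
}


def common_range(api_key, broker_versions):
    """Return the (min, max) versions both sides support, or None if they do not overlap."""
    if api_key not in broker_versions or api_key not in CLIENT_SUPPORTED:
        return None
    b_min, b_max = broker_versions[api_key]
    c_min, c_max = CLIENT_SUPPORTED[api_key]
    low, high = max(b_min, c_min), min(b_max, c_max)
    return (low, high) if low <= high else None


def choose_sasl_flow(broker_versions):
    """Pick a SaslHandshake version from the broker's ApiVersions response.

    broker_versions maps API key -> (min_version, max_version) as advertised by the broker.
    """
    handshake = common_range(SASL_HANDSHAKE, broker_versions)
    if handshake is None:
        raise RuntimeError("broker does not advertise a usable SaslHandshake version")
    low, high = handshake
    # Handshake v1 moves the token exchange into SaslAuthenticate requests,
    # so only use it when the broker also advertises SaslAuthenticate.
    if high >= 1 and common_range(SASL_AUTHENTICATE, broker_versions) is not None:
        return high, "SaslAuthenticate"
    if low == 0:
        # v0: SASL tokens are sent as raw bytes after the handshake.
        return 0, "raw SASL tokens"
    raise RuntimeError("SaslHandshake v1+ required, but SaslAuthenticate is not advertised")
```

For a response like the one described in this thread, `{17: (0, 1)}` with no SaslAuthenticate entry, `choose_sasl_flow` falls back to `(0, "raw SASL tokens")`; once both APIs are advertised, e.g. `{17: (0, 1), 36: (0, 0)}`, it returns `(1, "SaslAuthenticate")`.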