
SantaClaws91/ENSL-GatherBOT | 199270725 | Title: !help should display all possible commands?

Question:

username_0: !help displays: Use !info to request gather information or wait for the automated responses.

but it should also mention something like:

!msgconditions

So let !help display:

Use:

!info to request gather information or wait for the automated responses.

!msgconditions to change which Steam Friends status the bot should message you on.

And !msgconditions on its own should display:

!msgconditions [option]

Online : Only announce if your personastatus on steam is set to "Online" (Default setting)

Away : Announce if your personastatus on steam is set to "Online" or "Away"

Busy : Announce if your personastatus on steam is set to "Online" or "Busy"

All : Announce if your personastatus on steam is set to anything other than "Offline"

None : Disable gather announcing
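A minimal sketch of both requests, assuming a hypothetical Python bot (the real ENSL-GatherBOT code is not shown in this thread): keeping commands in a single registry lets `!help` list every command automatically, and each `!msgconditions` option maps to the set of Steam persona states that allow an announcement. `COMMANDS`, `MSG_CONDITIONS`, `help_text` and `should_announce` are illustrative names only.

```python
# Hypothetical command registry: !help is generated from it, so any
# newly added command is listed automatically.
COMMANDS = {
    "!info": "request gather information or wait for the automated responses.",
    "!msgconditions": "change which Steam Friends status the bot should message you on.",
}

# Hypothetical option table for !msgconditions.
# None means "any status except Offline"; an empty set disables announcing.
MSG_CONDITIONS = {
    "online": {"Online"},
    "away": {"Online", "Away"},
    "busy": {"Online", "Busy"},
    "all": None,
    "none": set(),
}


def help_text():
    """Build the !help reply from the registry."""
    lines = ["Use:"]
    for name, description in COMMANDS.items():
        lines.append(f"{name} to {description}")
    return "\n".join(lines)


def should_announce(option, persona_status):
    """Return True if a user with this option and persona status should be messaged."""
    allowed = MSG_CONDITIONS[option.lower()]
    if allowed is None:
        return persona_status != "Offline"
    return persona_status in allowed


print(help_text())
```

Generating the help text from the same table that dispatches commands keeps the two from drifting apart when commands are added.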

Answers:

username_1: This is resolved now. Thanks.

Status: Issue closed


serverless/examples | 212111517 | Title: Example for receiving a file through API Gateway and uploading it to S3

Question:

username_0: Hey,

I'm wondering if there is any good example which could be added to the list of examples, where a file (image, pdf, whatever) is received through API Gateway in a POST request and then uploaded to S3.

I think it would be great to have it, since it is a rather common use case for Lambdas.

Best regards,

username_0

Answers:

username_1: Why not have the client upload the file directly to S3? No need to pay for Lambda execution time just to forward a file to S3.

username_0: Hey,

My idea was that the Lambda function could do something like manipulating the file or using its data somewhere else.

This could also be done with an S3 event trigger (so that uploading a file to S3 triggers the Lambda), but in some cases it would be handier to upload the file through API Gateway and a Lambda function.

username_2: @username_1 @username_0 is this the recommended pattern? I'm pretty new to building RESTful APIs (serverless is awesome), so I'm not sure whether I should accept a base64-encoded string via the create method, or first create an object with one RESTful call and then put the base64-encoded string (image) in a second call. Any examples would be greatly appreciated :)

I know that there are examples for S3 upload and post-processing, but none that use a RESTful/DynamoDB setup.

username_2: @username_0, @username_1 also, should this issue be given the question label?

username_1: For uploading files, the best way would be to return a [pre-signed URL](http://docs.aws.amazon.com/AmazonS3/latest/dev/PresignedUrlUploadObject.html), then have the client upload the file directly to S3. Otherwise you'll have to implement uploading the file in chunks.

username_3: @username_2 @username_0 @username_1 @rupakg has anybody tried to create it? I'm also working on that.

username_2: This worked for me with runtime: nodejs6.10 and the dependencies installed. Let me know if you have any questions.

```javascript
"use strict";

const uuid = require("uuid");
const dynamodb = require("./dynamodb");
const AWS = require("aws-sdk");
const s3 = new AWS.S3();
var shortid = require('shortid');

module.exports.create = (event, context, callback) => {
  const timestamp = new Date().getTime();
  const data = JSON.parse(event.body);

  if (typeof data.title !== "string") {
    console.error("Validation Failed");
    callback(null, {
      statusCode: 400,
      headers: { "Content-Type": "text/plain" },
      body: "Couldn't create the todo item due to missing title."
    });
    return;
  }

  if (typeof data.subtitle !== "string") {
    console.error("Validation Failed");
    callback(null, {
      statusCode: 400,
      headers: { "Content-Type": "text/plain" },
      body: "Couldn't create the todo item due to missing subtitle."
    });
    return;
  }

  if (typeof data.description !== "string") {
    console.error("Validation Failed");
    callback(null, {
      statusCode: 400,
      headers: { "Content-Type": "text/plain" },
      body: "Couldn't create the todo item due to missing description."
    });
    return;
  }

  if (typeof data.sectionKey !== "string") {
    console.error("Validation Failed");
    callback(null, {
      statusCode: 400,
      headers: { "Content-Type": "text/plain" },
      body: "Couldn't create the todo item due to missing section key."
    });
    return;
  }

  if (typeof data.sortIndex !== "number") {
    console.error("Validation Failed");
    callback(null, {
      statusCode: 400,
      headers: { "Content-Type": "text/plain" },

[Truncated]

        body: JSON.stringify(params.Item),
      };
      callback(null, response);
    });
  }).catch(function(err) {
    console.log(err);
    // create a response
    const s3PutResponse = {
      statusCode: 500,
      body: JSON.stringify({
        "message": "Unable to load image to S3"
      }),
    };
    callback(null, s3PutResponse);
  });
};
```

username_3: @username_0 hi, I need help creating a CloudFormation JSON template to upload a file directly to S3 using API Gateway and a Lambda function. Is that possible?

username_0: @username_3 It seems to be possible, however I never finished my implementation.

You might want to check this blog post, which has quite simple instructions on how to do it! http://blog.stratospark.com/secure-serverless-file-uploads-with-aws-lambda-s3-zappa.html

username_3: @username_0 thank you, but I want to do it with only Lambda and API Gateway, with S3 defined in CloudFormation, but it is showing HTML.

Can you help me link the Lambda function and API Gateway?

username_0: This question is not really related to this thread.

Status: Issue closed


username_0: @aemc I think you should be able to set the file name when creating the presigned URL, including the extension if you wish.

username_4: I'm quite new to serverless myself but isn't the most widely seen approach more costly than what was asked by OP for a file upload + processing in a lambda?

What we usually see is either sending the file directly to S3, or asking a Lambda for a signed URL and then uploading to S3 (like in https://www.netlify.com/blog/2016/11/17/serverless-file-uploads/).

However, if the file needs to be processed, we then read the file back from S3 when we could have accessed it directly in the Lambda (and stored it in S3 afterwards if needed). This means we pay for an access we don't really need.

Am I wrong in thinking that the approach asked by OP (uploading in chunks then saving to S3) would be more cost-efficient than uploading to S3 when there's some processing involved?

username_5: Has anyone who worked with the signed-URL approach found a way to bundle the upload in a transaction? We are currently handling files up to 50 MB, so using a Lambda (or even API Gateway) is not an option due to the current limits. Whenever a file is uploaded, we have to make a database entry at the same time. If the database entry is made in a separate request (e.g. when creating the signed upload link), we run into trouble if the client calls the Lambda but then loses internet access and cannot finish the file upload: the database and S3 end up in an inconsistent state.

What is the serverless way to run transactions including a database and S3?

username_1: You can create a database record when the signed URL is created, and then update it from a Lambda triggered by an S3 event when the object has been created. If you want to handle aborted uploads, you could trigger a Lambda from a CloudWatch schedule that handles (e.g. removes) records for files that have not been uploaded within the validity of the signed URL. Or, if using DynamoDB, you could set a TTL on the records for pending uploads.

username_5: Hmm, this would still leave me with an inconsistent state. I could set a flag on the record that states whether the file is already confirmed or not. Sounds like monkey-patching a transaction system, though. If there is no better solution I will stay off serverless for these uploads for a while longer.

username_1: Yes, you could store the state of the upload (e.g. initiated/completed).

The serverless approach often requires you to think asynchronously. The advantage is that you don't have to pay compute time while you are just waiting for the client to upload data over a potentially slow connection.

username_6: @username_5 have you figured this out? I'm facing a similar situation. I thought about creating the database record when creating the signed URL, but I'm not sure how to handle my database state if something goes wrong or if the user just gives up uploading the file.

@username_1 in cases like this, wouldn't it be better to handle the upload using a lambda function?

username_1: @username_6 As mentioned before, just store the state of the upload in your database.

You could use Lambda, S3 triggers and DynamoDB TTL to implement a flow like:

- client calls API to get upload URL

- backend creates database record with `state: initiated` and `ttl: 3600`

- backend creates signed URL and returns it to the client

- client receives URL, and starts uploading file directly to S3

- when upload is complete, S3 triggers lambda

- lambda updates database record: `state: complete` (remove `ttl` field)

All records in the DB with `state: complete` are available in S3. Records with `state: initiated` are either uploading (and will turn to `state: complete`), or abandoned, and will be removed automatically when the TTL expires.
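The flow above can be simulated in a few lines. This is a minimal in-memory sketch, assuming a plain dict stands in for the DynamoDB table and ordinary function calls stand in for the API endpoint and the S3-triggered Lambda; every name is hypothetical. One detail worth noting: a DynamoDB TTL attribute holds an absolute Unix timestamp in seconds, so the sketch stores `now + 3600` rather than the duration itself.

```python
import time
import uuid

UPLOAD_URL_TTL_SECONDS = 3600

# Hypothetical stand-in for a DynamoDB table: upload_id -> record
table = {}


def request_upload(now):
    """API handler: create a pending record (a real one would also return a signed URL)."""
    upload_id = str(uuid.uuid4())
    table[upload_id] = {
        "state": "initiated",
        # DynamoDB TTL attributes hold an absolute epoch time in seconds.
        "ttl": int(now) + UPLOAD_URL_TTL_SECONDS,
    }
    return upload_id


def on_object_created(upload_id):
    """S3-triggered Lambda: mark the upload complete and remove the TTL."""
    record = table[upload_id]
    record["state"] = "complete"
    record.pop("ttl", None)


def expire_records(now):
    """Rough stand-in for DynamoDB's background TTL deletion (not instantaneous in reality)."""
    expired = [key for key, rec in table.items() if "ttl" in rec and rec["ttl"] <= now]
    for key in expired:
        del table[key]


start = time.time()
finished = request_upload(start)
abandoned = request_upload(start)
on_object_created(finished)
expire_records(start + UPLOAD_URL_TTL_SECONDS + 1)
print(table)
```

Only the completed record survives; the abandoned one disappears once its TTL passes, without any compute waiting on the client.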

username_6: I've ended up doing something pretty close to what you described. What I did was:

1. Client calls the API to get an upload URL

2. Client uploads the file to the provided URL

3. When the file is uploaded to S3, a Lambda that listens to this event inserts the data into my database.

Thanks for your help @username_1

username_7: Both approaches are valid.

With presigned S3 URLs, you have to implement the uploading logic on both the backend and the frontend. PUT requests cannot be redirected.

As an additional note to the [aws-node-signed-uploads example](https://github.com/serverless/examples/tree/master/aws-node-signed-uploads), it is _better_ to sign the content type and file size together with the file name (so that S3 checks those as well, in case an attacker wants to send some `.exe` file instead).
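To illustrate why signing the content type and size alongside the file name helps, here is a toy HMAC token scheme in Python. It is not S3's actual Signature Version 4 algorithm, and the secret, field layout and function names are hypothetical; it only shows that any field covered by the signature cannot be changed by the client without invalidating it.

```python
import hashlib
import hmac

SECRET = b"hypothetical-server-secret"


def _payload(filename, content_type, size):
    # Every field the server wants enforced is part of the signed payload.
    return f"{filename}\n{content_type}\n{size}".encode()


def sign_upload(filename, content_type, size):
    return hmac.new(SECRET, _payload(filename, content_type, size), hashlib.sha256).hexdigest()


def verify_upload(filename, content_type, size, signature):
    expected = sign_upload(filename, content_type, size)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)


sig = sign_upload("report.pdf", "application/pdf", 52_000)
print(verify_upload("report.pdf", "application/pdf", 52_000, sig))           # True
print(verify_upload("report.pdf", "application/x-msdownload", 52_000, sig))  # False
```

If only the file name were signed, the second call would pass, which is exactly the `.exe` swap described above.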

But receiving a file and processing it (even without S3 involved) is also a valid use case. Thanks @username_2, the code looks interesting. It looks like API Gateway base64-encodes the octet streams.

I'll keep looking, though.
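When binary data reaches a Lambda through an API Gateway proxy integration with binary media types enabled, the event's `body` is base64-encoded and `isBase64Encoded` is `true`. A minimal Python handler sketch, assuming that event shape; the S3 upload step is omitted and the JSON response is illustrative.

```python
import base64
import json


def handler(event, context):
    """Decode the request body of an API Gateway proxy event into raw bytes."""
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        data = base64.b64decode(body)
    else:
        data = body.encode("utf-8")
    # At this point the bytes could be processed and then stored, e.g. in S3.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"receivedBytes": len(data)}),
    }


fake_event = {"body": base64.b64encode(b"\x89PNG\r\n").decode("ascii"), "isBase64Encoded": True}
print(handler(fake_event, None)["body"])  # {"receivedBytes": 6}
```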

Jermolene/TiddlyWiki5 | 94587000 | Title: Extend syslink.js to linkify the prefix "$:/_" and trailing digits

Question:

username_0: In #1767 @pmario explains how automatic SystemTiddlerLink detection works:

```

There is a rule syslink.js that detects system tiddler links. It basically applies these rules:

starting with a literal $:

then any number of characters that are not whitespace, < or |

closing with anything that is, again, not whitespace, < or |

As Jeremy wrote ^^, basically everything except whitespace, < and | is allowed for system tiddlers to be recognized as automatic WikiLinks.

```

...but in my TWaddle site, I typically use the prefix

`$:/_` (specifically, I use `$:/_TWaddle/`)

However, this is not recognized as a WikiLink. Can it please be made to accept this?

Trailing digits or numbers are not included either. Pretty serious IMO.

`$:/foo2`

Answers:

username_1: I don't understand. `[[$:/_TWaddle/]]` works for me:

<img width="986" alt="screen shot 2015-07-13 at 09 30 49" src="https://cloud.githubusercontent.com/assets/70075/8650619/0be29e8a-2942-11e5-8d3d-3b836d7f28fc.png">

username_2: I think it makes sense to extend the automatic system tiddler link rules to include underscore and digits.
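A minimal Python sketch of the proposed change, assuming a simplified rule in which a system link is `$:/` followed by characters from an allowed class. Both character classes are hypothetical illustrations, not the actual regular expression in syslink.js.

```python
import re

# Hypothetical "before" rule: letters, dots and slashes only.
OLD_SYSLINK = re.compile(r"\$:/[A-Za-z./]+")
# Hypothetical "after" rule: also allow underscores, digits and hyphens.
NEW_SYSLINK = re.compile(r"\$:/[A-Za-z0-9_./-]+")


def find_links(pattern, text):
    return pattern.findall(text)


sample = "See $:/.foobar, $:/_TWaddle/config and $:/foo2 for details."
print(find_links(OLD_SYSLINK, sample))  # ['$:/.foobar', '$:/foo']
print(find_links(NEW_SYSLINK, sample))  # ['$:/.foobar', '$:/_TWaddle/config', '$:/foo2']
```

With the narrower class, `$:/_TWaddle/config` is not linked at all and `$:/foo2` loses its digit, matching the two symptoms reported above.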

Status: Issue closed

username_0: @username_1

You can see the difference if you type in the following two:

$:/.foobar

$:/_foobar

The first is recognized, the second isn't. People insert the dot or the underscore so that, e.g., all their own system tiddlers are listed together.

@username_2 - great.

username_1: Oh, I guess because auto-linking doesn't work for terms that are not CamelCased, I just got into the practice of always putting brackets around all my links.

StraboSpot/strabo-mobile | 87458436 | Title: images

Question:

username_0: Did some work with this in the branch https://github.com/StraboSpot/strabo-mobile/tree/imageUpload but still not working.

Answers:


username_1: Fixed in 864333c6fe5262ca22a708a7099f87ab4b017f54. I like the idea of "images" as a sibling to "properties". If it were a child of "properties", I feel that it would get too crowded.

username_0: @username_1 Can you see if you can get the download working too? I added code for it in the branch, but I'm not sure about the format we need to actually save the image in the spot locally.

username_1: Corrected image download in c457b04788231b6e7b6a22a1aa0d1363fc94a007. The problem was that the XHR response came back as a string rather than as a blob. The blob then needed to be converted back to a base64-encoded string.
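The underlying pitfall is language-independent: binary data decoded as text is corrupted, while raw bytes survive a base64 round trip. A minimal Python illustration, with a made-up byte string standing in for the downloaded image (the app itself does this with XHR in JavaScript):

```python
import base64

# Stand-in for downloaded image bytes (a JPEG starts with 0xFF 0xD8).
image_bytes = bytes([0xFF, 0xD8, 0xFF, 0xE0, 0x00, 0x10]) + b"JFIF"

# Wrong: treating the response as text replaces invalid UTF-8 sequences.
as_text = image_bytes.decode("utf-8", errors="replace")
corrupted = as_text.encode("utf-8")

# Right: keep the raw bytes (the "blob") and base64-encode them for local storage.
encoded = base64.b64encode(image_bytes).decode("ascii")
data_url = "data:image/jpeg;base64," + encoded
restored = base64.b64decode(encoded)

print(corrupted == image_bytes)  # False
print(restored == image_bytes)   # True
```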

username_0: @username_1 Great, this is looking good and I merged it into master. There is still a problem with the server overwriting images since they all have the same filename, but Jason says he'll fix it server-side tomorrow. See https://github.com/StraboSpot/strabo-server/issues/11

Status: Issue closed


atk4/ui | 301359142 | Title: Grid cannot handle $g->setModel(new MyModel($this->db), FALSE);

Question:

username_0: Starting from the demo (https://github.com/atk4/ui/blob/develop/demos/grid.php), I wanted to use code like this:

```php
<?php

require 'init.php';
require 'database.php';

$g = $app->add(['Grid']);
$g->setModel(new Country($db), FALSE);
$g->addQuickSearch();
$g->menu->addItem(['Add Country', 'icon' => 'add square'], new \atk4\ui\jsExpression('alert(123)'));
$g->menu->addItem(['Re-Import', 'icon' => 'power'], new \atk4\ui\jsReload($g));
$g->menu->addItem(['Delete All', 'icon' => 'trash', 'red active']);
$g->addColumn('name');
$g->addColumn(null, ['Template', 'hello<b>world</b>']);
//$g->addColumn('name', ['TableColumn/Link', 'page2']);
$g->addColumn(null, 'Delete');

$g->addAction('Say HI', function ($j, $id) use ($g) {
    return 'Loaded "'.$g->model->load($id)['name'].'" from ID='.$id;
});

$g->addModalAction(['icon'=>'external'], 'Modal Test', function ($p, $id) {
    $p->add(['Message', 'Clicked on ID='.$id]);
});

$sel = $g->addSelection();
$g->menu->addItem('show selection')->on('click', new \atk4\ui\jsExpression(
    'alert("Selected: "+[])', [$sel->jsChecked()]
));

$g->ipp = 10;
```

I only changed lines 7 and 12 (added `FALSE` so that I add the columns myself, and added one column).

This throws a "calling function on NULL" exception at line 234 of Grid.php...

It seems Grid cannot handle this, although it works with the normal Table and with Forms.

Answers:

username_1: It's not because of `setModel()`; it's because you have `null` as a column name, so it calls `Table->addColumn(null, 'whatever', null)`, and here https://github.com/atk4/ui/blob/develop/src/Table.php#L151 it tries to create a model field named `null` :)

```

$field = $this->model->addField($name); // $name = null here

```

Solution / workaround - use meaningful column name (which is not model field name).

username_1: Well actually it was because of setModel :)

Here is real issue why this happened: https://github.com/atk4/ui/issues/390 and solution: https://github.com/atk4/ui/pull/391

username_2: Table should support null as a column name.

Status: Issue closed


Meragon/Unity-WinForms | 205099157 | Title: I created C# code in Unity3D implementing Control and copied your code, but when I run it, only a mouse icon shows. Why? Thanks!

Question:

username_0: I created C# code in Unity3D implementing Control and copied your code, but when I run it, only a mouse icon shows. Why? Thanks!

Answers:

username_1: Make sure your control is placed on a form, like this:

```csharp
public class MyControl : Control
{
    public MyControl()
    {
        Size = new Size(64, 64);
    }

    // Draw the control as a red rectangle filling its bounds.
    protected override void OnPaint(PaintEventArgs e)
    {
        e.Graphics.FillRectangle(Color.Red, 0, 0, Width, Height);
    }
}

public class MyForm : Form
{
    public MyForm()
    {
        var c = new MyControl();
        c.Location = new Point(32, 32);
        Controls.Add(c);
    }
}

public class AppBeh : MonoBehaviour
{
    private void Start()
    {
        new MyForm();
    }
}
```

username_1: Or just upload your project and I'll take a look.

username_0: This is my project, thank you very much.

username_0: I sent it to your email.

Status: Issue closed


username_0: Could you tell me your email? I'll send my project to you. Thank you very much.

username_1: <EMAIL>

Status: Issue closed


paketo-buildpacks/paketo-website | 679245331 | Title: Update docs sidebar

Question:

username_0: We should update the docs sidebar nav to be more flexible and organized. The CFF web team has already made some changes to the Hugo config to support a more "tree-like" nav, but we'll need to make some changes to wire everything up. GitHub branch: https://github.com/paketo-buildpacks/paketo-website/tree/docs_sidebar_enhancement

The eventual structure for this should look something like this:

We don't have some of these pages yet, so for now we should just make the sidebar nav flexible and nest existing content around the proposed nav.

Answers:

username_0: This looks great. This has been implemented in the `redesign` branch and will be merged in once we're closer to shipping all of our website changes.

Status: Issue closed


getsentry/sentry-python | 466024993 | Title: Django Integration Leaks Memory

Question:

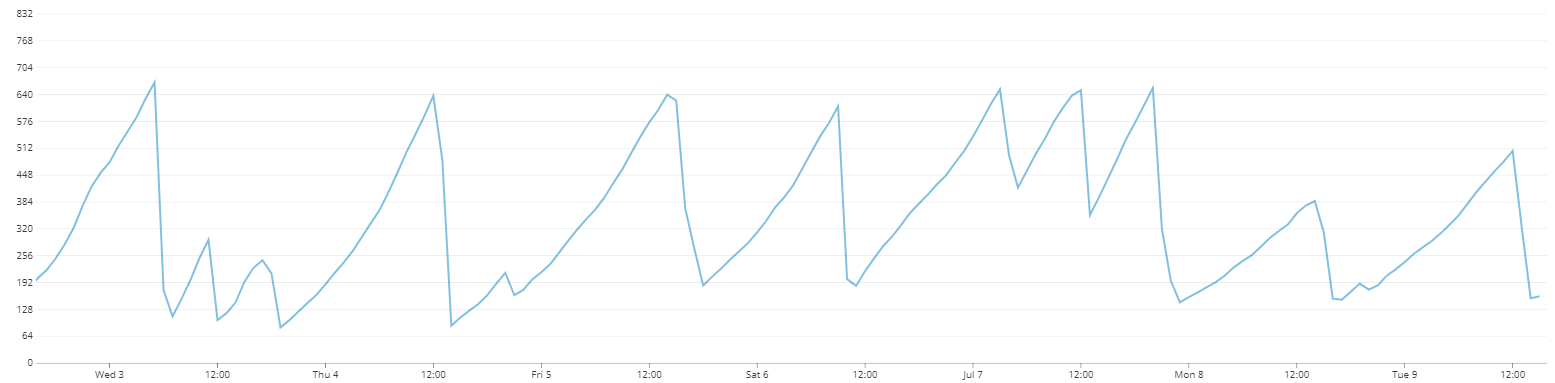

username_0: I spent around 3 days trying to figure out what was leaking in my Django app, and I was only able to fix it by disabling the Sentry Django integration (confirmed in a very isolated test using a memory profiler, tracemalloc and Docker). To give more context before the profiling information, this is how my memory usage graph looked on a production server (the app and/or a worker was killed after a certain threshold):

Now the data I gathered:

By performing 100,000 requests on this endpoint:

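```python
from rest_framework import status
from rest_framework.response import Response
from rest_framework.views import APIView


class SimpleView(APIView):
    # Minimal endpoint: does no work and returns an empty 204 response.
    def get(self, request):
        return Response(status=status.HTTP_204_NO_CONTENT)
```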

A [tracemalloc](https://docs.python.org/3/library/tracemalloc.html) snapshot, grouped by filename, showed the Sentry Django integration using about 9 MB of memory after a 217-second test at 459 requests per second (using NGINX and Hypercorn with 3 workers):

```

/usr/local/lib/python3.7/site-packages/sentry_sdk/integrations/django/__init__.py:0: size=8845 KiB (+8845 KiB), count=102930 (+102930), average=88 B

/usr/local/lib/python3.7/site-packages/django/urls/resolvers.py:0: size=630 KiB (+630 KiB), count=5840 (+5840), average=110 B

/usr/local/lib/python3.7/linecache.py:0: size=503 KiB (+503 KiB), count=5311 (+5311), average=97 B

/usr/local/lib/python3.7/asyncio/selector_events.py:0: size=465 KiB (+465 KiB), count=6498 (+6498), average=73 B

/usr/local/lib/python3.7/site-packages/sentry_sdk/scope.py:0: size=325 KiB (+325 KiB), count=373 (+373), average=892 B

```

tracemalloc probe endpoint:

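```python
import tracemalloc

from rest_framework import status
from rest_framework.decorators import api_view
from rest_framework.response import Response

# Start tracing at import time and keep a baseline snapshot to diff against.
tracemalloc.start()
start = tracemalloc.take_snapshot()


@api_view(['GET'])
def PrintMemoryInformation(request):
    # Compare the current allocations with the baseline, grouped by file.
    current = tracemalloc.take_snapshot()
    top_stats = current.compare_to(start, 'filename')
    for stat in top_stats[:5]:
        print(stat)
    return Response(status=status.HTTP_204_NO_CONTENT)
```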

I have performed longer tests, and the Sentry Django integration's memory usage only grows and is never released; this is just a scaled-down version of the tests I've been performing to identify this leak.

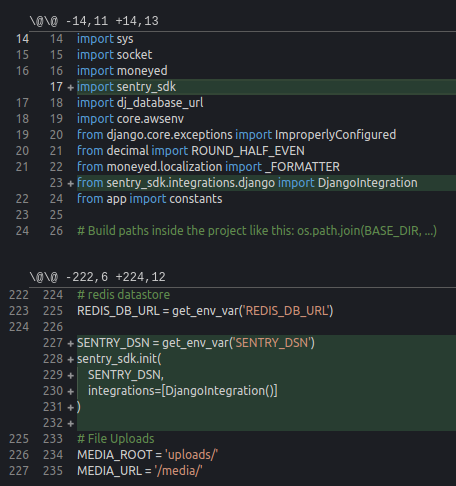

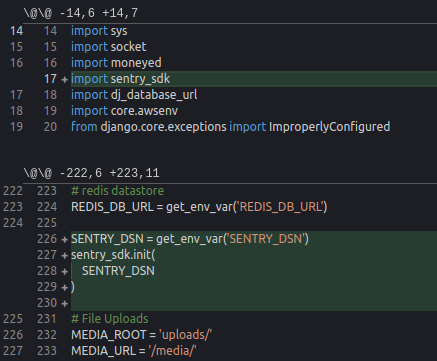

This is what my Sentry settings look like in settings.py:

Memory profile after disabling the Django integration (same test and endpoint); sentry_sdk no longer appears among the top 5 most memory-consuming files:

```

/usr/local/lib/python3.7/site-packages/django/urls/resolvers.py:0: size=1450 KiB (+1450 KiB), count=15123 (+15123), average=98 B

/usr/local/lib/python3.7/site-packages/hypercorn/protocol/h11.py:0: size=1425 KiB (+1425 KiB), count=8868 (+8868), average=165 B

/usr/local/lib/python3.7/site-packages/channels/http.py:0: size=1398 KiB (+1398 KiB), count=14848 (+14848), average=96 B

/usr/local/lib/python3.7/site-packages/h11/_state.py:0: size=1242 KiB (+1242 KiB), count=13998 (+13998), average=91 B

/usr/local/lib/python3.7/site-packages/h11/_connection.py:0: size=1226 KiB (+1226 KiB), count=15957 (+15957), average=79 B

```

settings.py for the above profile:

Memory profile grouped by line number (more verbose):

```

/usr/local/lib/python3.7/site-packages/sentry_sdk/integrations/django/__init__.py:272: size=4512 KiB (+4512 KiB), count=33972 (+33972), average=136 B

/usr/local/lib/python3.7/site-packages/sentry_sdk/integrations/django/__init__.py:134: size=4247 KiB (+4247 KiB), count=67945 (+67945), average=64 B

[Truncated]

Twisted==19.2.1

txaio==18.8.1

typed-ast==1.4.0

typing-extensions==3.7.4

Unidecode==1.1.1

urllib3==1.25.3

uvloop==0.12.2

vine==1.3.0

wcwidth==0.1.7

websockets==7.0

whitenoise==4.1.2

wrapt==1.11.2

wsproto==0.14.1

zipp==0.5.2

zope.interface==4.6.0

```

I used the [official python docker image](https://hub.docker.com/_/python) with the label 3.7, meaning latest 3.7 version.

Hope you guys can figure out the problem with this data; I'm not sure I'll have the time to contribute myself!

Answers:

username_0: Identifying a memory leak makes me a contributor? :/

username_1: Could you try a different wsgi server? I cannot reproduce any of this with uwsgi or django's devserver.

username_0: That might be it, then. I'm actually using ASGI, and I tried the three available options: Daphne, Hypercorn and Gunicorn+Uvicorn.

username_1: Oh you're using Django from a development branch and run on ASGI? That might be not the same issue @username_3 is seeing at all then.

username_0: I'm absolutely not using Django from a development branch, I'm using Django Channels.

username_1: got it. we never tested with channels, but have support/testing on our roadmap. the memory leak still shouldn't happen. we'll investigate but it could take some time.

username_0: Feel free to reach me for any further clarifications, I'm happy to help and appreciate your efforts nonetheless 👍

username_1: Yeah, I can repro it with a random channels app that just serves regular routes via ASGI + hypercorn (not even any websockets configured)

So far no luck with uvicorn though. Did you observe faster/slower leaking when comparing servers?

username_1: I understand the problem now, and can see how we leak memory. I have no idea why this issue doesn't show with uvicorn but I also don't care.

It's very simple: For resource cleanup we expect every Django request to go through `WSGIHandler.__call__`. This doesn't happen for channels.

I think you will see more of the same issue if you try to set tags with `configure_scope()` in one request. They should not persist between requests, but do with ASGI.

This issue should not be new in 0.10; it has most likely been there since forever. @username_3, if you saw a problem when upgrading to 0.10, then this is an entirely separate issue, and I would need more information about the setup you're running.

I think this becomes a duplicate of #162 then (or at least it will have #162 as a dependency). cc @username_2. The issue is that the last time I looked into this, I saw a not-quite-stable specification, which is why I was holding off on it.

You might be able to find a workaround by installing https://github.com/encode/sentry-asgi in addition to the Django integration
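The failure mode described above can be reproduced with a toy model. In this sketch every name is hypothetical and far simpler than sentry_sdk's real Hub and Scope: per-request tags go into a process-wide scope, and they are cleared only by the entry point playing the role of `WSGIHandler.__call__`. Requests arriving through another entry point, as with Channels over ASGI, skip that cleanup, so the tags accumulate.

```python
class Scope:
    """Hypothetical process-wide scope holding per-request tags."""

    def __init__(self):
        self.tags = {}


SCOPE = Scope()


def view(request_id):
    # Application code tags the current request.
    SCOPE.tags[f"request-{request_id}"] = "handled"


def wsgi_entry(request_id):
    # Cleanup is tied to this entry point only.
    try:
        view(request_id)
    finally:
        SCOPE.tags.clear()


def asgi_entry(request_id):
    # Same view, but nothing resets the scope afterwards.
    view(request_id)


for i in range(1000):
    wsgi_entry(i)
print(len(SCOPE.tags))  # 0

for i in range(1000):
    asgi_entry(i)
print(len(SCOPE.tags))  # 1000
```

A middleware that wraps the ASGI entry point with the same try/finally cleanup removes the growth, which is the idea behind adding an ASGI middleware.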

username_2: ASGI 3 is baked and done. You’re good to go with whatever was blocked there.

username_1: I don't think this helps in this particular situation because the latest version of channels still appears to use a prev version (had to pin down sentry-asgi)

username_1: @username_2 I want to pull sentry-asgi into sentry. Do you think it would be possible to make a middleware that behaves as polyglot ASGI 2 and ASGI 3?

username_2: Something like this would be a "wrap ASGI 2 or ASGI 3, and return an ASGI 3 interface" middleware...

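```python
import inspect


def asgi_2_or_3_middleware(app):
    if len(inspect.signature(app).parameters) == 1:
        # ASGI 2: app(scope) returns an instance awaited with (receive, send)
        async def compat(scope, receive, send):
            instance = app(scope)
            await instance(receive, send)

        return compat
    else:
        # ASGI 3: app(scope, receive, send) is already a single coroutine callable
        return app
```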

Alternatively, you might want to test if the app is ASGI 2 or 3 first, and just wrap it in a 2->3 middleware if needed.

```python
def asgi_2_to_3_middleware(app):
    # Wrap an ASGI 2 app so it can be called with the ASGI 3 signature
    async def compat(scope, receive, send):
        instance = app(scope)
        await instance(receive, send)

    return compat
```

Or equivalently, this class based implementation, that Uvicorn uses: https://github.com/encode/uvicorn/blob/master/uvicorn/middleware/asgi2.py

username_3: The main problem I observed was an increase in memory usage over time of a long-running (tens of minutes) Celery task (which has lots of Django ORM usage). I haven't had a chance to do any traces, but I'll create a new issue when I do.

username_1: @username_0 When 0.10.2 comes out, you can do this to fix your leaks: https://github.com/getsentry/sentry-python/blob/ce3b49f8f0f76939d972868f417dcf9ef78758aa/tests/integrations/django/myapp/asgi.py#L19

username_0: Hey! that sounds great @username_1 I will try it eventually, thanks for your time!!!

username_1: 0.10.2 is released with the new ASGI Middleware! No documentation yet, will write tomorrow.

username_2: Nice one. 👍

username_1: Please watch this PR, when it is merged the docs are automatically live:

https://github.com/getsentry/sentry-docs/pull/1118

username_1: docs are deployed, this is basically fixed. New docs for ASGI are live on https://docs.sentry.io/platforms/python/asgi/

Status: Issue closed

username_4: @username_1 I'm currently using channels==1.1.8 and raven==6.10.0 and experiencing memory leak issues very similar to this exact issue. I wanted to inquire whether there is a solution for Django Channels 1.X? I see that Django Channels 2.0 and sentry-sdk offer a solution via SentryAsgiMiddleware. I wanted to see if this also works with Django Channels 1.0?

username_1: I'm not aware of any issues on 1.x, and for that version sentry-sdk does not do anything differently, but I believe you would have to try for yourself.

AAChartModel/AAChartKit-Swift | 688827059 | Title: moveOverEventMessage.x is nil when tapping any node - AAChartViewDelegate

Question:

username_0: Hi,

I am using a line chart in my app, and I want to show a certain view when any node (x, y) in the graph is tapped.

Answers:

username_1: Can you post your configuration code for AAChartModel or AAOptions?

username_0:

```swift
self.chartModel = AAChartModel()
    .chartType(AAChartType.line)
    .colorsTheme(self.currentSelectedGraphData.getSelectedColorTheme()) // Colors theme
    .dataLabelsEnabled(true)
    .stacking(.none)
    .touchEventEnabled(true)
    .tooltipEnabled(false)
    .yAxisLabelsEnabled(true)
    .legendEnabled(false)
    .yAxisVisible(true)
    .yAxisMin(Float(self.currentSelectedGraphData.minYValue))
    .yAxisMax(Float(self.currentSelectedGraphData.maxYValue))
    .yAxisAllowDecimals(true)
    .xAxisVisible(false)
    .xAxisLabelsEnabled(false)
    .xAxisTickInterval(1.0)
    .animationType(.linear)
    .markerRadius(5)
    .markerSymbolStyle(.borderBlank)
    .markerSymbol(.circle)
    .series(self.currentSelectedGraphData.getSelectedGraphSeries())
```

username_0: By the way, I have observed this happening in the Swift sample project as well.

username_1: I think it may be a Highcharts bug. However, the index value always appears, so you can get the x and y values by index:

```swift

x = moveOverEventMessage.index

y = aaSeriesElement.data[moveOverEventMessage.index]

```

username_0: Actually, it's not that simple. My X and Y axes are generated at run time according to the data model, and X values could also be fractional, such as 0.1, so I can't really rely on the above approach.

username_1: I found the reason now. Because JavaScript is a dynamic language, the types of the x and y values are not stable: sometimes they are `String`, sometimes `Float`, as shown below:

* `String` type y value

```js

user finger moved over!!!,get the move over event message: {

category = "C++";

index = 8;

name = 2020;

offset = {

plotX = "259.95833333333";

plotY = "196.772604709689";

};

x = 8;

y = "14.2";

}

```

* `Float` type y value

```js

user finger moved over!!!,get the move over event message: {

category = C;

index = 6;

name = 2020;

offset = {

plotX = "198.79166666667";

plotY = "156.3052309904728";

};

x = 6;

y = 17;

}

```
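Since the values arrive sometimes as strings and sometimes as numbers, one defensive option on the receiving side is to normalize them before use. A minimal sketch of that idea in Python, assuming the event message is available as a plain dictionary; `to_float` is a hypothetical helper, and the library's actual fix (commit b72b82b) is in Swift and is not reproduced here.

```python
from typing import Optional


def to_float(value) -> Optional[float]:
    """Coerce a loosely typed chart value (str, int, float or None) to float."""
    if value is None or isinstance(value, bool):
        return None
    if isinstance(value, (int, float)):
        return float(value)
    if isinstance(value, str):
        try:
            return float(value)
        except ValueError:
            return None
    return None


message_a = {"index": 8, "x": 8, "y": "14.2"}
message_b = {"index": 6, "x": 6, "y": 17}
print(to_float(message_a["y"]), to_float(message_b["y"]))  # 14.2 17.0
```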

Status: Issue closed

username_1: It was fixed in this commit b72b82b

username_1: --------------------------------------------------------------------------------

🎉 Congrats

🚀 AAInfographics (5.0.3) successfully published

📅 August 31st, 21:43

🌎 https://cocoapods.org/pods/AAInfographics

👍 Tell your friends!

--------------------------------------------------------------------------------


Mygod/VPNHotspot | 462312927 | Title: Neither user 10109 nor current process has android.permission.MANAGE_USB

Question:

username_0: How to fix USB tethering? Error: "Neither user 10109 nor current process has android.permission.MANAGE_USB"

"pm grant be.mygod.vpnhotspot android.permission.MANAGE_USB" not working :-(

Android 9.0 (API 28), LineageOS 16.0

Answers:

username_1: #14

Status: Issue closed


haskell-distributed/network-transport-tcp | 89090421 | Title: Network.Transport.TCP may reject incoming connection request

Question:

username_0: Suppose A and B are connected, but the connection breaks. When A realizes this immediately and sends a new (heavyweight) connection request to B, then it /might/ happen that B has not yet realized that the current connection has broken and will therefore reject the incoming request from A as invalid.

This is low priority because

* the window of opportunity for the problem to occur is small, especially because in the case of a true network failure it will take some time before a new connection can be established

* even if the problem _does_ arise, A can simply try to connect again (A might have to do that anyway, to find out whether the network problem has been resolved).

Answers:

username_1: From @username_1 on June 16, 2015 22:23 _Copied from original issue: haskell-distributed/distributed-process#31_

This may refer to the same problem we are observing when resolving connection crossing [1].

A can retry for a long time if B doesn't have any stimulus to test the connection health. TCP does not detect connection failures unless B is trying to send something, and moreover, detection won't happen promptly unless the user tweaks the TCP user timeout or the number of retries.

[1] https://cloud-haskell.atlassian.net/browse/NTTCP-9
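On Linux, the knobs mentioned above can be set per socket. A minimal Python sketch: the option names are real socket options exposed by Python's `socket` module on platforms that support them (options absent on the current platform are skipped), but the values are illustrative assumptions, not recommendations from this discussion, and network-transport-tcp itself is Haskell.

```python
import socket


def tune_failure_detection(sock, user_timeout_ms=10_000, idle_s=30, interval_s=5, probes=3):
    """Ask the kernel to notice dead peers sooner; returns the options that were applied."""
    applied = {}
    # Keepalive probes give the kernel a stimulus to test an otherwise idle connection.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    applied["SO_KEEPALIVE"] = 1
    tcp_options = {
        # Max time (ms) transmitted data may remain unacknowledged before the connection is dropped.
        "TCP_USER_TIMEOUT": user_timeout_ms,
        # Idle time (s) before the first keepalive probe.
        "TCP_KEEPIDLE": idle_s,
        # Interval (s) between keepalive probes.
        "TCP_KEEPINTVL": interval_s,
        # Number of unanswered probes before the connection is considered dead.
        "TCP_KEEPCNT": probes,
    }
    for name, value in tcp_options.items():
        option = getattr(socket, name, None)
        if option is not None:
            sock.setsockopt(socket.IPPROTO_TCP, option, value)
            applied[name] = value
    return applied


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
    print(tune_failure_detection(s))
```

Even with these settings, B only notices a broken connection after the timeouts elapse, so A's retry remains necessary.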

openssl/openssl | 375850505 | Title: Cannot compile openssl1.1.1's Windows 32-bit static library

Question:

username_0: First of all, currently, is there an official openssl 1.1.1 Windows 32-bit static library? Where can I download it?

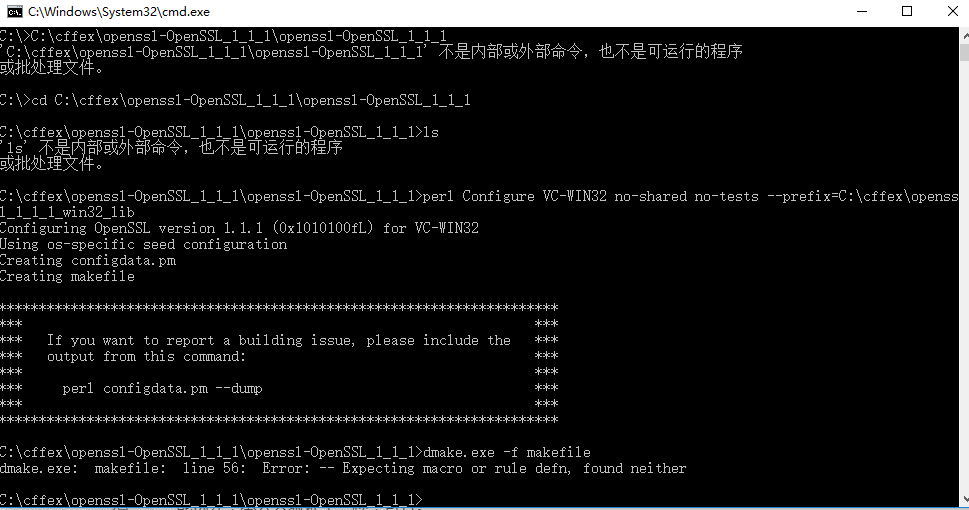

Besides, I followed this Chinese guide: https://blog.csdn.net/qq_22000459/article/details/82968171. But as many posts on the web say, building with the nmake tool fails, and I could not fix it. Building with the dmake.exe that comes with Perl also fails, with `Error: -- Expecting macro or rule defn, found neither`. I tried many times and can't fix it. So far, it is easy for me to compile a static library of OpenSSL 1.1.1 on Linux, but on Windows I cannot solve the problem.

Is anyone that can help me? Thanks a lot.

Answers:

username_1: How about you show us exactly what you did, and what errors you get. Start with your configuration command, and then a log of what goes wrong.

username_0:

username_2: You're not supposed to use dmake. You are supposed to use nmake. What happens when you attempt to use that?

username_0:

I followed posts on many Chinese websites, all of which say that compiling with nmake has problems, so I used dmake. I tried it, and there's also a problem. One post seems to say that the makefile supports nmake by default, and that compiling with dmake is easily buggy. But nmake compilation also has problems, so it's hard to fix.

username_2: Are you running this from a *developer* command prompt? Running nmake from the command line requires your environment to be properly set up. Using the developer command prompt should do that for you. Make sure you use the 32-bit developer command prompt with the VC-WIN32 OpenSSL Configuration target. If you really want 64-bit then use a 64-bit developer command prompt and VC-WIN64A OpenSSL Configuration target.

username_1: From the output, it looks like the environment is set up correctly. @username_0, would you mind translating that error message? Most of us don't read Chinese.

username_1: It's the translation of the `cl` error message that I'm interested in. Among others, it should say on what line the error occurred.

username_3: Although I know nothing about Windows, I can provide the translation Richard was interested in. That `cl` error message said `'cl' is not either an external or internal command or an executable program`...

I guess you can then figure out the 'native' wording of Windows...

username_3: the `0x1` and `0x2` are return values of `cl` and `nmake.exe` in the 2 `fatal error U1017` lines, respectively.

username_1: Ah, in that case I was wrong earlier, the environment isn't set up right. See @mattcasswell's comment...
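Since `cl` itself could not be found, the compiler environment was never loaded. A minimal Python sketch (not part of OpenSSL's build system) that checks, before running `nmake`, whether the tools the Windows build relies on are on `PATH`; the tool list is an assumption based on OpenSSL 1.1.1's Windows notes, and `missing_build_tools` is a hypothetical helper.

```python
import shutil
from typing import List, Optional

# Tools the VC-WIN32 build is assumed to need on PATH.
REQUIRED_TOOLS = ("cl", "nmake", "perl")


def missing_build_tools(path: Optional[str] = None) -> List[str]:
    """Return the required tools that cannot be found on PATH (or on `path` if given)."""
    return [tool for tool in REQUIRED_TOOLS if shutil.which(tool, path=path) is None]
```

Run it from the prompt you intend to build in; an empty list means the Visual Studio environment is loaded. For a 32-bit static library the usual sequence is then `perl Configure VC-WIN32 no-shared` followed by `nmake`, but check `NOTES.WIN` for your OpenSSL version.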

username_1: Thanks for the translation, @username_3

Status: Issue closed

username_0: Since the VS2015 x86 Native Tools Command Prompt was not installed by default, I had to rerun the VS2015 installer to add it.

I then ran nmake from the VS2015 x86 Native Tools Command Prompt.

Thanks a lot. Problem solved. @username_1 @username_2 @username_3 |

melvinsalas/tucurrique-website | 951006467 | Title: New Post - 178492562722259_996093277628846

Question:

username_0:

Link: https://www.facebook.com/178492562722259/posts/996093277628846/ |

rynazavr/goit-js-hw-01 | 623454488 | Title: bind

Question:

username_0: https://learn.javascript.ru/bind

https://stackoverflow.com/questions/2236747/what-is-the-use-of-the-javascript-bind-method

https://medium.com/@stasonmars/%D0%BF%D0%BE%D0%B4%D1%80%D0%BE%D0%B1%D0%BD%D0%BE-%D0%BE-%D0%BC%D0%B5%D1%82%D0%BE%D0%B4%D0%B0%D1%85-apply-call-%D0%B8-bind-%D0%BD%D0%B5%D0%BE%D0%B1%D1%85%D0%BE%D0%B4%D0%B8%D0%BC%D1%8B%D1%85-%D0%BA%D0%B0%D0%B6%D0%B4%D0%BE%D0%BC%D1%83-javascript-%D1%80%D0%B0%D0%B7%D1%80%D0%B0%D0%B1%D0%BE%D1%82%D1%87%D0%B8%D0%BA%D1%83-ddd5f9b06290

Answers:

username_0: https://www.javascripttutorial.net/javascript-bind/ |

OpenSourceBrain/StochasticityShowcase | 199661869 | Title: Can't run LEMS_NoisyCurrentInput.xml

Question:

username_0: @username_1 @justasb

I'm running this through pyNeuroML (which I don't think is the problem):

```
from pyneuroml import pynml

pynml.run_lems_with_jneuroml('LEMS_NoisyCurrentInput.xml')
```

which tells me that things failed with (abbreviated):

```
pyNeuroML >>> *** Command: java -Xmx400M -jar ".../site-packages/pyNeuroML-0.2.0-py3.4.egg/pyneuroml/lib/jNeuroML-0.8.0-jar-with-dependencies.jar" "LEMS_NoisyCurrentInput.xml" ***
```

So I run that on the command line to get the specific error:

```
$ java -Xmx400M -jar ".../site-packages/pyNeuroML-0.2.0-py3.4.egg/pyneuroml/lib/jNeuroML-0.8.0-jar-with-dependencies.jar" "LEMS_NoisyCurrentInput.xml"
jNeuroML v0.8.0
Loading: /.../StochasticityShowcase/NeuroML2/LEMS_NoisyCurrentInput.xml with jLEMS...
org.lemsml.jlems.core.run.RuntimeError: Error at PathDerivedVariable eval(): tgt: null; sin: IF_curr_exp[IF_curr_exp]; tgtvar: I; path: synapses[*]/I
    at org.lemsml.jlems.core.run.PathDerivedVariable.eval(PathDerivedVariable.java:128)
    at org.lemsml.jlems.core.run.StateType.initialize(StateType.java:373)
    at org.lemsml.jlems.core.run.StateInstance.initialize(StateInstance.java:177)
    at org.lemsml.jlems.core.run.StateInstance.initialize(StateInstance.java:160)
    at org.lemsml.jlems.core.run.MultiInstance.initialize(MultiInstance.java:63)
    at org.lemsml.jlems.core.run.StateInstance.initialize(StateInstance.java:165)
    at org.lemsml.jlems.core.run.MultiInstance.initialize(MultiInstance.java:63)
    at org.lemsml.jlems.core.run.StateInstance.initialize(StateInstance.java:165)
    at org.lemsml.jlems.core.sim.Sim.run(Sim.java:276)
    at org.lemsml.jlems.core.sim.Sim.run(Sim.java:152)
    at org.lemsml.jlems.core.sim.Sim.run(Sim.java:143)
    at org.neuroml.export.utils.Utils.loadLemsFile(Utils.java:391)
    at org.neuroml.export.utils.Utils.runLemsFile(Utils.java:364)
    at org.neuroml.JNeuroML.main(JNeuroML.java:306)
Caused by: org.lemsml.jlems.core.run.RuntimeError:
Problem while trying to return a value for variable I: NaN
```

and then a bunch of INFO messages. Do you know what I am missing to be able to run this example successfully?

Answers:

username_1: @username_0 That looks like an old version of pyNeuroML... I'd pull the latest from github (or merge from master), install it and try again.

username_1: I assume it's ok to close this now @username_0?

username_0: Yes.

Status: Issue closed

|

gbif/portal-feedback | 233392811 | Title: OccurrenceID search doesn't return results

Question:

username_0: **OccurrenceID search doesn't return results**

Copying the occurrenceID from this record:

https://demo.gbif.org/occurrence/575175144

Does not return results when you add it to an occurrence search:

https://demo.gbif.org/occurrence/search?dataset_key=bf2a4bf0-5f31-11de-b67e-b8a03c50a862&occurrence_id=http:%2F%2Fdata.rbge.org.uk%2Fherb%2Fe00070244&taxon_key=3097538&advanced=1

-----

fbitem-4cd51ab3c35a7b3305c9ec1422d26bc93a21a482

System: Chrome 58.0.3029 / Mac OS X 10.11.5

Referer: https://demo.gbif.org/occurrence/search?dataset_key=bf2a4bf0-5f31-11de-b67e-b8a03c50a862&occurrence_id=http:%2F%2Fdata.rbge.org.uk%2Fherb%2Fe00070244&taxon_key=3097538&advanced=1

Window size: width 1344 - height 799

[API log](http://elk.gbif.org:5601/app/kibana#/discover/UAT-Varnish-403s?_g=(refreshInterval:(display:Off,pause:!f,value:0),time:(from:'2017-06-03T20:10:29.214Z',mode:absolute,to:'2017-06-03T20:16:29.214Z'))&_a=(columns:!(request,response,clientip),filters:!(),index:'prod-varnish-*',interval:auto,query:(query_string:(analyze_wildcard:!t,query:'response:%3E499%20AND%20(request:%22%2F%2Fapi.gbif.org%22)')),sort:!('@timestamp',desc))&indexPattern=uat-varnish-*&type=histogram)

[Site log](http://elk.gbif.org:5601/app/kibana#/discover/UAT-Varnish-403s?_g=(refreshInterval:(display:Off,pause:!f,value:0),time:(from:'2017-06-03T20:10:29.214Z',mode:absolute,to:'2017-06-03T20:16:29.214Z'))&_a=(columns:!(request,response,clientip),filters:!(),index:'prod-varnish-*',interval:auto,query:(query_string:(analyze_wildcard:!t,query:'response:%3E399%20AND%20(request:%22%2F%2Fdemo.gbif.org%22)')),sort:!('@timestamp',desc))&indexPattern=uat-varnish-*&type=histogram)

Answers:

username_1: Thanks for reporting.

This looks like an API issue and is the same behaviour as on the current production site:

Current site: http://www.gbif.org/occurrence/search?ORGANISM_ID=http%3A%2F%2Fdata.rbge.org.uk%2Fherb%2FE00070244

No results for the unencoded query: http://api.gbif.org/v1/occurrence/search?occurrence_id=http://data.rbge.org.uk/herb/e00070244

No results for the encoded query: http://api.gbif.org/v1/occurrence/search?occurrence_id=http%3A%2F%2Fdata.rbge.org.uk%2Fherb%2Fe00070244

but the occurrence is in SOLR

https://demo.gbif.org/occurrence/search?q=e00070244&basis_of_record=PRESERVED_SPECIMEN&country=CL&taxon_key=3097538

Occurrence: https://demo.gbif.org/occurrence/575175144

username_2: Hi. The duplicate issue referenced above was mine (for some reason my login info was not recorded; perhaps I started writing the issue before I logged in).

[https://github.com/gbif/portal-feedback/issues/235](https://github.com/gbif/portal-feedback/issues/235)

But I have to say the behaviour is not the same. In my case, the API works well:

http://api.gbif.org/v1/occurrence/search?occurrence_id=SANT:SANT-Lich:8850-B

username_1: @username_2 I see that it is an issue of casing: it looks like the API and the front end don't agree on whether case is important, and this varies per field. I'll look into it. Thank you for reporting.

As for the login info, that is to be expected (and leaving feedback without contact info is just fine, by the way). We have deliberately left it to the user to provide contact details; the idea is to add an option to set a default (e.g. your GitHub handle), but we haven't implemented that yet.

username_1: The front end lowercases the "E", following the procedure used for other fields, but this is not how the API works. It seems reasonable that IDs are case sensitive, so the front end should reflect that.

This works: http://api.gbif.org/v1/occurrence/search?occurrence_id=http%3A%2F%2Fdata.rbge.org.uk%2Fherb%2FE00070244
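The working URL differs from the failing ones only in the case of the "E". A minimal Python sketch, assuming the query string is built client-side, of constructing the search URL so that percent-encoding is applied but the identifier's case is left untouched; `occurrence_search_url` is a hypothetical helper, not part of the portal code.

```python
from urllib.parse import urlencode

API = "http://api.gbif.org/v1/occurrence/search"


def occurrence_search_url(occurrence_id: str) -> str:
    """Build an occurrence search URL, keeping the ID's original case."""
    # urlencode percent-encodes ':' and '/' but never changes letter case,
    # so 'E00070244' stays upper-case, as the case-sensitive API expects.
    return API + "?" + urlencode({"occurrence_id": occurrence_id})
```

Called with `http://data.rbge.org.uk/herb/E00070244`, this yields exactly the working URL above.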

Status: Issue closed

|

iBotPeaches/Apktool | 195789562 | Title: Failed to decompile the apk

Question:

username_0: ### Information

1. **Apktool Version (`apktool -version`)** - 2.2.1

2. **Operating System (Mac, Linux, Windows)** - Mac Sierra, and JDK 1.8

3. **APK From? (Playstore, ROM, Other)** - Local apk

### Stacktrace/Logcat

```
Exception in thread "main" brut.androlib.AndrolibException: brut.directory.DirectoryException: file must be a directory: client-xxxx
    at brut.androlib.res.AndrolibResources.decodeManifestWithResources(AndrolibResources.java:225)
    at brut.androlib.Androlib.decodeManifestWithResources(Androlib.java:137)
    at brut.androlib.ApkDecoder.decode(ApkDecoder.java:106)
    at brut.apktool.Main.cmdDecode(Main.java:166)
    at brut.apktool.Main.main(Main.java:81)
Caused by: brut.directory.DirectoryException: file must be a directory: client-16.5.33-qa-phone-debug
    at brut.directory.FileDirectory.<init>(FileDirectory.java:38)
    at brut.androlib.res.AndrolibResources.decodeManifestWithResources(AndrolibResources.java:205)
    ... 4 more
```

### Steps to Reproduce

1. Decompile the APK with the following command: `apktool d xxxx.apk`

### Questions to ask before submission

1. Have you tried `apktool d`, `apktool b` without changing anything? - Answer: I have only tried to decompile the APK with this command: `apktool d xxxx.apk`

Answers:

username_1: Haven't seen this problem before. Almost like the manifest couldn't be located or the structure of the application invalid.

Unfortunately, I will need the APK to look at this any further. If the application is private, you can email me at (ibotpeaches) (at) gmail (dot) com with the subject "[PRIVATE] Bug 1381 - Apktool"; otherwise, just upload it here.
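Before sending the file, a quick local check can show whether the archive even has the structure an APK should have. A rough Python sketch, not apktool's own logic: it only verifies that the file is a ZIP archive with `AndroidManifest.xml` at its root; `looks_like_apk` is a hypothetical helper name.

```python
import zipfile


def looks_like_apk(path: str) -> bool:
    """Rough sanity check: an APK is a ZIP archive with AndroidManifest.xml at its root."""
    # is_zipfile returns False (rather than raising) for missing or non-ZIP files.
    if not zipfile.is_zipfile(path):
        return False
    with zipfile.ZipFile(path) as apk:
        return "AndroidManifest.xml" in apk.namelist()
```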

Status: Issue closed

username_1: Closing. 4+ years later, no application. |

deeplearning4j/deeplearning4j | 139298426 | Title: Word2Vec:loadTxtVectors using input Stream

Question:

username_0: Hi all,

It would be convenient to be able to load a Word2Vec model from an input stream instead of a File only.
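To illustrate the request (in Python, not DL4J's Java API): a loader that takes any text stream instead of a file path lets callers pass an open file, an in-memory buffer, or a decompressing wrapper. `load_txt_vectors` is a hypothetical name, and skipping an optional `<vocab_size> <dimensions>` header line is an assumption about the input files.

```python
from typing import Dict, List, TextIO


def load_txt_vectors(stream: TextIO) -> Dict[str, List[float]]:
    """Parse word vectors in the plain-text word2vec format from any text stream."""
    vectors: Dict[str, List[float]] = {}
    for line_no, line in enumerate(stream):
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        # Some files start with a "<vocab_size> <dimensions>" header line.
        if line_no == 0 and len(parts) == 2 and all(p.isdigit() for p in parts):
            continue
        word, values = parts[0], parts[1:]
        vectors[word] = [float(v) for v in values]
    return vectors
```

The same function then works with `open(path)`, `io.StringIO(...)`, or `io.TextIOWrapper(gzip.open(path))`.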

Status: Issue closed

Answers:

username_1: Implemented: https://github.com/deeplearning4j/deeplearning4j/pull/1237

Will be merged into master a bit later. |

xylagbx/Picture | 548459944 | Title: Programmers, take note: 10 useful VS Code plugins

Question:

username_0: 提示:这些插件都可以在 Visual Studio Marketplace 上免费找到。

<https://marketplace.visualstudio.com/>

**0、Visual Studio Intellicode**

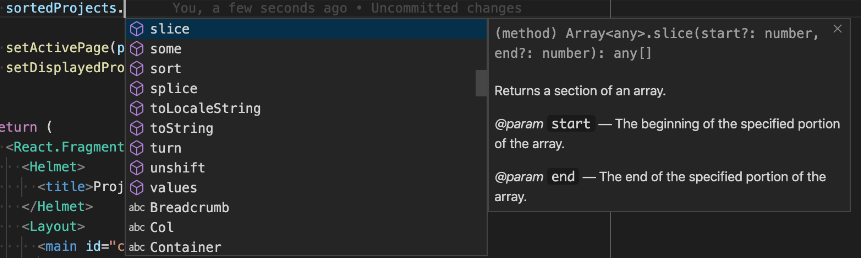

下载超过 320 万次的 Visual Studio Intellicode,是 VS Marketplace 上下载次数最多的插件之一。而且,在我看来,它是你能用到的最有用的插件之一。

这个插件旨在帮助开发人员提供智能的代码完成建议而构建的,并且已预先构建了对多种编程语言的支持。

借助机器学习技术和查找众多开源 GitHub 项目中使用的模式,该插件在编码时提供建议。

**1、 Git Blame**

有时候,你需要知道是谁写了某段代码。好吧,Git Blame 进行了救援,它会告诉你最后接触一行代码的人是谁。最重要的是,你可以看到它发生在哪个提交中。

这是非常好的信息,特别是当你使用诸如特性分支之类的东西时。在使用特性分支时,你可以使用分支名称来引用票据。因为 Git Blame 会告诉你哪一个提交 (也就是哪个分支) 的一行代码被更改了,所以你就会知道是哪一个票据导致了这种更改。这有助于你更好地了解更改背后的原因。

**2、Prettier**

Prettier 是开发人员在开发时需要遵循一组良好规则的最佳插件之一。它是一个引人注目的插件,让你可以利用 Prettier 软件包。它是一个强大的、自以为是的代码格式化程序,可以让开发人员以结构化的方式格式化他们的代码。

Prettier 与 JavaScript、TypeScript、HTML、CSS、Markdown、GraphQL 和其他现代工具一起使用,可以让你能够正确地格式化代码。

**3、JavaScript (ES6) Code Snippets**

每个略更新的网页开发人员可能都使用过各种 JavaScript 堆栈。无论你选择哪种框架,在不同的项目中键入相同的通用代码应该会减少你的工作流程。

JavaScript (ES6)Code Snippets 是一个方便的插件,它为空闲的开发人员提供了一些非常有用的 JavaScript 代码片段。它将标准的 JavaScript 调用绑定到简单的热键中。一旦你掌握了窍门,你的工作效率就会大大提高。

**4、Sass**

你可能已经猜到了,这个插件可以帮助正在使用样式表的开发人员。一旦开始为应用程序创建样式表,就一定要使用 Sass 插件。该插件支持缩进的 Sass 语法自动设置语法制导 、自动补全和格式化。

在样式方面,你肯定希望将此工具包含在你的工具集中。

**5、Path Intellisense**

Path Intellisense 是 Visual Studio 代码之一,它可以为你的开发提供有保证的生产力提升。如果你同时处理许多项目,使用了太多不同的技术,那么你肯定会需要一个可以帮你记住路径名的便捷工具。这个插件将为你节省大量的时间,否则将浪费在寻找正确的目录上。

Path Intellisence 最初是用于自动完成文件名的简单扩展,但它后来被证明是大多数开发人员工具集中的宝贵资产。

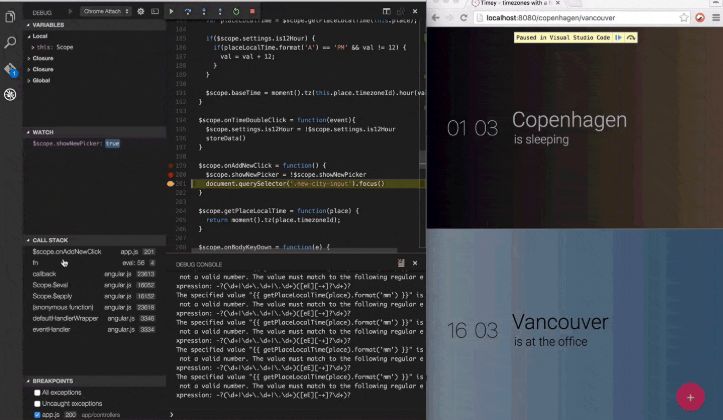

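**6. Debugger for Chrome**

If you need to debug JavaScript, you don't have to leave Visual Studio Code. Debugger for Chrome, released by Microsoft, lets you debug your source files directly in Visual Studio Code.

**7. ESLint**

The ESLint plugin integrates ESLint into Visual Studio Code. If you're not familiar with it, ESLint is a tool that statically analyzes your code to find problems quickly.

Most problems ESLint finds can be fixed automatically. ESLint's fixes are syntax-aware, so you won't run into the bugs that traditional find-and-replace algorithms introduce. Best of all, ESLint is highly customizable.

**8. SVG Viewer**

[Truncated]

There are plenty of custom plugins that change the sidebar's color scheme and icons. Some popular themes are free, for example One Monokai, One Dark Pro, and Material Icon.

Original English article: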

medium.com/better-programming/10-extremely-helpful-visual-studio-code-plugins-for-programmers-c8520a3dc4b8

<https://mp.weixin.qq.com/s/JgZpsqJScGY9LLffTskWzQ> |

MrTJP/ProjectRed | 442356151 | Title: Project Bench does not drop when broken

Question:

username_0: I did mine with a pickaxe.

Answers:

username_1: It drops fine. You need to use a pickaxe. You cannot harvest by hand. I will make it break slower if you do it by hand to make this more clear.

username_0: I did mine with a pickaxe.

username_1: No longer relevant in 1.15 port

Status: Issue closed

|

asmith4299/asmith4299 | 216401778 | Title: Jappont J6.7 Waterproofing Paint | Good waterproofing inside and outside the home | SONJAPPONT.COM | 0167 266 6789

Question:

username_0: Jappont J6.7 Waterproofing Paint | Good waterproofing inside and outside the home | SONJAPPONT.COM | 0167 266 6789

http://www.youtube.com/watch?v=qYzOVKsL2tQ

via Sơn Nhà Đẹp - Sơn Jappont http://www.youtube.com/channel/UC-GPvET-eDZMBwFU6pzWqyg

March 23, 2017 at 05:34PM |

yiisoft/yii2 | 245510418 | Title: new installation - getting error /vendor/bower/jquery/dist

Question:

username_0: ### What steps will reproduce the problem?

Installing a new instance

### What is the expected result?

The file or directory to be published does not exist: /media/sf_Web_share/www/solidcoinz.local/vendor/bower/jquery/dist

### What do you get instead?

Personally, this is the first time I've seen it, but the DevOps people at my company have been telling me about it for about two months.

I would try to find the problem myself, but I don't think I know this framework well enough.

### Additional info

Detected on every server: CentOS/Ubuntu/Debian

PHP: 7.0 or 7.1

Composer: 1.3.1

| Q | A |
| ---------------- | --- |
| Yii version | 2.0.12 |
| PHP version | 7.0/7.1 |
| Operating system | CentOS/Ubuntu/Debian |

Answers:

username_0: The global fxp/composer-asset-plugin was outdated. Sorry!

username_0: Please close it.

Status: Issue closed

username_2: And the solution was...?

username_3: @username_2 the solution is not to use `fxp/composer-asset-plugin`. Use `asset-packagist.org` repository instead

username_4: I am getting this behavior on a yii2-app-basic template project with a brand-new composer.json and fxp/composer-asset-plugin removed.

username_4: What a mess. For whoever else finds this post, if you are upgrading an existing app by overwriting an old composer.json with the latest one (as I did) you need to add aliases to your config/web.php for the following:

```php
'aliases' => [
    '@bower' => '@vendor/bower-asset',
    '@npm'   => '@vendor/npm-asset',
]
```

https://github.com/yiisoft/yii2/issues/14324#issuecomment-311260179
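The aliases matter because the two installation routes put jQuery in different places. A small Python sketch for checking which layout a project actually has: `vendor/bower/jquery/dist` is the path from the error message, and `vendor/bower-asset/jquery/dist` is where Composer places the `bower-asset/jquery` package from asset-packagist.org. `jquery_dist_locations` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict


def jquery_dist_locations(vendor_dir: str) -> Dict[str, bool]:
    """Report which of the two asset layouts contains jQuery's dist folder."""
    vendor = Path(vendor_dir)
    return {
        # The path named in the "file or directory ... does not exist" error.
        "bower": (vendor / "bower" / "jquery" / "dist").is_dir(),
        # Where Composer installs the bower-asset/jquery package.
        "bower-asset": (vendor / "bower-asset" / "jquery" / "dist").is_dir(),
    }
```

If only `bower-asset` is present, the `@bower` alias above is what points Yii at it.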

username_5: I'm surprised that this problem has been coming up reliably for about four years, yet every time the issue gets closed (and this must be the seventh thread I've read on the Yii issues list alone) and the answer is always to refer to someone else's solution, even though that solution is followed by posts saying it didn't work. Everyone appears very hung up on the difference between `bower` and `bower-asset`, which I get, but my problem is different: I now have both directories, the aliases, fxp-whatever and asset-packagist blah, and the error still says I'm missing `jquery` (which I am). How on earth do I get `jquery` into either of the directories?

username_5: I guess, to be fair, I now have it working, but with so many solutions offered to me, and so many (probably conflicting) changes, I'm really sorry but I can't say which change helped. My best advice is that it involves:

- updating to the latest fxp thing (no idea how you find out what version it is or what it does; I just randomly kept going up until it didn't work, and 1.4.2 was the last one),

- add the alias suggestion above into the top level of the config array,

- don't use the "replace" suggestion in one of the other accepted answers,

- delete the root vendor directory (it never came back, so not sure where it came from in the first place),

- rename web.php to main.php,

- enable fxp and asset-packagist,

- rm your existing vendor directory

- rm composer.lock,

- get a GitHub key and

- download from the world's slowest download server.

Chuck in a few `apachectl graceful` runs and you might just get there. I was going to attach a composer.json file in case it helped anyone, but apparently GitHub forums don't allow it - really 😞

So here it is as text. Please, I'm happy to receive constructive feedback, but it has turned into a dog's breakfast:

<pre>{
    "name": "yiisoft/yii2-app-basic",
    "description": "Yii 2 Basic Project Template",
    "keywords": ["yii2", "framework", "basic", "project template"],
    "homepage": "http://www.yiiframework.com/",
    "type": "project",
    "license": "BSD-3-Clause",
    "support": {
        "issues": "https://github.com/yiisoft/yii2/issues?state=open",
        "forum": "http://www.yiiframework.com/forum/",
        "wiki": "http://www.yiiframework.com/wiki/",
        "irc": "irc://irc.freenode.net/yii",
        "source": "https://github.com/yiisoft/yii2"
    },
    "minimum-stability": "stable",
    "require": {
        "php": ">=5.4.0",
        "yiisoft/yii2": "~2.0.14",
        "yiisoft/yii2-bootstrap": "~2.0.0",
        "yiisoft/yii2-jui": "*",
        "aws/aws-sdk-php": "^3.2"
    },
    "require-dev": {
        "yiisoft/yii2-debug": "~2.0.0",
        "yiisoft/yii2-gii": "~2.0.0",
        "yiisoft/yii2-faker": "~2.0.0"
    },
    "config": {
        "process-timeout": 1800,
        "fxp-asset": {
            "enabled": true
        }
    },
    "scripts": {
        "post-install-cmd": [
            "yii\\composer\\Installer::postInstall"
        ],
        "post-create-project-cmd": [
            "yii\\composer\\Installer::postCreateProject",
            "yii\\composer\\Installer::postInstall"
        ]
    },
    "extra": {
        "yii\\composer\\Installer::postCreateProject": {
[Truncated]
                {
                    "runtime": "0777",
                    "web/assets": "0777",
                    "yii": "0755"
                }
            ]
        },
        "yii\\composer\\Installer::postInstall": {
            "generateCookieValidationKey": [
                "config/web.php"
            ]
        }
    },
    "repositories": [
        {
            "type": "composer",
            "url": "https://asset-packagist.org"
        }
    ]
}</pre>

username_3: All you needed to make it work was `asset-packagist.org` in the `repositories` section of `composer.json`.
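That one change can also be scripted. A minimal Python sketch, assuming `repositories` is a JSON array as in the composer.json above (Composer also accepts an object there, which this sketch does not handle); `add_asset_packagist` is a hypothetical helper.

```python
import json

ASSET_PACKAGIST = {"type": "composer", "url": "https://asset-packagist.org"}


def add_asset_packagist(composer: dict) -> dict:
    """Return a copy of a composer.json mapping with asset-packagist.org in `repositories`."""
    updated = json.loads(json.dumps(composer))  # deep copy of plain JSON data
    repos = updated.setdefault("repositories", [])
    # Only append the repository if it is not already listed.
    if not any(r.get("url") == ASSET_PACKAGIST["url"] for r in repos):
        repos.append(dict(ASSET_PACKAGIST))
    return updated
```

Load the file with `json.load`, write the result back with `json.dump(..., indent=4)`, and then run `composer update`.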

username_3: It is the best option until bower-asset dependencies (like jQuery) are removed from the Yii core, and the core team is already working on that. It seems `yiisoft/yii2 >= 2.1` will be separated from those dependencies, and we'll be able to create REST APIs without pulling in all those frontend libraries. |

Ragee23/f1-3-c2p1-colmar-academy | 342590363 | Title: Notes on CSS

Question:

username_0: Below I've highlighted a few areas where we can improve the CSS:

**Indentation**

Throughout the file there are a few areas where indentation is a little off. To get started, take a look at the very beginning of the file where we open a new media query but do not indent the things that it contains.

**More CSS breakpoints (media queries)**

Right now, the site gets a little jumbled as the size of the window changes. We can combat this by setting more breakpoints at different max-widths, so as to adjust the sizes and arrangement of our elements as the window grows or shrinks. For example, one might want breakpoints at `450px`, `700px`, `900px`, and `1100px`.

**Class selector overuse**

As mentioned in #1, we overuse classes that could otherwise be consolidated into one class that styles more than one element. This will keep us from having to repeat a lot of the same selectors throughout the CSS, making our life a bit easier. |

ocornut/imgui | 95922827 | Title: OpenGL3 example broken on OSX

Question:

username_0: The commit e3b9a618839bda97e5bec814172185f3667e2324 seems to have broken rendering on OS X.

On linux everything works as expected, but OS X throws `GL_INVALID_OPERATION` for the `glDrawElements` on L91 and therefore nothing is rendered.

I suspect this is due to the OpenGL driver being more strict on OS X, because with a GL core context, client-side arrays for the `indices` parameter of `glDrawElements` and `glDrawElementsInstanced` are explicitly disallowed.

Answers:

username_1: Do you know what would be a good fix? I am not really familiar with OS X or OpenGL. Maybe the iOS example provides a better base?

username_0: Just did some more testing and the specified commit is not the problem, the problem was introduced by the use of indices.

The solution would be to load the indices into a buffer as well and bind it to `GL_ELEMENT_ARRAY_BUFFER`. So essentially, the indices have to be streamed to an index buffer in the same way the vertex data is streamed to the vertex buffers.

username_0: I don't have much time to spare just now, but I can try to get a pull request done in the next couple of days if necessary.

Status: Issue closed

|

probonopd/go-appimage | 1118456043 | Title: Says "AppImage Added", but that doesn't seem to be the case

Question:

username_0: I'm running a fresh install of Ubuntu 21.10, I downloaded AppImaged (-650-aarch64.AppImage) and, as soon as the download completed, I got a notification that said, "AppImage Added" (Paraphrasing) and then I moved it to ~/.local/bin/ and I got a notification saying, "AppImage Removed" and then "AppImage Added". The same thing happens with any other AppImages I'm downloading and moving, BUT then when I try to find it in the app search, these Apps don't come up. I made sure they're set to executable, and if I run them, they seem to work, but I thought they were supposed to show up in the App Menu... isn't that the point?

Any advice is appreciated!

Answers:

username_1: Yes, they should show up in the menu.

Try logging out from your desktop and logging in again.

Also try without moving the AppImages around.

username_0: OK, so I rebooted and downloaded another AppImage, but got no "AppImage added" notification when the download completed. I tried moving it to ~/.local/bin/ again and got no removed/added notifications. So I went to ~/.local/bin/ and deleted appimaged-650-aarch64.AppImage and re-downloaded it. This time, no notification when that download completed either, or upon moving it even though I got them the first time. 😕

username_0: I'm an idiot. I first tried the x86_64 version and mistakenly read the notification, "You're not running one of the approved live versions...." as an error message, so I figured I had the architecture wrong. Then I tried the aarch64 version, but in reality, it was the first version still working (kinda) until I rebooted. I just reinstalled the x86_64 version and all is working as it should.

Sorry f

Status: Issue closed

username_1: :100: glad it worked out! |

red-hat-storage/ocs-ci | 528564373 | Title: Installation fails on missing Local Storage CSV

Question:

username_0: )

E AssertionError: There are more than one local storage CSVs: []

ocs_ci/ocs/resources/ocs.py:178: AssertionError

================ 1 failed, 265 deselected in 2704.84s (0:45:04) ================

```
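The assertion above reports "more than one" Local Storage CSV while the list it prints is empty, so the check apparently treats "none found" and "several found" the same way. A minimal sketch of a check that reports the two cases separately; `single_local_storage_csv` and the `local-storage-operator` name prefix are hypothetical, not ocs-ci's actual code.

```python
from typing import List


def single_local_storage_csv(csv_names: List[str]) -> str:
    """Return the one Local Storage CSV, failing with a message that matches the actual problem."""
    matches = [name for name in csv_names if name.startswith("local-storage-operator")]
    if not matches:
        raise AssertionError("No Local Storage CSV found")
    if len(matches) > 1:
        raise AssertionError(f"There is more than one Local Storage CSV: {matches}")
    return matches[0]
```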

Status: Issue closed |

inception-project/inception | 413364751 | Title: Optimize PNG images

Question:

username_0: **Describe the refactoring action**

Some of the PNG images are unnecessarily large in terms of file size and could be optimized.

**Expected benefit**

The checkout size of the website, which redundantly includes the documentation, would be smaller. The artifact size would also be reduced.

Status: Issue closed |

DDVTECH/mistserver | 69690359 | Title: sourcery usage in the (old) Makefile

Question:

username_0: In commit 9b6312c on April 2nd (switch to CMake), sourcery was changed from using stdout to taking an explicit output argument. The (old) Makefile, however, was not updated accordingly, so now a clean project build with good-old-Make fails due to an empty src/controller/server.html.h file (which should have been generated by sourcery).

In the same commit, the file embed.js.h was moved from src/ to src/output.

The fix is to remove the output redirect ">" from both sourcery invocations and to replace src/embed.js.h with src/output/embed.js.h in a few places in the Makefile ;-)

Also in the Makefile line 159 add src/io.cpp to the input list (this problem was introduced by commit d370ef4)

Status: Issue closed

Answers:

username_1: Thanks for looking into all this! The old Makefile was just kept there for backup purposes initially. We've since completely removed it as more and more things started breaking on it, and the cmake method is now the only way to compile. :-) |

lingow/BusWatch | 75897758 | Title: Add a service method to get the tracked units of each route

Question:

username_0: I added the method to the interface and to the implementation, but it still needs to actually be implemented.

On second thought, I'm going to request periodic updates of where the buses on each route are. In that case, the client already has the routes, but the information about the tracked buses changes, so I decided that unitPoints would no longer be part of the Route class; instead, they will be obtained through a call to the service.

What the server will have to do is, whenever check-ins and their check-in updates happen, store the most recent position for that check-in instance. When a getUnitPoints request is made, the server will look up all check-ins matching the provided routeId and return a list of LatLng with the most recent positions of the active check-ins.

Status: Issue closed


|

Javacord/Javacord | 950029534 | Title: Let methods that require a populated cache throw exceptions

Question:

username_0: As already discussed here https://github.com/Javacord/Javacord/pull/816#issuecomment-884374895 we should throw an error when someone tries to use methods like `getMembers` and the cache is disabled because of the GUILD_MEMBERS intent or the cache has not been activated through `new DiscordApiBuilder().setUserCacheEnabled(true)` |

cognoma/machine-learning | 242043050 | Title: Selecting the number of components returned by PCA

Question:

username_0: ```python
from sklearn.model_selection import StratifiedShuffleSplit

sss = StratifiedShuffleSplit(n_splits=100, test_size=0.1, random_state=0)
```

I'm thinking the next step should be creating a dataset that provides good coverage of the different query scenarios (#11), and performing `GridSearchCV` on these datasets, searching over a range of `n_components` to see how changing `n_components` affects performance (AUROC).

@username_3, @username_2, @username_1 feel free to comment now or we can discuss at tonight's meetup.

Answers:

username_1: Thanks Ryan, this sounds like a good plan. Because of the increased speed of the pipeline, I'm in favor of just upping the number of components we search over.

One thing that I will add is that we haven't optimized for the regularization strength yet (`alpha`). My guess is that the interplay between it and `n_components` is meaningful. I think it makes sense to optimize for them jointly.

The process would be the same:

- select several balanced and unbalanced genes
- optimize over a large range of values for `n_components` and `alpha`
- determine the max range for each parameter
- decide if we should limit the range based on a heuristic around class balance

I won't be there tonight, but I should be free to work on something this weekend.

username_0: Agreed. I would assume the interplay between `n_components` and `l1_ratio` would also be very important (an `l1_ratio` greater than 0 will include fewer features and will likely have a large impact on the optimal `n_components`). I'll put a reference to #56 here as well, as that issue discusses what `l1_ratio` to use.

username_2: @username_0 Thanks for opening this issue! I also think this is a must-do if we want to enable search over optimal `n_components` for Cognoma web interface. It is in line with my experience that unbalanced mutations usually have a smaller optimal `n_components`.

In order to limit the range of search for optimal `n_components`, we may need to experiment with a large variety of genes (including many balanced and many unbalanced) and a lot of values of `n_components`. Thus, we may be able to select a smaller common set of `n_components` values for search when users query our datasets.

I'm not sure it is possible, but it would be great if we could select a particular range of `n_components` based on the pos/neg ratio of each gene.

I will play around with dask-searchcv and see how much time / RAM it can save for the pipeline.

username_0: Sounds good. I evaluated the speed increase with dasksearchCV [here](https://github.com/cognoma/machine-learning/blob/master/explore/dask-searchCV/dask-searchCV.ipynb).

username_3: For the time being, before we get a better indication of the ideal PCA n_components by number of positives and negatives, I'd suggest expanding to something like `[10, 20, 50, 100, 250]`.

As per #56, I suggest sticking with the default `l1_ratio` but expanding the range of `alpha`. You can use `numpy.logspace` or `numpy.geomspace` to generate the `alpha` range.
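For the joint search over `n_components` and `alpha` discussed in this thread, the grid is just the Cartesian product of the two ranges. A stdlib sketch: `log_spaced` stands in for `numpy.logspace`, the `alpha` bounds are placeholders, and the keys assume pipeline steps named `pca` and `classify` (hypothetical names).

```python
from itertools import product
from typing import Dict, List


def log_spaced(start_exp: float, stop_exp: float, num: int) -> List[float]:
    """Stdlib stand-in for numpy.logspace(start_exp, stop_exp, num)."""
    step = (stop_exp - start_exp) / (num - 1)
    return [10 ** (start_exp + i * step) for i in range(num)]


N_COMPONENTS = [10, 20, 50, 100, 250]
ALPHAS = log_spaced(-3, 1, 5)  # 0.001 ... 10; placeholder bounds


def joint_grid() -> List[Dict[str, float]]:
    """Every (n_components, alpha) pair, so the two are tuned jointly."""
    return [{"pca__n_components": n, "classify__alpha": a}
            for n, a in product(N_COMPONENTS, ALPHAS)]
```

The result can be passed as `param_grid` to scikit-learn's `GridSearchCV` (or dask-searchcv's equivalent). Note that the grid grows multiplicatively, which is relevant to the RAM concerns raised later in the thread.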

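I also think we may want to switch to:

```python
from sklearn.model_selection import StratifiedShuffleSplit

# 100 random stratified splits, each holding out 10% of the samples
sss = StratifiedShuffleSplit(n_splits=100, test_size=0.1, random_state=0)
```

Non-repeated cross-validation is generally going to be too noisy for our purposes.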
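For intuition about what repeated stratified splitting does, here is a simplified stdlib sketch: each of the `n_splits` iterations draws a fresh random test set that keeps the class proportions, and averaging over many such splits is what reduces the noise. The helper name and toy labels are hypothetical, and scikit-learn's `StratifiedShuffleSplit` allocates test samples per class somewhat differently (it handles rounding across classes), so treat this as an illustration rather than a reimplementation.

```python
import random
from collections import defaultdict


def stratified_shuffle_splits(labels, n_splits=100, test_size=0.1, random_state=0):
    """Yield (train_idx, test_idx) pairs; each split keeps roughly the class proportions."""
    rng = random.Random(random_state)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    for _ in range(n_splits):
        train_idx, test_idx = [], []
        for indices in by_class.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            # Hold out the same fraction of every class (at least one sample each)
            n_test = max(1, round(test_size * len(shuffled)))
            test_idx.extend(shuffled[:n_test])
            train_idx.extend(shuffled[n_test:])
        yield sorted(train_idx), sorted(test_idx)


# Imbalanced toy labels: 20 positives, 180 negatives
labels = [1] * 20 + [0] * 180
splits = list(stratified_shuffle_splits(labels, n_splits=100, test_size=0.1))
```

Every test set here contains 2 positives and 18 negatives, so even a rare mutation is represented in each held-out partition.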

username_1: @username_3 when you say stick to the default `l1_ratio`, do you mean the `sklearn` default of 0.15? I made a comment in #56 mentioning that we may want to consider just using ridge regression, since we have switched from `SelectKBest` to `PCA`. The number of features we are using has been reduced significantly, so I'm not sure what we get from sparsity in the classifier.

username_3: That's what I was thinking (`l1_ratio = 0.15`). But your logic for using ridge (`l1_ratio = 0`) when we're using PCA makes sense to me. I don't have a strong opinion, since I can see some principal components being totally irrelevant to the classification task and hence having coefficients that are truly zero. I think we should pick whichever value gives us the most usable / user-friendly results. For example, with elastic net or lasso you may get models where every coefficient is zero, which will likely confuse the user as to what's going on.
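The all-zero case comes from the L1 part of the penalty. A common way to apply an L1 penalty is soft-thresholding: each coefficient moves toward zero by a fixed amount and is clipped at exactly zero, so a strong enough penalty zeroes every coefficient. The sketch below shows that mechanism in isolation with made-up coefficients and thresholds; it is not a reproduction of `SGDClassifier`'s internal update rule.

```python
def soft_threshold(coefs, threshold):
    """Proximal step for an L1 penalty: shrink each value toward 0 by `threshold`, clipping at 0."""
    shrunk = []
    for w in coefs:
        magnitude = max(abs(w) - threshold, 0.0)
        shrunk.append(magnitude if w >= 0 else -magnitude)
    return shrunk


# Hypothetical coefficients for five principal components
coefs = [0.8, -0.3, 0.05, -0.02, 0.4]

mild = soft_threshold(coefs, 0.1)    # small coefficients become exactly zero
strong = soft_threshold(coefs, 1.0)  # every coefficient becomes zero
print(mild, strong)
```

By contrast, a pure L2 (ridge) penalty scales coefficients toward zero without setting them exactly to zero, which is one reason `l1_ratio = 0` avoids empty models.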

username_1: I'm starting to run into RAM bloating issues again with the switch to `StratifiedShuffleSplit` and increasing the parameter search space. It balloons up quickly even with just 10 splits. I think we are going to need to move this to EC2.

username_0: Just as an FYI - @dcgoss is getting [ml-workers](https://github.com/cognoma/ml-workers) pretty close to up and running. This is the repo where the notebooks will be run in production... on EC2 :smile:

I'm also working on creating a benchmark dataset that provides good coverage of the different query scenarios. I'll post or PR this once it's further along.

username_1: That is with `l1_ratio=0` and using dasksearch.

With respect to (2), it may not be a data leak, but it could bias the classifier. There is a significant difference in performance between models with different `n_components`, so this could alter which model is chosen.

username_0: True, but I'm not suggesting we stop searching over a range of `n_components`. I'm thinking we would perform PCA once on the training set and use the PCA components as our `X Train`. We would then use something like `SelectKBest` to search over how many features (PCA components) to include in the model, so instead of performing PCA within CV, it would already have been performed on the whole training set.

username_1: Using `SelectKBest` is in essence doing the same thing as iterating over a variety of `n_components` after running the decomposition. I need to think about it a little more, but I believe you might still be leaking information here -- from the train partition of the CV fold to the test partition of the CV fold. You would get different principal components if you perform the decomp within cross-validation, so it may be a little less robust.

username_0: I agree, but this may not have much of an effect, and I don't think it's a big deal if our training `AUROC` score is slightly inflated as long as the testing `AUROC` score is still accurate.

I also agree that the cross-validation will be less robust this way but if it lets us search over a larger range of `n_components` I think that will far outweigh the small loss of robustness.

P.S. I know this would be a fairly large departure from the way the notebook is currently set up so I'm not saying we _should_ do it this way I'm just thinking about ways to get this thing to run.

username_2: I also agree that we should fit a `PCA` instance for each CV fold. A fitted PCA instance is part of the final model, as is the `SGDClassifier`.
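The difference between fitting a transform inside each fold and fitting it once up front can be sketched without PCA itself. Below, per-column mean-centering stands in for PCA (PCA also learns column means during `fit`); the data and helper names are hypothetical. Fitting on the training fold only means the held-out row never influences the learned parameters, whereas fitting on all rows first lets it leak in.

```python
from statistics import mean


def fit_column_means(rows):
    """'Fit' step: learn one mean per column from the given rows only."""
    return [mean(col) for col in zip(*rows)]


def center(rows, means):
    """'Transform' step: subtract previously learned column means."""
    return [[x - m for x, m in zip(row, means)] for row in rows]


def transform_fold(X, train_idx, test_idx):
    """Fit on the training fold, then apply that same fitted transform to both partitions."""
    train = [X[i] for i in train_idx]
    test = [X[i] for i in test_idx]
    means = fit_column_means(train)  # the test rows are never seen here
    return center(train, means), center(test, means), means


X = [[0.0, 1.0], [2.0, 3.0], [10.0, 20.0]]
train_c, test_c, fold_means = transform_fold(X, train_idx=[0, 1], test_idx=[2])
leaky_means = fit_column_means(X)  # fitted on all rows, including the held-out one
print(fold_means, leaky_means, test_c)
```

In scikit-learn, the usual way to get this behaviour for a real `PCA` is to put it and the classifier in a `Pipeline` and pass the pipeline to the grid search, so the whole pipeline is refitted on each training fold.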

I did PCA together with scaling before the grid search in [#71](https://github.com/cognoma/machine-learning/blob/master/algorithms/RIT1-PCA-username_2.ipynb), only because fitting PCA within each fold was not feasible with the computing resources I had. Still, we could have a separate notebook that evaluates the loss of robustness.

username_3: A few notes.

The case @username_1 describes where `n_components = 640` (or more) is optimal will be relatively uncommon, since TP53 is the most mutated gene. In general, there will be fewer positives. I'm okay with not always getting the best model in these extreme cases. The researcher could always iterate by adding larger `n_components` values.

We used to perform option 2 mentioned by @username_0, but switched to the more correct option 3. I agree that in most cases option 2 will cause little damage and give a large speedup. Furthermore, the testing values will still be correct; only the cross-validation scores will be affected. However, I'd prefer to use the theoretically correct method, since we don't want to teach users incorrect methods. We'll have to strike a delicate balance: a grid search that evaluates enough parameter combinations... but not too many.
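The cost side of that balance is multiplicative: every parameter combination is fitted once per split. A quick count, using the `n_components` list suggested earlier in the thread, a hypothetical 9-value `alpha` grid, and 100 splits (a grid search that refits the best combination on the full training set adds one more fit):

```python
from itertools import product

n_components_grid = [10, 20, 50, 100, 250]  # suggested earlier in this thread
alpha_grid = [10.0 ** (e / 2) for e in range(-6, 3)]  # hypothetical: 1e-3 ... 1e1
n_splits = 100

combinations = list(product(n_components_grid, alpha_grid))
total_fits = len(combinations) * n_splits
print(len(combinations), total_fits)  # 45 4500
```

Adding one more `n_components` value here costs another 900 fits, which is why trimming the grid by a heuristic matters.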

username_0: Closed by #113

We will be using a function to select the number of components based on the number of positive samples in the query (or the number of negatives if it is a rare instance with more positives than negatives). The current function looks like:

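```python
def select_n_components(num_pos, num_neg):
    # Function name is illustrative; base the choice on the smaller class
    # (usually the positives)
    min_class = min(num_pos, num_neg)
    if min_class > 500:
        n_components = 100
    elif min_class > 250:
        n_components = 50
    else:
        n_components = 30
    return n_components
```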

Status: Issue closed

|

bojanrajkovic/pingu | 237095798 | Title: Implement alpha separation

Question:

username_0: Section 4.3.2 of the standard says that encoders should, as part of encoding, implement alpha separation as follows: "If all alpha samples in a reference image have the maximum value, then the alpha channel may be omitted, resulting in an equivalent image that can be encoded more compactly." |
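A minimal sketch of the check the PNG standard describes, assuming decoded pixels are available as RGBA tuples of integer samples and the bit depth is known (the function name and pixel layout are hypothetical, not pingu's API). The maximum sample value at bit depth `b` is `2**b - 1` (255 for 8-bit, 65535 for 16-bit); only if every alpha sample equals it can the alpha channel be dropped.

```python
def separate_alpha(pixels, bit_depth):
    """Return RGB pixels if every alpha sample is fully opaque, else None.

    `pixels` is a list of (r, g, b, a) tuples with samples in 0..2**bit_depth - 1.
    """
    max_value = (1 << bit_depth) - 1
    if all(a == max_value for _, _, _, a in pixels):
        return [(r, g, b) for r, g, b, _ in pixels]
    return None  # some pixel is (partly) transparent: keep the alpha channel


print(separate_alpha([(1, 2, 3, 255), (4, 5, 6, 255)], 8))
```

The same check applies to greyscale-with-alpha images (colour type 4), where dropping alpha yields plain greyscale.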

ElinamLLC/SharpVectors | 277615084 | Title: GDI broken?

Question:

username_0: I've been looking at the GDI renderer and hit a problem, so I tried the samples and they give the same error.

Inheritance security rules violated while overriding member: 'SharpVectors.Renderers.Gdi.GdiGraphicsRenderer.get_Window()'. Security accessibility of the overriding method must match the security accessibility of the method being overriden.

In GdiTextSVGViewer sample the error is when creating the SvgPictureBox control.

#### This work item was migrated from CodePlex

CodePlex work item ID: '1749'

Vote count: '1'

Answers:

username_0: [drmcw@2014/05/16]

Well, I dropped the build from .NET 4.5 down to 3.5 and had to comment out

```

settings.DtdProcessing = DtdProcessing.Parse;

```

in SVGDocument, as it isn't available in .NET 3.5, and it works now. I would like to know whether DTD parsing is necessary and what security change in 4.5 was causing the problem, though!

Status: Issue closed

|

lerna/lerna | 874199630 | Title: Unable to update workspace package-lock.json after `version` on npm@7

Question:

username_0: I manage two mono-repo projects ([PixiJS](https://github.com/pixijs/pixi.js) and [PixiJS Filters](https://github.com/pixijs/filters)) which are both experiencing flavors of the same issue after upgrading to npm@7 workspaces. The package-lock file is not updated after calling `lerna version`. This creates an unclean git environment when CI attempts to publish resulting in a blocked publish ([see example](https://github.com/pixijs/filters/runs/2251151629?check_suite_focus=true#step:13:19)).

## Expected Behavior

After doing `lerna version`, the package-lock.json should be updated to reflect the version number bumps in the packages and included in the tag.

## Current Behavior

package-lock.json is not updated, and version numbers on workspace packages reflect the previous versions. Subsequent `npm install`s create a change in the package-lock.json.
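A rough way to detect the mismatch described above, assuming the npm 7 lockfile format (`lockfileVersion` 2), where the `packages` map is keyed by path and each workspace package appears under its folder path with a `version` field. The helper name and toy data below are hypothetical; in practice you would load both files with `json.load`.

```python
def stale_workspace_versions(lock, manifests):
    """Compare workspace versions recorded in package-lock.json with each package.json.

    lock:      parsed package-lock.json (npm 7 / lockfileVersion 2)
    manifests: {workspace path: parsed package.json}, e.g. {"packages/foo": {...}}
    Returns {path: (locked_version, manifest_version)} for every mismatch.
    """
    locked = lock.get("packages", {})
    stale = {}
    for path, manifest in manifests.items():
        locked_version = locked.get(path, {}).get("version")
        if locked_version != manifest.get("version"):
            stale[path] = (locked_version, manifest.get("version"))
    return stale


# Toy data: the version bump updated package.json to 3.1.0, but the lock still says 3.0.0
lock = {
    "lockfileVersion": 2,
    "packages": {
        "": {"name": "root", "workspaces": ["packages/*"]},
        "packages/filter-example": {"name": "@example/filter-example", "version": "3.0.0"},
    },
}
manifests = {"packages/filter-example": {"name": "@example/filter-example", "version": "3.1.0"}}
print(stale_workspace_versions(lock, manifests))
```

A check like this could run in CI before publishing, to fail early instead of leaving an unclean working tree.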

## Possible Solution

I tried unsuccessfully add a hook to bump the lock:

`"postversion": "npm i --package-lock-only"`

But this errored `Failed to exec postversion script`

Maybe having a `pretag` lifecycle hook would help to add this?

## Steps to Reproduce (for bugs)

1. `git clone git@github.com:pixijs/filters.git`

2. `npm install`

3. `npm run release -- --no-push --force-publish` (notice no package-lock.json changes in the tag)

4. `npm install` (notice package-lock.json is updated)


## Your Environment

| Executable | Version |
| ---: | :--- |
| `lerna --version` | v4.0.0 & v3.13.4 |
| `npm --version` | v7.11.2 |
| `yarn --version` | n/a |
| `node --version` | v12.18.1 |

| OS | Version |
| --- | --- |
| macOS Big Sur | 11.2.3 |

Answers:

username_0: I have a workaround that is okay, in case this is helpful for anyone. The idea is to amend the release commit after updating package-lock.json.

Previous `release` script:

```json

"release": "lerna version",

```

Updated `release` script:

```json

"release": "lerna version --no-push",

"postrelease": "npm i --package-lock-only && git commit -a --amend --no-edit && git push && git push --tags",

```

username_0: Sharing my workaround. This, however, should probably be Lerna's responsibility to play well with npm 7.

### Before

```shell

lerna version

```

### After

```shell

# Let Lerna bump versions only: no commit, tag, or push
lerna version --no-push --no-git-tag-version
# Get the new version from lerna.json to use as the tag name
tag="v$(node -e "process.stdout.write(require('./lerna.json').version)")"
# Update the lock file here
npm i --package-lock-only
# Auto-commit and auto-tag like usual
git commit --all -m "${tag}"
git tag -a "${tag}" -m "${tag}"
git push --tags
git push

```

username_1: @username_0 I realised I'm not hitting your error because I'm not (yet) including a private package as a devDependency. I expect to do that shortly, so I took a look at your repro repo.

Although it does look like you've hit an npm regression, everything(?) seems to work if you use a relative `file:` specifier instead of the version string when including your private devDependency.