| repo_name | issue_id | text |
|---|---|---|

w3c/ttml1 | 115104257 | Title: region attribute unspecified

Question:

username_0: Another issue that has concerned me lately is what it means when the region attribute is unspecified. According to the spec, this simply means the sub-tree does not show up in the rendering, which is fine and simple to understand and implement; however, I'm wondering whether it should instead have the special meaning of 'any region'.

For example:

```xml
<body>
  <div region="r1">
    <p tts:color="red" begin="0s" end="10s">This text must be red.</p>
  </div>
  <div region="r2">
    <p tts:color="blue" begin="0s" end="10s">This text must be blue.</p>
  </div>
</body>
```

In this case, the intent is clearly that div 1 shows up in r1 and div 2 shows up in r2; however, without specifying `<body region="r1 r2">` this won't happen. In complex scenarios I can imagine the number of region attributes getting quite tedious.

Also, if as we have discussed, we are going to allow the idea of an anonymous default region, which I think most of the test suite now relies on, we will need some sort of rule like this anyway in order to get content to be targeted at that anonymous region.

We could still allow an explicit declaration of no region, `<body region="">`, to mean that the content shows up in no region at all, retaining the current semantics.
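To make the two readings concrete, here is a simplified Python model of region selection along the path from `body` down to a content element. It is a hypothetical sketch, not TTML's region association algorithm: it treats each `region` value as a space-separated list (as in the `<body region="r1 r2">` example), uses `None` for an unspecified attribute and `""` for the explicit "no region" declaration, and assumes a descendant can only narrow the regions selected by its ancestors.

```python
from typing import Optional


def visible_regions(path: list[Optional[str]], all_regions: list[str], proposed: bool) -> set[str]:
    """Regions a content element can appear in, given the region values from body downwards.

    None means the attribute is unspecified; "" means "explicitly no region".
    """
    root, *rest = path
    if root is None:
        # Current reading: unspecified on body means "not rendered".
        # Proposed reading: unspecified means "any region".
        allowed = set(all_regions) if proposed else set()
    else:
        allowed = set(root.split())  # "".split() == [] -> no region at all
    for value in rest:
        if value is not None:  # unspecified descendants inherit what is in force
            allowed &= set(value.split())
    return allowed
```

Under the current reading, the example above renders nothing unless `body` lists both regions; under the proposed one, each `div` selects its own region.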

(raised by <NAME> on 2008-12-11)

From tracker issue http://www.w3.org/AudioVideo/TT/tracker/issues/42

Status: Issue closed |

getgauge/gauge | 217125426 | Title: gauge --init java_maven is initializing with an old Gauge-java and giving a Protobuf exception

Question:

username_0: **Expected behavior**

I should be able to run the tests without an error.

```

mvn clean test

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building art1 1.0-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ art1 ---

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ art1 ---

[WARNING] Using platform encoding (UTF-8 actually) to copy filtered resources, i.e. build is platform dependent!

[INFO] skip non existing resourceDirectory /Users/<username>/work/test/art1/src/main/resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ art1 ---

[INFO] No sources to compile

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ art1 ---

[WARNING] Using platform encoding (UTF-8 actually) to copy filtered resources, i.e. build is platform dependent!

[INFO] skip non existing resourceDirectory /Users/<username>/work/test/art1/src/test/resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ art1 ---

[INFO] Changes detected - recompiling the module!

[WARNING] File encoding has not been set, using platform encoding UTF-8, i.e. build is platform dependent!

[INFO] Compiling 1 source file to /Users/<username>/work/test/art1/target/test-classes

[INFO]

[INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ art1 ---

[INFO]

[INFO] --- gauge-maven-plugin:1.1.0:execute (default) @ art1 ---

[Truncated]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException

```

**Observation**

The template generates a pom file dependency on an old gauge-java version:

```

<dependency>

<groupId>com.thoughtworks.gauge</groupId>

<artifactId>gauge-java</artifactId>

<version>0.3.4</version>

<scope>test</scope>

</dependency>

```

Answers:

username_1: @username_0 could you retest this, please? I've just created a new template and it points to 0.6.0, which should work with 0.8+.
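To check an existing project for the outdated dependency shown above, a small script could read the `gauge-java` version from `pom.xml`. This is a hypothetical Python sketch using only the standard library; the 0.6.0 threshold is taken from the comment above, and the version parsing assumes plain numeric `X.Y.Z` versions (qualifiers such as `-SNAPSHOT` would need extra handling).

```python
import xml.etree.ElementTree as ET
from typing import Optional

MIN_VERSION = (0, 6, 0)  # from the maintainer's comment; an assumption, not a documented minimum


def _local(tag: str) -> str:
    # Strip a "{namespace}" prefix, since real pom files usually declare the Maven POM namespace.
    return tag.rsplit("}", 1)[-1]


def gauge_java_version(pom_xml: str) -> Optional[str]:
    """Return the version of the com.thoughtworks.gauge:gauge-java dependency, if any."""
    root = ET.fromstring(pom_xml)
    for dep in root.iter():
        if _local(dep.tag) != "dependency":
            continue
        fields = {_local(child.tag): (child.text or "").strip() for child in dep}
        if fields.get("groupId") == "com.thoughtworks.gauge" and fields.get("artifactId") == "gauge-java":
            return fields.get("version")
    return None


def is_outdated(version: str) -> bool:
    parts = tuple(int(p) for p in version.split(".")[:3])
    return parts < MIN_VERSION
```

For the generated pom above, `gauge_java_version` returns `"0.3.4"` and `is_outdated("0.3.4")` is true.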

Status: Issue closed

|

freeCodeCamp/testable-projects-fcc | 587079552 | Title: Test environment wont load tests

Question:

username_0:

I just switched to another computer, and now I have a problem with the test environment.

#### Issue Description

I added `<script src="https://cdn.freecodecamp.org/testable-projects-fcc/v1/bundle.js"></script>` to my site and it loads, but when I try to load the tests, I only get the message "load tests", and in the background of the field I can see the message "#mocha div missing".

It worked on my MacBook, but now I'm using my older iMac and it doesn't work there. I checked on my MacBook, and it still seems to work there.

- Google Chrome version 80.0.3987.149

- OS X Yosemite 10.10.5

- iMac

- Scripting in Atom

I haven't really started my script yet, so this is all of it:

```html
<html>
<h1>Hello World</h1>
<script src="https://cdn.freecodecamp.org/testable-projects-fcc/v1/bundle.js"></script>
</html>
```


Answers:

username_0: I used Firefox instead and it works there... strange.

Status: Issue closed

|

libsdl-org/sdlwiki | 847683808 | Title: request to tweak book urls in wiki

Question:

username_0: https://github.com/libsdl-org/SDL/wiki/Books

- Would appreciate editing the title for `Learn C++ By Making Games` book to hide the `target="blank"`

- Would appreciate editing the title for `Game Programming in C++: Start to Finish` to also hide the `target="blank"`

- For clarity / helpfulness to anyone seeing these entries, can they be tagged somehow as `SDL 1.2` material?

Answers:

username_1: This is resolved now.

username_0: thanks! |

Teststandees/hcal_teststand_scripts | 107379931 | Title: QIE Status

Question:

username_0:

```
get HF1-2-iBot_StatusReg_PLL320MHzLock # 1
```

Any value different from the example above indicates bad behavior.

These get commands should be included in the system setup script.

Values could be bad if the power is enabled after doing a bkp_reset.

They could explain the bad behavior seen last week.

Tullio
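Since these get commands are meant to go into the system setup script, a check along these lines could flag bad values automatically. This is a hypothetical Python sketch, not part of the teststand scripts: it assumes the output format matches the single example line above (`get <register> # <value>`) and that the expected values are supplied by hand.

```python
import re

# Assumed output format, modeled on the example line: "get <register-name> # <value>"
LINE_RE = re.compile(r"^get\s+(\S+)\s*#\s*(\S+)\s*$")

# Expected values, filled in by hand; only the register from the issue is listed here.
EXPECTED = {
    "HF1-2-iBot_StatusReg_PLL320MHzLock": "1",
}


def find_bad_registers(output: str, expected: dict[str, str]) -> dict[str, str]:
    """Return {register: observed_value} for every register whose value differs from expected."""
    bad = {}
    for line in output.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # ignore lines that are not get results
        name, value = match.groups()
        if name in expected and value != expected[name]:
            bad[name] = value
    return bad
```

An empty result means every checked register matched; anything else points to the bad behavior described above.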

|

salesforce/design-system-react | 873927312 | Title: How to set up with CRA 4.x: the documentation has no steps for setting up with the latest CRA version.

Answers:

username_1: @username_0 and anyone else who may be struggling with this, the following worked for me using the latest version of CRA:

1. Follow all [the steps](https://github.com/salesforce/design-system-react/blob/master/docs/create-react-app-2x.md#step-by-step-instruction) in the CRA 2.x guide, but also add `react-app-rewire-babel-loader` to your dev dependencies

2. Replace the contents of config-overrides.js with the following:

```js

const path = require("path");

const fs = require("fs");

const rewireBabelLoader = require("react-app-rewire-babel-loader");

const appDirectory = fs.realpathSync(process.cwd());

const resolveApp = (relativePath) => path.resolve(appDirectory, relativePath);

module.exports = function override(config, env) {

config = rewireBabelLoader.include(

config,

resolveApp("node_modules/@salesforce/design-system-react"),

);

return config;

};

``` |

jrwaltz/intro-data-capstone-biodiversity | 302539876 | Title: Summary

Question:

username_0: Great work! I probably won’t have much to say here because you pretty much nailed it all the way through.

Your Python work was excellent and very readable. Perhaps because you copy-pasted your code from the Codecademy platform, some of it is duplicated.

Presentation: I think your graphs look excellent, but they don't belong on the last slides under a `Graphs Created` title. Embed your plots on the slides where you describe the data. I liked the way you described your results, including p-values and tables. Your recommendations are reasonable.

Excellent work overall. |

griggheo/ansible-consul-template | 125793823 | Title: Tag releases

Question:

username_0: Would you mind tagging a release so that I can use this role in a [Galaxy requirements file](https://docs.ansible.com/ansible/galaxy.html#advanced-control-over-role-requirements-files) without having to use master?

Answers:

username_1: Tagged release 1.0.0 code name "avocet" (I like birds :)

https://github.com/username_1/ansible-consul-template/releases/tag/1.0.0

Status: Issue closed

|

desktop/desktop | 493531504 | Title: rebase modal window resizes sporadically

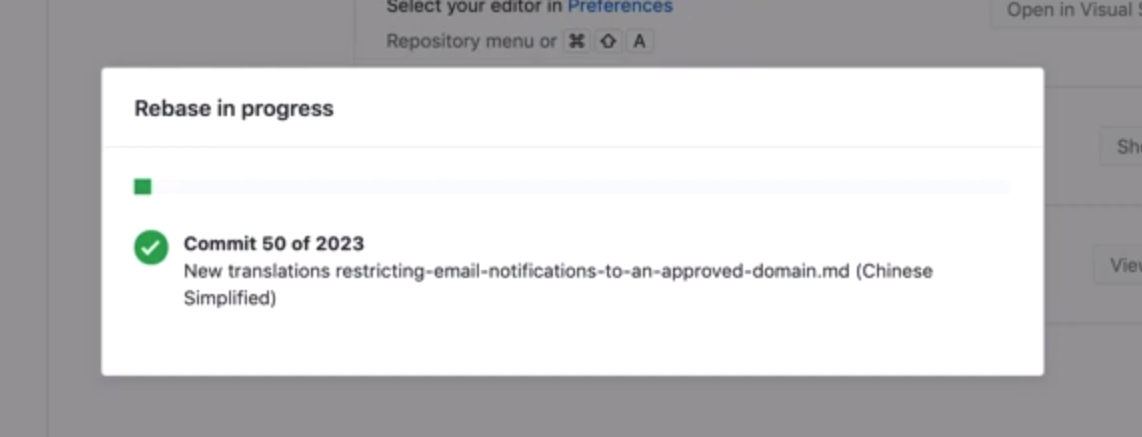

Question:

username_0: Hi there! This is not a bug, but a minor UX detail.

When doing a long-running rebase, the modal window changes width and height depending on the length of the filename being processed. I think it would be easier on the eyes if the modal window always stayed the same size.

My gif-fu is not good right now. Here's a YouTube video: https://youtu.be/9uVKxsdG3kE

Answers:

username_1: Here are some screenshots.

|

betagouv/mon-entreprise | 760289277 | Title: Integrate "Place des entreprises"

Question:

username_0: https://place-des-entreprises.beta.gouv.fr

Maybe start with the [Gérer mon activité](https://mon-entreprise.fr/g%C3%A9rer) ("Manage my business") page? Or even have direct links to the topics? Any other ideas?

cc @MathieuGens

Answers:

username_0: Place des entreprises is growing well (the product has been refunded for 3 years), and I think our two sites complement each other:

* on [mon-entreprise](https://mon-entreprise.fr/), simulators and interactive tools that quickly provide the most precise information possible

* on [place-des-entreprises](https://place-des-entreprises.beta.gouv.fr/), putting people in touch with the right advisor

Before integrating it into our pages, place-des-entreprises needs to be available nationwide (I believe that is in progress?). We would probably be a major source of traffic: place des entreprises currently receives 400 requests per month, while it is a service that will likely interest a few percent of our ~300k monthly visitors. cc @Flightan @be-mercier
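A quick back-of-the-envelope check of that estimate, assuming "a few percent" means roughly 1 to 3% and treating every interested visitor as one request (an optimistic upper bound, not a forecast):

$$
0.01 \times 300\,000 = 3\,000 = 7.5 \times 400, \qquad 0.03 \times 300\,000 = 9\,000 = 22.5 \times 400
$$

So even at the low end, traffic from mon-entreprise could be several times the current 400 requests per month.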

username_0: Discussed with the place-des-entreprises team:

- the service will be rolled out region by region, but this will take time (no ETA); requests coming from non-eligible regions are not processed

- the service is not relevant for artist-authors (artistes-auteurs), liberal professions, or private individual employers

- as a first step, we can integrate a generic link to place-des-entreprises, and then, as a second step, integrate the topic blocks directly. place-des-entreprises can provide an iframe (see https://brexit.hautsdefrance.fr/echanger-avec-un-conseiller/) or, on request, a JSON of the cards.

To start, we can add it to the "Gérer" page if the person has entered their company, the company is in an eligible region (Île-de-France, Hauts-de-France), and it has a compatible legal status (SAS / SARL). This won't generate much traffic, but it lets us test, and the place-des-entreprises team will be able to track the volume and type of requests made.
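The first-step targeting rule above can be sketched as a small predicate. This is a hypothetical Python illustration: mon-entreprise's actual data model is not described in this thread, so the `Company` fields and the region and status spellings are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Eligibility rules from the comment above; spellings are assumptions.
ELIGIBLE_REGIONS = {"Île-de-France", "Hauts-de-France"}
COMPATIBLE_STATUSES = {"SAS", "SARL"}


@dataclass
class Company:
    region: str
    legal_status: str


def should_show_block(company: Optional[Company]) -> bool:
    """Show the place-des-entreprises block only for an entered, eligible company."""
    if company is None:  # the user has not entered their company
        return False
    return company.region in ELIGIBLE_REGIONS and company.legal_status in COMPATIBLE_STATUSES
```

Keeping the two sets as data makes it easy to widen the rollout as new regions become eligible.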

username_0: In addition to the "Gérer" section, we could also integrate topic blocks below the simulators:

Block "contact an advisor about hiring"

https://place-des-entreprises.beta.gouv.fr/aide-entreprises/recrutement-formation/demande/recruter#section-breadcrumbs

- hiring cost simulator

- hiring subsidies simulator

Block "contact an advisor to solve a cash-flow problem and cover your expenses"

https://place-des-entreprises.beta.gouv.fr/aide-entreprises/entreprise-en-difficulte/demande/tresorerie#section-breadcrumbs

- self-employed simulator

- liberal profession simulator

place-des-entreprises can provide iframe embeds of the forms so that users stay on the mon-entreprise site (we could open it in a modal, for example).
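The simulator-to-block pairing above is essentially a lookup table. A minimal Python sketch, where the URLs come from the comment and the simulator identifiers are hypothetical (the real route names on mon-entreprise may differ):

```python
from typing import Optional

RECRUITMENT_BLOCK = "https://place-des-entreprises.beta.gouv.fr/aide-entreprises/recrutement-formation/demande/recruter#section-breadcrumbs"
TREASURY_BLOCK = "https://place-des-entreprises.beta.gouv.fr/aide-entreprises/entreprise-en-difficulte/demande/tresorerie#section-breadcrumbs"

# Hypothetical simulator identifiers mapped to the topic block shown below each simulator.
TOPIC_BLOCK_BY_SIMULATOR = {
    "hiring-cost": RECRUITMENT_BLOCK,
    "hiring-subsidies": RECRUITMENT_BLOCK,
    "self-employed": TREASURY_BLOCK,
    "liberal-profession": TREASURY_BLOCK,
}


def topic_block_for(simulator_id: str) -> Optional[str]:
    """Return the place-des-entreprises block URL for a simulator, or None if it has none."""
    return TOPIC_BLOCK_BY_SIMULATOR.get(simulator_id)
```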

username_0: I had a check-in with @Adeline-Lrn today about the place-des-entreprises integration.

We didn't have the bandwidth to integrate it in 2020, and the fact that the service was limited to a few regions also made the integration more complicated. But in 2021 we will have more resources, and place-des-entreprises will be deployed nationwide, with almost all regions covered by March 2021 and an official national launch a bit later in the year.

I therefore propose that we aim for the end of Q1 2021 to deploy this integration. This needs to be confirmed with Evelyne, but I think this topic has a good impact-to-development-time ratio, so it shouldn't be a problem.

Another positive point: <NAME> approved the principle of integrating place-des-entreprises into the urssaf.fr logged-in area in March 2021 (replacing the oups.gouv button, which is being removed). Aligning with the Dicom schedule and roadmap on this point is a cheap way to show how much we are part of the Urssaf ecosystem and that we contribute to a consistent communication strategy across the branch's portals [insert a PPT here].

For the integration work itself:

- Where do we add this module? For which categories of questions? See the messages above for earlier thoughts.

- Do we want to re-implement the forms on top of a place-des-entreprises API to get the best possible UI integration? Or do we prefer an iframe integration, which has lower development and maintenance costs?

- What information can we pre-fill from the data already entered during the user's journey on mon-entreprise?

- How can we track requests initiated from mon-entreprise and add them to our /stats page?

Status: Issue closed

|

lark-parser/lark | 617361617 | Title: Turning Lark into a modularized multi-paradigm parsing toolbox

Question:

username_0: Hi there!

For a long time, I have dreamed of an ultimate toolbox for everything parsing-related, one that fits all my needs and can be used for any parsing-related task, including experimentation.

The main goals of my dream are:

1. Write a grammar once in one kind of EBNF-language

2. Use a parser generated from this grammar in several target languages and technologies (for me, it currently is C, Python and Rust)

3. Work with AST traversals instead of semantic code attached to the grammar

4. Combine different scanners (Python's `re`, a self-contained DFA), but also support scannerless parsing

5. Use the same toolbox for experimentation and fiddling with algorithms and ideas

Right now, I'm experimenting with the GLL parsing algorithm, but I don't want to completely rewrite all my grammars again, and I need to use the resulting parser in Python and Rust. Therefore, I'm currently undecided whether to write another tool on my own or modify an existing tool, like Lark.

While looking into Lark's source code and documentation, I found out that there are [plans](https://github.com/lark-parser/lark/blob/master/docs/features.md#planned-features-not-implemented-yet) to generate code in other languages and to implement Lark's own DFA construction for lexical analysis without using Python's regex module. For me, the construction of scannerless parsers is also important and should be supported. This could be done by breaking up the regular expressions and turning them into part of the grammar, which is a grammar-rewriting process that might be done internally, including whitespace insertion.

All these goals could only be achieved if Lark were broken up into a modular, plugin-based design, where different parser generators, scanner generators and code generators are stacked together, and Lark itself only serves as the core that handles and rewrites grammars and uses the attached plugins. I've noticed that Lark already has a modular structure, but my concept would be to separate all these modules and make them entirely pluggable and replaceable.

This is my first draft of how this could look:

Please don't feel offended or worried; I don't want to break Lark's current structure or principles with this draft. It is only a proposal of what might be done, and I'm very interested in your future plans for Lark. I need a little help deciding whether my concept could become part of Lark, or whether I have to do it on my own.

For now, I am not using Lark for my projects, because it does not fit my requirements, but it could once some features are supported. Python is absolutely the right implementation language for this toolbox. Code generation could easily be done using template engines and a serialized structure of the generated parse tables for each parser module. The generated parsers should generally use the features of their implementation language for the sake of performance, but should share the same node structure for the resulting tree traversals and semantic evaluation.
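The templated code generation idea above (a serialized parse table fed into a template engine) might look roughly like this. It is a hypothetical sketch: the JSON layout with `terminals` and `actions` keys is invented for illustration and is not Lark's serialization format, and Python's standard `string.Template` stands in for a full template engine.

```python
import json
from string import Template

# Hypothetical C target; only "$"-placeholders are substituted, braces pass through untouched.
C_TEMPLATE = Template("""\
/* Generated parse table (hypothetical layout) */
static const int ${name}_states = ${n_states};
static const int ${name}_actions[${n_states}][${n_terms}] = {
${rows}
};
""")


def render_c_table(name: str, serialized: str) -> str:
    """Render a serialized action table (rows = states, columns = terminals) as a C array."""
    table = json.loads(serialized)
    rows = ",\n".join(
        "    {" + ", ".join(str(action) for action in row) + "}" for row in table["actions"]
    )
    return C_TEMPLATE.substitute(
        name=name,
        n_states=len(table["actions"]),
        n_terms=len(table["terminals"]),
        rows=rows,
    )
```

A Rust or Python target would be another template over the same serialized data, which is what keeps the generated parsers structurally aligned.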

What are your opinions on this proposal?

Answers:

username_1: Hi Jan!

My goals are very similar to those that you listed, and I would say Lark satisfies all of them, to some degree.

Lark can already generate a LALR parser in Julia. Lark also used to have support for scannerless parsing in Earley, which involved rewriting the strings as a list of character terminals. I decided to remove it because dynamic lexing was just as capable and is significantly faster. But I'll consider bringing it back if there's a good reason.

If you want to contribute a GLL parser to Lark, I'll be happy to help as much as I can.

Same if you want to contribute a Rust parser generator.

Regarding lexical analysis, we have made some progress in that area too. The idea was to detect collisions in regexps by intersecting them: https://github.com/MegaIng/interegular
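To illustrate what a "collision" between two terminal regexps means, here is a deliberately naive brute-force check that enumerates short strings over a small alphabet and reports the first one both patterns fully match. The linked project intersects the regexps themselves instead; this sketch is only bounded by `max_len` and can miss collisions longer than that.

```python
import itertools
import re
from typing import Optional


def find_collision(pattern_a: str, pattern_b: str, alphabet: str, max_len: int) -> Optional[str]:
    """Return the shortest string (up to max_len) fully matched by both patterns, or None."""
    ra, rb = re.compile(pattern_a), re.compile(pattern_b)
    for length in range(max_len + 1):
        for chars in itertools.product(alphabet, repeat=length):
            candidate = "".join(chars)
            if ra.fullmatch(candidate) and rb.fullmatch(candidate):
                return candidate
    return None
```

For example, a keyword terminal `if` collides with a generic name terminal `[a-z]+`, which is exactly the kind of overlap a lexer has to resolve by priority.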

That reminds me, @MegaIng, why didn't we move forward with that? Did you ever submit a PR, or were there still some performance issues?

I'm not at all offended by the diagram; it seems like you have the right idea, in theory. I'm not sure it would hold up in practice. I would say all the modules in Lark are already pluggable. The standard lexer can serve both LALR(1) and Earley. But each one also has specialized lexers that only fit it, and I don't see how that could change.

To summarize the problem more abstractly: Each parser has its own unique configuration and abilities, and I don't think it's possible to know them in advance and pre-expose the right interface. The obvious solution would be to expose all the information, but that would actually make it less modular.

So what I've done so far is to abstract only as much as necessary to support the algorithms that I have, and I'll be happy to keep abstracting whenever necessary, as long as it actually makes the code better.

Having said that, it's possible that I've overlooked things, so I'm open to hear another plan of action. And of course there's always room for improvement.

Regarding templated parsers, I think it's a good idea, in theory. I don't know how easy it would be to write a lexer+parser+treebuilder like that for each target. Especially if we want it to support the contextual lexer, and all the tree manipulation logic that the grammar allows.

At any rate, Lark already knows to create a JSON of everything that it analyzed, so applying to a template should be fairly easy.

So bottom line, I like it. I want to see Lark grow, and I think those are good directions for it to go in. So if you want to use Lark, I'm absolutely for it, as long as you submit clean and modular code. (I have to set a standard to keep it maintainable!)

Let me know what you think, and if you have more questions for me.

username_0: Sure, and I think Lark is a great base for this. I'm aware that Lark already has a large and, in some parts, very sophisticated code base (for some algorithms, I first need to look them up and dive into them, and that's a time problem, though!), but generally I would like to contribute.

Feel free to close the issue, or we can continue some discussion here.

Best regards

Jan

Status: Issue closed

username_0: Hey there, to keep the number of open issues low, I've decided to close this for now. Due to other personal projects, I currently have too little time to participate and contribute code to Lark, but I'll stay tuned for further developments here and try to help when possible.

username_1: Hi, it seems like I should have replied here, sorry that it slipped my mind!

It's perfectly understandable, I have my own personal projects going. If you ever want to come back and contribute to Lark, I'll be happy to help you do that.

P.S. I don't think a scannerless LALR(1) is a good idea. I think the ideal algorithm will try a regexp and fall back to scannerless, and in the same sense will try LALR(k) and fall back to Earley only when needed.

In theory, these different layers can be written in different languages (well, regexps already are).

However, such a layered parser is hard to write. But Lark is going in that direction, and the suggestions you proposed might push it even further there. So I definitely dig that direction. |

w3c/findtext | 113055920 | Title: order of case fold and normalization operations

Question:

username_0: http://www.w3.org/International/track/issues/503 [I18N-ISSUE-503]

http://www.w3.org/TR/2015/WD-findtext-20151015/#performing-a-search-operation

In the section "Search Algorithm" within this section, case fold mapping appears as step 11. Normalization appears as step 13.
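Python's standard library is enough to see that the two steps do not commute. In this sketch, `str.casefold` (full Unicode case folding, not language-sensitive) stands in for the case fold step and NFKC for the normalization step; both choices are assumptions, since the draft's exact forms are not restated here.

```python
import unicodedata


def fold_then_normalize(text: str) -> str:
    # Case fold first (as in the draft's step order), then NFKC.
    return unicodedata.normalize("NFKC", text.casefold())


def normalize_then_fold(text: str) -> str:
    # NFKC first, then case fold (the order suggested in this issue).
    return unicodedata.normalize("NFKC", text).casefold()
```

For U+2122 TRADE MARK SIGN, case folding leaves the symbol unchanged and NFKC then turns it into uppercase `"TM"`, so fold-then-normalize yields `"TM"` while normalize-then-fold yields `"tm"`; U+3392 SQUARE MHZ behaves the same way (`"MHz"` versus `"mhz"`). A search for "tm" would therefore miss "™" if folding runs first.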

Since normalization (particularly the compatibility mapping) may change the character sequence, it would be better to apply the case folding after the normalization, particularly if the case fold is language-sensitive. |

kubernetes/test-infra | 567107643 | Title: No status reconciler label

Question:

username_0: ```yaml

{

insertId: "1am6xuhg2k1c23t"

jsonPayload: {

author: "sbueringer"

component: "hook"

event-GUID: "aa9d1680-527e-11ea-8ba2-0a355f16f694"

event-type: "pull_request"

file: "prow/plugins/owners-label/owners-label.go:119"

func: "k8s.io/test-infra/prow/plugins/owners-label.handle"

level: "warning"

msg: "Unable to add nonexistent labels: ["area/prow/status-reconciler"]"

org: "kubernetes"

plugin: "owners-label"

pr: 16317

repo: "test-infra"

url: "https://github.com/kubernetes/test-infra/pull/16317"

}

labels: {…}

logName: "projects/k8s-prow/logs/hook"

receiveTimestamp: "2020-02-18T18:46:01.873818640Z"

resource: {…}

severity: "ERROR"

timestamp: "2020-02-18T18:45:56Z"

}

```

/assign @stevekuznetsov

/area prow |

scottsilverlabs/raspberrystem-ide | 93005516 | Title: File rename does not update titlebar

Question:

username_0: When a file is renamed, it does not update the titlebar if used in the following way:

* Start with a new or existing file

* Click File Open

* Click settings on the file being edited

* Rename the file and click OK

* Click Cancel button (this may be a Close button if #59)

* Titlebar will still have old name

Note: the file DOES get renamed properly; it's just that the titlebar is not updated. Reopening the file resolves it manually.

Status: Issue closed |

HMS-Core/hms-flutter-plugin | 801336405 | Title: When requesting sign-in twice, the app crashes [huawei_account]

Question:

username_0: **Description**

I just used huawei_account version 5.0.3+303 and I implemented the

**Expected behavior**


I want to sign in and, if the user did not include their email, retry the sign-in with the email. I would prefer a method that makes the email required.

**Current behavior**


When I press sign in, it navigates to the sign-in screen, and whether I cancel or sign in, it throws with a message asking the user to retry signing in with their email, but I get this exception:

**Logs**

```

E/flutter (31393): #8 TapGestureRecognizer.handleTapUp

package:flutter/…/gestures/tap.dart:598

E/flutter (31393): #9 BaseTapGestureRecognizer._checkUp

package:flutter/…/gestures/tap.dart:287

E/flutter (31393): #10 BaseTapGestureRecognizer.acceptGesture

package:flutter/…/gestures/tap.dart:259

E/flutter (31393): #11 GestureArenaManager.sweep

package:flutter/…/gestures/arena.dart:157

E/flutter (31393): #12 GestureBinding.handleEvent

package:flutter/…/gestures/binding.dart:362

E/flutter (31393): #13 GestureBinding.dispatchEvent

package:flutter/…/gestures/binding.dart:338

E/flutter (31393): #14 RendererBinding.dispatchEvent

package:flutter/…/rendering/binding.dart:267

E/flutter (31393): #15 GestureBinding._handlePointerEvent

package:flutter/…/gestures/binding.dart:295

E/flutter (31393): #16 GestureBinding._flushPointerEventQueue

package:flutter/…/gestures/binding.dart:240

E/flutter (31393): #17 GestureBinding._handlePointerDataPacket

package:flutter/…/gestures/binding.dart:213

E/flutter (31393): #18 _rootRunUnary (dart:async/zone.dart:1206:13)

E/flutter (31393): #19 _CustomZone.runUnary (dart:async/zone.dart:1100:19)

E/flutter (31393): #20 _CustomZone.runUnaryGuarded (dart:async/zone.dart:1005:7)

E/flutter (31393): #21 _invoke1 (dart:ui/hooks.dart:265:10)

```

**Environment**

- Platform: Huawei nova 7i

- Kit: huawei_account

- Kit Version 5.0.3+303

- OS Version Android 10

- VS code

Answers:

username_1: Hi @username_0, Thanks for reporting the issue.

We have spotted the error, and it will be fixed in the next release. In the meantime, you can follow these steps as a workaround.

1. Go to **AuthServiceMethodHandler.java** file.

2. Then change the **onActivityResult** method as follows:

```java

@Override

public boolean onActivityResult(int requestCode, int resultCode, Intent data) {

final MethodChannel.Result incomingResult = mResult;

mResult = null;

Task<AuthHuaweiId> authIdTask = HuaweiIdAuthManager.parseAuthResultFromIntent(data);

if (requestCode == rCode) {

authIdTask.addOnSuccessListener(authId -> {

HashMap<String, Object> resultMap = HwIdBuilder.createHwId(authId);

Account account = authId.getHuaweiAccount();

if (account != null) {

resultMap.put("account", HwIdBuilder.createAccount(account));

}

HMSLogger.getInstance(activity.getApplicationContext()).sendSingleEvent("signIn");

if (incomingResult != null) {

incomingResult.success(resultMap);

}

}).addOnFailureListener(e -> {

HMSLogger.getInstance(activity.getApplicationContext()).sendSingleEvent("signIn", String.valueOf(((ApiException) authIdTask.getException()).getStatusCode()));

if (incomingResult != null) {

incomingResult.error(Constant.SIGN_IN_FAILURE, String.valueOf(((ApiException) authIdTask.getException()).getStatusCode()), e.getMessage());

}

});

}

return true;

}

```

Run the application again and let us know how it goes.
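The key change in the workaround appears to be capturing `mResult` into a local and clearing the field before the asynchronous listeners run, so that a given pending call is answered at most once. The same "take and clear" pattern in a hypothetical, single-threaded Python sketch (it is not HMS plugin code; `PendingResult` is an illustrative stand-in for the field holding a `MethodChannel.Result`):

```python
from typing import Any, Callable, Optional


class PendingResult:
    """Hypothetical stand-in for a field holding one pending reply callback."""

    def __init__(self) -> None:
        self._reply: Optional[Callable[[Any], None]] = None

    def arm(self, reply: Callable[[Any], None]) -> None:
        # Called when a new sign-in request starts.
        self._reply = reply

    def take(self) -> Optional[Callable[[Any], None]]:
        # Capture and clear in one step, like
        # `incomingResult = mResult; mResult = null;` in the workaround.
        reply, self._reply = self._reply, None
        return reply


def on_activity_result(pending: PendingResult, value: Any) -> None:
    reply = pending.take()
    if reply is not None:  # a repeated callback finds nothing left to answer
        reply(value)
```

A second callback for the same request becomes a no-op instead of a second reply.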

username_0: thank you

Status: Issue closed

|

pombase/curation | 206212007 | Title: Linking "within process" molecular functions that are not known to be direct substrates

Question:

username_0: This ticket is spawned from:

https://github.com/pombase/canto/issues/1308#issuecomment-278334643

We need a way to annotate things that regulate a molecular function indirectly.

In the example from here

https://github.com/pombase/curation/issues/1278#issuecomment-277270238

- hsp9 where we don't know exactly how it is regulating cdc25 phosphatase activity

- hsp9 has not been shown to be a direct regulator of cdc25 so I can't put it on the MF term

- We want to link hsp9 to cdc25 as it is part of the pathway

- Currently we use BP annotations "regulation of MF" for these.

- PomBase have been evaluating whether we can get rid of them for https://github.com/geneontology/go-ontology/issues/12859 (and for general consistency and LEGO compliance)

- possibly the best way to do this would be to have a relation that can be used with an MF term and specifies that a target is not a direct substrate (causally upstream of *and* within). This does not exist.

- [ ] Q: what are the existing extensions for MF allowed by GO? (I have a feeling we self-imposed a narrower set)

- [ ] once decision made, retrofit existing (mainly should be the ones sitting in the GO logs?)

Answers:

username_1: Anything that has local_domain = GO:0003674 or any of its is_a descendants, or BFO:0000015 ("process" in the formal-ontology sense that encompasses both MF and BP in GO).

That's kind of a pain to get at from the documentation in GitHub (https://github.com/geneontology/annotation_extensions/tree/master/doc), which has a page for each relation to slog through...

username_0: I wonder if for these we can use "negatively regulates" and "positively regulates"

This would not work for all molecular functions, but for most of the phosphorylation examples, it is regulatory.

The example above would be

hsp9 some_molecular_function negatively_regulates cdc25 part_of "negative regulation of G2/M transition"

that seems to work?

Status: Issue closed

|

microsoft/Microsoft-UI-UIAutomation | 716803950 | Title: Consider adding BreakIf and ContinueIf helpers to the C++ Abstraction library

Question:

username_0: When writing a remote operation loop, consumers will frequently end up with code such as this in the body of the loop:

```c++

operationScope.If(ancestorElement.IsNull(), [&]()

{

operationScope.Break();

});

```

It could be helpful to add a helper that lets consumers write:

```c++

operationScope.BreakIf(ancestorElement.IsNull());

```

This would be a little bit less verbose.

The same thing could be said for `Continue`...

Status: Issue closed |

srehwald/eat-api | 304966458 | Title: IPP: "risch" instead of "Frisch"

Question:

username_0: https://github.com/username_1/eat-api/blob/gh-pages/ipp-bistro/2018/12.json

Answers:

username_1: Probably the dish name was a little longer than usual. This is difficult to fix, as the parser might not be able to tell which column a specific character belongs to in such a case. As this was 3 weeks ago and the menu has changed anyway, I assume I can close this (known) issue.
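A likely mechanism, sketched under the assumption that the menu is parsed as layout-preserved text sliced at fixed character offsets (a hypothetical simplification, not necessarily how eat-api's IPP parser works): if a long dish name starts one character before its column boundary, that first character is assigned to the previous column.

```python
def split_fixed_columns(line: str, starts: list[int]) -> list[str]:
    """Slice a layout-preserved text line into columns at fixed character offsets."""
    bounds = starts + [len(line)]
    return [line[bounds[i]:bounds[i + 1]].strip() for i in range(len(starts))]
```

With columns starting at offsets 0 and 10, `"Suppe     Frischer Salat"` splits cleanly, but `"Suppe    Frischer Salat"` (one space shorter) yields `"Suppe    F"` and `"rischer Salat"`, which matches the truncated name in the report.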

Status: Issue closed

|

vlang/v | 484844230 | Title: printing a nested struct instance fails

Question:

username_0: **V version:** 0.1.18

**OS:** Mac OS 10.14.6

**What did you do?**

```v
struct Inner {
    val i32
}

struct Outer {
    inner Inner
}

p := Outer{Inner{}}
println(p.inner)
```

**What did you expect to see?**

successful compilation

**What did you see instead?**

```
error: expected ')'
println ( p tos2((byte*)"{ val: $.inner.val }") ) ;
^
note: to match this '('
println ( p tos2((byte*)"{ val: $.inner.val }") ) ;
^
1 error generated.
V panic: C error. This should never happen. Please create a GitHub issue:
```

Answers:

username_1: It seems that the variable was not initialized; this example works:

```v
module main

struct Inner {
    val int
}

struct Outer {
    inner Inner
}

fn (o Outer) str() string {
    return o.inner.val.str()
}

fn main() {
    o := Inner{69}
    p := Outer{o}
    println(p.str()) // outputs "69"
}
```

Status: Issue closed

username_2: Compiled with current master and it works.

```

struct Inner {

val int

}

struct Outer {

inner Inner

}

p := Outer{Inner{}}

println(p.inner)

```

The only change needed was renaming `i32` to `int`, because BDFL @medvednikov recently changed `i32` to `int` and `u8` to `byte`; this is final for the time being.

The output was:

```
{
    val: 0
}
```
|

ccxt/ccxt | 851163330 | Title: ByBit new futures throws error in private endpoints

Question:

username_0: - OS: MacOS

- Programming Language version: Python

- CCXT version: Latest

```

bybit.fetch_closed_orders('BTC/USD') # works

bybit.fetch_closed_orders('BTCUSDM21') # does not work, same with BTCUSDU21

```

```

bybit {"ret_code":10001,"ret_msg":"invalid symbol","ext_code":"","ext_info":"","result":null,"time_now":"1617696874.327896","rate_limit_status":599,"rate_limit_reset_ms":1617696874327,"rate_limit":600}

```

These symbols work with public endpoints, but not with private endpoints (tested with `fetch_closed_orders` and `fetch_my_trades`).

Thanks for a great package!

/Clev |

varnishcache/varnish-cache | 581829547 | Title: We set Accept-Range on pass, is that OK ?

Question:

username_0: Shouldn't we leave it to the backend to set A-R on pass?

Answers:

username_1: bugwash:

Right now, we add `Accept-Ranges` to any 200 response when `http_range_support` is on. This happens after `vcl_deliver`, so there is no VCL control over it.

We agree that we want more VCL control, and three alternative approaches have been discussed:

* add a VCL switch controlling whether `Accept-Ranges` is added when not already present

* set `Accept-Ranges` before calling `vcl_deliver`, so it can be removed

* do not set `Accept-Ranges` in core code, but rather in `builtin.vcl`

We agree to handle this as part of #3246

username_1: I realized that the actual cause of the problem is that we split the handling of ranges between core code and the range VDP. By moving all relevant parts into the VDP, whether or not we send `Accept-Ranges` can be controlled by having the `range` VDP active or not.

See #3289

username_0: How this should work:

1. vcl_backend_deliver{} should be able to set/unset Accept-Ranges as desired.

2. On pass'ed responses, the backend controls if A-R should be in resp.http going into vcl_deliver{}

username_1: How do you address the concern?

Status: Issue closed

username_0: You can test `obj.uncacheable`: if it is true, the header came from the backend. |

macacajs/app-inspector | 235502219 | Title: Sometimes it works, sometimes it doesn't; the device has to be restarted to get it working

Question:

username_0:

```
Error: connect ECONNREFUSED 127.0.0.1:8001
    at Object.exports._errnoException (util.js:1050:11)
    at exports._exceptionWithHostPort (util.js:1073:20)
    at TCPConnectWrap.afterConnect [as oncomplete] (net.js:1093:14)
```

Answers:

username_1: You have to unplug and replug the USB cable, but that isn't a real fix.

username_2: @username_1 Having to replug the USB cable means the iproxy USB connection has a problem. Can you provide more information?

Status: Issue closed

|

ryansheehan/terraria | 631519436 | Title: Docker CI build error

Question:

username_0: Hi,

First of all I'd like to thank you for updating this image so quickly, great work!

However it seems like there's something going wrong in the DockerCI builds. I'd help but it seems like I can't access its logs (set to private?)

Answers:

username_1: It is most likely related to the faulty zip file name error that should be solved by this PR : https://github.com/username_3/terraria/pull/44

username_2: Why isn't the Docker image built automatically as soon as the GitHub repo is updated?

username_3: Those are from a temporary setup. For a while I was exploring building from source, and looking to contribute the Dockerfile back to TShock. For a number of reasons I had to switch back to building from the release zips. I still hope to reactivate that effort of building from source, but I'm going to wait until the pre-release phase is over.

username_0: @username_3 That's a great idea! TShock having an official image would be awesome.

I think using a development or feature branch might provide a more ideal workflow for working on that though 🙂

Thanks for solving this so quickly and creating this image!

Status: Issue closed

|

aonez/Keka | 860432540 | Title: Keka not launching on Big Sur from Synthetic.conf remapped directory

Question:

username_0: I usually keep my non-system programs in a directory located here

`/Shared Files/Utilities`

Prior to Catalina this was its real path but due to new security measures in Catalina/Big Sur the `/Shared Files` directory is actually located here `/System/Volumes/Data/Shared Files` and is remapped to the root of the volume using `/etc/synthetic.conf` (discussion here):

https://derflounder.wordpress.com/2020/01/18/creating-root-level-directories-and-symbolic-links-on-macos-catalina/

Keka stopped working but I found that it works fine when the app bundle is placed in these directories:

`/Applications`

`~/Applications`

`~/Desktop`

but not `/Shared Files/*`

I also tried manually launching from the "correct path" `/System/Volumes/Data/Shared Files` with no difference.

The program seems to hang with no windows or menu bar menus produced.

Here is what appears to be the relevant console output:

```

error 11:07:58.583755-0400 sandboxd Sandbox: Keka(35918) deny(1) file-issue-extension target:/Shared Files/Utilities/Keka.app class:com.apple.app-sandbox.read

Violation: deny(1) file-issue-extension target:/Shared Files/Utilities/Keka.app class:com.apple.app-sandbox.read

Process: Keka [35918]

Path: /System/Volumes/Data/Shared Files/Utilities/Keka.app/Contents/MacOS/Keka

Load Address: 0x106fcb000

Identifier: com.aone.keka

Version: 4541 (1.2.13)

Code Type: x86_64 (Native)

Parent Process: launchd [1]

Responsible: /System/Volumes/Data/Shared Files/Utilities/Keka.app/Contents/MacOS/Keka

User ID: 503

Date/Time: 2021-04-17 11:07:58.573 EDT

OS Version: macOS 11.1 (20C69)

Report Version: 8

MetaData: {"build":"macOS 11.1 (20C69)","responsible-process-uid":503,"platform_binary":"no","primary-filter":"path","file-flags":0,"container":"\/Users\/yashka\/Library\/Containers\/com.aone.keka\/Data","profile-in-collection":false,"flags":5,"pid":35918,"signing-id":"com.aone.keka","platform-policy":false,"target":"\/Shared Files\/Utilities\/Keka.app","apple-internal":false,"file-mode":511,"errno":1,"vnode-type":"DIRECTORY","extension-class":"com.apple.app-sandbox.read","profile-flags":0,"hardware":"Mac","operation":"file-issue-extension","primary-filter-value":"\/Shared Files\/Utilities\/Keka.app","responsible-process-path":"\/System\/Volumes\/Data\/Shared Files\/Utilities\/Keka.app\/Contents\/MacOS\/Keka","action":"deny","rdev":0,"platform-binary":false,"summary":"deny(1) file-issue-extension target:\/Shared Files\/Utilities\/Keka.app class:com.apple.app-sandbox.read","process-path":"\/Shared Files\/Utilities\/Keka.app\/Contents\/MacOS\/Keka","hardlinked":false,"matched-extension":false,"uid":503,"mount-flags":76583424,"responsible-process-user-uuid":"1708BC0E-644A-4C78-A658-46320EF17422","matched-user-intent-extension":false,"path":"\/Shared Files\/Utilities\/Keka.app","normalized_target":["Shared Files","Utilities","Keka.app"],"team-id":"4FG648TM2A","process":"Keka"}

Thread 0 (id: 69905753):

0 libsystem_kernel.dylib 0x00007fff2033c376 __mac_syscall + 10

1 LaunchServices 0x00007fff20884bd0 _LSApplicationCheckIn + 1840

2 HIServices 0x00007fff256d791c _RegisterApplication + 6665

3 HIServices 0x00007fff256d5e28 GetCurrentProcess + 23

4 HIToolbox 0x00007fff286bcabf MenuBarInstance::GetAggregateUIMode(unsigned int*, unsigned int*) + 63

5 HIToolbox 0x00007fff286bca49 MenuBarInstance::IsVisible() + 51

6 AppKit 0x00007fff22c4ba7b _NSInitializeAppContext + 35

7 AppKit 0x00007fff22c4977a -[NSApplication init] + 417

8 AppKit 0x00007fff22c493b9 +[NSApplication sharedApplication] + 120

9 AppKit 0x00007fff22c47c5b NSApplicationMain + 409

10 libdyld.dylib 0x00007fff2038a621 start + 1

11 Keka 0x0000000000000001

Thread 1 (id: 69905774):

0 libsystem_kernel.dylib 0x00007fff2033c53e __workq_kernreturn + 10

1 libsystem_pthread.dylib 0x00007fff2036b467 start_wqthread + 15

Thread 2 (id: 69905778):

0 libsystem_kernel.dylib 0x00007fff2033c53e __workq_kernreturn + 10

1 libsystem_pthread.dylib 0x00007fff2036b467 start_wqthread + 15

Thread 3 (id: 69905779):

Binary Images:

0x106fcb000 - 0x10702efff com.aone.keka (1.2.13 - 4541) <73a74552-3c64-3607-9214-9817cbabb20f> /System/Volumes/Data/Shared Files/Utilities/Keka.app/Contents/MacOS/Keka

0x7fff2033a000 - 0x7fff20368fff libsystem_kernel.dylib (7195.60.75) <4bd61365-29af-3234-8002-d989d295fdbb> /usr/lib/system/libsystem_kernel.dylib

0x7fff20369000 - 0x7fff20374fff libsystem_pthread.dylib (454.60.1) <8dd3a0bc-2c92-31e3-bbab-ce923a4342e4> /usr/lib/system/libsystem_pthread.dylib

0x7fff20375000 - 0x7fff203afff7 libdyld.dylib (832.7.1) <2f8a14f5-7cb8-3edd-85ea-7fa960bbc04e> /usr/lib/system/libdyld.dylib

0x7fff20882000 - 0x7fff20ab1e0f com.apple.LaunchServices (1122.11 - 1122.11) <caeec254-68ae-39b5-8452-ec3e1ee8577b> /System/Library/Frameworks/CoreServices.framework/Versions/A/Frameworks/LaunchServices.framework/Versions/A/LaunchServices

0x7fff22c44000 - 0x7fff239a6c6f com.apple.AppKit (6.9 - 2022.20.119) <4cb42914-672d-3af0-a0a5-2209088a3da0> /System/Library/Frameworks/AppKit.framework/Versions/C/AppKit

0x7fff256d3000 - 0x7fff2572efe7 com.apple.HIServices (1.22) <9af2cdd9-8b68-3606-8c9e-1842420acda7> /System/Library/Frameworks/ApplicationServices.framework/Versions/A/Frameworks/HIServices.framework/Versions/A/HIServices

0x7fff286b9000 - 0x7fff289b8ffd com.apple.HIToolbox (2.1.1) <93518490-429f-3e31-8344-15d479c2f4ce> /System/Library/Frameworks/Carbon.framework/Versions/A/Frameworks/HIToolbox.framework/Versions/A/HIToolbox

```

Answers:

username_0: I didn't actually have any other sandboxed apps to test, so I downloaded and ran "fatFileFinder" from `https://github.com/Ravbug/FatFileFinderCPP/releases/tag/2.2`, which appears to be sandboxed; it launches fine from the same folder.

username_1: I expect it will work for me too.

In your logs, Keka fails to launch because of a sandbox violation. It seems it may lack read permission, and the entitlement `com.apple.security.files.user-selected.read-write` might be what triggers the violation. What read permissions does it have in your `Shared Files` folder?

This is how it looks to me:

```

aone@aONe-Mini ~ % ls -la /System/Volumes/Data/Shared\ Files

total 16

drwxr-xr-x 4 aone wheel 128 Apr 19 08:59 .

drwxr-xr-x 27 root wheel 864 Apr 19 08:58 ..

-rw-r--r--@ 1 aone wheel 6148 Apr 19 08:59 .DS_Store

drwxr-xr-x@ 3 aone wheel 96 Apr 15 12:31 Keka.app

```

For what it's worth, I created the `Shared Files` folder using Finder (it asked for the admin password) and copied Keka there using Finder too. No Terminal was used.

username_1: Maybe you could try to reproduce with [HandBrake](https://handbrake.fr/rotation.php?file=HandBrake-1.3.3.dmg) since it is notarized and most probably hardened, like Keka.

That "FatFileFinder" is not notarized so maybe the sandbox checks are not the same.

username_1: @username_0 have you had the chance to try whether another notarized app runs there?

username_0: Yeah, Handbrake fails in an identical fashion. My permissions in `ls -al` in that directory look identical to yours too. I tried creating a brand new non-remapped directory inside `System/Volumes/Data/` as well, using Finder, and copied Keka and Handbrake there as well, and the results are the same, with the same sandbox error in the console.

So it doesn't seem to be related to Synthetic.conf.

username_1: I've replicated the issue now. So it seems this is simply how Big Sur behaves. You can use `/Users/Shared` instead: it can be accessed by any user and doesn't have this sandbox restriction. |

typestyle/typestyle | 190269238 | Title: Share typings between all cssinjs libs

Question:

username_0: Hi, I am the author of [jss](https://github.com/cssinjs/jss/). I like the idea of having typings for styles a lot. Would you be interested in making them available for all cssinjs libs?

Answers:

username_1: Sorry for the late reply. I've let it sit in my brain and can't commit to it.

Typestyle's type definitions are slightly bound to its internal implementation e.g. its color helpers http://typestyle.io/#/colors need `ensureString` https://github.com/typestyle/typestyle/blob/a58b0dbee8c5050a4567881a3e58c8c5a62e777b/src/index.ts#L32-L36 and the `csx/flex` flexbox uses the vendor prefixing as supported by `freestyle` whereas many other frameworks prefer *automatic* vendor prefixing.

Thanks for your interest though :rose:

Status: Issue closed

|

ingydotnet/testml-pm6 | 335924679 | Title: TestML fails to install with zef

Question:

username_0:

```
docker run --entrypoint /bin/sh -it rakudo-star:latest
# zef install TestML
===> Searching for: TestML
===> Updated cpan mirror: https://raw.githubusercontent.com/ugexe/Perl6-ecosystems/master/cpan.json
===> Updated p6c mirror: http://ecosystem-api.p6c.org/projects.json
===> Fetching [FAIL]: TestML:ver<0.2.0>:auth<github:ingydotnet> from git://github.com/ingydotnet/testml-pm6/archive/0.2.0.zip.git
Aborting due to fetch failure: TestML:ver<0.2.0>:auth<github:ingydotnet> (use --force-fetch to override)
in code at /usr/share/perl6/site/sources/7926F4F3ED4C81AA5DA2A54C8AE1E03D03424CCE (Zef::Client) line 227
in method fetch at /usr/share/perl6/site/sources/7926F4F3ED4C81AA5DA2A54C8AE1E03D03424CCE (Zef::Client) line 197
in method fetch at /usr/share/perl6/site/sources/7926F4F3ED4C81AA5DA2A54C8AE1E03D03424CCE (Zef::Client) line 185
in sub MAIN at /usr/share/perl6/site/sources/E4784A2A0FA00D16808817186E95FE74BEF3FE2D (Zef::CLI) line 149
in block <unit> at /usr/share/perl6/site/resources/3065D08F5332CA244672D7F8A05B603F92BB8A7D line 3
in sub MAIN at /usr/share/perl6/site/bin/zef line 2
in block <unit> at /usr/share/perl6/site/bin/zef line 2
```

Answers:

username_0: Maybe it is just an intermittent failure to fetch from Github the way it is specified?

FWIW, it installs with this:

`zef install https://github.com/ingydotnet/testml-pm6.git`

username_1: I ran into this also today while trying to install YAML. It would be best if there were CPAN releases as that seems to be more reliable at this point.

username_1: This does appear to be fixed and installable from CPAN now. However, I have reported a new problem with installation on 2019.03 and it is a weird problem. |

zyedidia/micro | 381992825 | Title: Feature request: Mouse support on tty

Question:

username_0: Hello,

I have noticed that mouse support works flawlessly in X11 terminals, but not in the Linux TTY with a GPM mouse, at least for me. I am using version 1.4.1 of micro.

If it's not too much trouble, could you add mouse support for tty?

Lots of thanks.

Answers:

username_1: This please!

username_0: If you are in a hurry, [lcxterm](https://gitlab.com/klamonte/lcxterm) might help you. It's a terminal application for the console that translates GPM events into control sequences.

username_1: Thanks for the tip. Is this something you have tried? I installed and enabled libgpm support, however micro still does not support the mouse. In fact, I believe the gpm is just for passthrough on remote shells.

username_0: Yes, it totally works for me.

Make sure that you enabled the GPM service (`systemctl enable gpm.service`, `systemctl start gpm.service`), and that lcxterm is being built with GPM support (`checking for Gpm_Open in -lgpm... yes`). |

godotengine/godot | 436316760 | Title: Index out of size when trying to drag file to scripts panel

Question:

username_0: **Godot version:**

3.2 2fc2d82

**OS/device including version:**

Ubuntu 18.04

**Issue description:**

When I drag any type of file (`.gd` or `.tscn`) onto the empty script panel, these errors occur:

```

ERROR: move_child: Index p_pos=-1 out of size (data.children.size() + 1=2)

At: scene/main/node.cpp:323.

ERROR: set_current_tab: Index p_current=-1 out of size (get_tab_count()=1)

At: scene/gui/tab_container.cpp:464.

```

**Steps to reproduce:**

https://streamable.com/x7szx

Answers:

username_1: The video is dead. I can only get

```

scene\gui\tab_container.cpp:552 - Index p_current=0 out of size (get_tab_count()=0)

```

when dragging non-script file on empty script list.

username_1: Doesn't happen anymore in 8958e1b

Status: Issue closed

|

liberapay/liberapay.com | 602415219 | Title: Creators can't pause their patrons' donations to them

Question:

username_0: I was reminded of this missing feature by <https://mastodon.social/@dansup/104016437070987358>.

Answers:

username_1: I have some questions for this feature:

1. For how long should donations be paused for? (e.g. a certain duration such as a month or indefinitely until the creator manually re-enables donations)

2. How should the donors be notified if their donations are being paused or resumed? (e.g. notifications, email)

3. What should happen in the case that a patron goes to a project page and tries to donate? Should the donate button be disabled or should the option to donate still be available?

Thank you!

username_2: My answers are below:

> For how long should donations be paused for? (e.g. a certain duration such as a month or indefinitely until the creator manually re-enables donations)

I personally think it should be until the creator resumes donations. One thing I disliked about Patreon was that I had to keep logging in to pause my upcoming billing cycle.

On the other hand, I do see scenarios where creators pause billing, forget about it completely, and never unpause it. Either way would be fine with me, but I prefer pausing until the creator resumes billing.

> How should the donors be notified if their donations are being paused or resumed? (e.g. notifications, email)

By email, yes.

> What should happen in the case that a patron goes to a project page and tries to donate? Should the donate button be disabled or should the option to donate still be available?

In this case, I think donating should still be possible, because I've had many Patreon supporters who were willing to set everything up for when the creator resumes billing. Perhaps a small text banner on the page could say: "This creator has temporarily paused billing. You will be charged when they resume billing."

username_1: I'm not sure if Patreon does this, but would it make sense to also include the pause feature for a team? Or should I focus on implementing it for individual/shared accounts first?

username_0: The Goal page is probably the most appropriate.

For teams, you can either disallow pausing, or implement sending a notification to the other members when the team is paused. |

nulpoet/mjkey | 752743925 | Title: Where to get taxi receipts in Fuzhou - Where to get taxi receipts in Fuzhou

Question:

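username_0: Where to get taxi receipts in Fuzhou【徴:ff181一加一⒍⒍⒍】【Q:249⒏一加一357⒌⒋0】Because of her low education and her appearance, many jobs were closed to her. In the end she found a customer-service job at a telecom company, where she never had to meet anyone and dealt with people only by voice. With her natural optimism since childhood plus her own hard work, my cousin became a popular favorite at her workplace.

Some well-meaning aunts always liked to play matchmaker for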

https://github.com/nulpoet/mjkey/issues/785

https://github.com/nulpoet/mjkey/issues/786

https://github.com/nulpoet/mjkey/issues/787 |

sumpfork/dominiontabs | 159853691 | Title: Add A3 papersize

Question:

username_0: Hi, would it be possible to add an "A3" paper size with no page margin? This would allow printing 16 dividers on each page. Thanks :-)

Answers:

username_1: The code for this already exists in the standalone program. The options are:

`--papersize A3 --minmargin 0x0`

When I run it, I get:

- horizontal dividers: 6 rows of 3 cards

- vertical dividers: 4 rows of 5 cards (it just fits, almost going to the edge)

The work is making this available on the website. The biggest part would be allowing minimum margins to be given as input. An interim step might be adding an A3 option on the page size pull down.

username_0: Adding the option to the website would be awesome! :+1:

Status: Issue closed

username_2: Deployed. |

Homebrew/homebrew-core | 213580953 | Title: `brew upgrade readline` failing with 401 Unauthorized

Question:

username_0:

```
Please note that these warnings are just used to help the Homebrew maintainers
with debugging if you file an issue. If everything you use Homebrew for is
working fine: please don't worry and just ignore them. Thanks!

Warning: "config" scripts exist outside your system or Homebrew directories.
`./configure` scripts often look for *-config scripts to determine if
software packages are installed, and what additional flags to use when
compiling and linking.

Having additional scripts in your path can confuse software installed via
Homebrew if the config script overrides a system or Homebrew provided
script of the same name. We found the following "config" scripts:
/Applications/Wine Devel.app/Contents/Resources/wine/bin/xml2-config
/Applications/Wine Devel.app/Contents/Resources/wine/bin/xslt-config

Warning: Some installed formula are missing dependencies.
You should `brew install` the missing dependencies:
brew install gdbm sqlite xvid

Run `brew missing` for more details.
```

Status: Issue closed

Answers:

username_1: Fixed by 77cfba0d7aedeb8f48400c1ff1cfcb362398fae7; thanks for reporting. |

daydaychallenge/leetcode-go | 615538431 | Title: 161. One Edit Distance

Question:

username_0: #### [161. One Edit Distance](https://leetcode.com/problems/one-edit-distance/)

Given two strings **s** and **t**, determine if they are exactly one edit distance apart.

**Note:**

There are 3 possibilities to satisfy one edit distance apart:

1. Insert a character into **s** to get **t**

2. Delete a character from **s** to get **t**

3. Replace a character of **s** to get **t**

**Example 1:**

```

Input: s = "ab", t = "acb"

Output: true

Explanation: We can insert 'c' into s to get t.

```

**Example 2:**

```

Input: s = "cab", t = "ad"

Output: false

Explanation: We cannot get t from s by only one step.

```

**Example 3:**

```

Input: s = "1203", t = "1213"

Output: true

Explanation: We can replace '0' with '1' to get t.

``` |
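A minimal C++ sketch of the usual linear-time approach (not necessarily the solution this repo settled on): deleting from **s** is the same as inserting into **t**, so after making **s** the shorter string, find the first mismatch and compare the remaining suffixes, skipping one character of **t** for an insertion or one character of each string for a replacement. The function name `isOneEditDistance` is chosen here for illustration.

```cpp
#include <cstddef>
#include <string>
#include <utility>

// Returns true if s and t are exactly one insert, delete, or replace apart.
bool isOneEditDistance(std::string s, std::string t) {
    if (s.size() > t.size()) std::swap(s, t);   // now s.size() <= t.size()
    if (t.size() - s.size() > 1) return false;  // would need two or more edits
    for (std::size_t i = 0; i < s.size(); ++i) {
        if (s[i] != t[i]) {
            if (s.size() == t.size())
                return s.substr(i + 1) == t.substr(i + 1);  // replace s[i]
            return s.substr(i) == t.substr(i + 1);          // insert t[i] into s
        }
    }
    // No mismatch in the shared prefix: one edit only if t has exactly one
    // extra trailing character (identical strings are zero edits apart).
    return t.size() == s.size() + 1;
}
```

With the examples above, `("ab", "acb")` and `("1203", "1213")` return true and `("cab", "ad")` returns false. The `substr` copies keep the sketch short; comparing in place with `std::string::compare` would avoid the allocations.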

latex3/latex3 | 678972310 | Title: [doc] xfp: possible typo, "x >? y" should be "x ? y"

Question:

username_0: from https://tex.stackexchange.com/q/558606

Related source lines:

https://github.com/latex3/latex3/blob/8e237df69c46e0625f352e4c9d6b13189008e698/l3packages/xfp/xfp.dtx#L90-L92

From related documentation for floating point expressions in `texdoc interface3`, it seems the

```tex

$x\mathop{\mathtt{>?}}y$

```

in line 91 should be

```tex

$x\mathop{\mathtt{?}}y$

```

which tests whether `x` and `y` are unordered (for example, when one of them is NaN).

Answers:

username_1: Not a bug. These operators are composable.

Status: Issue closed

username_2: We could actually allow `x??y` if people find this clearer than `x!<=>y`. The reason not to allow `x?y` is the ambiguity with the ternary operator `x?y:z`.

username_1: @username_2 Why does `?` exist in the first place? Isn't the `nan` case covered by `x != x`? |
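In IEEE 754 terms, "unordered" is true exactly when at least one operand is NaN, so `x != x` on its own only covers the case where `x` is NaN; an equivalent test has to check both operands. The C++ sketch below illustrates this by analogy, using the standard `std::isunordered` as a stand-in for the `?` comparison, and shows how a composed operator such as `>?` reads as "greater or unordered", i.e. the negation of `<=`. The function names are hypothetical, and this is not how l3fp implements its comparisons.

```cpp
#include <cmath>

// Assumes strict IEEE semantics (e.g. no -ffast-math, which breaks NaN tests).

// x ? y : true iff x and y are unordered, i.e. at least one is NaN.
bool unordered(double x, double y) { return std::isunordered(x, y); }

// The same test via self-comparison: NaN is the only value not equal to
// itself, so BOTH operands must be checked, not just x.
bool unordered_by_self_compare(double x, double y) { return x != x || y != y; }

// x !<=> y : neither less, equal, nor greater, which is again "unordered".
bool not_ordered(double x, double y) { return !(x < y || x == y || x > y); }

// x >? y : greater or unordered, equivalently !(x <= y).
bool greater_or_unordered(double x, double y) {
    return x > y || std::isunordered(x, y);
}
```

For example, `unordered(1.0, NAN)` is true while `1.0 != 1.0` is false, which is why a single self-comparison does not replace the two-operand test.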

code4craft/webmagic | 205817496 | Title: Project dependency Commons-Collections 3.2.1 has a potential deserialization vulnerability

Question:

username_0: 目前webmagic项目中依赖使用的Commons-Collections3.2.1存在严重的反序列化漏洞隐患,建议提高一个小版本到Commons-Collections3.2.2即可

Answers:

username_1: Here is the official JIRA issue:

[https://issues.apache.org/jira/browse/COLLECTIONS-580](https://issues.apache.org/jira/browse/COLLECTIONS-580)

WebMagic itself doesn't use the deserialization API, but with downstream users in mind, we upgraded anyway.

Status: Issue closed

|

CocoaPods/CocoaPods | 381022912 | Title: PLCrashReporter-DynamicFramework can't build with Xcode 10

Question:

username_0: Hi man,

I'm using your PLCrashReporter-DynamicFramework '~> 1.3.0.1' in my iOS app;

But it cannot build with Xcode 10: the segment registers are 16 bit, zeroing out the other 48 bits in case client code depends on it.

So can you update code from [here](https://github.com/AbletonAppDev/plcrashreporter) and supply a new version asap?

Answers:

username_1: Please file an issue with the corresponding repo; this issue tracker is for CocoaPods itself.

https://github.com/plausiblelabs/plcrashreporter

Status: Issue closed

|

agusibrahim/Aplikasi-PPOB-Xamarin | 440604752 | Title: Fix System.FormatException in JsonSerializerInternalReader.EnsureType (Newtonsoft.Json.JsonReader reader, System.Object value, System.Globalization.CultureInfo culture, Newtonsoft.Json.Serialization.JsonContract contract, System.Type targetType)

Question:

username_0: ### Version 1.0.1(1) ###

### Stacktrace ###

### Reason ###

System.FormatException

### Link to App Center ###

* [https://appcenter.ms/users/username_0/apps/Retross-Android/crashes/errors/2625443542u](https://appcenter.ms/users/username_0/apps/Retross-Android/crashes/errors/2625443542u) |

psf/black | 651950220 | Title: please address these concerns

Question:

username_0: I would like @ambv to address the accessibility concerns raised in this screenshot. I am not making any claims about the specific single-quotes issue at hand, but my claim is that you were very disrespectful in not even acknowledging @username_1's statement.

This does not align with principles outlined in the Python [code of conduct](https://www.python.org/psf/conduct/).

@username_1 I am sorry that your voice was not heard.

<img width="802" alt="Screen Shot 2020-07-06 at 7 33 54 PM" src="https://user-images.githubusercontent.com/6046841/86693835-e3385500-bfbf-11ea-84e6-357d8677e5b5.png">

Answers:

username_1: @username_0 Hello there! While I appreciate your concern, I believe as long as `-S, --skip-string-normalization` exists then all is fair. The CoC was most definitely not broken as the situation is just a differing of opinions.

Also, I'm not sure if you're aware, but the use of the word "voice" nowadays is often the prelude to a certain series of events, of which I want no part 😉

Could you please close this?

username_0: Sure, sorry I just wanted to make sure you weren’t left without a solution.

I would still personally encourage @ambv to rethink how he handled that situation.

Status: Issue closed

|

andriirogulin/ARSlidingPanel | 246283108 | Title: How to implement into a tabbarcontroller without hiding or pushing up the tabbar?

Question:

username_0: When I try to implement it, I have two options: overlap the tab bar, or push the tab bar up so it stays above the swipable zone.

I would like the swipable zone to sit just above the tab bar without hiding it.

Answers:

username_1: Hi @username_0, have you solved this?

username_0: Nope, I quit unfortunately

username_1: Okay, thanks. One more thing: do you know any alternative libraries? I'm currently using this one, but it hasn't been updated in a long time. Thanks!

G |

pandas-dev/pandas | 640991515 | Title: 1.0 as boolean dtype cause ValueError in pd.read_csv()

Question:

username_0: ```

Is this intended?

I am using Windows 10, Python 3.7.6 and pandas 1.0.4

Answers:

username_1: ```

Is this intended?

I am using Windows 10, Python 3.7.6 and pandas 1.0.4

username_0: @username_1 I already made a proper issue for this in the pandas repo. Sorry, for opening it in pandas2 and thanks for moving it.

Status: Issue closed

|

epics-base/pvDatabaseCPP | 560289714 | Title: pvRecord destructor throws when clients are still connected

Question:

username_0: Any attempt to destruct a pvRecord instance while there are clients (or listeners) still connected throws a bad_weak_ptr exception.

When an instance of a pvRecord-derived structure is being destructed (e.g. when the last user of a shared_ptr to it goes away), the managed object's (pvRecord) destructor is run. It calls `notifyClients()`, and when it tries to remove an existing client, the call to `client->detach(shared_from_this());` uses the `shared_from_this()` mechanism, which keeps a weak_ptr inside the managed object so it can find and join an existing shared_ptr to that object. In this case, because the destructor runs while the owning shared_ptr is being destructed, that weak_ptr is no longer valid, and `shared_from_this()` throws a bad_weak_ptr exception.

```

terminate called after throwing an instance of 'std::bad_weak_ptr'

what(): bad_weak_ptr

==53188==

==53188== Process terminating with default action of signal 6 (SIGABRT)

==53188== at 0x5270081: raise (raise.c:51)

==53188== by 0x525B534: abort (abort.c:79)

==53188== by 0x50DA692: ??? (in /usr/lib/x86_64-linux-gnu/libstdc++.so.6.0.28)

==53188== by 0x50E6035: ??? (in /usr/lib/x86_64-linux-gnu/libstdc++.so.6.0.28)

==53188== by 0x50E5138: ??? (in /usr/lib/x86_64-linux-gnu/libstdc++.so.6.0.28)

==53188== by 0x50E5A63: __gxx_personality_v0 (in /usr/lib/x86_64-linux-gnu/libstdc++.so.6.0.28)

==53188== by 0x522C7B2: ??? (in /lib/x86_64-linux-gnu/libgcc_s.so.1)

==53188== by 0x522D015: _Unwind_Resume (in /lib/x86_64-linux-gnu/libgcc_s.so.1)

==53188== by 0x48F725A: epics::pvDatabase::PVRecord::notifyClients() (pvRecord.cpp:82)

==53188== by 0x48F72E0: epics::pvDatabase::PVRecord::~PVRecord() (pvRecord.cpp:105)

==53188== by 0x11407D: Record::~Record() (pva-one-pps.cpp:49)

==53188== by 0x114099: Record::~Record() (pva-one-pps.cpp:49)

```

Example application attached. Removing the sleep() before the `pvrecord` shared_ptr goes out of scope will show the behavior.

[pva-test-loop.tar.gz](https://github.com/epics-base/pvDatabaseCPP/files/4159019/pva-test-loop.tar.gz)

Answers:

username_1: Without the reset and additional sleep there were weak pointer exceptions. I think this was caused by a client disconnect together with removeRecord. I hate using the additional sleep, but I see no way to have the provider disconnect all channels and not return until all channels are removed.

username_0: Obviously a misunderstanding.

This issue is not about any past version of pvDatabaseCPP.

It is about an obvious bug in the destructor of pvRecord.

The pvRecord destructor uses `shared_from_this()`, meaning that any pvRecord must be used in the context of a shared_ptr. (Otherwise the destructor will throw a bad_weak_ptr exception when `shared_from_this()` is called.)

However, at the moment when the pvRecord destructor is run in the context of a pvRecord shared_ptr, that shared_ptr is already being destructed, so the call to `shared_from_this()` will throw a bad_weak_ptr exception.

No matter how, the pvRecord destructor will *always* throw a bad_weak_ptr exception if any clients or listeners are still connected to the pvRecord.
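The failure mode can be reproduced without pvDatabaseCPP. The sketch below uses a hypothetical `Record` class (not the library's `PVRecord`) that calls `shared_from_this()` from its destructor. By then the last owning `shared_ptr` has dropped the use count to zero, so the internal weak_ptr has expired and, as specified since C++17, `shared_from_this()` throws `std::bad_weak_ptr`. Destructors are implicitly `noexcept`, so an exception escaping one calls `std::terminate`, which matches the `terminate called after throwing an instance of 'std::bad_weak_ptr'` output above. The sketch catches the exception in order to report it, and contrasts it with calling `shared_from_this()` from an ordinary member function (`notify_clients`, also hypothetical) while an owner still exists, which is the general shape of the fix described later in this thread.

```cpp
#include <memory>

// Hypothetical stand-in for PVRecord; `threw` receives the outcome of the
// shared_from_this() call made in the destructor.
struct Record : std::enable_shared_from_this<Record> {
    bool* threw;
    explicit Record(bool* out) : threw(out) {}

    // Safe: called while at least one shared_ptr still owns *this.
    bool notify_clients() { return shared_from_this().get() == this; }

    ~Record() {
        try {
            // The use count is already zero here, so the internal weak_ptr
            // has expired and shared_from_this() throws std::bad_weak_ptr.
            std::shared_ptr<Record> self = shared_from_this();
            *threw = !self;
        } catch (const std::bad_weak_ptr&) {
            *threw = true;  // caught here; escaping a destructor would terminate
        }
    }
};

// Creates a Record, drops its only owner, and reports whether the
// destructor's shared_from_this() call threw.
bool destructor_shared_from_this_throws() {
    bool threw = false;
    {
        auto rec = std::make_shared<Record>(&threw);
        rec->notify_clients();  // fine: rec still owns the object
    }  // last owner released here, so ~Record() runs
    return threw;
}
```

The design point is that any work needing `shared_from_this()` has to happen before the last owner goes away, e.g. in an explicit remove step, leaving the destructor trivial.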

username_1: The problem was involved with removing a PVRecord.

There are two methods for removing a record: PVDatabase::removeRecord and PVRecord::remove.

PVRecord::remove first calls PVDatabase::removeRecord and then calls all attached clients to notify them that the record is being removed. This worked OK.

PVDatabase has a std::map that has a shared_ptr to each PVRecord.

PVDatabase::removeRecord just removes shared_ptr to the PVRecord from the std::map.

Normally nothing besides PVDatabase has a shared_ptr to PVRecord.

So when it is removed from the map the PVRecord destructor is called.

The destructor for PVRecord calls any attached clients to notify that the record is going away.

But since it was doing this from the PVRecord destructor, the bad_weak_ptr exception occurred.

A new branch issue53 fixes the problem.

What now happens is that PVDatabase::removeRecord optionally calls PVRecord::remove

It does NOT call it if PVDatabase::removeRecord is called by PVRecord::remove.

If PVRecord::remove is called first, it calls PVDatabase::removeRecord, asking not to be called back.

ALL destructors in pvDatabaseCPP are now trivial, i.e. at most they contain a debug statement.

username_1: fixed by merge request #54

Status: Issue closed

|

GoogleContainerTools/skaffold | 803857312 | Title: Create an application Log event endpoint

Question:

username_0: 1. Create application log event endpoint.

2. Create an application log event as per the definition

```protobuf

message ApplicationLogEvent {

string containerName = 1; // container that the log came from

string podName = 2; // pod that the log came from

string message = 3; // contents of the log

}

```

3. All application logs will be available via this endpoint.

Answers:

username_1: starting on this

Status: Issue closed

|

vueuse/vueuse | 952954838 | Title: @ Symbol conflicts the Typescript src import

Question:

username_0: I am using Vue 3 with TypeScript.

As is standard, the `tsconfig.json` file maps the `src` directory to the `@` alias:

```JSON

{

"compilerOptions": {

...

"paths": {

"@/*": [

"src/*"

]

},

}

}

```

The import for VueUse is:

`import { usePackage } from '@vueuse/<package>'`

But doing so gives this error:

**Module not found: Error: Can't resolve '@vueuse/\<package\>' in 'VUE\project\src'**

I believe it's a conflict between the **@** alias and the module's name.

Is there a way to import this library in a TypeScript Vue 3 project? Thanks 🙏

Status: Issue closed

Answers:

username_1: I don't think it's related. There are hundreds of packages that start with `@` scopes. I guess there might be some misconfiguration in your project.

username_2: @username_0

You can check out https://github.com/vuestorefront/shopware-pwa/issues/1541 or

https://github.com/vuejs/vue-cli/issues/1198

That might help you fix your tsconfig for this current issue. |

LoneGazebo/Community-Patch-DLL | 255723941 | Title: AI not spending faith

Question:

username_0: This is Lisbon. The Portuguese don't have their own religion, so they're not spending faith on missionaries. They don't spend their faith on anything else either, though.

(They could go for Great People as well...)

Answers:

username_1: Religion log: are they saving up for something?


username_0: OK, the log says:

```
261, 1966, Portugal, Saving up for a Prophet, as we need to convert Non-Puppet Cities.
261, 1966, Portugal, Saving up for a Prophet, as we need to convert Non-Puppet Cities., Faith: 9607
```

So it seems they do have a holy city but have been overwhelmed by the Indonesians. Maybe a special case. I'm pretty sure they could have bought a prophet for far less, but since they don't have a city with the right religion, they're stuck.

username_1: Yeah it is tough to tell the AI when to give up on an owned religion.


username_0: I took a look at the code but don't understand it... When you buy a prophet, which religion does he adhere to?

username_1: Your `currentreligion`, which is always your founder religion if you've founded one and still own the holy city.

Status: Issue closed

|

ionide/ionide-atom-fsharp | 303499549 | Title: HTMLElement.rootElement is deprecated.

Question:

username_0: The contents of `atom-text-editor` elements are no longer encapsulated within a shadow DOM boundary. Please, stop using `rootElement` and access the editor contents directly instead.

```
HTMLElement.rootElement (C:\Users\MadsK\AppData\Local\atom\app-1.24.0\resources\app.asar\src\text-editor-element.js:23:10)
n.Tag (C:\Users\MadsK\.atom\packages\ionide-fsharp\lib\fsharp.js:9300:16)
Option__Map$Element__HTMLElement_Element__HTMLElement_ (C:\Users\MadsK\.atom\packages\ionide-fsharp\lib\fsharp.js:7058:37)
ViewsHelpers__getElementsByClass$Element_Element_ (C:\Users\MadsK\.atom\packages\ionide-fsharp\lib\fsharp.js:9298:9)
TooltipHandler__initialize$ (C:\Users\MadsK\.atom\packages\ionide-fsharp\lib\fsharp.js:8877:11)
<unknown> (C:\Users\MadsK\.atom\packages\ionide-fsharp\lib\fsharp.js:8803:14)
```

|

microsoft/Windows-Containers | 630223463 | Title: WAC-Create new image - Web applications from WebDeploy

Question:

username_0: To support customers who currently have a web application deployed on an IIS web server, the Create New Image functionality on the containers extension on WAC should allow customers to containerize an application that was exported from IIS with WebDeploy.

Answers:

username_0: This feature is now in preview. Please add bugs as new issues.

Status: Issue closed

|

weavejester/clojure-toolbox.com | 486839698 | Title: Missing project

Question:

username_0: The project link leads to a 404: not only is that project missing, but so is the user under which it was hosted.

Just checked on Clojars and the project is there: https://clojars.org/clj-sql-up

Answers:

username_1: @username_2 I think this issue can be closed, as the reference to `clj-sql-up` was removed in this commit: https://github.com/username_2/clojure-toolbox.com/commit/666019bbfb2583c201ae9b39841ab96a5c317b60

Status: Issue closed

|

accordproject/techdocs | 697940245 | Title: AP ESLint Config

Question:

username_0: # Feature Request 🛍️

Create an AP ESLint configuration to be shared among our repositories.

## Use Case

We should have our own ESLint configuration to help keep code across our ecosystem consistent and approachable.

## Possible Solution

This should run during tests to enforce linting.

## Context

Use [`@clausehq/eslint-config`](https://www.npmjs.com/package/@clausehq/eslint-config) as a starting point reference

Answers:

username_1: We may need different linting rules for the core part of the stack and for the web components.

username_1: Somewhat different issue, in the area of code checks:

- license checks on all projects

username_0: Yes, maybe we could have a core stack config and a UI stack config?

username_1: I'm not super versed in linter configurations, but one difference is the JavaScript module style: the core stack uses CommonJS `require`, while the web components use ES6 `import`.

Also, the standard indentation in the core stack is 4 spaces rather than 2 as in the web components (I wouldn't mind getting rid of that difference, though).

username_2: Hey, I'm interested in this :)

I'm a newcomer here; I was just able to close this simple issue :) #341

I'd like to discuss this issue a bit. I joined Slack today; could you tell me whom I should approach to discuss it there?

username_0: Happy to discuss this more @username_2, I know others can also help: @DianaLease @username_3

username_0: I think we should use the [`@clauseHQ/eslint-config`](https://github.com/clauseHQ/eslint-config) ESLint configuration as a starting point. But I think we should be making our own configuration repository. One for “core” and one for “ui” to start with. So, `@accordproject/eslint-core` and `@accordproject/eslint-ui`. We could make this a monorepo (`accordproject/linting`?), which would make future linting configurations easier to add.

@username_1 as a side note, this makes me think we may want to think about having a more structured naming convention for repositories and projects.
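The core/UI split can be made concrete with a TypeScript sketch in which both configurations come from one shared base. They differ only in the two points raised above: CommonJS versus ES modules, and 4-space versus 2-space indentation. `LintConfig` and `makeConfig` are hypothetical. The field values are illustrative, not an agreed Accord Project ruleset. The resulting objects have the same shape as an `.eslintrc.json` file and could be serialized to one.

```typescript
// Hypothetical subset of an .eslintrc-style config object (only the fields discussed).
interface LintConfig {
  extends: string[];
  parserOptions: { ecmaVersion: number; sourceType: "script" | "module" };
  env: { [name: string]: boolean };
  rules: { [rule: string]: unknown };
}

// Builds one stack's config from a shared base plus the two differences
// raised in this thread: module style and indentation width.
function makeConfig(
  indent: number,
  sourceType: "script" | "module",
  env: { [name: string]: boolean },
  extraExtends: string[],
): LintConfig {
  return {
    extends: ["eslint:recommended"].concat(extraExtends),
    parserOptions: { ecmaVersion: 2021, sourceType: sourceType },
    env: { es6: true, ...env },
    rules: { indent: ["error", indent] },
  };
}

// Core stack: CommonJS `require`, 4-space indentation, Node globals.
const coreConfig = makeConfig(4, "script", { node: true }, []);

// Web components: ES modules, 2-space indentation, browser globals, React rules.
const uiConfig = makeConfig(2, "module", { browser: true }, ["plugin:react/recommended"]);

console.log(JSON.stringify(coreConfig, null, 2));
```

Keeping the differences in one builder is one way to stop the two configs from drifting apart in a shared repository.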

username_2: and then we can discuss it and move on to further monorepo creation and so on...

username_2: Hello @username_0,

I've created a sample `.eslintrc.json` file [here](https://gist.github.com/username_2/292f886a670af2e88dfebce822fba398) so that we can discuss more rules to add to it.

For convenience, I'm pasting the file below:

```json
{
  "extends": [
    "@clausehq/eslint-config",
    "eslint:recommended",
    "plugin:import/errors",
    "plugin:react/recommended",
    "plugin:jsx-a11y/recommended"
  ],
  "rules": {
    "react/prop-types": 0,
    "indent": ["error", 2],
    "linebreak-style": 1,
    "require-jsdoc": 0
  },
  "parser": "babel-eslint",
  "plugins": [
    "react",
    "import",
    "jsx-a11y"
  ],
  "parserOptions": {
    "ecmaVersion": 2021,
    "sourceType": "module",
    "ecmaFeatures": {
      "jsx": true
    }
  },
  "env": {
    "es6": true,
    "browser": true,
    "node": true
  },
  "settings": {
    "import/resolver": {
      "node": {
        "paths": ["src"],
        "extensions": [".js", ".jsx", ".ts", ".tsx"]
      }
    },
    "react": {
      "version": "detect"
    }
  }
}
```

1. The import plugin helps ESLint catch common bugs around imports, exports, and modules in general.

2. jsx-a11y catches many accessibility bugs that can accidentally arise when using React, like an `img` tag without an `alt` attribute.

3. react covers mostly common React things, like making sure you import React anywhere you use React.

`babel-eslint` lets ESLint use the same transpiling library, Babel, that Parcel uses under the hood. Without it, ESLint can't understand JSX.

4. eslint-plugin-react now requires you to tell it which version of React you're using. Setting `"version": "detect"` tells it to figure that out from the installed package.

5. Since you suggested [@clausehq/eslint-config](https://github.com/clauseHQ/eslint-config) as a starting point, I've extended it as well.

6. I've added the 2-space indentation rule suggested by @username_1; we can add other rules for the core stack in a different file once the monorepo is created.

@DianaLease & @username_3, I'd like to hear your views and opinions on this, since you contributed to [@clausehq/eslint-config](https://github.com/clauseHQ/eslint-config).

username_3: Thanks @username_2 for your efforts here.

@username_0 why don't we just adopt an existing linting ruleset? There are plenty to choose from, and we'd avoid the overhead of maintaining our own.

username_0: I'm going off the assumption that @username_1 is correct in that we [need two separate linters](https://github.com/accordproject/techdocs/issues/308#issuecomment-690526639).

username_2: Okay @username_0, I didn't make two files earlier because I was waiting for your views on this [file](https://gist.github.com/username_2/292f886a670af2e88dfebce822fba398). I'll make the other file soon :)

username_0: @username_2 I think we'll want more input from @username_1 on this before moving forward.

username_2: Yeah sure! Waiting for your reply @username_1 😅

username_2: @username_0, any update on this?

I've created two sample files:

[Core stack](https://gist.github.com/username_2/864a79cea1a078616941f69e11735b31)

[Web Components](https://gist.github.com/username_2/292f886a670af2e88dfebce822fba398)

Looking forward to having your input on this...

username_2: Quoting @username_1:

> Also the standard indentation in the core stack is 4 whitespace rather than 2 in the web components (I wouldn't mind getting rid of that difference though).

As suggested by Jerome, I've added these rules to those files. You can also find some information about the various plugins I added in this [comment](https://github.com/accordproject/techdocs/issues/308#issuecomment-787502875).

I look forward to your suggestions on any specific rules I missed; then we can move on to creating Accord's ESLint config repo and the npm publishing work.

username_3: Can't we take an off-the-shelf linter for each pattern? I still don't understand the motivation for creating one or more custom house styles.

username_4: Hey, can I work on this issue?

username_0: @username_4 I suggest following @username_3's suggestion and adopting an existing linter. |

sveltejs/sapper | 671678879 | Title: Add ability to set response attributes on a per-route basis

Question:

username_0: There's a desire to set certain response attributes on a per-route basis. E.g. the `Cache-Control` header, other headers, response type, etc.

A few ideas:

* Handle it with middleware

* Export some setting in a page or layout or call some function there

* Do it in a config file

* Expose the router and allow configuring it
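The first idea, handling it with middleware, can be sketched in TypeScript as a Connect/polka-style function. It looks up headers by path prefix before the request reaches the page handler. The `routeHeaders` table, the first-prefix-wins rule, and `perRouteHeaders` are hypothetical, not a Sapper API.

```typescript
// Minimal request/response shapes so the sketch runs without a server.
// Node's http.IncomingMessage and http.ServerResponse satisfy them.
interface Req {
  url?: string;
}
interface Res {
  setHeader(name: string, value: string): void;
}
type Next = () => void;
type RouteHeaders = Array<[string, { [name: string]: string }]>;

// Hypothetical table: the first path prefix that matches supplies the headers.
const routeHeaders: RouteHeaders = [
  ["/blog/", { "Cache-Control": "public, max-age=3600" }],
  ["/account", { "Cache-Control": "no-store" }],
];

// Connect/polka-style middleware that sets per-route headers, then hands off.
function perRouteHeaders(table: RouteHeaders) {
  return (req: Req, res: Res, next: Next): void => {
    const path = (req.url || "/").split("?")[0];
    for (const [prefix, headers] of table) {
      if (path.indexOf(prefix) === 0) {
        for (const name of Object.keys(headers)) {
          const value = headers[name];
          if (value !== undefined) res.setHeader(name, value);
        }
        break;
      }
    }
    next(); // always continue the chain, matched or not
  };
}
```

In a Sapper app this would be registered in `src/server.js` ahead of `sapper.middleware()`. Whether a header set this way survives depends on whether Sapper's own page handler sets the same header afterwards.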

Answers: