| repo_name | issue_id | text |
|---|---|---|

waruqi/tboox.github.io | 496790979 | Title: Uses xmake to build c++20 modules

Question:

username_0: https://tboox.org/2019/09/22/xmake-c++20-modules/

C++ modules have been officially included in the C++20 draft, and MSVC and Clang have largely implemented support for the Modules TS. As C++20’s footsteps ... |

linode/linodego | 686930424 | Title: Consider refactoring WaitForEventFinished "seen" filter optimization

Question:

username_0: ### General:

When using the [Linode Terraform Provider](https://github.com/linode/terraform-provider-linode), I would intermittently get boot and disk errors while specifying an explicit disk configuration for my instance.

On the Terraform side, this manifests in errors like:

`Error booting Linode instance xxxxxxxx: [002] Get "https://api.linode.com/v4/account/events?page=1": context deadline exceeded`

`Error waiting for Linode instance xxxxxxxx disk: [002] Get "https://api.linode.com/v4/account/events?page=1": context deadline exceeded`

Digging through this code a bit I came across the comment added in [Pull Request #76](https://github.com/linode/linodego/pull/76/files#diff-18dc188a67872bccb91f231d01326eecR174)

```go
// With potentially 1000+ events coming back, we should filter on something
// Warning: This optimization has the potential to break if users are clearing
// events before we see them.
"seen": false,
```

While Terraform was running, I was actively working in the Linode Manager and clicking the notification bell icon to follow the progress. After finding that comment in the code, I realized that reading the notification events was what caused the errors. If I run Terraform without clicking the notification bell icon, the instance is created successfully.

I am not sure what options there might be for refactoring the "seen" filter optimization, but I wanted to at least open an issue in case others run into the same errors I was seeing.
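To make the failure mode concrete, here is a toy Python model of the race. It is not the linodego API: the `Event` record and both helpers are hypothetical. A waiter that only considers unseen events loses its event as soon as another client (such as the Manager's bell icon) marks it seen, while a filter that ignores `seen` still finds it. The `min_id` cutoff in the second helper is an assumed alternative for illustration, not necessarily what the eventual fix does.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    """Hypothetical, simplified stand-in for a Linode account event."""
    id: int
    entity_id: int
    action: str
    status: str
    seen: bool


def find_unseen(events: List[Event], entity_id: int, action: str) -> List[Event]:
    """Models the old optimization: only events with seen == False are considered."""
    return [e for e in events
            if not e.seen and e.entity_id == entity_id and e.action == action]


def find_since(events: List[Event], entity_id: int, action: str, min_id: int) -> List[Event]:
    """Hypothetical alternative: ignore `seen` and skip events older than a known cutoff."""
    return [e for e in events
            if e.id >= min_id and e.entity_id == entity_id and e.action == action]


# A boot event for instance 123 that appears after the waiter recorded min_id=10.
events = [Event(id=11, entity_id=123, action="linode_boot", status="finished", seen=False)]
print(len(find_unseen(events, 123, "linode_boot")))  # 1: the waiter can see it

# The user opens the notification bell, which marks the event as seen.
events[0].seen = True
print(len(find_unseen(events, 123, "linode_boot")))      # 0: never found, so the wait times out
print(len(find_since(events, 123, "linode_boot", 10)))  # 1: still found without the seen filter
```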

### Expected Behavior:

The Linode Terraform Provider (linodego) can be used while also using the Linode Manager and viewing notification events (the bell icon).

### Actual Behavior:

Viewing notification events in the Linode Manager (the bell icon) can cause the Linode Terraform Provider (linodego) to error while waiting for events.

### Steps to Reproduce the Problem:

1. Start an API call that utilizes `WaitForEventFinished`

1. View notification events in the Linode Manager (the bell icon)

1. API call will timeout

### Environment Specifications

macOS: 10.14.6

Terraform: 0.13.0

Linode Terraform Provider: 1.12.4

Status: Issue closed

Answers:

username_1: Closing because the `seen` optimization has been removed in https://github.com/linode/linodego/pull/215.

username_0: Thank you! 🎉 |

mozilla/network-pulse | 199561357 | Title: Pulse v2 launch meta

Question:

username_0: Hi team. We are on the final stretch to launch our new build. We will need this ready for the network launch. Let's aim for Jan 31 to production, so we have time to QA and squash bugs. The work seems manageable, but there's risk from conflicting priorities.

Meta tasks below. Tried to order them logically. I'm on mobile. Can someone link relevant issues?

1. Finish tests

1. Prep and import data

1. Connect frontend to backend. How many dev days do we expect?

1. Build Form - design spec done, but there is error handling to wrangle. Expect this to take non-trivial time.

1. Add quality analytics

1. Favs - persist for users with auth. This feature shouldn't block launch. We can bump.

Anything missing from this list?

Later:

1. Revisit back button

Answers:

username_0: CC @kristinashu @mmmavis @pomax @username_1 =:-)

username_1: Edited original comment with links

username_0: @pomax – we all chatted on Friday. Anything on your plate missing from this list?

username_2: @username_0 I don't think so, I'm working with Alan on the testing and thinking out loud with him about the favouriting mechanism, and don't see anything missing from the above list.

username_0: Let's all peek at this meta ticket as we prep for work next week before people travel and shift to other projects. @kristinashu @mmmavis @username_2 @username_1

Any big revisions, concerns, new items we've identified as mvp work?

username_1: @username_0 We reviewed the milestone today with @simonwex, so those are up to date

Status: Issue closed

username_0: Closing this. Final tasks are filed and tracking just fine without this overhead. |

phenology/hsr-phenological-modelling | 242314326 | Title: Ingest MODIS data

Question:

username_0: MCD12Q2. Let's try pyMODIS. Bounding box CONUS

Other RS data (AVHRR, etc) with longer time series are in the making...

Answers:

username_0: Before I forget, we should mask out water, and other less relevant classes (snow, urban) before doing our SVD analysis. We will create this mask based on [MCD12Q1](https://lpdaac.usgs.gov/dataset_discovery/modis/modis_products_table/mcd12q1)
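A minimal sketch of that masking step in pure Python, assuming the MCD12Q1 land-cover layer has already been read into a 2-D grid of class codes (for example via pyMODIS plus a raster reader, not shown). The class codes are an assumption: they follow the IGBP scheme (`LC_Type1`) of Collection 6, where 13 is urban, 15 is permanent snow and ice, and 17 is water; check them against the product user guide for the collection you actually download. `greenup_doy` is a hypothetical phenology grid.

```python
from typing import AbstractSet, List

# Assumed IGBP class codes for MCD12Q1 Collection 6 (LC_Type1); verify before use.
URBAN = 13
SNOW_ICE = 15
WATER = 17
EXCLUDED_CLASSES = frozenset({URBAN, SNOW_ICE, WATER})


def build_mask(land_cover: List[List[int]],
               excluded: AbstractSet[int] = EXCLUDED_CLASSES) -> List[List[bool]]:
    """Return True where a pixel should be kept for the SVD analysis."""
    return [[code not in excluded for code in row] for row in land_cover]


def apply_mask(values: List[List[float]], mask: List[List[bool]]) -> List[float]:
    """Flatten the phenology grid, keeping only the pixels the mask allows."""
    return [v for v_row, m_row in zip(values, mask)
            for v, keep in zip(v_row, m_row) if keep]


land_cover = [[4, 17, 13],
              [10, 15, 12]]
greenup_doy = [[120.0, 0.0, 95.0],
               [110.0, 0.0, 130.0]]
print(apply_mask(greenup_doy, build_mask(land_cover)))  # [120.0, 110.0, 130.0]
```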

username_1: The data was loaded successfully, and documentation on how to load it was added.

https://github.com/phenology/hsr-phenological-modelling/tree/master/modis

Status: Issue closed

|

firecracker-microvm/firecracker | 386944358 | Title: Create a Tool to Check Prod Host Setup

Question:

username_0: Create a tool that automatically checks a Linux host for all the recommended [prod configuration](https://github.com/firecracker-microvm/firecracker/blob/master/docs/prod-host-setup.md).

Answers:

username_1: I'd be happy to tackle this. I imagine it would be best implemented as a script, similar to `devtool`?

username_2: Yes, ideally this would go in a separate script `prodtool` or a more inspired name. But please use a common scripting language to keep life simple 😄

Right now this script could handle two commands:

- _can I run Firecracker on this system?_ - should check that all prerequisites for running Firecracker are satisfied.

- _Is my system production worthy?_ - should check and report all the steps needed on the host system to provide safety and efficiency when running Firecracker.
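Later in this thread bash was chosen for consistency with `devtool`, but the shape of the first check is easy to sketch. The Python below is a hypothetical illustration of one prerequisite only, read/write access to `/dev/kvm`, which Firecracker needs in order to create microVMs; a real tool would cover the full list in the prod host setup document. The function names are illustrative, not part of any existing tool.

```python
import os
from typing import List


def check_kvm_access(path: str = "/dev/kvm") -> List[str]:
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    if not os.path.exists(path):
        problems.append(f"{path} does not exist; is KVM enabled on this host?")
    elif not os.access(path, os.R_OK | os.W_OK):
        problems.append(f"the current user lacks read/write access to {path}")
    return problems


def report(problems: List[str]) -> int:
    """Print a human-readable summary and return a shell-style exit status."""
    for problem in problems:
        print(f"FAIL: {problem}")
    if not problems:
        print("OK: all checks passed")
    return 1 if problems else 0
```

From a script entry point, `raise SystemExit(report(check_kvm_access()))` gives a non-zero exit status on failure, which keeps the check usable from CI or from other scripts.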

These commands can be added independently and we can also iterate.

So @username_1, if you can tackle any of them, we're all for it! 🎉

username_1: @username_2 Sounds good. I will just use bash to maintain consistency with devtool.

username_0: @username_1 we're wondering if you're still looking at this.

username_1: @username_0 My apologies — I was a bit busy with the holidays, but yes, I am still interested in working on this.

Status: Issue closed

username_3: Addressed by #1054 |

SVGKit/SVGKit | 945287762 | Title: SVGKitSwift SPM build issue (when archiving only)

Question:

username_0: I'm using Xcode 12.5.1 and SwiftUI

I'm able to use SVGKit just fine. The issue only occurs when archiving.

I tried using a fresh test project and always got this issue:

<img width="379" alt="Screenshot 2021-07-15 at 12 32 54" src="https://user-images.githubusercontent.com/77971847/125781583-bf18d49a-86bd-4594-aa6b-c831407f9679.png">

Could you please help me? Thank you.

Answers:

username_2: I think there's a fundamental issue here, whereby having the SVGKitSwift dependency in the package seems to indicate to the compiler that it must be built. The only way I was able to get this to archive was to change the package spec to `.v13` - I'm also on 12.5.1

This obviously bumps the minimum version a lot higher, when it may not be necessary except for that one product (SVGKitSwift).

Some thoughts:

- Maybe this is just an issue in latest Xcode? I haven't tested it on others.

- Change SVGKitSwift to SVGKitSwiftUI to avoid confusion (the name tripped me up on import, though made more sense after looking at Package.swift and the sources)

- Perhaps move SVGKitSwiftUI to its own package to avoid backward-compatibility issues if `.v9` is necessary.

I'll file a PR for convenience. Though it's a quick fix, I'm not sure it's the optimal solution, depending on what route @username_1 wants to take with this...

username_0: Thank you! @username_2

Status: Issue closed

username_3: I don't understand how to fix it. I have the same issue, with archiving only.

@[username_0](https://github.com/username_0) how did you solve it? |

ContainerSolutions/minimesos | 118555024 | Title: exception is handled wrongly

Question:

username_0: Please handle the exception in `MesosClusterTest` correctly. It should either break the test (which seems to be the right choice here) or be ignored with an explanation in comments.

```java
@Test
public void dockerExposeResourcesPorts() {
    DockerClient docker = CONFIG.dockerClient;
    List<MesosSlave> containers = Arrays.asList(CLUSTER.getSlaves());
    ArrayList<Integer> ports = new ArrayList<>();
    for (MesosSlave container : containers) {
        try {
            ports = MesosSlave.parsePortsFromResource(((MesosSlaveExtended) container).getResources());
        } catch (Exception e) {
            // TODO: no printing to System.err. Either handle or throw
            e.printStackTrace();
        }
        InspectContainerResponse response = docker.inspectContainerCmd(container.getContainerId()).exec();
        Map bindings = response.getNetworkSettings().getPorts().getBindings();
        for (Integer port : ports) {
            Assert.assertTrue(bindings.containsKey(new ExposedPort(port)));
        }
    }
}
```

Status: Issue closed |

bitstadium/HockeySDK-Windows | 281835574 | Title: Error in package manager console

Question:

username_0: Every time I load my application in Visual Studio, I get the following error in the Package Manager Console:

```
You cannot call a method on a null-valued expression.
At C:\Code\CoBRAGit2\ClientApplications\DroneLocApp\packages\HockeySDK.WPF.4.1.6\tools\init.ps1:3 char:1
+ $project.DTE.ItemOperations.Navigate('https://github.com/bitstadium/H ...
+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+ CategoryInfo : InvalidOperation: (:) [], RuntimeException
+ FullyQualifiedErrorId : InvokeMethodOnNull
```

There are no actual errors in my application's error list, so I can't tell what effect, if any, this has. Is there any way of clearing this issue?

Answers:

username_1: Hey @username_0,

thx for reporting this. We'll be looking at this.

Best,

Benjamin

username_2: Hi @username_0

Just FYI, the init.ps1 script runs the first time a package is installed in a solution, and it also runs every time the solution is opened. The problem is that there is no way to tell inside the script whether we are running during installation or a simple open. I think the best we can do is check for object existence, which PR #130 does. Thanks!

Best,

Murat |

kasper/phoenix | 230954737 | Title: Feature for make osx El Capitan mouse larger temporarily

Question:

username_0: https://support.apple.com/kb/PH21543?locale=en_US

Sometimes, when I move the mouse to a position, temporarily making the pointer larger would be useful.

So Mouse needs a function like Mouse.showUp().

Answers:

username_0: I think I should try mocking a mouse shake first.

username_1: Yes, I’m not sure if there’s an API to achieve this directly. Of course you can just mock the shake feature by rapidly changing/shaking the position of the pointer and see if that causes the effect.

username_0: I mocked the mouse shake, but it doesn't work; maybe I did not figure out the trigger condition.

Instead, I used a modal to solve my problem.

username_2: It seems `Mouse.move` simply moves the mouse pointer, but does not seem to *trigger* a mouse move in macOS. For example, if I'm currently dragging an item and use Phoenix to move the pointer somewhere else, the dragged item will not move until I move the physical mouse around.

username_1: Yes, @username_2 is likely right on point.

username_1: Seems like there is a private API to change the cursor size: `CGSGetCursorScale` and `CGSSetCursorScale`. 🙈 |

MicrosoftDocs/office-docs-powershell | 390242175 | Title: Clarity for the -Alias parameter in New-Team cmdlet

Question:

username_0: When running **Get-Help New-Team -Full** in PowerShell, the output for the `-Alias` parameter differs from the docs.

The output in PowerShell says "*Same as displayName without any spaces. Team Alias Characters Limit - 64*". But the docs say it only has to be a unique name (re O365 Group in the background).

I had a look at #1796. Does that mean the alias only has to be unique, and does NOT have to be the DisplayName without spaces?

Can we get some clarity?

Thanks

---

#### Document details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 549c7d86-87b3-5c82-d2b3-a0cc0b4faa11

* Version Independent ID: b3377099-854a-50d0-0fe1-bbdd7ddec332

* Content: [New-Team (teams)](https://docs.microsoft.com/en-gb/powershell/module/teams/New-Team?view=teams-ps)

* Content Source: [teams/teams-ps/teams/New-Team.md](https://github.com/MicrosoftDocs/office-docs-powershell/blob/master/teams/teams-ps/teams/New-Team.md)

* Service: **teams-powershell**

* GitHub Login: @kenwith

* Microsoft Alias: **kenwith**

Answers:

username_1: Hi @username_0 , thank you for your contribution.

I think someone updated the description of this parameter:

"The Alias parameter specifies the alias for the associated Office 365 Group. This value will be used for the mail enabled object and will be used as PrimarySmtpAddress for this Office 365 Group. The value of the Alias parameter has to be unique across your tenant.

For more details about the naming conventions see here: New-UnifiedGroup, Parameter: -Alias."

Conclusion: Alias has to be unique across the tenant, and it can differ from DisplayName.
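The two rules in this thread can be combined into a small pre-flight check before calling `New-Team`. This Python sketch is hypothetical and only encodes what is stated here: the default suggested by `Get-Help` (the display name without spaces, at most 64 characters) and the documented requirement that the alias be unique across the tenant. It does not model any other naming restrictions that `New-UnifiedGroup` may apply, the case-insensitive comparison is an assumption, and `existing` stands in for whatever alias list you pull from your tenant.

```python
from typing import Iterable, List

MAX_ALIAS_LENGTH = 64  # "Team Alias Characters Limit - 64", per Get-Help New-Team


def default_alias(display_name: str) -> str:
    """Get-Help's suggested default: the display name with spaces removed."""
    return display_name.replace(" ", "")


def alias_problems(alias: str, existing_aliases: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means the alias looks usable."""
    problems = []
    if len(alias) > MAX_ALIAS_LENGTH:
        problems.append(f"alias is longer than {MAX_ALIAS_LENGTH} characters")
    # Assumption: aliases are compared case-insensitively.
    if alias.lower() in {a.lower() for a in existing_aliases}:
        problems.append("alias is already used in this tenant")
    return problems


existing = ["HR", "projectfalcon"]
print(alias_problems(default_alias("Project Falcon"), existing))  # ['alias is already used in this tenant']
print(alias_problems("FalconTeam", existing))                      # []
```

The second call shows the conclusion above: a custom alias that differs from the display name is fine as long as it is unique.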

username_2: @username_1 Thank you very much for the contribution and sharing this explanation. @veronicabeek

I hope this comment is helpful for you. Thanks for taking the time to open the issue. We appreciate it and encourage you to do the same in the future.

Status: Issue closed

|

weirdyang/sgsew-frontend | 963508645 | Title: Restrict product type selection to hardware and services

Question:

username_0: see username_0/sgsew-backend#2

Status: Issue closed

Answers:

username_0: f6e86f61314b486aa9abd4600053dc35893cb11e

2451ab8b4120e9cfa3a91568fb682201a9af451c

f29d6da0244eaef1854542e5ac433929b746990e

671ea0a9376d5fe2642c8b593e0677cdc6bb6ced

dcfc8727fc5232219919bea478f3b42a5a129ee5 |

Clever/wag | 834016797 | Title: None

Question:

username_0: I can't figure out what went wrong here. Does `dependabot-preview` not understand that the import path `github.com/Clever/wag/v6/clients/go` is a package named `goclient`? Or does it not understand the module suffix?

I'm going to close this ticket and see if dependabot reopens it?

Status: Issue closed |

dotnet/core | 250404686 | Title: Publish .NET Core SDK to Homebrew

Answers:

username_1: I don't have a full understanding of what's involved; but as I understand Homebrew, couldn't anyone create a homebrew installer for .NET Core?

username_2: +1 for Homebrew; also FYI there's a very active discussion already at dotnet/cli#533.

I'm far from an expert on Homebrew, but I have made [a number of contributions](https://github.com/Homebrew/homebrew-core/pulls?utf8=%E2%9C%93&q=author%3Ausername_2) and would be glad to help write one for .NET Core 😄

I work at Microsoft, so feel free to ping me here or offline if there's anything I can do to help! 🙂

username_3: I'm going to close this in favor of continuing the conversation in the cli repo, which is the correct place. https://github.com/dotnet/cli/issues/533

Status: Issue closed

|

TechEmpower/FrameworkBenchmarks | 431219169 | Title: Enable accelerated networking in Azure

Question:

username_0: See discussion in https://github.com/TechEmpower/FrameworkBenchmarks/issues/4281#issuecomment-481319521 for context.

Apparently, if you create new VMs in Azure with our instance type and recommended OS now, accelerated networking is enabled by default. Also, Microsoft wanted us to use accelerated networking in the past, but we couldn't because of the OS we used at the time. For both of those reasons, it makes sense to enable accelerated networking in TFB's Azure environment moving forward.

In the past few rounds we used an Azure environment that was provisioned "manually" through the Azure portal UI. When we created that environment, TFB still required Ubuntu 14.04, and accelerated networking was not supported there. We may have later upgraded the OS in place but not re-provisioned the instances, so we never enabled accelerated networking.

We retired that Azure environment and are creating a new one with Terraform. This is still a work in progress. Enabling accelerated networking there should be a one-line change.

The Terraform setting to enable:

https://www.terraform.io/docs/providers/azurerm/r/network_interface.html#enable_accelerated_networking

The area of our Terraform script to change:

https://github.com/jsongte/tfb-azure-terraform/blob/f88cec19a3a8c2f6a4f1858b67af5ccb3b0e5864/terraform/tfb-app.tf#L27-L40

We expect this change to noticeably affect performance so we should call this change out in a blog post.

Answers:

username_0: This is done. We applied this change in our [Terraform scripts](https://github.com/TechEmpower/tfb-azure-terraform/blob/9c25325de0f3518f81ae13ac8abdef2e19207619/terraform/tfb-app.tf#L32) before capturing Round 18, and then we called it out in the [Round 18 blog post](https://www.techempower.com/blog/2019/07/09/framework-benchmarks-round-18/).

Status: Issue closed

|

Metatavu/pakkasmarja-berries | 295071348 | Title: Contact transfer support

Question:

username_0: As a user, I would like a feature for synchronizing contacts from SAP into Keycloak. As part of this story, a job queue is created.

Answers:

username_1: [Synchronizing contact information from SAP to Keycloak (transfer file)](https://trello.com/c/KLLGDymW/1-yhteystietojen-synkronointi-sapista-keycloakiin-siirtotiedosto)

Status: Issue closed

|

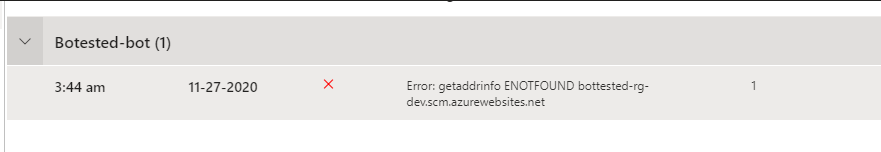

microsoft/BotFramework-Composer | 752101416 | Title: Error: getaddrinfo ENOTFOUND bottested-rg-dev.scm.azurewebsites.net

Question:

username_0: I have manually created the resources needed for my bot to run and built a publishing profile.

My resource group name is "bottested-rg", and my App Service name is "bottested-webapp", so my Kudu console or publishing endpoint should be bottested-webapp.scm.azurewebsites.net.

Now, when I publish my Composer bot to Azure, it fails with the error in the title.

**ISSUE:**

It appends the "environment" value to the resource group name (the "name" value), producing "bottested-rg-dev", and then looks for the "scm" host bottested-rg-dev.scm.azurewebsites.net, which obviously does not exist.

How can I make it publish to "bottested-webapp.scm.azurewebsites.net"?

**Note:** An Azure App Service name must be globally unique, so I could not use "bottested-rg". Instead, I created an App Service named "bottested-webapp".

**Things I tried:**

I changed the environment value to "environment": "webapp", but it then looks for "bottested-rg-webapp.scm.azurewebsites.net", which does not exist, and gives the same error, as expected.

So the real question is: how do I stop this environment value from being appended to the RG or bot name?

Or, how can I make it publish to an App Service that I created manually?

Answers:

username_1: @username_0 you can add another field to your publish profile:

`{ "hostname": "bottested-webapp" }`

The logic is:

If `hostname` is null or empty, we use `name-environment`; otherwise we just use `hostname`.

BTW, if you use LUIS services, use `luisResource` to override `name-environment-luis`.
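The fallback rule described above can be restated as a small Python sketch. This is not Composer's actual publishing code; the function names are hypothetical, and the `.scm.azurewebsites.net` suffix is taken from the Kudu hostnames quoted earlier in this issue.

```python
from typing import Mapping


def publish_hostname(profile: Mapping[str, str]) -> str:
    """Fall back to "<name>-<environment>" only when no hostname is given."""
    hostname = profile.get("hostname")
    if not hostname:  # covers both a missing key and an empty string
        hostname = f"{profile['name']}-{profile['environment']}"
    return hostname


def kudu_endpoint(profile: Mapping[str, str]) -> str:
    return f"{publish_hostname(profile)}.scm.azurewebsites.net"


def luis_resource_name(profile: Mapping[str, str]) -> str:
    """Same pattern for LUIS: an explicit luisResource wins over "<name>-<environment>-luis"."""
    return profile.get("luisResource") or f"{profile['name']}-{profile['environment']}-luis"


profile = {"name": "bottested-rg", "environment": "dev"}
print(kudu_endpoint(profile))  # bottested-rg-dev.scm.azurewebsites.net

profile["hostname"] = "bottested-webapp"
print(kudu_endpoint(profile))  # bottested-webapp.scm.azurewebsites.net
```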

username_0: @username_1 Thank you, this could resolve the issue. I have not tested it yet, but your explanation helps and makes sense.

username_2: @username_0 I'll close this as a how-to question. Please create a new issue if you are seeing errors

Status: Issue closed

|

cmen/CMENGoogleChartsBundle | 835111630 | Title: Getting data from a Timeline row

Question:

username_0: Is there a way to access the data in a Timeline row with just the provided Twig functions?

On select I need to get the name of the bar, for example "M210001 -"

So far I haven't found a way to do this. |

akarnokd/rng-76 | 1011174890 | Title: Enclave Plasma with Flamer mod wrong ammo capacity

Question:

username_0: From reddit [u/Andamarokk](https://www.reddit.com/user/Andamarokk)

Hey, I wasn't sure where to reach out to you and saw you're active on Reddit. I've got a question regarding the FO76 damage calc, if you're still working on that. I was using it earlier today and noticed the (Enclave) Plasma flamer doesn't get its clip size updated to 300 when putting on the Flamer barrel. This leads to Quad being better than both AA and Bloodied (which obviously isn't true). Here are the profiles I used: click me!

Thanks for your time!

Status: Issue closed

Answers:

username_0: Fixed via https://github.com/username_0/rng-76/commit/4f518d6e33f59105ccf5d0cad25a0feb239751e6 . |

naser44/1 | 113201742 | Title: Zarqa al-Yamama: a woman who could spot a white hair in milk, see a rider three days' journey away, and warn her people of armies...

Question:

username_0: <a href="http://ift.tt/1kDYEts">Zarqa al-Yamama: a woman who could spot a white hair in milk, see a rider three days' journey away, and warn her people of armies...</a> |

kubernetes/website | 768103027 | Title: Generate feature gates list from data

Question:

username_0: **This is a Feature Request**

<!-- Please only use this template for submitting feature/enhancement requests -->

<!-- See https://kubernetes.io/docs/contribute/start/ for guidance on writing an actionable issue description. -->

**What would you like to be added**

Track a list of feature gates as data (e.g., YAML), and generate https://kubernetes.io/docs/reference/command-line-tools-reference/feature-gates/ from that data.

**Why is this needed**

- This improvement lets people update the feature state for a gate with a one-line change, e.g.:

```diff
--- feature_gates.yaml 2020-01-21 15:24:34.907668274 +0000
+++ feature_gates.yaml 2020-03-15 10:39:43.852042785 +0000
@@ -16,6 +16,8 @@
 - name: AllowExtTrafficLocalEndpoints
   betaFromVersion: v1.4
   deprecatedFromVersion: v1.8
+- name: AnyVolumeDataSource
+  alphaFromVersion: v1.18
 - name: APIListChunking
   alphaFromVersion: v1.8
   betaFromVersion: v1.9
```

- This change enables future work to:
  - autogenerate the input data
  - improve the `feature-state` shortcode

- Combining this with other data could let the Kubernetes project have a single view of new features and deprecations for a release, generating that view automatically.

**Comments**

/kind feature

Answers:

username_0: To clarify something: we need to localize the descriptions for each feature gate, so those descriptions would still be part of the content. The good thing about having this list as data is that we can lint to make sure that each added feature gate has a matching description.
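The data flow proposed here (gate data in, generated page out, plus a lint that every gate has a description) can be sketched in Python. On the real site this would be a Hugo template or shortcode; the Python, the table columns, and the function names below are hypothetical illustrations only. The records mirror the YAML fields from the diff in the issue description, written as dicts to avoid a YAML dependency.

```python
from typing import Dict, List

# Hypothetical records mirroring the YAML fields from the diff in this issue.
FEATURE_GATES: List[Dict[str, str]] = [
    {"name": "AllowExtTrafficLocalEndpoints", "betaFromVersion": "v1.4", "deprecatedFromVersion": "v1.8"},
    {"name": "AnyVolumeDataSource", "alphaFromVersion": "v1.18"},
    {"name": "APIListChunking", "alphaFromVersion": "v1.8", "betaFromVersion": "v1.9"},
]

STAGES = [("alphaFromVersion", "Alpha"), ("betaFromVersion", "Beta"),
          ("deprecatedFromVersion", "Deprecated")]


def render_table(gates: List[Dict[str, str]]) -> str:
    """Emit one Markdown row per (gate, stage) pair, gates sorted case-insensitively."""
    rows = ["| Feature | Stage | Since |", "|---|---|---|"]
    for gate in sorted(gates, key=lambda g: g["name"].lower()):
        for field, label in STAGES:
            if field in gate:
                rows.append(f"| `{gate['name']}` | {label} | {gate[field]} |")
    return "\n".join(rows)


def missing_descriptions(gates: List[Dict[str, str]], descriptions: Dict[str, str]) -> List[str]:
    """Lint step: every gate in the data needs a (localizable) description in the content."""
    return sorted(g["name"] for g in gates if g["name"] not in descriptions)


print(render_table(FEATURE_GATES))
print(missing_descriptions(FEATURE_GATES, {"APIListChunking": "...", "AnyVolumeDataSource": "..."}))
# ['AllowExtTrafficLocalEndpoints']
```

Keeping the descriptions out of the data file, as suggested above, leaves them in the localized content while the lint still catches a gate that was added without one.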

username_1: I love this idea! On compiling major themes for 1.20, I had to go back and forth between k/k PR and k/website PR to see if the changes are in sync. Is this a step towards generating [from the actual code itself](https://github.com/kubernetes/kubernetes/blob/master/pkg/features/kube_features.go)?

username_0: /remove-lifecycle rotten

username_2: We missed more than a few of these in the past; I'd like to make this happen.

/assign

i hope to be able to submit a PR soonish.

username_0: I've updated the PR I opened about this. I've left it as draft so that we can discuss the approach to take.

username_0: I also wrote up a [rationale](https://github.com/kubernetes/website/pull/28036#issuecomment-862284989).

username_0: (yes, it is)

username_0: https://github.com/kubernetes/website/pull/28036#issuecomment-883482828 mentions running this past SIG Architecture to check that folks are happy with the approach.

* _This_ issue is about switching the page to be driven by local data (in `/data` within the repo)

* a future change could build on that to switch to using remote data. Hugo can fetch and deserialize JSON data, via https, then render it. |

ag-grid/ag-grid | 903305909 | Title: TypeError: Cannot read property 'EnterpriseCoreModule' of undefined

Question:

username_0: <!--

IF YOU DON'T FILL OUT THE FOLLOWING INFORMATION WE MIGHT CLOSE YOUR ISSUE WITHOUT INVESTIGATING

-->

**I'm submitting a ...** (check one with "x")

```
[] bug report => see 'Providing a Reproducible Scenario'
[] feature request => do not use Github for feature requests, see 'Customers of AG Grid'
[] support request => see 'Requesting Community Support'
```

**Customers of AG Grid**

If you are a customer, you are entitled to use AG Grid's customer support system (powered by Zendesk). Please use that channel for a guaranteed response from the AG Grid team regarding bugs, feature requests and support.

**Requesting Community Support**

If you are not a customer of AG Grid, ag-grid staff will label your issue as managed-by-the-community. This means that AG Grid staff is not going to be actively looking into it and it will get closed if inactive for more than one month. The community is welcome to help with this question/support issue.

**Providing a Reproducible Scenario**

Accepted reproducible scenarios are

- A description of the detailed steps to reproduce your behaviour in one of our examples in the docs.

- A plunker

If you decide to send us a plunkr, from any example in our website use the plunkr button in there to fork your own code by following the steps below:

- Select the framework that is appropriate to you from the drop-down

- Open it in plunker. (Use the button plunker in our example)

- Add your changes so that the behaviour is reproduced

- Save and freeze the plunker (top-left corner)

- Send us the link to the plunker (you can copy the URL from the browser)

If reporting a bug, make sure to state:

Current behaviour.

Expected behaviour. If possible, back this up with our docs/examples.

**Current behavior**

<!-- Describe how the bug manifests. -->

**Expected behavior**

<!-- Describe what the behavior would be without the bug. If possible, back this up with our docs/examples. -->

**Please tell us about your environment:**

<!-- Operating system, IDE, package manager, HTTP server, ... -->

* **AG Grid version:** X.X.X

<!-- Check whether this is still an issue in the most recent AG Grid version -->

* **Browser:**

<!-- Run `navigator.userAgent` in console of all of the browsers where this could be reproduced -->

* **Language:** [all | TypeScript X.X | ES6/7 | ES5]

Answers:

username_1: Hi,

This ticket has been flagged as managed-by-community/waiting-for-repro for a while now and there has not been any activity, to help us tidy up we are going to close it.

Thanks

Status: Issue closed

|

Dakova1994/Angular-Test | 298989630 | Title: Draw button should draw cards from current deck

Question:

username_0: As currently implemented, the 'Draw' button always creates a new deck. We want it to draw cards from the current deck.

Requirement 1.

Change the logic of the 'Draw' button so that it draws cards from the current deck.
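One way to read this requirement is that the deck is created once and each draw consumes from it, so a new deck appears only on an explicit reset. The project itself is Angular, so the Python below is only a hypothetical sketch of that state handling; `Deck`, `draw`, and `reset` are illustrative names, not the repository's code.

```python
import random
from typing import List, Optional

SUITS = ["♠", "♥", "♦", "♣"]
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]


class Deck:
    def __init__(self, rng: Optional[random.Random] = None) -> None:
        self._rng = rng or random.Random()
        self.reset()

    def reset(self) -> None:
        """Build and shuffle a fresh 52-card deck; only called explicitly."""
        self.cards: List[str] = [rank + suit for suit in SUITS for rank in RANKS]
        self._rng.shuffle(self.cards)

    def draw(self, count: int = 1) -> List[str]:
        """Take cards from the current deck instead of creating a new one."""
        if count > len(self.cards):
            raise ValueError(f"only {len(self.cards)} cards left in the deck")
        drawn, self.cards = self.cards[:count], self.cards[count:]
        return drawn


deck = Deck(random.Random(0))
first = deck.draw(5)
second = deck.draw(5)
print(len(deck.cards))           # 42
print(set(first) & set(second))  # set(): no card is drawn twice
```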

Answers:

username_1: Is it done?

username_0: If so, please close it.

Status: Issue closed


|

5CS024-Team1/embedded_system_code | 575835948 | Title: NO INFORMATION DISPLAYED

Question:

username_0: When running the code, nothing is displayed in the serial monitor. This may be something to do with my print lines.

Answers:

username_0: Update - Stripped the code to the very basics, using no formatting on the data. I seem to be able to display data when I am not trying to use tinyGPS to format it (see basecamp for an image of this)...

This is still a problem as I have no clue what any of the data means without it being formatted, will continue to work on a fix over the next few weeks.

username_0: Update - This looks to be an issue with the hardware and not the code.

I have tried leaving it running overnight and have seen no difference in functionality.

For now we are going to just have to use dummy data, I will keep working on a fix until the deadline, if one cannot be found, I will just do my best with what I have. |

elixir-ecto/ecto | 468811683 | Title: Ecto 3.1 %DateTime{} error with `date()` fragment

Question:

username_0: ### Environment

* Elixir version (elixir -v): Elixir 1.9.0, Erlang/OTP 22

* Database and version (PostgreSQL 9.4, MongoDB 3.2, etc.): (PostgreSQL) 9.6.13

* Ecto version (mix deps): ~> 3.1

* Database adapter and version (mix deps): {:postgrex, "~> 0.14.3"},

* Operating system: Debian GNU/Linux 9.9 (stretch) (4.9.0-9-amd64)

### Current behavior

This code used to work with Ecto 2.2, but in Ecto 3.1 it now throws a `Postgrex expected %DateTime{}, got ~N[2018-11-07 11:26:56]` error (like #2796), even after making the `@timestamps_opts [type: :naive_datetime_usec]` adjustment recommended in the Plataformatec post:

```elixir
where([l],
  fragment("date(?) between date(?) and date(?)", l.inserted_at, ^start_date, ^end_date)
)
```

However, this does work in Ecto 3.1:

```elixir
where([l],
  (l.inserted_at >= ^start_date) and (l.inserted_at <= ^end_date)
)
```

### Expected behavior

For `date(?)` fragments to behave as they did under Ecto 2.2.

Answers:

username_1: Because you are bypassing Ecto and using fragments, the database is the one dictating which input is necessary. That's why `@timestamps_opts` has no effect.

Also, can you please include the full stacktrace and error message? Thanks.

username_0: Will have to revert to a prior version to reproduce the stack trace, so give me a day or two.

Also upgraded postgrex from 0.13.5 to 0.14.3. Was there some change in that driver which would alter how NaiveDateTime parameters are bound to the query? With the old libs, the fragment query ran successfully.

BTW, postgres columns are type `timestamp without time zone` for the `date(?)` fragments.

username_1: We didn’t have naive and DateTime before, which is why the issue didn’t appear.

--

*José Valim*, [www.plataformatec.com.br](http://www.plataformatec.com.br/), *Founder and Director of R&D*

username_0: Could you clarify "didn't have"? From this [post](http://blog.plataformatec.com.br/2018/10/a-sneak-peek-at-ecto-3-0-breaking-changes/), it has handled NaiveDateTime since 2.1.

username_1: Oh, sorry. I should have said they were not the defaults. It was most likely that you were using Ecto.DateTime before, no?

username_0: Prior to the 3.1 upgrade, `@timestamps_opts` was left at its default. The value passed into the fragment was an Elixir `NaiveDateTime`.

username_1: Ah, this does look like a regression then. Sometimes the Ecto versions are all fuzzy in my head. I will take a look, thanks!


username_1: Looking at the code for Postgrex v0.14.3, we can see it handles both Naive and Datetime:

https://github.com/elixir-ecto/postgrex/blob/v0.14.3/lib/postgrex/extensions/timestamp.ex#L9-L21

But not for timestamptz:

https://github.com/elixir-ecto/postgrex/blob/v0.14.3/lib/postgrex/extensions/timestamptz.ex#L14-L21

So I believe you are getting the latter (`timestamptz`), and that indeed won't work with naive datetimes.

You can give a hint to PG by writing:

```elixir
where([l],
  fragment("date(?) between date(?::timestamp) and date(?::timestamp)", l.inserted_at, ^start_date, ^end_date)
)
```

Status: Issue closed

username_1: In any case, it could indeed work on previous Ecto versions, but that was when Ecto implemented those callbacks and had more lax casting rules than Postgrex. :) |

Masuzu/ZooeyBot | 226841093 | Title: Salves Mode Problem

Question:

username_0: Hello

I want to use slave mode.

However, the bot keeps refreshing my Chrome window.

The co-op quest never starts; it only keeps refreshing.

Thank you

Status: Issue closed

Answers:

username_1: This happens if you have a very slow internet connection: Zooey waits for the "Ready" button (which must not be pressed for the first run) and the "Start" button to become visible. |

auth0/express-openid-connect | 611713508 | Title: Make redirect optional

Question:

username_0: ### Describe the problem you'd like to have solved

I want to call the login endpoint via AJAX request and simply get the redirect URL in the response.

I've not found a way to change this behavior without modifying the library source code.

### Describe the ideal solution

One possible solution is to have two methods in the `RequestContext` class: `login` and `loginWithRedirect`.

Answers:

username_1: Hi @username_0 - could you explain more about your use case? What would you do with the redirect URL when you got it back from an AJAX request?

username_0: Just redirect via JavaScript

`window.location.href = url`

username_1: So is there anything stopping you from doing: `window.location.href = '/login';`?

username_0: I plan to redirect to the Auth0 login page, not to the `/login` route.

username_1: The `/login` route will redirect you to the auth0 login page https://github.com/auth0/express-openid-connect/blob/master/lib/context.js#L103 - so `window.location.href = '/login';` will have exactly the same effect as `window.location.href = authorizeUrl;` - with the added bonus that you don't need to make an additional AJAX request to get the `authorizeUrl`

username_0: I'm making an AJAX request to `/login` with axios. The browser can't follow the redirect response properly from an AJAX request. That's why I want to get the redirect URL from the `/login` response and perform the redirect manually.

username_0: @username_1 is this clear, or do I need to provide more details?

Status: Issue closed

username_1: Hi, sorry - we don't offer an API where you can get the authorize url - I recommend you have another look at https://github.com/auth0/express-openid-connect/issues/96#issuecomment-626548563

If you really want to use the authorize url directly - it should be fairly trivial to create: https://github.com/auth0/node-auth0/issues/173#issuecomment-381767911

username_0: Thx |

UCDavisLibrary/ava | 221667944 | Title: creston_district

Question:

username_0: # AVA: Creston District (creston_district)

name | value
--- | ---
ava_id | creston_district
cfr_index | 9.239
revision | [T.D. TTB-125, 79 FR 60960, Oct. 9, 2014]
state | CA
county | San Luis Obispo
within | Central Coast\|Paso Robles
contains |

[src]: https://www.ecfr.gov/cgi-bin/retrieveECFR?gp=&SID=371db32ecca6629af6dccad2a39d7833&mc=true&n=sp27.1.9.c

## Approved Maps [src]

(1) Creston, Calif., 1948, photorevised 1980;

(2) <NAME>, Calif., 1961;

(3) <NAME>, CA, 1995;

(4) Camatta Ranch, CA, 1995; and

(5) <NAME>, Calif., 1965, revised 1993.

## Boundary [src]

(c) Boundary. The Creston District viticultural area is located in San Luis Obispo County, California. The boundary of the Creston District viticultural area is as described below:

(1) The beginning point is located on the Creston map along the common boundary line of the Huerhuero Land Grant and section 34, T27S/R13E, at the eastern-most intersection of State Route 41 and an unnamed light-duty road locally known as Cripple Creek Road. From the beginning point, proceed northerly on Cripple Creek Road approximately 1 mile to the road's intersection with an unnamed light duty road locally known as El Pomar Drive (at BM 1052), section 27, T27S/R13E; then

(2) Proceed northeasterly in a straight line approximately 0.75 mile to the unnamed 1,142-foot elevation point, T27S/R13E; then

(3) Proceed north in a straight line approximately 1.2 miles to the line's intersection with an unnamed light duty road locally known as Creston Road at the southwest corner of section 14, T27S/R13E; then

(4) Proceed east on Creston Road approximately 0.35 mile to the road's intersection with an unnamed light-duty road known locally as Geneseo Road (at BM 1014), T27S/R13E; then

(5) Proceed north-northwesterly on Geneseo Road approximately 0.7 mile to the road's intersection with a jeep trail (locally known as Rancho Verano Place) and the western boundary line of section 14, T27S/R13E; then

(6) Proceed due east in a straight line approximately 0.2 mile to the line's intersection with the Huerhuero Land Grant boundary line, section 14, T27S/R13E; then

(7) Proceed north-northeasterly along the Huerhuero Land Grant boundary line approximately 0.7 mile to the land grant's northern-most point, and then continue east-southeasterly along the land grant's boundary line approximately 0.4 mile to the line's intersection with the northern boundary line of section 14, T27S/R13E; then

(8) Proceed east approximately 1.3 miles along the northern boundary lines of sections 14 and 13, T27S/R13E, and continue east approximately 0.25 mile along the northern boundary line of section 18, T27S/R14E, to the T-intersection of two unnamed unimproved roads; then

(9) Proceed east-southeasterly on the generally east-west unnamed unimproved road approximately 0.85 mile, crossing onto the Shedd Canyon map, to the road's intersection with the eastern boundary line of section 18, T27S/R14E; then

(10) Proceed southeasterly in a straight line approximately 1.2 miles to the 1,641-foot elevation point located at the southeast corner of section 17, T27S/R14E; then

(11) Proceed southeasterly approximately 0.55 mile in a straight line to BM 1533 (located beside Creston Shandon Road (State Route 41)) and continue southeasterly in a straight line approximately 1.8 miles to the 1,607-foot elevation point near the western boundary line of section 27, T27S/R14E; then

(12) Proceed east-southeasterly in a straight line approximately 1.1 miles to the 1,579-foot elevation point at the southeast corner of section 27, T27S/R14E; then

(13) Proceed east approximately 1.9 miles along the northern boundary lines of sections 35 and 36, T27S/R14E, to the section 36 boundary line's intersection with Indian Creek; then

(14) Proceed southerly (upstream) along Indian Creek approximately 5.3 miles in straight-line distance, crossing onto the Wilson Corner map, to the creek's intersection with an unnamed light-duty road locally known as La Panza Road, section 20, T28S/R15E; then

(15) Proceed southeasterly on La Panza Road approximately 0.15 mile to the road's intersection with State Route 58 at Wilson Corner, section 29, T28S/R15E; then

(16) Proceed easterly on State Route 58 approximately 1.4 miles, crossing onto the Camatta Ranch map, to the road's intersection with the eastern boundary line of section 28, T28S/R15E; then

(17) Proceed south approximately 1.5 miles along the eastern boundary lines of sections 28 and 33, T28S/R15E, to the T28S/T29S common boundary line at the southeast corner of section 33, T28S/R15E; then

(18) Proceed west along the T28S/T29S common boundary line approximately 9.1 miles, crossing over the Wilson Corner map and onto the Santa Margarita map, to the boundary line's intersection with the Middle Branch of Huerhuero Creek, section 31, T28S/R14E; then

(19) Proceed north-northwesterly (downstream) along the Middle Branch of Huerhuero Creek approximately 2.3 miles in straight-line distance to the creek's intersection with the southern boundary line of section 24, T28S/R13E; then

(20) Proceed west along the southern boundary line of section 24, T28S/R13E, approximately 0.45 mile to that section's southwestern corner; then

(21) Proceed north along the western boundary line of section 24, T28S/R13E, approximately 1.0 mile to the boundary line's intersection with an unnamed unimproved road at the section's northwestern corner; then

(22) Proceed northwesterly on the unnamed unimproved road approximately 0.7 mile to the road's intersection with State Route 229 near BM 1138, section 14, T28S/R13E; then

(23) Proceed northeasterly on State Route 229 approximately 0.2 mile to the road's intersection with the Huerhuero Land Grant boundary line, section 14, T28S/R13E; then

(24) Proceed north-northwesterly along the boundary of the Huerhuero Land Grant approximately 3 miles, crossing onto the Creston map and returning to the beginning point.

Status: Issue closed

Answers:

username_2: Reviewed.

- Add scale information for the maps used. |

americanexpress/jest-image-snapshot | 459276038 | Title: Can you make a new release for the customSnapshotIdentifier to be a function

Question:

username_0: I see that the change allowing `customSnapshotIdentifier` to be a function has been merged into master but has not been packaged yet. Can you please cut a new release and publish these changes to npm?

Answers:

username_1: Hopefully tomorrow I will be able to!

username_1: 2.9.0 has now been published.

Status: Issue closed

|

kubernetes/kubernetes | 957405349 | Title: The test `TestVolumeUnmountAndDetachControllerDisabled` in `kubelet_volumes_test.go` is failing

Question:

username_0: #### Which jobs are failing:

pull-kubernetes-unit

#### Which test(s) are failing:

TestVolumeUnmountAndDetachControllerDisabled

#### Since when has it been failing:

It failed on `07/24/2021`; the test has been passing since then.

#### Testgrid link:

https://prow.k8s.io/view/gs/kubernetes-jenkins/pr-logs/pull/103631/pull-kubernetes-unit/1418992044973494272#1:build-log.txt%3A2386

#### Reason for failure:

DATA RACE

The data race seems to be caused by the following code:

https://github.com/kubernetes/kubernetes/blob/5be21c50c269fc1d28e0bd31ab9dcb572ae7fac5/pkg/kubelet/kubelet_volumes_test.go#L317-L320
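The linked lines are not quoted in the issue, so the following is only a hypothetical, simplified Go sketch of the general pattern the race detector reports (several goroutines touching shared state without synchronization) and one common fix, guarding every access with a `sync.Mutex`. The names `fakeVolumeState`, `recordUnmount` and `runWorkers` are invented for illustration and do not exist in the Kubernetes tree.

```go
package main

import (
	"fmt"
	"sync"
)

// fakeVolumeState is a hypothetical stand-in for state that background
// goroutines (e.g. a reconciler loop) write while a test goroutine reads it.
type fakeVolumeState struct {
	mu       sync.Mutex
	unmounts int
}

// recordUnmount is called concurrently by the background workers.
// Without the mutex, these concurrent increments would race with each
// other (and with any concurrent Unmounts call), which `go test -race`
// reports as a DATA RACE.
func (s *fakeVolumeState) recordUnmount() {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.unmounts++
}

// Unmounts reads the counter under the same mutex.
func (s *fakeVolumeState) Unmounts() int {
	s.mu.Lock()
	defer s.mu.Unlock()
	return s.unmounts
}

// runWorkers starts n goroutines that each record one unmount,
// waits for all of them, and returns the final count.
func runWorkers(n int) int {
	s := &fakeVolumeState{}
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			s.recordUnmount()
		}()
	}
	wg.Wait()
	return s.Unmounts()
}

func main() {
	fmt.Println(runWorkers(100))
}
```

Because races depend on goroutine scheduling, a single clean run proves little; running the test many times under the race detector, for example `go test -race -count=100 -run '^TestVolumeUnmountAndDetachControllerDisabled$' ./pkg/kubelet/`, may make an intermittent failure more likely to show up locally.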

#### Anything else we need to know:

The test failure is flaky; I could not reproduce it on my local machine either. I used the following command to run the test:

```shell
sudo make test WHAT="./pkg/kubelet" GOFLAGS="-v -count=1" KUBE_TEST_ARGS='-run ^TestVolumeUnmountAndDetachControllerDisabled$'
```

Output on my local machine:

```
+++ [0801 06:08:49] Running tests without code coverage and with -race
=== RUN TestVolumeUnmountAndDetachControllerDisabled
W0801 06:08:55.941792 4176 mutation_detector.go:53] Mutation detector is enabled, this will result in memory leakage.
I0801 06:08:55.942831 4176 desired_state_of_world_populator.go:146] "Desired state populator starts to run"
I0801 06:08:55.943127 4176 volume_manager.go:291] "Starting Kubelet Volume Manager"
E0801 06:08:55.945783 4176 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.CSIDriver: unhandled watch: testing.WatchActionImpl{ActionImpl:testing.ActionImpl{Namespace:"", Verb:"watch", Resource:schema.GroupVersionResource{Group:"storage.k8s.io", Version:"v1", Resource:"csidrivers"}, Subresource:""}, WatchRestrictions:testing.WatchRestrictions{Labels:labels.internalSelector(nil), Fields:fields.andTerm{}, ResourceVersion:""}}
I0801 06:08:56.145474 4176 reconciler.go:244] "operationExecutor.AttachVolume started for volume \"vol1\" (UniqueName: \"fake/fake-device\") pod \"foo\" (UID: \"12345678\") "
I0801 06:08:56.145588 4176 reconciler.go:157] "Reconciler: start to sync state"
I0801 06:08:56.145710 4176 operation_generator.go:369] AttachVolume.Attach succeeded for volume "vol1" (UniqueName: "fake/fake-device") from node "127.0.0.1"
I0801 06:08:56.246900 4176 operation_generator.go:587] MountVolume.WaitForAttach entering for volume "vol1" (UniqueName: "fake/fake-device") pod "foo" (UID: "12345678") DevicePath "/dev/vdb-test"
I0801 06:08:56.247005 4176 operation_generator.go:597] MountVolume.WaitForAttach succeeded for volume "vol1" (UniqueName: "fake/fake-device") pod "foo" (UID: "12345678") DevicePath "/dev/sdb"
I0801 06:08:56.247084 4176 operation_generator.go:630] MountVolume.MountDevice succeeded for volume "vol1" (UniqueName: "fake/fake-device") pod "foo" (UID: "12345678") device mount path ""
I0801 06:08:56.548217 4176 reconciler.go:196] "operationExecutor.UnmountVolume started for volume \"vol1\" (UniqueName: \"fake/fake-device\") pod \"12345678\" (UID: \"12345678\") "
I0801 06:08:56.548267 4176 operation_generator.go:866] UnmountVolume.TearDown succeeded for volume "fake/fake-device" (OuterVolumeSpecName: "vol1") pod "12345678" (UID: "12345678"). InnerVolumeSpecName "vol1". PluginName "fake", VolumeGidValue ""
I0801 06:08:56.649605 4176 reconciler.go:312] "operationExecutor.UnmountDevice started for volume \"vol1\" (UniqueName: \"fake/fake-device\") on node \"127.0.0.1\" "
I0801 06:08:56.649609 4176 operation_generator.go:974] UnmountDevice succeeded for volume "vol1" %!(EXTRA string=UnmountDevice succeeded for volume "vol1" (UniqueName: "fake/fake-device") on node "127.0.0.1" )
I0801 06:08:56.750200 4176 reconciler.go:333] "operationExecutor.DetachVolume started for volume \"vol1\" (UniqueName: \"fake/fake-device\") on node \"127.0.0.1\" "
I0801 06:08:56.750269 4176 operation_generator.go:484] DetachVolume.Detach succeeded for volume "vol1" (UniqueName: "fake/fake-device") on node "127.0.0.1"
I0801 06:08:56.844405 4176 volume_manager.go:297] "Shutting down Kubelet Volume Manager"
--- PASS: TestVolumeUnmountAndDetachControllerDisabled (0.91s)
PASS
ok k8s.io/kubernetes/pkg/kubelet 1.052s
```

I would like to work on this issue. Is it possible to reproduce it locally? |

BoboTiG/ebook-reader-dict | 748264222 | Title: [FR] Handle the 'régionalisme' template

Question:

username_0: - Wiktionary page: https://fr.wiktionary.org/wiki/mace

Wikicode:

```

{{régionalisme|Bretagne|fr}}

```

Output:

```

None

```

Expected:

```

<i>(Bretagne)</i>

```

---

Model link, if any: https://fr.wiktionary.org/wiki/Mod%C3%A8le:r%C3%A9gion

Status: Issue closed |

tldr-pages/tldr | 182604776 | Title: Decide what branding to use

Question:

username_0: I noticed many names are used for this project, so I think the naming should be clarified. For example, you can find *TL;DR pages*, *tldr*, *tldr pages* and *TLDR pages* in various documents and on the website.

My suggestion is to use *tldr* for the name of the npm package and *TLDR pages* or *tldr pages* for everything else. How does that sound?

Answers:

username_1: I normally don't like it when brands attempt to enforce a specific capitalization of the brand name, so I'd prefer if we avoided the capitalized version and the pedantic version with the semicolon. I suppose I'd go with either **tldr pages** or **tldr-pages**, but I'm open to hearing other arguments.

username_0: I agree, @username_1, I like that version more. *TLDR pages* sounds a bit too administrative, while *tldr-pages* sounds cool in a dev sort of way.

username_2: `tldr` has my vote.

username_3: I like **tldr pages**. Contrasts nicely with **man pages**.

username_1: If we are willing to deviate a little more from the current name, we could consider rebranding as tldr.sh (akin to how many projects nowadays use .js to indicate their context), or even go as far as ditching the hard-to-pronounce (and somewhat jargon-y) "tldr" altogether and picking something like "miniman" or "lil' man" :P These are just some fresh/wild (crazy?) ideas to spice up the discussion, not something I'm advocating at the moment.

Getting back down to earth, I suspect that "tldr-pages" may be better than "tldr pages" as it clearly identifies as a single word, in the absence of capitalization or formatting. But it's not a super-strong preference.

username_0: I agree with @username_1. `tldr-pages` seems the best at this moment.

username_0: Any news on this? Branding is an important part of the project.

username_1: I'd like to hear @username_3 and @username_2's responses to my previous comment.

username_2: I reeeeally need to set up tldr.sh

username_3: My preference is still "tldr pages", but I am okay with "tldr.sh" too.

username_1: If tldr.sh is ok with you guys, I'd vote for it too.

@username_3 any thoughts about my arguments for using a hyphen? Besides those, we must also consider that using a space makes it awkward to refer to a single page. Is it a "tldr page" (which would mean the project identifies as "tldr" alone)? Using "tldr-pages page" would be consistent but admittedly kinda silly, so we could instead recommend something like "tldr-pages entry" for that case (that's one of the ways a branding guideline can be useful).

username_3: Referring to a single page is indeed an issue. My vote is for tldr.sh then.

username_1: @username_0, @username_5, @username_4, @fluxw42 what do you guys think? Any change since your last reactions?

username_4: My vote is for "tldr pages". The dash is overly verbose and doesn't fit well with how the English language is constructed.

username_1: @username_4 what about the issues I mentioned [here](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-255104786) and [here](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-262176332)?

username_0: `tldr.sh` seems perfectly fine to me.

username_4: `tldr.sh` suggests that it's a program or library, or is otherwise related to the Linux shell, which is not the case, right?

Do we want that?

username_5: I have split thoughts here. On one hand I agree with @username_1 that since we want to think about rebranding, we could consider something different than "tldr" (I always have to think about "too long didn't read" when typing, because I cannot think of a pronounceable form of "tldr"). On the other hand I like the name and the idea behind it, and then I would go with "tldr pages" (no hyphen)...

username_2: For the record I meant the domain `www.tldr.sh`, which we have registered but haven't setup yet.

username_1: So... shall we make a decision? The options raised here have been: (1) **tldr-pages**, (2) **tldr pages**, (3) **tldr.sh**, (4) **tldr**, and also (5) **miniman** and (6) **shortman**.

I would be OK with (1), (3) and (5).

(3) depends on @username_2's feedback -- what kind of setup are you planning for the domain? Would you like help keeping the domain registration? (Not sure how expensive it is.) I was thinking maybe we could start by simply making it a custom domain for http://tldr-pages.github.io, which is easy enough to set up, and later we could work on something more involved, to avoid delaying this much further.

IMO (2) would make it hard to distinguish when we're talking about the project and when we're talking about individual pages, and (4) would be too ambiguous with other uses of the term (and would make googling this a nightmare).

Thoughts?

username_2: Hey! The domain is up :) should I configure the DNS to point to the github pages?

username_3: Cool, I am good with tldr.sh. I have a concern, though: the org name is "tldr-pages". Are we going to change the org name too?

username_1: It would make sense, I suppose, and I'd personally be fine with that. We'd keep the primary identifier in our name, the "tldr" part, so that doesn't bother me too much.

username_2: @username_1 the domain's A record is now pointing to GitHub (http://tldr.sh); you can take it from here 👍

username_1: Annnd, it's working already :D that was easy :)

So now, **tldr.sh** is a viable option for the project name. Is everyone on board with that? Pinging @username_6, @username_7, @igorshubovych.

username_3: If I try https://tldr.sh, I get a `NET::ERR_CERT_COMMON_NAME_INVALID`. Is there something we can do about it?

username_1: There might be alternatives, see [here](https://gist.github.com/cvan/8630f847f579f90e0c014dc5199c337b) and [here](https://hackernoon.com/set-up-ssl-on-github-pages-with-custom-domains-for-free-a576bdf51bc).

username_3: Ah, going the Cloudflare way. @username_2, is this something you can do? The nameserver change has to be done on your side.

username_2: @username_3 @username_1 Cloudflare setup done 👍 it'll take a few hours to work according to their website.

username_3: Works now. Can you enable HSTS also ?

username_6: I'm a little late to the party but I like "tldr pages", akin to "man pages". A single page is then a "tldr page" (man page). I think the name is still compatible with [tldr.sh](https://tldr.sh) which is a cool domain name!

If we choose to go with that, I've heard people pronounce it as `tilder` so we could do as many open-source projects do and mention it in the README `tldr pages (pronounced "tilder pages")`.

That being said I'm also happy with `tldr.sh` as the main branding or coming up with a newer fancy name like `miniman` too :)

username_7: `miniman` just cracks me up

username_1: I haven't pushed for `miniman` much, as I was the one to suggest it, but gotta say I really like it, too: it's self-descriptive, memorable, and very easy to pronounce (it kinda sounds like "minivan", an already existing word). Plus it doesn't include a jargon term, which better aligns with the project's ethos.

It really seems to tick all the boxes; the only reason I haven't advocated adopting that name (though I'm tempted) is that "tldr" has definitely become the main element of the project's "brand". But name changes have happened to projects before, even large ones (IPython --> Jupyter comes to mind), without serious issues other than nostalgia among the old-timers, so it might well be a valid choice.

username_8: Interesting thread!

Would you want the various clients to start using the "tldr.sh" name?

username_1: @username_8 I think we already have enough problems from all the clients calling themselves simply "tldr", so I wouldn't recommend going down that road. Of course, once we pick a definitive name for the project, the clients ought to refer to it (the project, not themselves) by that name, yeah.

@username_7 I couldn't figure out whether your reaction to "miniman" is neutral, positive or negative. Would it be something you'd consider? (Just trying to tie up loose ends here.)

username_7: Let's say I'm neutral about it.

username_1: @username_6, @username_3, @username_2, @username_9, @username_0, @username_4, @username_5: I'd like to hear your thoughts on renaming the project `miniman` so we can make a decision here. I've already [listed a bunch of reasons](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-302388562) that compel me to favor it above `tldr` / `tldr.sh`; @username_7 is neutral about it per the comment immediately above, and @username_6 [said](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-302383621) he is open to the idea.

Let's seize the 10,000th star event (#1464) to make a final call on this :)

username_0: I really support miniman. Never thought about it before, but it's pretty descriptive. :1st_place_medal: :+1:

username_5: Besides the "brand change" factor that @username_1 mentioned [before](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-302388562), I like `miniman`. Easier to remember than `tldr`...

Although the creative use of the TL;DR jargon gave the project something we can instantly attach to, maybe the fact that it's not easily pronounceable or beginner-friendly also led to all the discussion above... Sad, but necessary, to see the name go...

username_3: Sorry to be "that" guy here 😛 , but I don't like miniman. Strong negative on that. I would really like to keep some part of "tldr" in the name. Losing that feels like losing the core identity of this project.

username_6: What about `howto`? Then on the CLI you'd type `howto tar`, `howto du` etc.

username_4: I like howto! It's descriptive and short.

username_1: `howto` is descriptive and potentially even more self-explanatory than `miniman` (both are better than `tldr` IMO), because it subtly conveys what to expect (usage examples).

My only fear is that it's too generic and thus basically ungoogleable. Here's a table based on [the nominology post](http://messymatters.com/nominology/) I linked above:

Property | Description | tldr.sh | miniman | howto
-------- | ----------- | ------- | ------- | -----
Evocativity | Conveys at least a hint of what it’s naming | ★☆☆ | ★★☆ | ★★★
Brevity | Shorter = better | ★★★ | ★★☆ | ★★★
Greppability | Not a substring of common words | ★★★ | ★★☆ | ★★☆
Googlability | Reasonably unique (and domain name available) | ★★☆ | ★★☆ | ☆☆☆
Pronounceability | You can read it out loud when you see it | ☆☆☆ | ★★★ | ★★★
Spellability | You know how it’s spelled when you hear it | ☆☆☆ | ★★☆ | ★★☆
Playfulness | Catchy and memorable (e.g. a play on words) | ★★☆ | ★★★ | ☆☆☆

(I've excluded the "verbability" property, which all 3 score zero, to reduce visual noise, and added a "playfulness" property, which IMO is important for a good name to stick.)

It looks to me that objectively (to the extent that my scores are agreeable -- happy to adjust them to reflect consensus), "miniman" is the most balanced option, as it basically ticks all the "good project name" boxes.
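To make the "most balanced" comparison concrete, the stars in the table can simply be tallied. The Go sketch below is an unofficial illustration, not something computed in the thread: it transcribes the table's scores into a hypothetical `scores` map, sums them per name with a made-up `summarize` helper, and records each name's weakest property, which is one possible reading of "balanced".

```go
package main

import "fmt"

// Star ratings transcribed from the nominology table, in row order:
// Evocativity, Brevity, Greppability, Googlability,
// Pronounceability, Spellability, Playfulness.
var scores = map[string][]int{
	"tldr.sh": {1, 3, 3, 2, 0, 0, 2},
	"miniman": {2, 2, 2, 2, 3, 2, 3},
	"howto":   {3, 3, 2, 0, 3, 2, 0},
}

// summarize returns the total number of stars and the lowest single score.
// It assumes a non-empty slice. A name whose lowest score is above zero
// has no property it completely fails at.
func summarize(stars []int) (total, weakest int) {
	weakest = stars[0]
	for _, s := range stars {
		total += s
		if s < weakest {
			weakest = s
		}
	}
	return total, weakest
}

func main() {
	// Iterate over a fixed slice so the output order is deterministic
	// (Go map iteration order is not).
	for _, name := range []string{"tldr.sh", "miniman", "howto"} {
		total, weakest := summarize(scores[name])
		fmt.Printf("%-8s total=%2d weakest=%d\n", name, total, weakest)
	}
}
```

With the table as scored, `miniman` totals 16 stars and never drops below two, while `howto` totals 13 and `tldr.sh` totals 11, each with at least one zero-star property. A different weighting of the properties could of course change the ranking.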

username_1: @username_3 can you clarify whether you dislike "miniman" in particular, or dislike anything that doesn't include "tldr" in general?

username_3: The latter.

username_9: Nice table! I'm inclined to say no to "howto", since it is not only difficult to google but also awkward to say, as it sounds like the beginning of a sentence.

username_1: Good point @username_9: the natural sentence would indeed be "how to use gcc?", not "how to gcc?". That said, most command names are pretty short and I could kind of see them used as a verb, e.g. "how to gcc <a file>" as a stand-in for "how to compile a file with gcc". This doesn't work in all cases, though.

username_6: That's true, @username_1; I think that's probably the case with every name we have: it's hard to form a valid sentence on the command line :smile:.

The only thing I dislike about `miniman` is that it doesn't sound very serious. Will that put off part of the target audience? (although it might be hard to please both novice *nix users, and people who are command-line fans but need the occasional reminder).

username_1: While "miniman" certainly has a playfulness to it, I also find it to be quite a good objective description of what we provide: compact manuals for the commands.

Yes, "mini-" is often used in a humorous/child-like context, but it is also used without any diminutive connotation (besides its literal meaning) in many common words, such as "minibar", "miniskirt", "minidisc", "miniseries", "Mini SUV", and [many more](https://en.wiktionary.org/wiki/Category:English_words_prefixed_with_mini-).

Even if that weren't the case, I think a user-base that readily adopted a project called "tldr-pages" would have no problem embracing a similarly playful (IMO) name :smile:

username_6: Never realised that Markdown was wordplay on Markup 😲 D'oh!

username_2: `tldr(.sh) > miniman > anything else > tldr[-]pages > howto`

That’d be my short answer. So if we ain’t sticking to tldr for legacy reasons, I’d friggin love miniman to be the name.

username_1: Great comment, @username_2. For a moment I was tempted to convert the other comments into a similar ranking for each participant in the discussion, tally up the votes and provide a table with numbers and total scores according to [various ranked-ballot counting methods](http://www.cs.wustl.edu/~legrand/rbvote/calc.html) (Borda, Schulze, etc.) — however, in my experience making these decisions based on cold numbers ends up feeling empty and unconvincing to most participants.

I believe at this point it is possible to identify a consensus (even if not unanimous) in the community, so I'll leave it to @username_6 to make the final decision.

(Note that the decision will likely not take effect _immediately_; we'll then need to start discussing a transition plan, to avoid doing things too hastily.)

username_2: So keep in mind that this is a _branding_ discussion.

username_0: Seems like this has been solved.

Status: Issue closed

username_1: @username_0 I guess you're referring to the discussion at #2986. That one is about visual identity more than naming, as this one was. I will update the title of both issues to make that distinction clearer.

That said, re-reading this discussion, I feel that, while there were valid and even compelling arguments for a rebranding towards "miniman", the overall sentiment in the thread is one of strong attachment to the "tldr" name, so it makes sense to keep that in the core of our branding.

Still, I do bump into the issue of awkwardly having to refer to "tldr-pages pages" all the time, and people in the thread were not too keen on the usage of a hyphen to make the project name stand out as a single word, so I would suggest fully embracing the tldr.sh name. I'll reopen this issue to allow us to make a decision in that regard.

So, question for all those who were involved in this discussion (@username_0, @username_2, @username_6, @username_3, @username_4, @username_5, @username_7, @username_8, @username_9) as well as more recent members of the org (@username_10, @username_11) and everyone in the community:

_In light of the discussion above, what do you guys think about consolidating **tldr.sh** as the project name?_

Please use the reactions so we can gauge the overall sentiment more easily. 👍 👎


username_10: I've seen this project almost always being called "TLDR pages" (with a variation of the case e.g. "tldr pages", "TLDR Pages" etc). I don't recall seeing any client referring to it differently. This project has been around for quite a while and this name is spread among countless repos, clients and documentation pages. I would therefore vote for "**TLDR Pages**" (or variation of the case) if I had to. Furthermore, "tldr.sh" just feels like a domain name to me.

username_3: There was no neutral reaction button, so I put "eyes" on it. As a single short name, "tldr.sh" is okay, I guess. But as you can see, there are concerns being raised about it looking like a domain name.

username_1: Good point, @username_3, I added that option above :)

As for looking like a domain name, I don't see why that's a bad thing; quite the contrary, it helps solidify the branding and is one less thing to memorize. Besides, it's shorter, more distinctive, and works as a single word. (Incidentally, I find it interesting that a domain, rather than a shell script, is what the `.sh` extension evoked!)

I do want to hear about your experience with referring to the project's pages, due to the inherent duplication of "tldr pages page". Does that not bother you at all? If we decide to stick with "tldr pages" as the project name, maybe we could call the pages something else (files? documents? entries?), which would resolve the situation in a different way.

username_10: @username_1 if the name is "TLDR pages", then a single page would be called either "TLDR pages page" or "TLDR page" (or maybe even "page of TLDR pages"). Of course, it depends on the context. There surely is no need to prefix it with "TLDR pages"; most of the time it is clear what the term "page" refers to.

username_10: Also, adding to my previous comment: the project's organization GitHub name is "tldr-pages", which would make naming it differently rather confusing to me, so there's that.

username_3: We would have to rename the org of course. Github will redirect old links pointing to the old org - https://help.github.com/en/github/setting-up-and-managing-organizations-and-teams/renaming-an-organization.

username_11: The only issue is that anyone can then take the old org name. 🤔

username_2: At the risk of stating the obvious, remember the org name has *tons* of searchability value attached to it already.

Can you ask GitHub for an org-level redirect?

username_3: They indeed can. But links to old repos will be redirected automatically. Only a link to the exact org, "github.com/tldr-pages", would then point to the newly acquired org.

See the link above.

username_10: This. Renaming the org will inevitably break this and also any script/code/auth token/**client** and whatever else uses that name to do anything.

username_3: Yep, so only the exact link and the API requests will return an error. Github will redirect everything else.

username_1: Evidently we wouldn't simply swap things around all of a sudden, unannounced. This change would have to be synced with client maintainers.

Also, we could re-register the org name if we wanted to set up renaming notices or prevent others from taking over the name, etc. Look, none of this is meant to be painless or easy. But we are a capable and technically competent community -- I don't think we should be stopped from taking this sort of decision based on possible technical challenges of a project rename. If we do think the current name is better, even assuming a transition to another name would be completely seamless, then it's fair to stick with it on its merits -- but let's not do so based merely on the technical difficulty of adopting an alternative.

username_10: @username_1 valid point. I genuinely think the current name is better though.

username_9: Personally, I've always used `tldr-pages`. I agree with @username_10 and @username_2 here.

username_0: @username_1 I'm not entirely in favor of the `.sh` extension, since it's generally used for projects developed only in shell. However, it nicely indicates the project's use, and that's a big plus. :+1:

username_1: @username_9 the problem is that previous suggestions (in the older comments of this thread) to use a hyphenated form were met with a lukewarm reception, so unless we can turn that sentiment around, we'd be stuck with the awkward two-word, unclearly separated "tldr pages". Any thoughts about that? 😕

username_10: What's the hyphen thing? Whether to use "tldr-pages" or "TLDR pages" would of course depend on the context. When we refer to the GitHub repo/URL, we say "tldr-pages"; otherwise, "TLDR pages". I don't see a problem.

@username_9 are you suggesting to use "tldr-pages" *as the project name* instead of "TLDR pages"? That wouldn't make sense IMHO.

@username_0 how does `.sh` indicate the project's use? `.sh` makes me think of a shell script, not a collection of manual pages. Could you elaborate on that?

username_0: @username_10 for me it's more indicative of being used in shell. I have to say tldr-pages sounds more like it's a print publication and not a computer program.

username_1: @username_10 please note that at the start of this thread there was some discussion aboout using the capitalized version, and the consensus seemed to be that the lowercase version was preferable.

username_10: @username_1 I was just differentiating between the two alternatives with or without hyphen, I already said that case wasn't important for me.

username_0: Reviewing most of the project's repositories, it seems this and #1192 are the omnipresent issues. The website, docs and clients each have their own version of the brand name and description.

I suggest we focus on these two (closely correlated) issues over any other, so we can finally move on. They hurt the project's branding, promotion and portability (clients), and on top of that, they confuse everyone involved. Still, I'm sure we'll be able to resolve this soon. :hugs:

username_0: ping @username_1 @username_10 @username_3 @username_11

(it seems @username_9 agreed with the point)

username_10: @username_0 I don't think capitalization is a problem, but I nonetheless agree that "tldr pages" should be the name.

username_3: I have already given my opinion above. I am neutral.

username_0: So we agreed on this? Maybe it's the time to close the issue?

username_10: @username_0 doesn't seem like we all agree to me, I *think* the majority prefers "tldr pages" but I'm not sure, cannot read all the history now.

username_0: I meant the majority, of course; it's nearly impossible for everybody to agree completely on something.

username_9: See [this comment](https://github.com/tldr-pages/tldr/issues/2339#issuecomment-423808210) and [this comment](https://github.com/tldr-pages/tldr/issues/3041#issuecomment-495004004) @username_0 :-)

username_1: I'll give a shot at summarizing the main points of the discussion above (I'm interpreting consensus as no strong objections):

- **Semicolon**: there is consensus to _not_ use it (so `tldr` instead of `tl;dr`)

- **Capitalization**: there is consensus to use lowercase (so `tldr` instead of `TLDR`)

- **Rebranding**: no consensus to change. The closest alternative that garnered some support was "miniman", but there was opposition due to the "tldr" name being too ingrained now. Even [the proposal](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-556620205) to make a softer change towards "tldr.sh" had a lukewarm reception, with no clear preference.

- **Hyphenization**: no consensus. Using a hyphenated form was proposed [here](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-255104786) and [here](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-262176332), but was argued against [here](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-262217401); additional signals (comments, reactions) did not accumulate significantly in favor of either approach.

Gathering a more global view of what has been discussed, I'd say there are only three alternatives with any chance of being adopted:

1. The progressive option: Biting the bullet and making the change to **miniman**, with the understanding that there are potential downsides but they are manageable, and balanced out by the [upsides](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-326894332) (including [enthusiasm](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-302388562) by [various](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-326822759) [community](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-326827966) [members](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-327083318), [even the project creator](https://github.com/tldr-pages/tldr/issues/1109#issuecomment-302383621)!);

2. The moderate option: Consolidating in **tldr.sh** as a simpler, shorter and more distinctively "brand-y" name;

3. The conservative option: Sticking with **tldr pages** (this exact spelling: no capitals, no semicolon, no hyphen) as the evil we know, to avoid the inconveniences of changing the name and benefit from the historical weight of the name.

I must admit 😢 that option 3 seems to be the only one that nobody objects to, so if you guys agree this assessment is correct, we can close the issue with that decision.

I would only ask for a compromise in order to reduce the "tldr pages page" issue: can we start referring to each individual file as an "entry" rather than a "page"? That's not part of the branding itself, but would greatly facilitate it, and would remove the ambiguity of the project name being "tldr pages" vs. "tldr".

username_9: Great summary @username_1! This is indeed a thorny issue. Personally I've always called it a "tldr page", but a "tldr-pages entry" would be fine too. If this is the case we should update any documentation where this is referenced.

I've always used `tldr-pages` myself, but that's only because that's the name of the org.

username_0: It doesn't seem wrong to use the term *page(s)* for the actual pages. tldr pages as a project is basically a collection of those pages. The more terms we add, the more complicated documentation becomes. I'm against defining more terms such as *entries*.

username_1: @username_0 please note that the suggestion of using "entries" is not frivolous: it's only to overcome the _existing_ issue of referring to an individual file as a "tldr page" (which is ambiguous, making it seem like the project's name is "tldr" alone, which is precisely the type of confusion this issue was created to address) or as a "tldr pages page" (which is very awkward and essentially unusable).

That's one of the reasons I was hoping for alternative project names that didn't include "pages" in the name (i.e. _tldr.sh_ or _miniman_), because those would automatically avoid the issue. But if we can't agree to make such a change, then the issue needs to be addressed elsewhere, hence the proposal to use "entries".

username_10: I think you summarized that pretty well @username_1. I don't think I've ever referred to a page as "tldr page". Why not just use the word "page" alone? It probably depends on the context, but I don't really see this as an issue.

username_1: It's very context-sensitive and would make it hard to talk about tldr pages among other page-like entities (e.g. man pages, actual pagination of content, etc.)

Of course, we can _live_ with the ambiguity (we have done just that so far); I was just suggesting a way that we could get rid of it without much effort (IMO).

username_0: @username_1 It's the same way for man pages and I don't see them having any issues. The command is called `tldr` (just like `man`) and its pages are called `tldr pages` (just like `man pages`), which is what the project mostly is.

username_1: That's a good point. I don't agree that there are no issues, as I described in several comments above, but I can accept that they're not super-problematic. Do you all prefer keeping "page" as the name of each entry, then?

username_10: @username_1 I do, I don't think other options would help that much.

username_12: @username_1

I see your point but I think we could keep _page_

username_0: To be fair, this seems to have already been decided. `tldr pages` is the standard name for the whole project, and `tldr` for this repository and the command-line clients. I'm in for closing this and moving on with more important issues.

Status: Issue closed

username_3: Sounds reasonable to me. I just did a quick scan of the last couple of comments, and it seems like the discussion was about what to call a single item in our repo - and that seems to have converged on "page".

To conclude:

- The project name remains "tldr pages". Exact spelling: no semicolon, no hyphen, and not just "tldr" either.

- A single item in the project will be termed as a "page". Similar to man pages, a page here implies a page from the "tldr pages" project. Short and simple.

Thanks to everyone who participated in this, and special thanks to @username_1, who took great pains to steer the discussion in a coherent way! Really glad to see how this turned out.

username_1: Thanks guys for wrapping this up. I think we need to remove the remnants of the semicolon, right? I know it's present in the [banner](https://github.com/tldr-pages/tldr/blob/master/images/banner.svg); do you know if any additional instances remain?

username_3: Thanks @username_1. That sounds great. Should we open a new issue? This one is already overloaded with discussion of the brand name. I think a new issue to track the changes to be made might be clearer.

username_0: @username_1 I suggest you open a PR with that change. You don't have to work on it extensively, as the other contributors can finish the job. :)

Also, the descriptions of other repos in this org should be updated.

username_1: Makes sense @username_3. I'll work on this tomorrow night, time permitting.

username_9: I noticed many names are used for this project, so I think it should be clarified. For example, you can find _TL;DR pages_, _tldr_, _tldr pages_ and _TLDR pages_ in various documents and websites.

My suggestion is to use _tldr_ for the name of the npm package and _TLDR pages_ or _tldr pages_ for everything else. How does that sound?

Status: Issue closed

|

MikeMcl/decimal.js | 325703365 | Title: Add sum function

Question:

username_0: How about adding a sum helper?

```js
const numbers = [Decimal(1), Decimal(2), Decimal(3)]
Decimal.sum(numbers) // => 6
```
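For comparison, the requested behavior can be approximated today with `Array.prototype.reduce` and the `plus` method of decimal.js. The sketch below is an illustration only. `sumDecimals` is a hypothetical name and not part of decimal.js. The `Decimal` constructor is passed in as a parameter so that the helper does not depend on how the library is imported.

```javascript
/**
 * Hypothetical helper (not part of decimal.js): sums an array of values
 * using Decimal's plus() method. The arithmetic is performed by decimal.js
 * at its configured precision, not with binary floating-point.
 *
 * @param {Array} values - Decimal instances (or numbers/strings Decimal accepts)
 * @param {Function} Decimal - the Decimal constructor, injected by the caller
 * @returns {Decimal} the sum; a Decimal equal to 0 for an empty array
 */
function sumDecimals(values, Decimal) {
  // Seeding reduce with zero lets an empty array sum to 0
  // instead of throwing a TypeError.
  return values.reduce((acc, v) => acc.plus(v), new Decimal(0));
}

// Usage, assuming decimal.js is installed:
// const Decimal = require('decimal.js');
// sumDecimals([Decimal(1), Decimal(2), Decimal(3)], Decimal).toString(); // '6'
```

Taking an array matches the signature proposed above. A built-in version would presumably use the library's own constructor instead of receiving it as an argument.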