repo_name | issue_id | text |

|---|---|---|

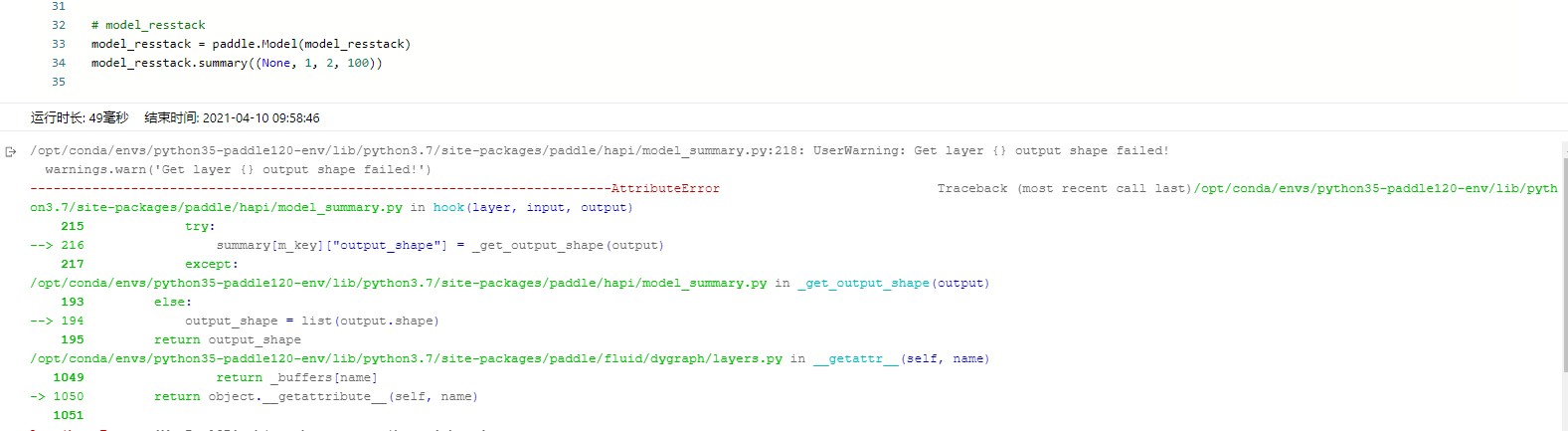

PaddlePaddle/Paddle | 854574494 | Title: KeyError: 'output_shape' raised after calling model.summary()

Question:

username_0: - Title: Error when running a self-built ResNet model

- Version and environment information:

1) PaddlePaddle version: 2.0.1

2) System environment: Paddle version: 2.0.1

Paddle With CUDA: False

OS: debian stretch/sid

Python version: 3.7.4

CUDA version: None

cuDNN version: None.None.None

Nvidia driver version: None

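- Model information

1) Model name: Figure 1

Keras reproduction (Figure 2)

2) Dataset used: (MATLAB-generated digital signal modulation recognition dataset, 8 classes, shape=(800000, 1, 2, 100)) https://aistudio.baidu.com/aistudio/datasetdetail/73835

3) Model code: see the .zip file below

[net_resnet_stack.zip](https://github.com/PaddlePaddle/Paddle/files/6286343/net_resnet_stack.zip)

- Problem description: running model.summary((None, 1, 2, 100)) produces the error below

- Error message: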

---------------------------------------------------------------------------AttributeError Traceback (most recent call last)/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/hapi/model_summary.py in hook(layer, input, output)

215 try:

--> 216 summary[m_key]["output_shape"] = _get_output_shape(output)

217 except:

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/hapi/model_summary.py in _get_output_shape(output)

193 else:

--> 194 output_shape = list(output.shape)

195 return output_shape

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py in __getattr__(self, name)

1049 return _buffers[name]

-> 1050 return object.__getattribute__(self, name)

1051

AttributeError: 'MaxPool2D' object has no attribute 'shape'

During handling of the above exception, another exception occurred:

KeyError Traceback (most recent call last)<ipython-input-6-6c680bc1eda0> in <module>

31 # model_resstack

32 model_resstack = paddle.Model(model_resstack)

---> 33 model_resstack.summary((None, 1, 2, 100))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/hapi/model.py in summary(self, input_size, dtype)

1879 else:

1880 _input_size = self._inputs

-> 1881 return summary(self.network, _input_size, dtype)

1882

1883 def _verify_spec(self, specs, shapes=None, dtypes=None, is_input=False):

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/hapi/model_summary.py in summary(net, input_size, dtypes)

147

148 _input_size = _check_input(_input_size)

--> 149 result, params_info = summary_string(net, _input_size, dtypes)

150 print(result)

151

</opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/decorator.py:decorator-gen-343> in summary_string(model, input_size, dtypes)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/base.py in _decorate_function(func, *args, **kwargs)

313 def _decorate_function(func, *args, **kwargs):

314 with self:

--> 315 return func(*args, **kwargs)

[Truncated]

904 for forward_post_hook in self._forward_post_hooks.values():

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/container.py in forward(self, input)

93 def forward(self, input):

94 for layer in self._sub_layers.values():

---> 95 input = layer(input)

96 return input

97

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py in __call__(self, *inputs, **kwargs)

903

904 for forward_post_hook in self._forward_post_hooks.values():

--> 905 hook_result = forward_post_hook(self, inputs, outputs)

906 if hook_result is not None:

907 outputs = hook_result

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/hapi/model_summary.py in hook(layer, input, output)

217 except:

218 warnings.warn('Get layer {} output shape failed!')

--> 219 summary[m_key]["output_shape"]

220

221 params = 0

KeyError: 'output_shape'

Answers:

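username_1: @username_0 Hi, could you post the detailed code of the Paddle version that calls summary?

username_0:

Hello, is this OK?

username_1: @username_0 MaxPool2D returns a callable object, not a Tensor. So the use of `MaxPool2D` in your code can be changed to: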

```python

if self.maxpool:
    self.maxpool = nn.MaxPool2D(kernel_size=(2,1), stride=(2,1), padding='VALID')
    out = self.maxpool(out)

```
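The distinction between a layer object and its output can be illustrated without Paddle. The following is only a sketch: `FakeTensor` and `FakePool` are hypothetical stand-ins, not Paddle classes, and it assumes the original bug was that the layer itself, rather than its result, was passed along from `forward`.

```python
# Hypothetical stand-ins, not Paddle classes: they only mimic the
# "a layer is a callable, its result has a shape" relationship.
class FakeTensor:
    def __init__(self, shape):
        self.shape = shape


class FakePool:
    """Mimics a pooling layer that halves the height (kernel and stride of 2)."""

    def __call__(self, x):
        n, c, h, w = x.shape
        return FakeTensor((n, c, h // 2, w))


def forward_buggy(x):
    # Bug: builds the layer but never applies it to the input.
    return FakePool()


def forward_fixed(x):
    pool = FakePool()
    return pool(x)  # applying the layer yields an object with a shape


x = FakeTensor((1, 1, 2, 100))
print(hasattr(forward_buggy(x), "shape"))  # the layer object carries no shape
print(forward_fixed(x).shape)
```

A summary hook that reads `output.shape` would fail on the first variant for the same reason the traceback reports `'MaxPool2D' object has no attribute 'shape'`.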

See the official MaxPool2D example.

https://www.paddlepaddle.org.cn/documentation/docs/zh/api/paddle/nn/layer/pooling/MaxPool2D_cn.html#daimashili |

pointless-lang/pointless | 724920264 | Title: try catch results in crash

Question:

username_0: `try`-`catch` crashes for me.

Here's a minimal failing example:

```

output= try

throw SomeLabel {some= "thing"}

catch is(SomeLabel)

err => println("???")

```

The stack trace I get from this:

```

Unhandled exception:

type '_GrowableList<ASTNode>' is not a subtype of type 'ASTNode'

#0 dispatch (package:pointless/src/interpreter.dart:94)

#1 eval (package:pointless/src/interpreter.dart:47)

#2 evalCheck (package:pointless/src/interpreter.dart:63)

#3 dispatch (package:pointless/src/interpreter.dart:294)

#4 eval (package:pointless/src/interpreter.dart:47)

#5 Env.addDefName.<anonymous closure> (package:pointless/src/env.dart:81)

#6 Thunk.getValue (package:pointless/src/thunk.dart:33)

#7 Env.lookupName (package:pointless/src/env.dart:121)

#8 Env.getOutput.<anonymous closure> (package:pointless/src/env.dart:144)

#9 _SyncIterator.moveNext (dart:core-patch/core_patch.dart:165)

#10 runFlag (package:pointless/src/debug.dart:22)

#11 runProgram (package:pointless/src/debug.dart:38)

#12 main (package:pointless/pointless.dart:28)

#13 _startIsolate.<anonymous closure> (dart:isolate-patch/isolate_patch.dart:299)

#14 _RawReceivePortImpl._handleMessage (dart:isolate-patch/isolate_patch.dart:168)

```

It crashes both in the repl and in files. I don't know any dart, so I'm not sure what to do with this. :) |

robotframework/RIDE | 84454033 | Title: Resolve paths to Library/Resource/Variables files when prefixed with ${EXECDIR}

Question:

username_0: Many test suites in our Robot project have settings like the following:

Library ${EXECDIR}/execution/lib/DSVLibrary.py WITH NAME DSV

Resource ${EXECDIR}/implementation/resources/WorkflowKeywords.txt

If the suites are opened with the "Open Directory" button, we know ${EXECDIR} will be set to the directory you've opened.

It would be great if RIDE could resolve these paths to enable keyword-source navigation.

## Why?

This helps us avoid copypasta errors when test suites sit at different parts of the directory tree.

Example:

Feature/Test.robot

Feature/Test2.robot

Feature/Subfeature/Test.robot

The above suites can all import the above libraries with the same lines of text.

Answers:

username_0: I am not sure what would be the best implementation for this. There are at least two options:

1) set ${EXECDIR} to ${CURDIR}

2) allow configuring ${EXECDIR} via preferences

2) is obviously more flexible.

Opinions?

username_0: I think it would be best to resolve ${EXECDIR} as the same location that pybot starts in when you press the Start/Run button.

That way, pybot and RIDE would find resources the same way and reduce unpleasant surprise.

This is similar to option #1, but I think it's slightly different when you're running a test suite with nested folders.

I'll try a quick test of this later, but I think ${CURDIR} would be a bad substitute when test suites have nested folders like my example above.

I *think* it would work out like this, assuming I use "File/Open Folder" on `/home/ed/dev/foo/tests`:

Test file ${CURDIR} Desired ${EXECDIR}

toplevel.robot /home/ed/dev/foo/tests/ /home/ed/dev/foo/tests/

sub/first.robot /home/ed/dev/foo/tests/sub/ /home/ed/dev/foo/tests/

sub/second.robot /home/ed/dev/foo/tests/sub/ /home/ed/dev/foo/tests/

sub/more/bottom.robot /home/ed/dev/foo/tests/sub/more/ /home/ed/dev/foo/tests/

As for Option #2:

Currently ${EXECDIR} resolves to `ride.py`'s working directory, so that's already possible by starting ride.py in a command prompt or by editing the Working Directory on [copies of?] the "RIDE" Desktop/Start-menu shortcut.
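The difference between the two variables in the table above can be sketched in a few lines. This is an illustration only, not RIDE code: `curdir_of` and `resolve_import` are hypothetical helpers, and the policy "`${EXECDIR}` is the directory opened in the editor" is the behavior proposed in this thread, not something RIDE is confirmed to do.

```python
from pathlib import PurePosixPath

EXECDIR_TOKEN = "${EXECDIR}"


def curdir_of(suite_file, opened_dir):
    """Directory containing the suite file, i.e. what ${CURDIR} would be."""
    return (PurePosixPath(opened_dir) / suite_file).parent


def resolve_import(setting_path, opened_dir):
    """Replace ${EXECDIR} with the opened directory (assumed policy)."""
    return setting_path.replace(EXECDIR_TOKEN, str(PurePosixPath(opened_dir)))


opened = "/home/ed/dev/foo/tests"
for suite in ["toplevel.robot", "sub/first.robot", "sub/more/bottom.robot"]:
    # ${CURDIR} varies per suite; the desired ${EXECDIR} stays constant.
    print(suite, curdir_of(suite, opened), opened)

print(resolve_import("${EXECDIR}/execution/lib/DSVLibrary.py", opened))
```

Because the substitution depends only on the opened directory, every suite resolves the same import line to the same file regardless of nesting depth.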

username_0: I agree on the first point. I think that would make the most obvious and least surprising implementation. And you are right that ${CURDIR} in general is not good substitution, what I actually meant was the ${CURDIR} of the root suite, which is exactly the directory opened with RIDE.

username_0: Oh! That sounds perfect.

I was thinking about how option #2 might work, and I wasn't able to figure out anything that made sense in a world with both:

A. Multiple test suites on the same computer

B. Test suites shared in source control, cloned or checked out in different places for each person

Well, I suppose keeping per-suite settings indexed by location, but yuck? :)

Status: Issue closed

|

google/grinder.dart | 68690462 | Title: Automatically add grinder to `dev_dependencies` on grinder:init

Question:

username_0: on

```bash

pub global run grinder:init

```

Status: Issue closed

Answers:

username_1: Generally, it's intended to be used as `pub run grinder:init` (without global). That way you can specify which version of grinder you want for a given project, and initialize a grinder script which works with it. Den can help here though:

```

pub global activate stagehand

pub global activate den

stagehand package

den install grinder --dev

pub get

pub run grinder:init

```

:) |

MaskRay/ccls | 561985904 | Title: autocompletion with pointer correction goes wrong

Question:

username_0: ### Expected behavior

When using ccls with coc.nvim in neovim, we may sometimes forget whether a variable is a pointer or not. I noticed that ccls shows auto-completions even if I use a dot on a pointer type.

Unfortunately, when `->` is inserted after selecting an auto-completion, the previously entered dot is still there. It behaves as follows:

```c++

#include <vector>

int main() {

std::vector<int>* foo;

foo.->push_back(0); // extra dot

}

```

The expected behavior should be `foo->push_back()` without a dot.
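The difference between the observed and expected results comes down to whether the completion is inserted at the cursor or replaces a range that covers the dot. A minimal sketch of that idea follows; `apply_edit` is a hypothetical helper, and this is not code from ccls or coc.nvim.

```python
def apply_edit(line, start, end, new_text):
    """Replace line[start:end] with new_text, like a single-line text edit."""
    return line[:start] + new_text + line[end:]


line = "foo."
dot = line.index(".")

# Inserting at the cursor (after the dot) keeps the stray dot.
inserted = apply_edit(line, len(line), len(line), "->push_back")

# Replacing the range that covers the dot yields the expected text.
replaced = apply_edit(line, dot, len(line), "->push_back")

print(inserted)
print(replaced)
```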

### Steps to reproduce

1. type a dot after a pointer value

2. select a suggestion from auto-completion list

3. the dot you entered is still there

### System information

* ccls version (`git describe --tags --long`):

* clang version: 9.0.0

* OS: macOS Mojave

* Editor: nvim

* Language client (and version): coc.nvim

Answers:

username_0: A related issue in coc.nvim https://github.com/neoclide/coc.nvim/issues/1396

Status: Issue closed


username_1: It seems this can be solved by setting `client.snippetSupport` and installing coc-snippets.

See https://github.com/MaskRay/ccls/wiki/Customization#clientsnippetsupport

Example configuration:

```json
"ccls": {
  "command": "ccls",
  "args": [
    "--log-file",
    "/tmp/ccls.log"
  ],
  "filetypes": [
    "c",
    "cpp",
    "objc",
    "objcpp"
  ],
  "rootPatterns": [
    ".ccls-cache",
    ".vim/",
    ".git/",
    "compile_commands.json"
  ],
  "initializationOptions": {
    "cache": {
      "directory": "./.ccls-cache"
    },
    "compilationDatabaseDirectory": "./build/Debug",
    "client": {
      "snippetSupport": true
    }
  }
},
```

username_0: I've resolved it by writing a coc.nvim extension. Closing this issue for now.

Status: Issue closed

|

isaac-rand/sister-teams-explorer | 637321711 | Title: Brief Error Message

Question:

username_0: Right when you open the [app](https://isaacrand.shinyapps.io/Sister-Teams-Explorer/) there is briefly a message which says that an error has occurred. It then goes away and everything works fine. It's not a huge problem, but it's a problem |

rapid7/ruby_smb | 268775800 | Title: Improve SMB2 rename and delete logic

Question:

username_0: SMB2 rename and delete functionalities, implemented in the File class, are both using `SetInfoResponse` packet with `FileRenameInformation` and `FileDispositionInformation` FSCC File Information classes.

However, the File Information classes are instantiated in the File methods and used to set the packet's attribute:

- https://github.com/rapid7/ruby_smb/blob/master/lib/ruby_smb/smb2/file.rb#L223

- https://github.com/rapid7/ruby_smb/blob/master/lib/ruby_smb/smb2/file.rb#L149

This design can be improved by simply using the File Information code after instantiating `SetInfoResponse` and letting this class set the related attributes. As a reference, the SMB1 TRANS2 implementation currently uses this design to implement sub-commands (https://github.com/rapid7/ruby_smb/blob/master/lib/ruby_smb/smb1/packet/trans2/request.rb and https://github.com/rapid7/ruby_smb/blob/master/lib/ruby_smb/smb1/packet/trans2/subcommands.rb).

Status: Issue closed |

alibaba/MNN | 634087748 | Title: Problem running using Vulkan and OpenCL

Question:

username_0: # 平台(如果交叉编译请再附上交叉编译目标平台):

# Platform(Include target platform as well if cross-compiling):

Linux, x86_64

# Github版本:

# Github Version:

直接下载ZIP包请提供下载日期以及压缩包注释里的git版本(可通过``7z l zip包路径``命令并在输出信息中搜索``Comment`` 获得,形如``Comment = bc80b11110cd440aacdabbf59658d630527a7f2b``)。 git clone请提供 ``git commit`` 第一行的commit id

Provide date (or better yet, git revision from the comment section of the zip. Obtainable using ``7z l PATH/TO/ZIP`` and search for ``Comment`` in the output) if downloading source as zip,otherwise provide the first commit id from the output of ``git commit``

f5dae040a0b60678726a5893b7b2778e15ce690c

# Compiling method:

gcc

```

Paste the cmake arguments or the path of the build script used here, as well as the full log of the cmake process (or a pastebin link)

```

```

option(MNN_USE_SYSTEM_LIB "For opencl and vulkan, use system lib or use dlopen" ON)

option(MNN_BUILD_HARD "Build -mfloat-abi=hard or not" OFF)

option(MNN_BUILD_SHARED_LIBS "MNN build shared or static lib" OFF)

option(MNN_FORBID_MULTI_THREAD "Disable Multi Thread" OFF)

option(MNN_OPENMP "Use OpenMP's thread pool implementation. Does not work on iOS or Mac OS" OFF)

option(MNN_USE_THREAD_POOL "Use MNN's own thread pool implementation" ON)

option(MNN_BUILD_TRAIN "Build MNN's training framework" OFF)

option(MNN_BUILD_DEMO "Build demo/exec or not" OFF)

option(MNN_BUILD_TOOLS "Build tools/cpp or not" OFF)

option(MNN_BUILD_QUANTOOLS "Build Quantized Tools or not" OFF)

option(MNN_EVALUATION "Build Evaluation Tools or not" OFF)

option(MNN_BUILD_CONVERTER "Build Converter" OFF)

option(MNN_SUPPORT_TFLITE_QUAN "Enable MNN's tflite quantized op" ON)

option(MNN_DEBUG_MEMORY "MNN Debug Memory Access" OFF)

option(MNN_DEBUG_TENSOR_SIZE "Enable Tensor Size" OFF)

option(MNN_GPU_TRACE "Enable MNN Gpu Debug" OFF)

option(MNN_PORTABLE_BUILD "Link the static version of third party libraries where possible to improve the portability of built executables" OFF)

option(MNN_SEP_BUILD "Build MNN Backends and expression seperately. Only works with MNN_BUILD_SHARED_LIBS=ON" OFF)

option(NATIVE_LIBRARY_OUTPUT "Native Library Path" OFF)

option(NATIVE_INCLUDE_OUTPUT "Native Include Path" OFF)

option(MNN_AAPL_FMWK "Build MNN.framework instead of traditional .a/.dylib" OFF)

option(MNN_FMA_ENABLE "x86 routine use fma extension" OFF)

option(MNN_WITH_PLUGIN "Build with plugin op support." OFF)

$ cmake ..

-- The C compiler identification is GNU 8.3.0

-- The CXX compiler identification is GNU 8.3.0

-- The ASM compiler identification is GNU

-- Found assembler: /usr/bin/cc

-- Check for working C compiler: /usr/bin/cc

-- Check for working C compiler: /usr/bin/cc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /usr/bin/c++

-- Check for working CXX compiler: /usr/bin/c++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

[Truncated]

With OpenCL I don't get any output; with Vulkan I get output, but it is incorrect.

I tried the following combinations for backend:

```

MNN::ScheduleConfig schConfig;

schConfig.type = MNN_FORWARD_VULKAN;

schConfig.backupType = MNN_FORWARD_CPU;

MNN::ScheduleConfig schConfig;

schConfig.type = MNN_FORWARD_VULKAN;

schConfig.backupType = MNN_FORWARD_VULKAN;

MNN::ScheduleConfig schConfig;

schConfig.type = MNN_FORWARD_OPENCL;

schConfig.backupType = MNN_FORWARD_CPU;

MNN::ScheduleConfig schConfig;

schConfig.type = MNN_FORWARD_OPENCL;

schConfig.backupType = MNN_FORWARD_OPENCL;

```

Status: Issue closed

Answers:

username_1: SliceTF is now supported on Vulkan and OpenCL |

yegor256/netbout | 130981100 | Title: Exclamation mark icon on production after recent release

Question:

username_0: After releasing version 2.23 to production I see exclamation mark icon on my avatar:

It shouldn't be there, as I have my email address set properly.

Answers:

username_1: @username_2 valid bug

username_2: @username_1 I tagged this as "bug"

username_2: @username_0 I set milestone here to `3.1`, let me know if it is wrong

username_2: @username_0 thanks for reporting! I topped your account for 15 mins, transaction 56b329ea4f694f57700000c7

username_2: @username_3 do this task

username_3: @username_0 @username_1 I just created a new user and I am not getting this issue. It seems this only affects already created users? I do not know how I can test this locally.

username_3: @username_0 Now I don't see this issue in my account either. It is gone now; could you please close the issue?

username_0: @username_3 I still see it in my profile

username_3: Here is my account, but I have a very strange issue now #1015.

username_3: @username_1 I cannot reproduce this with my account, so I can't test it.

username_1: @username_3 let's wait until fix for #1015 will be released

username_3: @username_2 waiting for #1015 to be released

username_2: @username_3 agreed, we'll wait for #1015

username_3: @username_1 I think it has something to do with:

```
private static XeSource source(final Alias alias) throws IOException {
    final String email;
    final String newemail;
    final String[] emails = alias.email().split("!");
    if (emails.length > 1) {
        email = emails[0];
        newemail = emails[1];
    } else {
        email = alias.email();
        newemail = "";
    }
    return new XeAppend(
        "alias",
        new XeDirectives(
            new Directives()
                .add("name").set(alias.name()).up()
                .add("locale").set(alias.locale().toString()).up()
                .add("photo").set(alias.photo().toString()).up()
                .add("email").set(email).up()
                .add("newEmail").set(newemail)
        )
    );
}
```

in XeAlias, it does return newemail even when there is no newemail ?
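To make the branch in the snippet above easier to reason about, here is a small sketch of the same split logic in Python. `split_email` is a hypothetical name, and the sketch mirrors only the two branches shown; how the page treats an empty `newEmail` element is not shown in this thread.

```python
def split_email(raw):
    """Mirror the snippet's branches: 'old!new' -> (old, new), else (raw, '')."""
    parts = raw.split("!")
    if len(parts) > 1:
        return parts[0], parts[1]
    return raw, ""


print(split_email("old@example.com!new@example.com"))
print(split_email("me@example.com"))
```

In both cases a second value is produced, so the `newEmail` element is always emitted; without a pending address it is simply an empty string.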

username_3: @username_0 Could you tell me what it says if you hover over it ?

username_0: @username_3 it says that my email is not yet verified

username_3: @username_0 does it specify the email because I want to know what the newEmail is returning, is it the same email you are now using or an old one ?

username_0: @username_3 the same as I'm using now

username_3: @username_0 Ok, I will see it is very interesting.

username_2: @username_0 we were waiting for #1015 - it is closed already

username_3: @username_2 Please assign someone else because I can not find the issue here.

username_2: @username_3 30 points was deducted from your rating

username_2: @username_3 all right, we'll find someone else for this task

username_2: @username_4 this is your task

username_4: @username_0 , I could reproduce this now. This appears to be an issue with the DynamoDB sync and is just a timing thing.

For me this happens:

* Change email, I get my verification link and experience #853 too

* If I click the link I get something like "no verification needed" as a notice

* Then I wait for say 15 minutes

* New email appears instead of old one at last and I see the exclamation mark, the fact that I clicked the link before when I got the "not needed" notice did not validate my email.

* But if I click it after I see the exclamation mark and the email address has changed and wait another 15 minutes, the icon disappears and all seems properly validated :)

... I'm on it fixing this, just in case you want to remove that exclamation mark ;), you should be able to remove it by simply visiting the validation link you got at some point, if you still have it that is ;)

username_4: @username_0 PR #1066 added, reproducing and fixing(well by turning off the cache) the above explanation.

username_0: @username_4 great job!

username_4: @username_0 thanks :), I think we can close here right? Workaround merged, rest goes elsewhere I suppose.

username_4: @username_0 ping :)

Status: Issue closed

username_0: @username_4 thanks!

username_4: @username_2 please pay attention to this ticket as well as my other closed tickets in your project. I closed all of them in a very short period of time each, still I'm blocked by having them pile up as open tasks and receive an unrealistic speed rating by them staying open long after the PRs are closed and payment on them is handled.

Thanks :)

username_2: @username_5 please, review this task for compliance with our [quality rules](http://at.teamed.io/qa.html)

username_5: @username_2 Looks good!

username_2: @username_5 many thanks

username_2: @username_4 paid 10 mins to @username_5 for QA review (payment ID is `79352562`); **38 mins** added to your account (payment number `AP-0CX14905429037629`), many thanks for your contribution! 66 hours and 1 min spent here.; there is a bonus for fast delivery (m=3961); +38 added to your rating, at the moment it is: [+730](http://www.netbout.com/b/36646?open=rating) |

rometools/rome | 484924321 | Title: Packing Issue - Alternatives Rome.Utils / Firebase?

Question:

username_0: Hello.

When I create a signed APK in Android Studio, I run into a problem.

* I'm using: implementation 'com.rometools:rome:1.12.1'

* In debug mode everything is OK: no problems, and RSS works fine

* But when I create a signed package, I get an error:

1 º **Duplicate jar entry [com/rometools/utils/Alternatives.class]**

2 º **Caused by: java.lang.RuntimeException: java.io.IOException: Can't write [C:\Users\Lucas\Desktop\OnePlaceX\br.com.oneplace\build\intermediates\transforms\proguard\release\0.jar] (Can't read [C:\Users\Lucas\Desktop\OnePlaceX\br.com.oneplace\build\intermediates\transforms\FirebasePerformancePlugin\release\187(;;;;;;;**.class)] (Can't read [com] (Can't read [rometools] (Can't read [utils] (Can't read [Alternatives.class] (Duplicate jar entry [com/rometools/utils/Alternatives.class]))))))**

3º My proguard:

**-dontwarn com.rometools.rome.**

-keep class com.rometools.** { *; }

-keep interface com.rometools.** { *; }**

4º Before use this version of rome 1.12.1, i was using this:

**implementation files('libs/android-rome-feed-reader-1.0.0.jar')

implementation files('libs/jdom-1.1.1-android-fork.jar')**

With those, the package build worked fine, but my RSS did not work as well as it does with version 1.12.1.

Can somebody help me with this problem? I have spent 3 days thinking and searching and found nothing.

Other people have the same error; here is the link:

https://stackoverflow.com/questions/53854658/duplicate-jar-entry-after-upgrade-to-gradle-3-2-0-3-2-1

Answers:

username_0: I ran some tests here, and the signed APK build works with version 1.12.1, BUT:

1 - **I have to remove the Firebase Performance plugin**

2 - What did I remove?

**classpath 'com.google.firebase:perf-plugin:1.3.1'**

**implementation "com.google.firebase:firebaseperf:${FIREBASE_PERFORMANCE_VERSION_SDK_VERSION}"**

3 - I think there is some conflict, but I need to use both the Firebase Performance plugin and ROME RSS 1.12.1.

What can I do?

Thanks in advance!

username_1: Maybe Rome does not work on Android at all. I have never tried to use it on Android, but there are plenty of articles on the web saying that older versions of jdom are not supported on Android and that even the latest version of jdom has some issues there.

username_0: So... but i dont understand why the error have some kind of problem with firebase perf plugin . Now or i use rome or firebase performance..

What you suggest to do?

username_1: Sorry, I can't help. I am not an Android developer. I recommend you to ask your question on StackOverflow.

Status: Issue closed

|

kubernetes-sigs/cluster-api-provider-azure | 609362212 | Title: LoadBalancer service is not accessible

Question:

username_0: /kind bug

**What steps did you take and what happened:**

Create a LoadBalancer service, public IP is allocated, but the service is not accessible through public IP.

**Anything else you would like to add:**

kube-controller-manager is adding correct inbound security rules to node-nsg with destination to be the allocated public IP. But capz securitygroup reconcile reset the rules to default and remove those rules.

**Environment:**

- cluster-api-provider-azure version: v0.4.2

- Kubernetes version: (use `kubectl version`):

- OS (e.g. from `/etc/os-release`):

Answers:

username_0: /assign

username_1: @username_0 I discussed with @username_3, we should do something similar to what the Azure cloud provider is doing: when reconciling the NSG, first get the existing NSG if it is already created and get the existing rules. Then, only add the rules we need for the CAPZ cluster to be functional if they do not already exist but preserve all the additional existing rules.

We should also use ETAGs to make sure we do not update the NSG while something else (eg. Azure Cloud Provider) is updating it. If we don't, that could cause a race condition where we fetch the existing rules [a, b, c] and in the meantime something else deletes b but CAPZ updates the rules to [a, b, c, d] and thus we undo the rule deletion.

This is where Azure Load Balancer updates the NSG and checks the ETAG to make sure its version of the NSG is still current: https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/azure/azure_backoff.go#L149-L175

And this is where Azure Load Balancer determines what rules to apply: https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/azure/azure_loadbalancer.go#L1112-L1307

Let me know if any of this doesn't make sense.
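The two ideas in this comment (merge instead of overwrite, and guard the write with an ETag) can be sketched as follows. This is an illustration under stated assumptions, not CAPZ or Azure SDK code: `FakeNsgStore`, `ConflictError`, and `reconcile` are hypothetical names, and the integer ETag stands in for the opaque value a real API would return.

```python
class ConflictError(Exception):
    """Raised when the stored ETag no longer matches the caller's ETag."""


class FakeNsgStore:
    """Hypothetical in-memory stand-in for an NSG API with If-Match semantics."""

    def __init__(self, rules):
        self.rules = list(rules)
        self.etag = 1

    def get(self):
        return list(self.rules), self.etag

    def put(self, rules, if_match):
        if if_match != self.etag:
            raise ConflictError("NSG changed since it was read")
        self.rules = list(rules)
        self.etag += 1


def reconcile(store, required, retries=3):
    """Add missing required rules while preserving every existing rule."""
    for _ in range(retries):
        existing, etag = store.get()
        merged = existing + [r for r in required if r not in existing]
        try:
            store.put(merged, if_match=etag)
            return merged
        except ConflictError:
            continue  # someone else updated the NSG; re-read and retry
    raise ConflictError("gave up after repeated conflicts")


store = FakeNsgStore(["a", "b", "c"])
print(reconcile(store, required=["a", "d"]))
```

If another writer deletes rule `b` between the read and the write, the `put` fails instead of silently restoring `b`, and the retry starts from the fresh rule set.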

username_0: Thanks @username_1 for sharing this. Make sense to me.

username_1: /priority important-soon

username_0: /assign

username_2: I went through the changes required for this issue. As suggested,

azure-cloud-provider uses its own client https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/azure/clients/armclient/azure_armclient.go#L44 to communicate with Azure resources which has the provision to specify ETAG Headers:

```

WithHeader("If-Match", autorest.String(etag))

```

cluster-api-provider-azure uses the client from `azure-sdk-for-go`, which does not have this provision. Adding support for headers would hardly be more than a 3-line change, but it would have to be made in the https://github.com/Azure/azure-sdk-for-go repository.

So to have ETAG provision there are three options:

1. Import armClient from legacy-cloud-provider (is this import allowed? It is not used anywhere in the code)

2. Make changes to the azure-sdk-for-go client

3. Create a new client for this repo (too much work; is it feasible?)

@username_1 Can you please suggest which option would be better? Or is there another way to proceed?

username_0: /unassign

username_0: /assign username_2

username_3: @username_2 The older versions (< 1.18) of azure-cloud-provider use `azure-sdk-for-go`. We have etag checks in those releases for security groups - https://github.com/kubernetes/kubernetes/blob/release-1.16/staging/src/k8s.io/legacy-cloud-providers/azure/azure_backoff.go#L184-L215. We should be able to do the same here.

username_2: Thanks @username_3 I got your point |

iamgabrielma/Python-for-stock-market-analysis | 930215319 | Title: Add validation method so tickers in tickerList are unique

Question:

username_0: At the moment `tickerList` may have duplicated tickers, which causes the [JSON output](https://raw.githubusercontent.com/username_0/Python-for-stock-market-analysis/main/testData/2021-06-25-rsi.json) to mess with the `id`'s as well.
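One possible shape for such a validation step is sketched below. It is not code from this repository: `unique_tickers` and `with_ids` are hypothetical helpers, and treating tickers case-insensitively and starting ids at 0 are assumptions.

```python
def unique_tickers(ticker_list):
    """Drop duplicate tickers (case-insensitive), keeping first-seen order."""
    seen = set()
    result = []
    for ticker in ticker_list:
        key = ticker.strip().upper()
        if key and key not in seen:
            seen.add(key)
            result.append(key)
    return result


def with_ids(tickers):
    """Attach consecutive ids starting at 0 (the start value is an assumption)."""
    return [{"id": i, "ticker": t} for i, t in enumerate(tickers)]


rows = with_ids(unique_tickers(["AAPL", "MSFT", "aapl", "TSLA", "MSFT"]))
print(rows)
```

Assigning ids after deduplication keeps them both unique and consecutive, which is what a consumer keyed on `id` needs.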

**Problems:**

1. Items do not have a unique, consecutive ID

2. When we fetch the data via the iOS app, the data does not load, as the SwiftUI `Identifiable` protocol requires unique IDs |

kaerosen/tilemaps | 670563529 | Title: Extra features

Question:

username_0: Great to see this algorithm implemented in R.

I worked on the tile map paper. After developing the original algorithm, we considered a couple of extra features:

1) Repel non-neighbors

2) Allow using the concave hull of the centroids rather than the original boundary

These were not added in time for the paper, but are included in the demos:

[https://observablehq.com/@username_0/make-a-tile-map](https://observablehq.com/@username_0/make-a-tile-map)

[https://username_0.github.io/tile-maps-eurovis-demo/](https://username_0.github.io/tile-maps-eurovis-demo/)

I don't think these features are included in this package? If not, it might be nice to add them at some point?

Answers:

username_1: Thanks for letting me know about these extra features! They are currently not included in this package, but I will definitely look into adding them in the future.

username_2: using this as R code:

install.packages(c("tilemaps", "sf"))

library(tilemaps)

library(sf)

library(dplyr)

library(ggplot2)

governors <- governors %>%

mutate(tile_map = generate_map(geometry, square = FALSE, flat_topped = TRUE))

head(governors)

ggplot(governors) +

geom_sf(aes(geometry = tile_map)) +

geom_sf_text(aes(geometry = tile_map, label = abbreviation),

fun.geometry = function(x) st_centroid(x)) +

theme_void()

I am trying to build a Canadian tile map. I got the geometry (lpr_000b16a_e.shp, found at https://open.canada.ca/data/en/dataset/47bd4f2e-1c77-49f8-8406-dc4dca64ee6b) from Statistics Canada.

When I use the R code above, I get this error:

Error: Problem with `mutate()` input `tile_map`.

x regions are not contiguous i Input `tile_map` is `generate_map(geometry, square = FALSE, flat_topped = TRUE)

How can I create a tile map of the Canada provinces?
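The error message says the regions are not contiguous. As a rough illustration of what a contiguity check means, here is a generic breadth-first search over a hypothetical adjacency map. It is written in Python rather than R, uses toy regions instead of the real province geometry, and is not the tilemaps package's implementation.

```python
from collections import deque


def is_contiguous(adjacency):
    """Return True if every region is reachable from the first via shared borders."""
    if not adjacency:
        return True
    start = next(iter(adjacency))
    seen = {start}
    queue = deque([start])
    while queue:
        region = queue.popleft()
        for neighbor in adjacency[region]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(adjacency)


# Toy data: region "D" is an island with no shared border.
mainland = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
with_island = {**mainland, "D": set()}

print(is_contiguous(mainland))
print(is_contiguous(with_island))
```

A single region without any shared border is enough to make the whole set non-contiguous.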

username_1: Hi, I have opened a new issue (#4) to address this question.

Status: Issue closed

|

altugcagri/boun-swe-573 | 449286891 | Title: Make Changes On Frontend Api

Question:

username_0: **Is your feature request related to a problem? Please describe.**

Change the related API calls according to back-end URL's.

Make sure all changes done properly.

Test front-end application with back-end of yours. |

eclipsesource/jsonforms | 283317050 | Title: npm run build doesn't work on Windows because ../ (forward slash) is not recognized

Question:

username_0: (node:10276) [DEP0018] DeprecationWarning: Unhandled promise rejections are deprecated. In the future, promise rejections that are not handled will terminate the Node.js process with a non-zero exit code.

Answers:

username_1: Found multiple things:

NPM closed this issue by a bot: https://github.com/npm/npm/issues/13789

Yarn has a solution: https://github.com/yarnpkg/yarn/issues/1729

SO suggest to use symlinks https://stackoverflow.com/questions/28078780/relative-paths-in-package-json

@edgarmueller any ideas?

username_2: i just ran into the same issue while trying to run build

Status: Issue closed

|

INFURA/hackathons | 547153774 | Title: Infura API Optimization Prize (500 Dai)

Question:

username_0: ### Infura Ethereum API Optimization Prize

### Prize Bounty

500 Dai

### Challenge Description

Each Ethereum network call costs $ and can be vulnerable to network clogging. We'd like to see you think about how you can lessen either the number or the gas cost of eth_calls that your dapp makes, and showcase improvements. This is a wide-ranging topic and we invite you to be creative. Additionally, you can use contracts like makerdao's Multicall (https://github.com/makerdao/multicall) either directly or for inspiration.

### Submission Requirements

A valid submission will include a short project video demo as well as a text description of how the number/complexity/gas cost of eth_calls is reduced, including a before and after comparison.

### Submission Deadline

January 23, 2020

### Judging Criteria

The project demo and code will be evaluated by Infura team members, and the one best optimizing eth_calls will be awarded the prize. Judging includes criteria based on execution, project goal, originality, and code quality.

### Winner Announcement Date

The winner will be announced via our Twitter and/or other channels within 1 week of the hackathon ending.

Answers:

username_1: I needed to look up the ether and token balances of several wallets, then saw this bounty, so I decided to make something useful for others as well.

Because ethcall uses multicall, the number of calls made effectively goes down to 1.
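
To illustrate the before and after comparison the bounty asks for, here is a minimal sketch of why batching reduces the call count. It does not use ethcall, Multicall, or any real Ethereum client: `CountingNode`, its `call` and `aggregate` methods, and the wallet addresses are all hypothetical stand-ins that only count round trips.

```python
from dataclasses import dataclass


@dataclass
class CountingNode:
    """Hypothetical stand-in for an Ethereum node; it only counts round trips."""

    balances: dict
    round_trips: int = 0

    def call(self, address):
        # One network request per balance lookup.
        self.round_trips += 1
        return self.balances[address]

    def aggregate(self, addresses):
        # One network request that resolves every lookup at once,
        # which is the idea behind a Multicall-style aggregator.
        self.round_trips += 1
        return [self.balances[a] for a in addresses]


# Made-up wallets and balances, for illustration only.
wallets = {"0xaaa": 5, "0xbbb": 0, "0xccc": 12}

# Before: one request per wallet.
naive = CountingNode(wallets)
naive_result = [naive.call(address) for address in wallets]

# After: a single aggregated request.
batched = CountingNode(wallets)
batched_result = batched.aggregate(list(wallets))

print(naive.round_trips, batched.round_trips)
```

Both approaches return the same balances; only the number of round trips differs, and that count is what a submission would need to measure against a real node.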

Status: Issue closed

|

PhelanBavaria/ancienttimeline | 202389008 | Title: Basic ideas

Question:

username_0: So, having looked through the ideas and playing the game for a bit, I think we should redo the basic ideas. Most don't really fit with the time, and while they could be renamed and such as some have, it'd probably be easier and better to just redo them. In addition, we could look at policies, though I'm not sure what to do for them. ET uses different ideasets for early eras, so I suppose we could do something like that and maybe have some more dynamic ones as well (available to specific cultures, religions, locations, techs, times &c.) What do you think?

Answers:

username_1: Sounds good

username_2: I started I guess. I already changed Humanist Ideas to Altruistic ideas.

username_0: So, these are some of the ones I've got so far. Haven't got all of them done yet, and the numbers aren't final.

[00_basic_ideas.txt](https://github.com/PhelanBavaria/ancienttimeline/files/785848/00_basic_ideas.txt)

username_0: Right, I've finished a few idea groups. I'll localise them in a bit and I'll add some more. Do we want to add the 'early ideas' from ET and/or do we want to create an equivalent 'really early' group of ideasets for the beginning of AT? |

vimalloc/flask-jwt-extended | 396246096 | Title: Allow JWT_DECODE_AUDIENCE to be an array

Question:

username_0: Hi. I have spent a lot of time with your library and I use it a lot, including for verification of externally issued OIDC tokens. I would like to propose that JWT_DECODE_AUDIENCE accept an array, so that token verification allows multiple audiences.

Scenarios. Identity Clients accessing API:

- CLI tool could be one consumer (direct grant)

- Web client could be second consumer (implicit flow)

- Web developer running on localhost could be third consumer (client has different redirects)

All are issued by the same provider and all are valid. The only difference is that each client has a different AUDience. It seems to be common practice to allow an API to verify multiple audiences. https://github.com/auth0/node-jsonwebtoken/issues/4

So if I could do JWT_DECODE_AUDIENCE = ['ai.mysoft.web','ai.mysoft.cli','ai.mysoft.localhost'] that would be great. Does that make sense?

Answers:

username_0: FROM: https://openid.net/specs/openid-connect-core-1_0.html#IDToken

```

aud

REQUIRED. Audience(s) that this ID Token is intended for. It MUST contain the OAuth 2.0 client_id of the Relying Party as an audience value. It MAY also contain identifiers for other audiences. In the general case, the aud value is an array of case sensitive strings. In the common special case when there is one audience, the aud value MAY be a single case sensitive string.

```

If you allow, I'd like to contribute an OIDC usage example, including autodiscovery and assembly of the RSA public key from JWK endpoint data.

username_1: Yeah, `aud` should absolutely allow arrays, it was an oversight that wasn't caught when adding that feature. PyJWT already supports `aud` being an array, so all we need to do for this extension is update the config helper file, add the documentation, and write a unit test.

Would you be willing to submit a PR to add these features? If not, I can add them when I have a free moment, probably this weekend.

Thanks!

Status: Issue closed

username_1: So it looks like an array can already be passed in `JWT_DECODE_AUDIENCE`, whatever is passed in there is forwarded directly to PyJWT behind the scenes. I've updated the documentation to make this clearer, and added a unit test to make sure that this functionality is never accidentally removed in future versions of this library.
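
As an illustration of the rule being discussed, here is a minimal sketch of an audience check that accepts either a single string or a list on both sides, following the OpenID Connect wording quoted earlier in this thread. It is not PyJWT's or flask-jwt-extended's implementation; `audience_matches` is a hypothetical helper, and the audience names are the ones from the original request.

```python
def audience_matches(token_aud, accepted):
    """Return True if the token's `aud` claim overlaps the accepted audiences.

    Either argument may be a single string or a list of strings.
    Comparison is case sensitive, as the OIDC text requires.
    """

    def as_set(value):
        # Normalise None, a single string, or a list into a set of strings.
        if value is None:
            return set()
        if isinstance(value, str):
            return {value}
        return set(value)

    return bool(as_set(token_aud) & as_set(accepted))


# Audience list taken from the request above.
ACCEPTED = ["ai.mysoft.web", "ai.mysoft.cli", "ai.mysoft.localhost"]

print(audience_matches("ai.mysoft.cli", ACCEPTED))                  # single-string aud
print(audience_matches(["other.client", "ai.mysoft.web"], ACCEPTED))  # array aud
print(audience_matches("AI.MYSOFT.CLI", ACCEPTED))                  # case differs
```

The first two checks pass because at least one audience overlaps; the third fails because the comparison is case sensitive.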

Cheers :+1:

username_0: @username_1 as promised https://github.com/username_1/flask-jwt-extended/pull/222 |

Lusito/forget-me-not | 585534448 | Title: Cookies not cleared at browser startup

Question:

username_0: Cookies are not cleared at browser startup, which means that after browser startup I am still logged in to Facebook.

Answers:

username_1: Hello and thanks for the report. Since this is still working correctly here, it is very likely that the add-on is configured incorrectly on your side.

You need to enable cleanup on startup in the settings tab of the add-on.

Also, your rules need to be configured to remove Facebook data. Please check out the tutorial included in the add-on.

Status: Issue closed

|

Kanazawanaoaki/Style-Transfer-colab | 593030608 | Title: TODO

Question:

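username_0: - Make the images in the README look nice.

Create an image with the pictures arranged side by side, [like this](https://github.com/albumentations-team/albumentations/blob/master/README.md), and paste it in.

- Improve the results.

Look at [other implementations](https://github.com/keras-team/keras/blob/master/examples/neural_style_transfer.py) and improve the code so that the output looks cleaner.

- Try other methods as well.

For example, the improvements listed [here](https://elix-tech.github.io/ja/2016/08/22/art.html#improve)

and the methods introduced in [this article](https://ai-scholar.tech/articles/treatise/style-ai-224) |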

kiali/kiali | 669725322 | Title: Create a simple kiali helm installer

Question:

username_0: Collecting feedback from the community, it seems that for some basic scenarios people don't want to use the kiali-operator to install Kiali.

This issue is open to study the possibility of providing a very simple way to install an instance of Kiali in Istio without using the operator.

Note that this does not change the strategy of using the operator, which is designed to manage multiple and complex scenarios.

Finally, the simple Kiali helm installer should have a very limited demo scope; it should not be a recommended way to install Kiali in production scenarios, where the operator should be the way to go.

Answers:

username_1: I agree we could produce and publish a basic "demo" kiali server helm chart. There are some things people will lose if they opt-out of the operator:

1. You lose the ability for multi-tenancy support like Kiali has for Maistra

2. You lose the ability to auto-detect and correct unauthorized changes to the Kiali resources (e.g. if someone deletes the Kiali Deployment or edits the Kiali ConfigMap, the operator is no longer there to auto-revert - it is on you to detect those changes and manually revert)

3. You lose the ability to change the accessible namespaces on the fly (the operator today can force Kiali to have cluster roles given to it only for the namespaces in the mesh - thus adding a level of security). The basic helm chart will have accessible_namespaces=** thus allowing Kiali to see everything. That's how it is with the upstream Istio release, so this is no different than how people are used to using it, but I bring it up anyway.

4. You lose the OpenShift Console Links (operator creates those on the fly as namespaces are added to the accessible namespaces list).

There are probably other things I'm forgetting, those are just off the top of my head.

Status: Issue closed

|

MicrosoftDocs/office-docs-powershell | 643643953 | Title: EnableAuthAdminReadSession is missing

Question:

username_0: [KB4559435](https://support.microsoft.com/en-us/help/4559435/) describes that EnableAuthAdminReadSession has been added and links for more info to the [Set-OrganizationConfig](https://docs.microsoft.com/en-us/powershell/module/exchange/set-organizationconfig) page. However, this parameter isn't discussed on this page.

A bit more clarification regarding this parameter would be nice as well (also on the KB article page), like for instance for what scenarios you’d want this and what the default is.

Thanks!

Robert

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: 03895b30-2bb7-9e96-28db-def76ae59029

* Version Independent ID: 8056b3ee-4fab-3a5c-935b-a9d0e9ad82a4

* Content: [Set-OrganizationConfig (exchange)](https://docs.microsoft.com/en-us/powershell/module/exchange/set-organizationconfig?view=exchange-ps)

* Content Source: [exchange/exchange-ps/exchange/Set-OrganizationConfig.md](https://github.com/MicrosoftDocs/office-docs-powershell/blob/master/exchange/exchange-ps/exchange/Set-OrganizationConfig.md)

* Service: **exchange-powershell**

* GitHub Login: @username_2

* Microsoft Alias: **username_2**

Answers:

username_1: Get-RetentionCompliancePolicy

username_1: @officedocsbot assign @username_1

Status: Issue closed

|

beefoo/climate-lab | 250097027 | Title: Research into what are some warming/cooling events/regions

Question:

username_0: By looking at the global and regional temperature records, see if we can identify specific climate events that we can call out, e.g.:

- Cooler-than-usual or warmer-than-usual set of years

- Various regional heat waves

- Specific historical markers of significance (e.g. various periods of industrialization, like WW2)

Answers:

username_0: The dip between 1940 and 1970 can be explained by aerosols emitted by industrial activities and volcanic eruptions:

https://www.newscientist.com/article/dn11639-climate-myths-the-cooling-after-1940-shows-co2-does-not-cause-warming/

username_0: The Mt. Agung eruption in March 1963 produced a decrease of about 0.5°C in the mean temperature of the tropical troposphere

http://science.sciencemag.org/content/194/4272/1413

username_0: [Clean air acts](https://en.wikipedia.org/wiki/Clean_Air_Act) reduced aerosol emissions, thus warming effects of GHGs slowly outweighed cooling effects of aerosols

username_0: The three largest volcanic eruptions of the 20th century seem to align with short-term cooling:

[Santa María Volcano in 1902](https://en.wikipedia.org/wiki/Santa_Mar%C3%ADa_(volcano))

[Mt Novarupta eruption of 1912](https://en.wikipedia.org/wiki/Novarupta)

[Mt Pinatubo of 1991](https://en.wikipedia.org/wiki/Mount_Pinatubo)

username_0: 1870 is the approximate beginning of the [2nd Industrial Revolution](https://en.wikipedia.org/wiki/Second_Industrial_Revolution)

username_0: [Strong El Nino contributed to 1940-1942 warming](http://onlinelibrary.wiley.com/store/10.1256/wea.248.04/asset/2005601203_ftp.pdf?v=1&t=j8ddjopz&s=9662788abf1afd959761bd4dc9c3e04593935fcb) |

RohitSurwase/UCE-Handler | 346821167 | Title: The lib doesn't work on API 26?

Question:

username_0: it works fine on my Samsung s6 (API 24), but it doesn't work on my other Samsung s7 (API 26). Do you have any idea?

Answers:

username_1: @username_0 Sorry for the delay (I was not notified). Could you please provide more details about the issue? Error logs would be fine.

Status: Issue closed

|

material-components/material-components-web | 275110544 | Title: Remove events from persistent drawer

Question:

username_0: I want to add some extra features to mdc-persistent-drawer, but it registers handlers for events like pointerup, pointerdown, click, and pointermove. These are completely useless to me and I can't find a way to remove them. I tried unbind, .off, and overriding them, but no luck.

Answers:

username_1: Yep, I had to remove the click listener as well.

I guess the pointer/scroll event handling was added to stop the main page content from scrolling.

I have no idea why disabling clicks is required.

```

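// Assumes `drawer` is the imported drawer module.
var persistentDrawer = new drawer.MDCPersistentDrawer(...)

// Look up the <nav> element via the component's private root_ property.
var drawerEl = persistentDrawer.root_.querySelector('nav')

// Detach the handler that stops propagation of click events.
drawerEl.removeEventListener('click', persistentDrawer.foundation_.drawerClickHandler_)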

```

username_2: @username_0 why do you have to remove these event handlers?

username_0: I want to customize the drawer with various elements. For example, if I add a fancier list, the elements in the list won't be clickable (gray background on mousedown...)

Status: Issue closed

username_3: We will be removing the `drawerClickHandler_` which currently stops propagation of the click event. This is being done in #1138, similar discussion on #1004. |

Th3Shadowbroker/OuroborosMines | 714021381 | Title: Item duration isn't decreased when mining

Question:

username_0: **Describe the bug**

Originally described by @laspi94 as `mining on the region whith the flag, item no loss durability.`

**To Reproduce**

Steps to reproduce the behavior:

1. Mine within a region.

2. Mine.

3. Item durability isn't decreased.

**Expected behavior**

The mining tool is supposed to lose durability.<issue_closed>

Status: Issue closed |

AppImage/AppImageKit | 383988025 | Title: Portable Apps and their settings: Portable Home

Question:

username_0: Hi, lead developer of [ORB Applications](https://www.orbital-apps.com/) here.

We have developed a "standard" called **[Portable Home](https://github.com/username_0/orb-specification/issues/2)**, designed for the portability of data used by [Portable Apps](https://www.orbital-apps.com/portable).

I was wondering if there are plans to have "Portable AppImages" (Portable Apps)?

Is there interest in adopting something like this "standard"?

Nothing is set in stone regarding this standard, so it can be changed, if necessary.

Feel free to comment [on the Github page](https://github.com/username_0/orb-specification/issues/2) or here.

Answers:

username_1: @username_2 we think that whether data should travel alongside the AppImage or not should be determined by the user, not the author of an AppImage.

username_1: @username_0 do you think all functionality is now in place and this ticket can be closed?

Please see https://docs.appimage.org/user-guide/portable-mode.html

Status: Issue closed

username_2: @username_1 I think that's a bad idea. This works fine for rather advanced linux users, but the average user expects to be able to download a "portable edition" without possibly screwing up the portability with manually creating directories. I think like this, the functionality is simply useless for a larger part of the userbase...

At the very least there would need to be a graphical dialog at launch to choose the mode. But that again would need a possible way *inside* the application to prompt for this

username_1: You could put into your AppImage a custom AppRun script that would call

```

...

mkdir -p "${APPIMAGE}.home"

export HOME="${APPIMAGE}.home"

....

```

I think. The downside is that the user can no longer decide.

username_1: @username_2 do you have a concrete application in mind?

username_2: Sure, this is e.g. a common problem with IDEs: it is often useful to ship programming language-specific flavors (special set of addons & settings), but if the user already has a system-wide install all the settings might be messed up and interfering, so it is vital to be able to offer such a flavored IDE as a portable application. IDEs are also the kind of app where users will be very not amused if the settings are botched up, since they're usually highly configured to personal needs.

Apart from that, any creative application which the user might want to use on the run and keep everything with them, and they would possibly end up disappointed if they discovered on the run they messed up making it portable and half the settings are gone. It's just better to be able to ship a clearly separate, clearly portable build that is always portable no matter what, so that such room for error is eliminated

username_2: Is this something that is done inside the app image, or outside? Because if it can't be done inside without external scripts, it doesn't really help.

Also, there is always the use case for portable IDEs, portable audio editing apps, ... what have you, and for those the use case of "just give me a portable version I can't accidentally make not portable" always remains. I was just trying to give more specific examples since you asked for it, but I don't think those are super relevant for the general use case of an always-portable-no-matter-what version

username_1: Inside.

Point is, you don't really need a portable "version", since every (recent) AppImage can be used in a "portable" way.

username_2: You're not wrong, but depending on the target audience it's still useful to have an explicit portable version - but you made it abundantly clear that is possible to do, so that's good enough for me :+1: sorry for the comment spam

username_1: Thanks @username_2

username_3: Say I have a folder next to my appimage called program.appimage.home.config

Will it store both config and the home directory files there, neither or only one? Or do I simply not need it?

username_4: If your AppImage is called `a.AppImage`, you can create the directories `a.AppImage.home` and/or `a.AppImage.config`. The former should include configuration files. You should probably test this, though, as these are experimental features.
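
As a small illustration of the naming convention described here, the sketch below creates the `.home` and, optionally, the `.config` directory next to an AppImage file. The helper name `make_portable_dirs` and its `with_config` flag are hypothetical, and the code only creates directories; whether a given application then keeps all of its data there still needs to be tested, as noted above.

```python
import tempfile
from pathlib import Path


def make_portable_dirs(appimage_path, with_config=False):
    """Create `<AppImage>.home` (and optionally `<AppImage>.config`) beside the file."""
    appimage = Path(appimage_path)
    targets = [Path(str(appimage) + ".home")]
    if with_config:
        targets.append(Path(str(appimage) + ".config"))
    for directory in targets:
        # exist_ok avoids an error when the directory was created earlier.
        directory.mkdir(exist_ok=True)
    return targets


# Demonstration in a throwaway directory with an empty placeholder file.
with tempfile.TemporaryDirectory() as tmp:
    appimage = Path(tmp) / "a.AppImage"
    appimage.touch()
    created = make_portable_dirs(appimage, with_config=True)
    names = sorted(p.name for p in created)
    all_exist = all(p.is_dir() for p in created)

print(names)
```

For an AppImage called `a.AppImage` this yields the two directory names mentioned above, `a.AppImage.home` and `a.AppImage.config`.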

username_3: So technically only .home should be necessary?

username_4: It highly depends on the application. In theory, as long as the application references everything from `$HOME`, I'd say yes. But you should really test this.

username_3: Ok, so far, no appimage has misbehaved without the .config folder (as in, none has written outside of the .home folder unless specified). Thank you.

username_4: There is the [XDG base dir spec](https://specifications.freedesktop.org/basedir-spec/basedir-spec-latest.html), which most frameworks support. But you cannot predict the environment variables on a system, and there are implementation specific details. Therefore, these directories are considered experimental, as they do not attempt to make guarantees but are a best effort solution. |

ant-design/ant-design | 347853922 | Title: [collapse] add extra field like that in card component

Question:

username_0: - [x] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### What problem does this feature solve?

It would be a good place to put actions which may manipulate a collapse panel or its content. Though it can be implemented by creating a `float: right` div in `header`, I know, it's not a clean solution.

### What does the proposed API look like?

Just like `card` component.

```jsx

const extra = 'string' || () => <p>string</p>;

return (

<Panel header="This is panel header 1" key="1" extraField={extra}>

<p>{text}</p>

</Panel>

)

```

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

A screenshot may be helpful :D

<img width="783" alt="2018-08-06 5 38 06" src="https://user-images.githubusercontent.com/12122021/43709728-3159241e-99a0-11e8-8dd6-302dfb49cf27.png"><issue_closed>

Status: Issue closed |

MelvorIdle/melvoridle.github.io | 1088841239 | Title: [Bug]: <mobile sometimes does little to no actions with the app closed>

Question:

username_0: ### Describe the bug

There’s been a few times where I start a task before going to sleep and I will gain nothing over night afk

### Reproduction Steps

Started thieving before closing app and woke up with about 20 minutes of actions done

### Expected behaviour

_No response_

### Screenshots

_No response_

### Console output

_No response_

### Which platforms are you experiencing this bug on?

- [ ] Chrome

- [ ] Firefox

- [ ] Edge

- [ ] Safari

- [X] Mobile App (iOS)

- [ ] Mobile App (Android)

- [ ] Steam

- [ ] Other (Please Specify)

### Which version of the game are you experiencing this bug on?

v1.0

### Game Subversion

?1419

### Are you using any scripts or extensions?

No

Answers:

username_1: Many fixes for various offline progress issues have been released over the last few days. Please ensure you are running at least v1.0.3 (?1843).

Please open a new issue if the problem persists.

Status: Issue closed

|

DNNAssociation/DnnSummit2019.Mobile | 391428548 | Title: Update Location Model, ViewModel and View to pull data from the website

Question:

username_0: ## Description of problem

Currently, the Location data is stubbed in code. This needs to be changed to pull data from the website API.

## Description of solution

Update the Location Model, ViewModel and View to pull data from the website.

## Description of alternatives considered

n/a

## Additional context

n/a

## Screenshots

n/a

## Affected version

* [x] 1.0.0

## Affected device

* [x] Android

* [x] iOS<issue_closed>

Status: Issue closed |

MarkRedeman/ProfessorFrancken | 146269294 | Title: Choose an automated code review service

Question:

username_0: Currently I've enabled [nitpick-ci](https://nitpick-ci.com) and [styleci](https://styleci.io/). StyleCI requires some additional configuration, and Nitpick CI can't review our test files because they use snake-cased function names.<issue_closed>

Status: Issue closed |

moment/moment-timezone | 151998505 | Title: Bug when parsing unix timestamp and using default time zone

Question:

username_0: As reported in moment/moment#3158 there is an issue where parsing an 'X' token will not work correctly with a default timezone.

```js

moment.tz.setDefault("America/Chicago");

moment("1461906597", "X").isValid(); // false

```

This is an issue with the way updateOffset handles default time zones.

```js

if (mom._z === undefined) {

if (zone && needsOffset(mom) && !mom._isUTC) {

mom._d = moment.utc(mom._a)._d;

mom.utc().add(zone.parse(mom), 'minutes');

}

mom._z = zone;

}

```

This bit of code will attempt to take the initial _a array from the moment config, and use it to construct a new UTC moment. With a timestamp, _a is an array with a bunch of undefined values, ergo this doesn't work.

Answers:

username_1: I added a unit test for this to my fork of the repo: https://github.com/username_1/moment-timezone/commit/dde70303f5e148362090bb508918cb40a46d8bca.

username_2: Sorry, is there a solution for this issue at the moment (besides the unit test that was added)?

Or is there any other way to apply a kind of "default" timezone to all subsequent moment().format() calls?

username_3: The workaround for now is to pass the timestamp as a number instead of a string.

```js

moment.unix(1461906597) // seconds

moment(1461906597123) // milliseconds

```

If you have a string, then use `+` or `parseInt` to convert to number.

username_2: Thank you! Will it apply my default timezone, or just help to avoid errors?

username_3: It's a better form to use anyway, and yes - it will work with the default time zone. The bug is only that the default timezone isn't working with `x` or `X` string formats. |

FB-18-19-PreAP-CS/math-helper-mosgood549 | 363273243 | Title: Don't use sleep

Question:

username_0: I think it would be nicer if either the selection section showed up right away after using a formula, or a prompt appeared saying something like "would you like to use another formula? (y)es or (n)o". I just thought that might look better.

Answers:

username_1: I use sleep to make it easier to see the math helper homepage, and it helps the user see the answer without having to scroll through the shell. I think that I'm going to keep it there, because I like the way that it organizes my code.

Status: Issue closed

|

ExchangeCore/Concrete5-CKEditor | 112214719 | Title: Setup appropriate default plugins

Question:

username_0: I just kind of tossed a few in there as an example. We should come up with a reasonable list to attempt to mimic the functionality that redactor provides by default.

See https://github.com/ExchangeCore/Concrete5-CKEditor/blob/master/controller.php#L169-L183

Answers:

username_1: I whittled down what I think is a good default set of plugins.

```php

protected function setupDefaultPlugins()

{

$this->getConfig()->save('plugins',

array(

'a11yhelp',

'basicstyles',

'blockquote',

'clipboard',

'colorbutton',

'colordialog',

'contextmenu',

'dialogadvtab',

'elementspath',

'enterkey',

'entities',

'floatingspace',

'font',

'format',

'htmlwriter',

'image',

'indentblock',

'indentlist',

'justify',

'link',

'list',

'liststyle',

'magicline',

'removeformat',

'resize',

'showblocks',

'showborders',

'sourcearea',

'specialchar',

'stylescombo',

'tab',

'table',

'tabletools',

'toolbar',

'undo',

'wysiwygarea'

)

);

}

```

username_0: This is a pretty solid list.

Do you have any particular love for the blockquote / specialchar functionality? At a glance I'm not sure I see blockquote being used much by my basic users, ditto for the symbols stuff. With it being so easy to turn on I feel more inclined to strip this down as far as possible and let people build it back up to their liking.

I don't feel very strongly about this either way but anything we can do to dwindle down options to eliminate confusion seems ideal to me.

username_1: You are right, those two aren't essential.

Last night I tried to remove the copy, paste, and cut, but didn't realize it was "Clipboard".

```php

protected function setupDefaultPlugins()

{

$this->getConfig()->save('plugins',

array(

'a11yhelp',

'basicstyles',

'colorbutton',

'colordialog',

'contextmenu',

'dialogadvtab',

'elementspath',

'enterkey',

'entities',

'find',

'floatingspace',

'font',

'format',

'htmlwriter',

'image',

'indentblock',

'indentlist',

'justify',

'link',

'list',

'liststyle',

'magicline',

'removeformat',

'resize',

'showblocks',

'showborders',

'sourcearea',

'stylescombo',

'tab',

'table',

'tabletools',

'toolbar',

'undo',

'wysiwygarea'

)

);

}

```

username_0: Ok i'm closing this per https://github.com/ExchangeCore/Concrete5-CKEditor/commit/58c1621792a95073371681ed62aeac2ae943097b we can always revisit later if necessary

Status: Issue closed

|

syndesisio/syndesis | 319593869 | Title: Salesforce connection keep showing configuration issue message

Question:

username_0: ## This is a...

<!-- Check ONLY one of the following options with "x" -->

<pre><code>

[ ] Feature request

[x ] Regression (a behavior that used to work and stopped working in a new release)

[x ] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Documentation issue or request

</code></pre>

## The problem

<!--

Briefly describe the issue you are experiencing (or the feature you want to see implemented on Syndesis).

+ For BUGS, tell us what you were trying to do and what happened instead.

+ For NEW FEATURES, describe the _User Persona_ demanding it and its use case.

-->

A valid Salesforce connector configuration was set up, and when the connection was created the following error message appeared:

## Expected behavior

<!-- Describe what the desired behavior would be, listing the acceptance criteria. -->

There should not be a warning, as the configuration is correct. |

web3j/web3j | 309266755 | Title: Unable to make transaction using admin.personalSendTransaction method.

Question:

username_0: I am using geth and I have used personalNewAccount to create an account. To make the transaction I wrote this.

`HttpService httpService = new HttpService(envConfiguration.getTestNetServerUrl());

Admin admin = Admin.build(httpService);

Transaction trx = Transaction.createEtherTransaction(from, nonce, gasprice, gaslimit, to, amount);

EthSendTransaction ethSendTransaction = admin.personalSendTransaction(trx, existingpassword).send();

transactionHash = ethSendTransaction.getTransactionHash();`

I'm getting output transactionHash = null

Status: Issue closed

Answers:

username_0: There was some issue in sending amount value. Thanks. |

postmanlabs/postman-docs | 802386435 | Title: image not showing on Variables page

Question:

username_0: https://learning.postman.com/docs/sending-requests/variables/#sharing-and-persisting-data

<img width="945" alt="Screen Shot 2021-02-05 at 10 34 14 AM" src="https://user-images.githubusercontent.com/36343528/107074629-da9a3e00-679d-11eb-8930-094b7cf3d1a7.png"><issue_closed>

Status: Issue closed |

fishcakez/dbg | 125799179 | Title: Dbg crashes when tracing calls

Question:

username_0: Attempted to follow the instructions in README.md, but I am getting an error with version 1.0.1, Erlang 18, Elixir 1.2.0. Ran iex -S mix in the dbg directory after mix compile, then followed the steps in README.md and got the following:

Erlang/OTP 18 [erts-7.2.1] [source] [64-bit] [smp:8:8] [async-threads:10] [hipe] [kernel-poll:false] [dtrace]

Interactive Elixir (1.2.0) - press Ctrl+C to exit (type h() ENTER for help)

iex(1)> Dbg.trace(self(), :call)

%{counts: %{nonode@nohost: 1}, errors: %{}}

iex(2)> Dbg.call(&Map.new/0)

%{counts: %{nonode@nohost: 1}, errors: %{}}

iex(3)> Map.new()

%{}

iex(4)> ** dbg got EXIT - terminating: {trace_handler_crashed,

{function_clause,

[{'Elixir.IEx.Config',default_option,

[width],

[{file,"lib/iex/config.ex"},{line,63}]},

{'Elixir.IEx.Config',

'-default_config/0-fun-0-',1,

[{file,"lib/iex/config.ex"},{line,60}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.IEx.Config',configuration,0,

[{file,"lib/iex/config.ex"},{line,56}]},

{'Elixir.Dbg.Handler',handle_event,2,

[{file,"lib/dbg/handler.ex"},{line,9}]},

{dbg,handle_traces,4,

[{file,"dbg.erl"},{line,819}]},

{dbg,tracer_loop,2,

[{file,"dbg.erl"},{line,783}]}]}}

=ERROR REPORT==== 9-Jan-2016::23:05:46 ===

** Generic server 'Elixir.Dbg.Watcher' terminating

** Last message in was {'DOWN',#Ref<0.0.4.689>,process,<0.121.0>,

{trace_handler_crashed,

{function_clause,

[{'Elixir.IEx.Config',default_option,

[width],

[{file,"lib/iex/config.ex"},{line,63}]},

{'Elixir.IEx.Config','-default_config/0-fun-0-',1,

[{file,"lib/iex/config.ex"},{line,60}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.IEx.Config',configuration,0,

[{file,"lib/iex/config.ex"},{line,56}]},

{'Elixir.Dbg.Handler',handle_event,2,

[{file,"lib/dbg/handler.ex"},{line,9}]},

{dbg,handle_traces,4,[{file,"dbg.erl"},{line,819}]},

{dbg,tracer_loop,2,

[{file,"dbg.erl"},{line,783}]}]}}}

** When Server state == #{'__struct__' => 'Elixir.Dbg.Watcher',

dbg_ref => #Ref<0.0.4.688>,

tracer => nil,

tracer_ref => nil}

** Reason for termination ==

** {trace_handler_crashed,

{function_clause,

[{'Elixir.IEx.Config',default_option,

[width],

[{file,"lib/iex/config.ex"},{line,63}]},

{'Elixir.IEx.Config','-default_config/0-fun-0-',1,

[{file,"lib/iex/config.ex"},{line,60}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.Enum','-map/2-lists^map/1-0-',2,

[{file,"lib/enum.ex"},{line,1088}]},

{'Elixir.IEx.Config',configuration,0,

[{file,"lib/iex/config.ex"},{line,56}]},

{'Elixir.Dbg.Handler',handle_event,2,

[{file,"lib/dbg/handler.ex"},{line,9}]},

{dbg,handle_traces,4,[{file,"dbg.erl"},{line,819}]},

{dbg,tracer_loop,2,[{file,"dbg.erl"},{line,783}]}]}}

Answers:

username_1: Unfortunately this is due to a bug in Elixir 1.2.0. It is fixed in 1.3.0-dev and 1.2.1-dev. https://github.com/elixir-lang/elixir/commit/a6b80a19c50905ff7f0de4e689e1339fa883a367 |

microsoft/coe-starter-kit | 1107466496 | Title: [CoE Starter Kit - BUG] isCOE field on environment table

Question:

username_0: ### Describe the issue

We need to be able to tell which environment is the CoE environment from within apps. Flows can already tell whether they are running in that environment.

I thought about making this an environment variable, but there doesn't seem to be a need; we can just add it to the environment table.

### Expected Behavior

_No response_

### What solution are you experiencing the issue with?

Core

### What solution version are you using?

3.20.6

### What app or flow are you having the issue with?

Admin | Sync Template v3

### Steps To Reproduce

_No response_

### Anything else?

_No response_

Answers:

username_0: local fixed Feb |

forbole/big-dipper-default-interface | 743453729 | Title: Setup meta tags

Question:

username_0: **Is your feature request related to a problem? Please describe.**

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**

A clear and concise description of what you want to happen.

**Describe alternatives you've considered**

A clear and concise description of any alternative solutions or features you've considered.

**Additional context**

Add any other context or screenshots about the feature request here.<issue_closed>

Status: Issue closed |

gbif/portal-feedback | 294129379 | Title: Having this issue

Question:

username_0: fbitem-7490229a59148a44a969f4b702276af26e940516

System: Chrome 63.0.3239 / Windows 10 0.0.0

Referer: https://www.gbif.org/standards

Window size: width 1185 - height 591

API log

Site log

System health at time of feedback: OPERATIONAL<issue_closed>

Status: Issue closed |

dbeaver/dbeaver | 494416684 | Title: Forced query cancel

Question:

username_0: I see many threads asking for query cancel to work in environments where that's impossible due to the lack of support on the driver/server side.

What DBeaver **could** do, however, is to turn the "cancel query" button into a "force disconnect" button after the first click, instead of disabling it. Clicking on that button should show a dialog reminding the user that doing so will disconnect DBeaver from the query, but the query might continue running on the database server.

Confirming that will unlock DBeaver interface allowing it to be used for other queries.

This looks like an easy enough fix (although I don't know how much control you have over that button), and will give people what they care about, which is often not query cancel but the ability to run a new query.

Answers:

username_1: In fact, it already works exactly as you described. If query cancel doesn't actually cancel the query, DBeaver tries to cancel the whole connection.

This is controlled by the following preference option:

Meaning of this parameter: when the query cancel operation takes longer than this value (ms), DBeaver will close the whole connection.

By default it was set to 0 (= disabled). Perhaps we should change it to some reasonable value.
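
The timeout behaviour described above can be sketched roughly as follows. This is a minimal illustration, not DBeaver's actual code: `cancel_with_timeout`, `cancel_query`, `close_connection` and `query_done` are hypothetical names, and the only thing taken from the thread is the rule that a value of 0 disables the escalation and a positive value (in ms) closes the whole connection once the cancel takes longer than that.

```python
import threading


def cancel_with_timeout(cancel_query, close_connection, query_done, timeout_ms):
    """Request a query cancel and escalate to closing the connection on timeout.

    All parameters are hypothetical stand-ins:
      cancel_query     -- callable that asks the driver/server to cancel
      close_connection -- callable that drops the whole connection
      query_done       -- threading.Event set once the query has stopped
      timeout_ms       -- 0 (or less) disables the escalation
    Returns "cancelled", "disconnected" or "pending".
    """
    cancel_query()

    if timeout_ms <= 0:
        # Escalation disabled: report the current state and do nothing more.
        return "cancelled" if query_done.is_set() else "pending"

    # Wait up to timeout_ms for the cancel to take effect.
    if query_done.wait(timeout_ms / 1000.0):
        return "cancelled"

    # The cancel did not complete in time, so close the whole connection.
    close_connection()
    return "disconnected"
```

With a `query_done` event that never gets set and `timeout_ms=10`, the function calls `cancel_query`, waits about 10 ms, then calls `close_connection`; with `timeout_ms=0` it never closes the connection.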

username_0: My suggestion is for a more manual version of that. Setting a timeout is difficult because sometimes you deal with a query that actually takes a long time to cancel (e.g. it needs to roll back a large update), while at other times you deal with a select you don't care about. If you click the button again, you clearly state your intention instead.
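
The manual variant could look something like the sketch below: the first click requests a cancel and relabels the button, and a second click, once confirmed, drops the connection. The class, state names and callbacks are all hypothetical and only illustrate the proposed flow; they are not taken from DBeaver.

```python
from enum import Enum


class ButtonState(Enum):
    CANCEL_QUERY = "Cancel query"
    FORCE_DISCONNECT = "Force disconnect"
    IDLE = "Idle"


class CancelButton:
    """Hypothetical two-stage cancel button (not DBeaver's implementation)."""

    WARNING = (
        "This will disconnect from the query, "
        "but it may keep running on the database server."
    )

    def __init__(self, cancel_query, disconnect, confirm):
        self._cancel_query = cancel_query  # asks the server to cancel
        self._disconnect = disconnect      # drops the connection
        self._confirm = confirm            # shows a dialog, returns True/False
        self.state = ButtonState.CANCEL_QUERY

    def click(self):
        if self.state is ButtonState.CANCEL_QUERY:
            # First click: polite cancel, then offer the forced option.
            self._cancel_query()
            self.state = ButtonState.FORCE_DISCONNECT
        elif self.state is ButtonState.FORCE_DISCONNECT:
            # Second click: disconnect only after the user confirms.
            if self._confirm(self.WARNING):
                self._disconnect()
                self.state = ButtonState.IDLE

    def query_finished(self):
        # The cancel (or the query itself) completed normally.
        self.state = ButtonState.IDLE
```

The second click is what signals intent here, so no timeout value has to be guessed for slow rollbacks.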

username_2: Wow, that works perfectly. I was about to suggest something similar (Postgres "cancel" commands sometimes get lost if the network is down or flaky, resulting in an indefinitely running query with no ability to cancel it). After "x" seconds of a cancelled query not working, change the button from a greyed-out "canceled" to a new "force disconnect" button, or add a new button, or similar.

username_3: Bumping this issue. In our instance, this bug dynamic is related to: VPN connection to AWS Redshift

I have not messed with the Error Handling or SQL Processing settings, but "Cancel execution" [after 10000 ms] seems like a workable solution, provided it doesn't leave the execution hanging server side.

Does closing the DBeaver application locally also prompt the server-side execution to end?

username_3: Just updated to 6.3.5 and it seems it may be fixed?!? Hopefully not getting too excited too early, but this is a big help if resolved. Thanks to DBeaver dev team!

Status: Issue closed

username_5: I submitted a new issue that focuses more on @username_0's ultimate conclusion rather than what's said in his initial post:

https://github.com/dbeaver/dbeaver/issues/9272

username_0: thanks for reopening, I didn't notice my issue got closed! |

ros2/ros2 | 285571045 | Title: removed note about ASIO not found on macOS

Question:

username_0: @0wu I removed the note you added about turning on building of dependencies within fast-rtps when asio is not found on macOS:

https://github.com/ros2/ros2/wiki/OSX-Development-Setup/_compare/2af42dc2bde5bb6b700683c9435c7fadd55616d6...a8369e7f20d5bb4e361be66de51e7107c8bdcbbe

That should be covered by installing asio from Homebrew. If that's not working then it's likely a bug in the asio installed by Homebrew or in Fast-RTPS's build. Can you confirm that asio is installed by homebrew and linked?

Status: Issue closed

Answers:

username_1: @0wu I'm going to close this due to inactivity, please feel free to comment here if you still face the issue and we can reopen it |

nhedlund/intrinio | 339123725 | Title: Authentication using Web API Key

Question:

username_0: The web API key does not require a username/password combo

Answers:

username_1: Are you sure? When I look at the current documentation from Intrinio they still use username/password combos as API keys: https://intrinio.com/tutorial/web_api

username_1: Last time I checked they had not added API keys to their documentation but now that they have I will add it to the library. Username and password still works.

Good suggestion, I checked their python-sdk package and from what I can see they do not use Pandas as return types and don't return the complete datasets by default.

username_2: Thanks - I thought I posted another comment last night, but I found out they terminated my free subscriptions. I'm really annoyed that they dropped any free tier of their service, including for existing users, and will probably look at Python libraries that pull from IEX. I don't need anything near real-time pricing data.

What's the unique value that you see in Intrinio over IEX or [other free options](https://www.reddit.com/r/Python/comments/8b5xuw/apis_that_work_for_stock_data/)?

username_1: For me Intrinio gives you split- and dividend-adjusted stock prices that I need for accurate backtesting, for a reasonable monthly price. Quandl is another good provider.

Most or all providers of free stock prices only offer unadjusted data or very limited historic data, at least what I have found.

username_1: The web API key does not require a username/password combo

username_1: It seems that they have changed the API so much from V1 to V2 that supporting it would require a major rewrite of this library.

Even though I prefer working with Pandas datasets and libraries that return full datasets instead of using paging, it seems better to refer to the official SDK that targets the new API.

Status: Issue closed

username_2: Thanks. If it's helpful, it looks like the Yahoo API can still be made to work with pandas-datareader:

https://towardsdatascience.com/python-for-finance-stock-portfolio-analyses-6da4c3e61054

https://pypi.org/project/fix-yahoo-finance/

I don't know what about the fix is 'temporary', but this API returns a pandas dataframe and includes both actual and adjusted (I assume split/dividend) market close numbers.

I've only looked far enough to see that the example walkthrough on the blog post returns data. |

j3k0/cordova-plugin-purchase | 492729613 | Title: Ionic says plugin not installed when it is installed

Question:

username_0: Hi,

I try to use the plugin in Ionic 5 but I'm getting warnings:

```

[ng] [console.warn]: "Native: tried calling InAppPurchase2.order, but the InAppPurchase2 plugin is not installed."

[ng] [console.warn]: "Install the InAppPurchase2 plugin: 'ionic cordova plugin add cc.fovea.cordova.purchase'"

```

Of course I installed the plugin.

```

$ cordova plugin list

cc.fovea.cordova.purchase 8.1.1 "Purchase"

```

I added some debug logs to node_modules/@ionic-native/core/decorators/common.js before the lines that print the warnings, and I see that the plugin reference is "store" and that the plugin instance created by calling `pluginInstance = getPlugin(pluginRef)` is null.

Any ideas how to fix it?

Answers:

username_0: Fixed by installing cordova-support-google-services plugin and putting google-services.json in platforms/android/app/src.

Here is an instruction how to get google-services.json: https://support.google.com/firebase/answer/7015592?hl=en

username_1:

```

Installing "cordova-support-google-services" for android

Plugin doesn't support this project's cordova-android version. cordova-android: 9.0.0, failed version requirement: <9.0.0

Skipping 'cordova-support-google-services' for android

Adding cordova-support-google-services to package.json

```

Is there a way to fix this for android >9.0.0? |

istio-ecosystem/authservice | 550342052 | Title: Session management

Question:

username_0: Once we have finished implementing server-side sessions, we should consider: