| repo (string, 7 to 59 chars) | instance_id (string, 11 to 63 chars) | base_commit (string, 40 chars) | patch (string, 167 to 798k chars) | test_patch (string, 1 distinct value) | problem_statement (string, 20 to 65.2k chars) | hints_text (string, 0 to 142k chars) | created_at (timestamp[ns], 2015-08-30 10:31:05 to 2024-12-13 16:08:19) | environment_setup_commit (string, 1 distinct value) | version (string, 1 distinct value) | FAIL_TO_PASS (sequence, length 0) | PASS_TO_PASS (sequence, length 0) |

|---|---|---|---|---|---|---|---|---|---|---|---|

keystroke3/redpaper | keystroke3__redpaper-6 | 9b3a0094f55518e1c64a692c0f1759a04b5d564c | diff --git a/fetch.py b/fetch.py

index 8b5ca33..e735695 100755

--- a/fetch.py

+++ b/fetch.py

@@ -34,6 +34,7 @@

wall_data_file = config['settings']['wall_data_file']

pictures = config['settings']['download_dir']

d_limit = int(config['settings']['download_limit'])

+subreddit = config['settings']['subreddit']

def auth():

@@ -48,7 +49,7 @@ def auth():

commaScopes="all",

)

# collect data from reddit

- wallpaper = reddit.subreddit("wallpaper+wallpapers")

+ wallpaper = reddit.subreddit(subreddit)

top_paper = wallpaper.hot(limit=d_limit)

diff --git a/settings.py b/settings.py

index d6c7764..7677eab 100644

--- a/settings.py

+++ b/settings.py

@@ -27,6 +27,7 @@

"wall_data.json"),

'Wallpaper_selection_method': "sequential",

'download_limit': 1,

+ 'subreddit': "wallpaper+wallpapers",

}

@@ -44,6 +45,8 @@ def set_settings():

with open(settings_file, "w") as f:

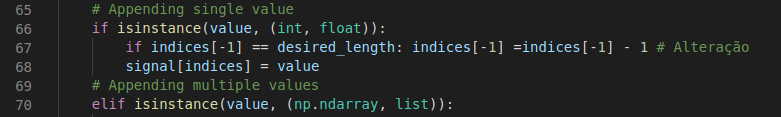

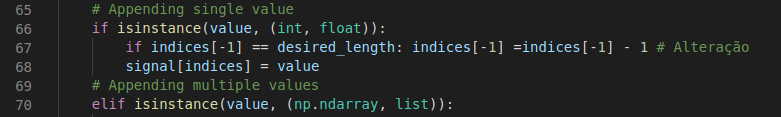

f.write("")

set_settings()

+

+

if not os.path.exists(settings_file):

set_settings()

else:

@@ -53,6 +56,7 @@ def set_settings():

pictures = config['settings']['download_dir']

d_limit = int(config['settings']['download_limit'])

wall_selection_method = config['settings']['wallpaper_selection_method']

+subreddit = config['settings']['subreddit']

global message

message = ""

@@ -139,6 +143,29 @@ def change_path(new_path="", silent=False):

change_path()

+def change_subreddit():

+ """

+ Allows the user to change the subreddit where we fetch the pictures from

+ """

+ global message

+ Red()

+ new_subreddit = input(f"""

+ {green}Enter the just the name of the subreddit.

+ Example wallpapers for reddit.com/r/wallpapers\n

+ Current path is: {subreddit}\n{normal}

+ {red}x{normal} : {blue}main settings{normal}

+ >>> """)

+ if new_subreddit == "x":

+ main_settings()

+ return

+ else:

+ config.set('settings', 'subreddit', str(new_subreddit))

+ set_settings()

+ Red()

+ change_subreddit()

+ return

+

+

def wall_selection():

"""

Allows the user to specify the method to be used when choosing wallpapers

@@ -214,6 +241,7 @@ def main_settings():

{red} 2 {normal}: {blue} Change wallpaper selection method

{normal}

{red} 3 {normal}: {blue} Change the download limit{normal}\n

+ {red} 4 {normal}: {blue} Change subreddit to download from{normal}\n

{red} r {normal}: {blue} Reset to default {normal}\n

{red} x {normal}: {blue} main menu {normal}\n

>>> """)

@@ -223,6 +251,8 @@ def main_settings():

wall_selection()

elif choice == "3":

max_dl_choice()

+ elif choice == "4":

+ change_subreddit()

elif choice == "r" or choice == "R":

restore_default()

main_settings()

| Issue in wall_set.py

After the last committed change to wall_set.py, redpaper crashed on line 56:

`saved_walls = json.load(data)`

because `data` was not assigned before use.

This was on a default Kali Linux install. Changing methods still resulted in an error, but this time because it attempted to use a closed file.

| 2019-07-30T06:36:48 | 0.0 | [] | [] |
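The crash described above (`data` used before assignment, then a read from a closed file) typically happens when `json.load` runs outside the `with` block that opened the file, or on a code path where the file was never opened. A minimal sketch of a safer pattern, assuming the wall data is a JSON file at the path stored in the `wall_data_file` setting; `load_saved_walls` is a hypothetical helper, not redpaper's actual code:

```python
import json
import os


def load_saved_walls(wall_data_file):
    """Return the saved wallpaper data, or an empty dict if none exists yet.

    Hypothetical helper: the file is opened and parsed inside a single
    `with` block, so the handle is always bound and still open when
    json.load runs.
    """
    if not os.path.exists(wall_data_file):
        # Nothing saved yet: return early instead of touching an unbound name.
        return {}
    with open(wall_data_file, "r", encoding="utf-8") as data:
        return json.load(data)
```

Returning early for a missing file keeps every later branch free of the "maybe unassigned" handle.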

|||

sdaqo/anipy-cli | sdaqo__anipy-cli-136 | bfa5498a5528dcb6365427177e57b7919addf1af | diff --git a/.gitignore b/.gitignore

index 3d78abe5..475cdec6 100644

--- a/.gitignore

+++ b/.gitignore

@@ -8,4 +8,10 @@ anipy_cli.egg-info/

user_files/

anipy_cli/config_personal.py

pypi.sh

-.idea/

\ No newline at end of file

+.idea/

+

+# VSCode

+.vscode/

+

+# Venv

+.venv/

\ No newline at end of file

diff --git a/README.md b/README.md

index 04a7374a..a659659e 100644

--- a/README.md

+++ b/README.md

@@ -47,7 +47,9 @@ Places of the config:

[Sample Config](https://github.com/sdaqo/anipy-cli/blob/master/docs/sample_config.yaml)

-**Attention Windows Users:** If you activate the option `reuse_mpv_window`, you will have to donwload and put the `mpv-2.dll` in your path. To get it go look here: https://sourceforge.net/projects/mpv-player-windows/files/libmpv/

+**Attention Windows Users Using MPV:** If you activate the option `reuse_mpv_window`, you will have to download and put the `mpv-2.dll` in your path. To get it go look here: https://sourceforge.net/projects/mpv-player-windows/files/libmpv/

+

+**Attention Windows Users on Config File Placement:** If you have downloaded Python from the Microsoft Store, your config file will be cached inside of your Python's AppData. For example: `%USERPROFILE%\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.11_qbz5n2kfra8p0\LocalCache\Local\anipy-cli\config.yaml`.

# Usage

diff --git a/anipy_cli/config.py b/anipy_cli/config.py

index cdfd4bbf..94668d0a 100644

--- a/anipy_cli/config.py

+++ b/anipy_cli/config.py

@@ -14,7 +14,7 @@ def __init__(self):

self._config_file, self._yaml_conf = Config._read_config()

if not self._yaml_conf:

- self._yaml_conf = {}

+ self._create_config() # Create config file

@property

def _anipy_cli_folder(self):

| Config not being generated

**Describe the bug**

For new installs, the config should be generated and pre-filled with default values based on the Config class's `@property` fields.

For some reason this doesn't happen.

Even if no config file exists, the config read from file is not `None` in:

[config.py :14](https://github.com/sdaqo/anipy-cli/blob/cccf65144cf96d92c8e30fd2584353ae5f0e3c37/anipy_cli/config.py#L14)

```python

with self._config_file.open("r") as conf:

self._yaml_conf = yaml.safe_load(conf)

if self._yaml_conf is None:

# The config file is empty

self._yaml_conf = {}

```

Checked on Windows 11 only so far.

| This looks like a Windows problem; Linux works fine.

Alright, thanks for checking. I'll look into this.

The same happens on macOS: the default config file is not created, but it works if the user creates it.

I'm on Windows 11. I'll go ahead and see what I can find out.

# TL;DR

The current version of anipy doesn't create a config file. But when I force it to, it creates it, and reads it, but I cannot find it anywhere on the file system.

# Breakdown

According to #79, the config system was changed so users don't have to manually add vars every update. Great change, but by doing so they also removed the code that creates at least some kind of default file we can find and adjust (`_create_config()` has no references).

To fix this, I added the code that creates the config file, but there is indeed an issue on Windows (I've only tested Windows). Python reports that the config file was created and reads data from it, but I can't actually find the file on my system.

Pulled data evidence:

<img width="368" alt="image" src="https://github.com/sdaqo/anipy-cli/assets/68718280/7aec340e-9589-421d-9978-d646eafe191b">

Can't find it:

<img width="318" alt="image" src="https://github.com/sdaqo/anipy-cli/assets/68718280/72026683-2b13-4d6c-a29d-58f1db48f5cb">

I traced the steps the code takes to place the config file, and I still couldn't find it no matter what I did.

I found a Stack Overflow question about it, but there were no answers.

# Update:

When using different Python versions, the one that created the file seems to recognize it, while the other does not:

<img width="1139" alt="image" src="https://github.com/sdaqo/anipy-cli/assets/68718280/8c793f50-09e8-470e-a463-3dc93a561914">

# GOOD NEWS

I found where Python is actually writing the file. It turns out that if you download Python from the Microsoft Store, Python is sandboxed, and anything written to AppData is redirected into its cache, as seen here:

<img width="470" alt="image" src="https://github.com/sdaqo/anipy-cli/assets/68718280/2f60fc3c-f2e6-4632-8ceb-adf365dbd518">

Unfortunately there aren't many good solutions for solving this, except for knowing that your Python might be sandboxed. So, for now I'll just give you the possible location your config file might get stored on a sandboxed Python on Windows:

`%USERPROFILE%\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.11_qbz5n2kfra8p0\LocalCache\Local\anipy-cli\config.yaml`

I recommend that users either download Python directly from the official site or be warned that Microsoft Store Python is sandboxed and will store the config in this location. You could also relocate where the config file is placed, but I'm not sure where it could go. | 2023-08-30T04:43:44 | 0.0 | [] | [] |
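The patch replaces the silent `{}` fallback with a call that creates the config file. A minimal sketch of that load-or-create behavior, assuming a flat mapping of default values; it uses JSON from the standard library as a stand-in for the project's YAML so it runs without PyYAML (`yaml.safe_load` returns `None` for an empty file, which the sketch mirrors by treating an empty file like a missing one). `load_or_create_config` and `DEFAULTS` are hypothetical names:

```python
import json
from pathlib import Path

# Hypothetical defaults; the real project derives them from Config properties.
DEFAULTS = {"player_path": "mpv", "reuse_mpv_window": False}


def load_or_create_config(config_file: Path, defaults: dict = DEFAULTS) -> dict:
    """Load the config, writing a file pre-filled with defaults if needed."""
    text = config_file.read_text(encoding="utf-8") if config_file.exists() else ""
    if not text.strip():
        # Missing or empty file: create it so the user has something to edit.
        config_file.parent.mkdir(parents=True, exist_ok=True)
        config_file.write_text(json.dumps(defaults, indent=2), encoding="utf-8")
        return dict(defaults)
    loaded = json.loads(text)
    # Keys added to the defaults after the file was written still get values.
    return {**defaults, **loaded}
```

Merging over the defaults keeps the benefit described in #79 (no manual edits after updates) while still leaving a file on disk for the user to find.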

||

TerryHowe/ansible-modules-hashivault | TerryHowe__ansible-modules-hashivault-432 | 9d844336e874c6d8ae6f81b6a71038777e5d637c | diff --git a/ansible/modules/hashivault/hashivault_azure_auth_config.py b/ansible/modules/hashivault/hashivault_azure_auth_config.py

index 61ab2fa0..807b249d 100644

--- a/ansible/modules/hashivault/hashivault_azure_auth_config.py

+++ b/ansible/modules/hashivault/hashivault_azure_auth_config.py

@@ -105,8 +105,9 @@ def hashivault_azure_auth_config(module):

# check if current config matches desired config values, if they dont match, set changed true

for k, v in current_state.items():

- if v != desired_state[k]:

- changed = True

+ if k in desired_state:

+ if v != desired_state[k]:

+ changed = True

# if configs dont match and checkmode is off, complete the change

if changed and not module.check_mode:

| Possible hvac breaking change

Running `ansible-playbook -v test_azure_auth_config.yml`

```

An exception occurred during task execution. To see the full traceback, use -vvv. The error was: KeyError: 'root_password_ttl'

```

| You could fix this by adding `if k in desired_state:` inside the for loop. It keeps keys that exist only in the current state (such as `root_password_ttl`) from raising a `KeyError`. | 2023-03-31T11:32:57 | 0.0 | [] | [] |
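The patch compares only keys that appear in the desired state, so extra fields returned by the server no longer raise `KeyError`. A minimal sketch of that comparison as a standalone function; `config_changed` is a hypothetical name and the sample values are illustrative:

```python
def config_changed(current_state: dict, desired_state: dict) -> bool:
    """Return True if any key present in both states has a different value.

    Keys that exist only in current_state (for example, fields added by a
    newer hvac/Vault version) are ignored instead of raising KeyError.
    """
    return any(
        k in desired_state and v != desired_state[k]
        for k, v in current_state.items()
    )
```

Like the patch, this does not flag desired keys that are missing from the current state; only shared keys are compared.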

||

quic/aimet | quic__aimet-2552 | 486f006961be1e8e2f64ce954b156a9ba41d1a38 | diff --git a/TrainingExtensions/torch/src/python/aimet_torch/elementwise_ops.py b/TrainingExtensions/torch/src/python/aimet_torch/elementwise_ops.py

index 2d801bde0a..c70adce82b 100644

--- a/TrainingExtensions/torch/src/python/aimet_torch/elementwise_ops.py

+++ b/TrainingExtensions/torch/src/python/aimet_torch/elementwise_ops.py

@@ -361,16 +361,27 @@ def forward(self, *args) -> torch.Tensor:

res = []

for index, (boxes, scores) in enumerate(zip(batches_boxes, batch_scores)):

for class_index, classes_score in enumerate(scores):

- filtered_score_ind = (classes_score > self.score_threshold).nonzero()[:, 0]

- boxes = boxes[filtered_score_ind, :]

- classes_score = classes_score[filtered_score_ind]

- temp_res = torchvision.ops.nms(boxes, classes_score, self.iou_threshold)

- res_ = filtered_score_ind[temp_res]

- for val in res_:

- res.append([index, class_index, val.detach()])

- res = res[:(self.max_output_boxes_per_class *(index+1))]

+ nms_output = self.perform_nms_per_class(boxes, classes_score)

+ res_per_class = []

+ for val in nms_output:

+ res_per_class.append([index, class_index, val.detach()])

+ res_per_class = res_per_class[:self.max_output_boxes_per_class]

+ res.extend(res_per_class)

return torch.Tensor(res).type(torch.int64)

+ def perform_nms_per_class(self, boxes: torch.Tensor, classes_score: torch.Tensor) -> torch.Tensor:

+ """

+ Performs NMS per class

+ :param boxes: boxes on which NMS should be performed

+ :param classes_score: corresponding class scores for the boxes

+ :return: returns box indices filtered out by NMS

+ """

+ filtered_score_ind = (classes_score > self.score_threshold).nonzero()[:, 0]

+ filtered_boxes = boxes[filtered_score_ind]

+ filtered_classes_score = classes_score[filtered_score_ind]

+ res_ = torchvision.ops.nms(filtered_boxes, filtered_classes_score, self.iou_threshold)

+ return filtered_score_ind[res_]

+

class GatherNd(torch.nn.Module):

""" GatherNd op implementation"""

| Minor changes to NMS Op implementation

| 2023-11-06T05:11:48 | 0.0 | [] | [] |
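The refactor addresses two problems visible in the removed lines: `boxes` was reassigned inside the class loop, so later classes indexed into an already-filtered box tensor, and the output cap was applied to the running result list using the batch index (`max_output_boxes_per_class * (index+1)`) rather than to each class's detections. A NumPy-only sketch of the corrected per-class logic, assuming boxes in `[x1, y1, x2, y2]` form; it swaps in a hand-written greedy NMS for `torchvision.ops.nms`, and `greedy_nms` and `per_class_nms` are hypothetical names:

```python
import numpy as np


def _iou(box, boxes):
    """IoU between one [x1, y1, x2, y2] box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def greedy_nms(boxes, scores, iou_threshold):
    """Indices of kept boxes, highest score first; drops IoU > threshold."""
    order = np.argsort(-scores, kind="stable")
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(best)
        rest = order[1:]
        order = rest[_iou(boxes[best], boxes[rest]) <= iou_threshold]
    return np.array(keep, dtype=np.int64)


def per_class_nms(boxes, scores, score_threshold, iou_threshold, max_per_class):
    """boxes: (N, 4); scores: (num_classes, N). Returns [class, box] pairs."""
    selected = []
    for class_index, class_scores in enumerate(scores):
        # Filter into new names so `boxes` stays intact for the next class.
        candidate_ind = np.nonzero(class_scores > score_threshold)[0]
        kept = greedy_nms(boxes[candidate_ind], class_scores[candidate_ind], iou_threshold)
        # Map back to original box indices and cap each class separately.
        for box_index in candidate_ind[kept][:max_per_class]:
            selected.append([class_index, int(box_index)])
    return selected
```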

|||

AlertaDengue/AlertaDengue | AlertaDengue__AlertaDengue-563 | 8dcd16a8a5555c301b89a8f96f046a76b81420ef | diff --git a/AlertaDengue/dbf/utils.py b/AlertaDengue/dbf/utils.py

index 26601d30..6fd36705 100644

--- a/AlertaDengue/dbf/utils.py

+++ b/AlertaDengue/dbf/utils.py

@@ -80,7 +80,7 @@ def _parse_fields(dbf_name: str, df: gpd) -> pd:

except ValueError:

df[col] = pd.to_datetime(df[col], errors="coerce")

- return df.loc[[all_expected_fields]]

+ return df.loc[:, all_expected_fields]

def chunk_gen(chunksize, totalsize):

| [DBF]: Error slicing expected columns in _parse_fields function

# Objetivos / Objectivos / Purpose

## Geral / General

```

KeyError: "None of [Index([('NU_ANO', 'ID_MUNICIP', 'ID_AGRAVO', 'DT_SIN_PRI', 'SEM_PRI', 'DT_NOTIFIC', 'NU_NOTIFIC', 'SEM_NOT', 'DT_DIGITA', 'DT_NASC', 'NU_IDADE_N', 'CS_SEXO')], dtype='object')] are in the [index]"

```

```

File "/opt/services/AlertaDengue/dbf/utils.py", line 83, in _parse_fields

return df.loc[[all_expected_fields]]

```

[_parse_fields](https://github.com/AlertaDengue/AlertaDengue/blob/main/AlertaDengue/dbf/utils.py#L83)

Change to:

```
df.loc[:, all_expected_fields]
```

| 2022-10-19T10:48:37 | 0.0 | [] | [] |
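`df.loc` takes row labels first and column labels second, so passing the field names as the only indexer looks them up in the row index (hence `KeyError: ... are in the [index]`), while `df.loc[:, cols]` keeps every row and selects those columns. A small sketch with made-up data, assuming `all_expected_fields` is a list of column names:

```python
import pandas as pd

# Illustrative frame; the real DBF data has many more columns.
df = pd.DataFrame(
    {"NU_ANO": [2022, 2022], "ID_MUNICIP": [330455, 330455], "EXTRA": ["a", "b"]}
)
all_expected_fields = ["NU_ANO", "ID_MUNICIP"]

# Rows first, columns second: keep every row, only the expected columns.
selected = df.loc[:, all_expected_fields]

try:
    # A single indexer is treated as row labels, so the column names are
    # searched for in the row index and not found.
    df.loc[all_expected_fields]
except KeyError as err:
    print("row lookup failed:", err)
```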

|||

cytomining/copairs | cytomining__copairs-48 | 11570eb5e03d908e344bb8f7f90940e6dac603e3 | diff --git a/src/copairs/compute.py b/src/copairs/compute.py

index 41512d0..55221e3 100644

--- a/src/copairs/compute.py

+++ b/src/copairs/compute.py

@@ -151,7 +151,7 @@ def compute_p_values(ap_scores, null_confs, null_size: int, seed):

p_values = np.empty(len(ap_scores), dtype=np.float32)

for i, (ap_score, ix) in enumerate(zip(ap_scores, rev_ix)):

# Reverse to get from hi to low

- num = null_size - np.searchsorted(null_dists[ix], ap_score)

+ num = null_size - np.searchsorted(null_dists[ix], ap_score, side='right')

p_values[i] = (num + 1) / (null_size + 1)

return p_values

diff --git a/src/copairs/map.py b/src/copairs/map.py

index fc69403..7c48d3e 100644

--- a/src/copairs/map.py

+++ b/src/copairs/map.py

@@ -12,26 +12,45 @@

logger = logging.getLogger('copairs')

-def evaluate_and_filter(df, columns) -> list:

- '''Evaluate the query and filter the dataframe'''

+def extract_filters(columns, df_columns) -> list:

+ '''Extract and validate filters from columns'''

parsed_cols = []

+ queries_to_eval = []

+

for col in columns:

- if col in df.columns:

+ if col in df_columns:

parsed_cols.append(col)

continue

-

column_names = re.findall(r'(\w+)\s*[=<>!]+', col)

- valid_column_names = [col for col in column_names if col in df.columns]

+

+ valid_column_names = [col for col in column_names if col in df_columns]

if not valid_column_names:

raise ValueError(f"Invalid query or column name: {col}")

+

+ queries_to_eval.append(col)

+ parsed_cols.extend(valid_column_names)

+

+ if len(parsed_cols) != len(set(parsed_cols)):

+ raise ValueError(f"Duplicate queries for column: {col}")

+

+ return queries_to_eval, parsed_cols

+

+

+def apply_filters(df, query_list):

+ '''Combine and apply filters to dataframe'''

+ if not query_list:

+ return df

+

+ combined_query = " & ".join(f"({query})" for query in query_list)

+ try:

+ df_filtered = df.query(combined_query)

+ except Exception as e:

+ raise ValueError(f"Invalid combined query expression: {combined_query}. Error: {e}")

- try:

- df = df.query(col)

- parsed_cols.extend(valid_column_names)

- except:

- raise ValueError(f"Invalid query expression: {col}")

+ if df_filtered.empty:

+ raise ValueError(f"Empty dataframe after processing combined query: {combined_query}")

- return df, parsed_cols

+ return df_filtered

def flatten_str_list(*args):

@@ -55,7 +74,9 @@ def create_matcher(obs: pd.DataFrame,

neg_diffby,

multilabel_col=None):

columns = flatten_str_list(pos_sameby, pos_diffby, neg_sameby, neg_diffby)

- obs, columns = evaluate_and_filter(obs, columns)

+ query_list, columns = extract_filters(columns, obs.columns)

+ obs = apply_filters(obs, query_list)

+

if multilabel_col:

return MatcherMultilabel(obs, columns, multilabel_col, seed=0)

return Matcher(obs, columns, seed=0)

| [bug] AP of 1.0 should have min p-value

When the calculated AP is 1.0, the corresponding p-value is too large.

For example, with 2 positive profiles, 16 controls, a null size of 1000, and AP = 1, the p-value is 0.061938. This is incorrect: the proportion of the null to the right of 1.0 should be 0, so the p-value [should](https://github.com/cytomining/copairs/blob/11570eb5e03d908e344bb8f7f90940e6dac603e3/src/copairs/compute.py#L155) be `p=(num + 1) / (null_size + 1) = 1/1001 = ~0.000999`

| 2023-11-14T03:58:04 | 0.0 | [] | [] |
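With the default `side='left'`, `np.searchsorted` returns the position of the first null value that is >= the score, so `null_size - pos` counts ties as "at least as extreme"; with `side='right'` it counts only null values strictly greater than the score. When many null APs equal 1.0, as can happen with only two positive profiles, that difference dominates the result. A minimal sketch with a tiny, made-up null distribution; `p_value` is a hypothetical helper mirroring the loop body of `compute_p_values`:

```python
import numpy as np


def p_value(ap_score, null_dist, side):
    """(count of null values beyond ap_score + 1) / (null size + 1).

    null_dist must be sorted ascending. side='left' counts null values
    >= ap_score; side='right' counts only values > ap_score.
    """
    null_size = len(null_dist)
    num = null_size - np.searchsorted(null_dist, ap_score, side=side)
    return (num + 1) / (null_size + 1)


null = np.array([0.2, 0.5, 1.0, 1.0])  # sorted, with ties at 1.0
print(p_value(1.0, null, side="left"))   # 0.6: the two ties count as extreme
print(p_value(1.0, null, side="right"))  # 0.2: the minimum possible, 1 / 5
```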

|||

neuropsychology/NeuroKit | neuropsychology__NeuroKit-526 | 8e714580c6a3ce8012c27b7587e035f366ec1b0a | diff --git a/NEWS.rst b/NEWS.rst

index a70741f95a..cf54b29508 100644

--- a/NEWS.rst

+++ b/NEWS.rst

@@ -19,7 +19,7 @@ New Features

Fixes

+++++++++++++

-* None

+* Ensure detected offset in `emg_activation()` is not beyond signal length

0.1.4.1

diff --git a/neurokit2/emg/emg_activation.py b/neurokit2/emg/emg_activation.py

index 42f74d4a32..edd8b63ba5 100644

--- a/neurokit2/emg/emg_activation.py

+++ b/neurokit2/emg/emg_activation.py

@@ -375,10 +375,13 @@ def _emg_activation_activations(activity, duration_min=0.05):

baseline = events_find(activity == 0, threshold=0.5, threshold_keep="above", duration_min=duration_min)

baseline["offset"] = baseline["onset"] + baseline["duration"]

- # Cross-comparison

- valid = np.isin(activations["onset"], baseline["offset"])

+ # Cross-comparison

+ valid = np.isin(activations["onset"], baseline["offset"])

onsets = activations["onset"][valid]

- offsets = activations["offset"][valid]

+ offsets = activations["offset"][valid]

+

+ # make sure offset indices are within length of signal

+ offsets = offsets[offsets < len(activity)]

new_activity = np.array([])

for x, y in zip(onsets, offsets):

| emg_process() activation offset on last element bug

Hello!

I seem to have found a small bug when running the neurokit2 _emg_process()_ function on data where the signal's activation offset is the last element: it returned an error saying that the element was not in the structure.

I managed to eliminate this error by making a simple adjustment to **neurokit2/signal/signal_formatpeaks.py line 67**.

With this change I was able to run the function on my file without problems.

The versions my system is running are:

- neurokit2==0.1.2

- tensorflow==2.4.1

- pandas==1.2.2


| Hi! Thanks for reaching out and opening your first issue here! We'll try to come back to you as soon as possible. ❤️

Hi @Vasco-Cardoso, indeed it is important to ensure that the detected EMG indices are within the length of the signal. The offset being the last element is an edge case that we didn't consider; thanks for pointing that out and proposing a potential solution. However, I think the issue here is more about making sure that the extracted onsets/offsets (from `emg_activation`) are within the signal before letting `signal_formatpeaks` sanitize them, so I'd say it's best to leave the latter untouched.

https://github.com/neuropsychology/NeuroKit/blob/c1104386655724a5c624e740abf651c939fc5e48/neurokit2/emg/emg_activation.py#L370-L382

@DominiqueMakowski We can make a small modification to enforce this:

```

# make sure indices are within length of signal

onsets = np.array([i for i in activations["onset"][valid] if i < len(activity)])

offsets = np.array([i for i in activations["offset"][valid] if i < len(activity)])

```

can't we simply do

```python

# Cross-comparison

valid = np.isin(activations["onset"], baseline["offset"])

onsets = activations["onset"][valid]

offsets = activations["offset"][valid]

# make sure indices are within length of signal

onsets = onsets[onsets < len(activity)]

offsets = offsets[offsets < len(activity)]

```

But how come it's possible to have onsets and offsets bigger than the length?

This probably won't be a problem for onsets, just offsets, since in our code offset indices are derived from `activations["onset"] + activations["duration"]`. In this edge case, where the activated portion ends exactly at the last data point, the offset is detected as the `len(signal) + 1` index.

And yes, you're right @DominiqueMakowski, `onsets = onsets[onsets < len(activity)]` is better.


| 2021-09-01T10:08:21 | 0.0 | [] | [] |
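The patch filters only `offsets`. That presumably works with the later `zip(onsets, offsets)` because activations are sorted, so only the final activation can end past the signal and `zip` simply drops its unmatched onset. A sketch that applies one mask to both arrays keeps the pairs explicitly aligned; the sample indices are made up and `drop_out_of_range` is a hypothetical name:

```python
import numpy as np


def drop_out_of_range(onsets, offsets, signal_length):
    """Keep only (onset, offset) pairs whose offset is a valid sample index."""
    onsets = np.asarray(onsets)
    offsets = np.asarray(offsets)
    in_range = offsets < signal_length  # one mask, applied to both arrays
    return onsets[in_range], offsets[in_range]


# Activation ending exactly at the last sample: offset == len(activity).
onsets, offsets = drop_out_of_range([2, 7], [5, 10], signal_length=10)
print(onsets, offsets)  # [2] [5]
```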

||

vanvalenlab/deepcell-tracking | vanvalenlab__deepcell-tracking-115 | 49d8ca2261337d4668f205d7c0cbff60fae5ddb5 | diff --git a/deepcell_tracking/utils.py b/deepcell_tracking/utils.py

index 121bfc3..e7a635d 100644

--- a/deepcell_tracking/utils.py

+++ b/deepcell_tracking/utils.py

@@ -498,9 +498,10 @@ def get_image_features(X, y, appearance_dim=32, crop_mode='resize', norm=True):

# Check data and normalize

if len(idx) > 0:

- mean = np.mean(app[idx])

- std = np.std(app[idx])

- app[idx] = (app[idx] - mean) / std

+ masked_app = app[idx]

+ mean = np.mean(masked_app)

+ std = np.std(masked_app)

+ app[idx] = (masked_app - mean) / std

appearances[i] = app

| Cast data to correct type in `get_image_features`

This PR fixes a bug in `get_image_features`. If the X data is passed in with an integer type (instead of float), the output of `crop_mode='fixed'` and `norm=True` is incorrect. In the examples below, the first image is incorrect while the second is correct.

This PR eliminates the bug by casting X data to float32 and y data to int32 to avoid incorrect use of the function.

<img width="420" alt="Screen Shot 2023-01-24 at 7 34 48 PM" src="https://user-images.githubusercontent.com/20373588/214474471-a4a41fe9-4e58-44a5-8986-2001c0827822.png">

<img width="439" alt="Screen Shot 2023-01-24 at 7 34 38 PM" src="https://user-images.githubusercontent.com/20373588/214474468-1c6ecc00-ec06-4ec6-821b-e5b67b5e9012.png">

| 2023-01-25T04:48:50 | 0.0 | [] | [] |
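The incorrect output described in this PR most likely comes from assigning normalized floats back into an integer array: NumPy casts values to the target array's dtype on assignment, so the z-scores are truncated toward zero. A minimal sketch of the effect and of the fix the PR describes (casting to float32 first); the values are made up and `normalize_masked` is a hypothetical helper modeled on the patched lines:

```python
import numpy as np


def normalize_masked(app, idx):
    """Z-score the entries of app selected by idx, in place, and return app."""
    masked_app = app[idx]
    mean = np.mean(masked_app)
    std = np.std(masked_app)
    # If app has an integer dtype, this assignment silently truncates.
    app[idx] = (masked_app - mean) / std
    return app


idx = np.array([True, True, True, True])
as_int = normalize_masked(np.array([1, 2, 3, 4], dtype=np.int32), idx)
as_float = normalize_masked(np.array([1, 2, 3, 4], dtype=np.int32).astype(np.float32), idx)
print(as_int)    # values -1, 0, 0, 1 (truncated)
print(as_float)  # approximately -1.342, -0.447, 0.447, 1.342
```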

|||

qcpydev/qcpy | qcpydev__qcpy-110 | f101eebfc859542ef379b214912c947983d1a79d | diff --git a/src/visualize/bloch.py b/src/visualize/bloch.py

index 749b0da..56be747 100644

--- a/src/visualize/bloch.py

+++ b/src/visualize/bloch.py

@@ -1,7 +1,11 @@

+import re

+

import matplotlib.pyplot as plt

import numpy as np

-from .base import sphere, theme, light_mode

-from ..tools import probability, amplitude

+

+from ..errors import BlochSphereOutOfRangeError, InvalidSavePathError

+from ..tools import amplitude, probability

+from .base import light_mode, sphere, theme

def bloch(

@@ -11,8 +15,24 @@ def bloch(

show: bool = True,

light: bool = False,

):

- amplitutes = amplitude(quantumstate)

+ """Creates a qsphere visualization that can be interacted with.

+ Args:

+ quantum_state (ndarray/QuantumCircuit): State vector array or qcpy quantum circuit.

+ path (str): The path in which the image file will be saved when save is set true.

+ save (bool): Will save an image in the working directory when this boolean is true.

+ show (bool): Boolean to turn on/off the qsphere being opened in matplotlib.

+ light (bool): Will change the default dark theme mode to a light theme mode.

+ Returns:

+ None

+ """

+ if save and re.search(r"[<>:/\\|?*]", path) or len(path) > 255:

+ raise InvalidSavePathError("Invalid file name")

+ amplitudes = amplitude(quantumstate)

phase_angles = probability(quantumstate, False)

+ if amplitudes.size > 2:

+ BlochSphereOutOfRangeError(

+ "Bloch sphere only accepts a single qubit quantum circuit"

+ )

light_mode(light)

ax = sphere(theme.BACKGROUND_COLOR)

ax.quiver(1, 0, 0, 0.75, 0, 0, color="lightgray")

@@ -25,7 +45,7 @@ def bloch(

ax.quiver(0, 0, -1, 0, 0, -0.75, color="lightgray")

ax.text(0, 0, -2, "-z", color="gray")

ax.text(0.1, 0, -1.5, "|1>", color="gray")

- theta = np.arcsin(amplitutes[1]) * 2

+ theta = np.arcsin(amplitudes[1]) * 2

phi = phase_angles[1]

x = 1 * np.sin(theta) * np.cos(phi)

y = 1 * np.sin(theta) * np.sin(phi)

diff --git a/src/visualize/probability.py b/src/visualize/probability.py

index 50aadc0..1880e88 100644

--- a/src/visualize/probability.py

+++ b/src/visualize/probability.py

@@ -1,8 +1,12 @@

+import re

+

import matplotlib.pyplot as plt

-from .base import graph, light_mode, theme

-from ..tools import probability as prob

import numpy as np

+from ..errors import InvalidSavePathError

+from ..tools import probability as prob

+from .base import graph, light_mode, theme

+

def probability(

state: any,

@@ -11,26 +15,33 @@ def probability(

show: bool = True,

light: bool = False,

):

+ """Creates a probability representation of a given quantum circuit in matplotlib.

+ Args:

+ quantum_state (ndarray/QuantumCircuit): State vector array or qcpy quantum circuit.

+ path (str): The path in which the image file will be saved when save is set true.

+ save (bool): Will save an image in the working directory when this boolean is true.

+ show (bool): Boolean to turn on/off the qsphere being opened in matplotlib.

+ light (bool): Will change the default dark theme mode to a light theme mode.

+ Returns:

+ None

+ """

+ if save and re.search(r"[<>:/\\|?*]", path) or len(path) > 255:

+ raise InvalidSavePathError("Invalid file name")

probabilities = prob(state)

num_qubits = int(np.log2(probabilities.size))

state_list = [format(i, "b").zfill(num_qubits) for i in range(2**num_qubits)]

percents = [i * 100 for i in probabilities]

-

plt.clf()

plt.close()

-

light_mode(light)

ax = graph(theme.TEXT_COLOR, theme.BACKGROUND_COLOR, num_qubits)

ax.bar(state_list, percents, color="#39c0ba")

-

plt.xlabel("Computational basis states", color=theme.ACCENT_COLOR)

plt.ylabel("Probability (%)", labelpad=5, color=theme.ACCENT_COLOR)

plt.title("Probabilities", pad=10, color=theme.ACCENT_COLOR)

plt.tight_layout()

-

if save:

plt.savefig(path)

if show:

plt.show()

-

return

diff --git a/src/visualize/q_sphere.py b/src/visualize/q_sphere.py

index 2406d55..6fc769a 100644

--- a/src/visualize/q_sphere.py

+++ b/src/visualize/q_sphere.py

@@ -1,8 +1,10 @@

import matplotlib.pyplot as plt

from numpy import pi, log2, ndarray, cos, sin, linspace

import math

+import re

from typing import Union

from ..quantum_circuit import QuantumCircuit

+from ..errors import InvalidSavePathError

from .base import (

sphere,

color_bar,

@@ -19,6 +21,18 @@ def q_sphere(

show: bool = True,

light: bool = False,

) -> None:

+ """Creates a qsphere visualization that can be interacted with.

+ Args:

+ quantum_state (ndarray/QuantumCircuit): State vector array or qcpy quantum circuit.

+ path (str): The path in which the image file will be saved when save is set true.

+ save (bool): Will save an image in the working directory when this boolean is true.

+ show (bool): Boolean to turn on/off the qsphere being opened in matplotlib.

+ light (bool): Will change the default dark theme mode to a light theme mode.

+ Returns:

+ None

+ """

+ if save and re.search(r"[<>:/\\|?*]", path) or len(path) > 255:

+ raise InvalidSavePathError("Invalid file name")

colors = plt.get_cmap("hsv")

norm = plt.Normalize(0, pi * 2)

ax = sphere(theme.BACKGROUND_COLOR)

diff --git a/src/visualize/state_vector.py b/src/visualize/state_vector.py

index d5960f8..dc6eec2 100644

--- a/src/visualize/state_vector.py

+++ b/src/visualize/state_vector.py

@@ -1,6 +1,7 @@

import matplotlib.pyplot as plt

import numpy as np

from matplotlib.colors import rgb2hex

+from ..errors import *

from .base.graph import graph

from ..tools import amplitude, phaseangle

from .base import color_bar, theme, light_mode

@@ -13,6 +14,18 @@ def state_vector(

show: bool = True,

light: bool = False,

):

+ """Outputs a state vector representation from a given quantum circuit in matplotlib.

+ Args:

+ quantum_state (ndarray/QuantumCircuit): State vector array or qcpy quantum circuit.

+ path (str): The path in which the image file will be saved when save is set true.

+ save (bool): Will save an image in the working directory when this boolean is true.

+ show (bool): Boolean to turn on/off the qsphere being opened in matplotlib.

+ light (bool): Will change the default dark theme mode to a light theme mode.

+ Returns:

+ None

+ """

+ if save and re.search(r"[<>:/\\|?*]", path) or len(filename) > 255:

+ raise InvalidSavePathError("Invalid file name")

amplitudes = amplitude(circuit)

phase_angles = phaseangle(circuit)

num_qubits = int(np.log2(amplitudes.size))

@@ -28,7 +41,6 @@ def state_vector(

plt.xlabel("Computational basis states", color=theme.TEXT_COLOR)

plt.ylabel("Amplitutde", labelpad=5, color=theme.TEXT_COLOR)

plt.title("State Vector", pad=10, color=theme.TEXT_COLOR)

-

plt.tight_layout()

if save:

plt.savefig(path)

| visualize section needs error classes to handle code coverage

We need to integrate the current error classes into the visualize module, possibly adding more as new base cases arise.

The visualize part of the package needs docstrings.

| 2024-11-02T07:56:14 | 0.0 | [] | [] |
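In the guard `if save and re.search(...) or len(path) > 255:`, `and` binds tighter than `or`, so the length check runs even when `save` is false. Separately, `BlochSphereOutOfRangeError(...)` in `bloch()` is constructed but never raised, and the `state_vector()` guard uses `filename` and `re`, which do not appear to be defined or imported in that file. A minimal sketch of the intended check with explicit parentheses; `validate_save_path` and the local `InvalidSavePathError` class are hypothetical stand-ins for the package's own names:

```python
import re


class InvalidSavePathError(Exception):
    """Hypothetical stand-in for the package's error class."""


# Characters the patch rejects; "/" and "\\" are included, so only a bare
# file name (no directories) passes.
_FORBIDDEN = re.compile(r"[<>:/\\|?*]")


def validate_save_path(path: str, save: bool) -> None:
    """Raise InvalidSavePathError if saving is requested with a bad file name."""
    if save and (_FORBIDDEN.search(path) or len(path) > 255):
        raise InvalidSavePathError("Invalid file name")
```

Each visualizer could call `validate_save_path(path, save)` before drawing, and raise the out-of-range error explicitly with `raise`.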

|||

microsoft/responsible-ai-toolbox | microsoft__responsible-ai-toolbox-2510 | 1ef65b802f4ff6dbf9f4ae379552ee52b1e9acf2 | diff --git a/notebooks/responsibleaidashboard/text/genai-integration-demo.ipynb b/notebooks/responsibleaidashboard/text/genai-integration-demo.ipynb

index a72a206393..26a149efe0 100644

--- a/notebooks/responsibleaidashboard/text/genai-integration-demo.ipynb

+++ b/notebooks/responsibleaidashboard/text/genai-integration-demo.ipynb

@@ -2,7 +2,7 @@

"cells": [

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 1,

"metadata": {},

"outputs": [],

"source": [

@@ -17,20 +17,23 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 2,

"metadata": {},

- "outputs": [],

- "source": [

- "def replace_error_chars(message:str):\n",

- " message = message.replace('`', '')\n",

- " return message"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "data": {

+ "text/plain": [

+ "Dataset({\n",

+ " features: ['id', 'title', 'context', 'question', 'answers'],\n",

+ " num_rows: 87599\n",

+ "})"

+ ]

+ },

+ "execution_count": 2,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

"source": [

"dataset = datasets.load_dataset(\"squad\", split=\"train\")\n",

"dataset"

@@ -38,7 +41,7 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 3,

"metadata": {},

"outputs": [],

"source": [

@@ -50,20 +53,83 @@

"for row in dataset:\n",

" context.append(row['context'])\n",

" questions.append(row['question'])\n",

- " answers.append(replace_error_chars(row['answers']['text'][0]))\n",

+ " answers.append(row['answers']['text'][0])\n",

" templated_prompt = template.format(context=row['context'], question=row['question'])\n",

" prompts.append(templated_prompt)"

]

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 4,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "data": {

+ "text/html": [

+ "<div>\n",

+ "<style scoped>\n",

+ " .dataframe tbody tr th:only-of-type {\n",

+ " vertical-align: middle;\n",

+ " }\n",

+ "\n",

+ " .dataframe tbody tr th {\n",

+ " vertical-align: top;\n",

+ " }\n",

+ "\n",

+ " .dataframe thead th {\n",

+ " text-align: right;\n",

+ " }\n",

+ "</style>\n",

+ "<table border=\"1\" class=\"dataframe\">\n",

+ " <thead>\n",

+ " <tr style=\"text-align: right;\">\n",

+ " <th></th>\n",

+ " <th>prompt</th>\n",

+ " </tr>\n",

+ " </thead>\n",

+ " <tbody>\n",

+ " <tr>\n",

+ " <th>0</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>1</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>2</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>3</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>4</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " </tbody>\n",

+ "</table>\n",

+ "</div>"

+ ],

+ "text/plain": [

+ " prompt\n",

+ "0 Answer the question given the context.\\n\\ncont...\n",

+ "1 Answer the question given the context.\\n\\ncont...\n",

+ "2 Answer the question given the context.\\n\\ncont...\n",

+ "3 Answer the question given the context.\\n\\ncont...\n",

+ "4 Answer the question given the context.\\n\\ncont..."

+ ]

+ },

+ "execution_count": 4,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

"source": [

"data = pd.DataFrame({\n",

- " 'context': context,\n",

- " 'questions': questions,\n",

+ " # 'context': context,\n",

+ " # 'questions': questions,\n",

" # 'answers': answers,\n",

" 'prompt' : prompts})\n",

"test_data = data[:3]\n",

@@ -72,7 +138,7 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 5,

"metadata": {},

"outputs": [],

"source": [

@@ -102,7 +168,7 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 6,

"metadata": {},

"outputs": [],

"source": [

@@ -118,7 +184,7 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 7,

"metadata": {},

"outputs": [],

"source": [

@@ -139,9 +205,17 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 8,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Dataset download attempt 1 of 4\n"

+ ]

+ }

+ ],

"source": [

"from responsibleai_text import RAITextInsights, ModelTask\n",

"from raiwidgets import ResponsibleAIDashboard"

@@ -149,51 +223,162 @@

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 9,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "data": {

+ "text/html": [

+ "<div>\n",

+ "<style scoped>\n",

+ " .dataframe tbody tr th:only-of-type {\n",

+ " vertical-align: middle;\n",

+ " }\n",

+ "\n",

+ " .dataframe tbody tr th {\n",

+ " vertical-align: top;\n",

+ " }\n",

+ "\n",

+ " .dataframe thead th {\n",

+ " text-align: right;\n",

+ " }\n",

+ "</style>\n",

+ "<table border=\"1\" class=\"dataframe\">\n",

+ " <thead>\n",

+ " <tr style=\"text-align: right;\">\n",

+ " <th></th>\n",

+ " <th>prompt</th>\n",

+ " </tr>\n",

+ " </thead>\n",

+ " <tbody>\n",

+ " <tr>\n",

+ " <th>0</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>1</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " <tr>\n",

+ " <th>2</th>\n",

+ " <td>Answer the question given the context.\\n\\ncont...</td>\n",

+ " </tr>\n",

+ " </tbody>\n",

+ "</table>\n",

+ "</div>"

+ ],

+ "text/plain": [

+ " prompt\n",

+ "0 Answer the question given the context.\\n\\ncont...\n",

+ "1 Answer the question given the context.\\n\\ncont...\n",

+ "2 Answer the question given the context.\\n\\ncont..."

+ ]

+ },

+ "execution_count": 9,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

"source": [

"test_data.head()"

]

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 10,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "feature extraction: 0it [00:00, ?it/s]"

+ ]

+ },

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "feature extraction: 3it [00:00, 3.04it/s]\n",

+ "Failed to parse metric `This is a dummy answer`: invalid literal for int() with base 10: 'This is a dummy answer'\n",

+ "Failed to parse metric `This is a dummy answer`: invalid literal for int() with base 10: 'This is a dummy answer'\n",

+ "Failed to parse metric `This is a dummy answer`: invalid literal for int() with base 10: 'This is a dummy answer'\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "computing coherence score\n",

+ "coherence score\n",

+ "[0, 0, 0]\n",

+ "ext_dataset\n",

+ "['positive_words', 'negative_words', 'negation_words', 'negated_entities', 'named_persons', 'sentence_length', 'target_score']\n",

+ " positive_words negative_words negation_words negated_entities \\\n",

+ "0 50 0 0 0 \n",

+ "1 50 0 0 0 \n",

+ "2 52 0 0 0 \n",

+ "\n",

+ " named_persons sentence_length target_score \n",

+ "0 3 827 5 \n",

+ "1 2 805 5 \n",

+ "2 3 832 5 \n"

+ ]

+ }

+ ],

"source": [

"rai_insights = RAITextInsights(\n",

" pipeline_model, test_data, None,\n",

- " task_type=ModelTask.GENERATIVE_TEXT)"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "# TODO: Remove this once the insights object is updated to handle this\n",

- "rai_insights.temp_questions = test_data['questions']\n",

- "rai_insights.temp_context = test_data['context']\n",

- "rai_insights.temp_eval_model = eval_model"

+ " task_type=ModelTask.GENERATIVE_TEXT,\n",

+ " text_column='prompt')"

]

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 11,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "================================================================================\n",

+ "Error Analysis\n",

+ "Current Status: Generating error analysis reports.\n",

+ "Current Status: Finished generating error analysis reports.\n",

+ "Time taken: 0.0 min 0.3656380000002173 sec\n",

+ "================================================================================\n"

+ ]

+ }

+ ],

"source": [

"rai_insights.error_analysis.add()\n",

- "# rai_insights.compute()"

+ "rai_insights.compute()"

]

},

{

"cell_type": "code",

- "execution_count": null,

+ "execution_count": 12,

"metadata": {},

- "outputs": [],

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "ResponsibleAI started at http://localhost:8704\n"

+ ]

+ },

+ {

+ "data": {

+ "text/plain": [

+ "<raiwidgets.responsibleai_dashboard.ResponsibleAIDashboard at 0x2858abb3e20>"

+ ]

+ },

+ "execution_count": 12,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

"source": [

"ResponsibleAIDashboard(rai_insights)"

]

diff --git a/raiwidgets/raiwidgets/responsibleai_dashboard.py b/raiwidgets/raiwidgets/responsibleai_dashboard.py

index fc194258ad..17e752ed70 100644

--- a/raiwidgets/raiwidgets/responsibleai_dashboard.py

+++ b/raiwidgets/raiwidgets/responsibleai_dashboard.py

@@ -117,6 +117,15 @@ def get_question_answering_metrics():

methods=["POST"]

)

+ def get_generative_text_metrics():

+ data = request.get_json(force=True)

+ return jsonify(self.input.get_generative_text_metrics(data))

+ self.add_url_rule(

+ get_generative_text_metrics,

+ '/get_generative_text_metrics',

+ methods=["POST"]

+ )

+

if hasattr(self._service, 'socketio'):

@self._service.socketio.on('handle_object_detection_json')

def handle_object_detection_json(od_json):

diff --git a/raiwidgets/raiwidgets/responsibleai_dashboard_input.py b/raiwidgets/raiwidgets/responsibleai_dashboard_input.py

index 0df2fdf3f2..830701e861 100644

--- a/raiwidgets/raiwidgets/responsibleai_dashboard_input.py

+++ b/raiwidgets/raiwidgets/responsibleai_dashboard_input.py

@@ -171,13 +171,19 @@ def _prepare_filtered_error_analysis_data(self, features, filters,

def debug_ml(self, data):

try:

- features = data[0]

+ features = data[0] # TODO: Remove prompt feature

filters = data[1]

composite_filters = data[2]

max_depth = data[3]

num_leaves = data[4]

min_child_samples = data[5]

metric = display_name_to_metric[data[6]]

+ text_cols = self._analysis._text_column

+ if text_cols is None:

+ text_cols = []

+ elif isinstance(text_cols, str):

+ text_cols = [text_cols]

+ features = [f for f in features if f not in text_cols]

filtered_data_df = self._prepare_filtered_error_analysis_data(

features, filters, composite_filters, metric)

@@ -484,3 +490,35 @@ def get_question_answering_metrics(self, post_data):

"inner error: {}".format(e_str),

WidgetRequestResponseConstants.data: []

}

+

+ def get_generative_text_metrics(self, post_data):

+ """Flask endpoint function to get Model Overview metrics

+ for the Generative Text scenario.

+

+ :param post_data: List of inputs in the order

+ # TODO: What is the data we are getting here?

+ (tentative) [true_y, predicted_y, aggregate_method, class_name, iou_threshold].

+ :type post_data: List

+

+ :return: JSON/dict data response

+ :rtype: Dict[str, List]

+ """

+ try:

+ selection_indexes = post_data[0]

+ generative_text_cache = post_data[1]

+ exp = self._analysis.compute_genai_metrics(

+ selection_indexes,

+ generative_text_cache

+ )

+ return {

+ WidgetRequestResponseConstants.data: exp

+ }

+ except Exception as e:

+ print(e)

+ traceback.print_exc()

+ e_str = _format_exception(e)

+ return {

+ WidgetRequestResponseConstants.error:

+ EXP_VIZ_ERR_MSG.format(e_str),

+ WidgetRequestResponseConstants.data: []

+ }

diff --git a/responsibleai_text/responsibleai_text/managers/error_analysis_manager.py b/responsibleai_text/responsibleai_text/managers/error_analysis_manager.py

index 7abd6a2c25..461ca23772 100644

--- a/responsibleai_text/responsibleai_text/managers/error_analysis_manager.py

+++ b/responsibleai_text/responsibleai_text/managers/error_analysis_manager.py

@@ -12,6 +12,7 @@

import pandas as pd

from ml_wrappers import wrap_model

+from erroranalysis._internal.constants import ModelTask as ErrorAnalysisTask

from erroranalysis._internal.error_analyzer import ModelAnalyzer

from erroranalysis._internal.error_report import as_error_report

from responsibleai._tools.shared.state_directory_management import \

@@ -21,6 +22,7 @@

ErrorAnalysisManager as BaseErrorAnalysisManager

from responsibleai.managers.error_analysis_manager import as_error_config

from responsibleai_text.common.constants import ModelTask

+from responsibleai_text.utils.genai_metrics.metrics import get_genai_metric

from responsibleai_text.utils.feature_extractors import get_text_columns

LABELS = 'labels'

@@ -84,10 +86,20 @@ def __init__(self, model, dataset, is_multilabel, task_type, classes=None):

self.dataset.loc[:, ['context', 'questions']])

self.predictions = np.array(self.predictions)

elif self.task_type == ModelTask.GENERATIVE_TEXT:

- # FIXME: Copying from QUESTION_ANSWERING for now

- self.predictions = self.model.predict(

- self.dataset.loc[:, ['context', 'questions']])

- self.predictions = np.array(self.predictions)

+ # FIXME: Making constant predictions for now

+ # print('self dataset')

+ # print(self.dataset)

+ # self.predictions = [4] * len(self.dataset)

+ # self.predictions = np.array(self.predictions)

+ print('computing coherence score')

+ coherence = get_genai_metric(

+ 'coherence',

+ predictions=self.model.predict(self.dataset),

+ references=dataset['prompt'],

+ wrapper_model=self.model)

+ print('coherence score')

+ print(coherence['scores'])

+ self.predictions = np.array(coherence['scores'])

else:

raise ValueError("Unknown task type: {}".format(self.task_type))

@@ -198,9 +210,17 @@ def __init__(self, model: Any, dataset: pd.DataFrame,

task_type, index_classes)

if categorical_features is None:

categorical_features = []

+ if task_type == ModelTask.GENERATIVE_TEXT:

+ sup_task_type = ErrorAnalysisTask.REGRESSION

+ ext_dataset = ext_dataset.copy()

+ del ext_dataset['prompt']

+ ext_dataset['target_score'] = 5

+ target_column = 'target_score'

+ else:

+ sup_task_type = ErrorAnalysisTask.CLASSIFICATION

super(ErrorAnalysisManager, self).__init__(

index_predictor, ext_dataset, target_column,

- classes, categorical_features)

+ classes, categorical_features, model_task=sup_task_type)

@staticmethod

def _create_index_predictor(model, dataset, target_column,

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/rai_text_insights.py b/responsibleai_text/responsibleai_text/rai_text_insights/rai_text_insights.py

index a37faedd7c..8a3abf16a5 100644

--- a/responsibleai_text/responsibleai_text/rai_text_insights/rai_text_insights.py

+++ b/responsibleai_text/responsibleai_text/rai_text_insights/rai_text_insights.py

@@ -30,6 +30,7 @@

from responsibleai_text.managers.explainer_manager import ExplainerManager

from responsibleai_text.utils.feature_extractors import (extract_features,

get_text_columns)

+from responsibleai_text.utils.genai_metrics.metrics import get_genai_metric

module_logger = logging.getLogger(__name__)

module_logger.setLevel(logging.INFO)

@@ -106,7 +107,6 @@ def _add_extra_metadata_features(task_type, feature_metadata):

feature_metadata.context_col = 'context'

return feature_metadata

-

class RAITextInsights(RAIBaseInsights):

"""Defines the top-level RAITextInsights API.

@@ -616,14 +616,16 @@ def _get_dataset(self):

# add prompt and (optionally) context to dataset

# for generative text tasks

if self.task_type == ModelTask.GENERATIVE_TEXT:

- prompt = self.test[self._feature_metadata.prompt_col]

- context = self.test.get(self._feature_metadata.context_col)

-

- dashboard_dataset.prompt = convert_to_list(prompt)

- if context is None:

- dashboard_dataset.context = None

- else:

- dashboard_dataset.context = convert_to_list(context)

+ # prompt = self.test[self._feature_metadata.prompt_col]

+ # context = self.test.get(self._feature_metadata.context_col)

+

+ # dashboard_dataset.prompt = convert_to_list(prompt)

+ # if context is None:

+ # dashboard_dataset.context = None

+ # else:

+ # dashboard_dataset.context = convert_to_list(context)

+ # NOT DOING FOR NOW

+ pass

return dashboard_dataset

@@ -895,86 +897,77 @@ def compute_genai_metrics(

question_answering_cache

):

print('compute_genai_metrics')

- curr_file_dir = Path(__file__).resolve().parent

dashboard_dataset = self.get_data().dataset

+ prompt_idx = dashboard_dataset.feature_names.index('prompt')

+ prompts = [feat[prompt_idx] for feat in dashboard_dataset.features]

true_y = dashboard_dataset.true_y

predicted_y = dashboard_dataset.predicted_y

- eval_model = self.temp_eval_model

- questions = self.temp_questions

- context = self.temp_context

-

all_cohort_metrics = []

for cohort_indices in selection_indexes:

- print('cohort metrics')

- true_y_cohort = [true_y[cohort_index] for cohort_index

- in cohort_indices]

+ cohort_metrics = dict()

+

+ if true_y is None:

+ true_y_cohort = None

+ else:

+ true_y_cohort = [true_y[cohort_index] for cohort_index

+ in cohort_indices]

predicted_y_cohort = [predicted_y[cohort_index] for cohort_index

in cohort_indices]

- questions_cohort = [questions[cohort_index] for cohort_index

- in cohort_indices]

- context_cohort = [context[cohort_index] for cohort_index

- in cohort_indices]

+ prompts_cohort = [prompts[cohort_index] for cohort_index

+ in cohort_indices]

try:

- print('exact match')

- exact_match = evaluate.load('exact_match')

- exact_match_results = exact_match.compute(

- predictions=predicted_y_cohort, references=true_y_cohort)

+ if true_y_cohort is not None:

+ exact_match = evaluate.load('exact_match')

+ cohort_metrics['exact_match'] = exact_match.compute(

+ predictions=predicted_y_cohort, references=true_y_cohort)

- print('coherence')

- coherence = evaluate.load(

- str(curr_file_dir.joinpath('metrics/coherence.py')))

- coherence_results = coherence.compute(

+ cohort_metrics['coherence'] = get_genai_metric(

+ 'coherence',

predictions=predicted_y_cohort,

- references=questions_cohort,

- wrapper_model=eval_model)

+ references=prompts_cohort,

+ wrapper_model=self._wrapped_model

+ )

# coherence_results = {'scores' : [3.4]}

- print('equivalence')

- equivalence = evaluate.load(

- str(curr_file_dir.joinpath('metrics/equivalence.py')))

- equivalence_results = equivalence.compute(

+ if true_y_cohort is not None:

+ cohort_metrics['equivalence'] = get_genai_metric(

+ 'equivalence',

+ predictions=predicted_y_cohort,

+ references=prompts_cohort,

+ answers=true_y_cohort,

+ wrapper_model=self._wrapped_model

+ )

+ # equivalence_results = {'scores' : [3.4]}

+

+ cohort_metrics['fluency'] = get_genai_metric(

+ 'fluency',

predictions=predicted_y_cohort,

- references=questions_cohort,

- answers=true_y_cohort,

- wrapper_model=eval_model)

-

- print('fluency')

- fluency = evaluate.load(

- str(curr_file_dir.joinpath('metrics/fluency.py')))

- fluency_results = fluency.compute(

- predictions=predicted_y_cohort,

- references=questions_cohort,

- wrapper_model=eval_model)

+ references=prompts_cohort,

+ wrapper_model=self._wrapped_model

+ )

+ # fluency_results = {'scores' : [3.4]}

print('groundedness')

- # groundedness = evaluate.load(

- # str(curr_file_dir.joinpath('metrics/groundedness.py')))

- # groundedness_results = groundedness.compute(

- # predictions=predicted_y_cohort,

- # references=context_cohort,

- # wrapper_model=eval_model)

- groundedness_results = {'scores' : [3.4]}

+ cohort_metrics['groundedness'] = get_genai_metric(

+ 'groundedness',

+ predictions=predicted_y_cohort,

+ references=prompts_cohort,

+ wrapper_model=self._wrapped_model

+ )

+ # groundedness_results = {'scores' : [3.4]}

print('relevance')

- # relevance = evaluate.load(

- # str(curr_file_dir.joinpath('metrics/relevance.py')))

- # relevance_results = relevance.compute(

- # predictions=predicted_y_cohort,

- # references=context_cohort,

- # questions=questions_cohort,

- # wrapper_model=eval_model)

- relevance_results = {'scores' : [3.5]}

-

- all_cohort_metrics.append([

- exact_match_results['exact_match'],

- np.mean(coherence_results['scores']),

- np.mean(equivalence_results['scores']),

- np.mean(fluency_results['scores']),

- np.mean(groundedness_results['scores']),

- np.mean(relevance_results['scores'])])

+ cohort_metrics['relevance'] = get_genai_metric(

+ 'relevance',

+ predictions=predicted_y_cohort,

+ references=prompts_cohort,

+ wrapper_model=self._wrapped_model

+ )

+ # relevance_results = {'scores' : [3.5]}

+

+ all_cohort_metrics.append(cohort_metrics)

except ValueError:

- all_cohort_metrics.append([0, 0, 0, 0, 0, 0])

- print('all done')

+ all_cohort_metrics.append({})

return all_cohort_metrics

diff --git a/responsibleai_text/responsibleai_text/utils/feature_extractors.py b/responsibleai_text/responsibleai_text/utils/feature_extractors.py

index afea5eb9b2..7259bb3f23 100644

--- a/responsibleai_text/responsibleai_text/utils/feature_extractors.py

+++ b/responsibleai_text/responsibleai_text/utils/feature_extractors.py

@@ -63,6 +63,7 @@ def extract_features(text_dataset: pd.DataFrame,

feature_names.append("context_overlap")

elif task_type == ModelTask.GENERATIVE_TEXT:

# TODO: Add feature names for generative text

+ start_meta_index = 0

feature_names = base_feature_names

else:

raise ValueError("Unknown task type: {}".format(task_type))

diff --git a/responsibleai_text/responsibleai_text/utils/genai_metrics/metrics.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/metrics.py

new file mode 100644

index 0000000000..7a5c240e9e

--- /dev/null

+++ b/responsibleai_text/responsibleai_text/utils/genai_metrics/metrics.py

@@ -0,0 +1,22 @@

+# Copyright (c) Microsoft Corporation

+# Licensed under the MIT License.

+

+"""Compute AI-assisted metrics for generative text models."""

+

+from pathlib import Path

+import evaluate

+

+def get_genai_metric(metric_name, **metric_kwargs):

+ """Get the metric from the genai library.

+

+ :param metric_name: The name of the metric.

+ :type metric_name: str

+ :param metric_kwargs: The keyword arguments to pass to the metric.

+ :type metric_kwargs: dict

+ :return: The metric.

+ :rtype: float

+ """

+ curr_file_dir = Path(__file__).resolve().parent

+ metric = evaluate.load(

+ str(curr_file_dir.joinpath(f'scripts/{metric_name}.py')))

+ return metric.compute(**metric_kwargs)

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/coherence.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/coherence.py

similarity index 95%

rename from responsibleai_text/responsibleai_text/rai_text_insights/metrics/coherence.py

rename to responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/coherence.py

index e27d9f3eb4..4342ee978e 100644

--- a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/coherence.py

+++ b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/coherence.py

@@ -31,12 +31,23 @@

Five stars: the answer has perfect coherency

This rating value should always be an integer between 1 and 5. So the rating produced should be 1 or 2 or 3 or 4 or 5.

+Some examples of valid responses are:

+1

+2

+5

+Some examples of invalid responses are:

+1/5

+1.5

+3.0

+5 stars

QUESTION:

{question}

ANSWER:

{prediction}

+

+RATING:

""".strip()

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/equivalence.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/equivalence.py

similarity index 100%

rename from responsibleai_text/responsibleai_text/rai_text_insights/metrics/equivalence.py

rename to responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/equivalence.py

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/fluency.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/fluency.py

similarity index 100%

rename from responsibleai_text/responsibleai_text/rai_text_insights/metrics/fluency.py

rename to responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/fluency.py

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/groundedness.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/groundedness.py

similarity index 100%

rename from responsibleai_text/responsibleai_text/rai_text_insights/metrics/groundedness.py

rename to responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/groundedness.py

diff --git a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/relevance.py b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/relevance.py

similarity index 89%

rename from responsibleai_text/responsibleai_text/rai_text_insights/metrics/relevance.py

rename to responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/relevance.py

index b2d736d220..7947556b52 100644

--- a/responsibleai_text/responsibleai_text/rai_text_insights/metrics/relevance.py

+++ b/responsibleai_text/responsibleai_text/utils/genai_metrics/scripts/relevance.py

@@ -32,10 +32,7 @@

This rating value should always be an integer between 1 and 5. So the rating produced should be 1 or 2 or 3 or 4 or 5.

-CONTEXT:

-{context}

-

-QUESTION:

+QUESTION AND CONTEXT:

{question}

ANSWER:

@@ -54,8 +51,7 @@ def _info(self):

features=datasets.Features(

{

"predictions": datasets.Value("string", id="sequence"),

- "references": datasets.Value("string", id="sequence"),

- "questions": datasets.Value("string", id="sequence")

+ "references": datasets.Value("string", id="sequence")

}

),

)

@@ -64,9 +60,8 @@ def _compute(self, *, predictions=None, references=None, **kwargs):

m = []

templated_ques = []

- questions = kwargs['questions']

- for p, r, q in zip(predictions, references, questions):

- templated_ques.append(_TEMPLATE.format(context=r, question=q, prediction=p))

+ for p, r in zip(predictions, references):

+ templated_ques.append(_TEMPLATE.format(question=r, prediction=p))

model = kwargs['wrapper_model']

| Move legacy interpret dashboard

The Interpret dashboard has two top-level components, ExplanationDashboard and NewExplanationDashboard. Once the new dashboard is deemed sufficient, the old dashboard and its components should be moved to a legacy folder.

| 2024-01-26T18:37:52 | 0.0 | [] | [] |

|||

bambinos/bambi | bambinos__bambi-822 | 4cc310351d9705d5e0a1824726ca42b4ebcfa1bb | diff --git a/bambi/backend/inference_methods.py b/bambi/backend/inference_methods.py

index 900d9c26..dee510e7 100644

--- a/bambi/backend/inference_methods.py

+++ b/bambi/backend/inference_methods.py

@@ -17,6 +17,9 @@ def __init__(self):

self.pymc_methods = self._pymc_methods()

def _get_bayeux_methods(self, model):

+ # If bayeux is not installed, return an empty MCMC list.

+ if model is None:

+ return {"mcmc": []}

# Bambi only supports bayeux MCMC methods

mcmc_methods = model.methods.get("mcmc")

return {"mcmc": mcmc_methods}

@@ -85,7 +88,7 @@ def bayeux_model():

A dummy model with a simple quadratic likelihood function.

"""

if importlib.util.find_spec("bayeux") is None:

- return {"mcmc": []}

+ return None

import bayeux as bx # pylint: disable=import-outside-toplevel

| Error on Import

When importing bambi on the current version of the main branch (https://github.com/bambinos/bambi/tree/6180e733b3e843cfaa249031c5789ff0c5f12795), an error occurs:

Error:

```

(.venv) ~/P/C/test 1m 5.7s ⏱ python (base)

Python 3.10.8 (main, Nov 24 2022, 08:09:04) [Clang 14.0.6 ] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> import bambi

WARNING (pytensor.tensor.blas): Using NumPy C-API based implementation for BLAS functions.

Matplotlib is building the font cache; this may take a moment.

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/__init__.py", line 7, in <module>

from .backend import inference_methods, PyMCModel

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/backend/__init__.py", line 1, in <module>

from .pymc import PyMCModel

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/backend/pymc.py", line 16, in <module>

from bambi.backend.inference_methods import inference_methods

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/backend/inference_methods.py", line 119, in <module>

inference_methods = InferenceMethods()

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/backend/inference_methods.py", line 16, in __init__

self.bayeux_methods = self._get_bayeux_methods(bayeux_model())

File "/Users/phansen/Projects/CFA/test/.venv/lib/python3.10/site-packages/bambi/backend/inference_methods.py", line 21, in _get_bayeux_methods

mcmc_methods = model.methods.get("mcmc")

AttributeError: 'dict' object has no attribute 'methods'

```

When bayeux is not installed, this function returns the wrong type of object:

https://github.com/bambinos/bambi/blob/6180e733b3e843cfaa249031c5789ff0c5f12795/bambi/backend/inference_methods.py#L87-L88
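The fix in the diff above follows a common optional-dependency pattern. The factory returns `None` when the package is missing, and the consumer checks for `None` before touching `model.methods`. Returning a look-alike `dict` does not work, because the consumer cannot tell it apart from a real model.

The sketch below reproduces that pattern in isolation. `FakeModel`, `build_optional_model`, `get_mcmc_methods` and the `"example_sampler"` entry are hypothetical stand-ins, not bambi or bayeux APIs. Only `importlib.util.find_spec` is a real standard-library call.

```python
import importlib.util
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FakeModel:
    """Hypothetical stand-in for a bayeux model; only ``methods`` is modelled."""
    methods: Dict[str, List[str]] = field(default_factory=dict)


def build_optional_model(module_name: str) -> Optional[FakeModel]:
    """Return a model if ``module_name`` is installed, otherwise None."""
    if importlib.util.find_spec(module_name) is None:
        # Signal "not installed" with None, not with an object of another type.
        return None
    # Hypothetical: a real implementation would import the package and build
    # a model here; this sketch returns a fixed stand-in instead.
    return FakeModel(methods={"mcmc": ["example_sampler"]})


def get_mcmc_methods(model: Optional[FakeModel]) -> Dict[str, List[str]]:
    """Collect MCMC method names, tolerating a missing optional dependency."""
    if model is None:
        return {"mcmc": []}
    return {"mcmc": model.methods.get("mcmc", [])}
```

With the `None` check in place, importing the library succeeds whether or not the optional package is installed. The MCMC list is simply empty when it is missing.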

| Hey @peterhansen-cfa2, apologies for the delayed response. This looks like a simple fix. I will look into it tomorrow. Thanks for raising the issue! | 2024-07-04T17:13:07 | 0.0 | [] | [] |

||

ami-iit/adam | ami-iit__adam-85 | 956031282b9d2268eec852767814d0830dc8147d | diff --git a/src/adam/model/__init__.py b/src/adam/model/__init__.py

index 0dd4bf9..0426e31 100644

--- a/src/adam/model/__init__.py

+++ b/src/adam/model/__init__.py

@@ -1,4 +1,4 @@

-from .abc_factories import Joint, Link, ModelFactory

+from .abc_factories import Joint, Link, ModelFactory, Inertial, Pose

from .model import Model

from .std_factories.std_joint import StdJoint

from .std_factories.std_link import StdLink

diff --git a/src/adam/model/abc_factories.py b/src/adam/model/abc_factories.py

index 853dbb8..4720588 100644

--- a/src/adam/model/abc_factories.py

+++ b/src/adam/model/abc_factories.py

@@ -88,8 +88,36 @@ class Inertial:

"""Inertial description"""

mass: npt.ArrayLike

- inertia = Inertia

- origin = Pose

+ inertia: Inertia

+ origin: Pose

+

+ @staticmethod

+ def zero() -> "Inertial":

+ """Returns an Inertial object with zero mass and inertia"""

+ return Inertial(

+ mass=0.0,

+ inertia=Inertia(

+ ixx=0.0,

+ ixy=0.0,

+ ixz=0.0,

+ iyy=0.0,

+ iyz=0.0,

+ izz=0.0,

+ ),

+ origin=Pose(xyz=[0.0, 0.0, 0.0], rpy=[0.0, 0.0, 0.0]),

+ )

+

+ def set_mass(self, mass: npt.ArrayLike) -> "Inertial":

+ """Set the mass of the inertial object"""

+ self.mass = mass

+

+ def set_inertia(self, inertia: Inertia) -> "Inertial":

+ """Set the inertia of the inertial object"""

+ self.inertia = inertia

+

+ def set_origin(self, origin: Pose) -> "Inertial":

+ """Set the origin of the inertial object"""

+ self.origin = origin

@dataclasses.dataclass

diff --git a/src/adam/model/std_factories/std_link.py b/src/adam/model/std_factories/std_link.py

index 9d90829..7754747 100644

--- a/src/adam/model/std_factories/std_link.py

+++ b/src/adam/model/std_factories/std_link.py

@@ -2,7 +2,7 @@

import urdf_parser_py.urdf

from adam.core.spatial_math import SpatialMath

-from adam.model import Link

+from adam.model import Link, Inertial, Pose

class StdLink(Link):

@@ -15,10 +15,15 @@ def __init__(self, link: urdf_parser_py.urdf.Link, math: SpatialMath):

self.inertial = link.inertial

self.collisions = link.collisions

+ # if the link has no inertial properties (a connecting frame), let's add them

+ if link.inertial is None:

+ link.inertial = Inertial.zero()

+

# if the link has inertial properties, but the origin is None, let's add it

if link.inertial is not None and link.inertial.origin is None:

- link.inertial.origin.xyz = [0, 0, 0]

- link.inertial.origin.rpy = [0, 0, 0]

+ link.inertial.origin = Pose(xyz=[0, 0, 0], rpy=[0, 0, 0])

+

+ self.inertial = link.inertial

def spatial_inertia(self) -> npt.ArrayLike:

"""

diff --git a/src/adam/model/std_factories/std_model.py b/src/adam/model/std_factories/std_model.py

index ffeff71..5f8f4fd 100644

--- a/src/adam/model/std_factories/std_model.py

+++ b/src/adam/model/std_factories/std_model.py

@@ -44,9 +44,8 @@ def __init__(self, path: str, math: SpatialMath):

# to have a useless and noisy warning, let's remove before hands all the sensor elements,

# that anyhow are not parser by urdf_parser_py or adam

# See https://github.com/ami-iit/ADAM/issues/59

- xml_file = open(path, "r")

- xml_string = xml_file.read()

- xml_file.close()

+ with open(path, "r") as xml_file:

+ xml_string = xml_file.read()

xml_string_without_sensors_tags = urdf_remove_sensors_tags(xml_string)

self.urdf_desc = urdf_parser_py.urdf.URDF.from_xml_string(

xml_string_without_sensors_tags

@@ -64,17 +63,45 @@ def get_links(self) -> List[StdLink]:

"""

Returns:

List[StdLink]: build the list of the links

+

+ A link is considered a "real" link if

+ - it has an inertial

+ - it has children

+ - if it has neither children nor an inertial, it is connected to its parent by a non-fixed joint

"""

return [

- self.build_link(l) for l in self.urdf_desc.links if l.inertial is not None

+ self.build_link(l)

+ for l in self.urdf_desc.links

+ if (

+ l.inertial is not None

+ or l.name in self.urdf_desc.child_map.keys()

+ or any(

+ j.type != "fixed"

+ for j in self.urdf_desc.joints

+ if j.child == l.name

+ )

+ )

]

def get_frames(self) -> List[StdLink]:

"""

Returns:

List[StdLink]: build the list of the links

+

+ A link is considered a "fake" link (frame) if

+ - it has no inertial

+ - it does not have children

+ - it is connected to the parent with a fixed joint

"""

- return [self.build_link(l) for l in self.urdf_desc.links if l.inertial is None]

+ return [

+ self.build_link(l)

+ for l in self.urdf_desc.links

+ if l.inertial is None

+ and l.name not in self.urdf_desc.child_map.keys()

+ and all(

+ j.type == "fixed" for j in self.urdf_desc.joints if j.child == l.name

+ )

+ ]

def build_joint(self, joint: urdf_parser_py.urdf.Joint) -> StdJoint:

"""

diff --git a/src/adam/parametric/model/parametric_factories/parametric_link.py b/src/adam/parametric/model/parametric_factories/parametric_link.py

index c9ae02b..1346f3f 100644

--- a/src/adam/parametric/model/parametric_factories/parametric_link.py

+++ b/src/adam/parametric/model/parametric_factories/parametric_link.py

@@ -50,10 +50,11 @@ def __init__(

length_multiplier=self.length_multiplier

)

self.mass = self.compute_mass()

- self.inertial = Inertial(self.mass)

- self.inertial.mass = self.mass

- self.inertial.inertia = self.compute_inertia_parametric()

- self.inertial.origin = self.modify_origin()

+ inertia_parametric = self.compute_inertia_parametric()

+ origin = self.modify_origin()

+ self.inertial = Inertial(

+ mass=self.mass, inertia=inertia_parametric, origin=origin

+ )

self.update_visuals()

def get_principal_length(self):

| Error detecting two root links

Hi, I got this bug:

```
  File "/samsung4tb/BiDex-touch/bidex_collect/utils/hand_retargeter.py", line 49, in __init__
    kinDyn = KinDynComputations(os.path.join(CUR_PATH, "../../bidex_sim/assets/robots/ur_description/urdf/ur3e_leap_right.urdf"),
  File "/samsung4tb/venvs/isaacgym_venv/lib/python3.8/site-packages/adam/jax/computations.py", line 34, in __init__
    model = Model.build(factory=factory, joints_name_list=joints_name_list)
  File "/samsung4tb/venvs/isaacgym_venv/lib/python3.8/site-packages/adam/model/model.py", line 63, in build
    tree = Tree.build_tree(links=links_list, joints=joints_list)
  File "/samsung4tb/venvs/isaacgym_venv/lib/python3.8/site-packages/adam/model/tree.py", line 72, in build_tree
    raise ValueError("The model has more than one root link")
ValueError: The model has more than one root link
```

Loading the attached URDF incorrectly reports two root links (`base_link` and `palm_lower`), even though ROS's `check_urdf` confirms there is only one.

[ur3e_leap_right.zip](https://github.com/user-attachments/files/15754226/ur3e_leap_right.zip)
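One way to see how a link without an `<inertial>` can produce a spurious second root, and how the classification rule stated in the patched `get_links()` docstring avoids it, is the simplified sketch below. It is an illustration under assumptions, not ADAM's code: it uses a hypothetical `Joint` record and plain dicts instead of ADAM's or `urdf_parser_py`'s classes, the link names are invented, and treating a root as "a real link not reached by any joint from another real link" is a simplification rather than the actual `Tree.build_tree` logic.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Joint:
    """Hypothetical stand-in for a URDF joint: only the fields needed here."""
    name: str
    parent: str
    child: str
    type: str  # e.g. "fixed" or "revolute"


# Hypothetical model: link name -> mass, or None when the link has no <inertial>.
LINKS: Dict[str, Optional[float]] = {
    "base": 1.0,
    "arm": 1.0,
    "mount": None,  # massless adapter link that still has a child
    "palm": 0.5,
    "tip": None,    # pure frame: no inertial, no children, fixed joint
}
JOINTS: List[Joint] = [
    Joint("j0", "base", "arm", "revolute"),
    Joint("j1", "arm", "mount", "fixed"),
    Joint("j2", "mount", "palm", "fixed"),
    Joint("j3", "palm", "tip", "fixed"),
]


def real_links_old(links: Dict[str, Optional[float]]) -> Set[str]:
    """Pre-patch rule: only links with an inertial are real links."""
    return {name for name, mass in links.items() if mass is not None}


def real_links_new(links: Dict[str, Optional[float]], joints: List[Joint]) -> Set[str]:
    """Post-patch rule as described in the get_links() docstring."""
    has_children = {j.parent for j in joints}  # plays the role of child_map keys
    real = set()
    for name, mass in links.items():
        # True if the link hangs from its parent through a non-fixed joint
        moving_joint = any(j.type != "fixed" for j in joints if j.child == name)
        if mass is not None or name in has_children or moving_joint:
            real.add(name)
    return real


def root_links(real: Set[str], joints: List[Joint]) -> Set[str]:
    """Simplified root test: real links not reached from another real link."""
    reached = {j.child for j in joints if j.parent in real}
    return real - reached


print(sorted(root_links(real_links_old(LINKS), JOINTS)))          # ['base', 'palm']
print(sorted(root_links(real_links_new(LINKS, JOINTS), JOINTS)))  # ['base']
```

With the old rule, `mount` is treated as a frame, so the joint `mount -> palm` no longer attaches `palm` to the tree and `palm` shows up as a second root, which resembles the `base_link`/`palm_lower` symptom above. The new rule keeps `mount` as a real link because it has a child, while `tip` is still classified as a frame.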

| FYI @Giulero, I can confirm that iDynTree's URDF parser also loads the URDF that @richardrl provided without errors:

~~~

D:\ur3e_leap_right> conda create -n idyntree idyntree
D:\ur3e_leap_right> conda activate idyntree
(idyntree) D:\ur3e_leap_right>idyntree-model-info -m ./ur3e_leap_right.urdf --print
Model:
Links:
[0] base_link
[1] shoulder_link
[2] upper_arm_link
[3] forearm_link
[4] wrist_1_link
[5] wrist_2_link
[6] wrist_3_link
[7] flange
[8] tool0
[9] wrist_axis
[10] palm_lower
[11] mcp_joint
[12] mcp_joint_2
[13] mcp_joint_3
[14] pip_4
[15] thumb_pip
[16] thumb_dip
[17] thumb_fingertip
[18] pip_3
[19] dip_3
[20] fingertip_3
[21] pip_2
[22] dip_2
[23] fingertip_2
[24] pip
[25] dip
[26] fingertip
Frames:
[27] mcp_joint_root --> mcp_joint
[28] mcp_joint_2_root --> mcp_joint_2
[29] mcp_joint_3_root --> mcp_joint_3
[30] thumb_fingertip_tip --> thumb_fingertip
[31] fingertip_3_tip --> fingertip_3
[32] fingertip_2_tip --> fingertip_2
[33] fingertip_tip --> fingertip
Joints:
[0] shoulder_pan_joint (dofs: 1) : base_link<-->shoulder_link
[1] shoulder_lift_joint (dofs: 1) : shoulder_link<-->upper_arm_link
[2] elbow_joint (dofs: 1) : upper_arm_link<-->forearm_link
[3] wrist_1_joint (dofs: 1) : forearm_link<-->wrist_1_link
[4] wrist_2_joint (dofs: 1) : wrist_1_link<-->wrist_2_link
[5] wrist_3_joint (dofs: 1) : wrist_2_link<-->wrist_3_link
[6] 1 (dofs: 1) : palm_lower<-->mcp_joint
[7] 5 (dofs: 1) : palm_lower<-->mcp_joint_2
[8] 9 (dofs: 1) : palm_lower<-->mcp_joint_3
[9] 12 (dofs: 1) : palm_lower<-->pip_4
[10] 13 (dofs: 1) : pip_4<-->thumb_pip
[11] 14 (dofs: 1) : thumb_pip<-->thumb_dip
[12] 15 (dofs: 1) : thumb_dip<-->thumb_fingertip
[13] 8 (dofs: 1) : mcp_joint_3<-->pip_3
[14] 10 (dofs: 1) : pip_3<-->dip_3
[15] 11 (dofs: 1) : dip_3<-->fingertip_3
[16] 4 (dofs: 1) : mcp_joint_2<-->pip_2
[17] 6 (dofs: 1) : pip_2<-->dip_2
[18] 7 (dofs: 1) : dip_2<-->fingertip_2
[19] 0 (dofs: 1) : mcp_joint<-->pip