| repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 8,502 | closed | TF T5-small with output hidden state and attention not working | - `transformers` version: 2.11

- Platform: Multiple

- Python version: multiple

### Who can help

T5: @patrickvonplaten

tensorflow: @jplu

## Information

Model I am using (Bert, XLNet ...): T5

The problem arises when t5-small is loaded from pretrained with `output_hidden_states=True, output_attentions=True`.

Sample script: https://colab.research.google.com/drive/1oF8hMaQg1yl2fE6QPUYKSTZcer4Mlk6S?usp=sharing

If these parameters are removed, the script works.

I get the following error:

```python

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_utils.py in generate(self, input_ids, max_length, min_length, do_sample, early_stopping, num_beams, temperature, top_k, top_p, repetition_penalty, bad_words_ids, bos_token_id, pad_token_id, eos_token_id, length_penalty, no_repeat_ngram_size, num_return_sequences, attention_mask, decoder_start_token_id, use_cache)

780 encoder_outputs=encoder_outputs,

781 attention_mask=attention_mask,

--> 782 use_cache=use_cache,

783 )

784 else:

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_utils.py in _generate_beam_search(self, input_ids, cur_len, max_length, min_length, do_sample, early_stopping, temperature, top_k, top_p, repetition_penalty, no_repeat_ngram_size, bad_words_ids, bos_token_id, pad_token_id, decoder_start_token_id, eos_token_id, batch_size, num_return_sequences, length_penalty, num_beams, vocab_size, encoder_outputs, attention_mask, use_cache)

1027 input_ids, past=past, attention_mask=attention_mask, use_cache=use_cache

1028 )

-> 1029 outputs = self(**model_inputs) # (batch_size * num_beams, cur_len, vocab_size)

1030 next_token_logits = outputs[0][:, -1, :] # (batch_size * num_beams, vocab_size)

1031

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/base_layer.py in __call__(self, *args, **kwargs)

983

984 with ops.enable_auto_cast_variables(self._compute_dtype_object):

--> 985 outputs = call_fn(inputs, *args, **kwargs)

986

987 if self._activity_regularizer:

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_t5.py in call(self, inputs, **kwargs)

1061 encoder_attention_mask=attention_mask,

1062 head_mask=head_mask,

-> 1063 use_cache=use_cache,

1064 )

1065

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/base_layer.py in __call__(self, *args, **kwargs)

983

984 with ops.enable_auto_cast_variables(self._compute_dtype_object):

--> 985 outputs = call_fn(inputs, *args, **kwargs)

986

987 if self._activity_regularizer:

/usr/local/lib/python3.6/dist-packages/transformers/modeling_tf_t5.py in call(self, inputs, attention_mask, encoder_hidden_states, encoder_attention_mask, inputs_embeds, head_mask, past_key_value_states, use_cache, training)

572 # required mask seq length can be calculated via length of past

573 # key value states and seq_length = 1 for the last token

--> 574 mask_seq_length = shape_list(past_key_value_states[0][0])[2] + seq_length

575 else:

576 mask_seq_length = seq_length

IndexError: list index out of range

```

## Expected behavior

How can I get the attentions and hidden states as output?

Even a PyTorch sample would help; I should be able to adapt it for TF. | 11-12-2020 16:21:00 | 11-12-2020 16:21:00 | Hello!

Unfortunately, this is a known bug we have with a few of the TF models. We are currently reworking all the TF models to solve this issue, among others.<|||||>@jplu I tried the same thing with the PyTorch model too, and it also gives an error. Any idea how I can get the attentions with PyTorch?<|||||>You get the same error with PyTorch? For PyTorch I will let @patrickvonplaten take the lead to help you; he knows better than me.<|||||>The error is the same, except that it says tuple instead of list.<|||||>Hey @pathikchamaria - is it possible to update your version? 2.11 is very outdated by now. Could you try again with the current version of transformers (3.5)? <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>@jplu are there any updates on this on the tensorflow side? |

transformers | 8,501 | closed | Why does XLM-RoBERTa sometimes produce a standalone start-of-word character (the special underscore with ord = 9601)? | I'm using the `transformers` library 3.4.0.

In certain cases, the XLM-RoBERTa tokenizer produces standalone start-of-word characters. Is that intended?

For example:

```

tokenizer.tokenize('amerikanische')

['▁', 'amerikanische']

```

while

```

tokenizer.tokenize('englische')

['▁englische']

```

| 11-12-2020 15:56:00 | 11-12-2020 15:56:00 | Ah, I think I figured it out. Does it happen when the tokenizer's training data never had this token at the beginning of a word, only inside a word? |
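The "special underscore" is U+2581 LOWER ONE EIGHTH BLOCK, the character SentencePiece uses to mark the start of a word (its `ord` is 9601, as the title says). A minimal sketch for spotting standalone markers in a token list, using the two outputs quoted above as sample data; `standalone_marker_positions` is a hypothetical helper, not part of `transformers`:

```python
import unicodedata

# SentencePiece's word-boundary marker (not an ASCII underscore)
SPIECE_UNDERLINE = "\u2581"


def standalone_marker_positions(tokens):
    """Return the indices of tokens that consist of the marker alone."""
    return [i for i, tok in enumerate(tokens) if tok == SPIECE_UNDERLINE]


print(ord(SPIECE_UNDERLINE))               # 9601
print(unicodedata.name(SPIECE_UNDERLINE))  # LOWER ONE EIGHTH BLOCK
print(standalone_marker_positions(["\u2581", "amerikanische"]))  # [0]
print(standalone_marker_positions(["\u2581englische"]))          # []
```

A standalone marker is still a valid segmentation. It only means the model split the word boundary into its own piece instead of using a single `▁amerikanische` piece.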

transformers | 8,500 | closed | Fix doc bug | Fixes the Trainer example; hope it helps.

@sgugger

| 11-12-2020 15:48:03 | 11-12-2020 15:48:03 | Thanks for the fix! |

transformers | 8,499 | closed | Unable to install Transformers | Hi all - I'm unable to install transformers from source. I need this for a project, and it's really annoying not to be able to use your amazing work. Could you please help me? :) Thank you so much.

**Issue**

pip install gets stuck installing **sentencepiece-0.1.91** and crashes

**What I tried**

- I tried to work around it by installing the latest version of sentencepiece (0.1.94), but that doesn't solve the issue

- I tried to download the repository locally and change the version requirement in setup.py and requirement.txt, but that doesn't solve it either

- My system: macOS 10.15.7 / Python 3.9.0 / pip 20.2.4 / Anaconda3 with PyTorch

**The error messages, and `pip list` showing that I installed the latest sentencepiece**

```

(env) (base) Cecilias-MacBook-Air:transformers mymacos$ pip3 install -e .

Obtaining file:///Users/mymacos/Documents/OpenAI/transformers

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing wheel metadata ... done

Collecting filelock

Using cached filelock-3.0.12-py3-none-any.whl (7.6 kB)

Collecting sentencepiece==0.1.91

Using cached sentencepiece-0.1.91.tar.gz (500 kB)

ERROR: Command errored out with exit status 1:

command: /Users/mymacos/Documents/OpenAI/env/bin/python3 -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'/private/var/folders/s7/73dlmpfj3253cpbpl6v96rbm0000gn/T/pip-install-5ceji0j1/sentencepiece/setup.py'"'"'; __file__='"'"'/private/var/folders/s7/73dlmpfj3253cpbpl6v96rbm0000gn/T/pip-install-5ceji0j1/sentencepiece/setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' egg_info --egg-base /private/var/folders/s7/73dlmpfj3253cpbpl6v96rbm0000gn/T/pip-pip-egg-info-svt86xy8

cwd: /private/var/folders/s7/73dlmpfj3253cpbpl6v96rbm0000gn/T/pip-install-5ceji0j1/sentencepiece/

Complete output (5 lines):

Package sentencepiece was not found in the pkg-config search path.

Perhaps you should add the directory containing `sentencepiece.pc'

to the PKG_CONFIG_PATH environment variable

No package 'sentencepiece' found

Failed to find sentencepiece pkgconfig

----------------------------------------

ERROR: Command errored out with exit status 1: python setup.py egg_info Check the logs for full command output.

(env) (base) Cecilias-MacBook-Air:transformers mymacos$ pip list

Package Version

----------------- -------

astroid 2.4.2

isort 5.6.4

lazy-object-proxy 1.4.3

mccabe 0.6.1

numpy 1.19.4

pip 20.2.4

pylint 2.6.0

PyYAML 5.3.1

sentencepiece 0.1.94

setuptools 50.3.2

six 1.15.0

toml 0.10.2

wheel 0.35.1

wrapt 1.12.1

``` | 11-12-2020 15:33:12 | 11-12-2020 15:33:12 | I guess the issue is that you're using `anaconda` here. Until version v4.0.0, we're not entirely compatible with anaconda as SentencePiece is not on a conda channel.

In the meantime, we recommend installing `transformers` in a pip virtual env:

```shell-script

python -m venv .env

source .env/bin/activate

pip install -e .

```<|||||>@LysandreJik Thanks for your answer! Actually, it was not an `anaconda` issue.

I found the solution! There are two version issues in the install requirements.

See the steps below. Note that I had to downgrade Python to 3.8, because Torch/TorchVision don't support 3.9 yet.

1. Copy the contents of the GitHub repo into a "transformers" folder: https://github.com/huggingface/transformers

2. `cd transformers`

3. Change all the `tokenizers` 0.9.3 references to 0.9.4 in the transformers files

4. Change all the `sentencepiece` 0.1.91 references to 0.1.94 in the transformers files

5. `brew install pkgconfig`

6. `python3.8 setup.py install`

And voila! I hope it helps lots of folks struggling. 👍

<|||||>@MoonshotQuest - what are "transformers files" in the reply above? |
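Steps 3 and 4 amount to editing version pins, for example in `setup.py`, which the original post mentions. Below is a minimal sketch of doing this programmatically. It assumes each pin appears as a `name==version` string, possibly with spaces around `==`. `bump_pin` and the sample text are hypothetical and not taken from the repository.

```python
import re


def bump_pin(text, package, old, new):
    """Rewrite every exact `package==old` pin in `text` to `package==new`."""
    # Group 1 keeps the package name plus whatever spacing surrounds "==".
    # re.escape stops the dots in the version from matching any character, and the
    # negative lookahead stops "0.1.91" from also matching a longer version like "0.1.910".
    pattern = rf"\b({re.escape(package)}\s*==\s*){re.escape(old)}(?![\w.])"
    return re.sub(pattern, lambda m: m.group(1) + new, text)


# Hypothetical excerpt in the style of an install_requires list
requirements = '"tokenizers == 0.9.3",\n"sentencepiece==0.1.91",\n'
requirements = bump_pin(requirements, "tokenizers", "0.9.3", "0.9.4")
requirements = bump_pin(requirements, "sentencepiece", "0.1.91", "0.1.94")
print(requirements)
```

Loosening pins this way can pull in versions the library was not tested against, so a clean virtual environment (as suggested above) is still the safer route.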

transformers | 8,498 | closed | Model sharing doc | # What does this PR do?

This PR expands the model sharing doc with some instructions specific to colab.

Unrelated: some fixes in marian.rst that I thought I had pushed directly to master but had not. | 11-12-2020 15:23:33 | 11-12-2020 15:23:33 | |

transformers | 8,497 | closed | Error when loading a model cloned without git-lfs is quite cryptic | # 🚀 Error message request

If you forget to install git-LFS (e.g. on Google Colab) and you just do:

```python

!git clone https://huggingface.co/facebook/bart-base

from transformers import AutoModel

model = AutoModel.from_pretrained('./bart-base')

```

The cloning seems to work well but the model weights are not downloaded. The error message is then quite cryptic and could probably be tailored to this (probably) common failure case:

```

loading weights file ./bart-large-cnn/pytorch_model.bin

---------------------------------------------------------------------------

UnpicklingError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

950 try:

--> 951 state_dict = torch.load(resolved_archive_file, map_location="cpu")

952 except Exception:

4 frames

UnpicklingError: invalid load key, 'v'.

During handling of the above exception, another exception occurred:

OSError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

952 except Exception:

953 raise OSError(

--> 954 f"Unable to load weights from pytorch checkpoint file for '{pretrained_model_name_or_path}' "

955 f"at '{resolved_archive_file}'"

956 "If you tried to load a PyTorch model from a TF 2.0 checkpoint, please set from_tf=True. "

OSError: Unable to load weights from pytorch checkpoint file for './bart-large-cnn' at './bart-large-cnn/pytorch_model.bin'If you tried to load a PyTorch model from a TF 2.0 checkpoint, please set from_tf=True.

```

Git LFS pointer files are pretty simple to parse and typically look like this:

```

version https://git-lfs.github.com/spec/v1

oid sha256:097417381d6c7230bd9e3557456d726de6e83245ec8b24f529f60198a67b203a

size 440473133

```

The first *key* is always `version`: https://github.com/git-lfs/git-lfs/blob/master/docs/spec.md | 11-12-2020 15:20:15 | 11-12-2020 15:20:15 | Yep, the way I would go about this would be to programmatically check whether the file is text-only (non-binary) and between 100 and 200 bytes. If it is (and we expected a weights file), it's probably an LFS pointer file.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>unstale |
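The following minimal sketch implements the heuristic suggested above. A small file whose first line is the spec's `version` key is very likely a pointer and not a checkpoint. The function name and the 1024-byte cap are assumptions. The cap is looser than the 100 to 200 bytes suggested above, so pointers with extra keys still match.

```python
import os

# First line of a Git LFS pointer file (the spec requires `version` to come first)
LFS_VERSION_LINE = b"version https://git-lfs.github.com/spec/v1"


def looks_like_lfs_pointer(path, max_size=1024):
    """Heuristically decide whether `path` is a Git LFS pointer rather than real weights."""
    # Pointer files are tiny; real checkpoints are hundreds of megabytes.
    if os.path.getsize(path) > max_size:
        return False
    with open(path, "rb") as f:
        head = f.read(max_size)
    return head.startswith(LFS_VERSION_LINE)
```

A loader could run this check before calling `torch.load`. If it returns `True`, the loader could raise an error telling the user to run `git lfs install` and then `git lfs pull` in the cloned repository.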

transformers | 8,496 | closed | Created ModelCard for Hel-ach-en MT model | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to the it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSTM: @stas00

-->

| 11-12-2020 15:00:59 | 11-12-2020 15:00:59 | This is really cool @Pogayo, thanks for sharing.

If you can, please consider adding sample inputs for the inference widget, either in DefaultWidget.ts (see https://huggingface.co/docs#how-can-i-control-my-models-widgets-example-inputs) or in this model card.

Will also add Acholi to the list in https://huggingface.co/languages<|||||>I don't know if this is the right place to ask, apologies in advance - I am trying to translate on the model page and getting this error:

I have not been able to figure out what causes it so if you can guide me, I would really love to see this model accessible for people.

Unrecognized configuration class for this kind of AutoModel: AutoModelForCausalLM. Model type should be one of CamembertConfig, XLMRobertaConfig, RobertaConfig, BertConfig, OpenAIGPTConfig, GPT2Config, TransfoXLConfig, XLNetConfig, XLMConfig, CTRLConfig, ReformerConfig, BertGenerationConfig, XLMProphetNetConfig, ProphetNetConfig.

<|||||>Did you change the `pipeline_tag` in the meantime? It's working now:

https://huggingface.co/Helsinki-NLP/opus-mt-luo-en?text=Ariyo

The error seemed to indicate that it wanted to do text generation with your model, which it can't.<|||||>It is still not working @Narsil. I get a different error now; do you know what might be causing it?

The model you referenced is a different one (a Luo-English model); this one is Acholi-English. |

transformers | 8,495 | closed | Allow tensorflow tensors as input to Tokenizer | Firstly thanks so much for all the amazing work!

I'm trying to package a model for use in TF Serving. The problem is that everywhere I see this done, the tokenisation step happens outside of the server. I want to include this step inside the server so the user can just provide raw text as the input and not need to know anything about tokenization.

Here's how I'm trying to do it:

```python
import tensorflow as tf


def save_model(model, tokenizer, output_path):
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.string)])
    def serving(input_text):
        # Fails here: the tokenizer expects Python `str`/`List[str]`, not a tf.string tensor
        inputs = tokenizer(input_text, padding='longest', truncation=True, return_tensors="tf")
        outputs = model(inputs)
        logits = outputs[0]
        # Stay in graph ops: `.numpy()` is not available inside a tf.function
        probs = tf.nn.softmax(logits, axis=1)[:, 1]
        predictions = tf.cast(tf.math.round(probs), tf.int32)
        return {
            'classes': predictions,
            'probabilities': probs
        }

    print(f'Exporting model for TF Serving in {output_path}')
    tf.saved_model.save(model, export_dir=output_path, signatures=serving)
```

where e.g.

```python
from transformers import AutoTokenizer, TFAlbertForSequenceClassification

model = TFAlbertForSequenceClassification.from_pretrained('albert-base-v2', num_labels=num_classes)
tokenizer = AutoTokenizer.from_pretrained('albert-base-v2')
```

The problem is that the tokenization step results in

```

AssertionError: text input must of type `str` (single example), `List[str]` (batch or single pretokenized example) or `List[List[str]]` (batch of pretokenized examples).

```

Clearly it wants plain Python strings, not TensorFlow tensors.

Would appreciate any help, workarounds, or ideally of course, this to be supported.

-----

Running:

transformers==3.4.0

tensorflow==2.3.0 | 11-12-2020 14:29:01 | 11-12-2020 14:29:01 | I believe @jplu has already used TF Serving. Do you know if it's possible to include tokenization in it?<|||||>Hello!

Unfortunately it is currently not possible to integrate our tokenizer directly inside a model due to some TensorFlow limitations. Nevertheless, there might be a solution by trying to create your own Tokenization layer such as the one the TF team is [working on](https://www.tensorflow.org/api_docs/python/tf/keras/layers/experimental/preprocessing/TextVectorization).<|||||>Thanks for response and for the link.

Ya, it's a shame that there is still no way to use plain python in the signature.

I'll likely just find a different workaround, e.g. converting to PyTorch and serving with TorchServe.

I'll close this for now.<|||||>I found a working solution that doesn't require any changes to TensorFlow or Transformers.

I'm commenting because I came across this while trying to do something similar. I actually think the issue here is not TensorFlow but the type checking in the transformers tokenizer call, which doesn't accept TensorFlow objects.

I made the following implementation, which appears to work and doesn't depend on anything that TensorFlow limitations would block:

```python

import tensorflow as tf
import transformers


# NOTE: the specific model here will need to be overwritten because AutoModel doesn't work
class CustomModel(transformers.TFDistilBertForSequenceClassification):
    def call_tokenizer(self, input):
        # Caveat: when called from `serving`, `input` is a symbolic tensor, so str(input)
        # is tokenized once at trace time instead of on each request's text
        if type(input) == list:
            return self.tokenizer([str(x) for x in input], return_tensors='tf')
        else:
            return self.tokenizer(str(input), return_tensors='tf')

    @tf.function(input_signature=[tf.TensorSpec(shape=(1, ), dtype=tf.string)])
    def serving(self, content: str):
        batch = self.call_tokenizer(content)
        batch = dict(batch)
        batch = [batch]
        output = self.call(batch)
        return self.serving_output(output)


# `model_path` (defined elsewhere) points to a PyTorch checkpoint, hence from_pt=True
tokenizer = transformers.AutoTokenizer.from_pretrained(
    model_path,
    use_fast=True
)
config = transformers.AutoConfig.from_pretrained(
    model_path,
    num_labels=2,
    from_pt=True
)
model = CustomModel.from_pretrained(
    model_path,
    config=config,
    from_pt=True
)

model.tokenizer = tokenizer
model.id2label = config.id2label
model.save_pretrained("model", saved_model=True)

```<|||||>Hi @maxzzze

I was also working on including an HF tokenizer in a TF model. However, I found that inside `call_tokenizer`, the tokenizer always returns the same result, regardless of the text input passed in.

Have you also encountered this issue? I suspect `save_pretrained` isn't including the tokenizer properly.

|

transformers | 8,494 | closed | Error occurs when training Transformer-XL with DDP | My env is as below:

- `transformers` version: 3.4.0

- Platform: Ubuntu-18.04

- Python version: 3.6.9

- PyTorch version (GPU?): 1.6.0+cu101 (False)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

I am training Transformer-XL on one machine with multiple GPUs using DDP.

My script is as below:

    python -m torch.distributed.launch --nproc_per_node 4 run_language_modeling.py --output_dir ${model_dir} \
        --tokenizer_name $data_dir/wordpiece-custom.json \
        --config_name $data_dir/$config_file \
        --train_data_files "$data_dir/train*.txt" \
        --eval_data_file $data_dir/valid.txt \
        --block_size=128 \
        --do_train \
        --do_eval \
        --per_device_train_batch_size 1 \
        --gradient_accumulation_steps 1 \
        --learning_rate 6e-4 \
        --weight_decay 0.01 \
        --adam_epsilon 1e-6 \
        --adam_beta1 0.9 \
        --adam_beta2 0.98 \
        --max_steps 500_000 \
        --warmup_steps 24_000 \
        --fp16 \
        --logging_dir ${model_dir}/tensorboard \
        --save_steps 5000 \
        --save_total_limit 20 \
        --seed 108 \
        --max_steps -1 \
        --num_train_epochs 20 \
        --dataloader_num_workers 0 \
        --overwrite_output_dir

And this error occurs:

[INFO|language_modeling.py:242] 2020-11-11 11:54:46,363 >> Loading features from cached file /opt/ml/input/data/training/kyzhan/huggingface/data/train40G/cached_lm_PreTrainedTokenizerFast_126_train3.txt [took 116.431 s]


main()

File "run_hf_train_lm_ti.py", line 338, in main

trainer.train(model_path=model_path)

File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 758, in train

tr_loss += self.training_step(model, inputs)

File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 1056, in training_step

loss = self.compute_loss(model, inputs)

File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 1082, in compute_loss

outputs = model(**inputs)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/distributed.py", line 511, in forward

output = self.module(*inputs[0], **kwargs[0])

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_transfo_xl.py", line 1056, in forward

return_dict=return_dict,

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_transfo_xl.py", line 888, in forward

word_emb = self.word_emb(input_ids)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_transfo_xl.py", line 448, in forward

emb_flat.index_copy(0, indices_i, emb_i)

RuntimeError: Expected object of scalar type Float but got scalar type Half for argument #4 'source' in call to th_index_copy

@TevenLeScao | 11-12-2020 12:16:58 | 11-12-2020 12:16:58 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
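The fp16 error aside, the command above passes `--max_steps` twice: first `500_000`, then `-1`. With argparse's default `store` action, the last occurrence wins. Training would then be bounded by `--num_train_epochs 20` and not by 500k steps. Below is a minimal sketch with a plain `argparse` parser. It assumes the script's parser behaves the same way, which holds if it is `HfArgumentParser`, a subclass of `argparse.ArgumentParser`. Only the two relevant options are declared, and their defaults are illustrative.

```python
import argparse

# Stand-in parser declaring only the two options that matter here
parser = argparse.ArgumentParser()
parser.add_argument("--max_steps", type=int, default=-1)
parser.add_argument("--num_train_epochs", type=float, default=3.0)

# Same order as in the command above: --max_steps appears twice
args = parser.parse_args(
    ["--max_steps", "500_000", "--num_train_epochs", "20", "--max_steps", "-1"]
)
print(args.max_steps, args.num_train_epochs)  # -1 20.0
```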

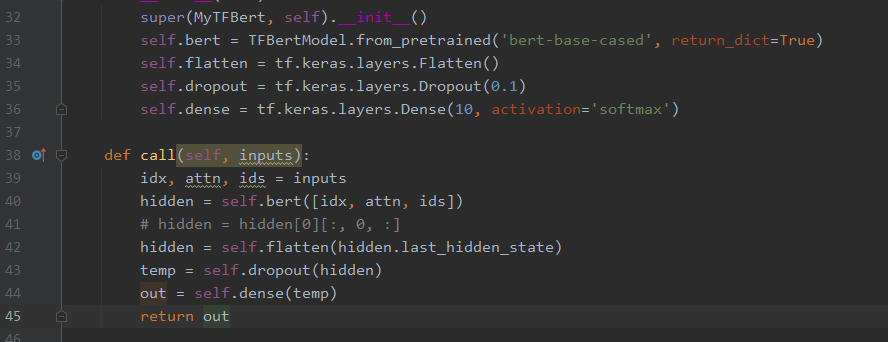

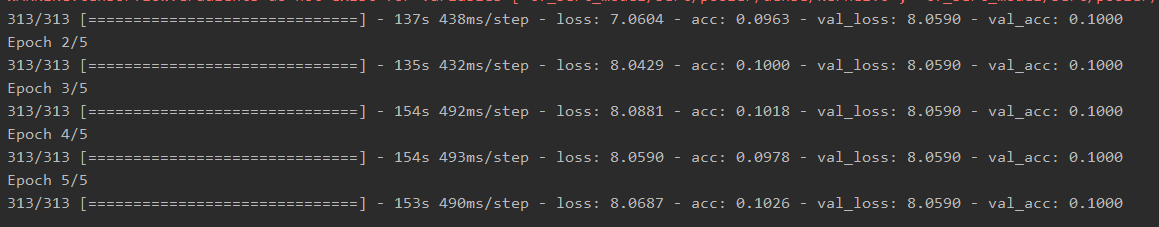

transformers | 8,493 | closed | I encounter zero gradient descent | I want to use transformers for text classification. I want to write the code myself rather than use `TFBertForSequenceClassification`, so I wrote the model with `TFBertModel` and `tf.keras.layers.Dense`, but there is no gradient descent in my code. I tried to find what's wrong with my code but couldn't, so I'm submitting this issue to ask for help.

My code is here:

Model:

I know the training data is the same as the test data; this is just for quick debugging.

And when I train this model:

| 11-12-2020 11:29:45 | 11-12-2020 11:29:45 | Hi @Sniper970119 do you mind posting this on the forum rather? It's here: https://discuss.huggingface.co

We are trying to focus the issues on bug reports and features/model requests.

Thanks a lot.<|||||>OK, I just posted it on the forum. Thanks for your reply. |

transformers | 8,492 | closed | Rework some TF tests | # What does this PR do?

Rework some TF tests to make them compliant with dict returns, and simplify some of them. | 11-12-2020 11:15:28 | 11-12-2020 11:15:28 | |

transformers | 8,491 | closed | Fix check scripts for Windows | # What does this PR do?

The current check-X scripts read and write files using `os.linesep` as the newline separator. On Windows, this makes the overwritten files use CRLF instead of LF (the same logic would apply to CR on classic Mac OS, although `os.linesep` is LF on modern macOS). With this change, Python always uses LF when reading and writing these files. | 11-12-2020 10:47:05 | 11-12-2020 10:47:05 | It doesn't make any change on Linux, and you've tested it on Windows. Could we get someone using MacOS to double-check it doesn't break anything for them before merging?<|||||>I think @LysandreJik is on MacOS?<|||||>I'm actually between Manjaro and EndeavourOS, but I'll check on a Mac. |
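The following minimal sketch shows the approach the PR describes. Passing `newline="\n"` to `open()` means Python splits lines on LF only when reading, without translating anything, and writes `"\n"` as-is instead of converting it to `os.linesep`. `rewrite_with_lf` and its `transform` callback are hypothetical. The actual check scripts are not reproduced here.

```python
def rewrite_with_lf(path, transform):
    """Rewrite a text file through `transform`, always writing LF line endings."""
    # newline="\n" on read: lines are split on LF only and nothing is translated
    with open(path, "r", encoding="utf-8", newline="\n") as f:
        lines = f.readlines()
    new_lines = transform(lines)
    # newline="\n" on write: "\n" is written as-is instead of becoming os.linesep
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        f.writelines(new_lines)
```

On Linux this changes nothing, which matches the review comment above. The difference only appears where `os.linesep` is not `"\n"`.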

transformers | 8,490 | closed | New TF loading weights | # What does this PR do?

This PR improves the way we load the TensorFlow weights. Previously, we had to go through the instantiated model and the checkpoint twice:

- once for loading the weights from the checkpoints into the instantiated model

- once for computing the missing and unexpected keys

Now both are done in a single pass, which makes loading faster. | 11-12-2020 10:23:37 | 11-12-2020 10:23:37 | I have added a lot of comments to the method to make it clearer, and I removed a small piece of code left over from when I was also updating to the new names at the same time. @LysandreJik @sgugger it should be easier to understand now.<|||||>It's a lot clearer, thanks. There are still unaddressed comments, however, and I can't comment on line 259, but it should be removed now (since the dict is created two lines below).<|||||>What is missing now?<|||||>There's Lysandre's comment at line 283, and mine about the loop at line 277. As I said in my previous comments, doing the two functions in one is great; I just don't get the added complexity of the new `model_layers_name_value` variable when we could stick to the previous loop in the function `load_tf_weights` while adding the behavior of `detect_tf_missing_unexpected_layers`.

The comments are a great addition, thanks a lot for adding those!<|||||>I have addressed Lysandre's comment at line 283 and yours about the loop at line 277. Do you see anything else?<|||||>The typos should be fixed now. Sorry for that.<|||||>Good to merge for me too! |
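The idea of doing both jobs in one traversal can be sketched with plain dictionaries standing in for the model's layers and the checkpoint. This simplification is only an illustration and is not the PR's code, which works on Keras layers and an HDF5 file. `load_and_report` is a hypothetical name.

```python
def load_and_report(model_weights, checkpoint):
    """Copy matching checkpoint values into `model_weights` and report mismatched names.

    model_weights: dict mapping weight name -> value (updated in place)
    checkpoint: dict mapping weight name -> saved value
    """
    missing_keys = []
    # One loop over the model: each weight is either loaded or recorded as missing.
    for name in model_weights:
        if name in checkpoint:
            model_weights[name] = checkpoint[name]
        else:
            missing_keys.append(name)
    # Anything the checkpoint has that the model never asked for is unexpected.
    unexpected_keys = sorted(set(checkpoint) - set(model_weights))
    return missing_keys, unexpected_keys
```

Reassigning values of existing keys while iterating over a dict is safe in Python, because the dict's size does not change.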

transformers | 8,489 | closed | Fix typo in roberta-base-squad2-v2 model card | # What does this PR do?

Simply adding `-v2` for Haystack API model loading. Furthermore, I've changed `model` to `model_name_or_path` due to a breaking change in Haystack (https://github.com/deepset-ai/haystack/pull/510).

## Who can review?

Model Cards: @julien-c | 11-12-2020 10:15:46 | 11-12-2020 10:15:46 | |

transformers | 8,488 | closed | [WIP] T5v1.1 & MT5 | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to the it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSMT: @stas00

-->

| 11-12-2020 09:46:23 | 11-12-2020 09:46:23 | Maybe wrong model config for T5.1.1. For instance, T5.1.1.small should have num_layers=8 and num_heads=6.

See https://github.com/google-research/text-to-text-transfer-transformer/blob/master/t5/models/gin/models/t5.1.1.small.gin<|||||>> Maybe wrong model config for T5.1.1. For instance, T5.1.1.small should have num_layers=8 and num_heads=6.

>

> See https://github.com/google-research/text-to-text-transfer-transformer/blob/master/t5/models/gin/models/t5.1.1.small.gin

Thanks yeah, I implemented that.

The new model structure now matches Mesh TensorFlow T5 v1.1.

If you download the t5v1.1 `t5-small` checkpoint and replace the corresponding path in `check_t5_against_hf.py` you can see that the models are equal.

There is still quite some work to do: write more tests, lots of cleaning and better design, and check if mT5 works with it.<|||||>> If you download the t5v1.1 `t5-small` checkpoint and replace the corresponding path in `check_t5_against_hf.py` you can see that the models are equal.

Hi, `check_t5_against_hf.py` still fails if I use a longer input text instead of `Hello there`, like `Hello there. Let's put more words in more languages than I originally thought.`

<|||||>> > If you download the t5v1.1 `t5-small` checkpoint and replace the corresponding path in `check_t5_against_hf.py` you can see that the models are equal.

>

> Hi, `check_t5_against_hf.py` still fails if I use a longer input text instead of `Hello there`, like `Hello there. Let's put more words in more languages than I originally thought.`

Hmm, it works for me - do you experience that for T5v1.1 or mT5?<|||||>> > > If you download the t5v1.1 `t5-small` checkpoint and replace the corresponding path in `check_t5_against_hf.py` you can see that the models are equal.

> >

> >

> > Hi, `check_t5_against_hf.py` still fails if I use a longer input text instead of `Hello there`, like `Hello there. Let's put more words in more languages than I originally thought.`

>

> Hmm, it works for me - do you experience that for T5v1.1 or mT5?

Aha, the check passes now. Yesterday I made a mistake: when I changed the test input sentence in the check script, I didn't update the MTF model's input length from 4 to a longer value such as 128. So the MTF model and the PyTorch model actually received different inputs, and of course produced different results.

Also, if I finally add the z-loss to the CE loss, the result differs from the MTF score again. I just found that MTF ignores the z-loss when not training ([code](https://github.com/tensorflow/mesh/blob/4f82ba1275e4c335348019fee7974d11ac0c9649/mesh_tensorflow/transformer/transformer.py#L781)). So I think the MTF model score does not include the z-loss, but its training does, and the z-loss is absent from HF T5 training. Anyway, this is definitely not a blocking issue now.

Appreciate your great work :) <|||||>closing in favor of https://github.com/huggingface/transformers/pull/8552. |
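The z-loss mentioned above is an auxiliary penalty on the softmax normalizer that is added to the cross-entropy loss. Below is a minimal pure-Python sketch for a single target token, assuming a Mesh TensorFlow-style formulation `z_loss * log(Z)**2` with a coefficient of 1e-4 (treat both as assumptions, not values taken from this thread). The helper names are hypothetical, and the `training` flag only mimics the evaluation behavior described in the comment.

```python
import math


def logsumexp(logits):
    # Numerically stable log(sum(exp(x))), i.e. log(Z) of the softmax.
    m = max(logits)
    return m + math.log(sum(math.exp(x - m) for x in logits))


def cross_entropy_with_z_loss(logits, target, z_loss=1e-4, training=True):
    """Return (total_loss, ce_loss, z_term) for one target index.

    Hypothetical helper: the z-term penalizes log(Z)**2. When training is
    False the z-term is skipped, mirroring the MTF evaluation behavior
    described above.
    """
    log_z = logsumexp(logits)
    ce = log_z - logits[target]  # equals -log(softmax(logits)[target])
    z_term = z_loss * log_z ** 2 if training else 0.0
    return ce + z_term, ce, z_term


total, ce, z = cross_entropy_with_z_loss([2.0, 1.0, 0.1], target=0)
print(f"ce={ce:.4f} z_term={z:.6f} total={total:.4f}")
```

Because the z-term is non-negative, a score that includes it is always slightly higher than the plain cross-entropy, which is consistent with the mismatch reported above.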

transformers | 8,487 | closed | `log_history` does not contain metrics anymore | Since version 3.5.0 the `log_history` of the trainer does not contain the metrics anymore. Version 3.4.0 works...

My trainer uses a `compute_metrics` callback and evaluates after each epoch. In version 3.4.0, after training, I extract the last epoch's results with `trainer.state.log_history[-1]` to log the metrics.

In version 3.5.0 the dict only contains the loss and epoch number, but not the computed metrics.

I think something was changed that broke the metric logging. I cannot provide example code, sorry...

| 11-12-2020 09:28:09 | 11-12-2020 09:28:09 | `evaluate` calls `log` which appends the results to `log_history`. So the code is there. Without a reproducer to investigate, there is nothing we can do to help.<|||||>Here is the demo code that shows the bug: https://colab.research.google.com/drive/1dEzkDoMampL-VVrQeO924HQmHXffya0Z?usp=sharing

The last line should print all metrics and does that with version 3.4.0 but not with 3.5.0

Output of 3.4.0 (which is correct):

```

{'eval_loss': 0.5401068925857544, 'eval_f1_OTHER': 0.8642232403165347, 'eval_f1_OFFENSE': 0.6730190571715146, 'eval_recall_OTHER': 0.9230427046263345, 'eval_recall_OFFENSE': 0.5834782608695652, 'eval_acc': 0.8081224249558564, 'eval_bac': 0.7532604827479499, 'eval_mcc': 0.5547059570919702, 'eval_f1_macro': 0.7686211487440247, 'epoch': 2.0, 'total_flos': 668448673730400, 'step': 628}

```

Bug in 3.5.0:

```

{'total_flos': 668448673730400, 'epoch': 2.0, 'step': 628}

```<|||||>Ah, it's not a bug. In 3.5.0 there is one final log entry for the `total_flos` (instead of logging them during training, as they are only useful at the end). So you can still access all your metrics, but in the second-to-last entry (`trainer.state.log_history[-2]`).<|||||>Hi @sgugger, OK, thanks for the info.

It might not be a bug, but honestly, this logging "API" is very fragile. What happens if I use `[-2]` now and, in the next release, the final `total_flos` log entry is moved to another list? Then I would get the results of the second-to-last epoch instead of the last one.

IMO this logging "API" needs a cleaner, better redesign. Or am I just using it the wrong way?<|||||>There are plenty of things that could log more info: a callback, or some other tweak in training performed at the end. IMO you shouldn't rely on a hard-coded index, but loop backwards from the end of `log_history` until you find a dict with the metric values.<|||||>Ok. Closing this. |
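Following the suggestion above, here is a minimal sketch that scans `trainer.state.log_history` (a list of dicts) from the end and returns the most recent entry containing evaluation metrics. Using `eval_loss` as the marker key is an assumption based on the 3.4.0 output shown above, and the helper name is hypothetical.

```python
from typing import Any, Dict, List, Optional


def last_eval_metrics(
    log_history: List[Dict[str, Any]], marker_key: str = "eval_loss"
) -> Optional[Dict[str, Any]]:
    """Return the latest log entry that contains `marker_key`, or None."""
    # Walk backwards so extra trailing entries (e.g. a final total_flos log)
    # are skipped without relying on a hard-coded index such as [-2].
    for entry in reversed(log_history):
        if marker_key in entry:
            return entry
    return None


# A history shaped like the 3.5.0 output in this issue (metrics trimmed):
history = [
    {"eval_loss": 0.5401068925857544, "eval_acc": 0.8081224249558564, "epoch": 2.0, "step": 628},
    {"total_flos": 668448673730400, "epoch": 2.0, "step": 628},
]
print(last_eval_metrics(history))
```

In a training script this would be called as `last_eval_metrics(trainer.state.log_history)`.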

transformers | 8,486 | closed | Gradient accumulation averages over gradients | https://github.com/huggingface/transformers/blob/121c24efa4453e4e726b5f0b2cf7095b14b7e74e/src/transformers/trainer.py#L1118

So I have been looking at this for the past day and a half. Please explain this to me: gradient accumulation should accumulate the gradient, not average it, right? Doesn't that make this scaling plain wrong? Am I missing something? | 11-12-2020 08:28:47 | 11-12-2020 08:28:47 | Hi @MarktHart, do you mind posting this on the forum instead? It's here: https://discuss.huggingface.co

We are trying to focus the issues on bug reports and features/model requests.

Thanks a lot.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
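For context on the scaling question above: a common reason to divide each micro-batch loss by the number of accumulation steps is that, with a mean-reduced loss and equal-size micro-batches, the summed gradients then equal the gradient of the mean loss over the whole effective batch. The pure-Python sketch below checks this for a toy one-parameter model; the model, data, and helper names are hypothetical and not taken from `trainer.py`.

```python
def grad_mean_squared_error(w, xs, ys):
    # d/dw of mean((w * x - y) ** 2) over the given samples.
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, accumulation_steps):
    """Sum the gradients of (micro-batch loss / accumulation_steps)."""
    size = len(xs) // accumulation_steps  # assumes equal-size micro-batches
    total = 0.0
    for i in range(accumulation_steps):
        mx = xs[i * size:(i + 1) * size]
        my = ys[i * size:(i + 1) * size]
        # Dividing the loss by k divides its gradient by k as well.
        total += grad_mean_squared_error(w, mx, my) / accumulation_steps
    return total


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 3.0, 5.0, 9.0]
w = 0.5
full = grad_mean_squared_error(w, xs, ys)
accum = accumulated_grad(w, xs, ys, accumulation_steps=2)
print(full, accum)
```

Without the division by `accumulation_steps`, the accumulated gradient in this toy example would be -44.0 instead of -22.0, i.e. scaled by the number of steps, which behaves like a proportionally larger learning rate.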

transformers | 8,485 | closed | Prediction loop: work with batches of variable length (fixed per batch) | In the current form, the `prediction_loop` doesn't handle batches with samples of varying lengths (but fixed length per batch). This patch adds this capability.

This can save a lot of time during training and inference, where padding to the full length every time is a big sacrifice, given that self-attention scales as n**2 in the sequence length.

Disclaimer: This is a strictly personal contribution, not linked to my professional affiliation in any way.

@sgugger

https://github.com/huggingface/transformers/issues/8483

| 11-12-2020 05:49:02 | 11-12-2020 05:49:02 | Please feel free to modify this in any shape or form, of course.<|||||>This will obviously cause a regression for code expecting a NumPy output :/<|||||>I don't have much time, if any, to dedicate to this. If you're not interested in the idea, that's completely fine, I will close it.<|||||>I think @jplu is redesigning the TFTrainer. Maybe this should be reopened once that design has been merged into master?<|||||>This won't be compatible anymore because the redesign doesn't use custom loops.<|||||>Do you support different batch lengths in the new one? @jplu <|||||>It is not at the top of the list but yes, for sure, we plan to support it, including for training. |
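To illustrate the saving described in this PR, here is a minimal pure-Python sketch that pads each batch only to its own longest sequence (rather than to one global maximum) and compares a rough attention cost, assuming cost grows as `length ** 2` per sequence. The pad id of 0 and the helper names are assumptions for illustration.

```python
from typing import List


def pad_batch(seqs: List[List[int]], pad_id: int = 0) -> List[List[int]]:
    """Pad every sequence to the longest one in this batch only."""
    max_len = max(len(s) for s in seqs)
    return [s + [pad_id] * (max_len - len(s)) for s in seqs]


def attention_cost(batch: List[List[int]]) -> int:
    # Rough proxy: self-attention work per sequence grows with length ** 2.
    return sum(len(s) ** 2 for s in batch)


batches = [[[5, 6], [7]], [[1, 2, 3, 4, 5, 6, 7, 8], [9, 10, 11]]]
global_max = max(len(s) for b in batches for s in b)

per_batch = sum(attention_cost(pad_batch(b)) for b in batches)
full_length = sum(
    attention_cost([s + [0] * (global_max - len(s)) for s in b]) for b in batches
)
print(per_batch, full_length)
```

The trade-off is that outputs from different batches no longer share one shape, which is exactly what breaks a single `np.append` over all batches.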

transformers | 8,484 | closed | automodel | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform:

- Python version:

- PyTorch version (GPU?):

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

tokenizers: @mfuntowicz

Trainer: @sgugger

Speed and Memory Benchmarks: @patrickvonplaten

Model Cards: @julien-c

TextGeneration: @TevenLeScao

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten @TevenLeScao

Blenderbot: @patrickvonplaten

Bart: @patrickvonplaten

Marian: @patrickvonplaten

Pegasus: @patrickvonplaten

mBART: @patrickvonplaten

T5: @patrickvonplaten

Longformer/Reformer: @patrickvonplaten

TransfoXL/XLNet: @TevenLeScao

RAG: @patrickvonplaten, @lhoestq

FSMT: @stas00

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

-->

## Information

Model I am using (Bert, XLNet ...):

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1.

2.

3.

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

| 11-12-2020 05:15:54 | 11-12-2020 05:15:54 | |

transformers | 8,483 | closed | transformers.TFTrainer: Does not support batches with sequences of variable lengths? | Hello,

It seems like `np.append` in `TFTrainer.prediction_loop` is the only thing that prevents TFTrainer from dealing with batches of variable sequence length (varying between batches, not within the batches themselves). Indeed, `np.append` requires the batches to have the same sequence length.

Alternatives: since this is TensorFlow, an easy alternative would be to convert the batches to `tf.RaggedTensor` with `tf.ragged.constant`, and to concatenate them (the usual way) with `tf.concat`.

You could also, of course, just make `preds` and `label_ids` lists. There doesn't seem to be any heavy computation on these objects.
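The list-based alternative suggested above can be sketched in pure Python: keep each batch's predictions in a list, then pad rows to a common length only once at the end. The pad value of -100 and the function name are assumptions for illustration, not what `TFTrainer.prediction_loop` does.

```python
from typing import List, Sequence


def concat_padded(
    batches: Sequence[List[List[float]]], pad_value: float = -100
) -> List[List[float]]:
    """Concatenate batches whose row length differs from batch to batch.

    Rows are right-padded with `pad_value` to the longest row in any batch.
    """
    max_len = max(len(row) for batch in batches for row in batch)
    out: List[List[float]] = []
    for batch in batches:
        for row in batch:
            out.append(list(row) + [pad_value] * (max_len - len(row)))
    return out


preds = []  # collected per batch instead of np.append-ing into one array
preds.append([[0.1, 0.2], [0.3, 0.4]])  # batch with sequence length 2
preds.append([[0.5, 0.6, 0.7]])  # batch with sequence length 3
print(concat_padded(preds))
```

Padding once at the end keeps the per-step work trivial; downstream metric code then has to ignore the pad value.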

| 11-12-2020 05:14:14 | 11-12-2020 05:14:14 | Created pull request https://github.com/huggingface/transformers/pull/8485<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Hello @JulesGM

Regarding [#8483](https://github.com/huggingface/transformers/pull/8485)

I went through the code and found this helpful, but I'm having trouble converting my training tf.data.Dataset to the tf.RaggedTensor format. If possible, could you share resources about this? |

transformers | 8,482 | closed | TAPAS tokenizer & tokenizer tests | This PR aims to implement the tokenizer API for the TAPAS model, as well as the tests. It is based on `tapas-style` which contains all the changes done by black & isort on top of the `nielsrogge/tapas_v3` branch in https://github.com/huggingface/transformers/pull/8113.

The API is akin to our other tokenizers': it is based on the `__call__` method which dispatches to `encode_plus` or `batch_encode_plus` according to the inputs.

These two methods then dispatch to `_encode_plus` and `_batch_encode_plus`, which themselves dispatch to `prepare_for_model` and `_batch_prepare_for_model`.
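The dispatch chain described above can be pictured with a toy class. This is a hypothetical sketch of the control flow only (single vs. batched queries), not the actual TAPAS tokenizer code: the real methods take many more arguments and work on `pd.DataFrame` tables, whereas here a table is a plain list of rows and the `is_batched` test is an assumption.

```python
from typing import List, Union


class ToyTableTokenizer:
    """Hypothetical stand-in mirroring the __call__ -> encode_plus -> ... chain."""

    def __call__(self, table: List[List[str]], queries: Union[str, List[str]]):
        is_batched = isinstance(queries, (list, tuple))
        if is_batched:
            return self.batch_encode_plus(table, queries)
        return self.encode_plus(table, queries)

    def encode_plus(self, table, query):
        return self._encode_plus(table, query)

    def batch_encode_plus(self, table, queries):
        return self._batch_encode_plus(table, queries)

    def _encode_plus(self, table, query):
        return self.prepare_for_model(table, query)

    def _batch_encode_plus(self, table, queries):
        return self._batch_prepare_for_model(table, queries)

    def prepare_for_model(self, table, query):
        # Toy "encoding": whitespace tokens of the query followed by the cells.
        cells = [cell for row in table for cell in row]
        return {"tokens": query.split() + cells}

    def _batch_prepare_for_model(self, table, queries):
        encoded = [self.prepare_for_model(table, q) for q in queries]
        return {"tokens": [e["tokens"] for e in encoded]}


table = [["Name", "Age"], ["Ann", "30"]]
tok = ToyTableTokenizer()
print(tok(table, "how old is Ann"))
print(tok(table, ["how old is Ann", "who is 30"]))
```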

Here are the remaining tasks for the tokenizers, from what I could observe:

- Two tokenizer tests are failing. This is only because no checkpoint is currently available.

- The truncation is *not* the same as it was before these changes. Before these changes, if a row of the dataframe had to be truncated, the whole row was removed. Right now, only the overflowing tokens are removed. This is probably an important difference, and the previous behavior will likely need to be restored (implemented in the new API).

- The tokenizer is based on `pd.DataFrame`s. It should be very simple to switch from these to `datasets.Dataset`, which serve the same purpose.
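The difference between the old and new truncation behavior can be sketched as follows: token-level truncation cuts overflowing tokens wherever they fall, while row-level truncation drops whole table rows from the end until the sequence fits. This pure-Python sketch assumes each row is already a list of tokens and that question tokens are never dropped; the function names are hypothetical.

```python
from typing import List


def truncate_tokens(question: List[str], rows: List[List[str]], max_length: int) -> List[str]:
    """New behavior described above: cut overflowing tokens, possibly mid-row."""
    flat = question + [tok for row in rows for tok in row]
    return flat[:max_length]


def drop_rows(question: List[str], rows: List[List[str]], max_length: int) -> List[str]:
    """Old behavior described above: remove whole rows from the end until it fits."""
    kept = list(rows)
    while kept and len(question) + sum(len(r) for r in kept) > max_length:
        kept.pop()  # drop the last remaining row entirely
    return question + [tok for row in kept for tok in row]


question = ["q1", "q2"]
rows = [["a", "b"], ["c", "d"], ["e", "f"]]
print(truncate_tokens(question, rows, max_length=5))  # keeps half of the 2nd row
print(drop_rows(question, rows, max_length=5))  # keeps only the 1st row
```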

Once this PR is merged, I'll open a PR from `tapas-style` to `nielsrogge/tapas_v3` as explained in https://github.com/huggingface/transformers/pull/8113#issuecomment-725818087 | 11-12-2020 04:10:49 | 11-12-2020 04:10:49 | Thank you!

❗ This is a preliminary review, I'm not finished with it. 2 important things for now:

1) I am also testing the Colab demo's with this branch. Currently I'm getting an error when providing `answer_coordinates` and `answer_texts` to the tokenizer:

SQA: https://colab.research.google.com/drive/1BNxrKkrwpWuE2TthZL5qQlERtcK4ZbIt?usp=sharing

WTQ: https://colab.research.google.com/drive/1K8ZeNQyBqo-A03D8RL8_j34n-Ubggb9U?usp=sharing

Normally, the `label_ids`, `numeric_values` and `numeric_values_scale` should also be padded when I set padding='max_length'.

2) I've got an updated version of the creation of the numeric values (they are currently not computed correctly) in a branch named `tapas_v3_up_to_date_with_master`. You could either incorporate these changes into your branch before making a PR, or I can make them after the PR is merged (whichever you prefer; the latter is probably easier). <|||||>Great, thanks for your great preliminary review. I've fixed a few of the issues and just pushed a commit. There are a few things you mention that definitely need a deeper look. I can do so in the coming days, but I'll let you finish your review first so that I can batch everything. Thank you!<|||||>@LysandreJik I have finished reviewing, I've added more (mostly documentation-related) comments.

The most important thing is that when `label_ids`, `answer_coordinates` and `answer_text` are provided to the tokenizer, an error is currently thrown because padding is not working.

Besides this, the other important things are:

* a correct implementation of the creation of the `prev_label_ids` when a batch of table-question pairs is provided

* a correct implementation of `drop_rows_to_fit` and `cell_trim_length` |
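The padding problem mentioned in the review (per-token fields such as `label_ids`, `numeric_values` and `numeric_values_scale` not being padded with `padding='max_length'`) can be illustrated with a pure-Python sketch that pads every per-token list in an encoding to the same length. The per-key pad values (0, NaN and 1.0) are assumptions for illustration, not values confirmed by this PR.

```python
import math
from typing import Any, Dict, List

# Assumed pad value per key; any key not listed is padded with 0.
PAD_VALUES: Dict[str, Any] = {
    "input_ids": 0,
    "attention_mask": 0,
    "label_ids": 0,
    "numeric_values": math.nan,
    "numeric_values_scale": 1.0,
}


def pad_to_max_length(encoding: Dict[str, List[Any]], max_length: int) -> Dict[str, List[Any]]:
    """Right-pad every per-token list so all fields share `max_length`."""
    padded = {}
    for key, values in encoding.items():
        if len(values) > max_length:
            raise ValueError(f"{key} is longer than max_length; truncate first")
        pad = PAD_VALUES.get(key, 0)
        padded[key] = list(values) + [pad] * (max_length - len(values))
    return padded


enc = {
    "input_ids": [1, 7, 8],
    "attention_mask": [1, 1, 1],
    "label_ids": [0, 1, 0],
    "numeric_values": [math.nan, 3.0, math.nan],
    "numeric_values_scale": [1.0, 1.0, 1.0],
}
out = pad_to_max_length(enc, max_length=5)
print(out["label_ids"], out["numeric_values_scale"])
```

Padding all per-token fields in one place keeps them aligned with `input_ids`, which is what batching them into tensors requires.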

transformers | 8,481 | closed | TAPAS Tokenizer & tokenizer tests | 11-12-2020 04:03:21 | 11-12-2020 04:03:21 | ||

transformers | 8,480 | closed | Error when upload models: "LFS: Client error" | I am using the most recent release to upload a model.

As the new instructions suggest, I am using git to upload my files:

```bash

$ git add --all

Encountered 1 file(s) that may not have been copied correctly on Windows:

pytorch_model.bin

$ git status

On branch main

Your branch is up to date with 'origin/main'.

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

new file: pytorch_model.bin

$ git commit -m 'update'

[main 820bb7e] update

1 file changed, 3 insertions(+)

create mode 100644 pytorch_model.bin

$ git push

Username for 'https://huggingface.co': danyaljj

Password for 'https://danyaljj@huggingface.co':

LFS: Client error: https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T015933Z&X-Amz-Expires=900&X-Amz-Signature=0115d52aa41e4a5e80315f03689f278c36f3f1d4961ee5544e8bb9b427d0ba7c&X-Amz-SignedHeaders=host

Uploading LFS objects: 0% (0/1), 33 KB | 169 KB/s, done.

error: failed to push some refs to 'https://huggingface.co/allenai/unifiedqa-t5-3b'

```
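One factor that may be worth checking (an assumption, not a diagnosis confirmed in this thread): Amazon S3 rejects a single `PUT` upload larger than 5 GB, and the verbose trace posted later in this issue reports a `Content-Length` of 11406640119 bytes for `pytorch_model.bin`, uploaded with the `basic` transfer adapter. A quick pure-Python check of a size against that limit might look like this; the helper names are hypothetical.

```python
import os

# Assumed limit: Amazon S3 accepts at most 5 GiB in a single PUT request.
S3_SINGLE_PUT_LIMIT = 5 * 1024 ** 3


def exceeds_single_put_limit(size_in_bytes: int, limit: int = S3_SINGLE_PUT_LIMIT) -> bool:
    """Return True if an upload of this size would need a multipart upload."""
    return size_in_bytes > limit


def check_file(path: str) -> bool:
    # os.path.getsize returns the file size in bytes.
    return exceeds_single_put_limit(os.path.getsize(path))


# Size reported for pytorch_model.bin in the verbose trace of this issue:
print(exceeds_single_put_limit(11406640119))
```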

FYI, here are my versions:

```bash

$ pip list | grep transformers

transformers 3.5.0

```

@julien-c | 11-12-2020 02:05:49 | 11-12-2020 02:05:49 | Since `git push` by itself is not so informative, I retried it with more verbose output (sorry for the long output):

```

$ GIT_TRACE=1 GIT_CURL_VERBOSE=1 git push

18:07:20.246678 git.c:440 trace: built-in: git push

18:07:20.247908 run-command.c:663 trace: run_command: GIT_DIR=.git git-remote-https origin https://huggingface.co/allenai/unifiedqa-t5-3b

* Couldn't find host huggingface.co in the .netrc file; using defaults

* Trying 192.99.39.165...

* TCP_NODELAY set

* Connected to huggingface.co (192.99.39.165) port 443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/cert.pem

CApath: none

* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384

* ALPN, server accepted to use http/1.1

* Server certificate:

* subject: CN=huggingface.co

* start date: Nov 10 08:05:46 2020 GMT

* expire date: Feb 8 08:05:46 2021 GMT

* subjectAltName: host "huggingface.co" matched cert's "huggingface.co"

* issuer: C=US; O=Let's Encrypt; CN=Let's Encrypt Authority X3

* SSL certificate verify ok.

> GET /allenai/unifiedqa-t5-3b/info/refs?service=git-receive-pack HTTP/1.1

Host: huggingface.co

User-Agent: git/2.23.0

Accept: */*

Accept-Encoding: deflate, gzip

Accept-Language: en-US, *;q=0.9

Pragma: no-cache

< HTTP/1.1 401 Unauthorized

< Server: nginx/1.14.2

< Date: Thu, 12 Nov 2020 02:07:21 GMT

< Content-Type: text/plain; charset=utf-8

< Content-Length: 12

< Connection: keep-alive

< X-Powered-By: huggingface-moon

< WWW-Authenticate: Basic realm="Authentication required", charset="UTF-8"

< ETag: W/"c-dAuDFQrdjS3hezqxDTNgW7AOlYk"

<

* Connection #0 to host huggingface.co left intact

18:07:20.859025 run-command.c:663 trace: run_command: 'git credential-osxkeychain get'

18:07:20.876285 git.c:703 trace: exec: git-credential-osxkeychain get

18:07:20.877309 run-command.c:663 trace: run_command: git-credential-osxkeychain get

* Found bundle for host huggingface.co: 0x7f89a65048d0 [can pipeline]

* Could pipeline, but not asked to!

* Re-using existing connection! (#0) with host huggingface.co

* Connected to huggingface.co (192.99.39.165) port 443 (#0)

* Server auth using Basic with user 'danyaljj'

> GET /allenai/unifiedqa-t5-3b/info/refs?service=git-receive-pack HTTP/1.1

Host: huggingface.co

Authorization: Basic * * * * *

User-Agent: git/2.23.0

Accept: */*

Accept-Encoding: deflate, gzip

Accept-Language: en-US, *;q=0.9

Pragma: no-cache

< HTTP/1.1 200 OK

< Server: nginx/1.14.2

< Date: Thu, 12 Nov 2020 02:07:21 GMT

< Content-Type: application/x-git-receive-pack-advertisement

< Transfer-Encoding: chunked

< Connection: keep-alive

< X-Powered-By: huggingface-moon

<

* Connection #0 to host huggingface.co left intact

18:07:21.159144 run-command.c:663 trace: run_command: 'git credential-osxkeychain store'

18:07:21.175886 git.c:703 trace: exec: git-credential-osxkeychain store

18:07:21.176867 run-command.c:663 trace: run_command: git-credential-osxkeychain store

18:07:21.240597 run-command.c:663 trace: run_command: .git/hooks/pre-push origin https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:21.258423 git.c:703 trace: exec: git-lfs pre-push origin https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:21.259618 run-command.c:663 trace: run_command: git-lfs pre-push origin https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:21.280328 trace git-lfs: exec: git 'version'

18:07:21.305769 trace git-lfs: exec: git '-c' 'filter.lfs.smudge=' '-c' 'filter.lfs.clean=' '-c' 'filter.lfs.process=' '-c' 'filter.lfs.required=false' 'rev-parse' 'HEAD' '--symbolic-full-name' 'HEAD'

18:07:21.330148 trace git-lfs: exec: git 'config' '-l'

18:07:21.341551 trace git-lfs: pre-push: refs/heads/main 820bb7e936e2e5665ea9c4ac3016456b3ce55bc7 refs/heads/main 4d2dae1e804fc041975dc40c06e3ab902b6c3f38

18:07:21.829857 trace git-lfs: tq: running as batched queue, batch size of 100

18:07:21.830328 trace git-lfs: run_command: git rev-list --stdin --objects --not --remotes=origin --

18:07:21.848139 trace git-lfs: tq: sending batch of size 1

18:07:21.848726 trace git-lfs: api: batch 1 files

18:07:21.848996 trace git-lfs: creds: git credential fill ("https", "huggingface.co", "")

18:07:21.859568 git.c:440 trace: built-in: git credential fill

18:07:21.861149 run-command.c:663 trace: run_command: 'git credential-osxkeychain get'

18:07:21.877936 git.c:703 trace: exec: git-credential-osxkeychain get

18:07:21.879004 run-command.c:663 trace: run_command: git-credential-osxkeychain get

18:07:21.920056 trace git-lfs: Filled credentials for https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:21.989068 trace git-lfs: HTTP: POST https://huggingface.co/allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch

> POST /allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch HTTP/1.1

> Host: huggingface.co

> Accept: application/vnd.git-lfs+json; charset=utf-8

> Authorization: Basic * * * * *

> Content-Length: 205

> Content-Type: application/vnd.git-lfs+json; charset=utf-8

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

{"operation":"upload","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119}],"transfers":["lfs-standalone-file","basic"],"ref":{"name":"refs/heads/main"}}18:07:23.102074 trace git-lfs: HTTP: 200

< HTTP/1.1 200 OK

< Content-Length: 578

< Connection: keep-alive

< Content-Type: application/vnd.git-lfs+json; charset=utf-8

< Date: Thu, 12 Nov 2020 02:07:23 GMT

< Etag: W/"242-LFg/omWZFm9SxeMWd5EiIfG1JTM"

< Server: nginx/1.14.2

< X-Powered-By: huggingface-moon

<

18:07:23.102239 trace git-lfs: creds: git credential approve ("https", "huggingface.co", "")

18:07:23.112995 git.c:440 trace: built-in: git credential approve

18:07:23.114213 run-command.c:663 trace: run_command: 'git credential-osxkeychain store'

18:07:23.129607 git.c:703 trace: exec: git-credential-osxkeychain store

18:07:23.130582 run-command.c:663 trace: run_command: git-credential-osxkeychain store

18:07:23.195094 trace git-lfs: HTTP: {"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020723Z&X-Amz-Expires=900&X-Amz-Signature=2d3c1762e44b21f78c89a7c5a5f41

{"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020723Z&X-Amz-Expires=900&X-Amz-Signature=2d3c1762e44b21f78c89a7c5a5f4118:07:23.195387 trace git-lfs: HTTP: 655333c901abeb466effd6f1d61bd110f6a&X-Amz-SignedHeaders=host"}}}]}

655333c901abeb466effd6f1d61bd110f6a&X-Amz-SignedHeaders=host"}}}]}Uploading LFS objects: 0% (0/1), 0 B | 0 B/s 18:07:23.195588 trace git-lfs: tq: starting transfer adapter "basic"

18:07:23.195998 trace git-lfs: xfer: adapter "basic" Begin() with 8 workers

18:07:23.196062 trace git-lfs: xfer: adapter "basic" started

18:07:23.196099 trace git-lfs: xfer: adapter "basic" worker 2 starting

18:07:23.196118 trace git-lfs: xfer: adapter "basic" worker 0 starting

18:07:23.196169 trace git-lfs: xfer: adapter "basic" worker 2 waiting for Auth

18:07:23.196185 trace git-lfs: xfer: adapter "basic" worker 1 starting

18:07:23.196151 trace git-lfs: xfer: adapter "basic" worker 4 starting

18:07:23.196216 trace git-lfs: xfer: adapter "basic" worker 5 starting

18:07:23.196257 trace git-lfs: xfer: adapter "basic" worker 5 waiting for Auth

18:07:23.196248 trace git-lfs: xfer: adapter "basic" worker 4 waiting for Auth

18:07:23.196288 trace git-lfs: xfer: adapter "basic" worker 0 processing job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:23.196293 trace git-lfs: xfer: adapter "basic" worker 1 waiting for Auth

18:07:23.196255 trace git-lfs: xfer: adapter "basic" worker 3 starting

18:07:23.196290 trace git-lfs: xfer: adapter "basic" worker 6 starting

18:07:23.196423 trace git-lfs: xfer: adapter "basic" worker 6 waiting for Auth

18:07:23.196380 trace git-lfs: xfer: adapter "basic" worker 7 starting

18:07:23.196458 trace git-lfs: xfer: adapter "basic" worker 7 waiting for Auth

18:07:23.196420 trace git-lfs: xfer: adapter "basic" worker 3 waiting for Auth

18:07:23.261193 trace git-lfs: HTTP: PUT https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386

> PUT /lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020723Z&X-Amz-Expires=900&X-Amz-Signature=2d3c1762e44b21f78c89a7c5a5f41655333c901abeb466effd6f1d61bd110f6a&X-Amz-SignedHeaders=host HTTP/1.1

> Host: s3.amazonaws.com

> Content-Length: 11406640119

> Content-Type: application/zip

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

18:07:23.499247 trace git-lfs: xfer: adapter "basic" worker 4 auth signal received

18:07:23.499298 trace git-lfs: xfer: adapter "basic" worker 5 auth signal received

18:07:23.499281 trace git-lfs: xfer: adapter "basic" worker 2 auth signal received

18:07:23.499315 trace git-lfs: xfer: adapter "basic" worker 6 auth signal received

18:07:23.499324 trace git-lfs: xfer: adapter "basic" worker 7 auth signal received

18:07:23.499353 trace git-lfs: xfer: adapter "basic" worker 1 auth signal received

18:07:23.499412 trace git-lfs: xfer: adapter "basic" worker 3 auth signal received

18:07:34.596626 trace git-lfs: xfer: adapter "basic" worker 0 finished job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:34.596706 trace git-lfs: tq: retrying object 7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386: LFS: Put https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020723Z&X-Amz-Expires=900&X-Amz-Signature=2d3c1762e44b21f78c89a7c5a5f41655333c901abeb466effd6f1d61bd110f6a&X-Amz-SignedHeaders=host: write tcp 192.168.0.6:57346->52.216.242.102:443: write: broken pipe

18:07:34.596761 trace git-lfs: tq: enqueue retry #1 for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386" (size: 11406640119)

18:07:34.596823 trace git-lfs: tq: sending batch of size 1

18:07:34.596995 trace git-lfs: api: batch 1 files

18:07:34.597180 trace git-lfs: creds: git credential cache ("https", "huggingface.co", "")

18:07:34.597193 trace git-lfs: Filled credentials for https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:34.597208 trace git-lfs: HTTP: POST https://huggingface.co/allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch

> POST /allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch HTTP/1.1

> Host: huggingface.co

> Accept: application/vnd.git-lfs+json; charset=utf-8

> Authorization: Basic * * * * *

> Content-Length: 205

> Content-Type: application/vnd.git-lfs+json; charset=utf-8

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

{"operation":"upload","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119}],"transfers":["lfs-standalone-file","basic"],"ref":{"name":"refs/heads/main"}}18:07:34.925848 trace git-lfs: HTTP: 200

< HTTP/1.1 200 OK

< Content-Length: 578

< Connection: keep-alive

< Content-Type: application/vnd.git-lfs+json; charset=utf-8

< Date: Thu, 12 Nov 2020 02:07:35 GMT

< Etag: W/"242-5zNHypYie/0vI3rttL7+btltlmQ"

< Server: nginx/1.14.2

< X-Powered-By: huggingface-moon

<

18:07:34.926039 trace git-lfs: HTTP: {"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020735Z&X-Amz-Expires=900&X-Amz-Signature=602be765be6206f6363a93f156b88

{"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020735Z&X-Amz-Expires=900&X-Amz-Signature=602be765be6206f6363a93f156b8818:07:34.926220 trace git-lfs: HTTP: 37884d95b5a8f27d14e254dd7108b845cb7&X-Amz-SignedHeaders=host"}}}]}

37884d95b5a8f27d14e254dd7108b845cb7&X-Amz-SignedHeaders=host"}}}]}Uploading LFS objects: 0% (0/1), 9.7 MB | 742 KB/s 18:07:34.926411 trace git-lfs: xfer: adapter "basic" worker 4 processing job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:34.926793 trace git-lfs: HTTP: PUT https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386

> PUT /lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020735Z&X-Amz-Expires=900&X-Amz-Signature=602be765be6206f6363a93f156b8837884d95b5a8f27d14e254dd7108b845cb7&X-Amz-SignedHeaders=host HTTP/1.1

> Host: s3.amazonaws.com

> Content-Length: 11406640119

> Content-Type: application/zip

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

18:07:44.835908 trace git-lfs: HTTP: 400 | 752 KB/s

< HTTP/1.1 400 Bad Request

< Connection: close

< Transfer-Encoding: chunked

< Content-Type: application/xml

< Date: Thu, 12 Nov 2020 02:07:44 GMT

< Server: AmazonS3

< X-Amz-Id-2: mDWWLDn2SM2srJXwqsVkEIAue+9F8wnupyuGkTAD4lcLKmDSSBa75zgKY7NXUC0X7QEMVwmPSVk=

< X-Amz-Request-Id: B971E21D6254F404

<

18:07:44.836114 trace git-lfs: xfer: adapter "basic" worker 4 finished job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:44.836157 trace git-lfs: tq: retrying object 7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386: LFS: Client error: https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020735Z&X-Amz-Expires=900&X-Amz-Signature=602be765be6206f6363a93f156b8837884d95b5a8f27d14e254dd7108b845cb7&X-Amz-SignedHeaders=host

18:07:44.836199 trace git-lfs: tq: enqueue retry #2 for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386" (size: 11406640119)
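Every attempt in this trace is the same single `PUT` of an object whose `Content-Length` is 11406640119 bytes. Each one ends in `400 Bad Request`, and git-lfs then retries. The trace does not include the XML response body, so the exact S3 error code is not visible here. One plausible cause, which is an assumption and not something the log confirms, is Amazon S3's documented 5 GB limit on single-operation PUT uploads (larger objects must be sent as a multipart upload). The following arithmetic check compares the two numbers. The limit constant is taken as 5 * 1024**3 bytes.

```python
# Object size, copied from the Content-Length header in the trace above.
object_size = 11_406_640_119

# Assumption: Amazon S3's maximum size for a single PUT upload,
# taken here as 5 GB = 5 * 1024**3 bytes. Larger objects need multipart upload.
S3_SINGLE_PUT_LIMIT = 5 * 1024**3

exceeds = object_size > S3_SINGLE_PUT_LIMIT
ratio = object_size / S3_SINGLE_PUT_LIMIT

print(f"size={object_size} bytes, limit={S3_SINGLE_PUT_LIMIT} bytes")
print(f"exceeds single-PUT limit: {exceeds} ({ratio:.2f}x the limit)")
```

If that assumption holds, retrying the same single PUT would fail in the same way every time, which matches the repeated 400 responses in the log.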

18:07:44.836238 trace git-lfs: tq: sending batch of size 1

18:07:44.836355 trace git-lfs: api: batch 1 files

18:07:44.836546 trace git-lfs: creds: git credential cache ("https", "huggingface.co", "")

18:07:44.836556 trace git-lfs: Filled credentials for https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:44.836585 trace git-lfs: HTTP: POST https://huggingface.co/allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch

> POST /allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch HTTP/1.1

> Host: huggingface.co

> Accept: application/vnd.git-lfs+json; charset=utf-8

> Authorization: Basic * * * * *

> Content-Length: 205

> Content-Type: application/vnd.git-lfs+json; charset=utf-8

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

{"operation":"upload","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119}],"transfers":["lfs-standalone-file","basic"],"ref":{"name":"refs/heads/main"}}

18:07:45.158001 trace git-lfs: HTTP: 200

< HTTP/1.1 200 OK

< Content-Length: 578

< Connection: keep-alive

< Content-Type: application/vnd.git-lfs+json; charset=utf-8

< Date: Thu, 12 Nov 2020 02:07:45 GMT

< Etag: W/"242-m4CvhzTDqQPlc75+BedrFERvkE0"

< Server: nginx/1.14.2

< X-Powered-By: huggingface-moon

<

18:07:45.158145 trace git-lfs: HTTP: {"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020745Z&X-Amz-Expires=900&X-Amz-Signature=82592319178cff3f2a02f404e3540

{"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020745Z&X-Amz-Expires=900&X-Amz-Signature=82592319178cff3f2a02f404e3540

18:07:45.158254 trace git-lfs: HTTP: 89a6ef22b79fe75e6f544e1786faf3e8f5e&X-Amz-SignedHeaders=host"}}}]}

89a6ef22b79fe75e6f544e1786faf3e8f5e&X-Amz-SignedHeaders=host"}}}]}

Uploading LFS objects: 0% (0/1), 9.7 MB | 752 KB/s

18:07:45.158419 trace git-lfs: xfer: adapter "basic" worker 5 processing job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:45.158794 trace git-lfs: HTTP: PUT https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386

> PUT /lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020745Z&X-Amz-Expires=900&X-Amz-Signature=82592319178cff3f2a02f404e354089a6ef22b79fe75e6f544e1786faf3e8f5e&X-Amz-SignedHeaders=host HTTP/1.1

> Host: s3.amazonaws.com

> Content-Length: 11406640119

> Content-Type: application/zip

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

18:07:55.959066 trace git-lfs: HTTP: 400

< HTTP/1.1 400 Bad Request

< Connection: close

< Transfer-Encoding: chunked

< Content-Type: application/xml

< Date: Thu, 12 Nov 2020 02:07:54 GMT

< Server: AmazonS3

< X-Amz-Id-2: YPk1ZSL19/lW1Z7WxE/pTAyDK0Ny2ryDVCi1TZXtuT8Bh6itRmL4qO163dKG+s9yBSl8jyKRD7Y=

< X-Amz-Request-Id: D0E50DEEB73DFA43

<

18:07:55.959368 trace git-lfs: xfer: adapter "basic" worker 5 finished job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:55.959409 trace git-lfs: tq: retrying object 7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386: LFS: Client error: https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020745Z&X-Amz-Expires=900&X-Amz-Signature=82592319178cff3f2a02f404e354089a6ef22b79fe75e6f544e1786faf3e8f5e&X-Amz-SignedHeaders=host

18:07:55.959458 trace git-lfs: tq: enqueue retry #3 for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386" (size: 11406640119)

18:07:55.959490 trace git-lfs: tq: sending batch of size 1

18:07:55.959582 trace git-lfs: api: batch 1 files

18:07:55.959750 trace git-lfs: creds: git credential cache ("https", "huggingface.co", "")

18:07:55.959768 trace git-lfs: Filled credentials for https://huggingface.co/allenai/unifiedqa-t5-3b

18:07:55.959786 trace git-lfs: HTTP: POST https://huggingface.co/allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch

> POST /allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch HTTP/1.1

> Host: huggingface.co

> Accept: application/vnd.git-lfs+json; charset=utf-8

> Authorization: Basic * * * * *

> Content-Length: 205

> Content-Type: application/vnd.git-lfs+json; charset=utf-8

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

{"operation":"upload","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119}],"transfers":["basic","lfs-standalone-file"],"ref":{"name":"refs/heads/main"}}

18:07:56.260024 trace git-lfs: HTTP: 200

< HTTP/1.1 200 OK

< Content-Length: 578

< Connection: keep-alive

< Content-Type: application/vnd.git-lfs+json; charset=utf-8

< Date: Thu, 12 Nov 2020 02:07:56 GMT

< Etag: W/"242-31cowPk91NvaIaX84tjI/gLbdvo"

< Server: nginx/1.14.2

< X-Powered-By: huggingface-moon

<

18:07:56.260224 trace git-lfs: HTTP: {"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020756Z&X-Amz-Expires=900&X-Amz-Signature=1c194a7990031e65288f0e7c59507

{"transfer":"basic","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119,"authenticated":true,"actions":{"upload":{"href":"https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020756Z&X-Amz-Expires=900&X-Amz-Signature=1c194a7990031e65288f0e7c59507

18:07:56.260428 trace git-lfs: HTTP: 8387ee098bd5b3f3708e02085e3e9f6601a&X-Amz-SignedHeaders=host"}}}]}

8387ee098bd5b3f3708e02085e3e9f6601a&X-Amz-SignedHeaders=host"}}}]}

Uploading LFS objects: 0% (0/1), 9.7 MB | 665 KB/s

18:07:56.260674 trace git-lfs: xfer: adapter "basic" worker 2 processing job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:07:56.261037 trace git-lfs: HTTP: PUT https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386

> PUT /lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020756Z&X-Amz-Expires=900&X-Amz-Signature=1c194a7990031e65288f0e7c595078387ee098bd5b3f3708e02085e3e9f6601a&X-Amz-SignedHeaders=host HTTP/1.1

> Host: s3.amazonaws.com

> Content-Length: 11406640119

> Content-Type: application/zip

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

18:08:07.099441 trace git-lfs: HTTP: 400

< HTTP/1.1 400 Bad Request

< Connection: close

< Transfer-Encoding: chunked

< Content-Type: application/xml

< Date: Thu, 12 Nov 2020 02:08:06 GMT

< Server: AmazonS3

< X-Amz-Id-2: e9blPqVAV5CVfFOylV29AzDODso+WNBEVIhJKKQc6NbEAMDeUCyJ5NKumhuM5P3i67O58fmm31g=

< X-Amz-Request-Id: DFED315EE7523BFE

<

18:08:07.099632 trace git-lfs: xfer: adapter "basic" worker 2 finished job for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386"

18:08:07.099659 trace git-lfs: tq: retrying object 7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386: LFS: Client error: https://s3.amazonaws.com/lfs.huggingface.co/allenai/unifiedqa-t5-3b/7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA4N7VTDGOZQA2IKWK%2F20201112%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20201112T020756Z&X-Amz-Expires=900&X-Amz-Signature=1c194a7990031e65288f0e7c595078387ee098bd5b3f3708e02085e3e9f6601a&X-Amz-SignedHeaders=host

18:08:07.099701 trace git-lfs: tq: enqueue retry #4 for "7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386" (size: 11406640119)

18:08:07.099742 trace git-lfs: tq: sending batch of size 1

18:08:07.099832 trace git-lfs: api: batch 1 files

18:08:07.099999 trace git-lfs: creds: git credential cache ("https", "huggingface.co", "")

18:08:07.100008 trace git-lfs: Filled credentials for https://huggingface.co/allenai/unifiedqa-t5-3b

18:08:07.100024 trace git-lfs: HTTP: POST https://huggingface.co/allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch

> POST /allenai/unifiedqa-t5-3b.git/info/lfs/objects/batch HTTP/1.1

> Host: huggingface.co

> Accept: application/vnd.git-lfs+json; charset=utf-8

> Authorization: Basic * * * * *

> Content-Length: 205

> Content-Type: application/vnd.git-lfs+json; charset=utf-8

> User-Agent: git-lfs/2.10.0 (GitHub; darwin amd64; go 1.13.6)

>

{"operation":"upload","objects":[{"oid":"7e295e01528dc6a361211884f82daac33c422089644d0f5b2ddb2d96166aa386","size":11406640119}],"transfers":["lfs-standalone-file","basic"],"ref":{"name":"refs/heads/main"}}

18:08:07.441913 trace git-lfs: HTTP: 200

< HTTP/1.1 200 OK

< Content-Length: 578

< Connection: keep-alive

< Content-Type: application/vnd.git-lfs+json; charset=utf-8

< Date: Thu, 12 Nov 2020 02:08:07 GMT