repo

stringclasses 1

value | number

int64 1

25.3k

| state

stringclasses 2

values | title

stringlengths 1

487

| body

stringlengths 0

234k

⌀ | created_at

stringlengths 19

19

| closed_at

stringlengths 19

19

| comments

stringlengths 0

293k

|

|---|---|---|---|---|---|---|---|

transformers | 2,291 | closed | Fix F841 flake8 warning | This PR completes the "fix all flake8 warnings" effort of the last few days.

There's a lot of judgment in the fixes here: when the result of an expression is assigned to a variable that isn't used:

- if the expression has no side effect, then it can safely be removed

- if the expression has side effects, then it must be kept and only the assignment to a variable must be removed

- or it may be a coding / refactoring mistake that results in a badly named variable

I'm not sure I made the right call in all cases, so I would appreciate a review.

E203, E501, W503 are still ignored because they're debatable, black disagrees with flake8, and black wins (by not being configurable). | 12-23-2019 21:42:39 | 12-23-2019 21:42:39 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=h1) Report

> Merging [#2291](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/072750f4dc4f586cb53f0face4b4a448bb0cdcac?src=pr&el=desc) will **decrease** coverage by `1.18%`.

> The diff coverage is `50%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2291 +/- ##

==========================================

- Coverage 74.45% 73.26% -1.19%

==========================================

Files 85 85

Lines 14608 14603 -5

==========================================

- Hits 10876 10699 -177

- Misses 3732 3904 +172

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [...c/transformers/modeling\_tf\_transfo\_xl\_utilities.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl90cmFuc2ZvX3hsX3V0aWxpdGllcy5weQ==) | `86.13% <ø> (+0.84%)` | :arrow_up: |

| [src/transformers/modeling\_albert.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19hbGJlcnQucHk=) | `78.86% <ø> (-0.18%)` | :arrow_down: |

| [src/transformers/data/metrics/\_\_init\_\_.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9kYXRhL21ldHJpY3MvX19pbml0X18ucHk=) | `27.9% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_pytorch\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9weXRvcmNoX3V0aWxzLnB5) | `8.72% <0%> (-81.28%)` | :arrow_down: |

| [src/transformers/modeling\_t5.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190NS5weQ==) | `81.05% <100%> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_t5.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl90NS5weQ==) | `96.54% <100%> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `93.12% <66.66%> (ø)` | :arrow_up: |

| [src/transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19yb2JlcnRhLnB5) | `54.1% <0%> (-10.15%)` | :arrow_down: |

| [src/transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ194bG5ldC5weQ==) | `71.19% <0%> (-2.32%)` | :arrow_down: |

| ... and [5 more](https://codecov.io/gh/huggingface/transformers/pull/2291/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=footer). Last update [072750f...3e0cf49](https://codecov.io/gh/huggingface/transformers/pull/2291?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

transformers | 2,290 | closed | duplicated line for repeating_words_penalty_for_language_generation | length_penalty has a duplicated wrong documentation for language generation -> delete two lines | 12-23-2019 20:51:17 | 12-23-2019 20:51:17 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=h1) Report

> Merging [#2290](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/aeef4823ab6099249679756182700e6800024c36?src=pr&el=desc) will **decrease** coverage by `<.01%`.

> The diff coverage is `0%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2290 +/- ##

==========================================

- Coverage 73.49% 73.48% -0.01%

==========================================

Files 87 87

Lines 14793 14794 +1

==========================================

Hits 10872 10872

- Misses 3921 3922 +1

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2290/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `63.34% <0%> (-0.12%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=footer). Last update [aeef482...0f6017b](https://codecov.io/gh/huggingface/transformers/pull/2290?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Yes, actually the doc is still not complete for this new feature.

We should add some examples and double-check all. Feel free to clean this up if you feel like it.<|||||>clean up documentation, add examples for documentation and rename some variables<|||||>checked example generation for openai-gpt, gpt2, xlnet and xlm in combination with #2289.

<|||||>Also checked for ctrl<|||||>also checked for transfo-xl<|||||>Awesome, merging! |

transformers | 2,289 | closed | fix bug in prepare inputs for language generation for xlm for effective batch_size > 1 | if multiple sentence are to be generated the masked tokens to be appended have to equal the effective batch size | 12-23-2019 20:46:49 | 12-23-2019 20:46:49 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=h1) Report

> Merging [#2289](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/81db12c3ba0c2067f43c4a63edf5e45f54161042?src=pr&el=desc) will **decrease** coverage by `0.01%`.

> The diff coverage is `0%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2289 +/- ##

==========================================

- Coverage 73.54% 73.52% -0.02%

==========================================

Files 87 87

Lines 14789 14792 +3

==========================================

Hits 10876 10876

- Misses 3913 3916 +3

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/modeling\_xlm.py](https://codecov.io/gh/huggingface/transformers/pull/2289/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ194bG0ucHk=) | `86.23% <0%> (-0.23%)` | :arrow_down: |

| [src/transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/2289/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ194bG5ldC5weQ==) | `73.26% <0%> (-0.25%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=footer). Last update [81db12c...f18ac4c](https://codecov.io/gh/huggingface/transformers/pull/2289?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Indeed, thanks @patrickvonplaten |

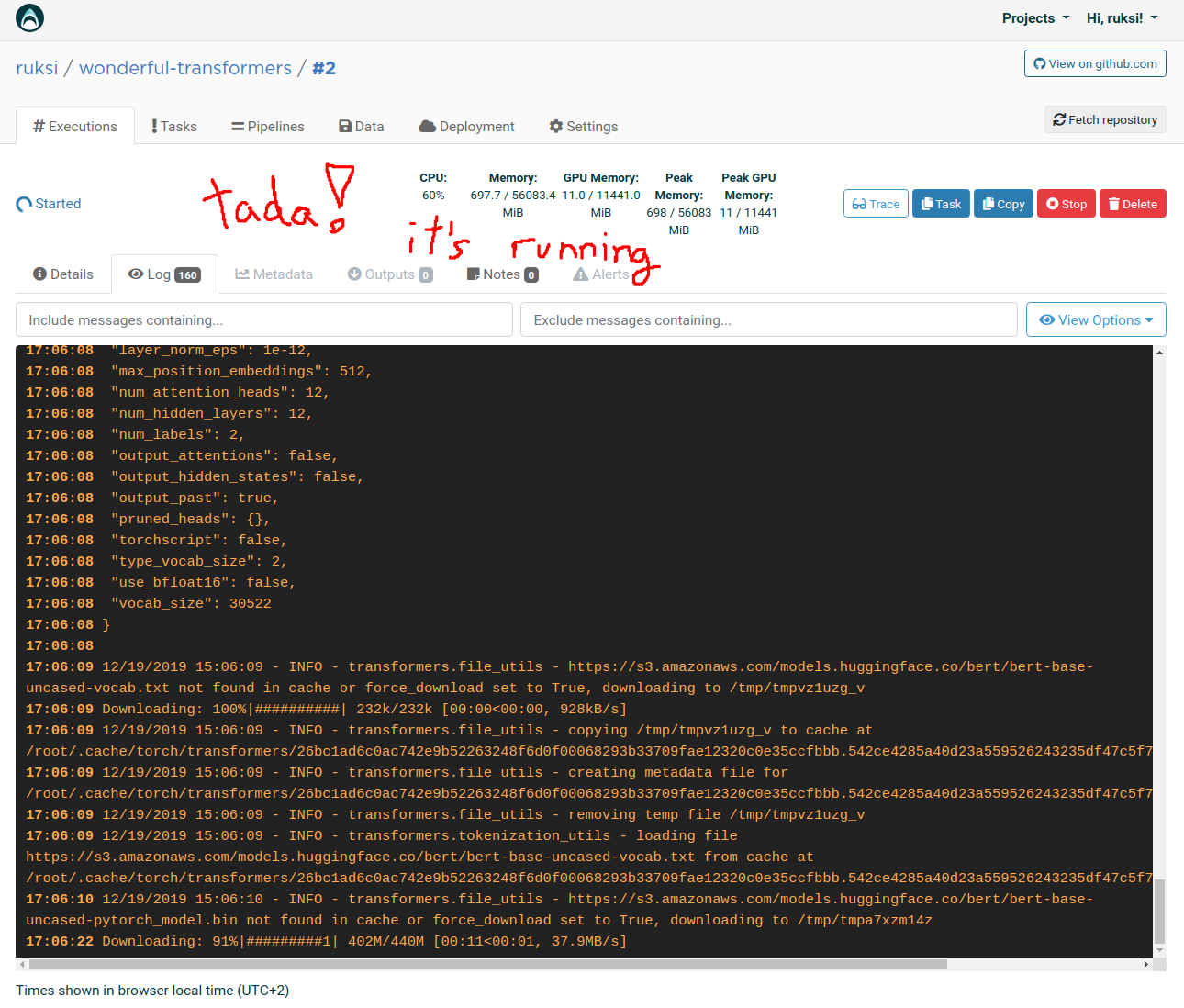

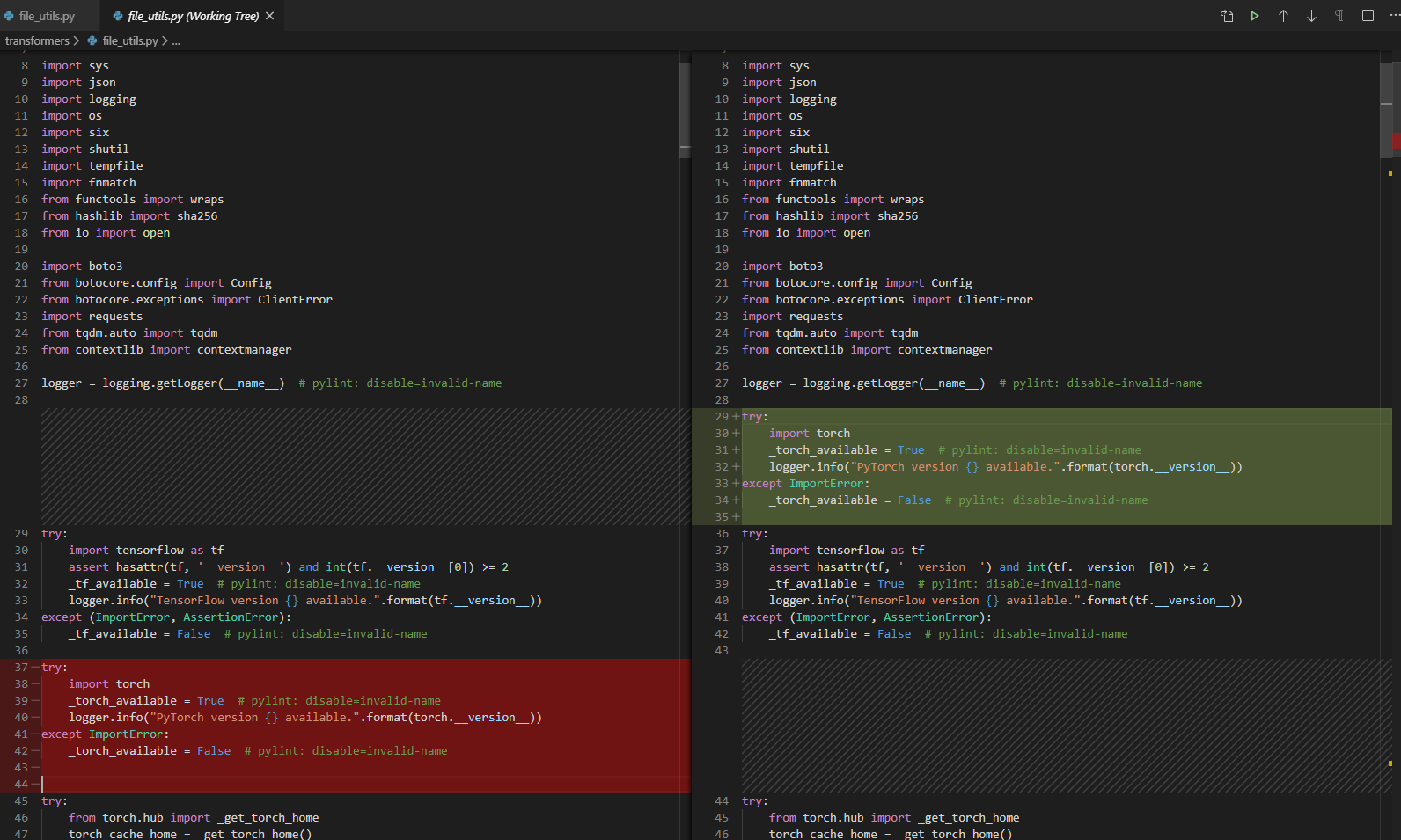

transformers | 2,288 | closed | Improve handling of optional imports | 12-23-2019 20:30:53 | 12-23-2019 20:30:53 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=h1) Report

> Merging [#2288](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/23dad8447c8db53682abc3c53d1b90f85d222e4b?src=pr&el=desc) will **increase** coverage by `0.2%`.

> The diff coverage is `100%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2288 +/- ##

=========================================

+ Coverage 74.27% 74.47% +0.2%

=========================================

Files 85 85

Lines 14610 14608 -2

=========================================

+ Hits 10851 10879 +28

+ Misses 3759 3729 -30

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/tokenization\_t5.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdDUucHk=) | `93.93% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `63.91% <ø> (ø)` | :arrow_up: |

| [src/transformers/commands/train.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb21tYW5kcy90cmFpbi5weQ==) | `0% <ø> (ø)` | :arrow_up: |

| [src/transformers/tokenization\_albert.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYWxiZXJ0LnB5) | `89.1% <ø> (ø)` | :arrow_up: |

| [src/transformers/pipelines.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9waXBlbGluZXMucHk=) | `68.31% <ø> (+2.32%)` | :arrow_up: |

| [src/transformers/data/processors/squad.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9kYXRhL3Byb2Nlc3NvcnMvc3F1YWQucHk=) | `29.37% <ø> (ø)` | :arrow_up: |

| [src/transformers/data/processors/utils.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9kYXRhL3Byb2Nlc3NvcnMvdXRpbHMucHk=) | `19.6% <ø> (ø)` | :arrow_up: |

| [src/transformers/tokenization\_xlm.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25feGxtLnB5) | `83.26% <ø> (ø)` | :arrow_up: |

| [src/transformers/data/metrics/\_\_init\_\_.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9kYXRhL21ldHJpY3MvX19pbml0X18ucHk=) | `27.9% <ø> (-3.21%)` | :arrow_down: |

| [src/transformers/tokenization\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25feGxuZXQucHk=) | `89.9% <ø> (ø)` | :arrow_up: |

| ... and [7 more](https://codecov.io/gh/huggingface/transformers/pull/2288/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=footer). Last update [23dad84...4621ad6](https://codecov.io/gh/huggingface/transformers/pull/2288?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

|

transformers | 2,287 | closed | Do Hugging Face GPT-2 Transformer Models Automatically Does the Absolute Position Embedding for Users? | Hello,

According to Hugging Face ```GPT2DoubleHeadsModel``` documentation (https://huggingface.co/transformers/model_doc/gpt2.html#gpt2doubleheadsmodel)

```

"Indices of input sequence tokens in the vocabulary.

GPT-2 is a model with absolute position embeddings"

```

So does this mean that, when we implement any Hugging Face GPT-2 Models (```GPT2DoubleHeadsModel```,```GPT2LMHeadsModel```, etc.) via the ```model( )``` statement, the 'absolute position embedding' is _automatically_ done for the user, so that the user actually does not need to specify anything in the ```model( )``` statement to ensure the absolute position embedding?

If the answer is 'yes', then why do we have an option of specifying ```position_ids``` in the ```model( )``` statement?

Thank you,

| 12-23-2019 18:42:50 | 12-23-2019 18:42:50 | Indeed, as you can see from the source code [here](https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_gpt2.py#L414-L417), when no position ids are passed, they are created as absolute position embeddings.

You could have trained a model with a GPT-2 architecture that was using an other type of position embeddings, in which case passing your specific embeddings would be necessary. I'm sure several other use-cases would make sure of specific position embeddings.<|||||>Ooohh, ok,

so to clarify, absolute position embedding _**is automatically done**_ by the ```model( )``` statement, but if we want to use our custom position embedding (i.e. other than the absolute position embedding), we can use the ```position_ids``` option inside the ```model( )``` statement......is what I said above correct?

Thank you,<|||||>Yes, that is correct!<|||||>Thank you :) ! |

transformers | 2,286 | closed | Typo in tokenization_utils.py | avoir -> avoid | 12-23-2019 17:15:01 | 12-23-2019 17:15:01 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=h1) Report

> Merging [#2286](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/23dad8447c8db53682abc3c53d1b90f85d222e4b?src=pr&el=desc) will **increase** coverage by `0.18%`.

> The diff coverage is `n/a`.

[](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2286 +/- ##

==========================================

+ Coverage 74.27% 74.45% +0.18%

==========================================

Files 85 85

Lines 14610 14610

==========================================

+ Hits 10851 10878 +27

+ Misses 3759 3732 -27

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2286/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `92.08% <ø> (+0.77%)` | :arrow_up: |

| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2286/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `93.12% <0%> (+1.58%)` | :arrow_up: |

| [src/transformers/pipelines.py](https://codecov.io/gh/huggingface/transformers/pull/2286/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9waXBlbGluZXMucHk=) | `68.31% <0%> (+2.32%)` | :arrow_up: |

| [src/transformers/modeling\_tf\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/2286/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9hdXRvLnB5) | `32.14% <0%> (+7.14%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=footer). Last update [23dad84...7cef764](https://codecov.io/gh/huggingface/transformers/pull/2286?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

transformers | 2,285 | closed | BertTokenizer custom UNK unexpected behavior | ## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....): Bert

Language I am using the model on (English, Chinese....): English

The problem arise when using:

* [ ] the official example scripts: (give details)

* [X] my own modified scripts: (give details)

Importing transformers to my own project but using BertTokenizer and BertModel with pretrained weights, using 'bert-base-multilingual-cased' for both tokenizer and model.

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [X] my own task or dataset: (give details)

Fine tuning BERT for English NER on a custom news dataset.

## To Reproduce

Steps to reproduce the behavior:

1. Initialize a tokenizer with custom UNK

2. Try to convert the custom UNK to ID

3. Receive None as

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

```

>>>tokenizer = BertTokenizer.from_pretrained('bert-base-multilingual-cased', do_lower_case=False, pad_token="<pad>", unk_token="<unk>")

>>> tokenizer.tokenize("<unk>")

['<unk>']

>>> tokenizer.convert_tokens_to_ids(["<unk>"])

[None]

```

## Expected behavior

My custom UNK should have an ID. (instead, I get None)

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS: Mac OSX

* Python version: 3.7.3

* PyTorch version: 1.1.0.post2

* PyTorch Transformers version (or branch): 2.1.1

* Using GPU ? No

* Distributed of parallel setup ? No

* Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

| 12-23-2019 15:12:29 | 12-23-2019 15:12:29 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I actually have the same problem with GPT-2 tokenizer. Is this the expected behavior? |

transformers | 2,284 | closed | [ALBERT]: Albert base model itself consuming 32 GB GPU memory.. | ## 🐛 Bug

<!-- Important information -->

Model I am using TFALBERT:

Language I am using the model on (English, Chinese....): English

The problem arise when using:

* [ ] the official example scripts:

`from transformers import TFAlbertForSequenceClassification`

`model = TFAlbertForSequenceClassification.from_pretrained('albert-base-v2')`

After this GPU memory is consumed almost 32 GB.... base V2 is model is roughly around 50 MB which is occupying 32 GB on GPU

## Environment

* OS: Linux

* Python version: 3.7

| 12-23-2019 14:48:14 | 12-23-2019 14:48:14 | i have similar situation.

https://github.com/dsindex/iclassifier#emb_classalbert

in the paper( https://arxiv.org/pdf/1909.11942.pdf ), ALBERT xlarge has just 60M parameters which is much less than BERT large(334M)'s.

but, we are unable to load albert-xlarge-v2 on 32G GPU memory.

(no problem on bert-large-uncased, bert-large-cased)<|||||>A similar situation happened to me too.

While fine-tuning Albert base on SQuAD 2.0, I had to lower the train batch size to manage to fit the model on 2x NVIDIA 1080 Ti, for a total of about 19 GB used.

I find it quite interesting and weird as the same time, as I managed to fine-tune BERT base on the same dataset and the same GPUs using less memory...<|||||>Same for the pytorch version of ALBERT, where my 8/11GB GPU could run BERT_base and RoBERTa.<|||||>Interesting, I started to hesitate on using this ALBERT implementation but hope it will be fixed soon.<|||||>Indeed, I can reproduce for the TensorFlow version. I'm looking into it, thanks for raising this issue.<|||||>@jonanem, if you do this at the beginning of your script, does it change the amount of memory used?

```py

gpus = tf.config.experimental.list_physical_devices('GPU')

tf.config.experimental.set_virtual_device_configuration(

gpus[0],

[tf.config.experimental.VirtualDeviceConfiguration(memory_limit=1024)]

)

```

This should keep the amount of memory allocated to the model to 1024MB, with possibility to grow if need be. Initializing the model after this only uses 1.3GB of VRAM on my side. Can you reproduce?

See this for more information: [limiting gpu memory growth](https://www.tensorflow.org/guide/gpu#limiting_gpu_memory_growth)<|||||>@LysandreJik I just did some investigation and I found a similar problem with the Pytorch implementation.

Model: ALBERT base v2, fine tuning on SQuAD v2 task

I used the official code from Google Tensorflow repository and I managed to fine tune it on a single GTX 1080 Ti, with batch size 16 and memory consumption of about 10 GB.

Then, I used transformers Pytorch implementation and did the same task on 4x V100 on AWS, with total batch size 48 and memory consumption of 52 GB (about 13 GB per GPU).

Now, putting it in perspective, I guess the memory consumption of the Pytorch implementation is 10/15 GB above what I was expecting. Is this normal?

In particular, where in the code is there the Embedding Factorization technique proposed in the official paper?<|||||>Hi @matteodelv, I ran a fine-tuning task on ALBERT (base-v2) with the parameters you mentioned: batch size of 16. I end up with a VRAM usage of 11.4GB, which is slightly more than the official Google Tensorflow implementation you mention. The usage is lower than when using BERT, which has a total usage of 14GB.

However, when loading the model on its own without any other tensors, taking into account the pytorch memory overhead, it only takes about 66MB of VRAM.

Concerning your second question, here is the definition of the Embedding Factorization technique proposed in the official paper: `[...] The first one is a factorized embedding parameterization. By decomposing

the large vocabulary embedding matrix into two small matrices, we separate the size of the hidden

layers from the size of vocabulary embedding.`

In this PyTorch implementation, there are indeed two smaller matrices so that the two sizes may be separate. The first embedding layer is visible in [the `AlbertEmbeddings` class](https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_albert.py#L172), and is of size `(vocab_size, embedding_size)`, whereas the second layer is visible in [the `AlbertTransformer` class](https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_albert.py#L317), with size `(embedding_size, hidden_size)`.<|||||>Thanks for your comment @LysandreJik... I haven't looked in the `AlbertTransformer` class for the embedding factorization.

However, regarding the VRAM consumption, I'm still a bit confused about it.

I don't get why the same model with a batch size 16 consumes about 10/11 GB on a single GPU while the same training, on 4 GPUs (total batch size 48, so it's 12 per GPUs) requires more memory.

Could you please check this? May it be related to Pytorch's `DataParallel`?<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Did @matteodelv @LysandreJik find any issue or solution for this? The memory consumption given the parameter is insane<|||||>Unfortunately not. I had to tune hyperparameters or use other hardware with more memory. But I was using an older version... I haven't checked if the situation has changed since then.<|||||>Hey,

I tried running on GTX 1080 (10GB) bert-base-uncased with **sucess** on IMDB dataset with a batch-size equal to 16 and sequence length equal to 128.

Running albert-base-v2 with the same sequence length and same batch size is giving me Out-of-memory issues.

I am using pytorch, so I guess I have the same problem as you guys here.<|||||>Same issue. ALBERT raises OOM requiring 32G. <|||||>ALBERT repeats the same parameters for each layer but increases each layer size, so even though it have fewer parameters than BERT, the memory needs are greater due to the much larger activations in each layer.<|||||>> ALBERT repeats the same parameters for each layer but increases each layer size, so even though it have fewer parameters than BERT, the memory needs are greater due to the much larger activations in each layer.

That is true, still there is need for more computation, but BERT can fit into 16G memory. I had my albert reimplemented differently and I could fit its weights on a 24G gpu.<|||||>> ALBERT repeats the same parameters for each layer but increases each layer size, so even though it have fewer parameters than BERT, the memory needs are greater due to the much larger activations in each layer.

Thanks for this explanation, which saves my life. |

transformers | 2,283 | closed | Loading sciBERT failed | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I am trying to compare the effect of different pre-trained models on RE, the code to load bert is:

`self.bert = BertModel.from_pretrained(pretrain_path)`

When the "pretrain_path" is "pretrain/bert-base-uncased" , everything is fine, but after i changed it to "pretrain/scibert-uncased", i got error:

`-OSError: Model name 'pretrain/scibert-uncased' was not found in model name list (bert-base-uncased, bert-large-uncased, bert-base-cased, bert-large-cased, bert-base-multilingual-uncased, bert-base-multilingual-cased, bert-base-chinese, bert-base-german-cased, bert-large-uncased-whole-word-masking, bert-large-cased-whole-word-masking, bert-large-uncased-whole-word-masking-finetuned-squad, bert-large-cased-whole-word-masking-finetuned-squad, bert-base-cased-finetuned-mrpc, bert-base-german-dbmdz-cased, bert-base-german-dbmdz-uncased). We assumed 'pretrain/scibert-uncased/config.json' was a path or url to a configuration file named config.json or a directory containing such a file but couldn't find any such file at this path or url.`

The scibert is pytorch model and the two directories are same in structure.

It seems that if the model name is not in the name list, it won't work.

Thank you very much! | 12-23-2019 14:20:53 | 12-23-2019 14:20:53 | Try to use the following commands:

```bash

$ wget "https://s3-us-west-2.amazonaws.com/ai2-s2-research/scibert/huggingface_pytorch/scibert_scivocab_uncased.tar"

$ tar -xf scibert_scivocab_uncased.tar

The sciBERT model is now extracted and located under: `./scibert_scivocab_uncased`.

To load it:

```python

from transformers import BertModel

model = BertModel.from_pretrained("./scibert_scivocab_uncased")

model.eval()

```

This should work 🤗<|||||>> Try to use the following commands:

>

> ```shell

> $ wget "https://s3-us-west-2.amazonaws.com/ai2-s2-research/scibert/huggingface_pytorch/scibert_scivocab_uncased.tar"

> $ tar -xf scibert_scivocab_uncased.tar

>

> The sciBERT model is now extracted and located under: `./scibert_scivocab_uncased`.

>

> To load it:

>

> ```python

> from transformers import BertModel

>

> model = BertModel.from_pretrained("./scibert_scivocab_uncased")

> model.eval()

> ```

>

> This should work 🤗

It works! Thank you very much! |

transformers | 2,282 | closed | Maybe some parameters are error in document for distributed training ? | Based on [Distributed training document](https://huggingface.co/transformers/examples.html#id1) , one can use `bert-base-cased` model to fine-tune MR model and reaches very high score.

> Here is an example using distributed training on 8 V100 GPUs and Bert Whole Word Masking uncased model to reach a F1 > 93 on SQuAD1.0:

```

python -m torch.distributed.launch --nproc_per_node=8 run_squad.py \

--model_type bert \

--model_name_or_path bert-base-cased \

--do_train \

--do_eval \

--do_lower_case \

--train_file $SQUAD_DIR/train-v1.1.json \

--predict_file $SQUAD_DIR/dev-v1.1.json \

--learning_rate 3e-5 \

--num_train_epochs 2 \

--max_seq_length 384 \

--doc_stride 128 \

--output_dir ../models/wwm_uncased_finetuned_squad/ \

--per_gpu_train_batch_size 24 \

--gradient_accumulation_steps 12

```

```

f1 = 93.15

exact_match = 86.91

```

**But based on [google bert repo](https://github.com/google-research/bert#squad-11) , the model `bert-base-cased` perfermance is**

```

{"f1": 88.41249612335034, "exact_match": 81.2488174077578}

```

Maybe the right pretrained model is `bert-large-uncased` ?

Thanks~ | 12-23-2019 13:08:03 | 12-23-2019 13:08:03 | |

transformers | 2,281 | closed | Add Dutch pre-trained BERT model | We trained a Dutch cased BERT model at the University of Groningen.

Details are on [Github](https://github.com/wietsedv/bertje/) and [Arxiv](https://arxiv.org/abs/1912.09582). | 12-23-2019 12:40:56 | 12-23-2019 12:40:56 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=h1) Report

> Merging [#2281](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ba2378ced560c12f8ee97ca7998fd28b93fcfb47?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.

[](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2281 +/- ##

=======================================

Coverage 74.45% 74.45%

=======================================

Files 85 85

Lines 14610 14610

=======================================

Hits 10878 10878

Misses 3732 3732

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/tokenization\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/2281/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYmVydC5weQ==) | `96.34% <ø> (ø)` | :arrow_up: |

| [src/transformers/configuration\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/2281/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX2JlcnQucHk=) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/2281/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9iZXJ0LnB5) | `96.26% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/2281/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19iZXJ0LnB5) | `87.7% <ø> (ø)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=footer). Last update [ba2378c...5eb71e6](https://codecov.io/gh/huggingface/transformers/pull/2281?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Hi @wietsedv! Did you try loading your tokenizer/model directly, cf. https://huggingface.co/wietsedv/bert-base-dutch-cased

i.e. It should work out-of-the-box using:

```python

tokenizer = AutoTokenizer.from_pretrained("wietsedv/bert-base-dutch-cased")

model = AutoModel.from_pretrained("wietsedv/bert-base-dutch-cased")

tf_model = TFAutoModel.from_pretrained("wietsedv/bert-base-dutch-cased")

```

Let us know if it's not the case (we can still merge this PR to have a nice shortcut inside the code but feature-wise it should be equivalent)<|||||>Hi! Thanks for your response. I did notice that I can use that snippet and I can confirm it works. I am however not sure whether cased tokenization works correctly that way. Correct me if I am wrong, but it seems that Transformers always lowercases unless that is explicitly disabled. I did disable it in this PR, which makes it work correctly out of the box. But I think lowercasing is enabled by default if people use the snippet above.

Please correct my if I am wrong.<|||||>We'll check, thanks for the report.<|||||>Just a note: I absolutely love seeing a Dutch version of BERT but this isn't the only BERT model out there. As you mention in your paper, there's also [BERT-NL](http://textdata.nl/). You seem to claim that it performs a lot worse than your version and that it is even outperformed by multilingual BERT. At first glance I don't see any written-down experiments confirming that claim - a comparison between BERTje, BERT-NL, and multilingual BERT on down-stream tasks would've been much more informative. (BERT-NL will be presented at the largest computational linguistics conference on Dutch (Computational Linguistics in the Netherlands; CLIN) at the end of the month, so presumably it does carry some weight.)

All this to say: why does your version deserve to be "the" `bert-base-dutch-cased` model if there is an alternative? Don't get me wrong, I really value your research, but a fair and full comparison is missing.<|||||>It is correct that there is another Dutch model and we do full fine-tuning results of this model. These numbers were included in an earlier draft of our paper, but we removed it since they did not add any value. For instance for named entity recognition (conll2002), multilingual BERT achieves about 80% accuracy, our BERT model about 88% and their BERT model just 41%.

More detailed comparison with their model would therefore not add any value since the scores are too low. The authors have not made any claims about model performance yet, so it would have been unfair to be too negative about their model before they have even released any paper.

Someone at Leiden has confirmed that their experiments also showed that they were outperformed by multilingual BERT. Therefore I think that our BERT model is the only _effective_ Dutch BERT model.

PS: The entry barrier for CLIN is not extremely high. The reason we are not presenting at CLIN is that we missed the deadline. <|||||>I think it is in fact very useful information to see such huge difference. This way readers are not confronted with the same question that I posed before: there are two Dutch BERT models - but which one should I use/which one is better? You now clarify it to me, for which I am grateful, but a broader audience won't know. I think the added value is great. However, I do agree that is hard and perhaps unfair to compare to a model that hasn't been published/discussed yet. (Then again, their model is available online so they should be open to criticism.)

The CLIN acceptance rate is indeed high, and the follow-up CLIN Journal is also not bad. Still, what I was aiming for is peer review. If I were to review the BERT-NL (assuming they will submit after conference and assuming I was a reviewer this year), then I would also mention your model and ask for a comparison. To be honest, I put more faith in a peer reviewed journal/conference than arXiv papers.

I really don't want to come off as arrogant and I very much value your work, but I am trying to approach this from a person who is just getting started with this kind of stuff and doesn't follow the trends or what is going on in the field. They might find this model easily available in the Transformers hub, but then they might read in a journal (possibly) about BERT-NL - which then is apparently different from the version in Transformers. On top of that, neither paper (presumably) refers or compares to the other! Those people _must be confused_ by that.

The above wall of text just to say that I have no problem with your model being "the" Dutch BERT model because it seems to clearly be the best one, but that I would very much like to see reference/comparison to the other model in your paper so that it is clear to the community what is going on with these two models. I hope that the authors of BERT-NL do the same. Do you have any plans to submit a paper somewhere?<|||||>Thanks for your feedback and clear explanation. We do indeed intend to submit a long paper somewhere. The short paper on arxiv is mainly intended for reference and to demonstrate that the model is effective with some benchmarks. Further evaluation would be included in a longer paper.<|||||>The authors of BERT-NL have reported results which can be compared to Bertje, see https://twitter.com/suzan/status/1200361620398125056

Also see the results in this thread https://twitter.com/danieldekok/status/1213378688563253249 and https://twitter.com/danieldekok/status/1213741132863156224

In both cases, Bertje does outperform BERT-NL. On the other hand, the results about Bertje vs multilingual BERT are different, so this needs to be investigated further.<|||||>I think @BramVanroy raises some good points about naming convention.

In my opinion the organization name or author name should come after the "bert-base-<language>" skeleton.

So it seems that BERTje is current SOTA now. But: on the next conference maybe another BERT model for Dutch is better... I'm not a fan of the "First come, first served" principle here 😅

/cc @thomwolf , @julien-c <|||||>> On the other hand, the results about Bertje vs multilingual BERT are different, so this needs to be investigated further.

Agreed. Tweeting results of doing "tests" is one thing, but actual thorough investigating and reporting is something else. It has happened to all of us that you quickly wanted to check something and only later realized that you made a silly mistake. (My last one was forgetting the `-` in my learning rate and not noticing it, oh boy what a day.) As I said before I would really like to a see a thorough, reproducible comparison of BERTje, BERT-NL, and multilingual BERT, and I believe that that should be the basis of any new model. Many new models sprout from the community grounds - and that's great! - but without having at least _some_ reference and comparison, it is guess-work trying to figure out which one is best or which one you should use.

> I think @BramVanroy raises some good points about naming convention.

>

> In my opinion the organization name or author name should come after the "bert-base-" skeleton.

>

> So it seems that BERTje is current SOTA now. But: on the next conference maybe another BERT model for Dutch is better... I'm not a fan of the "First come, first served" principle here 😅

>

> /cc @thomwolf , @julien-c

Perhaps it's better to just make the model available through the user and that's all? In this case, only make it available through `wietsedv/bert-base-dutch-cased` and not `bert-base-dutch-cased`? That being said, where do you draw the line of course. Hypothetical question: why does _a Google_ get the rights to make its weights available without a `google/` prefix, acting as a "standard"? I don't know how to answer that question, so ultimately it's up to the HuggingFace people.

I'm also not sure how diverging models would then work. If for instance you bring out a German BERT-derivative that has a slightly different architecture, or e.g. a different tokenizer, how would that then get integrated in Transformers? (For example, IIRC BERTje uses SOP instead of NSP, so that may lead to more structural changing in the available heads than just different weights.)

<|||||>I inherently completely agree with your points. I think the people at Huggingface are trying to figure out how to do this, but they have not been really consistent. Initially, the "official" models within Transformers were only original models (Google/Facebook) and it is unlikely that there would be competetition for better models with exactly the same architecture in English. But for other monolingual models this is different.

I prefer a curated list with pre-trained general models that has more structure than the long community models list. But existing shortcuts should be renamed to be consistent. German for instance has a regular named german and there is one with the `dbmdz` infix. And Finnish has for some reason the `v1` suffix?

My preference would be to always use `institution/` or `institution-` prefixes in the curated list. In Transformers 2.x, the current shortcuts could be kept for backward compatibility but a structured format should be used in the documentation. I think this may prevent many frustrations if even more non-english models are trained and even more people are wanting to use and trust (!) these models.<|||||>200% agree with that. That would be the fairest and probably clearest way of doing this. Curating the list might not be easy, though, unless it is curated by the community (like a wiki)? Perhaps requiring a description, website, paper, any other meta information might help to distinguish models as well, giving the authors a chance to explain, e.g., which data their model was trained on, which hyperparameters were used, and how their model differs from others.

I really like http://nlpprogress.com/ which "tracks" the SOTA across different NLP tasks. It is an open source list and anyone can contribute through github. Some kind of lists like this might be useful, but instead discussing the models. <|||||>You all raise excellent questions, many (most?) of which we don’t have a definitive answer to right now 🤗

Some kind of structured evaluation results (declarative or automated) could be a part of the solution. In addition to nlpprogress, sotabench/paperswithcode is also a good source of inspiration.

<|||||>On the previous point of being able to load the tokenizer's (remote) config correctly:

- I've added a `tokenizer_config.json` to your user namespace on S3: https://s3.amazonaws.com/models.huggingface.co/bert/wietsedv/bert-base-dutch-cased/tokenizer_config.json

- We're fixing the support for those remote tokenizer configs in https://github.com/huggingface/transformers/pull/2535 (you'll see that the unit test uses your model). Feedback welcome.<|||||>Merging this as we haven't seen other "better" BERT models for Dutch (coincidentally, [`RobBERT`](https://people.cs.kuleuven.be/~pieter.delobelle/robbert/) from @iPieter looks like a great RoBERTa-like model)

Please see [this discussion on model descriptions/README.md](https://github.com/huggingface/transformers/issues/2520#issuecomment-579009439). If you can upload a README.md with eval results/training methods, that'd be awesome.

Thanks! |

transformers | 2,280 | closed | Do anyone have solution for this | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

| 12-23-2019 10:50:38 | 12-23-2019 10:50:38 | |

transformers | 2,279 | closed | Help with finetune BERT pretraining | Hi

could you please assist me how I can pretrain the BERT model, so not like SNLI/MNLI when we finetune a pretrained model, but doing pretraining objective.

thanks a lot

and Merry Christmas and happy new year in advance to the team | 12-23-2019 10:35:49 | 12-23-2019 10:35:49 | I suggest you follow the [run_lm_finetuning.py](https://github.com/huggingface/transformers/blob/master/examples/run_lm_finetuning.py) script. Instead of downloading a pretrained model, simply start with a fresh one. Here's an example:

```

from transformers import BertModel, BertConfig, BertTokenizer

model = BertModel(BertConfig())

tokenizer = BertTokenizer.from_pretrained('bert-base-cased')

```

I personally don't have a reason to write my own tokenizer, but if you do feel free to do that as well. All you need to do is generate a vocab.txt file from your corpus.<|||||>You can now leave `--model_name_or_path` to None in `run_language_modeling.py` to train a model from scratch.

See also https://huggingface.co/blog/how-to-train |

transformers | 2,278 | closed | where is the script of a second step of knwoledge distillation on SQuAD 1.0? | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

In Distil part, there is a paragraph description which is "distilbert-base-uncased-distilled-squad: A finetuned version of distilbert-base-uncased finetuned using (a second step of) knwoledge distillation on SQuAD 1.0. This model reaches a F1 score of 86.9 on the dev set (for comparison, Bert bert-base-uncased version reaches a 88.5 F1 score)."

so where is the script of "a second step of knwoledge distillation on SQuAD 1.0" mentioned above?

Thanks a lot, it will be very helpful to me!

| 12-23-2019 09:13:26 | 12-23-2019 09:13:26 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Check here: https://github.com/huggingface/transformers/blob/master/examples/distillation/run_squad_w_distillation.py |

transformers | 2,277 | closed | Does the calling order need to be changed? | ## ❓ Questions & Help

pytorch: 1.3.0

torch/optim/lr_scheduler.py:100: UserWarning: Detected call of `lr_scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `lr_scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the learning rate schedule.See more details at https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate

"https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate", UserWarning)

| 12-23-2019 08:30:10 | 12-23-2019 08:30:10 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 2,276 | closed | fix error due to wrong argument name to Tensor.scatter() | The named argument is called "source", not "src" in the out of place version for some reason, despite it being called "src" in the in-place version of the same Pytorch function. This causes an error. | 12-23-2019 07:19:41 | 12-23-2019 07:19:41 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=h1) Report

> Merging [#2276](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ce50305e5b8c8748b81b0c8f5539a337b6a995b9?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `0%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2276 +/- ##

=======================================

Coverage 74.45% 74.45%

=======================================

Files 85 85

Lines 14610 14610

=======================================

Hits 10878 10878

Misses 3732 3732

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2276/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `63.91% <0%> (ø)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=footer). Last update [ce50305...398bb03](https://codecov.io/gh/huggingface/transformers/pull/2276?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Thanks a lot for catching that @ShnitzelKiller!<|||||>I just realized that despite the official (current) documentation saying that the argument name is "source", after upgrading my pytorch version, this code is what throws an error saying the argument name is "src"! I should probably notify you to revert this pull request then. |

transformers | 2,275 | closed | Gpt2/xl Broken on "Write With Transformer" site | If you navigate to the [GPT-2 section of the Write With Transformer site](https://transformer.huggingface.co/doc/gpt2-large), select gpt2/xl, and try to generate text, the process will not generate anything. | 12-23-2019 05:26:28 | 12-23-2019 05:26:28 | Same problem. And it seems not fixed yet.<|||||>It should be fixed now. Thank you for raising this issue. |

transformers | 2,274 | closed | AttributeError: 'GPT2LMHeadModel' object has no attribute 'generate' | ## 🐛 Bug

<!-- Important information -->

The example script `run_generation.py` is broken with the error message `AttributeError: 'GPT2LMHeadModel' object has no attribute 'generate'`

## To Reproduce

Steps to reproduce the behavior:

1. In a terminal, cd to `transformers/examples` and then `python run_generation.py --model_type=gpt2 --model_name_or_path=gpt2`

2. After the model binary is downloaded to cache, enter anything when prompted "`Model prompt >>>`"

3. And then you will see the error:

```

Traceback (most recent call last):

File "run_generation.py", line 236, in <module>

main()

File "run_generation.py", line 216, in main

output_sequences = model.generate(

File "C:\Anaconda3\lib\site-packages\torch\nn\modules\module.py", line 585, in __getattr__

type(self).__name__, name))

AttributeError: 'GPT2LMHeadModel' object has no attribute 'generate'

```

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS: Windows 10

* Python version: 3.7.3

* PyTorch version: 1.3.1

* PyTorch Transformers version (or branch): 2.2.2

* Using GPU ? N/A

* Distributed of parallel setup ? N/A

* Any other relevant information:

I'm running the latest version of `run_generation.py`. Here is the permanent link: https://github.com/huggingface/transformers/blob/ce50305e5b8c8748b81b0c8f5539a337b6a995b9/examples/run_generation.py

## Additional context

<!-- Add any other context about the problem here. -->

| 12-23-2019 03:05:15 | 12-23-2019 03:05:15 | Same problem<|||||>I have found the reason.

So it turns out that the `generate()` method of the `PreTrainedModel` class is newly added, even newer than the latest release (2.3.0). Quite understandable since this library is iterating very fast.

So to make `run_generation.py` work, you can install this library like this:

- Clone the repo to your computer

- cd into the repo

- Run `pip install -e .` (don't forget the dot)

- Re-run `run_generation.py`

I'll leave this ticket open until the `generate()` method is incorporated into the latest release.<|||||>@jsh9's solution worked for me!

Also, if you want to avoid doing the manual steps, you can just `pip install` directly from the `master` branch by running:

```bash

pip install git+https://github.com/huggingface/transformers.git@master#egg=transformers

```

<|||||>i was getting the same error then i used repository before 7days which is working fine for me

`!wget https://github.com/huggingface/transformers/archive/f09d9996413f2b265f1c672d7a4b438e4c5099c4.zip`

then unzip with

`!unzip file_name.zip`

there is some bugs in recent update, hope they fix it soon<|||||>@Weenkus's way worked for me. In `requirements.txt` you can use;

```

-e git+https://github.com/huggingface/transformers.git@master#egg=transformers

```

(all on one line)<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 2,273 | closed | adding special tokens after truncating in run_lm_finetuning.py | ## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....): Bert

Language I am using the model on (English, Chinese....): English

The problem arise when using:

* [x] the official example scripts: (give details)

In `run_lm_finetuning.py`, we have the following

```

for i in range(0, len(tokenized_text) - block_size + 1, block_size): # Truncate in block of block_size

self.examples.append(tokenizer.build_inputs_with_special_tokens(tokenized_text[i : i + block_size]))

```

If we add special tokens after truncating to `block_size`, the example is no longer of length `block_size`, but longer.

In the help information for `block_size` as an input argument, it says "Optional input sequence length after tokenization. The training dataset will be truncated in block of this size for training. Default to the model max input length for single sentence inputs (**take into account special tokens**)." This may be confusing, because the `block_size` written as the default input is 512, but if you use BERT-base as the model you're pretraining from, the `block_size` input in that function is actually 510.

The [original BERT code](https://github.com/google-research/bert/blob/master/extract_features.py) makes sure all examples are `block_size` after adding special tokens:

```

if tokens_b:

# Modifies `tokens_a` and `tokens_b` in place so that the total

# length is less than the specified length.

# Account for [CLS], [SEP], [SEP] with "- 3"

_truncate_seq_pair(tokens_a, tokens_b, seq_length - 3)

else:

# Account for [CLS] and [SEP] with "- 2"

if len(tokens_a) > seq_length - 2:

tokens_a = tokens_a[0:(seq_length - 2)]

``` | 12-22-2019 23:28:49 | 12-22-2019 23:28:49 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 2,272 | closed | Run_tf_ner.py error on TPU | ## 🐛 Bug

run_tf_ner.py does not work with TPU. The first error is:

`File system scheme '[local]' not implemented `

When the script is changed and .tfrecord file is moved to gs:// address (and also hardcoded "/tmp/mylogs" is replaced with gs:/// dir) there is an error with optimiser:

`AttributeError: 'device_map' not accessible within a TPU context.`

## To Reproduce

Steps to reproduce the behaviour:

python run_tf_ner.py.1 --tpu grpc://10.240.1.2:8470 --data_dir gs://nomentech/datadir --labels ./datasets/labels.txt --output_dir gs://nomentech/model1 --max_seq_length 40 --model_type bert --model_name_or_path bert-base-multilingual-cased --do_train --do_eval --cache_dir gs://nomentech/cachedir --num_train_epochs 5 --per_device_train_batch_size 96

## Environment

* OS: Ubuntu 18

* Python version: 3.7

* Tensorflow version: 2.1.0-dev20191222 (tf-nightly)

* PyTorch Transformers version (or branch): 2.3.0

* Distributed of parallel setup ? TPU

| 12-22-2019 23:19:29 | 12-22-2019 23:19:29 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I have the same issue on Colab TPU with tf-nightly 2.2.0. @dlauc did you solve the problem?<|||||>Hi @BOUALILILila, I've switched to the TF/Keras - it works well with TPU-s<|||||>Hi @dlauc. Could you elaborate how you fixed this? I am having the same problem. |

transformers | 2,271 | closed | Improve setup and requirements | - Clean up several requirements files generated with pip freeze, with no clear update process

- Rely on extra_requires for managing optional requirements

- Update contribution instructions | 12-22-2019 19:39:04 | 12-22-2019 19:39:04 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=h1) Report

> Merging [#2271](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/23dad8447c8db53682abc3c53d1b90f85d222e4b?src=pr&el=desc) will **decrease** coverage by `0.58%`.

> The diff coverage is `n/a`.

[](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2271 +/- ##

==========================================

- Coverage 74.27% 73.68% -0.59%

==========================================

Files 85 87 +2

Lines 14610 14791 +181

==========================================

+ Hits 10851 10899 +48

- Misses 3759 3892 +133

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/commands/user.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb21tYW5kcy91c2VyLnB5) | `0% <0%> (ø)` | |

| [src/transformers/commands/serving.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb21tYW5kcy9zZXJ2aW5nLnB5) | `0% <0%> (ø)` | |

| [src/transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `92.27% <0%> (+0.96%)` | :arrow_up: |

| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `93.12% <0%> (+1.58%)` | :arrow_up: |

| [src/transformers/pipelines.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9waXBlbGluZXMucHk=) | `68.31% <0%> (+2.32%)` | :arrow_up: |

| [src/transformers/data/processors/squad.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9kYXRhL3Byb2Nlc3NvcnMvc3F1YWQucHk=) | `35.97% <0%> (+6.6%)` | :arrow_up: |

| [src/transformers/modeling\_tf\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/2271/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9hdXRvLnB5) | `32.14% <0%> (+7.14%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=footer). Last update [23dad84...10724a8](https://codecov.io/gh/huggingface/transformers/pull/2271?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

transformers | 2,270 | closed | Remove support for Python 2 | 12-22-2019 17:23:35 | 12-22-2019 17:23:35 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=h1) Report

> Merging [#2270](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/b6ea0f43aeb7ff1dcb03658e38bacae1130abd91?src=pr&el=desc) will **increase** coverage by `1.2%`.

> The diff coverage is `86.66%`.

[](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2270 +/- ##

=========================================

+ Coverage 73.25% 74.45% +1.2%

=========================================

Files 85 85

Lines 14779 14610 -169

=========================================

+ Hits 10826 10878 +52

+ Misses 3953 3732 -221

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/modeling\_camembert.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19jYW1lbWJlcnQucHk=) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/configuration\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX3JvYmVydGEucHk=) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/tokenization\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fZGlzdGlsYmVydC5weQ==) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9hdXRvLnB5) | `32.14% <ø> (-0.41%)` | :arrow_down: |

| [src/transformers/configuration\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX2Rpc3RpbGJlcnQucHk=) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `93.12% <ø> (-0.04%)` | :arrow_down: |

| [src/transformers/configuration\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX2N0cmwucHk=) | `97.05% <ø> (-0.09%)` | :arrow_down: |

| [src/transformers/tokenization\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fcm9iZXJ0YS5weQ==) | `100% <ø> (ø)` | :arrow_up: |

| [src/transformers/tokenization\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fY3RybC5weQ==) | `96.11% <ø> (-0.08%)` | :arrow_down: |

| [src/transformers/modeling\_tf\_t5.py](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl90NS5weQ==) | `96.54% <ø> (-0.01%)` | :arrow_down: |

| ... and [66 more](https://codecov.io/gh/huggingface/transformers/pull/2270/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=footer). Last update [b6ea0f4...1a948d7](https://codecov.io/gh/huggingface/transformers/pull/2270?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

|

transformers | 2,269 | closed | Bad F1 Score for run_squad.py on SQuAD2.0 | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

When I run the run_squad.py for SQuAD2.0 like this

python3 run_squad.py \

--model_type bert \

--model_name_or_path bert-base-cased \

--do_train \

--do_eval \

--train_file /share/nas165/Wendy/transformers/examples/tests_samples/SQUAD/train-v2.0.json \

--predict_file /share/nas165/Wendy/transformers/examples/tests_samples/SQUAD/dev-v2.0.json \

--per_gpu_train_batch_size 4 \

--learning_rate 4e-5 \

--num_train_epochs 2.0 \

--max_seq_length 384 \

--doc_stride 128 \

--output_dir /share/nas165/Wendy/transformers/examples/SQuAD2.0_debug_bert/

--version_2_with_negative=True \

--null_score_diff_threshold=-1.967471694946289

It runs very fast and the f1 score only 7.9% What is wrong with it

The log message like this

<img width="960" alt="擷取" src="https://user-images.githubusercontent.com/32416416/71322341-939daa80-2501-11ea-9313-e179d1760b99.PNG">

Thanks a lot for your help.By the way I clone it today so it is the new version

| 12-22-2019 13:22:27 | 12-22-2019 13:22:27 | |

transformers | 2,268 | closed | Improve repository structure | This PR builds on top of #2255 (which should be merged first).

Since it changes the location of the source code, once it's merged, contributors must update their local development environment with:

$ pip uninstall transformers

$ pip install -e .

I'll clarify this when I update the contributor documentation (later).

I checked that:

- `python setup.py sdist` packages the right files (only from `src`)

- I didn't lose any tests — the baseline for `run_tests_py3_torch_and_tf` is `691 passed, 68 skipped, 50 warnings`, see [here](https://app.circleci.com/jobs/github/huggingface/transformers/10684))

| 12-22-2019 13:00:05 | 12-22-2019 13:00:05 | Last test run failed only because of a flaky test — this is #2240. |

transformers | 2,267 | closed | Does Pre-Trained Weights Work Internally in pytorch? | I am using bert’s pretrained model in from_pretrained and coming across it’s fine tuning code we can save the new model weights and other hyper params in save_pretrained.

My doubt is in there modeling_bert code there is no explicit code that takes the pre-trained weights in acount and then trains as it generally takes attention matrices and puts it in a feed forward network in the class `BertPredictionHeadTransform`

```

class BertPredictionHeadTransform(nn.Module):

def __init__(self, config):

super(BertPredictionHeadTransform, self).__init__()

self.dense = nn.Linear(config.hidden_size, config.hidden_size)

if isinstance(config.hidden_act, str) or (sys.version_info[0] == 2 and isinstance(config.hidden_act, unicode)):

self.transform_act_fn = ACT2FN[config.hidden_act]

else:

self.transform_act_fn = config.hidden_act

self.LayerNorm = BertLayerNorm(config.hidden_size, eps=config.layer_norm_eps)

def forward(self, hidden_states):

hidden_states = self.dense(hidden_states)

hidden_states = self.transform_act_fn(hidden_states)

hidden_states = self.LayerNorm(hidden_states)

print('BertPredictionHeadTransform', hidden_states.shape)

return hidden_states

```

And here i do not see any kind of “inheritance” of the pre-trained weights…

So is it internally handled by pytorch or am i missing something in the code itself?

| 12-22-2019 10:26:42 | 12-22-2019 10:26:42 | `BertPredictionHeadTransform` is never used in itself AFAIK, and is only part of the full scale models (e.g. `BertModel`). As such, the weights for the prediction head are loaded when you run BertModel.from_pretrained(), since the `BertPredictionHeadTransform` is only a module in the whole model.<|||||>> `BertPredictionHeadTransform` is never used in itself AFAIK, and is only part of the full scale models (e.g. `BertModel`). As such, the weights for the prediction head are loaded when you run BertModel.from_pretrained(), since the `BertPredictionHeadTransform` is only a module in the whole model.

Thanks, Bram. As you said `BertModel` does take `BertPreTrainedModel` in `super`. I did notice it, But It's just that my mind doesn't get around how/where and when those weights are getting used exactly.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 2,266 | closed | Imports likely broken in examples | ## 🐛 Bug

While cleaning up imports with isort, I classified them all. I failed to identify the following four imports:

1. model_bertabs

2. utils_squad

3. utils_squad_evaluate

4. models.model_builder

These modules aren't available on PyPI or in the transformers code repository.

I think they will result in ImportError (I didn't check).

I suspect they used to be in transformers, but they were renamed or removed.

| 12-22-2019 10:24:04 | 12-22-2019 10:24:04 | @aaugustin it seems like that i met the same problem when i use convert_bertabs_original_pytorch_checkpoint.py ,have you ever fixed it or find any way to make it work.

Appriciate it if you can tell me!<|||||>I didn't attempt to fix this issue. I merely noticed it while I was working on the overall quality of the `transformers` code base.

I suspect these modules used to exist in `transformers` and were removed in a refactoring, but I don't know for sure.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 2,265 | closed | Only the Bert model is currently supported | ## 🐛 Bug

<!-- Important information -->

Model I am using Model2Model and BertTokenizer:

Language I am using the model on English:

The problem arise when using:

* the official example scripts: (give details)

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

```

# Let's re-use the previous question

question = "Who was Jim Henson?"

encoded_question = tokenizer.encode(question)

question_tensor = torch.tensor([encoded_question])

# This time we try to generate the answer, so we start with an empty sequence

answer = "[CLS]"

encoded_answer = tokenizer.encode(answer, add_special_tokens=False)

answer_tensor = torch.tensor([encoded_answer])

# Load pre-trained model (weights)

model = Model2Model.from_pretrained('fine-tuned-weights')

model.eval()

# If you have a GPU, put everything on cuda

question_tensor = encoded_question.to('cuda')

answer_tensor = encoded_answer.to('cuda')

model.to('cuda')

# Predict all tokens

with torch.no_grad():

outputs = model(question_tensor, answer_tensor)

predictions = outputs[0]

# confirm we were able to predict 'jim'

predicted_index = torch.argmax(predictions[0, -1]).item()

predicted_token = tokenizer.convert_ids_to_tokens([predicted_index])[0]

```

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS: Colab

* Python version:

* PyTorch version:

* PyTorch Transformers version (or branch):

* Using GPU ? Yes

* Distributed of parallel setup ? No

* Any other relevant information:

| 12-22-2019 09:24:32 | 12-22-2019 09:24:32 | You need to give more information. What are you trying to do, what is the code that you use for it, what does not work the way you intended to?<|||||>> You need to give more information. What are you trying to do, what is the code that you use for it, what does not work the way you intended to?

I am learning how to use this repo.

I use the example of the official website model2model, the link is as follows

https://huggingface.co/transformers/quickstart.html

And I use google colab to install the transformer and copy and run the official website code<|||||>I mean, which error are you getting or what is not working as expected? <|||||>> I mean, which error are you getting or what is not working as expected?

this is my issue topic

Only the Bert model is currently supported

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-18-58aac9aa944f> in <module>()

9

10 # Load pre-trained model (weights)

---> 11 model = Model2Model.from_pretrained('fine-tuned-weights')

12 model.eval()

13

/usr/local/lib/python3.6/dist-packages/transformers/modeling_encoder_decoder.py in from_pretrained(cls, pretrained_model_name_or_path, *args, **kwargs)

281 or "distilbert" in pretrained_model_name_or_path

282 ):

--> 283 raise ValueError("Only the Bert model is currently supported.")

284

285 model = super(Model2Model, cls).from_pretrained(

ValueError: Only the Bert model is currently supported.

```<|||||>That wasn't clear. In the future, please post the trace so it is clear what your error is, like so:

```

Traceback (most recent call last):

File "C:/Users/bramv/.PyCharm2019.2/config/scratches/scratch_16.py", line 62, in <module>

model = Model2Model.from_pretrained('fine-tuned-weights')

File "C:\Users\bramv\.virtualenvs\semeval-task7-Z5pypsxD\lib\site-packages\transformers\modeling_encoder_decoder.py", line 315, in from_pretrained

raise ValueError("Only the Bert model is currently supported.")

ValueError: Only the Bert model is currently supported.

```

This is not a bug, then of course. In the example, where "fine-tuned-weights" is used, you can load your own fine-tuned model. So if you tuned a model and saved it as "checkpoint.pth" you can use that.<|||||>> This is not a bug, then of course. In the example, where "fine-tuned-weights" is used, you can load your own fine-tuned model. So if you tuned a model and saved it as "checkpoint.pth" you can use that.

thanks<|||||>Please close this question. |

transformers | 2,264 | closed | Fix doc link in README | close https://github.com/huggingface/transformers/issues/2252

- [x] Update `.circleci/deploy.sh`

- [x] Update `deploy_multi_version_doc.sh`

Set commit hash before "Release: v2.3.0".

| 12-22-2019 07:12:24 | 12-22-2019 07:12:24 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=h1) Report

> Merging [#2264](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/645713e2cb8307e41febb2b7c9f6036f6645efce?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.

[](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #2264 +/- ##

=======================================

Coverage 78.35% 78.35%

=======================================

Files 133 133

Lines 19878 19878

=======================================

Hits 15576 15576

Misses 4302 4302

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=footer). Last update [645713e...9d00f78](https://codecov.io/gh/huggingface/transformers/pull/2264?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Thanks @upura! |

transformers | 2,263 | closed | BertModel sometimes produces the same output during evaluation | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I finetune BertModel as a part of my model to produce word embeddings. I found sometimes the performance was very bad, then i run the code again without any change, the performance was normal. It is very strange. I check my code to try to find the bug. I found the word embeddings produced by BertModel were all the same. Then I followed the code of BertModel, and found the BertEncoder would make the output become similar gradually which was consisted of 12 BertLayers. I have no idea about this situation. | 12-22-2019 07:00:45 | 12-22-2019 07:00:45 | Did you set a fixed seed? If you want deterministic results, you should set a fixed seed. <|||||>> Did you set a fixed seed? If you want deterministic results, you should set a fixed seed.