markdown | code | output | license | path | repo_name |

|---|---|---|---|---|---|

Let's merge the mask and depths | merged = train_mask.merge(depth, how='left')

merged.head()

plt.figure(figsize=(12, 6))

plt.scatter(merged['salt_proportion'], merged['z'])

plt.title('Proportion of salt vs depth')

print("Correlation: ", np.corrcoef(merged['salt_proportion'], merged['z'])[0, 1]) | Correlation: 0.10361580365557428

| MIT | kaggle_tgs_salt_identification.ipynb | JacksonIsaac/colab_notebooks |

Setup Keras and Train | from keras.models import Model, load_model

from keras.layers import Input

from keras.layers.core import Lambda, RepeatVector, Reshape

from keras.layers.convolutional import Conv2D, Conv2DTranspose

from keras.layers.pooling import MaxPooling2D

from keras.layers.merge import concatenate

from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau

from keras import backend as K

im_width = 128

im_height = 128

border = 5

im_chan = 2 # Number of channels: first is original and second cumsum(axis=0)

n_features = 1 # Number of extra features, like depth

#path_train = '../input/train/'

#path_test = '../input/test/'

# Build U-Net model

input_img = Input((im_height, im_width, im_chan), name='img')

input_features = Input((n_features, ), name='feat')

c1 = Conv2D(8, (3, 3), activation='relu', padding='same') (input_img)

c1 = Conv2D(8, (3, 3), activation='relu', padding='same') (c1)

p1 = MaxPooling2D((2, 2)) (c1)

c2 = Conv2D(16, (3, 3), activation='relu', padding='same') (p1)

c2 = Conv2D(16, (3, 3), activation='relu', padding='same') (c2)

p2 = MaxPooling2D((2, 2)) (c2)

c3 = Conv2D(32, (3, 3), activation='relu', padding='same') (p2)

c3 = Conv2D(32, (3, 3), activation='relu', padding='same') (c3)

p3 = MaxPooling2D((2, 2)) (c3)

c4 = Conv2D(64, (3, 3), activation='relu', padding='same') (p3)

c4 = Conv2D(64, (3, 3), activation='relu', padding='same') (c4)

p4 = MaxPooling2D(pool_size=(2, 2)) (c4)

# Join the extra feature information at the deepest layer

f_repeat = RepeatVector(8*8)(input_features)

f_conv = Reshape((8, 8, n_features))(f_repeat)

p4_feat = concatenate([p4, f_conv], -1)

c5 = Conv2D(128, (3, 3), activation='relu', padding='same') (p4_feat)

c5 = Conv2D(128, (3, 3), activation='relu', padding='same') (c5)

u6 = Conv2DTranspose(64, (2, 2), strides=(2, 2), padding='same') (c5)

#check out this skip connection thooooo

u6 = concatenate([u6, c4])

c6 = Conv2D(64, (3, 3), activation='relu', padding='same') (u6)

c6 = Conv2D(64, (3, 3), activation='relu', padding='same') (c6)

u7 = Conv2DTranspose(32, (2, 2), strides=(2, 2), padding='same') (c6)

u7 = concatenate([u7, c3])

c7 = Conv2D(32, (3, 3), activation='relu', padding='same') (u7)

c7 = Conv2D(32, (3, 3), activation='relu', padding='same') (c7)

u8 = Conv2DTranspose(16, (2, 2), strides=(2, 2), padding='same') (c7)

u8 = concatenate([u8, c2])

c8 = Conv2D(16, (3, 3), activation='relu', padding='same') (u8)

c8 = Conv2D(16, (3, 3), activation='relu', padding='same') (c8)

u9 = Conv2DTranspose(8, (2, 2), strides=(2, 2), padding='same') (c8)

u9 = concatenate([u9, c1], axis=3)

c9 = Conv2D(8, (3, 3), activation='relu', padding='same') (u9)

c9 = Conv2D(8, (3, 3), activation='relu', padding='same') (c9)

outputs = Conv2D(1, (1, 1), activation='sigmoid') (c9)

model = Model(inputs=[input_img, input_features], outputs=[outputs])

model.compile(optimizer='adam', loss='binary_crossentropy') #, metrics=[mean_iou]) # The mean_iou metric seems to leak train and test values...

model.summary()

import os  # needed for os.walk below

import sys

from tqdm import tqdm

from keras.preprocessing.image import ImageDataGenerator, array_to_img, img_to_array, load_img

from skimage.transform import resize

train_ids = next(os.walk(train_path+"masks"))[2]

# Get and resize train images and masks

X = np.zeros((len(train_ids), im_height, im_width, im_chan), dtype=np.float32)

y = np.zeros((len(train_ids), im_height, im_width, 1), dtype=np.float32)

X_feat = np.zeros((len(train_ids), n_features), dtype=np.float32)

print('Getting and resizing train images and masks ... ')

sys.stdout.flush()

for n, id_ in tqdm(enumerate(train_ids), total=len(train_ids)):
    path = train_path

    # Depth
    #X_feat[n] = depth.loc[id_.replace('.png', ''), 'z']

    # Load X
    img = load_img(path + 'images/' + id_, grayscale=True)
    x_img = img_to_array(img)
    x_img = resize(x_img, (128, 128, 1), mode='constant', preserve_range=True)

    # Create cumsum x
    x_center_mean = x_img[border:-border, border:-border].mean()
    x_csum = (np.float32(x_img)-x_center_mean).cumsum(axis=0)
    x_csum -= x_csum[border:-border, border:-border].mean()
    x_csum /= max(1e-3, x_csum[border:-border, border:-border].std())

    # Load Y
    mask = img_to_array(load_img(path + 'masks/' + id_, grayscale=True))
    mask = resize(mask, (128, 128, 1), mode='constant', preserve_range=True)

    # Save images
    X[n, ..., 0] = x_img.squeeze() / 255
    X[n, ..., 1] = x_csum.squeeze()
    y[n] = mask / 255

print('Done!')

!ls ./masks

!ls ./images

from sklearn.model_selection import train_test_split

X_train, X_valid, X_feat_train, X_feat_valid, y_train, y_valid = train_test_split(X, X_feat, y, test_size=0.15, random_state=42)

callbacks = [

EarlyStopping(patience=5, verbose=1),

ReduceLROnPlateau(patience=3, verbose=1),

ModelCheckpoint('model-tgs-salt-2.h5', verbose=1, save_best_only=True, save_weights_only=False)

]

results = model.fit({'img': X_train, 'feat': X_feat_train}, y_train, batch_size=16, epochs=50, callbacks=callbacks,

validation_data=({'img': X_valid, 'feat': X_feat_valid}, y_valid))

!ls

!unzip -q test.zip -d test | replace test/images/8cf16aa0f5.png? [y]es, [n]o, [A]ll, [N]one, [r]ename: N

| MIT | kaggle_tgs_salt_identification.ipynb | JacksonIsaac/colab_notebooks |
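
The `RepeatVector(8*8)` and `Reshape((8, 8, n_features))` pair in the model above only lines up with `p4` if the encoder output is 8x8. A minimal sketch of that arithmetic, assuming four 2x2 max-pooling steps on a 128x128 input as in the model definition (the helper name `pooled_sizes` is hypothetical and not part of the notebook):

```python
def pooled_sizes(size, n_pools, pool=2):
    """Return the spatial size after each of n_pools pooling steps."""
    sizes = []
    for _ in range(n_pools):
        size = size // pool  # each 2x2 max-pool halves height and width
        sizes.append(size)
    return sizes


sizes = pooled_sizes(128, 4)
print(sizes)  # [64, 32, 16, 8]
```

If `im_height` or `im_width` changes, the `8*8` constant and the `Reshape` target would need to change with it.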

Predict. Ref: https://www.kaggle.com/jesperdramsch/intro-to-seismic-salt-and-how-to-geophysics | path_test='./test/'

test_ids = next(os.walk(path_test+"images"))[2]

X_test = np.zeros((len(test_ids), im_height, im_width, im_chan), dtype=np.float32)  # float32, as for X: uint8 would truncate the scaled values to 0

X_test_feat = np.zeros((len(test_ids), n_features), dtype=np.float32)

sizes_test = []

print('Getting and resizing test images ... ')

sys.stdout.flush()

for n, id_ in tqdm(enumerate(test_ids), total=len(test_ids)):
    path = path_test
    img = load_img(path + 'images/' + id_, grayscale=True)
    x_img = img_to_array(img)

    # Record the original size before resizing, so predictions can be resized back later
    sizes_test.append([x_img.shape[0], x_img.shape[1]])

    x_img = resize(x_img, (128, 128, 1), mode='constant', preserve_range=True)

    # Create cumsum x
    x_center_mean = x_img[border:-border, border:-border].mean()
    x_csum = (np.float32(x_img)-x_center_mean).cumsum(axis=0)
    x_csum -= x_csum[border:-border, border:-border].mean()
    x_csum /= max(1e-3, x_csum[border:-border, border:-border].std())

    # Save images
    X_test[n, ..., 0] = x_img.squeeze() / 255
    X_test[n, ..., 1] = x_csum.squeeze()

    #img = load_img(path + '/images/' + id_)
    #x = img_to_array(img)[:,:,1]
    #x = resize(x, (128, 128, 1), mode='constant', preserve_range=True)
    #X_test[n] = x

print('Done!')

#test_mask = pd.read_csv('test.csv')

#file_list = list(train_mask['id'].values)

#dataset = TGSSaltDataSet(train_path, file_list)

X_train.shape

X_test.shape

!ls -al

preds_test = model.predict([X_test, X_test_feat], verbose=1)

preds_test_t = (preds_test > 0.5).astype(np.uint8)

from tqdm import tnrange

# Create list of upsampled test masks

preds_test_upsampled = []

for i in tnrange(len(preds_test)):
    preds_test_upsampled.append(resize(np.squeeze(preds_test[i]),
                                       (sizes_test[i][0], sizes_test[i][1]),
                                       mode='constant', preserve_range=True))

def RLenc(img, order='F', format=True):
    """
    img is binary mask image, shape (r,c)
    order is down-then-right, i.e. Fortran
    format determines if the order needs to be preformatted (according to submission rules) or not

    returns run length as an array or string (if format is True)
    """
    bytes = img.reshape(img.shape[0] * img.shape[1], order=order)
    runs = []  ## list of run lengths
    r = 0  ## the current run length
    pos = 1  ## count starts from 1 per WK
    for c in bytes:
        if (c == 0):
            if r != 0:
                runs.append((pos, r))
                pos += r
                r = 0
            pos += 1
        else:
            r += 1

    # if last run is unsaved (i.e. data ends with 1)
    if r != 0:
        runs.append((pos, r))
        pos += r
        r = 0

    if format:
        z = ''
        for rr in runs:
            z += '{} {} '.format(rr[0], rr[1])
        return z[:-1]
    else:
        return runs

def rle_encode(im):
    '''
    im: numpy array, 1 - mask, 0 - background
    Returns the run lengths as a formatted string
    '''
    pixels = im.flatten(order = 'F')
    pixels = np.concatenate([[0], pixels, [0]])
    runs = np.where(pixels[1:] != pixels[:-1])[0] + 1
    print(runs)
    runs = np.unique(runs)
    runs = np.sort(runs)
    print(runs)
    runs[1::2] -= runs[::2]
    print(runs)
    #print(type(runs))
    #runs = sorted(list(set(runs)))
    return ' '.join(str(x) for x in runs)

from tqdm import tqdm_notebook

#pred_dict = {fn[:-4]:RLenc(np.round(preds_test_upsampled[i])) for i,fn in tqdm_notebook(enumerate(test_ids))}

def downsample(img):  # not used
    if img_size_ori == img_size_target:
        return img
    return resize(img, (img_size_ori, img_size_ori), mode='constant', preserve_range=True)

threshold_best = 0.77

img_size_ori = 101

pred_dict = {idx: rle_encode(np.round(downsample(preds_test[i]) > threshold_best)) for i, idx in enumerate(tqdm_notebook(test_df.index.values))}

sub = pd.DataFrame.from_dict(pred_dict,orient='index')

sub.index.names = ['id']

sub.columns = ['rle_mask']

sub.to_csv('submission.csv')

sub.head()

!ls

!kaggle competitions submit -c tgs-salt-identification-challenge -f submission.csv -m "Re-Submission with sorted rle_mask" | Successfully submitted to TGS Salt Identification Challenge | MIT | kaggle_tgs_salt_identification.ipynb | JacksonIsaac/colab_notebooks |
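
The run-length encoding used for the submission above can be checked on a tiny mask. The following is a minimal sketch, not the notebook's own code: `rle_encode_demo` mirrors the vectorised `rle_encode` without the debug prints, and `rle_decode_demo` is a hypothetical helper added here only to confirm the round trip. Pixels are numbered from 1 in column-major (top-to-bottom, then left-to-right) order.

```python
import numpy as np


def rle_encode_demo(mask):
    """Encode a binary mask as 'start length start length ...' (1-indexed, column-major)."""
    pixels = mask.flatten(order='F')
    pixels = np.concatenate([[0], pixels, [0]])          # pad so every run has a start and an end
    runs = np.where(pixels[1:] != pixels[:-1])[0] + 1    # positions where the value changes
    runs[1::2] -= runs[::2]                              # turn end positions into run lengths
    return ' '.join(str(v) for v in runs)


def rle_decode_demo(rle, shape):
    """Hypothetical inverse of rle_encode_demo, used only to check the encoding."""
    flat = np.zeros(shape[0] * shape[1], dtype=np.uint8)
    tokens = [int(t) for t in rle.split()]
    for start, length in zip(tokens[0::2], tokens[1::2]):
        flat[start - 1:start - 1 + length] = 1
    return flat.reshape(shape, order='F')


mask = np.array([[0, 1],
                 [1, 1]], dtype=np.uint8)
encoded = rle_encode_demo(mask)
print(encoded)  # 2 3
```

Read column by column, the mask is `0, 1, 1, 1`, so the single run starts at pixel 2 and has length 3.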

Predict. Ref: https://www.kaggle.com/shaojiaxin/u-net-with-simple-resnet-blocks | callbacks = [

EarlyStopping(patience=5, verbose=1),

ReduceLROnPlateau(patience=3, verbose=1),

ModelCheckpoint('model-tgs-salt-new-1.h5', verbose=1, save_best_only=True, save_weights_only=True)

]

#results = model.fit({'img': [X_train, X_train], 'feat': X_feat_train}, y_train, batch_size=16, epochs=50, callbacks=callbacks,

# validation_data=({'img': [X_valid, X_valid], 'feat': X_feat_valid}, y_valid))

epochs = 50

batch_size = 16

history = model.fit(X_train, y_train,

validation_data=[X_valid, y_valid],

epochs=epochs,

batch_size=batch_size,

callbacks=callbacks)

def predict_result(model,x_test,img_size_target): # predict on both the original and the mirrored x
    x_test_reflect = np.array([np.fliplr(x) for x in x_test])
    preds_test1 = model.predict(x_test).reshape(-1, img_size_target, img_size_target)
    preds_test2_refect = model.predict(x_test_reflect).reshape(-1, img_size_target, img_size_target)
    # flip the mirrored predictions back before averaging
    preds_test2 = np.array([ np.fliplr(x) for x in preds_test2_refect] )
    preds_avg = (preds_test1 +preds_test2)/2
    return preds_avg

train_df = pd.read_csv("train.csv", index_col="id", usecols=[0])

depths_df = pd.read_csv("depths.csv", index_col="id")

train_df = train_df.join(depths_df)

test_df = depths_df[~depths_df.index.isin(train_df.index)]

img_size_target = 101

x_test = np.array([(np.array(load_img("./test/images/{}.png".format(idx), grayscale = True))) / 255 for idx in tqdm(test_df.index)]).reshape(-1, img_size_target, img_size_target, 1)

def rle_encode(im):
    '''
    im: numpy array, 1 - mask, 0 - background
    Returns the run lengths as a formatted string
    '''
    pixels = im.flatten(order = 'F')
    pixels = np.concatenate([[0], pixels, [0]])
    runs = np.where(pixels[1:] != pixels[:-1])[0] + 1
    runs[1::2] -= runs[::2]
    return ' '.join(str(x) for x in runs)

preds_test = predict_result(model,x_test,img_size_target)

| _____no_output_____ | MIT | kaggle_tgs_salt_identification.ipynb | JacksonIsaac/colab_notebooks |
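
The flip-and-average idea behind `predict_result` can be sketched without a trained network. In this illustration `predict_with_flip` and the echo "model" are hypothetical stand-ins, assuming a predictor that maps a batch of shape (N, H, W, 1) to the same shape:

```python
import numpy as np


def predict_with_flip(predict_fn, x):
    """Average predictions on a batch and on its left-right mirror.

    predict_fn stands in for model.predict and maps (N, H, W, 1) -> (N, H, W, 1).
    """
    x_flipped = x[:, :, ::-1, :]                       # mirror along the width axis
    p_direct = predict_fn(x)
    p_flipped = predict_fn(x_flipped)[:, :, ::-1, :]   # mirror the predictions back
    return (p_direct + p_flipped) / 2


# Stand-in "model" that simply echoes its input, for illustration only
x = np.arange(2 * 3 * 4, dtype=np.float32).reshape(2, 3, 4, 1)
avg = predict_with_flip(lambda batch: batch, x)
print(np.allclose(avg, x))  # True
```

For a real network the two predictions generally differ slightly, and averaging them is a cheap form of test-time augmentation.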

Save output to drive | from google.colab import drive

drive.mount('/content/gdrive')

!ls /content/gdrive/My\ Drive/kaggle_competitions

!cp model-tgs-salt-1.h5 /content/gdrive/My\ Drive/kaggle_competitions/tgs_salt/

!cp model-tgs-salt-2.h5 /content/gdrive/My\ Drive/kaggle_competitions/tgs_salt/

!cp submission.csv /content/gdrive/My\ Drive/kaggle_competitions/tgs_salt/submission.csv

| _____no_output_____ | MIT | kaggle_tgs_salt_identification.ipynb | JacksonIsaac/colab_notebooks |

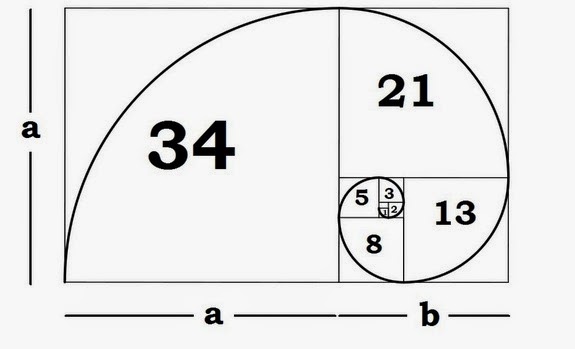

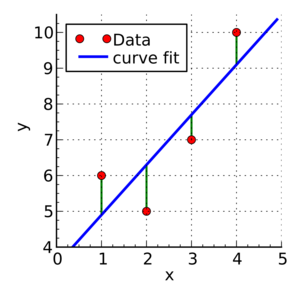

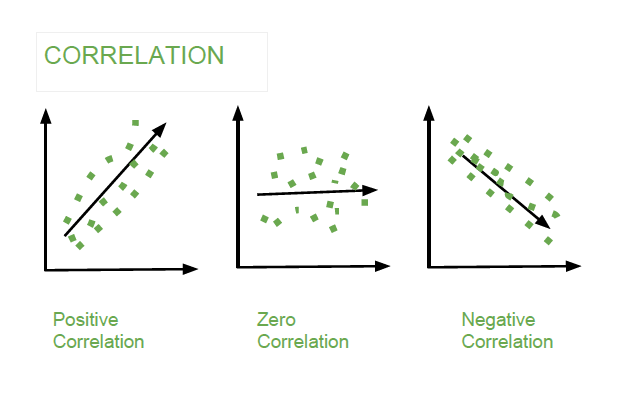

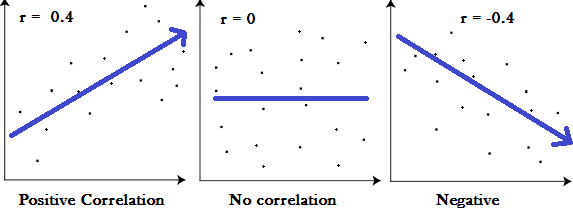

Laboratory 18: Linear Regression
Full name: R: HEX: Title of the notebook Date:

The human brain is amazing and mysterious in many ways. Have a look at these sequences. You, with the assistance of your brain, can guess the next item in each sequence, right?
- A, B, C, D, E, ____ ?
- 5, 10, 15, 20, 25, ____ ?
- 2, 4, 8, 16, 32, ____ ?
- 0, 1, 1, 2, 3, ____ ?
- 1, 11, 21, 1211, 111221, ____ ?

But how does our brain do this? How do we 'guess' or 'predict' the next step? Is it that there is only one possible option? Is it that we have the previous items? Or is it the relationship between the items? What if we have more than a single sequence? Maybe two sets of numbers? How can we predict the next "item" in a situation like that?

Blue Points? Red Line? Fit? Does it ring any bells?

---------

Problem 1 (5 pts)

The table below contains some experimental observations.

|Elapsed Time (s)|Speed (m/s)|
|---:|---:|
|0 |0|
|1.0 |3|
|2.0 |7|
|3.0 |12|
|4.0 |20|
|5.0 |30|
|6.0 |45.6|
|7.0 |60.3|
|8.0 |77.7|
|9.0 |97.3|
|10.0|121.1|

1. Plot the speed vs time (speed on y-axis, time on x-axis) using a scatter plot. Use blue markers.
2. Plot a red line on the scatterplot based on the linear model $f(x) = mx + b$
3. By trial-and-error find values of $m$ and $b$ that provide a good visual fit (i.e. makes the red line explain the blue markers).
4. Using this data model estimate the speed at $t = 15~\texttt{sec.}$

---------

Let's go over some important terminology:

Linear Regression: a basic predictive analytics technique that uses historical data to predict an output variable.

The Predictor variable (input): the variable(s) that help predict the value of the output variable. It is commonly referred to as X.

The Output variable: the variable that we want to predict. It is commonly referred to as Y.

To estimate Y using linear regression, we assume the equation: $Y_e = βX + α$, *where Yₑ is the estimated or predicted value of Y based on our linear equation.* Our goal is to find statistically significant values of the parameters α and β that minimise the difference between Y and Yₑ. If we are able to determine the optimum values of these two parameters, then we will have the line of best fit that we can use to predict the values of Y, given the value of X. So, how do we estimate α and β?

We can use a method called Ordinary Least Squares (OLS). The objective of the least squares method is to find values of α and β that minimise the sum of the squared differences between Y and Yₑ (the distance between the linear fit and the observed points). We will not go through the derivation here, but using calculus we can show that the values of the unknown parameters are as follows:

$β = \frac{\sum_{i=1}^{n}(X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n}(X_i - \bar{X})^2}$ and $α = \bar{Y} - β\bar{X}$

where X̄ is the mean of the X values and Ȳ is the mean of the Y values. β is simply the covariance of X and Y (Cov(X, Y)) divided by the variance of X (Var(X)).

Covariance: In probability theory and statistics, covariance is a measure of the joint variability of two random variables. If the greater values of one variable mainly correspond with the greater values of the other variable, and the same holds for the lesser values (i.e., the variables tend to show similar behavior), the covariance is positive. In the opposite case, when the greater values of one variable mainly correspond to the lesser values of the other (i.e., the variables tend to show opposite behavior), the covariance is negative. The sign of the covariance therefore shows the tendency in the linear relationship between the variables. The magnitude of the covariance is not easy to interpret because it is not normalized and hence depends on the magnitudes of the variables. The normalized version of the covariance, the correlation coefficient, however, shows by its magnitude the strength of the linear relation.

The Correlation Coefficient: Correlation coefficients are used in statistics to measure how strong a relationship is between two variables. There are several types of correlation coefficient, but the most popular is Pearson's. Pearson's correlation (also called Pearson's R) is a correlation coefficient commonly used in linear regression. Correlation coefficient formulas are used to find how strong a relationship is between data. The formula for Pearson's R is:

$r = \frac{\sum_{i=1}^{n}(X_i - \bar{X})(Y_i - \bar{Y})}{\sqrt{\sum_{i=1}^{n}(X_i - \bar{X})^2}\,\sqrt{\sum_{i=1}^{n}(Y_i - \bar{Y})^2}}$

The formula returns a value between -1 and 1, where:
- 1: A correlation coefficient of 1 means that for every positive increase in one variable, there is a positive increase of a fixed proportion in the other. For example, shoe sizes go up in (almost) perfect correlation with foot length.
- -1: A correlation coefficient of -1 means that for every positive increase in one variable, there is a negative decrease of a fixed proportion in the other. For example, the amount of gas in a tank decreases in (almost) perfect correlation with speed.
- 0: Zero means that for every increase, there isn't a consistent positive or negative change in the other variable. The two have no linear relationship.

Example 1: Let's have a look at Problem 1 from Exam II. We had a table of recorded times and speeds from some experimental observations:

|Elapsed Time (s)|Speed (m/s)|
|---:|---:|
|0 |0|
|1.0 |3|
|2.0 |7|
|3.0 |12|
|4.0 |20|
|5.0 |30|
|6.0 |45.6|
|7.0 |60.3|
|8.0 |77.7|
|9.0 |97.3|
|10.0|121.1|

First let's create a dataframe: | # Load the necessary packages

import numpy as np

import pandas as pd

import statistics

from matplotlib import pyplot as plt

# Create a dataframe:

time = [0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]

speed = [0, 3, 7, 12, 20, 30, 45.6, 60.3, 77.7, 97.3, 121.2]

data = pd.DataFrame({'Time':time, 'Speed':speed})

data | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

Now, let's explore the data: | data.describe()

time_var = statistics.variance(time)

speed_var = statistics.variance(speed)

print("Variance of recorded times is ",time_var)

print("Variance of recorded speeds is ",speed_var) | Variance of recorded times is 11.0

Variance of recorded speeds is 1697.7759999999998

| CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

Is there a relationship ( based on covariance, correlation) between time and speed? | # To find the covariance

data.cov()

# To find the correlation among the columns

# using pearson method

data.corr(method ='pearson') | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
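
As a cross-check on `data.cov()` and `data.corr()`, Pearson's r can also be computed by hand as the sample covariance divided by the product of the sample standard deviations. This is a small sketch using the same `time` and `speed` values as the dataframe above; the names `cov_xy`, `r_manual`, and `r_numpy` are introduced here for illustration.

```python
import numpy as np

time = np.array([0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0])
speed = np.array([0, 3, 7, 12, 20, 30, 45.6, 60.3, 77.7, 97.3, 121.2])

# Sample covariance (n - 1 in the denominator, matching pandas' default)
cov_xy = np.sum((time - time.mean()) * (speed - speed.mean())) / (len(time) - 1)

# Pearson's r: covariance normalised by both sample standard deviations
r_manual = cov_xy / (time.std(ddof=1) * speed.std(ddof=1))
r_numpy = np.corrcoef(time, speed)[0, 1]

print(cov_xy, r_manual, r_numpy)
```

The two values of r should agree to floating-point precision, and a value close to 1 indicates a strong positive linear association between time and speed.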

Let's do linear regression with primitive Python: To estimate "y" using the OLS method, we need to calculate "xmean" and "ymean", the covariance of X and y ("xycov"), and the variance of X ("xvar") before we can determine the values for alpha and beta. In our case, X is time and y is Speed. | # Calculate the mean of X and y

xmean = np.mean(time)

ymean = np.mean(speed)

# Calculate the terms needed for the numerator and denominator of beta

data['xycov'] = (data['Time'] - xmean) * (data['Speed'] - ymean)

data['xvar'] = (data['Time'] - xmean)**2

# Calculate beta and alpha

beta = data['xycov'].sum() / data['xvar'].sum()

alpha = ymean - (beta * xmean)

print(f'alpha = {alpha}')

print(f'beta = {beta}')

| alpha = -16.78636363636363

beta = 11.977272727272727

| CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
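
One way to gain confidence in the hand-computed `alpha` and `beta` is to compare them with a library fit. The sketch below repeats the closed-form OLS calculation with plain NumPy arrays and checks it against `np.polyfit` with degree 1, which solves the same least-squares problem; the variable names `slope` and `intercept` are introduced here for illustration.

```python
import numpy as np

time = np.array([0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0])
speed = np.array([0, 3, 7, 12, 20, 30, 45.6, 60.3, 77.7, 97.3, 121.2])

# Closed-form OLS estimates: beta = Sxy / Sxx, alpha = ymean - beta * xmean
beta = np.sum((time - time.mean()) * (speed - speed.mean())) / np.sum((time - time.mean()) ** 2)
alpha = speed.mean() - beta * time.mean()

# np.polyfit returns coefficients from the highest degree down: [slope, intercept]
slope, intercept = np.polyfit(time, speed, deg=1)

print(beta, alpha)
print(np.isclose(beta, slope), np.isclose(alpha, intercept))
```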

We now have an estimate for alpha and beta! Our model can be written as Yₑ = 11.977 X -16.786, and we can make predictions: | X = np.array(time)

ypred = alpha + beta * X

print(ypred) | [-16.78636364 -4.80909091 7.16818182 19.14545455 31.12272727

43.1 55.07727273 67.05454545 79.03181818 91.00909091

102.98636364]

| CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

Let’s plot our prediction ypred against the actual values of y, to get a better visual understanding of our model: | # Plot regression against actual data

plt.figure(figsize=(12, 6))

plt.plot(X, ypred, color="red") # regression line

plt.plot(time, speed, 'o', color="blue") # scatter plot showing actual data

plt.title('Actual vs Predicted')

plt.xlabel('Time (s)')

plt.ylabel('Speed (m/s)')

plt.show() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

The red line is our line of best fit, Yₑ = 11.977 X -16.786. We can see from this graph that there is a positive linear relationship between X and y. Using our model, we can predict y from any values of X! For example, if we had a value X = 20, we can predict that: | ypred_20 = alpha + beta * 20

print(ypred_20) | 222.7590909090909

| CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

Linear Regression with statsmodels: First, we use statsmodels’ ols function to initialise our simple linear regression model. This takes the formula y ~ X, where X is the predictor variable (Time) and y is the output variable (Speed). Then, we fit the model by calling the OLS object’s fit() method. | import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('Speed ~ Time', data=data)

model = model.fit() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

We no longer have to calculate alpha and beta ourselves as this method does it automatically for us! Calling model.params will show us the model’s parameters: | model.params | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

In the notation that we have been using, α is the intercept and β is the slope i.e. α =-16.786364 and β = 11.977273. | # Predict values

speed_pred = model.predict()

# Plot regression against actual data

plt.figure(figsize=(12, 6))

plt.plot(data['Time'], data['Speed'], 'o') # scatter plot showing actual data

plt.plot(data['Time'], speed_pred, 'r', linewidth=2) # regression line

plt.xlabel('Time (s)')

plt.ylabel('Speed (m/s)')

plt.title('model vs observed')

plt.show() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
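
A visual check can be complemented with numbers. The following sketch, which is an addition and not part of the original lab, computes the coefficient of determination R² and the residuals for the same time and speed data, using `np.polyfit` in place of statsmodels so that it runs on its own.

```python
import numpy as np

time = np.array([0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0])
speed = np.array([0, 3, 7, 12, 20, 30, 45.6, 60.3, 77.7, 97.3, 121.2])

slope, intercept = np.polyfit(time, speed, deg=1)
pred = intercept + slope * time

residuals = speed - pred                         # observed minus predicted
ss_res = np.sum(residuals ** 2)                  # unexplained variation
ss_tot = np.sum((speed - speed.mean()) ** 2)     # total variation around the mean
r_squared = 1 - ss_res / ss_tot

print(round(r_squared, 3))
print(residuals.round(1))
```

R² comes out at roughly 0.93, which sounds good, but the residuals are positive at both ends of the time range and negative in the middle. That systematic pattern suggests the speed grows faster than linearly, so the straight line should be used with caution, especially when extrapolating.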

How good do you feel about this predictive model? Will you trust it?

Example 2: Advertising and Sales! This is a classic regression problem. We have a dataset of the spending on TV, Radio, and Newspaper advertisements and the number of sales for a specific product. We are interested in exploring the relationship between these parameters and answering the following questions:
- Can TV advertising spending predict the number of sales for the product?
- Can Radio advertising spending predict the number of sales for the product?
- Can Newspaper advertising spending predict the number of sales for the product?
- Can we use the three of them to predict the number of sales for the product? (Multiple Linear Regression Model)
- Which parameter is a better predictor of the number of sales for the product? | # Import and display first rows of the advertising dataset

df = pd.read_csv('advertising.csv')

df.head()

# Describe the df

df.describe()

tv = np.array(df['TV'])

radio = np.array(df['Radio'])

newspaper = np.array(df['Newspaper'])

sales = np.array(df['Sales'])

# Get Variance and Covariance - What can we infer?

df.cov()

# Get Correlation Coefficient - What can we infer?

df.corr(method ='pearson')

# Answer the first question: Can TV advertising spending predict the number of sales for the product?

import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('Sales ~ TV', data=df)

model = model.fit()

print(model.params)

# Predict values

TV_pred = model.predict()

# Plot regression against actual data - What do we see?

plt.figure(figsize=(12, 6))

plt.plot(df['TV'], df['Sales'], 'o') # scatter plot showing actual data

plt.plot(df['TV'], TV_pred, 'r', linewidth=2) # regression line

plt.xlabel('TV advertising spending')

plt.ylabel('Sales')

plt.title('Predicting with TV spendings only')

plt.show()

# Answer the second question: Can Radio advertising spending predict the number of sales for the product?

import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('Sales ~ Radio', data=df)

model = model.fit()

print(model.params)

# Predict values

RADIO_pred = model.predict()

# Plot regression against actual data - What do we see?

plt.figure(figsize=(12, 6))

plt.plot(df['Radio'], df['Sales'], 'o') # scatter plot showing actual data

plt.plot(df['Radio'], RADIO_pred, 'r', linewidth=2) # regression line

plt.xlabel('Radio advertising spending')

plt.ylabel('Sales')

plt.title('Predicting with Radio spendings only')

plt.show()

# Answer the third question: Can Newspaper advertising spending predict the number of sales for the product?

import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('Sales ~ Newspaper', data=df)

model = model.fit()

print(model.params)

# Predict values

NP_pred = model.predict()

# Plot regression against actual data - What do we see?

plt.figure(figsize=(12, 6))

plt.plot(df['Newspaper'], df['Sales'], 'o') # scatter plot showing actual data

plt.plot(df['Newspaper'], NP_pred, 'r', linewidth=2) # regression line

plt.xlabel('Newspaper advertising spending')

plt.ylabel('Sales')

plt.title('Predicting with Newspaper spendings only')

plt.show()

# Answer the fourth question: Can we use the three of them to predict the number of sales for the product?

# This is a case of multiple linear regression model. This is simply a linear regression model with more than one predictor:

# and is modelled by: Yₑ = α + β₁X₁ + β₂X₂ + … + βₚXₚ , where p is the number of predictors.

# In this case: Sales = α + β1*TV + β2*Radio + β3*Newspaper

# Multiple Linear Regression with scikit-learn:

from sklearn.linear_model import LinearRegression

# Build linear regression model using TV,Radio and Newspaper as predictors

# Split data into predictors X and output Y

predictors = ['TV', 'Radio', 'Newspaper']

X = df[predictors]

y = df['Sales']

# Initialise and fit model

lm = LinearRegression()

model = lm.fit(X, y)

print(f'alpha = {model.intercept_}')

print(f'betas = {model.coef_}')

# Therefore, our model can be written as:

#Sales = 2.938 + 0.046*TV + 0.1885*Radio -0.001*Newspaper

# we can predict sales from any combination of TV and Radio and Newspaper advertising costs!

#For example, if we wanted to know how many sales we would make if we invested

# $300 in TV advertising and $200 in Radio advertising and $50 in Newspaper advertising

#all we have to do is plug in the values:

new_X = [[300, 200,50]]

print(model.predict(new_X))

# Answer the final question : Which parameter is a better predictor of the number of sales for the product?

# How can we answer that?

# WHAT CAN WE INFER FROM THE BETAs ?

| _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
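
On the last question, one caution is that raw betas depend on the units of each predictor, so they cannot be compared directly. A common approach is to standardise the predictors first. The sketch below uses synthetic data rather than `advertising.csv`; the variables `x_big` and `x_small` and the helper `fit_ols` are hypothetical and exist only to illustrate the effect.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
x_big = rng.normal(0, 100, n)    # predictor measured on a large scale
x_small = rng.normal(0, 1, n)    # predictor measured on a small scale
y = 0.05 * x_big + 2.0 * x_small + rng.normal(0, 0.5, n)


def fit_ols(X, y):
    """Least-squares fit with an intercept; returns only the slope coefficients."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef[1:]


X = np.column_stack([x_big, x_small])
raw_betas = fit_ols(X, y)

# Standardise each predictor to zero mean and unit standard deviation
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
std_betas = fit_ols(X_std, y)

print("raw betas:         ", raw_betas)
print("standardised betas:", std_betas)
```

In this synthetic case the raw beta of `x_small` is larger, yet after standardisation `x_big` has the larger coefficient, because a typical change in `x_big` moves `y` more. The same idea, together with the single-predictor fits above, can inform which advertising channel is the better predictor.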

*This notebook was inspired by several blog posts, including:*
- __"Introduction to Linear Regression in Python"__ by __Lorraine Li__, available at https://towardsdatascience.com/introduction-to-linear-regression-in-python-c12a072bedf0
- __"In Depth: Linear Regression"__, available at https://jakevdp.github.io/PythonDataScienceHandbook/05.06-linear-regression.html
- __"A friendly introduction to linear regression (using Python)"__, available at https://www.dataschool.io/linear-regression-in-python/

*Here are some great reads on linear regression:*
- __"Linear Regression in Python"__ by __Sadrach Pierre__, available at https://towardsdatascience.com/linear-regression-in-python-a1d8c13f3242
- __"Introduction to Linear Regression in Python"__, available at https://cmdlinetips.com/2019/09/introduction-to-linear-regression-in-python/
- __"Linear Regression in Python"__ by __Mirko Stojiljković__, available at https://realpython.com/linear-regression-in-python/

*Here are some great videos on linear regression:*
- __"StatQuest: Fitting a line to data, aka least squares, aka linear regression."__ by __StatQuest with Josh Starmer__, available at https://www.youtube.com/watch?v=PaFPbb66DxQ&list=PLblh5JKOoLUIzaEkCLIUxQFjPIlapw8nU
- __"Statistics 101: Linear Regression, The Very Basics"__ by __Brandon Foltz__, available at https://www.youtube.com/watch?v=ZkjP5RJLQF4
- __"How to Build a Linear Regression Model in Python | Part 1" and 2,3,4!__ by __Sigma Coding__, available at https://www.youtube.com/watch?v=MRm5sBfdBBQ

Exercise 1: In the "CarsDF.csv" file, you will find a dataset with information about cars and motorcycles including their age, kilometers driven (mileage), fuel economy, engine power, engine volume, and selling price. Follow the steps and answer the questions.
- Step1: Read the "CarsDF.csv" file as a dataframe. Explore the dataframe and in a markdown cell briefly describe it in your own words.
- Step2: Calculate and compare the correlation coefficient of the "selling price" with all the other parameters (except for "name", of course!). In a markdown cell, explain the results and state which parameters have the strongest and weakest relationship with the "selling price" of a vehicle.
- Step3: Use linear regression modeling in primitive Python and VISUALLY assess the quality of a linear fit with Age as the predictor and selling price as the outcome. Explain the result of this analysis in a markdown cell.
- Step4: Use linear regression modeling with statsmodels and VISUALLY assess the quality of a linear fit with fuel economy as the predictor and selling price as the outcome. Explain the result of this analysis in a markdown cell.
- Step5: Use linear regression modeling with statsmodels and VISUALLY assess the quality of a linear fit with engine volume as the predictor and selling price as the outcome. Explain the result of this analysis in a markdown cell.
- Step6: In a markdown cell, explain which of the three predictors in steps 3, 4, and 5 was a better predictor (resulted in a better fit) for selling price.
- Step7: Use multiple linear regression modeling with scikit-learn and use all the parameters (except for "name", of course!) to predict selling price. Then, use this model to predict the selling price of a car that has the following characteristics and decide whether this prediction is reliable in your opinion:
  - 2 years old
  - has gone 17000 km
  - has a fuel economy measure of 24.2 kmpl
  - has an engine power of 74 bhp
  - has an engine volume of 1260 CC | # Step1:

vdf = pd.read_csv('CarsDF.csv')

vdf.head()

vdf.describe() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

On Step1: [Double-Click to edit] | # Step2:

vdf.corr() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

On Step2: [Double-Click to edit] | #Step3:

# Calculate the mean of X and y

xmean = np.mean(vdf['Age'])

ymean = np.mean(vdf['selling_price'])

# Calculate the terms needed for the numerator and denominator of beta

vdf['xycov'] = (vdf['Age'] - xmean) * (vdf['selling_price'] - ymean)

vdf['xvar'] = (vdf['Age'] - xmean)**2

# Calculate beta and alpha

beta = vdf['xycov'].sum() / vdf['xvar'].sum()

alpha = ymean - (beta * xmean)

print(f'alpha = {alpha}')

print(f'beta = {beta}')

X = np.array(vdf['Age'])

Y = np.array(vdf['selling_price'])

ypred = alpha + beta * X

# Plot regression against actual data

plt.figure(figsize=(12, 6))

plt.plot(X, Y, 'o', color="blue") # scatter plot showing actual data

plt.plot(X, ypred, color="red") # regression line

plt.title('Actual vs Predicted')

plt.xlabel('Age')

plt.ylabel('selling price')

plt.show() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
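The visual check in Step 3 can be complemented with a number. The sketch below repeats the same closed-form estimates (beta as covariance over variance, alpha from the means) in plain Python and adds the coefficient of determination. The helper names `fit_line` and `r_squared` and the toy values are assumptions made for illustration.

```python
def fit_line(x, y):
    """Closed-form simple linear regression: returns (alpha, beta)."""
    n = len(x)
    xmean = sum(x) / n
    ymean = sum(y) / n
    sxy = sum((xi - xmean) * (yi - ymean) for xi, yi in zip(x, y))
    sxx = sum((xi - xmean) ** 2 for xi in x)
    beta = sxy / sxx
    alpha = ymean - beta * xmean
    return alpha, beta


def r_squared(x, y, alpha, beta):
    """Share of the variance in y explained by the fitted line."""
    ymean = sum(y) / len(y)
    ss_res = sum((yi - (alpha + beta * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - ymean) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Invented data that follows price = 11 - 2 * age exactly.
ages = [1, 2, 3, 4, 5]
prices = [9.0, 7.0, 5.0, 3.0, 1.0]

alpha, beta = fit_line(ages, prices)
fit_quality = r_squared(ages, prices, alpha, beta)
print(alpha, beta, fit_quality)
```

On real data the value will be below 1, and comparing it across the predictors of Steps 3 to 5 gives one way to back up the visual impression asked for in Step 6.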

On Step3: [Double-Click to edit] | # Step4:

import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('selling_price ~ FuelEconomy_kmpl', data=vdf)

model = model.fit()

model.params

# Predict values

FE_pred = model.predict()

# Plot regression against actual data

plt.figure(figsize=(12, 6))

plt.plot(vdf['FuelEconomy_kmpl'], vdf['selling_price'], 'o') # scatter plot showing actual data

plt.plot(vdf['FuelEconomy_kmpl'], FE_pred, 'r', linewidth=2) # regression line

plt.xlabel('FuelEconomy_kmpl')

plt.ylabel('selling price')

plt.title('model vs observed')

plt.show() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

On Step4: [Double-Click to edit] | # Step5:

import statsmodels.formula.api as smf

# Initialise and fit linear regression model using `statsmodels`

model = smf.ols('selling_price ~ engine_v', data=vdf)

model = model.fit()

model.params

# Predict values

EV_pred = model.predict()

# Plot regression against actual data

plt.figure(figsize=(12, 6))

plt.plot(vdf['engine_v'], vdf['selling_price'], 'o') # scatter plot showing actual data

plt.plot(vdf['engine_v'], EV_pred, 'r', linewidth=2) # regression line

plt.xlabel('engine_v')

plt.ylabel('selling price')

plt.title('model vs observed')

plt.show() | _____no_output_____ | CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |

On Step5: [Double-Click to edit] On Step6: [Double-Click to edit] | #Step7:

# Multiple Linear Regression with scikit-learn:

from sklearn.linear_model import LinearRegression

# Build linear regression model using age, km driven, fuel economy, engine power and engine volume as predictors

# Split data into predictors X and output Y

predictors = ['Age', 'km_driven', 'FuelEconomy_kmpl','engine_p','engine_v']

X = vdf[predictors]

y = vdf['selling_price']

# Initialise and fit model

lm = LinearRegression()

model = lm.fit(X, y)

print(f'alpha = {model.intercept_}')

print(f'betas = {model.coef_}')

new_X = [[2, 17000,24.2,74,1260]]

print(model.predict(new_X))

| [900102.89014124]

| CC0-1.0 | 1-Lessons/Lesson19/Lab19/.src/Lab19_WS.ipynb | dustykat/engr-1330-psuedo-course |
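Step 7 asks whether the prediction is reliable. One simple check, offered here as a sketch and not as the lab's intended answer, is whether the new car lies inside the range of the training data, since a linear model extrapolates blindly outside it. The helper `features_outside_training_range` and the training rows below are hypothetical.

```python
def features_outside_training_range(new_row, training_rows):
    """Return the names of features where new_row lies outside the observed min/max."""
    flagged = []
    for name, value in new_row.items():
        observed = [row[name] for row in training_rows]
        if value < min(observed) or value > max(observed):
            flagged.append(name)
    return flagged


# Hypothetical training rows; the real ranges would come from vdf[predictors].
training_rows = [
    {"Age": 5, "km_driven": 60000, "engine_v": 1200},
    {"Age": 8, "km_driven": 120000, "engine_v": 1500},
    {"Age": 3, "km_driven": 30000, "engine_v": 1000},
]
new_car = {"Age": 2, "km_driven": 17000, "engine_v": 1260}

flagged = features_outside_training_range(new_car, training_rows)
print(flagged)
```

Any flagged feature means the model is being asked about a region it never saw, which is a reason to treat the predicted price with caution.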

Import packages | import os

import sys

import time

from datetime import datetime

import GPUtil

import psutil

#######################

# run after two days

# time.sleep(172800)

#######################

os.environ["CUDA_VISIBLE_DEVICES"] = "0,1,2,3"

sys.path.append("../")

def gpu_free(max_gb):
    # Pick the first available GPU, ordered by memory usage
    gpu_id = GPUtil.getFirstAvailable(order="memory")
    GPU = GPUtil.getGPUs()[gpu_id[0]]
    GPU_load = GPU.load * 100  # fraction -> percent
    GPU_memoryUtil = GPU.memoryUtil * 100  # fraction -> percent
    GPU_memoryTotal = GPU.memoryTotal / 2.0 ** 10  # MB -> GB
    GPU_memoryUsed = GPU.memoryUsed / 2.0 ** 10  # MB -> GB
    GPU_memoryFree = GPU.memoryFree / 2.0 ** 10  # MB -> GB
    print(
        "-- total_GPU_memory: %.3fGB;init_GPU_memoryFree:%.3fGB init_GPU_load:%.3f%% GPU_memoryUtil:%d%% GPU_memoryUsed:%.3fGB"
        % (GPU_memoryTotal, GPU_memoryFree, GPU_load, GPU_memoryUtil, GPU_memoryUsed)
    )
    # True when the free GPU memory exceeds the max_gb threshold
    if GPU_memoryFree > max_gb:
        return True
    return False

def memery_free(max_gb):
    # Free main memory in GB
    available_memory = psutil.virtual_memory().free / 2.0 ** 30
    if available_memory > max_gb:
        return True
    return False

for item_fea_type in [

"random",

"cate",

"cate_word2vec",

"cate_bert",

"cate_one_hot",

"random_word2vec",

"random_bert",

"random_one_hot",

"random_bert_word2vec_one_hot",

"random_cate_word2vec",

"random_cate_bert",

"random_cate_one_hot",

"random_cate_bert_word2vec_one_hot",

]:

    while True:
        if gpu_free(4) and memery_free(10):
            os.environ["CUDA_VISIBLE_DEVICES"] = "0,1,2,3"
            # ID of the available GPU with the lowest memory usage
            gpu_id = GPUtil.getAvailable(order="memory", limit=4)[0]
            print("GPU memery and main memery availale, start a job")
            date_time = datetime.now().strftime("%Y_%m_%d_%H_%M_%S")
            command = f"CUDA_VISIBLE_DEVICES=0,1,2,3; /home/zm324/anaconda3/envs/beta_rec/bin/python run_tvbr.py --item_fea_type {item_fea_type} --device cuda:{gpu_id} >> ./logs/{date_time}_{item_fea_type}.log &"
            os.system(command)
            time.sleep(120)
            break
        else:
            print("GPU not availale, sleep for 10 min")
            time.sleep(600)
            continue | -- total_GPU_memory: 10.761GB;init_GPU_memoryFree:10.760GB init_GPU_load:0.000% GPU_memoryUtil:0% GPU_memoryUsed:0.001GB

GPU memery and main memery availale, start a job

-- total_GPU_memory: 10.761GB;init_GPU_memoryFree:10.757GB init_GPU_load:0.000% GPU_memoryUtil:0% GPU_memoryUsed:0.004GB

GPU memery and main memery availale, start a job

| MIT | demo_control_side_sep_16.ipynb | mengzaiqiao/TVBR |
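The scheduling loop above boils down to "poll until resources are free, then launch". A minimal sketch of that pattern as a reusable helper follows; `wait_until` and its parameters are hypothetical names introduced here, and the sleep function is injectable so the logic can be exercised without actually waiting.

```python
import time


def wait_until(is_ready, poll_seconds=600, max_polls=None, sleep=time.sleep):
    """Poll is_ready() until it returns True; give up after max_polls failed checks."""
    polls = 0
    while not is_ready():
        polls += 1
        if max_polls is not None and polls >= max_polls:
            return False
        sleep(poll_seconds)
    return True


# Simulated resource check: busy twice, then free.
states = iter([False, False, True])
waits = []
started = wait_until(lambda: next(states), poll_seconds=1, sleep=waits.append)
print(started, waits)
```

In the notebook's setting the predicate would be something like `lambda: gpu_free(4) and memery_free(10)`, and a `max_polls` limit keeps a job from waiting forever on a machine that never frees up.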

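Checking whether the files are scanned images or true pdfs | def is_image(file_path):
    # A scanned pdf has no text layer, so pdftotext extracts no text from it
    with open(file_path, "rb") as f:
        pdf = pdftotext.PDF(f)
        return not "".join(pdf).strip()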

print(is_image(filename)) | _____no_output_____ | FTL | tasks/extract_text/notebooks/text_preprocessing_jordi.ipynb | jordiplanascuchi/policy-data-analyzer |

Converting pdf to image files and improving quality | def get_image1(file_path):
    """Get images out of the pdf at file_path. Splits the pdf file into one PIL image per page.
    """
    return convert_from_path(file_path, 500)  # render at 500 dpi

# Performance tips according to pdf2image:

# Using an output folder is significantly faster if you are using an SSD. Otherwise i/o usually becomes the bottleneck.

# Using multiple threads can give you some gains but avoid more than 4 as this will cause i/o bottleneck (even on my NVMe SSD!).

pages = get_image1(filepaths[0])

display(pages[0]) | _____no_output_____ | FTL | tasks/extract_text/notebooks/text_preprocessing_jordi.ipynb | jordiplanascuchi/policy-data-analyzer |

What can we do here to improve image quality? It already seems pretty good! Evaluating extraction time from each method and saving text to disk | def export_ocr(text, file, extract, out=out_path):
    """ Export ocr output text using extract method to file at out
    """
    filename = f'{os.path.splitext(os.path.basename(file))[0]}_{extract}.txt'
    with open(os.path.join(out, filename), 'w') as the_file:
        the_file.write(text)

def wrap_pagenum(page_text, page_num):
    """ Wrap page_text with page_num tag
    """
    return f"<p n={page_num}>" + page_text + "</p>"

# pytesseract extraction

start_time = time.time()

for file in filepaths:
    pages = get_image1(file)
    text = ""
    for pageNum, imgBlob in enumerate(pages):
        page_text = pytesseract.image_to_string(imgBlob, lang="spa")
        text += wrap_pagenum(page_text, pageNum)
    export_ocr(text, file, "pytesseract") # write extracted text to disk

print("--- %s seconds ---" % (time.time() - start_time))

# tesserocr extraction

start_time = time.time()

for file in filepaths:
    pages = get_image1(file)
    text = ""
    for pageNum, imgBlob in enumerate(pages):
        page_text = tesserocr.image_to_text(imgBlob, lang="spa")
        text += wrap_pagenum(page_text, pageNum)
    export_ocr(text, file, "tesserocr") # write extracted text to disk

print("--- %s seconds ---" % (time.time() - start_time))

# tesserocr extraction using the PyTessBaseAPI

start_time = time.time()

for file in filepaths:
    pages = get_image1(file)
    text = ""
    with tesserocr.PyTessBaseAPI(lang="spa") as api:
        for pageNum, imgBlob in enumerate(pages):
            api.SetImage(imgBlob)
            page_text = api.GetUTF8Text()
            text += wrap_pagenum(page_text, pageNum)
    export_ocr(text, file, "tesserocr_pytess") # write extracted text to disk

print("--- %s seconds ---" % (time.time() - start_time)) | _____no_output_____ | FTL | tasks/extract_text/notebooks/text_preprocessing_jordi.ipynb | jordiplanascuchi/policy-data-analyzer |

pytesseract seems to provide the fastest extraction, and a look at the extracted text shows no apparent difference between the outputs of the tested methods. | # comparison between text extracted by the different methods

os.listdir(out_path)

# TODO: perform a more programmatic comparison between extracted texts | _____no_output_____ | FTL | tasks/extract_text/notebooks/text_preprocessing_jordi.ipynb | jordiplanascuchi/policy-data-analyzer |
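One possible way to compare the extracted texts programmatically is a pairwise similarity ratio from the standard library's `difflib`. This is only a sketch: `pairwise_similarity` is a hypothetical helper, and the sample strings are invented stand-ins for the contents of the exported `.txt` files.

```python
import difflib
from itertools import combinations


def pairwise_similarity(texts):
    """Map each pair of method names to the similarity ratio of their texts (1.0 = identical)."""
    return {
        (a, b): difflib.SequenceMatcher(None, texts[a], texts[b]).ratio()
        for a, b in combinations(sorted(texts), 2)
    }


# Invented sample outputs, keyed by extraction method.
sample = {
    "pytesseract": "Decreto ejecutivo 57",
    "tesserocr": "Decreto ejecutivo 57",
    "tesserocr_pytess": "Decreto ejecutivo 51",
}

scores = pairwise_similarity(sample)
for pair, ratio in scores.items():
    print(pair, round(ratio, 3))
```

A ratio of exactly 1.0 for every pair would support the impression that the three methods produce the same text; anything lower points to the files worth diffing by hand.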

Let's look at the extracted text | with open(os.path.join(out_path, 'Decreto_ejecutivo_57_pytesseract.txt')) as text:
    extracted_text = text.read()

extracted_text

# Replace \x0c (page break) by \n

# Match 1 or more occurrences of \n if preceded by one occurrence of \n OR

# Match 1 or more occurrences of \s (whitespace) if preceded by one occurrence of \n OR

# Match one occurrence of \n if it isn't followed by \n

# (raw string so that \s reaches the regex engine without an invalid-escape warning)

print(re.sub(r"(?<=\n)\n+|(?<=\n)\s+|\n(?!\n)", " ", extracted_text.replace("\x0c", "\n"))) | _____no_output_____ | FTL | tasks/extract_text/notebooks/text_preprocessing_jordi.ipynb | jordiplanascuchi/policy-data-analyzer |
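To see what the substitution above does, it can be run on a small invented string. In this sketch the `sample` text is an assumption standing in for real OCR output; it contains a wrapped line, a blank-line paragraph break, and a form feed.

```python
import re

# Hypothetical OCR output: a wrapped line, a paragraph break, and a page break (\x0c).
sample = "Linea uno\ncontinua\n\nParrafo dos\x0cPagina"

pattern = r"(?<=\n)\n+|(?<=\n)\s+|\n(?!\n)"
cleaned = re.sub(pattern, " ", sample.replace("\x0c", "\n"))
print(repr(cleaned))
```

Single line breaks inside a paragraph are joined with a space, while a run of blank lines keeps its first newline, so paragraph boundaries remain detectable in the cleaned text.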

CS109A Introduction to Data Science Standard Section 3: Multiple Linear Regression and Polynomial Regression **Harvard University****Fall 2019****Instructors**: Pavlos Protopapas, Kevin Rader, and Chris Tanner**Section Leaders**: Marios Mattheakis, Abhimanyu (Abhi) Vasishth, Robbert (Rob) Struyven | #RUN THIS CELL

import requests

from IPython.core.display import HTML

styles = requests.get("http://raw.githubusercontent.com/Harvard-IACS/2018-CS109A/master/content/styles/cs109.css").text

HTML(styles) | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

For this section, our goal is to get you familiarized with Multiple Linear Regression. We have learned how to model data with kNN Regression and Simple Linear Regression and our goal now is to dive deep into Linear Regression. Specifically, we will: - Load in the titanic dataset from seaborn - Learn a few ways to plot **distributions** of variables using seaborn - Learn about different **kinds of variables** including continuous, categorical and ordinal - Perform single and multiple linear regression - Learn about **interaction** terms - Understand how to **interpret coefficients** in linear regression - Look at **polynomial** regression - Understand the **assumptions** being made in a linear regression model - (Extra): look at some cool plots to raise your EDA game  | # Data and Stats packages

import numpy as np

import pandas as pd

# Visualization packages

import matplotlib.pyplot as plt

import seaborn as sns

sns.set() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Extending Linear Regression Working with the Titanic Dataset from Seaborn For our dataset, we'll be using the passenger list from the Titanic, which famously sank in 1912. Let's have a look at the data. Some descriptions of the data are at https://www.kaggle.com/c/titanic/data, and here's [how seaborn preprocessed it](https://github.com/mwaskom/seaborn-data/blob/master/process/titanic.py). The task is to build a regression model to **predict the fare**, based on different attributes. Let's keep a subset of the data, which includes the following variables: - age - sex - class - embark_town - alone - **fare** (the response variable) | # Load the dataset from seaborn

titanic = sns.load_dataset("titanic")

titanic.head()

# checking for null values

chosen_vars = ['age', 'sex', 'class', 'embark_town', 'alone', 'fare']

titanic = titanic[chosen_vars]

titanic.info() | <class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 6 columns):

age 714 non-null float64

sex 891 non-null object

class 891 non-null category

embark_town 889 non-null object

alone 891 non-null bool

fare 891 non-null float64

dtypes: bool(1), category(1), float64(2), object(2)

memory usage: 29.8+ KB

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

**Exercise**: check the datatypes of each column and display the statistics (min, max, mean and any others) for all the numerical columns of the dataset. | ## your code here

# %load 'solutions/sol1.py'

print(titanic.dtypes)

titanic.describe() | age float64

sex object

class category

embark_town object

alone bool

fare float64

dtype: object

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

**Exercise**: drop all the *rows* in the dataset that contain null values. Is this always a good idea? | ## your code here

# %load 'solutions/sol2.py'

titanic = titanic.dropna(axis=0)

titanic.info() | <class 'pandas.core.frame.DataFrame'>

Int64Index: 712 entries, 0 to 890

Data columns (total 6 columns):

age 712 non-null float64

sex 712 non-null object

class 712 non-null category

embark_town 712 non-null object

alone 712 non-null bool

fare 712 non-null float64

dtypes: bool(1), category(1), float64(2), object(2)

memory usage: 29.3+ KB

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Now let us visualize the response variable. A good visualization of the distribution of a variable will enable us to answer three kinds of questions: - What values are central or typical? (e.g., mean, median, modes) - What is the typical spread of values around those central values? (e.g., variance/stdev, skewness) - What are unusual or exceptional values? (e.g., outliers) | fig, ax = plt.subplots(1, 3, figsize=(24, 6))

ax = ax.ravel()

sns.distplot(titanic['fare'], ax=ax[0])

# use seaborn to draw distributions

ax[0].set_title('Seaborn distplot')

ax[0].set_ylabel('Normalized frequencies')

sns.violinplot(x='fare', data=titanic, ax=ax[1])

ax[1].set_title('Seaborn violin plot')

ax[1].set_ylabel('Frequencies')

sns.boxplot(x='fare', data=titanic, ax=ax[2])

ax[2].set_title('Seaborn box plot')

ax[2].set_ylabel('Frequencies')

fig.suptitle('Distribution of fare'); | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

How do we interpret these plots? Train-Test Split | from sklearn.model_selection import train_test_split

titanic_train, titanic_test = train_test_split(titanic, train_size=0.7, random_state=99)

titanic_train = titanic_train.copy()

titanic_test = titanic_test.copy()

print(titanic_train.shape, titanic_test.shape) | (498, 6) (214, 6)

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Simple one-variable OLS **Exercise**: You've done this before: make a simple model using the OLS package from the statsmodels library predicting **fare** using **age** using the training data. Name your model `model_1` and display the summary | from statsmodels.api import OLS

import statsmodels.api as sm

# Your code here

# %load 'solutions/sol3.py'

age_ca = sm.add_constant(titanic_train['age'])

model_1 = OLS(titanic_train['fare'], age_ca).fit()

model_1.summary() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Dealing with different kinds of variables In general, you should be able to distinguish between three kinds of variables: 1. Continuous variables: such as `fare` or `age`2. Categorical variables: such as `sex` or `alone`. There is no inherent ordering between the different values that these variables can take on. These are sometimes called nominal variables. Read more [here](https://stats.idre.ucla.edu/other/mult-pkg/whatstat/what-is-the-difference-between-categorical-ordinal-and-interval-variables/). 3. Ordinal variables: such as `class` (first > second > third). There is some inherent ordering of the values in the variables, but the values are not continuous either. *Note*: While there is some inherent ordering in `class`, we will be treating it like a categorical variable. | titanic_orig = titanic_train.copy() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Let us now examine the `sex` column and see the value counts. | titanic_train['sex'].value_counts() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

**Exercise**: Create a column `sex_male` that is 1 if the passenger is male, 0 if female. The value counts indicate that these are the two options in this particular dataset. Ensure that the datatype is `int`. | # your code here

# %load 'solutions/sol4.py'

# create a dummy variable: 1 if the passenger is male, 0 if female

titanic_train['sex_male'] = (titanic_train['sex'] == 'male').astype(int)

titanic_train['sex_male'].value_counts() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Do we need a `sex_female` column, or a `sex_others` column? Why or why not? Now, let us look at `class` in greater detail. | titanic_train['class_Second'] = (titanic_train['class'] == 'Second').astype(int)

titanic_train['class_Third'] = 1 * (titanic_train['class'] == 'Third') # just another way to do it

titanic_train.info()

# This function automates the above:

titanic_train_copy = pd.get_dummies(titanic_train, columns=['sex', 'class'], drop_first=True)

titanic_train_copy.head() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |
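To make the effect of `drop_first=True` concrete, here is a small sketch on an invented three-row frame. The `toy` data is an assumption, and `dtype=int` is added only so the dummies print as 0/1 regardless of the pandas version.

```python
import pandas as pd

# Invented rows covering both sexes and all three classes.
toy = pd.DataFrame({
    "sex": ["male", "female", "male"],
    "class": ["First", "Third", "Second"],
})

# One column per category, minus the first category of each variable.
dummies = pd.get_dummies(toy, columns=["sex", "class"], drop_first=True, dtype=int)
print(dummies)
```

The dropped categories (`female` and `First`) become the baseline: a row with zeros in every dummy column is a first-class female passenger, so a separate `sex_female` column would carry no extra information.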

Linear Regression with More Variables **Exercise**: Fit a linear regression including the new sex and class variables. Name this model `model_2`. Don't forget the constant! | # your code here

# %load 'solutions/sol5.py'

model_2 = sm.OLS(titanic_train['fare'],

sm.add_constant(titanic_train[['age', 'sex_male', 'class_Second', 'class_Third']])).fit()

model_2.summary() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Interpreting These Results 1. Which of the predictors do you think are important? Why? 2. All else equal, what does being male do to the fare? Going back to the example from class 3. What is the interpretation of $\beta_0$ and $\beta_1$? Exploring Interactions | sns.lmplot(x="age", y="fare", hue="sex", data=titanic_train, size=6) | /anaconda3/envs/109a/lib/python3.7/site-packages/seaborn/regression.py:546: UserWarning: The `size` paramter has been renamed to `height`; please update your code.

warnings.warn(msg, UserWarning)

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

The slopes seem to be different for male and female. What does that indicate? Let us now try to add an interaction effect into our model. | # It seemed like gender interacted with age and class. Can we put that in our model?

titanic_train['sex_male_X_age'] = titanic_train['age'] * titanic_train['sex_male']

model_3 = sm.OLS(

titanic_train['fare'],

sm.add_constant(titanic_train[['age', 'sex_male', 'class_Second', 'class_Third', 'sex_male_X_age']])

).fit()

model_3.summary() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |
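With an interaction term in the model, the slope on age is no longer a single number: it is the age coefficient for the baseline group and the age coefficient plus the interaction coefficient for the other group. The sketch below illustrates this on noise-free synthetic data; the generating coefficients are invented and have nothing to do with the Titanic fit.

```python
import numpy as np

# Synthetic data: four ages for each sex, with a known generating equation.
age = np.array([10.0, 20.0, 30.0, 40.0, 10.0, 20.0, 30.0, 40.0])
male = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
fare = 10 + 2 * age + 5 * male - 1.5 * age * male

# Design matrix: constant, age, male, and the age-by-male interaction.
X = np.column_stack([np.ones_like(age), age, male, age * male])
coef, *_ = np.linalg.lstsq(X, fare, rcond=None)
b0, b_age, b_male, b_inter = coef

slope_female = b_age            # baseline group (male = 0)
slope_male = b_age + b_inter    # interaction shifts the slope for male = 1
print(slope_female, slope_male)
```

This is why the `age` and `sex_male` coefficients can change noticeably once `sex_male_X_age` is added: each now describes the baseline group only.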

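**What happened to the `age` and `male` terms?** | # It seemed like gender interacted with age and class. Can we put that in our model?

# Note: despite their sex_male_X_class_* names, the two columns below multiply age (not sex_male) by the class dummies.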

titanic_train['sex_male_X_class_Second'] = titanic_train['age'] * titanic_train['class_Second']

titanic_train['sex_male_X_class_Third'] = titanic_train['age'] * titanic_train['class_Third']

model_4 = sm.OLS(

titanic_train['fare'],

sm.add_constant(titanic_train[['age', 'sex_male', 'class_Second', 'class_Third', 'sex_male_X_age',

'sex_male_X_class_Second', 'sex_male_X_class_Third']])

).fit()

model_4.summary() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Polynomial Regression  Perhaps we now believe that the fare also depends on the square of age. How would we include this term in our model? | fig, ax = plt.subplots(figsize=(12,6))

ax.plot(titanic_train['age'], titanic_train['fare'], 'o')

x = np.linspace(0,80,100)

ax.plot(x, x, '-', label=r'$y=x$')

ax.plot(x, 0.04*x**2, '-', label=r'$y=c x^2$')

ax.set_title('Plotting Age (x) vs Fare (y)')

ax.set_xlabel('Age (x)')

ax.set_ylabel('Fare (y)')

ax.legend(); | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

**Exercise**: Create a model that predicts fare from all the predictors in `model_4` + the square of age. Show the summary of this model. Call it `model_5`. Remember to use the training data, `titanic_train`. | # your code here

# %load 'solutions/sol6.py'

titanic_train['age^2'] = titanic_train['age'] **2

model_5 = sm.OLS(

titanic_train['fare'],

sm.add_constant(titanic_train[['age', 'sex_male', 'class_Second', 'class_Third', 'sex_male_X_age',

'sex_male_X_class_Second', 'sex_male_X_class_Third', 'age^2']])

).fit()

model_5.summary() | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Looking at All Our Models: Model Selection What has happened to the $R^2$ as we added more features? Does this mean that the model is better? (What if we kept adding more predictors and interaction terms?) **In general, how should we choose a model?** We will spend a lot more time on model selection and learn about ways to do so as the course progresses. | models = [model_1, model_2, model_3, model_4, model_5]

fig, ax = plt.subplots(figsize=(12,6))

ax.plot([model.df_model for model in models], [model.rsquared for model in models], 'x-')

ax.set_xlabel("Model degrees of freedom")

ax.set_title('Model degrees of freedom vs training $R^2$')

ax.set_ylabel("$R^2$"); | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |
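Training $R^2$ cannot decrease when predictors are added to an ordinary least squares model, so it cannot be used on its own to choose between these models. One common correction, shown here as a sketch and not as the method this course settles on, is the adjusted $R^2$, which penalizes the number of predictors. The function name and the example values are assumptions; 498 is the training-set size printed earlier.

```python
def adjusted_r_squared(r_squared, n_obs, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1)."""
    return 1 - (1 - r_squared) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Invented R^2 values: a tiny gain in R^2 from seven extra predictors.
small_model = adjusted_r_squared(0.400, n_obs=498, n_predictors=1)
large_model = adjusted_r_squared(0.401, n_obs=498, n_predictors=8)
print(small_model, large_model)
```

In this invented case the larger model has the higher raw $R^2$ but the lower adjusted value, which is the kind of trade-off the question above is pointing at.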

**What about the test data?** We added a lot of columns to our training data and must add the same to our test data in order to calculate $R^2$ scores. | # Added features for model 1

# Nothing new to be added

# Added features for model 2

titanic_test = pd.get_dummies(titanic_test, columns=['sex', 'class'], drop_first=True)

# Added features for model 3

titanic_test['sex_male_X_age'] = titanic_test['age'] * titanic_test['sex_male']

# Added features for model 4

titanic_test['sex_male_X_class_Second'] = titanic_test['age'] * titanic_test['class_Second']

titanic_test['sex_male_X_class_Third'] = titanic_test['age'] * titanic_test['class_Third']

# Added features for model 5

titanic_test['age^2'] = titanic_test['age'] **2 | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

**Calculating R^2 scores** | from sklearn.metrics import r2_score

r2_scores = []

y_preds = []

y_true = titanic_test['fare']

# model 1

y_preds.append(model_1.predict(sm.add_constant(titanic_test['age'])))

# model 2

y_preds.append(model_2.predict(sm.add_constant(titanic_test[['age', 'sex_male', 'class_Second', 'class_Third']])))

# model 3

y_preds.append(model_3.predict(sm.add_constant(titanic_test[['age', 'sex_male', 'class_Second', 'class_Third',

'sex_male_X_age']])))

# model 4

y_preds.append(model_4.predict(sm.add_constant(titanic_test[['age', 'sex_male', 'class_Second', 'class_Third',

'sex_male_X_age', 'sex_male_X_class_Second',

'sex_male_X_class_Third']])))

# model 5

y_preds.append(model_5.predict(sm.add_constant(titanic_test[['age', 'sex_male', 'class_Second',

'class_Third', 'sex_male_X_age',

'sex_male_X_class_Second',

'sex_male_X_class_Third', 'age^2']])))

for y_pred in y_preds:

r2_scores.append(r2_score(y_true, y_pred))

models = [model_1, model_2, model_3, model_4, model_5]

fig, ax = plt.subplots(figsize=(12,6))

ax.plot([model.df_model for model in models], r2_scores, 'x-')

ax.set_xlabel("Model degrees of freedom")

ax.set_title('Model degrees of freedom vs test $R^2$')

ax.set_ylabel("$R^2$"); | /anaconda3/envs/109a/lib/python3.7/site-packages/numpy/core/fromnumeric.py:2389: FutureWarning: Method .ptp is deprecated and will be removed in a future version. Use numpy.ptp instead.

return ptp(axis=axis, out=out, **kwargs)

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Regression Assumptions. Should We Even Regress Linearly?  **Question**: What are the assumptions of a linear regression model? We find that the answer to this question can be found on closer examination of $\epsilon$. What is $\epsilon$? It is assumed that $\epsilon$ is normally distributed with a mean of 0 and variance $\sigma^2$. But what does this tell us? 1. Assumption 1: Constant variance of $\epsilon$ errors. This means that if we plot our **residuals**, which are the differences between the true $Y$ and our predicted $\hat{Y}$, they should look like they have constant variance and a mean of 0. We will show this in our plots. 2. Assumption 2: Independence of $\epsilon$ errors. This again comes from the distribution of $\epsilon$ that we decide beforehand. 3. Assumption 3: Linearity. This is an implicit assumption as we claim that Y can be modeled through a linear combination of the predictors. **Important Note:** Even though our predictors, for instance $X_2$, can be created by squaring or cubing another variable, we still use them in a linear equation as shown above, which is why polynomial regression is still a linear model. 4. Assumption 4: Normality. We assume that the $\epsilon$ is normally distributed, and we can show this in a histogram of the residuals. **Exercise**: Calculate the residuals for model 5, our most recent model. Optionally, plot and histogram these residuals and check the assumptions of the model. | # your code here

# %load 'solutions/sol7.py'

predictors = sm.add_constant(titanic_train[['age', 'sex_male', 'class_Second', 'class_Third', 'sex_male_X_age',

'sex_male_X_class_Second', 'sex_male_X_class_Third', 'age^2']])

y_hat = model_5.predict(predictors)

residuals = titanic_train['fare'] - y_hat

# plotting

fig, ax = plt.subplots(ncols=2, figsize=(16,5))

ax = ax.ravel()

ax[0].set_title('Plot of Residuals')

ax[0].scatter(y_hat, residuals, alpha=0.2)

ax[0].set_xlabel(r'$\hat{y}$')

ax[0].set_ylabel('residuals')

ax[1].set_title('Histogram of Residuals')

ax[1].hist(residuals, alpha=0.7)

ax[1].set_xlabel('residuals')

ax[1].set_ylabel('frequency');

# Mean of residuals

print('Mean of residuals: {}'.format(np.mean(residuals))) | Mean of residuals: 4.784570776163707e-13

| MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |
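A caveat when reading the mean of the residuals: for a least squares fit that includes an intercept, the training residuals average to zero by construction, so a value such as 4.78e-13 does not by itself support any of the assumptions. The sketch below, on invented data, fits a straight line to a clearly curved relationship and still gets a zero mean; the pattern in the residuals is what reveals the problem.

```python
# Invented data with a purely quadratic relationship: y = x^2.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [xi ** 2 for xi in x]

# Closed-form simple linear regression with an intercept.
n = len(x)
xmean = sum(x) / n
ymean = sum(y) / n
beta = sum((xi - xmean) * (yi - ymean) for xi, yi in zip(x, y)) / sum(
    (xi - xmean) ** 2 for xi in x
)
alpha = ymean - beta * xmean

residuals = [yi - (alpha + beta * xi) for xi, yi in zip(x, y)]
residual_mean = sum(residuals) / n
print(residual_mean, residuals)
```

The residuals are positive at both ends and negative in the middle, a U shape that signals a violated linearity assumption even though their mean is zero. This is why the residual plot and histogram carry more information than the mean.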

**What can you say about the assumptions of the model?** ---------------- End of Standard Section--------------- Extra: Visual exploration of predictors' correlations The dataset for this problem contains 10 simulated predictors and a response variable. | # read in the data

data = pd.read_csv('../data/dataset3.txt')

data.head()

# this effect can be replicated using the scatter_matrix function in pandas plotting

sns.pairplot(data); | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |

Predictors x1, x2, x3 seem to be perfectly correlated while predictors x4, x5, x6, x7 seem correlated. | data.corr()

sns.heatmap(data.corr()) | _____no_output_____ | MIT | content/sections/section3/notebook/cs109a_section_3.ipynb | lingcog/2019-CS109A |
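Besides reading the pair plot and heatmap by eye, the (near-)perfectly correlated pairs can be listed directly from the correlation matrix. This is a sketch on invented arrays, not on `dataset3.txt`; the 0.999 cutoff is an arbitrary choice for illustration.

```python
import numpy as np

# Invented predictors: x2 is an exact linear function of x1, x3 is only loosely related.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
x2 = 2 * x1 + 1
x3 = np.array([2.0, 1.0, 4.0, 3.0, 6.0])
names = ["x1", "x2", "x3"]

# Rows are variables, so corrcoef returns a 3x3 correlation matrix.
corr = np.corrcoef(np.vstack([x1, x2, x3]))

# Report each pair once (upper triangle) when |correlation| exceeds the cutoff.
threshold = 0.999
pairs = [
    (names[i], names[j])
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > threshold
]
print(pairs)
```

Pairs flagged this way are redundant in a linear regression: including both makes the individual coefficients unstable or not identifiable.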

Count all the words | wordcounter = Counter({})

words_per_video = []

for ann_idx, ann_file in enumerate(all_annotations):
    # Read the annotation file and split it into whitespace-separated words
    with open(ann_file, "r") as file:
        words = file.read().split()
    current_wordcounter = Counter(words)
    wordcounter += current_wordcounter
    words_per_video.append(len(words))

| _____no_output_____ | MIT | Get Stats.ipynb | jrterven/lip_reading_dataset |

Some stats | print("Number of words:", len(wordcounter))

print("10 most common words:")

print(wordcounter.most_common(10))

print("Max words in a video:", max(words_per_video))

print("Min words in a video:", min(words_per_video))

words_per_video_counter = Counter(words_per_video)

print(words_per_video_counter) | Counter({11: 762, 12: 746, 9: 662, 10: 650, 13: 643, 8: 602, 5: 601, 4: 592, 7: 570, 6: 549, 14: 524, 3: 513, 2: 438, 15: 380, 1: 329, 16: 267, 17: 179, 18: 92, 19: 35, 20: 21, 21: 15, 22: 8, 23: 2, 25: 1, 24: 1})

| MIT | Get Stats.ipynb | jrterven/lip_reading_dataset |

hp tuning | # LogisticRegression, L1

logreg = LogisticRegression(penalty='l1',solver='saga',random_state=0,max_iter=10000)

grid = {'C': np.logspace(-5, 5, 11)}

#predefined splits

#gs = GridSearchCV(logreg, grid, cv=ps.split(),scoring='accuracy')

gs = GridSearchCV(logreg, grid, cv=ps.split(),scoring=['roc_auc','average_precision'],refit='roc_auc')

gs.fit(all_cols[0], all_cols[1])

print(gs.best_params_)

print(gs.best_score_) #best cv score

df_gridsearch = pd.DataFrame(gs.cv_results_)

df_gridsearch.to_csv('model_hp_results/guideonly_gene20_075f_classi_LogisticRegression_L1_hp.csv')

# LogisticRegression, L2

logreg = LogisticRegression(penalty='l2',solver='saga',random_state=0,max_iter=10000)

grid = {'C': np.logspace(-5, 5, 11)}

#predefined splits

gs = GridSearchCV(logreg, grid, cv=ps.split(),scoring=['roc_auc','average_precision'],refit='roc_auc')

gs.fit(all_cols[0], all_cols[1])

print(gs.best_params_)

print(gs.best_score_) #best cv score

df_gridsearch = pd.DataFrame(gs.cv_results_)

df_gridsearch.to_csv('model_hp_results/guideonly_gene20_075f_classi_LogisticRegression_L2_hp.csv')

# LogisticRegression, elasticnet

logreg = LogisticRegression(penalty='elasticnet',solver='saga',random_state=0,max_iter=10000)

grid = {'C': np.logspace(-4, 4, 9),'l1_ratio':np.linspace(0.1, 1, num=10)}

gs = GridSearchCV(logreg, grid, cv=ps.split(),scoring=['roc_auc','average_precision'],refit='roc_auc')

gs.fit(all_cols[0], all_cols[1])

print(gs.best_params_)

print(gs.best_score_) #best cv score

df_gridsearch = pd.DataFrame(gs.cv_results_)

df_gridsearch.to_csv('model_hp_results/guideonly_gene20_075f_classi_LogisticRegression_elasticnet_hp.csv')

# https://www.programcreek.com/python/example/91158/sklearn.model_selection.GroupKFold

#random forest

clf = RandomForestClassifier(random_state=0)

grid = {'n_estimators':[100,200,400,800,1000,1200,1500],'max_features':['auto','sqrt','log2']}

gs = GridSearchCV(clf, grid, cv=GroupKFold(n_splits=5))

gs.fit(all_cols[0], all_cols[1], groups=groups)

#GradientBoostingClassifier

gb = ensemble.GradientBoostingClassifier(random_state=0)

grid = {'learning_rate':np.logspace(-2, 0, 3),'n_estimators':[100,200,400,800,1000,1200,1500],'max_depth':[2,3,4,8],'max_features':['auto','sqrt','log2']}

gs = GridSearchCV(gb, grid, cv=GroupKFold(n_splits=5))

gs.fit(all_cols[0], all_cols[1], groups=groups)

print(gs.best_score_) #best cv score

print(gs.best_params_)

df_gridsearch = pd.DataFrame(gs.cv_results_)

df_gridsearch.to_csv('linearmodel_hp_results/classi_gb_hp.csv') | _____no_output_____ | MIT | models/Linear_ensemble/hyperparameter tuning/linear model_new_classification-seq only.ipynb | jingyi7777/CasRx_guide_efficiency |

Test models | def classification_analysis(model_name, split, y_pred, y_true):
    test_df = pd.DataFrame(list(zip(list(y_pred), list(y_true))),
                           columns=['predicted_value', 'true_binary_label'])
    thres_list = [0.8, 0.9, 0.95]
    tp_thres = []
    #print('thres_stats')
    # For each threshold, compute the fraction of true positives among predictions above it
    for thres in thres_list:
        df_pre_good = test_df[test_df['predicted_value'] > thres]
        true_good_label = df_pre_good['true_binary_label'].values
        num_real_gg = np.count_nonzero(true_good_label)
        if len(true_good_label) > 0:
            gg_ratio = num_real_gg / len(true_good_label)
            tp_thres.append(gg_ratio)
            #print('true good guide percent '+str(gg_ratio))
        else:
            tp_thres.append('na')
    outputs = np.array(y_pred)
    labels = np.array(y_true)
    #plt.clf()
    #fig.suptitle('AUC and PRC')
    score = roc_auc_score(labels, outputs)
    fpr, tpr, _ = roc_curve(labels, outputs)
    #print('AUROC '+str(score))
    average_precision = average_precision_score(labels, outputs)
    precision, recall, thres_prc = precision_recall_curve(labels, outputs)
    #print('AUPRC '+str(average_precision))
    #plt.savefig(fname='results/linear_models/'+str(model_name)+'precision-recall_'+str(split)+'.png',dpi=600,bbox_inches='tight')
    return score, average_precision, tp_thres

#LogisticRegression, little regularization

logreg = LogisticRegression(penalty='l1',solver='saga',random_state=0,max_iter=10000,C=100000000)

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    logreg.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    #pred = logreg.predict(xt)
    pred = logreg.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('LogisticRegression-L1', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

print('tp_80_mean: '+str(tp_80_mean))

print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

print('tp_90_mean: '+str(tp_90_mean))

print('tp_90_sd: '+str(tp_90_sd))

# LogisticRegression, L1

logreg = LogisticRegression(penalty='l1',solver='saga',random_state=0,max_iter=10000,C=0.1)

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    logreg.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    #pred = logreg.predict(xt)
    pred = logreg.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('LogisticRegression-L1', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

print('tp_80_mean: '+str(tp_80_mean))

print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

print('tp_90_mean: '+str(tp_90_mean))

print('tp_90_sd: '+str(tp_90_sd))

# LogisticRegression, L2

logreg = LogisticRegression(penalty='l2',solver='saga',random_state=0,max_iter=10000,C=0.01)

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    logreg.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    #pred = logreg.predict(xt)
    pred = logreg.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('LogisticRegression-L2', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

print('tp_80_mean: '+str(tp_80_mean))

print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

print('tp_90_mean: '+str(tp_90_mean))

print('tp_90_sd: '+str(tp_90_sd))

# LogisticRegression, elasticnet

logreg = LogisticRegression(penalty='elasticnet',solver='saga',random_state=0,max_iter=10000,l1_ratio=0.50,C=0.1)

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    logreg.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    #pred = logreg.predict(xt)
    pred = logreg.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('LogisticRegression-elasticnet', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

print('tp_80_mean: '+str(tp_80_mean))

print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

print('tp_90_mean: '+str(tp_90_mean))

print('tp_90_sd: '+str(tp_90_sd))

#SVM, linear

clf = svm.SVC(kernel='linear',probability=True,random_state=0,C=0.001)

#clf = LinearSVC(dual= False, random_state=0, max_iter=10000,C=1,penalty='l2')

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    clf.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    pred = clf.predict_proba(xt)
    pred = pred[:,1]
    #pred = clf.predict(xt)
    auroc,auprc,tp_thres = classification_analysis('svm', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

#print('tp_80_mean: '+str(tp_80_mean))

#print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

#print('tp_90_mean: '+str(tp_90_mean))

#print('tp_90_sd: '+str(tp_90_sd))

# random forest

#clf = RandomForestClassifier(n_estimators=32,min_samples_split=2, min_samples_leaf=2, max_features='auto',random_state=0)

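# Note: max_features='auto' was deprecated in scikit-learn 1.1 and removed in 1.3;
# for classifiers it behaved like 'sqrt', so newer versions need max_features='sqrt'.
clf = RandomForestClassifier(n_estimators=1500,max_features='auto',random_state=0)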

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    #tr, val, te = create_gene_splits_filter1_kfold(dataframe['gene'].values, all_cols, 9, args.split)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    clf.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    #pred = logreg.predict(xt)
    pred = clf.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('random forest', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))

print('auroc_sd: '+str(auroc_sd))

auprc_mean = statistics.mean(auprc_l)

auprc_sd = statistics.stdev(auprc_l)

print('auprc_mean: '+str(auprc_mean))

print('auprc_sd: '+str(auprc_sd))

tp_80_mean = statistics.mean(tp_80)

tp_80_sd = statistics.stdev(tp_80)

print('tp_80_mean: '+str(tp_80_mean))

print('tp_80_sd: '+str(tp_80_sd))

tp_90_mean = statistics.mean(tp_90)

tp_90_sd = statistics.stdev(tp_90)

print('tp_90_mean: '+str(tp_90_mean))

print('tp_90_sd: '+str(tp_90_sd))

#GradientBoostingClassifier

clf = ensemble.GradientBoostingClassifier(random_state=0, max_depth=4,
                                          max_features='auto', n_estimators=1500)

auroc_l = []

auprc_l = []

tp_80 = []

tp_90 = []

#for s in range(11):

for s in range(9):
    #tr, val, te = create_gene_splits_kfold(dataframe['gene'].values, all_cols, 11, s)
    #tr, val, te = create_gene_splits_filter1_kfold(dataframe['gene'].values, all_cols, 9, args.split)
    tr, te = create_gene_splits_filter1_kfold_noval(dataframe['gene'].values, all_cols, 9, s)
    # training input and output
    d_input = tr[0]
    d_output = tr[1]
    clf.fit(d_input, d_output) #fit models
    #test set
    xt = te[0]
    pred = clf.predict_proba(xt)
    pred = pred[:,1]
    auroc,auprc,tp_thres = classification_analysis('GradientBoostingClassifier_hpnew', s,pred,te[1])
    auroc_l.append(auroc)
    auprc_l.append(auprc)
    if tp_thres[0]!= 'na':
        tp_80.append(tp_thres[0])
    if tp_thres[1]!= 'na':
        tp_90.append(tp_thres[1])

auroc_mean = statistics.mean(auroc_l)

auroc_sd = statistics.stdev(auroc_l)

print('auroc_mean: '+str(auroc_mean))