| markdown | code | output | license | path | repo_name |
|---|---|---|---|---|---|

Hydrogen atom \begin{equation}\label{eq1}-\frac{\hbar^2}{2 \mu} \left[ \frac{1}{r^2} \frac{\partial }{\partial r} \left( r^2 \frac{ \partial \psi}{\partial r}\right) + \frac{1}{r^2 \sin \theta} \frac{\partial }{\partial \theta} \left( \sin \theta \frac{\partial \psi}{\partial \theta}\right) + \frac{1}{r^2 \sin^2 \theta} \frac{\partial^2 \psi}{\partial \phi^2} \right] - \frac{e^2}{ 4 \pi \epsilon_0 r} \psi= E \psi\end{equation} \begin{equation}\label{eqsol1}\psi_{n\ell m}(r,\theta,\phi) = \sqrt {{\left ( \frac{2}{n a^*_0} \right )}^3 \frac{(n-\ell-1)!}{2n(n+\ell)!}} e^{- \rho / 2} \rho^{\ell} L_{n-\ell-1}^{2\ell+1}(\rho) Y_{\ell}^{m}(\theta, \phi ) \end{equation} with $\rho = 2r/(n a^*_0)$. | import k3d

from ipywidgets import interact, FloatSlider

import numpy as np

import scipy.special


r = lambda x,y,z: np.sqrt(x**2+y**2+z**2)

theta = lambda x,y,z: np.arccos(z/r(x,y,z))

phi = lambda x,y,z: np.arctan2(y,x)

a0 = 1.

R = lambda r,n,l: (2*r/(n*a0))**l * np.exp(-r/n/a0) * scipy.special.genlaguerre(n-l-1,2*l+1)(2*r/n/a0)

WF = lambda r,theta,phi,n,l,m: R(r,n,l) * scipy.special.sph_harm(m,l,phi,theta)

absWF = lambda r,theta,phi,n,l,m: abs(WF(r,theta,phi,n,l,m)).astype(np.float32)**2

N = 50j

a = 30.0

x,y,z = np.ogrid[-a:a:N,-a:a:N,-a:a:N]

x = x.astype(np.float32)

y = y.astype(np.float32)

z = z.astype(np.float32)

orbital = WF(r(x,y,z),theta(x,y,z),phi(x,y,z),4,1,0).real.astype(np.float32) # 4p

plt_vol = k3d.volume(orbital)

plt_label = k3d.text2d(r'n=4\; l=1\; m=0',(0.,0.))  # label matches the 4p orbital computed above

plot = k3d.plot()

plot += plt_vol

plot += plt_label

plt_vol.opacity_function = [0. , 0. , 0.21327923, 0.98025 , 0.32439035,

0. , 0.5 , 0. , 0.67560965, 0. ,

0.74537706, 0.9915 , 1. , 0. ]

plt_vol.color_map = k3d.colormaps.paraview_color_maps.Cool_to_Warm_Extended

plt_vol.color_range = (-0.5,0.5)

plot.display() | _____no_output_____ | MIT | atomic_orbitals_wave_function.ipynb | OpenDreamKit/k3d_demo |

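Animation: a single wave function is sent at a time | E = 4  # E plays the role of the principal quantum number n
for l in range(E):
    for m in range(-l, l + 1):
        psi2 = WF(r(x,y,z),theta(x,y,z),phi(x,y,z),E,l,m).real.astype(np.float32)
        plt_vol.volume = psi2/np.max(np.abs(psi2))  # scale to [-1, 1], as in the time-series cell
        plt_label.text = r'n=%d \quad l=%d \quad m=%d'%(E,l,m)  # raw string avoids the invalid \q escape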

| _____no_output_____ | MIT | atomic_orbitals_wave_function.ipynb | OpenDreamKit/k3d_demo |
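
The double loop in the animation cell visits every allowed (l, m) pair for a fixed n. As a minimal stand-alone sketch (plain Python, no k3d; the helper name `quantum_numbers` is ours and not part of the notebook), the same enumeration shows that shell n contains n² states, which is also the number of frames the loop sends:

```python
def quantum_numbers(n):
    """Return all (l, m) pairs allowed for principal quantum number n."""
    return [(l, m) for l in range(n) for m in range(-l, l + 1)]


states = quantum_numbers(4)
print(len(states))   # one frame per (l, m) pair
print(states[:4])    # the s state followed by the three p states
```

For n = 4 this gives 1 + 3 + 5 + 7 states, one for each volume pushed to the plot.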

Using time series: a series of volumetric data is sent to k3d, and the player interpolates between the frames | E = 4
psi_t = {}
t = 0.0
for l in range(E):
    for m in range(-l, l + 1):
        psi2 = WF(r(x,y,z),theta(x,y,z),phi(x,y,z),E,l,m)
        # one frame per (l, m) state, keyed by its time stamp
        psi_t[str(t)] = (psi2.real/np.max(np.abs(psi2))).astype(np.float32)
        t += 0.3
plt_vol.volume = psi_t

| _____no_output_____ | MIT | atomic_orbitals_wave_function.ipynb | OpenDreamKit/k3d_demo |

Demo text-mining: Pharma case. In this demo, I will walk through the basic steps that you will need in most text-mining cases. These are also some of the steps used in the ResuMe app, available here: [ResuMe](https://resume.businessdecision.be/). The case covered here is a simplified version of a project actually carried out by B&D, where the goal was to identify whether a given paper is about Pharmacovigilance or not. Pharmacovigilance is the domain of healthcare concerned with drug safety. Consequently, we would like to predict, based on the text of a scientific article, whether the article is about Pharmacovigilance. For this we can use any kind of model, but in any case we will have to transform the words into numbers in some way. We'll see different methods and compare their performance. Downloading the dataset from PubMed | #import documents from PubMed

from Bio import Entrez

# Function to search for a given number of articles matching a keyword
def search(keyword,number=20):
    Entrez.email = '[email protected]'
    handle = Entrez.esearch(db='pubmed',
                            sort='relevance',
                            retmax=str(number),
                            retmode='xml',
                            term=keyword)
    results = Entrez.read(handle)
    return results

# Function to retrieve the details of the articles found by a previous search query
def fetch_details(id_list):
    ids = ','.join(id_list)
    Entrez.email = '[email protected]'
    handle = Entrez.efetch(db='pubmed',
                           retmode='xml',
                           id=ids)
    results = Entrez.read(handle)
    return results

| _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

Retrieving top 200 articles with Pharmacovigilance keyword | results = search('Pharmacovigilance', 200) #querying PubMed

id_list = results['IdList']

papers_pharmacov = fetch_details(id_list) #retrieving the info about the articles in nested lists & dictionary format

# checking article title for the first 10 retrieved articles

for i, paper in enumerate(papers_pharmacov['PubmedArticle'][:10]):
    print("%d) %s" % (i+1,paper['MedlineCitation']['Article']['ArticleTitle'])) | 1) FarmaREL: An Italian pharmacovigilance project to monitor and evaluate adverse drug reactions in haematologic patients.

2) Feasibility and Educational Value of a Student-Run Pharmacovigilance Programme: A Prospective Cohort Study.

3) Developing a Crowdsourcing Approach and Tool for Pharmacovigilance Education Material Delivery.

4) Promoting and Protecting Public Health: How the European Union Pharmacovigilance System Works.

5) Effect of an educational intervention on knowledge and attitude regarding pharmacovigilance and consumer pharmacovigilance among community pharmacists in Lalitpur district, Nepal.

6) Pharmacovigilance and Biomedical Informatics: A Model for Future Development.

7) Pharmacovigilance in Europe: Place of the Pharmacovigilance Risk Assessment Committee (PRAC) in organisation and decisional processes.

8) Tamoxifen Pharmacovigilance: Implications for Safe Use in the Future.

9) Pharmacovigilance Skills, Knowledge and Attitudes in our Future Doctors - A Nationwide Study in the Netherlands.

10) Adverse drug reactions reporting in Calabria (Southern Italy) in the four-year period 2011-2014: impact of a regional pharmacovigilance project in light of the new European Legislation.

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

Retrieving top 1,000 articles with Pharma keyword. These articles will be our baseline for comparison: we want to separate the Pharmacovigilance articles from them. | results = search('Pharma', 1000) #querying PubMed

id_list = results['IdList']

papers_pharma = fetch_details(id_list)#retrieving the info about the articles in nested lists & dictionary format

# checking article title for the first 10 retrieved articles

for i, paper in enumerate(papers_pharma['PubmedArticle'][:10]):
    print("%d) %s" % (i+1,paper['MedlineCitation']['Article']['ArticleTitle'])) | 1) Recent trends in specialty pharma business model.

2) The moderating role of absorptive capacity and the differential effects of acquisitions and alliances on Big Pharma firms' innovation performance.

3) Space-related pharma-motifs for fast search of protein binding motifs and polypharmacological targets.

4) Pharma Websites and "Professionals-Only" Information: The Implications for Patient Trust and Autonomy.

5) BRIC Health Systems and Big Pharma: A Challenge for Health Policy and Management.

6) Developing Deep Learning Applications for Life Science and Pharma Industry.

7) Exzellenz in der Bildung für eine innovative Schweiz: Die Position des Wirtschaftsdachverbandes Chemie Pharma Biotech.

8) Shaking Up Biotech/Pharma: Can Cues Be Taken from the Tech Industry?

9) Pharma-Nutritional Properties of Olive Oil Phenols. Transfer of New Findings to Human Nutrition.

10) Pharma Success in Product Development—Does Biotechnology Change the Paradigm in Product Development and Attrition.

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

Saving IDs, labels and title + abstracts of the articles. An article retrieved via the Pharmacovigilance keyword receives the label 1; all others receive the label 0. For each article, we concatenate the title and the abstract to form the text data. | # Save ids & labels: 1 = pharmacovigilance, 0 = not pharmacovigilance
# & save title + abstract in a list
ids = []
labels = []
data = []
for i, paper in enumerate(papers_pharmacov['PubmedArticle']):
    if 'Abstract' in paper['MedlineCitation']['Article'].keys(): #check that abstract info is available
        ids.append(str(paper['MedlineCitation']['PMID']))
        labels.append(1)
        title = paper['MedlineCitation']['Article']['ArticleTitle'] #Article title
        abstract = paper['MedlineCitation']['Article']['Abstract']['AbstractText'][0] #Abstract (first AbstractText element)
        data.append( title + ' ' + abstract ) # the space keeps the last title word and first abstract word apart
for i, paper in enumerate(papers_pharma['PubmedArticle']):
    if 'Abstract' in paper['MedlineCitation']['Article'].keys(): #check that abstract info is available
        ids.append(str(paper['MedlineCitation']['PMID']))
        labels.append(0)
        title = paper['MedlineCitation']['Article']['ArticleTitle'] #Article title
        abstract = paper['MedlineCitation']['Article']['Abstract']['AbstractText'][0] #Abstract (first AbstractText element)
        data.append( title + ' ' + abstract ) # the space keeps the last title word and first abstract word apart

# Check result for one paper

ids[0] # ID

labels[0] # 1 = pharmacovigilance , 0 = not pharmacovigilance

data[0] # Title & abstract | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

Transform to numeric attributes. We will now **transform** the **text into numeric attributes**. For this, we will convert every word to a number, but we first need to **split** the full text into **separate words**. This is done by using a ***Tokenizer***. The tokenizer splits the full text based on a pattern you specify. Here we'll use a very basic pattern that keeps any run of upper- or lowercase letters, and we will convert everything to lowercase. | from nltk.tokenize.regexp import RegexpTokenizer #import a tokenizer, to split the full text into separate words
def Tokenize_text_value(value):
    tokenizer1 = RegexpTokenizer(r"[A-Za-z]+") # our self-defined tokenizer
    value = value.lower() # convert all words to lowercase
    return tokenizer1.tokenize(value) # tokenize each text

# example of our tokenizer

Tokenize_text_value(data[0]) | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
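
To see what the letters-only pattern does without installing NLTK, here is a minimal sketch using the standard `re` module. The function name `tokenize` and the sample sentence are ours, for illustration only; the pattern is the one used in the notebook's tokenizer.

```python
import re


def tokenize(text):
    """Lowercase the text and return every maximal run of ASCII letters."""
    return re.findall(r"[A-Za-z]+", text.lower())


# Digits and punctuation act as separators and are dropped.
print(tokenize("Drug-safety reports (2014): 3 ADRs!"))
```

Note that this pattern also discards accented letters and numbers, which may or may not be what you want for biomedical text.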

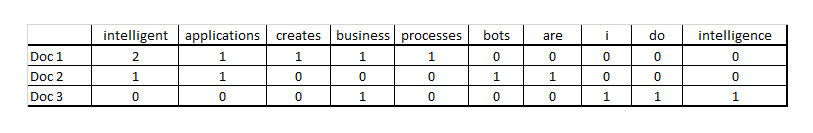

Using the ***bag-of-words*** method we can transform any document into a vector. With this method you have **one column per word and one row per document**, and each value is either binary (1 if the word is present in the document, 0 if not) or a count of the number of times the word appears in the document. For instance, the following three sentences: (1) Intelligent applications creates intelligent business processes; (2) Bots are intelligent applications; (3) I do business intelligence; can be represented as a matrix using the counts of each word as values. | # transform non-processed data to numeric features:

from sklearn.feature_extraction.text import TfidfVectorizer

binary_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', binary = True,

tokenizer=Tokenize_text_value) # initialize the binary vectorizer

count_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=False,

tokenizer=Tokenize_text_value) # initialize the count vectorizer

binary_matrix = binary_vectorizer.fit_transform(data) # fit & transform

count_matrix = count_vectorizer.fit_transform(data) # fit & transform

# Check our output matrix shape: rows = documents, columns = words

binary_matrix.shape | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
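
The three example sentences from the bag-of-words explanation can be turned into a count matrix and a binary matrix by hand. This is a minimal sketch in plain Python (whitespace tokenization, variable names ours), not the notebook's vectorizer: the `TfidfVectorizer` calls above additionally rescale the values (rows are L2-normalized by default), so their matrices will not contain raw integers.

```python
from collections import Counter

docs = [
    "Intelligent applications creates intelligent business processes",
    "Bots are intelligent applications",
    "I do business intelligence",
]

# One list of lowercase tokens per document.
tokenized = [doc.lower().split() for doc in docs]

# One column per distinct word, in alphabetical order.
vocab = sorted({word for doc in tokenized for word in doc})

# One row per document: how often each vocabulary word occurs in it.
counters = [Counter(doc) for doc in tokenized]
count_matrix = [[c[word] for word in vocab] for c in counters]

# Binary variant: 1 if the word occurs at all, 0 otherwise.
binary_matrix = [[1 if value else 0 for value in row] for row in count_matrix]

print(vocab)
for row in count_matrix:
    print(row)
```

The word "intelligent" gets a count of 2 in the first row but a 1 in the binary version, which is the only difference between the two representations.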

Check performance in a basic model. We'll now apply a model to our 2 matrices. For this we will use the ***Naive Bayes model***, which (as the name suggests) is based on Bayes' theorem. It is used a lot in text-mining as it is really **fast** to train and apply and is able to **handle a lot of features**, which is often needed in text-mining, where you have one column per word. We will use the ***kappa*** measure to evaluate model performance. Kappa is a metric that is robust to class imbalance in the data and varies from -1 to +1, with 0 corresponding to chance-level performance and +1 to perfect performance. | # apply cross validation Naive Bayes model

from sklearn.model_selection import cross_val_score

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import (cohen_kappa_score, make_scorer)

NB = MultinomialNB() # our Naive Bayes Model initialisation

scorer = make_scorer(cohen_kappa_score) # Our kappa score

scores = cross_val_score(NB,binary_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Binary matrix with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,count_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Count matrix with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var())) | Cross validation on Count matrix with a mean kappa score of 0.064193 and variance of 0.000682

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
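
To make the kappa measure concrete, here is a minimal hand-rolled sketch of Cohen's kappa, (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance. The function name and the toy labels are ours; the notebook itself uses `sklearn.metrics.cohen_kappa_score`.

```python
from collections import Counter


def cohen_kappa(y_true, y_pred):
    """Cohen's kappa for two equally long label sequences."""
    n = len(y_true)
    # Observed agreement: fraction of positions where the labels match.
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    # Chance agreement: product of the marginal label frequencies, summed over labels.
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    labels = set(true_counts) | set(pred_counts)
    p_e = sum(true_counts[c] * pred_counts[c] for c in labels) / n ** 2
    # Undefined (division by zero) if both sequences contain a single identical label.
    return (p_o - p_e) / (1 - p_e)


y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]   # imbalanced toy labels
always_zero = [0] * 10                     # majority-class guess: 80% accuracy
print(cohen_kappa(y_true, always_zero))
print(cohen_kappa(y_true, y_true))
```

The majority-class guess reaches 80% accuracy on these toy labels yet scores a kappa of zero, which is why kappa is preferred over accuracy for imbalanced data such as the 200 versus 1,000 articles here.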

TF-IDF transformation. An alternative to the binary and count matrix is the **tf-idf transformation**. It stands for ***Term Frequency - Inverse Document Frequency*** and is a measure that tries to find the words that are specific to each document and that characterize it compared to the other documents. This is achieved by taking the term frequency (which is the same as the count that we defined before) and multiplying it by the inverse document frequency (which is low when the term appears in all other documents and high when it appears in few other documents). *Copyright © Chris Albon, 2018* | # transform non-processed data to numeric features:

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=True, smooth_idf = True ,

tokenizer=Tokenize_text_value) # initialize the tf-idf vectorizer

tfidf_matrix = tfidf_vectorizer.fit_transform(data) # fit & transform

scores = cross_val_score(NB,tfidf_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on TF-IDF matrix with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var())) | Cross validation on TF-IDF matrix with a mean kappa score of 0.320332 and variance of 0.003960

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
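
The tf-idf weighting can be reproduced by hand on a toy corpus. The sketch below uses the smoothed inverse document frequency that scikit-learn applies when `smooth_idf=True`, idf(t) = ln((1 + n) / (1 + df(t))) + 1; the toy documents and helper names are ours, and the final L2 row normalization that `TfidfVectorizer` performs by default is left out to keep the numbers readable.

```python
import math
from collections import Counter

# Toy corpus, already tokenized (illustrative data only).
docs = [
    ["drug", "safety", "report"],
    ["drug", "market"],
    ["drug", "safety", "drug"],
]
n_docs = len(docs)

# Document frequency: in how many documents does each term occur?
df = Counter(term for doc in docs for term in set(doc))


def idf(term):
    """Smoothed inverse document frequency."""
    return math.log((1 + n_docs) / (1 + df[term])) + 1


def tfidf(doc):
    """Map each term of a document to term frequency times idf."""
    tf = Counter(doc)
    return {term: tf[term] * idf(term) for term in tf}


for term in ("drug", "safety", "market"):
    print(term, round(idf(term), 3))
print(tfidf(docs[2]))
```

A term that occurs in every document ("drug") keeps the minimum idf of 1, while rarer terms are boosted, which is the effect that helps the classifier here.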

How to improve this score? How come the TF-IDF works the best, followed closely by the binary matrix and with the count matrix far behind? Let's have a look at the words that occur the most in the different documents: | import numpy as np

# Find the word with maximum occurrence for each document in the count_matrix
max_counts_per_doc = np.asarray(np.argmax(count_matrix,axis = 1)).ravel()
# Count how many times every word is the most occurring word across all documents
unique, counts = np.unique(max_counts_per_doc,return_counts=True)
# Keep only the words that are the most frequent word of more than 5 different documents
frequent = unique[counts > 5]
# Retrieve the vocabulary of our count matrix
vocab = count_vectorizer.get_feature_names_out() # use get_feature_names() on scikit-learn < 1.0
# print out the words in frequent
for i in frequent:
    print(vocab[i]) | a

and

for

in

of

the

to

with

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

As you can see, none of these words carries any added value: they are mostly used to link other words together in sentences and have no standalone meaning. This is what we call ***stop words***. Knowing that, we can build an intuition for why the tf-idf and binary transformations worked better than the count one. In the count matrix, words that appear a lot but carry no value get a high weight, whereas in the binary matrix every word gets the same weight, and in tf-idf the words that appear in many documents are automatically given a lower weight thanks to the IDF part. To avoid this problem we usually remove stop words. Removing stop words | # Remove the stop words

binary_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', binary = True,

tokenizer=Tokenize_text_value, stop_words = 'english') # initialize the binary vectorizer

count_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=False, smooth_idf=False,

tokenizer=Tokenize_text_value, stop_words = 'english') # initialize the count vectorizer

tfidf_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=True, smooth_idf=True,

tokenizer=Tokenize_text_value, stop_words = 'english') # initialize the tf-idf vectorizer

binary_matrix = binary_vectorizer.fit_transform(data) # fit & transform

count_matrix = count_vectorizer.fit_transform(data) # fit & transform

tfidf_matrix = tfidf_vectorizer.fit_transform(data) # fit & transform

scores = cross_val_score(NB,binary_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Binary matrix by removing stop-words with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,count_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Count matrix by removing stop-words with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,tfidf_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on TF-IDF matrix by removing stop-words with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var())) | Cross validation on TF-IDF matrix by removing stop-words with a mean kappa score of 0.682766 and variance of 0.011562

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
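
Stop-word removal itself is just a filter over the token list. As a minimal sketch, the set below contains only the eight words printed by the frequency check earlier in the notebook; scikit-learn's built-in `'english'` list is considerably longer, and the helper name is ours.

```python
# Illustrative stop-word set: the eight words found by the frequency check.
STOP_WORDS = {"a", "and", "for", "in", "of", "the", "to", "with"}


def remove_stop_words(tokens):
    """Keep only the tokens that are not stop words, preserving order."""
    return [token for token in tokens if token not in STOP_WORDS]


tokens = ["the", "safety", "of", "a", "drug", "in", "patients"]
print(remove_stop_words(tokens))
```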

We get a big improvement in performance when we remove the stop words. How can we go a step further? The following steps are mostly domain dependent: you have to think about your problem and what you would need to solve it. In this case, using only the abstracts and titles, if we had to classify by hand we would look at the most common keywords in the articles about Pharmacovigilance and, for a new article, check whether those same keywords appear. However, here we are analyzing all words (minus the stop words) and not only the keywords. So we could keep only the words that appear in at least a certain share of the documents. Keeping only keywords | # keep only words that appear in at least 5% of the documents:

binary_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', binary = True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05) # initialize the binary vectorizer

count_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=False, smooth_idf=False,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05) # initialize the count vectorizer

tfidf_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=True, smooth_idf=True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05) # initialize the tf-idf vectorizer

binary_matrix = binary_vectorizer.fit_transform(data) # fit & transform

count_matrix = count_vectorizer.fit_transform(data) # fit & transform

tfidf_matrix = tfidf_vectorizer.fit_transform(data) # fit & transform

scores = cross_val_score(NB,binary_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Binary matrix by keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,count_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Count matrix by keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,tfidf_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on TF-IDF matrix by keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var())) | Cross validation on TF-IDF matrix by keeping only keywords appearing in at least 5% of the documents with a mean kappa score of 0.916734 and variance of 0.001633

| MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
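
The `min_df = 0.05` setting keeps a term only if it occurs in at least 5% of the documents. The following sketch applies the same document-frequency rule to a toy corpus in plain Python; the function name, the data and the 50% threshold are ours, chosen so the effect is visible on four documents.

```python
from collections import Counter


def frequent_terms(tokenized_docs, min_df):
    """Return the sorted terms whose share of documents is at least min_df."""
    n_docs = len(tokenized_docs)
    # Count each term once per document, however often it occurs inside it.
    df = Counter(term for doc in tokenized_docs for term in set(doc))
    return sorted(term for term, count in df.items() if count / n_docs >= min_df)


docs = [
    ["drug", "safety", "report"],
    ["drug", "market"],
    ["drug", "safety"],
    ["drug", "trial"],
]
print(frequent_terms(docs, 0.5))
```

Terms seen in a single document are dropped, which shrinks the vocabulary to the words shared by many articles.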

Final improvements. We've made a big improvement with this one as well. We can go even further and add some extra fine-tuning. Let's have a look at the final keywords: | tfidf_vectorizer.get_feature_names_out() | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

We can see that some words all refer to the same thing: *report, reported, reporting, reports* all refer to the same base word *report* and should therefore be grouped together. This can be done by ***stemming***. Stemming. Stemming is a technique that reduces words to a common base form by chopping off the last part of the word: plural s's are removed, -ing is removed, -ed is removed, ... | # Define a stemmer that will preprocess the text before transforming it
from nltk.stem.porter import PorterStemmer
def preprocess(value):
    stemmer = PorterStemmer()
    # split into tokens, stem each one, and join them back into a single string
    return ' '.join([stemmer.stem(i) for i in Tokenize_text_value(value) ])

# Have a look at what it gives on the first article

print(' '.join([i for i in Tokenize_text_value(data[0]) ])) # original

print('\n')

print(preprocess(data[0])) #stemmed

# Preprocess the documents by stemming the words

binary_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', binary = True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05, preprocessor = preprocess) # initialize the binary vectorizer

count_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=False, smooth_idf=False,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05, preprocessor = preprocess) # initialize the count vectorizer

tfidf_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=True, smooth_idf=True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.05, preprocessor = preprocess) # initialize the tf-idf vectorizer

binary_matrix = binary_vectorizer.fit_transform(data) # fit & transform

count_matrix = count_vectorizer.fit_transform(data) # fit & transform

tfidf_matrix = tfidf_vectorizer.fit_transform(data) # fit & transform

scores = cross_val_score(NB,binary_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Binary matrix by stemming and keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,count_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Count matrix by stemming and keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,tfidf_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on TF-IDF matrix by stemming and keeping only keywords appearing in at least 5%% of the documents with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

# Check the stemmed final vocabulary

tfidf_vectorizer.get_feature_names_out() | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |

We can see that the performance decreases slightly with stemming. This is probably because, when keeping words that appear in at least 5% of the documents, we now retain more words than before: words with different endings used to be counted separately and are now grouped together, so more of them pass the threshold. To correct for this, we should increase our 5% threshold. | # Preprocess the documents by stemming the words and keeping only words that appear in at least 10% of the documents:

binary_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', binary = True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.1, preprocessor = preprocess) # initialize the binary vectorizer

count_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=False, smooth_idf=False,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.1, preprocessor = preprocess) # initialize the count vectorizer

tfidf_vectorizer = TfidfVectorizer(input=u'content', analyzer=u'word', use_idf=True, smooth_idf=True,

tokenizer=Tokenize_text_value, stop_words = 'english'

, min_df = 0.1, preprocessor = preprocess) # initialize the tf-idf vectorizer

binary_matrix = binary_vectorizer.fit_transform(data) # fit & transform

count_matrix = count_vectorizer.fit_transform(data) # fit & transform

tfidf_matrix = tfidf_vectorizer.fit_transform(data) # fit & transform

scores = cross_val_score(NB,binary_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Binary matrix by stemming with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,count_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on Count matrix by stemming with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

scores = cross_val_score(NB,tfidf_matrix,labels,scoring = scorer,cv = 5 )

print('Cross validation on TF-IDF matrix by stemming with a mean kappa score of %f and variance of %f' % (scores.mean(),scores.var()))

# Check the stemmed final vocabulary

tfidf_vectorizer.get_feature_names_out() | _____no_output_____ | MIT | Text-Mining Hands-on.ipynb | tdekelver-bd/ResuMe |
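
To illustrate the idea of chopping off word endings, here is a deliberately crude suffix stripper. It is not the Porter algorithm used in the notebook (which has many more rules and conditions); the suffix list, the minimum stem length of three letters and the function name are assumptions made for this sketch only.

```python
# Suffixes tried in order; a real stemmer uses a much richer rule set.
SUFFIXES = ("ing", "ed", "s")


def naive_stem(word):
    """Strip the first matching suffix, keeping at least three letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


words = ["report", "reported", "reporting", "reports"]
print([naive_stem(word) for word in words])
```

All four variants collapse onto the same stem, so after stemming they share a single column in the matrix instead of four.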

This notebook was prepared by [Donne Martin](https://github.com/donnemartin). Source and license info is on [GitHub](https://github.com/donnemartin/interactive-coding-challenges).

Challenge Notebook

Problem: Implement a priority queue backed by an array.

* [Constraints](Constraints)
* [Test Cases](Test-Cases)
* [Algorithm](Algorithm)
* [Code](Code)
* [Unit Test](Unit-Test)
* [Solution Notebook](Solution-Notebook)

Constraints

* Do we expect the methods to be insert, extract_min, and decrease_key?
    * Yes
* Can we assume there aren't any duplicate keys?
    * Yes
* Do we need to validate inputs?
    * No
* Can we assume this fits memory?
    * Yes

Test Cases

insert

* `insert` general case -> inserted node

extract_min

* `extract_min` from an empty list -> None
* `extract_min` general case -> min node

decrease_key

* `decrease_key` an invalid key -> None
* `decrease_key` general case -> updated node

Algorithm

Refer to the [Solution Notebook](priority_queue_solution.ipynb). If you are stuck and need a hint, the solution notebook's algorithm discussion might be a good place to start.

Code | class PriorityQueueNode(object):

    def __init__(self, obj, key):
        self.obj = obj
        self.key = key

    def __repr__(self):
        return str(self.obj) + ': ' + str(self.key)


class PriorityQueue(object):

    def __init__(self):
        self.array = []

    def __len__(self):
        return len(self.array)

    def insert(self, node):
        for k in range(len(self.array)):
            if self.array[k].key < node.key:
                # TODO: Implement me
                pass

    def extract_min(self):
        if not self.array:
            return None
        else:
            # TODO: Implement me
            pass

    def decrease_key(self, obj, new_key):
        # TODO: Implement me
        pass | _____no_output_____ | Apache-2.0 | arrays_strings/priority_queue_(unsolved)/priority_queue_challenge.ipynb | zzong2006/interactive-coding-challenges |

Unit Test **The following unit test is expected to fail until you solve the challenge.** | # %load test_priority_queue.py
import unittest


class TestPriorityQueue(unittest.TestCase):

    def test_priority_queue(self):
        priority_queue = PriorityQueue()
        self.assertEqual(priority_queue.extract_min(), None)
        priority_queue.insert(PriorityQueueNode('a', 20))
        priority_queue.insert(PriorityQueueNode('b', 5))
        priority_queue.insert(PriorityQueueNode('c', 15))
        priority_queue.insert(PriorityQueueNode('d', 22))
        priority_queue.insert(PriorityQueueNode('e', 40))
        priority_queue.insert(PriorityQueueNode('f', 3))
        priority_queue.decrease_key('f', 2)
        priority_queue.decrease_key('a', 19)
        mins = []
        while priority_queue.array:
            mins.append(priority_queue.extract_min().key)
        self.assertEqual(mins, [2, 5, 15, 19, 22, 40])
        print('Success: test_min_heap')


def main():
    test = TestPriorityQueue()
    test.test_priority_queue()


if __name__ == '__main__':
    main() | _____no_output_____ | Apache-2.0 | arrays_strings/priority_queue_(unsolved)/priority_queue_challenge.ipynb | zzong2006/interactive-coding-challenges |

pIC50 Test | import numpy as np

import torch

import seaborn as sns

import malt

import pandas as pd

import dgllife

from dgllife.utils import smiles_to_bigraph, CanonicalAtomFeaturizer, CanonicalBondFeaturizer

df = pd.read_csv('../../../data/moonshot_pIC50.csv', index_col=0)

dgllife_dataset = dgllife.data.csv_dataset.MoleculeCSVDataset(

df=df,

smiles_to_graph=smiles_to_bigraph,

node_featurizer=CanonicalAtomFeaturizer(),

edge_featurizer=CanonicalBondFeaturizer(),

smiles_column='SMILES',

task_names=[

# 'MW', 'cLogP', 'r_inhibition_at_20_uM',

# 'r_inhibition_at_50_uM', 'r_avg_IC50', 'f_inhibition_at_20_uM',

# 'f_inhibition_at_50_uM', 'f_avg_IC50', 'relative_solubility_at_20_uM',

# 'relative_solubility_at_100_uM', 'trypsin_IC50',

'f_avg_pIC50'

],

init_mask=False,

cache_file_path='../../../data/moonshot_pIC50.bin'

)

sns.displot(dgllife_dataset.labels.numpy()[dgllife_dataset.labels.numpy() > 4.005])

from malt.data.collections import _dataset_from_dgllife

data = _dataset_from_dgllife(dgllife_dataset)

# shuffle before masking so that the masked subset split below is in random order
data.shuffle(seed=2666)
# mask data if it's at the limit of detection
data_masked = data[list(np.flatnonzero(np.array(data.y) > 4.005))]
ds_tr, ds_vl, ds_te = data_masked[:1500].split([8, 1, 1]) | _____no_output_____ | MIT | scripts/supervised/pIC50_test.ipynb | choderalab/malt |
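
The data preparation above does three things: drop measurements at the limit of detection, shuffle with a fixed seed, and split 8:1:1. The sketch below mimics those steps on a plain Python list. The values are made up, the helper `split_by_ratio` is ours, and the way it rounds the split boundaries is an assumption that may differ from what malt's `split` does.

```python
import random


def split_by_ratio(items, ratios):
    """Cut a list into consecutive parts whose sizes follow the given ratios."""
    total = sum(ratios)
    edges, running = [0], 0
    for ratio in ratios[:-1]:
        running += ratio
        edges.append(len(items) * running // total)
    edges.append(len(items))
    return [items[start:stop] for start, stop in zip(edges, edges[1:])]


# Made-up pIC50 values; 4.0 stands for "at the limit of detection".
values = [4.0, 4.0, 5.2, 6.1, 4.9, 7.3, 4.0, 5.5, 6.6, 5.1, 4.7, 6.0, 5.8]

# Keep only measurements above the threshold used in the notebook.
kept = [value for value in values if value > 4.005]

# Shuffle reproducibly, then split into train / validation / test.
random.Random(2666).shuffle(kept)
train, valid, test = split_by_ratio(kept, [8, 1, 1])
print(len(train), len(valid), len(test))
```

Shuffling the same collection that is later split matters: otherwise the split follows the original file order.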

Make model | model_choice = 'nn' # 'nn'

if model_choice == "gp":

model = malt.models.supervised_model.GaussianProcessSupervisedModel(

representation=malt.models.representation.DGLRepresentation(

out_features=128,

),

regressor=malt.models.regressor.ExactGaussianProcessRegressor(

in_features=128, out_features=2,

),

likelihood=malt.models.likelihood.HeteroschedasticGaussianLikelihood(),

)

elif model_choice == "nn":

model = malt.models.supervised_model.SimpleSupervisedModel(

representation=malt.models.representation.DGLRepresentation(

out_features=128,

),

regressor=malt.models.regressor.NeuralNetworkRegressor(

in_features=128, out_features=1,

),

likelihood=malt.models.likelihood.HomoschedasticGaussianLikelihood(),

) | _____no_output_____ | MIT | scripts/supervised/pIC50_test.ipynb | choderalab/malt |

Train and evaluate. | trainer = malt.trainer.get_default_trainer(

without_player=True,

batch_size=32,

n_epochs=3000,

learning_rate=1e-3

)

model = trainer(model, ds_tr)

r2 = malt.metrics.supervised_metrics.R2()(model, ds_te)

print(r2)

rmse = malt.metrics.supervised_metrics.RMSE()(model, ds_te)

print(rmse)

ds_te_loader = ds_te.view(batch_size=len(ds_te))

g, y = next(iter(ds_te_loader))

y_hat = model.condition(g).mean

g = sns.jointplot(x = ds_te.y, y = y_hat.detach().numpy())

g.set_axis_labels('y', r'\hat{y}')  # raw string avoids the invalid \h escape

import torch

import dgl

import malt

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("--data", type=str, default="esol")

parser.add_argument("--model", type=str, default="nn")

args = parser.parse_args([])

data = getattr(malt.data.collections, args.data)()

data.shuffle(seed=2666)

ds_tr, ds_vl, ds_te = data.split([8, 1, 1])

if args.model == "gp":
    model = malt.models.supervised_model.GaussianProcessSupervisedModel(
        representation=malt.models.representation.DGLRepresentation(
            out_features=128,
        ),
        regressor=malt.models.regressor.ExactGaussianProcessRegressor(
            in_features=128, out_features=2,
        ),
        likelihood=malt.models.likelihood.HeteroschedasticGaussianLikelihood(),
    )
elif args.model == "nn":
    model = malt.models.supervised_model.SimpleSupervisedModel(
        representation=malt.models.representation.DGLRepresentation(
            out_features=128,
        ),
        regressor=malt.models.regressor.NeuralNetworkRegressor(
            in_features=128, out_features=1,
        ),
        likelihood=malt.models.likelihood.HomoschedasticGaussianLikelihood(),
    )

trainer = malt.trainer.get_default_trainer(without_player=True, batch_size=len(ds_tr), n_epochs=3000, learning_rate=1e-3)

model = trainer(model, ds_tr)

r2 = malt.metrics.supervised_metrics.R2()(model, ds_te)

print(r2)

rmse = malt.metrics.supervised_metrics.RMSE()(model, ds_te)

print(rmse)

ds_te_loader = ds_te.view(batch_size=len(ds_te))

g, y = next(iter(ds_te_loader))

y_hat = model.condition(g).mean

g = sns.jointplot(x = ds_te.y, y = y_hat.detach().numpy())

g.set_axis_labels('y', r'$\hat{y}$') | _____no_output_____ | MIT | scripts/supervised/pIC50_test.ipynb | choderalab/malt |

Inference and Validation

Now that you have a trained network, you can use it for making predictions. This is typically called **inference**, a term borrowed from statistics. However, neural networks have a tendency to perform *too well* on the training data and aren't able to generalize to data that hasn't been seen before. This is called **overfitting** and it impairs inference performance. To test for overfitting while training, we measure the performance on data not in the training set called the **validation** set. We avoid overfitting through regularization such as dropout while monitoring the validation performance during training. In this notebook, I'll show you how to do this in PyTorch.

As usual, let's start by loading the dataset through torchvision. You'll learn more about torchvision and loading data in a later part. This time we'll be taking advantage of the test set, which you can get by setting `train=False` here:

```python
testset = datasets.FashionMNIST('~/.pytorch/F_MNIST_data/', download=True, train=False, transform=transform)
```

The test set contains images just like the training set. Typically you'll see 10-20% of the original dataset held out for testing and validation with the rest being used for training. | import torch

from torchvision import datasets, transforms

# Define a transform to normalize the data

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize((0.5,), (0.5,))])  # Fashion-MNIST images have a single channel

# Download and load the training data

trainset = datasets.FashionMNIST('~/.pytorch/F_MNIST_data/', download=True, train=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True)

# Download and load the test data

testset = datasets.FashionMNIST('~/.pytorch/F_MNIST_data/', download=True, train=False, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=True) | _____no_output_____ | MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |

Here I'll create a model like normal, using the same one from my solution for part 4. | from torch import nn, optim

import torch.nn.functional as F

class Classifier(nn.Module):

def __init__(self):

super().__init__()

self.fc1 = nn.Linear(784, 256)

self.fc2 = nn.Linear(256, 128)

self.fc3 = nn.Linear(128, 64)

self.fc4 = nn.Linear(64, 10)

def forward(self, x):

# make sure input tensor is flattened

x = x.view(x.shape[0], -1)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = F.relu(self.fc3(x))

x = F.log_softmax(self.fc4(x), dim=1)

return x | _____no_output_____ | MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |

The goal of validation is to measure the model's performance on data that isn't part of the training set. What counts as performance is up to the developer to define. Typically this is just accuracy, the percentage of examples the network classified correctly. Other options are [precision and recall](https://en.wikipedia.org/wiki/Precision_and_recall#Definition_(classification_context)) and top-5 error rate. We'll focus on accuracy here. First I'll do a forward pass with one batch from the test set. | model = Classifier()

images, labels = next(iter(testloader))

# Get the class probabilities

ps = torch.exp(model(images))

# Make sure the shape is appropriate, we should get 10 class probabilities for 64 examples

print(ps.shape) | torch.Size([64, 10])

| MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |

With the probabilities, we can get the most likely class using the `ps.topk` method. This returns the $k$ highest values. Since we just want the most likely class, we can use `ps.topk(1)`. This returns a tuple of the top-$k$ values and the top-$k$ indices. If the highest value is the fifth element, we'll get back 4 as the index. | top_p, top_class = ps.topk(1, dim=1)

# Look at the most likely classes for the first 10 examples

print(top_class[:10,:]) | tensor([[0],

[3],

[0],

[0],

[0],

[0],

[0],

[0],

[0],

[0]])

| MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |

Now we can check if the predicted classes match the labels. This is simple to do by equating `top_class` and `labels`, but we have to be careful of the shapes. Here `top_class` is a 2D tensor with shape `(64, 1)` while `labels` is 1D with shape `(64)`. To get the equality to work out the way we want, `top_class` and `labels` must have the same shape.

If we do

```python
equals = top_class == labels
```

`equals` will have shape `(64, 64)`; try it yourself. What it's doing is comparing the one element in each row of `top_class` with each element in `labels`, which returns 64 True/False boolean values for each row. | equals = top_class == labels.view(*top_class.shape) | _____no_output_____ | MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |
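The shape issue is easier to see on a tiny batch. Here is a minimal sketch with four made-up predictions and labels (the values are illustrative, not taken from the notebook), comparing the broadcast result with the reshaped one:

```python
import torch

# Hypothetical predictions and labels for a batch of 4 examples
top_class = torch.tensor([[2], [0], [1], [2]])   # shape (4, 1), like ps.topk(1, dim=1)
labels = torch.tensor([2, 1, 1, 0])              # shape (4,)

# Without reshaping, broadcasting compares every prediction with every label
wrong = top_class == labels
# Reshaping labels to match top_class gives one comparison per example
right = top_class == labels.view(*top_class.shape)

print(wrong.shape)   # a 4 x 4 grid of comparisons
print(right.shape)   # one comparison per row
print(right.squeeze(1).tolist())
```

Only the reshaped version lines up one prediction with one label, which is what the accuracy calculation needs.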

Now we need to calculate the percentage of correct predictions. `equals` has binary values, either 0 or 1. This means that if we just sum up all the values and divide by the number of values, we get the percentage of correct predictions. This is the same operation as taking the mean, so we can get the accuracy with a call to `torch.mean`. If only it were that simple. If you try `torch.mean(equals)`, you'll get an error

```
RuntimeError: mean is not implemented for type torch.ByteTensor
```

This happens because `equals` has type `torch.ByteTensor` but `torch.mean` isn't implemented for tensors with that type. So we'll need to convert `equals` to a float tensor. Note that `torch.mean` returns a scalar tensor; to get the actual value as a float we'll need to do `accuracy.item()`. | accuracy = torch.mean(equals.type(torch.FloatTensor))

print(f'Accuracy: {accuracy.item()*100}%') | Accuracy: 20.3125%

| MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |
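Here is a small sketch of the conversion step on a made-up `equals` tensor (four hypothetical comparisons, two of them correct). In recent PyTorch releases comparisons return a boolean tensor rather than a `ByteTensor`, but as far as I know the mean still needs a floating-point input, so the same cast applies:

```python
import torch

# Hypothetical comparison result for four predictions (2 right, 2 wrong)
equals = torch.tensor([[True], [False], [True], [False]])

# Cast to float before averaging; .item() unwraps the scalar tensor into a Python float
accuracy = torch.mean(equals.type(torch.FloatTensor))
print(f'Accuracy: {accuracy.item()*100}%')
```

Averaging the 0/1 values is the same as counting the correct predictions and dividing by the batch size.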

The network is untrained so it's making random guesses and we should see an accuracy around 10%. Now let's train our network and include our validation pass so we can measure how well the network is performing on the test set. Since we're not updating our parameters in the validation pass, we can speed up our code by turning off gradients using `torch.no_grad()`:

```python
# turn off gradients
with torch.no_grad():
    # validation pass here
    for images, labels in testloader:
        ...
```

>**Exercise:** Implement the validation loop below and print out the total accuracy after the loop. You can largely copy and paste the code from above, but I suggest typing it in because writing it out yourself is essential for building the skill. In general you'll always learn more by typing it rather than copy-pasting. You should be able to get an accuracy above 80%. | model = Classifier()

criterion = nn.NLLLoss()

optimizer = optim.Adam(model.parameters(), lr=0.003)

epochs = 30

steps = 0

train_losses, test_losses = [], []

for e in range(epochs):

running_loss = 0

for images, labels in trainloader:

optimizer.zero_grad()

log_ps = model(images)

loss = criterion(log_ps, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

else:

## TODO: Implement the validation pass and print out the validation accuracy

test_loss = 0

accuracy = 0

with torch.no_grad():

for images, labels in testloader:

log_ps = model(images)

test_loss += criterion(log_ps, labels)

ps = torch.exp(log_ps)

top_p, top_class = ps.topk(1, dim=1)

equals = top_class == labels.view(*top_class.shape)

accuracy += torch.mean(equals.type(torch.FloatTensor))

train_losses.append(running_loss/len(trainloader))

test_losses.append(test_loss/len(testloader))

print("Epoch: {}/{}.. ".format(e+1, epochs),

"Training Loss: {:.3f}.. ".format(running_loss/len(trainloader)),

"Test Loss: {:.3f}.. ".format(test_loss/len(testloader)),

"Test Accuracy: {:.3f}".format(accuracy/len(testloader)))

| Epoch: 1/30.. Training Loss: 0.511.. Test Loss: 0.455.. Test Accuracy: 0.839

Epoch: 2/30.. Training Loss: 0.392.. Test Loss: 0.411.. Test Accuracy: 0.846

Epoch: 3/30.. Training Loss: 0.353.. Test Loss: 0.403.. Test Accuracy: 0.853

Epoch: 4/30.. Training Loss: 0.333.. Test Loss: 0.376.. Test Accuracy: 0.867

Epoch: 5/30.. Training Loss: 0.318.. Test Loss: 0.392.. Test Accuracy: 0.857

Epoch: 6/30.. Training Loss: 0.303.. Test Loss: 0.388.. Test Accuracy: 0.864

Epoch: 7/30.. Training Loss: 0.295.. Test Loss: 0.363.. Test Accuracy: 0.871

Epoch: 8/30.. Training Loss: 0.282.. Test Loss: 0.352.. Test Accuracy: 0.877

Epoch: 9/30.. Training Loss: 0.279.. Test Loss: 0.396.. Test Accuracy: 0.863

Epoch: 10/30.. Training Loss: 0.269.. Test Loss: 0.363.. Test Accuracy: 0.874

Epoch: 11/30.. Training Loss: 0.260.. Test Loss: 0.385.. Test Accuracy: 0.869

Epoch: 12/30.. Training Loss: 0.249.. Test Loss: 0.382.. Test Accuracy: 0.879

Epoch: 13/30.. Training Loss: 0.247.. Test Loss: 0.376.. Test Accuracy: 0.876

Epoch: 14/30.. Training Loss: 0.244.. Test Loss: 0.381.. Test Accuracy: 0.878

Epoch: 15/30.. Training Loss: 0.235.. Test Loss: 0.369.. Test Accuracy: 0.880

Epoch: 16/30.. Training Loss: 0.228.. Test Loss: 0.389.. Test Accuracy: 0.877

Epoch: 17/30.. Training Loss: 0.232.. Test Loss: 0.380.. Test Accuracy: 0.877

Epoch: 18/30.. Training Loss: 0.220.. Test Loss: 0.379.. Test Accuracy: 0.880

Epoch: 19/30.. Training Loss: 0.217.. Test Loss: 0.383.. Test Accuracy: 0.883

Epoch: 20/30.. Training Loss: 0.214.. Test Loss: 0.371.. Test Accuracy: 0.880

Epoch: 21/30.. Training Loss: 0.211.. Test Loss: 0.423.. Test Accuracy: 0.873

Epoch: 22/30.. Training Loss: 0.207.. Test Loss: 0.401.. Test Accuracy: 0.885

Epoch: 23/30.. Training Loss: 0.203.. Test Loss: 0.414.. Test Accuracy: 0.876

Epoch: 24/30.. Training Loss: 0.203.. Test Loss: 0.401.. Test Accuracy: 0.880

Epoch: 25/30.. Training Loss: 0.200.. Test Loss: 0.442.. Test Accuracy: 0.874

Epoch: 26/30.. Training Loss: 0.196.. Test Loss: 0.393.. Test Accuracy: 0.886

Epoch: 27/30.. Training Loss: 0.190.. Test Loss: 0.421.. Test Accuracy: 0.883

Epoch: 28/30.. Training Loss: 0.187.. Test Loss: 0.408.. Test Accuracy: 0.878

Epoch: 29/30.. Training Loss: 0.185.. Test Loss: 0.423.. Test Accuracy: 0.880

Epoch: 30/30.. Training Loss: 0.180.. Test Loss: 0.466.. Test Accuracy: 0.874

| MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |
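In the log above the test loss bottoms out around epoch 8 and then drifts upward while the training loss keeps falling. A minimal sketch of how you might pick a checkpoint from such a log is shown here, using the first twelve test losses copied from the output. The helper names `best_epoch` and `stop_epoch` and the `patience` parameter are my own illustration, not part of the notebook or of PyTorch:

```python
# Test losses for epochs 1-12, copied from the training log above
val_losses = [0.455, 0.411, 0.403, 0.376, 0.392, 0.388,
              0.363, 0.352, 0.396, 0.363, 0.385, 0.382]

def best_epoch(losses):
    """Return (1-based epoch, loss) for the lowest validation loss."""
    idx = min(range(len(losses)), key=losses.__getitem__)
    return idx + 1, losses[idx]

def stop_epoch(losses, patience=3):
    """Return the 1-based epoch at which a simple patience rule would stop:
    the first epoch where the loss has not improved for `patience` epochs in a row."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best, bad_epochs = loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return len(losses)

print(best_epoch(val_losses))
print(stop_epoch(val_losses, patience=3))
```

In a real training loop you would save the model whenever the validation loss improves, so that the best checkpoint is still available once the rule fires.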

Overfitting

If we look at the training and validation losses as we train the network, we can see a phenomenon known as overfitting.

The network learns the training set better and better, resulting in lower training losses. However, it starts having problems generalizing to data outside the training set, leading to the validation loss increasing. The ultimate goal of any deep learning model is to make predictions on new data, so we should strive to get the lowest validation loss possible. One option is to use the version of the model with the lowest validation loss, here the one around 8-10 training epochs. This strategy is called *early-stopping*. In practice, you'd save the model frequently as you're training, then later choose the model with the lowest validation loss.

The most common method to reduce overfitting (outside of early-stopping) is *dropout*, where we randomly drop input units. This forces the network to share information between weights, increasing its ability to generalize to new data. Adding dropout in PyTorch is straightforward using the [`nn.Dropout`](https://pytorch.org/docs/stable/nn.html#torch.nn.Dropout) module.

```python
class Classifier(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(784, 256)
        self.fc2 = nn.Linear(256, 128)
        self.fc3 = nn.Linear(128, 64)
        self.fc4 = nn.Linear(64, 10)

        # Dropout module with 0.2 drop probability
        self.dropout = nn.Dropout(p=0.2)

    def forward(self, x):
        # make sure input tensor is flattened
        x = x.view(x.shape[0], -1)

        # Now with dropout
        x = self.dropout(F.relu(self.fc1(x)))
        x = self.dropout(F.relu(self.fc2(x)))
        x = self.dropout(F.relu(self.fc3(x)))

        # output so no dropout here
        x = F.log_softmax(self.fc4(x), dim=1)

        return x
```

During training we want to use dropout to prevent overfitting, but during inference we want to use the entire network. So, we need to turn off dropout during validation, testing, and whenever we're using the network to make predictions. To do this, you use `model.eval()`. This sets the model to evaluation mode where the dropout probability is 0. You can turn dropout back on by setting the model to train mode with `model.train()`. In general, the pattern for the validation loop will look like this, where you turn off gradients, set the model to evaluation mode, calculate the validation loss and metric, then set the model back to train mode.

```python
# turn off gradients
with torch.no_grad():

    # set model to evaluation mode
    model.eval()

    # validation pass here
    for images, labels in testloader:
        ...

# set model back to train mode
model.train()
```

> **Exercise:** Add dropout to your model and train it on Fashion-MNIST again. See if you can get a lower validation loss or higher accuracy. | ## TODO: Define your model with dropout added

class Classifier(nn.Module):

def __init__(self):

super().__init__()

self.fc1 = nn.Linear(784, 256)

self.fc2 = nn.Linear(256, 128)

self.fc3 = nn.Linear(128, 64)

self.fc4 = nn.Linear(64, 10)

self.dropout = nn.Dropout(p=0.2)

def forward(self,x):

x = x.view(x.shape[0], -1)

# Now with dropout

x = self.dropout(F.relu(self.fc1(x)))

x = self.dropout(F.relu(self.fc2(x)))

x = self.dropout(F.relu(self.fc3(x)))

# output so no dropout here

x = F.log_softmax(self.fc4(x), dim=1)

return x

model = Classifier()

criterion = nn.NLLLoss()

optimizer = optim.Adam(model.parameters(), lr=0.003)

epochs = 30

steps = 0

train_losses, test_losses = [], []

for e in range(epochs):

running_loss = 0

for images, labels in trainloader:

optimizer.zero_grad()

log_ps = model(images)

loss = criterion(log_ps, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

else:

test_loss = 0

accuracy = 0

# Turn off gradients for validation, saves memory and computations

with torch.no_grad():

model.eval()

for images, labels in testloader:

log_ps = model(images)

test_loss += criterion(log_ps, labels)

ps = torch.exp(log_ps)

top_p, top_class = ps.topk(1, dim=1)

equals = top_class == labels.view(*top_class.shape)

accuracy += torch.mean(equals.type(torch.FloatTensor))

model.train()

train_losses.append(running_loss/len(trainloader))

test_losses.append(test_loss/len(testloader))

print("Epoch: {}/{}.. ".format(e+1, epochs),

"Training Loss: {:.3f}.. ".format(running_loss/len(trainloader)),

"Test Loss: {:.3f}.. ".format(test_loss/len(testloader)),

"Test Accuracy: {:.3f}".format(accuracy/len(testloader)))

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

import matplotlib.pyplot as plt

plt.plot(train_losses, label='Training loss')

plt.plot(test_losses, label='Validation loss')

plt.legend(frameon=False) | _____no_output_____ | MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |
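To see why the `model.eval()` / `model.train()` switch matters, here is a minimal sketch that applies a standalone `nn.Dropout` layer to a vector of ones in both modes. The input tensor and the seed are arbitrary choices for illustration:

```python
import torch
from torch import nn

torch.manual_seed(0)            # arbitrary seed, only for repeatability
dropout = nn.Dropout(p=0.2)
x = torch.ones(1000)

# Train mode: roughly 20% of the entries are zeroed and the survivors are
# scaled by 1 / (1 - p) so the expected activation stays the same
dropout.train()
y_train = dropout(x)

# Eval mode: dropout is a no-op and the input passes through unchanged
dropout.eval()
y_eval = dropout(x)

print((y_train == 0).float().mean().item())  # fraction of dropped units
print(torch.equal(y_eval, x))
```

Calling `model.eval()` on the full classifier flips the same switch on every dropout layer inside it, which is why the validation loop above sets it before the forward passes and restores train mode afterwards.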

Inference

Now that the model is trained, we can use it for inference. We've done this before, but now we need to remember to set the model in inference mode with `model.eval()`. You'll also want to turn off autograd with the `torch.no_grad()` context. | # Import helper module (should be in the repo)

import helper

# Test out your network!

model.eval()

dataiter = iter(testloader)

images, labels = next(dataiter)

img = images[0]

# Convert 2D image to 1D vector

img = img.view(1, 784)

# Calculate the class probabilities (softmax) for img

with torch.no_grad():

output = model.forward(img)

ps = torch.exp(output)

# Plot the image and probabilities

helper.view_classify(img.view(1, 28, 28), ps, version='Fashion') | _____no_output_____ | MIT | intro-to-pytorch/Part 5 - Inference and Validation (Exercises).ipynb | adilfaiz001/deep-learning-v2-pytorch |

Requirements | # !pip install --upgrade transformers bertviz checklist | _____no_output_____ | Apache-2.0 | notebooks/old/DeprecatedTitles_t0_gpt.ipynb | IlyaGusev/NewsCausation |

Data loading | # !rm -rf ru_news_cause_v1.tsv*

# !wget https://www.dropbox.com/s/kcxnhjzfut4guut/ru_news_cause_v1.tsv.tar.gz

# !tar -xzvf ru_news_cause_v1.tsv.tar.gz

# !cat ru_news_cause_v1.tsv | wc -l

# !head ru_news_cause_v1.tsv | _____no_output_____ | Apache-2.0 | notebooks/old/DeprecatedTitles_t0_gpt.ipynb | IlyaGusev/NewsCausation |

GPTCause | from transformers import GPT2LMHeadModel, GPT2TokenizerFast

device = 'cuda'

model_id = 'sberbank-ai/rugpt3small_based_on_gpt2'

model = GPT2LMHeadModel.from_pretrained(model_id).to(device)

tokenizer = GPT2TokenizerFast.from_pretrained(model_id)

import torch

max_length = model.config.n_positions

def gpt_assess(s1, s2):

encodings = tokenizer(f'{s1} {s2}', return_tensors='pt')

with torch.no_grad():

outputs = model(encodings.input_ids.to(device), labels=encodings.input_ids.to(device))

log_likelihood = outputs[0] * encodings.input_ids.size(1)

return log_likelihood.detach().cpu().numpy()

def gpt_assess_pair(s1,s2):

ppl1 = gpt_assess(s1,s2)

ppl2 = gpt_assess(s2,s1)

if ppl1<ppl2:

return 0, ppl1/ppl2

else:

return 1, ppl1/ppl2

print(gpt_assess('Привет!', 'Как дела?'))

print(gpt_assess('Как дела?', 'Привет!'))

print(gpt_assess_pair('Как дела?', 'Привет!'))

print(gpt_assess_pair('Привет!', 'Как дела?'))

| 11.083624

23.637806

(1, 2.1326785)

(0, 0.46889395)

| Apache-2.0 | notebooks/old/DeprecatedTitles_t0_gpt.ipynb | IlyaGusev/NewsCausation |
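A note on reading these numbers. As far as I can tell, `outputs[0]` is the mean cross-entropy the model returns when `labels` is passed, so multiplying by the token count gives an approximate total negative log-likelihood (despite the variable name `log_likelihood`), and a lower score means the ordering reads as more likely. The sketch below only mirrors the branch in `gpt_assess_pair`, fed with the two scores printed above. The helper name `order_from_scores` is hypothetical:

```python
def order_from_scores(nll_forward, nll_backward):
    """Mirror gpt_assess_pair: return 0 if "s1 then s2" scores lower
    (reads as more likely), otherwise 1, together with the score ratio."""
    ratio = nll_forward / nll_backward
    label = 0 if nll_forward < nll_backward else 1
    return label, ratio

# Scores printed above for "Привет! Как дела?" and the reversed order
label, ratio = order_from_scores(11.083624, 23.637806)
print(label, ratio)
```

A ratio close to 1 means the model has no strong preference between the two orders, which is what the `abs(x - 1.)` confidence threshold in the scoring code relies on.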

Scoring | import csv

labels = []

texts = []

preds = []

confs = []

with open("ru_news_cause_v1.tsv", "r", encoding='utf-8') as r:

reader = csv.reader(r, delimiter="\t")

header = next(reader)

for row in reader:

r = dict(zip(header, row))

if float(r["confidence"]) < 0.69:

continue

result = r["result"]

mapping = {

"left_right_cause": 0,

"left_right_cancel": 0,

"right_left_cause": 1,

"right_left_cancel": 1

}

if result not in mapping:

continue

r["label"] = mapping[result]

labels.append(r['label'])

texts.append( (r["left_title"], r["right_title"] ) )

p, c = gpt_assess_pair( r["left_title"], r["right_title"] )

preds.append( p )

confs.append( c )

from collections import Counter

print('labels', Counter(labels))

print('preds', Counter(preds))

import matplotlib.pyplot as plt

plt.hist(confs)

plt.show()

from sklearn.metrics import classification_report, balanced_accuracy_score, confusion_matrix

y_true = labels

y_pred = preds

print(classification_report(y_true, y_pred))

print('balanced_accuracy_score', balanced_accuracy_score(y_true, y_pred))

print('\nconfusion_matrix\n',confusion_matrix(y_true, y_pred))

import numpy as np

confidence_th = .1

mask = np.array(list(map(lambda x:abs(x-1.), confs)))>confidence_th

y_true = np.array(labels)[mask]

y_pred = np.array(preds)[mask]

print(classification_report(y_true, y_pred))

print('balanced_accuracy_score', balanced_accuracy_score(y_true, y_pred))

print('\nconfusion_matrix\n',confusion_matrix(y_true, y_pred))

from sklearn.metrics import f1_score

xs = []

ys = []

for th_idx in range(250):

th = th_idx/1000.

mask = np.array(list(map(lambda x:abs(x-1.), confs)))>th

y_true = np.array(labels)[mask]

y_pred = np.array(preds)[mask]

xs.append( th )

ys.append( f1_score(y_true, y_pred) )

import matplotlib.pyplot as plt

plt.plot(xs, ys)

plt.suptitle('f1 by conf_th')

plt.show() | _____no_output_____ | Apache-2.0 | notebooks/old/DeprecatedTitles_t0_gpt.ipynb | IlyaGusev/NewsCausation |

CS224N Assignment 1: Exploring Word Vectors (25 Points)

Due 4:30pm, Tue Jan 14

Welcome to CS224n! Before you start, make sure you read the README.txt in the same directory as this notebook. You will find a lot of provided code in the notebook. We highly encourage you to read and understand it as part of the learning :-) | # All Import Statements Defined Here

# Note: Do not add to this list.

# ----------------

import sys

assert sys.version_info[0]==3

assert sys.version_info[1] >= 5

from gensim.models import KeyedVectors

from gensim.test.utils import datapath

import pprint

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = [10, 5]

import nltk

nltk.download('reuters')

from nltk.corpus import reuters

import numpy as np

import random

import scipy as sp

from sklearn.decomposition import TruncatedSVD

from sklearn.decomposition import PCA

START_TOKEN = '<START>'

END_TOKEN = '<END>'

np.random.seed(0)

random.seed(0)

# ---------------- | [nltk_data] Downloading package reuters to

[nltk_data] /usr/local/share/nltk_data...

[nltk_data] Package reuters is already up-to-date!

| MIT | CS224n/assignment1/exploring_word_vectors.ipynb | iofu728/Task |

Word Vectors

Word Vectors are often used as a fundamental component for downstream NLP tasks, e.g. question answering, text generation, translation, etc., so it is important to build some intuitions as to their strengths and weaknesses. Here, you will explore two types of word vectors: those derived from *co-occurrence matrices*, and those derived via *GloVe*.

**Assignment Notes:** Please make sure to save the notebook as you go along. Submission Instructions are located at the bottom of the notebook.

**Note on Terminology:** The terms "word vectors" and "word embeddings" are often used interchangeably. The term "embedding" refers to the fact that we are encoding aspects of a word's meaning in a lower dimensional space. As [Wikipedia](https://en.wikipedia.org/wiki/Word_embedding) states, "*conceptually it involves a mathematical embedding from a space with one dimension per word to a continuous vector space with a much lower dimension*".

Part 1: Count-Based Word Vectors (10 points)

Most word vector models start from the following idea:

*You shall know a word by the company it keeps ([Firth, J. R. 1957:11](https://en.wikipedia.org/wiki/John_Rupert_Firth))*

Many word vector implementations are driven by the idea that similar words, i.e., (near) synonyms, will be used in similar contexts. As a result, similar words will often be spoken or written along with a shared subset of words, i.e., contexts. By examining these contexts, we can try to develop embeddings for our words. With this intuition in mind, many "old school" approaches to constructing word vectors relied on word counts. Here we elaborate upon one of those strategies, *co-occurrence matrices* (for more information, see [here](http://web.stanford.edu/class/cs124/lec/vectorsemantics.video.pdf) or [here](https://medium.com/data-science-group-iitr/word-embedding-2d05d270b285)).

Co-Occurrence

A co-occurrence matrix counts how often things co-occur in some environment. 
Given some word $w_i$ occurring in the document, we consider the *context window* surrounding $w_i$. Supposing our fixed window size is $n$, then this is the $n$ preceding and $n$ subsequent words in that document, i.e. words $w_{i-n} \dots w_{i-1}$ and $w_{i+1} \dots w_{i+n}$. We build a *co-occurrence matrix* $M$, which is a symmetric word-by-word matrix in which $M_{ij}$ is the number of times $w_j$ appears inside $w_i$'s window among all documents.

**Example: Co-Occurrence with Fixed Window of n=1**:

Document 1: "all that glitters is not gold"

Document 2: "all is well that ends well"

| * | `<START>` | all | that | glitters | is | not | gold | well | ends | `<END>` |
|----------|-------|-----|------|----------|------|------|-------|------|------|-----|
| `<START>` | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| all | 2 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| that | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 |
| glitters | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| is | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 |
| not | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 |
| gold | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| well | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 1 |
| ends | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| `<END>` | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 |

**Note:** In NLP, we often add `<START>` and `<END>` tokens to represent the beginning and end of sentences, paragraphs or documents. In this case we imagine `<START>` and `<END>` tokens encapsulating each document, e.g., "`<START>` All that glitters is not gold `<END>`", and include these tokens in our co-occurrence counts.

The rows (or columns) of this matrix provide one type of word vectors (those based on word-word co-occurrence), but the vectors will be large in general (linear in the number of distinct words in a corpus). Thus, our next step is to run *dimensionality reduction*. In particular, we will run *SVD (Singular Value Decomposition)*, which is a kind of generalized *PCA (Principal Components Analysis)* to select the top $k$ principal components. 
Here's a visualization of dimensionality reduction with SVD. In this picture our co-occurrence matrix is $A$ with $n$ rows corresponding to $n$ words. We obtain a full matrix decomposition, with the singular values ordered in the diagonal $S$ matrix, and our new, shorter length-$k$ word vectors in $U_k$.

This reduced-dimensionality co-occurrence representation preserves semantic relationships between words, e.g. *doctor* and *hospital* will be closer than *doctor* and *dog*.

**Notes:** If you can barely remember what an eigenvalue is, here's [a slow, friendly introduction to SVD](https://davetang.org/file/Singular_Value_Decomposition_Tutorial.pdf). If you want to learn more thoroughly about PCA or SVD, feel free to check out lectures [7](https://web.stanford.edu/class/cs168/l/l7.pdf), [8](http://theory.stanford.edu/~tim/s15/l/l8.pdf), and [9](https://web.stanford.edu/class/cs168/l/l9.pdf) of CS168. These course notes provide a great high-level treatment of these general purpose algorithms. For the purpose of this class, though, you only need to know how to extract the k-dimensional embeddings by utilizing pre-programmed implementations of these algorithms from the numpy, scipy, or sklearn python packages. In practice, it is challenging to apply full SVD to large corpora because of the memory needed to perform PCA or SVD. However, if you only want the top $k$ vector components for relatively small $k$ (known as [Truncated SVD](https://en.wikipedia.org/wiki/Singular_value_decomposition#Truncated_SVD)), then there are reasonably scalable techniques to compute those iteratively.

Plotting Co-Occurrence Word Embeddings

Here, we will be using the Reuters (business and financial news) corpus. If you haven't run the import cell at the top of this page, please run it now (click it and press SHIFT-RETURN). The corpus consists of 10,788 news documents totaling 1.3 million words. These documents span 90 categories and are split into train and test. 
For more details, please see https://www.nltk.org/book/ch02.html. We provide a `read_corpus` function below that pulls out only articles from the "crude" (i.e. news articles about oil, gas, etc.) category. The function also adds `<START>` and `<END>` tokens to each of the documents, and lowercases words. You do **not** have to perform any other kind of pre-processing. | def read_corpus(category="crude"):

""" Read files from the specified Reuter's category.

Params:

category (string): category name

Return:

list of lists, with words from each of the processed files

"""

files = reuters.fileids(category)

return [[START_TOKEN] + [w.lower() for w in list(reuters.words(f))] + [END_TOKEN] for f in files]

| _____no_output_____ | MIT | CS224n/assignment1/exploring_word_vectors.ipynb | iofu728/Task |
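The worked example in the Co-Occurrence section can be checked with a few lines of plain Python. This is a minimal sketch under my own assumptions: the function name `cooccurrence`, the sorted vocabulary order, and the nested-list matrix are illustrative choices, not the interface the assignment asks for.

```python
def cooccurrence(docs, window=1):
    """Count how often each pair of words appears within `window` positions
    of each other, summed over all documents."""
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    M = [[0] * len(vocab) for _ in vocab]
    for doc in docs:
        for i, w in enumerate(doc):
            lo = max(0, i - window)
            hi = min(len(doc), i + window + 1)
            for j in range(lo, hi):
                if j != i:                      # skip the center word itself
                    M[index[w]][index[doc[j]]] += 1
    return M, index

# The two example documents, wrapped in <START> / <END> tokens
docs = [
    "<START> all that glitters is not gold <END>".split(),
    "<START> all is well that ends well <END>".split(),
]
M, index = cooccurrence(docs, window=1)

print(M[index["<START>"]][index["all"]])
print(M[index["well"]][index["<END>"]])
```

Because the window extends the same distance on both sides, the resulting matrix is symmetric, matching the table in the example.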

Let's have a look at what these documents look like. | reuters_corpus = read_corpus()

pprint.pprint(reuters_corpus[:3], compact=True, width=100) | [['<START>', 'japan', 'to', 'revise', 'long', '-', 'term', 'energy', 'demand', 'downwards', 'the',

'ministry', 'of', 'international', 'trade', 'and', 'industry', '(', 'miti', ')', 'will', 'revise',

'its', 'long', '-', 'term', 'energy', 'supply', '/', 'demand', 'outlook', 'by', 'august', 'to',

'meet', 'a', 'forecast', 'downtrend', 'in', 'japanese', 'energy', 'demand', ',', 'ministry',

'officials', 'said', '.', 'miti', 'is', 'expected', 'to', 'lower', 'the', 'projection', 'for',

'primary', 'energy', 'supplies', 'in', 'the', 'year', '2000', 'to', '550', 'mln', 'kilolitres',

'(', 'kl', ')', 'from', '600', 'mln', ',', 'they', 'said', '.', 'the', 'decision', 'follows',

'the', 'emergence', 'of', 'structural', 'changes', 'in', 'japanese', 'industry', 'following',

'the', 'rise', 'in', 'the', 'value', 'of', 'the', 'yen', 'and', 'a', 'decline', 'in', 'domestic',

'electric', 'power', 'demand', '.', 'miti', 'is', 'planning', 'to', 'work', 'out', 'a', 'revised',

'energy', 'supply', '/', 'demand', 'outlook', 'through', 'deliberations', 'of', 'committee',

'meetings', 'of', 'the', 'agency', 'of', 'natural', 'resources', 'and', 'energy', ',', 'the',

'officials', 'said', '.', 'they', 'said', 'miti', 'will', 'also', 'review', 'the', 'breakdown',

'of', 'energy', 'supply', 'sources', ',', 'including', 'oil', ',', 'nuclear', ',', 'coal', 'and',

'natural', 'gas', '.', 'nuclear', 'energy', 'provided', 'the', 'bulk', 'of', 'japan', "'", 's',

'electric', 'power', 'in', 'the', 'fiscal', 'year', 'ended', 'march', '31', ',', 'supplying',

'an', 'estimated', '27', 'pct', 'on', 'a', 'kilowatt', '/', 'hour', 'basis', ',', 'followed',

'by', 'oil', '(', '23', 'pct', ')', 'and', 'liquefied', 'natural', 'gas', '(', '21', 'pct', '),',

'they', 'noted', '.', '<END>'],

['<START>', 'energy', '/', 'u', '.', 's', '.', 'petrochemical', 'industry', 'cheap', 'oil',

'feedstocks', ',', 'the', 'weakened', 'u', '.', 's', '.', 'dollar', 'and', 'a', 'plant',

'utilization', 'rate', 'approaching', '90', 'pct', 'will', 'propel', 'the', 'streamlined', 'u',

'.', 's', '.', 'petrochemical', 'industry', 'to', 'record', 'profits', 'this', 'year', ',',

'with', 'growth', 'expected', 'through', 'at', 'least', '1990', ',', 'major', 'company',

'executives', 'predicted', '.', 'this', 'bullish', 'outlook', 'for', 'chemical', 'manufacturing',

'and', 'an', 'industrywide', 'move', 'to', 'shed', 'unrelated', 'businesses', 'has', 'prompted',

'gaf', 'corp', '&', 'lt', ';', 'gaf', '>,', 'privately', '-', 'held', 'cain', 'chemical', 'inc',

',', 'and', 'other', 'firms', 'to', 'aggressively', 'seek', 'acquisitions', 'of', 'petrochemical',

'plants', '.', 'oil', 'companies', 'such', 'as', 'ashland', 'oil', 'inc', '&', 'lt', ';', 'ash',

'>,', 'the', 'kentucky', '-', 'based', 'oil', 'refiner', 'and', 'marketer', ',', 'are', 'also',

'shopping', 'for', 'money', '-', 'making', 'petrochemical', 'businesses', 'to', 'buy', '.', '"',

'i', 'see', 'us', 'poised', 'at', 'the', 'threshold', 'of', 'a', 'golden', 'period', ',"', 'said',

'paul', 'oreffice', ',', 'chairman', 'of', 'giant', 'dow', 'chemical', 'co', '&', 'lt', ';',

'dow', '>,', 'adding', ',', '"', 'there', "'", 's', 'no', 'major', 'plant', 'capacity', 'being',

'added', 'around', 'the', 'world', 'now', '.', 'the', 'whole', 'game', 'is', 'bringing', 'out',

'new', 'products', 'and', 'improving', 'the', 'old', 'ones', '."', 'analysts', 'say', 'the',

'chemical', 'industry', "'", 's', 'biggest', 'customers', ',', 'automobile', 'manufacturers',

'and', 'home', 'builders', 'that', 'use', 'a', 'lot', 'of', 'paints', 'and', 'plastics', ',',

'are', 'expected', 'to', 'buy', 'quantities', 'this', 'year', '.', 'u', '.', 's', '.',

'petrochemical', 'plants', 'are', 'currently', 'operating', 'at', 'about', '90', 'pct',

'capacity', ',', 'reflecting', 'tighter', 'supply', 'that', 'could', 'hike', 'product', 'prices',

'by', '30', 'to', '40', 'pct', 'this', 'year', ',', 'said', 'john', 'dosher', ',', 'managing',

'director', 'of', 'pace', 'consultants', 'inc', 'of', 'houston', '.', 'demand', 'for', 'some',

'products', 'such', 'as', 'styrene', 'could', 'push', 'profit', 'margins', 'up', 'by', 'as',

'much', 'as', '300', 'pct', ',', 'he', 'said', '.', 'oreffice', ',', 'speaking', 'at', 'a',

'meeting', 'of', 'chemical', 'engineers', 'in', 'houston', ',', 'said', 'dow', 'would', 'easily',

'top', 'the', '741', 'mln', 'dlrs', 'it', 'earned', 'last', 'year', 'and', 'predicted', 'it',

'would', 'have', 'the', 'best', 'year', 'in', 'its', 'history', '.', 'in', '1985', ',', 'when',

'oil', 'prices', 'were', 'still', 'above', '25', 'dlrs', 'a', 'barrel', 'and', 'chemical',

'exports', 'were', 'adversely', 'affected', 'by', 'the', 'strong', 'u', '.', 's', '.', 'dollar',

',', 'dow', 'had', 'profits', 'of', '58', 'mln', 'dlrs', '.', '"', 'i', 'believe', 'the',

'entire', 'chemical', 'industry', 'is', 'headed', 'for', 'a', 'record', 'year', 'or', 'close',

'to', 'it', ',"', 'oreffice', 'said', '.', 'gaf', 'chairman', 'samuel', 'heyman', 'estimated',

'that', 'the', 'u', '.', 's', '.', 'chemical', 'industry', 'would', 'report', 'a', '20', 'pct',

'gain', 'in', 'profits', 'during', '1987', '.', 'last', 'year', ',', 'the', 'domestic',

'industry', 'earned', 'a', 'total', 'of', '13', 'billion', 'dlrs', ',', 'a', '54', 'pct', 'leap',

'from', '1985', '.', 'the', 'turn', 'in', 'the', 'fortunes', 'of', 'the', 'once', '-', 'sickly',

'chemical', 'industry', 'has', 'been', 'brought', 'about', 'by', 'a', 'combination', 'of', 'luck',

'and', 'planning', ',', 'said', 'pace', "'", 's', 'john', 'dosher', '.', 'dosher', 'said', 'last',

'year', "'", 's', 'fall', 'in', 'oil', 'prices', 'made', 'feedstocks', 'dramatically', 'cheaper',

'and', 'at', 'the', 'same', 'time', 'the', 'american', 'dollar', 'was', 'weakening', 'against',

'foreign', 'currencies', '.', 'that', 'helped', 'boost', 'u', '.', 's', '.', 'chemical',

'exports', '.', 'also', 'helping', 'to', 'bring', 'supply', 'and', 'demand', 'into', 'balance',

'has', 'been', 'the', 'gradual', 'market', 'absorption', 'of', 'the', 'extra', 'chemical',

'manufacturing', 'capacity', 'created', 'by', 'middle', 'eastern', 'oil', 'producers', 'in',

'the', 'early', '1980s', '.', 'finally', ',', 'virtually', 'all', 'major', 'u', '.', 's', '.',

'chemical', 'manufacturers', 'have', 'embarked', 'on', 'an', 'extensive', 'corporate',

'restructuring', 'program', 'to', 'mothball', 'inefficient', 'plants', ',', 'trim', 'the',

'payroll', 'and', 'eliminate', 'unrelated', 'businesses', '.', 'the', 'restructuring', 'touched',

'off', 'a', 'flurry', 'of', 'friendly', 'and', 'hostile', 'takeover', 'attempts', '.', 'gaf', ',',

'which', 'made', 'an', 'unsuccessful', 'attempt', 'in', '1985', 'to', 'acquire', 'union',

'carbide', 'corp', '&', 'lt', ';', 'uk', '>,', 'recently', 'offered', 'three', 'billion', 'dlrs',

'for', 'borg', 'warner', 'corp', '&', 'lt', ';', 'bor', '>,', 'a', 'chicago', 'manufacturer',

'of', 'plastics', 'and', 'chemicals', '.', 'another', 'industry', 'powerhouse', ',', 'w', '.',

'r', '.', 'grace', '&', 'lt', ';', 'gra', '>', 'has', 'divested', 'its', 'retailing', ',',

'restaurant', 'and', 'fertilizer', 'businesses', 'to', 'raise', 'cash', 'for', 'chemical',

'acquisitions', '.', 'but', 'some', 'experts', 'worry', 'that', 'the', 'chemical', 'industry',

'may', 'be', 'headed', 'for', 'trouble', 'if', 'companies', 'continue', 'turning', 'their',

'back', 'on', 'the', 'manufacturing', 'of', 'staple', 'petrochemical', 'commodities', ',', 'such',

'as', 'ethylene', ',', 'in', 'favor', 'of', 'more', 'profitable', 'specialty', 'chemicals',

'that', 'are', 'custom', '-', 'designed', 'for', 'a', 'small', 'group', 'of', 'buyers', '.', '"',

'companies', 'like', 'dupont', '&', 'lt', ';', 'dd', '>', 'and', 'monsanto', 'co', '&', 'lt', ';',

'mtc', '>', 'spent', 'the', 'past', 'two', 'or', 'three', 'years', 'trying', 'to', 'get', 'out',

'of', 'the', 'commodity', 'chemical', 'business', 'in', 'reaction', 'to', 'how', 'badly', 'the',

'market', 'had', 'deteriorated', ',"', 'dosher', 'said', '.', '"', 'but', 'i', 'think', 'they',

'will', 'eventually', 'kill', 'the', 'margins', 'on', 'the', 'profitable', 'chemicals', 'in',

'the', 'niche', 'market', '."', 'some', 'top', 'chemical', 'executives', 'share', 'the',

'concern', '.', '"', 'the', 'challenge', 'for', 'our', 'industry', 'is', 'to', 'keep', 'from',

'getting', 'carried', 'away', 'and', 'repeating', 'past', 'mistakes', ',"', 'gaf', "'", 's',

'heyman', 'cautioned', '.', '"', 'the', 'shift', 'from', 'commodity', 'chemicals', 'may', 'be',

'ill', '-', 'advised', '.', 'specialty', 'businesses', 'do', 'not', 'stay', 'special', 'long',

'."', 'houston', '-', 'based', 'cain', 'chemical', ',', 'created', 'this', 'month', 'by', 'the',

'sterling', 'investment', 'banking', 'group', ',', 'believes', 'it', 'can', 'generate', '700',

'mln', 'dlrs', 'in', 'annual', 'sales', 'by', 'bucking', 'the', 'industry', 'trend', '.',

'chairman', 'gordon', 'cain', ',', 'who', 'previously', 'led', 'a', 'leveraged', 'buyout', 'of',

'dupont', "'", 's', 'conoco', 'inc', "'", 's', 'chemical', 'business', ',', 'has', 'spent', '1',

'.', '1', 'billion', 'dlrs', 'since', 'january', 'to', 'buy', 'seven', 'petrochemical', 'plants',

'along', 'the', 'texas', 'gulf', 'coast', '.', 'the', 'plants', 'produce', 'only', 'basic',

'commodity', 'petrochemicals', 'that', 'are', 'the', 'building', 'blocks', 'of', 'specialty',

'products', '.', '"', 'this', 'kind', 'of', 'commodity', 'chemical', 'business', 'will', 'never',

'be', 'a', 'glamorous', ',', 'high', '-', 'margin', 'business', ',"', 'cain', 'said', ',',

'adding', 'that', 'demand', 'is', 'expected', 'to', 'grow', 'by', 'about', 'three', 'pct',

'annually', '.', 'garo', 'armen', ',', 'an', 'analyst', 'with', 'dean', 'witter', 'reynolds', ',',

'said', 'chemical', 'makers', 'have', 'also', 'benefitted', 'by', 'increasing', 'demand', 'for',

'plastics', 'as', 'prices', 'become', 'more', 'competitive', 'with', 'aluminum', ',', 'wood',

'and', 'steel', 'products', '.', 'armen', 'estimated', 'the', 'upturn', 'in', 'the', 'chemical',

'business', 'could', 'last', 'as', 'long', 'as', 'four', 'or', 'five', 'years', ',', 'provided',

'the', 'u', '.', 's', '.', 'economy', 'continues', 'its', 'modest', 'rate', 'of', 'growth', '.',

'<END>'],

['<START>', 'turkey', 'calls', 'for', 'dialogue', 'to', 'solve', 'dispute', 'turkey', 'said',

'today', 'its', 'disputes', 'with', 'greece', ',', 'including', 'rights', 'on', 'the',

'continental', 'shelf', 'in', 'the', 'aegean', 'sea', ',', 'should', 'be', 'solved', 'through',

'negotiations', '.', 'a', 'foreign', 'ministry', 'statement', 'said', 'the', 'latest', 'crisis',

'between', 'the', 'two', 'nato', 'members', 'stemmed', 'from', 'the', 'continental', 'shelf',

'dispute', 'and', 'an', 'agreement', 'on', 'this', 'issue', 'would', 'effect', 'the', 'security',

',', 'economy', 'and', 'other', 'rights', 'of', 'both', 'countries', '.', '"', 'as', 'the',

'issue', 'is', 'basicly', 'political', ',', 'a', 'solution', 'can', 'only', 'be', 'found', 'by',

'bilateral', 'negotiations', ',"', 'the', 'statement', 'said', '.', 'greece', 'has', 'repeatedly',

'said', 'the', 'issue', 'was', 'legal', 'and', 'could', 'be', 'solved', 'at', 'the',

'international', 'court', 'of', 'justice', '.', 'the', 'two', 'countries', 'approached', 'armed',

'confrontation', 'last', 'month', 'after', 'greece', 'announced', 'it', 'planned', 'oil',

'exploration', 'work', 'in', 'the', 'aegean', 'and', 'turkey', 'said', 'it', 'would', 'also',

'search', 'for', 'oil', '.', 'a', 'face', '-', 'off', 'was', 'averted', 'when', 'turkey',

'confined', 'its', 'research', 'to', 'territorrial', 'waters', '.', '"', 'the', 'latest',

'crises', 'created', 'an', 'historic', 'opportunity', 'to', 'solve', 'the', 'disputes', 'between',

'the', 'two', 'countries', ',"', 'the', 'foreign', 'ministry', 'statement', 'said', '.', 'turkey',

"'", 's', 'ambassador', 'in', 'athens', ',', 'nazmi', 'akiman', ',', 'was', 'due', 'to', 'meet',

'prime', 'minister', 'andreas', 'papandreou', 'today', 'for', 'the', 'greek', 'reply', 'to', 'a',

'message', 'sent', 'last', 'week', 'by', 'turkish', 'prime', 'minister', 'turgut', 'ozal', '.',

'the', 'contents', 'of', 'the', 'message', 'were', 'not', 'disclosed', '.', '<END>']]

| MIT | CS224n/assignment1/exploring_word_vectors.ipynb | iofu728/Task |
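The corpus printed above is a list of documents, each itself a list of string tokens bracketed by `<START>` and `<END>`. As a minimal sketch of how a nested list of that shape can be flattened and reduced to its word types, the snippet below uses a hypothetical `toy_corpus` (made up for illustration, not taken from the Reuters data) and the names `flat_tokens` and `word_types`, which are likewise assumptions.

```python
from itertools import chain

# Hypothetical toy corpus in the same shape as the printed output:
# a list of documents, each a list of string tokens.
toy_corpus = [
    ["<START>", "oil", "prices", "rose", "<END>"],
    ["<START>", "oil", "exports", "fell", "<END>"],
]

# Flatten with a nested comprehension: the outer loop comes first,
# the inner loop second, exactly as they would be written as for-loops.
flat_tokens = [token for document in toy_corpus for token in document]

# itertools.chain.from_iterable produces the same flat sequence.
assert flat_tokens == list(chain.from_iterable(toy_corpus))

# A set drops duplicates; sorted() returns a list in code-point order,
# so "<END>" and "<START>" sort ahead of the lowercase words.
word_types = sorted(set(flat_tokens))

print(len(flat_tokens), len(word_types))
print(word_types)
```

Because `sorted` compares strings by code point, capitalised tokens and the `<...>` markers come before lowercase words, which is worth keeping in mind when comparing against an expected sorted vocabulary.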

Question 1.1: Implement `distinct_words` [code] (2 points) Write a method to work out the distinct words (word types) that occur in the corpus. You can do this with `for` loops, but it's more efficient to do it with Python list comprehensions. In particular, [this](https://coderwall.com/p/rcmaea/flatten-a-list-of-lists-in-one-line-in-python) may be useful to flatten a list of lists. If you're not familiar with Python list comprehensions in general, here's [more information](https://python-3-patterns-idioms-test.readthedocs.io/en/latest/Comprehensions.html). You may find it useful to use [Python sets](https://www.w3schools.com/python/python_sets.asp) to remove duplicate words. | def distinct_words(corpus):

    """ Determine a list of distinct words for the corpus.

        Params:

            corpus (list of list of strings): corpus of documents

        Return:

            corpus_words (list of strings): list of distinct words across the corpus, sorted (using python 'sorted' function)

            num_corpus_words (integer): number of distinct words across the corpus

    """

    # ------------------

    # Write your implementation here.

    # Flatten the documents into a set of tokens, then sort; sorted() already returns a list.
    corpus_words = sorted({token for document in corpus for token in document})

    num_corpus_words = len(corpus_words)

    # ------------------

    return corpus_words, num_corpus_words

# ---------------------

# Run this sanity check

# Note that this is not an exhaustive check for correctness.

# ---------------------

# Define toy corpus

test_corpus = ["{} All that glitters isn't gold {}".format(START_TOKEN, END_TOKEN).split(" "), "{} All's well that ends well {}".format(START_TOKEN, END_TOKEN).split(" ")]

test_corpus_words, num_corpus_words = distinct_words(test_corpus)

# Correct answers

ans_test_corpus_words = sorted([START_TOKEN, "All", "ends", "that", "gold", "All's", "glitters", "isn't", "well", END_TOKEN])

ans_num_corpus_words = len(ans_test_corpus_words)

# Test correct number of words

assert(num_corpus_words == ans_num_corpus_words), "Incorrect number of distinct words. Correct: {}. Yours: {}".format(ans_num_corpus_words, num_corpus_words)

# Test correct words

assert (test_corpus_words == ans_test_corpus_words), "Incorrect corpus_words.\nCorrect: {}\nYours: {}".format(str(ans_test_corpus_words), str(test_corpus_words))

# Print Success

print("-" * 80)

print("Passed All Tests!")