markdown

stringlengths 0

1.02M

| code

stringlengths 0

832k

| output

stringlengths 0

1.02M

| license

stringlengths 3

36

| path

stringlengths 6

265

| repo_name

stringlengths 6

127

|

|---|---|---|---|---|---|

**Option 3:** Draw once before loop | np.random.seed(1917)

x = np.random.normal(0,1,size=100)

print(f'var(x) = {np.var(x):.3f}')

y_ = np.random.normal(0,1,size=x.size)

for sigma in [0.5,1.0,0.5]:

y = sigma*y_

print(f'sigma = {sigma:2f}: f = {f(x,y):.4f}') | var(x) = 0.951

sigma = 0.500000: f = 0.5522

sigma = 1.000000: f = 0.0143

sigma = 0.500000: f = 0.5522

| MIT | web/06/Examples_and_overview.ipynb | Jovansam/lectures-2021 |

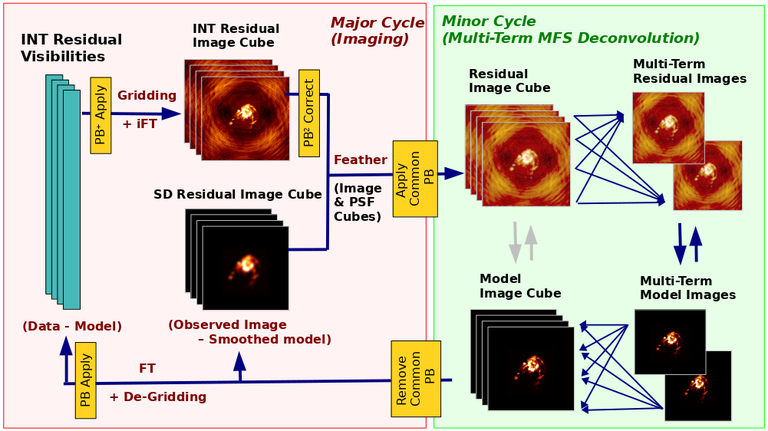

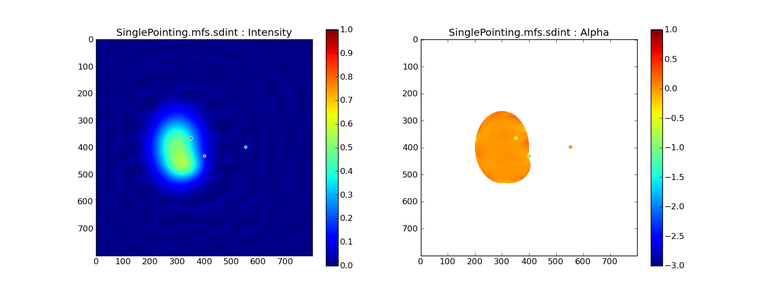

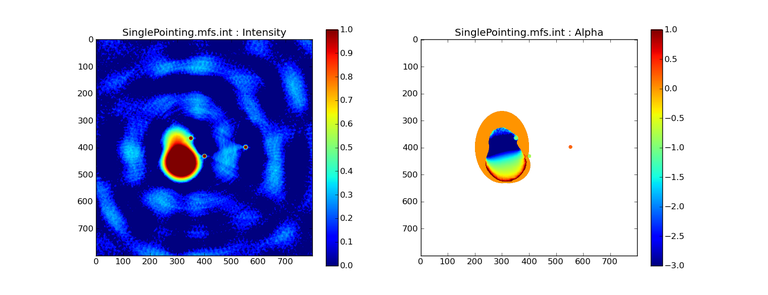

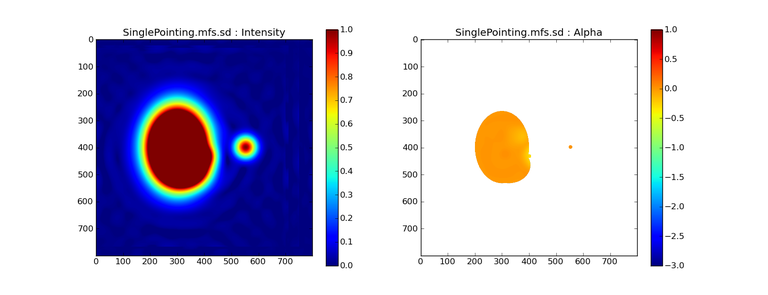

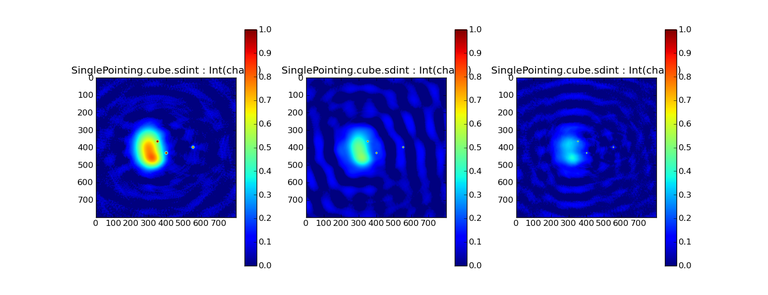

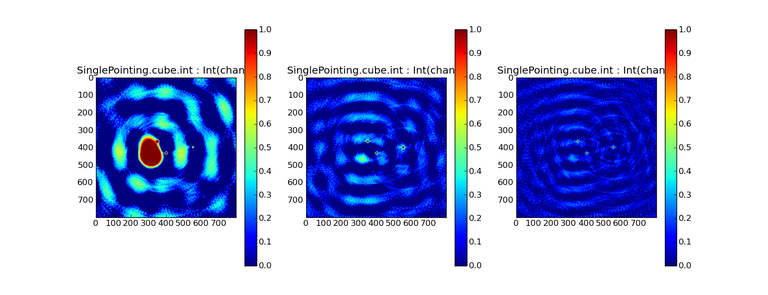

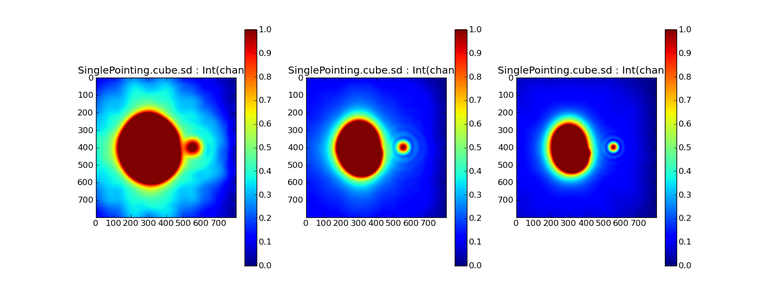

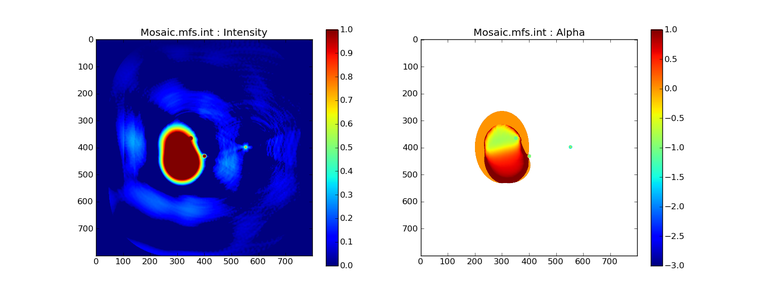

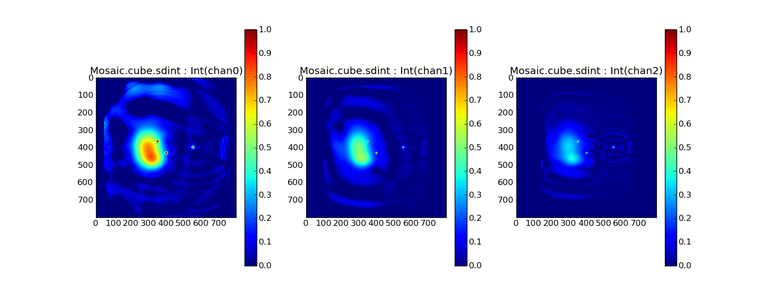

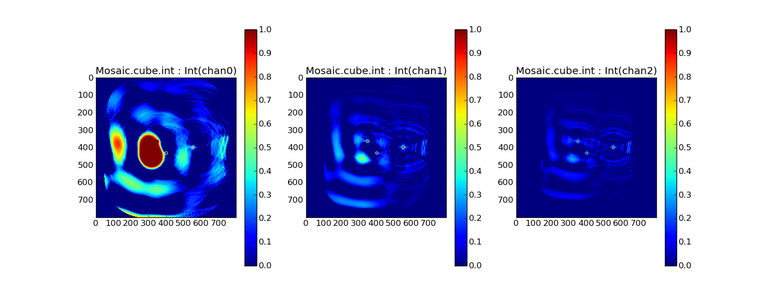

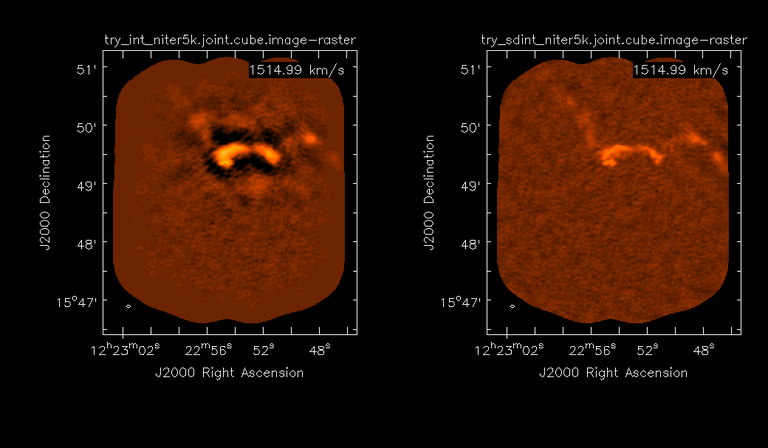

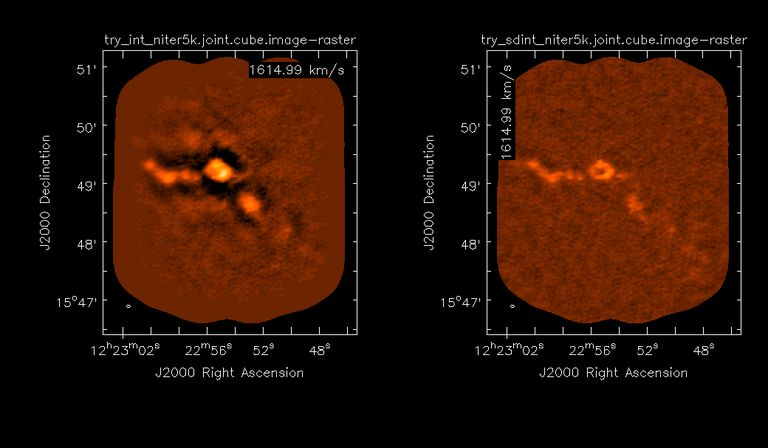

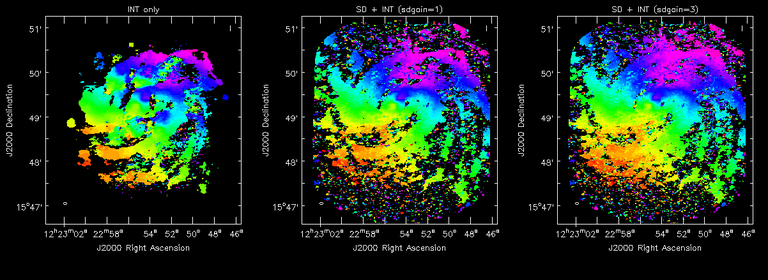

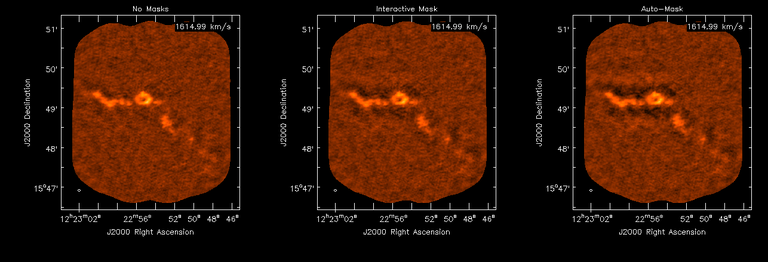

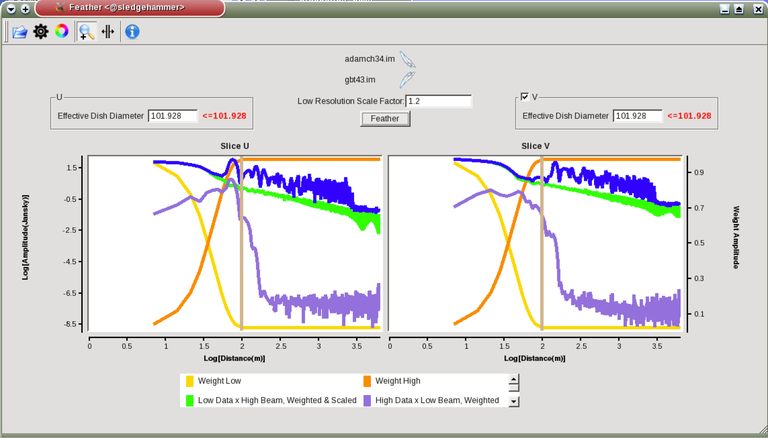

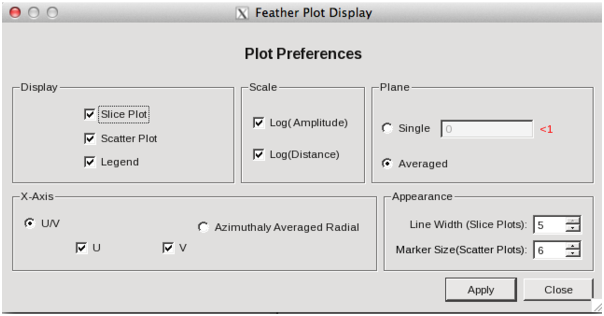

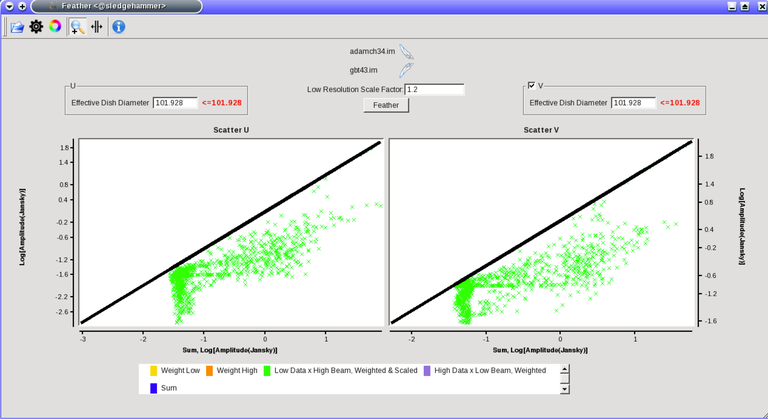

Image Combination Joint Single Dish and Interferometer Image Reconstruction The SDINT imaging algorithm allows joint reconstruction of wideband single dish and interferometer data. This algorithm is available in the task [sdintimaging](../api/casatasks.rstimaging) and described in [Rau, Naik & Braun (2019)](https://iopscience.iop.org/article/10.3847/1538-3881/ab1aa7/meta).Joint reconstruction of wideband single dish and interferometer data in CASA is experimental. Please use at own discretion.The usage modes that have been tested are documented below. SDINT AlgorithmInterferometer data are gridded into an image cube (and corresponding PSF). The single dish image and PSF cubes are combined with the interferometer cubes in a feathering step. The joint image and PSF cubes then form inputs to any deconvolution algorithm (in either *cube* or *mfs/mtmfs* modes). Model images from the deconvolution algorithm are translated back to model image cubes prior to subtraction from both the single dish image cube as well as the interferometer data to form a new pair of residual image cubes to be feathered in the next iteration. In the case of mosaic imaging, primary beam corrections are performed per channel of the image cube, followed by a multiplication by a common primary beam, prior to deconvolution. Therefore, for mosaic imaging, this task always implements *conjbeams=True* and *normtype='flatnoise'*.{.image-inline width="674" height="378"}The input single dish data are the single dish image and psf cubes. The input interferometer data is a MeasurementSet. In addition to imaging and deconvolution parameters from interferometric imaging (task **tclean**), there are controls for a feathering step to combine interferometer and single dish cubes within the imaging iterations. Note that the above diagram shows only the \'mtmfs\' variant. Cube deconvolution proceeds directly with the cubes in the green box above, without the extra conversion back and forth to the multi-term basis. Primary beam handling is also not shown in this diagram, but full details (via pseudocode) are available in the [reference publication.](https://iopscience.iop.org/article/10.3847/1538-3881/ab1aa7)The parameters used for controlling the joint deconvolution are described on the [sdintimaging](../api/casatasks.rstimaging) task pages. Usage ModesThe task **sdintimaging** contains the algorithm for joint reconstruction of wideband single dish and interferometer data. The **sdintimaging** task shares a significant number of parameters with the **tclean** task, but also contains unique parameters. A detailed overview of these parameters, and how to use them, can be found in the CASA Docs [task pages of sdintimaging](../api/casatasks.rstimaging).As seen from the diagram above and described on the **sdintimaging** task pages, there is considerable flexibility in usage modes. One can choose between interferometer-only, singledish-only and joint interferometer-singledish imaging. Outputs are restored images and associated data products (similar to task tclean).The following usage modes are available in the (experimental) sdintimaging task. Tested modes include all 12 combinations of:- Cube Imaging : All combinations of the following options. - *specmode = 'cube'* - *deconvolver = 'multiscale', 'hogbom'* - *usedata = 'sdint', 'sd' , 'int'* - *gridder = 'standard', 'mosaic'* - *parallel = False, True*- Wideband Multi-Term Imaging : All combinations of the following options. - *specmode = 'mfs'* - *deconvolver = 'mtmfs'* ( *nterms=1* for a single-term MFS image, and *nterms>1* for multi-term MFS image. Tests use *nterms=2* ) - *usedata = 'sdint', 'sd' , 'int'* - *gridder = 'standard', 'mosaic'* - *parallel = False, True***NOTE**: When the INT and/or SD cubes have flagged (and therefore empty) channels, only those channels that have non-zero images in both the INT and SD cubes are used for the joint reconstruction.**NOTE**: Single-plane joint imaging may be run with deconvolver='mtmfs' and nterms=1.**NOTE**: All other modes allowed by the new sdintimaging task are currently untested. Tests will be added in subsequent releases. Examples/Demos Basic test resultsThe sdintimaging task was run on a pair of simulated test datasets. Both contain a flat spectrum extended emission feature plus three point sources, two of which have spectral index=-1.0 and one which is flat-spectrum (rightmost point). The scale of the top half of the extended structure was chosen to lie within the central hole in the spatial-frequency plane at the middle frequency of the band so as to generate a situation where the interferometer-only imaging is difficult.Please refer to the [publication](https://iopscience.iop.org/article/10.3847/1538-3881/ab1aa7/meta) for a more detailed analysis of the imaging quality and comparisons of images without and with SD data. Images from a run on the ALMA M100 12m+7m+TP Science Verification Data suite are also shown below.*Single Pointing Simulation :*Wideband Multi-Term Imaging ( deconvolver=\'mtmfs\', specmode=\'mfs\' )- SD + INT A joint reconstruction accurately reconstructs both intensity and spectral index for the extended emission as well as the compact sources.- INT-only The intensity has negative bowls and the spectral index is overly steep, especially for the top half of the extended component.- SD-only The spectral index of the extended emission is accurate (at 0.0) and the point sources are barely visible at this SD angular resolution.Cube Imaging ( deconvolver=\'multiscale\', specmode=\'cube\' )- SD + INT A joint reconstruction has lower artifacts and more accurate intensities in all three channels, compared to the int-only reconstructions below - INT-only The intensity has negative bowls in the lower frequency channels and the extended emission is largely absent at the higher frequencies.- SD-only A demonstration of single-dish cube imaging with deconvolution of the SD-PSF. In this example, iterations have not been run until full convergence, which is why the sources still contain signatures of the PSF.*Mosaic Simulation*An observation of the same sky brightness was simulated with 25 pointings.Wideband Multi-Term Mosaic Imaging ( deconvolver=\'mtmfs\', specmode=\'mfs\' , gridder=\'mosaic\' )- SD + INT A joint reconstruction accurately reconstructs both intensity and spectral index for the extended emission as well as the compact sources. This is a demonstration of joint mosaicing along with wideband single-dish and interferometer combination.- INT-only The intensity has negative bowls and the spectral index is strongly inaccurate. Note that the errors are slightly less than the situation with the single-pointing example (where there was only one pointing's worth of uv-coverage).Cube Mosaic Imaging ( deconvolver='multiscale', specmode='cube', gridder='mosaic' )- SD + INT A joint reconstruction produces better per-channel reconstructions compared to the INT-only situation shown below. This is a demonstration of cube mosaic imaging along with SD+INT joint reconstruction. - INT-only Cube mosaic imaging with only interferometer data. This clearly shows negative bowls and artifacts arising from the missing flux. ALMA M100 Spectral Cube Imaging : 12m + 7m + TPThe sdintimaging task was run on the [ALMA M100 Science Verification Datasets](https://almascience.nrao.edu/alma-data/science-verification).\(1\) The single dish (TP) cube was pre-processed by adding per-plane restoringbeam information.\(2\) Cube specification parameters were obtained from the SD Image as follows```from sdint_helper import * sdintlib = SDINT_helper() sdintlib.setup_cube_params(sdcube='M100_TmP')Output : Shape of SD cube : [90 90 1 70\] Coordinate ordering : ['Direction', 'Direction', 'Stokes', 'Spectral']nchan = 70start = 114732899312.0Hzwidth = -1922516.74324HzFound 70 per-plane restoring beams\(For specmode='mfs' in sdintimaging, please remember to set 'reffreq' to a value within the freq range of the cube.Returned Dict : {'nchan': 70, 'start': '114732899312.0Hz', 'width': '-1922516.74324Hz'}```\(3\) Task sdintimaging was run with automatic SD-PSF generation, n-sigma stopping thresholds, a pb-based mask at the 0.3 gain level, and no other deconvolution masks (interactive=False).```sdintimaging(usedata="sdint", sdimage="../M100_TP", sdpsf="",sdgain=3.0, dishdia=12.0, vis="../M100_12m_7m", imagename="try_sdint_niter5k", imsize=1000, cell="0.5arcsec", phasecenter="J2000 12h22m54.936s +15d48m51.848s", stokes="I", specmode="cube", reffreq="", nchan=70, start="114732899312.0Hz", width="-1922516.74324Hz", outframe="LSRK", veltype="radio", restfreq="115.271201800GHz", interpolation="linear", perchanweightdensity=True, gridder="mosaic", mosweight=True, pblimit=0.2, deconvolver="multiscale", scales=[0, 5, 10, 15, 20], smallscalebias=0.0, pbcor=False, weighting="briggs", robust=0.5, niter=5000, gain=0.1, threshold=0.0, nsigma=3.0, interactive=False, usemask="user", mask="", pbmask=0.3)```**Results from two channels are show below. **LEFT : INT only (12m+7m) and RIGHT : SD+INT (12m + 7m + TP)Channel 23Channel 43 Moment 0 Maps : LEFT : INT only. MIDDLE : SD + INT with sdgain=1.0 RIGHT : SD + INT with sdgain=3.0Moment 1 Maps : LEFT : INT only. MIDDLE : SD + INT with sdgain=1.0 RIGHT : SD + INT with sdgain=3.0A comparison (shown for one channel) with and without masking is shown below. Notes : - In the reconstructed cubes, negative bowls have clearly been eliminated by using sdintimaging to combine interferometry + SD data. Residual images are close to noise-like too (not pictured above) suggesting a well-constrained and steadily converging imaging run. - The source structure is visibly different from the INT-only case, with high and low resolution structure appearing more well defined. However, the *high-resolution* peak flux in the SDINT image cube is almost a factor of 3 lower than the INT-only. While this may simply be because of deconvolution uncertainty in the ill-constrained INT-only reconstruction, it requires more investigation to evaluate absolute flux correctness. For example, it will be useful to evaluate if the INT-only reconstructed flux changes significantly with careful hand-masking. - Compare with a Feathered image : http://www.astroexplorer.org/details/apjaa60c2f1 : The reconstructed structure is consistent.- The middle and right panels compare reconstructions with different values of sdgain (1.0 and 3.0). The sdgain=3.0 run has a noticeable emphasis on the SD flux in the reconstructed moment maps, while the high resolution structures have the same are the same between sdgain=1 and 3. This is consistent with expectations from the algorithm, but requires further investigation to evaluate robustness in general.- Except for the last panel, no deconvolution masks were used (apart from a *pbmask* at the 0.3 gain level). The deconvolution quality even without masking is consistent with the expectation that when supplied with better data constraints in a joint reconstruction, the native algorithms are capable of converging on their own. In this example (same *niter* and *sdgain*), iterative cleaning with interactive and auto-masks (based mostly on interferometric peaks in the images) resulted in more artifacts compared to a run that allowed multi-scale clean to proceed on its own.- The results using sdintimaging on these ALMA data can be compared with performance results when [using feather](https://casaguides.nrao.edu/index.php?title=M100_Band3_Combine_5.4), and when [using tp2vis](https://science.nrao.edu/facilities/alma/alma-develop-old-022217/tp2vis_final_report.pdf) (ALMA study by J. Koda and P. Teuben). Fitting a new restoring beam to the Feathered PSFSince the deconvolution uses a joint SD+INT point spread function, the restoring beam is re-fitted after the feather step within the sdintimaging task. As a convenience feature, the corresponding tool method is also available to the user and may be used to invoke PSF refitting standalone, without needing an MS or any gridding of weights to make the PSF. This method will look for the imagename.psf (or imagename.psf.tt0), fit and set the new restoring beam. It is tied to the naming convention of tclean.```synu = casac.synthesisutils();synu.fitPsfBeam(imagename='qq', psfcutoff=0.3) Cubessynu.fitPsfBeam(imagename='qq', nterms=2, psfcutoff=0.3) Multi-term``` Tested Use CasesThe following is a list of use cases that have simulation-based functional verification tests within CASA.1. Wideband mulit-term imaging (SD+Int) Wideband data single field imaging by joint-reconstruction from single dish and interferometric data to obtain the high resolution of the interferometer while account for the zero spacing information. Use multi-term multi-frequency synthesis (MTMFS) algorithm to properly account for spectral information of the source.2. Wideband multi-term imaging: Int only The same as 1 except for using interferometric data only, which is useful to make a comparison with 1 (i.e. effect of missing flux). This is equivalent to running 'mtmfs' with specmode='mfs' and gridder='standard' in tclean 3. Wideband multi-term imaging: SD only The same as 1 expect for using single dish data only which is useful to make a comparison with 1 (i.e. to see how much high resolution information is missing). Also, sometimes, the SD PSF has significant sidelobes (Airy disk) and even single dish images can benefit from deconvolution. This is a use case where wideband multi-term imaging is applied to SD data alone to make images at the highest possible resolution as well as to derive spectral index information. 4. Single field cube imaging: SD+Int Spectral cube single field imaging by joint reconstruction of single dish and interferometric data to obtain single field spectral cube image. Use multi-scale clean for deconvolution 5. Single field cube imaging: Int only The same as 4 except for using the interferometric data only, which is useful to make a comparison with 4 (i.e. effect of missing flux). This is equivalent to running 'multiscale' with specmode='cube' and gridder='standard' in tclean.6. Single field cube imaging: SD only The same as 4 except for using the single dish data only, which is useful to make a comparison with 4 (i.e. to see how much high resolution information is missing) Also, it addresses the use case where SD PSF sidelobes are significant and where the SD images could benefit from multiscale (or point source) deconvolution per channel. 7. Wideband multi-term mosaic Imaging: SD+Int Wideband data mosaic imaging by joint-reconstruction from single dish and interferometric data to obtain the high resolution of the interferometer while account for the zero spacing information. Use multi-term multi-frequency synthesis (MTMFS) algorithm to properly account for spectral information of the source. Implement the concept of conjbeams (i.e. frequency dependent primary beam correction) for wideband mosaicing. 8. Wideband multi-term mosaic imaging: Int only The same as 7 except for using interferometric data only, which is useful to make a comparison with 7 (i.e. effect of missing flux). Also, this is an alternate implementation of the concept of conjbeams ( frequency dependent primary beam correction) available via tclean, and which is likely to be more robust to uv-coverage variations (and sumwt) across frequency. 9. Wideband multi-term mosaic imaging: SD only The same as 7 expect for using single dish data only which is useful to make a comparison with 7 (i.e. to see how much high resolution information is missing). This is the same situation as (3), but made on an image coordinate system that matches an interferometer mosaic mtmfs image. 10. Cube mosaic imaging: SD+Int Spectral cube mosaic imaging by joint reconstruction of single dish and interferometric data. Use multi-scale clean for deconvolution. 11. Cube mosaic imaging: Int only The same as 10 except for using the intererometric data only, which is useful to make a comparison with 10 (i.e. effect of missing flux). This is the same use case as gridder='mosaic' and deconvolver='multiscale' in tclean for specmode='cube'.12. Cube mosaic imaging: SD only The same as 10 except for using the single dish data only, which is useful to make a comparison with 10 (i.e. to see how much high resolution information is missing). This is the same situation as (6), but made on an image coordinate system that matches an interferometer mosaic cube image. 13. Wideband MTMFS SD+INT with channel 2 flagged in INT The same as 1, but with partially flagged data in the cubes. This is a practical reality with real data where the INT and SD data are likely to have gaps in the data due to radio frequency interferenece or other weight variations. 14. Cube SD+INT with channel 2 flagged The same as 4, but with partially flagged data in the cubes. This is a practical reality with real data where the INT and SD data are likely to have gaps in the data due to radio frequency interferenece or other weight variations. 15. Wideband MTMFS SD+INT with sdpsf="" The same as 1, but with an unspecified sdpsf. This triggers the auto-calculation of the SD PSF cube using restoring beam information from the regridded input sdimage. 16. INT-only cube comparison between tclean and sdintimaging Compare cube imaging results for a functionally equivalent run.17. INT-only mtmfs comparison between tclean and sdintimaging Compare mtmfs imaging results for a functionally equivalent run. Note that the sdintimaging task implements wideband primary beam correction in the image domain on the cube residual image, whereas tclean uses the 'conjbeams' parameter to apply an approximation of this correction during the gridding step.Note : Serial and Parallel Runs for an ALMA test dataset have been shown to be consistent to a 1e+6 dynamic range, consistent with differences measured for our current implementation of cube parallelization. References[Urvashi Rau, Nikhil Naik, and Timothy Braun 2019 AJ 158, 1](https://iopscience.iop.org/article/10.3847/1538-3881/ab1aa7/meta)https://github.com/urvashirau/WidebandSDINT*** Feather & CASAfeather Feathering is a technique used to combine a Single Dish (SD) image with an interferometric image of the same field.The goal of this process is to reconstruct the source emission on all spatial scales, ranging from the small spatial scales measured by the interferometer to the large-scale structure measured by the single dish. To do this, feather combines the images in Fourier space, weighting them by the spatial frequency response of each image. This technique assumes that the spatial frequencies of the single dish and interferometric data partially overlap. The subject of interferometric and single dish data combination has a long history. See the introduction of Koda et al 2011 (and references therein) [\[1\]](Bibliography) for a concise review, and Vogel et al 1984 [\[2\]](Bibliography), Stanimirovic et al 1999 [\[3\]](Bibliography), Stanimirovic 2002 [\[4\]](Bibliography), Helfer et al 2003 [\[5\]](Bibliography), and Weiss et al 2001 [\[6\]](Bibliography), among other referenced papers, for other methods and discussions concerning the combination of single dish and interferometric data.The feathering algorithm implemented in CASA is as follows: 1. Regrid the single dish image to match the coordinate system, image shape, and pixel size of the high resolution image. 2. Transform each image onto uniformly gridded spatial-frequency axes.3. Scale the Fourier-transformed low-resolution image by the ratio of the volumes of the two \'clean beams\' (high-res/low-res) to convert the single dish intensity (in Jy/beam) to that corresponding to the high resolution intensity (in Jy/beam). The volume of the beam is calculated as the volume under a two dimensional Gaussian with peak 1 and major and minor axes of the beam corresponding to the major and minor axes of the Gaussian. 4. Add the Fourier-transformed data from the high-resolution image, scaled by $(1-wt)$ where $wt$ is the Fourier transform of the \'clean beam\' defined in the low-resolution image, to the scaled low resolution image from step 3.5. Transform back to the image plane.The input images for feather must have the following characteristics:1. Both input images must have a well-defined beam shape for this task to work, which will be a \'clean beam\' for interferometric images and a \'primary-beam\' for a single-dish image. The beam for each image should be specified in the image header. If a beam is not defined in the header or feather cannot guess the beam based on the telescope parameter in the header, then you will need to add the beam size to the header using **imhead**. 2. Both input images must have the same flux density normalization scale. If necessary, the SD image should be converted from temperature units to Jy/beam. Since measuring absolute flux levels is difficult with single dishes, the single dish data is likely to be the one with the most uncertain flux calibration. The SD image flux can be scaled using the parameter *sdfactor* to place it on the same scale as the interferometer data. The casafeather task (see below) can be used to investigate the relative flux scales of the images.Feather attemps to regrid the single dish image to the interferometric image. Given that the single dish image frequently originates from other data reduction packages, CASA may have trouble performing the necessary regridding steps. If that happens, one may try to regrid the single dish image manually to the interferometric image. CASA has a few tasks to perform individual steps, including **imregrid** for coordinate transformations, **imtrans** to swap and reverse coordinate axes, the tool **ia.adddegaxes()** for adding degenerate axes (e.g. a single Stokes axis). See the \"[Image Analysis](image_analysis.ipynbimage-analysis)\" chapter for additional options. If you have trouble changing image projections, you can try the [montage package](http://montage.ipac.caltech.edu/), which also has an [associated python wrapper](http://www.astropy.org/montage-wrapper/).If you are feathering large images together, set the numbers of pixels along the X and Y axes to composite (non-prime) numbers in order to improve the algorithm speed. In general, FFTs work much faster on even and composite numbers. Then use the subimage task or tool to trim the number of pixels to something desirable. Inputs for task featherThe inputs for **feather** are: ```feather :: Combine two images using their Fourier transformsimagename = '' Name of output feathered imagehighres = '' Name of high resolution (interferometer) imagelowres = '' Name of low resolution (single dish) imagesdfactor = 1.0 Scale factor to apply to Single Dish imageeffdishdiam = -1.0 New effective SingleDish diameter to use in mlowpassfiltersd = False Filter out the high spatial frequencies of the SD image```The SD data cube is specified by the *lowres* parameter and the interferometric data cube by the *highres* parameter. The combined, feathered output cube name is given by the *imagename* parameter. The parameter *sdfactor* can be used to scale the flux calibration of the SD cube. The parameter *effdishdiam* can be used to change the weighting of the single dish image.The weighting functions for the data are usually the Fourier transform of the Single Dish beam FFT(PB~SD~) for the Single dish data, and the inverse, 1-FFT(PB~SD~), for the interferometric data. It is possible, however, to change the weighting functions by pretending that the SD is smaller in size via the *effdishdiam* parameter. This tapers the high spatial frequencies of the SD data and adds more weight to the interferometric data. The *lowpassfiltersd* can take out non-physical artifacts at very high spatial frequencies that are often present in SD data.Note that the only inputs are for images; **feather** will attempt to regrid the images to a common shape, i.e. pixel size, pixel numbers, and spectral channels. If you are having issues with the regridding inside feather, you may consider regridding using the **imregrid** and **specsmooth** tasks.The **feather** task does not perform any deconvolution but combines the single dish image with a presumably deconvolved interferometric image. The short spacings of the interferometric image that are extrapolated by the deconvolution process will be those that are down-weighted the most when combined with the single dish data. The single dish image must have a well-defined beam shape and the correct flux units for a model image (Jy/beam instead of Jy/pixel). Use the tasks **imhead** and **immath** first to convert if needed.Starting with a cleaned synthesis image and a low resolution image from a single dish telescope, the following example shows how they can be feathered: ```feather(imagename ='feather.im', Create an image called feather.im highres ='synth.im', The synthesis image is called synth.im lowres ='single_dish.im') The SD image is called single_dish.im``` Visual Interface for feather (casafeather)CASA also provides a visual interface to the **feather** task. The interface is run from a command line *outside* CASA by typing casafeather in a shell. An example of the interface is shown below. To start, one needs to specify a high and a low resolution image, typically an interferometric and a single dish map. Note that the single dish map needs to be in units of Jy/beam. The output image name can be specified. The non-deconvolved (dirty) interferometric image can also be specified to use as diagnostic of the relative flux scaling of the single dish and interferometer images. See below for more details. At the top of the display, the parameters *effdshdiameter* and *sdfactor* can be provided in the "Effective Dish Diameter" and "Low Resolution Scale Factor" input boxes. One you have specified the images and parameters, press the "Feather" button in the center of the GUI window to start the feathering process. The feathering process here includes regridding the low resolution image to the high resolution image.>Figure 1: The panel shows the "Original Data Slice", which are cuts through the u and v directions of the Fourier-transformed input images. Green is the single dish data (low resolution) and purple the interferometric data (high resolution). To bring them on the same flux scale, the low data were convolved to the high resolution beam and vice versa (selectable in color preferences). In addition, a single dish scaling of 1.2 was applied to adjust calibration differences. The weight functions are shown in yellow (for the low resolution data) and orange (for the high resolution data). The weighting functions were also applied to the green and purple slices. Image slices of the combined, feathered output image are shown in blue. The displays also show the location of the effective dish diameter by the vertical line. This value is kept at the original single dish diameter that is taken from the respective image header. The initial casafeather display shows two rows of plots. The panel shows the "Original Data Slice", which are either cuts through the u and v directions of the Fourier-transformed input images or a radial average. A vertical line shows the location of the effective dish diameter(s). The blue lines are the combined, feathered slices.>Figure 2: The casafeather "customize" window.The \'Customize\' button (gear icon on the top menu page) allows one to set the display parameters. Options are to show the slice plot, the scatter plot, or the legend. One can also select between logarithmic and linear axes; a good option is usually to make both axes logarithmic. You can also select whether the x-axis for the slices are in the u, or v, or both directions, or, alternatively a radial average in the uv-plane. For data cubes, one can also select a particular velocity plane, or to average the data across all velocity channels. The scatter plot can display any two data sets on the two axes, selected from the \'Color Preferences\' menu. The data can be the unmodified, original data, or data that have been convolved with the high or low resolution beams. One can also select to display data that were weighted and scaled by the functions discussed above.>Figure 3: The scatter plot in casafeather. The low data, convolved with high beam, weighted and scaled is still somewhat below the equality line (plotted against high data, convolved with low beam, weighted). In this case one can try to adjust the \"low resolution scale factor\" to bring the values closer to the line of equality, ie. to adjust the calibration scales. Plotting the data as a scatter plot is a useful diagnostic tool for checking for differences in flux scaling between the high and low resolution data sets.The dirty interferometer image contains the actual flux measurements made by the telescope. Therefore, if the single dish scaling is correct, the flux in the dirty image convolved with the low resolution beam and with the appropriate weighting applied should be the same as the flux of the low-resolution data convolved with the high resolution beam once weighted and scaled. If not, the *sdfactor* parameter can be adjusted until they are the same. One may also use the cleaned high resolution image instead of the dirty image, if the latter is not available. However, note that the cleaned high resolution image already contains extrapolations to larger spatial scales that may bias the comparison.*** Bibliography1. Koda et al 2011 (http://adsabs.harvard.edu/abs/2011ApJS..193...19K)2. Vogel et al 1984 (http://adsabs.harvard.edu/abs/1984ApJ...283..655V)3. Stanimirovic et al 1999 (http://adsabs.harvard.edu/abs/1999MNRAS.302..417S)4. Stanimirovic et al 2002 (http://adsabs.harvard.edu/abs/2002ASPC..278..375S)5. Helfer et al 2003 (http://adsabs.harvard.edu/abs/2003ApJS..145..259H)6. Weiss et al 2001 (http://adsabs.harvard.edu/abs/2001A%26A...365..571W) | _____no_output_____ | Apache-2.0 | docs/notebooks/image_combination.ipynb | yohei99/casadocs |

|

IPL Dataset Analysis Problem StatementWe want to know as to what happens during an IPL match which raises several questions in our mind with our limited knowledge about the game called cricket on which it is based. This analysis is done to know as which factors led one of the team to win and how does it matter. About the Dataset :The Indian Premier League (IPL) is a professional T20 cricket league in India contested during April-May of every year by teams representing Indian cities. It is the most-attended cricket league in the world and ranks sixth among all the sports leagues. It has teams with players from around the world and is very competitive and entertaining with a lot of close matches between teams.The IPL and other cricket related datasets are available at [cricsheet.org](https://cricsheet.org/%c2%a0(data). Feel free to visit the website and explore the data by yourself as exploring new sources of data is one of the interesting activities a data scientist gets to do. About the dataset:Snapshot of the data you will be working on:The dataset 1452 data points and 23 features|Features|Description||-----|-----||match_code|Code pertaining to individual match||date|Date of the match played||city|Location where the match was played||team1|team1||team2|team2||toss_winner|Who won the toss out of two teams||toss_decision|toss decision taken by toss winner||winner|Winner of that match between two teams||win_type|How did the team won(by wickets or runs etc.)||win_margin|difference with which the team won| |inning|inning type(1st or 2nd)||delivery|ball delivery||batting_team|current team on batting||batsman|current batsman on strike||non_striker|batsman on non-strike||bowler|Current bowler||runs|runs scored||extras|extra run scored||total|total run scored on that delivery including runs and extras||extras_type|extra run scored by wides or no ball or legby||player_out|player that got out||wicket_kind|How did the player got out||wicket_fielders|Fielder who caught out the player by catch| Analysing data using numpy module Read the data using numpy module. | import numpy as np

# Not every data format will be in csv there are other file formats also.

# This exercise will help you deal with other file formats and how toa read it.

path = './ipl_matches_small.csv'

data_ipl = np.genfromtxt(path, delimiter=',', skip_header=1, dtype=str)

print(data_ipl) | [['392203' '2009-05-01' 'East London' ... '' '' '']

['392203' '2009-05-01' 'East London' ... '' '' '']

['392203' '2009-05-01' 'East London' ... '' '' '']

...

['335987' '2008-04-21' 'Jaipur' ... '' '' '']

['335987' '2008-04-21' 'Jaipur' ... '' '' '']

['335987' '2008-04-21' 'Jaipur' ... '' '' '']]

| MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

Calculate the unique no. of matches in the provided dataset ? | # How many matches were held in total we need to know so that we can analyze further statistics keeping that in mind.im

import numpy as np

unique_match_code=np.unique(data_ipl[:,0])

print(unique_match_code) | ['335987' '392197' '392203' '392212' '501226' '729297']

| MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

Find the set of all unique teams that played in the matches in the data set. | # this exercise deals with you getting to know that which are all those six teams that played in the tournament.

import numpy as np

unique_match_team3=np.unique(data_ipl[:,3])

print(unique_match_team3)

unique_match_team4=np.unique(data_ipl[:,4])

print(unique_match_team4)

union=np.union1d(unique_match_team3,unique_match_team4)

print(union)

unique=np.unique(union)

print(unique)

| _____no_output_____ | MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

Find sum of all extras in all deliveries in all matches in the dataset | # An exercise to make you familiar with indexing and slicing up within data.

import numpy as np

extras=data_ipl[:,17]

data=extras.astype(np.int)

print(sum(data))

| 88

| MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

Get the array of all delivery numbers when a given player got out. Also mention the wicket type. | import numpy as np

deliveries=[]

wicket_type=[]

for i in data_ipl:

if(i[20]!=""):

a=i[11]

b=i[21]

deliveries.append(a)

wicket_type.append(b)

print(deliveries)

print(wicket_type)

| _____no_output_____ | MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

How many matches the team `Mumbai Indians` has won the toss? | data_arr=[]

for i in data_ipl:

if(i[5]=="Mumbai Indians"):

data_arr.append(i[0])

unique_match_id=np.unique(data_arr)

print(unique_match_id)

print(len(unique_match_id))

| ['392197' '392203']

2

| MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

Create a filter that filters only those records where the batsman scored 6 runs. Also who has scored the maximum no. of sixes overall ? | # An exercise to know who is the most aggresive player or maybe the scoring player

import numpy as np

counter=0

run_dict={}

arr=[]

for i in data_ipl:

#print(i[13])

#current_run = i[16]

#prev_run = run_dict[batsman_nm]

#batsman_nm = i[13]

#if prev_run == None:

#run_dict[batsman_nm] = current_run

#else:

#run_dict[batsman_nm] = run_dict[batsman_nm]current_run

if i[13] in run_dict:

run_dict[i[13]]=run_dict[i[13]]+int(i[16])

else:

run_dict[i[13]]=int(i[16])

print(run_dict)

| _____no_output_____ | MIT | Manipulating_Data_with_NumPy_Code_Along.ipynb | vidSanas/greyatom-python-for-data-science |

读取数据 | import torchvision.transforms as T

img_shape = (3, 224, 224)

def read_raw_img(path, resize, L=False):

img = Image.open(path)

if resize:

img = img.resize(resize)

if L:

img = img.convert('L')

return np.asarray(img)

class DogCat(data.Dataset):

def __init__(self,path, img_shape):

# self.batch_size = batch_size

self.img_shape = img_shape

imgs = os.listdir(path)

random.shuffle(imgs)

self.imgs = [os.path.join(path, img) for img in imgs]

# normalize = T.Normalize(mean = [0.485, 0.456, 0.406],

# std = [0.229, 0.224, 0.225])

# self.transforms = T.Compose([T.Resize(224),

# T.CenterCrop(224),

# T.ToTensor(),

# normalize])

def __getitem__(self, index):

# start = index * self.batch_size

# end = min(start + self.batch_size, len(self.imgs))

# size = end - start

# assert size > 0

# img_paths = self.imgs[start:end]

# a = t.zeros((size,) + self.img_shape, requires_grad=True)

# b = t.zeros((size, 1))

# for i in range(size):

img = read_raw_img(self.imgs[index], self.img_shape[1:], L=False).transpose((2,1,0))

# img = Image.open(img_paths[i])

x = t.from_numpy(img)

# a[i] = self.transforms(img)

y = 1 if 'dog' in self.imgs[index].split('/')[-1].split('.')[0] else 0

return x, y

def __len__(self):

return len(self.imgs)

train = DogCat(path+'train', img_shape) | _____no_output_____ | Apache-2.0 | Pytorch/Task4.ipynb | asd55667/DateWhale |

构建模型 | import math

class Vgg16(nn.Module):

def __init__(self, features, num_classes=1, init_weights=True):

super(Vgg16, self).__init__()

self.features = features

self.classifier = nn.Sequential(

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, num_classes),

)

if init_weights:

self._initialize_weights()

def forward(self, x):

x = self.features(x)

x = x.view(x.size(0), -1)

x = self.classifier(x)

x = t.sigmoid(x)

return x

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

if m.bias is not None:

m.bias.data.zero_()

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

m.weight.data.normal_(0, 0.01)

m.bias.data.zero_()

def make_layers(cfg, mode, batch_norm=False):

layers = []

if mode == 'RGB':

in_channels = 3

elif mode == 'L':

in_channels = 1

else:

print('only RGB or L mode')

for v in cfg:

if v == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

else:

conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)

if batch_norm:

layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]

else:

layers += [conv2d, nn.ReLU(inplace=True)]

in_channels = v

return nn.Sequential(*layers)

cfg = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'] | _____no_output_____ | Apache-2.0 | Pytorch/Task4.ipynb | asd55667/DateWhale |

损失函数与优化器 | vgg = Vgg16(make_layers(cfg, 'RGB')).cuda()

print(vgg)

criterion = nn.BCELoss()

optimizer = t.optim.Adam(vgg.parameters(),lr=0.001) | Vgg16(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace)

(2): Dropout(p=0.5)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace)

(5): Dropout(p=0.5)

(6): Linear(in_features=4096, out_features=1, bias=True)

)

)

| Apache-2.0 | Pytorch/Task4.ipynb | asd55667/DateWhale |

模型训练 | use_cuda = t.cuda.is_available()

device = t.device("cuda:0" if use_cuda else "cpu")

# cudnn.benchmark = True

# Parameters

params = {'batch_size': 64,

'shuffle': True,

'num_workers': 6}

max_epochs = 1

# train = DogCat(path+'train', img_shape)

training_generator = data.DataLoader(train, **params)

for epoch in range(max_epochs):

for x, y_ in training_generator:

x, y_ = x.float().to(device), y_.float().to(device)

y = vgg(x)

loss = criterion(y, y_)

loss.backward()

optimizer.step()

| /home/wcw/anaconda3/envs/tf/lib/python3.6/site-packages/torch/nn/functional.py:2016: UserWarning: Using a target size (torch.Size([64])) that is different to the input size (torch.Size([64, 1])) is deprecated. Please ensure they have the same size.

"Please ensure they have the same size.".format(target.size(), input.size()))

/home/wcw/anaconda3/envs/tf/lib/python3.6/site-packages/torch/nn/functional.py:2016: UserWarning: Using a target size (torch.Size([28])) that is different to the input size (torch.Size([28, 1])) is deprecated. Please ensure they have the same size.

"Please ensure they have the same size.".format(target.size(), input.size()))

| Apache-2.0 | Pytorch/Task4.ipynb | asd55667/DateWhale |

模型评估 | accs = []

test = DogCat(path+'test', img_shape=img_shape)

test_loader = data.DataLoader(test, **params)

with t.set_grad_enabled(False):

for x, y_ in test_loader:

x, y_ = x.float().to(device), y_.float().to(device)

y = vgg(x)

acc = y.eq(y_).sum().item()/y.shape[0]

# acc = t.max(y, 1)[1].eq(t.max(y_, 1)[1]).sum().item()/y.shape[0]

accs.append(acc)

np.mean(accs) | _____no_output_____ | Apache-2.0 | Pytorch/Task4.ipynb | asd55667/DateWhale |

<imgsrc="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"><imgsrc="https://img.shields.io/badge/GitHub-100000?logo=github&logoColor=white" alt="GitHub"> Text Annotation Import* This notebook will provide examples of each supported annotation type for text assets. It will cover the following: * Model-assisted labeling - used to provide pre-annotated data for your labelers. This will enable a reduction in the total amount of time to properly label your assets. Model-assisted labeling does not submit the labels automatically, and will need to be reviewed by a labeler for submission. * Label Import - used to provide ground truth labels. These can in turn be used and compared against prediction labels, or used as benchmarks to see how your labelers are doing. * For information on what types of annotations are supported per data type, refer to this documentation: * https://docs.labelbox.com/docs/model-assisted-labelingoption-1-import-via-python-annotation-types-recommended * Notes: * Wait until the import job is complete before opening the Editor to make sure all annotations are imported properly. Installs | !pip install -q 'labelbox[data]' | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Imports | from labelbox.schema.ontology import OntologyBuilder, Tool, Classification, Option

from labelbox import Client, LabelingFrontend, LabelImport, MALPredictionImport

from labelbox.data.annotation_types import (

Label, TextData, Checklist, Radio, ObjectAnnotation, TextEntity,

ClassificationAnnotation, ClassificationAnswer

)

from labelbox.data.serialization import NDJsonConverter

import uuid

import json

import numpy as np | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

API Key and ClientProvide a valid api key below in order to properly connect to the Labelbox Client. | # Add your api key

API_KEY = None

client = Client(api_key=API_KEY) | INFO:labelbox.client:Initializing Labelbox client at 'https://api.labelbox.com/graphql'

| Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

---- Steps1. Make sure project is setup2. Collect annotations3. Upload Project setup We will be creating two projects, one for model-assisted labeling, and one for label imports | ontology_builder = OntologyBuilder(

tools=[

Tool(tool=Tool.Type.NER, name="named_entity")

],

classifications=[

Classification(class_type=Classification.Type.CHECKLIST, instructions="checklist", options=[

Option(value="first_checklist_answer"),

Option(value="second_checklist_answer")

]),

Classification(class_type=Classification.Type.RADIO, instructions="radio", options=[

Option(value="first_radio_answer"),

Option(value="second_radio_answer")

])])

mal_project = client.create_project(name="text_mal_project")

li_project = client.create_project(name="text_label_import_project")

dataset = client.create_dataset(name="text_annotation_import_demo_dataset")

test_txt_url = "https://storage.googleapis.com/labelbox-sample-datasets/nlp/lorem-ipsum.txt"

data_row = dataset.create_data_row(row_data=test_txt_url)

editor = next(client.get_labeling_frontends(where=LabelingFrontend.name == "Editor"))

mal_project.setup(editor, ontology_builder.asdict())

mal_project.datasets.connect(dataset)

li_project.setup(editor, ontology_builder.asdict())

li_project.datasets.connect(dataset) | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Create Label using Annotation Type Objects* It is recommended to use the Python SDK's annotation types for importing into Labelbox. Object Annotations | def create_objects():

named_enity = TextEntity(start=10,end=20)

named_enity_annotation = ObjectAnnotation(value=named_enity, name="named_entity")

return named_enity_annotation | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Classification Annotations | def create_classifications():

checklist = Checklist(answer=[ClassificationAnswer(name="first_checklist_answer"),ClassificationAnswer(name="second_checklist_answer")])

checklist_annotation = ClassificationAnnotation(value=checklist, name="checklist")

radio = Radio(answer = ClassificationAnswer(name = "second_radio_answer"))

radio_annotation = ClassificationAnnotation(value=radio, name="radio")

return checklist_annotation, radio_annotation | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Create a Label object with all of our annotations | image_data = TextData(uid=data_row.uid)

named_enity_annotation = create_objects()

checklist_annotation, radio_annotation = create_classifications()

label = Label(

data=image_data,

annotations = [

named_enity_annotation, checklist_annotation, radio_annotation

]

)

label.__dict__ | _____no_output_____ | Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Model Assisted Labeling To do model-assisted labeling, we need to convert a Label object into an NDJSON. This is easily done with using the NDJSONConverter classWe will create a Label called mal_label which has the same original structure as the label aboveNotes:* Each label requires a valid feature schema id. We will assign it using our built in `assign_feature_schema_ids` method* the NDJsonConverter takes in a list of labels | mal_label = Label(

data=image_data,

annotations = [

named_enity_annotation, checklist_annotation, radio_annotation

]

)

mal_label.assign_feature_schema_ids(ontology_builder.from_project(mal_project))

ndjson_labels = list(NDJsonConverter.serialize([mal_label]))

ndjson_labels

upload_job = MALPredictionImport.create_from_objects(

client = client,

project_id = mal_project.uid,

name="upload_label_import_job",

predictions=ndjson_labels)

# Errors will appear for each annotation that failed.

# Empty list means that there were no errors

# This will provide information only after the upload_job is complete, so we do not need to worry about having to rerun

print("Errors:", upload_job.errors) | INFO:labelbox.schema.annotation_import:Sleeping for 10 seconds...

| Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Label Import Label import is very similar to model-assisted labeling. We will need to re-assign the feature schema before continuing, but we can continue to use our NDJSonConverterWe will create a Label called li_label which has the same original structure as the label above | #for the purpose of this notebook, we will need to reset the schema ids of our checklist and radio answers

image_data = TextData(uid=data_row.uid)

named_enity_annotation = create_objects()

checklist_annotation, radio_annotation = create_classifications()

li_label = Label(

data=image_data,

annotations = [

named_enity_annotation, checklist_annotation, radio_annotation

]

)

li_label.assign_feature_schema_ids(ontology_builder.from_project(li_project))

ndjson_labels = list(NDJsonConverter.serialize([li_label]))

ndjson_labels, li_project.ontology().normalized

upload_job = LabelImport.create_from_objects(

client = client,

project_id = li_project.uid,

name="upload_label_import_job",

labels=ndjson_labels)

print("Errors:", upload_job.errors) | INFO:labelbox.schema.annotation_import:Sleeping for 10 seconds...

| Apache-2.0 | examples/model_assisted_labeling/ner_mal.ipynb | Cyniikal/labelbox-python |

Hough Lines Import resources and display the image | import numpy as np

import matplotlib.pyplot as plt

import cv2

%matplotlib inline

# Read in the image

image = cv2.imread('images/phone.jpg')

# Change color to RGB (from BGR)

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

plt.imshow(image) | _____no_output_____ | MIT | 1_2_Convolutional_Filters_Edge_Detection/.ipynb_checkpoints/6_1. Hough lines-checkpoint.ipynb | sxtien/CVND_Exercises |

Perform edge detection | # Convert image to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

# Define our parameters for Canny

low_threshold = 50

high_threshold = 100

edges = cv2.Canny(gray, low_threshold, high_threshold)

plt.imshow(edges, cmap='gray') | _____no_output_____ | MIT | 1_2_Convolutional_Filters_Edge_Detection/.ipynb_checkpoints/6_1. Hough lines-checkpoint.ipynb | sxtien/CVND_Exercises |

Find lines using a Hough transform | # Define the Hough transform parameters

# Make a blank the same size as our image to draw on

rho = 1

theta = np.pi/180

threshold = 60

min_line_length = 50

max_line_gap = 5

line_image = np.copy(image) #creating an image copy to draw lines on

# Run Hough on the edge-detected image

lines = cv2.HoughLinesP(edges, rho, theta, threshold, np.array([]),

min_line_length, max_line_gap)

# Iterate over the output "lines" and draw lines on the image copy

for line in lines:

for x1,y1,x2,y2 in line:

cv2.line(line_image,(x1,y1),(x2,y2),(255,0,0),5)

plt.imshow(line_image) | _____no_output_____ | MIT | 1_2_Convolutional_Filters_Edge_Detection/.ipynb_checkpoints/6_1. Hough lines-checkpoint.ipynb | sxtien/CVND_Exercises |

import torch

x = torch.arange(18).view(3,2,3)

print(x)

print(x[0,0,0])

print(x[1,0,0])

print(x[1,1,1])

x[1,0:2,0:2] | _____no_output_____ | MIT | Chapter3_Slicing_3D_Tensors.ipynb | SokichiFujita/PyTorch-for-Deep-Learning-and-Computer-Vision |

|

Using the same code as before, please solve the following exercises 1. Change the number of observations to 100,000 and see what happens. 2. Play around with the learning rate. Values like 0.0001, 0.001, 0.1, 1 are all interesting to observe. 3. Change the loss function. An alternative loss for regressions is the Huber loss. The Huber loss is more appropriate than the L2-norm when we have outliers, as it is less sensitive to them (in our example we don't have outliers, but you will surely stumble upon a dataset with outliers in the future). The L2-norm loss puts all differences *to the square*, so outliers have a lot of influence on the outcome. The proper syntax of the Huber loss is 'huber_loss' Useful tip: When you change something, don't forget to RERUN all cells. This can be done easily by clicking:Kernel -> Restart & Run AllIf you don't do that, your algorithm will keep the OLD values of all parameters.You can either use this file for all the exercises, or check the solutions of EACH ONE of them in the separate files we have provided. All other files are solutions of each problem. If you feel confident enough, you can simply change values in this file. Please note that it will be nice, if you return the file to starting position after you have solved a problem, so you can use the lecture as a basis for comparison. Import the relevant libraries | # We must always import the relevant libraries for our problem at hand. NumPy and TensorFlow are required for this example.

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf | _____no_output_____ | Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Data generationWe generate data using the exact same logic and code as the example from the previous notebook. The only difference now is that we save it to an npz file. Npz is numpy's file type which allows you to save numpy arrays into a single .npz file. We introduce this change because in machine learning most often: * you are given some data (csv, database, etc.)* you preprocess it into a desired format (later on we will see methods for preprocesing)* you save it into npz files (if you're working in Python) to access laterNothing to worry about - this is literally saving your NumPy arrays into a file that you can later access, nothing more. | # First, we should declare a variable containing the size of the training set we want to generate.

observations = 1000

# We will work with two variables as inputs. You can think about them as x1 and x2 in our previous examples.

# We have picked x and z, since it is easier to differentiate them.

# We generate them randomly, drawing from an uniform distribution. There are 3 arguments of this method (low, high, size).

# The size of xs and zs is observations x 1. In this case: 1000 x 1.

xs = np.random.uniform(low=-10, high=10, size=(observations,1))

zs = np.random.uniform(-10, 10, (observations,1))

# Combine the two dimensions of the input into one input matrix.

# This is the X matrix from the linear model y = x*w + b.

# column_stack is a Numpy method, which combines two matrices (vectors) into one.

generated_inputs = np.column_stack((xs,zs))

# We add a random small noise to the function i.e. f(x,z) = 2x - 3z + 5 + <small noise>

noise = np.random.uniform(-1, 1, (observations,1))

# Produce the targets according to our f(x,z) = 2x - 3z + 5 + noise definition.

# In this way, we are basically saying: the weights should be 2 and -3, while the bias is 5.

generated_targets = 2*xs - 3*zs + 5 + noise

# save into an npz file called "TF_intro"

np.savez('TF_intro', inputs=generated_inputs, targets=generated_targets) | _____no_output_____ | Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Solving with TensorFlowNote: This intro is just the basics of TensorFlow which has way more capabilities and depth than that. | # Load the training data from the NPZ

training_data = np.load('TF_intro.npz')

# Declare a variable where we will store the input size of our model

# It should be equal to the number of variables you have

input_size = 2

# Declare the output size of the model

# It should be equal to the number of outputs you've got (for regressions that's usually 1)

output_size = 1

# Outline the model

# We lay out the model in 'Sequential'

# Note that there are no calculations involved - we are just describing our network

model = tf.keras.Sequential([

# Each 'layer' is listed here

# The method 'Dense' indicates, our mathematical operation to be (xw + b)

tf.keras.layers.Dense(output_size,

# there are extra arguments you can include to customize your model

# in our case we are just trying to create a solution that is

# as close as possible to our NumPy model

kernel_initializer=tf.random_uniform_initializer(minval=-0.1, maxval=0.1),

bias_initializer=tf.random_uniform_initializer(minval=-0.1, maxval=0.1)

)

])

# We can also define a custom optimizer, where we can specify the learning rate

custom_optimizer = tf.keras.optimizers.SGD(learning_rate=0.02)

# Note that sometimes you may also need a custom loss function

# That's much harder to implement and won't be covered in this course though

# 'compile' is the place where you select and indicate the optimizers and the loss

model.compile(optimizer=custom_optimizer, loss='mean_squared_error')

# finally we fit the model, indicating the inputs and targets

# if they are not otherwise specified the number of epochs will be 1 (a single epoch of training),

# so the number of epochs is 'kind of' mandatory, too

# we can play around with verbose; we prefer verbose=2

model.fit(training_data['inputs'], training_data['targets'], epochs=100, verbose=2) | Epoch 1/100

1000/1000 - 0s - loss: 24.5755

Epoch 2/100

1000/1000 - 0s - loss: 1.1773

Epoch 3/100

1000/1000 - 0s - loss: 0.4253

Epoch 4/100

1000/1000 - 0s - loss: 0.3853

Epoch 5/100

1000/1000 - 0s - loss: 0.3727

Epoch 6/100

1000/1000 - 0s - loss: 0.3932

Epoch 7/100

1000/1000 - 0s - loss: 0.3817

Epoch 8/100

1000/1000 - 0s - loss: 0.3877

Epoch 9/100

1000/1000 - 0s - loss: 0.3729

Epoch 10/100

1000/1000 - 0s - loss: 0.3982

Epoch 11/100

1000/1000 - 0s - loss: 0.3809

Epoch 12/100

1000/1000 - 0s - loss: 0.3788

Epoch 13/100

1000/1000 - 0s - loss: 0.3714

Epoch 14/100

1000/1000 - 0s - loss: 0.3608

Epoch 15/100

1000/1000 - 0s - loss: 0.3507

Epoch 16/100

1000/1000 - 0s - loss: 0.3918

Epoch 17/100

1000/1000 - 0s - loss: 0.3697

Epoch 18/100

1000/1000 - 0s - loss: 0.3811

Epoch 19/100

1000/1000 - 0s - loss: 0.3781

Epoch 20/100

1000/1000 - 0s - loss: 0.3974

Epoch 21/100

1000/1000 - 0s - loss: 0.3974

Epoch 22/100

1000/1000 - 0s - loss: 0.3724

Epoch 23/100

1000/1000 - 0s - loss: 0.3561

Epoch 24/100

1000/1000 - 0s - loss: 0.3691

Epoch 25/100

1000/1000 - 0s - loss: 0.3650

Epoch 26/100

1000/1000 - 0s - loss: 0.3569

Epoch 27/100

1000/1000 - 0s - loss: 0.3707

Epoch 28/100

1000/1000 - 0s - loss: 0.4100

Epoch 29/100

1000/1000 - 0s - loss: 0.3703

Epoch 30/100

1000/1000 - 0s - loss: 0.3598

Epoch 31/100

1000/1000 - 0s - loss: 0.3775

Epoch 32/100

1000/1000 - 0s - loss: 0.3936

Epoch 33/100

1000/1000 - 0s - loss: 0.3968

Epoch 34/100

1000/1000 - 0s - loss: 0.3614

Epoch 35/100

1000/1000 - 0s - loss: 0.3588

Epoch 36/100

1000/1000 - 0s - loss: 0.3777

Epoch 37/100

1000/1000 - 0s - loss: 0.3637

Epoch 38/100

1000/1000 - 0s - loss: 0.3662

Epoch 39/100

1000/1000 - 0s - loss: 0.3655

Epoch 40/100

1000/1000 - 0s - loss: 0.3582

Epoch 41/100

1000/1000 - 0s - loss: 0.3759

Epoch 42/100

1000/1000 - 0s - loss: 0.4468

Epoch 43/100

1000/1000 - 0s - loss: 0.3613

Epoch 44/100

1000/1000 - 0s - loss: 0.3905

Epoch 45/100

1000/1000 - 0s - loss: 0.3825

Epoch 46/100

1000/1000 - 0s - loss: 0.3810

Epoch 47/100

1000/1000 - 0s - loss: 0.3546

Epoch 48/100

1000/1000 - 0s - loss: 0.3520

Epoch 49/100

1000/1000 - 0s - loss: 0.3878

Epoch 50/100

1000/1000 - 0s - loss: 0.3748

Epoch 51/100

1000/1000 - 0s - loss: 0.3978

Epoch 52/100

1000/1000 - 0s - loss: 0.3669

Epoch 53/100

1000/1000 - 0s - loss: 0.3650

Epoch 54/100

1000/1000 - 0s - loss: 0.3869

Epoch 55/100

1000/1000 - 0s - loss: 0.3952

Epoch 56/100

1000/1000 - 0s - loss: 0.3897

Epoch 57/100

1000/1000 - 0s - loss: 0.3698

Epoch 58/100

1000/1000 - 0s - loss: 0.3655

Epoch 59/100

1000/1000 - 0s - loss: 0.3717

Epoch 60/100

1000/1000 - 0s - loss: 0.3942

Epoch 61/100

1000/1000 - 0s - loss: 0.4334

Epoch 62/100

1000/1000 - 0s - loss: 0.3836

Epoch 63/100

1000/1000 - 0s - loss: 0.3631

Epoch 64/100

1000/1000 - 0s - loss: 0.3804

Epoch 65/100

1000/1000 - 0s - loss: 0.3671

Epoch 66/100

1000/1000 - 0s - loss: 0.3801

Epoch 67/100

1000/1000 - 0s - loss: 0.4032

Epoch 68/100

1000/1000 - 0s - loss: 0.3764

Epoch 69/100

1000/1000 - 0s - loss: 0.3549

Epoch 70/100

1000/1000 - 0s - loss: 0.3585

Epoch 71/100

1000/1000 - 0s - loss: 0.3747

Epoch 72/100

1000/1000 - 0s - loss: 0.3633

Epoch 73/100

1000/1000 - 0s - loss: 0.3493

Epoch 74/100

1000/1000 - 0s - loss: 0.3924

Epoch 75/100

1000/1000 - 0s - loss: 0.4246

Epoch 76/100

1000/1000 - 0s - loss: 0.3701

Epoch 77/100

1000/1000 - 0s - loss: 0.3959

Epoch 78/100

1000/1000 - 0s - loss: 0.3923

Epoch 79/100

1000/1000 - 0s - loss: 0.3587

Epoch 80/100

1000/1000 - 0s - loss: 0.3729

Epoch 81/100

1000/1000 - 0s - loss: 0.3649

Epoch 82/100

1000/1000 - 0s - loss: 0.3611

Epoch 83/100

1000/1000 - 0s - loss: 0.3701

Epoch 84/100

1000/1000 - 0s - loss: 0.3699

Epoch 85/100

1000/1000 - 0s - loss: 0.3494

Epoch 86/100

1000/1000 - 0s - loss: 0.3613

Epoch 87/100

1000/1000 - 0s - loss: 0.3933

Epoch 88/100

1000/1000 - 0s - loss: 0.4031

Epoch 89/100

1000/1000 - 0s - loss: 0.3814

Epoch 90/100

1000/1000 - 0s - loss: 0.3481

Epoch 91/100

1000/1000 - 0s - loss: 0.3664

Epoch 92/100

1000/1000 - 0s - loss: 0.3691

Epoch 93/100

1000/1000 - 0s - loss: 0.3599

Epoch 94/100

1000/1000 - 0s - loss: 0.3817

Epoch 95/100

1000/1000 - 0s - loss: 0.3572

Epoch 96/100

1000/1000 - 0s - loss: 0.3699

Epoch 97/100

1000/1000 - 0s - loss: 0.3666

Epoch 98/100

1000/1000 - 0s - loss: 0.3667

Epoch 99/100

1000/1000 - 0s - loss: 0.4198

Epoch 100/100

1000/1000 - 0s - loss: 0.3667

| Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Extract the weights and biasExtracting the weight(s) and bias(es) of a model is not an essential step for the machine learning process. In fact, usually they would not tell us much in a deep learning context. However, this simple example was set up in a way, which allows us to verify if the answers we get are correct. | # Extracting the weights and biases is achieved quite easily

model.layers[0].get_weights()

# We can save the weights and biases in separate variables for easier examination

# Note that there can be hundreds or thousands of them!

weights = model.layers[0].get_weights()[0]

weights

# We can save the weights and biases in separate variables for easier examination

# Note that there can be hundreds or thousands of them!

bias = model.layers[0].get_weights()[1]

bias | _____no_output_____ | Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Extract the outputs (make predictions)Once more, this is not an essential step, however, we usually want to be able to make predictions. | # We can predict new values in order to actually make use of the model

# Sometimes it is useful to round the values to be able to read the output

# Usually we use this method on NEW DATA, rather than our original training data

model.predict_on_batch(training_data['inputs']).round(1)

# If we display our targets (actual observed values), we can manually compare the outputs and the targets

training_data['targets'].round(1) | _____no_output_____ | Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Plotting the data | # The model is optimized, so the outputs are calculated based on the last form of the model

# We have to np.squeeze the arrays in order to fit them to what the plot function expects.

# Doesn't change anything as we cut dimensions of size 1 - just a technicality.

plt.plot(np.squeeze(model.predict_on_batch(training_data['inputs'])), np.squeeze(training_data['targets']))

plt.xlabel('outputs')

plt.ylabel('targets')

plt.show()

# Voila - what you see should be exactly the same as in the previous notebook!

# You probably don't see the point of TensorFlow now - it took us the same number of lines of code

# to achieve this simple result. However, once we go deeper in the next chapter,

# TensorFlow will save us hundreds of lines of code. | _____no_output_____ | Apache-2.0 | 17 - Deep Learning with TensorFlow 2.0/5_Introduction to TensorFlow 2/8_Exercises/TensorFlow_Minimal_example_All_exercises.ipynb | olayinka04/365-data-science-courses |

Loan Default Risk - Exploratory Data Analysis This notebook is focused on data exploration. The key objective is to familiarise myself with the data and to identify any issues. This could lead to data cleaning or feature engineering. Contents 1. Importing Relevant Libraries, Reading In Data 2. Anomly Detection and Correction 3. Data Exploration 4. Summary 5. Distribution of New Datasets 1.1 Importing Relevant Libraries | #Importing data wrangling library

import pandas as pd #Data Wrangling/Cleaning package for mixed data

import numpy as np #Data wrangling & manipulation for numerical data

import os

#Importing visulization libraries

from matplotlib import pyplot as plt #Importing visulization libraries

import seaborn as sns

#Importing Machine Learning Libraries(Preprocessing)

from sklearn.preprocessing import OneHotEncoder

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.impute import SimpleImputer

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import LabelEncoder # sklearn preprocessing for dealing with categorical variables

#Importing Machine Learning Libraries(Modelling And Evaluation)

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import cross_val_score

from sklearn.metrics import accuracy_score

os.getcwd() # Get working Directory | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

1.2 Reading In Data | rawfilepath = 'C:/Users/chara.geru/OneDrive - Avanade/DataScienceProject/HomeCreditModel/data/raw/'

filename = 'application_train.csv'

interimfilepath1 = 'C:/Users/chara.geru/OneDrive - Avanade/DataScienceProject/HomeCreditModel/data/interim/'

filename1 = 'df1.csv'

filename2 = 'df2.csv'

application_train = pd.read_csv(rawfilepath + filename)

df1 = pd.read_csv(interimfilepath1 + filename1)

df2 = pd.read_csv(interimfilepath1 + filename2)

print('Size of application_train data:', application_train.shape) #Printing shape of datasets | Size of application_train data: (307511, 122)

| MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

This dataset has: - 122 columns (features)- 307511 rows | application_train.columns.values #Printing all column names

pd.set_option('display.max_columns', None) #Display all columns

application_train.describe() #Get summary statistics for all columns

application_train.head() #View first 5 rows of the dataset | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

Generally the data looks good based on the statistics shown from the describe method. Potentional issues - Values in DAYS_BIRTH column are negative. They represent number of days a person was before they applied for a loan. For a better representation, I will convert them to positive values and convert days to years, for better representation of the data.- DAYS_EMPLOYED will be given the same treatment for the same reasons. 2.1 Anomly Detection | (application_train['DAYS_BIRTH']).describe()

(application_train['DAYS_EMPLOYED']).describe() | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

3.1 Check for Nulls | # "This function creates a table to summarize the null values"

def nulltable(df):

"""

This function creates a table to summarize the null values

"""

total = df.isnull().sum().sort_values(ascending = False)

percent = (df.isnull().sum()/df.isnull().count()*100).sort_values(ascending = False)

missing_df_data = pd.concat([total, percent], axis=1, keys=['Total', 'Percent'])

return missing_df_data.head(30)

nulltable(application_train) | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

- There are a large number of columns(features) with more than 50% NULLS.- I've deicided to drop these columns as they will not provide much information for training the model.- If a feaure has less than 50% NULLS, these maybe filled up using an appropriate calcualtion such as mean, median or mode 3.2 Data balanced or imbalanced | #Data balanced or imbalanced

temp = application_train["TARGET"].value_counts()

fig1, ax1 = plt.subplots()

ax1.pie(temp, labels=['Loan Repayed','Loan Not Repayed'], autopct='%1.1f%%',wedgeprops={'edgecolor':'black'})

ax1.axis('equal')

plt.title('Loan Repayed or Not')

plt.show() | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

Data is higly imbalanced - This emphasises the importance of assessing the precision/recall to evaluate results. For example, predicting all rows as not defaulted would lead to an accuracy of 91.9%.- Consider rebalancing the training data 3.3 Number of each type of column | # Number of each type of column

application_train.dtypes.value_counts() | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

- There are 16 object columns.- These will need to be encoded when building the model (using label encoder or one hot encoder) 3.4 Number of unique classes in each object column | # Number of unique classes in each object column

application_train.select_dtypes('object').apply(pd.Series.nunique, axis = 0) | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

- Gender has 3 values. This needs investigation and correction. 4. SummaryBased on the analysis so far we have identified the need to handle: - skewed data - removing rows with large number of NULLS - remove rows with third gender This led to the development of 2 new datasets, as we can't be sure which iterations would lead to the best model performance. This is a good opportunity for trial and error where I compare the perforomance of different permutations.Here are some visualisations to describe the new datasetsdf1- Negative DAYS_BIRTH converted to positive YEARS_BIRTH- Rows with third gender dropped- Dealt with features with large number of NULLsdf2- df2 has all the changes implemented to df1- In adition to that, in df2 the data for the skewed columns ('AMT_CREDIT', 'AMT_INCOME_TOTAL', 'AMT_GOODS_PRICE') hads been logged 5. Distrubutions of New Datasets 5.1 Plotting distribution of Datasets -I had initally plotted historgams for features like AMT_CREDIT, AMT_TOTAL_INCOME and AMT_GOOD_PRICE using df1. But I found that the distributions were skewed. So I logged these features to create df2 and plotted these features again. I show the comparions of the same below: | # Set the style of plots

plt.style.use('fivethirtyeight')

plt.figure(figsize = (10, 12))

plt.subplot(2, 1, 1)

plt.title("Distribution of AMT_CREDIT")

plt.hist(df1["AMT_CREDIT"], bins =20)

plt.xlabel("AMT_CREDIT")

plt.subplot(2, 1, 2)

plt.title(" Log Distribution of AMT_CREDIT")

plt.hist(df2["AMT_CREDIT"], bins =20)

plt.xlabel("Log_AMT_CREDIT")

plt.figure(figsize = (10, 12))

plt.subplot(2, 1, 1)

plt.title("Distribution of AMT_INCOME_TOTAL")

plt.hist(df1["AMT_INCOME_TOTAL"].dropna(), bins =25)

plt.subplot(2, 1, 2)

plt.title(" Log Distribution of AMT_INCOME_TOTAL")

plt.hist(df2["AMT_INCOME_TOTAL"].dropna(), bins =25)

plt.xlabel("Log_INCOME_TOTAL")

plt.figure(figsize = (10, 12))

plt.subplot(2, 1, 1)

plt.title("Distribution of AMT_GOODS_PRICE")

plt.hist(df1["AMT_GOODS_PRICE"].dropna(), bins = 20)

plt.subplot(2, 1, 2)

plt.title(" Log Distribution of AMT_GOODS_PRICE")

plt.hist(df2["AMT_GOODS_PRICE"].dropna(), bins = 20)

plt.xlabel("Log_GOODS_PRICE")

plt.hist(df1['YEARS_EMPLOYED'])

plt.xlabel('Years of Employment') | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

- It is not resonable to have such high years of employment (> 40-60 years).- As there are amny rows, I would try and repalce the values with average years of employment- I would plot the distribution of the reasonable values to get a clearer picture of the disrtibution.- Based on the ditribution, I would decide to take a log of the values to reduce the skew. | less_years = df1[df1.YEARS_EMPLOYED <= 80]

more_years = df1[df1.YEARS_EMPLOYED >80]

plt.hist(less_years['YEARS_EMPLOYED'])

plt.xlabel('Distribution of Lesser Years of Employment') | _____no_output_____ | MIT | notebooks/ExploratoryDataAnalysis.ipynb | geracharu/DataScienceProject |

log this data and change it in df1 | less_years['YEARS_EMPLOYED'].mean()

df1['YEARS_EMPLOYED'] = np.where(df1['YEARS_EMPLOYED'] > 80, 7, df1['YEARS_EMPLOYED'])

plt.hist(df1['YEARS_EMPLOYED'])

plt.xlabel('Years of Employment')

plt.hist(df2['YEARS_EMPLOYED'])

plt.xlabel('Years of Employment')

##Defaul = df1[df1['TARGET'] == 1]

Not_defaul = df1[df1['TARGET'] == 0]

# Find correlations with the target and sort

correlations = df1.corr()['TARGET'].sort_values()

# Display correlations

print('\nMost Positive Correlations: \n ', correlations.tail(15))

print('\nMost Negative Correlations:\n', correlations.head(15))

# Find the correlation of the positive days since birth and target

df1['YEARS_BIRTH'] = abs(df1['YEARS_BIRTH'])

df1['YEARS_BIRTH'].corr(df1['TARGET'])

# Set the style of plots

plt.style.use('fivethirtyeight')

# Plot the distribution of ages in years

plt.hist(df1['YEARS_BIRTH'], edgecolor = 'k', bins = 25)

plt.title('Age of Client'); plt.xlabel('Age (years)'); plt.ylabel('Count');

# KDE plot of loans that were repaid on time

sns.kdeplot(df1.loc[df1['TARGET'] == 0, 'YEARS_BIRTH'] / 365, label = 'target == 0')

# KDE plot of loans which were not repaid on time

sns.kdeplot(df1.loc[df1['TARGET'] == 1, 'YEARS_BIRTH'] / 365, label = 'target == 1')

# Labeling of plot

plt.xlabel('Age (years)'); plt.ylabel('Density'); plt.title('Distribution of Ages');

age_data = df1[['TARGET', 'YEARS_BIRTH']]

age_data['YEARS_BIRTH'] = age_data['YEARS_BIRTH']