Search is not available for this dataset

pipeline_tag

stringclasses 48

values | library_name

stringclasses 205

values | text

stringlengths 0

18.3M

| metadata

stringlengths 2

1.07B

| id

stringlengths 5

122

| last_modified

null | tags

listlengths 1

1.84k

| sha

null | created_at

stringlengths 25

25

|

|---|---|---|---|---|---|---|---|---|

automatic-speech-recognition

|

transformers

|

# Wav2Vec2-XLS-R-300M-EN-15

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-300m`**](https://huggingface.co/facebook/wav2vec2-xls-r-300m) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on 15 `en` -> `{lang}` translation pairs of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from spoken `en` (Engish) to the following written languages `{lang}`:

`en` -> {`de`, `tr`, `fa`, `sv-SE`, `mn`, `zh-CN`, `cy`, `ca`, `sl`, `et`, `id`, `ar`, `ta`, `lv`, `ja`}

For more information, please refer to Section *5.1.1* of the [official XLS-R paper](https://arxiv.org/abs/2111.09296).

## Usage

### Demo

The model can be tested on [**this space**](https://huggingface.co/spaces/facebook/XLS-R-300m-EN-15).

You can select the target language, record some audio in English,

and then sit back and see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline. By default, the checkpoint will

translate spoken English to written German. To change the written target language,

you need to pass the correct `forced_bos_token_id` to `generate(...)` to condition

the decoder on the correct target language.

To select the correct `forced_bos_token_id` given your choosen language id, please make use

of the following mapping:

```python

MAPPING = {

"de": 250003,

"tr": 250023,

"fa": 250029,

"sv": 250042,

"mn": 250037,

"zh": 250025,

"cy": 250007,

"ca": 250005,

"sl": 250052,

"et": 250006,

"id": 250032,

"ar": 250001,

"ta": 250044,

"lv": 250017,

"ja": 250012,

}

```

As an example, if you would like to translate to Swedish, you can do the following:

```python

from datasets import load_dataset

from transformers import pipeline

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-300m-en-to-15", feature_extractor="facebook/wav2vec2-xls-r-300m-en-to-15")

translation = asr(audio_file, forced_bos_token_id=forced_bos_token_id)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-300m-en-to-15")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-300m-en-to-15")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"], forced_bos_token_id=forced_bos_token)

transcription = processor.batch_decode(generated_ids)

```

## Results `en` -> `{lang}`

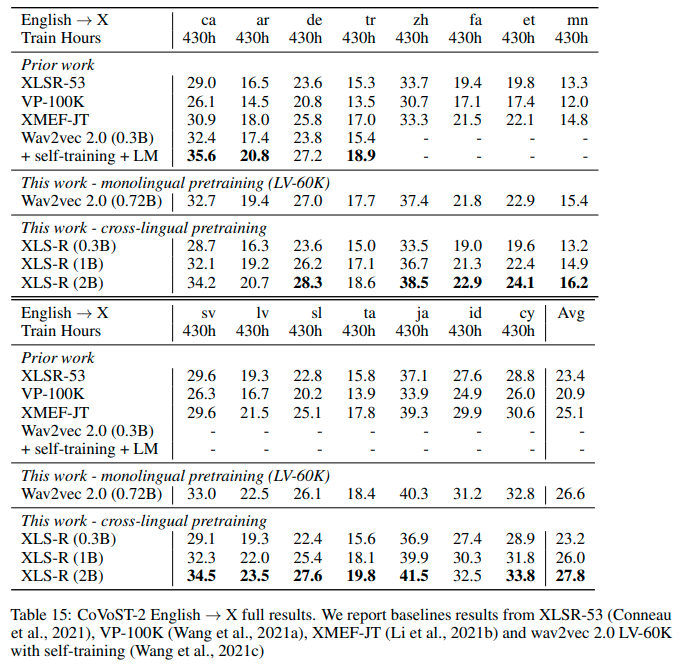

See the row of **XLS-R (0.3B)** for the performance on [Covost2](https://huggingface.co/datasets/covost2) for this model.

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-300m-en-to-15)

- [Wav2Vec2-XLS-R-1B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-1b-en-to-15)

- [Wav2Vec2-XLS-R-2B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-2b-en-to-15)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

{"language": ["multilingual", "en", "de", "tr", "fa", "sv", "mn", "zh", "cy", "ca", "sl", "et", "id", "ar", "ta", "lv", "ja"], "license": "apache-2.0", "tags": ["speech", "xls_r", "xls_r_translation", "automatic-speech-recognition"], "datasets": ["common_voice", "multilingual_librispeech", "covost2"], "pipeline_tag": "automatic-speech-recognition", "widget": [{"example_title": "English", "src": "https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3"}]}

|

facebook/wav2vec2-xls-r-300m-en-to-15

| null |

[

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"speech",

"xls_r",

"xls_r_translation",

"multilingual",

"en",

"de",

"tr",

"fa",

"sv",

"mn",

"zh",

"cy",

"ca",

"sl",

"et",

"id",

"ar",

"ta",

"lv",

"ja",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"arxiv:2111.09296",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

null |

transformers

|

# Wav2Vec2-XLS-R-300M

[Facebook's Wav2Vec2 XLS-R](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/) counting **300 million** parameters.

XLS-R is Facebook AI's large-scale multilingual pretrained model for speech (the "XLM-R for Speech"). It is pretrained on 436k hours of unlabeled speech, including VoxPopuli, MLS, CommonVoice, BABEL, and VoxLingua107. It uses the wav2vec 2.0 objective, in 128 languages. When using the model make sure that your speech input is sampled at 16kHz.

**Note**: This model should be fine-tuned on a downstream task, like Automatic Speech Recognition, Translation, or Classification. Check out [**this blog**](https://huggingface.co/blog/fine-tune-xlsr-wav2vec2) for more information about ASR.

[XLS-R Paper](https://arxiv.org/abs/2111.09296)

Authors: Arun Babu, Changhan Wang, Andros Tjandra, Kushal Lakhotia, Qiantong Xu, Naman Goyal, Kritika Singh, Patrick von Platen, Yatharth Saraf, Juan Pino, Alexei Baevski, Alexis Conneau, Michael Auli

**Abstract**

This paper presents XLS-R, a large-scale model for cross-lingual speech representation learning based on wav2vec 2.0. We train models with up to 2B parameters on 436K hours of publicly available speech audio in 128 languages, an order of magnitude more public data than the largest known prior work. Our evaluation covers a wide range of tasks, domains, data regimes and languages, both high and low-resource. On the CoVoST-2 speech translation benchmark, we improve the previous state of the art by an average of 7.4 BLEU over 21 translation directions into English. For speech recognition, XLS-R improves over the best known prior work on BABEL, MLS, CommonVoice as well as VoxPopuli, lowering error rates by 20%-33% relative on average. XLS-R also sets a new state of the art on VoxLingua107 language identification. Moreover, we show that with sufficient model size, cross-lingual pretraining can outperform English-only pretraining when translating English speech into other languages, a setting which favors monolingual pretraining. We hope XLS-R can help to improve speech processing tasks for many more languages of the world.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

# Usage

See [this google colab](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Fine_Tune_XLS_R_on_Common_Voice.ipynb) for more information on how to fine-tune the model.

You can find other pretrained XLS-R models with different numbers of parameters:

* [300M parameters version](https://huggingface.co/facebook/wav2vec2-xls-r-300m)

* [1B version version](https://huggingface.co/facebook/wav2vec2-xls-r-1b)

* [2B version version](https://huggingface.co/facebook/wav2vec2-xls-r-2b)

|

{"language": ["multilingual", "ab", "af", "sq", "am", "ar", "hy", "as", "az", "ba", "eu", "be", "bn", "bs", "br", "bg", "my", "yue", "ca", "ceb", "km", "zh", "cv", "hr", "cs", "da", "dv", "nl", "en", "eo", "et", "fo", "fi", "fr", "gl", "lg", "ka", "de", "el", "gn", "gu", "ht", "cnh", "ha", "haw", "he", "hi", "hu", "is", "id", "ia", "ga", "it", "ja", "jv", "kb", "kn", "kk", "rw", "ky", "ko", "ku", "lo", "la", "lv", "ln", "lt", "lm", "mk", "mg", "ms", "ml", "mt", "gv", "mi", "mr", "mn", "ne", false, "nn", "oc", "or", "ps", "fa", "pl", "pt", "pa", "ro", "rm", "rm", "ru", "sah", "sa", "sco", "sr", "sn", "sd", "si", "sk", "sl", "so", "hsb", "es", "su", "sw", "sv", "tl", "tg", "ta", "tt", "te", "th", "bo", "tp", "tr", "tk", "uk", "ur", "uz", "vi", "vot", "war", "cy", "yi", "yo", "zu"], "license": "apache-2.0", "tags": ["speech", "xls_r", "xls_r_pretrained"], "datasets": ["common_voice", "multilingual_librispeech"], "language_bcp47": ["zh-HK", "zh-TW", "fy-NL"]}

|

facebook/wav2vec2-xls-r-300m

| null |

[

"transformers",

"pytorch",

"wav2vec2",

"pretraining",

"speech",

"xls_r",

"xls_r_pretrained",

"multilingual",

"ab",

"af",

"sq",

"am",

"ar",

"hy",

"as",

"az",

"ba",

"eu",

"be",

"bn",

"bs",

"br",

"bg",

"my",

"yue",

"ca",

"ceb",

"km",

"zh",

"cv",

"hr",

"cs",

"da",

"dv",

"nl",

"en",

"eo",

"et",

"fo",

"fi",

"fr",

"gl",

"lg",

"ka",

"de",

"el",

"gn",

"gu",

"ht",

"cnh",

"ha",

"haw",

"he",

"hi",

"hu",

"is",

"id",

"ia",

"ga",

"it",

"ja",

"jv",

"kb",

"kn",

"kk",

"rw",

"ky",

"ko",

"ku",

"lo",

"la",

"lv",

"ln",

"lt",

"lm",

"mk",

"mg",

"ms",

"ml",

"mt",

"gv",

"mi",

"mr",

"mn",

"ne",

"no",

"nn",

"oc",

"or",

"ps",

"fa",

"pl",

"pt",

"pa",

"ro",

"rm",

"ru",

"sah",

"sa",

"sco",

"sr",

"sn",

"sd",

"si",

"sk",

"sl",

"so",

"hsb",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"ta",

"tt",

"te",

"th",

"bo",

"tp",

"tr",

"tk",

"uk",

"ur",

"uz",

"vi",

"vot",

"war",

"cy",

"yi",

"yo",

"zu",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"arxiv:2111.09296",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

automatic-speech-recognition

|

transformers

|

# Wav2Vec2-Large-XLSR-53 finetuned on multi-lingual Common Voice

This checkpoint leverages the pretrained checkpoint [wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

and is fine-tuned on [CommonVoice](https://huggingface.co/datasets/common_voice) to recognize phonetic labels in multiple languages.

When using the model make sure that your speech input is sampled at 16kHz.

Note that the model outputs a string of phonetic labels. A dictionary mapping phonetic labels to words

has to be used to map the phonetic output labels to output words.

[Paper: Simple and Effective Zero-shot Cross-lingual Phoneme Recognition](https://arxiv.org/abs/2109.11680)

Authors: Qiantong Xu, Alexei Baevski, Michael Auli

**Abstract**

Recent progress in self-training, self-supervised pretraining and unsupervised learning enabled well performing speech recognition systems without any labeled data. However, in many cases there is labeled data available for related languages which is not utilized by these methods. This paper extends previous work on zero-shot cross-lingual transfer learning by fine-tuning a multilingually pretrained wav2vec 2.0 model to transcribe unseen languages. This is done by mapping phonemes of the training languages to the target language using articulatory features. Experiments show that this simple method significantly outperforms prior work which introduced task-specific architectures and used only part of a monolingually pretrained model.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

from transformers import Wav2Vec2Processor, Wav2Vec2ForCTC

from datasets import load_dataset

import torch

# load model and processor

processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-xlsr-53-espeak-cv-ft")

model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-xlsr-53-espeak-cv-ft")

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_values = processor(ds[0]["audio"]["array"], return_tensors="pt").input_values

# retrieve logits

with torch.no_grad():

logits = model(input_values).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

# => should give ['m ɪ s t ɚ k w ɪ l t ɚ ɪ z ð ɪ ɐ p ɑː s əl l ʌ v ð ə m ɪ d əl k l æ s ɪ z æ n d w iː aʊ ɡ l æ d t ə w ɛ l k ə m h ɪ z ɡ ɑː s p ə']

```

|

{"language": "multi-lingual", "license": "apache-2.0", "tags": ["speech", "audio", "automatic-speech-recognition", "phoneme-recognition"], "datasets": ["common_voice"], "widget": [{"example_title": "Librispeech sample 1", "src": "https://cdn-media.huggingface.co/speech_samples/sample1.flac"}, {"example_title": "Librispeech sample 2", "src": "https://cdn-media.huggingface.co/speech_samples/sample2.flac"}]}

|

facebook/wav2vec2-xlsr-53-espeak-cv-ft

| null |

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"speech",

"audio",

"phoneme-recognition",

"dataset:common_voice",

"arxiv:2109.11680",

"license:apache-2.0",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

automatic-speech-recognition

|

transformers

|

{}

|

facebook/wav2vec2-xlsr-53-phon-cv-babel-ft

| null |

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

|

automatic-speech-recognition

|

transformers

|

{}

|

facebook/wav2vec2-xlsr-53-phon-cv-ft

| null |

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

|

translation

|

transformers

|

# FSMT

## Model description

This is a ported version of [fairseq wmt19 transformer](https://github.com/pytorch/fairseq/blob/master/examples/wmt19/README.md) for de-en.

For more details, please see, [Facebook FAIR's WMT19 News Translation Task Submission](https://arxiv.org/abs/1907.06616).

The abbreviation FSMT stands for FairSeqMachineTranslation

All four models are available:

* [wmt19-en-ru](https://huggingface.co/facebook/wmt19-en-ru)

* [wmt19-ru-en](https://huggingface.co/facebook/wmt19-ru-en)

* [wmt19-en-de](https://huggingface.co/facebook/wmt19-en-de)

* [wmt19-de-en](https://huggingface.co/facebook/wmt19-de-en)

## Intended uses & limitations

#### How to use

```python

from transformers import FSMTForConditionalGeneration, FSMTTokenizer

mname = "facebook/wmt19-de-en"

tokenizer = FSMTTokenizer.from_pretrained(mname)

model = FSMTForConditionalGeneration.from_pretrained(mname)

input = "Maschinelles Lernen ist großartig, oder?"

input_ids = tokenizer.encode(input, return_tensors="pt")

outputs = model.generate(input_ids)

decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(decoded) # Machine learning is great, isn't it?

```

#### Limitations and bias

- The original (and this ported model) doesn't seem to handle well inputs with repeated sub-phrases, [content gets truncated](https://discuss.huggingface.co/t/issues-with-translating-inputs-containing-repeated-phrases/981)

## Training data

Pretrained weights were left identical to the original model released by fairseq. For more details, please, see the [paper](https://arxiv.org/abs/1907.06616).

## Eval results

pair | fairseq | transformers

-------|---------|----------

de-en | [42.3](http://matrix.statmt.org/matrix/output/1902?run_id=6750) | 41.35

The score is slightly below the score reported by `fairseq`, since `transformers`` currently doesn't support:

- model ensemble, therefore the best performing checkpoint was ported (``model4.pt``).

- re-ranking

The score was calculated using this code:

```bash

git clone https://github.com/huggingface/transformers

cd transformers

export PAIR=de-en

export DATA_DIR=data/$PAIR

export SAVE_DIR=data/$PAIR

export BS=8

export NUM_BEAMS=15

mkdir -p $DATA_DIR

sacrebleu -t wmt19 -l $PAIR --echo src > $DATA_DIR/val.source

sacrebleu -t wmt19 -l $PAIR --echo ref > $DATA_DIR/val.target

echo $PAIR

PYTHONPATH="src:examples/seq2seq" python examples/seq2seq/run_eval.py facebook/wmt19-$PAIR $DATA_DIR/val.source $SAVE_DIR/test_translations.txt --reference_path $DATA_DIR/val.target --score_path $SAVE_DIR/test_bleu.json --bs $BS --task translation --num_beams $NUM_BEAMS

```

note: fairseq reports using a beam of 50, so you should get a slightly higher score if re-run with `--num_beams 50`.

## Data Sources

- [training, etc.](http://www.statmt.org/wmt19/)

- [test set](http://matrix.statmt.org/test_sets/newstest2019.tgz?1556572561)

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Facebook FAIR's WMT19 News Translation Task Submission},

author={Ng, Nathan and Yee, Kyra and Baevski, Alexei and Ott, Myle and Auli, Michael and Edunov, Sergey},

booktitle={Proc. of WMT},

}

```

## TODO

- port model ensemble (fairseq uses 4 model checkpoints)

|

{"language": ["de", "en"], "license": "apache-2.0", "tags": ["translation", "wmt19", "facebook"], "datasets": ["wmt19"], "metrics": ["bleu"], "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png"}

|

facebook/wmt19-de-en

| null |

[

"transformers",

"pytorch",

"safetensors",

"fsmt",

"text2text-generation",

"translation",

"wmt19",

"facebook",

"de",

"en",

"dataset:wmt19",

"arxiv:1907.06616",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

translation

|

transformers

|

# FSMT

## Model description

This is a ported version of [fairseq wmt19 transformer](https://github.com/pytorch/fairseq/blob/master/examples/wmt19/README.md) for en-de.

For more details, please see, [Facebook FAIR's WMT19 News Translation Task Submission](https://arxiv.org/abs/1907.06616).

The abbreviation FSMT stands for FairSeqMachineTranslation

All four models are available:

* [wmt19-en-ru](https://huggingface.co/facebook/wmt19-en-ru)

* [wmt19-ru-en](https://huggingface.co/facebook/wmt19-ru-en)

* [wmt19-en-de](https://huggingface.co/facebook/wmt19-en-de)

* [wmt19-de-en](https://huggingface.co/facebook/wmt19-de-en)

## Intended uses & limitations

#### How to use

```python

from transformers import FSMTForConditionalGeneration, FSMTTokenizer

mname = "facebook/wmt19-en-de"

tokenizer = FSMTTokenizer.from_pretrained(mname)

model = FSMTForConditionalGeneration.from_pretrained(mname)

input = "Machine learning is great, isn't it?"

input_ids = tokenizer.encode(input, return_tensors="pt")

outputs = model.generate(input_ids)

decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(decoded) # Maschinelles Lernen ist großartig, oder?

```

#### Limitations and bias

- The original (and this ported model) doesn't seem to handle well inputs with repeated sub-phrases, [content gets truncated](https://discuss.huggingface.co/t/issues-with-translating-inputs-containing-repeated-phrases/981)

## Training data

Pretrained weights were left identical to the original model released by fairseq. For more details, please, see the [paper](https://arxiv.org/abs/1907.06616).

## Eval results

pair | fairseq | transformers

-------|---------|----------

en-de | [43.1](http://matrix.statmt.org/matrix/output/1909?run_id=6862) | 42.83

The score is slightly below the score reported by `fairseq`, since `transformers`` currently doesn't support:

- model ensemble, therefore the best performing checkpoint was ported (``model4.pt``).

- re-ranking

The score was calculated using this code:

```bash

git clone https://github.com/huggingface/transformers

cd transformers

export PAIR=en-de

export DATA_DIR=data/$PAIR

export SAVE_DIR=data/$PAIR

export BS=8

export NUM_BEAMS=15

mkdir -p $DATA_DIR

sacrebleu -t wmt19 -l $PAIR --echo src > $DATA_DIR/val.source

sacrebleu -t wmt19 -l $PAIR --echo ref > $DATA_DIR/val.target

echo $PAIR

PYTHONPATH="src:examples/seq2seq" python examples/seq2seq/run_eval.py facebook/wmt19-$PAIR $DATA_DIR/val.source $SAVE_DIR/test_translations.txt --reference_path $DATA_DIR/val.target --score_path $SAVE_DIR/test_bleu.json --bs $BS --task translation --num_beams $NUM_BEAMS

```

note: fairseq reports using a beam of 50, so you should get a slightly higher score if re-run with `--num_beams 50`.

## Data Sources

- [training, etc.](http://www.statmt.org/wmt19/)

- [test set](http://matrix.statmt.org/test_sets/newstest2019.tgz?1556572561)

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Facebook FAIR's WMT19 News Translation Task Submission},

author={Ng, Nathan and Yee, Kyra and Baevski, Alexei and Ott, Myle and Auli, Michael and Edunov, Sergey},

booktitle={Proc. of WMT},

}

```

## TODO

- port model ensemble (fairseq uses 4 model checkpoints)

|

{"language": ["en", "de"], "license": "apache-2.0", "tags": ["translation", "wmt19", "facebook"], "datasets": ["wmt19"], "metrics": ["bleu"], "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png"}

|

facebook/wmt19-en-de

| null |

[

"transformers",

"pytorch",

"fsmt",

"text2text-generation",

"translation",

"wmt19",

"facebook",

"en",

"de",

"dataset:wmt19",

"arxiv:1907.06616",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

translation

|

transformers

|

# FSMT

## Model description

This is a ported version of [fairseq wmt19 transformer](https://github.com/pytorch/fairseq/blob/master/examples/wmt19/README.md) for en-ru.

For more details, please see, [Facebook FAIR's WMT19 News Translation Task Submission](https://arxiv.org/abs/1907.06616).

The abbreviation FSMT stands for FairSeqMachineTranslation

All four models are available:

* [wmt19-en-ru](https://huggingface.co/facebook/wmt19-en-ru)

* [wmt19-ru-en](https://huggingface.co/facebook/wmt19-ru-en)

* [wmt19-en-de](https://huggingface.co/facebook/wmt19-en-de)

* [wmt19-de-en](https://huggingface.co/facebook/wmt19-de-en)

## Intended uses & limitations

#### How to use

```python

from transformers import FSMTForConditionalGeneration, FSMTTokenizer

mname = "facebook/wmt19-en-ru"

tokenizer = FSMTTokenizer.from_pretrained(mname)

model = FSMTForConditionalGeneration.from_pretrained(mname)

input = "Machine learning is great, isn't it?"

input_ids = tokenizer.encode(input, return_tensors="pt")

outputs = model.generate(input_ids)

decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(decoded) # Машинное обучение - это здорово, не так ли?

```

#### Limitations and bias

- The original (and this ported model) doesn't seem to handle well inputs with repeated sub-phrases, [content gets truncated](https://discuss.huggingface.co/t/issues-with-translating-inputs-containing-repeated-phrases/981)

## Training data

Pretrained weights were left identical to the original model released by fairseq. For more details, please, see the [paper](https://arxiv.org/abs/1907.06616).

## Eval results

pair | fairseq | transformers

-------|---------|----------

en-ru | [36.4](http://matrix.statmt.org/matrix/output/1914?run_id=6724) | 33.47

The score is slightly below the score reported by `fairseq`, since `transformers`` currently doesn't support:

- model ensemble, therefore the best performing checkpoint was ported (``model4.pt``).

- re-ranking

The score was calculated using this code:

```bash

git clone https://github.com/huggingface/transformers

cd transformers

export PAIR=en-ru

export DATA_DIR=data/$PAIR

export SAVE_DIR=data/$PAIR

export BS=8

export NUM_BEAMS=15

mkdir -p $DATA_DIR

sacrebleu -t wmt19 -l $PAIR --echo src > $DATA_DIR/val.source

sacrebleu -t wmt19 -l $PAIR --echo ref > $DATA_DIR/val.target

echo $PAIR

PYTHONPATH="src:examples/seq2seq" python examples/seq2seq/run_eval.py facebook/wmt19-$PAIR $DATA_DIR/val.source $SAVE_DIR/test_translations.txt --reference_path $DATA_DIR/val.target --score_path $SAVE_DIR/test_bleu.json --bs $BS --task translation --num_beams $NUM_BEAMS

```

note: fairseq reports using a beam of 50, so you should get a slightly higher score if re-run with `--num_beams 50`.

## Data Sources

- [training, etc.](http://www.statmt.org/wmt19/)

- [test set](http://matrix.statmt.org/test_sets/newstest2019.tgz?1556572561)

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Facebook FAIR's WMT19 News Translation Task Submission},

author={Ng, Nathan and Yee, Kyra and Baevski, Alexei and Ott, Myle and Auli, Michael and Edunov, Sergey},

booktitle={Proc. of WMT},

}

```

## TODO

- port model ensemble (fairseq uses 4 model checkpoints)

|

{"language": ["en", "ru"], "license": "apache-2.0", "tags": ["translation", "wmt19", "facebook"], "datasets": ["wmt19"], "metrics": ["bleu"], "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png"}

|

facebook/wmt19-en-ru

| null |

[

"transformers",

"pytorch",

"fsmt",

"text2text-generation",

"translation",

"wmt19",

"facebook",

"en",

"ru",

"dataset:wmt19",

"arxiv:1907.06616",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

translation

|

transformers

|

# FSMT

## Model description

This is a ported version of [fairseq wmt19 transformer](https://github.com/pytorch/fairseq/blob/master/examples/wmt19/README.md) for ru-en.

For more details, please see, [Facebook FAIR's WMT19 News Translation Task Submission](https://arxiv.org/abs/1907.06616).

The abbreviation FSMT stands for FairSeqMachineTranslation

All four models are available:

* [wmt19-en-ru](https://huggingface.co/facebook/wmt19-en-ru)

* [wmt19-ru-en](https://huggingface.co/facebook/wmt19-ru-en)

* [wmt19-en-de](https://huggingface.co/facebook/wmt19-en-de)

* [wmt19-de-en](https://huggingface.co/facebook/wmt19-de-en)

## Intended uses & limitations

#### How to use

```python

from transformers import FSMTForConditionalGeneration, FSMTTokenizer

mname = "facebook/wmt19-ru-en"

tokenizer = FSMTTokenizer.from_pretrained(mname)

model = FSMTForConditionalGeneration.from_pretrained(mname)

input = "Машинное обучение - это здорово, не так ли?"

input_ids = tokenizer.encode(input, return_tensors="pt")

outputs = model.generate(input_ids)

decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(decoded) # Machine learning is great, isn't it?

```

#### Limitations and bias

- The original (and this ported model) doesn't seem to handle well inputs with repeated sub-phrases, [content gets truncated](https://discuss.huggingface.co/t/issues-with-translating-inputs-containing-repeated-phrases/981)

## Training data

Pretrained weights were left identical to the original model released by fairseq. For more details, please, see the [paper](https://arxiv.org/abs/1907.06616).

## Eval results

pair | fairseq | transformers

-------|---------|----------

ru-en | [41.3](http://matrix.statmt.org/matrix/output/1907?run_id=6937) | 39.20

The score is slightly below the score reported by `fairseq`, since `transformers`` currently doesn't support:

- model ensemble, therefore the best performing checkpoint was ported (``model4.pt``).

- re-ranking

The score was calculated using this code:

```bash

git clone https://github.com/huggingface/transformers

cd transformers

export PAIR=ru-en

export DATA_DIR=data/$PAIR

export SAVE_DIR=data/$PAIR

export BS=8

export NUM_BEAMS=15

mkdir -p $DATA_DIR

sacrebleu -t wmt19 -l $PAIR --echo src > $DATA_DIR/val.source

sacrebleu -t wmt19 -l $PAIR --echo ref > $DATA_DIR/val.target

echo $PAIR

PYTHONPATH="src:examples/seq2seq" python examples/seq2seq/run_eval.py facebook/wmt19-$PAIR $DATA_DIR/val.source $SAVE_DIR/test_translations.txt --reference_path $DATA_DIR/val.target --score_path $SAVE_DIR/test_bleu.json --bs $BS --task translation --num_beams $NUM_BEAMS

```

note: fairseq reports using a beam of 50, so you should get a slightly higher score if re-run with `--num_beams 50`.

## Data Sources

- [training, etc.](http://www.statmt.org/wmt19/)

- [test set](http://matrix.statmt.org/test_sets/newstest2019.tgz?1556572561)

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Facebook FAIR's WMT19 News Translation Task Submission},

author={Ng, Nathan and Yee, Kyra and Baevski, Alexei and Ott, Myle and Auli, Michael and Edunov, Sergey},

booktitle={Proc. of WMT},

}

```

## TODO

- port model ensemble (fairseq uses 4 model checkpoints)

|

{"language": ["ru", "en"], "license": "apache-2.0", "tags": ["translation", "wmt19", "facebook"], "datasets": ["wmt19"], "metrics": ["bleu"], "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png"}

|

facebook/wmt19-ru-en

| null |

[

"transformers",

"pytorch",

"safetensors",

"fsmt",

"text2text-generation",

"translation",

"wmt19",

"facebook",

"ru",

"en",

"dataset:wmt19",

"arxiv:1907.06616",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

translation

|

transformers

|

# WMT 21 En-X

WMT 21 En-X is a 4.7B multilingual encoder-decoder (seq-to-seq) model trained for one-to-many multilingual translation.

It was introduced in this [paper](https://arxiv.org/abs/2108.03265) and first released in [this](https://github.com/pytorch/fairseq/tree/main/examples/wmt21) repository.

The model can directly translate English text into 7 other languages: Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de).

To translate into a target language, the target language id is forced as the first generated token.

To force the target language id as the first generated token, pass the `forced_bos_token_id` parameter to the `generate` method.

*Note: `M2M100Tokenizer` depends on `sentencepiece`, so make sure to install it before running the example.*

To install `sentencepiece` run `pip install sentencepiece`

Since the model was trained with domain tags, you should prepend them to the input as well.

* "wmtdata newsdomain": Use for sentences in the news domain

* "wmtdata otherdomain": Use for sentences in all other domain

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("facebook/wmt21-dense-24-wide-en-x")

tokenizer = AutoTokenizer.from_pretrained("facebook/wmt21-dense-24-wide-en-x")

inputs = tokenizer("wmtdata newsdomain One model for many languages.", return_tensors="pt")

# translate English to German

generated_tokens = model.generate(**inputs, forced_bos_token_id=tokenizer.get_lang_id("de"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "Ein Modell für viele Sprachen."

# translate English to Icelandic

generated_tokens = model.generate(**inputs, forced_bos_token_id=tokenizer.get_lang_id("is"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "Ein fyrirmynd fyrir mörg tungumál."

```

See the [model hub](https://huggingface.co/models?filter=wmt21) to look for more fine-tuned versions.

## Languages covered

English (en), Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de)

## BibTeX entry and citation info

```

@inproceedings{tran2021facebook

title={Facebook AI’s WMT21 News Translation Task Submission},

author={Chau Tran and Shruti Bhosale and James Cross and Philipp Koehn and Sergey Edunov and Angela Fan},

booktitle={Proc. of WMT},

year={2021},

}

```

|

{"language": ["multilingual", "ha", "is", "ja", "cs", "ru", "zh", "de", "en"], "license": "mit", "tags": ["translation", "wmt21"]}

|

facebook/wmt21-dense-24-wide-en-x

| null |

[

"transformers",

"pytorch",

"m2m_100",

"text2text-generation",

"translation",

"wmt21",

"multilingual",

"ha",

"is",

"ja",

"cs",

"ru",

"zh",

"de",

"en",

"arxiv:2108.03265",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

translation

|

transformers

|

# WMT 21 X-En

WMT 21 X-En is a 4.7B multilingual encoder-decoder (seq-to-seq) model trained for one-to-many multilingual translation.

It was introduced in this [paper](https://arxiv.org/abs/2108.03265) and first released in [this](https://github.com/pytorch/fairseq/tree/main/examples/wmt21) repository.

The model can directly translate text from 7 languages: Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de) to English.

To translate into a target language, the target language id is forced as the first generated token.

To force the target language id as the first generated token, pass the `forced_bos_token_id` parameter to the `generate` method.

*Note: `M2M100Tokenizer` depends on `sentencepiece`, so make sure to install it before running the example.*

To install `sentencepiece` run `pip install sentencepiece`

Since the model was trained with domain tags, you should prepend them to the input as well.

* "wmtdata newsdomain": Use for sentences in the news domain

* "wmtdata otherdomain": Use for sentences in all other domain

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("facebook/wmt21-dense-24-wide-x-en")

tokenizer = AutoTokenizer.from_pretrained("facebook/wmt21-dense-24-wide-x-en")

# translate German to English

tokenizer.src_lang = "de"

inputs = tokenizer("wmtdata newsdomain Ein Modell für viele Sprachen", return_tensors="pt")

generated_tokens = model.generate(**inputs)

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "A model for many languages"

# translate Icelandic to English

tokenizer.src_lang = "is"

inputs = tokenizer("wmtdata newsdomain Ein fyrirmynd fyrir mörg tungumál", return_tensors="pt")

generated_tokens = model.generate(**inputs)

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "One model for many languages"

```

See the [model hub](https://huggingface.co/models?filter=wmt21) to look for more fine-tuned versions.

## Languages covered

English (en), Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de)

## BibTeX entry and citation info

```

@inproceedings{tran2021facebook

title={Facebook AI’s WMT21 News Translation Task Submission},

author={Chau Tran and Shruti Bhosale and James Cross and Philipp Koehn and Sergey Edunov and Angela Fan},

booktitle={Proc. of WMT},

year={2021},

}

```

|

{"language": ["multilingual", "ha", "is", "ja", "cs", "ru", "zh", "de", "en"], "license": "mit", "tags": ["translation", "wmt21"]}

|

facebook/wmt21-dense-24-wide-x-en

| null |

[

"transformers",

"pytorch",

"m2m_100",

"text2text-generation",

"translation",

"wmt21",

"multilingual",

"ha",

"is",

"ja",

"cs",

"ru",

"zh",

"de",

"en",

"arxiv:2108.03265",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

text-generation

|

transformers

|

# XGLM-1.7B

XGLM-1.7B is a multilingual autoregressive language model (with 1.7 billion parameters) trained on a balanced corpus of a diverse set of languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-1.7B is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-1.7B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-1.7B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-1.7B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

```

|

{"language": ["multilingual", "en", "ru", "zh", "de", "es", "fr", "ja", "it", "pt", "el", "ko", "fi", "id", "tr", "ar", "vi", "th", "bg", "ca", "hi", "et", "bn", "ta", "ur", "sw", "te", "eu", "my", "ht", "qu"], "license": "mit", "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png", "inference": false}

|

facebook/xglm-1.7B

| null |

[

"transformers",

"pytorch",

"tf",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

text-generation

|

transformers

|

# XGLM-2.9B

XGLM-2.9B is a multilingual autoregressive language model (with 2.9 billion parameters) trained on a balanced corpus of a diverse set of languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-2.9B is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-2.9B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-2.9B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-2.9B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

```

|

{"language": ["multilingual", "en", "ru", "zh", "de", "es", "fr", "ja", "it", "pt", "el", "ko", "fi", "id", "tr", "ar", "vi", "th", "bg", "ca", "hi", "et", "bn", "ta", "ur", "sw", "te", "eu", "my", "ht", "qu"], "license": "mit", "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png", "inference": false}

|

facebook/xglm-2.9B

| null |

[

"transformers",

"pytorch",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

text-generation

|

transformers

|

# XGLM-4.5B

XGLM-4.5B is a multilingual autoregressive language model (with 4.5 billion parameters) trained on a balanced corpus of a diverse set of 134 languages. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-4.5B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-4.5B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-4.5B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

```

|

{"language": ["multilingual", "en", "ru", "zh", "de", "es", "fr", "ja", "it", "pt", "el", "ko", "fi", "id", "tr", "ar", "vi", "th", "bg", "ca", "hi", "et", "bn", "ta", "ur", "sw", "te", "eu", "my", "ht", "qu"], "license": "mit", "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png", "inference": false}

|

facebook/xglm-4.5B

| null |

[

"transformers",

"pytorch",

"safetensors",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

text-generation

|

transformers

|

# XGLM-564M

XGLM-564M is a multilingual autoregressive language model (with 564 million parameters) trained on a balanced corpus of a diverse set of 30 languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-564M is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-564M development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-564M")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-564M")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

```

|

{"language": ["multilingual", "en", "ru", "zh", "de", "es", "fr", "ja", "it", "pt", "el", "ko", "fi", "id", "tr", "ar", "vi", "th", "bg", "ca", "hi", "et", "bn", "ta", "ur", "sw", "te", "eu", "my", "ht", "qu"], "license": "mit", "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png", "inference": false}

|

facebook/xglm-564M

| null |

[

"transformers",

"pytorch",

"tf",

"jax",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

text-generation

|

transformers

|

# XGLM-7.5B

XGLM-7.5B is a multilingual autoregressive language model (with 7.5 billion parameters) trained on a balanced corpus of a diverse set of languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-7.5B is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-7.5B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-7.5B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-7.5B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

```

|

{"language": ["multilingual", "en", "ru", "zh", "de", "es", "fr", "ja", "it", "pt", "el", "ko", "fi", "id", "tr", "ar", "vi", "th", "bg", "ca", "hi", "et", "bn", "ta", "ur", "sw", "te", "eu", "my", "ht", "qu"], "license": "mit", "thumbnail": "https://huggingface.co/front/thumbnails/facebook.png", "inference": false}

|

facebook/xglm-7.5B

| null |

[

"transformers",

"pytorch",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

fill-mask

|

transformers

|

# XLM-RoBERTa-XL (xlarge-sized model)

XLM-RoBERTa-XL model pre-trained on 2.5TB of filtered CommonCrawl data containing 100 languages. It was introduced in the paper [Larger-Scale Transformers for Multilingual Masked Language Modeling](https://arxiv.org/abs/2105.00572) by Naman Goyal, Jingfei Du, Myle Ott, Giri Anantharaman, Alexis Conneau and first released in [this repository](https://github.com/pytorch/fairseq/tree/master/examples/xlmr).

Disclaimer: The team releasing XLM-RoBERTa-XL did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

XLM-RoBERTa-XL is a extra large multilingual version of RoBERTa. It is pre-trained on 2.5TB of filtered CommonCrawl data containing 100 languages.

RoBERTa is a transformers model pretrained on a large corpus in a self-supervised fashion. This means it was pretrained on the raw texts only, with no humans labeling them in any way (which is why it can use lots of publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, it was pretrained with the Masked language modeling (MLM) objective. Taking a sentence, the model randomly masks 15% of the words in the input then run the entire masked sentence through the model and has to predict the masked words. This is different from traditional recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the sentence.

This way, the model learns an inner representation of 100 languages that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard classifier using the features produced by the XLM-RoBERTa-XL model as inputs.

## Intended uses & limitations

You can use the raw model for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?search=xlm-roberta-xl) to look for fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked) to make decisions, such as sequence classification, token classification or question answering. For tasks such as text generation, you should look at models like GPT2.

## Usage

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='facebook/xlm-roberta-xl')

>>> unmasker("Europe is a <mask> continent.")

[{'score': 0.08562745153903961,

'token': 38043,

'token_str': 'living',

'sequence': 'Europe is a living continent.'},

{'score': 0.0799778401851654,

'token': 103494,

'token_str': 'dead',

'sequence': 'Europe is a dead continent.'},

{'score': 0.046154674142599106,

'token': 72856,

'token_str': 'lost',

'sequence': 'Europe is a lost continent.'},

{'score': 0.04358183592557907,

'token': 19336,

'token_str': 'small',

'sequence': 'Europe is a small continent.'},

{'score': 0.040570393204689026,

'token': 34923,

'token_str': 'beautiful',

'sequence': 'Europe is a beautiful continent.'}]

```

Here is how to use this model to get the features of a given text in PyTorch: