repoName

stringlengths 7

77

| tree

stringlengths 0

2.85M

| readme

stringlengths 0

4.9M

|

|---|---|---|

p2pvid_sdfn | .eslintrc.yml

.github

workflows

deploy.yml

.gitpod.yml

.travis.yml

README-Gitpod.md

README.md

as-pect.config.js

asconfig.json

assembly

__tests__

as-pect.d.ts

guestbook.spec.ts

as_types.d.ts

main.ts

model.ts

tsconfig.json

babel.config.js

neardev

shared-test-staging

test.near.json

shared-test

test.near.json

package-lock.json

package.json

src

App.js

config.js

index.html

index.js

tests

integration

App-integration.test.js

ui

App-ui.test.js

| Guest Book

==========


<!-- MAGIC COMMENT: DO NOT DELETE! Everything above this line is hidden on NEAR Examples page -->

Sign in with [NEAR] and add a message to the guest book! A starter app built with an [AssemblyScript] backend and a [React] frontend.

Quick Start

===========

To run this project locally:

1. Prerequisites: Make sure you have Node.js ≥ 12 installed (https://nodejs.org), then use it to install [yarn]: `npm install --global yarn` (or just `npm i -g yarn`)

2. Install dependencies: `yarn install` (or just `yarn`)

3. Run the local development server: `yarn dev` (see `package.json` for a

full list of `scripts` you can run with `yarn`)

Now you'll have a local development environment backed by the NEAR TestNet! Running `yarn dev` will tell you the URL you can visit in your browser to see the app.

Exploring The Code

==================

1. The backend code lives in the `/assembly` folder. This code gets deployed to the NEAR blockchain when you run `yarn deploy:contract`. This sort of code-that-runs-on-a-blockchain is called a "smart contract" – [learn more about NEAR smart contracts][smart contract docs].

2. The frontend code lives in the `/src` folder.

[/src/index.html](/src/index.html) is a great place to start exploring. Note

that it loads in `/src/index.js`, where you can learn how the frontend

connects to the NEAR blockchain.

3. Tests: there are different kinds of tests for the frontend and backend. The backend AssemblyScript code is tested with the [asp] command, and the frontend code with [jest]. You can run both at once with `yarn test`.

Both contract and client-side code will auto-reload as you change source files.

Deploy

======

Every smart contract in NEAR has its [own associated account][NEAR accounts]. When you run `yarn dev`, your smart contracts get deployed to the live NEAR TestNet with a throwaway account. When you're ready to make it permanent, here's how.

Step 0: Install near-cli

--------------------------

You need near-cli installed globally. Here's how:

    npm install --global near-cli

This will give you the `near` [CLI] tool. Ensure that it's installed with:

    near --version

Step 1: Create an account for the contract

------------------------------------------

Visit [NEAR Wallet] and make a new account. You'll be deploying these smart contracts to this new account.

Now authorize NEAR CLI for this new account, and follow the instructions it gives you:

    near login

Step 2: set contract name in code

---------------------------------

Modify the line in `src/config.js` that sets the account name of the contract. Set it to the account id you used above.

    const CONTRACT_NAME = process.env.CONTRACT_NAME || 'your-account-here!'
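The fallback in that line can be illustrated with a small sketch. The helper name `resolveContractName` and the account names below are hypothetical; only the `process.env.CONTRACT_NAME || ...` pattern comes from `src/config.js`.

```javascript
// Hypothetical helper mirroring the `process.env.CONTRACT_NAME || '...'` pattern.
// `env` stands in for process.env so the behavior is easy to see in isolation.
function resolveContractName(env, fallback) {
  // `||` picks the fallback when CONTRACT_NAME is undefined or an empty string.
  return env.CONTRACT_NAME || fallback;
}

// No environment override: the hard-coded account id is used.
console.log(resolveContractName({}, 'your-account.testnet'));

// With an override, the environment variable wins.
console.log(resolveContractName({ CONTRACT_NAME: 'other-account.testnet' }, 'your-account.testnet'));
```

In practice this means you can leave the file untouched and set `CONTRACT_NAME` in the environment instead, if that suits your workflow better.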

Step 3: change remote URL if you cloned this repo

-------------------------

Unless you forked this repository, you will need to change the remote URL to a repo that you have commit access to. This will allow auto deployment to GitHub Pages from the command line.

1) go to GitHub and create a new repository for this project

2) open your terminal and in the root of this project enter the following:

$ `git remote set-url origin https://github.com/YOUR_USERNAME/YOUR_REPOSITORY.git`

Step 4: deploy!

---------------

One command:

    yarn deploy

As you can see in `package.json`, this does two things:

1. builds & deploys smart contracts to NEAR TestNet

2. builds & deploys frontend code to GitHub using [gh-pages]. This will only work if the project already has a repository set up on GitHub. Feel free to modify the `deploy` script in `package.json` to deploy elsewhere.

[NEAR]: https://nearprotocol.com/

[yarn]: https://yarnpkg.com/

[AssemblyScript]: https://docs.assemblyscript.org/

[React]: https://reactjs.org

[smart contract docs]: https://docs.nearprotocol.com/docs/roles/developer/contracts/assemblyscript

[asp]: https://www.npmjs.com/package/@as-pect/cli

[jest]: https://jestjs.io/

[NEAR accounts]: https://docs.nearprotocol.com/docs/concepts/account

[NEAR Wallet]: https://wallet.nearprotocol.com

[near-cli]: https://github.com/nearprotocol/near-cli

[CLI]: https://www.w3schools.com/whatis/whatis_cli.asp

[create-near-app]: https://github.com/nearprotocol/create-near-app

[gh-pages]: https://github.com/tschaub/gh-pages

|

mehmetlevent_near_museum_ | Cargo.toml

README.md

as-pect.config.js

asconfig.json

package.json

simulation

Cargo.toml

README.md

src

lib.rs

meme.rs

museum.rs

INIT MUSEUM

ADD CONTRIBUTOR

CREATE MEME

VERIFY MEME

src

as-pect.d.ts

as_types.d.ts

meme

README.md

__tests__

README.md

index.unit.spec.ts

asconfig.json

assembly

index.ts

models.ts

museum

README.md

__tests__

README.md

index.unit.spec.ts

asconfig.json

assembly

index.ts

models.ts

tsconfig.json

utils.ts

| # Simulation Tests

## Usage

`yarn test:simulate`

## File Structure

```txt

simulation

├── Cargo.toml <-- Rust project config

├── README.md <-- * you are here

└── src

├── lib.rs <-- this is the business end of simulation

├── meme.rs <-- type wrapper for Meme contract

└── museum.rs <-- type wrapper for Museum contract

```

## Orientation

The simulation environment requires that we

## Output

```txt

running 1 test

---------------------------------------

---- INIT MUSEUM ----------------------

---------------------------------------

ExecutionResult {

outcome: ExecutionOutcome {

logs: [

"museum was created",

],

receipt_ids: [],

burnt_gas: 4354462070277,

tokens_burnt: 0,

status: SuccessValue(``),

},

}

---------------------------------------

---- ADD CONTRIBUTOR ------------------

---------------------------------------

ExecutionResult {

outcome: ExecutionOutcome {

logs: [

"contributor was added",

],

receipt_ids: [],

burnt_gas: 4884161460212,

tokens_burnt: 0,

status: SuccessValue(``),

},

}

---------------------------------------

---- CREATE MEME ----------------------

---------------------------------------

[

Some(

ExecutionResult {

outcome: ExecutionOutcome {

logs: [],

receipt_ids: [

`AmMRhhhYir4wNuUxhf8uCoKgnpv5nHQGvBqAeEWFL344`,

],

burnt_gas: 2428142357466,

tokens_burnt: 0,

status: SuccessReceiptId(AmMRhhhYir4wNuUxhf8uCoKgnpv5nHQGvBqAeEWFL344),

},

},

),

Some(

ExecutionResult {

outcome: ExecutionOutcome {

logs: [

"attempting to create meme",

],

receipt_ids: [

`B2BBAoJYj3EFE3Co6PRmfkXopTD654gUj8H6ywSsQD9e`,

`4NWBWN9dWuwiwbkwnub9Rv134Ubu2eJmbkdk1bJzeFtr`,

],

burnt_gas: 19963342520004,

tokens_burnt: 0,

status: SuccessValue(``),

},

},

),

Some(

ExecutionResult {

outcome: ExecutionOutcome {

logs: [],

receipt_ids: [],

burnt_gas: 4033749130056,

tokens_burnt: 0,

status: SuccessValue(``),

},

},

),

Some(

ExecutionResult {

outcome: ExecutionOutcome {

logs: [

"Meme [ usain.museum ] successfully created",

],

receipt_ids: [],

burnt_gas: 4644097556149,

tokens_burnt: 0,

status: SuccessValue(``),

},

},

),

]

---------------------------------------

---- VERIFY MEME ----------------------

---------------------------------------

Object({

"creator": String(

"museum",

),

"created_at": String(

"17000000000",

),

"vote_score": Number(

0,

),

"total_donations": String(

"0",

),

"title": String(

"usain refrain",

),

"data": String(

"https://9gag.com/gag/ayMDG8Y",

),

"category": Number(

0,

),

})

test test::test_add_meme ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out

Doc-tests simulation-near-academy-contracts

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out

✨ Done in 20.99s.

```
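To get a feel for the cost of the cross-contract `CREATE MEME` flow, the `burnt_gas` values from its four receipts can be added up. This is a minimal sketch; the helper `totalTgas` is hypothetical, and the numbers are copied from the sample output above.

```javascript
// burnt_gas values copied from the four CREATE MEME receipts in the output above.
const createMemeBurntGas = [2428142357466, 19963342520004, 4033749130056, 4644097556149];

// Gas units per teragas (Tgas): 10^12.
const GAS_PER_TGAS = 1e12;

// Sum the gas burnt across receipts and express the total in Tgas.
function totalTgas(burntGasValues) {
  const totalGas = burntGasValues.reduce((sum, gas) => sum + gas, 0);
  return totalGas / GAS_PER_TGAS;
}

console.log(totalTgas(createMemeBurntGas).toFixed(2) + ' Tgas'); // 31.07 Tgas
```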

# Unit Tests for `Meme` Contract

## Usage

```sh

yarn test:unit -f meme

```

## Output

*Note: the tests marked with `Todo` must be verified using simulation tests because they involve cross-contract calls (which cannot be verified using unit tests).*

```txt

[Describe]: meme initialization

[Success]: ✔ creates a new meme with proper metadata

[Success]: ✔ prevents double initialization

[Success]: ✔ requires title not to be blank

[Success]: ✔ requires a minimum balance

[Describe]: meme voting

[Success]: ✔ allows individuals to vote

[Success]: ✔ prevents vote automation for individuals

[Success]: ✔ prevents any user from voting more than once

[Describe]: meme captures votes

[Success]: ✔ captures all votes

[Success]: ✔ calculates a running vote score

[Success]: ✔ returns a list of recent votes

[Success]: ✔ allows groups to vote

[Describe]: meme comments

[Success]: ✔ captures comments

[Success]: ✔ rejects comments that are too long

[Success]: ✔ captures multiple comments

[Describe]: meme donations

[Success]: ✔ captures donations

[Describe]: captures donations

[Success]: ✔ captures all donations

[Success]: ✔ calculates a running donations total

[Success]: ✔ returns a list of recent donations

[Todo]: releases donations

[File]: src/meme/__tests__/index.unit.spec.ts

[Groups]: 7 pass, 7 total

[Result]: ✔ PASS

[Snapshot]: 0 total, 0 added, 0 removed, 0 different

[Summary]: 18 pass, 0 fail, 18 total

[Time]: 46.469ms

```

# Unit Tests for `Museum` Contract

## Usage

```sh

yarn test:unit -f museum

```

## Output

*Note: the tests marked with `Todo` must be verified using simulation tests because they involve cross-contract calls (which cannot be verified using unit tests).*

```txt

[Describe]: museum initialization

[Success]: ✔ creates a new museum with proper metadata

[Success]: ✔ prevents double initialization

[Success]: ✔ requires title not to be blank

[Success]: ✔ requires a minimum balance

[Describe]: Museum self-service methods

[Success]: ✔ returns a list of owners

[Success]: ✔ returns a list of memes

[Success]: ✔ returns a count of memes

[Success]: ✔ allows users to add / remove themselves as contributors

[Todo]: allows whitelisted contributors to create a meme

[Describe]: Museum owner methods

[Success]: ✔ allows owners to whitelist a contributor

[Success]: ✔ allows owners to remove a contributor

[Success]: ✔ allows owners to add a new owner

[Success]: ✔ allows owners to remove an owner

[Todo]: allows owners to remove a meme

[File]: src/museum/__tests__/index.unit.spec.ts

[Groups]: 4 pass, 4 total

[Result]: ✔ PASS

[Snapshot]: 0 total, 0 added, 0 removed, 0 different

[Summary]: 12 pass, 0 fail, 12 total

[Time]: 29.181ms

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

[Result]: ✔ PASS

[Files]: 1 total

[Groups]: 4 count, 4 pass

[Tests]: 12 pass, 0 fail, 12 total

[Time]: 8846.571ms

✨ Done in 9.51s.

```

# The Meme Museum

This repo is deprecated. Please use: https://github.com/NEAR-Edu/near-academy-contracts

# Meme Contract

**NOTE**

If you try to call a method which requires a signature from a valid account, you will see this error:

```txt

"error": "wasm execution failed with error: FunctionCallError(HostError(ProhibitedInView ..."

```

This happens any time you use `near view ...` where you should be using `near call ...`, so pay close attention in the following examples to which one is being used: a `view` method or a `call` (a.k.a. "change") method.
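One way to keep the distinction straight is to encode it. The sketch below is hypothetical: `nearCommand` is not part of near-cli, and the list of view methods is simply the set of `near view` examples documented in this README. It builds the command line as a string rather than running it.

```javascript
// Method names taken from the `near view` examples in this README.
const VIEW_METHODS = new Set([
  'get_meme', 'get_recent_votes', 'get_vote_score',
  'get_donations_total', 'get_recent_donations', 'get_recent_comments',
]);

// Build the near-cli command line for a method, choosing `view` or `call`.
function nearCommand(contract, method, args, signer) {
  const argText = args ? ` '${JSON.stringify(args)}'` : '';
  if (VIEW_METHODS.has(method)) {
    // View methods are read-only and need no signing account.
    return `near view ${contract} ${method}${argText}`;
  }
  // Change methods must be signed, so a signer account is required.
  if (!signer) throw new Error(`${method} is a change method and needs a signer`);
  return `near call ${contract} ${method}${argText} --account_id ${signer}`;
}

console.log(nearCommand('dev-1614603380541-7288163', 'get_meme'));
console.log(nearCommand('dev-1614603380541-7288163', 'vote', { value: 1 }, 'sherif.testnet'));
```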

----

## deployment

```sh

near dev-deploy ./build/release/meme.wasm

```

## initialization

`init(title: string, data: string, category: Category): void`

```sh

# anyone can initialize meme (so this must be done by the museum at deploy-time)

near call dev-1614603380541-7288163 init '{"title": "hello world", "data": "https://9gag.com/gag/ayMDG8Y", "category": 0}' --account_id dev-1614603380541-7288163 --amount 3

```

## view methods

`get_meme(): Meme`

```sh

# anyone can read meme metadata

near view dev-1614603380541-7288163 get_meme

```

```js

{

creator: 'dev-1614603380541-7288163',

created_at: '1614603702927464728',

vote_score: 4,

total_donations: '0',

title: 'hello world',

data: 'https://9gag.com/gag/ayMDG8Y',

category: 0

}

```
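The `created_at` value is easier to read as a date. The sketch below assumes it is a block timestamp in nanoseconds since the Unix epoch, which is how NEAR reports block time; the README itself does not state the unit. The helper name `nanosToDate` is hypothetical.

```javascript
// Convert a nanosecond timestamp string (as returned in `created_at`) to a Date.
function nanosToDate(nanos) {
  // BigInt avoids precision loss: the value exceeds Number.MAX_SAFE_INTEGER.
  const millis = BigInt(nanos) / 1000000n; // integer division drops sub-millisecond digits
  return new Date(Number(millis));
}

console.log(nanosToDate('1614603702927464728').toISOString()); // 2021-03-01T13:01:42.927Z
```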

`get_recent_votes(): Array<Vote>`

```sh

# anyone can request a list of recent votes

near view dev-1614603380541-7288163 get_recent_votes

```

```js

[

{

created_at: '1614603886399296553',

value: 1,

voter: 'dev-1614603380541-7288163'

},

{

created_at: '1614603988616406809',

value: 1,

voter: 'sherif.testnet'

},

{

created_at: '1614604214413823755',

value: 2,

voter: 'batch-dev-1614603380541-7288163'

},

[length]: 3

]

```

`get_vote_score(): i32`

```sh

near view dev-1614603380541-7288163 get_vote_score

```

```js

4

```
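The score of `4` is consistent with the three recent votes shown earlier (1 + 1 + 2). A minimal sketch, assuming the score is simply the sum of vote values; the contract's actual scoring rule is not shown in this README.

```javascript
// Votes copied from the sample `get_recent_votes` output above.
const recentVotes = [
  { value: 1, voter: 'dev-1614603380541-7288163' },
  { value: 1, voter: 'sherif.testnet' },
  { value: 2, voter: 'batch-dev-1614603380541-7288163' },
];

// Assumed scoring rule: the score is the sum of all vote values.
function voteScore(votes) {
  return votes.reduce((score, vote) => score + vote.value, 0);
}

console.log(voteScore(recentVotes)); // 4
```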

`get_donations_total(): u128`

```sh

near view dev-1614603380541-7288163 get_donations_total

```

```js

'5000000000000000000000000'

```
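Token amounts are returned in yoctoNEAR, where 1 NEAR is 10^24 yoctoNEAR, so the total above corresponds to the 5 NEAR donation shown in the `donate` example. A small sketch of the conversion (the helper name `nearToYocto` is hypothetical):

```javascript
// 1 NEAR = 10^24 yoctoNEAR. BigInt keeps the full precision.
const YOCTO_PER_NEAR = 10n ** 24n;

// Convert a whole number of NEAR to a yoctoNEAR string.
function nearToYocto(near) {
  return (BigInt(near) * YOCTO_PER_NEAR).toString();
}

console.log(nearToYocto(5)); // 5 followed by 24 zeros
```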

`get_recent_donations(): Array<Donation>`

```sh

near view dev-1614603380541-7288163 get_recent_donations

```

```js

[

{

amount: '5000000000000000000000000',

donor: 'sherif.testnet',

created_at: '1614604980292030188'

},

[length]: 1

]

```

## change methods

`vote(value: i8): void`

```sh

# user votes for meme

near call dev-1614603380541-7288163 vote '{"value": 1}' --account_id sherif.testnet

```

`batch_vote(value: i8, is_batch: bool = true): void`

```sh

# only the meme contract can call this method

near call dev-1614603380541-7288163 batch_vote '{"value": 2}' --account_id dev-1614603380541-7288163

```

`add_comment(text: string): void`

```sh

near call dev-1614603380541-7288163 add_comment '{"text":"i love this meme"}' --account_id sherif.testnet

```

`get_recent_comments(): Array<Comment>`

```sh

near view dev-1614603380541-7288163 get_recent_comments

```

```js

[

{

created_at: '1614604543670811624',

author: 'sherif.testnet',

text: 'i love this meme'

},

[length]: 1

]

```

`donate(): void`

```sh

near call dev-1614603380541-7288163 donate --account_id sherif.testnet --amount 5

```

`release_donations(account: AccountId): void`

```sh

near call dev-1614603380541-7288163 release_donations '{"account":"sherif.testnet"}' --account_id dev-1614603380541-728816

```

This method automatically calls `on_donations_released`, which logs *"Donations were released"*.

# Museum Contract

**NOTE**

If you try to call a method which requires a signature from a valid account, you will see this error:

```txt

"error": "wasm execution failed with error: FunctionCallError(HostError(ProhibitedInView ..."

```

This happens any time you use `near view ...` where you should be using `near call ...`, so pay close attention in the following examples to which one is being used: a `view` method or a `call` (a.k.a. "change") method.

----

## environment

```sh

# contract source code

export WASM_FILE=./build/release/museum.wasm

# system accounts

export CONTRACT_ACCOUNT= # NEAR account where the contract will live

export MASTER_ACCOUNT=$CONTRACT_ACCOUNT # can be any NEAR account that controls CONTRACT_ACCOUNT

# user accounts

export OWNER_ACCOUNT= # NEAR account that will control the museum

export CONTRIBUTOR_ACCOUNT= # NEAR account that will contribute memes

# configuration metadata

export MUSEUM_NAME="The Meme Museum" # a name for the museum itself, just metadata

export ATTACHED_TOKENS=3 # minimum tokens to attach to the museum initialization method (for storage)

export ATTACHED_GAS="300000000000000" # maximum allowable attached gas is 300Tgas (300 "teragas", 300 x 10^12)

```
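The `ATTACHED_GAS` value is just 300 Tgas written out in gas units. A quick sketch of the arithmetic (the helper name `tgasToGas` is hypothetical):

```javascript
// 1 Tgas (teragas) = 10^12 gas units.
const GAS_PER_TGAS = 10n ** 12n;

// Convert a whole number of Tgas to a gas-unit string suitable for --gas.
function tgasToGas(tgas) {
  return (BigInt(tgas) * GAS_PER_TGAS).toString();
}

console.log(tgasToGas(300)); // 300000000000000
```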

## deployment

**Approach #1**: using `near dev-deploy` for developer convenience

This contract can be deployed to a temporary development account that's automatically generated:

```sh

near dev-deploy $WASM_FILE

export CONTRACT_ACCOUNT= # the account that appears as a result of running this command

```

The result of executing the above command will be a temporary `dev-###-###` account and related `FullAccess` keys with the contract deployed (to this same account).

**Approach #2**: using `near deploy` for more control

Alternatively, the contract can be deployed to a specific account for which a `FullAccess` key is available. This account must be created and funded first.

```sh

near create-account $CONTRACT_ACCOUNT --masterAccount $MASTER_ACCOUNT

near deploy $WASM_FILE

```

This manual deployment method is the only way to deploy a contract to a specific account. It's important to consider initializing the contract in the same step. This is clarified below in the "initialization" section.

## initialization

`init(name: string, owners: AccountId[]): void`

**Approach #1**: initialize after `dev-deploy`

After `near dev-deploy` we can initialize the contract

```sh

# initialization method arguments

# '{"name":"The Meme Museum", "owners": ["<owner-account-id>"]}'

export INIT_METHOD_ARGS="{\"name\":\"$MUSEUM_NAME\", \"owners\": [\"$OWNER_ACCOUNT\"]}"

# initialize contract (the variable is quoted so the JSON reaches near-cli as a single argument)

near call $CONTRACT_ACCOUNT init "$INIT_METHOD_ARGS" --account_id $CONTRACT_ACCOUNT --amount $ATTACHED_TOKENS

```
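Hand-escaping JSON inside shell strings is easy to get wrong, especially when a value such as the museum name contains spaces. As an alternative sketch, the argument string can be generated with `JSON.stringify`. The helper name `initArgs` and the owner account below are placeholders, not part of the contract or of near-cli.

```javascript
// Build the JSON argument string for `init`; the account id used below is a placeholder.
function initArgs(museumName, owners) {
  // JSON.stringify handles quoting and escaping, including spaces in the name.
  return JSON.stringify({ name: museumName, owners });
}

console.log(initArgs('The Meme Museum', ['owner.testnet']));
// {"name":"The Meme Museum","owners":["owner.testnet"]}
```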

**Approach #2**: deploy and initialize in a single step

Or we can initialize at the same time as deploying. This is particularly useful for production deployments where an adversarial validator may try to front-run your contract initialization unless you bundle the `FunctionCall` action to `init()` as part of the transaction to `DeployContract`.

```sh

# initialization method arguments

# '{"name":"The Meme Museum", "owners": ["<owner-account-id>"]}'

export INIT_METHOD_ARGS="{\"name\":\"$MUSEUM_NAME\", \"owners\": [\"$OWNER_ACCOUNT\"]}"

# deploy AND initialize contract in a single step (the variable is quoted so the JSON stays a single argument)

near deploy $CONTRACT_ACCOUNT $WASM_FILE --initFunction init --initArgs "$INIT_METHOD_ARGS" --account_id $CONTRACT_ACCOUNT --initDeposit $ATTACHED_TOKENS

```

## view methods

`get_museum(): Museum`

```sh

near view $CONTRACT_ACCOUNT get_museum

```

```js

{ created_at: '1614636541756865886', name: 'The Meme Museum' }

```

`get_owner_list(): AccountId[]`

```sh

near view $CONTRACT_ACCOUNT get_owner_list

```

```js

[ '<owner-account-id>', [length]: 1 ]

```

`get_meme_list(): AccountId[]`

```sh

near view $CONTRACT_ACCOUNT get_meme_list

```

```js

[ 'usain', [length]: 1 ]

```

`get_meme_count(): u32`

```sh

near view $CONTRACT_ACCOUNT get_meme_count

```

```js

1

```

## change methods

### contributor methods

`add_myself_as_contributor(): void`

```sh

near call $CONTRACT_ACCOUNT add_myself_as_contributor --account_id $CONTRIBUTOR_ACCOUNT

```

`remove_myself_as_contributor(): void`

```sh

near call $CONTRACT_ACCOUNT remove_myself_as_contributor --account_id $CONTRIBUTOR_ACCOUNT

```

`add_meme(meme: AccountId, title: string, data: string, category: Category): void`

```sh

near call $CONTRACT_ACCOUNT add_meme '{"meme":"usain", "title": "usain refrain","data":"https://9gag.com/gag/ayMDG8Y", "category": 0 }' --account_id $CONTRIBUTOR_ACCOUNT --amount $ATTACHED_TOKENS --gas $ATTACHED_GAS

```

`on_meme_created(meme: AccountId): void`

This method is called automatically by `add_meme()` as a confirmation of meme account creation.

### owner methods

`add_contributor(account: AccountId): void`

```sh

# method arguments

# '{"account":"<contributor-account-id>"}'

export METHOD_ARGS="{\"account\":\"$CONTRIBUTOR_ACCOUNT\"}"

near call $CONTRACT_ACCOUNT add_contributor "$METHOD_ARGS" --account_id $OWNER_ACCOUNT

```

`remove_contributor(account: AccountId): void`

```sh

near call $CONTRACT_ACCOUNT remove_contributor '{"account":"<contributor-account-id>"}' --account_id $OWNER_ACCOUNT

```

`add_owner(account: AccountId): void`

```sh

near call $CONTRACT_ACCOUNT add_owner '{"account":"<new-owner-account-id>"}' --account_id $OWNER_ACCOUNT

```

`remove_owner(account: AccountId): void`

```sh

near call $CONTRACT_ACCOUNT remove_owner '{"account":"<some-owner-account-id>"}' --account_id $OWNER_ACCOUNT

```

`remove_meme(meme: AccountId): void`

```sh

near call $CONTRACT_ACCOUNT remove_meme '{"meme":"usain"}' --account_id $OWNER_ACCOUNT

```

`on_meme_removed(meme: AccountId): void`

This method is called automatically by `remove_meme()` as a confirmation of meme account deletion.

|

iGotABadIdea_THE-VOTING-APP | .gitpod.yml

README.md

SECURITY.md

|

babel.config.js

contract

README.md

as-pect.config.js

asconfig.json

assembly

__tests__

as-pect.d.ts

main.spec.ts

as_types.d.ts

index.ts

tsconfig.json

compile.js

package-lock.json

package.json

dist

index.html

logo-black.eab7a939.svg

logo-white.7fec831f.svg

src.61fc8ea7.css

src.64fd6f3b.css

src.e31bb0bc.css

Header Section

neardev

dev-account.env

shared-test-staging

test.near.json

shared-test

test.near.json

package.json

src

App.js

__mocks__

fileMock.js

assets

logo-black.svg

logo-white.svg

components

navbar

navbar.css

config.js

global.css

index.html

index.js

jest.init.js

main.test.js

utils.js

wallet

login

index.html

| DecentralHack Smart Contract

==================

A [smart contract] written in [AssemblyScript] for an app initialized with [create-near-app]

Quick Start

===========

Before you compile this code, you will need to install [Node.js] ≥ 12.

Exploring The Code

==================

1. The main smart contract code lives in `assembly/index.ts`. You can compile

it with the `./compile` script.

2. Tests: You can run smart contract tests with the `./test` script. This runs

standard AssemblyScript tests using [as-pect].

[smart contract]: https://docs.near.org/docs/develop/contracts/overview

[AssemblyScript]: https://www.assemblyscript.org/

[create-near-app]: https://github.com/near/create-near-app

[Node.js]: https://nodejs.org/en/download/package-manager/

[as-pect]: https://www.npmjs.com/package/@as-pect/cli

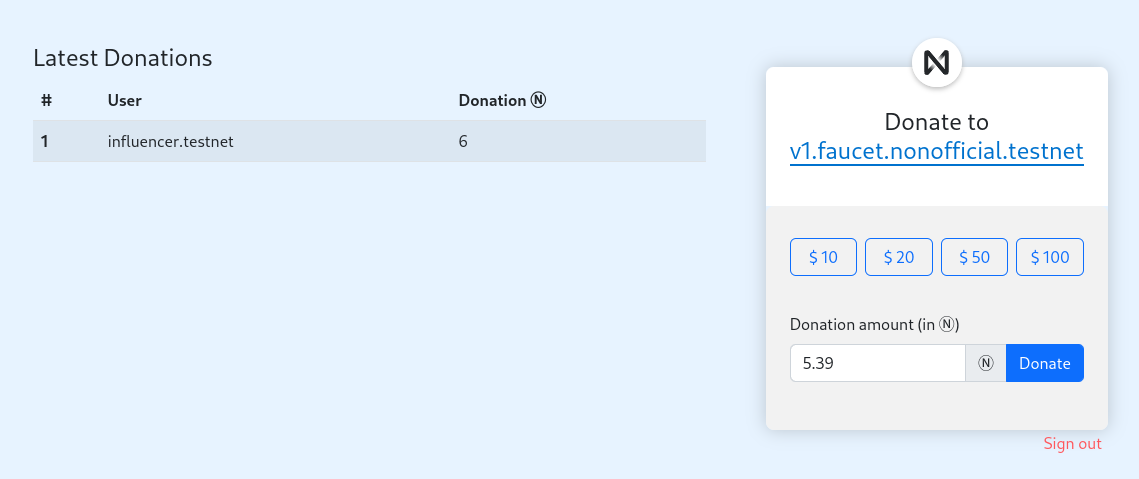

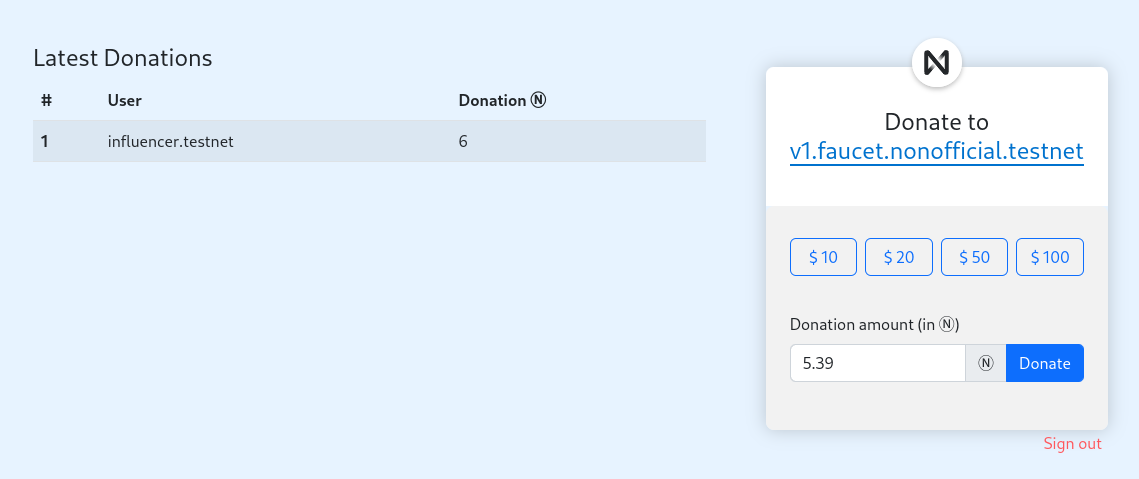

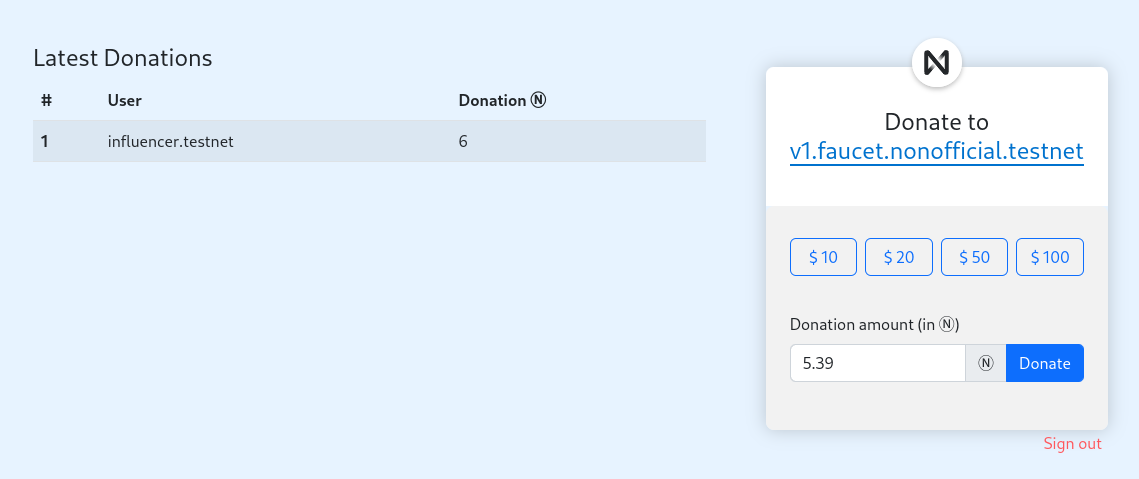

The Voting Game

==================

**The Voting Game is a party game that uncovers the hilarious truth behind your friendships. Personal stories are often shared after a revealing vote.**

<hr/>

Our DApp (on NEAR) provides a client interface to a party game that uncovers the hilarious truth behind your friendships. It stores game records permanently on the blockchain so they can be retrieved whenever needed. We also provide a B2B solution for clients who produce large amounts of data that quickly become difficult to manage. With The Voting Game, gameplay varies dramatically based on who you're playing with. We provide safe, encrypted, distributed storage as a free solution so that clients can play again and again. Transparency is what we aim for.

Preview

===========

Home page of our Voting Game:

Sidebar providing easy access to any section of the website:

List of available polls for voting:

Here comes the interesting part: you can create your own poll by filling in some basic information:

Click the vote button to submit your response:

After submission, you can see the number of votes given to each option:

This [React] app was initialized with [create-near-app]

Quick Start

===========

To run this project locally:

1. Prerequisites: Make sure you've installed [Node.js] ≥ 12

2. Install dependencies: `yarn install`

3. Run the local development server: `yarn dev` (see `package.json` for a

full list of `scripts` you can run with `yarn`)

Now you'll have a local development environment backed by the NEAR TestNet!

Go ahead and play with the app and the code. As you make code changes, the app will automatically reload.

Exploring The Code

==================

1. The "backend" code lives in the `/contract` folder. See the README there for

more info.

2. The frontend code lives in the `/src` folder. `/src/index.html` is a great

place to start exploring. Note that it loads in `/src/index.js`, where you

can learn how the frontend connects to the NEAR blockchain.

3. Tests: there are different kinds of tests for the frontend and the smart contract. See `contract/README` for info about how it's tested. The frontend code gets tested with [jest]. You can run both of these at once with `yarn run test`.

Deploy

======

Every smart contract in NEAR has its [own associated account][NEAR accounts]. When you run `yarn dev`, your smart contract gets deployed to the live NEAR TestNet with a throwaway account. When you're ready to make it permanent, here's how.

Step 0: Install near-cli (optional)

-------------------------------------

[near-cli] is a command line interface (CLI) for interacting with the NEAR blockchain. It was installed to the local `node_modules` folder when you ran `yarn install`, but for best ergonomics you may want to install it globally:

    yarn global add near-cli

Or, if you'd rather use the locally-installed version, you can prefix all `near` commands with `npx`

Ensure that it's installed with `near --version` (or `npx near --version`)

Step 1: Create an account for the contract

------------------------------------------

Each account on NEAR can have at most one contract deployed to it. If you've already created an account such as `your-name.testnet`, you can deploy your contract to `DecentralHack.your-name.testnet`. Assuming you've already created an account on [NEAR Wallet], here's how to create `DecentralHack.your-name.testnet`:

1. Authorize NEAR CLI, following the commands it gives you:

near login

2. Create a subaccount (replace `YOUR-NAME` below with your actual account name):

near create-account DecentralHack.YOUR-NAME.testnet --masterAccount YOUR-NAME.testnet

Step 2: set contract name in code

---------------------------------

Modify the line in `src/config.js` that sets the account name of the contract. Set it to the account id you used above.

    const CONTRACT_NAME = process.env.CONTRACT_NAME || 'DecentralHack.YOUR-NAME.testnet'

Step 3: deploy!

---------------

One command:

    yarn deploy

As you can see in `package.json`, this does two things:

1. builds & deploys smart contract to NEAR TestNet

2. builds & deploys frontend code to GitHub using [gh-pages]. This will only work if the project already has a repository set up on GitHub. Feel free to modify the `deploy` script in `package.json` to deploy elsewhere.

Troubleshooting

===============

On Windows, if you're seeing an error containing `EPERM` it may be related to spaces in your path. Please see [this issue](https://github.com/zkat/npx/issues/209) for more details.

[React]: https://reactjs.org/

[create-near-app]: https://github.com/near/create-near-app

[Node.js]: https://nodejs.org/en/download/package-manager/

[jest]: https://jestjs.io/

[NEAR accounts]: https://docs.near.org/docs/concepts/account

[NEAR Wallet]: https://wallet.testnet.near.org/

[near-cli]: https://github.com/near/near-cli

[gh-pages]: https://github.com/tschaub/gh-pages

|

joe-rlo_ReadyLayerOnePodcastNFT_test | README.md

market-contract

Cargo.toml

README.md

build.sh

src

external.rs

internal.rs

lib.rs

nft_callbacks.rs

sale.rs

sale_views.rs

nft-contract

Cargo.toml

README.md

build.sh

src

approval.rs

enumeration.rs

events.rs

internal.rs

lib.rs

metadata.rs

mint.rs

nft_core.rs

royalty.rs

package.json

| # Ready Layer One Welcome NFT

Just a clone of the NEAR NFT tutorial

### Minting Token

```bash

near call nft.readylayerone.testnet nft_mint '{"token_id": "token-1", "metadata": {"title": "Ready Layer One Podcast Cover", "description": "Check out the Ready Layer One Podcast at https://anchor.fm/readylayerone A crypto and NEAR focused podcast.", "media": "http://readylayerone.s3.amazonaws.com/1.png"}, "receiver_id": "'$MAIN_ACCOUNT'"}' --accountId $MAIN_ACCOUNT --amount 0.1

```
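The mint command splices `$MAIN_ACCOUNT` into a hand-written JSON string. As a hedged alternative, the same arguments could be assembled in a script and passed to `near call` as a single string. The helper `mintArgs` and the receiver account below are hypothetical; the field names are those used in the command above.

```javascript
// Hypothetical helper: assemble nft_mint arguments as one JSON string.
function mintArgs(tokenId, metadata, receiverId) {
  return JSON.stringify({ token_id: tokenId, metadata, receiver_id: receiverId });
}

const args = mintArgs(
  'token-1',
  {
    title: 'Ready Layer One Podcast Cover',
    media: 'http://readylayerone.s3.amazonaws.com/1.png',
  },
  'example.testnet' // placeholder standing in for $MAIN_ACCOUNT
);

console.log(args);
```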

# TBD

# TBD

|

g1ts_dApp-demo-on-NEAR | contract

README.md

as-pect.config.js

asconfig.json

assembly

__tests__

as-pect.d.ts

main.spec.ts

as_types.d.ts

index.ts

models.ts

tsconfig.json

types.ts

package.json

frontend

src

assets

css

milligram.css

styles.css

img

circle.svg

cross.svg

js

app.js

game_engine.js

near

config.js

utils.js

index.html

board

neardev

dev-account.env

package.json

| |

michaelxaolin_learnto.near | .gitpod.yml

README.md

babel.config.js

contract

README.md

as-pect.config.js

asconfig.json

assembly

__tests__

as-pect.d.ts

main.spec.ts

as_types.d.ts

index.ts

tsconfig.json

compile.js

node_modules

.bin

acorn.cmd

acorn.ps1

asb.cmd

asb.ps1

asbuild.cmd

asbuild.ps1

asc.cmd

asc.ps1

asinit.cmd

asinit.ps1

asp.cmd

asp.ps1

aspect.cmd

aspect.ps1

assemblyscript-build.cmd

assemblyscript-build.ps1

eslint.cmd

eslint.ps1

esparse.cmd

esparse.ps1

esvalidate.cmd

esvalidate.ps1

js-yaml.cmd

js-yaml.ps1

mkdirp.cmd

mkdirp.ps1

near-vm-as.cmd

near-vm-as.ps1

near-vm.cmd

near-vm.ps1

nearley-railroad.cmd

nearley-railroad.ps1

nearley-test.cmd

nearley-test.ps1

nearley-unparse.cmd

nearley-unparse.ps1

nearleyc.cmd

nearleyc.ps1

node-which.cmd

node-which.ps1

rimraf.cmd

rimraf.ps1

semver.cmd

semver.ps1

shjs.cmd

shjs.ps1

wasm-opt.cmd

wasm-opt.ps1

.package-lock.json

@as-covers

assembly

CONTRIBUTING.md

README.md

index.ts

package.json

tsconfig.json

core

CONTRIBUTING.md

README.md

package.json

glue

README.md

lib

index.d.ts

index.js

package.json

transform

README.md

lib

index.d.ts

index.js

util.d.ts

util.js

node_modules

visitor-as

.github

workflows

test.yml

README.md

as

index.d.ts

index.js

asconfig.json

dist

astBuilder.d.ts

astBuilder.js

base.d.ts

base.js

baseTransform.d.ts

baseTransform.js

decorator.d.ts

decorator.js

examples

capitalize.d.ts

capitalize.js

exportAs.d.ts

exportAs.js

functionCallTransform.d.ts

functionCallTransform.js

includeBytesTransform.d.ts

includeBytesTransform.js

list.d.ts

list.js

toString.d.ts

toString.js

index.d.ts

index.js

path.d.ts

path.js

simpleParser.d.ts

simpleParser.js

transformRange.d.ts

transformRange.js

transformer.d.ts

transformer.js

utils.d.ts

utils.js

visitor.d.ts

visitor.js

package.json

tsconfig.json

package.json

@as-pect

assembly

README.md

assembly

index.ts

internal

Actual.ts

Expectation.ts

Expected.ts

Reflect.ts

ReflectedValueType.ts

Test.ts

assert.ts

call.ts

comparison

toIncludeComparison.ts

toIncludeEqualComparison.ts

log.ts

noOp.ts

package.json

types

as-pect.d.ts

as-pect.portable.d.ts

env.d.ts

cli

README.md

init

as-pect.config.js

env.d.ts

example.spec.ts

init-types.d.ts

portable-types.d.ts

lib

as-pect.cli.amd.d.ts

as-pect.cli.amd.js

help.d.ts

help.js

index.d.ts

index.js

init.d.ts

init.js

portable.d.ts

portable.js

run.d.ts

run.js

test.d.ts

test.js

types.d.ts

types.js

util

CommandLineArg.d.ts

CommandLineArg.js

IConfiguration.d.ts

IConfiguration.js

asciiArt.d.ts

asciiArt.js

collectReporter.d.ts

collectReporter.js

getTestEntryFiles.d.ts

getTestEntryFiles.js

removeFile.d.ts

removeFile.js

strings.d.ts

strings.js

writeFile.d.ts

writeFile.js

worklets

ICommand.d.ts

ICommand.js

compiler.d.ts

compiler.js

package.json

core

README.md

lib

as-pect.core.amd.d.ts

as-pect.core.amd.js

index.d.ts

index.js

reporter

CombinationReporter.d.ts

CombinationReporter.js

EmptyReporter.d.ts

EmptyReporter.js

IReporter.d.ts

IReporter.js

SummaryReporter.d.ts

SummaryReporter.js

VerboseReporter.d.ts

VerboseReporter.js

test

IWarning.d.ts

IWarning.js

TestContext.d.ts

TestContext.js

TestNode.d.ts

TestNode.js

transform

assemblyscript.d.ts

assemblyscript.js

createAddReflectedValueKeyValuePairsMember.d.ts

createAddReflectedValueKeyValuePairsMember.js

createGenericTypeParameter.d.ts

createGenericTypeParameter.js

createStrictEqualsMember.d.ts

createStrictEqualsMember.js

emptyTransformer.d.ts

emptyTransformer.js

hash.d.ts

hash.js

index.d.ts

index.js

util

IAspectExports.d.ts

IAspectExports.js

IWriteable.d.ts

IWriteable.js

ReflectedValue.d.ts

ReflectedValue.js

TestNodeType.d.ts

TestNodeType.js

rTrace.d.ts

rTrace.js

stringifyReflectedValue.d.ts

stringifyReflectedValue.js

timeDifference.d.ts

timeDifference.js

wasmTools.d.ts

wasmTools.js

package.json

csv-reporter

index.ts

lib

as-pect.csv-reporter.amd.d.ts

as-pect.csv-reporter.amd.js

index.d.ts

index.js

package.json

readme.md

tsconfig.json

json-reporter

index.ts

lib

as-pect.json-reporter.amd.d.ts

as-pect.json-reporter.amd.js

index.d.ts

index.js

package.json

readme.md

tsconfig.json

snapshots

__tests__

snapshot.spec.ts

jest.config.js

lib

Snapshot.d.ts

Snapshot.js

SnapshotDiff.d.ts

SnapshotDiff.js

SnapshotDiffResult.d.ts

SnapshotDiffResult.js

as-pect.core.amd.d.ts

as-pect.core.amd.js

index.d.ts

index.js

parser

grammar.d.ts

grammar.js

package.json

src

Snapshot.ts

SnapshotDiff.ts

SnapshotDiffResult.ts

index.ts

parser

grammar.ts

tsconfig.json

@assemblyscript

loader

README.md

index.d.ts

index.js

package.json

umd

index.d.ts

index.js

package.json

@babel

code-frame

README.md

lib

index.js

package.json

helper-validator-identifier

README.md

lib

identifier.js

index.js

keyword.js

package.json

scripts

generate-identifier-regex.js

highlight

README.md

lib

index.js

node_modules

ansi-styles

index.js

package.json

readme.md

chalk

index.js

package.json

readme.md

templates.js

types

index.d.ts

color-convert

CHANGELOG.md

README.md

conversions.js

index.js

package.json

route.js

color-name

.eslintrc.json

README.md

index.js

package.json

test.js

escape-string-regexp

index.js

package.json

readme.md

has-flag

index.js

package.json

readme.md

supports-color

browser.js

index.js

package.json

readme.md

package.json

@eslint

eslintrc

CHANGELOG.md

README.md

conf

config-schema.js

environments.js

eslint-all.js

eslint-recommended.js

lib

cascading-config-array-factory.js

config-array-factory.js

config-array

config-array.js

config-dependency.js

extracted-config.js

ignore-pattern.js

index.js

override-tester.js

flat-compat.js

index.js

shared

ajv.js

config-ops.js

config-validator.js

deprecation-warnings.js

naming.js

relative-module-resolver.js

types.js

package.json

@humanwhocodes

config-array

README.md

api.js

package.json

object-schema

.eslintrc.js

.travis.yml

README.md

package.json

src

index.js

merge-strategy.js

object-schema.js

validation-strategy.js

tests

merge-strategy.js

object-schema.js

validation-strategy.js

acorn-jsx

README.md

index.d.ts

index.js

package.json

xhtml.js

acorn

CHANGELOG.md

README.md

dist

acorn.d.ts

acorn.js

acorn.mjs.d.ts

bin.js

package.json

ajv

.tonic_example.js

README.md

dist

ajv.bundle.js

ajv.min.js

lib

ajv.d.ts

ajv.js

cache.js

compile

async.js

equal.js

error_classes.js

formats.js

index.js

resolve.js

rules.js

schema_obj.js

ucs2length.js

util.js

data.js

definition_schema.js

dotjs

README.md

_limit.js

_limitItems.js

_limitLength.js

_limitProperties.js

allOf.js

anyOf.js

comment.js

const.js

contains.js

custom.js

dependencies.js

enum.js

format.js

if.js

index.js

items.js

multipleOf.js

not.js

oneOf.js

pattern.js

properties.js

propertyNames.js

ref.js

required.js

uniqueItems.js

validate.js

keyword.js

refs

data.json

json-schema-draft-04.json

json-schema-draft-06.json

json-schema-draft-07.json

json-schema-secure.json

package.json

scripts

.eslintrc.yml

bundle.js

compile-dots.js

ansi-colors

README.md

index.js

package.json

symbols.js

types

index.d.ts

ansi-regex

index.d.ts

index.js

package.json

readme.md

ansi-styles

index.d.ts

index.js

package.json

readme.md

argparse

CHANGELOG.md

README.md

index.js

lib

action.js

action

append.js

append

constant.js

count.js

help.js

store.js

store

constant.js

false.js

true.js

subparsers.js

version.js

action_container.js

argparse.js

argument

error.js

exclusive.js

group.js

argument_parser.js

const.js

help

added_formatters.js

formatter.js

namespace.js

utils.js

package.json

as-bignum

README.md

assembly

__tests__

as-pect.d.ts

i128.spec.as.ts

safe_u128.spec.as.ts

u128.spec.as.ts

u256.spec.as.ts

utils.ts

fixed

fp128.ts

fp256.ts

index.ts

safe

fp128.ts

fp256.ts

types.ts

globals.ts

index.ts

integer

i128.ts

i256.ts

index.ts

safe

i128.ts

i256.ts

i64.ts

index.ts

u128.ts

u256.ts

u64.ts

u128.ts

u256.ts

tsconfig.json

utils.ts

package.json

asbuild

README.md

dist

cli.d.ts

cli.js

commands

build.d.ts

build.js

fmt.d.ts

fmt.js

index.d.ts

index.js

init

cmd.d.ts

cmd.js

files

asconfigJson.d.ts

asconfigJson.js

aspecConfig.d.ts

aspecConfig.js

assembly_files.d.ts

assembly_files.js

eslintConfig.d.ts

eslintConfig.js

gitignores.d.ts

gitignores.js

index.d.ts

index.js

indexJs.d.ts

indexJs.js

packageJson.d.ts

packageJson.js

test_files.d.ts

test_files.js

index.d.ts

index.js

interfaces.d.ts

interfaces.js

run.d.ts

run.js

test.d.ts

test.js

index.d.ts

index.js

main.d.ts

main.js

utils.d.ts

utils.js

index.js

node_modules

cliui

CHANGELOG.md

LICENSE.txt

README.md

index.js

package.json

wrap-ansi

index.js

package.json

readme.md

y18n

CHANGELOG.md

README.md

index.js

package.json

yargs-parser

CHANGELOG.md

LICENSE.txt

README.md

index.js

lib

tokenize-arg-string.js

package.json

yargs

CHANGELOG.md

README.md

build

lib

apply-extends.d.ts

apply-extends.js

argsert.d.ts

argsert.js

command.d.ts

command.js

common-types.d.ts

common-types.js

completion-templates.d.ts

completion-templates.js

completion.d.ts

completion.js

is-promise.d.ts

is-promise.js

levenshtein.d.ts

levenshtein.js

middleware.d.ts

middleware.js

obj-filter.d.ts

obj-filter.js

parse-command.d.ts

parse-command.js

process-argv.d.ts

process-argv.js

usage.d.ts

usage.js

validation.d.ts

validation.js

yargs.d.ts

yargs.js

yerror.d.ts

yerror.js

index.js

locales

be.json

de.json

en.json

es.json

fi.json

fr.json

hi.json

hu.json

id.json

it.json

ja.json

ko.json

nb.json

nl.json

nn.json

pirate.json

pl.json

pt.json

pt_BR.json

ru.json

th.json

tr.json

zh_CN.json

zh_TW.json

package.json

yargs.js

package.json

assemblyscript-json

.eslintrc.js

.travis.yml

README.md

assembly

JSON.ts

decoder.ts

encoder.ts

index.ts

tsconfig.json

util

index.ts

index.js

package.json

temp-docs

README.md

classes

decoderstate.md

json.arr.md

json.bool.md

json.float.md

json.integer.md

json.null.md

json.num.md

json.obj.md

json.str.md

json.value.md

jsondecoder.md

jsonencoder.md

jsonhandler.md

throwingjsonhandler.md

modules

json.md

assemblyscript-regex

.eslintrc.js

.github

workflows

benchmark.yml

release.yml

test.yml

README.md

as-pect.config.js

asconfig.empty.json

asconfig.json

assembly

__spec_tests__

generated.spec.ts

__tests__

alterations.spec.ts

as-pect.d.ts

boundary-assertions.spec.ts

capture-group.spec.ts

character-classes.spec.ts

character-sets.spec.ts

characters.ts

empty.ts

quantifiers.spec.ts

range-quantifiers.spec.ts

regex.spec.ts

utils.ts

char.ts

env.ts

index.ts

nfa

matcher.ts

nfa.ts

types.ts

walker.ts

parser

node.ts

parser.ts

string-iterator.ts

walker.ts

regexp.ts

tsconfig.json

util.ts

benchmark

benchmark.js

package.json

spec

test-generator.js

ts

index.ts

tsconfig.json

assemblyscript-temporal

.github

workflows

node.js.yml

release.yml

README.md

as-pect.config.js

asconfig.empty.json

asconfig.json

assembly

__tests__

README.md

as-pect.d.ts

date.spec.ts

duration.spec.ts

empty.ts

plaindate.spec.ts

plaindatetime.spec.ts

plainmonthday.spec.ts

plaintime.spec.ts

plainyearmonth.spec.ts

timezone.spec.ts

zoneddatetime.spec.ts

constants.ts

date.ts

duration.ts

enums.ts

env.ts

index.ts

instant.ts

now.ts

plaindate.ts

plaindatetime.ts

plainmonthday.ts

plaintime.ts

plainyearmonth.ts

timezone.ts

tsconfig.json

tz

__tests__

index.spec.ts

rule.spec.ts

zone.spec.ts

iana.ts

index.ts

rule.ts

zone.ts

utils.ts

zoneddatetime.ts

development.md

package.json

tzdb

README.md

iana

theory.html

zoneinfo2tdf.pl

assemblyscript

README.md

cli

README.md

asc.d.ts

asc.js

asc.json

shim

README.md

fs.js

path.js

process.js

transform.d.ts

transform.js

util

colors.d.ts

colors.js

find.d.ts

find.js

mkdirp.d.ts

mkdirp.js

options.d.ts

options.js

utf8.d.ts

utf8.js

dist

asc.js

assemblyscript.d.ts

assemblyscript.js

sdk.js

index.d.ts

index.js

lib

loader

README.md

index.d.ts

index.js

package.json

umd

index.d.ts

index.js

package.json

rtrace

README.md

bin

rtplot.js

index.d.ts

index.js

package.json

umd

index.d.ts

index.js

package.json

package-lock.json

package.json

std

README.md

assembly.json

assembly

array.ts

arraybuffer.ts

atomics.ts

bindings

Date.ts

Math.ts

Reflect.ts

asyncify.ts

console.ts

wasi.ts

wasi_snapshot_preview1.ts

wasi_unstable.ts

builtins.ts

compat.ts

console.ts

crypto.ts

dataview.ts

date.ts

diagnostics.ts

error.ts

function.ts

index.d.ts

iterator.ts

map.ts

math.ts

memory.ts

number.ts

object.ts

polyfills.ts

process.ts

reference.ts

regexp.ts

rt.ts

rt

README.md

common.ts

index-incremental.ts

index-minimal.ts

index-stub.ts

index.d.ts

itcms.ts

rtrace.ts

stub.ts

tcms.ts

tlsf.ts

set.ts

shared

feature.ts

target.ts

tsconfig.json

typeinfo.ts

staticarray.ts

string.ts

symbol.ts

table.ts

tsconfig.json

typedarray.ts

uri.ts

util

casemap.ts

error.ts

hash.ts

math.ts

memory.ts

number.ts

sort.ts

string.ts

uri.ts

vector.ts

wasi

index.ts

portable.json

portable

index.d.ts

index.js

types

assembly

index.d.ts

package.json

portable

index.d.ts

package.json

tsconfig-base.json

astral-regex

index.d.ts

index.js

package.json

readme.md

axios

CHANGELOG.md

README.md

UPGRADE_GUIDE.md

dist

axios.js

axios.min.js

index.d.ts

index.js

lib

adapters

README.md

http.js

xhr.js

axios.js

cancel

Cancel.js

CancelToken.js

isCancel.js

core

Axios.js

InterceptorManager.js

README.md

buildFullPath.js

createError.js

dispatchRequest.js

enhanceError.js

mergeConfig.js

settle.js

transformData.js

defaults.js

helpers

README.md

bind.js

buildURL.js

combineURLs.js

cookies.js

deprecatedMethod.js

isAbsoluteURL.js

isURLSameOrigin.js

normalizeHeaderName.js

parseHeaders.js

spread.js

utils.js

package.json

balanced-match

.github

FUNDING.yml

LICENSE.md

README.md

index.js

package.json

base-x

LICENSE.md

README.md

package.json

src

index.d.ts

index.js

binary-install

README.md

example

binary.js

package.json

run.js

index.js

package.json

src

binary.js

binaryen

README.md

index.d.ts

package-lock.json

package.json

wasm.d.ts

bn.js

CHANGELOG.md

README.md

lib

bn.js

package.json

brace-expansion

README.md

index.js

package.json

bs58

CHANGELOG.md

README.md

index.js

package.json

callsites

index.d.ts

index.js

package.json

readme.md

camelcase

index.d.ts

index.js

package.json

readme.md

chalk

index.d.ts

package.json

readme.md

source

index.js

templates.js

util.js

chownr

README.md

chownr.js

package.json

cliui

CHANGELOG.md

LICENSE.txt

README.md

build

lib

index.js

string-utils.js

package.json

color-convert

CHANGELOG.md

README.md

conversions.js

index.js

package.json

route.js

color-name

README.md

index.js

package.json

commander

CHANGELOG.md

Readme.md

index.js

package.json

typings

index.d.ts

concat-map

.travis.yml

example

map.js

index.js

package.json

test

map.js

cross-spawn

CHANGELOG.md

README.md

index.js

lib

enoent.js

parse.js

util

escape.js

readShebang.js

resolveCommand.js

package.json

csv-stringify

README.md

lib

browser

index.js

sync.js

es5

index.d.ts

index.js

sync.d.ts

sync.js

index.d.ts

index.js

sync.d.ts

sync.js

package.json

debug

README.md

package.json

src

browser.js

common.js

index.js

node.js

decamelize

index.js

package.json

readme.md

deep-is

.travis.yml

example

cmp.js

index.js

package.json

test

NaN.js

cmp.js

neg-vs-pos-0.js

diff

CONTRIBUTING.md

README.md

dist

diff.js

lib

convert

dmp.js

xml.js

diff

array.js

base.js

character.js

css.js

json.js

line.js

sentence.js

word.js

index.es6.js

index.js

patch

apply.js

create.js

merge.js

parse.js

util

array.js

distance-iterator.js

params.js

package.json

release-notes.md

runtime.js

discontinuous-range

.travis.yml

README.md

index.js

package.json

test

main-test.js

doctrine

CHANGELOG.md

README.md

lib

doctrine.js

typed.js

utility.js

package.json

emoji-regex

LICENSE-MIT.txt

README.md

es2015

index.js

text.js

index.d.ts

index.js

package.json

text.js

enquirer

CHANGELOG.md

README.md

index.d.ts

index.js

lib

ansi.js

combos.js

completer.js

interpolate.js

keypress.js

placeholder.js

prompt.js

prompts

autocomplete.js

basicauth.js

confirm.js

editable.js

form.js

index.js

input.js

invisible.js

list.js

multiselect.js

numeral.js

password.js

quiz.js

scale.js

select.js

snippet.js

sort.js

survey.js

text.js

toggle.js

render.js

roles.js

state.js

styles.js

symbols.js

theme.js

timer.js

types

array.js

auth.js

boolean.js

index.js

number.js

string.js

utils.js

package.json

env-paths

index.d.ts

index.js

package.json

readme.md

escalade

dist

index.js

index.d.ts

package.json

readme.md

sync

index.d.ts

index.js

escape-string-regexp

index.d.ts

index.js

package.json

readme.md

eslint-scope

CHANGELOG.md

README.md

lib

definition.js

index.js

pattern-visitor.js

reference.js

referencer.js

scope-manager.js

scope.js

variable.js

package.json

eslint-utils

README.md

index.js

node_modules

eslint-visitor-keys

CHANGELOG.md

README.md

lib

index.js

visitor-keys.json

package.json

package.json

eslint-visitor-keys

CHANGELOG.md

README.md

lib

index.js

visitor-keys.json

package.json

eslint

CHANGELOG.md

README.md

bin

eslint.js

conf

category-list.json

config-schema.js

default-cli-options.js

eslint-all.js

eslint-recommended.js

replacements.json

lib

api.js

cli-engine

cli-engine.js

file-enumerator.js

formatters

checkstyle.js

codeframe.js

compact.js

html.js

jslint-xml.js

json-with-metadata.js

json.js

junit.js

stylish.js

table.js

tap.js

unix.js

visualstudio.js

hash.js

index.js

lint-result-cache.js

load-rules.js

xml-escape.js

cli.js

config

default-config.js

flat-config-array.js

flat-config-schema.js

rule-validator.js

eslint

eslint.js

index.js

init

autoconfig.js

config-file.js

config-initializer.js

config-rule.js

npm-utils.js

source-code-utils.js

linter

apply-disable-directives.js

code-path-analysis

code-path-analyzer.js

code-path-segment.js

code-path-state.js

code-path.js

debug-helpers.js

fork-context.js

id-generator.js

config-comment-parser.js

index.js

interpolate.js

linter.js

node-event-generator.js

report-translator.js

rule-fixer.js

rules.js

safe-emitter.js

source-code-fixer.js

timing.js

options.js

rule-tester

index.js

rule-tester.js

rules

accessor-pairs.js

array-bracket-newline.js

array-bracket-spacing.js

array-callback-return.js

array-element-newline.js

arrow-body-style.js

arrow-parens.js

arrow-spacing.js

block-scoped-var.js

block-spacing.js

brace-style.js

callback-return.js

camelcase.js

capitalized-comments.js

class-methods-use-this.js

comma-dangle.js

comma-spacing.js

comma-style.js

complexity.js

computed-property-spacing.js

consistent-return.js

consistent-this.js

constructor-super.js

curly.js

default-case-last.js

default-case.js

default-param-last.js

dot-location.js

dot-notation.js

eol-last.js

eqeqeq.js

for-direction.js

func-call-spacing.js

func-name-matching.js

func-names.js

func-style.js

function-call-argument-newline.js

function-paren-newline.js

generator-star-spacing.js

getter-return.js

global-require.js

grouped-accessor-pairs.js

guard-for-in.js

handle-callback-err.js

id-blacklist.js

id-denylist.js

id-length.js

id-match.js

implicit-arrow-linebreak.js

indent-legacy.js

indent.js

index.js

init-declarations.js

jsx-quotes.js

key-spacing.js

keyword-spacing.js

line-comment-position.js

linebreak-style.js

lines-around-comment.js

lines-around-directive.js

lines-between-class-members.js

max-classes-per-file.js

max-depth.js

max-len.js

max-lines-per-function.js

max-lines.js

max-nested-callbacks.js

max-params.js

max-statements-per-line.js

max-statements.js

multiline-comment-style.js

multiline-ternary.js

new-cap.js

new-parens.js

newline-after-var.js

newline-before-return.js

newline-per-chained-call.js

no-alert.js

no-array-constructor.js

no-async-promise-executor.js

no-await-in-loop.js

no-bitwise.js

no-buffer-constructor.js

no-caller.js

no-case-declarations.js

no-catch-shadow.js

no-class-assign.js

no-compare-neg-zero.js

no-cond-assign.js

no-confusing-arrow.js

no-console.js

no-const-assign.js

no-constant-condition.js

no-constructor-return.js

no-continue.js

no-control-regex.js

no-debugger.js

no-delete-var.js

no-div-regex.js

no-dupe-args.js

no-dupe-class-members.js

no-dupe-else-if.js

no-dupe-keys.js

no-duplicate-case.js

no-duplicate-imports.js

no-else-return.js

no-empty-character-class.js

no-empty-function.js

no-empty-pattern.js

no-empty.js

no-eq-null.js

no-eval.js

no-ex-assign.js

no-extend-native.js

no-extra-bind.js

no-extra-boolean-cast.js

no-extra-label.js

no-extra-parens.js

no-extra-semi.js

no-fallthrough.js

no-floating-decimal.js

no-func-assign.js

no-global-assign.js

no-implicit-coercion.js

no-implicit-globals.js

no-implied-eval.js

no-import-assign.js

no-inline-comments.js

no-inner-declarations.js

no-invalid-regexp.js

no-invalid-this.js

no-irregular-whitespace.js

no-iterator.js

no-label-var.js

no-labels.js

no-lone-blocks.js

no-lonely-if.js

no-loop-func.js

no-loss-of-precision.js

no-magic-numbers.js

no-misleading-character-class.js

no-mixed-operators.js

no-mixed-requires.js

no-mixed-spaces-and-tabs.js

no-multi-assign.js

no-multi-spaces.js

no-multi-str.js

no-multiple-empty-lines.js

no-native-reassign.js

no-negated-condition.js

no-negated-in-lhs.js

no-nested-ternary.js

no-new-func.js

no-new-object.js

no-new-require.js

no-new-symbol.js

no-new-wrappers.js

no-new.js

no-nonoctal-decimal-escape.js

no-obj-calls.js

no-octal-escape.js

no-octal.js

no-param-reassign.js

no-path-concat.js

no-plusplus.js

no-process-env.js

no-process-exit.js

no-promise-executor-return.js

no-proto.js

no-prototype-builtins.js

no-redeclare.js

no-regex-spaces.js

no-restricted-exports.js

no-restricted-globals.js

no-restricted-imports.js

no-restricted-modules.js

no-restricted-properties.js

no-restricted-syntax.js

no-return-assign.js

no-return-await.js

no-script-url.js

no-self-assign.js

no-self-compare.js

no-sequences.js

no-setter-return.js

no-shadow-restricted-names.js

no-shadow.js

no-spaced-func.js

no-sparse-arrays.js

no-sync.js

no-tabs.js

no-template-curly-in-string.js

no-ternary.js

no-this-before-super.js

no-throw-literal.js

no-trailing-spaces.js

no-undef-init.js

no-undef.js

no-undefined.js

no-underscore-dangle.js

no-unexpected-multiline.js

no-unmodified-loop-condition.js

no-unneeded-ternary.js

no-unreachable-loop.js

no-unreachable.js

no-unsafe-finally.js

no-unsafe-negation.js

no-unsafe-optional-chaining.js

no-unused-expressions.js

no-unused-labels.js

no-unused-vars.js

no-use-before-define.js

no-useless-backreference.js

no-useless-call.js

no-useless-catch.js

no-useless-computed-key.js

no-useless-concat.js

no-useless-constructor.js

no-useless-escape.js

no-useless-rename.js

no-useless-return.js

no-var.js

no-void.js

no-warning-comments.js

no-whitespace-before-property.js

no-with.js

nonblock-statement-body-position.js

object-curly-newline.js

object-curly-spacing.js

object-property-newline.js

object-shorthand.js

one-var-declaration-per-line.js

one-var.js

operator-assignment.js

operator-linebreak.js

padded-blocks.js

padding-line-between-statements.js

prefer-arrow-callback.js

prefer-const.js

prefer-destructuring.js

prefer-exponentiation-operator.js

prefer-named-capture-group.js

prefer-numeric-literals.js

prefer-object-spread.js

prefer-promise-reject-errors.js

prefer-reflect.js

prefer-regex-literals.js

prefer-rest-params.js

prefer-spread.js

prefer-template.js

quote-props.js

quotes.js

radix.js

require-atomic-updates.js

require-await.js

require-jsdoc.js

require-unicode-regexp.js

require-yield.js

rest-spread-spacing.js

semi-spacing.js

semi-style.js

semi.js

sort-imports.js

sort-keys.js

sort-vars.js

space-before-blocks.js

space-before-function-paren.js

space-in-parens.js

space-infix-ops.js

space-unary-ops.js

spaced-comment.js

strict.js

switch-colon-spacing.js

symbol-description.js

template-curly-spacing.js

template-tag-spacing.js

unicode-bom.js

use-isnan.js

utils

ast-utils.js

fix-tracker.js

keywords.js

lazy-loading-rule-map.js

patterns

letters.js

unicode

index.js

is-combining-character.js

is-emoji-modifier.js

is-regional-indicator-symbol.js

is-surrogate-pair.js

valid-jsdoc.js

valid-typeof.js

vars-on-top.js

wrap-iife.js

wrap-regex.js

yield-star-spacing.js

yoda.js

shared

ajv.js

ast-utils.js

config-validator.js

deprecation-warnings.js

logging.js

relative-module-resolver.js

runtime-info.js

string-utils.js

traverser.js

types.js

source-code

index.js

source-code.js

token-store

backward-token-comment-cursor.js

backward-token-cursor.js

cursor.js

cursors.js

decorative-cursor.js

filter-cursor.js

forward-token-comment-cursor.js

forward-token-cursor.js

index.js

limit-cursor.js

padded-token-cursor.js

skip-cursor.js

utils.js

messages

all-files-ignored.js

extend-config-missing.js

failed-to-read-json.js

file-not-found.js

no-config-found.js

plugin-conflict.js

plugin-invalid.js

plugin-missing.js

print-config-with-directory-path.js

whitespace-found.js

package.json

espree

CHANGELOG.md

README.md

espree.js

lib

ast-node-types.js

espree.js

features.js

options.js

token-translator.js

visitor-keys.js

node_modules

eslint-visitor-keys

CHANGELOG.md

README.md

lib

index.js

visitor-keys.json

package.json

package.json

esprima

README.md

bin

esparse.js

esvalidate.js

dist

esprima.js

package.json

esquery

README.md

dist

esquery.esm.js

esquery.esm.min.js

esquery.js

esquery.lite.js

esquery.lite.min.js

esquery.min.js

license.txt

node_modules

estraverse

README.md

estraverse.js

gulpfile.js

package.json

package.json

parser.js

esrecurse

README.md

esrecurse.js

gulpfile.babel.js

node_modules

estraverse

README.md

estraverse.js

gulpfile.js

package.json

package.json

estraverse

README.md

estraverse.js

gulpfile.js

package.json

esutils

README.md

lib

ast.js

code.js

keyword.js

utils.js

package.json

fast-deep-equal

README.md

es6

index.d.ts

index.js

react.d.ts

react.js

index.d.ts

index.js

package.json

react.d.ts

react.js

fast-json-stable-stringify

.eslintrc.yml

.github

FUNDING.yml

.travis.yml

README.md

benchmark

index.js

test.json

example

key_cmp.js

nested.js

str.js

value_cmp.js

index.d.ts

index.js

package.json

test

cmp.js

nested.js

str.js

to-json.js

fast-levenshtein

LICENSE.md

README.md

levenshtein.js

package.json

file-entry-cache

README.md

cache.js

changelog.md

package.json

find-up

index.d.ts

index.js

package.json

readme.md

flat-cache

README.md

changelog.md

package.json

src

cache.js

del.js

utils.js

flatted

.github

FUNDING.yml

README.md

SPECS.md

cjs

index.js

package.json

es.js

esm

index.js

index.js

min.js

package.json

php

flatted.php

types.d.ts

follow-redirects

README.md

http.js

https.js

index.js

node_modules

debug

.coveralls.yml

.travis.yml

CHANGELOG.md

README.md

karma.conf.js

node.js

package.json

src

browser.js

debug.js

index.js

node.js

ms

index.js

license.md

package.json

readme.md

package.json

fs-minipass

README.md

index.js

package.json

fs.realpath

README.md

index.js

old.js

package.json

function-bind

.jscs.json

.travis.yml

README.md

implementation.js

index.js

package.json

test

index.js

functional-red-black-tree

README.md

bench

test.js

package.json

rbtree.js

test

test.js

get-caller-file

LICENSE.md

README.md

index.d.ts

index.js

package.json

glob-parent

CHANGELOG.md

README.md

index.js

package.json

glob

README.md

changelog.md

common.js

glob.js

package.json

sync.js

globals

globals.json

index.d.ts

index.js

package.json

readme.md

has-flag

index.d.ts

index.js

package.json

readme.md

has

README.md

package.json

src

index.js

test

index.js

hasurl

README.md

index.js

package.json

ignore

CHANGELOG.md

README.md

index.d.ts

index.js

legacy.js

package.json

import-fresh

index.d.ts

index.js

package.json

readme.md

imurmurhash

README.md

imurmurhash.js

imurmurhash.min.js

package.json

inflight

README.md

inflight.js

package.json

inherits

README.md

inherits.js

inherits_browser.js

package.json

interpret

README.md

index.js

mjs-stub.js

package.json

is-core-module

CHANGELOG.md

README.md

core.json

index.js

package.json

test

index.js

is-extglob

README.md

index.js

package.json

is-fullwidth-code-point

index.d.ts

index.js

package.json

readme.md

is-glob

README.md

index.js

package.json

isarray

.travis.yml

README.md

component.json

index.js

package.json

test.js

isexe

README.md

index.js

mode.js

package.json

test

basic.js

windows.js

isobject

README.md

index.js

package.json

js-base64

LICENSE.md

README.md

base64.d.ts

base64.js

package.json

js-tokens

CHANGELOG.md

README.md

index.js

package.json

js-yaml

CHANGELOG.md

README.md

bin

js-yaml.js

dist

js-yaml.js

js-yaml.min.js

index.js

lib

js-yaml.js

js-yaml

common.js

dumper.js

exception.js

loader.js

mark.js

schema.js

schema

core.js

default_full.js

default_safe.js

failsafe.js

json.js

type.js

type

binary.js

bool.js

float.js

int.js

js

function.js

regexp.js

undefined.js

map.js

merge.js

null.js

omap.js

pairs.js

seq.js

set.js

str.js

timestamp.js

package.json

json-schema-traverse

.eslintrc.yml

.travis.yml

README.md

index.js

package.json

spec

.eslintrc.yml

fixtures

schema.js

index.spec.js

json-stable-stringify-without-jsonify

.travis.yml

example

key_cmp.js

nested.js

str.js

value_cmp.js

index.js

package.json

test

cmp.js

nested.js

replacer.js

space.js

str.js

to-json.js

levn

README.md

lib

cast.js

index.js

parse-string.js

package.json

line-column

README.md

lib

line-column.js

package.json

locate-path

index.d.ts

index.js

package.json

readme.md

lodash.clonedeep

README.md

index.js

package.json

lodash.merge

README.md

index.js

package.json

lodash.sortby

README.md

index.js

package.json

lodash.truncate

README.md

index.js

package.json

long

README.md

dist

long.js

index.js

package.json

src

long.js

lru-cache

README.md

index.js

package.json

minimatch

README.md

minimatch.js

package.json

minimist

.travis.yml

example

parse.js

index.js

package.json

test

all_bool.js

bool.js

dash.js

default_bool.js

dotted.js

kv_short.js

long.js

num.js

parse.js

parse_modified.js

proto.js

short.js

stop_early.js

unknown.js

whitespace.js

minipass

README.md

index.js

package.json

minizlib

README.md

constants.js

index.js

package.json

mkdirp

bin

cmd.js

usage.txt

index.js

package.json

moo

README.md

moo.js

package.json

ms

index.js

license.md

package.json

readme.md

natural-compare

README.md

index.js

package.json

near-mock-vm

assembly

__tests__

main.ts

context.ts

index.ts

outcome.ts

vm.ts

bin

bin.js

package.json

pkg

near_mock_vm.d.ts

near_mock_vm.js

package.json

vm

dist

cli.d.ts

cli.js

context.d.ts

context.js

index.d.ts

index.js

memory.d.ts

memory.js

runner.d.ts

runner.js

utils.d.ts

utils.js

index.js

near-sdk-as

as-pect.config.js

as_types.d.ts

asconfig.json

asp.asconfig.json

assembly

__tests__

as-pect.d.ts

assert.spec.ts

avl-tree.spec.ts

bignum.spec.ts

contract.spec.ts

contract.ts

data.txt

empty.ts

generic.ts

includeBytes.spec.ts

main.ts

max-heap.spec.ts

model.ts

near.spec.ts

persistent-set.spec.ts

promise.spec.ts

rollback.spec.ts

roundtrip.spec.ts

runtime.spec.ts

unordered-map.spec.ts

util.ts

utils.spec.ts

as_types.d.ts

bindgen.ts

index.ts

json.lib.ts

tsconfig.json

vm

__tests__

vm.include.ts

index.ts

compiler.js

imports.js

package.json

near-sdk-bindgen

README.md

assembly

index.ts

compiler.js

dist

JSONBuilder.d.ts

JSONBuilder.js

classExporter.d.ts

classExporter.js

index.d.ts

index.js

transformer.d.ts

transformer.js

typeChecker.d.ts

typeChecker.js

utils.d.ts

utils.js

index.js

package.json

near-sdk-core

README.md

asconfig.json

assembly

as_types.d.ts

base58.ts

base64.ts

bignum.ts

collections

avlTree.ts

index.ts

maxHeap.ts

persistentDeque.ts

persistentMap.ts

persistentSet.ts

persistentUnorderedMap.ts

persistentVector.ts

util.ts

contract.ts

datetime.ts

env

env.ts

index.ts

runtime_api.ts

index.ts

logging.ts

math.ts

promise.ts

storage.ts

tsconfig.json

util.ts

docs

assets

css

main.css

js

main.js

search.json

classes

_sdk_core_assembly_collections_avltree_.avltree.html

_sdk_core_assembly_collections_avltree_.avltreenode.html