repo

stringlengths 8

116

| tasks

stringlengths 8

117

| titles

stringlengths 17

302

| dependencies

stringlengths 5

372k

| readme

stringlengths 5

4.26k

| __index_level_0__

int64 0

4.36k

|

|---|---|---|---|---|---|

kagaminccino/LAVSE | ['denoising', 'speech enhancement', 'data compression'] | ['Lite Audio-Visual Speech Enhancement'] | main/build_model.py main/scoring.py main/prepare_path_list.py main/data_generator.py main/utils.py main/main.py LAVSE weights_init data_nor stft2spec AV_Dataset prepare_val_path_list prepare_test_path_list prepare_path_list prepare_train_path_list write_score scoring_dir scoring_file scoring_STOI scoring_PESQ prepare_scoring_list val cal_time test collate_fn model_detail_string train xavier_normal_ isinstance named_parameters mean std norm data_nor squeeze log10 permute extend replace extend replace extend replace prepare_val_path_list prepare_test_path_list prepare_train_path_list check_output float replace decode stoi rsplit read scoring_STOI scoring_PESQ sorted replace print glob scoring_file tqdm append extend replace writer time print writerow cal_time len scoring_dir close call open replace list arange min index_select stack zip enumerate column_stack val Visdom time line print cal_time zero_grad localtime av DataLoader asctime save model_detail_string range __name__ len av eval DataLoader train len time print name cal_time len localtime av DataLoader eval asctime __name__ mode | # Lite Audio-Visual Speech Enhancement (Interspeech 2020) ## Introduction This is the PyTorch implementation of [Lite Audio-Visual Speech Enhancement (LAVSE)](https://arxiv.org/abs/2005.11769). We have also put some preprocessed sample data (including enhanced results) in this repository. The dataset of TMSV (Taiwan Mandarin speech with video) used in LAVSE is released [here](https://bio-asplab.citi.sinica.edu.tw/Opensource.html#TMSV). Please cite the following paper if you find the codes useful in your research. ``` @inproceedings{chuang2020lite, title={Lite Audio-Visual Speech Enhancement}, author={Chuang, Shang-Yi and Tsao, Yu and Lo, Chen-Chou and Wang, Hsin-Min}, | 2,600 |

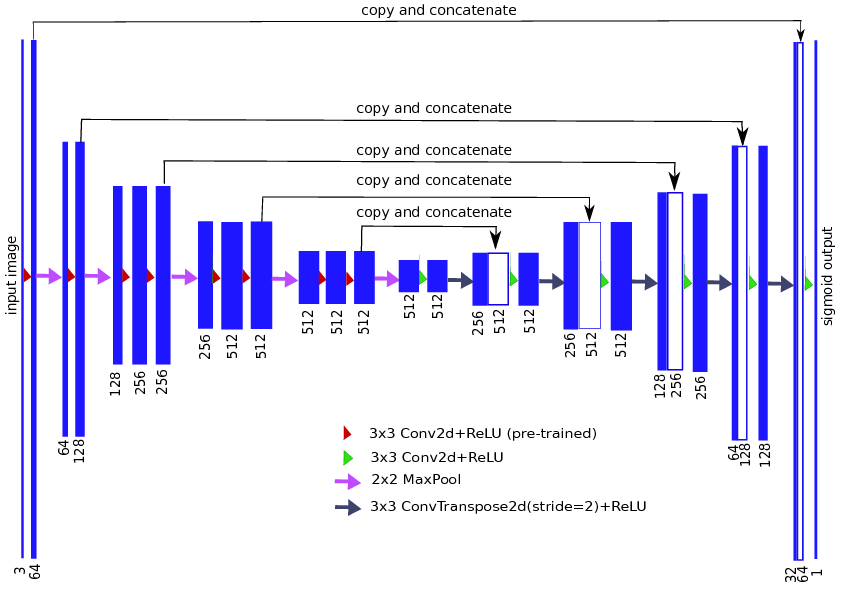

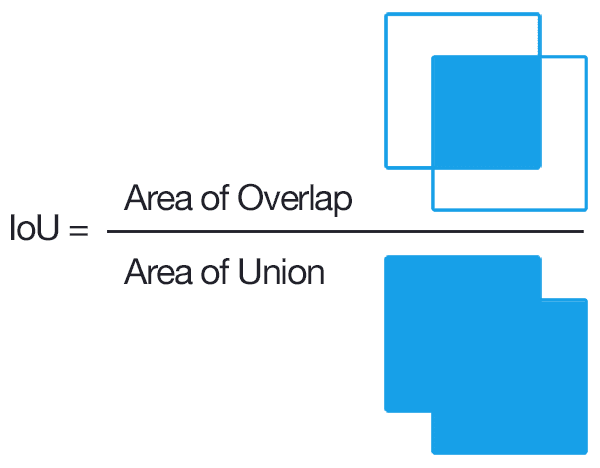

kaichoulyc/tgs-salts | ['semantic segmentation'] | ['TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation'] | lovasz_losses.py lovasz_grad flatten_binary_scores iou binary_xloss xloss lovasz_hinge_flat StableBCELoss lovasz_hinge lovasz_softmax_flat mean flatten_probas lovasz_softmax iou_binary cumsum sum len mean zip append float sum list map zip append float sum range mean lovasz_hinge_flat data lovasz_grad relu Variable sort dot float view Variable float flatten_binary_scores mean lovasz_softmax_flat data lovasz_grad Variable sort size dot append float abs range size view filterfalse isnan iter next enumerate | # AlbuNet for TGS salts Identification Albunet is moification of standart unet [arXiv paper](https://arxiv.org/abs/1801.05746) made by [Vladimir Iglovikov, Alexey Shvets](https://github.com/ternaus/TernausNet)  This net was used by me for TGS salts Identification chalange and showed quite good results even without augmentations and long learning. For evaluation was used IoU. [Score](https://www.kaggle.com/c/tgs-salt-identification-challenge/leaderboard): 0.69 on public and 0.72 on private  # Reps used for this project: https://github.com/ternaus/TernausNet https://github.com/bermanmaxim/LovaszSoftmax | 2,601 |

kaidi-jin/backdoor_samples_detection | ['adversarial defense'] | ['A Unified Framework for Analyzing and Detecting Malicious Examples of DNN Models'] | utils/model_util.py utils/backdoor_data_util.py attack/generate_backdoor_samples.py model_mutation/SPRT_detector.py injection/injection_utils.py utils/train_data_util.py model_mutation/gaussian_fuzzing.py attack/cw_attack.py injection/injection_model.py main tutorial_cw infect_one_image filter_part generate_backdoor_data infect_by_trigger_img inject_backdoor DataGenerator injection_func mask_pattern_func infect_X BackdoorCall construct_mask_corner construct_mask_box model_structure gaussian_fuzzing get_threshold_relax Trans_csv each_model_predict_result detect calculate_sprt_ratio main Keras_Model load_backdoor_data load_keras_model load_mnist_model load_gtsrb_model load_face_dataset load_mnist_dataset load_gtsrb_dataset load_train_dataset load_h5_dataset CarliniWagnerL2 where set_random_seed save DEBUG argmax KerasModelWrapper GPUOptions Session max str list set_session load_model exit set_log_level shape append sum range predict format close choice mean load_train_dataset generate_np print AccuracyReport min repeat array len tutorial_cw add_argument check_installation ArgumentParser parse_args copy append zeros imread range deepcopy range imread infect_one_image copy list zeros_like print makedirs exit choice infect_by_trigger_img shape load_train_dataset save array range infect_X len copy choice mask_pattern_func injection_func copy remove format evaluate DataGenerator print generate_data fit_generator BackdoorCall load_train_dataset evaluate_generator save load_keras_model exists len append construct_mask_corner zeros clear_session to_categorical save_weights set_weights str exit shape get_weights range normal Dense load_weights time model_structure isinstance evaluate print zfill makedirs print exit writer join time list argmax format clear_session print writerow Keras_Model listdir predict open int time log calculate_sprt_ratio len list map to_csv as_matrix zip DataFrame read_csv values print len exit array Model Input Adam compile compile Sequential Adam add Dense MaxPooling2D Conv2D Flatten Dropout reshape to_categorical astype load_data print exit load_h5_dataset print to_categorical File exit vstack | # BE_detection ## About Code to the paper ["A Unified Framework for Analyzing and Detecting Malicious Examples of DNN Models"](https://arxiv.org/abs/2006.14871). The model mutation method based on the [code](https://github.com/dgl-prc/m_testing_adversatial_sample) for adversarial sample detection. ## Repo Structure - `data:` Training datasets and malicious data. - `model:` Trojaned Backdoor models. - `injecting backdoor:` To train the backdoor model. - `attack:` generate the adversarial example by CW attack and backdoor smaples. | 2,602 |

kaidic/LDAM-DRW | ['long tail learning', 'long tail learning with class descriptors'] | ['Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss'] | losses.py models/resnet_cifar.py imbalance_cifar.py models/__init__.py utils.py cifar_train.py validate adjust_learning_rate main_worker main train IMBALANCECIFAR10 IMBALANCECIFAR100 FocalLoss LDAMLoss focal_loss prepare_folders AverageMeter accuracy ImbalancedDatasetSampler save_checkpoint plot_confusion_matrix calc_confusion_mat resnet110 resnet20 ResNet_s LambdaLayer resnet44 test NormedLinear resnet1202 resnet56 resnet32 _weights_init BasicBlock seed join prepare_folders warn device_count manual_seed main_worker parse_args gpu validate store_name SGD warn DataLoader adjust_learning_rate root_log save_checkpoint arch cuda max open set_device ImbalancedDatasetSampler load_state_dict IMBALANCECIFAR10 to CIFAR100 sum range SummaryWriter format Compose start_epoch lr resume CIFAR10 power IMBALANCECIFAR100 get_cls_num_list flush load join add_scalar print write parameters isfile train epochs array gpu len model zero_grad cuda update format size avg item flush enumerate time criterion backward print add_scalar AverageMeter write accuracy step gpu len eval AverageMeter param_groups lr exp join print store_name astype eval savefig plot_confusion_matrix root_log sum diag format subplots text get_xticklabels confusion_matrix colorbar set tight_layout imshow setp max range print mkdir copyfile replace save weight kaiming_normal_ __name__ print | ## Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, Tengyu Ma _________________ This is the official implementation of LDAM-DRW in the paper [Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss](https://arxiv.org/pdf/1906.07413.pdf) in PyTorch. ### Dependency The code is built with following libraries: - [PyTorch](https://pytorch.org/) 1.2 - [TensorboardX](https://github.com/lanpa/tensorboardX) - [scikit-learn](https://scikit-learn.org/stable/) ### Dataset | 2,603 |

kailigo/SN_loss_for_reID | ['person re identification'] | ['Support Neighbor Loss for Person Re-Identification'] | src/data/dataset/Prefetcher.py src/data/utils/utils.py src/data/utils/dataset_utils.py src/data_preparation/gen_splits.py src/data_preparation/combine_trainval_sets.py src/data_preparation/transform_cuhk03_official_split.py src/data_preparation/reformat_cuhk01_486.py src/data_preparation/transform_cub.py src/model/myModel.py src/data_preparation/transform_cuhk03.py src/data_preparation/reformat_mars.py src/data/utils/visualize_rank_list.py src/data/utils/metric.py src/data/dataset/Dataset.py src/data_preparation/transform_cuhk01.py src/data_preparation/transform_viper.py src/data_preparation/transform_mars.py src/data_preparation/transform_market1501.py src/data_preparation/transform_duke.py src/model/Model.py src/data/utils/visualization.py src/data/dataset/TestSet.py src/data/dataset/__init__.py src/losses/SN_loss.py src/data_preparation/reformat_cuhk01.py src/data/dataset/TrainSet.py src/data/utils/re_ranking.py src/data_preparation/reformat_viper.py src/main.py src/data/utils/distance.py src/data_preparation/reformat_cuhk03_official_split.py src/data_preparation/reformat_cub.py src/data/dataset/PreProcessImage.py src/data_preparation/combine_image_distractor_market.py src/model/resnet.py Config main ExtractFeature Dataset Enqueuer Prefetcher Counter PreProcessIm TestSet TrainSet create_dataset get_im_names parse_im_name move_ims partition_train_val_set normalize compute_dist mean_ap _unique_sample cmc re_ranking load_pickle measure_time tight_float_str may_set_mode save_pickle save_mat find_index adjust_lr_exp set_devices may_transfer_optims set_seed adjust_lr_staircase transfer_optim_state may_transfer_modules_optims load_state_dict is_iterable save_ckpt TransferModulesOptims get_model_wrapper time_str TransferVarTensor to_scalar str2bool may_make_dir set_devices_for_ml load_ckpt RunningAverageMeter AverageMeter ReDirectSTD print_array RecentAverageMeter add_border get_rank_list save_rank_list_to_im read_im make_im_grid save_im Config main ExtractFeature get_im_names combine_trainval_sets move_ims write_json mkdir_if_missing mkdir_if_nonexist read_json move_images_to_dir mkdir_if_nonexist read_json move_images_to_dir main_labeled move_images_to_dir Mars get_names init_dataset mkdir_if_nonexist read_json move_images_to_dir transform parse_original_im_name save_images transform parse_original_im_name save_images old_main transform save_images transform parse_original_im_name save_images transform parse_original_im_name save_images transform parse_original_im_name save_images transform parse_original_im_name save_images transform parse_original_im_name save_images main re_ranking_retrieval euclidean_dist SN_LOSS Model Model ResNet resnet50 Bottleneck resnet152 conv3x3 remove_fc resnet34 resnet18 BasicBlock resnet101 Config staircase_decay_multiply_factor sys_device_ids model_w zero_grad DataParallel exp_decay_at_epoch may_set_mode dataset base_lr cuda seed adjust_lr_exp total_epochs set_devices set_seed adjust_lr_staircase stderr_file Adam log_to_file pprint Model append normalize normalize_feature save_ckpt range update SummaryWriter __dict__ test resume stdout_file float to_scalar long add_scalars ids2labels NLLLoss time load_ckpt criterion backward print Variable AverageMeter test_full staircase_decay_at_epochs dict ReDirectSTD parameters TVT only_test create_dataset next_batch step ckpt_file len update join load_pickle list format print ospeu set dict sum TrainSet TestSet len int glob join array join basename defaultdict format copy append parse_im_name seed int list remove arange setdiff1d sort hstack shuffle set flatten dict unique append array len norm sqrt T normalize matmul zeros list items choice defaultdict cumsum argsort shape _unique_sample zip append zeros range enumerate len format print average_precision_score argsort shape __version__ zeros range minimum exp zeros_like concatenate transpose astype float32 mean int32 unique append zeros sum max range len abspath dirname may_make_dir dict savemat is_tensor isinstance Parameter items list isinstance cpu cuda transfer_optim_state state Optimizer isinstance format Optimizer Module isinstance print state transfer_optim_state cpu cuda __name__ TransferModulesOptims TransferVarTensor find_index list remove TransferModulesOptims sort set TransferVarTensor append load format print load_state_dict zip dict dirname abspath save may_make_dir data items list isinstance print set copy_ keys state_dict eval Module train isinstance makedirs seed format enabled print manual_seed print enumerate param_groups float rstrip print find_index rstrip param_groups print print time format ndarray isinstance copy dtype ndarray isinstance astype enumerate argsort append zip resize transpose asarray open ospdn transpose may_make_dir save add_border read_im zip append save_im make_im_grid len TMO save ExtractFeature str set_trace save_rank_list_to_im load_state_dict rank_list_size format RandomState zip enumerate load get_rank_list compute_dist argsort set_feat_func int basename sort append split list sort set dict zip ospj range len save_pickle load_pickle list format print sort zip ospj range move_ims may_make_dir makedirs dirname mkdir_if_missing makedirs join format copy print range len load join int format str move_images_to_dir glob print len makedirs append range open join join print get_im_names sort cumsum set dict enumerate dirname abspath append save_pickle array move_ims may_make_dir len join list format save_images partition_train_val_set print sort set dict zip range save_pickle len int add_argument zip_file abspath ArgumentParser transform expanduser save_dir parse_args dump format File write zip chain range load_pickle dirname abspath exists may_make_dir minimum exp zeros_like transpose astype float32 mean int32 unique append zeros sum max range len size expand t sqrt addmm_ list rand IntTensor mm deepcopy list items startswith load_url ResNet remove_fc load_state_dict load_url ResNet remove_fc load_state_dict load_url ResNet remove_fc load_state_dict load_url ResNet remove_fc load_state_dict load_url ResNet remove_fc load_state_dict | # Support Neighbor Loss for Person Re-Identification This repository is for the paper introduced in the following paper Kai Li, Zhengming Ding, Kunpeng Li, Yulun Zhang, and Yun Fu, "Support Neighbor Loss for Person Re-Identification", ACM Multimedia (ACM MM) 2018, [[arXiv]](https://arxiv.org/abs/1808.06030) ## Environment Python 3 + PyTorch 3.0 ## Data preparation Please refer [this repo](https://github.com/huanghoujing/person-reid-triplet-loss-baseline) for the data preparation and modify the data locations accordingly in the train.sh and test.sh files. ## Train ``` sh ./train.sh | 2,604 |

kaiolae/UnityMLAgents | ['unity'] | ['Unity: A General Platform for Intelligent Agents'] | ml-agents/mlagents/envs/communicator_objects/environment_parameters_proto_pb2.py ml-agents/tests/trainers/test_trainer_controller.py ml-agents/mlagents/trainers/buffer.py ml-agents/mlagents/trainers/bc/online_trainer.py ml-agents/mlagents/envs/communicator_objects/unity_rl_initialization_input_pb2.py ml-agents/mlagents/envs/communicator_objects/brain_parameters_proto_pb2.py ml-agents/tests/envs/test_envs.py ml-agents/mlagents/envs/communicator_objects/__init__.py ml-agents/mlagents/envs/rpc_communicator.py ml-agents/mlagents/trainers/ppo/__init__.py gym-unity/gym_unity/envs/__init__.py ml-agents/mlagents/trainers/tensorflow_to_barracuda.py ml-agents/mlagents/envs/communicator_objects/agent_action_proto_pb2.py ml-agents/mlagents/trainers/learn.py gym-unity/gym_unity/envs/unity_env.py ml-agents/mlagents/trainers/bc/trainer.py ml-agents/mlagents/trainers/policy.py ml-agents/tests/trainers/test_learn.py ml-agents/mlagents/envs/communicator_objects/unity_rl_initialization_output_pb2.py ml-agents/tests/trainers/test_curriculum.py ml-agents/mlagents/trainers/meta_curriculum.py ml-agents/mlagents/trainers/curriculum.py ml-agents/mlagents/trainers/ppo/models.py ml-agents/mlagents/envs/communicator_objects/space_type_proto_pb2.py ml-agents/mlagents/envs/communicator_objects/unity_output_pb2.py ml-agents/mlagents/envs/communicator_objects/unity_input_pb2.py ml-agents/tests/trainers/test_demo_loader.py gym-unity/gym_unity/__init__.py ml-agents/mlagents/trainers/ppo/policy.py ml-agents/mlagents/envs/communicator_objects/engine_configuration_proto_pb2.py ml-agents/mlagents/envs/socket_communicator.py gym-unity/setup.py ml-agents/mlagents/trainers/trainer_controller.py ml-agents/mlagents/envs/communicator_objects/agent_info_proto_pb2.py ml-agents/mlagents/envs/communicator_objects/unity_to_external_pb2_grpc.py ml-agents/tests/trainers/test_ppo.py ml-agents/mlagents/envs/brain.py ml-agents/mlagents/trainers/bc/policy.py ml-agents/tests/trainers/test_bc.py ml-agents/mlagents/trainers/demo_loader.py ml-agents/tests/mock_communicator.py ml-agents/mlagents/envs/communicator_objects/unity_message_pb2.py ml-agents/mlagents/trainers/models.py ml-agents/mlagents/trainers/__init__.py ml-agents/mlagents/envs/communicator_objects/resolution_proto_pb2.py ml-agents/mlagents/envs/communicator_objects/unity_to_external_pb2.py ml-agents/mlagents/envs/communicator_objects/unity_rl_input_pb2.py ml-agents/mlagents/envs/communicator_objects/demonstration_meta_proto_pb2.py ml-agents/tests/trainers/test_buffer.py ml-agents/mlagents/trainers/trainer.py ml-agents/mlagents/envs/communicator.py ml-agents/tests/envs/test_rpc_communicator.py ml-agents/setup.py ml-agents/mlagents/envs/communicator_objects/unity_rl_output_pb2.py ml-agents/mlagents/envs/__init__.py ml-agents/mlagents/trainers/bc/__init__.py gym-unity/tests/test_gym.py ml-agents/mlagents/envs/exception.py ml-agents/mlagents/envs/environment.py ml-agents/mlagents/trainers/bc/models.py ml-agents/mlagents/trainers/barracuda.py ml-agents/mlagents/envs/communicator_objects/command_proto_pb2.py ml-agents/mlagents/trainers/bc/offline_trainer.py ml-agents/mlagents/trainers/exception.py ml-agents/tests/trainers/test_meta_curriculum.py ml-agents/mlagents/trainers/ppo/trainer.py ml-agents/mlagents/envs/communicator_objects/header_pb2.py ml-agents/tests/trainers/test_barracuda_converter.py UnityGymException ActionFlattener UnityEnv create_mock_vector_braininfo test_gym_wrapper test_multi_agent test_branched_flatten setup_mock_unityenvironment create_mock_brainparams BrainInfo BrainParameters Communicator UnityEnvironment UnityWorkerInUseException UnityException UnityTimeOutException UnityEnvironmentException UnityActionException RpcCommunicator UnityToExternalServicerImplementation SocketCommunicator UnityToExternalServicer UnityToExternalStub add_UnityToExternalServicer_to_server BarracudaWriter compress Build sort lstm write fuse_batchnorm_weights trim gru Model summary Struct parse_args to_json rnn BufferException Buffer Curriculum make_demo_buffer load_demonstration demo_to_buffer CurriculumError MetaCurriculumError TrainerError run_training prepare_for_docker_run init_environment try_create_meta_curriculum main load_config MetaCurriculum LearningModel Policy UnityPolicyException get_layer_shape pool_to_HW flatten process_layer process_model basic_lstm get_attr ModelBuilderContext order_by get_epsilon get_tensor_dtype replace_strings_in_list get_tensor_dims by_op remove_duplicates_from_list by_name convert strides_to_HW get_tensor_data gru UnityTrainerException Trainer TrainerController BehavioralCloningModel OfflineBCTrainer OnlineBCTrainer BCPolicy BCTrainer PPOModel PPOPolicy PPOTrainer get_gae discount_rewards MockCommunicator test_initialization test_reset test_close test_step test_handles_bad_filename test_rpc_communicator_checks_port_on_create test_rpc_communicator_create_multiple_workers test_rpc_communicator_close test_barracuda_converter test_dc_bc_model test_cc_bc_model test_visual_cc_bc_model test_bc_policy_evaluate dummy_config test_visual_dc_bc_model assert_array test_buffer location default_reset_parameters test_init_curriculum_bad_curriculum_raises_error test_init_curriculum_happy_path test_increment_lesson test_get_config test_load_demo basic_options test_docker_target_path test_run_training test_init_meta_curriculum_happy_path test_increment_lessons_with_reward_buff_sizes default_reset_parameters MetaCurriculumTest test_increment_lessons measure_vals reward_buff_sizes test_set_all_curriculums_to_lesson_num test_get_config test_set_lesson_nums test_init_meta_curriculum_bad_curriculum_folder_raises_error more_reset_parameters test_rl_functions test_ppo_model_dc_vector_curio test_ppo_model_dc_vector_rnn test_ppo_model_cc_vector_rnn test_ppo_policy_evaluate test_ppo_model_cc_visual dummy_config test_ppo_model_dc_vector test_ppo_model_dc_visual test_ppo_model_cc_visual_curio test_ppo_model_dc_visual_curio test_ppo_model_cc_vector_curio test_ppo_model_cc_vector test_initialize_online_bc_trainer basic_trainer_controller assert_bc_trainer_constructed test_initialize_trainer_parameters_uses_defaults dummy_bad_config test_take_step_adds_experiences_to_trainer_and_trains test_initialize_trainer_parameters_override_defaults test_initialize_invalid_trainer_raises_exception test_start_learning_trains_until_max_steps_then_saves dummy_config dummy_offline_bc_config_with_override test_initialization_seed test_initialize_ppo_trainer test_start_learning_updates_meta_curriculum_lesson_number assert_ppo_trainer_constructed test_take_step_resets_env_on_global_done test_start_learning_trains_forever_if_no_train_model dummy_offline_bc_config trainer_controller_with_take_step_mocks trainer_controller_with_start_learning_mocks dummy_online_bc_config create_mock_vector_braininfo sample UnityEnv setup_mock_unityenvironment step create_mock_brainparams create_mock_vector_braininfo UnityEnv setup_mock_unityenvironment step create_mock_brainparams setup_mock_unityenvironment create_mock_vector_braininfo create_mock_brainparams UnityEnv Mock list Mock array range method_handlers_generic_handler add_generic_rpc_handlers join isdir print replaceFilenameExtension add_argument exit verbose source_file ArgumentParser target_file sqrt topologicalSort list hasattr layers addEdge Graph print inputs set len list hasattr layers print filter match trim_model compile data layers print tensors float16 replace layers dumps layers isinstance print tensors inputs zip to_json globals Build tanh mad tanh mul Build concat add sigmoid sub mad _ tanh mul Build concat add sigmoid mad Buffer reset_local_buffers number_visual_observations append_update_buffer append range enumerate make_demo_buffer load_demonstration number_steps read suffix BrainParametersProto from_agent_proto DemonstrationMetaProto ParseFromString AgentInfoProto append from_proto _DecodeVarint32 start_learning int str format external_brain_names TrainerController put init_environment try_create_meta_curriculum load_config list MetaCurriculum keys _resetParameters chmod format basename isdir glob copyfile copytree prepare_for_docker_run replace int Process getLogger print run_training start Queue info append randint docopt range endswith len HasField hasattr get_attr tensor_shape ndarray isinstance shape int_val bool_val float_val ListFields name ndarray isinstance str tensor_content ndarray product isinstance get_tensor_dtype print get_tensor_dims unpack int_val bool_val array float_val enter append add set name find_tensor_by_name split name lstm find_tensor_by_name find_forget_bias split get_layer_shape id Struct tensor hasattr name patch_data input_shapes out_shapes input get_attr append replace_strings_in_list tensors astype op zip enumerate print float32 patch_data_fn model_tensors map_ignored_layer_to_its_input co_argcount len items list get_tensors hasattr name print process_layer eval ModelBuilderContext layers verbose Struct process_model open compress node GraphDef Model dims_to_barracuda_shape insert get_tensor_dims inputs ParseFromString cleanup_layers read memories print sort write trim summary list zeros_like size reversed range asarray tolist discount_rewards UnityEnvironment close MockCommunicator UnityEnvironment close MockCommunicator reset str local_done print agents step close reset MockCommunicator UnityEnvironment len UnityEnvironment close MockCommunicator close RpcCommunicator close RpcCommunicator close RpcCommunicator join remove _get_candidate_names convert _get_default_tempdir dirname abspath isfile next BCPolicy evaluate close reset MockCommunicator reset_default_graph UnityEnvironment reset_default_graph reset_default_graph reset_default_graph reset_default_graph flatten list range len get_batch Buffer assert_array append_update_buffer make_mini_batch append reset_agent array range Curriculum Curriculum Curriculum make_demo_buffer load_demonstration dirname abspath MagicMock basic_options MagicMock MetaCurriculum assert_has_calls MetaCurriculumTest increment_lessons assert_called_with MetaCurriculumTest increment_lessons assert_called_with assert_not_called MetaCurriculumTest set_all_curriculums_to_lesson_num MetaCurriculumTest dict update MetaCurriculumTest evaluate close reset MockCommunicator PPOPolicy reset_default_graph UnityEnvironment reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph reset_default_graph assert_array_almost_equal array discount_rewards dummy_offline_bc_config TrainerController assert_called_with BrainInfoMock basic_trainer_controller assert_bc_trainer_constructed dummy_offline_bc_config summaries_dir model_path keep_checkpoints BrainInfoMock basic_trainer_controller assert_bc_trainer_constructed summaries_dir model_path keep_checkpoints dummy_offline_bc_config_with_override BrainInfoMock basic_trainer_controller assert_bc_trainer_constructed summaries_dir model_path keep_checkpoints dummy_online_bc_config BrainInfoMock basic_trainer_controller assert_ppo_trainer_constructed summaries_dir dummy_config model_path keep_checkpoints initialize_trainers BrainInfoMock dummy_bad_config basic_trainer_controller MagicMock basic_trainer_controller start_learning assert_called_once MagicMock assert_not_called dummy_config trainer_controller_with_start_learning_mocks assert_called_once_with start_learning assert_called_once MagicMock dummy_config trainer_controller_with_start_learning_mocks assert_called_once_with start_learning MagicMock dummy_config trainer_controller_with_start_learning_mocks assert_called_once_with lesson MagicMock basic_trainer_controller take_step assert_called_once MagicMock trainer_controller_with_take_step_mocks assert_called_once MagicMock take_step assert_not_called trainer_controller_with_take_step_mocks assert_called_once_with | <img src="docs/images/unity-wide.png" align="middle" width="3000"/> <img src="docs/images/image-banner.png" align="middle" width="3000"/> # Unity ML-Agents Toolkit (Beta) **The Unity Machine Learning Agents Toolkit** (ML-Agents) is an open-source Unity plugin that enables games and simulations to serve as environments for training intelligent agents. Agents can be trained using reinforcement learning, imitation learning, neuroevolution, or other machine learning methods through a simple-to-use Python API. We also provide implementations (based on TensorFlow) of state-of-the-art algorithms to enable game developers and hobbyists to easily train intelligent agents for 2D, 3D and VR/AR games. These trained agents can be | 2,605 |

kaiqiangh/extracting-video-features-ResNeXt | ['action recognition'] | ['Can Spatiotemporal 3D CNNs Retrace the History of 2D CNNs and ImageNet?'] | generate_result_video/generate_result_video.py train.py validation.py models/pre_act_resnet.py models/resnext.py temporal_transforms.py spatial_transforms.py test.py dataset.py models/wide_resnet.py opts.py mean.py models/densenet.py classify.py main.py models/resnet.py model.py classify_video Video get_class_labels load_annotation_data video_loader make_dataset accimage_loader get_default_image_loader get_default_video_loader pil_loader get_video_names_and_annotations get_mean generate_model parse_opts CenterCrop ToTensor Compose Scale Normalize LoopPadding TemporalCenterCrop calculate_video_results test get_fps get_fine_tuning_parameters DenseNet densenet201 densenet169 densenet264 _DenseLayer _DenseBlock _Transition densenet121 conv3x3x3 get_fine_tuning_parameters resnet50 downsample_basic_block resnet152 PreActivationBasicBlock resnet34 resnet200 PreActivationBottleneck resnet18 PreActivationResNet resnet101 conv3x3x3 get_fine_tuning_parameters ResNet downsample_basic_block resnet50 Bottleneck resnet152 resnet34 resnet200 resnet18 resnet10 BasicBlock resnet101 ResNeXtBottleneck conv3x3x3 get_fine_tuning_parameters resnet50 downsample_basic_block ResNeXt resnet152 resnet101 conv3x3x3 get_fine_tuning_parameters WideBottleneck resnet50 downsample_basic_block WideResNet Video Compose DataLoader sample_duration LoopPadding join format image_loader append exists get_default_image_loader append items list format deepcopy list IntTensor append listdir range len densenet169 densenet201 resnet50 densenet264 DataParallel resnet101 resnet34 resnet200 resnet18 resnet152 resnet10 cuda densenet121 parse_args set_defaults add_argument ArgumentParser topk size mean stack append range update time format model print Variable cpu AverageMeter size eval calculate_video_results append range enumerate len decode format communicate len round float listdir Popen find DenseNet DenseNet DenseNet DenseNet append format range named_parameters data isinstance FloatTensor Variable zero_ avg_pool3d cuda cat PreActivationResNet PreActivationResNet PreActivationResNet PreActivationResNet PreActivationResNet PreActivationResNet ResNet ResNet ResNet ResNet ResNet ResNet ResNet ResNeXt ResNeXt ResNeXt WideResNet | # extracting-video-features-ResNeXt Extracting video features from pre-trained ResNeXt model Credit: [repo](https://github.com/kenshohara/video-classification-3d-cnn-pytorch) # Video Classification Using 3D ResNet This is a pytorch code for video (action) classification using 3D ResNet trained by [this code](https://github.com/kenshohara/3D-ResNets-PyTorch). The 3D ResNet is trained on the Kinetics dataset, which includes 400 action classes. This code uses videos as inputs and outputs class names and predicted class scores for each 16 frames in the score mode. In the feature mode, this code outputs features of 512 dims (after global average pooling) for each 16 frames. **Torch (Lua) version of this code is available [here](https://github.com/kenshohara/video-classification-3d-cnn).** ## Requirements | 2,606 |

kaiwang960112/Challenge-condition-FER-dataset | ['facial expression recognition'] | ['Region Attention Networks for Pose and Occlusion Robust Facial Expression Recognition'] | AffectNet_dir/simple_sample/part_attention.py AffectNet_dir/simple_sample/train_attention_part_face.py FERplus_dir/part_attention_sample.py FERplus_dir/val_part_attention_sample.py AffectNet_dir/unbalanced_sample_train/part_attentioon_sample_fly.py FERplus_dir/part_attention.py FERplus_dir/train_attention_rank_loss.py AffectNet_dir/unbalanced_sample_train/part_attention.py AffectNet_dir/simple_sample/part_attentioon_sample_fly.py AffectNet_dir/unbalanced_sample_train/sampler.py FERplus_dir/attention_rank_loss.py FERplus_dir/test_rank_loss_attention.py AffectNet_dir/unbalanced_sample_train/train_attention_part_face.py norm_angle ResNet resnet50 Bottleneck resnet152 sigmoid conv3x3 MyLoss resnet34 resnet18 BasicBlock resnet101 load_imgs CaffeCrop MsCelebDataset norm_angle ResNet resnet50 Bottleneck resnet152 sigmoid conv3x3 MyLoss resnet34 resnet18 BasicBlock resnet101 load_imgs CaffeCrop MsCelebDataset norm_angle ResNet resnet50 Bottleneck resnet152 sigmoid conv3x3 MyLoss resnet34 resnet18 BasicBlock resnet101 norm_angle ResNet resnet50 Bottleneck resnet152 sigmoid conv3x3 resnet34 resnet18 BasicBlock resnet101 load_imgs CaffeCrop MsCelebDataset main get_val_data load_imgs CaffeCrop MsCelebDataset sigmoid abs load_url ResNet load_state_dict load_url ResNet load_state_dict ResNet load_url ResNet load_state_dict load_url ResNet load_state_dict list Compose img_dir_val DataLoader CaffeCrop MsCelebDataset model get_val_data resnet34 argmax cuda open str list load_state_dict resnet18 parse_args resnet101 resnet50 eval softmax float enumerate load join int print Variable write split numpy find | ## I have uploaded the occlusion- and pose-RAFDB list, you can see at RAF_DB_dir. Thank you for your kindly waiting. ## Our manuscript has been accepted by Transactions on Image Processing as a REGULAR paper! [link](https://arxiv.org/pdf/1905.04075.pdf) # Region Attention Networks for Pose and Occlusion Robust Facial Expression Recognition Kai Wang, Xiaojiang Peng, Jianfei Yang, Debin Meng, and Yu Qiao Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences {kai.wang, xj.peng, db.meng, yu.qiao}@siat.ac.cn  ## Abstract Occlusion and pose variations, which can change facial appearance significantly, are among two major obstacles for automatic Facial Expression Recognition (FER). Though automatic FER has made substantial progresses in the past few decades, occlusion-robust and pose-invariant issues of FER have received relatively less attention, especially in real-world scenarios.Thispaperaddressesthereal-worldposeandocclusionrobust FER problem with three-fold contributions. First, to stimulate the research of FER under real-world occlusions and variant poses, we build several in-the-wild facial expression datasets with manual annotations for the community. Second, we propose a novel Region Attention Network (RAN), to adaptively capture the importance of facial regions for occlusion and pose variant FER. The RAN aggregates and embeds varied number of region features produced by a backbone convolutional neural network into a compact fixed-length representation. Last, inspired by the fact that facial expressions are mainly defined by facial action units, we propose a region biased loss to encourage high attentionweightsforthemostimportantregions.Weexamineour RAN and region biased loss on both our built test datasets and four popular datasets: FERPlus, AffectNet, RAF-DB, and SFEW. Extensive experiments show that our RAN and region biased loss largely improve the performance of FER with occlusion and variant pose. Our methods also achieve state-of-the-art results on FERPlus, AffectNet, RAF-DB, and SFEW.  | 2,607 |

kaiwang960112/Self-Cure-Network | ['facial expression recognition'] | ['Suppressing Uncertainties for Large-Scale Facial Expression Recognition'] | src/train.py src/image_utils.py test.py generate.py Res18Feature color2gray add_gaussian_noise flip_image RafDataSet initialize_weight_goog run_training Res18Feature parse_args normal uint8 astype shape COLOR_RGB2GRAY copy cvtColor add_argument ArgumentParser isinstance fill_ size out_channels Conv2d sqrt normal_ zero_ uniform_ BatchNorm2d Linear zero_grad SGD pretrained DataLoader margin_1 numpy modules raf_path cuda max topk initialize_weight_goog squeeze Adam epochs res18 load_state_dict parse_args margin_2 sum CrossEntropyLoss range state_dict size Compose mean lr softmax Res18Feature float enumerate load int RafDataSet ExponentialLR criterion backward print __len__ parameters beta train step | ## We find that SCN can correct about 50% noisy labels when train two fer datasets (add 10%~30% flip noises) together. We also find that scn can work in Face Recognition!!! ## Thank you for everyone nice and kindly waiting! ## News:My friend [nzhq](https://github.com/nzhq) open the SCN code and reproduce the experiments result!!! Thank you Zhiqing!!! ## For the WebEmotion Dataset, I will open the search and clips generation code, everyone can download the videos from YouTube with my code. ## Our manuscript has been accepted by CVPR2020! [link](https://arxiv.org/pdf/2002.10392.pdf) ## I really appreciate the contribution from my co-authors: Prof. Yu Qiao, Prof. Xiaojiang Peng, Jianfei Yang and Prof. Shijian Lu # Based on our further exploring, SCN can be applied in many other topics. # Suppressing Uncertainties for Large-Scale Facial Expression Recognition Kai Wang, Xiaojiang Peng, Jianfei Yang, Shijian Lu, and Yu Qiao Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences | 2,608 |

kakazxz/myops | ['denoising'] | ['Multi-Modality Pathology Segmentation Framework: Application to Cardiac Magnetic Resonance Images'] | ASSN/cascade_multiseqseg/cascade_network.py ASSN/cascade_multiseqseg/help.py ASSN/config/load_embedding_arg.py ASSN/preprocessor/sitkOPtool.py PRSN/dataset/MDataset.py ASSN/preprocessor/chaos.py ASSN/multiseqseg/convert_to_tfrecord.py PRSN/utils/init_util.py ASSN/dirutil/helper.py ASSN/multiseqseg/utils.py ASSN/cascade_multiseqseg/cascade_prepare.py ASSN/preprocessor/resize_demo.py ASSN/preprocessor/tools.py ASSN/antoencoder.py ASSN/logger/Logger.py PRSN/utils/common.py PRSN/models/gcblock.py PRSN/utils/metrics.py ASSN/reconstrction_seg.py ASSN/multiseqseg/dice_loss.py PRSN/config.py ASSN/multiseqseg/model.py PRSN/inference.py ASSN/preprocessor/prepare_2020_myo.py ASSN/cascade_multiseqseg/reconstruction.py ASSN/multiseqseg/sampler.py ASSN/sitkImageIO/TensorSaver.py ASSN/config/Defines.py ASSN/evaluate/metric.py ASSN/preprocessor/sitkSpatialAugment.py ASSN/cascadeACMyo.py ASSN/multiseqseg/multiseqseg.py ASSN/cascade_multiseqseg/cascade_sampler.py ASSN/multiseqseg/load_data.py ASSN/dirutil/SampleSplitter.py PRSN/models/scmodel.py ASSN/cascade_multiseqseg/restore.py ASSN/sitkImageIO/itkdatawriter.py ASSN/multiseqseg/challenge_sampler.py ASSN/model/base_model.py ASSN/cascade_multiseqseg/tool.py ASSN/multiseqseg/prepare_data.py ASSN/tool/mask.py PRSN/utils/logger.py ASSN/numpyopt/help.py ASSN/preprocessor/Registrator.py ASSN/preprocessor/sitkIntensityAugment.py ASSN/preprocessor/myo_chanlledge.py ASSN/autoencoder/autoencoder.py ASSN/multiseqseg/ops.py PRSN/models/prsn.py ASSN/sitkImageIO/itkdatareader.py PRSN/train.py ASSN/preprocessor/PreProcessor.py ASSN/preprocessor/error_nii_fix.py ASSN/config/configer.py ASSN/preprocessor/Rotater.py ShapeAutoEncode AECNN swap_neighbor_labels_with_prob ACMyoMultiSeq prepare_masked_valid_data prepare_masked_data merge_slice prepare_masked_test_data prepare_slices CascadeMyoSampler CascasedChallengeSample CascasedValidSample CascadeMyoPathologySampler evaluateV2 cal_diceV2 evaluate cal_dice Reconstruction filter_data restore reindex_for_myo_scar_edema_ZHANGZHEN l2_loss reindex_for_myo_scar_edema VoteNetParser RegParser get_reg_config get_vote_config Get_Name_By_Index get_args clear get_name_wo_suffix mkcleardir mkoutputname sort_glob writeListToFile filename listdir mkdir_if_not_exist mk_or_cleardir split_vote remove_file isVoteTestDir split dice_compute dice_and_hd ssd neg_jac computeQualityMeasures ncc mi calculate_binary_dice sad print_mean_and_std getLoggerV3 print_result getLogger BaseModel ChallengeMyoSampler int64_feature bytes_feature write_example soft_dice_loss single_class_dice_coefficient dice_coefficient HDR2LDR LDR2HDR_batch transform_LDR transform_HDR load_data LDR2HDR get_input save_results model tonemap HDR2LDR LDR2HDR tonemap_np Multiseqseg lrelu conv2d_transpose batch_norm binary_cross_entropy linear batch_instance_norm conv2d kl_divergence conv_cond_concat prepare_test_data prepare_data prepare_test_slice prepare_slices Sampler get_image get_image2HDR to_json make_gif radiance_writer save_images transform visualize rgb2gray center_crop merge_images compute_psnr LDR2HDR inverse_transform imread imsave merge zero_padding zero_padding_3d generate_3dMR generate_3dCT fix_image fix_label convert_img convert_lab crop_by_label slice_by_label PostProcessor PreProcessor Registrator Rotater clipScaleImage sigmoid_mapping histogram_equalization mult_and_add_intensity_fields augment_images_intensity resample_segmentations recast_pixel_val paste_roi_image eul2quat augment_img_lab threshold_based_crop augment_multi_imgs_lab parameter_space_regular_grid_sampling parameter_space_random_sampling augment_images_spatial augment_img augment_imgs_labs similarity3D_parameter_space_regular_sampling similarity3D_parameter_space_random_sampling reindex_label_array sitkResize3D simple_random_crop_image sitkResample3DV2 get_bounding_box_by_ids reindex_label get_bounding_box_by_id reverse_one_hot padd binarize_numpy_array get_bounding_box crop_by_bbox resize3DArray zoom3Darray sitkResize3DV2 random_crop_image_and_labels random_crop_for_trainning get_rotate_ref_img convertArrayToImg binarize_img normalize_mask get_bounding_boxV2 convert_img_2_nor_array read_png_seriesV2 convert_lab_2_array FusionSitkDataReader sitk_read_img_lab sitk_read_dico_series LazySitkDataReader VoteDataReader read_png_series convert_p_lab_2_array_batch convert_p_img_2_nor_array_batch RegistorDataReader convert_p_lab_2_array convert_img_2_scale_array convert_p_img_2_nor_array sitk_write_multi_lab sitk_wirte_ori_image write_png_image write_png_lab sitk_write_labs write_images sitk_write_lab sitk_write_image sitk_write_images saveTensor create_mask inference finame val train myops_dataset load_slicer make_one_hot MultiModalityData_load ContextBlock SpatialAttention ChannelAttention MixedFusion_BlockS4 NonLocalBlock MixedFusion_Block3 up maxpool nodo avgpool PRSN4 MixedFusion_Block4 conv_decod_block nonloacl_decod_block scSE cSE sSE random_crop_3d2 finame adjust_learning_rate resize sitk_read_row brats_dataset load_slicer standardization make_one_hot_3d random_crop_3d load_slicer2 normalization norm_img random_gamma random_flip_3d nib_read_row standardization2 load_file_name_list convert random_crop_2d nib_read_row2 weights_init_orthogonal weights_init_normal weights_init_xavier init_weights adjust_learning_rate print_network weights_init_kaiming Logger DiceMeanLoss T P TP SoftDiceLoss DiceMean WeightDiceLoss dice cross_entropy_3D cross_entropy_2D list where copy choice range print sort_glob dataset_dir prepare_slices exists uint16 myo_seg_dir astype ReadImage GetArrayFromImage sort_glob append range sitk_write_image get_bounding_box_by_ids recast_pixel_val sitkLinear crop_by_bbox binarize_img sitkResize3DV2 ReadImage GetArrayFromImage sort_glob range sitk_write_image mk_or_cleardir sitkNearestNeighbor get_name_wo_suffix recast_pixel_val ReadImage sort_glob dataset_dir sitk_write_image get_name_wo_suffix recast_pixel_val ReadImage sort_glob dataset_dir sitk_write_image str print cal_dice mean sort_glob std cal_diceV2 str print mean sort_glob std print dc ReadImage GetArrayFromImage create_mask zip append array print dc ReadImage GetArrayFromImage reindex_for_myo_scar_edema_ZHANGZHEN create_mask zip append array append join walk split zeros_like get_data save abs max str len range replace astype load join filter_data int affine print Nifti1Image split makedirs zeros uint16 where shape zeros uint16 where shape RegParser argv VoteNetParser argv enumerate makedirs join str remove isdir print rmtree isfile listdir clear makedirs clear rmtree makedirs sleep basename split sort remove close writelines exists open glob sort join move print sort len range rmtree listdir exists makedirs join basename isVoteTestDir move mkcleardir listdir mkdir_if_not_exist glob rmtree basename squeeze where print sum sum DisplacementFieldJacobianDeterminant gradient mean GetImageFromArray GetArrayFromImage abs GetHausdorffDistance GetAverageHausdorffDistance dict GetImageFromArray LabelOverlapMeasuresImageFilter HausdorffDistanceImageFilter GetDiceCoefficient Execute hd dc print mean std stdout basicConfig makedirs StreamHandler FileHandler stdout basicConfig getLogger makedirs StreamHandler FileHandler join format concatenate TFRecordWriter close write SerializeToString Example sum mean sum reduce_mean reduce_sum resize_images cast float32 resize_images read TFRecordReader decode_raw uint8 shuffle_batch reshape float32 LDR2HDR_batch transform_LDR cast int32 random_uniform transform_HDR parse_single_example less rot90 cond get_image join sorted glob astype float32 LDR2HDR zeros enumerate len save_images min shape ceil zeros range enumerate len get_shape as_list assert_rank divide reduce_sum assert_equal shape tile expand_dims print sort_glob dataset_dir prepare_slices exists prepare_test_slice sort_glob dataset_dir glob sort sitkLinear sitkResize3DV2 ReadImage range sitk_write_image mk_or_cleardir mean zeros enumerate int round center_crop resize VideoClip write_gif make_gif arange save_images batch_size print sampler strftime uniform gmtime run tile append zeros range enumerate shape zeros shape zeros sitk_read_dico_series where read_png_series zip sitk_write_lab sitk_write_image read_png_seriesV2 sitk_read_dico_series zip sitk_write_lab sitk_write_image load affine dataobj Nifti1Image save load affine print copy Nifti1Image unique save sum glob sitk_wirte_ori_image GetImageFromArray ReadImage GetArrayFromImage glob sitk_wirte_ori_image GetImageFromArray ReadImage GetArrayFromImage sitk_write_image sitkLinear glob crop_by_bbox sitkResize3DV2 ReadImage GetArrayFromImage sitkResample3DV2 mkdir_if_not_exist get_bounding_boxV2 sitkNearestNeighbor sitk_write_image sitkLinear glob crop_by_bbox sitkResize3DV2 ReadImage GetArrayFromImage sitkResample3DV2 mkdir_if_not_exist get_bounding_boxV2 sitkNearestNeighbor SpeckleNoiseImageFilter SmoothingRecursiveGaussianImageFilter SetBeta MedianImageFilter AdaptiveHistogramEqualizationImageFilter SetRadius AdditiveGaussianNoiseImageFilter str BilateralImageFilter ShotNoiseImageFilter append SetAlpha SetRangeSigma GetName WriteImage zip SetVariance enumerate SaltAndPepperNoiseImageFilter SetDomainSigma SetSigma DiscreteGaussianImageFilter GetPixelIDValue GetSpacing GetSize TransformContinuousIndexToPhysicalPoint GetDirection GaussianSource GetOrigin array dtype cumsum min iinfo astype bincount ravel max GetArrayViewFromImage SigmoidImageFilter SetAlpha SetBeta SetOutputMaximum median float SetOutputMinimum GetArrayViewFromImage percentile Threshold RescaleIntensity sitkFloat32 astype ReadImage GetArrayFromImage Execute SetOutputPixelType GetPixelID CastImageFilter GetPixelIDValue recast_pixel_val GetSpacing Image GetSize SetSpacing GetDirection SetOrigin TransformPhysicalPointToIndex Paste GetOrigin SetDirection SetDefaultPixelValue Execute ResampleImageFilter SetInterpolator SetReferenceImage sitkNearestNeighbor list random zip list random zip cos sqrt sin zeros argmax isclose Transform Resample AddTransform append SetParameters GetPixelIDValue Transform Image SetOffset randint pi flatten AffineTransform parameter_space_regular_grid_sampling linspace augment_images_spatial GetSize SetDirection GetDimension GetOrigin similarity3D_parameter_space_random_sampling SetMatrix TransformContinuousIndexToPhysicalPoint SetSpacing TranslationTransform SetTranslation GetDirection TransformPoint zip join SetCenter SetOrigin AddTransform zeros array len GetPixelIDValue Transform Image SetOffset randint pi flatten AffineTransform linspace augment_images_spatial GetSize SetDirection GetDimension GetOrigin similarity3D_parameter_space_random_sampling SetMatrix TransformContinuousIndexToPhysicalPoint SetSpacing TranslationTransform SetTranslation GetDirection TransformPoint zip join SetCenter SetOrigin AddTransform zeros array len GetPixelIDValue Transform Image SetOffset randint pi flatten AffineTransform linspace augment_images_spatial GetSize SetDirection GetDimension append GetOrigin similarity3D_parameter_space_random_sampling SetMatrix TransformContinuousIndexToPhysicalPoint SetSpacing TranslationTransform SetTranslation GetDirection TransformPoint zip join SetCenter SetOrigin AddTransform zeros array len GetPixelIDValue Transform Image SetOffset randint pi flatten AffineTransform parameter_space_regular_grid_sampling linspace augment_images_spatial GetSize SetDirection GetDimension GetOrigin similarity3D_parameter_space_random_sampling SetMatrix TransformContinuousIndexToPhysicalPoint SetSpacing TranslationTransform SetTranslation GetDirection TransformPoint join SetCenter SetOrigin AddTransform zeros array len OtsuThreshold LabelShapeStatisticsImageFilter GetBoundingBox Execute min padd shape nonzero append max range min where padd shape nonzero append max range max isinstance Image min padd shape GetArrayFromImage nonzero append binarize_numpy_array range GetImageFromArray CopyInformation convertArrayToImg where shape GetArrayFromImage zeros zeros where shape all arange slice print len ndim delete append diff seed random_crop concat seed random_crop stack unstack shape GetPixelIDValue Image SetSpacing GetDirection SetOrigin GetOrigin SetDirection int GetPixelIDValue GetSpacing Image GetSize print reshape SetSpacing SetDirection GetDimension matmul flatten GetDirection SetOrigin zeros float abs array SetOutputSpacing list int GetSpacing SetSize Execute SetOutputOrigin SetOutputDirection ResampleImageFilter GetSize astype GetDirection GetOrigin array SetInterpolator SetOutputSpacing list int GetSpacing SetSize Execute SetOutputOrigin SetOutputDirection ResampleImageFilter GetSize astype GetDirection GetOrigin array SetInterpolator CopyInformation to_categorical where GetImageFromArray GetArrayFromImage enumerate to_categorical where enumerate argmax reshape ravel astype float32 where mean GetArrayFromImage std ReadImage GetArrayFromImage zscore RescaleIntensity GetArrayFromImage RescaleIntensity append expand_dims array convert_p_img_2_nor_array GetArrayFromImage ReadImage convert_lab_2_array append expand_dims convert_p_lab_2_array array append ReadImage augment_imgs_labs zip GetGDCMSeriesIDs Execute GetSize print ImageSeriesReader GetGDCMSeriesFileNames SetFileNames len append imread sort_glob stack insert imread sort_glob stack join CopyInformation WriteImage GetImageFromArray range mk_or_cleardir join uint16 CopyInformation astype where WriteImage GetImageFromArray range mk_or_cleardir join WriteImage makedirs join uint16 CopyInformation astype where WriteImage GetImageFromArray mk_or_cleardir join uint16 CopyInformation astype WriteImage GetImageFromArray mk_or_cleardir join CopyInformation WriteImage GetImageFromArray makedirs join uint8 format imwrite Image isinstance astype GetArrayFromImage makedirs join uint8 format imwrite Image isinstance astype where GetArrayFromImage makedirs Nifti1Image range save uint16 where shape zeros enumerate split print eval format array print eval format scalar_summary T format P backward model print TP scalar_summary zero_grad float dice item step enumerate len zeros range append join walk get_data replace split MaxPool2d AvgPool2d BatchNorm2d Sequential ContextBlock Conv2d BatchNorm2d Sequential NonLocalBlock shape array zoom append join walk load ones_like load ones_like replace get_data split mean std where mean std min max ReadImage GetArrayFromImage zoom rint zeros_like shape load_slicer astype resize shape astype load_slicer2 resize zeros range randint randint randint rand flip adjust_gamma rand param_groups lr data normal constant __name__ data normal constant xavier_normal __name__ data normal constant __name__ kaiming_normal data normal constant orthogonal __name__ apply print parameters view log_softmax size nll_loss numel view log_softmax size nll_loss numel sum | # myops2020 Multi-modality Pathology Segmentation Framework: Application to Cardiac Magnetic Resonance Images ``` @inproceedings{zhang2020multi, title={Multi-modality Pathology Segmentation Framework: Application to Cardiac Magnetic Resonance Images}, author={Zhang, Zhen and Liu, Chenyu and Ding, Wangbin and Wang, Sihan and Pei, Chenhao and Yang, Mingjing and Huang, Liqin}, booktitle={Myocardial Pathology Segmentation Combining Multi-Sequence CMR Challenge}, pages={37--48}, year={2020}, organization={Springer} | 2,609 |

kakirastern13/OCR-with-YOLO | ['optical character recognition'] | ['FUNSD: A Dataset for Form Understanding in Noisy Scanned Documents'] | darknet_veztan/scripts/voc_label.py darknet_veztan/python/darknet.py darknet_veztan/python/proverbot.py darknet_veztan/examples/detector.py darknet_veztan/examples/detector-scipy-opencv.py array_to_image detect2 METADATA DETECTION c_array detect IMAGE sample classify BOX predict_tactics predict_tactic convert_annotation convert c_float transpose flatten c_array IMAGE sorted POINTER network_detect make_probs c_void_p make_boxes num_boxes cast classes free_ptrs append range uniform sum range len sorted predict_image classes append range free_detections sorted do_nms_obj c_int pointer h get_network_boxes w predict_image free_image classes append load_image range bbox c_array chr predict sample reset_rnn sorted predict_tactic append range int str parse join text convert write index getroot iter open find | # OCR with YOLO A repository for keep a record of some findings made regarding some OCR project ## Literature & Datasets: Scan and extract text from an image using Python libraries https://developer.ibm.com/tutorials/document-scanner/ Tutorial : Building a custom OCR using YOLO and Tesseract https://medium.com/saarthi-ai/how-to-build-your-own-ocr-a5bb91b622ba Deep Learning Based OCR for Text in the Wild https://nanonets.com/blog/deep-learning-ocr/ ICDAR 2019 Competition on Table Detection and Recognition (cTDaR) | 2,610 |

kaliahinartem/twitter_sentiment_analysis | ['sentiment analysis', 'word embeddings', 'twitter sentiment analysis'] | ['BB_twtr at SemEval-2017 Task 4: Twitter Sentiment Analysis with CNNs and LSTMs'] | SemEval/dataset_readers/semeval_datareader.py SemEval/dataset_readers/utils.py SemEval/models/semeval_classifier_attention.py SemEval/models/my_bcn.py SemEval/predictors/semeval_predictor.py SemEval/models/semeval_classifier.py SemEvalDatasetReader main preprocess tokenize BiattentiveClassificationNetwork SemEvalClassifier SemEvalClassifierAttention SemEvalPredictor tokenize list punctuation words | # SemEval-2017 Task 4 Sentiment Analysis in Twitter ## Introduction [SemEval-2017 Task 4](http://alt.qcri.org/semeval2017/task4/index.php?id=data-and-tools) is a text sentiment classification task: Given a message, classify whether the message is of positive, negative, or neutral sentiment. ## Run Experiments ```bash # install the environment conda create -n allennlp python=3.6 source activate allennlp pip install -r requirements.txt ``` | 2,611 |

kalyo-zjl/APD | ['pedestrian detection'] | ['Attribute-aware Pedestrian Detection in a Crowd'] | peddla.py demo.py load_model preprocess main parse_args parse_det a_nms peddla_net fill_fc_weights Root Bottleneck get_model_url BasicBlock DLAUp DeformConv DLA BottleneckX Tree IDAUp DLASeg Interpolate conv3x3 Identity fill_up_weights dla34 convolution add_argument ArgumentParser int exp asarray where append numpy range a_nms len minimum ones_like min maximum sqrt append sum max load format print shape load_state_dict state_dict subplots div cuda show sorted load_model imshow parse_args range glob synchronize astype sqrt eval preprocess parse_det sigmoid_ reshape float32 add_patch Rectangle img_list len load_pretrained_model DLA isinstance bias Conv2d modules constant_ data fabs size ceil range DLASeg format | # APD [Attribute-aware Pedestrian Detection in a Crowd](https://arxiv.org/pdf/1910.09188.pdf) ## Installation To run the demo, the following requirements are needed. ``` numpy matplotlib torch >= 0.4.1 glob argparse | 2,612 |

kamata1729/QATM_pytorch | ['template matching'] | ['QATM: Quality-Aware Template Matching For Deep Learning'] | utils.py qatm.py qatm_pytorch.py MyNormLayer nms nms_multi run_one_sample run_multi_sample CreateModel plot_result_multi ImageDataset Featex QATM plot_result all_sample_iou locate_bbox score2curve plot_success_curve evaluate_iou compute_score IoU minimum transpose maximum where append array imwrite tuple copy imshow rectangle minimum int arange concatenate ones reshape transpose astype maximum where array zip append max list imwrite copy imshow color_palette plot_result max range len exp model min log range compute_score resize cpu numpy is_cuda append append run_one_sample min max ones filter2D argmax max int linspace append sum array len locate_bbox append IoU range len show format plot score2curve grid mean ylim title figure linspace xticks yticks | # Pytorch Non-Official Implementation of QATM:Quality-Aware Template Matching For Deep Learning arxiv: https://arxiv.org/abs/1903.07254 original code (tensorflow+keras): https://github.com/cplusx/QATM Qiita(Japanese): https://qiita.com/kamata1729/items/11fd55992c740526f6fc # Dependencies * torch(1.0.0) * torchvision(0.2.1) * cv2 * seaborn * sklearn | 2,613 |

kaminAI/beem | ['iris segmentation'] | ['Boltzmann Exploration Expectation–Maximisation'] | src/plotting.py src/utils.py src/models.py src/evaluation.py compute_purity eval_cluster BeemGMM plot_roc_curve plot_raw_gp_data plot_metrics roc_curve_with_error_bounds plot_purity greedy_sampler boltzmann assign score_mixture_likelihood boltzmann_sampling boltzmann_global_opt make_equal_bin_sizes bincount argmax set homogeneity_score compute_purity adjusted_rand_score normalized_mutual_info_score show subplots set_title plot set_xlabel set_xlim roc_curve trapz now strftime set_ylabel savefig legend set_ylim update show use subplots Patch rc set_xlabel grid now strftime scatter set_style set_ylabel legend color_palette savefig setLevel enumerate subplots arange grid MultipleLocator setLevel show set_major_locator set_xlabel strftime savefig update plot set_xlim mean rc now set_ylabel set_style color_palette fill_between std set_ylim len subplots trapz linspace show set_xlabel strftime savefig legend append update plot set_xlim mean interp minimum rc roc_curve maximum now set_ylabel fill_between std set_ylim len subplots arange grid MultipleLocator setLevel show set_major_locator set_xlabel strftime savefig legend update plot set_xlim mean enumerate rc now set_ylabel set_style color_palette fill_between std set_ylim len divmod len argmax asarray exp reshape sum max exp sum max func mean max argmax | # Boltzmann Exploration Expectation-Maximisation We present a general method for fitting finite mixture models (FMM). Learning in a mixture model consists of finding the most likely cluster assignment for each data-point, as well as finding the parameters of the clusters themselves. In many mixture models this is difficult with current learning methods, where the most common approach is to employ monotone learning algorithms e.g. the conventional expectation-maximisation algorithm. While effective, the success of any monotone algorithm is crucially dependant on good parameter initialisation, where a common choice is _K_-means initialisation, commonly employed for Gaussian mixture models. For other types of mixture models the path to good initialisation parameters is often unclear and may require a problem specific solution. To this end, we propose a general heuristic learning algorithm that utilises Boltzmann exploration to assign each observation to a specific base distribution within the mixture model, which we call Boltzmann exploration expectation-maximisation (BEEM). With BEEM, hard assignments allow straight forward parameter learning for each base distribution by conditioning only on its assigned observations. Consequently it can be applied to mixtures of any base distribution where single component parameter learning is tractable. The stochastic learning procedure is able to escape local optima and is thus insensitive to parameter initialisation. We show competitive performance on a number of synthetic benchmark cases as well as on real-world datasets. [Full paper](https://arxiv.org/abs/1912.08869) **Corresponding authors**: * [Mathias Edman]([email protected]), Kamin AI AB * [Neil Dhir]([email protected]), Kamin AI AB ## Demo There is no specific installation required to use BEEM. This implementation uses only bits and bobs from the standard python SciPy stack and `python 3+`. For an example of how to use it, try the [BEEM_Rainbow_demo.ipynb](BEEM_Rainbow_demo.ipynb) demo, which replicates the results from section **5.1**. <p align="center"> | 2,614 |