| repo | tasks | titles | dependencies | readme | __index_level_0__ |
|---|---|---|---|---|---|

kuihua/MSPFN | ['rain removal', 'single image deraining'] | ['Multi-Scale Progressive Fusion Network for Single Image Deraining'] | model/train_data/crop.py utils/bianyuan.py model/TEST_MSPFN_M17N1.py model/train_MSPFN.py model/test/test_MSPFN.py model/load_rain.py model/TEST_MSPFN.py model/image_crop.py utils/augment.py model/spp_layer.py utils/BasicConvLSTMCell.py utils/layer.py utils/closed_form_matting.py model/ps.py model/train_data/preprocessing.py model/MSPFN.py utils/non_local.py crop img_crop load MODEL _PS np_spatial_pyramid_pooling tf_spatial_pyramid_pooling Model Model normalize train save_img count_model_params main preprocess main preprocess random_flip_left_right random_brightness random_crop_and_zoom _augment normalize augment BasicConvLSTMCell ConvRNNCell _conv_linear edge getLaplacian getlaplacian1 down_sample avg_pooling_layer Feature_enhance flatten_layer NonLocalBlock lrelu prelu max_pooling_layer _PS rotate conv_layer batch_normalize full_connection_layer pixel_shuffle_layer deconv_layer PS_layer pool_sample edge pixel_shuffle_layerg gkern _convND non_local_block dtype concatenate astype float32 append range len as_list print reshape concat split itemsize as_strided append concatenate reshape transpose copy floor ceil sum prod range amax train_loss Saver save Session run restore list placeholder rotate normalize range format global_variables_initializer get_checkpoint_state load int join minimize print float32 tqdm MODEL AdamOptimizer randint bool len print get_shape trainable_variables join uint8 format COLOR_BGR2RGB xlabel add_subplot close imshow enumerate set_ticks_position figure mkdir savefig tick_params range clip cvtColor len join format print mkdir save listdir crop range open preprocess update int imwrite glob close tqdm resize append imread array enumerate len flip reshape rand LUT uniform resize random_flip_left_right random_brightness random_crop_and_zoom resize normalize Canny COLOR_RGB2GRAY bitwise_and Sobel CV_16SC1 
GaussianBlur cvtColor broadcast_to list csr_matrix transpose identity matmul shape sum diags range grey_erosion int T reshape inv repeat zeros ravel array shape transpose tocoo as_list array get_variable get_variable get_variable get_variable get_variable zeros as_list transpose split split print concat split as_list int resize_images slice concat as_list int avg_pooling_layer slice concat as_list concat slice list int_shape _convND dot add | # Multi-Scale Progressive Fusion Network for Single Image Deraining (MSPFN) This is an implementation of the MSPFN model proposed in the paper ([Multi-Scale Progressive Fusion Network for Single Image Deraining](https://arxiv.org/abs/2003.10985)) with TensorFlow. # Requirements - Python 3 - TensorFlow 1.12.0 - OpenCV - tqdm - glob | 2,700 |

kulikovv/DeepColoring | ['instance segmentation', 'semantic segmentation', 'autonomous driving'] | ['Instance Segmentation by Deep Coloring'] | deepcoloring/halo_loss.py deepcoloring/architecture.py cvppp/train_cvppp.py deepcoloring/train.py setup.py deepcoloring/data.py ecoli/train_ecoli.py deepcoloring/utils.py deepcoloring/__init__.py ReadableDir evaluate UpModule clip_align EUnet DownModule Reader flatten build_halo_mask halo_loss train blur rotate90 rescale best_dice visualize rotate normalize postprocess random_gamma symmetric_best_dice random_scale vgg_normalize random_contrast flip_vertically clip_patch get_as_list random_transform random_noise rgb2gray clip_patch_random rgba2rgb flip_horizontally clip_mask_builder ReadableDir postprocess get_as_list symmetric_best_dice model eval append to range len size expand_dims repeat zeros to circle log_softmax size min index_select softmax to sum range generator mask_builder model zero_grad save Adam savetxt append to range state_dict halo_loss item float int print_percent backward parameters step prepare subplots zeros_like argmax set_title imshow shape ceil append range colors postprocess format distance_transform_edt softmax from_list get_cmap int text unravel_index float sum append zeros_like unique enumerate zeros_like polygon find_contours argmax range | # Instance Segmentation by Deep Coloring [Paper preprint](https://arxiv.org/abs/1807.10007) ### About We propose a new and, arguably, very simple reduction of instance segmentation to semantic segmentation. This reduction allows one to train feed-forward, non-recurrent deep instance segmentation systems in an end-to-end fashion using architectures that have been proposed for semantic segmentation. Our approach proceeds by introducing a fixed number of labels (colors) and then dynamically assigning object instances to those labels during training (coloring). 
A standard semantic segmentation objective is then used to train a network that can color previously unseen images. At test time, individual object instances can be recovered from the output of the trained convolutional network using simple connected-component analysis. In the experimental validation, the coloring approach is shown to be capable of solving diverse instance segmentation tasks arising in autonomous driving (the Cityscapes benchmark), plant phenotyping (the CVPPP leaf segmentation challenge), and high-throughput microscopy image analysis. ### Test with pretrained models 1. For CVPPP, run the notebook cvppp/cvppp.ipynb and follow the code. 2. For E. coli, run the notebook ecoli/ecoli.ipynb and follow the code. ### Training guidelines 1. [Guideline for cvppp](cvppp/README.md) | 2,701 |

kulikovv/harmonic | ['instance segmentation', 'semantic segmentation'] | ['Instance Segmentation of Biological Images Using Harmonic Embeddings'] | models/pspnet.py datasets/data.py harmonic/__init__.py models/extractors.py harmonic/sinconv.py models/__init__.py harmonic/cluster.py datasets/__init__.py harmonic/utils.py harmonic/coordconv.py models/unet.py harmonic/embeddings.py default_loader Reader cluster AddCoords CoordConv get_embeddings flat_pattern_rdm Embedding pairwise_distances pow_weights_norm log_weights_norm linear_weights_norm SinConv AddSine set_lr print_percent recursive_search disable_gradients_for_class train Fire SqueezeNet densenet DenseNet ResNet resnet50 Bottleneck resnet152 squeezenet conv3x3 _DenseLayer resnet34 _DenseBlock resnet18 load_weights_sequential BasicBlock _Transition resnet101 PSPUpsampleS PSPNet PSPModule freeze_layer PSPUpsample outconv double_conv UNet down ups inconv reshape transpose astype MeanShift labels_ unique zeros max regionprops fit transpose mm view weights_norm int view size contiguous device shape repeat unsqueeze item gather to long scatter_add rand print str param_groups children isinstance parameters recursive_search zero_grad disable_gradients_for_class save set_lr list step Adam freeze_encoder floss savetxt load_state_dict append to range detach state_dict mean float net emb enumerate load int print_percent backward print loadtxt parameters isfile numpy len OrderedDict items list load_state_dict load SqueezeNet load_weights_sequential load SqueezeNet load_weights_sequential load ResNet load_url load_weights_sequential load ResNet load_url load_weights_sequential load ResNet load_url load_weights_sequential load_url ResNet load_weights_sequential load ResNet load_url load_weights_sequential parameters | # Instance Segmentation Using Harmonic Embeddings <p align="middle"> <img src="images/pipeline.png" width="600" /> </p> <p> We present a new instance segmentation approach tailored to biological images, where 
instances may correspond to individual cells, organisms or plant parts. Unlike instance segmentation for user photographs or road scenes, in biological data object instances may be particularly densely packed, the appearance variation may be particularly low, the processing power may be restricted, while, on the other hand, the variability of sizes of individual instances may be limited. These peculiarities are successfully addressed and exploited by the proposed approach. </p> <p> Our approach describes each object instance using an expectation of a limited number of sine waves with frequencies and phases adjusted to particular object sizes and densities. At train time, a fully-convolutional network is learned to predict the object embeddings at each pixel using a simple pixelwise regression loss, while at test time the instances are recovered using clustering in the embeddings space. In the experiments, we show that our approach outperforms previous embedding-based instance segmentation approaches on a number of biological datasets, achieving state-of-the-art on a popular CVPPP benchmark. Notably, this excellent performance is combined with computational efficiency that is needed for deployment to domain specialists. </p> | 2,702 |

kumnikhil/christmAIs_replica | ['style transfer'] | ['A Neural Algorithm of Artistic Style'] | tests/test_drawer.py docs/conf.py christmais/parser.py tests/test_styler.py christmais/drawer.py setup.py christmais/__init__.py christmais/tasks/christmais_time.py christmais/styler.py tests/test_parser.py tests/conftest.py Drawer Parser Styler main build_parser pytest_addoption process_img local styler drawer parser test_draw_png test_read_categories test_create_index_html test_draw test_read_categories test_get_similar test_get_most_similar test_get_actual_label test_save_preprocessed_img test_style_transfer test_get_img_lists_return_type test_get_img_lists_return_objects test_get_data_and_name test_create_placeholder_return_type add_argument ArgumentParser style_transfer format get_most_similar getLogger Drawer draw output Parser info install parse_args build_parser Styler INFO addoption glob _read_categories glob _create_index_html format glob _create_index_html _draw_png format glob draw format zip get_most_similar _get_actual_label _get_similar _create_placeholder float32 placeholder _get_img_lists _get_img_lists glob format _get_data_and_name style_transfer | # christmAIs [Build status](https://console.cloud.google.com/cloud-build/builds?authuser=2&organizationId=301224238109&project=tm-github-builds) [Documentation](https://christmais-2018.readthedocs.io/en/latest/?badge=latest) [License: GPL v2](https://www.gnu.org/licenses/old-licenses/gpl-2.0.en.html) **christmAIs** ("krees-ma-ees") is text-to-abstract-art generation for the holidays! This work converts any input string into abstract art by: - finding the most similar [Quick, Draw!](https://quickdraw.withgoogle.com/data) class using [GloVe](https://nlp.stanford.edu/projects/glove/) - drawing the nearest class using a Variational Autoencoder (VAE) called [Sketch-RNN](https://arxiv.org/abs/1704.03477); and | 2,703 |

kundajelab/deeplift | ['text classification'] | ['Towards better understanding of gradient-based attribution methods for Deep Neural Networks'] | deeplift/conversion/kerasapi_conversion.py deeplift/layers/normalization.py deeplift/visualization/matplotlib_helpers.py tests/layers/test_activation.py tests/layers/test_conv1d.py deeplift/layers/helper_functions.py tests/layers/test_pool2d.py tests/conversion/sequential/test_conv2d_model_valid_padding.py deeplift/visualization/viz_sequence.py tests/layers/test_conv2d.py tests/conversion/functional/test_functional_concatenate_model.py deeplift/layers/__init__.py deeplift/layers/core.py deeplift/util.py tests/shuffling/test_dinuc_shuffle.py tests/conversion/sequential/test_load_batchnorm.py tests/conversion/sequential/test_conv1d_model_valid_padding.py deeplift/layers/activations.py deeplift/layers/convolutional.py tests/layers/test_dense.py tests/layers/test_pool1d.py tests/conversion/sequential/test_conv1d_model_same_padding.py tests/conversion/sequential/test_conv2d_model_same_padding.py deeplift/dinuc_shuffle.py tests/layers/test_concat.py tests/layers/test_deeplift_genomics_default_mode.py deeplift/layers/pooling.py setup.py tests/conversion/sequential/test_sigmoid_tanh_activations.py deeplift/models.py deeplift/__init__.py tests/conversion/sequential/test_conv2d_model_channels_first.py string_to_char_array dna_to_one_hot char_array_to_string test_dinuc_content tokens_to_one_hot dinuc_shuffle one_hot_to_tokens dinuc_content one_hot_to_dna bench SequentialModel Model GraphModel get_integrated_gradients_function superclass_in_base_classes compile_func mean_normalise_weights_for_sequence_convolution connect_list_of_layers get_hypothetical_contribs_func_onehot is_gzipped in_place_shuffle randomly_shuffle_seq assert_is_type is_type get_file_handle get_shuffle_seq_ref_function get_session load_yaml_data_from_file assert_type assert_is_not_type run_function_in_batches enum flatten_conversion conv1d_conversion 
globalmaxpooling1d_conversion insert_weights_into_nested_model_config functional_container_conversion linear_conversion globalavgpooling1d_conversion convert_sequential_model activation_conversion prelu_conversion relu_conversion prep_pool2d_kwargs activation_to_conversion_function sequential_container_conversion maxpool1d_conversion concat_conversion_function convert_functional_model tanh_conversion validate_keys convert_model_from_saved_files batchnorm_conversion prep_pool1d_kwargs noop_conversion avgpool2d_conversion conv2d_conversion maxpool2d_conversion layer_name_to_conversion_function ConvertedModelContainer softmax_conversion input_layer_conversion sigmoid_conversion dense_conversion avgpool1d_conversion PReLU Softmax Tanh Sigmoid ReLU Activation Conv2D Conv Conv1D Merge Concat SingleInputMixin Node Dense ListInputMixin OneDimOutputMixin Input NoOp Flatten Layer add_val_to_col lte_mask distribute_over_product pseudocount_near_zero gte_mask eq_mask gt_mask lt_mask conv1d_transpose_via_conv2d BatchNormalization MaxPool1D AvgPool1D GlobalMaxPool1D MaxPool2D Pool2D AvgPool2D Pool1D GlobalAvgPool1D plot_hist plot_t plot_weights plot_g plot_weights_given_ax plot_a plot_c TestFunctionalConcatModel TestConvolutionalModel TestConvolutionalModel TestConvolutionalModel TestConvolutionalModel TestConvolutionalModel TestBatchNorm TestConvolutionalModel TestActivations TestConcat TestConv TestConv TestDense TestDense TestPool TestPool TestDinucShuffle dinuc_count tile where string_to_char_array permutation RandomState arange char_array_to_string tokens_to_one_hot empty_like shape one_hot_to_tokens unique append empty range len RandomState total_seconds print now dinuc_shuffle mean append range len join nuc_content sorted RandomState dna_to_one_hot print tuple dinuc_content choice dinuc_shuffle zeros sum keys range len enum print items list hasattr staticmethod setattr print extend func zip str print mean expand_dims sum get_file_handle close load print remove is_gzipped 
isfile read close open set_inputs randint range len activation_conversion validate_keys extend activation_conversion validate_keys extend activation_conversion validate_keys extend validate_keys prep_pool2d_kwargs prep_pool2d_kwargs prep_pool1d_kwargs prep_pool1d_kwargs Input load decode read list hasattr File insert_weights_into_nested_model_config OrderedDict loads zip open str print build_fwd_pass_vars sequential_container_conversion append Input flush print conversion_function extend connect_list_of_layers layer_name_to_conversion_function enumerate str list items isinstance conversion_function OrderedDict set_inputs zip layer_name_to_conversion_function max range append len str print build_fwd_pass_vars functional_container_conversion output_layers enum Variable zeros scatter_update reshape show title hist figure len add_patch Polygon Ellipse Rectangle add_patch Ellipse Rectangle add_patch Rectangle add_patch sorted arange plot_func squeeze transpose min set_xlim add_patch set_ticks Rectangle append abs max range set_ylim enumerate show plot_weights_given_ax add_subplot figure defaultdict range len | # DeepLIFT: Deep Learning Important FeaTures [Build status](https://travis-ci.org/kundajelab/deeplift) [License](https://github.com/kundajelab/deeplift/blob/master/LICENSE) **This version of DeepLIFT has been tested with Keras 2.2.4 and TensorFlow 1.14.0**. See [this FAQ question](#my-model-architecture-is-not-supported-by-this-deeplift-implementation-what-should-i-do) for information on other implementations of DeepLIFT that may work with different versions of TensorFlow/PyTorch, as well as with a wider range of architectures. See the tags for older versions. 
This repository implements the methods in ["Learning Important Features Through Propagating Activation Differences"](https://arxiv.org/abs/1704.02685) by Shrikumar, Greenside & Kundaje, as well as other commonly used methods such as gradients, gradient-times-input (equivalent to a version of Layerwise Relevance Propagation for ReLU networks), [guided backprop](https://arxiv.org/abs/1412.6806) and [integrated gradients](https://arxiv.org/abs/1611.02639). Here is a link to the [slides](https://docs.google.com/file/d/0B15F_QN41VQXSXRFMzgtS01UOU0/edit?usp=docslist_api&filetype=mspresentation) and the [video](https://vimeo.com/238275076) of the 15-minute talk given at ICML. [Here](https://www.youtube.com/playlist?list=PLJLjQOkqSRTP3cLB2cOOi_bQFw6KPGKML) is a link to a longer series of video tutorials. Please see the [FAQ](https://github.com/kundajelab/deeplift/blob/master/README.md#faq) and file a GitHub issue if you have questions. Note: when running DeepLIFT for certain computer vision tasks, **you may get better results if you compute contribution scores for a higher convolutional layer rather than for the input pixels**. Use the argument `find_scores_layer_idx` to specify which layer to compute the scores for. **Please be aware that figuring out optimal references is still an open problem. Suggestions on good heuristics for different applications are welcome.** In the meantime, feel free to look at this GitHub issue for general ideas: https://github.com/kundajelab/deeplift/issues/104. Please feel free to follow this repository to stay abreast of updates. | 2,704 |

kunwuz/DGTN | ['session based recommendations'] | ['DGTN: Dual-channel Graph Transition Network for Session-based Recommendation'] | model/multi_sess.py train.py model/InOutGat.py model/model.py main.py preprocess/cikm16_org_prepro.py model/srgnn.py preprocess/cikm16_perprocess.py neigh_retrieval/neighborhood_retrieval.py model/ggnn.py preprocess/rcs15_perprocess.py neigh_retrieval/knn.py data/multi_sess_graph.py preprocess/rcs15_org_prepro.py utils/saver.py main forward case_study MultiSessionsGraph InOutGGNN normal ones kaiming_uniform uniform reset InOutGATConv_intra glorot InOutGATConv zeros CNNFusing Embedding2Score ItemFusing NARM GraphModel GroupGraph SRGNN KNN filter_data get_sequence split_train_test preprocess load_data process_seqs get_dict filter_data get_sequence split_train_test preprocess load_data process_seqs get_dict filter_data get_sequence split_train_test split_train preprocess load_data filter_test process_seqs get_dict filter_data get_sequence split_train_test split_train preprocess load_data filter_test process_seqs get_dict print_txt LambdaLR localtime DataLoader warning device dataset forward str list getcwd map strftime Adam load_state_dict to range SummaryWriter format epoch manual_seed random_seed load print parameters MultiSessionsGraph step makedirs y model zero_grad numpy len append to isin mean eval item zip enumerate time backward print loss_function train step add_scalar y model print eval zip to numpy enumerate uniform_ sqrt uniform_ sqrt uniform_ sqrt size fill_ fill_ normal_ children _reset fromtimestamp nunique utc format print min apply isoformat max read_csv len fromtimestamp nunique utc format print size min isoformat max len nunique format print size index max len print sort_values index enumerate print time enumerate time print process_seqs len unique sort_values drop_duplicates values enumerate dump get_sequence print open get_dict makedirs len range int nunique format print sort_values range len nunique format print 
size len str split_train filter_test str sorted format items write close open | # DGTN: Dual-channel Graph Transition Network for Session-based Recommendation This repository contains the PyTorch implementation of the ICDMW 2020 (NeuRec @ ICDM) paper [*DGTN: Dual-channel Graph Transition Network for Session-based Recommendation*](https://arxiv.org/abs/2009.10002). Please see our paper for more details about our work. ## Usage Follow the steps below to run our code: ### 1. Preprocess The preprocessing code is in `preprocess/`. ### 2. Neighbor retrieval Run `neigh_retrieval/neighborhood_retrieval.py`. ### 3. Run the model | 2,705 |

kupuSs/DIEN-pipline | ['click through rate prediction'] | ['Deep Interest Evolution Network for Click-Through Rate Prediction'] | main/sequence.py main/core.py data_process/data_loader.py main/inputs.py main/rnn.py main/rnn_utils.py main/activation.py main/normalization.py main/utils.py main/rnn_v2.py data_process/feature_process.py run/run.py data_process/config_customize.py data/concat_taobao_items.py data_process/feature_process_parallel.py data_process/utils.py data_process/get_standard_input.py data_process/config_amazon_books.py main/dien.py data_process/config_taobao.py data_loader encoder_sparse_feature_test negative_sampling scaler_dense_feature encoder_sequence truncated_sequence encoder_sparse_feature_train tmp_func apply_parallel get_input run get_standard_input get_stats sd print_values print_header reduce_mem_usage_sd activation_layer Dice LocalActivationUnit PredictionLayer DNN auxiliary_net DIEN interest_evolution auxiliary_loss embedding_lookup VarLenSparseFeat get_linear_logit get_inputs_list combined_dnn_input input_from_feature_columns SparseFeat get_fixlen_feature_names DenseFeat build_input_features get_embedding_vec_list create_embedding_matrix get_varlen_feature_names varlen_embedding_lookup get_varlen_pooling_list create_embedding_dict get_dense_input LayerNormalization dynamic_rnn _dynamic_rnn_loop _best_effort_input_batch_size _infer_state_dtype _like_rnncell_ _rnn_step _transpose_batch_time _reverse_seq VecAttGRUCell QAAttGRUCell _Linear_ dynamic_rnn _dynamic_rnn_loop _best_effort_input_batch_size _infer_state_dtype _like_rnncell_ _rnn_step _transpose_batch_time _reverse_seq SequencePoolingLayer Transformer KMaxPooling BiLSTM AttentionSequencePoolingLayer DynamicGRU BiasEncoding positional_encoding NoMask concat_fun reduce_max reduce_sum div reduce_mean softmax Hash Linear get_stats get_chunk print concat append reduce_mem_usage_sd read_csv tqdm apply map tqdm fillna map tqdm str list map tqdm dict apply zip range keys read_csv values 
len list pad_sequences map tqdm read_csv values tqdm max apply append randint range len apply time sparse_features encoder_sparse_feature_test dense_features negative_sampling print scaler_dense_feature map encoder_sequence data_loader get_fixlen_feature_names truncated_sequence encoder_sparse_feature_train array sequence_features columns DataFrame print str nunique format print memory_usage sum dtypes nunique abs astype mean round sum max nunique columns format print astype sd tqdm sum dtypes Dice issubclass Activation activation sequence_mask concat float32 reduce_mean cast dense batch_normalization sigmoid subtract multiply auxiliary_loss embedding_lookup list concat_fun get_dense_input combined_dnn_input global_variables_initializer name interest_evolution build_input_features Model create_embedding_matrix add_loss append varlen_embedding_lookup get_varlen_pooling_list Input values run build_input_features build_input_features OrderedDict Input maxlen isinstance int print Embedding dimension pow name use_hash append create_embedding_dict append input_from_feature_columns concat_fun range name embedding_name use_hash append name embedding_name use_hash name combiner append append embedding_lookup create_embedding_matrix varlen_embedding_lookup get_varlen_pooling_list get_dense_input concat_fun get_shape concatenate transpose concat rank set_shape shape value all is_sequence get_shape _copy_some_through call_cell assert_same_structure flatten set_shape zip pack_sequence_as cond get_shape tuple merge_with unknown_shape stack set_shape reverse_sequence unstack zip append flatten tuple identity to_int32 value constant output_size _best_effort_input_batch_size tuple while_loop reduce_max _concat flatten shape set_shape zip pack_sequence_as reduce_min state_size AUTO_REUSE as_list embedding_lookup concat variable cos sin expand_dims array range | # DIEN-PIPLINE DIEN-pipline implementation: a simple implementation of a DIEN pipeline, consisting of the following parts: * the model itself * data preprocessing * negative sampling * easy setup: you only need to fill in the config file according to your data 
The pipeline integrates data acquisition, data preprocessing, and sequence negative sampling in a decoupled way, so it can be quickly applied to new datasets. Baselines are implemented for two datasets, Amazon and Taobao. ## Thanks to the following open-source code * code: https://github.com/shenweichen/DeepCTR | 2,706 |

kurapan/CRNN | ['optical character recognition', 'scene text recognition'] | ['An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition'] | train.py eval.py data_generator.py models.py STN/spatial_transformer.py config.py utils.py Generator ValGenerator TrainGenerator evaluate_batch predict_text evaluate create_output_directory set_gpus load_image preprocess_image collect_data evaluate_one loc_net ctc_lambda_func CRNN CRNN_STN create_output_directory get_callbacks instantiate_multigpu_model_if_multiple_gpus load_weights_if_resume_training get_models set_gpus get_generators get_optimizer PredictionModelCheckpoint MultiGPUModelCheckpoint Evaluator create_result_subdir resize_image pad_image SpatialTransformer create_result_subdir print makedirs data_path isfile transpose nb_channels resize_image flip pad_image shape join replace predict evaluate_batch evaluate_one predict_text format print load_image preprocess_image predict_text basename tqdm load_image preprocess_image zeros Model Input get_shape concatenate add Model Input get_shape concatenate add Model Input copy output_dir ValGenerator TrainGenerator Adam SGD join PredictionModelCheckpoint MultiGPUModelCheckpoint TensorBoard prediction_model_cp_filename Evaluator training_model_cp_filename ReduceLROnPlateau tb_log label_len characters optimizer makedirs load_model_path resume_training load_weights gpus multi_gpu_model len int join basename glob max makedirs zeros concatenate resize asarray resize | ## CRNN Keras implementation of Convolutional Recurrent Neural Network for text recognition There are two models available in this implementation. One is based on the original CRNN model, and the other one includes a spatial transformer network layer to rectify the text. However, the performance does not differ very much, so it is up to you which model you choose. 
### Training You can use the Synth90k dataset to train the model, but you can also use your own data. If you use your own data, you will have to rewrite the data-loading code according to the structure of your data. To download the Synth90k dataset, go to this [page](http://www.robots.ox.ac.uk/~vgg/data/text/) and download the MJSynth dataset. Either put the Synth90k dataset in `data/Synth90k` or specify the path to the dataset using the `--base_dir` argument. The base directory should include many subdirectories with Synth90k data, annotation files for training, validation, and test data, a file listing the paths to all images in the dataset, and a lexicon file. Use the `--model` argument to specify which of the two available models you want to use. The default model is the CRNN with the STN layer. See `config.py` for details. Run the `train.py` script to perform training, and set the arguments according to your setup. #### Execution example | 2,707 |

kurapan/EAST | ['optical character recognition', 'scene text detection', 'curved text detection'] | ['EAST: An Efficient and Accurate Scene Text Detector'] | losses.py train.py lanms/.ycm_extra_conf.py lanms/__init__.py eval.py lanms/__main__.py locality_aware_nms.py data_processor.py adamw.py model.py AdamW threadsafe_generator generator restore_rectangle_rbox line_cross_point get_text_file resize_image sort_rectangle count_samples all generate_rbox check_and_validate_polys restore_rectangle line_verticle shrink_poly crop_area fit_line load_data_process val_generator pad_image get_images polygon_area point_dist_to_line load_annotation threadsafe_iter load_data rectangle_from_parallelogram get_images sort_poly resize_image detect main standard_nms weighted_merge nms_locality intersection dice_loss rbox_loss resize_output_shape resize_bilinear EAST_model CustomTensorBoard CustomModelCheckpoint make_image_summary SmallTextWeight lr_decay main ValidationEvaluator GetCompilationInfoForFile IsHeaderFile MakeRelativePathsInFlagsAbsolute FlagsForFile DirectoryOfThisScript merge_quadrangle_n9 glob join format extend polygon_area print zip append clip min astype choice shape int32 zeros range max clip arctan2 polyfit print norm arccos line_verticle fit_line dot line_cross_point sum arctan print argmin argmax concatenate reshape transpose zeros array fillPoly line_cross_point sort_rectangle ones argmin append sum range astype fit_line zip enumerate norm point_dist_to_line min argwhere zeros array rectangle_from_parallelogram replace randint copy shape zeros max shape float resize arange subplots input_size get_text_file resize resize_image abs show ones shape imshow generate_rbox check_and_validate_polys append training_data_path imread format crop_area close shuffle tight_layout choice add_artist astype pad_image zeros get_images Polygon print text set_yticks min float32 set_xticks load_annotation randint array arange input_size get_text_file resize_image shape 
generate_rbox check_and_validate_polys append training_data_path imread format astype shuffle pad_image get_images print float32 load_annotation validation_data_path array format print input_size shape load_annotation get_text_file check_and_validate_polys generate_rbox resize_image imread pad_image get join get_images print close nb_workers array validation_data_path Pool len test_data_path endswith print append walk len int time format zeros_like print reshape fillPoly astype argwhere int32 zeros restore_rectangle merge_quadrangle_n9 enumerate sum argmin imwrite output_dir resize_image open basename gpu_list predict format model_from_json close detect load_weights model_path join read get_images time print reshape makedirs reshape area Polygon append array append weighted_merge list fromarray BytesIO close getvalue shape save generator checkpoint_path multi_gpu_model model variable input_size count_samples CustomTensorBoard CustomModelCheckpoint init_learning_rate SmallTextWeight fit_generator restore_model summary mkdir LearningRateScheduler compile AdamW rmtree load_data EAST_model to_json ValidationEvaluator len append join startswith IsHeaderFile compiler_flags_ exists compiler_flags_ GetCompilationInfoForFile compiler_working_dir_ MakeRelativePathsInFlagsAbsolute DirectoryOfThisScript nms_impl array copy | # EAST: An Efficient and Accurate Scene Text Detector This is a Keras implementation of EAST based on a Tensorflow implementation made by [argman](https://github.com/argman/EAST). The original paper by Zhou et al. is available on [arxiv](https://arxiv.org/abs/1704.03155). + Only RBOX geometry is implemented + Differences from the original paper + Uses ResNet-50 instead of PVANet + Uses dice loss function instead of balanced binary cross-entropy + Uses AdamW optimizer instead of the original Adam The implementation of AdamW optimizer is borrowed from [this repository](https://github.com/shaoanlu/AdamW-and-SGDW). 
The code should run under both Python 2 and Python 3. | 2,708 |

kurowasan/GraN-DAG | ['causal inference'] | ['Gradient-Based Neural DAG Learning'] | gran_dag/utils/topo_sort.py notears/notears/notears.py notears/notears/simple_demo.py notears/main.py dag_gnn/dag_gnn/utils.py gran_dag/utils/penalty.py notears/notears/cppext/eigen/debug/gdb/__init__.py gran_dag/models/base_model.py gran_dag/train.py gran_dag/dag_optim.py gran_dag/data.py gran_dag/plot.py cam/main.py dag_gnn/main.py gran_dag/main.py notears/notears/cppext/setup.py cam/cam.py gran_dag/utils/metrics.py notears/notears/cppext/eigen/scripts/relicense.py gran_dag/models/learnables.py random_baseline/main.py dag_gnn/dag_gnn/train.py gran_dag/utils/save.py main.py dag_gnn/dag_gnn/modules.py notears/notears/utils.py notears/notears/cppext/eigen/debug/gdb/printers.py CAM_with_score message_warning main _print_metrics main _print_metrics MLPDDecoder SEMDecoder SEMEncoder MLPDecoder MLPDEncoder MLPEncoder MLPDiscreteDecoder evaluate_score update_optimizer _h_A retrain stau dag_gnn gauss_sample_z_new matrix_poly load_numpy_data binary_accuracy preprocess_adj preprocess_adj_new sample_gumbel get_tril_indices my_normalize normalize_adj simulate_population_sample simulate_sem kl_categorical sparse_to_tuple to_2d_idx kl_categorical_uniform gauss_sample_z get_correct_per_bucket count_accuracy compute_BiCScore A_connect_loss sample_logistic get_buckets get_offdiag_indices kl_gaussian list_files read_BNrep my_softmax get_triu_offdiag_indices load_data_discrete get_triu_indices get_tril_offdiag_indices preprocess_adj_new1 A_positive_loss kl_gaussian_sem get_minimum_distance nll_catogrical gumbel_softmax_sample binary_concrete get_correct_per_bucket_ isnan load_data simulate_random_dag compute_local_BiCScore encode_onehot binary_concrete_sample nll_gaussian gumbel_softmax compute_jacobian_avg TrExpScipy compute_constraint is_acyclic compute_A_phi DataManagerFile file_exists main _print_metrics plot_learning_curves plot_adjacency plot_learning_curves_retrain plot_weighted_adjacency 
cam_pruning_ pns pns_ cam_pruning retrain train to_dag BaseModel LearnableModel_NonLinGaussANM LearnableModel_NonLinGauss LearnableModel edge_errors shd compute_penalty compute_group_lasso_l2_penalty load dump np_to_csv generate_complete_dag main _print_metrics notears_live notears retrain LinearMasked notears_simple simulate_sem count_accuracy simulate_random_dag simulate_population_sample EigenQuaternionPrinter _MatrixEntryIterator EigenSparseMatrixPrinter lookup_function register_eigen_printers build_eigen_dictionary EigenMatrixPrinter update sample_random_dag main get_score CAM_with_score SHD_CPDAG score exp_path test_samples SHD save DataFrame CAM sel_method prune_method metrics_callback train_samples plot_adjacency i_dataset cutoff predict dump edge_errors SID variable_sel float DataManagerFile join time data_path pruning numpy to_numpy_matrix makedirs print items list pns num_neighbors abs seed cam_pruning_ ones pns_ double pns_thresh astype cam_pruning retrain manual_seed dag_gnn random_seed set_default_tensor_type print min eye matrix_poly trace prox_plus abs param_groups log10 eval c_A save_folder exp_path load_numpy_data SGD LBFGS unsqueeze k_max_iter data_variable_size tensor cuda log prior open list StepLR lambda_A ones Adam load_state_dict DoubleTensor dynamic_graph double range dump format inf get_triu_offdiag_indices item isoformat h_tol flush load join time int get_tril_offdiag_indices print Variable clone now parameters load_folder eye _h_A encode_onehot zeros train epochs array makedirs evaluate_score save_folder exp_path load_numpy_data SGD LBFGS unsqueeze k_max_iter data_variable_size cuda log prior open list StepLR ones Adam graph_threshold load_state_dict DoubleTensor dynamic_graph double range dump format get_triu_offdiag_indices isoformat load join time int get_tril_offdiag_indices print Variable now parameters load_folder eye encode_onehot zeros epochs array makedirs int permutation DiGraph ones astype extend choice dot uniform eye append 
zeros float round range tril exponential normal list gumbel dot topological_sort predecessors sin zeros to_numpy_array range dot sqrt pinv eye T setdiff1d concatenate tril intersect1d float max flatnonzero len contiguous softmax Variable binary_concrete_sample float data sample_logistic Variable size cuda is_cuda float float size cuda double sample_gumbel is_cuda data view Variable size scatter_ gumbel_softmax_sample cuda zeros max is_cuda exp size double range double sum double join iglob data_dir loadtxt search dict DiGraph endswith simulate_sem FloatTensor read_BNrep DataLoader TensorDataset simulate_random_dag amax DiGraph endswith simulate_sem read_BNrep DataLoader TensorDataset simulate_random_dag x_dims Tensor from_numpy_matrix Tensor DataLoader TensorDataset floor float array get list map set array ones t eye ones t eye ones t eye zeros zeros transpose sum matmul min arange min append numpy max range numpy append sum range len numpy append sum range len log sum squeeze exp sum log size range exp pi from_numpy pow div log abs matmul pow sum diag transpose double transpose double transpose double inverse size norm double range to_tuple range isinstance len div double range sum where compute_local_BiCScore append sum range values tuple log dict sum prod range amax num_vars apply matmul range eye num_vars sum unsqueeze eye abs enumerate einsum detach num_vars unbind get_parameters compute_log_likelihood mean sample zeros range hid_dim num_layers LearnableModel_NonLinGaussANM LearnableModel_NonLinGauss reset_params to_dag file_exists num_vars format lr load train gpu subplots arange clf linspace plotting_callback tick_params max list set_yscale set_xlabel twinx savefig legend range plot tight_layout interp join set_ylabel array len set_aspect join subplots set_title clf savefig heatmap join list subplots plot print set_xlabel tight_layout clf savefig legend array range set_ylim len join list subplots plot set_xlabel tight_layout clf savefig legend array range 
set_ylim len str SelectFromModel format concatenate print num_samples astype copy ExtraTreesRegressor sample float range fit join time format dump print plot_adjacency pns_ save numpy exists makedirs exp_path stop_crit_win zero_grad SGD plot_weighted_adjacency numpy save num_train_iter exists metrics_callback lambda_init mu_init plot_adjacency plot_learning_curves RMSprop append range dump format inf astype eval item sample join time get_parameters backward print num_samples float32 parameters get_w_adj compute_constraint zeros step train_batch_size makedirs SHD_CPDAG exp_path save exists metrics_callback jac_thresh plot_adjacency dump edge_errors SID eval sample float join time get_parameters print num_samples shd_metric t get_w_adj numpy makedirs join str launch_R_script format remove concatenate np_to_csv dict realpath dirname SHD_CPDAG exp_path copy_ save exists metrics_callback cam_pruning_ plot_adjacency dump edge_errors SID eval sample float type join time get_parameters print num_samples shd_metric Tensor numpy makedirs plot_learning_curves_retrain zero_grad num_train_iter exists metrics_callback RMSprop append to_dag inf patience eval item sample deepcopy get_parameters backward train step train_batch_size sum retrieve_adjacency_matrix uuid4 str join to_csv dirname DataFrame DiGraph copy all_topological_sorts append enumerate lambda1 max_iter matmul sum max_iter_retrain w_threshold notears h_tol minimize_subproblem astype shape h_func float_ range add_tools F_func stream flatten h_func push_notebook show minimize_subproblem rect shape ray range patch gridplot tile ColumnDataSource line HoverTool figure LinearColorMapper Adagrad model num_samples LinearMasked _h minimize shape range x append strip_typedefs search tag target type permutation uniform zeros float range uniform sample_random_dag | # Gradient-Based Neural DAG Learning

This code was written by the authors of the ICLR 2020 submission: "Gradient-Based Neural DAG Learning".

Our implementation is in PyTorch, but some functions rely on the [Causal Discovery Toolbox](https://diviyan-kalainathan.github.io/CausalDiscoveryToolbox/html/index.html), which relies partly on the R programming language.

## Run the code

To use our implementation of GraN-DAG, install Singularity (instructions: [https://www.sylabs.io/guides/3.0/user-guide/installation.html](https://www.sylabs.io/guides/3.0/user-guide/installation.html)) and run the code in our container (download it here: [https://drive.google.com/file/d/1BOzKMWOgV-IGO9yIz_gq-UrgvME82zxd/view?usp=sharing](https://drive.google.com/file/d/1BOzKMWOgV-IGO9yIz_gq-UrgvME82zxd/view?usp=sharing)). Use start_example.sh (after updating the paths) to launch the different methods (GraN-DAG, DAG-GNN, NOTEARS, CAM).

| 2,709 |

kushalchauhan98/dynamic-meta-embeddings | ['word embeddings'] | ['Dynamic Meta-Embeddings for Improved Sentence Representations'] | embedders.py models.py utils.py modules.py main.py SingleEmbedder ConcatEmbedder DMEmbedder CDMEmbedder Model SNLIModel BiLSTM_Max Classifier CustomVocab FastTextCC | # Dynamic Meta-Embeddings for Improved Sentence Representations <img src="https://upload.wikimedia.org/wikipedia/commons/9/96/Pytorch_logo.png" width="12%"> [](https://opensource.org/licenses/MIT) This repository contains my PyTorch implementation of the paper: **Dynamic Meta-Embeddings for Improved Sentence Representations**<br> Douwe Kiela, Changhan Wang and Kyunghyun Cho<br> *Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing*<br> [[arXiv](https://arxiv.org/abs/1804.07983)] [[GitHub](https://github.com/facebookresearch/DME)] ### Abstract While one of the first steps in many NLP systems is selecting what pre-trained word embeddings to use, we argue that such a step is better left for neural networks to figure out by themselves. To that end, we introduce dynamic meta-embeddings, a simple yet effective method for the supervised learning of embedding ensembles, which leads to state-of-the-art performance within the same model class on a variety of tasks. We subsequently show how the technique can be used to shed new light on the usage of word embeddings in NLP systems. ### Usage | 2,710 |

kushaltirumala/callibratable_style_consistency_flies | ['imitation learning'] | ['Learning Calibratable Policies using Programmatic Style-Consistency'] | eval_model.py lib/distributions/multinomial.py transforming_data/transform_data.py util/datasets/fruit_fly/__init__.py util/environments/fruit_fly.py util/datasets/bball/core.py lib/distributions/normal.py lib/models/ctvae_info.py scripts/check_dynamics_loss.py scripts/sample_ctvae_two_model.py train.py run.py lib/distributions/bernoulli.py util/datasets/__init__.py util/datasets/bball/label_functions/__init__.py util/datasets/bball/label_functions/heuristics.py scripts/eval_cop_policy.py lib/distributions/__init__.py util/environments/bball.py lib/models/ctvae_style.py lib/distributions/core.py lib/models/ctvae_mi.py util/datasets/fruit_fly/label_functions/__init__.py scripts/visualize_samples_ctvae.py util/logging/core.py util/logging/__init__.py lib/models/__init__.py run_single.py scripts/compute_stylecon_ctvae.py util/environments/core.py util/environments/__init__.py lib/models/core.py util/datasets/core.py util/datasets/bball/__init__.py lib/distributions/dirac.py util/datasets/fruit_fly/label_functions/heuristics.py scripts/sample_ctvae.py lib/models/ctvae.py util/datasets/fruit_fly/core.py evaluate_model run_config run_epoch start_training Bernoulli Distribution Dirac Multinomial Normal BaseSequentialModel CTVAE CTVAE_info CTVAE_mi CTVAE_style get_model_class check_selfcon_tvaep_dm compute_stylecon_ctvae visualize_samples_ctvae get_classification_loss visualize_samples_ctvae visualize_samples_ctvae visualize_samples_ctvae overlap_interval LabelFunction TrajectoryDataset load_dataset normalize BBallDataset _set_figax unnormalize Displacement AverageSpeed Curvature Destination Direction normalize unnormalize FruitFlyDataset _set_figax Displacement GroundTruth AverageSpeed Curvature Destination Direction BBallEnv BaseEnvironment generate_rollout FruitFlyEnv load_environment LogEntry endswith load_weights 
array listdir fsdecode join format start_training print copy save_dir makedirs str optimize model print to losses itemize absorb requires_environment average eval LogEntry requires_labels train enumerate get_model_class label_dim requires_environment DataLoader save round seed filter_and_load_state_dict active_label_functions dirname action_dim load_dataset append load_environment to sum run_epoch range state_dict format num_parameters prepare_stage lower manual_seed requires_labels stage load join time pop isinstance print state_dim summary randint get_model_class DataLoader clf set_major_formatter abs filter_and_load_state_dict model_class active_label_functions view transpose ylabel title savefig load_dataset append format PercentFormatter mean lower xlim enumerate load join print xlabel hist median train numpy len get_model_class categorical DataLoader linspace values filter_and_load_state_dict model_class active_label_functions list num_values ones name transpose tolist ylabel randperm title savefig iter load_dataset load_environment next sum cat format plot size close eval lower item label enumerate load join items int print xlabel num_samples zeros fill_between numpy load_model get_model_class DataLoader unsqueeze filter_and_load_state_dict model_class active_label_functions transpose iter load_dataset load_environment next format mean eval lower load join print repeat type arange categorical around linspace save max output_dim ones min zeros lower int int join set_xlim add_subplot imshow set_visible figure resize imread set_ylim get_obs is_recurrent act update_hidden unsqueeze append step range | # Learning Calibratable Policies using Programmatic Style-Consistency [(arXiv)](https://arxiv.org/abs/1910.01179) ## Code Code is written in Python 3.7.4 and [PyTorch](https://pytorch.org/) v.1.0.1. Will be updated for PyTorch 1.3 in the future. 
## Usage Train models with: `$ python run_single.py -d <device id> --config_dir <config folder name>` If no device is specified, the CPU is used by default. See the JSON files in `configs/` for example config files. ### Test Running `$ python run_single.py --config_dir test --test_code` should complete without errors. ## Scripts | 2,711 |

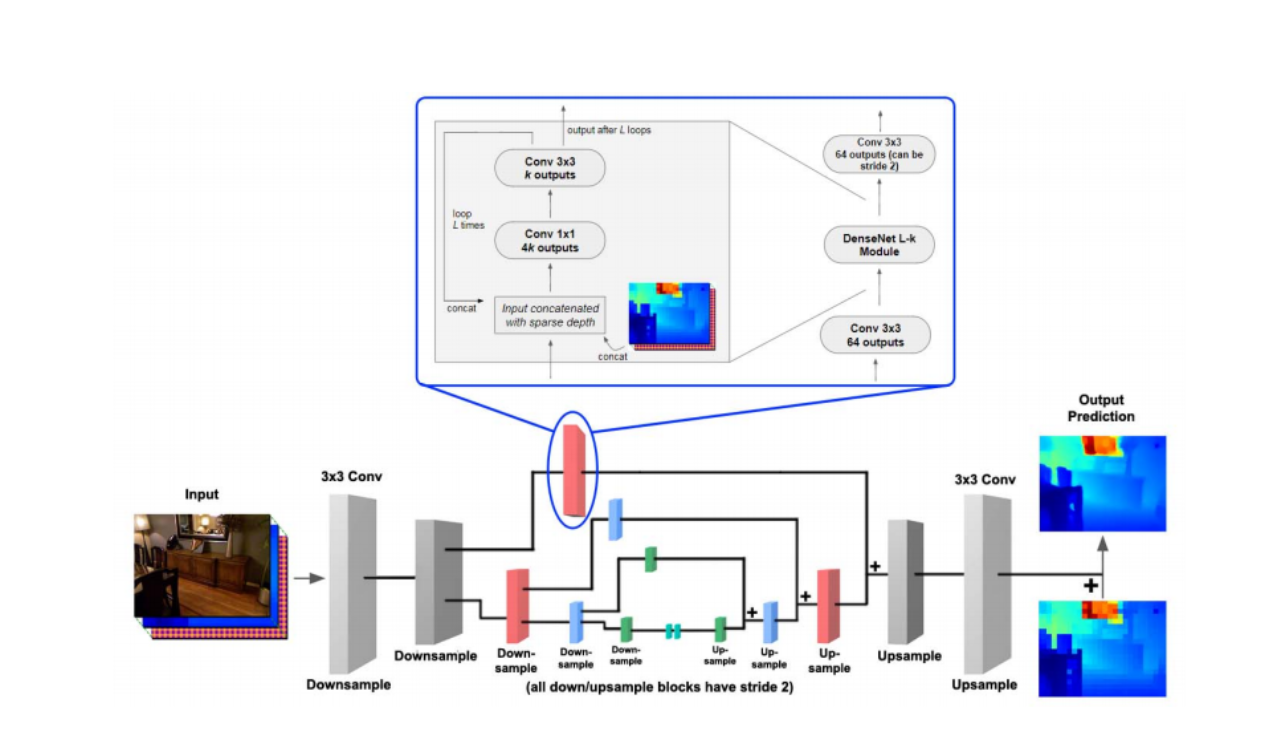

kvmanohar22/sparse_depth_sensing | ['depth estimation', 'monocular depth estimation'] | ['Estimating Depth from RGB and Sparse Sensing'] | tests/test_generate_mask.py utils/utils.py nnet/densenet.py nnet/d3.py tests/test_sparse_inputs.py utils/options.py D3 DenseNet_conv main main options path_exists generate_mask sparse_inputs show File imshow generate_mask figure time format print transpose add_subplot sparse_inputs arange print argmin square shape sqrt zip zeros ravel array range enumerate zeros astype int32 | # Estimating Depth from RGB and Sparse Sensing Paper Link: [arxiv](https://arxiv.org/abs/1804.02771) # Requirements - Ubuntu (Tested only on 16.04) - Python 3 - Chainer - ChainerCV - cupy - [imgplot](https://github.com/musyoku/imgplot/) # TODO | 2,712 |

kvsphantom/multitask-unet-bss | ['music source separation'] | ['Multi-channel U-Net for Music Source Separation'] | models/wrapper.py train/cunet.py utils/utils.py eval/eval_metrics.py test/dwa.py train/spec_channel_unet.py train/grad_based.py train/unit_weighted.py test/grad_based.py train/energy_based.py eval/stitch_audio.py loss/losses.py train/spec_channel_unet_nomasks.py test/energy_based.py utils/EarlyStopping.py dataset/downsample_gt.py train/baseline.py utils/plots/energy_distrib_plots.py test/baseline.py test/cunet.py dataset/dataloaders.py utils/plots/generate_spectrograms.py settings.py test/unit_weighted.py dataset/compute_energy.py dataset/preprocessing.py models/cunet.py utils/plots/tables.py dataset/filter_musdb_split.py utils/plots/results.py test/spec_channel_unet.py train/dwa.py set_path UnetInputUnfiltered CUnetInput UnetInput split_sources _stft get_signal_energy save_chunks get_sources gradient_loss SingleSourceDirectLoss EnergyBasedLossPowerP IndividualLosses SpecChannelUnetLoss UnitWeightedLoss GradientLoss CUNetLoss EnergyBasedLossInstantwise EnergyBasedLossPowerPMask CUNet TransitionBlock center_crop ConvolutionalBlock DenseBlock isnumber crop AtrousBlock CUNetWrapper Wrapper SpecChannelUnetNoMaskWrapper main Baseline main CUNetTest DWA main main EnergyBased main GradBased main SpecChannelUnet main UnitWeighted main Baseline main CUNetTrain DWA main main EnergyBased main GradBased main SpecChannelUnet main SpecChannelUnetNoMask main UnitWeighted EarlyStopping save_spectrogram power_to_db get_conditions create_folder amplitude_to_db setup_logger warpgrid rescale istft_reconstruction linearize_log_freq_scale plot_spectrogram makedirs list partial resample map stack int concatenate reshape shape max size cuda join str int create_folder write_wav get_signal_energy zeros round enumerate len abs gradient int size round load items list replace UNet OrderedDict Baseline load_state_dict train Wrapper CUNetWrapper CUNetTest CUNet DWA EnergyBased 
GradBased SpecChannelUnet UnitWeighted CUNetTrain SpecChannelUnetNoMaskWrapper SpecChannelUnetNoMask setFormatter getLogger addHandler StreamHandler Formatter setLevel FileHandler umask makedirs ref size expand log10 tensor max callable detach ref pow tensor abs callable min max power astype float32 linspace meshgrid zeros log complex exp astype istft grid_sample make_grid add_images amplitude_to_db rescale unsqueeze cpu rescale amplitude_to_db save list arange ones append zeros max | # Multi-channel Unet for Music Source Separation **Note 1**: The pre-trained weights of most of the models used in these experiments are made available here: [https://shorturl.at/aryOX](https://shorturl.at/aryOX) **Note 2**: For demos, visit our [project webpage](https://vskadandale.github.io/multi-channel-unet/). #### <ins>Usage Instructions</ins>: This repository is organized as follows: The data preprocessing steps are covered under the folder: code/dataset. ``` └── dataset ├── compute_energy.py ├── dataloaders.py | 2,713 |

kylemin/Gaze-Attention | ['action recognition', 'egocentric activity recognition'] | ['Integrating Human Gaze into Attention for Egocentric Activity Recognition'] | pytorch_i3d.py dataset.py utils.py main.py model.py transform igazeDataset trainingSampler load_model test print_args load_weights adjust_lr main train load_weights_and_set_opt I3D_IGA_attn I3D_IGA_base I3D_IGA_gaze InceptionI3d MaxPool3dSamePadding InceptionModule Unit3D _pointwise_loss compute_cross_entropy compute_gradients_gaze get_accuracy sample_gumbel make_hard_decision div permute datasplit DataLoader DataParallel device datapath load_weights_and_set_opt seed load_model parse_args to manual_seed_all stride test manual_seed is_available trange crop join print print_args isfile igazeDataset train weight mode I3D_IGA_attn I3D_IGA_base I3D_IGA_gaze load join items print size copy_ isfile state_dict replace SGD load_weights append weight print datasplit wd lr b ngpu weight test_sparse mode param_groups clip_grad_norm_ zero_grad compute_gradients_gaze save kl_div tensor max exp view model_base sum range state_dict eps LongTensor log_softmax compute_cross_entropy mean zip max_pool2d model_gaze crop enumerate join time iters backward print makedirs adjust_lr zeros step make_hard_decision time print confusion_matrix eval get_accuracy append sum range len rand shape sample_gumbel view scatter_ model_attn lambd | # Gaze-Attention Integrating Human Gaze into Attention for Egocentric Activity Recognition (WACV 2021)\ [**paper**](https://openaccess.thecvf.com/content/WACV2021/html/Min_Integrating_Human_Gaze_Into_Attention_for_Egocentric_Activity_Recognition_WACV_2021_paper.html) | [**presentation**](https://youtu.be/k-VUi54GjXQ) ## Overview It is well known that human gaze carries significant information about visual attention. In this work, we introduce an effective probabilistic approach to integrate human gaze into spatiotemporal attention for egocentric activity recognition. 
Specifically, we propose to reformulate the discrete training objective so that it can be optimized using an unbiased gradient estimator. It is empirically shown that our gaze-combined attention mechanism leads to a significant improvement of activity recognition performance on egocentric videos by providing additional cues across space and time. | Method | Backbone network | Acc(%) | Acc\*(%) | |:----------------------|:------------------:|:-------:|:-------:| | [Li et al.](https://openaccess.thecvf.com/content_ECCV_2018/papers/Yin_Li_In_the_Eye_ECCV_2018_paper.pdf) | I3D | 53.30 | - | | [Sudhakaran et al.](http://bmvc2018.org/contents/papers/0756.pdf) | ResNet34+LSTM | - | 60.76 | | [LSTA](https://openaccess.thecvf.com/content_CVPR_2019/papers/Sudhakaran_LSTA_Long_Short-Term_Attention_for_Egocentric_Action_Recognition_CVPR_2019_paper.pdf) | ResNet34+LSTM | - | 61.86 | | 2,714 |

kylie-box/word_prisms | ['word embeddings'] | ['Learning Efficient Task-Specific Meta-Embeddings with Word Prisms'] | logger.py convert_as_word_prism.py embedding_models.py evaluation_models.py result.py train_text_classification.py load_as_word_prism.py dataset.py train_sequence_labelling.py setup.py config.py utils.py constants.py dictionary.py create_directory load_config read_rc save_dictionary load_embeddings read_given_dict save_embeddings main TextClassificationLoader SNLIIter TextClassificationIter NLIDatasetLoader SNLIDataset get_emb_key get_preprocessing Dictionary WordPrism EmbeddingModel Embeddings EmbWrapper SysLvlLC BaseEvaluationModel WordLvlLC NLIModel SeqLabModel ContextualizedLvlLC TextClassificationModel ConcatBaseline load_embwrapper load_word_prism create_logger LogFormatter ResultsHolder ResultsObtainedError get_model get_optimizer_scheduler evaluate get_dataset data_loading_prep report_args set_seeds main write_prediction_files train get_parser get_model get_optimizer_scheduler evaluate get_dataset data_loading_prep report_args set_seeds main write_prediction_files train get_parser nn_init norm cooc_path emb_path init_weight pmi get_dtype load_shard get_device normalize corpus_path count_param_num join chdir strftime output_dir makedirs int join format print endswith next sum array open print save_embedding_from_V dirname dict_path print set_dictionary_path Dictionary save emb_path save_dictionary save_embeddings load_embeddings join int format load_embwrapper embeds_root min dictionary shape WordPrism get_device startswith info load_embedding normalize truncation range append len setFormatter getLogger addHandler LogFormatter StreamHandler DEBUG setLevel INFO FileHandler seed manual_seed_all manual_seed ReduceLROnPlateau Adam SGD train_test_split SequenceLoader sort_by_length padding_id feed_full_seq_ds get_optimizer_scheduler save_model model clip_grad_norm_ zero_grad verbose add_ds_results output_dir save defaultdict id2label tolist append 
count_param_num grad_clip range early_stop_patience update epoch format orthogonalize get_id orthogonal info join task backward isnan parameters monitor_word ResultsHolder beta write_prediction_files step loss task format info join add_argument dirname abspath ArgumentParser serialize report_args create_logger n_classes set_seeds output_dir load_word_prism labels_to_idx id2labels mb_size get_dataset dictionary data_loading_prep getattr train_test_split to dim sst_labels format write_logger orthogonal info train join embedding get_x_y get_device evaluation get_model callable len vars SNLIIter SequenceLabellingIter TextClassificationIter data max premise model text label hypothesis set_dataset_mapping premise vocab train_iter label enumerate text hypothesis itos vocab task join join join matrix isinstance Tensor ndarray isinstance xavier_uniform_ kaiming_uniform_ orthogonal_ isinstance Sequential init_weight named_parameters getattr uniform_ split | # Word Prisms Word embeddings are trained to predict word cooccurrence statistics, which leads them to possess different lexical properties (syntactic, semantic, synonymy, hypernymy, etc.) depending on the notion of context defined at training time. These properties manifest when querying the embedding space for the most similar vectors, and when used at the input layer of deep neural networks trained to solve NLP problems. Meta-embeddings combine multiple sets of differently trained word embeddings, and have been shown to successfully improve intrinsic and extrinsic performance over equivalent models which use just one set of source embeddings. We introduce *word prisms*: a simple and efficient meta-embedding method that learns to combine source embeddings according to the task at hand. Word prisms learn orthogonal transformations to linearly combine the input source embeddings, which allows them to be very efficient at inference time. 
We evaluate word prisms in comparison to other meta-embedding methods on six extrinsic evaluations and observe that word prisms offer improvements in performance on all tasks. ## Requirements * Python 3.6+ * NumPy 1.18.1 | 2,715 |

kymatio/phaseharmonics | ['time series'] | ['Phase Harmonic Correlations and Convolutional Neural Networks'] | kymatio/datasets.py kymatio/caching.py code_rec2d/cartoond/test_rec_bump_chunkid_lbfgs2_gpu_N64.py code_rec2d/compute_coeff.py code_rec2d/cartoond/test_rec_bump_chunkid_lbfgs2_cpu_N64.py kymatio/phaseharmonics2d/backend/backend_torch.py kymatio/version.py kymatio/__init__.py kymatio/phaseharmonics2d/backend/backend_common.py code_rec2d/boat/test_rec_bump_chunkid_lbfgs_gpu_N256_ps2par.py scatnet-0.2a/ScatNetLight/svm_robust/libsvm-compact-0.1/tools/grid.py kymatio/phaseharmonics2d/backend/backend_utils.py scatnet-0.2a/ScatNetLight/svm_robust/libsvm-compact-0.1/tools/easy.py kymatio/phaseharmonics2d/utils.py code_rec2d/cartoond/test_rec_bump_chunkid_lbfgs_gpu_N64.py scatnet-0.2a/ScatNetLight/svm_robust/libsvm-compact-0.1/tools/checkdata.py code_rec2d/cartoond/test_rec_bump_chunkid_lbfgs_gpu_N256_ps2par.py kymatio/phaseharmonics2d/backend/backend_skcuda.py kymatio/phaseharmonics2d/phase_harmonics_k_bump_chunkid_pershift.py kymatio/phaseharmonics2d/filter_bank.py kymatio/phaseharmonics2d/phase_harmonics_k_bump_chunkid_simplephase.py scatnet-0.2a/ScatNetLight/svm_robust/libsvm-compact-0.1/tools/subset.py kymatio/phaseharmonics2d/backend/__init__.py setup.py kymatio/phaseharmonics2d/__init__.py kymatio/phaseharmonics2d/phase_harmonics_k_bump_chunkid_scaleinter.py code_rec2d/compute_coeff_ps2.py grad_obj_fun fun_and_grad_conv callback_print obj_fun obj_func closure obj_fun obj_func closure obj_fun grad_obj_fun fun_and_grad_conv callback_print obj_fun grad_obj_fun fun_and_grad_conv callback_print obj_fun find_cache_base_dir get_cache_dir _download read_xyz fetch_fsdd find_datasets_base_dir fetch_qm7 get_dataset_dir _pca_align_positions convert_complexNp_floatT periodize_filter_fft filter_bank filter_bank_real haar_2d gabor_2d morlet_2d PHkPerShift2d PhkScaleInter2d PhaseHarmonics2d fft2_c2c compute_padding ifft2_c2r fft2 periodic_signed_dis periodic_dis ifft2_c2c 
mul SubInitSpatialMeanC PhaseExpLL PhaseHarmonicsIso conjugate StablePhase SubInitMeanIso PhaseHarmonics2 unpad fft iscomplex PhaseExp_par PhaseExpSk real SubInitMean PeriodicShift2D ones_like Pad log2_pows SubInitSpatialMeanCinFFT PhaseHarmonic pows DivInitStd SubInitSpatialMeanR StablePhaseExp modulus DivInitStdQ0 Modulus SubsampleFourier imag getDtype cdgmmMulcu load_kernel cdgmmMul cdgmm HookDetectNan NanError HookPrintName MaskedFillZero count_nans is_long_tensor main my_float err process_options LocalWorker redraw calculate_jobs permute_sequence TelnetWorker SSHWorker main WorkerStopToken range_f Worker exit_with_help main process_options sum wph_op synchronize to range len time grad_obj_fun reshape print requires_grad_ append tensor to range backward range zero_ obj_fun obj_func time zero_grad print zero_ requires_grad_ grad obj_fun cuda get join find_cache_base_dir exists makedirs get expanduser join makedirs exists find_datasets_base_dir urlopen str join print get_dataset_dir getstatusoutput int list zeros_like map zip append zeros float array range split zeros_like astype copy dot eigh zip load join _download savez read_xyz get_cache_dir get_dataset_dir _pca_align_positions exists int periodize_filter_fft FloatTensor fft2 pi stack gabor_2d haar_2d append range morlet_2d zeros convert_complexNp_floatT fft2 complex64 load join format print get_cache_dir int ones multiply float32 complex64 shape zeros range sum gabor_2d exp fftshift cos float32 complex64 dot sin zeros array zeros_like clone append stack mul range append stack mul range stack imag real norm ifft size irfft compile_with_cache substitute DoubleTensor isinstance FloatTensor size new view expand_as print print format pop int format err print len exit open my_float range split stdin join list format print exit map append split append pop int append len all sort write encode round max flush permute_sequence append float range_f range len appendleft get process_options append getuser write 
calculate_jobs redraw put getpass start Queue flush print format exit int exit_with_help len Label sort close label float | PhaseHarmonics: Wavelet phase harmonic transform in PyTorch ====================================== This is an implementation of the wavelet phase harmonic transform based on Kymatio (in the Python programming language). It is suitable for audio and image analysis and modeling. ### Related Publications * [2019] Stéphane Mallat, Sixin Zhang, Gaspar Rochette. Phase Harmonic Correlations and Convolutional Neural Networks. [(paper)](https://arxiv.org/abs/1810.12136). * [2020] E. Allys, T. Marchand, et al. New Interpretable Statistics for Large Scale Structure Analysis and Generation. [(paper)](https://github.com/Ttantto/wph_quijote). [(code)](https://github.com/Ttantto/wph_quijote). * [2021] Sixin Zhang, Stéphane Mallat. Maximum Entropy Models from Phase Harmonic Covariances. [(paper)](https://arxiv.org/abs/1911.10017). [(code)](https://github.com/sixin-zh/kymatio_wph). * [2021] Bruno Regaldo-Saint Blancard, Erwan Allys, François Boulanger, François Levrier, Niall Jeffrey. A new approach for the statistical denoising of Planck interstellar dust polarization data. [(paper)](https://arxiv.org/abs/2102.03160). [(code)](https://github.com/bregaldo/pywph). * [2022] Brochard, Zhang, Mallat. Generalized Rectifier Wavelet Covariance Model For texture Synthesis. [(paper)](https://openreview.net/pdf?id=ziRLU3Y2PN_). [(code)](https://github.com/abrochar/wavelet-texture-synthesis). * [2022] Antoine Brochard, Bartłomiej Błaszczyszyn, Sixin Zhang, Stéphane Mallat. Particle gradient descent model for point process generation. [(paper)](https://link-springer-com.gorgone.univ-toulouse.fr/article/10.1007/s11222-022-10099-x). [(code)](https://github.com/abrochar/pp_syn) | 2,716 |

kyunghyuncho/strawman | ['sentiment analysis'] | ['Strawman: an Ensemble of Deep Bag-of-Ngrams for Sentiment Analysis'] | predict.py extract_dictionary.py trainer.py main extract_ngrams main main BBNet data_iterator range set OrderedDict list keys values Softmax data_iterator argmax from_numpy append sum options format concatenate astype softmax enumerate print Variable split nn train numpy array len zero_grad step Adam BBNet range eval manual_seed float net criterion backward parameters cpu | # strawman | 2,717 |

kzkadc/alocc_mnist | ['outlier detection', 'one class classifier', 'anomaly detection'] | ['Adversarially Learned One-Class Classifier for Novelty Detection'] | chainer/train.py chainer/model.py pytorch/model.py pytorch/train.py ExtendedClassifier Generator EvalModel Discriminator GANUpdater ext_save_img get_mnist_num main parse_args get_discriminator get_generator Detector GANTrainer save_img get_mnist_num evaluate_accuracy plot_metrics main print_logs parse_args mkdir stack LogReport Trainer get_mnist_num Path SerialIterator ConcatenatedDataset run use setup ones Generator Adam pprint add_hook Discriminator Evaluator ProgressBar PlotReport mkdir PrintReport GANUpdater EvalModel result_dir ExtendedClassifier ext_save_img TupleDataset user_gpu_mode extend snapshot_object WeightDecay zeros to_gpu len add_argument pprint ArgumentParser main vars DataLoader evaluate_accuracy ITERATION_COMPLETED device print_logs GANTrainer to add_event_handler set plot_metrics print Engine save_img parameters MNIST mkdir | # ALOCC: An Anomaly Detection Method Using Deep Learning Sabokrou, et al. "Adversarially Learned One-Class Classifier for Novelty Detection", The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 3379-3388 https://arxiv.org/abs/1802.09088 ## Setup (Chainer) This uses Chainer and OpenCV. Installation: ```bash $ sudo pip install chainer opencv-python ``` ## Setup (PyTorch) | 2,718 |

l294265421/SCAN | ['sentiment analysis', 'graph attention'] | ['Sentence Constituent-Aware Aspect-Category Sentiment Analysis with Graph Attention Networks'] | nlp_tasks/bert_keras/tokenizer.py nlp_tasks/utils/attention_visualizer.py nlp_tasks/utils/vector_utils.py nlp_tasks/utils/event_extractor.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/cnn_encoder_seq2seq.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/acd_and_sc_bootstrap_pytorch_sentence_constituency.py nlp_tasks/common/common_path.py nlp_tasks/utils/http_utils.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/my_allennlp_trainer_epoch.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/acd_and_sc_train_templates_pytorch.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/my_allennlp_trainer.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/pytorch_models.py nlp_tasks/utils/corenlp_sentence_parser.py nlp_tasks/utils/pdf_metadata_reader.py nlp_tasks/utils/stanfordnlp_sentence_constituency_parser.py nlp_tasks/bert_keras/data_loader.py nlp_tasks/utils/text_segmentation.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/allennlp_callback.py nlp_tasks/utils/word_processor.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/allennlp_metrics.py nlp_tasks/utils/es_utils.py nlp_tasks/absa/aspect_category_detection_and_sentiment_classification/acd_and_sc_data_reader.py nlp_tasks/bert_keras/data_adapter.py nlp_tasks/utils/annotation_utils.py original_data/absa/ABSA_DevSplits/reader.py nlp_tasks/absa/data_adapter/data_object.py nlp_tasks/absa/sentence_analysis/constituency_parser.py nlp_tasks/utils/file_utils.py nlp_tasks/utils/create_graph.py nlp_tasks/utils/my_corenlp.py nlp_tasks/utils/sentence_segmenter.py nlp_tasks/utils/tokenizer_wrappers.py nlp_tasks/utils/argument_utils.py nlp_tasks/utils/datetime_utils.py 
nlp_tasks/utils/tokenizers.py nlp_tasks/utils/corenlp_factory.py nlp_tasks/absa/entities/ModelTrainTemplate.py AcdAndScDatasetReaderConstituencyBert TextInAllAspectSentimentOut TextInAllAspectSentimentOutSentenceConstituency AcdAndScDatasetReaderConstituencyBertSingle SentenceConsituencyAwareModelBertSingle SentenceConsituencyAwareModelBert TextInAllAspectSentimentOutTrainTemplate BaseSentenceConsituencyAwareModel SentenceConsituencyAwareModel EstimateCallback FixedLossWeightCallback SetLossWeightCallback Callback BinaryF1 CnnEncoder Trainer Trainer TextInAllAspectSentimentOutEstimator DglGraphAverage TextInAllAspectSentimentOutEstimatorAll DglGraphAttentionForAspectCategory GATForAspectCategory DglGraphConvolution ConstituencyBertSingle AverageAttention MultiHeadGATLayer Estimator DotProductAttentionInHtt GAT AttentionInCan BernoulliAttentionInHtt GATLayer NodeApplyModule LocationMaskLayer AttentionInHtt DglGraphConvolutionForAspectCategory GatEdge TextInAllAspectSentimentOutModel DglGraphAverageForAspectCategory SentenceConsituencyAwareModelV8 ConstituencyBert DglGraphAttentionForAspectCategoryWithDottedLines Semeval2016Task5Sub1 AbsaSentence AsgcnData AspectCategory Semeval2015Task12 load_csv_data Semeval2016Task5Sub2 AspectTerm Semeval2014Task4Rest Semeval2014Task4RestDevSplits BaseDataset AbsaDocument SemEval141516LargeRest Semeval2015Task12Rest Semeval2016Task5RestSub1 Text AbsaText Semeval2014Task4 MAMSACSA get_dataset_class_by_name ModelTrainTemplate SerialNumber ConstituencyTreeNode SingleSentenceGenerator SentencePairGenerator convert_data get_pair_data_from_file get_data_from_file EnglishTokenizer TokenizerReturningSpace get_task_data_dir split_sentence my_bool plot_multi_attentions_of_sentence_backup plot_attentions plot_attentions_pakdd extract_numbers plot_attention plot_multi_attentions_of_sentence create_corenlp_server CorenlpParser create_dependency_graph_by_spacy create_dependency_graph_for_dgl_for_syntax_aware_atsa_bert create_dependency_graph 
create_sentence_constituency_graph_for_dgl create_dependency_graph_for_dgl get_coref_edges plot_dgl_graph create_sentence_constituency_graph_for_dgl_with_dotted_line create_aspect_term_dependency_graph create_coref_graph parse_dates now day_ago second_diff day_diff search_esproxy_by_scroll search search_by_scroll get_ccomp_event_representation_by_constituency_tree get_verb_event_representation_by_constituency_tree get_event_representation_by_constituency_tree get_noun_event_representation_by_constituency_tree read_all_lines write_lines read_all_content append_line read_all_lines_generator rm_r get post StanfordCoreNLP main printMeta NltkSentenceSegmenter SimpleChineseSentenceSegmenter BaseSentenceSegmenter ConstituencyParseSentenceSegmenter parse_corenlp_parse_result sub_constituency_parser_result_generator TreeNode ZhSplitStentence ZhSplitParagraph NltkTokenizer SpacyTokenizer StanfordTokenizer AllennlpBertTokenizer JiebaTokenizer BaseTokenizer TokenizerWithCustomWordSegmenter get_trained_count_and_tfidf_model to_tfidf_vectors2 to_tfidf_vectors3 to_tfidf_vectors WordProcessorInterface StemProcessor LowerProcessor BaseWordProcessor StopWordProcessor join append read_all_lines print reader list pad_sequences shuffle encode array range append len append read_all_lines read_all_lines append show subplots DataFrame heatmap range len show subplots set_title get_xticklabels tight_layout colorbar subplots_adjust setp append tick_params DataFrame heatmap range enumerate len show subplots set_title append DataFrame heatmap range enumerate len show list subplots set_title get_xticklabels tight_layout subplots_adjust savefig setp append tick_params DataFrame heatmap range enumerate len show list subplots set_title get_xticklabels tight_layout subplots_adjust savefig setp append tick_params DataFrame heatmap range enumerate len findall zeros range dependency_parse len append coref range len children spacy_nlp astype len update children list extend add_nodes DGLGraph 
get_coref_edges zip append spacy_nlp add_edges len update join children list add_edges add_nodes DGLGraph zip append spacy_nlp range len update list add_edges add_nodes DGLGraph zip append range len update list get_adjacency_list_between_all_node_and_leaf get_all_nodes get_all_leaves add_nodes DGLGraph get_all_inner_nodes zip append add_edges len list get_adjacency_list_between_all_node_and_leaf get_all_nodes get_all_leaves add_nodes DGLGraph zip append add_edges len kamada_kawai_layout show draw to_networkx print append zeros coref range len append strptime timedelta strftime timedelta now strptime total_seconds strptime days loads deepcopy post extend get deepcopy str extend index post loads get deepcopy extend post loads get_np_ancestor parent get_ancestor get_np_ancestor parent join remove rmdir walk Request dumps bytes getDocumentInfo format print PdfFileReader open add_option OptionParser print exit printMeta usage filename parse_args append pop enumerate sub_constituency_parser_result_generator index TreeNode sub append transform TfidfTransformer CountVectorizer fit transform TfidfTransformer fit_transform CountVectorizer toarray | # The code and data for the paper "Sentence Constituent-Aware Aspect-Category Sentiment Analysis with Graph Attention Networks" # Requirements - Python 3.6.8 - torch==1.2.0 - pytorch-transformers==1.1.0 - allennlp==0.9.0 # Supported datasets - SemEval-2014-Task-4-REST-DevSplits (Rest14) - MAMSACSA (MAMS-ACSA) - SemEval-141516-LARGE-REST | 2,719 |

lRomul/argus-alaska | ['data augmentation'] | ['BitMix: Data Augmentation for Image Steganalysis'] | src/models/classifiers.py src/mixers.py src/models/__init__.py train.py src/__init__.py src/models/timm_model.py src/ema.py src/metrics.py src/predictor.py src/transforms.py make_folds.py src/config.py src/datasets.py src/models/custom_efficient.py predict.py make_quality_json.py src/utils.py src/argus_models.py src/losses.py src/models/custom_resnet.py predict_validation predict_test get_lr train_fold AlaskaModel get_prediction_transform get_folds_data AlaskaDataset get_test_data AlaskaSampler load_image AlaskaDistributedSampler EmaMonitorCheckpoint ModelEma SmoothingOhemCrossEntropy AlaskaCrossEntropy LabelSmoothingCrossEntropy Accuracy WeightedAuc alaska_weighted_auc rand_bbox BitMix mix_target EmptyMix RandomMixer Predictor predict_data UseWithProb get_transforms OneOf RandomRotate90 deep_chunk initialize_amp get_best_model_path target2altered get_image_quality load_pretrain_weigths initialize_ema check_dir_not_exist Classifier CustomEfficient CustomResnet TimmModel savez get_test_data to_csv mkdir DataFrame predict items get_folds_data list savez concatenate mkdir append array len AlaskaDataset load_pretrain_weigths DistributedDataParallel AlaskaModel AlaskaSampler DataLoader EmptyMix get_transforms initialize_amp set_device initialize_ema to AlaskaDistributedSampler get_folds_data zip RandomMixer get_best_model_path print fit convert_sync_batchnorm nn_module len convert open items list name data_dir fold train_folds_path zip append read_csv append sorted glob concatenate roc_curve dot linspace array enumerate auc sqrt clip int randint append zip AlaskaDataset DataLoader cat append numpy flip predict Compose Tensor partial isinstance Tensor sum isinstance initialize nn_module optimizer nn_module ModelEma str read str sorted glob search Path append float load_state_dict load_model state_dict print rmtree eval Path input exists | # ALASKA2 Image Steganalysis 
Source code of solution for [ALASKA2 Image Steganalysis](https://www.kaggle.com/c/alaska2-image-steganalysis) competition. ## Solution Key points: * Efficientnets * DDP training with SyncBN and Apex mixed precision * AdamW with cosine annealing * EMA Model * Bitmix ## Quick setup and start | 2,720 |