modelId (string, 4 to 81 chars) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, 51 to 438k chars) |

|---|---|---|---|---|---|---|

BigeS/DialoGPT-small-Rick | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 10 | 2022-11-24T23:16:16Z | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8468253968253968

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4919786096256685

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.49554896142433236

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6203446359088383

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.794

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.3991228070175439

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4675925925925926

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8946813319270754

- name: F1 (macro)

type: f1_macro

value: 0.8881909354487081

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8211267605633803

- name: F1 (macro)

type: f1_macro

value: 0.6170555844268196

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6338028169014085

- name: F1 (macro)

type: f1_macro

value: 0.6222691959704637

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9571537872991583

- name: F1 (macro)

type: f1_macro

value: 0.8677239073477255

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.878094641178314

- name: F1 (macro)

type: f1_macro

value: 0.8771653942410037

---

# relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done with the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.4919786096256685

- Accuracy on SAT: 0.49554896142433236

- Accuracy on BATS: 0.6203446359088383

- Accuracy on U2: 0.3991228070175439

- Accuracy on U4: 0.4675925925925926

- Accuracy on Google: 0.794

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.8946813319270754

- Micro F1 score on CogALexV: 0.8211267605633803

- Micro F1 score on EVALution: 0.6338028169014085

- Micro F1 score on K&H+N: 0.9571537872991583

- Micro F1 score on ROOT09: 0.878094641178314

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.8468253968253968
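The metadata block reports both micro and macro F1 for the classification benchmarks, and the two can diverge noticeably (for CogALexV, roughly 0.82 versus 0.62). The following is a minimal sketch of why that happens on imbalanced labels. The label names and predictions are hypothetical toy data, not drawn from any of the benchmarks.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label classification."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, pred in zip(y_true, y_pred):
        if gold == pred:
            tp[gold] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[gold] += 1   # gold class gains a false negative

    def f1(t, p, n):
        denom = 2 * t + p + n
        return 2 * t / denom if denom else 0.0

    labels = sorted(set(y_true) | set(y_pred))
    # Macro: average the per-class F1, so rare classes weigh as much as common ones.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: pool the counts over all classes before computing F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return micro, macro


# Hypothetical toy data: one frequent class and one rare class.
y_true = ["random"] * 8 + ["hypernym"] * 2
y_pred = ["random"] * 8 + ["random", "hypernym"]
micro_f1, macro_f1 = f1_scores(y_true, y_pred)
print(f"micro={micro_f1:.4f} macro={macro_f1:.4f}")  # micro=0.9000 macro=0.8039
```

A single error on the rare class barely moves the micro score but pulls the macro score down, which is the pattern the CogALexV numbers show.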

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent")

vector = model.get_embedding(['Tokyo', 'Japan'])  # shape of (768, ), the hidden size of roberta-base

```
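One common way to use such relation embeddings on the analogy benchmarks listed above is to pick the candidate pair whose embedding is most similar to the query pair's embedding. The sketch below assumes cosine similarity as the scoring function and uses hypothetical 3-dimensional vectors in place of real `get_embedding` outputs, so it runs without downloading the model.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def solve_analogy(query_vec, candidate_vecs):
    """Return the index of the candidate most similar to the query."""
    scores = [cosine_similarity(query_vec, c) for c in candidate_vecs]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical stand-ins for relation embeddings; with the library
# installed these would come from model.get_embedding([head, tail]).
query = [0.9, 0.1, 0.0]        # e.g. ['Tokyo', 'Japan']
candidates = [
    [0.0, 1.0, 0.2],           # e.g. ['cat', 'animal']
    [0.8, 0.2, 0.1],           # e.g. ['Paris', 'France']
    [-0.5, 0.3, 0.9],          # e.g. ['hot', 'cold']
]
best = solve_analogy(query, candidates)
print(best)  # index of the highest-scoring candidate
```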

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 5

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 1

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: parent
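The `loss_function` and `temperature_nce_constant` entries point to a noise-contrastive objective with temperature scaling. The exact `nce_logout` formulation is defined in the RelBERT repository and is not reproduced here. As an illustration of what the temperature does, here is a generic InfoNCE sketch for a single anchor; the similarity values are hypothetical.

```python
import math


def info_nce_loss(sim_pos, sims_neg, temperature=0.05):
    """Generic InfoNCE loss for one anchor (not the library's nce_logout).

    sim_pos: similarity between the anchor and its positive example.
    sims_neg: similarities between the anchor and each negative example.
    """
    logits = [sim_pos / temperature] + [s / temperature for s in sims_neg]
    # Subtract the max logit for numerical stability (log-sum-exp trick).
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    # Negative log-probability assigned to the positive example.
    return log_denominator - logits[0]


# Hypothetical cosine similarities, chosen only for illustration.
easy = info_nce_loss(0.9, [0.1, 0.0, -0.2])   # positive clearly separated
hard = info_nce_loss(0.5, [0.45, 0.4, 0.3])   # negatives close to the positive
print(f"easy={easy:.6f} hard={hard:.4f}")
```

With a small temperature such as 0.05, even modest similarity gaps become large logit gaps, so the loss concentrates on negatives that sit close to the positive.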

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-a-nce-1-parent/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

BinksSachary/ShaxxBot | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | 2022-11-24T23:27:16Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: distilcamembert-cae-no-behavior

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilcamembert-cae-no-behavior

This model is a fine-tuned version of [cmarkea/distilcamembert-base](https://huggingface.co/cmarkea/distilcamembert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7115

- Precision: 0.8033

- Recall: 0.7975

- F1: 0.7966

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0
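To make the scheduler settings concrete, the sketch below computes the learning rate under linear warmup followed by linear decay. It mirrors the general shape of a linear schedule with warmup and is not the Trainer's own code. The total of 200 steps is taken from the results table in this card; the function name is hypothetical.

```python
import math


def linear_warmup_lr(step, total_steps, peak_lr=5e-5, warmup_ratio=0.1):
    """Learning rate at an optimizer step: linear warmup, then linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return peak_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


total = 200  # 5 epochs x 40 steps per epoch, as reported in the results table
for step in (0, 10, 20, 110, 200):
    print(step, linear_warmup_lr(step, total))
```

Under these assumptions the rate climbs to its 5e-05 peak at step 20 and falls back to half of that by step 110.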

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 1.1648 | 1.0 | 40 | 0.9164 | 0.5427 | 0.6076 | 0.5502 |

| 0.785 | 2.0 | 80 | 0.6939 | 0.7223 | 0.7089 | 0.6976 |

| 0.4211 | 3.0 | 120 | 0.7189 | 0.8007 | 0.7722 | 0.7823 |

| 0.2326 | 4.0 | 160 | 0.6878 | 0.7843 | 0.7595 | 0.7640 |

| 0.1357 | 5.0 | 200 | 0.7115 | 0.8033 | 0.7975 | 0.7966 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Blabla/Pipipopo | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-24T23:43:44Z | ---

license: openrail++

language:

- en

tags:

- stable-diffusion

- text-to-image

- diffusers

thumbnail: "https://huggingface.co/nitrosocke/Future-Diffusion/resolve/main/images/future-diffusion-thumbnail-2.jpg"

inference: false

---

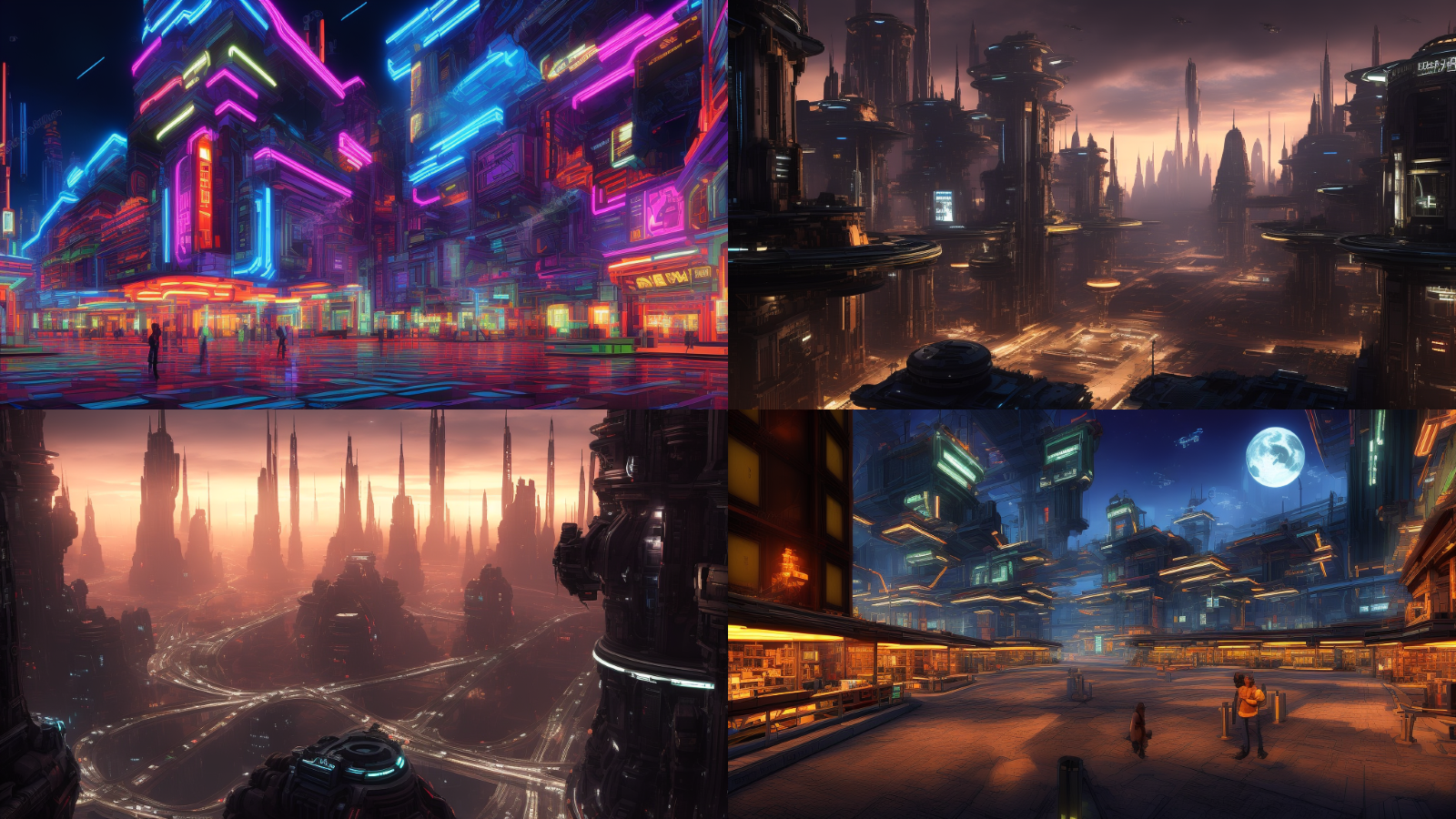

### Future Diffusion

This is a fine-tuned Stable Diffusion 2.0 model trained on high-quality 3D images with a futuristic sci-fi theme.

Use the tokens `future style` in your prompts for the effect.

Trained on Stability.ai's [Stable Diffusion 2.0 Base](https://huggingface.co/stabilityai/stable-diffusion-2-base) with 512x512 resolution.

**If you enjoy my work and want to test new models before release, please consider supporting me**

[Patreon](https://patreon.com/user?u=79196446)

**Disclaimer: The SD 2.0 model is just over 24h old at this point and we still need to figure out how it works exactly. Please view this as an early prototype and experiment with the model.**

**Characters rendered with the model:**

**Cars and Animals rendered with the model:**

**Landscapes rendered with the model:**

#### Prompt and settings for the Characters:

**future style [subject] Negative Prompt: duplicate heads bad anatomy**

_Steps: 20, Sampler: Euler a, CFG scale: 7, Size: 512x704_

#### Prompt and settings for the Landscapes:

**future style city market street level at night Negative Prompt: blurry fog soft**

_Steps: 20, Sampler: Euler a, CFG scale: 7, Size: 1024x576_
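The settings above can be carried over to the `diffusers` library roughly as follows. This is a sketch under assumptions: the repository id `nitrosocke/Future-Diffusion` is inferred from the thumbnail URL in the metadata, the subject string is a made-up example, and "Euler a" is taken to mean the Euler ancestral scheduler. The values shown are the character settings (512x704).

```python
def build_generation_kwargs(subject, negative_prompt="duplicate heads bad anatomy",
                            width=512, height=704):
    """Collect the listed settings into keyword arguments for a pipeline call."""
    return {
        "prompt": f"future style {subject}",
        "negative_prompt": negative_prompt,
        "num_inference_steps": 20,   # Steps: 20
        "guidance_scale": 7.0,       # CFG scale: 7
        "width": width,
        "height": height,
    }


def generate(subject, model_id="nitrosocke/Future-Diffusion"):
    """Render one image; needs diffusers, torch, and a CUDA GPU (assumed setup)."""
    import torch
    from diffusers import EulerAncestralDiscreteScheduler, StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    # Swap in the Euler ancestral sampler to match "Euler a".
    pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)
    pipe = pipe.to("cuda")
    return pipe(**build_generation_kwargs(subject)).images[0]


kwargs = build_generation_kwargs("portrait of an astronaut")  # hypothetical subject
print(kwargs["prompt"])
```

For the landscape settings, pass `width=1024, height=576` and the landscape negative prompt to `build_generation_kwargs`.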

This model was trained with the diffusers-based DreamBooth training script by ShivamShrirao, using prior-preservation loss and the _train-text-encoder_ flag, for 7,000 steps.

## License

This model is open access and available to all, with a CreativeML Open RAIL++-M License further specifying rights and usage.

[Please read the full license here](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL) |

Blackmist786/DialoGPt-small-transformers4 | [

"pytorch"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | 2022-11-24T23:45:37Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: distilcamembert-cae-territory

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilcamembert-cae-territory

This model is a fine-tuned version of [cmarkea/distilcamembert-base](https://huggingface.co/cmarkea/distilcamembert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7346

- Precision: 0.7139

- Recall: 0.6835

- F1: 0.6887

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 1.1749 | 1.0 | 40 | 1.0498 | 0.1963 | 0.4430 | 0.2720 |

| 0.9833 | 2.0 | 80 | 0.8853 | 0.7288 | 0.6709 | 0.6625 |

| 0.6263 | 3.0 | 120 | 0.7503 | 0.7237 | 0.6709 | 0.6689 |

| 0.3563 | 4.0 | 160 | 0.7346 | 0.7139 | 0.6835 | 0.6887 |

| 0.2253 | 5.0 | 200 | 0.7303 | 0.7139 | 0.6835 | 0.6887 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Blerrrry/Kkk | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: distilcamembert-cae-component

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilcamembert-cae-component

This model is a fine-tuned version of [cmarkea/distilcamembert-base](https://huggingface.co/cmarkea/distilcamembert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3683

- Precision: 0.9317

- Recall: 0.9303

- F1: 0.9306

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 0.6221 | 1.0 | 309 | 0.3860 | 0.9007 | 0.8720 | 0.8761 |

| 0.1723 | 2.0 | 618 | 0.3505 | 0.9233 | 0.9157 | 0.9168 |

| 0.0604 | 3.0 | 927 | 0.3683 | 0.9317 | 0.9303 | 0.9306 |

| 0.0117 | 4.0 | 1236 | 0.4214 | 0.9311 | 0.9303 | 0.9304 |

| 0.0061 | 5.0 | 1545 | 0.4232 | 0.9317 | 0.9303 | 0.9305 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

BlightZz/DialoGPT-medium-Kurisu | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 19 | 2022-11-25T00:12:29Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9281121187139324

- name: Recall

type: recall

value: 0.9473241332884551

- name: F1

type: f1

value: 0.9376197218289332

- name: Accuracy

type: accuracy

value: 0.9862689115205746

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0610

- Precision: 0.9281

- Recall: 0.9473

- F1: 0.9376

- Accuracy: 0.9863
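The reported F1 here is consistent with the harmonic mean of the reported precision and recall. A small check, using the unrounded values from this card's metadata block (the helper name is hypothetical):

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Unrounded values from the card's metadata block.
precision = 0.9281121187139324
recall = 0.9473241332884551
f1 = f1_from_precision_recall(precision, recall)
print(round(f1, 4))  # 0.9376
```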

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0859 | 1.0 | 1756 | 0.0663 | 0.9088 | 0.9312 | 0.9199 | 0.9822 |

| 0.0331 | 2.0 | 3512 | 0.0622 | 0.9270 | 0.9461 | 0.9365 | 0.9856 |

| 0.016 | 3.0 | 5268 | 0.0610 | 0.9281 | 0.9473 | 0.9376 | 0.9863 |
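The step counts in the table follow from the dataset size and batch size. The sketch below assumes single-device training without gradient accumulation and that the final partial batch is kept; the CoNLL-2003 training split has 14,041 sentences.

```python
import math


def optimizer_steps(num_examples, batch_size, epochs):
    """Total optimizer steps when the last partial batch of each epoch is kept."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs


# Batch size and epoch count come from the hyperparameter list above.
for epoch in (1, 2, 3):
    print(epoch, optimizer_steps(14041, batch_size=8, epochs=epoch))
```

Under these assumptions the result is 1756 steps per epoch, matching the Step column.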

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

BogdanKuloren/continual-learning-paper-embeddings-model | [

"pytorch",

"mpnet",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"MPNetModel"

],

"model_type": "mpnet",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 11 | 2022-11-25T00:26:15Z | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.743095238095238

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4839572192513369

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4896142433234421

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6375764313507504

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.862

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4868421052631579

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5046296296296297

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8952840138616845

- name: F1 (macro)

type: f1_macro

value: 0.8885186450216263

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.819718309859155

- name: F1 (macro)

type: f1_macro

value: 0.6131261712437293

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6159263271939328

- name: F1 (macro)

type: f1_macro

value: 0.6066052358250007

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9501982332892815

- name: F1 (macro)

type: f1_macro

value: 0.865577305932915

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8755875900971483

- name: F1 (macro)

type: f1_macro

value: 0.8756061231187074

---

# relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done with the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.4839572192513369

- Accuracy on SAT: 0.4896142433234421

- Accuracy on BATS: 0.6375764313507504

- Accuracy on U2: 0.4868421052631579

- Accuracy on U4: 0.5046296296296297

- Accuracy on Google: 0.862

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.8952840138616845

- Micro F1 score on CogALexV: 0.819718309859155

- Micro F1 score on EVALution: 0.6159263271939328

- Micro F1 score on K&H+N: 0.9501982332892815

- Micro F1 score on ROOT09: 0.8755875900971483

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.743095238095238

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical")

vector = model.get_embedding(['Tokyo', 'Japan'])  # shape of (768, ), the hidden size of roberta-base

```

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 5

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 1

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: child_prototypical

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-1-child-prototypical/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

BonjinKim/dst_kor_bert | [

"pytorch",

"jax",

"bert",

"pretraining",

"transformers"

]

| null | {

"architectures": [

"BertForPreTraining"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.763452380952381

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4358288770053476

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.44510385756676557

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6453585325180656

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.764

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.41228070175438597

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4305555555555556

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.893174627090553

- name: F1 (macro)

type: f1_macro

value: 0.88253444204685

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.7779342723004695

- name: F1 (macro)

type: f1_macro

value: 0.5467947875271857

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6013001083423619

- name: F1 (macro)

type: f1_macro

value: 0.5766482049475778

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9545802323155039

- name: F1 (macro)

type: f1_macro

value: 0.8630198238087204

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8608586649952994

- name: F1 (macro)

type: f1_macro

value: 0.8593411038565139

---

# relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.4358288770053476

- Accuracy on SAT: 0.44510385756676557

- Accuracy on BATS: 0.6453585325180656

- Accuracy on U2: 0.41228070175438597

- Accuracy on U4: 0.4305555555555556

- Accuracy on Google: 0.764

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.893174627090553

- Micro F1 score on CogALexV: 0.7779342723004695

- Micro F1 score on EVALution: 0.6013001083423619

- Micro F1 score on K&H+N: 0.9545802323155039

- Micro F1 score on ROOT09: 0.8608586649952994

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.763452380952381

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical")

vector = model.get_embedding(['Tokyo', 'Japan'])  # relation embedding; shape of (768, ) for the roberta-base hidden size

```
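Relation embeddings are usually compared with cosine similarity. The following is a minimal sketch of that comparison, not part of the relbert API: `cosine_similarity` is a helper written for this example, and the three short vectors are hypothetical stand-ins for what `model.get_embedding` would return.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical toy vectors standing in for relation embeddings (not model output).
tokyo_japan = [0.9, 0.1, 0.3]
paris_france = [0.8, 0.2, 0.4]
apple_fruit = [-0.2, 0.9, 0.1]

# Pairs that share a relation (capital-of) should score higher than unrelated pairs.
print(cosine_similarity(tokyo_japan, paris_france))
print(cosine_similarity(tokyo_japan, apple_fruit))
```

With the real model, the toy vectors would be replaced by the outputs of `model.get_embedding` for each word pair.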

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 9

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 2

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: child_prototypical

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-d-nce-2-child-prototypical/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

Boondong/Wandee | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-25T00:29:42Z | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.7553174603174603

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.3877005347593583

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.3887240356083086

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6158977209560867

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.622

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.38596491228070173

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.39814814814814814

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8958866957962935

- name: F1 (macro)

type: f1_macro

value: 0.8899187556569595

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.7953051643192488

- name: F1 (macro)

type: f1_macro

value: 0.5661036014906222

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.5839653304442037

- name: F1 (macro)

type: f1_macro

value: 0.5694962586836211

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9565277874382695

- name: F1 (macro)

type: f1_macro

value: 0.8719904519356941

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8718270134753996

- name: F1 (macro)

type: f1_macro

value: 0.8712363187552654

---

# relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.3877005347593583

- Accuracy on SAT: 0.3887240356083086

- Accuracy on BATS: 0.6158977209560867

- Accuracy on U2: 0.38596491228070173

- Accuracy on U4: 0.39814814814814814

- Accuracy on Google: 0.622

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.8958866957962935

- Micro F1 score on CogALexV: 0.7953051643192488

- Micro F1 score on EVALution: 0.5839653304442037

- Micro F1 score on K&H+N: 0.9565277874382695

- Micro F1 score on ROOT09: 0.8718270134753996

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.7553174603174603

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical")

vector = model.get_embedding(['Tokyo', 'Japan'])  # relation embedding; shape of (768, ) for the roberta-base hidden size

```
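One common way to answer an analogy question with relation embeddings is to embed the query pair and each candidate pair, then pick the candidate with the highest cosine similarity. The sketch below illustrates that idea under stated assumptions: `solve_analogy`, `cosine`, `toy_embed`, and `TOY_VECTORS` are hypothetical names introduced here, the vectors are made up, and the evaluation behind the reported accuracies may differ in its details.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm


def solve_analogy(query, candidates, embed):
    """Return the index of the candidate pair whose embedding is closest to the query pair."""
    if not candidates:
        raise ValueError("at least one candidate pair is required")
    query_vec = embed(query)
    scores = [cosine(query_vec, embed(pair)) for pair in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical toy vectors standing in for relation embeddings (not model output).
TOY_VECTORS = {
    ("Tokyo", "Japan"): [0.9, 0.1, 0.3],
    ("Paris", "France"): [0.8, 0.2, 0.4],
    ("apple", "fruit"): [-0.2, 0.9, 0.1],
    ("cat", "meow"): [0.1, -0.7, 0.6],
}


def toy_embed(pair):
    """Look up a toy vector; a real run would pass model.get_embedding instead."""
    return TOY_VECTORS[tuple(pair)]


candidates = [["apple", "fruit"], ["Paris", "France"], ["cat", "meow"]]
best = solve_analogy(["Tokyo", "Japan"], candidates, toy_embed)
print(candidates[best])
```

Passing the embedding function as an argument keeps the selection logic independent of the model, so the same code can be exercised with toy vectors or with a loaded RelBERT model.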

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 3

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: child_prototypical

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-0-child-prototypical/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

BossLee/t5-gec | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"T5ForConditionalGeneration"

],

"model_type": "t5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 200,

"min_length": 30,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": "summarize: "

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to German: "

},

"translation_en_to_fr": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to French: "

},

"translation_en_to_ro": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to Romanian: "

}

}

} | 6 | 2022-11-25T00:31:41Z | ---

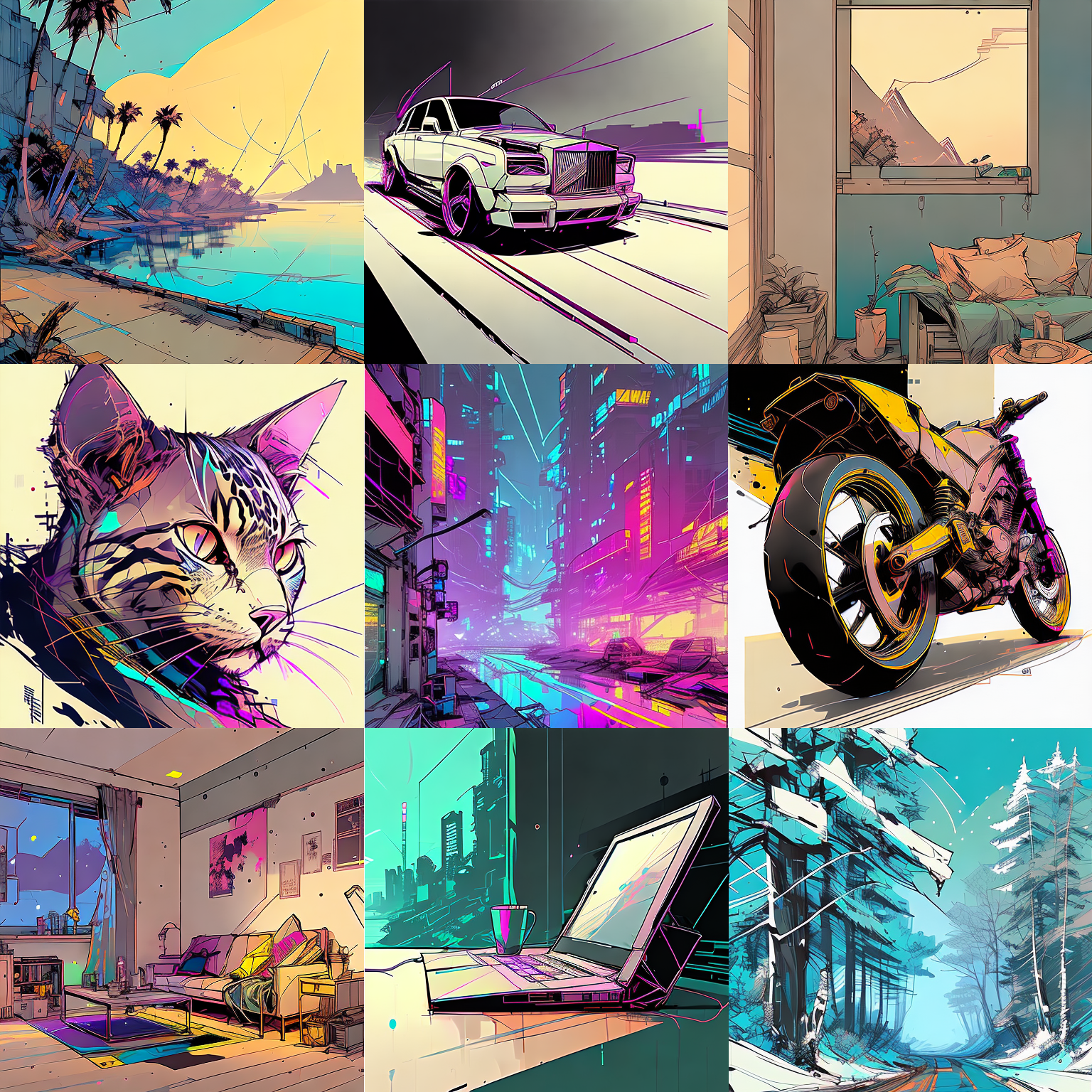

## AloeVera's SimpMaker2000 Mix

This model is a highly versatile and balanced mix that will allow you to generate stunning pictures in a wide range of styles and settings.

# Scroll Down for Samples

- language: en

- license: Unknown

## This Model was merged using the following models:

- SameDoesArts Ultmerge

- Anything V3.0

- Zeipher F222

- SXD 8.0

- Hassans Blend v2

- Zeipher F111

## Prompting

For this model to work well, I highly recommend using CLIP SKIP 1 and the Stable Diffusion 1.5 VAE (840,000 steps).

All the images below have their prompt data embedded; you can use https://www.metadata2go.com/ to retrieve it

as a guide for prompting the model. With the right words, pretty much EVERYTHING can be achieved with this model.

I used NMKD's GUI 1.7.1 to generate the sample images.

Sampler: DPM++2 A

## DISCLAIMER

I do not claim to OWN any copyright in the models used in this merge; I only mean to share this balanced mix, which in my opinion produces

excellent pictures in a wide variety of styles, ranging from SFW to fantasy, NSFW, horror, photographs, anime, etc.

AloeVera

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669340090307-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669340092012-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669340092013-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669340091729-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669340090733-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341162944-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341151859-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341162937-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341163224-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341162936-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341162085-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341693883-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341699128-63800c8c96f995a21717050c.png">

<img width="768px" src="https://s3.amazonaws.com/moonup/production/uploads/1669341694334-63800c8c96f995a21717050c.png">

|

Botjallu/DialoGPT-small-harrypotter | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.6083928571428572

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.2967914438502674

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.29080118694362017

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5525291828793775

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.626

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.40350877192982454

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.40046296296296297

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9013108332077746

- name: F1 (macro)

type: f1_macro

value: 0.8942952749488488

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.7812206572769953

- name: F1 (macro)

type: f1_macro

value: 0.5351011171746861

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.59534127843987

- name: F1 (macro)

type: f1_macro

value: 0.578313715405001

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9534673436739236

- name: F1 (macro)

type: f1_macro

value: 0.8633473853913686

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8420557818865559

- name: F1 (macro)

type: f1_macro

value: 0.8473749954042976

---

# relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.2967914438502674

- Accuracy on SAT: 0.29080118694362017

- Accuracy on BATS: 0.5525291828793775

- Accuracy on U2: 0.40350877192982454

- Accuracy on U4: 0.40046296296296297

- Accuracy on Google: 0.626

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9013108332077746

- Micro F1 score on CogALexV: 0.7812206572769953

- Micro F1 score on EVALution: 0.59534127843987

- Micro F1 score on K&H+N: 0.9534673436739236

- Micro F1 score on ROOT09: 0.8420557818865559

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.6083928571428572

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical")

vector = model.get_embedding(['Tokyo', 'Japan'])  # relation embedding; shape of (768, ) for the roberta-base hidden size

```

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: average

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 9

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 2

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: child_prototypical

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-average-prompt-e-nce-2-child-prototypical/raw/main/trainer_config.json).
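The `loss_function` and `temperature_nce_constant` settings indicate a noise-contrastive objective with a temperature. As a rough illustration of how a temperature enters such a loss, here is a generic InfoNCE-style sketch. It is not the library's `nce_logout` implementation, whose exact form is not described here; `info_nce_loss` and its arguments are names assumed for this example.

```python
import math


def info_nce_loss(pos_sim, neg_sims, temperature=0.05):
    """Generic InfoNCE loss for one positive and a list of negative similarities.

    loss = -log( exp(pos/t) / (exp(pos/t) + sum_i exp(neg_i/t)) )
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    logits = [pos_sim / temperature] + [s / temperature for s in neg_sims]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_denominator - logits[0]


# A lower temperature sharpens the contrast between positive and negatives.
print(info_nce_loss(0.9, [0.1, 0.2], temperature=0.05))
print(info_nce_loss(0.9, [0.1, 0.2], temperature=0.5))
```

The similarities here are plain numbers; in training they would come from comparing relation embeddings of word pairs that do or do not share a relation.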

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

Botslity/Bot | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-25T00:36:22Z | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.7961111111111111

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5668449197860963

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5637982195845698

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.7087270705947749

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.862

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5185185185185185

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9094470393249963

- name: F1 (macro)

type: f1_macro

value: 0.9071978262208379

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8481220657276995

- name: F1 (macro)

type: f1_macro

value: 0.6770534875484192

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6663055254604551

- name: F1 (macro)

type: f1_macro

value: 0.6477804074680519

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9549975655560965

- name: F1 (macro)

type: f1_macro

value: 0.8718545305555775

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8994045753682232

- name: F1 (macro)

type: f1_macro

value: 0.8985019443558045

---

# relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical

RelBERT fine-tuned from [roberta-base](https://huggingface.co/roberta-base) on

[relbert/semeval2012_relational_similarity_v6](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity_v6).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.5668449197860963

- Accuracy on SAT: 0.5637982195845698

- Accuracy on BATS: 0.7087270705947749

- Accuracy on U2: 0.5

- Accuracy on U4: 0.5185185185185185

- Accuracy on Google: 0.862

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9094470393249963

- Micro F1 score on CogALexV: 0.8481220657276995

- Micro F1 score on EVALution: 0.6663055254604551

- Micro F1 score on K&H+N: 0.9549975655560965

- Micro F1 score on ROOT09: 0.8994045753682232

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.7961111111111111
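The classification results are reported as micro F1 in the list above, while the card metadata also records macro F1, which can be much lower on imbalanced data (CogALexV, for example). The sketch below shows how the two averages diverge for single-label predictions. It is a plain-Python illustration with made-up labels; `f1_scores` is a name assumed here, and the actual evaluation script may compute the scores differently.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multiclass predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    def f1(tp_n, fp_n, fn_n):
        denom = 2 * tp_n + fp_n + fn_n
        return 2 * tp_n / denom if denom else 0.0

    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro


# Made-up imbalanced example: one frequent class and one rare class.
y_true = ["random"] * 8 + ["hypernym"] * 2
y_pred = ["random"] * 8 + ["random", "hypernym"]
micro, macro = f1_scores(y_true, y_pred)
print(micro, macro)
```

Because macro F1 weights every class equally, a single error on the rare class lowers it far more than it lowers micro F1.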

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical")

vector = model.get_embedding(['Tokyo', 'Japan'])  # relation embedding; shape of (768, ) for the roberta-base hidden size

```

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-base

- max_length: 64

- mode: mask

- data: relbert/semeval2012_relational_similarity_v6

- split: train

- split_eval: validation

- template_mode: manual

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 5

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 1

- exclude_relation: None

- n_sample: 320

- gradient_accumulation: 8

- relation_level: None

- data_level: child_prototypical

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-1-child-prototypical/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

BotterHax/DialoGPT-small-harrypotter | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

datasets:

- relbert/semeval2012_relational_similarity_v6

model-index:

- name: relbert/relbert-roberta-base-semeval2012-v6-mask-prompt-a-nce-2-child-prototypical

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.7766666666666666

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5106951871657754

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.5192878338278932

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6336853807670928

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.836

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4956140350877193

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4675925925925926

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9070363115865602

- name: F1 (macro)

type: f1_macro

value: 0.9020957402698154

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8448356807511737

- name: F1 (macro)

type: f1_macro

value: 0.656354139071707

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6630552546045504

- name: F1 (macro)

type: f1_macro

value: 0.6446451538634405

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9517980107115531

- name: F1 (macro)

type: f1_macro

value: 0.8705494994159542

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset: