modelId (string, length 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, length 51 to 438k) |

|---|---|---|---|---|---|---|

Chakita/gpt2_mwp | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | 2022-11-21T17:59:15Z | ---

license: bsd-3-clause

datasets:

- bookcorpus

- wikipedia

- openwebtext

---

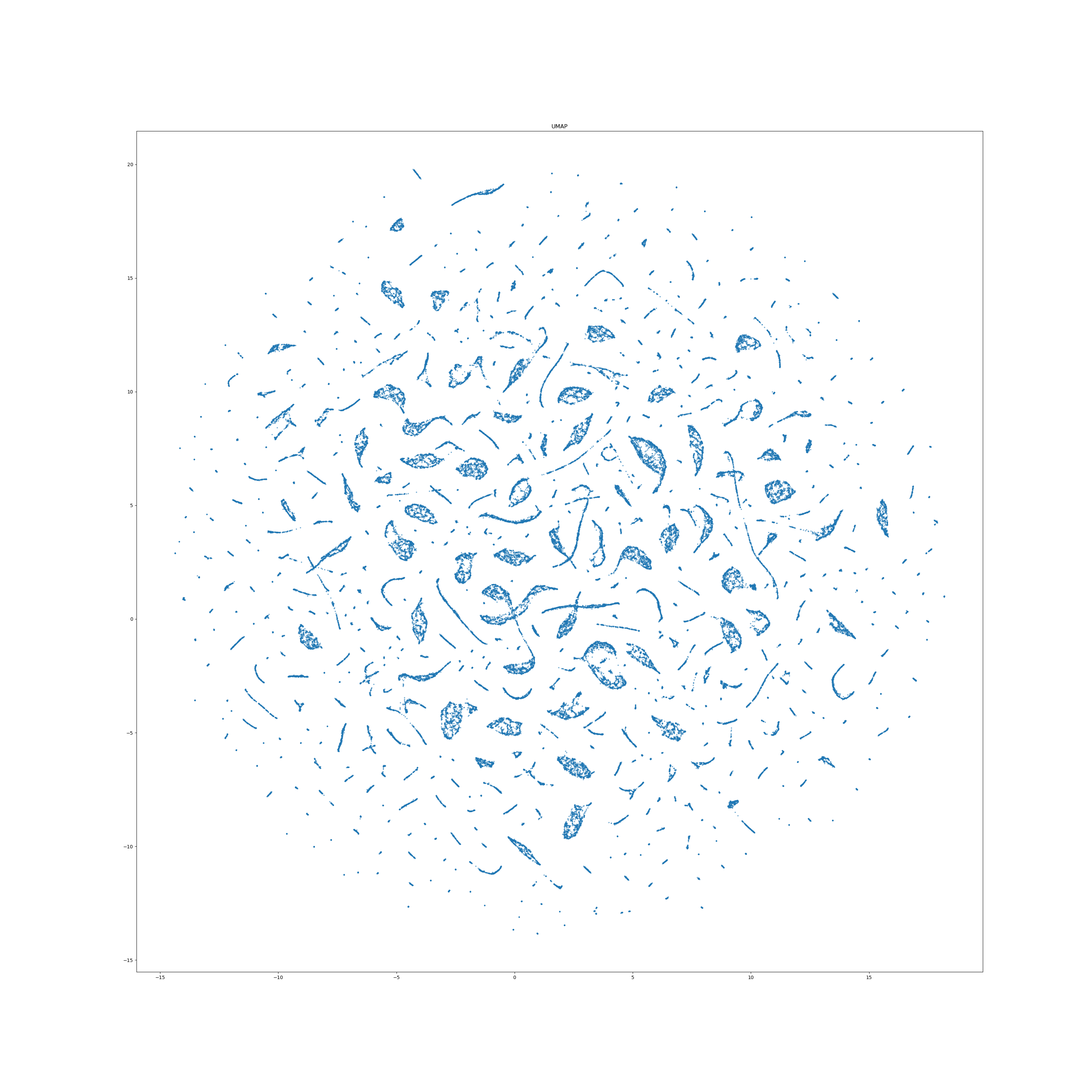

# FlexiBERT-Mini model

A model pretrained on English text using a masked language modeling (MLM) objective. It was found by executing a neural architecture search (NAS) over a design space of ~3.32 billion *flexible* and *heterogeneous* transformer architectures in [this paper](https://arxiv.org/abs/2205.11656). The model is case sensitive.

# Model description

The model consists of diverse attention heads including the traditional self-attention and the discrete cosine transform (DCT). The design space also supports weighted multiplicative attention (WMA), discrete Fourier transform (DFT), and convolution operations in the same transformer model along with different hidden dimensions for each encoder layer.

# How to use

This model should be fine-tuned on a downstream task. Other models within the FlexiBERT design space can be generated using a model dictionary. See this [GitHub repo](https://github.com/JHA-Lab/txf_design-space) for more details. To instantiate a fresh FlexiBERT-Mini model (for pre-training using the MLM objective):

```python

from transformers import FlexiBERTConfig, FlexiBERTModel, FlexiBERTForMaskedLM

config = FlexiBERTConfig()

model_dict = {'l': 4, 'o': ['sa', 'sa', 'l', 'l'], 'h': [256, 256, 128, 128], 'n': [2, 2, 4, 4],

'f': [[512, 512, 512], [512, 512, 512], [1024], [1024]], 'p': ['sdp', 'sdp', 'dct', 'dct']}

config.from_model_dict(model_dict)

model = FlexiBERTForMaskedLM(config)

```

# Developer

[Shikhar Tuli](https://github.com/shikhartuli). For any questions, comments or suggestions, please reach me at [[email protected]](mailto:[email protected]).

# Cite this work

Cite our work using the following BibTeX entry:

```

@article{tuli2022jair,

title={{FlexiBERT}: Are Current Transformer Architectures too Homogeneous and Rigid?},

author={Tuli, Shikhar and Dedhia, Bhishma and Tuli, Shreshth and Jha, Niraj K.},

year={2022},

eprint={2205.11656},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

# License

BSD-3-Clause.

Copyright (c) 2022, Shikhar Tuli and Jha Lab.

All rights reserved.

See License file for more details.

|

Chan/distilroberta-base-finetuned-wikitext2 | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T18:29:10Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: BERiT_2000_2_layers_40_epochs

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# BERiT_2000_2_layers_40_epochs

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 6.8375

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 40

- label_smoothing_factor: 0.2
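The `lr_scheduler_type: linear` setting listed above can be illustrated with a small sketch. It assumes zero warmup steps and a hypothetical total of 103,000 optimizer steps (roughly the last step logged in this card's training results); neither value is stated in the card, and `linear_lr` is an illustrative helper, not a library function.

```python
def linear_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical run length; the card does not state the exact total.
TOTAL_STEPS = 103_000
BASE_LR = 0.0005  # learning_rate from the list above

for step in (0, TOTAL_STEPS // 2, TOTAL_STEPS):
    print(step, linear_lr(step, TOTAL_STEPS, BASE_LR))
```

Under these assumptions the rate starts at the configured 0.0005 and falls in a straight line to zero at the final step.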

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:------:|:---------------:|

| 15.0851 | 0.19 | 500 | 8.5468 |

| 7.8971 | 0.39 | 1000 | 7.3376 |

| 7.3108 | 0.58 | 1500 | 7.1632 |

| 7.134 | 0.77 | 2000 | 7.0700 |

| 7.0956 | 0.97 | 2500 | 7.0723 |

| 7.0511 | 1.16 | 3000 | 6.9560 |

| 7.0313 | 1.36 | 3500 | 6.9492 |

| 7.0028 | 1.55 | 4000 | 6.9048 |

| 6.9563 | 1.74 | 4500 | 6.8456 |

| 6.9214 | 1.94 | 5000 | 6.8019 |

| 11.1596 | 2.13 | 5500 | 7.5882 |

| 7.5824 | 2.32 | 6000 | 7.1291 |

| 7.2581 | 2.52 | 6500 | 7.1123 |

| 7.2232 | 2.71 | 7000 | 7.1059 |

| 7.1734 | 2.9 | 7500 | 7.1120 |

| 7.1504 | 3.1 | 8000 | 7.0946 |

| 7.1314 | 3.29 | 8500 | 7.0799 |

| 7.1236 | 3.49 | 9000 | 7.1175 |

| 7.1275 | 3.68 | 9500 | 7.0905 |

| 7.1087 | 3.87 | 10000 | 7.0839 |

| 7.1212 | 4.07 | 10500 | 7.0822 |

| 7.1136 | 4.26 | 11000 | 7.0703 |

| 7.1025 | 4.45 | 11500 | 7.1035 |

| 7.0931 | 4.65 | 12000 | 7.0759 |

| 7.0899 | 4.84 | 12500 | 7.0883 |

| 7.0834 | 5.03 | 13000 | 7.1307 |

| 7.0761 | 5.23 | 13500 | 7.0642 |

| 7.0706 | 5.42 | 14000 | 7.0324 |

| 7.0678 | 5.62 | 14500 | 7.0704 |

| 7.0614 | 5.81 | 15000 | 7.0317 |

| 7.0569 | 6.0 | 15500 | 7.0421 |

| 7.057 | 6.2 | 16000 | 7.0250 |

| 7.0503 | 6.39 | 16500 | 7.0129 |

| 7.0529 | 6.58 | 17000 | 7.0316 |

| 7.0453 | 6.78 | 17500 | 7.0436 |

| 7.0218 | 6.97 | 18000 | 7.0064 |

| 7.0415 | 7.16 | 18500 | 7.0385 |

| 7.0338 | 7.36 | 19000 | 6.9756 |

| 7.0488 | 7.55 | 19500 | 7.0054 |

| 7.0347 | 7.75 | 20000 | 6.9946 |

| 7.0464 | 7.94 | 20500 | 7.0055 |

| 7.017 | 8.13 | 21000 | 7.0158 |

| 7.0159 | 8.33 | 21500 | 7.0052 |

| 7.0223 | 8.52 | 22000 | 6.9925 |

| 6.9989 | 8.71 | 22500 | 7.0307 |

| 7.0218 | 8.91 | 23000 | 6.9767 |

| 6.9998 | 9.1 | 23500 | 7.0096 |

| 7.01 | 9.3 | 24000 | 6.9599 |

| 6.9964 | 9.49 | 24500 | 6.9896 |

| 6.9906 | 9.68 | 25000 | 6.9903 |

| 7.0336 | 9.88 | 25500 | 6.9807 |

| 7.0053 | 10.07 | 26000 | 6.9776 |

| 6.9826 | 10.26 | 26500 | 6.9836 |

| 6.9897 | 10.46 | 27000 | 6.9886 |

| 6.9829 | 10.65 | 27500 | 6.9991 |

| 6.9849 | 10.84 | 28000 | 6.9651 |

| 6.9901 | 11.04 | 28500 | 6.9822 |

| 6.9852 | 11.23 | 29000 | 6.9921 |

| 6.9757 | 11.43 | 29500 | 6.9636 |

| 6.991 | 11.62 | 30000 | 6.9952 |

| 6.9818 | 11.81 | 30500 | 6.9799 |

| 6.9911 | 12.01 | 31000 | 6.9725 |

| 6.9423 | 12.2 | 31500 | 6.9540 |

| 6.9885 | 12.39 | 32000 | 6.9771 |

| 6.9636 | 12.59 | 32500 | 6.9475 |

| 6.9567 | 12.78 | 33000 | 6.9653 |

| 6.9749 | 12.97 | 33500 | 6.9711 |

| 6.9739 | 13.17 | 34000 | 6.9691 |

| 6.9651 | 13.36 | 34500 | 6.9569 |

| 6.9599 | 13.56 | 35000 | 6.9608 |

| 6.957 | 13.75 | 35500 | 6.9531 |

| 6.9539 | 13.94 | 36000 | 6.9704 |

| 6.958 | 14.14 | 36500 | 6.9478 |

| 6.9597 | 14.33 | 37000 | 6.9510 |

| 6.9466 | 14.52 | 37500 | 6.9625 |

| 6.9518 | 14.72 | 38000 | 6.9787 |

| 6.9509 | 14.91 | 38500 | 6.9391 |

| 6.9505 | 15.1 | 39000 | 6.9694 |

| 6.9311 | 15.3 | 39500 | 6.9440 |

| 6.9513 | 15.49 | 40000 | 6.9425 |

| 6.9268 | 15.69 | 40500 | 6.9223 |

| 6.9415 | 15.88 | 41000 | 6.9435 |

| 6.9308 | 16.07 | 41500 | 6.9281 |

| 6.9216 | 16.27 | 42000 | 6.9415 |

| 6.9265 | 16.46 | 42500 | 6.9164 |

| 6.9023 | 16.65 | 43000 | 6.9237 |

| 6.9407 | 16.85 | 43500 | 6.9100 |

| 6.9211 | 17.04 | 44000 | 6.9295 |

| 6.9147 | 17.23 | 44500 | 6.9131 |

| 6.9224 | 17.43 | 45000 | 6.9188 |

| 6.9215 | 17.62 | 45500 | 6.9077 |

| 6.915 | 17.82 | 46000 | 6.9371 |

| 6.906 | 18.01 | 46500 | 6.8932 |

| 6.91 | 18.2 | 47000 | 6.9100 |

| 6.8999 | 18.4 | 47500 | 6.9251 |

| 6.9113 | 18.59 | 48000 | 6.9078 |

| 6.9197 | 18.78 | 48500 | 6.9099 |

| 6.8985 | 18.98 | 49000 | 6.9074 |

| 6.9009 | 19.17 | 49500 | 6.8971 |

| 6.8937 | 19.36 | 50000 | 6.8982 |

| 6.9094 | 19.56 | 50500 | 6.9077 |

| 6.9069 | 19.75 | 51000 | 6.9006 |

| 6.8991 | 19.95 | 51500 | 6.8912 |

| 6.8924 | 20.14 | 52000 | 6.8881 |

| 6.899 | 20.33 | 52500 | 6.8899 |

| 6.9028 | 20.53 | 53000 | 6.8938 |

| 6.8997 | 20.72 | 53500 | 6.8822 |

| 6.8943 | 20.91 | 54000 | 6.9005 |

| 6.8804 | 21.11 | 54500 | 6.9048 |

| 6.8848 | 21.3 | 55000 | 6.9062 |

| 6.9072 | 21.49 | 55500 | 6.9104 |

| 6.8783 | 21.69 | 56000 | 6.9069 |

| 6.8879 | 21.88 | 56500 | 6.8938 |

| 6.8922 | 22.08 | 57000 | 6.8797 |

| 6.8892 | 22.27 | 57500 | 6.9168 |

| 6.8863 | 22.46 | 58000 | 6.8820 |

| 6.8822 | 22.66 | 58500 | 6.9130 |

| 6.8752 | 22.85 | 59000 | 6.8973 |

| 6.8823 | 23.04 | 59500 | 6.8933 |

| 6.8813 | 23.24 | 60000 | 6.8919 |

| 6.8787 | 23.43 | 60500 | 6.8855 |

| 6.8886 | 23.63 | 61000 | 6.8956 |

| 6.8744 | 23.82 | 61500 | 6.9092 |

| 6.8799 | 24.01 | 62000 | 6.8944 |

| 6.879 | 24.21 | 62500 | 6.8850 |

| 6.8797 | 24.4 | 63000 | 6.8782 |

| 6.8724 | 24.59 | 63500 | 6.8691 |

| 6.8803 | 24.79 | 64000 | 6.8965 |

| 6.8899 | 24.98 | 64500 | 6.8986 |

| 6.8873 | 25.17 | 65000 | 6.9034 |

| 6.8777 | 25.37 | 65500 | 6.8658 |

| 6.8784 | 25.56 | 66000 | 6.8803 |

| 6.8791 | 25.76 | 66500 | 6.8727 |

| 6.8736 | 25.95 | 67000 | 6.8832 |

| 6.8865 | 26.14 | 67500 | 6.8811 |

| 6.8668 | 26.34 | 68000 | 6.8817 |

| 6.8709 | 26.53 | 68500 | 6.8945 |

| 6.8755 | 26.72 | 69000 | 6.8777 |

| 6.8635 | 26.92 | 69500 | 6.8747 |

| 6.8752 | 27.11 | 70000 | 6.8875 |

| 6.8729 | 27.3 | 70500 | 6.8696 |

| 6.8728 | 27.5 | 71000 | 6.8659 |

| 6.8692 | 27.69 | 71500 | 6.8856 |

| 6.868 | 27.89 | 72000 | 6.8689 |

| 6.8668 | 28.08 | 72500 | 6.8877 |

| 6.8576 | 28.27 | 73000 | 6.8783 |

| 6.8633 | 28.47 | 73500 | 6.8828 |

| 6.8737 | 28.66 | 74000 | 6.8717 |

| 6.8702 | 28.85 | 74500 | 6.8485 |

| 6.8785 | 29.05 | 75000 | 6.8771 |

| 6.8818 | 29.24 | 75500 | 6.8815 |

| 6.8647 | 29.43 | 76000 | 6.8877 |

| 6.8574 | 29.63 | 76500 | 6.8920 |

| 6.8474 | 29.82 | 77000 | 6.8936 |

| 6.8558 | 30.02 | 77500 | 6.8768 |

| 6.8645 | 30.21 | 78000 | 6.8921 |

| 6.8786 | 30.4 | 78500 | 6.8604 |

| 6.8693 | 30.6 | 79000 | 6.8603 |

| 6.855 | 30.79 | 79500 | 6.8559 |

| 6.8429 | 30.98 | 80000 | 6.8746 |

| 6.8688 | 31.18 | 80500 | 6.8774 |

| 6.8735 | 31.37 | 81000 | 6.8643 |

| 6.8541 | 31.56 | 81500 | 6.8767 |

| 6.8695 | 31.76 | 82000 | 6.8804 |

| 6.8607 | 31.95 | 82500 | 6.8674 |

| 6.8538 | 32.15 | 83000 | 6.8572 |

| 6.8472 | 32.34 | 83500 | 6.8683 |

| 6.8763 | 32.53 | 84000 | 6.8758 |

| 6.8405 | 32.73 | 84500 | 6.8764 |

| 6.8658 | 32.92 | 85000 | 6.8614 |

| 6.8834 | 33.11 | 85500 | 6.8641 |

| 6.8554 | 33.31 | 86000 | 6.8787 |

| 6.8738 | 33.5 | 86500 | 6.8747 |

| 6.848 | 33.69 | 87000 | 6.8699 |

| 6.8621 | 33.89 | 87500 | 6.8654 |

| 6.8543 | 34.08 | 88000 | 6.8639 |

| 6.8606 | 34.28 | 88500 | 6.8852 |

| 6.8666 | 34.47 | 89000 | 6.8840 |

| 6.8717 | 34.66 | 89500 | 6.8773 |

| 6.854 | 34.86 | 90000 | 6.8671 |

| 6.8526 | 35.05 | 90500 | 6.8762 |

| 6.8592 | 35.24 | 91000 | 6.8644 |

| 6.8641 | 35.44 | 91500 | 6.8599 |

| 6.8655 | 35.63 | 92000 | 6.8622 |

| 6.8557 | 35.82 | 92500 | 6.8671 |

| 6.8546 | 36.02 | 93000 | 6.8573 |

| 6.853 | 36.21 | 93500 | 6.8542 |

| 6.8597 | 36.41 | 94000 | 6.8518 |

| 6.8576 | 36.6 | 94500 | 6.8700 |

| 6.8549 | 36.79 | 95000 | 6.8628 |

| 6.8576 | 36.99 | 95500 | 6.8695 |

| 6.8505 | 37.18 | 96000 | 6.8870 |

| 6.8564 | 37.37 | 96500 | 6.8898 |

| 6.8627 | 37.57 | 97000 | 6.8619 |

| 6.8502 | 37.76 | 97500 | 6.8696 |

| 6.8548 | 37.96 | 98000 | 6.8663 |

| 6.8512 | 38.15 | 98500 | 6.8683 |

| 6.8484 | 38.34 | 99000 | 6.8605 |

| 6.8581 | 38.54 | 99500 | 6.8749 |

| 6.8525 | 38.73 | 100000 | 6.8849 |

| 6.8375 | 38.92 | 100500 | 6.8712 |

| 6.8423 | 39.12 | 101000 | 6.8905 |

| 6.8559 | 39.31 | 101500 | 6.8574 |

| 6.8441 | 39.5 | 102000 | 6.8722 |

| 6.8467 | 39.7 | 102500 | 6.8550 |

| 6.8389 | 39.89 | 103000 | 6.8375 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Chandanbhat/distilbert-base-uncased-finetuned-cola | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T18:56:14Z | ---

license: mit

tags:

- generated_from_trainer

- nlu

- intent-classification

- text-classification

metrics:

- accuracy

- f1

model-index:

- name: xlm-r-base-amazon-massive-intent-label_smoothing

results:

- task:

name: intent-classification

type: intent-classification

dataset:

name: MASSIVE

type: AmazonScience/massive

split: test

metrics:

- name: F1

type: f1

value: 0.8879

datasets:

- AmazonScience/massive

language:

- en

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-r-base-amazon-massive-intent-label_smoothing

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the [MASSIVE1.1](https://huggingface.co/datasets/AmazonScience/massive) dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5148

- Accuracy: 0.8879

- F1: 0.8879

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- label_smoothing_factor: 0.4
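A `label_smoothing_factor` of 0.4 puts a lower bound on the cross-entropy loss, which helps explain why a loss above 2 can coexist with high accuracy. The sketch below estimates that bound. It assumes 60 intent classes (not stated in this card) and the common formulation in which the smoothing mass is spread uniformly over all classes; `label_smoothing_floor` is a hypothetical helper name.

```python
import math


def label_smoothing_floor(epsilon: float, num_classes: int) -> float:
    """Lowest cross-entropy reachable against a label-smoothed target.

    The smoothed target puts (1 - epsilon + epsilon / K) on the true class
    and epsilon / K on every other class; the minimum loss is its entropy.
    """
    if epsilon == 0.0:
        return 0.0  # a one-hot target can be matched with zero loss
    off = epsilon / num_classes
    on = 1.0 - epsilon + off
    return -(on * math.log(on) + (num_classes - 1) * off * math.log(off))


# Assumed class count for illustration only.
floor = label_smoothing_floor(0.4, 60)
print(f"loss floor with smoothing: {floor:.4f}")
```

Under these assumptions the floor is well above zero, so the reported evaluation loss of 2.5148 should be read relative to that floor rather than to zero.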

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 3.3945 | 1.0 | 720 | 2.7175 | 0.7900 | 0.7900 |

| 2.7629 | 2.0 | 1440 | 2.5660 | 0.8549 | 0.8549 |

| 2.5143 | 3.0 | 2160 | 2.5389 | 0.8711 | 0.8711 |

| 2.4678 | 4.0 | 2880 | 2.5172 | 0.8883 | 0.8883 |

| 2.4187 | 5.0 | 3600 | 2.5148 | 0.8879 | 0.8879 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2 |

CharlieChen/feedback-bigbird | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: t5-small-vanilla-mtop

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-vanilla-mtop

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unspecified dataset (the auto-generated card records it as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.1581

- Exact Match: 0.6331

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 32

- total_train_batch_size: 512

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 3000
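The relationship between `train_batch_size`, `gradient_accumulation_steps`, and `total_train_batch_size` in the list above can be checked with a few lines of arithmetic. This sketch assumes a single device, which is consistent with 16 × 32 = 512 but is not stated in the card; `effective_batch_size` is an illustrative helper.

```python
def effective_batch_size(per_device_batch_size: int,
                         gradient_accumulation_steps: int,
                         num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer step."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Values from the hyperparameter list above; one device is assumed.
total = effective_batch_size(16, 32)
examples_seen = total * 3000  # training_steps optimizer updates

print(total, examples_seen)
```

Gradient accumulation trades wall-clock time for memory: each optimizer step sums gradients over 32 small forward/backward passes instead of holding 512 examples in memory at once.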

### Training results

| Training Loss | Epoch | Step | Validation Loss | Exact Match |

|:-------------:|:-----:|:----:|:---------------:|:-----------:|

| 1.5981 | 6.65 | 200 | 0.1598 | 0.4940 |

| 0.1335 | 13.33 | 400 | 0.1155 | 0.5884 |

| 0.074 | 19.98 | 600 | 0.1046 | 0.6094 |

| 0.0497 | 26.65 | 800 | 0.1065 | 0.6139 |

| 0.0363 | 33.33 | 1000 | 0.1134 | 0.6255 |

| 0.0278 | 39.98 | 1200 | 0.1177 | 0.6313 |

| 0.022 | 46.65 | 1400 | 0.1264 | 0.6255 |

| 0.0183 | 53.33 | 1600 | 0.1260 | 0.6304 |

| 0.0151 | 59.98 | 1800 | 0.1312 | 0.6300 |

| 0.0124 | 66.65 | 2000 | 0.1421 | 0.6277 |

| 0.0111 | 73.33 | 2200 | 0.1405 | 0.6277 |

| 0.0092 | 79.98 | 2400 | 0.1466 | 0.6331 |

| 0.008 | 86.65 | 2600 | 0.1522 | 0.6340 |

| 0.007 | 93.33 | 2800 | 0.1590 | 0.6295 |

| 0.0064 | 99.98 | 3000 | 0.1581 | 0.6331 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Charlotte77/model_test | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | The third model is Nightmare Wet Worms. The prompt is "NghtmrWrmFrk". It's based more on my models that are full of tentacles, worms, maggots, and wet-looking, drippy textures. This model isn't perfect and a lot of words don't seem to matter much, but you can still get some amazing results if you're into this type of look. Heck, just type a bunch of random words and you get weird images! Keep the CFG low; any number of steps works. Samples can be anything.

|

ChaseBread/DialoGPT-small-harrypotter | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | 2022-11-21T19:08:28Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: t5-base-vanilla-mtop

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-vanilla-mtop

This model is a fine-tuned version of [google/mt5-base](https://huggingface.co/google/mt5-base) on an unspecified dataset (the auto-generated card records it as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.2080

- Exact Match: 0.6394

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 64

- total_train_batch_size: 512

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 3000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Exact Match |

|:-------------:|:-----:|:----:|:---------------:|:-----------:|

| 1.0516 | 6.65 | 200 | 0.1173 | 0.5875 |

| 0.0541 | 13.33 | 400 | 0.1130 | 0.6331 |

| 0.0468 | 19.98 | 600 | 0.1290 | 0.6036 |

| 0.0241 | 26.65 | 800 | 0.1306 | 0.6273 |

| 0.0125 | 33.33 | 1000 | 0.1425 | 0.6291 |

| 0.0077 | 39.98 | 1200 | 0.1518 | 0.6345 |

| 0.0054 | 46.65 | 1400 | 0.1643 | 0.6362 |

| 0.004 | 53.33 | 1600 | 0.1718 | 0.6362 |

| 0.0033 | 59.98 | 1800 | 0.1803 | 0.6336 |

| 0.0026 | 66.65 | 2000 | 0.1808 | 0.6394 |

| 0.0021 | 73.33 | 2200 | 0.1915 | 0.6371 |

| 0.0017 | 79.98 | 2400 | 0.1919 | 0.6403 |

| 0.0013 | 86.65 | 2600 | 0.2024 | 0.6358 |

| 0.0011 | 93.33 | 2800 | 0.2049 | 0.6353 |

| 0.0008 | 99.98 | 3000 | 0.2080 | 0.6394 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Cheapestmedsshop/Buymodafinilus | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- generated_from_keras_callback

model-index:

- name: GeoBERT

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# GeoBERT_Analyzer

GeoBERT_Analyzer is a Text Classification model that was fine-tuned from GeoBERT on the Geoscientific Corpus dataset.

The model was trained on the Labeled Geoscientific & Non-Geoscientific Corpus dataset (21416 x 2 sentences).

## Intended uses

Training aims to give the language model the ability to distinguish between geoscience and non-geoscience (general) text.

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 14000, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.10.0

- Datasets 2.4.0

- Tokenizers 0.12.1

## Model performances (metric: seqeval)

entity|precision|recall|f1

-|-|-|-

General |0.9976|0.9980|0.9978

Geoscience|0.9980|0.9984|0.9982

## How to use GeoBERT with HuggingFace

##### Load GeoBERT and its sub-word tokenizer:

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification, pipeline

# Load the fine-tuned classifier and its sub-word tokenizer
tokenizer = AutoTokenizer.from_pretrained("botryan96/GeoBERT_analyzer")

model = AutoModelForSequenceClassification.from_pretrained("botryan96/GeoBERT_analyzer")

# Define the pipeline, passing the loaded model and tokenizer explicitly
analyze_machine = pipeline('text-classification', model=model, tokenizer=tokenizer)

# Define the sentences
sentences = ['the average iron and sulfate concentrations were calculated to be 19 . 6 5 . 2 and 426 182 mg / l , respectively .',
             'She first gained media attention as a friend and stylist of Paris Hilton']

# Deploy the machine
analyze_machine(sentences)

``` |

Cheatham/xlm-roberta-base-finetuned | [

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"XLMRobertaForSequenceClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 20 | null | ---

language:

- en

thumbnail: https://github.com/karanchahal/distiller/blob/master/distiller.jpg

tags:

- question-answering

license: apache-2.0

datasets:

- squad

metrics:

- squad

---

# DistilBERT with a second step of distillation

## Model description

This model replicates the "DistilBERT (D)" model from Table 2 of the [DistilBERT paper](https://arxiv.org/pdf/1910.01108.pdf). In this approach, a DistilBERT student is fine-tuned on SQuAD v1.1, but with a BERT model (also fine-tuned on SQuAD v1.1) acting as a teacher for a second step of task-specific distillation.

In this version, the following pre-trained models were used:

* Student: `distilbert-base-uncased`

* Teacher: `lewtun/bert-base-uncased-finetuned-squad-v1`

## Training data

This model was trained on the SQuAD v1.1 dataset which can be obtained from the `datasets` library as follows:

```python

from datasets import load_dataset

squad = load_dataset('squad')

```

## Training procedure

## Eval results

| | Exact Match | F1 |

|------------------|-------------|------|

| DistilBERT paper | 79.1 | 86.9 |

| Ours | 78.4 | 86.5 |

The scores were calculated using the `squad` metric from `datasets`.
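To make the two columns concrete, here is a simplified sketch of SQuAD v1.1-style scoring for a single prediction and a single reference answer. It is not the `squad` metric implementation from `datasets`; the helper names are illustrative, and the real metric additionally takes the maximum over several reference answers and averages over the dataset.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def f1_score(prediction: str, reference: str) -> float:
    """Token-level F1 between normalized prediction and reference."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower"))
print(f1_score("in Paris France", "Paris"))
```

Exact Match rewards only answers that agree after normalization, while F1 gives partial credit for overlapping tokens, which is why the F1 column is higher than Exact Match in the table above.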

### BibTeX entry and citation info

```bibtex

@misc{sanh2020distilbert,

title={DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter},

author={Victor Sanh and Lysandre Debut and Julien Chaumond and Thomas Wolf},

year={2020},

eprint={1910.01108},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

Cheatham/xlm-roberta-large-finetuned-d1 | [

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"XLMRobertaForSequenceClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 20 | 2022-11-21T19:12:40Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

---

### ataturkai Dreambooth model trained by thothai with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

|

Cheatham/xlm-roberta-large-finetuned-r01 | [

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"XLMRobertaForSequenceClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 23 | 2022-11-21T19:21:21Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

---

### Open Potion Bottle v2 Dreambooth model trained by [piEsposito](https://twitter.com/piesposi_to) with open weights, configs and prompts (as it should be)

- Concept: `potionbottle`

You can run this concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

## Usage examples with `potionbottle`

- Prompt: fantasy dragon inside a potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/pottionbottle_1.png" width=512/>

- Prompt: potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/potionbottle_2.png" width=512/>

- Prompt: green potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/potionbottle_3.png" width=512/>

- Prompt: spiral galaxy inside a potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/potionbottle_4.png" width=512/>

- Prompt: lightning storm inside a potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/pottionbottle_5.png" width=512/>

- Prompt: pomeranian as a potionbottle, perfectly ornated, intricate details, 3d render vray, uhd, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/potionbottle_6.png" width=512/>

- Prompt: milkshake as potionbottle, perfectly ornated, intricate details, 3d render vray, beautiful, trending on artstation

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/pottionbottle_7.png" width=512/>

- Prompt: a square potionbottle full of fire. Art by smoose2. Caustic reflections, shadows

- CFG Scale: 10

- Scheduler: `diffusers.EulerAncestralDiscreteScheduler`

- Steps: 30

<img src="https://huggingface.co/piEsposito/openpotionbottle-v2/resolve/main/concept_images/pottionbottle_8.png" width=512/>
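The settings listed above can be collected into a small script. This is a minimal sketch, not the author's own code: it assumes the weights are published in `diffusers` format under the repo id `piEsposito/openpotionbottle-v2` (inferred from the image URLs above), and the names `PROMPT`, `SETTINGS` and `generate` are made up for illustration.

```python
# One of the prompts from the examples above.
PROMPT = (
    "fantasy dragon inside a potionbottle, perfectly ornated, intricate details, "
    "3d render vray, uhd, beautiful, trending on artstation"
)

# CFG scale and step count copied from the examples above.
SETTINGS = {"guidance_scale": 10, "num_inference_steps": 30}


def generate(prompt, model_id="piEsposito/openpotionbottle-v2"):
    """Render one image; needs `diffusers`, `torch` and, realistically, a GPU.

    The default `model_id` is an assumption inferred from the image URLs.
    """
    # Imported lazily so the settings can be inspected without the heavy dependencies.
    import torch
    from diffusers import EulerAncestralDiscreteScheduler, StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id)
    # Swap in the scheduler named in the examples, reusing the existing scheduler config.
    pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)
    pipe = pipe.to("cuda" if torch.cuda.is_available() else "cpu")
    return pipe(prompt, **SETTINGS).images[0]
```

Calling `generate(PROMPT).save("potionbottle.png")` would then write the result to disk; results vary with the random seed.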

#### By https://twitter.com/piesposi_to

|

Cheatham/xlm-roberta-large-finetuned3 | [

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"XLMRobertaForSequenceClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 22 | 2022-11-21T19:21:32Z | ---

language: "en"

thumbnail:

tags:

- speechbrain

- embeddings

- Speaker

- Verification

- Identification

- pytorch

- ECAPA-TDNN

license: "apache-2.0"

datasets:

- voxceleb

metrics:

- EER

- Accuracy

inference: true

widget:

- example_title: VoxCeleb Speaker id10003

src: https://cdn-media.huggingface.co/speech_samples/VoxCeleb1_00003.wav

- example_title: VoxCeleb Speaker id10004

src: https://cdn-media.huggingface.co/speech_samples/VoxCeleb_00004.wav

---

# Speaker Identification with ECAPA-TDNN embeddings on Voxceleb

This repository provides a pretrained ECAPA-TDNN model built with SpeechBrain. The system can also be used to extract speaker embeddings. Because we could not find SpeechBrain- or HuggingFace-compatible checkpoints trained only on the VoxCeleb2 development data, we pre-trained an ECAPA-TDNN system from scratch.

# Pipeline description

This system is composed of an ECAPA-TDNN model. It is a combination of convolutional and residual blocks. The embeddings are extracted using attentive statistical pooling. The system is trained with Additive Margin Softmax Loss.

We use FBank features (16 kHz sampling rate, 25 ms frame length, 10 ms hop length, 80 filter-bank channels) as input. The model was trained with an initial learning rate of 0.001 and a batch size of 512, using a cyclical learning rate (CLR) policy, for 20 epochs on 4 A100 GPUs. We add noise and reverberation from the [MUSAN](http://www.openslr.org/17/) and [RIR](http://www.openslr.org/28/) datasets to augment the training data. Pre-training the ECAPA-TDNN model takes approximately ten days.
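To make the feature settings concrete, the sketch below works out how many frames a clip produces. It assumes plain framing without any padding at the clip edges; the actual feature extractor may pad and therefore return a few more frames. The function name `fbank_shape` is hypothetical.

```python
SAMPLE_RATE = 16_000  # Hz
FRAME_MS = 25         # frame length
HOP_MS = 10           # hop length
N_MELS = 80           # filter-bank channels


def fbank_shape(n_samples):
    """Return (n_frames, n_channels) for a clip, assuming no edge padding."""
    frame_len = SAMPLE_RATE * FRAME_MS // 1000  # 400 samples per frame
    hop = SAMPLE_RATE * HOP_MS // 1000          # 160 samples between frame starts
    if n_samples < frame_len:
        return (0, N_MELS)
    n_frames = 1 + (n_samples - frame_len) // hop
    return (n_frames, N_MELS)


# A 3-second clip at 16 kHz has 48000 samples.
print(fbank_shape(3 * SAMPLE_RATE))  # (298, 80)
```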

# Performance

**VoxCeleb1-O** is the original verification test set from VoxCeleb1, consisting of 40 speakers. All speakers with names starting with "E" are reserved for testing. **VoxCeleb1-E** uses the entire VoxCeleb1 dataset, covering 1251 speakers. **VoxCeleb1-H** is a hard version of the evaluation set, consisting of 552536 pairs from 1190 speakers, where both speakers in each pair share the same nationality and gender. There are 18 nationality-gender combinations, each with at least 5 individuals.

| Splits | Backend | S-norm | EER(%) | minDCF(0.01) |
|:-------------:|:--------------:|:--------------:|:--------------:|:--------------:|
| VoxCeleb1-O | cosine | no | 1.29 | 0.13 |
| VoxCeleb1-O | cosine | yes | 1.19 | 0.11 |
| VoxCeleb1-E | cosine | no | 1.42 | 0.16 |
| VoxCeleb1-E | cosine | yes | 1.31 | 0.14 |
| VoxCeleb1-H | cosine | no | 2.66 | 0.26 |
| VoxCeleb1-H | cosine | yes | 2.48 | 0.23 |

- VoxCeleb1-O: includes 37611 test pairs with 40 speakers.

- VoxCeleb1-E: includes 579818 test pairs with 1251 speakers.

- VoxCeleb1-H: includes 550894 test pairs with 1190 speakers.
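For readers unfamiliar with the EER column, the sketch below shows one simple way to estimate an equal error rate from trial scores. It is an illustration only, not the evaluation script behind the table: it sweeps every observed score as a threshold (quadratic in the number of trials) and returns a fraction, so multiply by 100 to compare with the percentages above. The function name is hypothetical.

```python
def equal_error_rate(scores, labels):
    """Approximate EER; labels are 1 for same-speaker pairs and 0 otherwise."""
    n_target = sum(labels)
    n_nontarget = len(labels) - n_target
    if n_target == 0 or n_nontarget == 0:
        raise ValueError("need at least one target and one non-target pair")

    best_gap, eer = None, None
    for threshold in sorted(set(scores)):
        # A pair is accepted as "same speaker" when its score reaches the threshold.
        false_accepts = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        false_rejects = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
        far = false_accepts / n_nontarget
        frr = false_rejects / n_target
        gap = abs(far - frr)
        # Keep the operating point where the two error rates are closest.
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


toy_scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]
toy_labels = [1, 1, 0, 1, 0, 0]
print(equal_error_rate(toy_scores, toy_labels))
```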

# Compute the speaker embeddings

The system is trained with recordings sampled at 16kHz (single channel).

```python
import torch
import torchaudio
from speechbrain.pretrained.interfaces import Pretrained
from speechbrain.pretrained import EncoderClassifier


class Encoder(Pretrained):

    MODULES_NEEDED = [
        "compute_features",
        "mean_var_norm",
        "embedding_model"
    ]

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)

    def encode_batch(self, wavs, wav_lens=None, normalize=False):
        # Manage single waveforms in input
        if len(wavs.shape) == 1:
            wavs = wavs.unsqueeze(0)

        # Assign full length if wav_lens is not assigned
        if wav_lens is None:
            wav_lens = torch.ones(wavs.shape[0], device=self.device)

        # Storing waveform in the specified device
        wavs, wav_lens = wavs.to(self.device), wav_lens.to(self.device)
        wavs = wavs.float()

        # Computing features and embeddings
        feats = self.mods.compute_features(wavs)
        feats = self.mods.mean_var_norm(feats, wav_lens)
        embeddings = self.mods.embedding_model(feats, wav_lens)
        if normalize:
            embeddings = self.hparams.mean_var_norm_emb(
                embeddings,
                torch.ones(embeddings.shape[0], device=self.device)
            )
        return embeddings


classifier = Encoder.from_hparams(
    source="yangwang825/ecapa-tdnn-vox2"
)
signal, fs = torchaudio.load('spk1_snt1.wav')
embeddings = classifier.encode_batch(signal)
print(embeddings.shape)
# torch.Size([1, 1, 192])
```
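The performance table uses a cosine backend, which means two utterances are compared by the cosine similarity of their embeddings. The sketch below shows that score on plain Python lists so it runs without any dependencies; with the tensors returned by `encode_batch` one would more likely use `torch.nn.functional.cosine_similarity`. The function name and the toy vectors are illustrative only, and any decision threshold has to be tuned on held-out trials.

```python
import math


def cosine_score(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings"; real ones have 192 dimensions.
enrolled = [0.2, 0.9, 0.1]
test_same = [0.25, 0.85, 0.05]
test_other = [0.9, -0.1, 0.3]
print(cosine_score(enrolled, test_same) > cosine_score(enrolled, test_other))  # True
```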

We will release our training results (models, logs, etc) shortly.

# References

1. Ravanelli et al., SpeechBrain: A General-Purpose Speech Toolkit, 2021

2. Desplanques et al., ECAPA-TDNN: Emphasized Channel Attention, Propagation and Aggregation in TDNN Based Speaker Verification, 2020 |

Check/vaw2tmp | [

"tensorboard"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T19:24:42Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- opus100

model-index:

- name: t5-small-finetuned-ta-to-en

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-ta-to-en

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the opus100 dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6087
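As a rough reading aid, an evaluation loss can be converted to a perplexity. This assumes the reported loss is the mean token-level cross-entropy in nats, which the card does not state explicitly.

```python
import math

eval_loss = 3.6087  # evaluation loss reported above

# Perplexity is the exponential of the mean cross-entropy (assumed to be in nats).
perplexity = math.exp(eval_loss)
print(round(perplexity, 1))  # 36.9
```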

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:-----:|:---------------:|
| 3.826 | 1.0 | 11351 | 3.6087 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

CheonggyeMountain-Sherpa/kogpt-trinity-poem | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 15 | 2022-11-21T19:28:21Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: "food_crit "

---

### Jak's Creepy Critter Pack for Stable Diffusion

Trained using TheLastBen Dreambooth colab notebook, using 95 training images, 5000 training steps.

Put "food_crit" at the beginning of your prompt, followed by a food. No major prompt-crafting needed.

Thanks to /u/Jak_TheAI_Artist for supplying training images!

Sample pictures of this concept:

prompt: "food_crit, spaghetti and meatballs"

prompt: "food_crit, snowcone"

prompt: "food_crit, cola cola, vibrant colors"

Steps: 27, Sampler: Euler a, CFG scale: 6, Seed: 1195328763

prompt: "shiny ceramic 3d painting, (mens's shoe creature) gum stuck to sole, high detail render, vibrant, cinematic lighting"

Negative prompt: painting, photoshop, illustration, blurry, dull, drawing

Steps: 40, Sampler: Euler a, CFG scale: 10, Seed: 1018346393

Prompt: "melting trippy zombie muscle car, smoking, with big eyes, hyperrealistic, intricate detail, high detail render, vibrant, cinematic lighting, shiny, ceramic, reflections"

Negative prompt: "painting, photoshop, illustration, blurry, dull"

Steps: 40, Sampler: Euler a, CFG scale: 10, Seed: 3713218290, Size: 960x512, Model hash: d9aa872b

|

Chertilasus/main | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T19:28:59Z | ---

language:

- te

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- Chai_Bisket_Stories_16-08-2021_14-17

metrics:

- wer

model-index:

- name: Whisper Small Telugu - Naga Budigam

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Chai_Bisket_Stories_16-08-2021_14-17

type: Chai_Bisket_Stories_16-08-2021_14-17

config: None

split: None

args: 'config: te, split: test'

metrics:

- name: Wer

type: wer

value: 77.48711850971065

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Telugu - Naga Budigam

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Chai_Bisket_Stories_16-08-2021_14-17 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7063

- Wer: 77.4871
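For context, word error rate is the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. The sketch below is a minimal implementation under the assumption of simple whitespace tokenization; the text normalization used for the figure above is not stated in this card, and the function name is hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """WER in percent: (substitutions + deletions + insertions) / reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Levenshtein distance over words, keeping only one row of the table at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,                          # deletion
                cur[j - 1] + 1,                       # insertion
                prev[j - 1] + (ref_word != hyp_word)  # substitution or match
            ))
        prev = cur
    return 100.0 * prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

A value above 100 is possible when the hypothesis contains many insertions.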

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
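The scheduler settings above describe a linear warmup followed by a linear decay. The sketch below reproduces that shape as a plain function, assuming the same behavior as the `get_linear_schedule_with_warmup` helper in Transformers; the function name is hypothetical and the defaults are taken from the list above.

```python
def linear_schedule_lr(step, base_lr=1e-05, warmup_steps=500, total_steps=5000):
    """Learning rate at a given optimizer step for linear warmup + linear decay."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr over the warmup steps.
        return base_lr * step / warmup_steps
    # Then decay linearly to 0 at the final training step.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_schedule_lr(step))
```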

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| 0.2933 | 2.62 | 500 | 0.3849 | 86.6429 |
| 0.0692 | 5.24 | 1000 | 0.3943 | 82.7190 |
| 0.0251 | 7.85 | 1500 | 0.4720 | 82.4415 |
| 0.0098 | 10.47 | 2000 | 0.5359 | 81.6092 |
| 0.0061 | 13.09 | 2500 | 0.5868 | 75.9413 |
| 0.0025 | 15.71 | 3000 | 0.6235 | 76.6944 |
| 0.0009 | 18.32 | 3500 | 0.6634 | 78.3987 |
| 0.0005 | 20.94 | 4000 | 0.6776 | 77.1700 |
| 0.0002 | 23.56 | 4500 | 0.6995 | 78.2798 |
| 0.0001 | 26.18 | 5000 | 0.7063 | 77.4871 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Chester/traffic-rec | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T19:30:17Z | ---

language: en

datasets:

- Dizex/InstaFoodSet

widget:

- text: "Today's meal: Fresh olive poké bowl topped with chia seeds. Very delicious!"

example_title: "Food example 1"

- text: "Tartufo Pasta with garlic flavoured butter and olive oil, egg yolk, parmigiano and pasta water."

example_title: "Food example 2"

tags:

- Instagram

- NER

- Named Entity Recognition

- Food Entity Extraction

- Social Media

- Informal text

- RoBERTa

license: mit

---

# InstaFoodRoBERTa-NER

## Model description

**InstaFoodRoBERTa-NER** is a fine-tuned RoBERTa model that is ready to use for **Named Entity Recognition** of food entities in informal, social-media-style text. It has been trained to recognize a single entity: food (FOOD).

Specifically, this model is a *roberta-base* model that was fine-tuned on a dataset consisting of 400 English Instagram posts related to food. The [dataset](https://huggingface.co/datasets/Dizex/InstaFoodSet) is open source.

## Intended uses

#### How to use

You can use this model with Transformers *pipeline* for NER.

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

from transformers import pipeline

tokenizer = AutoTokenizer.from_pretrained("Dizex/InstaFoodRoBERTa-NER")

model = AutoModelForTokenClassification.from_pretrained("Dizex/InstaFoodRoBERTa-NER")

pipe = pipeline("ner", model=model, tokenizer=tokenizer)

example = "Today's meal: Fresh olive poké bowl topped with chia seeds. Very delicious!"

ner_entity_results = pipe(example, aggregation_strategy="simple")

print(ner_entity_results)

```

To get the extracted food entities as strings you can use the following code:

```python
def convert_entities_to_list(text, entities: list[dict]) -> list[str]:
    ents = []
    for ent in entities:
        e = {"start": ent["start"], "end": ent["end"], "label": ent["entity_group"]}
        if ents and -1 <= ent["start"] - ents[-1]["end"] <= 1 and ents[-1]["label"] == e["label"]:
            ents[-1]["end"] = e["end"]
            continue
        ents.append(e)

    return [text[e["start"]:e["end"]] for e in ents]


print(convert_entities_to_list(example, ner_entity_results))
```

This will result in the following output:

```python

['olive poké bowl', 'chia seeds']

```

## Performance on [InstaFoodSet](https://huggingface.co/datasets/Dizex/InstaFoodSet)

| metric | val |
|-|-|
| f1 | 0.91 |
| precision | 0.89 |
| recall | 0.93 |
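The three numbers are mutually consistent: F1 is the harmonic mean of precision and recall. The check below is only approximate, since the reported values are rounded and the averaging scheme is not stated in this card.

```python
precision = 0.89
recall = 0.93

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)
print(round(f1, 2))  # 0.91
```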

|

Chikita1/www_stash_stock | [

"license:bsd-3-clause-clear"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T21:12:34Z | ---

language:

- en

license: creativeml-openrail-m

thumbnail: "https://huggingface.co/ai-characters/4elements-diffusion/resolve/main/gandr-collage.jpg"

tags:

- stable-diffusion

- text-to-image

- image-to-image

---

# 4elements-diffusion

##### A StableDiffusion All-In-One Legend of Korra style + Korra character Dreambooth model created by AI-Characters

#### For what tokens to use in your prompts to employ the desired effects scroll down to the following section of this page: "Tokens to use to prompt the artstyle as well as Korra's different outfits"

**Feel free to donate to my [KoFi](https://ko-fi.com/aicharacters)** to help me fund renting GPUs for further model creation and experimentation!

Follow me on [Twitter](https://twitter.com/ai_characters) and [Instagram](https://www.instagram.com/ai_characters/) for AI art posts and model updates!

## Quick Feature Overview

- Create anyone and anything in the LoK artstyle!

- Create Korra in any artstyle!

- Mix and match all of Korra's outfits however you want to!

- Give anyone Korra's outfits!

- Give Korra any outfits!

*This model is much trickier to use than other models, but in return it is very flexible and has high likeness!* **I thus highly recommend checking out the "How to correctly use this model" section of this page!**

--- This model is not yet final! I will keep working on it and trying to improve it! I also welcome anyone to use my uploaded dataset (see at the bottom of this page) to create a better version! ---

## IMPORTANT INFORMATION BEFORE YOU USE THIS MODEL

I highly recommend using img2img when using this model, either by converting photos into the Legend of Korra artstyle or by resizing your initial 512x512 txt2img Legend of Korra style generations up to 1024x1024 or higher resolutions. **Your initial 512x512 txt2img generations using the Legend of Korra artstyle WILL ALWAYS look like crap** if you generate shots of characters that are more zoomed out than just a closeup (e.g. half-body or full-shot). **Resizing the initial 512x512 generations to 1024x1024 or bigger** (full-shots will likely need 1536x1536 to look good) using img2img **will drastically improve your experience using this model!**

The model is also infected, i.e. photos output with this model WILL look different from those output by the vanilla SD model! So I recommend generating people with the vanilla SD model using txt2img first, then sending them to img2img, switching the model to this one, and applying the style! This way your result is truer to vanilla SD, just with the style applied!

**For more useful information on how to correctly use this model, see the "How to correctly use this model" section of this page!**

## Introduction

Welcome to my first ever published StableDiffusion model and the first public model **trained on the Legend of Korra artstyle**! But not just the artstyle: **I have trained this model on Korra, including *all* of her outfits, as well!** In total this model was trained using a manually captioned dataset of 1142 images: screencaps from the show, fanart, and cosplay photos.

I spent every day of the last 4 weeks working on this project and spent hundreds of euros renting many, many GPU hours on VastAI to experiment with various parameters. I have created more than 50 ckpts in that time and gained a ton of insight.

## Recommended samplers, steps, CFG values and denoising strength settings (for img2img)

- Euler a at 20 steps for quick results

- LMS at 100-150 steps for higher quality results that also follow your prompt more closely

- DPM++ 2M Karras at 20 steps as an alternative to Euler a

- CFG value from 7 to 4 (4 can look better in terms of image quality because it will have less of the overtraining effect, but it can also look less detailed)

- denoising strength of 0.4-0.6 for general img2img, and up to around 0.8 for more hardcore cases where the style needs more denoising to be applied correctly (though that will of course change the image; also consider just doing multiple runs at 0.5-0.6)

## How to correctly use this model (it's not as simple as the other models floating around the web currently!)

This model is not as easy to use as some of the other models you might be used to. For good results prompt engineering and img2img resizing is required. I highly recommend tinkering with the prompt weights, word order in the prompt, samplers, cfg and step values, etc! The results can be well worth it!

**My recommendation is to generate a photo in the vanilla SD model, send it to img2img, then switch the model to this one and use the img2img function to convert it to the Legend of Korra style!** Also consider inpainting (though this model isn't trained on the new base inpainting model yet)! **I also recommend keeping prompts simple and the "zoom" closer to the character for better results! Though sometimes a highly complex prompt can also result in much better generations**, e.g. "Emma Watson, tlok artstyle" will almost always produce much worse results than a more complex prompt!

- **The most important bit first: SD doesn't play well with the artstyle at the standard 512x512. So your initial 512x512 generations in the artstyle will need to be resized to 1024x1024 for half-body shots and 1536x1536 for full-body shots in order to look good.** Closeups will look okay in 512x512 but I still recommend upscaling to 1024x1024.

An example:

Initial 512x512 generation

Upscaled to 1024x1024 (with an inpainted face)

Upscaled to 1536x1536 (with an inpainted face)

- **I highly recommend using the following negative prompt for *all* generations** (no matter what style, aka it massively improves the tlok artstyle generations as well!):

**blur, vignette, instagram**

This will drastically reduce the "overtrained effect" of the generations, e.g. too bright, vignetted and fried images. I have no idea why that works. It just does.

Examples:

Without the negative prompt:

With the negative prompt:

- Only for photos: You can add "photo, tlok artstyle" to the negative prompt for a further reduction in the "overtrained effect"! Doesn't always work, but sometimes does! Having photo in both the positive and negative prompt may sound nonsensical, but it works!

- **Also consider going from a CFG value of 7 down to a CFG value of 4.** This will make the image somewhat less detailed but it will also look much better in certain cases!

Example:

CFG value of 7:

CFG value of 4:

- **Use "cosplay photo" and not just "photo" in your positive prompt as just "photo" is sometimes not strong enough to force through the photo style, while "cosplay photo" almost always is because the captions were trained on that!**

Example:

Just "photo"

"cosplay photo"

- The model was trained using captions such as "cosplay photo", "full-shot", "half-body", "closeup", "facial closeup", among others. **So in case you are trying to force a different style but the tlok artstyle keeps popping up, try changing "full-shot" to "full-body" for example!**

- **Alternatively, add "tlok artstyle" to the negative prompt if you find that the Legend of Korra style is influencing your prompt too strongly!**

Example:

"19th century oil painting"

"19th century oil painting (negative prompt "tlok artstyle")"

- **Sometimes the photo generations of Korra will be too white, add "white skin" to the negative prompt in that case!**
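The recommendations in this section can be summarized as a small lookup. This is only a sketch to keep the numbers in one place: the names `UPSCALE_EDGE`, `NEGATIVE_PROMPT` and `plan_generation` are made up for illustration and do not belong to any tool, and the returned dictionary still has to be fed into whatever UI or pipeline you use.

```python
# Target edge length for the img2img resize, per shot type (initial txt2img is 512x512).
UPSCALE_EDGE = {"closeup": 1024, "half-body": 1024, "full-body": 1536}

# Negative prompt recommended above for all generations.
NEGATIVE_PROMPT = "blur, vignette, instagram"


def plan_generation(shot, cfg_scale=7, suppress_style=False):
    """Collect the recommended settings for one generation."""
    if shot not in UPSCALE_EDGE:
        raise ValueError(f"unknown shot type: {shot!r}")
    negative = NEGATIVE_PROMPT
    if suppress_style:
        # Use this when the Legend of Korra style bleeds into another style.
        negative += ", tlok artstyle"
    edge = UPSCALE_EDGE[shot]
    return {
        "initial_size": (512, 512),
        "img2img_size": (edge, edge),
        "negative_prompt": negative,
        "cfg_scale": cfg_scale,
    }


print(plan_generation("full-body", cfg_scale=4))
```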

## Example generations using this model!

empire state building tlok artstyle (using img2img)

woman with long blue hair wearing a traditional Japanese kimono during golden hour lighting tlok artstyle (resized with img2img + face inpainted)

young woman with red hair wearing modern casual white tshirt and blue jeans standing in front of the Brandenburg Gate tlok artstyle (resized with img2img + face inpainted)

written letter tlok artstyle (resized using img2img)

Korra wearing business suit stada hairstyle tlok artstyle (resized with img2img + face inpainted)

full-shot Korra wearing astronaut outfit stada hairstyle tlok artstyle (resized using img2img)

Korra wearing defa outfit stada hairstyle as a cute pixar character (resized using img2img)

half-body Korra wearing Kimono taio hairstyle figurine (resized using img2img)

dog tlok artstyle

mountain river valley tlok artstyle

Korra wearing bikini shoa hairstyle realistic detailed digital art by Greg Rutkowski

Korra wearing rain jacket and jeans stada hairstyle cosplay photograph

car on a road city street background tlok artstyle (resized using img2img)

Emma Watson (wearing defa outfit:1.3) cosplay photograph (resized using img2img)


Zendaya standing in a forest wearing runa outfit tlok artstyle (resized using img2img + face inpainted)

## Tokens to use to prompt the artstyle as well as Korra's different outfits

**You can give Korra's outfits and hairstyles also to other people thanks to the token method! You can also mix and match outfits and hairstyles however you want to**, though results may at times be worse than if you just pair the correct hairstyle to the correct outfit (aka as it was in the show)!

Legend of Korra artstyle:

- tlok artstyle

Korra's hairstyles:

- stada hairstyle (Default ponytail hair)

- oped hairstyle (Opened hair)

- loes hairstyle (Loose hair)

- shoa hairstyle (Season4 short hair)

- taio hairstyle (Traditional formal hair)

- foha hairstyle (Season4 formal hair)

- okch hairstyle (young child Korra hairstyle)

Korra's outfits:

"wearing X outfit"

**(the second words are the hairstyles, e.g. with "runa shoa" "runa" is the outfit and "shoa" the hairstyle; prompting the corresponding hairstyle alongside the outfit will give you better likeness, but you can also mix and match different hairstyles and outfits together as you see fit at the cost of likeness, though some outfits and hairstyles work better than others in this regard)**

- runa shoa (earth kingdom runaway)

- saco stada (default parka)

- aino stada (airnomad (makes her look like a child for some reason))

- fife stada (fireferrets probending uniform)

- eqli stada (equalist disguise)

- boez oped (season2 parka)

- defa stada (default outfit)

- alte stada (season2 outfit)

- asai shoa (Asami's jacket (doesn't work so well))

- taso stada (Tarrlok's taskforce)

- dava oped (dark avatar/season 3 finale)

- seri foha (series finale gown)

- fose shoa (season4 outfit)

- proe stada (probending training attire)

- tuwa shoa (turfwars finale gown from the comics (doesn't work so well))

- cidi stada (civilian disguise)

- epgo taio (traditional dress)

- bafo loes (bath/sleeping robe)

- ektu shoa (earth kingdom tunic/hoodie)

- pama loes (pajamas)

- exci stada (firebending exercise (doesn't work so well))

- as chie, wearing yowi (child korra, winter outfit from the comics)

- as chie, wearing suou (child Korra, summer outfit)
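The outfit and hairstyle pairs above lend themselves to a small prompt builder. The sketch below copies the pairs from the list and follows the word order of the example prompts on this page ("Korra wearing defa outfit stada hairstyle tlok artstyle"); the function and dictionary names are hypothetical, and the two child outfits are left out because they use a different phrasing.

```python
# Outfit token -> hairstyle token it was paired with in the list above.
OUTFIT_HAIR = {
    "runa": "shoa", "saco": "stada", "aino": "stada", "fife": "stada",
    "eqli": "stada", "boez": "oped", "defa": "stada", "alte": "stada",
    "asai": "shoa", "taso": "stada", "dava": "oped", "seri": "foha",
    "fose": "shoa", "proe": "stada", "tuwa": "shoa", "cidi": "stada",
    "epgo": "taio", "bafo": "loes", "ektu": "shoa", "pama": "loes",
    "exci": "stada",
}


def korra_prompt(outfit, hairstyle=None, subject="Korra", style="tlok artstyle"):
    """Build a prompt; the matching hairstyle is used unless one is given."""
    hair = hairstyle if hairstyle is not None else OUTFIT_HAIR[outfit]
    return f"{subject} wearing {outfit} outfit {hair} hairstyle {style}"


print(korra_prompt("defa"))
print(korra_prompt("runa", hairstyle="stada", subject="Zendaya"))
```

Passing a different `hairstyle` or `subject` corresponds to the mix-and-match use described above, at the cost of likeness.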

## Current shortcomings of the model

- the model is infected due to no regularization. This means better likeness but also means that you are better off using the original vanilla SD model for txt2img photo generations and then send them to img2img and switch the model over to this one for style transfer!

- the model may struggle at times with more complex prompts

- location tagging is very rudimentary for now (exterior, day, arctic)

- Landscapes could look better

- No tagging of unique locations, e.g. Republic City

- Korra is the only trained character for now

- a few of the outfits don't work that well because of low amount of training images or low resolution images. Generally some outfits, people, things, styles and prompts will work better than others

- likeness was better for certain prompts in my older models

## Outlook into the future

- Ideally I will be able to expand upon this model in the future by adding all the other characters from the show and maybe even ATLA characters! However, right now I am uncertain if that is possible, as the model is already heavily trained.

- Generally I want to improve this model's likeness and flexibility

- Training this model on the new base inpainting model

- I seek to produce more models in the future such as models for Ahsoka, Aloy, Owl House, Ghibli, Sadie Sink, She-Ra, various online artists... but that will take time (and money)

## How I created this model and the underlying dataset (+ dataset download link!)

At first I wanted to create only a small Korra model with only her default outfit. In the first days I experimented with the standard class and token Dreambooth method using JoePenna's repo. For that I manually downloaded 900 screenshots of Korra in her default outfit from fancaps.net, then manually cropped and resized them. As I ran into walls, I stopped trying to create this model and started over, this time trying to create a general style model using native finetuning instead.

This time I used the 40€ paid version of "Bulk Image Downloader" to automatically download around 30000 screencaps of the show from fancaps.net. I then used AntiDupl.NET to delete around half of the images, which the program had flagged as duplicates, and used ChaiNNer and IrfanView to bulk crop and resize the rest of the dataset to 512x512. I also downloaded around 200 high-quality fanarts and cosplay photos depicting Korra in her various outfits and some non-show outfits, and used IrfanView to automatically resize them to 512x512 without cropping by adding black borders to the image (luckily, those do not show up in the final model output).

I spent a lot of money on GPU renting for the native finetuning but results were worse than my Dreambooth experiments so I went back to Dreambooth and used a small fraction of the finetuning dataset to create a style model. I learned a lot this time around and improved my model results but still results were not to my liking.

That is when I found out about the caption method in JoePenna's repo. So I went ahead and spent an entire weekend, 12 hours each day, manually captioning around 1000 images. To create my final dataset I used around 300 images from the former finetuning dataset for the style, 600 of the 900 manually cropped and resized screencaps of Korra in her default outfit, and around 200 fanarts and cosplay photos, plus some additional screencaps and images of Korra in all her other outfits.

I used "Bulk File Rename" for Windows 10 to bulk rename the files, i.e. to add the captions.

**[The captioned dataset can be found here!](https://huggingface.co/datasets/ai-characters/4elements-diffusion-captioned-dataset)**

**[The 14000 show screencaps can be found for download here!](https://www.dropbox.com/s/406u0tv9xuttgku/14284%20images%2C%20512x512%2C%20automatically%20cropped%2C%20downscaled%20from%201080x1080.7z?dl=1)**

I encourage everyone to try and do it better than me and create your own Legend of Korra model!

Ultimately I spent the past two weeks experimenting with different captions and training settings to reach my final model.

My final model uses these training settings:

- Repo: JoePenna's with captions (no class or regularization and only a fake token that will not be used during training)

- Learning rate: 3e-6 (for 80 repeats) and 2e-6 (for 35 repeats)

- Repeats/Steps: See above (1 repeat = one run through the entire dataset, so 1142 steps)
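As a rough check of the numbers above, the total step count can be worked out from the repeats. This sketch assumes the two learning-rate phases were run one after the other and that one step processes one image (which is what "1 repeat = 1142 steps" suggests). The names `PHASES` and `steps_per_phase` are made up for this example.

```python
# One repeat is one pass over the 1142-image captioned dataset.
STEPS_PER_REPEAT = 1142

# (learning rate, repeats) pairs taken from the settings listed above.
# Running them sequentially is an assumption of this sketch.
PHASES = [
    (3e-6, 80),
    (2e-6, 35),
]


def steps_per_phase(phases, steps_per_repeat=STEPS_PER_REPEAT):
    """Convert (learning_rate, repeats) pairs into (learning_rate, steps) pairs."""
    return [(lr, repeats * steps_per_repeat) for lr, repeats in phases]


schedule = steps_per_phase(PHASES)
total_steps = sum(steps for _, steps in schedule)

if __name__ == "__main__":
    for lr, steps in schedule:
        print(f"learning rate {lr:g}: {steps} steps")
    print(f"total: {total_steps} steps")
```

Under these assumptions the first phase is 91,360 steps and the second 39,970 steps, for 131,330 steps in total.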

I had to use such high learning rates because, given the size of the dataset and the captions, they were needed to attain the likeness I wanted for both the style and all of Korra's outfits.

There is much more to be said here regarding my workflow, experimentation, and the like, but I don't want to make this longer than necessary and this *is* already very long.

## Alternative Download Links

[Alternative download link for the model](https://www.dropbox.com/s/ayyk6c039gux7zs/4elements-diffusion.ckpt?dl=1)

[Alternative download link for the captioned dataset](https://www.dropbox.com/s/iobslrmyvdoi8oy/1142%20images%2C%20manually%20captioned%2C%20manual%20and%20automatic%20cropping%2C%20downscaled%20from%201024x1024.7z?dl=1)

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M with all your users (please read the license entirely and carefully). [Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

Ching/negation_detector | [

"pytorch",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"RobertaForQuestionAnswering"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | null | Access to model wmduggan41/kd-distilBERT-clinc is restricted and you are not in the authorized list. Visit https://huggingface.co/wmduggan41/kd-distilBERT-clinc to ask for access. |

Chinmay/mlindia | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-11-21T19:37:03Z | ---

license: creativeml-openrail-m

---

A Stable Diffusion model based on Anything-V3.0, trained with Dreambooth on the general art style of Daniel Conway. Trained for 2,400 steps using 30 total training images.

## Usage

Like any other model, it can be used with Stable Diffusion, including the extremely popular Web UI by Automatic1111, by placing the .ckpt file in the correct directory. Please consult the documentation for your installation of Stable Diffusion for more specific instructions.

Use the following tokens in your prompt to achieve the desired output.

Token: ```"dconway"``` Class: ```"illustration style"```