| modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |

|---|---|---|---|---|---|---|

DeepPavlov/distilrubert-tiny-cased-conversational-v1 | [

"pytorch",

"distilbert",

"ru",

"arxiv:2205.02340",

"transformers"

]

| null | {

"architectures": null,

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9,141 | 2022-11-16T10:20:11Z | ---

license: openrail

library_name: diffusers

tags:

- TPU

- JAX

- Flax

- stable-diffusion

- text-to-image

language:

- en

---

|

DeltaHub/adapter_t5-3b_qnli | [

"pytorch",

"transformers"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: bert_uncased_L-2_H-128_A-2-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.913

- name: F1

type: f1

value: 0.9131486432959599

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert_uncased_L-2_H-128_A-2-finetuned-emotion

This model is a fine-tuned version of [google/bert_uncased_L-2_H-128_A-2](https://huggingface.co/google/bert_uncased_L-2_H-128_A-2) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2502

- Accuracy: 0.913

- F1: 0.9131

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.5953 | 1.0 | 250 | 1.4759 | 0.5055 | 0.3899 |

| 1.3208 | 2.0 | 500 | 1.1113 | 0.628 | 0.5554 |

| 1.0064 | 3.0 | 750 | 0.8224 | 0.79 | 0.7802 |

| 0.7535 | 4.0 | 1000 | 0.6185 | 0.8455 | 0.8425 |

| 0.5891 | 5.0 | 1250 | 0.5004 | 0.877 | 0.8758 |

| 0.4783 | 6.0 | 1500 | 0.4260 | 0.8865 | 0.8862 |

| 0.4078 | 7.0 | 1750 | 0.3787 | 0.8905 | 0.8903 |

| 0.3554 | 8.0 | 2000 | 0.3432 | 0.891 | 0.8909 |

| 0.3146 | 9.0 | 2250 | 0.3181 | 0.8925 | 0.8924 |

| 0.2808 | 10.0 | 2500 | 0.2986 | 0.8965 | 0.8970 |

| 0.2659 | 11.0 | 2750 | 0.2881 | 0.9 | 0.8999 |

| 0.2487 | 12.0 | 3000 | 0.2740 | 0.907 | 0.9072 |

| 0.2253 | 13.0 | 3250 | 0.2683 | 0.9045 | 0.9047 |

| 0.2103 | 14.0 | 3500 | 0.2650 | 0.9095 | 0.9099 |

| 0.1995 | 15.0 | 3750 | 0.2551 | 0.9105 | 0.9108 |

| 0.1894 | 16.0 | 4000 | 0.2534 | 0.9085 | 0.9088 |

| 0.1791 | 17.0 | 4250 | 0.2473 | 0.91 | 0.9102 |

| 0.168 | 18.0 | 4500 | 0.2441 | 0.913 | 0.9134 |

| 0.1563 | 19.0 | 4750 | 0.2459 | 0.9105 | 0.9107 |

| 0.1511 | 20.0 | 5000 | 0.2497 | 0.9075 | 0.9076 |

| 0.1363 | 21.0 | 5250 | 0.2502 | 0.913 | 0.9131 |
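The Step column advances by 250 per epoch with `train_batch_size: 64`, which suggests a training set of roughly 16,000 examples. The sketch below reproduces that arithmetic; it assumes a single device, no gradient accumulation, and a full final batch, and the helper name `approx_train_examples` is hypothetical.

```python
def approx_train_examples(steps_per_epoch: int, batch_size: int, grad_accum: int = 1) -> int:
    """Upper bound on examples seen per epoch, assuming every batch is full."""
    return steps_per_epoch * batch_size * grad_accum


# Difference between the Step values of two consecutive epoch rows in the table.
steps_per_epoch = 500 - 250

print(approx_train_examples(steps_per_epoch, batch_size=64))  # 16000
```

If the last batch of each epoch is only partly filled, the true dataset size is slightly below this bound.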

### Framework versions

- Transformers 4.13.0

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

DemangeJeremy/4-sentiments-with-flaubert | [

"pytorch",

"flaubert",

"text-classification",

"fr",

"transformers",

"sentiments",

"french",

"flaubert-large"

]

| text-classification | {

"architectures": [

"FlaubertForSequenceClassification"

],

"model_type": "flaubert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 226 | null | Access to model AlexKozachuk/Kitchen is restricted and you are not in the authorized list. Visit https://huggingface.co/AlexKozachuk/Kitchen to ask for access. |

Deniskin/emailer_medium_300 | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 14 | null | # This is the min-stable-diffusion weights file

## I hope you enjoy it. I hope you can discover light!!!

#### weight file notes

1) wd-1-3-penultimate-ucg-cont.pt is waifu-diffusion-v1-4 weight

2) mdjrny-v4.pt is midjourney-v4-diffusion weight

3) stable_diffusion_v1_4.pt is CompVis/stable-diffusion-v1-4

4) stable_diffusion_v1_5.pt is runwayml/stable-diffusion-v1-5

5) animev3.pt is https://huggingface.co/Linaqruf/personal_backup/tree/main/animev3ckpt

6) Anything-V3.0.pt is https://huggingface.co/Linaqruf/anything-v3.0

#### Installation and usage: see GitHub

https://github.com/scale100xu/min-stable-diffusion

|

Deniskin/essays_small_2000 | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: vit-large-patch32-384-finetuned-melanoma

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8272727272727273

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-large-patch32-384-finetuned-melanoma

This model is a fine-tuned version of [google/vit-large-patch32-384](https://huggingface.co/google/vit-large-patch32-384) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0767

- Accuracy: 0.8273

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 40
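The hyperparameters above combine a per-device batch of 1 with 4 gradient-accumulation steps, and a linear schedule with a 10% warmup. A minimal sketch of both calculations follows. It assumes that `linear` means a linear ramp from zero to the base learning rate during warmup, followed by a linear decay to zero, and it takes the total of 22,000 optimizer steps from the last row of the training results table. The function name `linear_warmup_lr` is hypothetical.

```python
import math


def linear_warmup_lr(step, base_lr, total_steps, warmup_ratio):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp up from 0 to base_lr over the warmup phase.
        return base_lr * step / max(1, warmup_steps)
    # Decay from base_lr down to 0 over the remaining steps.
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, remaining)


per_device_batch = 1
grad_accum = 4
effective_batch = per_device_batch * grad_accum  # matches total_train_batch_size: 4

for step in (0, 1100, 2200, 12100, 22000):
    print(step, linear_warmup_lr(step, base_lr=1e-5, total_steps=22000, warmup_ratio=0.1))
```

Under these assumptions the learning rate peaks at about step 2,200, the end of epoch 4.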

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 1.0081 | 1.0 | 550 | 0.7650 | 0.68 |

| 0.7527 | 2.0 | 1100 | 0.6693 | 0.7364 |

| 0.6234 | 3.0 | 1650 | 0.6127 | 0.7709 |

| 2.6284 | 4.0 | 2200 | 0.6788 | 0.7655 |

| 0.1406 | 5.0 | 2750 | 0.6657 | 0.7836 |

| 0.317 | 6.0 | 3300 | 0.6936 | 0.78 |

| 2.5358 | 7.0 | 3850 | 0.7104 | 0.7909 |

| 1.5802 | 8.0 | 4400 | 0.6928 | 0.8 |

| 0.088 | 9.0 | 4950 | 0.8060 | 0.7982 |

| 0.0183 | 10.0 | 5500 | 0.7811 | 0.8091 |

| 0.0074 | 11.0 | 6050 | 0.7185 | 0.7945 |

| 0.0448 | 12.0 | 6600 | 0.8780 | 0.7909 |

| 0.4288 | 13.0 | 7150 | 0.8229 | 0.82 |

| 0.017 | 14.0 | 7700 | 0.7516 | 0.8182 |

| 0.0057 | 15.0 | 8250 | 0.7974 | 0.7964 |

| 1.7571 | 16.0 | 8800 | 0.7866 | 0.8218 |

| 1.3159 | 17.0 | 9350 | 0.8491 | 0.8073 |

| 1.649 | 18.0 | 9900 | 0.8432 | 0.7891 |

| 0.0014 | 19.0 | 10450 | 0.8870 | 0.82 |

| 0.002 | 20.0 | 11000 | 0.9460 | 0.8236 |

| 0.3717 | 21.0 | 11550 | 0.8866 | 0.8327 |

| 0.0025 | 22.0 | 12100 | 1.0287 | 0.8073 |

| 0.0094 | 23.0 | 12650 | 0.9696 | 0.8091 |

| 0.002 | 24.0 | 13200 | 0.9659 | 0.8018 |

| 0.1001 | 25.0 | 13750 | 0.9712 | 0.8327 |

| 0.2953 | 26.0 | 14300 | 1.0512 | 0.8236 |

| 0.0141 | 27.0 | 14850 | 1.0503 | 0.82 |

| 0.612 | 28.0 | 15400 | 1.2020 | 0.8109 |

| 0.0792 | 29.0 | 15950 | 1.0498 | 0.8364 |

| 0.0117 | 30.0 | 16500 | 1.0079 | 0.8327 |

| 0.0568 | 31.0 | 17050 | 1.0199 | 0.8255 |

| 0.0001 | 32.0 | 17600 | 1.0319 | 0.8291 |

| 0.075 | 33.0 | 18150 | 1.0427 | 0.8382 |

| 0.001 | 34.0 | 18700 | 1.1289 | 0.8382 |

| 0.0001 | 35.0 | 19250 | 1.0589 | 0.8364 |

| 0.0006 | 36.0 | 19800 | 1.0349 | 0.8236 |

| 0.0023 | 37.0 | 20350 | 1.1192 | 0.8273 |

| 0.0002 | 38.0 | 20900 | 1.0863 | 0.8273 |

| 0.2031 | 39.0 | 21450 | 1.0604 | 0.8255 |

| 0.0006 | 40.0 | 22000 | 1.0767 | 0.8273 |
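In the table above, validation loss reaches its minimum early (epoch 3) while accuracy peaks much later (epochs 33 and 34), so the "best" checkpoint depends on the metric used for selection. The sketch below illustrates this with a subset of rows copied from the table; it is not the selection logic used to produce this model.

```python
# (epoch, validation_loss, accuracy) rows copied from the table above (a subset).
rows = [
    (1, 0.7650, 0.68),
    (3, 0.6127, 0.7709),
    (10, 0.7811, 0.8091),
    (21, 0.8866, 0.8327),
    (33, 1.0427, 0.8382),
    (40, 1.0767, 0.8273),
]

# Pick the checkpoint with the lowest validation loss and the one with the highest accuracy.
best_by_loss = min(rows, key=lambda r: r[1])
best_by_acc = max(rows, key=lambda r: r[2])

print("lowest validation loss:", best_by_loss)
print("highest accuracy:", best_by_acc)
```

The widening gap between a near-zero training loss and a rising validation loss suggests overfitting, even though accuracy continues to improve slightly.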

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

DeskDown/MarianMixFT_en-ms | [

"pytorch",

"marian",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MarianMTModel"

],

"model_type": "marian",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 512 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1294 with parameters:

```

{'batch_size': 3, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`
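As a rough illustration of what this loss optimizes, the sketch below computes the mean squared error between the cosine similarity of two embeddings and a gold similarity score. It assumes the default configuration of the loss; the toy vectors and labels are made up, and the function names are hypothetical rather than part of the sentence-transformers API.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between predicted cosine similarity and the gold score."""
    errors = [(cosine_similarity(u, v) - y) ** 2 for (u, v), y in zip(pairs, labels)]
    return sum(errors) / len(errors)


# Toy embedding pairs: one identical pair, one orthogonal pair.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]

print(cosine_similarity_loss(pairs, labels=[1.0, 0.0]))  # labels agree with the geometry
print(cosine_similarity_loss(pairs, labels=[1.0, 1.0]))  # second label disagrees
```

The loss is zero when the embedding geometry already matches the labels and grows as the predicted similarity moves away from the gold score.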

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 1294,

"warmup_steps": 130,

"weight_decay": 0.01

}

```

## Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 4096, 'do_lower_case': False}) with Transformer model: LongformerModel
  (1): Pooling({'word_embedding_dimension': 512, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)
```

## Citing & Authors

<!--- Describe where people can find more information --> |

DeskDown/MarianMixFT_en-my | [

"pytorch",

"marian",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MarianMTModel"

],

"model_type": "marian",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

---

Example result:

===============

# Using whitemanedb_step_3500.ckpt

# Using dbwhitemane.ckpt


Clip skip comparison

For now I have uploaded 3 models (more are coming for whitemane):

- [whitemanedb_step_2500.ckpt](https://huggingface.co/sd-dreambooth-library/sally-whitemanev/blob/main/whitemanedb_step_2500.ckpt)

- [whitemanedb_step_3500.ckpt](https://huggingface.co/sd-dreambooth-library/sally-whitemanev/blob/main/whitemanedb_step_3500.ckpt)

Both were trained on 21 images, and the trigger word is "whitemanedb". This was my first attempt, and I didn't get the final file because I ran out of space on the drive :\ but the model seems to work just fine.

The second model is [dbwhitemane.ckpt](https://huggingface.co/sd-dreambooth-library/sally-whitemanev/blob/main/dbwhitemane.ckpt)

This one has a total of 39 images used for training that you can find [here](https://huggingface.co/sd-dreambooth-library/sally-whitemanev/tree/main/dataset)

**The model is based on AnythingV3 FP16 [38c1ebe3], so I recommend using a VAE from NAI, Anything or WaifuDiffusion.**

**Setting clip skip to 2 will also help, because it is based on the NAI model.**

# Prompt examples

This one is for the comparison at the top

> whitemanedb , 8k, 4k, (highres:1.1), best quality, (masterpiece:1.3), (red eyes:1.2), blush, embarrassed

> Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, deformed face, (poorly drawn face)),((buckteeth)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), 1boy,

> Steps: 45, Sampler: Euler a, CFG scale: 7, Seed: 772493513, Size: 512x512, Model hash: 313ad056, Eta: 0.07, Clip skip: 2

> whitemanedb taking a bath, 8k, 4k, (highres:1.1), best quality, (masterpiece:1.3), (red eyes:1.2), nsfw, nude, blush, nipples,

> Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, deformed face, (poorly drawn face)),((buckteeth)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), 1boy,

> Steps: 45, Sampler: Euler a, CFG scale: 7, Seed: 3450621385, Size: 512x512, Model hash: 313ad056, Eta: 0.07, Clip skip: 2

> whitemanedb in a forest

> Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, deformed face

> Steps: 35, Sampler: Euler a, CFG scale: 10.0, Seed: 2547952708, Size: 512x512, Model hash: 313ad056, Eta: 0.07, Clip skip: 2

> lying in the ground , princess, 1girl, solo, sbwhitemane in forest , leather armor, red eyes, lush

> Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, deformed face, (poorly drawn face)),((buckteeth)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), 1boy,

> Steps: 58, Sampler: Euler a, CFG scale: 7, Seed: 1390776440, Size: 512x512, Model hash: 8b1a4378, Clip skip: 2

> sbwhitemane leaning forward, princess, 1girl, solo,elf in forest , leather armor, large eyes, (ice green eyes:1.1), lush, blonde hair, realistic photo

> Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, deformed face, (poorly drawn face)),((buckteeth)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), 1boy,

> Steps: 45, Sampler: Euler a, CFG scale: 7, Seed: 1501953711, Size: 512x512, Model hash: 8b1a4378, Clip skip: 2

Enjoy! Any recommendations or help are welcome; this is my first model and a lot of things can probably be improved. |

DeskDown/MarianMix_en-zh-10 | [

"pytorch",

"tensorboard",

"marian",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MarianMTModel"

],

"model_type": "marian",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2313

- Accuracy: 0.92

- F1: 0.9200

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8717 | 1.0 | 250 | 0.3385 | 0.9015 | 0.8976 |

| 0.2633 | 2.0 | 500 | 0.2313 | 0.92 | 0.9200 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Tokenizers 0.13.2

|

Dhritam/Zova-bot | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- generated_from_trainer

model-index:

- name: unifiedqa-v2-t5-base-1363200-finetuned-causalqa-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# unifiedqa-v2-t5-base-1363200-finetuned-causalqa-squad

This model is a fine-tuned version of [allenai/unifiedqa-v2-t5-base-1363200](https://huggingface.co/allenai/unifiedqa-v2-t5-base-1363200) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2574

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.7378 | 0.05 | 73 | 1.1837 |

| 0.6984 | 0.1 | 146 | 0.8918 |

| 0.4511 | 0.15 | 219 | 0.8342 |

| 0.4696 | 0.2 | 292 | 0.7642 |

| 0.295 | 0.25 | 365 | 0.7996 |

| 0.266 | 0.3 | 438 | 0.7773 |

| 0.2372 | 0.35 | 511 | 0.8592 |

| 0.2881 | 0.39 | 584 | 0.8440 |

| 0.2578 | 0.44 | 657 | 0.8306 |

| 0.2733 | 0.49 | 730 | 0.8228 |

| 0.2073 | 0.54 | 803 | 0.8419 |

| 0.2683 | 0.59 | 876 | 0.8241 |

| 0.2693 | 0.64 | 949 | 0.8573 |

| 0.355 | 0.69 | 1022 | 0.8204 |

| 0.2246 | 0.74 | 1095 | 0.8530 |

| 0.2468 | 0.79 | 1168 | 0.8410 |

| 0.3102 | 0.84 | 1241 | 0.8035 |

| 0.2115 | 0.89 | 1314 | 0.8262 |

| 0.1855 | 0.94 | 1387 | 0.8560 |

| 0.1772 | 0.99 | 1460 | 0.8747 |

| 0.1509 | 1.04 | 1533 | 0.9132 |

| 0.1871 | 1.09 | 1606 | 0.8920 |

| 0.1624 | 1.14 | 1679 | 0.9085 |

| 0.1404 | 1.18 | 1752 | 0.9460 |

| 0.1639 | 1.23 | 1825 | 0.9812 |

| 0.0983 | 1.28 | 1898 | 0.9790 |

| 0.1395 | 1.33 | 1971 | 0.9843 |

| 0.1439 | 1.38 | 2044 | 0.9877 |

| 0.1397 | 1.43 | 2117 | 1.0338 |

| 0.1095 | 1.48 | 2190 | 1.0589 |

| 0.1228 | 1.53 | 2263 | 1.0498 |

| 0.1246 | 1.58 | 2336 | 1.0923 |

| 0.1438 | 1.63 | 2409 | 1.0995 |

| 0.1305 | 1.68 | 2482 | 1.0867 |

| 0.1077 | 1.73 | 2555 | 1.1013 |

| 0.2104 | 1.78 | 2628 | 1.0765 |

| 0.1633 | 1.83 | 2701 | 1.0796 |

| 0.1658 | 1.88 | 2774 | 1.0314 |

| 0.1358 | 1.92 | 2847 | 0.9823 |

| 0.1571 | 1.97 | 2920 | 0.9826 |

| 0.1127 | 2.02 | 2993 | 1.0324 |

| 0.0927 | 2.07 | 3066 | 1.0679 |

| 0.0549 | 2.12 | 3139 | 1.1069 |

| 0.0683 | 2.17 | 3212 | 1.1624 |

| 0.0677 | 2.22 | 3285 | 1.1174 |

| 0.0615 | 2.27 | 3358 | 1.1431 |

| 0.0881 | 2.32 | 3431 | 1.1721 |

| 0.0807 | 2.37 | 3504 | 1.1885 |

| 0.0955 | 2.42 | 3577 | 1.1991 |

| 0.0779 | 2.47 | 3650 | 1.1999 |

| 0.11 | 2.52 | 3723 | 1.1774 |

| 0.0852 | 2.57 | 3796 | 1.2095 |

| 0.0616 | 2.62 | 3869 | 1.1824 |

| 0.072 | 2.67 | 3942 | 1.2397 |

| 0.1055 | 2.71 | 4015 | 1.2181 |

| 0.0806 | 2.76 | 4088 | 1.2159 |

| 0.0684 | 2.81 | 4161 | 1.1864 |

| 0.0869 | 2.86 | 4234 | 1.1816 |

| 0.1023 | 2.91 | 4307 | 1.1717 |

| 0.0583 | 2.96 | 4380 | 1.1477 |

| 0.0684 | 3.01 | 4453 | 1.1662 |

| 0.0319 | 3.06 | 4526 | 1.2174 |

| 0.0609 | 3.11 | 4599 | 1.1947 |

| 0.0435 | 3.16 | 4672 | 1.1821 |

| 0.0417 | 3.21 | 4745 | 1.1964 |

| 0.0502 | 3.26 | 4818 | 1.2140 |

| 0.0844 | 3.31 | 4891 | 1.2028 |

| 0.0692 | 3.36 | 4964 | 1.2215 |

| 0.0366 | 3.41 | 5037 | 1.2136 |

| 0.0615 | 3.46 | 5110 | 1.2224 |

| 0.0656 | 3.5 | 5183 | 1.2468 |

| 0.0469 | 3.55 | 5256 | 1.2554 |

| 0.0475 | 3.6 | 5329 | 1.2804 |

| 0.0998 | 3.65 | 5402 | 1.2035 |

| 0.0505 | 3.7 | 5475 | 1.2095 |

| 0.0459 | 3.75 | 5548 | 1.2064 |

| 0.0256 | 3.8 | 5621 | 1.2164 |

| 0.0831 | 3.85 | 5694 | 1.2154 |

| 0.0397 | 3.9 | 5767 | 1.2126 |

| 0.0449 | 3.95 | 5840 | 1.2174 |

| 0.0322 | 4.0 | 5913 | 1.2288 |

| 0.059 | 4.05 | 5986 | 1.2274 |

| 0.0382 | 4.1 | 6059 | 1.2228 |

| 0.0202 | 4.15 | 6132 | 1.2177 |

| 0.0328 | 4.2 | 6205 | 1.2305 |

| 0.0407 | 4.24 | 6278 | 1.2342 |

| 0.0356 | 4.29 | 6351 | 1.2448 |

| 0.0414 | 4.34 | 6424 | 1.2537 |

| 0.0448 | 4.39 | 6497 | 1.2540 |

| 0.0545 | 4.44 | 6570 | 1.2552 |

| 0.0492 | 4.49 | 6643 | 1.2570 |

| 0.0293 | 4.54 | 6716 | 1.2594 |

| 0.0498 | 4.59 | 6789 | 1.2562 |

| 0.0349 | 4.64 | 6862 | 1.2567 |

| 0.0497 | 4.69 | 6935 | 1.2550 |

| 0.0194 | 4.74 | 7008 | 1.2605 |

| 0.0255 | 4.79 | 7081 | 1.2590 |

| 0.0212 | 4.84 | 7154 | 1.2571 |

| 0.0231 | 4.89 | 7227 | 1.2583 |

| 0.0399 | 4.94 | 7300 | 1.2580 |

| 0.0719 | 4.99 | 7373 | 1.2574 |
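A few quantities can be read off the table above with simple arithmetic: the evaluation interval, an estimate of the steps per epoch, and how far the final validation loss sits above its minimum. The sketch below uses only values copied from the table and the hyperparameter list. The steps-per-epoch figure is approximate because the Epoch column is rounded to two decimals, and the example count assumes a single device with no gradient accumulation.

```python
EVAL_EVERY = 73         # Step difference between consecutive rows
FINAL_STEP = 7373       # Step value in the last row
TRAIN_BATCH_SIZE = 2    # from the hyperparameter list

# Number of evaluation rows logged during training.
num_evals = FINAL_STEP // EVAL_EVERY

# The row at epoch 4.0 has Step 5913, which gives an estimate of steps per epoch.
steps_per_epoch = 5913 / 4.0
approx_examples = round(steps_per_epoch) * TRAIN_BATCH_SIZE

# Lowest validation loss (step 292) versus the final value (step 7373).
best_val_loss, final_val_loss = 0.7642, 1.2574
gap = final_val_loss - best_val_loss

print(num_evals, steps_per_epoch, approx_examples)
print(f"validation loss rose by {gap:.4f} after its minimum")
```

The training loss keeps falling while the validation loss rises after step 292, which is consistent with overfitting over the remaining epochs.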

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Dibyaranjan/nl_image_search | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: cc-by-nc-4.0

tags:

- galactica

widget:

- text: "The Transformer architecture [START_REF]"

- text: "The Schwarzschild radius is defined as: \\["

- text: "A force of 0.6N is applied to an object, which accelerates at 3m/s. What is its mass? <work>"

- text: "Lecture 1: The Ising Model\n\n"

- text: "[START_I_SMILES]"

- text: "[START_AMINO]GHMQSITAGQKVISKHKNGRFYQCEVVRLTTETFYEVNFDDGSFSDNLYPEDIVSQDCLQFGPPAEGEVVQVRWTDGQVYGAKFVASHPIQMYQVEFEDGSQLVVKRDDVYTLDEELP[END_AMINO] ## Keywords"

inference: false

---

# GALACTICA 1.3B (base)

Model card from the original [repo](https://github.com/paperswithcode/galai/blob/main/docs/model_card.md)

Following [Mitchell et al. (2018)](https://arxiv.org/abs/1810.03993), this model card provides information about the GALACTICA model, how it was trained, and the intended use cases. Full details about how the model was trained and evaluated can be found in the [release paper](https://galactica.org/paper.pdf).

## Model Details

The GALACTICA models are trained on a large-scale scientific corpus. The models are designed to perform scientific tasks, including but not limited to citation prediction, scientific QA, mathematical reasoning, summarization, document generation, molecular property prediction and entity extraction. The models were developed by the Papers with Code team at Meta AI to study the use of language models for the automatic organization of science. We train models with sizes ranging from 125M to 120B parameters. Below is a summary of the released models:

| Size | Parameters |

|:-----------:|:-----------:|

| `mini` | 125 M |

| `base` | 1.3 B |

| `standard` | 6.7 B |

| `large` | 30 B |

| `huge` | 120 B |

## Release Date

November 2022

## Model Type

Transformer based architecture in a decoder-only setup with a few modifications (see paper for more details).

## Paper & Demo

[Paper](https://galactica.org/paper.pdf) / [Demo](https://galactica.org)

## Model Use

The primary intended users of the GALACTICA models are researchers studying language models applied to the scientific domain. We also anticipate the model will be useful for developers who wish to build scientific tooling. However, we caution against production use without safeguards given the potential of language models to hallucinate.

The models are made available under a non-commercial CC BY-NC 4.0 license. More information about how to use the model can be found in the README.md of this repository.

## Training Data

The GALACTICA models are trained on 106 billion tokens of open-access scientific text and data. This includes papers, textbooks, scientific websites, encyclopedias, reference material, knowledge bases, and more. We tokenize different modalities to provide a natural language interface for different tasks. See the README.md for more information. See the paper for full information on the training data.

## How to use

Find below some example scripts on how to use the model in `transformers`:

## Using the Pytorch model

### Running the model on a CPU

<details>

<summary> Click to expand </summary>

```python

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-1.3b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-1.3b")

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

### Running the model on a GPU

<details>

<summary> Click to expand </summary>

```python

# pip install accelerate

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-1.3b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-1.3b", device_map="auto")

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

### Running the model on a GPU using different precisions

#### FP16

<details>

<summary> Click to expand </summary>

```python

# pip install accelerate

import torch

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-1.3b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-1.3b", device_map="auto", torch_dtype=torch.float16)

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

#### INT8

<details>

<summary> Click to expand </summary>

```python

# pip install bitsandbytes accelerate

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-1.3b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-1.3b", device_map="auto", load_in_8bit=True)

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

## Performance and Limitations

The model outperforms several existing language models on a range of knowledge probes, reasoning, and knowledge-intensive scientific tasks. This also extends to general NLP tasks, where GALACTICA outperforms other open source general language models. That being said, we note a number of limitations in this section.

As with other language models, GALACTICA is often prone to hallucination - and training on a high-quality academic corpus does not prevent this, especially for less popular and less cited scientific concepts. There are no guarantees of truthful output when generating from the model. This extends to specific modalities such as citation prediction. While GALACTICA's citation behaviour approaches the ground truth citation behaviour with scale, the model continues to exhibit a popularity bias at larger scales.

In addition, we evaluated the model on several types of benchmarks related to stereotypes and toxicity. Overall, the model exhibits substantially lower toxicity rates compared to other large language models. That being said, the model continues to exhibit bias on certain measures (see the paper for details). So we recommend care when using the model for generations.

## Broader Implications

GALACTICA can potentially be used as a new way to discover academic literature. We also expect a lot of downstream use for application to particular domains, such as mathematics, biology, and chemistry. In the paper, we demonstrated several examples of the model acting as an alternative to standard search tools. We expect a new generation of scientific tools to be built upon large language models such as GALACTICA.

We encourage researchers to investigate beneficial and new use cases for these models. That being said, it is important to be aware of the current limitations of large language models. Researchers should pay attention to common issues such as hallucination and biases that could emerge from using these models.

## Citation

```bibtex

@inproceedings{GALACTICA,

title={GALACTICA: A Large Language Model for Science},

author={Ross Taylor and Marcin Kardas and Guillem Cucurull and Thomas Scialom and Anthony Hartshorn and Elvis Saravia and Andrew Poulton and Viktor Kerkez and Robert Stojnic},

year={2022}

}

``` |

Digakive/Hsgshs | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

language:

- uk

- sv

tags:

- generated_from_trainer

- translation

model-index:

- name: mt-uk-sv-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt-uk-sv-finetuned

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-uk-sv](https://huggingface.co/Helsinki-NLP/opus-mt-uk-sv) on an unspecified dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.4210

- eval_bleu: 40.6634

- eval_runtime: 966.5303

- eval_samples_per_second: 18.744

- eval_steps_per_second: 4.687

- epoch: 6.0

- step: 40764

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 24

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP
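The reported step counts and throughput figures can be cross-checked with a little arithmetic. The sketch below is only a sanity check, assuming a single device and no gradient accumulation (neither is stated in the card); the variable names are illustrative.

```python
# Figures copied from the evaluation results and hyperparameters above.
global_step = 40764
epoch = 6.0
train_batch_size = 24
eval_batch_size = 4
eval_runtime = 966.5303            # seconds
eval_samples_per_second = 18.744
eval_steps_per_second = 4.687

# Optimizer steps per epoch, and an upper bound on the training set size
# (the last batch of an epoch may be partial).
steps_per_epoch = global_step / epoch
approx_train_examples = steps_per_epoch * train_batch_size

# Evaluation set size estimated two ways: from samples/s and from steps/s.
approx_eval_samples = eval_runtime * eval_samples_per_second
approx_eval_steps = eval_runtime * eval_steps_per_second

print(f"steps per epoch:        {steps_per_epoch:.0f}")
print(f"train examples (max):   {approx_train_examples:.0f}")
print(f"eval samples (approx.): {approx_eval_samples:.0f}")
print(f"eval steps (approx.):   {approx_eval_steps:.0f}")
```

Under these assumptions the two evaluation estimates agree to within one batch, which suggests the logged numbers are internally consistent.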

### Framework versions

- Transformers 4.25.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Dilmk2/DialoGPT-small-harrypotter | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 13 | null | ---

library_name: stable-baselines3

tags:

- seals/MountainCar-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: -100.60 +/- 5.75

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: seals/MountainCar-v0

type: seals/MountainCar-v0

---

# **PPO** Agent playing **seals/MountainCar-v0**

This is a trained model of a **PPO** agent playing **seals/MountainCar-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo ppo --env seals/MountainCar-v0 -orga ernestumorga -f logs/

python enjoy.py --algo ppo --env seals/MountainCar-v0 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo ppo --env seals/MountainCar-v0 -orga ernestumorga -f logs/

rl_zoo3 enjoy --algo ppo --env seals/MountainCar-v0 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo ppo --env seals/MountainCar-v0 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo ppo --env seals/MountainCar-v0 -f logs/ -orga ernestumorga

```

## Hyperparameters

```python

OrderedDict([('batch_size', 512),

('clip_range', 0.2),

('ent_coef', 6.4940755116195606e-06),

('gae_lambda', 0.98),

('gamma', 0.99),

('learning_rate', 0.0004476103728105138),

('max_grad_norm', 1),

('n_envs', 16),

('n_epochs', 20),

('n_steps', 256),

('n_timesteps', 1000000.0),

('normalize', 'dict(norm_obs=False, norm_reward=True)'),

('policy',

'imitation.policies.base.MlpPolicyWithNormalizeFeaturesExtractor'),

('policy_kwargs',

'dict(activation_fn=nn.Tanh, net_arch=[dict(pi=[64, 64], vf=[64, '

'64])])'),

('vf_coef', 0.25988158989488963),

('normalize_kwargs', {'norm_obs': False, 'norm_reward': False})])

```
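To make the hyperparameters above more concrete, the following sketch works out how much data each PPO update sees. It assumes the usual Stable Baselines3 convention that one rollout collects `n_steps` transitions from each of the `n_envs` environments and that `batch_size` is the minibatch size; it is an illustration, not part of the RL Zoo.

```python
import math

# Values copied from the hyperparameter dump above.
n_envs = 16
n_steps = 256
batch_size = 512
n_epochs = 20
n_timesteps = 1_000_000

# Transitions collected before each policy update.
rollout_size = n_envs * n_steps                          # 4096
# Minibatches per pass over the rollout buffer.
minibatches_per_epoch = rollout_size // batch_size       # 8
# Gradient steps performed on each rollout.
updates_per_rollout = minibatches_per_epoch * n_epochs   # 160
# Rollouts needed to reach the timestep budget (rounded up).
num_rollouts = math.ceil(n_timesteps / rollout_size)     # 245

print(rollout_size, minibatches_per_epoch, updates_per_rollout, num_rollouts)
```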

|

DingleyMaillotUrgell/homer-bot | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null | ---

language: id

license: mit

datasets:

- oscar

- wikipedia

- id_newspapers_2018

widget:

- text: Saya [MASK] makan nasi goreng.

- text: Kucing itu sedang bermain dengan [MASK].

pipeline_tag: fill-mask

---

# Indonesian small BigBird model

## Source Code

Source code to create this model is available at [https://github.com/ilos-vigil/bigbird-small-indonesian](https://github.com/ilos-vigil/bigbird-small-indonesian).

## Downstream Task

* NLI/ZSC: [ilos-vigil/bigbird-small-indonesian-nli](https://huggingface.co/ilos-vigil/bigbird-small-indonesian-nli)

## Model Description

This **cased** model has been pretrained with a masked LM objective. It has ~30M parameters and was pretrained for 8 epochs (51,474 steps), reaching 2.078 eval loss (7.988 perplexity). The architecture of this model is shown in the configuration snippet below. The tokenizer was trained on the whole dataset with a 30K vocabulary size.

```py

from transformers import BigBirdConfig

config = BigBirdConfig(
    vocab_size=30_000,
    hidden_size=512,
    num_hidden_layers=4,
    num_attention_heads=8,
    intermediate_size=2048,
    max_position_embeddings=4096,
    is_encoder_decoder=False,
    attention_type='block_sparse'
)

```
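The perplexity quoted in this section is consistent with exponentiating the eval loss. The sketch below assumes the loss is the mean cross-entropy in nats, which is the usual convention but is not stated explicitly in the card.

```python
import math

# Eval loss reported for the final checkpoint (rounded to 3 decimals in the card).
eval_loss = 2.078

# Perplexity is the exponential of the mean cross-entropy loss.
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.3f}")
```

Because the published loss is already rounded, the recomputed value can differ from the reported one in the last digit.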

## How to use

> Inference with Transformers pipeline (one MASK token)

```py

>>> from transformers import pipeline

>>> pipe = pipeline(task='fill-mask', model='ilos-vigil/bigbird-small-indonesian')

>>> pipe('Saya sedang bermain [MASK] teman saya.')

[{'score': 0.7199566960334778,

'token': 14,

'token_str':'dengan',

'sequence': 'Saya sedang bermain dengan teman saya.'},

{'score': 0.12370546162128448,

'token': 17,

'token_str': 'untuk',

'sequence': 'Saya sedang bermain untuk teman saya.'},

{'score': 0.0385284349322319,

'token': 331,

'token_str': 'bersama',

'sequence': 'Saya sedang bermain bersama teman saya.'},

{'score': 0.012146958149969578,

'token': 28,

'token_str': 'oleh',

'sequence': 'Saya sedang bermain oleh teman saya.'},

{'score': 0.009499032981693745,

'token': 25,

'token_str': 'sebagai',

'sequence': 'Saya sedang bermain sebagai teman saya.'}]

```

> Inference with PyTorch (one or multiple MASK tokens)

```py

import torch
from transformers import BigBirdTokenizerFast, BigBirdForMaskedLM
from pprint import pprint

tokenizer = BigBirdTokenizerFast.from_pretrained('ilos-vigil/bigbird-small-indonesian')
model = BigBirdForMaskedLM.from_pretrained('ilos-vigil/bigbird-small-indonesian')
topk = 5
text = 'Saya [MASK] bermain [MASK] teman saya.'

tokenized_text = tokenizer(text, return_tensors='pt')
raw_output = model(**tokenized_text)
# Top-k candidate token ids and softmax scores for every position
tokenized_output = torch.topk(raw_output.logits, topk, dim=2).indices
score_output = torch.softmax(raw_output.logits, dim=2)

result = []
for position_idx in range(tokenized_text['input_ids'][0].shape[0]):
    # Only collect predictions at [MASK] positions
    if tokenized_text['input_ids'][0][position_idx] == tokenizer.mask_token_id:
        outputs = []
        for token_idx in tokenized_output[0, position_idx]:
            output = {}
            output['score'] = score_output[0, position_idx, token_idx].item()
            output['token'] = token_idx.item()
            output['token_str'] = tokenizer.decode(output['token'])
            outputs.append(output)
        result.append(outputs)

pprint(result)

```

```py

[[{'score': 0.22353802621364594, 'token': 36, 'token_str': 'dapat'},

{'score': 0.13962049782276154, 'token': 24, 'token_str': 'tidak'},

{'score': 0.13610956072807312, 'token': 32, 'token_str': 'juga'},

{'score': 0.0725034773349762, 'token': 584, 'token_str': 'bermain'},

{'score': 0.033740025013685226, 'token': 38, 'token_str': 'akan'}],

[{'score': 0.7111291885375977, 'token': 14, 'token_str': 'dengan'},

{'score': 0.10754624754190445, 'token': 17, 'token_str': 'untuk'},

{'score': 0.022657711058855057, 'token': 331, 'token_str': 'bersama'},

{'score': 0.020862115547060966, 'token': 25, 'token_str': 'sebagai'},

{'score': 0.013086902908980846, 'token': 11, 'token_str': 'di'}]]

```

## Limitations and bias

Due to its low parameter count and case-sensitive tokenizer/model, this model is expected to perform poorly on certain fine-tuned tasks. Like any language model, it reflects biases in its training data, which comes from various sources. Here's an example of how the model can produce biased predictions:

```py

>>> pipe('Memasak dirumah adalah kewajiban seorang [MASK].')

[{'score': 0.16381049156188965,

'sequence': 'Memasak dirumah adalah kewajiban seorang budak.',

'token': 4910,

'token_str': 'budak'},

{'score': 0.1334381103515625,

'sequence': 'Memasak dirumah adalah kewajiban seorang wanita.',

'token': 649,

'token_str': 'wanita'},

{'score': 0.11588197946548462,

'sequence': 'Memasak dirumah adalah kewajiban seorang lelaki.',

'token': 6368,

'token_str': 'lelaki'},

{'score': 0.061377108097076416,

'sequence': 'Memasak dirumah adalah kewajiban seorang diri.',

'token': 258,

'token_str': 'diri'},

{'score': 0.04679233580827713,

'sequence': 'Memasak dirumah adalah kewajiban seorang gadis.',

'token': 6845,

'token_str': 'gadis'}]

```

## Training and evaluation data

This model was pretrained on [Indonesian Wikipedia](https://huggingface.co/datasets/wikipedia) (dump file from 2022-10-20), [OSCAR](https://huggingface.co/datasets/oscar) (subset `unshuffled_deduplicated_id`) and [Indonesian Newspaper 2018](https://huggingface.co/datasets/id_newspapers_2018). Preprocessing uses the function from [task guides - language modeling](https://huggingface.co/docs/transformers/tasks/language_modeling#preprocess) with a block size of 4096. Each dataset is split using [`train_test_split`](https://huggingface.co/docs/datasets/main/en/package_reference/main_classes#datasets.Dataset.train_test_split) with 5% allocated as evaluation data.
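The block-based preprocessing described above can be sketched in plain Python. This is modeled on the `group_texts` pattern from the linked language modeling guide, not taken from the author's repository, so the actual code may differ; the toy batch and the small `block_size` are for illustration only.

```python
from itertools import chain


def group_texts(examples, block_size=4096):
    """Concatenate tokenized sequences and cut them into fixed-size blocks."""
    # Join all sequences of each field (e.g. input_ids) into one long list.
    concatenated = {k: list(chain.from_iterable(v)) for k, v in examples.items()}
    total_length = len(next(iter(concatenated.values())))
    # Drop the trailing remainder so every block has exactly block_size tokens.
    total_length = (total_length // block_size) * block_size
    return {
        k: [tokens[i:i + block_size] for i in range(0, total_length, block_size)]
        for k, tokens in concatenated.items()
    }


# Toy batch of three "tokenized" documents, grouped into blocks of 4 tokens.
batch = {"input_ids": [[1, 2, 3], [4, 5], [6, 7, 8, 9]]}
blocks = group_texts(batch, block_size=4)
print(blocks)
```

With a block size of 4096 the same function yields sequences that match the model's `max_position_embeddings`; the leftover tokens at the end of each batch are discarded.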

## Training Procedure

The model was pretrained on a single RTX 3060 for 8 epochs (51,474 steps) with an accumulated batch size of 128. Sequences were limited to 4096 tokens. The optimizer is AdamW with LR 1e-4, weight decay 0.01, learning rate warmup for the first 6% of steps (~3090 steps) and linear decay of the learning rate afterwards. Due to an early configuration mistake, however, the first 2 epochs used LR 1e-3 instead. Additional information can be found in the TensorBoard training logs.
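The warmup-then-linear-decay schedule described above can be written as a small function. This is a sketch of the intended schedule only: the function name and defaults are illustrative, and it deliberately ignores the first two epochs that were run with LR 1e-3 by mistake.

```python
def linear_warmup_decay(step, total_steps=51474, warmup_steps=3090, peak_lr=1e-4):
    """Learning rate at a given optimizer step (hypothetical helper)."""
    if step < warmup_steps:
        # Linear warmup from 0 to peak_lr.
        return peak_lr * step / warmup_steps
    # Linear decay from peak_lr down to 0 at the final step.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


for step in (0, 1545, 3090, 27282, 51474):
    print(step, f"{linear_warmup_decay(step):.2e}")
```

The learning rate peaks at the end of warmup (step 3090 here) and reaches zero at the last training step.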

## Evaluation

The model achieved the following results during training evaluation.

| Epoch | Steps | Eval. loss | Eval. perplexity |

| ----- | ----- | ---------- | ---------------- |

| 1 | 6249 | 2.466 | 11.775 |

| 2 | 12858 | 2.265 | 9.631 |

| 3 | 19329 | 2.127 | 8.390 |

| 4 | 25758 | 2.116 | 8.298 |

| 5 | 32187 | 2.097 | 8.141 |

| 6 | 38616 | 2.087 | 8.061 |

| 7 | 45045 | 2.081 | 8.012 |

| 8 | 51474 | 2.078 | 7.988 | |

DivyanshuSheth/T5-Seq2Seq-Final | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

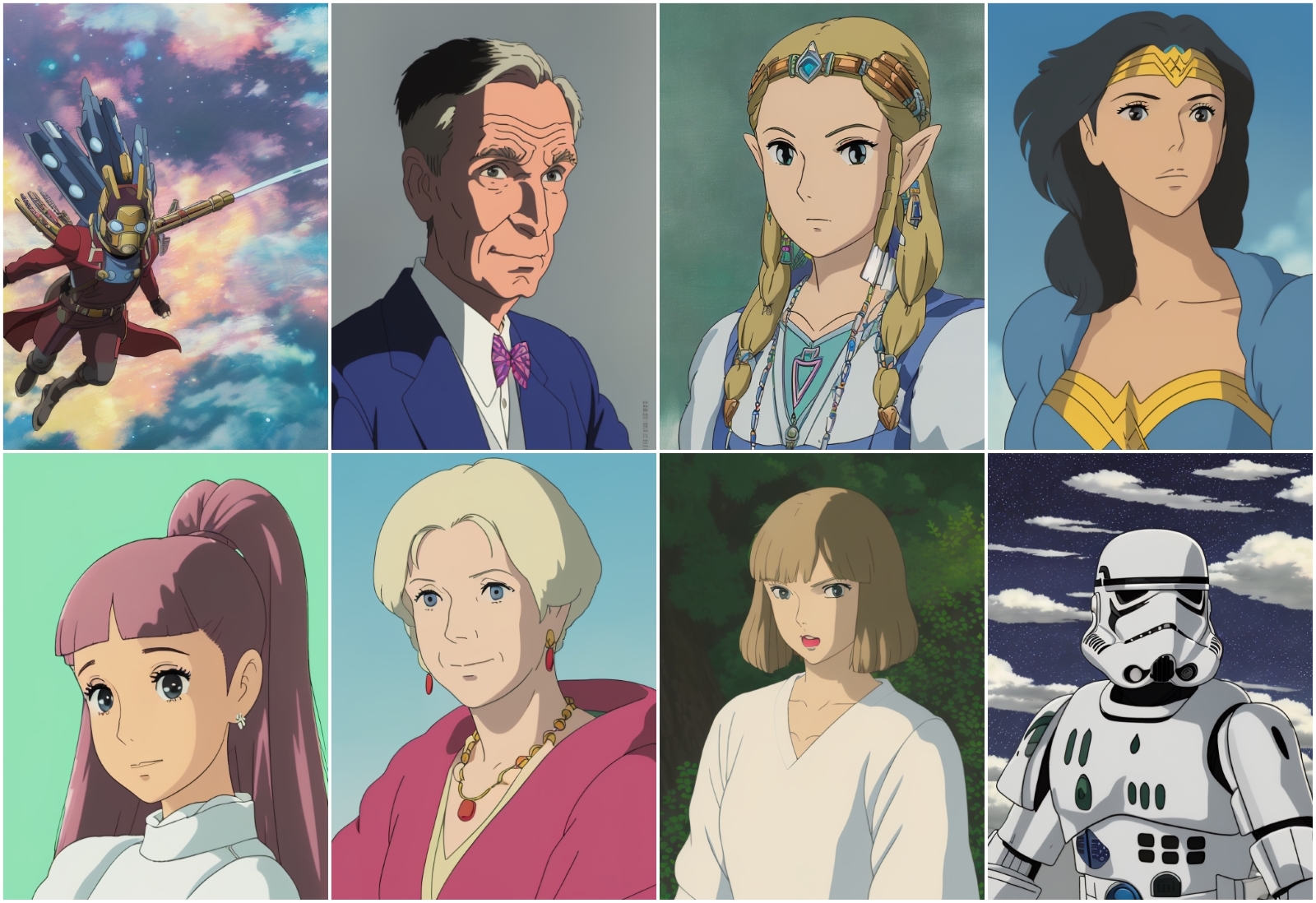

} | 0 | null | ---

license: apache-2.0

---

## Prompt Trigger Keywords

Use `darkprincess638 person` to trigger the character. This works best at the start of the prompt.

## Examples

Here are some random generations to show the flexibility of the model with different prompts

|

Dizoid/Lll | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: cc-by-nc-4.0

tags:

- galactica

widget:

- text: "The Transformer architecture [START_REF]"

- text: "The Schwarzschild radius is defined as: \\["

- text: "A force of 0.6N is applied to an object, which accelerates at 3m/s. What is its mass? <work>"

- text: "Lecture 1: The Ising Model\n\n"

- text: "[START_I_SMILES]"

- text: "[START_AMINO]GHMQSITAGQKVISKHKNGRFYQCEVVRLTTETFYEVNFDDGSFSDNLYPEDIVSQDCLQFGPPAEGEVVQVRWTDGQVYGAKFVASHPIQMYQVEFEDGSQLVVKRDDVYTLDEELP[END_AMINO] ## Keywords"

inference: false

---

# GALACTICA 6.7B (standard)

Model card from the original [repo](https://github.com/paperswithcode/galai/blob/main/docs/model_card.md)

Following [Mitchell et al. (2018)](https://arxiv.org/abs/1810.03993), this model card provides information about the GALACTICA model, how it was trained, and the intended use cases. Full details about how the model was trained and evaluated can be found in the [release paper](https://galactica.org/paper.pdf).

## Model Details

The GALACTICA models are trained on a large-scale scientific corpus. The models are designed to perform scientific tasks, including but not limited to citation prediction, scientific QA, mathematical reasoning, summarization, document generation, molecular property prediction and entity extraction. The models were developed by the Papers with Code team at Meta AI to study the use of language models for the automatic organization of science. We train models with sizes ranging from 125M to 120B parameters. Below is a summary of the released models:

| Size | Parameters |

|:-----------:|:-----------:|

| `mini` | 125 M |

| `base` | 1.3 B |

| `standard` | 6.7 B |

| `large` | 30 B |

| `huge` | 120 B |

## Release Date

November 2022

## Model Type

Transformer based architecture in a decoder-only setup with a few modifications (see paper for more details).

## Paper & Demo

[Paper](https://galactica.org/paper.pdf) / [Demo](https://galactica.org)

## Model Use

The primary intended users of the GALACTICA models are researchers studying language models applied to the scientific domain. We also anticipate the model will be useful for developers who wish to build scientific tooling. However, we caution against production use without safeguards given the potential of language models to hallucinate.

The models are made available under a non-commercial CC BY-NC 4.0 license. More information about how to use the model can be found in the README.md of this repository.

## Training Data

The GALACTICA models are trained on 106 billion tokens of open-access scientific text and data. This includes papers, textbooks, scientific websites, encyclopedias, reference material, knowledge bases, and more. We tokenize different modalities to provide a natural language interface for different tasks. See the README.md for more information. See the paper for full information on the training data.

## How to use

Find below some example scripts on how to use the model in `transformers`:

## Using the Pytorch model

### Running the model on a CPU

<details>

<summary> Click to expand </summary>

```python

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-6.7b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-6.7b")

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

### Running the model on a GPU

<details>

<summary> Click to expand </summary>

```python

# pip install accelerate

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-6.7b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-6.7b", device_map="auto")

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

### Running the model on a GPU using different precisions

#### FP16

<details>

<summary> Click to expand </summary>

```python

# pip install accelerate

import torch

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-6.7b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-6.7b", device_map="auto", torch_dtype=torch.float16)

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>

#### INT8

<details>

<summary> Click to expand </summary>

```python

# pip install bitsandbytes accelerate

from transformers import AutoTokenizer, OPTForCausalLM

tokenizer = AutoTokenizer.from_pretrained("facebook/galactica-6.7b")

model = OPTForCausalLM.from_pretrained("facebook/galactica-6.7b", device_map="auto", load_in_8bit=True)

input_text = "The Transformer architecture [START_REF]"

input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(input_ids)

print(tokenizer.decode(outputs[0]))

```

</details>
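As a rough guide to choosing between the precisions above, the memory needed for the weights alone can be estimated from the parameter counts in the model table. This is a back-of-the-envelope sketch: it ignores activations, the generation cache and framework overhead, and 8-bit loading typically keeps some modules in higher precision, so real usage will be higher.

```python
# Parameter counts from the "Model Details" table.
PARAMS = {"mini": 125e6, "base": 1.3e9, "standard": 6.7e9, "large": 30e9, "huge": 120e9}
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(size, precision):
    """Approximate memory for the weights only, in GB (10^9 bytes)."""
    return PARAMS[size] * BYTES_PER_PARAM[precision] / 1e9


for precision in ("fp32", "fp16", "int8"):
    print(f"standard @ {precision}: ~{weight_memory_gb('standard', precision):.1f} GB")
```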

## Performance and Limitations

The model outperforms several existing language models on a range of knowledge probes, reasoning, and knowledge-intensive scientific tasks. This also extends to general NLP tasks, where GALACTICA outperforms other open source general language models. That being said, we note a number of limitations in this section.

As with other language models, GALACTICA is often prone to hallucination - and training on a high-quality academic corpus does not prevent this, especially for less popular and less cited scientific concepts. There are no guarantees of truthful output when generating from the model. This extends to specific modalities such as citation prediction. While GALACTICA's citation behaviour approaches the ground truth citation behaviour with scale, the model continues to exhibit a popularity bias at larger scales.

In addition, we evaluated the model on several types of benchmarks related to stereotypes and toxicity. Overall, the model exhibits substantially lower toxicity rates compared to other large language models. That being said, the model continues to exhibit bias on certain measures (see the paper for details). So we recommend care when using the model for generations.

## Broader Implications

GALACTICA can potentially be used as a new way to discover academic literature. We also expect a lot of downstream use for application to particular domains, such as mathematics, biology, and chemistry. In the paper, we demonstrated several examples of the model acting as an alternative to standard search tools. We expect a new generation of scientific tools to be built upon large language models such as GALACTICA.

We encourage researchers to investigate beneficial and new use cases for these models. That being said, it is important to be aware of the current limitations of large language models. Researchers should pay attention to common issues such as hallucination and biases that could emerge from using these models.

## Citation

```bibtex

@inproceedings{GALACTICA,

title={GALACTICA: A Large Language Model for Science},

author={Ross Taylor and Marcin Kardas and Guillem Cucurull and Thomas Scialom and Anthony Hartshorn and Elvis Saravia and Andrew Poulton and Viktor Kerkez and Robert Stojnic},

year={2022}

}

``` |

Dkwkk/Da | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language: en

tags:

- newspapers

- library

- historic

- glam

- mdma

license: mit

metrics:

- pseudo-perplexity

widget:

- text: "1820 [DATE] We received a letter from [MASK] Majesty."

- text: "1850 [DATE] We received a letter from [MASK] Majesty."

- text: "[MASK] [DATE] The Franco-Prussian war is a matter of great concern."

- text: "[MASK] [DATE] The Schleswig war is a matter of great concern."

---

**MODEL CARD UNDER CONSTRUCTION, ETA END OF NOVEMBER**

<img src="https://upload.wikimedia.org/wikipedia/commons/5/5b/NCI_peas_in_pod.jpg" alt="erwt" width="200" >

# ERWT-year

🌺ERWT\* a language model that (🤭 maybe 🤫) knows more about history than you...🌺

ERWT is a fine-tuned [`distilbert-base-uncased`](https://huggingface.co/distilbert-base-uncased) model trained on historical newspapers from the [Heritage Made Digital collection](https://huggingface.co/datasets/davanstrien/hmd-erwt-training).

We trained a model based on a combination of text and **temporal metadata** (i.e. year information).

ERWT performs [**time-sensitive masked language modelling**](#historical-language-change-herhis-majesty-%F0%9F%91%91) or [**date prediction**](#date-prediction-pub-quiz-with-lms-%F0%9F%8D%BB).

This model is served by [Kaspar von Beelen](https://huggingface.co/Kaspar) and [Daniel van Strien](https://huggingface.co/davanstrien), *"Improving AI, one pea at a time"*.

If these models happen to be useful, please cite our working paper.

```

@misc{https://doi.org/10.48550/arxiv.2211.10086,

doi = {10.48550/ARXIV.2211.10086},

url = {https://arxiv.org/abs/2211.10086},

author = {Beelen, Kaspar and van Strien, Daniel},

keywords = {Computation and Language (cs.CL), Digital Libraries (cs.DL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Metadata Might Make Language Models Better},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}}

```

\*ERWT is Dutch for PEA.

# Overview

- [Introduction: Repent Now 😇](#introductory-note-repent-now-%F0%9F%98%87)

- [Background: MDMA to the rescue 🙂](#background-mdma-to-the-rescue-%F0%9F%99%82)

- [Intended Use: LMs as History Machines 🚂](#intended-use-lms-as-history-machines)

- [Historical Language Change: Her/His Majesty? 👑](#historical-language-change-herhis-majesty-%F0%9F%91%91)

- [Date Prediction: Pub Quiz with LMs 🍻](#date-prediction-pub-quiz-with-lms-%F0%9F%8D%BB)

- [Limitations: Not all is well 😮](#limitations-not-all-is-well-%F0%9F%98%AE)

- [Training Data](#training-data)

- [Training Routine](#training-routine)

- [Data Description](#data-description)

- [Evaluation: 🤓 In case you care to count 🤓](#evaluation-%F0%9F%A4%93-in-case-you-care-to-count-%F0%9F%A4%93)

## Introductory Note: Repent Now. 😇

The ERWT models are trained for **experimental purposes**.

Please consult the [**limitations**](#limitations-not-all-is-well-%F0%9F%98%AE) section before using the models (seriously, read this section; **we don't repent in public just for fun**).

If you can't get enough of these neural peas and crave some more, you can consult our working paper ["Metadata Might Make Language Models Better"](https://arxiv.org/abs/2211.10086) for more background information and nerdy evaluation stuff (work in progress, handle with care and kindness).

## Background: MDMA to the rescue. 🙂

ERWT was created using a **M**eta**D**ata **M**asking **A**pproach (or **MDMA** 💊), a scenario in which we train a Masked Language Model (MLM) on text and metadata simultaneously. Our intuition was that incorporating metadata (information that *describes* a text but is not part of the content) may make language models "better", or at least make them more **sensitive** to historical, political and geographical aspects of language use. We mainly use temporal, political and geographical metadata.

ERWT is a [`distilbert-base-uncased`](https://huggingface.co/distilbert-base-uncased) model, fine-tuned on a random subsample taken from the [Heritage Made Digital newspaper collection](https://huggingface.co/datasets/davanstrien/hmd-erwt-training). The training data comprises around half a billion words.

To unleash the power of MDMA, we adapted the training routine, mainly by fidgeting with the input data.

When preprocessing the text, we prepended each segment of a hundred words with a time stamp (year of publication) and a special `[DATE]` token.

The snippet below, taken from the [Londonderry Sentinel](https://www.britishnewspaperarchive.co.uk/viewer/bl/0001480/18700722/014/0002)...

```

Every scrap of intelligence relative to the war between France and Prussia is now read with interest.

```

... would be formatted as:

```python

"1870 [DATE] Every scrap of intelligence relative to the war between France and Prussia is now read with interest."

```

These text chunks are then forwarded to the data collator and eventually the language model.

Exposed to the tokens and (temporal) metadata, the model learns a relation between text and time. When a text token is hidden, the prepended `year` field influences the prediction of the masked words. Vice versa, when the prepended metadata is hidden, the model predicts the year of publication based on the content.
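The preprocessing described above can be sketched in a few lines. This is a minimal illustration, not our actual training code: the helper name `chunk_with_year`, the whitespace-based word splitting and the default chunk size are assumptions made for the example.

```python
def chunk_with_year(text: str, year: int, chunk_size: int = 100) -> list[str]:
    """Split `text` into chunks of `chunk_size` words and prepend 'YEAR [DATE]'.

    Hypothetical helper: words are split on whitespace, which is a
    simplification of whatever segmentation a real pipeline would use.
    """
    words = text.split()
    return [
        f"{year} [DATE] " + " ".join(words[i:i + chunk_size])
        for i in range(0, len(words), chunk_size)
    ]


snippet = (
    "Every scrap of intelligence relative to the war between "
    "France and Prussia is now read with interest."
)
chunks = chunk_with_year(snippet, 1870)
print(chunks[0])
```

For date prediction, the same format applies with the year replaced by `[MASK]`, as in the widget examples at the top of this card.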

## Intended Use: LMs as History Machines.

Exposing the model to temporal metadata allows us to investigate **historical language change** and perform **date prediction**.

### Historical Language Change: Her/His Majesty? 👑

Let's show how ERWT works with a very concrete example.

The ERWT models are trained on a handful of British newspapers published between 1800 and 1870. They can be used to monitor historical change in this specific context.

Imagine you are confronted with the following snippet: "We received a letter from [MASK] Majesty" and want to predict the correct pronoun for the masked token (again assuming a British context).

👩🏫 **History Intermezzo** Please remember, for most of the nineteenth century, Queen Victoria ruled Britain, from 1837 to 1901 to be precise. Her nineteenth-century predecessors (George III, George IV and William IV) were all male.

While a standard language model will provide you with one general prediction, based on what it has observed during training, ERWT allows you to manipulate the prediction by anchoring the text in a specific year.

Doing this requires just a few lines of code:

```python

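from transformers import pipeline

# Load the fill-mask pipeline with the ERWT checkpoint
mask_filler = pipeline("fill-mask", model="Livingwithmachines/erwt-year")

# Anchor the sentence in 1820 by prepending the year and the [DATE] token
mask_filler("1820 [DATE] We received a letter from [MASK] Majesty.")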

```

This returns "his" as the most likely filler:

```python

{'score': 0.8527863025665283,

'token': 2010,

'token_str': 'his',

'sequence': '1820 we received a letter from his majesty.'}

```

However, if we change the date at the start of the sentence to 1850:

```python

mask_filler("1850 [DATE] We received a letter from [MASK] Majesty.")

```

ERWT puts most of the probability mass on the token "her" and only a little bit on "his".

```python

{'score': 0.8168327212333679,

'token': 2014,

'token_str': 'her',

'sequence': '1850 we received a letter from her majesty.'}

```

You can repeat this experiment for yourself using the example sentences in the **Hosted inference API** at the top right.
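If you would rather compare "his" and "her" side by side, one option is to restrict the predictions with the `targets` argument of the `fill-mask` pipeline. The sketch below is only an illustration: `pronoun_scores` is a hypothetical helper, not part of the model or of `transformers`, and it expects a pipeline object like the `mask_filler` created earlier.

```python
def pronoun_scores(mask_filler, year, targets=("his", "her")):
    """Return a {token: score} dict for the candidate pronouns in a given year.

    `mask_filler` is assumed to be a transformers fill-mask pipeline
    (or any callable with the same interface).
    """
    prompt = f"{year} [DATE] We received a letter from [MASK] Majesty."
    # `targets` limits the scored vocabulary to the given candidate tokens
    results = mask_filler(prompt, targets=list(targets))
    return {r["token_str"]: r["score"] for r in results}


# Usage (downloads the model, so it is left commented out here):
# from transformers import pipeline
# mask_filler = pipeline("fill-mask", model="Livingwithmachines/erwt-year")
# for year in (1820, 1850):
#     print(year, pronoun_scores(mask_filler, year))
```

Stepping through more years this way should give a rough picture of when the model's preference shifts, though the exact scores will depend on the checkpoint.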

Okay, but why is this **interesting**?

Firstly, eyeballing some toy examples (but also using more rigorous metrics such as [perplexity](#evaluation-%F0%9F%A4%93-in-case-you-care-to-count-%F0%9F%A4%93)) shows that MLMs yield more accurate predictions when they have access to temporal metadata.

In other words, **ERWT models are better at capturing historical context.**

Secondly, MDMA may **reduce biases** that arise from imbalanced training data (or at least give us more of a handle on this problem). Admittedly, we have to prove this more formally, but some experiments at least hint in this direction.

### Date Prediction: Pub Quiz with LMs 🍻