| modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |
|---|---|---|---|---|---|---|

Batsy24/DialoGPT-small-Twilight_EdBot | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | ---

library_name: sklearn

tags:

- sklearn

- skops

- tabular-classification

widget:

structuredData:

x0:

- 5.8

- 6.0

- 5.5

x1:

- 2.8

- 2.2

- 4.2

x2:

- 5.1

- 4.0

- 1.4

x3:

- 2.4

- 1.0

- 0.2

---

# Model description

[More Information Needed]

## Intended uses & limitations

[More Information Needed]

## Training Procedure

### Hyperparameters

The model was trained with the hyperparameters below.

<details>

<summary> Click to expand </summary>

| Hyperparameter | Value |

|--------------------------|---------|

| bootstrap | True |

| ccp_alpha | 0.0 |

| class_weight | |

| criterion | gini |

| max_depth | |

| max_features | sqrt |

| max_leaf_nodes | |

| max_samples | |

| min_impurity_decrease | 0.0 |

| min_samples_leaf | 1 |

| min_samples_split | 2 |

| min_weight_fraction_leaf | 0.0 |

| n_estimators | 100 |

| n_jobs | |

| oob_score | False |

| random_state | |

| verbose | 0 |

| warm_start | False |

</details>

### Model Plot

The model plot is below.

<pre>RandomForestClassifier()</pre>

## Evaluation Results

Details of the evaluation process and the evaluation results are listed below.

| Metric | Value |

|----------|---------|

# How to Get Started with the Model

Use the code below to get started with the model.

<details>

<summary> Click to expand </summary>

```python

[More Information Needed]

```

</details>
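Since the snippet above is still a placeholder, here is a minimal sketch of how input for this model could be prepared. It assumes the classifier expects four numeric features in the order `x0` to `x3`, as in the widget data from the card metadata. The helper name `columns_to_rows` is hypothetical, and loading the model itself is not shown.

```python
# Widget data copied from the card metadata (column-oriented).
widget_columns = {
    "x0": [5.8, 6.0, 5.5],
    "x1": [2.8, 2.2, 4.2],
    "x2": [5.1, 4.0, 1.4],
    "x3": [2.4, 1.0, 0.2],
}


def columns_to_rows(columns, feature_order):
    """Turn {feature: [values...]} into a list of per-sample rows.

    Hypothetical helper, not part of the original card.
    """
    return [list(row) for row in zip(*(columns[name] for name in feature_order))]


# Assumption: the model was trained with features ordered x0, x1, x2, x3.
rows = columns_to_rows(widget_columns, ["x0", "x1", "x2", "x3"])
for row in rows:
    print(row)
```

Each row could then be passed to the `predict` method of the fitted estimator once it has been loaded.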

# Model Card Authors

This model card was written by the following authors:

[More Information Needed]

# Model Card Contact

You can contact the model card authors through the following channels:

[More Information Needed]

# Citation

Below you can find information related to citation.

**BibTeX:**

```

[More Information Needed]

``` |

Baybars/wav2vec2-xls-r-1b-turkish | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"tr",

"dataset:common_voice",

"transformers",

"common_voice",

"generated_from_trainer"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 13 | null | <div class="overflow-hidden">

<span class="absolute" style="top: -260px;left: 0;color: red;">boo</span>

</div>

# Test

<style>

img {

display: inline;

}

a {

color: red !important;

}

</style>

| [](#model-architecture)

| [](#model-architecture)

| [](#datasets)

| [](#deployment-with-nvidia-riva) |

|

BeIR/query-gen-msmarco-t5-large-v1 | [

"pytorch",

"jax",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"T5ForConditionalGeneration"

],

"model_type": "t5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 200,

"min_length": 30,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": "summarize: "

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to German: "

},

"translation_en_to_fr": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to French: "

},

"translation_en_to_ro": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to Romanian: "

}

}

} | 1,225 | 2022-12-12T11:51:31Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-wikitext2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 6.8164

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 64

- total_train_batch_size: 512

- total_eval_batch_size: 20

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- training precision: Mixed Precision
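The batch-size figures in the list above can be cross-checked with a little arithmetic. The sketch below assumes that the total training batch size is the product of the per-device batch size, the gradient accumulation steps, and a number of data-parallel replicas. The card does not state the replica count, so the value derived here is an inference, not a reported setting.

```python
def effective_batch_size(per_device, grad_accum_steps, replicas):
    """Number of samples contributing to one optimizer update."""
    return per_device * grad_accum_steps * replicas


per_device = 1        # train_batch_size from the list above
grad_accum = 64       # gradient_accumulation_steps from the list above
reported_total = 512  # total_train_batch_size from the list above

# Assumption: the remaining factor is the number of data-parallel replicas.
implied_replicas = reported_total // (per_device * grad_accum)
print(implied_replicas)

# The three factors reproduce the reported total.
assert effective_batch_size(per_device, grad_accum, implied_replicas) == reported_total
```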

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cpu

- Datasets 2.7.1

- Tokenizers 0.12.1

|

Benicio/t5-small-finetuned-en-to-ro | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: BERiT_2000_custom_architecture_2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# BERiT_2000_custom_architecture_2

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.9854

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 16.4316 | 0.19 | 500 | 9.0685 |

| 8.2958 | 0.39 | 1000 | 7.6483 |

| 7.4324 | 0.58 | 1500 | 7.1707 |

| 7.0054 | 0.77 | 2000 | 6.8592 |

| 6.8522 | 0.97 | 2500 | 6.7710 |

| 6.7538 | 1.16 | 3000 | 6.5845 |

| 6.634 | 1.36 | 3500 | 6.4525 |

| 6.5784 | 1.55 | 4000 | 6.3129 |

| 6.5135 | 1.74 | 4500 | 6.3312 |

| 6.4552 | 1.94 | 5000 | 6.2546 |

| 6.4685 | 2.13 | 5500 | 6.2857 |

| 6.4356 | 2.32 | 6000 | 6.2285 |

| 6.3566 | 2.52 | 6500 | 6.2295 |

| 6.394 | 2.71 | 7000 | 6.1790 |

| 6.3412 | 2.9 | 7500 | 6.1880 |

| 6.3115 | 3.1 | 8000 | 6.2130 |

| 6.3163 | 3.29 | 8500 | 6.1831 |

| 6.2978 | 3.49 | 9000 | 6.1945 |

| 6.3082 | 3.68 | 9500 | 6.1485 |

| 6.2729 | 3.87 | 10000 | 6.1752 |

| 6.307 | 4.07 | 10500 | 6.1331 |

| 6.2494 | 4.26 | 11000 | 6.1082 |

| 6.2523 | 4.45 | 11500 | 6.2110 |

| 6.2455 | 4.65 | 12000 | 6.1326 |

| 6.2399 | 4.84 | 12500 | 6.1779 |

| 6.2297 | 5.03 | 13000 | 6.1587 |

| 6.2374 | 5.23 | 13500 | 6.1458 |

| 6.2265 | 5.42 | 14000 | 6.1370 |

| 6.2222 | 5.62 | 14500 | 6.1511 |

| 6.2209 | 5.81 | 15000 | 6.1320 |

| 6.2146 | 6.0 | 15500 | 6.1124 |

| 6.214 | 6.2 | 16000 | 6.1439 |

| 6.1907 | 6.39 | 16500 | 6.0981 |

| 6.2119 | 6.58 | 17000 | 6.1465 |

| 6.1858 | 6.78 | 17500 | 6.1594 |

| 6.1552 | 6.97 | 18000 | 6.0742 |

| 6.1926 | 7.16 | 18500 | 6.1176 |

| 6.1813 | 7.36 | 19000 | 6.0107 |

| 6.1812 | 7.55 | 19500 | 6.0852 |

| 6.1852 | 7.75 | 20000 | 6.0845 |

| 6.1945 | 7.94 | 20500 | 6.1260 |

| 6.1542 | 8.13 | 21000 | 6.1032 |

| 6.1685 | 8.33 | 21500 | 6.0650 |

| 6.1619 | 8.52 | 22000 | 6.1028 |

| 6.1279 | 8.71 | 22500 | 6.1269 |

| 6.1575 | 8.91 | 23000 | 6.0793 |

| 6.1401 | 9.1 | 23500 | 6.1479 |

| 6.159 | 9.3 | 24000 | 6.0319 |

| 6.1227 | 9.49 | 24500 | 6.0677 |

| 6.1201 | 9.68 | 25000 | 6.0527 |

| 6.1473 | 9.88 | 25500 | 6.1305 |

| 6.1539 | 10.07 | 26000 | 6.1079 |

| 6.091 | 10.26 | 26500 | 6.1219 |

| 6.1015 | 10.46 | 27000 | 6.1317 |

| 6.1048 | 10.65 | 27500 | 6.1149 |

| 6.0955 | 10.84 | 28000 | 6.1216 |

| 6.129 | 11.04 | 28500 | 6.0427 |

| 6.1007 | 11.23 | 29000 | 6.1289 |

| 6.1266 | 11.43 | 29500 | 6.0564 |

| 6.1203 | 11.62 | 30000 | 6.1143 |

| 6.1038 | 11.81 | 30500 | 6.0957 |

| 6.0989 | 12.01 | 31000 | 6.0707 |

| 6.0571 | 12.2 | 31500 | 6.0013 |

| 6.1017 | 12.39 | 32000 | 6.1356 |

| 6.0649 | 12.59 | 32500 | 6.0981 |

| 6.0704 | 12.78 | 33000 | 6.0588 |

| 6.088 | 12.97 | 33500 | 6.0796 |

| 6.1112 | 13.17 | 34000 | 6.0809 |

| 6.0888 | 13.36 | 34500 | 6.0776 |

| 6.0482 | 13.56 | 35000 | 6.0710 |

| 6.0588 | 13.75 | 35500 | 6.0877 |

| 6.0517 | 13.94 | 36000 | 6.0650 |

| 6.0832 | 14.14 | 36500 | 5.9890 |

| 6.0655 | 14.33 | 37000 | 6.0445 |

| 6.0705 | 14.52 | 37500 | 6.0037 |

| 6.0789 | 14.72 | 38000 | 6.0777 |

| 6.0645 | 14.91 | 38500 | 6.0475 |

| 6.0347 | 15.1 | 39000 | 6.1148 |

| 6.0478 | 15.3 | 39500 | 6.0639 |

| 6.0638 | 15.49 | 40000 | 6.0373 |

| 6.0377 | 15.69 | 40500 | 6.0116 |

| 6.0402 | 15.88 | 41000 | 6.0483 |

| 6.0382 | 16.07 | 41500 | 6.1025 |

| 6.039 | 16.27 | 42000 | 6.0488 |

| 6.0232 | 16.46 | 42500 | 6.0219 |

| 5.9946 | 16.65 | 43000 | 6.0541 |

| 6.063 | 16.85 | 43500 | 6.0436 |

| 6.0141 | 17.04 | 44000 | 6.0609 |

| 6.0196 | 17.23 | 44500 | 6.0551 |

| 6.0331 | 17.43 | 45000 | 6.0576 |

| 6.0174 | 17.62 | 45500 | 6.0498 |

| 6.0366 | 17.82 | 46000 | 6.0782 |

| 6.0299 | 18.01 | 46500 | 6.0196 |

| 6.0009 | 18.2 | 47000 | 6.0262 |

| 5.9758 | 18.4 | 47500 | 6.0824 |

| 6.0285 | 18.59 | 48000 | 6.0799 |

| 6.025 | 18.78 | 48500 | 5.9511 |

| 5.9806 | 18.98 | 49000 | 6.0086 |

| 5.9915 | 19.17 | 49500 | 6.0089 |

| 5.9957 | 19.36 | 50000 | 6.0330 |

| 6.0311 | 19.56 | 50500 | 6.0083 |

| 5.995 | 19.75 | 51000 | 6.0394 |

| 6.0034 | 19.95 | 51500 | 5.9854 |
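The validation loss in the table above fluctuates from one evaluation to the next, so the last row is not necessarily the best checkpoint. As a rough sketch, a few rows excerpted from the table can be parsed and compared. The helper `parse_rows` is hypothetical, and only the rows shown in the excerpt are considered.

```python
# A few rows excerpted from the table above.
# Columns: training loss, epoch, step, validation loss.
rows_md = """
| 6.0832 | 14.14 | 36500 | 5.9890 |
| 6.025 | 18.78 | 48500 | 5.9511 |
| 5.9806 | 18.98 | 49000 | 6.0086 |
| 6.0034 | 19.95 | 51500 | 5.9854 |
"""


def parse_rows(markdown):
    """Parse markdown table rows into dicts with numeric fields."""
    parsed = []
    for line in markdown.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        train_loss, epoch, step, val_loss = cells
        parsed.append(
            {
                "train_loss": float(train_loss),
                "epoch": float(epoch),
                "step": int(step),
                "val_loss": float(val_loss),
            }
        )
    return parsed


# Pick the excerpted row with the lowest validation loss.
best = min(parse_rows(rows_md), key=lambda r: r["val_loss"])
print(best["step"], best["val_loss"])
```

In this excerpt the lowest validation loss belongs to an earlier step than the final one, which is one reason to keep intermediate checkpoints.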

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

BigSalmon/GPTHeHe | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"has_space"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

tags:

- generated_from_trainer

datasets:

- AlekseyKorshuk/dalio-all-io

metrics:

- accuracy

model-index:

- name: 1.3b-all-2-epoch-v1-after-book

results:

- task:

name: Causal Language Modeling

type: text-generation

dataset:

name: AlekseyKorshuk/dalio-all-io

type: AlekseyKorshuk/dalio-all-io

metrics:

- name: Accuracy

type: accuracy

value: 0.06395348837209303

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# 1.3b-all-2-epoch-v1-after-book

This model is a fine-tuned version of [/models/1.3b-dalio-principles-book](https://huggingface.co//models/1.3b-dalio-principles-book) on the AlekseyKorshuk/dalio-all-io dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9482

- Accuracy: 0.0640
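For a causal language model, the evaluation loss is often easier to interpret as perplexity. The sketch below assumes the reported loss is a mean per-token cross-entropy measured in nats, which the card does not state explicitly.

```python
import math

eval_loss = 1.9482  # evaluation loss reported above

# Assumption: the loss is a mean per-token cross-entropy in nats,
# so perplexity is simply its exponential.
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.2f}")
```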

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 32

- total_eval_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 2.0
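To illustrate what the cosine scheduler in the list above does to the learning rate, here is a minimal sketch of a half-cosine decay. It assumes no warmup (none is listed) and 30 optimizer steps in total (the last step in the results table below). The function `cosine_lr` is an illustrative formula and may differ in detail from the trainer's own implementation.

```python
import math


def cosine_lr(step, total_steps, base_lr):
    """Half-cosine decay from base_lr at step 0 to 0 at total_steps (no warmup)."""
    progress = step / total_steps
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


base_lr = 3e-05   # learning_rate from the list above
total_steps = 30  # assumption: last step in the training results table

for step in (0, 15, 30):
    print(step, cosine_lr(step, total_steps, base_lr))
```

Under these assumptions the rate starts at the configured value, reaches half of it at the midpoint, and decays to zero at the final step.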

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.17 | 0.07 | 1 | 2.0547 | 0.0621 |

| 2.1814 | 0.13 | 2 | 2.0547 | 0.0621 |

| 2.0963 | 0.2 | 3 | 2.0234 | 0.0625 |

| 2.1383 | 0.27 | 4 | 2.0195 | 0.0625 |

| 2.1625 | 0.33 | 5 | 2.0195 | 0.0625 |

| 2.1808 | 0.4 | 6 | 2.0156 | 0.0624 |

| 2.1587 | 0.47 | 7 | 2.0176 | 0.0626 |

| 2.0847 | 0.53 | 8 | 2.0137 | 0.0627 |

| 2.0336 | 0.6 | 9 | 2.0137 | 0.0627 |

| 2.1777 | 0.67 | 10 | 2.0059 | 0.0629 |

| 2.2034 | 0.73 | 11 | 2.0 | 0.0630 |

| 2.1665 | 0.8 | 12 | 1.9941 | 0.0628 |

| 2.0352 | 0.87 | 13 | 1.9883 | 0.0629 |

| 2.1263 | 0.93 | 14 | 1.9834 | 0.0628 |

| 2.1282 | 1.0 | 15 | 1.9785 | 0.0632 |

| 1.7159 | 1.07 | 16 | 1.9766 | 0.0633 |

| 1.8346 | 1.13 | 17 | 1.9775 | 0.0635 |

| 1.7183 | 1.2 | 18 | 1.9824 | 0.0634 |

| 1.6086 | 1.27 | 19 | 1.9883 | 0.0635 |

| 1.6497 | 1.33 | 20 | 1.9893 | 0.0634 |

| 1.6267 | 1.4 | 21 | 1.9854 | 0.0637 |

| 1.5962 | 1.47 | 22 | 1.9766 | 0.0637 |

| 1.5168 | 1.53 | 23 | 1.9697 | 0.0637 |

| 1.6213 | 1.6 | 24 | 1.9619 | 0.0637 |

| 1.4789 | 1.67 | 25 | 1.9580 | 0.0638 |

| 1.6796 | 1.73 | 26 | 1.9551 | 0.0638 |

| 1.5964 | 1.8 | 27 | 1.9531 | 0.0638 |

| 1.787 | 1.87 | 28 | 1.9512 | 0.0639 |

| 1.6536 | 1.93 | 29 | 1.9492 | 0.0640 |

| 1.7178 | 2.0 | 30 | 1.9482 | 0.0640 |

### Framework versions

- Transformers 4.25.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

BigSalmon/GPTNeo350MInformalToFormalLincoln | [

"pytorch",

"gpt_neo",

"text-generation",

"transformers",

"has_space"

]

| text-generation | {

"architectures": [

"GPTNeoForCausalLM"

],

"model_type": "gpt_neo",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

tags:

- autotrain

- token-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- Olusegun/autotrain-data-disease_tokens

co2_eq_emissions:

emissions: 1.569698418187329

---

# Model Trained Using AutoTrain

- Problem type: Entity Extraction

- Model ID: 2095367455

- CO2 Emissions (in grams): 1.5697

## Validation Metrics

- Loss: 0.000

- Accuracy: 1.000

- Precision: 1.000

- Recall: 1.000

- F1: 1.000

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/Olusegun/autotrain-disease_tokens-2095367455

```
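The same request can be sketched with Python's standard library, which is useful when cURL is not available. The helper name `build_request` is hypothetical. The URL, headers, and payload mirror the cURL call above, and the actual network call is left commented out because it needs a valid API key.

```python
import json
import urllib.request

API_URL = (
    "https://api-inference.huggingface.co/models/"
    "Olusegun/autotrain-disease_tokens-2095367455"
)


def build_request(text, api_key):
    """Build the POST request equivalent to the cURL call (hypothetical helper)."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("I love AutoTrain", "YOUR_API_KEY")

# Sending requires network access and a real key, so it is not executed here:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```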

Or Python API:

```

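from transformers import AutoModelForTokenClassification, AutoTokenizer

# use_auth_token=True reuses the access token stored by a previous Hugging Face login
model = AutoModelForTokenClassification.from_pretrained("Olusegun/autotrain-disease_tokens-2095367455", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("Olusegun/autotrain-disease_tokens-2095367455", use_auth_token=True)

# Tokenize the input and return PyTorch tensors
inputs = tokenizer("I love AutoTrain", return_tensors="pt")

# Forward pass; outputs.logits holds the per-token class scores
outputs = model(**inputs)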

``` |

BigSalmon/InformalToFormalLincoln21 | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"has_space"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-uncased-issues-128

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-issues-128

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2480

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 16

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.0972 | 1.0 | 291 | 1.7066 |

| 1.6391 | 2.0 | 582 | 1.4318 |

| 1.4844 | 3.0 | 873 | 1.3734 |

| 1.3997 | 4.0 | 1164 | 1.3806 |

| 1.3398 | 5.0 | 1455 | 1.1957 |

| 1.2846 | 6.0 | 1746 | 1.2837 |

| 1.2379 | 7.0 | 2037 | 1.2665 |

| 1.1969 | 8.0 | 2328 | 1.2154 |

| 1.1651 | 9.0 | 2619 | 1.1756 |

| 1.1415 | 10.0 | 2910 | 1.2114 |

| 1.1296 | 11.0 | 3201 | 1.2138 |

| 1.1047 | 12.0 | 3492 | 1.1655 |

| 1.0802 | 13.0 | 3783 | 1.2566 |

| 1.0775 | 14.0 | 4074 | 1.1650 |

| 1.0645 | 15.0 | 4365 | 1.1294 |

| 1.062 | 16.0 | 4656 | 1.2480 |
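The step counts in the table above also hint at the size of the training set. The sketch below assumes a single device, no gradient accumulation, and that the last partial batch of each epoch is kept. None of this is stated in the card, so the result is only a plausible range.

```python
import math

steps_per_epoch = 291  # step count after epoch 1.0 in the table above
train_batch_size = 32  # train_batch_size from the hyperparameter list

# Assumptions: one device, no gradient accumulation, last partial batch kept.
max_examples = steps_per_epoch * train_batch_size
min_examples = (steps_per_epoch - 1) * train_batch_size + 1
print(min_examples, max_examples)

# Both ends of the range need exactly 291 batches of up to 32 examples.
assert math.ceil(min_examples / train_batch_size) == steps_per_epoch
assert math.ceil(max_examples / train_batch_size) == steps_per_epoch
```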

### Framework versions

- Transformers 4.13.0

- Pytorch 1.11.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

BigSalmon/NEO125InformalToFormalLincoln | [

"pytorch",

"gpt_neo",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPTNeoForCausalLM"

],

"model_type": "gpt_neo",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

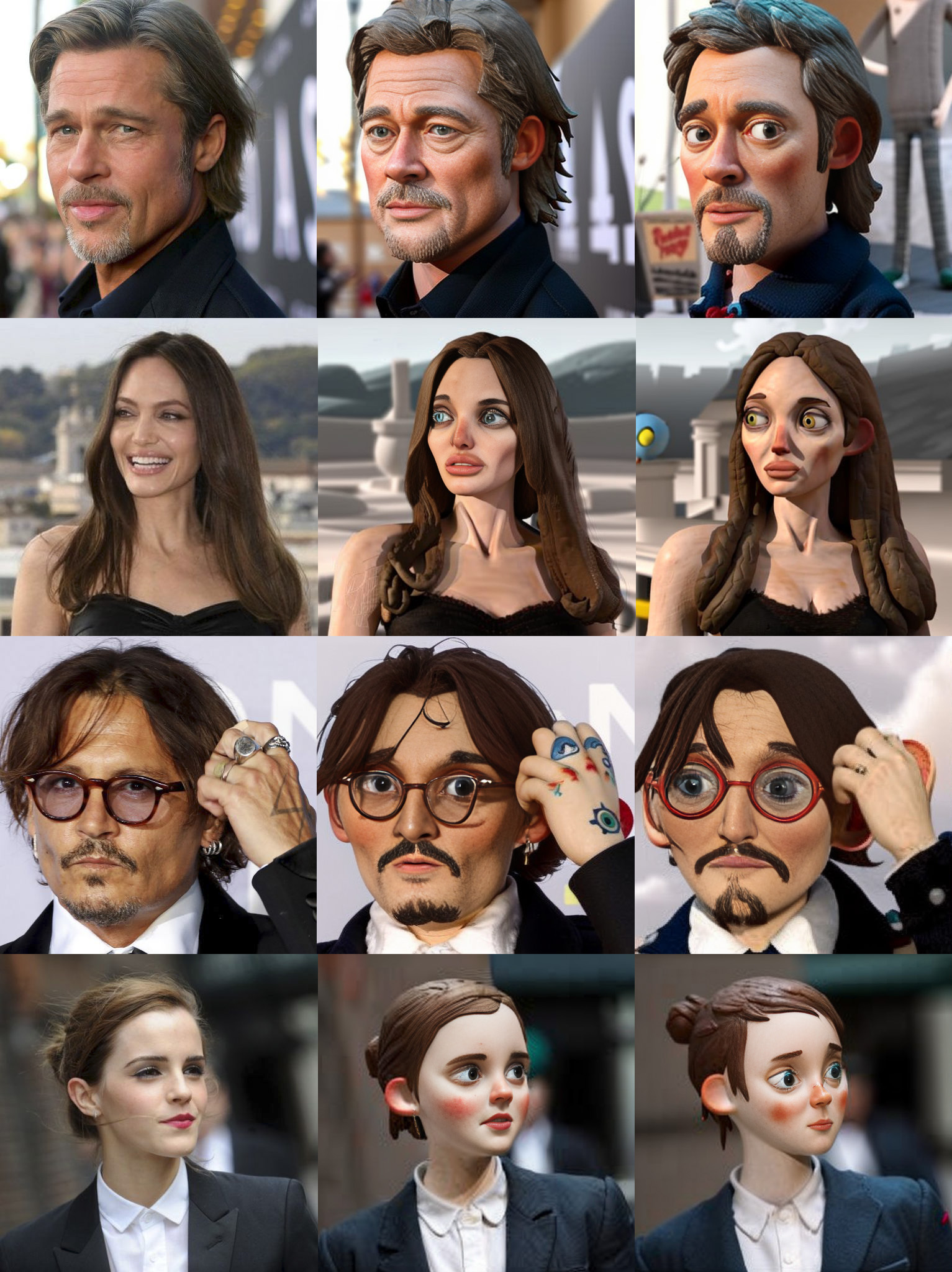

} | 8 | 2022-11-15T20:34:03Z | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

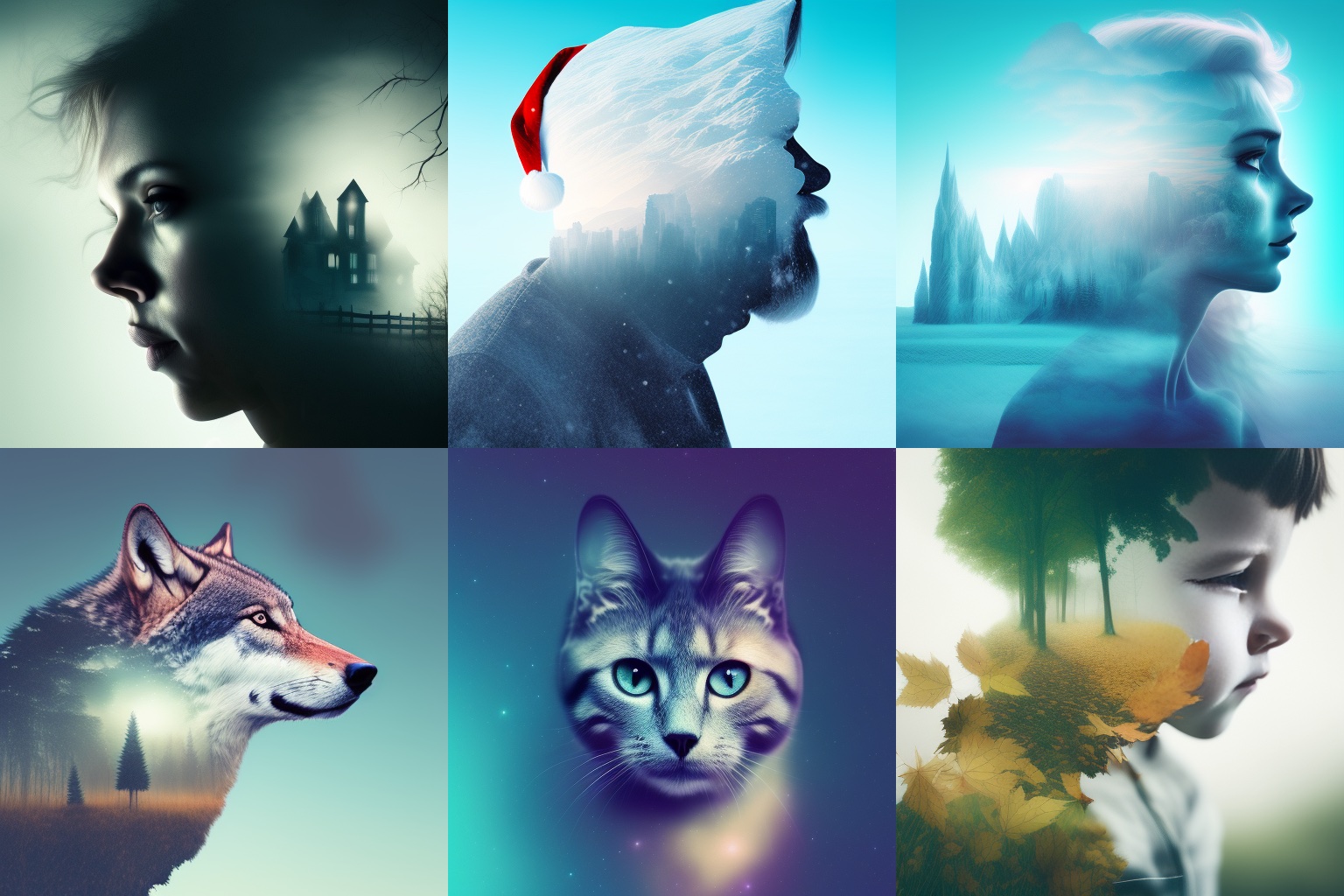

[*Click here to download the latest Double Exposure embedding for SD 2.x in higher resolution*](https://huggingface.co/joachimsallstrom/Double-Exposure-Embedding)!

**Double Exposure Diffusion**

This is version 2 of the <i>Double Exposure Diffusion</i> model, trained specifically on images of people and a few animals.

The model file (Double_Exposure_v2.ckpt) can be downloaded from the **Files** page. To generate double-exposure-style images, use the token **_dublex style_** or just **_dublex_**.

**Example 1:**

#### Example prompts and settings

<i>Galaxy man (image 1):</i><br>

**dublex man galaxy**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 3273014177, Size: 512x512_

<i>Emma Stone (image 2):</i><br>

**dublex style Emma Stone, galaxy**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 250257155, Size: 512x512_

<i>Frodo (image 6):</i><br>

**dublex style young Elijah Wood as (Frodo), portrait, dark nature**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 3717002975, Size: 512x512_

<br>

**Example 2:**

#### Example prompts and settings

<i>Scarlett Johansson (image 1):</i><br>

**dublex Scarlett Johansson, (haunted house), black background**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 3059560186, Size: 512x512_

<i>Frozen Elsa (image 3):</i><br>

**dublex style Elsa, ice castle**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 2867934627, Size: 512x512_

<i>Wolf (image 4):</i><br>

**dublex style wolf closeup, moon**<br>

_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 312924946, Size: 512x512_

<br>
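The settings lines in the examples above follow a simple `Key: value` pattern, so they can be turned into a dictionary when reproducing an image programmatically. This is a small sketch. The function `parse_settings` is hypothetical and assumes the comma-and-colon layout shown in the examples.

```python
def parse_settings(line):
    """Parse 'Key: value, Key: value' into a dict, converting integers."""
    settings = {}
    # Strip the surrounding markdown emphasis underscores before splitting.
    for part in line.strip("_").split(", "):
        key, value = part.split(": ", 1)
        settings[key] = int(value) if value.isdigit() else value
    return settings


settings = parse_settings(
    "_Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 3273014177, Size: 512x512_"
)
# The size is given as WIDTHxHEIGHT.
width, height = (int(n) for n in settings["Size"].split("x"))
print(settings)
print(width, height)
```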

<p>

This model was trained using Shivam's DreamBooth model on Google Colab for 2000 steps.

</p>

The previous version 1 of Double Exposure Diffusion is also available in the **Files** section.

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) |

Bman/DialoGPT-medium-harrypotter | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

- f1

model-index:

- name: platzi-distilroberta-base-mrpc-glue-tommasory

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: mrpc

split: train

args: mrpc

metrics:

- name: Accuracy

type: accuracy

value: 0.8308823529411765

- name: F1

type: f1

value: 0.8733944954128441

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# platzi-distilroberta-base-mrpc-glue-tommasory

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7098

- Accuracy: 0.8309

- F1: 0.8734

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.5196 | 1.09 | 500 | 0.5289 | 0.8260 | 0.8739 |

| 0.3407 | 2.18 | 1000 | 0.7098 | 0.8309 | 0.8734 |
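The final accuracy and F1 values can be tied back to raw prediction counts. The sketch below assumes the evaluation set has 408 examples (the size of the GLUE MRPC validation split), which the card does not state. With that assumption, the unrounded metric values from the card metadata determine the number of true positives, true negatives, and errors, although not how the errors split into false positives and false negatives.

```python
import math

n_eval = 408  # assumption: size of the GLUE MRPC validation split
reported_accuracy = 0.8308823529411765  # from the card metadata
reported_f1 = 0.8733944954128441        # from the card metadata

correct = round(reported_accuracy * n_eval)  # TP + TN
errors = n_eval - correct                    # FP + FN

# F1 = 2*TP / (2*TP + FP + FN)  =>  TP = F1 * errors / (2 * (1 - F1))
true_pos = round(reported_f1 * errors / (2 * (1 - reported_f1)))
true_neg = correct - true_pos

# Recompute both metrics from the recovered counts.
accuracy = (true_pos + true_neg) / n_eval
f1 = 2 * true_pos / (2 * true_pos + errors)
print(true_pos, true_neg, errors)
print(accuracy, f1)
```

The recomputed metrics agree with the reported ones, which supports the assumed evaluation-set size.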

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

BossLee/t5-gec | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"T5ForConditionalGeneration"

],

"model_type": "t5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 200,

"min_length": 30,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": "summarize: "

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to German: "

},

"translation_en_to_fr": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to French: "

},

"translation_en_to_ro": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to Romanian: "

}

}

} | 6 | null | depressed man sitting at a bar drinking whisky and smoking a cigarette |

Botslity/Bot | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: distilgpt2-witcherbooks-clm

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# distilgpt2-witcherbooks-clm

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.6.1

- Tokenizers 0.13.2

|

Branex/gpt-neo-2.7B | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

### Player on Stable Diffusion via Dreambooth trained on the [fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

#### Model by Laughify

This is the Stable Diffusion model fine-tuned on the Player concept, taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt(s)`: ****

You can also train your own concepts and upload them to the library by using [the fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb).

You can run your new concept via A1111 Colab: [Fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Sample pictures of this concept:

|

Brayan/CNN_Brain_Tumor | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the squad dataset.

## Model description

This is a first attempt at following the directions from the Hugging Face course. It was run on Colab and a private server.

## Intended uses & limitations

This model is fine-tuned for extractive question answering.

## Training and evaluation data

SQuAD

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Brona/poc_de | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9245

- name: F1

type: f1

value: 0.9245878206545592

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2259

- Accuracy: 0.9245

- F1: 0.9246

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8516 | 1.0 | 250 | 0.3235 | 0.9055 | 0.9024 |

| 0.2547 | 2.0 | 500 | 0.2259 | 0.9245 | 0.9246 |
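The Step column can be sanity-checked against the batch size. The sketch below assumes a single device, no gradient accumulation, and that the last partial batch is kept; the 16,000-example figure for the emotion training split is also an assumption, since the card does not state it.

```python
import math


def steps_per_epoch(num_examples, batch_size):
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)


def example_count_bounds(steps, batch_size):
    """Smallest and largest dataset sizes that yield `steps` steps per epoch."""
    return (steps - 1) * batch_size + 1, steps * batch_size


print(steps_per_epoch(16_000, 64))    # 250
print(example_count_bounds(250, 64))  # (15937, 16000)
```

With a batch size of 64, the 250 steps per epoch in the table are consistent with a training split of between 15,937 and 16,000 examples.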

### Framework versions

- Transformers 4.13.0

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

CAMeL-Lab/bert-base-arabic-camelbert-mix-pos-egy | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 62 | null | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

# neonHorror

This is a Stable Diffusion model about horror illustrations with a little bit of neon lights.

Some recommendations: the magic word for your prompts is neonHorror. You can write prompts such as:

request, in neonHorror style

or

an illustration of request, in neonHorror style

or

neonHorror, request

PS: you can replace 'request' with a person, character, etc.
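The three prompt patterns above can be generated programmatically. This is only a convenience sketch: the helper name `build_prompts` and the sample subject are made up for illustration, and the resulting strings still have to be passed to whatever Stable Diffusion front end you use.

```python
STYLE_TOKEN = "neonHorror"

# The three patterns suggested in this card, with placeholders.
TEMPLATES = (
    "{request}, in {token} style",
    "an illustration of {request}, in {token} style",
    "{token}, {request}",
)


def build_prompts(request, token=STYLE_TOKEN):
    """Return one prompt per template with the subject filled in."""
    return [t.format(request=request, token=token) for t in TEMPLATES]


for prompt in build_prompts("a lighthouse keeper"):
    print(prompt)
```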

If you enjoy my work, please consider supporting me:

[Buy Me a Coffee](https://www.buymeacoffee.com/elrivx)

Examples:

<img src=https://imgur.com/qFz4YCE.png width=30% height=30%>

<img src=https://imgur.com/H3zsCIP.png width=30% height=30%>

<img src=https://imgur.com/KcgTQEE.png width=30% height=30%>

<img src=https://imgur.com/5p6sUQk.png width=30% height=30%>

<img src=https://imgur.com/U1rpAQq.png width=30% height=30%>

<img src=https://imgur.com/lfHCbiV.png width=30% height=30%>

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-did-nadi | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 71 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: bert-base-multilingual-cased-finetuned-squad-squadv

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-cased-finetuned-squad-squadv

This model is a fine-tuned version of [monakth/bert-base-multilingual-cased-finetuned-squad](https://huggingface.co/monakth/bert-base-multilingual-cased-finetuned-squad) on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-sixteenth | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 26 | null | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: farsi_lastname_classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# farsi_lastname_classifier

This model is a fine-tuned version of [microsoft/deberta-v3-small](https://huggingface.co/microsoft/deberta-v3-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0436

- Pearson: 0.9325
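Pearson here is the linear correlation between predicted and reference scores. A minimal sketch of the computation is given below; the sample values are made up, and this is not the metric code used during training.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up scores for illustration only.
targets = [0.0, 0.25, 0.5, 0.75, 1.0]
predictions = [0.1, 0.2, 0.6, 0.7, 0.9]
print(round(pearson(targets, predictions), 4))
```

A value near 1 means predictions rise and fall with the references; it says nothing about whether they are offset or rescaled, which is why the loss is reported alongside it.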

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 8e-05

- train_batch_size: 128

- eval_batch_size: 256

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- mixed_precision_training: Native AMP
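To make the `cosine` scheduler and `lr_scheduler_warmup_ratio: 0.1` settings concrete, the sketch below computes the learning rate per step. It assumes 120 total steps (10 epochs of 12 steps, per the step counts reported for this run) and a half-cosine decay to zero; the function name `cosine_lr` is hypothetical.

```python
import math


def cosine_lr(step, base_lr=8e-5, total_steps=120, warmup_ratio=0.1):
    """Linear warmup to `base_lr`, then half-cosine decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


# Start of warmup, mid-warmup, peak, midpoint of decay, final step.
for step in (0, 6, 12, 66, 120):
    print(step, cosine_lr(step))
```

Under these assumptions the warmup covers the first 12 steps, which is exactly the first epoch.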

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| No log | 1.0 | 12 | 0.2989 | 0.6985 |

| No log | 2.0 | 24 | 0.1378 | 0.7269 |

| No log | 3.0 | 36 | 0.0459 | 0.9122 |

| No log | 4.0 | 48 | 0.0454 | 0.9304 |

| No log | 5.0 | 60 | 0.0564 | 0.9168 |

| No log | 6.0 | 72 | 0.0434 | 0.9315 |

| No log | 7.0 | 84 | 0.0452 | 0.9254 |

| No log | 8.0 | 96 | 0.0381 | 0.9320 |

| No log | 9.0 | 108 | 0.0441 | 0.9327 |

| No log | 10.0 | 120 | 0.0436 | 0.9325 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

CAMeL-Lab/bert-base-arabic-camelbert-msa | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,967 | null | ---

tags:

- conversational

---

# RedBot made from DialoGPT-medium |

CLTL/icf-levels-adm | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: farsi_lastname_classifier_1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# farsi_lastname_classifier_1

This model is a fine-tuned version of [microsoft/deberta-v3-small](https://huggingface.co/microsoft/deberta-v3-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0482

- Pearson: 0.9232

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 8e-05

- train_batch_size: 128

- eval_batch_size: 256

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| No log | 1.0 | 12 | 0.2705 | 0.7018 |

| No log | 2.0 | 24 | 0.0993 | 0.7986 |

| No log | 3.0 | 36 | 0.0804 | 0.8347 |

| No log | 4.0 | 48 | 0.0433 | 0.9246 |

| No log | 5.0 | 60 | 0.0559 | 0.9176 |

| No log | 6.0 | 72 | 0.0465 | 0.9334 |

| No log | 7.0 | 84 | 0.0503 | 0.9154 |

| No log | 8.0 | 96 | 0.0438 | 0.9222 |

| No log | 9.0 | 108 | 0.0468 | 0.9260 |

| No log | 10.0 | 120 | 0.0482 | 0.9232 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

CalvinHuang/mt5-small-finetuned-amazon-en-es | [

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"transformers",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible"

]

| summarization | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 16 | null | ---

license: mit

---

### qingqingdezhaopian on Stable Diffusion via Dreambooth trained on the [fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

#### Model by liuwei33

This is the Stable Diffusion model fine-tuned on the qingqingdezhaopian concept, taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt(s)`: **15.png**

You can also train your own concepts and upload them to the library by using [the fast-DreamBooth.ipynb by TheLastBen](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb).

You can run your new concept via A1111 Colab: [Fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Sample pictures of this concept:

15.png

|

Cameron/BERT-mdgender-convai-binary | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

license: creativeml-openrail-m

---

portrait of a beautiful woman in the style of PatrickNagel

|

Capreolus/electra-base-msmarco | [

"pytorch",

"tf",

"electra",

"text-classification",

"arxiv:2008.09093",

"transformers"

]

| text-classification | {

"architectures": [

"ElectraForSequenceClassification"

],

"model_type": "electra",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 110 | null | ---

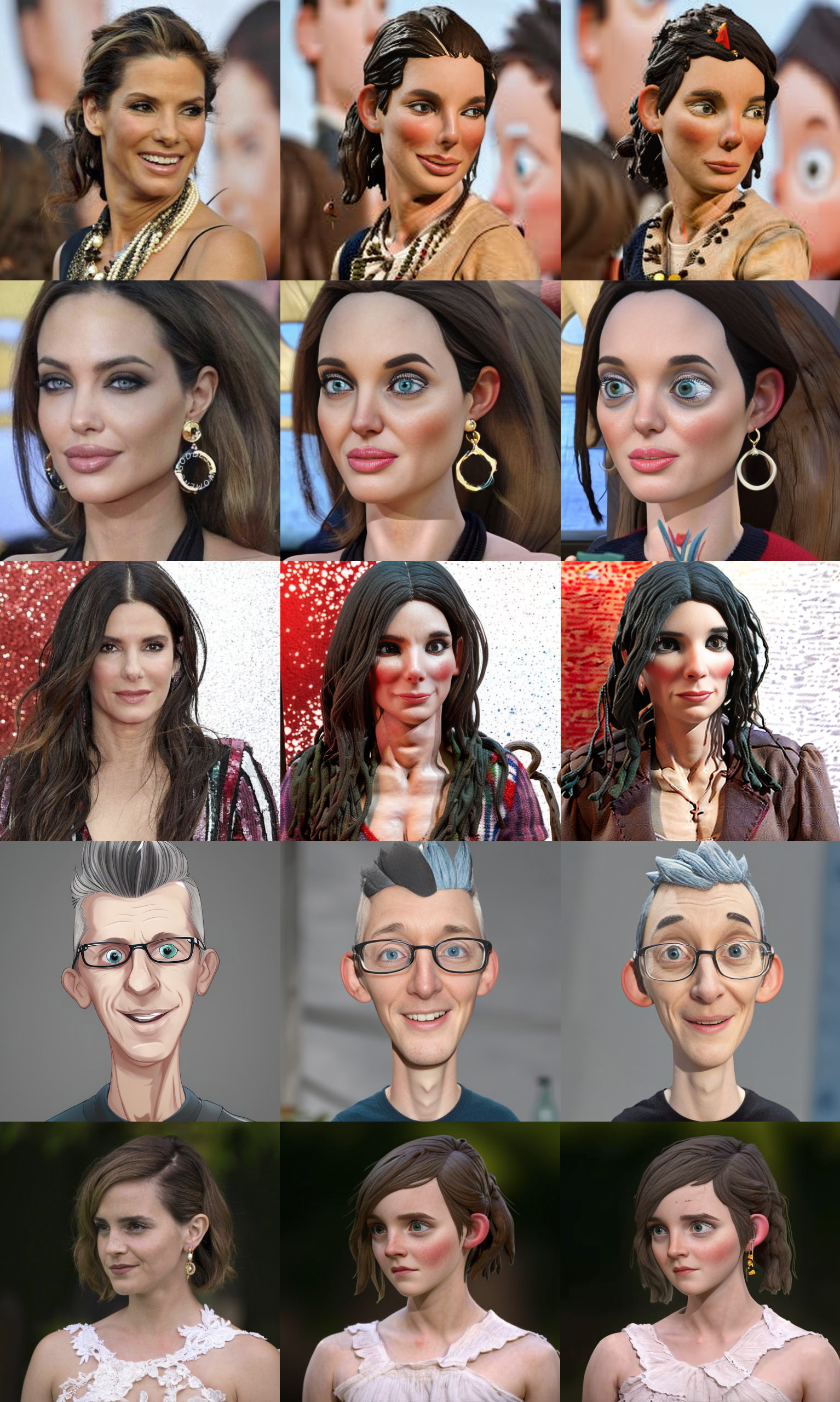

license: creativeml-openrail-m

---

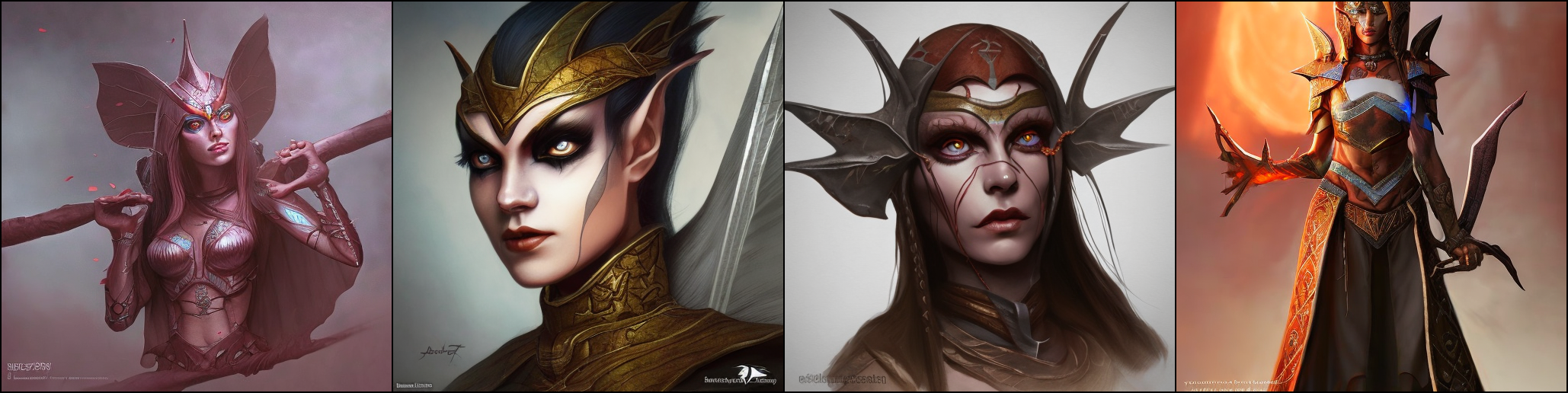

This model tries to mimic the stylized 3D look, but with a realistic twist on texture and overall material rendition.

Use "tdst style" (without quotes) to activate the model

As usual, for a closer likeness to your subject, either use brackets, as in [3dst style:10], or give the subject more emphasis, as in (subject:1.3) |

dccuchile/albert-base-spanish-finetuned-pawsx | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mT5_multilingual_XLSum-finetuned-liputan6-coba

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mT5_multilingual_XLSum-finetuned-liputan6-coba

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2713

- Rouge1: 0.3371

- Rouge2: 0.2029

- Rougel: 0.2927

- Rougelsum: 0.309
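For intuition about the ROUGE scores above, here is a deliberately simplified ROUGE-1 F-measure based on whitespace tokens. It is a sketch under stated assumptions (lowercasing only, no stemming or special tokenization), so its numbers will not match a full ROUGE implementation, and it is not the code that produced the values in this card.

```python
from collections import Counter


def rouge1_f(reference, candidate):
    """Unigram-overlap F-measure on whitespace tokens (no stemming)."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


print(rouge1_f("the cat sat on the mat", "the cat lay on a mat"))
```

ROUGE-2 applies the same idea to bigrams, and ROUGE-L replaces the overlap count with the longest common subsequence.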

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:---------:|

| 1.4304 | 1.0 | 4474 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

| 1.4286 | 2.0 | 8948 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

| 1.429 | 3.0 | 13422 | 1.2713 | 0.3371 | 0.2029 | 0.2927 | 0.309 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

dccuchile/albert-large-spanish-finetuned-pawsx | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-fr

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.fr

metrics:

- name: F1

type: f1

value: 0.8422468886646486

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2661

- F1: 0.8422

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.5955 | 1.0 | 191 | 0.3344 | 0.7932 |

| 0.2556 | 2.0 | 382 | 0.2923 | 0.8252 |

| 0.1741 | 3.0 | 573 | 0.2661 | 0.8422 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1

- Datasets 1.16.1

- Tokenizers 0.10.3

|

dccuchile/albert-tiny-spanish-finetuned-ner | [

"pytorch",

"albert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"AlbertForTokenClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7518

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 3.5791 | 1.0 | 554 | 2.2242 |
| 2.0656 | 2.0 | 1108 | 1.8537 |
| 1.6831 | 3.0 | 1662 | 1.7848 |
| 1.4963 | 4.0 | 2216 | 1.7518 |
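As a rough illustration of what `lr_scheduler_type: linear` implies for this run, the sketch below computes the learning rate at each epoch boundary from the reported base rate (1e-05) and total step count (2216). The card does not state a warmup length, so zero warmup is an assumption here, and `linear_lr` is a hypothetical helper written for this example, not a library function.

```python
def linear_lr(step, base_lr=1e-05, total_steps=2216, warmup_steps=0):
    """Learning rate at `step` for linear warmup followed by linear decay to zero.

    warmup_steps=0 is an assumption; the card does not report a warmup value.
    """
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Steps at the end of each epoch, taken from the training results table.
for step in (0, 554, 1108, 1662, 2216):
    print(f"step {step:4d}: lr = {linear_lr(step):.3e}")
```

Under these assumptions the rate falls by a quarter of its starting value each epoch and reaches zero at the final step.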

### Framework versions

- Transformers 4.24.0

- PyTorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

dccuchile/bert-base-spanish-wwm-cased-finetuned-pawsx | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilroberta-base-finetuned-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-base-finetuned-wikitext2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8340

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.0843 | 1.0 | 2406 | 1.9226 |
| 1.9913 | 2.0 | 4812 | 1.8820 |
| 1.9597 | 3.0 | 7218 | 1.8214 |
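Language-model losses are often easier to compare as perplexities. The sketch below converts the validation losses reported in this card, assuming each value is a mean cross-entropy in nats per predicted token (the card does not state this explicitly). `perplexity` is a hypothetical helper written for this example.

```python
import math


def perplexity(loss):
    """Convert a mean cross-entropy loss (assumed to be in nats) to perplexity."""
    return math.exp(loss)


# Validation losses per epoch, copied from the training results table.
validation_losses = {1.0: 1.9226, 2.0: 1.8820, 3.0: 1.8214}

for epoch, loss in validation_losses.items():
    print(f"epoch {epoch}: loss = {loss:.4f}, perplexity = {perplexity(loss):.2f}")

# Evaluation-set loss reported at the top of the card.
final_ppl = perplexity(1.8340)
print(f"reported evaluation loss 1.8340 -> perplexity = {final_ppl:.2f}")
```

Under that assumption, the reported evaluation loss of 1.8340 corresponds to a perplexity of roughly 6.26.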

### Framework versions

- Transformers 4.24.0

- PyTorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

dccuchile/bert-base-spanish-wwm-cased-finetuned-qa-mlqa | [

"pytorch",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {