modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |

|---|---|---|---|---|---|---|

Davlan/mbart50-large-yor-eng-mt | [

"pytorch",

"mbart",

"text2text-generation",

"arxiv:2103.08647",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MBartForConditionalGeneration"

],

"model_type": "mbart",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | Access to model ThomasGerald/MBARTHEZ-QG is restricted and you are not in the authorized list. Visit https://huggingface.co/ThomasGerald/MBARTHEZ-QG to ask for access. |

Davlan/mt5_base_eng_yor_mt | [

"pytorch",

"mt5",

"text2text-generation",

"arxiv:2103.08647",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
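The masked averaging performed by `mean_pooling` can be checked by hand on a tiny input. The following is a minimal sketch in plain Python, assuming toy 2-dimensional token vectors; the helper name `masked_mean` is hypothetical and is not part of the model's code.

```python
def masked_mean(token_embeddings, attention_mask, eps=1e-9):
    """Average token vectors, ignoring positions where the mask is 0."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        for i in range(dim):
            summed[i] += vector[i] * keep
    # Same role as torch.clamp(..., min=1e-9): avoid dividing by zero
    count = max(sum(attention_mask), eps)
    return [value / count for value in summed]


# Two real tokens followed by one padding token (mask 0)
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
pooled = masked_mean(tokens, mask)
print(pooled)
```

The padding vector does not influence the result because its mask entry is 0, which is the reason the attention mask is passed to the pooling function.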

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 433 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 433,

"warmup_steps": 44,

"weight_decay": 0.01

}

```
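The `WarmupLinear` entry together with `warmup_steps` and `steps_per_epoch` describes how the learning rate changes during training. The sketch below assumes that `WarmupLinear` means a linear ramp from 0 to `lr` followed by a linear decay to 0, and that the total number of steps is `epochs * steps_per_epoch = 433`; the function name `warmup_linear_lr` is hypothetical.

```python
def warmup_linear_lr(step, base_lr=2e-05, warmup_steps=44, total_steps=433):
    """Learning rate at a given optimizer step (assumed schedule shape)."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 22, 44, 240, 433):
    print(step, warmup_linear_lr(step))
```

With these values the warmup covers roughly the first 10% of training (44 of 433 steps).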

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Davlan/naija-twitter-sentiment-afriberta-large | [

"pytorch",

"tf",

"xlm-roberta",

"text-classification",

"arxiv:2201.08277",

"transformers",

"has_space"

]

| text-classification | {

"architectures": [

"XLMRobertaForSequenceClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 61 | null | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: DLL888/bert-base-uncased-squad

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# DLL888/bert-base-uncased-squad

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the [SQuAD](https://huggingface.co/datasets/squad) dataset.

It achieves the following results on the evaluation set:

- Exact Match: 80.21759697256385

- F1: 87.77849998885436
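For readers unfamiliar with these two metrics, the sketch below shows how Exact Match and token-level F1 are commonly computed for a single prediction in SQuAD-style evaluation. It assumes the usual answer normalization (lowercasing, removing punctuation and the articles a/an/the); the function names are illustrative, and the card does not state which evaluation script produced the numbers above. The reported values would be percentages averaged over the evaluation set.

```python
import re
import string
from collections import Counter


def normalize_answer(text):
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def f1_score(prediction, reference):
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower."))
print(f1_score("in Paris France", "Paris"))
```

F1 gives partial credit when a predicted span overlaps the reference, which is why it is higher than Exact Match here.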

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training Machine

Trained in Google Colab Pro with the following specs:

- A100-SXM4-40GB

- NVIDIA-SMI 460.32.03

- Driver Version: 460.32.03

- CUDA Version: 11.2

Training took about 26 minutes for two epochs.

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 10564, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 500, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: mixed_float16

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Validation Loss | Validation End Logits Accuracy | Validation Start Logits Accuracy | Epoch |

|:----------:|:-------------------------:|:---------------------------:|:---------------:|:------------------------------:|:--------------------------------:|:-----:|

| 1.4348 | 0.6368 | 0.5974 | 1.0155 | 0.7193 | 0.6825 | 0 |

| 0.8072 | 0.7735 | 0.7320 | 0.9990 | 0.7302 | 0.6983 | 1 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-finetuned-amharic | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 401 | 2022-12-01T14:31:20Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 653.50 +/- 137.33

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga sayby -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga sayby -f logs/

rl_zoo3 enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga sayby

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
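The two exploration entries describe a linearly decaying epsilon for epsilon-greedy action selection. The sketch below assumes an initial epsilon of 1.0 (it is not listed among the hyperparameters above) and a linear decay over the first `exploration_fraction` of training; the function name `epsilon_at` is hypothetical.

```python
def epsilon_at(timestep, n_timesteps=1_000_000, exploration_fraction=0.1,
               initial_eps=1.0, final_eps=0.01):
    """Exploration rate at a given environment step (assumed linear decay)."""
    progress = min(timestep / n_timesteps, 1.0)
    if progress >= exploration_fraction:
        # Decay phase is over: stay at the final value
        return final_eps
    slope = (final_eps - initial_eps) / exploration_fraction
    return initial_eps + progress * slope


for step in (0, 50_000, 100_000, 500_000):
    print(step, epsilon_at(step))
```

Under these assumptions the decay ends at step 100,000, which is the same value as `learning_starts`.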

|

Davlan/xlm-roberta-base-finetuned-chichewa | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: enlm-roberta-81-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# enlm-roberta-81-imdb

This model is a fine-tuned version of [manirai91/enlm-r](https://huggingface.co/manirai91/enlm-r) on the imdb dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.06

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.11.0

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-finetuned-english | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | 2022-12-01T14:34:41Z | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

The embeddings in this repository were trained for the 768px [Stable Diffusion v2.0](https://huggingface.co/stabilityai/stable-diffusion-2) model. The embeddings should work on any model that uses SD v2.0 as a base.

Currently the kc32-v4-5000.pt & kc16-v4-5000.pt embeddings seem to perform the best.

**Knollingcase v1**

The v1 embeddings were trained for 4000 iterations with a batch size of 2, a text dropout of 10%, and 16 vectors using Automatic1111's WebUI. A total of 69 training images with high-quality captions were used.

**Knollingcase v2**

The v2 embeddings were trained for 5000 iterations with a batch size of 4, a text dropout of 10%, and 16 vectors using Automatic1111's WebUI. A total of 78 training images with high-quality captions were used.

**Knollingcase v3**

The v3 embeddings were trained for 4000-6250 iterations with a batch size of 4, a text dropout of 10%, and 16 vectors using Automatic1111's WebUI. A total of 86 training images with high-quality captions were used.

<div align="center">

<img src="https://huggingface.co/ProGamerGov/knollingcase-embeddings-sd-v2-0/resolve/main/cruise_ship_on_wave_kc16-v3-6250.png">

</div>

* [Full Image](https://huggingface.co/ProGamerGov/knollingcase-embeddings-sd-v2-0/resolve/main/cruise_ship_on_wave_kc16-v3-6250.png)

**Knollingcase v4**

The v4 embeddings were trained for 4000-6250 iterations with a batch size of 4 and a text dropout of 10% using Automatic1111's WebUI. A total of 116 training images with high-quality captions were used.

<div align="center">

<img src="https://huggingface.co/ProGamerGov/knollingcase-embeddings-sd-v2-0/resolve/main/v4_size_768_t4x11.jpg">

</div>

* [Full Image](https://huggingface.co/ProGamerGov/knollingcase-embeddings-sd-v2-0/resolve/main/v4_size_768_t4x11.jpg)

**Usage**

To use the embeddings, download and then rename the files to whatever trigger word you want to use. They were trained with kc8, kc16, kc32, but any trigger word should work.
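The renaming step can be scripted. The following is a minimal sketch, assuming the embedding file has already been downloaded to a local folder; the helper name `rename_embedding` and any paths passed to it are illustrative.

```python
from pathlib import Path


def rename_embedding(embedding_path, trigger_word):
    """Rename an embedding file so its stem becomes the trigger word."""
    source = Path(embedding_path)
    target = source.with_name(trigger_word + source.suffix)
    if target.exists():
        raise FileExistsError(f"{target} already exists")
    source.rename(target)
    return target


# Example (hypothetical local path):
# rename_embedding("downloads/kc32-v4-5000.pt", "kc32")
```

The file extension is kept, so only the part used as the trigger word changes.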

The knollingcase style shows a concept inside a sleek (sometimes sci-fi) display case with transparent walls and a minimalistic background.

Suggested prompts:

```

<concept>, micro-details, photorealism, photorealistic, <kc-vx-iter>

photorealistic <concept>, very detailed, scifi case, <kc-vx-iter>

<concept>, very detailed, scifi transparent case, <kc-vx-iter>

```

Suggested negative prompts:

```

blurry, toy, cartoon, animated, underwater, photoshop

```

Suggested samplers:

DPM++ SDE Karras (used for the example images) or DPM++ 2S a Karras

|

Davlan/xlm-roberta-base-finetuned-igbo | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 68 | null | ---

language:

- en

license: creativeml-openrail-m

thumbnail: "https://huggingface.co/Guizmus/MosaicArt/resolve/main/showcase.jpg"

tags:

- stable-diffusion

- text-to-image

- image-to-image

---

# Mosaic Art

## Details

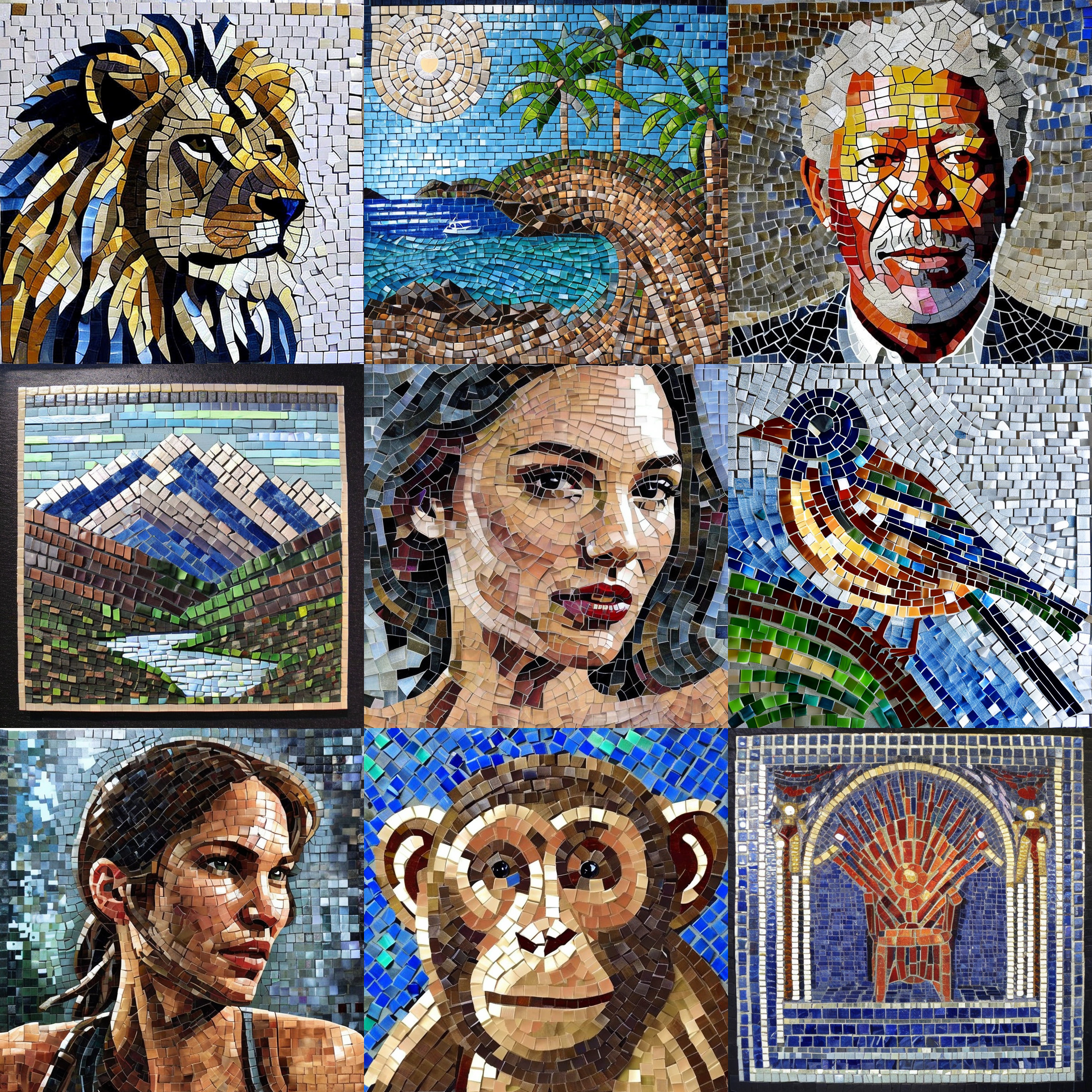

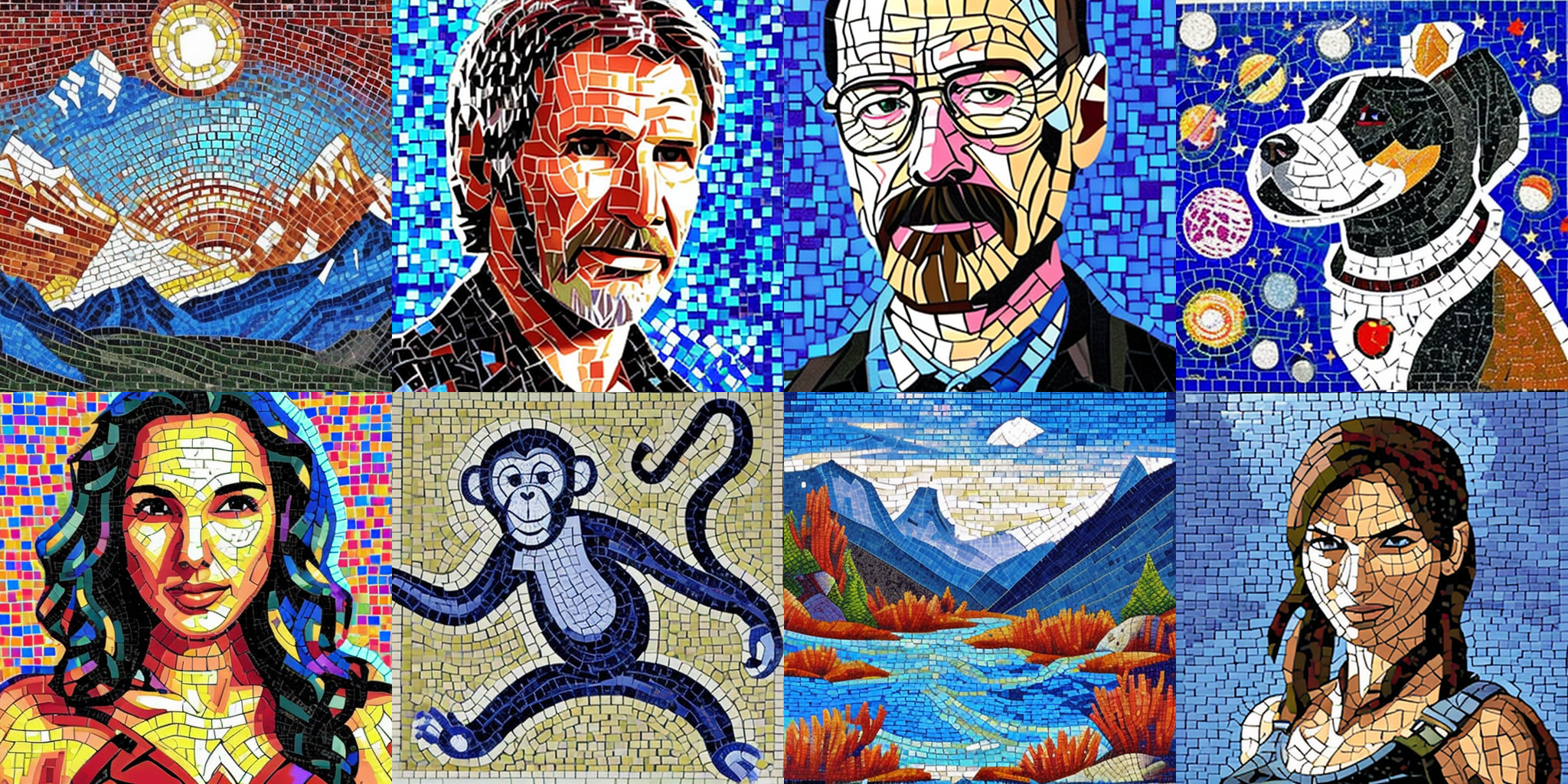

This is a Dreamboothed Stable Diffusion model trained on pictures of mosaic art.

The total dataset is made of 46 pictures. V2 was trained on [Stable Diffusion 2.1 768](https://huggingface.co/stabilityai/stable-diffusion-2-1). I used [StableTuner](https://github.com/devilismyfriend/StableTuner) to do the training, using full captions on the pictures with almost no recurring words outside the main concept, so that no additional regularisation was needed. 6 epochs of 40 repeats with a learning rate of 1e-6 were used, with prior preservation.

V1 was trained on [runwayml 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) and the [new VAE](https://huggingface.co/stabilityai/sd-vae-ft-mse). I used [EveryDream](https://github.com/victorchall/EveryDream-trainer) to do the training, using full captions on the pictures with almost no recurring words outside the main concept, so that no additional regularisation was needed. Out of epochs e0 to e11, e8 was selected as the best application of the style without overtraining. Prior preservation was found to be good. In total, 9 epochs of 40 repeats with a learning rate of 1e-6 were used.

The token "Mosaic Art" will bring in the new concept, trained as a style.

The recommended sampling is k_Euler_a or DPM++ 2M Karras with 20 steps and a CFG scale of 7.5.

## Model v2

[CKPT v2](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v2.ckpt)

[YAML v2](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v2.yaml)

## Model v1

[CKPT v1](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v1.ckpt)

[CKPT v1 with ema weights](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v1_ema.ckpt)

[Dataset](https://huggingface.co/Guizmus/MosaicArt/resolve/main/dataset_v1.zip)

## 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information, please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion) pipeline documentation.

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or FLAX/JAX.

```python

from diffusers import StableDiffusionPipeline

import torch

model_id = "Guizmus/MosaicArt"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "Mosaic Art dog on the moon"

image = pipe(prompt).images[0]

image.save("./MosaicArt.png")

``` |

Davlan/xlm-roberta-base-finetuned-kinyarwanda | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 61 | null | Access to model corumba/desenhista is restricted and you are not in the authorized list. Visit https://huggingface.co/corumba/desenhista to ask for access. |

Davlan/xlm-roberta-base-finetuned-luganda | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 11 | null | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
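Once sentence embeddings are available, semantic search usually comes down to ranking candidates by cosine similarity to a query vector. The sketch below uses plain Python lists and toy 2-dimensional vectors in place of real 768-dimensional embeddings; the function names are illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, corpus):
    """Return corpus indices ordered from most to least similar to the query."""
    scores = [(cosine_similarity(query, vec), idx) for idx, vec in enumerate(corpus)]
    return [idx for _, idx in sorted(scores, key=lambda pair: pair[0], reverse=True)]


corpus = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.1]
ranking = rank_by_similarity(query, corpus)
print(ranking)
```

In practice the lists would be replaced by rows of the `sentence_embeddings` tensor, converted with `.tolist()` or compared with a tensor library.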

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 26560 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 26560,

"warmup_steps": 2656,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Davlan/xlm-roberta-base-finetuned-yoruba | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 29 | null | This model is said to be a high-quality female anatomy model, though its exact design aesthetic (i.e. anime or semi-realistic) remains unclear.

The only known fact is that the renowned Berry Mix model was reportedly created using this model as its base.

It makes realistic humans. |

Dayout/test | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: creativeml-openrail-m

---

### MODEL_CONFIG_DDIM_TRAIN

```

MODEL_CONFIG_DDIM = {

"_class_name": "StableDiffusionPipeline",

"_diffusers_version": "0.6.0",

"scheduler": [

"diffusers",

"DDIMScheduler"

],

"text_encoder": [

"transformers",

"CLIPTextModel"

],

"tokenizer": [

"transformers",

"CLIPTokenizer"

],

"unet": [

"diffusers",

"UNet2DConditionModel"

],

"vae": [

"diffusers",

"AutoencoderKL"

]

}

```

### MODEL_CONFIG_DDIM_SAVE

```

MODEL_CONFIG_DDIM_SAVE = {

"_class_name": "StableDiffusionPipeline",

"_diffusers_version": "0.9.0.dev0",

"scheduler": [

"diffusers",

"DDIMScheduler"

],

"text_encoder": [

"transformers",

"CLIPTextModel"

],

"tokenizer": [

"transformers",

"CLIPTokenizer"

],

"unet": [

"diffusers",

"UNet2DConditionModel"

],

"vae": [

"diffusers",

"AutoencoderKL"

]

}

```

### SCHEDULER_CONFIG_DDIM_TRAIN

```

SCHEDULER_CONFIG_DDIM_TRAIN = {

"_class_name": "DDIMScheduler",

"_diffusers_version": "0.6.0",

"beta_end": 0.012,

"beta_schedule": "scaled_linear",

"beta_start": 0.00085,

"clip_sample": false,

"num_train_timesteps": 1000,

"set_alpha_to_one": false,

"skip_prk_steps": true,

"steps_offset": 1,

"trained_betas": null

}

```
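The `beta_start`, `beta_end`, `beta_schedule` and `num_train_timesteps` entries define the noise schedule. The sketch below assumes that `scaled_linear` means linear interpolation between the square roots of the two endpoints followed by squaring; it is written in plain Python and the function name `scaled_linear_betas` is hypothetical.

```python
def scaled_linear_betas(beta_start=0.00085, beta_end=0.012, num_train_timesteps=1000):
    """Betas spaced linearly in sqrt-space, then squared (assumed definition)."""
    start, end = beta_start ** 0.5, beta_end ** 0.5
    step = (end - start) / (num_train_timesteps - 1)
    return [(start + i * step) ** 2 for i in range(num_train_timesteps)]


betas = scaled_linear_betas()

# Cumulative product of (1 - beta): the fraction of signal kept at each timestep
alphas_cumprod = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alphas_cumprod.append(running)

print(betas[0], betas[-1])
print(alphas_cumprod[0], alphas_cumprod[-1])
```

The cumulative product shrinks as the timestep grows, meaning less of the original signal remains at later timesteps.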

### SCHEDULER_CONFIG_DDIM_SAVE

```

SCHEDULER_CONFIG_DDIM_SAVE = {

"_class_name": "DDIMScheduler",

"_diffusers_version": "0.9.0.dev0",

"beta_end": 0.012,

"beta_schedule": "scaled_linear",

"beta_start": 0.00085,

"clip_sample": false,

"num_train_timesteps": 1000,

"prediction_type": "epsilon",

"set_alpha_to_one": false,

"skip_prk_steps": true,

"steps_offset": 1,

"trained_betas": null

}

``` |

Declan/Breitbart_model_v4 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | 2022-12-01T16:36:39Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: distilbert-base-uncased-finetuned-sngp-for-qa-squad-seed-999

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-sngp-for-qa-squad-seed-999

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad_v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 6.0586

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
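As a rough illustration of what `lr_scheduler_type: linear` implies for these settings, the sketch below computes a linearly decaying learning rate. It assumes no warmup and takes the total of 14 optimizer steps from this card's training results; `linear_lr` is a hypothetical helper, not the Trainer's own code.

```python
def linear_lr(step, base_lr=2e-05, total_steps=14, warmup_steps=0):
    """Linear warmup (optional) followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay from base_lr at the end of warmup down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


schedule = [linear_lr(s) for s in range(15)]
for s, lr in enumerate(schedule):
    print(s, lr)
```

With only 14 steps, the learning rate halves by step 7 and reaches zero at the end of the second epoch.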

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 7 | 5.9735 |

| No log | 2.0 | 14 | 6.0586 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Declan/ChicagoTribune_model_v5 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('VlakoResker/sd-class-butterflies-32')

image = pipeline().images[0]

image

```

|

Declan/FoxNews_model_v1 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('bowwwave/sd-class-butterflies-64')

image = pipeline().images[0]

image

```

|

Declan/HuffPost_model_v4 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

datasets:

- tweet_eval

metrics:

- f1

- accuracy

model-index:

- name: cardiffnlp/roberta-base-hate

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: tweet_eval

type: hate

split: test

metrics:

- name: Micro F1 (tweet_eval/hate)

type: micro_f1_tweet_eval/hate

value: 0.5131313131313131

- name: Macro F1 (tweet_eval/hate)

type: macro_f1_tweet_eval/hate

value: 0.4634195952763752

- name: Accuracy (tweet_eval/hate)

type: accuracy_tweet_eval/hate

value: 0.5131313131313131

pipeline_tag: text-classification

widget:

- text: Get the all-analog Classic Vinyl Edition of "Takin Off" Album from {@herbiehancock@} via {@bluenoterecords@} link below {{URL}}

example_title: "topic_classification 1"

- text: Yes, including Medicare and social security saving👍

example_title: "sentiment 1"

- text: All two of them taste like ass.

example_title: "offensive 1"

- text: If you wanna look like a badass, have drama on social media

example_title: "irony 1"

- text: Whoever just unfollowed me you a bitch

example_title: "hate 1"

- text: I love swimming for the same reason I love meditating...the feeling of weightlessness.

example_title: "emotion 1"

- text: Beautiful sunset last night from the pontoon @TupperLakeNY

example_title: "emoji 1"

---

# cardiffnlp/roberta-base-hate

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the [`tweet_eval (hate)`](https://huggingface.co/datasets/tweet_eval) dataset via [`tweetnlp`](https://github.com/cardiffnlp/tweetnlp).

The training split is `train`, and hyperparameters were tuned on the validation split `validation`.

The following metrics are achieved on the test split `test` ([link](https://huggingface.co/cardiffnlp/roberta-base-hate/raw/main/metric.json)).

- F1 (micro): 0.5131313131313131

- F1 (macro): 0.4634195952763752

- Accuracy: 0.5131313131313131
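Micro F1 and accuracy are identical in the list above. That is expected for single-label classification, where every wrong prediction counts as one false positive and one false negative. The sketch below illustrates this on made-up toy labels (not taken from `tweet_eval`); `f1_scores` is a hypothetical helper.

```python
def f1_scores(y_true, y_pred):
    """Return (micro F1, macro F1, accuracy) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = {c: 0 for c in labels}
    fp = {c: 0 for c in labels}
    fn = {c: 0 for c in labels}
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    accuracy = sum(tp.values()) / len(y_true)
    return micro, macro, accuracy


# Toy, imbalanced example: four items of class 0 and two of class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
micro, macro, accuracy = f1_scores(y_true, y_pred)
print(micro, macro, accuracy)
```

Macro F1 averages the per-class scores without weighting by class size, so it drops below micro F1 when the smaller class is predicted less well.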

### Usage

Install tweetnlp via pip.

```shell

pip install tweetnlp

```

Load the model in Python.

```python

import tweetnlp

model = tweetnlp.Classifier("cardiffnlp/roberta-base-hate", max_length=128)

model.predict('Get the all-analog Classic Vinyl Edition of "Takin Off" Album from {@herbiehancock@} via {@bluenoterecords@} link below {{URL}}')

```

### Reference

```

@inproceedings{camacho-collados-etal-2022-tweetnlp,

title={{T}weet{NLP}: {C}utting-{E}dge {N}atural {L}anguage {P}rocessing for {S}ocial {M}edia},

author={Camacho-Collados, Jose and Rezaee, Kiamehr and Riahi, Talayeh and Ushio, Asahi and Loureiro, Daniel and Antypas, Dimosthenis and Boisson, Joanne and Espinosa-Anke, Luis and Liu, Fangyu and Mart{\'\i}nez-C{\'a}mara, Eugenio and others},

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing: System Demonstrations",

month = nov,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

Declan/NPR_model_v3 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | null | ---

datasets:

- tweet_eval

metrics:

- f1

- accuracy

model-index:

- name: cardiffnlp/twitter-roberta-base-2021-124m-emoji

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: tweet_eval

type: emoji

split: test

metrics:

- name: Micro F1 (tweet_eval/emoji)

type: micro_f1_tweet_eval/emoji

value: 0.46162

- name: Macro F1 (tweet_eval/emoji)

type: macro_f1_tweet_eval/emoji

value: 0.34612351090521765

- name: Accuracy (tweet_eval/emoji)

type: accuracy_tweet_eval/emoji

value: 0.46162

pipeline_tag: text-classification

widget:

- text: Get the all-analog Classic Vinyl Edition of "Takin Off" Album from {@herbiehancock@} via {@bluenoterecords@} link below {{URL}}

example_title: "topic_classification 1"

- text: Yes, including Medicare and social security saving👍

example_title: "sentiment 1"

- text: All two of them taste like ass.

example_title: "offensive 1"

- text: If you wanna look like a badass, have drama on social media

example_title: "irony 1"

- text: Whoever just unfollowed me you a bitch

example_title: "hate 1"

- text: I love swimming for the same reason I love meditating...the feeling of weightlessness.

example_title: "emotion 1"

- text: Beautiful sunset last night from the pontoon @TupperLakeNY

example_title: "emoji 1"

---

# cardiffnlp/twitter-roberta-base-2021-124m-emoji

This model is a fine-tuned version of [cardiffnlp/twitter-roberta-base-2021-124m](https://huggingface.co/cardiffnlp/twitter-roberta-base-2021-124m) on the [`tweet_eval (emoji)`](https://huggingface.co/datasets/tweet_eval) dataset via [`tweetnlp`](https://github.com/cardiffnlp/tweetnlp).

The training split is `train`, and hyperparameters were tuned on the validation split `validation`.

The following metrics are achieved on the test split `test` ([link](https://huggingface.co/cardiffnlp/twitter-roberta-base-2021-124m-emoji/raw/main/metric.json)).

- F1 (micro): 0.46162

- F1 (macro): 0.34612351090521765

- Accuracy: 0.46162

### Usage

Install tweetnlp via pip.

```shell

pip install tweetnlp

```

Load the model in Python.

```python

import tweetnlp

model = tweetnlp.Classifier("cardiffnlp/twitter-roberta-base-2021-124m-emoji", max_length=128)

model.predict('Get the all-analog Classic Vinyl Edition of "Takin Off" Album from {@herbiehancock@} via {@bluenoterecords@} link below {{URL}}')

```

### Reference

```

@inproceedings{camacho-collados-etal-2022-tweetnlp,

title={{T}weet{NLP}: {C}utting-{E}dge {N}atural {L}anguage {P}rocessing for {S}ocial {M}edia},

author={Camacho-Collados, Jose and Rezaee, Kiamehr and Riahi, Talayeh and Ushio, Asahi and Loureiro, Daniel and Antypas, Dimosthenis and Boisson, Joanne and Espinosa-Anke, Luis and Liu, Fangyu and Mart{\'\i}nez-C{\'a}mara, Eugenio and others},

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing: System Demonstrations",

month = nov,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

Declan/NPR_model_v6 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

language:

- gl

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small gl - Galician

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small gl - Galician

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.25.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Declan/WallStreetJournal_model_v1 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('shahukareem/sd-class-butterflies-64')

image = pipeline().images[0]

image

```

|

Declan/WallStreetJournal_model_v2 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | 2022-12-01T19:09:34Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: mdeberta_all

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mdeberta_all

This model is a fine-tuned version of [microsoft/mdeberta-v3-base](https://huggingface.co/microsoft/mdeberta-v3-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2148

- Aerospacemanufacturer Precision: 0.7073

- Aerospacemanufacturer Recall: 0.8406

- Aerospacemanufacturer F1: 0.7682

- Aerospacemanufacturer Number: 138

- Anatomicalstructure Precision: 0.6762

- Anatomicalstructure Recall: 0.7269

- Anatomicalstructure F1: 0.7006

- Anatomicalstructure Number: 227

- Artwork Precision: 0.5802

- Artwork Recall: 0.5802

- Artwork F1: 0.5802

- Artwork Number: 131

- Artist Precision: 0.7565

- Artist Recall: 0.7938

- Artist F1: 0.7747

- Artist Number: 1722

- Athlete Precision: 0.7195

- Athlete Recall: 0.7636

- Athlete F1: 0.7409

- Athlete Number: 719

- Carmanufacturer Precision: 0.6806

- Carmanufacturer Recall: 0.8176

- Carmanufacturer F1: 0.7429

- Carmanufacturer Number: 159

- Cleric Precision: 0.6867

- Cleric Recall: 0.5124

- Cleric F1: 0.5869

- Cleric Number: 201

- Clothing Precision: 0.5797

- Clothing Recall: 0.625

- Clothing F1: 0.6015

- Clothing Number: 128

- Disease Precision: 0.6262

- Disease Recall: 0.6768

- Disease F1: 0.6505

- Disease Number: 198

- Drink Precision: 0.7296

- Drink Recall: 0.8112

- Drink F1: 0.7682

- Drink Number: 143

- Facility Precision: 0.6439

- Facility Recall: 0.7203

- Facility F1: 0.6800

- Facility Number: 497

- Food Precision: 0.6786

- Food Recall: 0.5327

- Food F1: 0.5969

- Food Number: 214

- Humansettlement Precision: 0.8594

- Humansettlement Recall: 0.8792

- Humansettlement F1: 0.8692

- Humansettlement Number: 1689

- Medicalprocedure Precision: 0.6545

- Medicalprocedure Recall: 0.7606

- Medicalprocedure F1: 0.7036

- Medicalprocedure Number: 142

- Medication/vaccine Precision: 0.7183

- Medication/vaccine Recall: 0.765

- Medication/vaccine F1: 0.7409

- Medication/vaccine Number: 200

- Musicalgrp Precision: 0.7132

- Musicalgrp Recall: 0.7688

- Musicalgrp F1: 0.7400

- Musicalgrp Number: 372

- Musicalwork Precision: 0.7513

- Musicalwork Recall: 0.7052

- Musicalwork F1: 0.7275

- Musicalwork Number: 407

- Org Precision: 0.6335

- Org Recall: 0.6117

- Org F1: 0.6224

- Org Number: 667

- Otherloc Precision: 0.7514

- Otherloc Recall: 0.6205

- Otherloc F1: 0.6797

- Otherloc Number: 224

- Otherper Precision: 0.4558

- Otherper Recall: 0.5821

- Otherper F1: 0.5112

- Otherper Number: 859

- Otherprod Precision: 0.6076

- Otherprod Recall: 0.5543

- Otherprod F1: 0.5797

- Otherprod Number: 433

- Politician Precision: 0.6228

- Politician Recall: 0.4793

- Politician F1: 0.5417

- Politician Number: 603

- Privatecorp Precision: 0.7159

- Privatecorp Recall: 0.4884

- Privatecorp F1: 0.5806

- Privatecorp Number: 129

- Publiccorp Precision: 0.56

- Publiccorp Recall: 0.6914

- Publiccorp F1: 0.6188

- Publiccorp Number: 243

- Scientist Precision: 0.4545

- Scientist Recall: 0.4497

- Scientist F1: 0.4521

- Scientist Number: 189

- Software Precision: 0.7159

- Software Recall: 0.8046

- Software F1: 0.7577

- Software Number: 307

- Sportsgrp Precision: 0.7845

- Sportsgrp Recall: 0.8701

- Sportsgrp F1: 0.8251

- Sportsgrp Number: 385

- Sportsmanager Precision: 0.6667

- Sportsmanager Recall: 0.5361

- Sportsmanager F1: 0.5943

- Sportsmanager Number: 194

- Station Precision: 0.7406

- Station Recall: 0.8093

- Station F1: 0.7734

- Station Number: 194

- Symptom Precision: 0.6316

- Symptom Recall: 0.5581

- Symptom F1: 0.5926

- Symptom Number: 129

- Vehicle Precision: 0.5514

- Vehicle Recall: 0.6505

- Vehicle F1: 0.5969

- Vehicle Number: 206

- Visualwork Precision: 0.7538

- Visualwork Recall: 0.7951

- Visualwork F1: 0.7739

- Visualwork Number: 693

- Writtenwork Precision: 0.6913

- Writtenwork Recall: 0.6803

- Writtenwork F1: 0.6858

- Writtenwork Number: 563

- Overall Precision: 0.6928

- Overall Recall: 0.7142

- Overall F1: 0.7033

- Overall Accuracy: 0.9355
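The F1 values listed above are the harmonic mean of the corresponding precision and recall. The sketch below recomputes a few of them from the reported figures as a sanity check. `f1_from` is a hypothetical helper, and small differences can arise because the reported precision and recall are already rounded to four decimals.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the evaluation results above.
reported = {
    "Overall": (0.6928, 0.7142, 0.7033),
    "Aerospacemanufacturer": (0.7073, 0.8406, 0.7682),
    "Scientist": (0.4545, 0.4497, 0.4521),
}

for name, (p, r, f1) in reported.items():
    print(name, round(f1_from(p, r), 4), f1)
```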

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 15.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Aerospacemanufacturer Precision | Aerospacemanufacturer Recall | Aerospacemanufacturer F1 | Aerospacemanufacturer Number | Anatomicalstructure Precision | Anatomicalstructure Recall | Anatomicalstructure F1 | Anatomicalstructure Number | Artwork Precision | Artwork Recall | Artwork F1 | Artwork Number | Artist Precision | Artist Recall | Artist F1 | Artist Number | Athlete Precision | Athlete Recall | Athlete F1 | Athlete Number | Carmanufacturer Precision | Carmanufacturer Recall | Carmanufacturer F1 | Carmanufacturer Number | Cleric Precision | Cleric Recall | Cleric F1 | Cleric Number | Clothing Precision | Clothing Recall | Clothing F1 | Clothing Number | Disease Precision | Disease Recall | Disease F1 | Disease Number | Drink Precision | Drink Recall | Drink F1 | Drink Number | Facility Precision | Facility Recall | Facility F1 | Facility Number | Food Precision | Food Recall | Food F1 | Food Number | Humansettlement Precision | Humansettlement Recall | Humansettlement F1 | Humansettlement Number | Medicalprocedure Precision | Medicalprocedure Recall | Medicalprocedure F1 | Medicalprocedure Number | Medication/vaccine Precision | Medication/vaccine Recall | Medication/vaccine F1 | Medication/vaccine Number | Musicalgrp Precision | Musicalgrp Recall | Musicalgrp F1 | Musicalgrp Number | Musicalwork Precision | Musicalwork Recall | Musicalwork F1 | Musicalwork Number | Org Precision | Org Recall | Org F1 | Org Number | Otherloc Precision | Otherloc Recall | Otherloc F1 | Otherloc Number | Otherper Precision | Otherper Recall | Otherper F1 | Otherper Number | Otherprod Precision | Otherprod Recall | Otherprod F1 | Otherprod Number | Politician Precision | Politician Recall | Politician F1 | Politician Number | Privatecorp Precision | Privatecorp Recall | Privatecorp F1 | Privatecorp Number | Publiccorp Precision | Publiccorp Recall | Publiccorp F1 | Publiccorp Number | Scientist Precision | Scientist Recall | Scientist F1 | Scientist Number | Software Precision | Software Recall | Software F1 | Software Number | Sportsgrp Precision | Sportsgrp Recall | Sportsgrp F1 | Sportsgrp Number | Sportsmanager Precision | Sportsmanager Recall | Sportsmanager F1 | Sportsmanager Number | Station Precision | Station Recall | Station F1 | Station Number | Symptom Precision | Symptom Recall | Symptom F1 | Symptom Number | Vehicle Precision | Vehicle Recall | Vehicle F1 | Vehicle Number | Visualwork Precision | Visualwork Recall | Visualwork F1 | Visualwork Number | Writtenwork Precision | Writtenwork Recall | Writtenwork F1 | Writtenwork Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:------:|:---------------:|:-------------------------------:|:----------------------------:|:------------------------:|:----------------------------:|:-----------------------------:|:--------------------------:|:----------------------:|:--------------------------:|:-----------------:|:--------------:|:----------:|:--------------:|:----------------:|:-------------:|:---------:|:-------------:|:-----------------:|:--------------:|:----------:|:--------------:|:-------------------------:|:----------------------:|:------------------:|:----------------------:|:----------------:|:-------------:|:---------:|:-------------:|:------------------:|:---------------:|:-----------:|:---------------:|:-----------------:|:--------------:|:----------:|:--------------:|:---------------:|:------------:|:--------:|:------------:|:------------------:|:---------------:|:-----------:|:---------------:|:--------------:|:-----------:|:-------:|:-----------:|:-------------------------:|:----------------------:|:------------------:|:----------------------:|:--------------------------:|:-----------------------:|:-------------------:|:-----------------------:|:----------------------------:|:-------------------------:|:---------------------:|:-------------------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:-------------:|:----------:|:------:|:----------:|:------------------:|:---------------:|:-----------:|:---------------:|:------------------:|:---------------:|:-----------:|:---------------:|:-------------------:|:----------------:|:------------:|:----------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:-------------------:|:----------------:|:------------:|:----------------:|:------------------:|:---------------:|:-----------:|:---------------:|:-------------------:|:----------------:|:------------:|:----------------:|:-----------------------:|:--------------------:|:----------------:|:--------------------:|:-----------------:|:--------------:|:----------:|:--------------:|:-----------------:|:--------------:|:----------:|:--------------:|:-----------------:|:--------------:|:----------:|:--------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:-----------------:|:--------------:|:----------:|:----------------:|

| 0.2881 | 1.0 | 21353 | 0.2534 | 0.5191 | 0.6884 | 0.5919 | 138 | 0.5693 | 0.6872 | 0.6228 | 227 | 0.4366 | 0.4733 | 0.4542 | 131 | 0.7109 | 0.8055 | 0.7552 | 1722 | 0.6782 | 0.6801 | 0.6792 | 719 | 0.6552 | 0.7170 | 0.6847 | 159 | 0.4874 | 0.4826 | 0.485 | 201 | 0.4639 | 0.6016 | 0.5238 | 128 | 0.4799 | 0.7222 | 0.5766 | 198 | 0.55 | 0.6923 | 0.6130 | 143 | 0.5305 | 0.6640 | 0.5898 | 497 | 0.4042 | 0.7196 | 0.5176 | 214 | 0.8164 | 0.8792 | 0.8466 | 1689 | 0.5799 | 0.6901 | 0.6302 | 142 | 0.5945 | 0.755 | 0.6652 | 200 | 0.7445 | 0.6425 | 0.6898 | 372 | 0.6667 | 0.6634 | 0.6650 | 407 | 0.5585 | 0.5652 | 0.5618 | 667 | 0.6724 | 0.5223 | 0.5879 | 224 | 0.3835 | 0.4773 | 0.4253 | 859 | 0.5237 | 0.4596 | 0.4895 | 433 | 0.5176 | 0.4643 | 0.4895 | 603 | 0.4688 | 0.1163 | 0.1863 | 129 | 0.4207 | 0.6008 | 0.4949 | 243 | 0.4099 | 0.3492 | 0.3771 | 189 | 0.6686 | 0.7362 | 0.7008 | 307 | 0.7688 | 0.7948 | 0.7816 | 385 | 0.5136 | 0.5825 | 0.5459 | 194 | 0.6667 | 0.8041 | 0.7290 | 194 | 0.5588 | 0.1473 | 0.2331 | 129 | 0.4910 | 0.5291 | 0.5093 | 206 | 0.6662 | 0.7489 | 0.7052 | 693 | 0.6180 | 0.5861 | 0.6016 | 563 | 0.6136 | 0.6640 | 0.6378 | 0.9220 |

| 0.2195 | 2.0 | 42706 | 0.2288 | 0.6409 | 0.8406 | 0.7273 | 138 | 0.6908 | 0.6300 | 0.6590 | 227 | 0.5625 | 0.5496 | 0.5560 | 131 | 0.7336 | 0.8124 | 0.7710 | 1722 | 0.6466 | 0.8067 | 0.7178 | 719 | 0.6095 | 0.8050 | 0.6938 | 159 | 0.5848 | 0.4975 | 0.5376 | 201 | 0.5260 | 0.6328 | 0.5745 | 128 | 0.5427 | 0.6414 | 0.5880 | 198 | 0.7042 | 0.6993 | 0.7018 | 143 | 0.6157 | 0.7123 | 0.6604 | 497 | 0.6140 | 0.4907 | 0.5455 | 214 | 0.8454 | 0.8745 | 0.8597 | 1689 | 0.6125 | 0.6901 | 0.6490 | 142 | 0.6898 | 0.745 | 0.7163 | 200 | 0.7299 | 0.7339 | 0.7319 | 372 | 0.6901 | 0.7224 | 0.7059 | 407 | 0.6224 | 0.5907 | 0.6062 | 667 | 0.7312 | 0.6071 | 0.6634 | 224 | 0.4851 | 0.4750 | 0.4800 | 859 | 0.5994 | 0.4804 | 0.5333 | 433 | 0.5675 | 0.5091 | 0.5367 | 603 | 0.6905 | 0.4496 | 0.5446 | 129 | 0.5516 | 0.6379 | 0.5916 | 243 | 0.4570 | 0.3651 | 0.4059 | 189 | 0.7508 | 0.7459 | 0.7484 | 307 | 0.7833 | 0.8260 | 0.8040 | 385 | 0.7071 | 0.5103 | 0.5928 | 194 | 0.6920 | 0.7990 | 0.7416 | 194 | 0.4590 | 0.4341 | 0.4462 | 129 | 0.4717 | 0.7282 | 0.5725 | 206 | 0.7275 | 0.7821 | 0.7538 | 693 | 0.6618 | 0.6430 | 0.6523 | 563 | 0.6711 | 0.6946 | 0.6827 | 0.9317 |

| 0.1965 | 3.0 | 64059 | 0.2148 | 0.7073 | 0.8406 | 0.7682 | 138 | 0.6762 | 0.7269 | 0.7006 | 227 | 0.5802 | 0.5802 | 0.5802 | 131 | 0.7565 | 0.7938 | 0.7747 | 1722 | 0.7195 | 0.7636 | 0.7409 | 719 | 0.6806 | 0.8176 | 0.7429 | 159 | 0.6867 | 0.5124 | 0.5869 | 201 | 0.5797 | 0.625 | 0.6015 | 128 | 0.6262 | 0.6768 | 0.6505 | 198 | 0.7296 | 0.8112 | 0.7682 | 143 | 0.6439 | 0.7203 | 0.6800 | 497 | 0.6786 | 0.5327 | 0.5969 | 214 | 0.8594 | 0.8792 | 0.8692 | 1689 | 0.6545 | 0.7606 | 0.7036 | 142 | 0.7183 | 0.765 | 0.7409 | 200 | 0.7132 | 0.7688 | 0.7400 | 372 | 0.7513 | 0.7052 | 0.7275 | 407 | 0.6335 | 0.6117 | 0.6224 | 667 | 0.7514 | 0.6205 | 0.6797 | 224 | 0.4558 | 0.5821 | 0.5112 | 859 | 0.6076 | 0.5543 | 0.5797 | 433 | 0.6228 | 0.4793 | 0.5417 | 603 | 0.7159 | 0.4884 | 0.5806 | 129 | 0.56 | 0.6914 | 0.6188 | 243 | 0.4545 | 0.4497 | 0.4521 | 189 | 0.7159 | 0.8046 | 0.7577 | 307 | 0.7845 | 0.8701 | 0.8251 | 385 | 0.6667 | 0.5361 | 0.5943 | 194 | 0.7406 | 0.8093 | 0.7734 | 194 | 0.6316 | 0.5581 | 0.5926 | 129 | 0.5514 | 0.6505 | 0.5969 | 206 | 0.7538 | 0.7951 | 0.7739 | 693 | 0.6913 | 0.6803 | 0.6858 | 563 | 0.6928 | 0.7142 | 0.7033 | 0.9355 |

| 0.1665 | 4.0 | 85412 | 0.2193 | 0.7917 | 0.8261 | 0.8085 | 138 | 0.7069 | 0.7225 | 0.7146 | 227 | 0.5867 | 0.6718 | 0.6263 | 131 | 0.7710 | 0.7938 | 0.7823 | 1722 | 0.6962 | 0.7650 | 0.7290 | 719 | 0.7904 | 0.8302 | 0.8098 | 159 | 0.6221 | 0.5323 | 0.5737 | 201 | 0.5743 | 0.6641 | 0.6159 | 128 | 0.5966 | 0.7172 | 0.6514 | 198 | 0.7914 | 0.7692 | 0.7801 | 143 | 0.6395 | 0.7103 | 0.6730 | 497 | 0.6422 | 0.6121 | 0.6268 | 214 | 0.8338 | 0.9059 | 0.8683 | 1689 | 0.6711 | 0.7183 | 0.6939 | 142 | 0.7635 | 0.775 | 0.7692 | 200 | 0.7669 | 0.7608 | 0.7638 | 372 | 0.6872 | 0.7936 | 0.7366 | 407 | 0.7100 | 0.5982 | 0.6493 | 667 | 0.7181 | 0.7277 | 0.7228 | 224 | 0.4765 | 0.5553 | 0.5129 | 859 | 0.6225 | 0.5866 | 0.6040 | 433 | 0.6078 | 0.5191 | 0.5599 | 603 | 0.7222 | 0.5039 | 0.5936 | 129 | 0.6065 | 0.7737 | 0.6799 | 243 | 0.4783 | 0.5238 | 0.5 | 189 | 0.7313 | 0.7980 | 0.7632 | 307 | 0.8401 | 0.8597 | 0.8498 | 385 | 0.6058 | 0.6495 | 0.6269 | 194 | 0.7512 | 0.7938 | 0.7719 | 194 | 0.6983 | 0.6279 | 0.6612 | 129 | 0.5804 | 0.7184 | 0.6421 | 206 | 0.7571 | 0.8052 | 0.7804 | 693 | 0.6916 | 0.6892 | 0.6904 | 563 | 0.7012 | 0.7309 | 0.7158 | 0.9375 |

| 0.1314 | 5.0 | 106765 | 0.2272 | 0.7707 | 0.8768 | 0.8203 | 138 | 0.7137 | 0.7577 | 0.7350 | 227 | 0.6058 | 0.6336 | 0.6194 | 131 | 0.7229 | 0.8513 | 0.7819 | 1722 | 0.7361 | 0.7761 | 0.7556 | 719 | 0.6839 | 0.8302 | 0.7500 | 159 | 0.5845 | 0.6020 | 0.5931 | 201 | 0.6148 | 0.6484 | 0.6312 | 128 | 0.6121 | 0.7172 | 0.6605 | 198 | 0.6970 | 0.8042 | 0.7468 | 143 | 0.6438 | 0.6982 | 0.6699 | 497 | 0.6197 | 0.6776 | 0.6473 | 214 | 0.8390 | 0.8887 | 0.8631 | 1689 | 0.7333 | 0.6972 | 0.7148 | 142 | 0.7443 | 0.815 | 0.7780 | 200 | 0.7217 | 0.7876 | 0.7532 | 372 | 0.7113 | 0.7568 | 0.7333 | 407 | 0.6682 | 0.6522 | 0.6601 | 667 | 0.7136 | 0.7009 | 0.7072 | 224 | 0.5351 | 0.4796 | 0.5058 | 859 | 0.5930 | 0.6259 | 0.6090 | 433 | 0.6112 | 0.5240 | 0.5643 | 603 | 0.7767 | 0.6202 | 0.6897 | 129 | 0.6254 | 0.7284 | 0.6730 | 243 | 0.4815 | 0.4815 | 0.4815 | 189 | 0.7654 | 0.8078 | 0.7861 | 307 | 0.7611 | 0.8935 | 0.8220 | 385 | 0.6667 | 0.6082 | 0.6361 | 194 | 0.7828 | 0.7990 | 0.7908 | 194 | 0.6692 | 0.6899 | 0.6794 | 129 | 0.5983 | 0.6942 | 0.6427 | 206 | 0.7584 | 0.8153 | 0.7858 | 693 | 0.6740 | 0.7052 | 0.6892 | 563 | 0.7006 | 0.7401 | 0.7198 | 0.9378 |

| 0.1224 | 6.0 | 128118 | 0.2275 | 0.8286 | 0.8406 | 0.8345 | 138 | 0.6898 | 0.7445 | 0.7161 | 227 | 0.6013 | 0.7252 | 0.6574 | 131 | 0.7574 | 0.8415 | 0.7972 | 1722 | 0.7400 | 0.7483 | 0.7441 | 719 | 0.8084 | 0.8491 | 0.8282 | 159 | 0.7055 | 0.5721 | 0.6319 | 201 | 0.6061 | 0.625 | 0.6154 | 128 | 0.7090 | 0.6768 | 0.6925 | 198 | 0.7868 | 0.7483 | 0.7670 | 143 | 0.6454 | 0.7545 | 0.6957 | 497 | 0.6287 | 0.6963 | 0.6608 | 214 | 0.8548 | 0.8851 | 0.8697 | 1689 | 0.7669 | 0.7183 | 0.7418 | 142 | 0.75 | 0.825 | 0.7857 | 200 | 0.7130 | 0.8280 | 0.7662 | 372 | 0.6848 | 0.8059 | 0.7404 | 407 | 0.7112 | 0.6462 | 0.6771 | 667 | 0.7879 | 0.6964 | 0.7393 | 224 | 0.5378 | 0.5378 | 0.5378 | 859 | 0.6554 | 0.5797 | 0.6152 | 433 | 0.5946 | 0.5887 | 0.5917 | 603 | 0.8131 | 0.6744 | 0.7373 | 129 | 0.6483 | 0.7737 | 0.7054 | 243 | 0.5537 | 0.5185 | 0.5355 | 189 | 0.7704 | 0.7980 | 0.784 | 307 | 0.8415 | 0.8961 | 0.8679 | 385 | 0.6566 | 0.6701 | 0.6633 | 194 | 0.7879 | 0.8041 | 0.7959 | 194 | 0.6159 | 0.7829 | 0.6894 | 129 | 0.5887 | 0.7087 | 0.6432 | 206 | 0.7864 | 0.8023 | 0.7943 | 693 | 0.7388 | 0.6732 | 0.7045 | 563 | 0.7221 | 0.7475 | 0.7346 | 0.9406 |

| 0.0964 | 7.0 | 149471 | 0.2456 | 0.7947 | 0.8696 | 0.8304 | 138 | 0.7107 | 0.7577 | 0.7335 | 227 | 0.6522 | 0.6870 | 0.6691 | 131 | 0.7780 | 0.8182 | 0.7976 | 1722 | 0.7546 | 0.7483 | 0.7514 | 719 | 0.7870 | 0.8365 | 0.8110 | 159 | 0.6020 | 0.6020 | 0.6020 | 201 | 0.58 | 0.6797 | 0.6259 | 128 | 0.6129 | 0.7677 | 0.6816 | 198 | 0.7468 | 0.8252 | 0.7841 | 143 | 0.6642 | 0.7284 | 0.6948 | 497 | 0.6840 | 0.6776 | 0.6808 | 214 | 0.8586 | 0.8810 | 0.8697 | 1689 | 0.7836 | 0.7394 | 0.7609 | 142 | 0.7082 | 0.825 | 0.7621 | 200 | 0.7731 | 0.7876 | 0.7803 | 372 | 0.7606 | 0.7494 | 0.7550 | 407 | 0.6726 | 0.6837 | 0.6781 | 667 | 0.7581 | 0.7277 | 0.7426 | 224 | 0.5176 | 0.5634 | 0.5396 | 859 | 0.6599 | 0.6005 | 0.6288 | 433 | 0.5938 | 0.5672 | 0.5802 | 603 | 0.8776 | 0.6667 | 0.7577 | 129 | 0.7198 | 0.7613 | 0.74 | 243 | 0.5078 | 0.5185 | 0.5131 | 189 | 0.7933 | 0.7752 | 0.7842 | 307 | 0.8033 | 0.8909 | 0.8448 | 385 | 0.6071 | 0.7010 | 0.6507 | 194 | 0.7429 | 0.8041 | 0.7723 | 194 | 0.7321 | 0.6357 | 0.6805 | 129 | 0.5775 | 0.7233 | 0.6422 | 206 | 0.7858 | 0.7994 | 0.7926 | 693 | 0.6678 | 0.7282 | 0.6967 | 563 | 0.7199 | 0.7475 | 0.7334 | 0.9403 |

| 0.0838 | 8.0 | 170824 | 0.2562 | 0.7722 | 0.8841 | 0.8243 | 138 | 0.6929 | 0.7753 | 0.7318 | 227 | 0.6483 | 0.7176 | 0.6812 | 131 | 0.7859 | 0.8101 | 0.7978 | 1722 | 0.7419 | 0.7316 | 0.7367 | 719 | 0.7389 | 0.8365 | 0.7847 | 159 | 0.5797 | 0.5970 | 0.5882 | 201 | 0.5878 | 0.6797 | 0.6304 | 128 | 0.6574 | 0.7172 | 0.6860 | 198 | 0.7597 | 0.8182 | 0.7879 | 143 | 0.7108 | 0.7123 | 0.7116 | 497 | 0.6511 | 0.7150 | 0.6815 | 214 | 0.8791 | 0.8822 | 0.8806 | 1689 | 0.75 | 0.7606 | 0.7552 | 142 | 0.7594 | 0.805 | 0.7816 | 200 | 0.7842 | 0.8011 | 0.7926 | 372 | 0.7395 | 0.7813 | 0.7599 | 407 | 0.6965 | 0.6777 | 0.6869 | 667 | 0.7179 | 0.75 | 0.7336 | 224 | 0.5081 | 0.5809 | 0.5421 | 859 | 0.6327 | 0.6166 | 0.6246 | 433 | 0.6094 | 0.5821 | 0.5954 | 603 | 0.8776 | 0.6667 | 0.7577 | 129 | 0.7059 | 0.7407 | 0.7229 | 243 | 0.5444 | 0.5185 | 0.5312 | 189 | 0.7722 | 0.7948 | 0.7833 | 307 | 0.8067 | 0.8779 | 0.8408 | 385 | 0.6408 | 0.6804 | 0.6600 | 194 | 0.7546 | 0.8402 | 0.7951 | 194 | 0.6831 | 0.7519 | 0.7159 | 129 | 0.6255 | 0.7136 | 0.6667 | 206 | 0.7392 | 0.8427 | 0.7876 | 693 | 0.7289 | 0.7069 | 0.7178 | 563 | 0.7242 | 0.7514 | 0.7376 | 0.9414 |

| 0.0753 | 9.0 | 192177 | 0.2708 | 0.8026 | 0.8841 | 0.8414 | 138 | 0.7054 | 0.8018 | 0.7505 | 227 | 0.6277 | 0.6565 | 0.6418 | 131 | 0.7762 | 0.8380 | 0.8059 | 1722 | 0.7552 | 0.7552 | 0.7552 | 719 | 0.7701 | 0.8428 | 0.8048 | 159 | 0.6610 | 0.5821 | 0.6190 | 201 | 0.5915 | 0.6562 | 0.6222 | 128 | 0.6575 | 0.7273 | 0.6906 | 198 | 0.7887 | 0.7832 | 0.7860 | 143 | 0.7050 | 0.7163 | 0.7106 | 497 | 0.6270 | 0.7383 | 0.6781 | 214 | 0.8441 | 0.8881 | 0.8656 | 1689 | 0.7589 | 0.7535 | 0.7562 | 142 | 0.7125 | 0.855 | 0.7773 | 200 | 0.755 | 0.8118 | 0.7824 | 372 | 0.7512 | 0.8010 | 0.7753 | 407 | 0.6788 | 0.6972 | 0.6879 | 667 | 0.7830 | 0.7411 | 0.7615 | 224 | 0.5155 | 0.5821 | 0.5467 | 859 | 0.6386 | 0.6490 | 0.6438 | 433 | 0.6629 | 0.5804 | 0.6189 | 603 | 0.8598 | 0.7132 | 0.7797 | 129 | 0.6667 | 0.7490 | 0.7054 | 243 | 0.4787 | 0.5344 | 0.505 | 189 | 0.7610 | 0.7883 | 0.7744 | 307 | 0.8285 | 0.8909 | 0.8586 | 385 | 0.7027 | 0.6701 | 0.6860 | 194 | 0.7778 | 0.8299 | 0.8030 | 194 | 0.6923 | 0.7674 | 0.7279 | 129 | 0.6396 | 0.6893 | 0.6636 | 206 | 0.7879 | 0.8095 | 0.7986 | 693 | 0.7110 | 0.7123 | 0.7116 | 563 | 0.7258 | 0.7593 | 0.7422 | 0.9424 |