| modelId (string, length 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, length 51 to 438k) |

|---|---|---|---|---|---|---|

bert-base-multilingual-cased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"multilingual",

"af",

"sq",

"ar",

"an",

"hy",

"ast",

"az",

"ba",

"eu",

"bar",

"be",

"bn",

"inc",

"bs",

"br",

"bg",

"my",

"ca",

"ceb",

"ce",

"zh",

"cv",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fi",

"fr",

"gl",

"ka",

"de",

"el",

"gu",

"ht",

"he",

"hi",

"hu",

"is",

"io",

"id",

"ga",

"it",

"ja",

"jv",

"kn",

"kk",

"ky",

"ko",

"la",

"lv",

"lt",

"roa",

"nds",

"lm",

"mk",

"mg",

"ms",

"ml",

"mr",

"mn",

"min",

"ne",

"new",

"nb",

"nn",

"oc",

"fa",

"pms",

"pl",

"pt",

"pa",

"ro",

"ru",

"sco",

"sr",

"scn",

"sk",

"sl",

"aze",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"th",

"ta",

"tt",

"te",

"tr",

"uk",

"ud",

"uz",

"vi",

"vo",

"war",

"cy",

"fry",

"pnb",

"yo",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4,749,504 | 2022-12-10T11:20:30Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 259.18 +/- 20.19

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```
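The `mean_reward` value in the metadata is written as the mean +/- the standard deviation of per-episode returns. Below is a minimal sketch of producing such a string from a list of evaluation returns. It assumes the population standard deviation (ddof=0), which is what `numpy.std` computes by default and what Stable-Baselines3's `evaluate_policy` reports. The helper name and the episode returns are hypothetical illustrations, not this agent's results:

```python
import statistics


def format_mean_reward(episode_returns):
    """Return 'mean +/- std' with two decimals, using the population std (ddof=0)."""
    mean = statistics.fmean(episode_returns)
    std = statistics.pstdev(episode_returns)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical returns from four evaluation episodes (illustrative only)
returns = [250.0, 270.0, 240.0, 280.0]
print(format_mean_reward(returns))
```

With more evaluation episodes the standard deviation becomes a more reliable estimate of how consistent the agent is, which is why it is reported alongside the mean.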

|

bert-base-uncased | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"exbert",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 59,663,489 | 2022-12-10T11:36:29Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on training your first agent with ML-Agents and publishing it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: HeineKayn/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

bert-large-cased-whole-word-masking-finetuned-squad | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"bert",

"question-answering",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8,214 | 2022-12-10T11:42:22Z | ---

license: mit

---

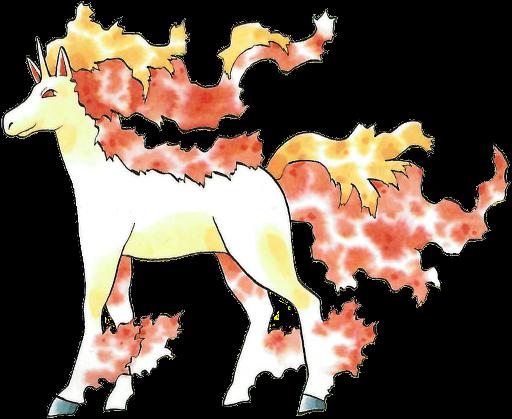

### ihylc on Stable Diffusion

This is the `<ihylc>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

bert-large-cased-whole-word-masking | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,316 | 2022-12-10T11:43:08Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 253.24 +/- 22.99

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```

|

bert-large-uncased-whole-word-masking-finetuned-squad | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"question-answering",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 480,510 | null | Access to model artinzareie/RikkaDiffusion is restricted and you are not in the authorized list. Visit https://huggingface.co/artinzareie/RikkaDiffusion to ask for access. |

camembert-base | [

"pytorch",

"tf",

"safetensors",

"camembert",

"fill-mask",

"fr",

"dataset:oscar",

"arxiv:1911.03894",

"transformers",

"license:mit",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"CamembertForMaskedLM"

],

"model_type": "camembert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1,440,898 | 2022-12-10T12:06:59Z | ---

license: bigscience-bloom-rail-1.0

tags:

- generated_from_trainer

model-index:

- name: bloom-560m-finetuned-the-stack-prolog

results: []

widget:

- text: '% Define a fact stating that "hello" is a greeting

saludo("hello").

% Define a rule stating that "world" is an object

objeto("world").

% Define a rule that combines the greeting and the object to produce the output "Hola mundo"

hola_mundo :-'

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bloom-560m-finetuned-the-stack-prolog

This model is a fine-tuned version of [bigscience/bloom-560m](https://huggingface.co/bigscience/bloom-560m) on an unspecified dataset (the Trainer recorded it as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.2433

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP
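The `total_train_batch_size` above is the per-device batch size multiplied by the number of gradient accumulation steps, and by the number of devices. A single device is assumed here. Below is a small sketch of that arithmetic; `effective_batch_size` is a hypothetical helper name:

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Values from this card: train_batch_size=1, gradient_accumulation_steps=4
print(effective_batch_size(1, 4))  # 4, matching total_train_batch_size
```

Gradient accumulation lets a run with a per-device batch of 1 update the weights as if it had used batches of 4, at the cost of four forward/backward passes per optimizer step.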

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.2334 | 0.2 | 200 | 0.9993 |

| 0.9174 | 0.4 | 400 | 0.7460 |

| 0.7892 | 0.6 | 600 | 0.6046 |

| 0.6805 | 0.8 | 800 | 0.4964 |

| 0.5898 | 0.99 | 1000 | 0.4283 |

| 0.411 | 1.19 | 1200 | 0.3721 |

| 0.3705 | 1.39 | 1400 | 0.3182 |

| 0.3516 | 1.59 | 1600 | 0.2795 |

| 0.3298 | 1.79 | 1800 | 0.2528 |

| 0.2721 | 1.99 | 2000 | 0.2433 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.5.1

- Tokenizers 0.13.0

|

ctrl | [

"pytorch",

"tf",

"ctrl",

"en",

"arxiv:1909.05858",

"arxiv:1910.09700",

"transformers",

"license:bsd-3-clause",

"has_space"

]

| null | {

"architectures": null,

"model_type": "ctrl",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 17,007 | 2022-12-10T12:13:22Z |

---

license: cc-by-4.0

metrics:

- bleu4

- meteor

- rouge-l

- bertscore

- moverscore

language: en

datasets:

- lmqg/qg_squad

pipeline_tag: text2text-generation

tags:

- answer extraction

widget:

- text: "<hl> Beyonce further expanded her acting career, starring as blues singer Etta James in the 2008 musical biopic, Cadillac Records. <hl> Her performance in the film received praise from critics, and she garnered several nominations for her portrayal of James, including a Satellite Award nomination for Best Supporting Actress, and a NAACP Image Award nomination for Outstanding Supporting Actress."

example_title: "Answer Extraction Example 1"

- text: "Beyonce further expanded her acting career, starring as blues singer Etta James in the 2008 musical biopic, Cadillac Records. <hl> Her performance in the film received praise from critics, and she garnered several nominations for her portrayal of James, including a Satellite Award nomination for Best Supporting Actress, and a NAACP Image Award nomination for Outstanding Supporting Actress. <hl>"

example_title: "Answer Extraction Example 2"

model-index:

- name: lmqg/bart-large-squad-ae

results:

- task:

name: Text2text Generation

type: text2text-generation

dataset:

name: lmqg/qg_squad

type: default

args: default

metrics:

- name: BLEU4 (Answer Extraction)

type: bleu4_answer_extraction

value: 58.61

- name: ROUGE-L (Answer Extraction)

type: rouge_l_answer_extraction

value: 68.96

- name: METEOR (Answer Extraction)

type: meteor_answer_extraction

value: 41.89

- name: BERTScore (Answer Extraction)

type: bertscore_answer_extraction

value: 91.93

- name: MoverScore (Answer Extraction)

type: moverscore_answer_extraction

value: 82.41

- name: AnswerF1Score (Answer Extraction)

type: answer_f1_score__answer_extraction

value: 69.67

- name: AnswerExactMatch (Answer Extraction)

type: answer_exact_match_answer_extraction

value: 58.95

---

# Model Card of `lmqg/bart-large-squad-ae`

This model is a fine-tuned version of [facebook/bart-large](https://huggingface.co/facebook/bart-large) for answer extraction on [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) (dataset_name: default), trained via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [facebook/bart-large](https://huggingface.co/facebook/bart-large)

- **Language:** en

- **Training data:** [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="en", model="lmqg/bart-large-squad-ae")

# model prediction

answers = model.generate_a("William Turner was an English painter who specialised in watercolour landscapes")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/bart-large-squad-ae")

output = pipe("<hl> Beyonce further expanded her acting career, starring as blues singer Etta James in the 2008 musical biopic, Cadillac Records. <hl> Her performance in the film received praise from critics, and she garnered several nominations for her portrayal of James, including a Satellite Award nomination for Best Supporting Actress, and a NAACP Image Award nomination for Outstanding Supporting Actress.")

```
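The `transformers` input above marks the sentence to extract answers from with a pair of `<hl>` tokens inside the full paragraph. This corresponds to the `paragraph_sentence` input type listed under the training hyperparameters. Below is a minimal sketch of building such an input. It assumes the target sentence appears verbatim in the paragraph, and `highlight_sentence` is a hypothetical helper, not part of `lmqg` or `transformers`:

```python
def highlight_sentence(paragraph: str, sentence: str) -> str:
    """Wrap the first occurrence of `sentence` in `paragraph` with <hl> tokens."""
    if sentence not in paragraph:
        raise ValueError("sentence must appear verbatim in the paragraph")
    # Same layout as the widget examples: "<hl> sentence <hl>" inline in the paragraph
    return paragraph.replace(sentence, f"<hl> {sentence} <hl>", 1)


paragraph = (
    "Beyonce further expanded her acting career, starring as blues singer Etta James "
    "in the 2008 musical biopic, Cadillac Records. Her performance in the film "
    "received praise from critics."
)
target = "Her performance in the film received praise from critics."
print(highlight_sentence(paragraph, target))
```

The resulting string can then be passed to the `pipe(...)` call shown above in place of the hand-written input.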

## Evaluation

- ***Metric (Answer Extraction)***: [raw metric file](https://huggingface.co/lmqg/bart-large-squad-ae/raw/main/eval/metric.first.answer.paragraph_sentence.answer.lmqg_qg_squad.default.json)

| | Score | Type | Dataset |

|:-----------------|--------:|:--------|:---------------------------------------------------------------|

| AnswerExactMatch | 58.95 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| AnswerF1Score | 69.67 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| BERTScore | 91.93 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_1 | 65.82 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_2 | 63.21 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_3 | 60.73 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| Bleu_4 | 58.61 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| METEOR | 41.89 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| MoverScore | 82.41 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |

| ROUGE_L | 68.96 | default | [lmqg/qg_squad](https://huggingface.co/datasets/lmqg/qg_squad) |
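The `AnswerExactMatch` and `AnswerF1Score` rows compare predicted answer spans with reference answers. Below is a sketch of the widely used SQuAD v1.1-style versions of these two metrics. The text is first normalized by lowercasing and removing punctuation and English articles. Exact match then compares the whole normalized strings, and F1 compares overlapping tokens. Whether `lmqg` normalizes text in exactly this way is an assumption, and the example strings are illustrative:

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize_answer(text):
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    return float(normalize_answer(prediction) == normalize_answer(reference))


def f1_score(prediction, reference):
    """Token-overlap F1 between normalized prediction and reference."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    common = Counter(pred_tokens) & Counter(ref_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Cadillac Records", "Cadillac Records"))
print(f1_score("Etta James", "blues singer Etta James"))
```

F1 gives partial credit when the predicted span overlaps the reference, which is why `AnswerF1Score` is higher than `AnswerExactMatch` in the table above.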

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_squad

- dataset_name: default

- input_types: ['paragraph_sentence']

- output_types: ['answer']

- prefix_types: None

- model: facebook/bart-large

- max_length: 512

- max_length_output: 32

- epoch: 5

- batch: 32

- lr: 5e-05

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 2

- label_smoothing: 0.15

The full configuration can be found in the [fine-tuning config file](https://huggingface.co/lmqg/bart-large-squad-ae/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

distilbert-base-german-cased | [

"pytorch",

"safetensors",

"distilbert",

"fill-mask",

"de",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"DistilBertForMaskedLM"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 43,667 | 2022-12-10T12:21:18Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### messy_sketch_art_style DreamBooth model trained by apurik-parv with Shivam Shrirao's DreamBooth implementation

Instance prompt: **meartsty**

As the name implies, the model was trained for 50,000 steps on sketch/doodle images in a messy art style.

Simple prompts reproduce the style faithfully; complicated or contradictory prompts add noise to the image.

Feel free to experiment with it.

|

distilbert-base-uncased-finetuned-sst-2-english | [

"pytorch",

"tf",

"rust",

"safetensors",

"distilbert",

"text-classification",

"en",

"dataset:sst2",

"dataset:glue",

"arxiv:1910.01108",

"doi:10.57967/hf/0181",

"transformers",

"license:apache-2.0",

"model-index",

"has_space"

]

| text-classification | {

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3,060,704 | 2022-12-10T12:43:19Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 512-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```
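For semantic search, embeddings like those printed above are typically compared with cosine similarity, and candidates are ranked by score. Below is a dependency-free sketch of that ranking step. The 3-dimensional vectors are toy stand-ins for the model's 512-dimensional embeddings, and `rank_by_cosine` is a hypothetical helper. sentence-transformers itself provides `util.cos_sim` for the similarity computation:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_cosine(query, corpus):
    """Return (index, score) pairs for the corpus vectors, most similar first."""
    scores = [(i, cosine_similarity(query, vec)) for i, vec in enumerate(corpus)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


query = [1.0, 0.0, 1.0]
corpus = [[0.0, 1.0, 0.0], [1.0, 0.1, 0.9], [1.0, 1.0, 1.0]]
for index, score in rank_by_cosine(query, corpus):
    print(index, round(score, 3))
```

Because cosine similarity ignores vector length, it compares the direction of the embeddings only, which is the usual choice for mean-pooled sentence vectors.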

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model as follows: pass your input through the transformer model, then apply the right pooling operation (here, mean pooling) on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # first element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
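To see why the attention mask matters in `mean_pooling`, here is the same computation in plain Python on a toy input. The input is one sentence with two real tokens and one padding token, and 2-dimensional vectors stand in for the model's 512 dimensions. The padded position is excluded from both the sum and the count, so it cannot skew the sentence embedding. `masked_mean` is a hypothetical helper written for illustration:

```python
def masked_mean(token_embeddings, attention_mask):
    """Average the token vectors where attention_mask == 1, ignoring padding."""
    dim = len(token_embeddings[0])
    totals = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            totals = [t + v for t, v in zip(totals, vector)]
            count += 1
    count = max(count, 1e-9)  # mirrors the torch.clamp(min=1e-9) guard in mean_pooling
    return [t / count for t in totals]


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is a padding position
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```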

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 187841 with parameters:

```

{'batch_size': 2, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`__main__.BregmanRankingLoss`

Parameters of the fit()-Method:

```

{

"epochs": 4,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 3e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 5000,

"warmup_steps": 75137,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 4096, 'do_lower_case': False}) with Transformer model: LongformerModel

(1): Pooling({'word_embedding_dimension': 512, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

distilroberta-base | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"roberta",

"fill-mask",

"en",

"dataset:openwebtext",

"arxiv:1910.01108",

"arxiv:1910.09700",

"transformers",

"exbert",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3,342,240 | 2022-12-10T12:47:10Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 292.51 +/- 14.48

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```

|

gpt2-medium | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"gpt2",

"text-generation",

"en",

"arxiv:1910.09700",

"transformers",

"license:mit",

"has_space"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 759,601 | 2022-12-10T13:09:48Z | ---

title: SpeakToChatGPT

emoji: 📊

colorFrom: blue

colorTo: blue

sdk: gradio

sdk_version: 3.12.0

app_file: app.py

pinned: false

duplicated_from: yizhangliu/chatGPT

---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

Pinwheel/wav2vec2-large-xls-r-300m-hi-v3 | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-10T22:10:52Z | ---

language:

- pt

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Tiny Portuguese - Prince Canuma

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Tiny Portuguese - Prince Canuma

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
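With `lr_scheduler_type: linear` and 500 warmup steps, the learning rate rises linearly from 0 to 1e-05 over the first 500 steps, then decays linearly to 0 at step 5000. Below is a sketch of that shape, written to follow the formula of `transformers`' `get_linear_schedule_with_warmup`. It is not the Trainer's own code, and `linear_schedule_lr` is a hypothetical name:

```python
def linear_schedule_lr(step, base_lr=1e-5, warmup_steps=500, total_steps=5000):
    """Learning rate at `step`: linear warmup from 0, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, remaining)


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_schedule_lr(step))
```

Setting `warmup_steps=0` gives a plain linear decay from the base learning rate.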

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

Pinwheel/wav2vec2-large-xls-r-300m-tr-colab | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"transformers"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | 2022-12-10T22:16:53Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3-nice

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

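```python
import gym

# load_from_hub is a helper function defined in the Hugging Face Deep RL Course notebook
# (it downloads and unpickles the model dict from the Hub); it is not part of gym.
model = load_from_hub(repo_id="osanseviero/q-Taxi-v3-nice", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc.)
env = gym.make(model["env_id"])
```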
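For context on what the downloaded Q-table is used for, here is a minimal NumPy sketch of the tabular Q-learning update and the greedy policy used at evaluation time. The function names, learning rate, and discount factor are illustrative assumptions (the card does not list the training hyperparameters); the table shape assumes Taxi-v3's 500 discrete states and 6 discrete actions.

```python
import numpy as np


def q_learning_update(qtable, state, action, reward, next_state,
                      learning_rate=0.7, gamma=0.95):
    """Apply one tabular Q-learning (off-policy TD) update in place."""
    # TD target uses the best action value in the next state (off-policy max).
    td_target = reward + gamma * np.max(qtable[next_state])
    qtable[state, action] += learning_rate * (td_target - qtable[state, action])
    return qtable


def greedy_policy(qtable, state):
    """Pick the action with the highest Q-value (ties go to the lowest index)."""
    return int(np.argmax(qtable[state]))


# Taxi-v3 has 500 discrete states and 6 discrete actions.
qtable = np.zeros((500, 6))
q_learning_update(qtable, state=0, action=1, reward=10.0, next_state=1, learning_rate=0.5)
print(qtable[0, 1])              # 5.0
print(greedy_policy(qtable, 0))  # 1
```

At evaluation time, `greedy_policy` would be applied to the Q-table stored in the downloaded `model` dict; the card does not show which key holds it, so inspect `model.keys()` first.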

|

AbidHasan95/movieHunt2 | [

"pytorch",

"distilbert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"DistilBertForTokenClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | 2022-12-10T23:03:57Z | ---

tags:

- generated_from_trainer

datasets:

- samsum

model-index:

- name: pegasus-samsum-5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-samsum-5

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on the samsum dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3386

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 5

- mixed_precision_training: Native AMP
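With `train_batch_size: 1` and `gradient_accumulation_steps: 16`, each optimizer step sees an effective batch of 1 × 16 = 16 examples, which is the `total_train_batch_size` above. The NumPy sketch below checks the underlying idea on a toy one-parameter regression: averaging 16 micro-batch gradients (each scaled by 1/16) gives the same gradient as a single batch of 16. The toy data and function names are illustrative and not part of the training script.

```python
import numpy as np


def mse_grad(w, x, y):
    # Gradient of mean((w * x - y) ** 2) with respect to the scalar weight w.
    return np.mean(2 * (w * x - y) * x)


def accumulated_grad(w, x, y, micro_batch_size, accum_steps):
    # Sum the micro-batch gradients, each scaled by 1 / accum_steps; in a training
    # loop this corresponds to dividing each micro-batch loss by accum_steps.
    total = 0.0
    for i in range(accum_steps):
        batch = slice(i * micro_batch_size, (i + 1) * micro_batch_size)
        total += mse_grad(w, x[batch], y[batch]) / accum_steps
    return total


rng = np.random.default_rng(42)
x = rng.normal(size=16)
y = 3.0 * x + rng.normal(scale=0.1, size=16)

full = mse_grad(0.5, x, y)  # one batch of 16
accum = accumulated_grad(0.5, x, y, micro_batch_size=1, accum_steps=16)  # 16 batches of 1
print(np.isclose(full, accum))  # True
```

The equivalence holds exactly only when all micro-batches have the same size, as they do here.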

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.6957 | 0.54 | 500 | 1.4857 |

| 1.4033 | 1.09 | 1000 | 1.4117 |

| 1.497 | 1.63 | 1500 | 1.3742 |

| 1.4132 | 2.17 | 2000 | 1.3582 |

| 1.3858 | 2.72 | 2500 | 1.3482 |

| 1.2908 | 3.26 | 3000 | 1.3477 |

| 1.2357 | 3.8 | 3500 | 1.3386 |

| 1.2499 | 4.35 | 4000 | 1.3419 |

| 1.2349 | 4.89 | 4500 | 1.3386 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Adinda/Adinda | [

"license:artistic-2.0"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-11T06:48:37Z | ---

language:

- en

thumbnail: "https://s3.amazonaws.com/moonup/production/uploads/1670742434498-633a20a88f27255b6b56290b.png"

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

# Chinese Digital Art Diffusion

**Trigger Words: CNDigitalArt Style**

This is a fine-tuned Stable Diffusion model trained on the **Chinese Digital Art** style commonly used on Chinese interactive reading (visual novel) platforms such as **Orange Light** [66rpg.com](https://66rpg.com) or the **NetEase Interactive Reading Platform** [avg.163.com](https://avg.163.com/).

_If you don't know what that is, don't worry: it's one of those really big things in China that most Westerners have never heard of._

Use the tokens **_CNDigitalArt Style_** in your prompts to test and experiment with it yourself.

**EXAMPLES:**

_These results were generated with the 2000-step model [**CNDigitalArt_2000.ckpt**](https://huggingface.co/CultivatorX/Chinese-Digital-Art/blob/main/CNDigitalArt_2000.ckpt)._

I ran 20 batches with random seeds (-1) for each prompt. Most of the results aren't that good, but there are some really good ones.

Prompt: **a portrait of Megan Fox in CNDigitalArt Style**

Negative prompt: _lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name, two faces, two heads_

Steps: 20, Sampler: Euler, CFG scale: 7, Seed: 593563256, Face restoration: GFPGAN, Size: 512x512, Model hash: 2258c119
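The settings lines in these examples follow a `Key: value, Key: value` layout (it looks like the AUTOMATIC1111 web UI's format, though the card does not say so). A small hypothetical helper such as the one below can turn such a line into a dict, for example to reuse the seed and CFG scale when trying to reproduce an image. It is a sketch that assumes no value ever contains a comma.

```python
def _coerce(value: str):
    """Convert '20' -> 20 and '7.5' -> 7.5; leave other strings unchanged."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def parse_generation_params(line: str) -> dict:
    """Split a 'Key: value, Key: value' settings line into a dict."""
    params = {}
    for part in line.split(","):
        key, sep, value = part.partition(":")
        if sep:  # skip fragments without a 'Key: value' pair
            params[key.strip()] = _coerce(value.strip())
    return params


settings = ("Steps: 20, Sampler: Euler, CFG scale: 7, Seed: 593563256, "
            "Face restoration: GFPGAN, Size: 512x512, Model hash: 2258c119")
params = parse_generation_params(settings)
print(params["Seed"], params["CFG scale"], params["Size"])  # 593563256 7 512x512
```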

Prompt: **a portrait of Scarlett Johansson in CNDigitalArt Style**

Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name, two faces, two heads

Steps: 20, Sampler: Euler, CFG scale: 7, Seed: 4272335413, Face restoration: GFPGAN, Size: 512x512, Model hash: 2258c119


Prompt: **a portrait of Emma Watson in CNDigitalArt Style**

Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name, two faces, two heads

Steps: 20, Sampler: Euler, CFG scale: 7, Seed: 3813059825, Face restoration: GFPGAN, Size: 512x512, Model hash: 2258c119

Prompt: **a portrait of Zendaya in CNDigitalArt Style**

Negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name, two faces, two heads

Steps: 20, Sampler: Euler, CFG scale: 7, Seed: 962052606, Face restoration: GFPGAN, Size: 512x512, Model hash: 2258c119 |

Aimendo/Triage | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Carpet Cleaning Plano TX

https://carpetcleaningplanotx.com/

(469) 444-1903

At Rug Cleaning Plano in TX, we also have a truck-mounted carpet cleaning system. These mobile vehicles carry a powerhouse of equipment. They always have it on board, so they can finish any job properly. Whether it is a small home, a large house, or a huge industrial complex, no project is ever too large or too difficult. |

Aimendo/autonlp-triage-35248482 | [

"pytorch",

"bert",

"text-classification",

"en",

"dataset:Aimendo/autonlp-data-triage",

"transformers",

"autonlp",

"co2_eq_emissions"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

license: other

---

Carpet Stain Removal Plano TX

https://carpetcleaningplanotx.com/carpet-stain-removal.html

(469) 444-1903

Carpet Cleaning Plano in Texas is the company of choice for most customers when it comes to stain removal. We have the best-trained staff and professional technology. We will get rid of even the worst stains, whether they are on your upholstery, fabrics, curtains, or carpets. Try us out today, and you'll see why most people prefer us to everyone else. |

Akashpb13/Central_kurdish_xlsr | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"ckb",

"dataset:mozilla-foundation/common_voice_8_0",

"transformers",

"mozilla-foundation/common_voice_8_0",

"generated_from_trainer",

"robust-speech-event",

"model_for_talk",

"hf-asr-leaderboard",

"license:apache-2.0",

"model-index"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 10 | null | ---

license: other

---

Upholstery Cleaning Fort Worth TX

https://txfortworthcarpetcleaning.com/upholstery-cleaning.html

(817) 523-1237

When you sit on your upholstery, you inhale the allergens, dirt, and dust trapped in its fibers. Therefore, if you want to ensure the safety of your upholstery, especially if you have children or pets, you need to hire upholstery cleaning experts in Fort Worth, Texas. We have the best upholstery cleaners, who will come to your house and do an excellent job of cleaning it. Understanding the various fibers of your furniture is important to our technicians because it helps them choose effective and safe cleaning methods. When you hire us, we promise to give you a lot of attention and care, and we won't start cleaning your upholstery until we make sure the products we use are safe for the kind of fabric it is made of. |

Akashpb13/xlsr_maltese_wav2vec2 | [

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"mt",

"dataset:common_voice",

"transformers",

"audio",

"speech",

"xlsr-fine-tuning-week",

"license:apache-2.0",

"model-index"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: other

---

Rug Cleaning Mesquite TX

http://mesquitecarpetcleaningtx.com/rug-cleaning.html

(469) 213-8132

Carpet and area rug manufacturers recommend using the free hot water extraction system from Our Rug Cleaning. Carpet Cleaning Mesquite TX can also clean some area rugs at a lower temperature, depending on their fibers; these rugs need to be cleaned with cool-water routines. Using a highly controlled cleaning process that leaves no residue, we remove all dirt, sand, grit, and grime from your area rugs. |

Akira-Yana/distilbert-base-uncased-finetuned-cola | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-11T07:58:17Z | ---

language:

- en

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

# Anything V3

Welcome to Anything V3 - a latent diffusion model for weebs. This model is intended to produce high-quality, highly detailed anime style with just a few prompts. Like other anime-style Stable Diffusion models, it also supports danbooru tags to generate images.

e.g. **_1girl, white hair, golden eyes, beautiful eyes, detail, flower meadow, cumulonimbus clouds, lighting, detailed sky, garden_**

## Gradio

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run Anything-V3.0:

[Open in Spaces](https://huggingface.co/spaces/akhaliq/anything-v3.0)

## 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information, please have a look at the [Stable Diffusion documentation](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or FLAX/JAX.

```python

from diffusers import StableDiffusionPipeline

import torch

model_id = "Linaqruf/anything-v3.0"

branch_name = "diffusers"

pipe = StableDiffusionPipeline.from_pretrained(model_id, revision=branch_name, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "pikachu"

image = pipe(prompt).images[0]

image.save("./pikachu.png")

```

## Examples

Below are some examples of images generated using this model:

**Anime Girl:**

```

1girl, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden

Steps: 50, Sampler: DDIM, CFG scale: 12

```

**Anime Boy:**

```

1boy, medium hair, blonde hair, blue eyes, bishounen, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden

Steps: 50, Sampler: DDIM, CFG scale: 12

```

**Scenery:**

```

scenery, shibuya tokyo, post-apocalypse, ruins, rust, sky, skyscraper, abandoned, blue sky, broken window, building, cloud, crane machine, outdoors, overgrown, pillar, sunset

Steps: 50, Sampler: DDIM, CFG scale: 12

```
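The `CFG scale` in these examples is the classifier-free guidance weight. At each denoising step the pipeline predicts noise both with and without the prompt, then extrapolates toward the prompt-conditioned prediction. The minimal NumPy sketch below shows that combination step. The function name and toy arrays are illustrative; in diffusers the weight is the `guidance_scale` argument of the pipeline call.

```python
import numpy as np


def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Blend unconditional and prompt-conditioned noise predictions."""
    # scale 0 -> ignore the prompt, scale 1 -> plain conditional prediction,
    # scale > 1 -> push further in the direction the prompt suggests.
    return noise_uncond + guidance_scale * (noise_cond - noise_uncond)


uncond = np.zeros(4)      # toy "unconditional" noise prediction
cond = np.full(4, 0.1)    # toy "prompt-conditioned" noise prediction
guided = classifier_free_guidance(uncond, cond, 12.0)
print(guided)
```

With the examples' `CFG scale: 12` and 50 steps, the diffusers call would look like `pipe(prompt, num_inference_steps=50, guidance_scale=12).images[0]`. Approximating the DDIM sampler would additionally require swapping the scheduler, e.g. `pipe.scheduler = DDIMScheduler.from_config(pipe.scheduler.config)`.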

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M with all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) |

Akiva/Joke | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Upholstery Cleaning Mesquite TX

http://mesquitecarpetcleaningtx.com/upholstery-cleaning.html

(469) 213-8132

Depending on the fabric, we will either dry clean your upholstery or steam clean it. To bring your upholstery back to life, we use a highly specialized upholstery cleaning tool, a protective 2-inch cover process, and a buildup-free cleaning solution. |

AkshatSurolia/DeiT-FaceMask-Finetuned | [

"pytorch",

"deit",

"image-classification",

"dataset:Face-Mask18K",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| image-classification | {

"architectures": [

"DeiTForImageClassification"

],

"model_type": "deit",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 46 | 2022-12-11T08:12:22Z | ---

license: other

---

Green Carpet Cleaning Garland

http://garlandcarpetcleaner.com/

(972) 256-8544

One of the methods we use for carpet cleaning is our "steam cleaning service", which relies on using minimal hot water and more steam. The concentrated steam penetrates deep into spots and stains to dissolve all of them, even the toughest ones, and removes all toxins from your rug. Then our effective green products go to work, clearing away all of these residues and leaving your carpet shiny and bright. Finally, we use our high-quality drying machines, so your rug will be completely dry in no time. We have specialized carpet steam cleaners who know how to work with great skill while protecting your rug from any damage. |

AkshayDev/BERT_Fine_Tuning | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Richardson TX Carpet Cleaning

https://carpetcleaning-richardson.com/

(972) 454-9815

Pets are wonderful, and they are usually fun, which is why many of us keep them. However, they sometimes make a mess in the house, right on the expensive rug or carpet. A specialist from Richardson Texas Pet Stain Cleaning recommends having the stain removed right away: improper or inadequate pet stain removal can set the color permanently, further staining can damage your carpet completely, and repeated urination can cause an odor that never seems to go away. |

AkshaySg/GrammarCorrection | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Carpet Stain Removal Richardson TX

https://carpetcleaning-richardson.com/carpet-stain-removal.html

(972) 454-9815

One of the reasons our carpet stain cleaning is so popular with customers is that it is eco-friendly. Our products are safe for your home, pets, and children. We can quickly clean tough stains that you believe are permanent and cannot be removed from your carpet. You will quickly see the disappearance of what you thought was a stain that would never go away. |

AkshaySg/LanguageIdentification | [

"multilingual",

"dataset:VoxLingua107",

"LID",

"spoken language recognition",

"license:apache-2.0"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: convnext-tiny-224-finetuned-eurosat-att-auto

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9506172839506173

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# convnext-tiny-224-finetuned-eurosat-att-auto

This model is a fine-tuned version of [facebook/convnext-tiny-224](https://huggingface.co/facebook/convnext-tiny-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5076

- Accuracy: 0.9506

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.5583 | 0.97 | 23 | 1.6008 | 0.7160 |

| 1.2953 | 1.97 | 46 | 1.2957 | 0.7531 |

| 0.9488 | 2.97 | 69 | 1.0720 | 0.8148 |

| 0.7036 | 3.97 | 92 | 0.8965 | 0.8642 |

| 0.5446 | 4.97 | 115 | 0.7574 | 0.9383 |

| 0.4113 | 5.97 | 138 | 0.6522 | 0.9383 |

| 0.2259 | 6.97 | 161 | 0.5720 | 0.9383 |

| 0.1863 | 7.97 | 184 | 0.5076 | 0.9506 |

| 0.1443 | 8.97 | 207 | 0.4795 | 0.9383 |

| 0.1289 | 9.97 | 230 | 0.4685 | 0.9383 |
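The `Accuracy` column is plain classification accuracy. The sketch below shows a minimal `compute_metrics` function of the kind passed to the `Trainer`; this is an assumption, since the card does not include its evaluation code. The reported 0.9506172839506173 equals 77/81 exactly, which is consistent with, though not confirmed as, an 81-image evaluation split.

```python
import numpy as np


def compute_metrics(eval_pred):
    """Accuracy from a (logits, labels) pair, as produced by Trainer evaluation."""
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)  # most likely class per image
    return {"accuracy": float(np.mean(predictions == labels))}


# Toy check: 81 samples, of which 77 are predicted correctly.
labels = np.zeros(81, dtype=int)
logits = np.zeros((81, 2))
logits[:77, 0] = 1.0  # argmax = 0, matches the label
logits[77:, 1] = 1.0  # argmax = 1, wrong
result = compute_metrics((logits, labels))
print(result)
```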

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

AlanDev/DallEMiniButBetter | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Dryer Vent Cleaning Richardson TX

https://carpetcleaning-richardson.com/dryer-vent-cleaning.html

(972) 454-9815

Additionally, if your vents are clogged, we can help you prevent dryer fires. If your clothes get too hot in your dryer, or the dryer itself gets too hot, the hot air vents are blocked. By removing the accumulated lint from the vents, we can resolve this issue quickly. When customers need their dryers reconditioned or all of the built-up lint removed from their vents, our skilled team is there to help. |

AlanDev/test | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: other

---

Coppell Carpet Cleaning

https://coppellcarpetcleaning.com/

(972) 914-8246

Cover Green Cleaners uses the most advanced and effective methods to handle all of your home's cleaning. Our clients tell us how pleased they are that we only use materials and cleaning products that are safe for their children, pets, and other family members. They always appreciate that we go out of our way to make their homes completely safe. |

Aleksandar/distilbert-srb-ner | [

"pytorch",

"distilbert",

"token-classification",

"sr",

"dataset:wikiann",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"DistilBertForTokenClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | null | ---

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:

- name: convnext-tiny-224-finetuned-eurosat-vitconfig-test

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# convnext-tiny-224-finetuned-eurosat-vitconfig-test

This model is a fine-tuned version of [](https://huggingface.co/) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 2

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Aleksandar/electra-srb-oscar | [

"pytorch",

"electra",

"fill-mask",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"ElectraForMaskedLM"

],

"model_type": "electra",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | ---

language:

- zh

inference:

parameters:

top_p: 0.9

max_new_tokens: 128

num_return_sequences: 3

do_sample: true

repetition_penalty: 1.1

license: apache-2.0

tags:

- generate

- gpt2

widget:

- 北京是中国的

- 西湖的景色

---

# Wenzhong2.0-GPT2-110M-BertTokenizer-chinese

- Github: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)

- Docs: [Fengshenbang-Docs](https://fengshenbang-doc.readthedocs.io/)

## Brief Introduction

Focused on NLG tasks: a Chinese version of GPT2-Small. It is based on BertTokenizer and uses character-level tokens, which makes controlled text generation easier.

## Model Taxonomy

| Demand | Task | Series | Model | Parameters | Extra |

| :----: | :----: | :----: | :----: | :----: | :----: |

| 通用 General | 自然语言生成 NLG | 闻仲 Wenzhong | GPT2 | 110M | 中文 Chinese |

## Model Information

Like Wenzhong2.0-GPT2-3.5B-chinese, this model is part of the Wenzhong series. We trained a small 12-layer version, Wenzhong2.0-GPT2-110M-BertTokenizer-chinese, and pre-trained it on the Wudao Corpus (300G version). This release differs from the earlier open-source Wenzhong-GPT2 models mainly in its tokenization: BPE has been replaced by BertTokenizer's character-level tokenization.
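The toy tokenizer below illustrates character-level tokenization. Like BERT's basic tokenizer for Chinese, it splits every CJK ideograph into its own token. It is not the real `BertTokenizer`. The vocabulary, the ids, and the helper names `char_tokenize` and `encode` are hypothetical, and only the basic CJK Unified Ideographs block is handled. The `add_special_tokens` flag mimics BERT's default `[CLS] ... [SEP]` wrapping, which the usage notes in this card recommend turning off for generation.

```python
import re
from typing import Dict, List

# Hypothetical toy vocabulary. The special-token ids are chosen to resemble
# BERT vocabularies. The characters of one sample prompt get arbitrary ids.
SPECIAL = {"[PAD]": 0, "[UNK]": 100, "[CLS]": 101, "[SEP]": 102}
TOY_VOCAB = {**SPECIAL, **{ch: 1000 + i for i, ch in enumerate("西湖的景色")}}

# Basic CJK Unified Ideographs block (U+4E00 to U+9FFF).
CJK_CHAR = re.compile(r"([\u4e00-\u9fff])")


def char_tokenize(text: str) -> List[str]:
    """Make each CJK character its own token; split any other text on whitespace."""
    return CJK_CHAR.sub(r" \1 ", text).split()


def encode(text: str, vocab: Dict[str, int],
           add_special_tokens: bool = True) -> List[int]:
    """Map tokens to ids (unknown -> [UNK]), optionally wrapping in [CLS] ... [SEP]."""
    ids = [vocab.get(tok, SPECIAL["[UNK]"]) for tok in char_tokenize(text)]
    if add_special_tokens:
        ids = [SPECIAL["[CLS]"]] + ids + [SPECIAL["[SEP]"]]
    return ids
```

With the default `add_special_tokens=True`, the prompt ends in `[SEP]`. According to this card, the model was pre-trained without special tokens. A trailing `[SEP]` therefore gives the model a context unlike anything it saw during training, right where it should continue the text.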

## Usage

### Loading Models

```python
from transformers import BertTokenizer, GPT2LMHeadModel

hf_model_path = 'IDEA-CCNL/Wenzhong2.0-GPT2-110M-BertTokenizer-chinese'
tokenizer = BertTokenizer.from_pretrained(hf_model_path)
model = GPT2LMHeadModel.from_pretrained(hf_model_path)
```

### Usage Examples

GPT was trained without special tokens, but BertTokenizer adds special tokens by default. Set `add_special_tokens=False` when tokenizing to get better generation results.

```python
# Uses `tokenizer` and `model` from the loading example above.
def generate_word_level(input_text, n_return=5, max_length=128, top_p=0.9):
    # The model was trained without special tokens, so don't let BertTokenizer add them.
    inputs = tokenizer(input_text, return_tensors='pt', add_special_tokens=False).to(model.device)
    gen = model.generate(
        inputs=inputs['input_ids'],
        max_length=max_length,   # total length, prompt included
        do_sample=True,          # sample instead of greedy decoding
        top_p=top_p,             # nucleus-sampling threshold
        eos_token_id=21133,
        pad_token_id=0,
        num_return_sequences=n_return)

    sentences = tokenizer.batch_decode(gen)
    for idx, sentence in enumerate(sentences):
        print(f'sentence {idx}: {sentence}')
        print('*' * 20)
    return gen


outputs = generate_word_level('西湖的景色', n_return=5, max_length=128)
```
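Two sampling settings can be illustrated on a toy logit vector: `do_sample=True, top_p=0.9` in the example above, and `repetition_penalty: 1.1` in the inference parameters of the front matter. The sketch below is a plain-Python approximation, not the Transformers implementation, and its function names are hypothetical. It first applies a CTRL-style repetition penalty to token ids that were already generated. It then converts logits to probabilities and keeps the smallest set of most-likely tokens whose cumulative probability reaches `top_p` (nucleus sampling). Finally, it renormalizes that set and samples from it.

```python
import math
import random
from typing import Dict, List, Optional, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def apply_repetition_penalty(logits: Sequence[float], previous_ids: Sequence[int],
                             penalty: float = 1.1) -> List[float]:
    """Make already-generated tokens less likely: shrink positive logits, push negative ones lower."""
    out = list(logits)
    for i in set(previous_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def top_p_filter(probs: Sequence[float], top_p: float = 0.9) -> Dict[int, float]:
    """Keep the most likely tokens until their mass reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_next(logits: Sequence[float], previous_ids: Sequence[int],
                top_p: float = 0.9, penalty: float = 1.1,
                rng: Optional[random.Random] = None) -> int:
    """Penalize repeats, restrict to the nucleus, then sample one token id."""
    rng = rng or random.Random()
    probs = softmax(apply_repetition_penalty(logits, previous_ids, penalty))
    nucleus = top_p_filter(probs, top_p)
    ids = list(nucleus)
    return rng.choices(ids, weights=[nucleus[i] for i in ids], k=1)[0]
```

Consider a sharply peaked distribution such as logits `[10.0, 0.0, 0.0]`. The first token alone covers more than 90% of the probability mass, so the nucleus contains only that token and sampling becomes effectively greedy. Flatter distributions keep more candidates, which is what produces the varied continuations in the example above.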

## Citation

If you use our model in your work, please cite our [paper](https://arxiv.org/abs/2209.02970):

```text

@article{fengshenbang,

author = {Jiaxing Zhang and Ruyi Gan and Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen},

title = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},

journal = {CoRR},