modelId (string, length 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, length 51 to 438k) |

|---|---|---|---|---|---|---|

Axon/resnet18-v1 | [

"dataset:ImageNet",

"arxiv:1512.03385",

"Axon",

"Elixir",

"license:apache-2.0"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: creativeml-openrail-m

---

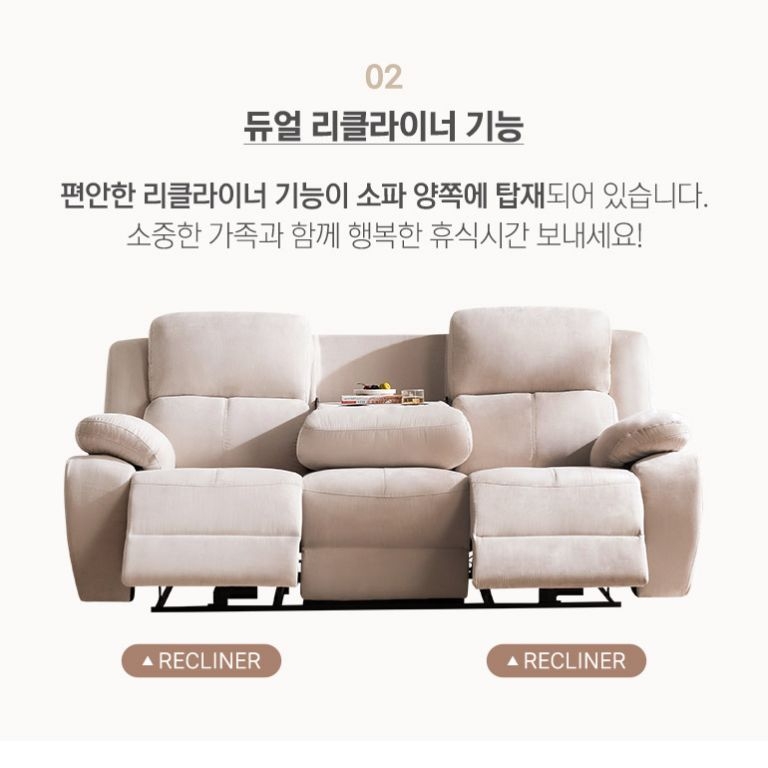

## Textual Inversion Embed + Hypernetwork For SD 2 models by ShadoWxShinigamI

Trained on 200 BLIP Captioned images from my personal MJ Generations. Meant to be used with 768 Models.

16 Vectors - 625 Steps - TI Embed

Swish - 10000 Steps - Hypernetwork.

The Hypernetwork is meant to augment the embed and be used alongside it. Using it at 0.5 strength tends to produce the best output (YMMV).

Examples :-

|

Axon/resnet50-v1 | [

"dataset:ImageNet",

"arxiv:1512.03385",

"Axon",

"Elixir",

"license:apache-2.0"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-12T06:51:06Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: gufte/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

Ayham/bert_gpt2_summarization_cnndm | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- NbAiLab/NCC_S

metrics:

- wer

model-index:

- name: Whisper Base Norwegian

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: NbAiLab/NCC_S

type: NbAiLab/NCC_S

config: 'no'

split: validation

args: 'no'

metrics:

- name: Wer

type: wer

value: 15.012180267965894

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Base Norwegian

This model is a fine-tuned version of [pere/whisper-small-nob-clr](https://huggingface.co/pere/whisper-small-nob-clr) on the NbAiLab/NCC_S dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3284

- Wer: 15.0122
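The WER value above is reported as a percentage. A minimal sketch of how word error rate is commonly computed (word-level edit distance divided by the number of reference words) follows. It assumes plain whitespace tokenization and no text normalization, which may differ from the evaluation script actually used for this model; the function name is illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER in percent: (substitutions + deletions + insertions) / reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return 100.0 * prev[-1] / len(ref)
```

Under this definition a WER of 15.01 means roughly 15 word-level errors per 100 reference words.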

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant_with_warmup

- training_steps: 3000

- mixed_precision_training: Native AMP
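As a quick check of how the batch-size values in this list relate, the sketch below recomputes the total train batch size from the per-device batch size and the gradient accumulation steps. The device count is not stated in the card, so `num_devices = 1` is an assumption chosen because it reproduces the reported value.

```python
train_batch_size = 64            # per-device batch size, from the list above
gradient_accumulation_steps = 2  # from the list above
training_steps = 3000            # from the list above
num_devices = 1                  # assumption: not stated in the card

# Samples contributing to each optimizer update.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices

# Upper bound on samples processed over the whole run.
samples_seen = total_train_batch_size * training_steps

print(total_train_batch_size, samples_seen)
```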

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.5975 | 0.33 | 1000 | 0.3354 | 15.7734 |

| 0.5783 | 0.67 | 2000 | 0.3327 | 16.3520 |

| 0.5788 | 1.0 | 3000 | 0.3284 | 15.0122 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

Ayham/bert_gpt2_summarization_cnndm_new | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

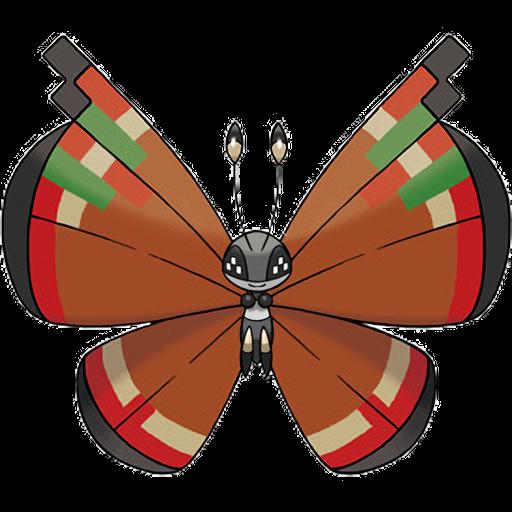

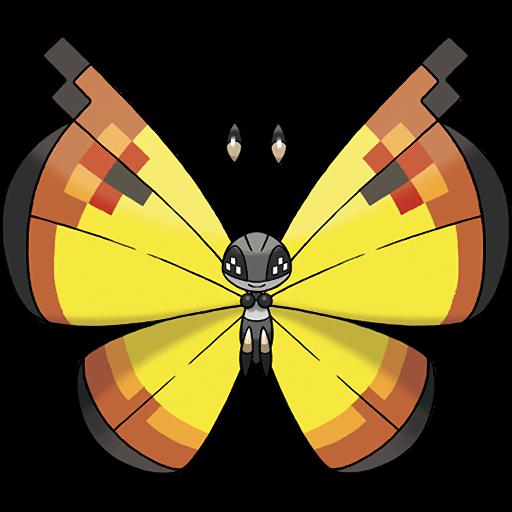

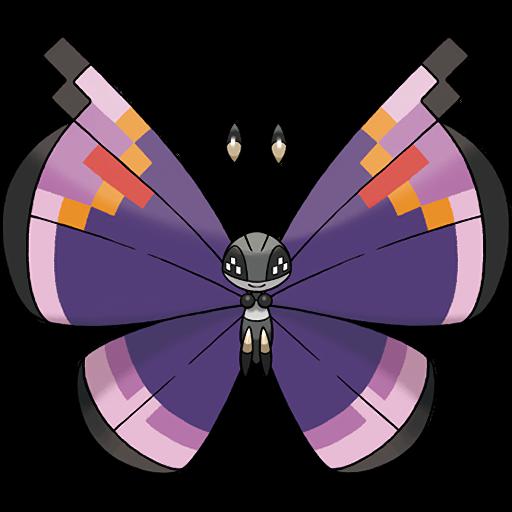

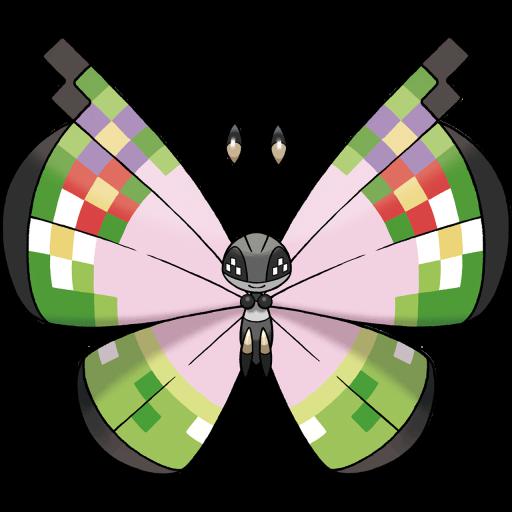

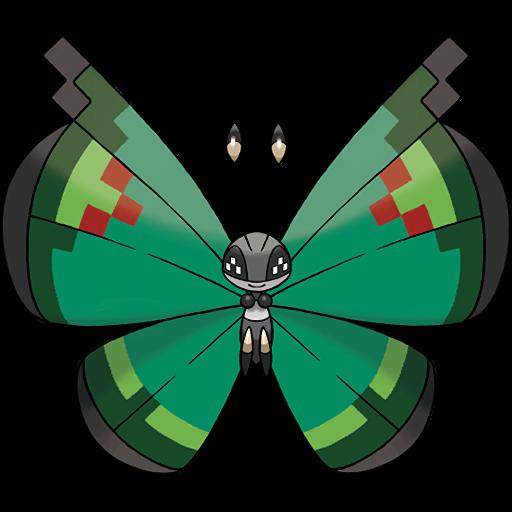

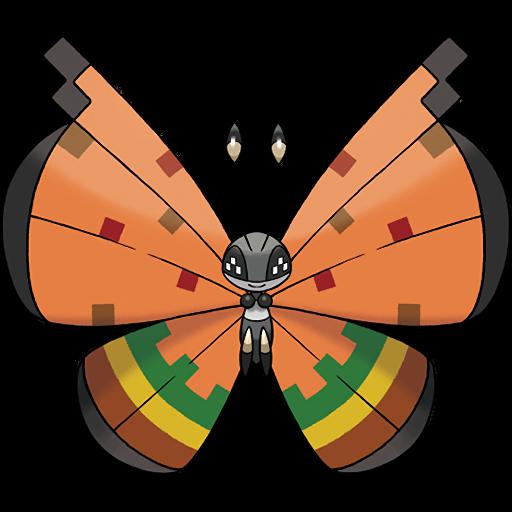

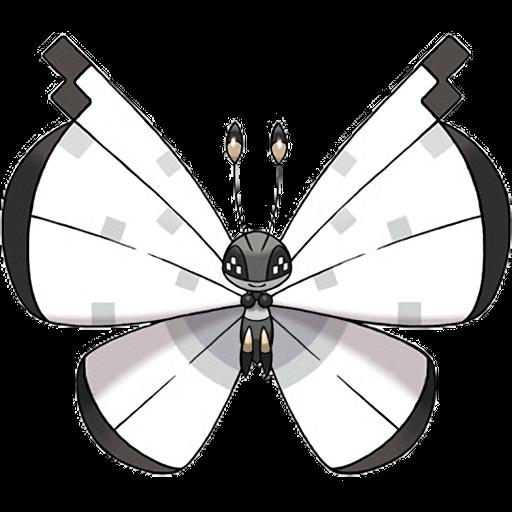

This model is a diffusion model for unconditional image generation of butterflies

trained on the ceyda smithsonian butterflies dataset at a sample resolution of 64×64.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('Apocalypse-19/ceyda-butterflies-64')

image = pipeline().images[0]

image

```

|

Ayham/bert_gpt2_summarization_xsum | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:xsum",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: Pech82/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

Ayham/bert_roberta_summarization_cnn_dailymail | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | 2022-12-12T07:46:35Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: samik

---

### samik_test Dreambooth model trained by sokobanni with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v2-1-768 base model

You can run your new concept via `diffusers` using the [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

samik (use that on your prompt)

|

Ayham/roberta_distilgpt2_summarization_cnn_dailymail | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | 2022-12-13T12:01:56Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:

- name: donut-base-sroie

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# donut-base-sroie

This model is a fine-tuned version of [naver-clova-ix/donut-base](https://huggingface.co/naver-clova-ix/donut-base) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3.4707116138614145e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

Ayham/roberta_gpt2_summarization_cnn_dailymail | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 31 | null | ---

license: creativeml-openrail-m

---

Stable Diffusion v1-5 with the fine-tuned VAE `sd-vae-ft-mse` and files with config modifications for making it better to fine-tune made by [fast-stable-diffusion by TheLastBen](https://github.com/TheLastBen/fast-stable-diffusion) to be used on [fastDreambooth Colab Notebook](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) and on the [Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training)

It is not suited for inference, and training elsewhere is at your own risk. The [model LICENSE](https://huggingface.co/spaces/CompVis/stable-diffusion-license) still applies normally for this use-case. Refer to the [original repository](https://huggingface.co/runwayml/stable-diffusion-v1-5) for the model card |

Ayjayo/DialoGPT-medium-AyjayoAI | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | 2022-12-12T09:38:01Z | ---

model-index:

- name: YLIK/stt_en_conformer_ctc_small

results:

- task:

type: automatic-speech-recognition

dataset:

name: Librispeech (clean)

type: librispeech_asr

config: other

split: test

args:

language: en

metrics:

- type: wer

value: 8.1

name: WER

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

# Table of Contents

1. [Model Details](#model-details)

2. [Uses](#uses)

3. [Bias, Risks, and Limitations](#bias-risks-and-limitations)

4. [Training Details](#training-details)

5. [Evaluation](#evaluation)

6. [Model Examination](#model-examination-optional)

7. [Environmental Impact](#environmental-impact)

8. [Technical Specifications](#technical-specifications-optional)

9. [Citation](#citation-optional)

10. [Glossary](#glossary-optional)

11. [More Information](#more-information-optional)

12. [Model Card Authors](#model-card-authors-optional)

13. [Model Card Contact](#model-card-contact)

14. [How To Get Started With the Model](#how-to-get-started-with-the-model)

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

- **Resources for more information:** [More Information Needed]

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

## Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

## Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

## Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

# Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

## Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

## Training Procedure [optional]

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

### Preprocessing

[More Information Needed]

### Speeds, Sizes, Times

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

## Testing Data, Factors & Metrics

### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

## Results

[More Information Needed]

# Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

# Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

# Technical Specifications [optional]

## Model Architecture and Objective

[More Information Needed]

## Compute Infrastructure

[More Information Needed]

### Hardware

[More Information Needed]

### Software

[More Information Needed]

# Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

# Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

# More Information [optional]

[More Information Needed]

# Model Card Authors [optional]

[More Information Needed]

# Model Card Contact

[More Information Needed]

# How to Get Started with the Model

Use the code below to get started with the model.

<details>

<summary> Click to expand </summary>

[More Information Needed]

</details> |

Aymene/opus-mt-en-ro-finetuned-en-to-ro | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-12T09:42:56Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 252.79 +/- 19.40

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Ayran/DialoGPT-medium-harry-potter-1-through-3 | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | 2022-12-12T09:56:02Z | ---

language:

- ca

license: apache-2.0

tags:

- "catalan"

- "masked-lm"

- "longformer"

- "longformer-base-4096-ca-v2"

- "CaText"

- "Catalan Textual Corpus"

widget:

- text: "El Català és una llengua molt <mask>."

- text: "Salvador Dalí va viure a <mask>."

- text: "La Costa Brava té les millors <mask> d'Espanya."

- text: "El cacaolat és un batut de <mask>."

- text: "<mask> és la capital de la Garrotxa."

- text: "Vaig al <mask> a buscar bolets."

- text: "Antoni Gaudí vas ser un <mask> molt important per la ciutat."

- text: "Catalunya és una referència en <mask> a nivell europeu."

---

# Catalan Longformer (longformer-base-4096-ca-v2) base model

## Table of Contents

<details>

<summary>Click to expand</summary>

- [Model description](#model-description)

- [Intended uses and limitations](#intended-uses)

- [How to use](#how-to-use)

- [Limitations and bias](#limitations-and-bias)

- [Training](#training)

- [Training data](#training-data)

- [Training procedure](#training-procedure)

- [Evaluation](#evaluation)

- [CLUB benchmark](#club-benchmark)

- [Evaluation results](#evaluation-results)

- [Licensing Information](#licensing-information)

- [Additional information](#additional-information)

- [Author](#author)

- [Contact information](#contact-information)

- [Copyright](#copyright)

- [Licensing information](#licensing-information)

- [Funding](#funding)

- [Citing information](#citing-information)

- [Disclaimer](#disclaimer)

</details>

## Model description

The **longformer-base-4096-ca-v2** is the [Longformer](https://huggingface.co/allenai/longformer-base-4096) version of the [roberta-base-ca-v2](https://huggingface.co/projecte-aina/roberta-base-ca-v2) masked language model for the Catalan language. These models can process larger contexts (up to 4096 tokens) as input without the need for additional aggregation strategies. Pretraining started from the **roberta-base-ca-v2** checkpoint and continued with MLM on both short and long documents in Catalan.

The Longformer model uses a combination of sliding window (local) attention and global attention. Global attention is user-configured based on the task to allow the model to learn task-specific representations. Please refer to the original [paper](https://arxiv.org/abs/2004.05150) for more details on how to set global attention.
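The combination of local and global attention described above can be sketched in plain Python. This is an illustration of the attention pattern only, not the library's implementation: the function name, the tiny sequence length, and the window size are all assumptions chosen for readability.

```python
def longformer_attention_pattern(seq_len, window, global_positions):
    """Return a seq_len x seq_len boolean matrix.

    Entry [i][j] is True if token i may attend to token j.
    """
    half = window // 2
    is_global = [False] * seq_len
    for pos in global_positions:
        is_global[pos] = True

    pattern = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            # Sliding-window (local) attention: only nearby tokens.
            local = abs(i - j) <= half
            # Global tokens attend to every token, and every token attends to them.
            glob = is_global[i] or is_global[j]
            row.append(local or glob)
        pattern.append(row)
    return pattern


# Hypothetical setup: 8 tokens, window of 2, global attention on the first token.
pattern = longformer_attention_pattern(seq_len=8, window=2, global_positions=[0])
for row in pattern:
    print("".join("x" if allowed else "." for allowed in row))
```

In the `transformers` library, the global positions are passed to Longformer models through the `global_attention_mask` argument, typically with a 1 at each position that should receive global attention.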

## Intended uses and limitations

The **longformer-base-4096-ca-v2** model is ready-to-use only for masked language modeling to perform the Fill Mask task (try the inference API or read the next section).

However, it is intended to be fine-tuned on non-generative downstream tasks such as Question Answering, Text Classification, or Named Entity Recognition.

## How to use

Here is how to use this model:

```python

from transformers import AutoModelForMaskedLM

from transformers import AutoTokenizer, FillMaskPipeline

from pprint import pprint

tokenizer_hf = AutoTokenizer.from_pretrained('projecte-aina/longformer-base-4096-ca-v2')

model = AutoModelForMaskedLM.from_pretrained('projecte-aina/longformer-base-4096-ca-v2')

model.eval()

pipeline = FillMaskPipeline(model, tokenizer_hf)

text = "Em dic <mask>."

res_hf = pipeline(text)

pprint([r['token_str'] for r in res_hf])

```

## Limitations and bias

At the time of submission, no measures have been taken to estimate the bias embedded in the model. However, we are well aware that our models may be biased since the corpora have been collected using crawling techniques on multiple web sources. We intend to conduct research in these areas in the future, and if completed, this model card will be updated.

## Training

### Training data

The training corpus consists of several corpora gathered from web crawling and public corpora.

| Corpus | Size in GB |

|-------------------------|------------|

| Catalan Crawling | 13.00 |

| Wikipedia | 1.10 |

| DOGC | 0.78 |

| Catalan Open Subtitles | 0.02 |

| Catalan Oscar | 4.00 |

| CaWaC | 3.60 |

| Cat. General Crawling | 2.50 |

| Cat. Government Crawling | 0.24 |

| ACN | 0.42 |

| Padicat | 0.63 |

| RacoCatalá | 8.10 |

| Nació Digital | 0.42 |

| Vilaweb | 0.06 |

| Tweets | 0.02 |

For this specific pre-training process, we have performed an undersampling process to obtain a corpus of 5.3 GB.
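To put the undersampling in perspective, the sketch below sums the corpus sizes from the table and compares the total with the 5.3 GB figure. It assumes the undersampled corpus was drawn from exactly the corpora listed; how the sampling was distributed across them is not stated in the card.

```python
# Corpus sizes in GB, copied from the table above.
corpus_sizes_gb = {
    "Catalan Crawling": 13.00,
    "Wikipedia": 1.10,
    "DOGC": 0.78,
    "Catalan Open Subtitles": 0.02,
    "Catalan Oscar": 4.00,
    "CaWaC": 3.60,
    "Cat. General Crawling": 2.50,
    "Cat. Government Crawling": 0.24,
    "ACN": 0.42,
    "Padicat": 0.63,
    "RacoCatalá": 8.10,
    "Nació Digital": 0.42,
    "Vilaweb": 0.06,
    "Tweets": 0.02,
}

total_gb = sum(corpus_sizes_gb.values())
undersampled_gb = 5.3
fraction = undersampled_gb / total_gb

print(f"total: {total_gb:.2f} GB, kept: {fraction:.1%}")
```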

### Training procedure

The training corpus has been tokenized using a byte version of Byte-Pair Encoding (BPE) used in the original [RoBERTa](https://arxiv.org/abs/1907.11692) model, with a vocabulary size of 50,262 tokens. Pre-training consists of masked language model training that follows the approach employed for RoBERTa base. Training lasted a total of 37 hours on 8 computing nodes, each with 2 AMD MI50 GPUs with 32 GB of VRAM.

## Evaluation

### CLUB benchmark

The **longformer-base-4096-ca-v2** model has been fine-tuned on the downstream tasks of the [Catalan Language Understanding Evaluation benchmark](https://club.aina.bsc.es/) (CLUB),

which was created along with the model.

It contains the following tasks and their related datasets:

1. Named Entity Recognition (NER)

**[NER (AnCora)](https://zenodo.org/record/4762031#.YKaFjqGxWUk)**: extracted named entities from the original [Ancora](https://doi.org/10.5281/zenodo.4762030) version,

filtering out some unconventional ones, like book titles, and transcribed them into a standard CONLL-IOB format

2. Part-of-Speech Tagging (POS)

**[POS (AnCora)](https://zenodo.org/record/4762031#.YKaFjqGxWUk)**: from the [Universal Dependencies treebank](https://github.com/UniversalDependencies/UD_Catalan-AnCora) of the well-known Ancora corpus.

3. Text Classification (TC)

**[TeCla](https://huggingface.co/datasets/projecte-aina/tecla)**: consisting of 137k news pieces from the Catalan News Agency ([ACN](https://www.acn.cat/)) corpus, with 30 labels.

4. Textual Entailment (TE)

**[TE-ca](https://huggingface.co/datasets/projecte-aina/teca)**: consisting of 21,163 pairs of premises and hypotheses, annotated according to the inference relation they have (implication, contradiction, or neutral), extracted from the [Catalan Textual Corpus](https://huggingface.co/datasets/projecte-aina/catalan_textual_corpus).

5. Semantic Textual Similarity (STS)

**[STS-ca](https://huggingface.co/datasets/projecte-aina/sts-ca)**: consisting of more than 3000 sentence pairs, annotated with the semantic similarity between them, scraped from the [Catalan Textual Corpus](https://huggingface.co/datasets/projecte-aina/catalan_textual_corpus).

6. Question Answering (QA):

**[VilaQuAD](https://huggingface.co/datasets/projecte-aina/vilaquad)**: contains 6,282 pairs of questions and answers, outsourced from 2095 Catalan language articles from VilaWeb newswire text.

**[ViquiQuAD](https://huggingface.co/datasets/projecte-aina/viquiquad)**: consisting of more than 15,000 questions outsourced from Catalan Wikipedia randomly chosen from a set of 596 articles that were originally written in Catalan.

**[CatalanQA](https://huggingface.co/datasets/projecte-aina/catalanqa)**: an aggregation of 2 previous datasets (VilaQuAD and ViquiQuAD), 21,427 pairs of Q/A balanced by type of question, containing one question and one answer per context, although the contexts can repeat multiple times.

### Evaluation results

After fine-tuning the model on the downstream tasks, it achieved the following performance:

| Model | NER (F1) | POS (F1) | STS-ca (Comb) | TeCla (Acc.) | TE-ca (Acc.) | VilaQuAD (F1/EM)| ViquiQuAD (F1/EM) | CatalanQA (F1/EM) | XQuAD-ca <sup>1</sup> (F1/EM) |

| ------------|:-------------:| -----:|:------|:------|:-------|:------|:----|:----|:----|

| RoBERTa-large-ca-v2 | **89.82** | **99.02** | **83.41** | **75.46** | 83.61 | **89.34/75.50** | **89.20**/75.77 | **90.72/79.06** | **73.79**/55.34 |

| RoBERTa-base-ca-v2 | 89.29 | 98.96 | 79.07 | 74.26 | 83.14 | 87.74/72.58 | 88.72/**75.91** | 89.50/76.63 | 73.64/**55.42** |

| Longformer-base-4096-ca-v2 | 88.49 | 98.98 | 78.37 | 73.79 | **83.89** | 87.59/72.33 | 88.70/**76.05** | 89.33/77.03 | 73.09/54.83 |

| BERTa | 89.76 | 98.96 | 80.19 | 73.65 | 79.26 | 85.93/70.58 | 87.12/73.11 | 89.17/77.14 | 69.20/51.47 |

| mBERT | 86.87 | 98.83 | 74.26 | 69.90 | 74.63 | 82.78/67.33 | 86.89/73.53 | 86.90/74.19 | 68.79/50.80 |

| XLM-RoBERTa | 86.31 | 98.89 | 61.61 | 70.14 | 33.30 | 86.29/71.83 | 86.88/73.11 | 88.17/75.93 | 72.55/54.16 |

<sup>1</sup> : Trained on CatalanQA, tested on XQuAD-ca.
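
The QA columns above report F1/EM. As a rough illustration of what these two numbers measure, here is a minimal sketch of exact match and token-level F1 for a single prediction. The normalization used here (lowercasing and whitespace tokenization) is a simplifying assumption and may differ from the evaluation script behind the reported scores.

```python
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase and split on whitespace (simplified normalization, an assumption)."""
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized token sequences are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between prediction and reference."""
    pred, ref = normalize(prediction), normalize(reference)
    # Multiset intersection counts each shared token at most as often as it occurs in both.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)
```

Dataset-level scores are typically the average of these per-example values, expressed as percentages.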

## Additional information

### Author

Text Mining Unit (TeMU) at the Barcelona Supercomputing Center ([email protected])

### Contact information

For further information, send an email to [email protected]

### Copyright

Copyright (c) 2022 Text Mining Unit at Barcelona Supercomputing Center

### Licensing information

[Apache License, Version 2.0](https://www.apache.org/licenses/LICENSE-2.0)

### Funding

This work was funded by the [Departament de la Vicepresidència i de Polítiques Digitals i Territori de la Generalitat de Catalunya](https://politiquesdigitals.gencat.cat/ca/inici/index.html#googtrans(ca|en)) within the framework of [Projecte AINA](https://politiquesdigitals.gencat.cat/ca/economia/catalonia-ai/aina).

### Disclaimer

<details>

<summary>Click to expand</summary>

The models published in this repository are intended for a generalist purpose and are available to third parties. These models may have bias and/or any other undesirable distortions.

When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models), or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of Artificial Intelligence.

In no event shall the owner and creator of the models (BSC – Barcelona Supercomputing Center) be liable for any results arising from the use made by third parties of these models.

</details>

|

Ayran/DialoGPT-medium-harry-potter-1-through-4-plus-6 | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null | Hellhound model mixed using AnythingV3 and centaur only tagged images from danbooru

Dataset of 200 images with complete tag lists

Works OK, though it's not much more than you can get with pure AnythingV3. That model was lacking in fur detail, so I felt like making this one.

The best prompts are variations of the example prompt.

More monster girl models are on the way; feel free to request your favs :)

Hellhound: 1girl, animal_ears, animal_hands, black_fur, black_hair, black_sclera, black_skin, breasts, claws, colored_sclera, colored_skin, dog_ears, flaming_eyes, large_breasts, long_hair, looking_at_viewer, monster_girl, red_eyes, tail, dynamic pose

|

Ayu/Shiriro | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: whisper-calls-small

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-calls-small

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on an unknown dataset.

Just a test, probably not a very good model

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP
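
The batch-size entries above are related: the total train batch size is the per-device batch size multiplied by the number of gradient accumulation steps. The sketch below reproduces that arithmetic and adds a rough estimate of how many examples make up one epoch. The single-device setting and the helper names are assumptions for illustration; the card does not state the hardware or dataset size.

```python
def effective_batch_size(per_device_batch: int, accumulation_steps: int, num_devices: int = 1) -> int:
    """Examples consumed per optimizer step (num_devices=1 is an assumption)."""
    return per_device_batch * accumulation_steps * num_devices


def estimated_examples_per_epoch(step: int, batch_size: int, epoch: float) -> float:
    """Rough estimate: examples seen so far divided by the epochs completed."""
    return step * batch_size / epoch


total = effective_batch_size(per_device_batch=1, accumulation_steps=16)
print(total)  # matches total_train_batch_size in the list above
```

Plugging in a logged (step, epoch) pair from the training results gives only an approximation, since the logged epoch value is rounded.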

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.076 | 4.03 | 1000 | 0.0481 | 4.1883 |

| 0.0068 | 8.06 | 2000 | 0.0049 | 0.6362 |

| 0.0011 | 12.1 | 3000 | 0.0012 | 0.0157 |

| 0.0005 | 16.13 | 4000 | 0.0006 | 0.0 |
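
For readers unfamiliar with the Wer column: word error rate is the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. Below is a minimal sketch using plain whitespace tokenization, which is an assumption; the function returns a fraction, whereas the table values appear to be percentages.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)
```

Because insertions count as errors, the value can exceed 1.0 when the hypothesis is much longer than the reference.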

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Azaghast/DistilBART-SCP-ParaSummarization | [

"pytorch",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"BartForConditionalGeneration"

],

"model_type": "bart",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 142,

"min_length": 56,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 265.04 +/- 38.0587

name: mean_reward

verified: false

---

## To Import and Use the model

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

repo_id = "Eslam25/LunarLander-v2-PPO"

filename = "ppo_1st.zip"

custom_objects = {

"learning_rate": 0.0,

"lr_schedule": lambda _: 0.0,

"clip_range": lambda _: 0.0,

}

checkpoint = load_from_hub(repo_id, filename)

model = PPO.load(checkpoint, custom_objects=custom_objects, print_system_info=True)

``` |

BAHIJA/distilbert-base-uncased-finetuned-cola | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | {

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 36 | null | ---

library_name: PyLaia

license: mit

tags:

- PyLaia

- PyTorch

- Handwritten text recognition

metrics:

- CER

- WER

language:

- 'no'

---

# Hugin-Munin handwritten text recognition

This model performs Handwritten Text Recognition in Norwegian. It was developed during the [HUGIN-MUNIN project](https://hugin-munin-project.github.io/).

## Model description

The model has been trained using the PyLaia library on the [NorHand](https://zenodo.org/record/6542056) document images.

Training images were resized with a fixed height of 128 pixels, keeping the original aspect ratio.
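
To make the resizing rule concrete, the sketch below computes the target size for a line image when the height is fixed at 128 pixels and the aspect ratio is preserved. The function name and the rounding choice are assumptions for illustration; PyLaia's own preprocessing may round differently.

```python
def resized_size(width: int, height: int, target_height: int = 128) -> tuple[int, int]:
    """Return (new_width, new_height) with a fixed height and the original aspect ratio."""
    scale = target_height / height
    # Keep at least one pixel of width for extremely narrow inputs.
    return max(1, round(width * scale)), target_height
```

Images fed to the model at inference time should presumably be resized the same way, so that they match the training distribution.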

## Evaluation results

The model achieves the following results:

| set | CER (%) | WER (%) |

| ----- | ---------- | --------- |

| train | 2.17 | 7.65 |

| val | 8.78 | 24.93 |

| test | 7.94 | 24.04 |

Results improve on validation and test sets when PyLaia is combined with a 6-gram language model.

The language model is trained on [this text corpus](https://www.nb.no/sprakbanken/en/resource-catalogue/oai-nb-no-sbr-73/) published by the National Library of Norway.

| set | CER (%) | WER (%) |

| ----- | ---------- | --------- |

| train | 2.40 | 8.10 |

| val | 7.45 | 19.75 |

| test | 6.55 | 18.2 |

## How to use

Please refer to the PyLaia library page (https://pypi.org/project/pylaia/) to use this model.

# Cite us!

```bibtex

@inproceedings{10.1007/978-3-031-06555-2_27,

author = {Maarand, Martin and Beyer, Yngvil and K\r{a}sen, Andre and Fosseide, Knut T. and Kermorvant, Christopher},

title = {A Comprehensive Comparison of Open-Source Libraries for Handwritten Text Recognition in Norwegian},

year = {2022},

isbn = {978-3-031-06554-5},

publisher = {Springer-Verlag},

address = {Berlin, Heidelberg},

url = {https://doi.org/10.1007/978-3-031-06555-2_27},

doi = {10.1007/978-3-031-06555-2_27},

booktitle = {Document Analysis Systems: 15th IAPR International Workshop, DAS 2022, La Rochelle, France, May 22–25, 2022, Proceedings},

pages = {399–413},

numpages = {15},

keywords = {Norwegian language, Open-source, Handwriting recognition},

location = {La Rochelle, France}

}

``` |

BME-TMIT/foszt2oszt | [

"pytorch",

"encoder-decoder",

"text2text-generation",

"hu",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 15 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased_cls_SentEval-CR

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased_cls_SentEval-CR

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4301

- Accuracy: 0.9110

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP
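
The `cosine` scheduler with a warmup ratio of 0.2 means the learning rate ramps up linearly over the first 20% of optimizer steps and then follows half a cosine wave down to zero. The sketch below shows that shape in plain Python. The total of 945 steps is taken from the final row of this card's training results; the rounding of the warmup length is an assumption.

```python
import math


def cosine_lr(step: int, base_lr: float = 4e-5, total_steps: int = 945, warmup_ratio: float = 0.2) -> float:
    """Learning rate at `step` for linear warmup followed by half-cosine decay."""
    warmup_steps = round(total_steps * warmup_ratio)  # 189 for the defaults
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the decay phase completed, from 0.0 to 1.0.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))
```

With these defaults the peak rate is reached at step 189, which coincides with the end of the first epoch in the results table.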

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 189 | 0.3037 | 0.8898 |

| No log | 2.0 | 378 | 0.3295 | 0.8951 |

| 0.2938 | 3.0 | 567 | 0.3413 | 0.9057 |

| 0.2938 | 4.0 | 756 | 0.4158 | 0.9070 |

| 0.2938 | 5.0 | 945 | 0.4301 | 0.9110 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

BOON/electra-xlnet | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-12T11:08:05Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery-wohoo

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

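```python
import gym

# NOTE: load_from_hub is not defined in this card; it is assumed to be a
# helper that downloads the pickled model dict from the Hub.
model = load_from_hub(repo_id="osanseviero/q-FrozenLake-v1-4x4-noSlippery-wohoo", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])
```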
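
The usage snippet calls `load_from_hub`, which this card does not define. A minimal sketch of what such a helper might look like follows, assuming the file is a pickled Python dict. The `greedy_action` function and the Q-table layout (one row of action values per state) are likewise assumptions for illustration, not something the card confirms.

```python
import pickle


def load_from_hub(repo_id: str, filename: str) -> dict:
    """Download a pickled model dict from the Hugging Face Hub and unpickle it."""
    # Imported lazily so the rest of this sketch works without the package installed.
    from huggingface_hub import hf_hub_download

    path = hf_hub_download(repo_id=repo_id, filename=filename)
    with open(path, "rb") as f:
        # Unpickling executes arbitrary code: only load files from sources you trust.
        return pickle.load(f)


def greedy_action(qtable, state: int) -> int:
    """Return the index of the highest-valued action for `state`."""
    row = list(qtable[state])
    return max(range(len(row)), key=row.__getitem__)
```

At evaluation time a Q-learning agent usually acts greedily like this, with exploration switched off.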

|

BSC-LT/RoBERTalex | [

"pytorch",

"roberta",

"fill-mask",

"es",

"dataset:legal_ES",

"dataset:temu_legal",

"arxiv:2110.12201",

"transformers",

"legal",

"spanish",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 24 | 2022-12-12T11:10:15Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: super_taxi

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.46 +/- 2.78

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

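```python
import gym

# NOTE: load_from_hub is not defined in this card; it is assumed to be a
# helper that downloads the pickled model dict from the Hub.
model = load_from_hub(repo_id="osanseviero/super_taxi", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])
```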

|

Babelscape/rebel-large | [

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"en",

"dataset:Babelscape/rebel-dataset",

"transformers",

"seq2seq",

"relation-extraction",

"license:cc-by-nc-sa-4.0",

"model-index",

"autotrain_compatible",

"has_space"

]

| text2text-generation | {

"architectures": [

"BartForConditionalGeneration"

],

"model_type": "bart",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9,458 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: w2v2-libri

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# w2v2-libri

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7315

- Wer: 0.5574

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.98) and epsilon=1e-07

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 3000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 7.1828 | 50.0 | 200 | 3.0563 | 1.0 |

| 2.8849 | 100.0 | 400 | 2.9023 | 1.0 |

| 1.5108 | 150.0 | 600 | 1.1468 | 0.6667 |

| 0.1372 | 200.0 | 800 | 1.3749 | 0.6279 |

| 0.0816 | 250.0 | 1000 | 1.3985 | 0.6224 |

| 0.0746 | 300.0 | 1200 | 1.5285 | 0.6141 |

| 0.0556 | 350.0 | 1400 | 1.5496 | 0.5920 |

| 0.0644 | 400.0 | 1600 | 1.6263 | 0.5947 |

| 0.0546 | 450.0 | 1800 | 1.6803 | 0.5906 |

| 0.0491 | 500.0 | 2000 | 1.6155 | 0.5837 |

| 0.0518 | 550.0 | 2200 | 1.6784 | 0.5698 |

| 0.0314 | 600.0 | 2400 | 1.6050 | 0.5602 |

| 0.0048 | 650.0 | 2600 | 1.7703 | 0.5546 |

| 0.0042 | 700.0 | 2800 | 1.7135 | 0.5615 |

| 0.0025 | 750.0 | 3000 | 1.7315 | 0.5574 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 1.18.3

- Tokenizers 0.13.2

|

Babelscape/wikineural-multilingual-ner | [

"pytorch",

"tensorboard",

"safetensors",

"bert",

"token-classification",

"de",

"en",

"es",

"fr",

"it",

"nl",

"pl",

"pt",

"ru",

"multilingual",

"dataset:Babelscape/wikineural",

"transformers",

"named-entity-recognition",

"sequence-tagger-model",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 41,608 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 259.85 +/- 19.20

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Babysittingyoda/DialoGPT-small-familyguy | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 13 | 2022-12-12T11:45:00Z | ---

language:

- es

tags:

- pytorch

- causal-lm

license: apache-2.0

datasets:

- bertin-project/mc4-es-sampled

- infolibros

---

# BERTIN GPT-J-6B-infolibros

This model is a fine-tuned version of [BERTIN-GPT-J-6B](https://huggingface.co/bertin-project/bertin-gpt-j-6B) on the [InfoLibros Corpus](https://zenodo.org/record/7254400). |

Banshee/dialoGPT-small-luke | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: afl-3.0

---

# Overview

`ul2-large-japanese` is a Japanese version of UL2.

# TODOs

- [ ] Documentation

- [x] Pre-training

- [ ] Evaluate on downstream tasks

- If you get some experimental results of this model on downstream tasks, please feel free to make Pull Requests.

# Results

- Under construction

## Question Answering

## Others

# Acknowledgement

Research supported with Cloud TPUs from Google's TPU Research Cloud (TRC)

|

Barbarameerr/Barbara | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-12T12:24:43Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: roberta-large_cls_CR

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-large_cls_CR

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3325

- Accuracy: 0.9043

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 213 | 0.4001 | 0.875 |

| No log | 2.0 | 426 | 0.4547 | 0.8324 |

| 0.499 | 3.0 | 639 | 0.3161 | 0.8963 |

| 0.499 | 4.0 | 852 | 0.3219 | 0.9069 |

| 0.2904 | 5.0 | 1065 | 0.3325 | 0.9043 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Barleysack/AERoberta | [

"pytorch",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"RobertaForQuestionAnswering"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 273.72 +/- 19.85

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

BatuhanYilmaz/mlm-finetuned-imdb | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

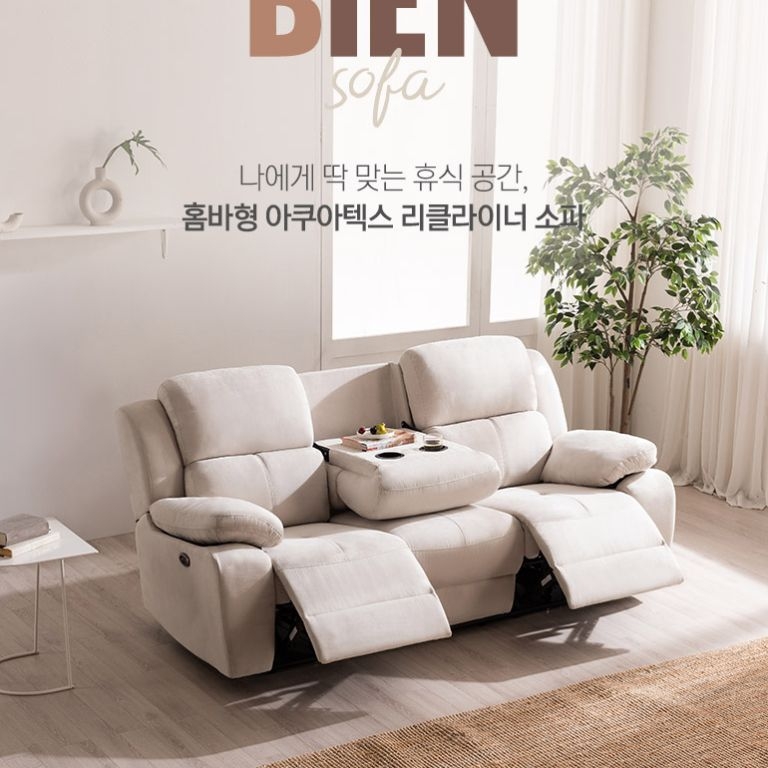

### rembrantSito Dreambooth model trained by theguaz with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

|

Baybars/debateGPT | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-large-uncased_cls_sst2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-uncased_cls_sst2

This model is a fine-tuned version of [bert-large-uncased](https://huggingface.co/bert-large-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3787

- Accuracy: 0.9255

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 433 | 0.4188 | 0.8578 |

| 0.3762 | 2.0 | 866 | 0.4894 | 0.8968 |

| 0.3253 | 3.0 | 1299 | 0.3313 | 0.9094 |

| 0.1601 | 4.0 | 1732 | 0.3399 | 0.9232 |

| 0.0744 | 5.0 | 2165 | 0.3787 | 0.9255 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Baybars/wav2vec2-xls-r-300m-cv8-turkish | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"tr",

"dataset:common_voice",

"transformers",

"common_voice",

"generated_from_trainer",

"hf-asr-leaderboard",

"robust-speech-event",

"license:apache-2.0"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on training your first agent with ML-Agents and publishing it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: lukee/ppo-Huggy

3. Step 2: Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

BeIR/query-gen-msmarco-t5-base-v1 | [

"pytorch",

"jax",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"T5ForConditionalGeneration"

],

"model_type": "t5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 200,

"min_length": 30,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": "summarize: "

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to German: "

},

"translation_en_to_fr": {

"early_stopping": true,