modelId (string, 4 to 81 chars) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, 51 to 438k chars) |

|---|---|---|---|---|---|---|

Davlan/mbart50-large-eng-yor-mt | [

"pytorch",

"mbart",

"text2text-generation",

"arxiv:2103.08647",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MBartForConditionalGeneration"

],

"model_type": "mbart",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 255.91 +/- 19.75

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Davlan/mt5-small-en-pcm | [

"pytorch",

"mt5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

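import gym

# `load_from_hub` and `evaluate_agent` are not library imports: they are helper
# functions defined in the Hugging Face Deep RL Course (Unit 2) notebook.
model = load_from_hub(repo_id="toinsson/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check whether you need to pass additional attributes (e.g. is_slippery=False)
env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])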

```
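The snippet calls `evaluate_agent` without defining it. Below is a rough sketch of what a greedy Q-table evaluation like this can look like. It is a hypothetical implementation, not necessarily the helper the author used. It assumes a Gymnasium-style environment, where `reset(seed=...)` returns `(obs, info)` and `step()` returns a 5-tuple. It also assumes `eval_seed` is a plain Python list of per-episode seeds.

```python
import numpy as np


def evaluate_agent(env, max_steps, n_eval_episodes, qtable, eval_seed=None):
    """Play n_eval_episodes greedily with a Q-table; return (mean, std) of episode rewards.

    Hypothetical sketch: assumes a Gymnasium-style env API and that eval_seed,
    if given, is a list with one seed per episode.
    """
    episode_rewards = []
    for episode in range(n_eval_episodes):
        # Use the per-episode seed when a seed list was provided
        seed = eval_seed[episode] if eval_seed else None
        state, _info = env.reset(seed=seed)
        total_reward = 0.0
        for _ in range(max_steps):
            # Greedy policy: pick the action with the highest Q-value in this state
            action = int(np.argmax(qtable[state]))
            state, reward, terminated, truncated, _info = env.step(action)
            total_reward += reward
            if terminated or truncated:
                break
        episode_rewards.append(total_reward)
    return float(np.mean(episode_rewards)), float(np.std(episode_rewards))
```

Under this reading, a score such as `7.56 +/- 2.71` in the metadata above is the mean and standard deviation that the function returns.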

|

Davlan/mt5-small-pcm-en | [

"pytorch",

"mt5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | 2022-12-21T12:11:17Z | ---

license: apache-2.0

tags:

- google/fleurs

- generated_from_trainer

- automatic-speech-recognition

- hf-asr-leaderboard

- pashto

- ps

datasets:

- fleurs

metrics:

- wer

model-index:

- name: facebook/wav2vec2-xls-r-300m

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: google/fleurs

type: google/fleurs

args: 'config: ps_af, split: test'

metrics:

- name: Wer

type: wer

value: 0.5159447476125512

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# facebook/wav2vec2-xls-r-300m

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the Pashto (`ps_af`) subset of the google/fleurs dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9162

- Wer: 0.5159

- Cer: 0.1972
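WER and CER are both edit-distance ratios. Each counts the minimum number of substitutions, deletions and insertions needed to turn the hypothesis into the reference, then divides by the reference length. WER counts over words; CER counts over characters, including spaces. Below is a minimal pure-Python sketch; the function names are illustrative, not from the card. Libraries such as `jiwer` or `evaluate` typically report a corpus-level score that sums errors over all utterances before dividing, and `corpus_wer` mimics that.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (words or characters)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free if tokens match)
            )
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate for a single utterance."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate for a single utterance (spaces count as characters)."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


def corpus_wer(references, hypotheses):
    """Total word errors divided by total reference words across all utterances."""
    errors = sum(edit_distance(r.split(), h.split()) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return errors / words
```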

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 7.5e-07

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- training_steps: 6000

- mixed_precision_training: Native AMP
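Two of these values follow from the others. The effective batch size is `train_batch_size × gradient_accumulation_steps` = 16 × 2 = 32. The `linear` scheduler with warmup ramps the learning rate from 0 to 7.5e-07 over the first 1000 steps, then decays it linearly to 0 at step 6000. Below is a small sketch of that schedule. It assumes the same shape as `transformers.get_linear_schedule_with_warmup`, and the helper name `linear_lr` is hypothetical.

```python
def linear_lr(step, peak_lr=7.5e-07, warmup_steps=1000, total_steps=6000):
    """Learning rate after `step` optimizer steps: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Warmup: ramp from 0 up to peak_lr
        return peak_lr * step / max(1, warmup_steps)
    # Decay: fall linearly from peak_lr at warmup_steps to 0 at total_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Effective batch size per optimizer step when gradients are accumulated
train_batch_size = 16
gradient_accumulation_steps = 2
total_train_batch_size = train_batch_size * gradient_accumulation_steps

for step in (0, 500, 1000, 3500, 6000):
    print(step, linear_lr(step))
```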

### Training results

| Training Loss | Epoch | Step | Cer | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:------:|:---------------:|:------:|

| 5.0767 | 6.33 | 500 | 1.0 | 4.8783 | 1.0 |

| 3.1156 | 12.66 | 1000 | 1.0 | 3.0990 | 1.0 |

| 1.3506 | 18.99 | 1500 | 0.2889 | 1.1056 | 0.7031 |

| 0.9997 | 25.32 | 2000 | 0.2301 | 0.9191 | 0.5944 |

| 0.7838 | 31.65 | 2500 | 0.2152 | 0.8952 | 0.5556 |

| 0.6665 | 37.97 | 3000 | 0.2017 | 0.8908 | 0.5252 |

| 0.6265 | 44.3 | 3500 | 0.1954 | 0.9063 | 0.5133 |

| 0.5935 | 50.63 | 4000 | 0.1969 | 0.9162 | 0.5156 |

| 0.5174 | 56.96 | 4500 | 0.1972 | 0.9287 | 0.5140 |

| 0.5462 | 63.29 | 5000 | 0.1974 | 0.9370 | 0.5138 |

| 0.5564 | 69.62 | 5500 | 0.1977 | 0.9461 | 0.5148 |

| 0.5252 | 75.95 | 6000 | 0.1969 | 0.9505 | 0.5118 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

Davlan/mt5_base_eng_yor_mt | [

"pytorch",

"mt5",

"text2text-generation",

"arxiv:2103.08647",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: distilbert-profane-final

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-profane-final

This model is a fine-tuned version of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2773

- Accuracy: 0.8992

- Precision: 0.8261

- Recall: 0.7987

- F1: 0.8114
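The card does not state how precision, recall and F1 were averaged. The reported F1 (0.8114) is not the harmonic mean of the reported precision and recall, which would be roughly 0.812. That gap is consistent with per-class averaging such as macro averaging, where F1 is computed for each class and then averaged. Below is a pure-Python sketch of macro-averaged metrics; it assumes that averaging mode, and the helper `macro_prf` is hypothetical.

```python
def macro_prf(y_true, y_pred):
    """Macro-averaged precision, recall and F1: compute each per class, then average."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        # Undefined ratios (no predictions / no true examples) are scored as 0
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


p, r, f = macro_prf([0, 0, 1, 1], [0, 1, 1, 1])
print(p, r, f)  # macro F1 is below the harmonic mean of macro P and macro R
```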

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| No log | 1.0 | 296 | 0.2862 | 0.8907 | 0.8230 | 0.7528 | 0.7807 |

| 0.3379 | 2.0 | 592 | 0.2650 | 0.9097 | 0.8748 | 0.7778 | 0.8148 |

| 0.3379 | 3.0 | 888 | 0.2632 | 0.9049 | 0.8417 | 0.7999 | 0.8185 |

| 0.221 | 4.0 | 1184 | 0.2772 | 0.8916 | 0.8055 | 0.8055 | 0.8055 |

| 0.221 | 5.0 | 1480 | 0.2773 | 0.8992 | 0.8261 | 0.7987 | 0.8114 |

### Framework versions

- Transformers 4.24.0.dev0

- Pytorch 1.11.0+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Davlan/mt5_base_yor_eng_mt | [

"pytorch",

"mt5",

"text2text-generation",

"arxiv:2103.08647",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

language:

- en

tags:

- stable-diffusion

- text-to-image

license: creativeml-openrail-m

inference: true

pipeline_tag: text-to-image

---

# The 'Inizio' Models

<img src=https://ac-p.namu.la/20230106sac/980c715bd45a7fb0cfe8ce06715c6c4034825fa4d4d07267a5585eb709f2cefa.png width=100% height=100%>

Inizio is a series of custom merged models based on many released open-source models. Only the .safetensors format is supported.

[WebUI](https://github.com/AUTOMATIC1111/stable-diffusion-webui)-friendly.

## Summary

This repository currently includes 7 models:

1. *Inizio Fantasma*: Blossom mix+[Anything 3.0](https://huggingface.co/Linaqruf/anything-v3.0)+[SamDoesArtsUltMerge](https://civitai.com/models/68/samdoesarts-ultmerge); weighted, M=0.2. Quietly impressive semi-realistic model.

2. *Inizio Inseguitore*: [ALG](https://arca.live/b/aiart/64297100)+[SamDoesArtsUltMerge](https://civitai.com/models/68/samdoesarts-ultmerge)+Blossom Mix; add difference, M=0.3. Similar to Inizio Fantasma, but anime-style focused.

3. *Inizio Foschia*: [ALG](https://arca.live/b/aiart/64297100)+Inizio Fantasma+[A Certain Model](https://huggingface.co/JosephusCheung/ACertainModel); weighted, M=0.7. Similar to Inizio Inseguitore.

4. *Inizio Replicante*: Inizio Foschia+[DBmai](https://tieba.baidu.com/p/8136937175)+[Finale 5o](https://arca.live/b/aiart/65251337); weighted, M=0.5. A well-tuned semi-realistic anime model, and the most fashionable one.

5. *Inizio Skinjob*: Inizio Replicante+[Berry Mix](https://rentry.co/LFTBL)+[ElyOrange](https://huggingface.co/WarriorMama777/OrangeMixs); weighted, M=0.6. A well-tuned semi-realistic anime model.

6. *Inizio Deckard*: ([Kribo Nstal](https://civitai.com/models/1225/kribomix-nstal)+Inizio Skinjob; weighted, M=0.5)+Inizio Fantasma+Kribo Nstal; weighted, M=0.5.

7. *Inizio Unico*: Inizio Fantasma+Inizio Inseguitore+Inizio Foschia+Inizio Replicante+Inizio Skinjob+Inizio Deckard; weighted, M=1/6. The most advanced Inizio model.
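The `weighted` and `add difference` labels with a multiplier `M` match the two interpolation modes of the AUTOMATIC1111 WebUI checkpoint merger. Weighted sum computes `(1 - M)·A + M·B`, and add difference computes `A + M·(B - C)`, tensor by tensor. The card lists three inputs even for some weighted merges, so the exact order of operations is not stated; this sketch assumes those two formulas. It is written in NumPy over state dicts of arrays, whereas real checkpoints would hold torch tensors loaded from `.safetensors` files. The function names are illustrative.

```python
import numpy as np


def weighted_sum(a, b, m):
    """Weighted-sum merge: (1 - m) * A + m * B for every tensor present in both models."""
    return {k: (1.0 - m) * a[k] + m * b[k] for k in a if k in b}


def add_difference(a, b, c, m):
    """Add-difference merge: A + m * (B - C), i.e. add what B learned on top of C to A."""
    return {k: a[k] + m * (b[k] - c[k]) for k in a if k in b and k in c}


# Toy "checkpoints" with a single weight tensor each
model_a = {"w": np.array([1.0, 2.0])}
model_b = {"w": np.array([3.0, 6.0])}
model_c = {"w": np.array([1.0, 2.0])}
print(weighted_sum(model_a, model_b, 0.2)["w"])
print(add_difference(model_a, model_b, model_c, 0.3)["w"])
```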

## Recommended Settings (Especially for Inizio Unico)

- Variational Autoencoder (VAE): [SD MSE 840k.vae.pt](https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt)

- Embedding: [bad_prompt_ver2](https://huggingface.co/datasets/Nerfgun3/bad_prompt/resolve/main/bad_prompt_version2.pt)

- Clip skip: 3

- Prompt: object-related tags first, quality-related tags after; [prompt list](https://docs.google.com/document/d/1X26U00Pxsqmyi47RjxDBMDLCcmyN23z-NEDBgd-vW-c/edit?usp=sharing)

- Resolution: 1024x576->1366x768 w/ HighRes. Fix

- HighRes. Fix: Latent or ESRGAN; upscale by 2

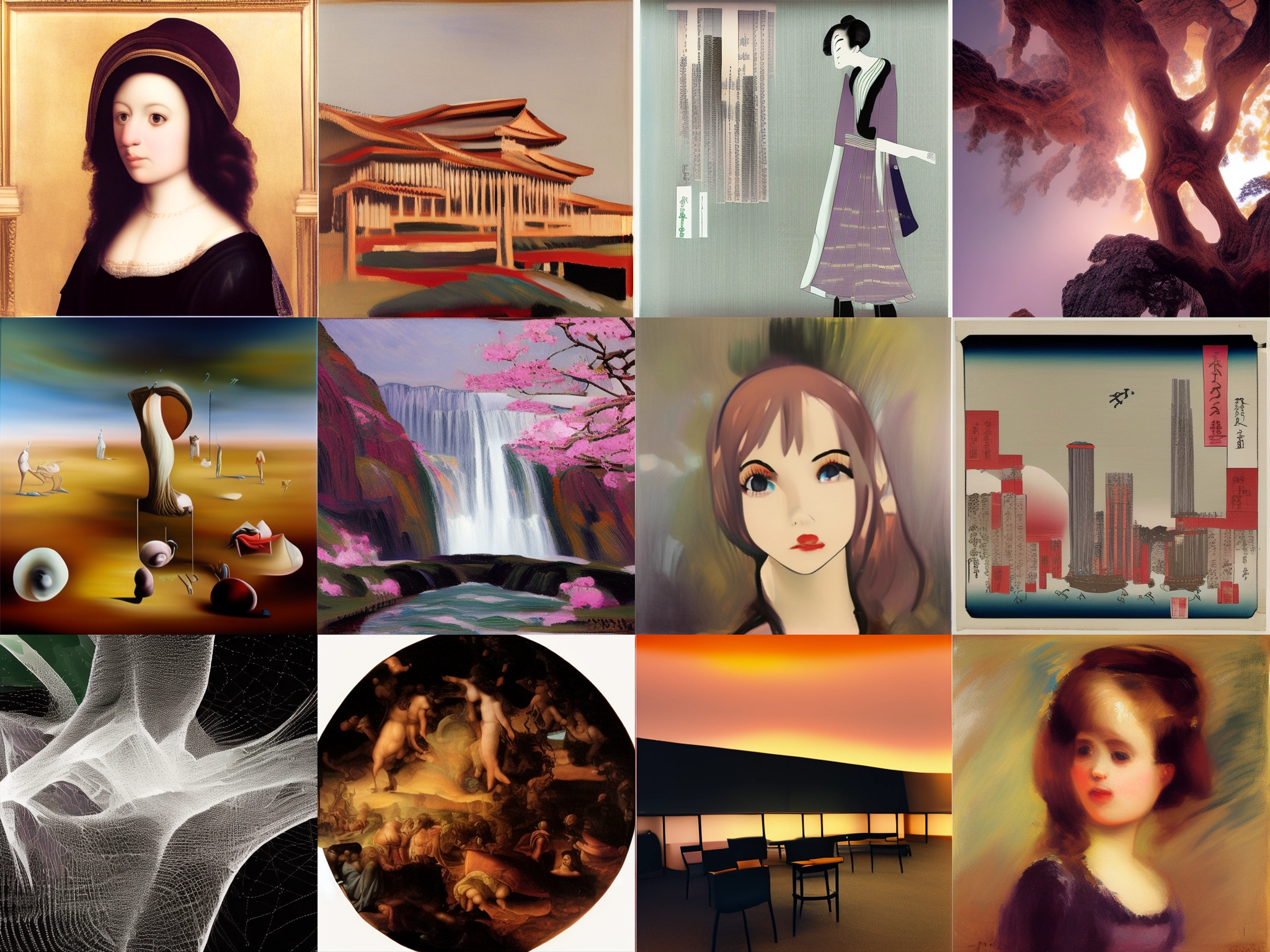

## Sample Images

> <img src=https://ac2-p.namu.la/20230106sac2/39fd2e16ccf9b0f0254fb97993a575783c8f58a0031c86c54e55ba116e9e21fb.png width=100% height=100%>
>
> ▲ X / Y Plot #1
>
> <img src=https://ac-p.namu.la/20230106sac/5ded2216805dcbadd5ad581497c881582e8234a2f0ca3200163e89d9d86d8443.png width=100% height=100%>
>
> ▲ X / Y Plot #2
>
> <img src=https://ac-p.namu.la/20230106sac/d9ac67ac785d8e70c759eac374a9328a5548a1f4f9ed1a4a15dd842eb0ae6f20.png width=100% height=100%>
>
> ▲ X / Y Plot #3
>
> <img src=https://ac-p.namu.la/20230106sac/fa3ff5f04a30c8ef68d4e937c1511d6806d3d6b0bb27e6bcc4dba056ad5d6b76.png width=30% height=30%>
>
> ▲ Inizio Skinjob
>
> <img src=https://ac-p.namu.la/20230106sac/17be3a43666816c43776455f31a04f47b01817632b53fb67341444d11318dfd3.png width=30% height=30%>
> <img src=https://ac-p.namu.la/20230108sac/ab8169d92f6c9a3bcc47a4cb3138c92bd41bfb794e0020ec3e601834ebd30252.png width=30% height=30%>
> <img src=https://ac-p.namu.la/20230108sac/2dbb20abadbed8734fb7b4a8fb1c77485e25de9bfdb671bb91e15d9853cbf972.png width=30% height=30%>
> <img src=https://ac-p.namu.la/20230108sac/269e9b1e7f0c9eb2db7c3b5100c1cce6fc75df5fe8c2f02499db55e4b18943f4.jpg width=100% height=100%>
>
> ▲ Inizio Unico
>

## License Information

This model follows Creative ML Open RAIL-M: [Stable Diffusion License](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

That said, you may use it however you like; I prefer not to impose such restrictions.

## Contact

*[email protected]* or [*Find Cinnamomo on AI Art Channel*](https://arca.live/b/aiart) |

Davlan/xlm-roberta-base-finetuned-english | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 384-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the appropriate pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the following parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 43680 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```
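`MultipleNegativesRankingLoss` treats every other positive in the batch as a negative for a given anchor. It scores all anchor/positive pairs with the similarity function (cosine here) and multiplies the scores by `scale` (20.0). It then applies cross-entropy with the matching pair as the target class. Below is a NumPy sketch of that computation for one batch of (anchor, positive) embeddings. It ignores the optional extra hard negatives the library also supports, and the function name is illustrative.

```python
import numpy as np


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """In-batch-negatives loss over cosine similarities (sketch, no hard negatives)."""
    # L2-normalise so that dot products are cosine similarities
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    scores = scale * (a @ p.T)  # (batch, batch); row i should peak at column i
    # Numerically stable log-softmax over each row
    scores = scores - scores.max(axis=1, keepdims=True)
    log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (the true pair) as the target
    return float(-np.mean(np.diag(log_probs)))


emb = np.eye(3)
print(multiple_negatives_ranking_loss(emb, emb))           # near 0: every anchor matches its positive
print(multiple_negatives_ranking_loss(emb, emb[[1, 2, 0]]))  # large: positives are mismatched
```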

Parameters of the fit()-Method:

```

{

"epochs": 2,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 8736,

"weight_decay": 0.01

}

```
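The warmup value lines up with the DataLoader length: 2 epochs × 43680 batches = 87360 training steps, and 10% of that is exactly 8736. This suggests that warmup was set to 10% of total steps, a common choice in the sentence-transformers example scripts, though the card does not say so. The arithmetic:

```python
import math

steps_per_epoch = 43680  # DataLoader length reported in the card ("steps_per_epoch": null uses it)
epochs = 2               # "epochs" from the fit() parameters
total_steps = steps_per_epoch * epochs

# Assumption: warmup was chosen as 10% of all training steps
warmup_steps = math.ceil(total_steps / 10)
print(total_steps, warmup_steps)  # 87360 8736
```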

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Davlan/xlm-roberta-base-finetuned-hausa | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 234 | null | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-Pixelcopter-Basic

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 16.20 +/- 20.08

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn how to use this model and train your own, check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5
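REINFORCE weights each action's log-probability by the discounted return observed from that time step, `G_t = r_t + gamma * G_{t+1}`. Below is a minimal sketch of that return computation. The card does not state the discount used for this agent, so `gamma=0.99` is only an illustrative default. The optional standardization is a common variance-reduction trick, not something the card confirms.

```python
import numpy as np


def discounted_returns(rewards, gamma=0.99, normalize=False):
    """Compute G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns = np.zeros(len(rewards), dtype=float)
    running = 0.0
    # Walk backwards so each return reuses the one computed after it
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    if normalize and len(returns) > 1:
        # Standardize to zero mean / unit variance to reduce gradient variance
        returns = (returns - returns.mean()) / (returns.std() + 1e-8)
    return returns


# The episode's policy-gradient loss would then be -sum(log_prob(a_t | s_t) * G_t).
print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
```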

|

Davlan/xlm-roberta-base-finetuned-kinyarwanda | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 61 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 284.82 +/- 13.27

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

import gym

from stable_baselines3 import PPO

from stable_baselines3.common.vec_env import DummyVecEnv

from stable_baselines3.common.env_util import make_vec_env

from huggingface_sb3 import package_to_hub

from huggingface_sb3 import load_from_hub

repo_id = "raghuvamsidhar/ppo-LunarLander-v2" # The repo_id

filename = "PPO-LunarLander-v2-RVD.zip" # The model filename.zip

# If the model was trained on Python 3.8+, it was pickled with protocol 5,
# but Python 3.6 and 3.7 only support protocol 4.
# For compatibility we need to:
# 1. Install pickle5 (we did this at the beginning of the Colab)
# 2. Create a custom_objects dict (overriding the saved schedules) to pass to PPO.load()

custom_objects = {

"learning_rate": 0.0,

"lr_schedule": lambda _: 0.0,

"clip_range": lambda _: 0.0,

}

checkpoint = load_from_hub(repo_id, filename)

model = PPO.load(checkpoint, custom_objects=custom_objects, print_system_info=True)

...

```

|

Davlan/xlm-roberta-base-finetuned-lingala | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | 2022-12-21T12:51:33Z | ---

language:

- hi

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small Hi - Sanchit Gandhi

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Hi - Sanchit Gandhi

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 450

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-finetuned-shona | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

language:

- or

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: Whisper Small Odia

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 or

type: mozilla-foundation/common_voice_11_0

config: or

split: test

args: or

metrics:

- name: Wer

type: wer

value: 26.600846262341328

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Odia

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Odia (`or`) subset of the mozilla-foundation/common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4786

- Wer: 26.6008

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0001 | 24.01 | 250 | 0.4786 | 26.6008 |

| 0.0 | 49.01 | 500 | 0.5252 | 26.9394 |

| 0.0 | 74.01 | 750 | 0.5534 | 27.1368 |

| 0.0 | 99.01 | 1000 | 0.5644 | 26.9958 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-finetuned-somali | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: creativeml-openrail-m

language:

- en

thumbnail: "https://static.miraheze.org/intercriaturaswiki/2/2c/Dussian_model.png"

tags:

- text-to-image

- stable-diffusion

- stable-diffusion-diffusers

#datasets:

#- Name/name

inference: true

widget:

- text: alien insects, insectoid, scarab, alien, humanoid, insects, with tails and wings, very strange creatures

- text: A dussian, insect

--- |

Davlan/xlm-roberta-base-finetuned-wolof | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | 2022-12-21T13:18:03Z | ---

model-index:

- name: Sociovestix/lenu_FI

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: lenu

type: Sociovestix/lenu

config: FI

split: test

revision: fbe0b4b5b8d6950c10f5710f2c987728635a4afe

metrics:

- type: f1

value: 0.9853882549401615

name: f1

- type: f1

value: 0.7040205972185964

name: f1 macro

args:

average: macro

widget:

- text: "BetterMe Finland Oy"

- text: "Kotkan Kuurojen Yhdistys ry"

- text: "Kunnallisalan kehittämissäätiö sr"

- text: "Eero Kurvinen Ky"

- text: "OP-Tavoite 2 -sijoitusrahasto"

- text: "Asunto Oy Mertamäki"

- text: "Uponor Oyj"

- text: "Keskinäinen Kiinteistö Oy Keijo Lounala"

- text: "Kuusamon kaupunki"

- text: "Ilmajoen Musiikkijuhlat ry"

- text: "Hanne ja Timo Similä avoin yhtiö"

- text: "Vehmersalmen Osuuspankki"

- text: "Juvan seurakunta"

- text: "Nesteen Henkilöstörahasto hr"

- text: "Suur-Seudun Osuuskauppa SSO"

- text: "Tmi Ida Lehmus"

- text: "Pohjois-Karjalan sosiaali- ja terveyspalvelujen kuntayhtymä"

- text: "Finlaysonin Forssan Tehtaitten Sairauskassa"

- text: "Suomen Punainen Risti Savo-Karjalan piiri"

- text: "L-Fashion Group Oy:n Eläkesäätiö"

- text: "Gummandouran Osakaskunta"

- text: "Suomen Keskinäinen Lääkevahinkovakuutusyhtiö"

- text: "Työttömyyskassojen Tukikassa"

- text: "Kuntien Takauskeskus"

- text: "LUT-yliopiston ylioppilaskunta"

- text: "Huoltovarmuuskeskus"

- text: "Pohjois-Karjalan kauppakamari ry"

- text: "Turun katolinen seurakunta (Pyhän Birgitan ja Autuaan Hemminginseurakunta)"

- text: "Närpes Sjukvårdsfond"

- text: "Kvevlax Sparbank"

- text: "Valion Eläkekassa"

- text: "Yhteismetsä Visa"

- text: "Maatalousyhtymä Niinivehmas & Tallila"

- text: "Suomen Vahinkovakuutus Oy"

- text: "Pyhän Marian katolinen seurakunta"

- text: "Kumpulainen Elma Sofia kuolinpesä"

- text: "Afarak Group SE"

- text: "Nordiska Miljöfinansieringsbolaget, Pohjoismaiden ympäristörahoitusyhtiö NEFCO, Nordic Environment Finance Corporation"

- text: "Österbottens Fiskeriförsäkringsförening"

- text: "Joensuun ortodoksinen seurakunta"

- text: "Scandia Mink Ab konkursbo"

---

# LENU - Legal Entity Name Understanding for Finland

A [Finnish BERT](https://huggingface.co/TurkuNLP/bert-base-finnish-cased-v1) model fine-tuned on Finnish legal entity names (jurisdiction FI) from the Global [Legal Entity Identifier](https://www.gleif.org/en/about-lei/introducing-the-legal-entity-identifier-lei) (LEI) System, with the goal of detecting [Entity Legal Form (ELF) Codes](https://www.gleif.org/en/about-lei/code-lists/iso-20275-entity-legal-forms-code-list).

---------------

<h1 align="center">

<a href="https://gleif.org">

<img src="http://sdglabs.ai/wp-content/uploads/2022/07/gleif-logo-new.png" width="220px" style="display: inherit">

</a>

</h1><br>

<h3 align="center">in collaboration with</h3>

<h1 align="center">

<a href="https://sociovestix.com">

<img src="https://sociovestix.com/img/svl_logo_centered.svg" width="700px" style="width: 100%">

</a>

</h1><br>

---------------

## Model Description


The model was created as part of a collaboration between the [Global Legal Entity Identifier Foundation](https://gleif.org) (GLEIF) and [Sociovestix Labs](https://sociovestix.com) to explore how machine learning can help detect the ELF Code based solely on an entity's legal name and legal jurisdiction.

See also the open-source Python library [lenu](https://github.com/Sociovestix/lenu), which supports this task.

The model was trained on the [lenu](https://huggingface.co/datasets/Sociovestix) dataset, focusing on Finnish legal entities and ELF Codes within the jurisdiction "FI".

- **Developed by:** [GLEIF](https://gleif.org) and [Sociovestix Labs](https://huggingface.co/Sociovestix)

- **License:** Creative Commons (CC0) license

- **Finetuned from model:** TurkuNLP/bert-base-finnish-cased-v1

- **Resources for more information:** [Press Release](https://www.gleif.org/en/newsroom/press-releases/machine-learning-new-open-source-tool-developed-by-gleif-and-sociovestix-labs-enables-organizations-everywhere-to-automatically-)

# Uses


An entity's legal form is a crucial component when verifying and screening organizational identity.

The wide variety of entity legal forms that exist within and between jurisdictions, however, has made it difficult for large organizations to capture legal form as structured data.

The jurisdiction-specific models of [lenu](https://github.com/Sociovestix/lenu) are trained on entities from GLEIF’s Legal Entity Identifier (LEI) database of over two million records. They will allow banks, investment firms, corporations, governments, and other large organizations to retrospectively analyze their master data, extract the legal form from the unstructured text of the legal name, and uniformly apply an ELF code to each entity type according to the ISO 20275 standard.
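The workflow described above can be sketched in Python: classify each legal name, then set aside uncertain results for the manual review advised under Recommendations. This is a minimal sketch. It assumes each prediction arrives as a `{"label": ..., "score": ...}` dict, which is the shape the `transformers` text-classification pipeline returns per input. The `0.9` threshold, the helper name `split_by_confidence`, and the `CODE_A`/`CODE_B` labels are hypothetical placeholders and are not part of lenu.

```python
from typing import Dict, List, Tuple

# (name, ELF code, score) triple for one legal entity
Row = Tuple[str, str, float]


def split_by_confidence(
    names: List[str],
    predictions: List[Dict[str, object]],
    threshold: float = 0.9,  # hypothetical cut-off; tune it on your own data
) -> Tuple[List[Row], List[Row]]:
    """Split predictions into accepted rows and rows that need manual review.

    Each prediction is assumed to be a dict like {"label": ..., "score": ...},
    the shape a transformers text-classification pipeline returns per input.
    """
    if len(names) != len(predictions):
        raise ValueError("names and predictions must have the same length")
    accepted: List[Row] = []
    review: List[Row] = []
    for name, pred in zip(names, predictions):
        row = (name, str(pred["label"]), float(pred["score"]))
        (accepted if row[2] >= threshold else review).append(row)
    return accepted, review


# Placeholder labels and scores, for illustration only (not real model output)
names = ["Uponor Oyj", "Yhteismetsä Visa"]
preds = [{"label": "CODE_A", "score": 0.98}, {"label": "CODE_B", "score": 0.41}]
accepted, review = split_by_confidence(names, preds)
print("accepted:", accepted)
print("manual review:", review)
```

Keeping the threshold as a parameter makes the trade-off between automation and review effort explicit. The threshold can then be tuned per jurisdiction.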

# Licensing Information

This model, which is trained on LEI data, is available under Creative Commons (CC0) license.

See [gleif.org/en/about/open-data](https://gleif.org/en/about/open-data).

# Recommendations

Users should always consider the score of the suggested ELF Codes. For low scores, it may be necessary to review the affected entities manually. |

Davlan/xlm-roberta-base-finetuned-xhosa | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null | ---

language:

- ru

tags:

- Transformers

- bert

pipeline_tag: fill-mask

thumbnail: https://github.com/sberbank-ai/model-zoo

license: apache-2.0

widget:

- text: Применение метода <mask> векторов для решения задач классификации

---

# ruSciBERT

The model was trained by the Sber AI team and the MLSA Lab of the Institute for AI, MSU.

If you use our model for your project, please tell us about it ([[email protected]]([email protected])).

[Presentation at the AI Journey 2022](https://ai-journey.ru/archive/?year=2022&video=https://vk.com/video_ext.phpq3u4e5st6io8nm7a0rkoid=-22522055a2n3did=456242496a2n3dhash=ae9efe06acf647fd)

* Task: `mask filling`

* Type: `encoder`

* Tokenizer: `bpe`

* Dict size: `50265`

* Num Parameters: `123 M`

* Training Data Volume: `6.5 GB` |

Davlan/xlm-roberta-base-finetuned-yoruba | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 29 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- wer

model-index:

- name: model_syllable_onSet2

results: []

---


# model_syllable_onSet2

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4231

- 0 Precision: 1.0

- 0 Recall: 0.96

- 0 F1-score: 0.9796

- 0 Support: 25

- 1 Precision: 0.9643

- 1 Recall: 0.9643

- 1 F1-score: 0.9643

- 1 Support: 28

- 2 Precision: 1.0

- 2 Recall: 0.9643

- 2 F1-score: 0.9818

- 2 Support: 28

- 3 Precision: 0.8889

- 3 Recall: 1.0

- 3 F1-score: 0.9412

- 3 Support: 16

- Accuracy: 0.9691

- Macro avg Precision: 0.9633

- Macro avg Recall: 0.9721

- Macro avg F1-score: 0.9667

- Macro avg Support: 97

- Weighted avg Precision: 0.9714

- Weighted avg Recall: 0.9691

- Weighted avg F1-score: 0.9695

- Weighted avg Support: 97

- Wer: 0.2827

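- Confusion matrix (the first row and first column hold the labels 0 to 3; rows are true labels, columns are predicted labels): [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]]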
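The per-class and averaged figures above can be re-derived from the final confusion matrix. In that matrix, the first row and first column are just the labels 0 to 3. The sketch assumes rows are true labels and columns are predicted labels, which the supports confirm: row 0 sums to 25 and row 3 sums to 16. The function name `report` is illustrative.

```python
# Final confusion matrix with the label row and label column removed.
# Rows are true labels 0-3, columns are predicted labels 0-3.
matrix = [
    [24, 1, 0, 0],
    [0, 27, 0, 1],
    [0, 0, 27, 1],
    [0, 0, 0, 16],
]


def report(m):
    """Return per-class precision/recall/F1 plus accuracy, macro F1 and weighted F1."""
    n = len(m)
    support = [sum(row) for row in m]                                 # true count per class
    predicted = [sum(m[r][c] for r in range(n)) for c in range(n)]   # predicted count per class
    total = sum(support)
    precision = [m[i][i] / predicted[i] if predicted[i] else 0.0 for i in range(n)]
    recall = [m[i][i] / support[i] if support[i] else 0.0 for i in range(n)]
    f1 = [2 * p * r / (p + r) if p + r else 0.0 for p, r in zip(precision, recall)]
    accuracy = sum(m[i][i] for i in range(n)) / total
    macro_f1 = sum(f1) / n                                            # unweighted mean over classes
    weighted_f1 = sum(f * s for f, s in zip(f1, support)) / total     # mean weighted by support
    return precision, recall, f1, accuracy, macro_f1, weighted_f1


precision, recall, f1, accuracy, macro_f1, weighted_f1 = report(matrix)
print([round(x, 4) for x in precision], [round(x, 4) for x in recall])
print(f"accuracy={accuracy:.4f} macro_f1={macro_f1:.4f} weighted_f1={weighted_f1:.4f}")
```

Macro averages treat the four classes equally. Weighted averages scale each class by its support, which is why they sit closer to the accuracy here.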

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 70

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | 0 Precision | 0 Recall | 0 F1-score | 0 Support | 1 Precision | 1 Recall | 1 F1-score | 1 Support | 2 Precision | 2 Recall | 2 F1-score | 2 Support | 3 Precision | 3 Recall | 3 F1-score | 3 Support | Accuracy | Macro avg Precision | Macro avg Recall | Macro avg F1-score | Macro avg Support | Weighted avg Precision | Weighted avg Recall | Weighted avg F1-score | Weighted avg Support | Wer | Confusion matrix |

|:-------------:|:-----:|:----:|:---------------:|:-----------:|:--------:|:----------:|:---------:|:-----------:|:--------:|:----------:|:---------:|:-----------:|:--------:|:----------:|:---------:|:-----------:|:--------:|:----------:|:---------:|:--------:|:-------------------:|:----------------:|:------------------:|:-----------------:|:----------------------:|:-------------------:|:---------------------:|:--------------------:|:------:|:--------------------------------------------------------------------------------------:|

| 1.3102 | 4.16 | 100 | 1.2133 | 0.125 | 0.04 | 0.0606 | 25 | 0.0 | 0.0 | 0.0 | 28 | 0.3146 | 1.0 | 0.4786 | 28 | 0.0 | 0.0 | 0.0 | 16 | 0.2990 | 0.1099 | 0.26 | 0.1348 | 97 | 0.1230 | 0.2990 | 0.1538 | 97 | 0.9676 | [[0, 1, 2, 3], [0, 1, 0, 24, 0], [1, 7, 0, 21, 0], [2, 0, 0, 28, 0], [3, 0, 0, 16, 0]] |

| 0.7368 | 8.33 | 200 | 0.7100 | 1.0 | 0.72 | 0.8372 | 25 | 0.3333 | 0.0357 | 0.0645 | 28 | 0.3684 | 1.0 | 0.5385 | 28 | 0.0 | 0.0 | 0.0 | 16 | 0.4845 | 0.4254 | 0.4389 | 0.3600 | 97 | 0.4603 | 0.4845 | 0.3898 | 97 | 0.8227 | [[0, 1, 2, 3], [0, 18, 2, 5, 0], [1, 0, 1, 27, 0], [2, 0, 0, 28, 0], [3, 0, 0, 16, 0]] |

| 0.3813 | 12.49 | 300 | 0.3802 | 0.8519 | 0.92 | 0.8846 | 25 | 0.7333 | 0.7857 | 0.7586 | 28 | 0.9231 | 0.8571 | 0.8889 | 28 | 0.9286 | 0.8125 | 0.8667 | 16 | 0.8454 | 0.8592 | 0.8438 | 0.8497 | 97 | 0.8509 | 0.8454 | 0.8465 | 97 | 0.7694 | [[0, 1, 2, 3], [0, 23, 2, 0, 0], [1, 4, 22, 2, 0], [2, 0, 3, 24, 1], [3, 0, 3, 0, 13]] |

| 0.2761 | 16.65 | 400 | 0.2263 | 1.0 | 1.0 | 1.0 | 25 | 1.0 | 0.9643 | 0.9818 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9794 | 0.9722 | 0.9821 | 0.9762 | 97 | 0.9817 | 0.9794 | 0.9798 | 97 | 0.4392 | [[0, 1, 2, 3], [0, 25, 0, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.1596 | 20.82 | 500 | 0.2283 | 1.0 | 0.96 | 0.9796 | 25 | 0.9310 | 0.9643 | 0.9474 | 28 | 0.9643 | 0.9643 | 0.9643 | 28 | 0.9375 | 0.9375 | 0.9375 | 16 | 0.9588 | 0.9582 | 0.9565 | 0.9572 | 97 | 0.9595 | 0.9588 | 0.9589 | 97 | 0.4971 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 1, 0], [2, 0, 0, 27, 1], [3, 0, 1, 0, 15]] |

| 0.124 | 24.98 | 600 | 0.1841 | 1.0 | 0.96 | 0.9796 | 25 | 0.9655 | 1.0 | 0.9825 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.9412 | 1.0 | 0.9697 | 16 | 0.9794 | 0.9767 | 0.9811 | 0.9784 | 97 | 0.9803 | 0.9794 | 0.9794 | 97 | 0.2955 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 28, 0, 0], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.1162 | 29.16 | 700 | 0.2286 | 1.0 | 0.96 | 0.9796 | 25 | 0.9333 | 1.0 | 0.9655 | 28 | 1.0 | 0.9286 | 0.9630 | 28 | 0.9412 | 1.0 | 0.9697 | 16 | 0.9691 | 0.9686 | 0.9721 | 0.9694 | 97 | 0.9711 | 0.9691 | 0.9691 | 97 | 0.3627 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 28, 0, 0], [2, 0, 1, 26, 1], [3, 0, 0, 0, 16]] |

| 0.1576 | 33.33 | 800 | 0.2259 | 1.0 | 0.92 | 0.9583 | 25 | 0.9333 | 1.0 | 0.9655 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.9412 | 1.0 | 0.9697 | 16 | 0.9691 | 0.9686 | 0.9711 | 0.9688 | 97 | 0.9711 | 0.9691 | 0.9691 | 97 | 0.3210 | [[0, 1, 2, 3], [0, 23, 2, 0, 0], [1, 0, 28, 0, 0], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.0957 | 37.49 | 900 | 0.2757 | 1.0 | 0.96 | 0.9796 | 25 | 0.9643 | 0.9643 | 0.9643 | 28 | 0.9643 | 0.9643 | 0.9643 | 28 | 0.9412 | 1.0 | 0.9697 | 16 | 0.9691 | 0.9674 | 0.9721 | 0.9695 | 97 | 0.9697 | 0.9691 | 0.9691 | 97 | 0.3499 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 1, 0], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.1145 | 41.65 | 1000 | 0.2951 | 1.0 | 0.96 | 0.9796 | 25 | 1.0 | 0.9643 | 0.9818 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8421 | 1.0 | 0.9143 | 16 | 0.9691 | 0.9605 | 0.9721 | 0.9644 | 97 | 0.9740 | 0.9691 | 0.9701 | 97 | 0.3024 | [[0, 1, 2, 3], [0, 24, 0, 0, 1], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.121 | 45.82 | 1100 | 0.3262 | 1.0 | 0.96 | 0.9796 | 25 | 1.0 | 0.9643 | 0.9818 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8421 | 1.0 | 0.9143 | 16 | 0.9691 | 0.9605 | 0.9721 | 0.9644 | 97 | 0.9740 | 0.9691 | 0.9701 | 97 | 0.2885 | [[0, 1, 2, 3], [0, 24, 0, 0, 1], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.079 | 49.98 | 1200 | 0.3615 | 1.0 | 0.96 | 0.9796 | 25 | 0.9643 | 0.9643 | 0.9643 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9691 | 0.9633 | 0.9721 | 0.9667 | 97 | 0.9714 | 0.9691 | 0.9695 | 97 | 0.3615 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.0733 | 54.16 | 1300 | 0.3891 | 1.0 | 0.96 | 0.9796 | 25 | 0.9643 | 0.9643 | 0.9643 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9691 | 0.9633 | 0.9721 | 0.9667 | 97 | 0.9714 | 0.9691 | 0.9695 | 97 | 0.3082 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.0962 | 58.33 | 1400 | 0.3620 | 1.0 | 0.96 | 0.9796 | 25 | 0.9643 | 0.9643 | 0.9643 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9691 | 0.9633 | 0.9721 | 0.9667 | 97 | 0.9714 | 0.9691 | 0.9695 | 97 | 0.2851 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.0628 | 62.49 | 1500 | 0.4084 | 1.0 | 0.96 | 0.9796 | 25 | 0.9630 | 0.9286 | 0.9455 | 28 | 0.9643 | 0.9643 | 0.9643 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9588 | 0.9540 | 0.9632 | 0.9576 | 97 | 0.9607 | 0.9588 | 0.9590 | 97 | 0.3001 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 26, 1, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

| 0.0675 | 66.65 | 1600 | 0.4231 | 1.0 | 0.96 | 0.9796 | 25 | 0.9643 | 0.9643 | 0.9643 | 28 | 1.0 | 0.9643 | 0.9818 | 28 | 0.8889 | 1.0 | 0.9412 | 16 | 0.9691 | 0.9633 | 0.9721 | 0.9667 | 97 | 0.9714 | 0.9691 | 0.9695 | 97 | 0.2827 | [[0, 1, 2, 3], [0, 24, 1, 0, 0], [1, 0, 27, 0, 1], [2, 0, 0, 27, 1], [3, 0, 0, 0, 16]] |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-finetuned-zulu | [

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- google/fleurs

metrics:

- wer

model-index:

- name: Whisper Small Slovenian

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: google/fleurs sl_si

type: google/fleurs

config: sl_si

split: test

args: sl_si

metrics:

- name: Wer

type: wer

value: 39.37632455343627

---


# Whisper Small Slovenian

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the google/fleurs sl_si dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8831

- Wer: 39.3763
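The WER above is reported as a percentage. It is the word-level edit distance (substitutions, deletions and insertions) between the transcription and the reference, divided by the number of reference words and multiplied by 100. The sketch below computes it with plain dynamic programming. It assumes whitespace tokenization and applies no text normalization, so it may not reproduce the figures above exactly; those depend on the evaluation script's own preprocessing. The function name and the Slovenian example sentence are illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER in percent using word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return 100.0 * prev[len(hyp)] / len(ref)


# One deleted word out of four reference words gives a WER of 25%
print(word_error_rate("danes je lep dan", "danes je dan"))
```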

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0054 | 22.01 | 250 | 0.8189 | 39.5761 |

| 0.0015 | 45.01 | 500 | 0.8831 | 39.3763 |

| 0.0009 | 68.0 | 750 | 0.9106 | 39.5035 |

| 0.0008 | 90.01 | 1000 | 0.9193 | 39.6549 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

|

Davlan/xlm-roberta-base-masakhaner | [

"pytorch",

"xlm-roberta",

"token-classification",

"arxiv:2103.11811",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"XLMRobertaForTokenClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: distilbert-targin-final

results: []

---


# distilbert-targin-final

This model is a fine-tuned version of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6307

- Accuracy: 0.6882

- Precision: 0.6443

- Recall: 0.6384

- F1: 0.6409

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| No log | 1.0 | 296 | 0.5882 | 0.6854 | 0.6355 | 0.6182 | 0.6226 |

| 0.5995 | 2.0 | 592 | 0.5693 | 0.7015 | 0.6590 | 0.6019 | 0.6030 |

| 0.5995 | 3.0 | 888 | 0.5823 | 0.6882 | 0.6440 | 0.6377 | 0.6403 |

| 0.5299 | 4.0 | 1184 | 0.5968 | 0.6949 | 0.6488 | 0.6340 | 0.6386 |

| 0.5299 | 5.0 | 1480 | 0.6236 | 0.6835 | 0.6430 | 0.6436 | 0.6433 |

| 0.4698 | 6.0 | 1776 | 0.6307 | 0.6882 | 0.6443 | 0.6384 | 0.6409 |

### Framework versions

- Transformers 4.24.0.dev0

- Pytorch 1.11.0+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Davlan/xlm-roberta-base-ner-hrl | [

"pytorch",

"xlm-roberta",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"XLMRobertaForTokenClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 760 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.52 +/- 2.72

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

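```python
import pickle

import gym
from huggingface_hub import hf_hub_download

# Same as the load_from_hub helper: download the pickled model dict, then unpickle it
with open(hf_hub_download(repo_id="emmashe15/q-Taxi-v3", filename="q-learning.pkl"), "rb") as f:
    model = pickle.load(f)

# Don't forget to check if you need to add additional attributes (is_slippery=False etc.)
env = gym.make(model["env_id"])
```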
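A tabular Q-learning agent like this one keeps a table of action values `Q[state, action]`. For Taxi-v3 that table has 500 states by 6 actions. The agent updates it with the rule Q(s, a) ← Q(s, a) + α·(r + γ·max over a' of Q(s', a') − Q(s, a)). Once trained, it acts greedily by picking the highest-valued action. The NumPy sketch below shows both steps on a toy table. It does not describe how this particular model was trained, and the learning rate, discount and helper names are illustrative. The loaded dict may store its table under a key such as `"qtable"`, as in the Hugging Face Deep RL course, but check `model.keys()` before relying on that.

```python
import numpy as np


def q_update(q, state, action, reward, next_state, lr=0.7, gamma=0.95):
    """Apply one tabular Q-learning update in place and return the new value."""
    td_target = reward + gamma * np.max(q[next_state])  # bootstrapped return estimate
    q[state, action] += lr * (td_target - q[state, action])
    return q[state, action]


def greedy_action(q, state):
    """Pick the action with the highest estimated value (ties go to the lowest index)."""
    return int(np.argmax(q[state]))


# Toy table: 3 states x 2 actions, all values start at zero
q = np.zeros((3, 2))
q_update(q, state=0, action=1, reward=1.0, next_state=2)
print(q[0])                 # action 1 in state 0 now has value 0.7
print(greedy_action(q, 0))  # 1
```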

|

Davlan/xlm-roberta-base-sadilar-ner | [

"pytorch",

"xlm-roberta",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"XLMRobertaForTokenClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Write your model_id: `1itai1/ppo-Huggy`

3. Select your `*.nn` or `*.onnx` file

4. Click on "Watch the agent play" 👀

|

Davlan/xlm-roberta-base-wikiann-ner | [

"pytorch",

"tf",

"xlm-roberta",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"XLMRobertaForTokenClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 235 | null | ---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: r123oy

---

### Roy Dreambooth model trained by duja1 with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v1-5 base model

You can run your new concept via `diffusers` with the [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

r123oy (use that in your prompt)

|

Dazai/Ok | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

## Hi! This is a model based on ruGPT3-small

The model is released under the MIT license!

# Example Python code

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

model_name_or_path = "path/to/model/folder"  # path to the folder containing the model

tokenizer = GPT2Tokenizer.from_pretrained(model_name_or_path)
model = GPT2LMHeadModel.from_pretrained(model_name_or_path).cuda()

# Example prompt ("Hi!"); the original snippet read the text from a chat message (message.content)
prompt = "Привет!"
input_ids = tokenizer.encode(prompt, return_tensors="pt").cuda()

# Sample a continuation; input_ids is already on the GPU
out = model.generate(input_ids, repetition_penalty=5.0, do_sample=True, top_k=5, top_p=0.95, temperature=1.0)

generated_text = list(map(tokenizer.decode, out))
print(generated_text[0])
```
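The sampling arguments in the snippet above narrow the choice of the next token. `top_k=5` keeps only the five most probable tokens. `top_p=0.95` then keeps the smallest prefix of those, ordered by descending probability, whose renormalized cumulative probability reaches 0.95. The following simplified NumPy sketch applies that filtering to a single probability vector for illustration. It is not the `transformers` implementation, which operates on logits and also handles batches, temperature and repetition penalties. The function name and the example distribution are hypothetical.

```python
import numpy as np


def top_k_top_p_filter(probs, top_k=5, top_p=0.95):
    """Zero out tokens outside the top-k / nucleus (top-p) set and renormalize."""
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(probs)[::-1]          # token ids, most probable first
    keep = order[:top_k]                     # top-k filtering
    kept = probs[keep] / probs[keep].sum()   # renormalize over the top-k tokens
    cumulative = np.cumsum(kept)
    # smallest prefix whose cumulative probability reaches top_p (always at least one token)
    cutoff = int(np.searchsorted(cumulative, top_p)) + 1
    keep = keep[:cutoff]
    filtered = np.zeros_like(probs)
    filtered[keep] = probs[keep]
    return filtered / filtered.sum()


rng = np.random.default_rng(0)
probs = [0.50, 0.20, 0.15, 0.05, 0.04, 0.03, 0.02, 0.01]
filtered = top_k_top_p_filter(probs)
next_token = rng.choice(len(filtered), p=filtered)  # sample from the filtered distribution
print(filtered.round(3), next_token)
```

With this example distribution, top-k keeps five tokens and top-p then trims the set to four.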

# Funny texts

There aren't any yet! But if you want to add a funny example, open a pull request.

|

Ddarkros/Test | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language:

- en

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

widget:

- text: "masterpiece, best quality, anime, 1girl, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, garden, looking at viewer"

example_title: "anime 1girl"

- text: "masterpiece, best quality, anime, 1boy, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, garden, looking at viewer"

example_title: "anime 1boy"

---

# This is only a test model, not recommended, please don't use it directly!

Fine-tuned from Stable Diffusion [v2-1_768-nonema-pruned.ckpt](https://huggingface.co/stabilityai/stable-diffusion-2-1/blob/main/v2-1_768-nonema-pruned.ckpt).

|

DeBERTa/deberta-v2-xxlarge | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language:

- el

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

- whisper-large

- mozilla-foundation/common_voice_11_0

- greek

datasets:

- mozilla-foundation/common_voice_11_0

- google/fleurs

metrics:

- wer

model-index:

- name: whisper-lg-el-intlv-xs-2

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 el

type: mozilla-foundation/common_voice_11_0

config: el

split: test

metrics:

- name: Wer

type: wer

value: 9.50037147102526

---

# whisper-lg-el-intlv-xs-2

This model is a fine-tuned version of [farsipal/whisper-lg-el-intlv-xs](https://huggingface.co/farsipal/whisper-lg-el-intlv-xs) on the interleaved mozilla-foundation/common_voice_11_0 (el) and google/fleurs (el_gr) datasets.

It achieves the following results on the evaluation set:

- Loss: 0.2872

- Wer: 9.5004

## Model description

The model was trained on two interleaved datasets for transcription in the Greek language.

## Intended uses & limitations

Transcription of Greek speech.

## Training and evaluation data

Training was performed on two interleaved datasets, using the train and validation splits of each. Evaluation was performed only on the Common Voice 11.0 (el) test split.

## Training procedure

```
--model_name_or_path 'farsipal/whisper-lg-el-intlv-xs' \
--model_revision main \
--do_train True \
--do_eval True \
--use_auth_token False \
--freeze_feature_encoder False \
--freeze_encoder False \
--model_index_name 'whisper-lg-el-intlv-xs-2' \
--dataset_name 'mozilla-foundation/common_voice_11_0,google/fleurs' \
--dataset_config_name 'el,el_gr' \
--train_split_name 'train+validation,train+validation' \
--eval_split_name 'test,-' \
--text_column_name 'sentence,transcription' \
--audio_column_name 'audio,audio' \
--streaming False \
--max_duration_in_seconds 30 \
--do_lower_case False \
--do_remove_punctuation False \
--do_normalize_eval True \
--language greek \
--task transcribe \
--shuffle_buffer_size 500 \
--output_dir './data/finetuningRuns/whisper-lg-el-intlv-xs-2' \
--overwrite_output_dir True \
--per_device_train_batch_size 8 \
--gradient_accumulation_steps 4 \
--learning_rate 3.5e-6 \
--dropout 0.15 \
--attention_dropout 0.05 \
--warmup_steps 500 \
--max_steps 5000 \
--eval_steps 1000 \
--gradient_checkpointing True \
--cache_dir '~/.cache' \
--fp16 True \
--evaluation_strategy steps \
--per_device_eval_batch_size 8 \
--predict_with_generate True \
--generation_max_length 225 \
--save_steps 1000 \
--logging_steps 25 \
--report_to tensorboard \
--load_best_model_at_end True \
--metric_for_best_model wer \
--greater_is_better False \
--push_to_hub False \
--dataloader_num_workers 6
```
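Several of the flags above pack one value per dataset into a comma-separated string. For example, `--text_column_name 'sentence,transcription'` pairs `sentence` with Common Voice and `transcription` with FLEURS. The sketch below shows how these values line up position by position. The helper `parse_dataset_specs` and the `DatasetSpec` class are hypothetical. Reading `-` in `--eval_split_name 'test,-'` as "no evaluation split for this dataset" is an assumption, although it is consistent with evaluation on the Common Voice test split only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DatasetSpec:
    name: str
    config: str
    train_split: str
    eval_split: Optional[str]  # None when the flag value is "-" (assumed: no eval split)
    text_column: str
    audio_column: str


def parse_dataset_specs(names: str, configs: str, train_splits: str,
                        eval_splits: str, text_cols: str, audio_cols: str) -> List[DatasetSpec]:
    """Split each comma-separated flag and zip the values into one spec per dataset."""
    fields = [s.split(",") for s in (names, configs, train_splits, eval_splits, text_cols, audio_cols)]
    n = len(fields[0])
    if any(len(f) != n for f in fields):
        raise ValueError("every comma-separated flag needs exactly one entry per dataset")
    return [
        DatasetSpec(name, cfg, train, None if ev == "-" else ev, text, audio)
        for name, cfg, train, ev, text, audio in zip(*fields)
    ]


# Values copied from the command-line arguments above
specs = parse_dataset_specs(
    "mozilla-foundation/common_voice_11_0,google/fleurs",
    "el,el_gr",
    "train+validation,train+validation",
    "test,-",
    "sentence,transcription",
    "audio,audio",
)
for spec in specs:
    print(spec)
```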

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3.5e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
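The hyperparameters above describe a linear schedule with warmup. The learning rate rises from 0 to the peak of 3.5e-06 over the first 500 steps and then decays linearly to 0 at step 5000. This matches the shape of the `get_linear_schedule_with_warmup` schedule in `transformers`. The total train batch size is the per-device batch size times the gradient accumulation steps times the number of devices. Since 8 × 4 = 32, a single device is implied. The sketch below reproduces both calculations. The function names are illustrative and are not part of any library.

```python
def linear_warmup_lr(step: int, peak_lr: float = 3.5e-6,
                     warmup_steps: int = 500, total_steps: int = 5000) -> float:
    """Learning rate at a given optimizer step under linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 to peak_lr
        return peak_lr * step / max(1, warmup_steps)
    # Decay: fall linearly from peak_lr to 0 at total_steps, never below 0
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


def effective_batch_size(per_device: int = 8, grad_accum: int = 4, num_devices: int = 1) -> int:
    """Examples contributing to each optimizer update."""
    return per_device * grad_accum * num_devices


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_warmup_lr(step))
print(effective_batch_size())
```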

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| 0.0813 | 2.49 | 1000 | 0.2147 | 10.8284 |
| 0.0379 | 4.98 | 2000 | 0.2439 | 10.0111 |
| 0.0195 | 7.46 | 3000 | 0.2767 | 9.8811 |
| 0.0126 | 9.95 | 4000 | 0.2872 | 9.5004 |
| 0.0103 | 12.44 | 5000 | 0.3021 | 9.6954 |
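The run uses `--load_best_model_at_end True`, `--metric_for_best_model wer` and `--greater_is_better False`, so the kept checkpoint is the one with the lowest evaluation WER. That is step 4000, not the final step 5000. Selecting by validation loss would instead pick step 1000, because loss rises after that point while WER keeps improving until step 4000. The sketch below applies that selection rule to the rows of the table. It illustrates the rule only and is not the Trainer's own code. The name `best_checkpoint` is hypothetical.

```python
from typing import List, Tuple

# (step, validation_loss, wer) rows copied from the training results table
Row = Tuple[int, float, float]
RESULTS: List[Row] = [
    (1000, 0.2147, 10.8284),
    (2000, 0.2439, 10.0111),
    (3000, 0.2767, 9.8811),
    (4000, 0.2872, 9.5004),
    (5000, 0.3021, 9.6954),
]


def best_checkpoint(results: List[Row], metric_index: int = 2, greater_is_better: bool = False) -> Row:
    """Return the row with the best value in column metric_index."""
    pick = max if greater_is_better else min
    return pick(results, key=lambda row: row[metric_index])


print(best_checkpoint(RESULTS))                  # (4000, 0.2872, 9.5004)
print(best_checkpoint(RESULTS, metric_index=1))  # (1000, 0.2147, 10.8284)
```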

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

|

DeadBeast/korscm-mBERT | [

"pytorch",

"bert",

"text-classification",

"korean",

"dataset:Korean-Sarcasm",

"transformers",

"license:apache-2.0"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 43 | null | ---

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- google/fleurs

metrics:

- wer

model-index:

- name: Whisper Small Maori

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: google/fleurs mi_nz

type: google/fleurs

config: mi_nz

split: test

args: mi_nz

metrics:

- name: Wer

type: wer

value: 30.481593707691317

---


# Whisper Small Maori

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the google/fleurs mi_nz dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7756

- Wer: 30.4816

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42