modelId (string, length 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, length 51 to 438k) |

|---|---|---|---|---|---|---|

Declan/Politico_model_v3 | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | 2022-12-17T10:05:27Z | ---

language:

- en

tags:

- StableDiffusion

- Warhammer

- wh40k

license: apache-2.0

library_name: diffusers

pipeline_tag: text-to-image

---

StableDiffusion model trained on Sororitas Sisters of Battle dataset

Use token whsororitas for Sororitas

Use token whinsignia for Insignia-themed items

- Samples

|

DicoTiar/wisdomfiy | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="Unterwexi/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
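The `mean_reward` value in the metadata above is a mean and standard deviation over evaluation episodes. The following is a minimal sketch of such an evaluation, assuming the loaded model exposes a Q-table indexed as `qtable[state][action]`. `CorridorEnv` is a hypothetical stand-in for the Gym environment so that the example runs without extra dependencies, and `greedy_action` and `evaluate` are illustrative names, not part of any library.

```python
import statistics


class CorridorEnv:
    """Hypothetical stand-in for a Gym environment: a 1-D corridor with the goal at the right end."""

    def __init__(self, length=4):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; moves are clipped to the corridor.
        if action == 1:
            self.state = min(self.state + 1, self.length - 1)
        else:
            self.state = max(self.state - 1, 0)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def greedy_action(qtable, state):
    # Pick the action with the highest Q-value in this state.
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


def evaluate(env, qtable, n_episodes=10, max_steps=20):
    # Run greedy episodes and return (mean, population std) of the episode returns.
    totals = []
    for _ in range(n_episodes):
        state = env.reset()
        total = 0.0
        for _ in range(max_steps):
            state, reward, done = env.step(greedy_action(qtable, state))
            total += reward
            if done:
                break
        totals.append(total)
    return statistics.mean(totals), statistics.pstdev(totals)


# Assumed Q-table: "move right" is preferred in every non-terminal state.
qtable = [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0], [0.0, 0.0]]
mean_reward, std_reward = evaluate(CorridorEnv(), qtable)
print(f"{mean_reward:.2f} +/- {std_reward:.2f}")
```

With a deterministic environment and a greedy policy every episode yields the same return, which is why a standard deviation of 0.00 is expected for the non-slippery FrozenLake variant.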

|

DiegoBalam12/institute_classification | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: TaxiV2

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="Unterwexi/TaxiV2", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

Dkwkk/Da | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="kfahn/Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

Doohae/q_encoder | [

"pytorch"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

license: afl-3.0

language:

- it

---

<img src="https://huggingface.co/dlicari/lsg16k-Italian-Legal-BERT/resolve/main/ITALIAN_LEGAL_BERT-LSG.jpg" width="600"/>

# LSG16K-Italian-LEGAL-BERT

A [Local-Sparse-Global](https://arxiv.org/abs/2210.15497) (LSG) version of [ITALIAN-LEGAL-BERT-SC](https://huggingface.co/dlicari/Italian-Legal-BERT-SC), obtained by replacing the full attention in the encoder with LSG attention using the LSG converter script (https://github.com/ccdv-ai/convert\_checkpoint\_to\_lsg). The LSG attention is configured with a maximum sequence length of 16,384, 7 global tokens, a local block size of 128, a sparse block size of 128, a sparsity factor of 2, and the 'norm' sparse selection pattern (which selects the highest-norm tokens). |

Doohae/roberta | [

"pytorch",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"RobertaForQuestionAnswering"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: kontogiorgos/testpyramidsrnd

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

DoyyingFace/bert-asian-hate-tweets-asian-unclean-warmup-100 | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 28 | null | ---

language:

- th

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: Whisper Small Thai

results:

- task:

type: automatic-speech-recognition

name: Automatic Speech Recognition

dataset:

name: mozilla-foundation/common_voice_11_0 th

type: mozilla-foundation/common_voice_11_0

config: th

split: test

args: th

metrics:

- type: wer

value: 14.060702592690913

name: Wer

- type: mer

value: 13.786820528393562

name: Mer

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Thai

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the mozilla-foundation/common_voice_11_0 th,None,th_th dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1841

- Wer: 14.060
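The `Wer` figure is a word error rate, reported here as a percentage: the number of word substitutions, deletions, and insertions separating the model output from the reference transcript, divided by the number of reference words. The sketch below computes it in plain Python under the assumption of whitespace tokenization. Thai is not whitespace-delimited, so a real evaluation would need a word segmenter, and the card does not say which tooling was used. `word_error_rate` is an illustrative name.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one missing word ("mat") over 5 reference words.
wer = word_error_rate("the cat sat on mat", "the cat sit on")
print(f"{100 * wer:.1f}")
```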

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
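To make the schedule concrete, here is a minimal sketch of a linear scheduler with warmup, using the values listed above. It assumes the common convention of a linear ramp from 0 to the peak learning rate over the warmup steps, followed by a linear decay to 0 at the final training step. `linear_warmup_lr` is an illustrative helper, not the Trainer's own code.

```python
def linear_warmup_lr(step, peak_lr=1e-4, warmup_steps=500, total_steps=5000):
    """Learning rate at a given optimizer step under linear warmup and linear decay."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    # Decay phase: fall linearly from peak_lr to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_warmup_lr(step))
```

Under these assumptions the rate peaks at step 500 and is back to half of its peak at step 2750, the midpoint of the decay phase.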

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0909 | 0.2 | 1000 | 0.3373 | 25.5752 |

| 0.0426 | 1.1 | 2000 | 0.2540 | 20.9739 |

| 0.0267 | 2.0 | 3000 | 0.2210 | 17.4080 |

| 0.0145 | 2.2 | 4000 | 0.2134 | 15.5675 |

| 0.0099 | 3.1 | 5000 | 0.1841 | 13.2285 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

DoyyingFace/bert-asian-hate-tweets-asonam-unclean | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | null | ---

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- google/fleurs

metrics:

- wer

model-index:

- name: Whisper Small Chinese Base

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: google/fleurs cmn_hans_cn

type: google/fleurs

config: cmn_hans_cn

split: test

args: cmn_hans_cn

metrics:

- name: Wer

type: wer

value: 16.643891773708663

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Chinese Base

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the google/fleurs cmn_hans_cn dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3573

- Wer: 16.6439

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0005 | 76.0 | 1000 | 0.3573 | 16.6439 |

| 0.0002 | 153.0 | 2000 | 0.3897 | 16.9749 |

| 0.0001 | 230.0 | 3000 | 0.4125 | 17.2330 |

| 0.0001 | 307.0 | 4000 | 0.4256 | 17.2451 |

| 0.0001 | 384.0 | 5000 | 0.4330 | 17.2300 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

DoyyingFace/bert-asian-hate-tweets-concat-clean-with-unclean-valid | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

tags:

- generated_from_keras_callback

model-index:

- name: Farras/mT5_multilingual_XLSum-kompas

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Farras/mT5_multilingual_XLSum-kompas

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.2318

- Validation Loss: 3.9491

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5.6e-05, 'decay_steps': 920, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
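The optimizer entry above describes a polynomial learning-rate decay with `power` 1.0, which is a straight line from the initial rate to the end rate over `decay_steps`. A minimal sketch of that schedule follows, assuming the usual non-cycling definition in which the step is clipped at `decay_steps`. `polynomial_decay_lr` is an illustrative name, not the Keras class itself.

```python
def polynomial_decay_lr(step, initial_lr=5.6e-05, end_lr=0.0, decay_steps=920, power=1.0):
    """Learning rate at a given step for a non-cycling polynomial decay."""
    # With cycle=False the schedule stays at end_lr once decay_steps is reached.
    step = min(step, decay_steps)
    fraction = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr


for step in (0, 460, 920, 2000):
    print(step, polynomial_decay_lr(step))
```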

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.6000 | 4.0879 | 0 |

| 4.2318 | 3.9491 | 1 |

### Framework versions

- Transformers 4.25.1

- TensorFlow 2.10.0

- Datasets 2.7.1

- Tokenizers 0.13.2

|

albert-large-v2 | [

"pytorch",

"tf",

"safetensors",

"albert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1909.11942",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"AlbertForMaskedLM"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 26,792 | 2022-12-17T17:15:22Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="RajMoodley/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

albert-xlarge-v2 | [

"pytorch",

"tf",

"albert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1909.11942",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"AlbertForMaskedLM"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,973 | 2022-12-17T17:22:08Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="RajMoodley/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

albert-xxlarge-v2 | [

"pytorch",

"tf",

"safetensors",

"albert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1909.11942",

"transformers",

"exbert",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"AlbertForMaskedLM"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 42,640 | 2022-12-17T17:23:59Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: sdcid

---

### training params

```json

```

|

bert-base-cased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"exbert",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8,621,271 | 2022-12-17T17:27:29Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### novasessaodidicowe Dreambooth model trained by Murdokai with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

|

bert-base-german-cased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"de",

"transformers",

"exbert",

"license:mit",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 175,983 | null | ---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- NoAI

- AntiAI

---

Stable Diffusion 1.4 finetuned with a lot of NoAI/AntiAI images plus AI generated **creative** logos. Have fun 🤗

## Sample image

NoAI-Diffusion-variety v1.0


## Diffusers

```py

from diffusers import StableDiffusionPipeline

import torch

model_id = "Kokohachi/NoAI-Diffusion"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16, revision="fp16")

pipe = pipe.to("cuda")

prompt = "sks icon, antiai logo"

image = pipe(prompt).images[0]

image.save("noai.png")

```

For more detailed instructions, use cases, and examples in JAX, follow the instructions [here](https://github.com/huggingface/diffusers#text-to-image-generation-with-stable-diffusion)

|

bert-base-german-dbmdz-cased | [

"pytorch",

"jax",

"bert",

"fill-mask",

"de",

"transformers",

"license:mit",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1,814 | 2022-12-17T17:32:47Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: vit-vit-base-patch16-224-in21k-eurosat

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.988641975308642

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-vit-base-patch16-224-in21k-eurosat

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0957

- Accuracy: 0.9886

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5
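The `total_train_batch_size` above follows from the per-step batch size and the gradient accumulation setting. A small sketch of that arithmetic, assuming a single device (the card does not state the device count); `effective_batch_size` is an illustrative helper.

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


total = effective_batch_size(32, 4)
print(total)  # 128, matching total_train_batch_size

# The results table logs about 147 optimizer steps per epoch, so one epoch
# covers roughly 147 * 128 training examples.
approx_examples_per_epoch = 147 * total
print(approx_examples_per_epoch)
```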

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.3303 | 0.99 | 147 | 0.2950 | 0.9790 |

| 0.1632 | 1.99 | 294 | 0.1593 | 0.9842 |

| 0.1097 | 2.99 | 441 | 0.1223 | 0.9859 |

| 0.0868 | 3.99 | 588 | 0.1053 | 0.9877 |

| 0.0651 | 4.99 | 735 | 0.0957 | 0.9886 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

bert-base-german-dbmdz-uncased | [

"pytorch",

"jax",

"safetensors",

"bert",

"fill-mask",

"de",

"transformers",

"license:mit",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 68,305 | 2022-12-17T17:38:00Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PP0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -225.45 +/- 28.06

name: mean_reward

verified: false

---

# **PP0** Agent playing **LunarLander-v2**

This is a trained model of a **PP0** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

bert-base-multilingual-cased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"multilingual",

"af",

"sq",

"ar",

"an",

"hy",

"ast",

"az",

"ba",

"eu",

"bar",

"be",

"bn",

"inc",

"bs",

"br",

"bg",

"my",

"ca",

"ceb",

"ce",

"zh",

"cv",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fi",

"fr",

"gl",

"ka",

"de",

"el",

"gu",

"ht",

"he",

"hi",

"hu",

"is",

"io",

"id",

"ga",

"it",

"ja",

"jv",

"kn",

"kk",

"ky",

"ko",

"la",

"lv",

"lt",

"roa",

"nds",

"lm",

"mk",

"mg",

"ms",

"ml",

"mr",

"mn",

"min",

"ne",

"new",

"nb",

"nn",

"oc",

"fa",

"pms",

"pl",

"pt",

"pa",

"ro",

"ru",

"sco",

"sr",

"scn",

"sk",

"sl",

"aze",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"th",

"ta",

"tt",

"te",

"tr",

"uk",

"ud",

"uz",

"vi",

"vo",

"war",

"cy",

"fry",

"pnb",

"yo",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4,749,504 | 2022-12-17T17:38:20Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

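import gym

# `load_from_hub` is not defined in this card; it is assumed to be a helper that downloads the file and unpickles it into a dict.

model = load_from_hub(repo_id="jackson-lucas/q-Taxi-v3", filename="q-learning.pkl")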

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
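
The snippet above relies on a `load_from_hub` helper that this card does not define. Below is a minimal sketch of the local half of such a helper. It assumes the downloaded file is a pickled dict holding an `env_id` string and a per-state list of action values. The key name `qtable` and the function names `load_pickled_model` and `greedy_action` are assumptions made for illustration, not confirmed by this card.

```python
import os
import pickle
import tempfile


def load_pickled_model(path):
    """Unpickle a model dict from a local file (only do this for files you trust)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def greedy_action(qtable, state):
    """Return the index of the highest-valued action for `state`."""
    row = qtable[state]
    return max(range(len(row)), key=lambda a: row[a])


# Round-trip a toy model to show the assumed shape of the data.
toy_model = {"env_id": "Taxi-v3", "qtable": [[0.0, 1.5, -2.0], [3.0, 0.1, 0.2]]}
with tempfile.TemporaryDirectory() as tmp:
    toy_path = os.path.join(tmp, "q-learning.pkl")
    with open(toy_path, "wb") as f:
        pickle.dump(toy_model, f)
    loaded = load_pickled_model(toy_path)

print(loaded["env_id"], greedy_action(loaded["qtable"], 0))
```

In a real setup the file path would come from a Hub download step rather than a temporary directory.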

|

bert-base-multilingual-uncased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"multilingual",

"af",

"sq",

"ar",

"an",

"hy",

"ast",

"az",

"ba",

"eu",

"bar",

"be",

"bn",

"inc",

"bs",

"br",

"bg",

"my",

"ca",

"ceb",

"ce",

"zh",

"cv",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fi",

"fr",

"gl",

"ka",

"de",

"el",

"gu",

"ht",

"he",

"hi",

"hu",

"is",

"io",

"id",

"ga",

"it",

"ja",

"jv",

"kn",

"kk",

"ky",

"ko",

"la",

"lv",

"lt",

"roa",

"nds",

"lm",

"mk",

"mg",

"ms",

"ml",

"mr",

"min",

"ne",

"new",

"nb",

"nn",

"oc",

"fa",

"pms",

"pl",

"pt",

"pa",

"ro",

"ru",

"sco",

"sr",

"scn",

"sk",

"sl",

"aze",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"ta",

"tt",

"te",

"tr",

"uk",

"ud",

"uz",

"vi",

"vo",

"war",

"cy",

"fry",

"pnb",

"yo",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 328,585 | null | Access to model sd-concepts-library/roberto-amaro-ocana is restricted and you are not in the authorized list. Visit https://huggingface.co/sd-concepts-library/roberto-amaro-ocana to ask for access. |

bert-large-cased-whole-word-masking-finetuned-squad | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"bert",

"question-answering",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8,214 | 2022-12-17T17:48:36Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice_11_0

metrics:

- wer

model-index:

- name: openai/whisper-small

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: common_voice_11_0

type: common_voice_11_0

config: ar

split: test

args: ar

metrics:

- name: Wer

type: wer

value: 55.249333333333325

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-small

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5848

- Wer: 55.2493
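
The Wer figure above is a word error rate. As a rough illustration of what that number measures, here is a minimal sketch of the usual definition: word-level edit distance divided by the number of reference words. The function name `word_error_rate` is hypothetical, and this is not the evaluation code used for this model.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words seen so far
    # (all but the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Multiply by 100 to express the rate as a percentage, as in the value above.
wer_percent = 100 * word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"WER: {wer_percent:.2f}%")
```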

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
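
A `linear` scheduler with warmup conventionally ramps the learning rate from zero to its peak over the warmup steps and then decays it linearly to zero by the final training step. The sketch below assumes that conventional shape and plugs in the values listed above. The function name `linear_schedule_lr` is hypothetical.

```python
def linear_schedule_lr(step, peak_lr=1e-5, warmup_steps=500, total_steps=5000):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 2750, 5000):
    print(step, linear_schedule_lr(step))
```

Under these assumptions the rate peaks at step 500 and is back to half the peak by step 2750.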

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0216 | 6.01 | 2000 | 0.4766 | 55.8587 |

| 0.0014 | 12.02 | 4000 | 0.5848 | 55.2493 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

bert-large-cased-whole-word-masking | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,316 | 2022-12-17T17:50:05Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

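import gym

# `load_from_hub` is not defined in this card; it is assumed to be a helper that downloads the file and unpickles it into a dict.

model = load_from_hub(repo_id="sheldon-spock/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")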

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
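
The `mean_reward` metric in this card's metadata has the form mean +/- standard deviation over evaluation episodes. The sketch below shows one way such a figure can be produced with a greedy policy. It assumes a Q-table indexed as `[state][action]`. `ToyCorridor` is a hypothetical stand-in for a real Gym environment with simplified `reset`/`step` signatures, and `evaluate_greedy` is likewise an illustrative name.

```python
import statistics


class ToyCorridor:
    """Hypothetical 3-cell corridor: start at cell 0, reward 1.0 on reaching cell 2."""

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 1 moves right, action 0 moves left (clamped at the left wall).
        self.pos = min(2, self.pos + 1) if action == 1 else max(0, self.pos - 1)
        done = self.pos == 2
        return self.pos, (1.0 if done else 0.0), done


def evaluate_greedy(env, qtable, n_episodes=10, max_steps=20):
    """Run greedy episodes and return (mean, population std) of episode rewards."""
    totals = []
    for _ in range(n_episodes):
        state = env.reset()
        total = 0.0
        for _ in range(max_steps):
            row = qtable[state]
            action = max(range(len(row)), key=lambda a: row[a])
            state, reward, done = env.step(action)
            total += reward
            if done:
                break
        totals.append(total)
    return statistics.mean(totals), statistics.pstdev(totals)


qtable = [[0.0, 1.0], [0.0, 1.0], [0.0, 0.0]]
mean_reward, std_reward = evaluate_greedy(ToyCorridor(), qtable)
print(f"mean_reward: {mean_reward:.2f} +/- {std_reward:.2f}")
```

Because both the toy environment and the greedy policy are deterministic, every episode earns the same reward and the standard deviation is zero, which is the same pattern a non-slippery FrozenLake evaluation would show.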

|

bert-large-cased | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 388,769 | null | ---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: sdcid

---

### a797ea21-1729-49ee-b2c9-1b0bc3d641f7 Dreambooth model trained by tzvc with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v1-5 base model

You can run your new concept via the `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

sdcid (use that in your prompt)

|

bert-large-uncased-whole-word-masking-finetuned-squad | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"question-answering",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 480,510 | 2022-12-17T17:53:38Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.54 +/- 2.73

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

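import gym

# `load_from_hub` is not defined in this card; it is assumed to be a helper that downloads the file and unpickles it into a dict.

model = load_from_hub(repo_id="sheldon-spock/q-Taxi-v3", filename="q-learning.pkl")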

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

bert-large-uncased-whole-word-masking | [

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 76,685 | 2022-12-17T17:54:46Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: J4F4N4F/Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

distilbert-base-cased-distilled-squad | [

"pytorch",

"tf",

"rust",

"safetensors",

"openvino",

"distilbert",

"question-answering",

"en",

"dataset:squad",

"arxiv:1910.01108",

"arxiv:1910.09700",

"transformers",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"DistilBertForQuestionAnswering"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 257,745 | 2022-12-17T18:01:29Z | ---

license: mit

---

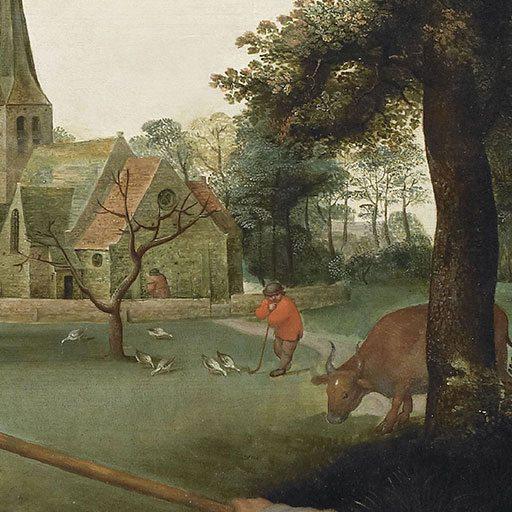

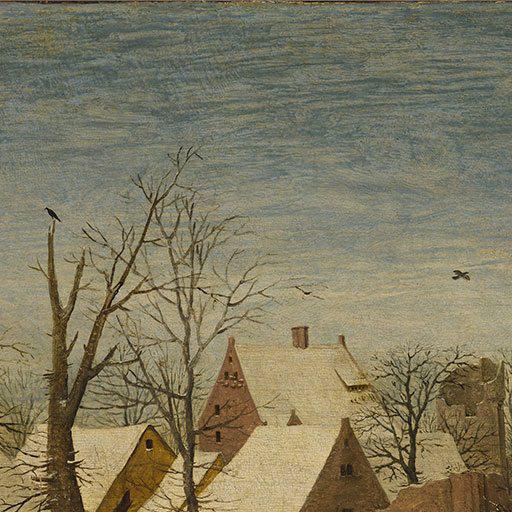

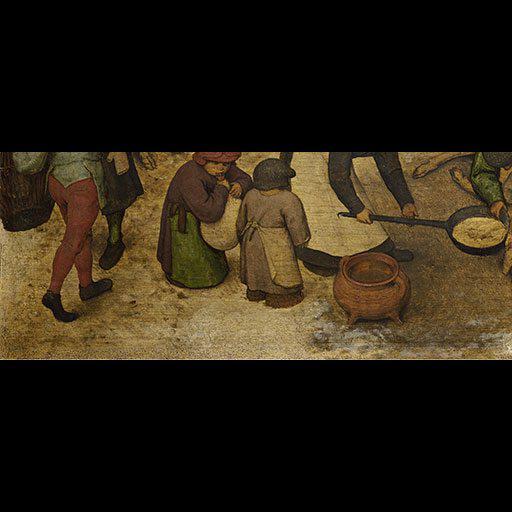

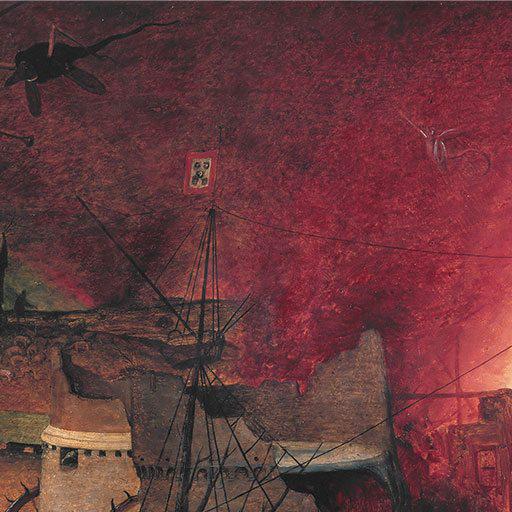

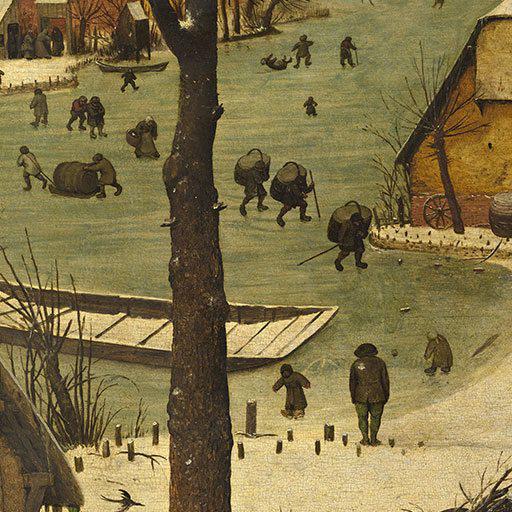

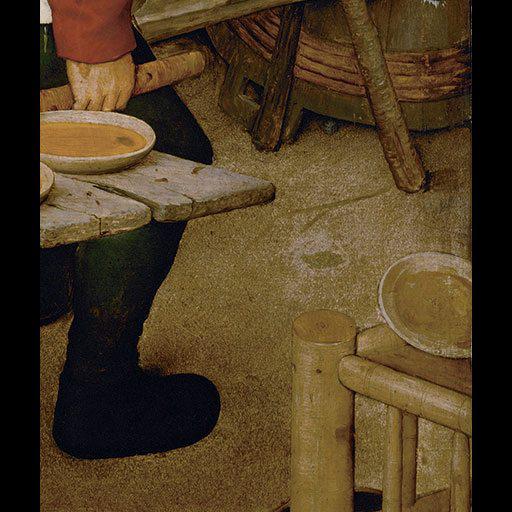

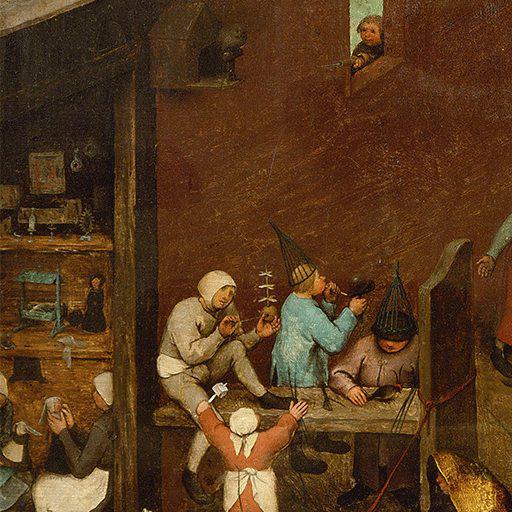

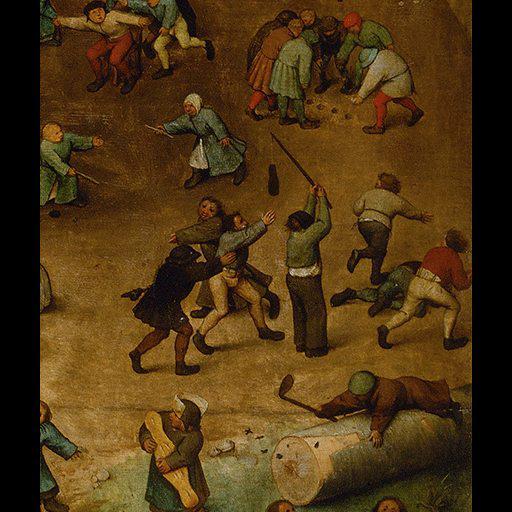

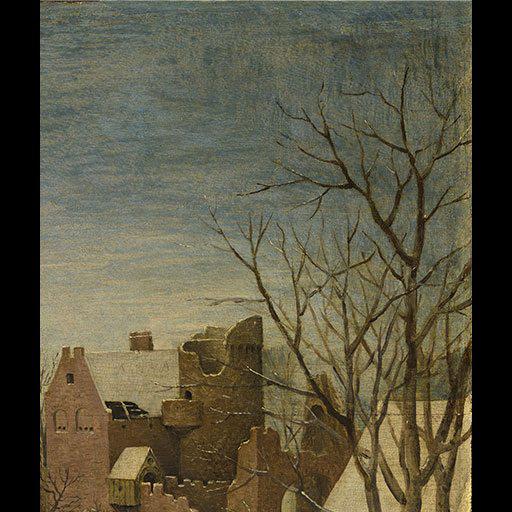

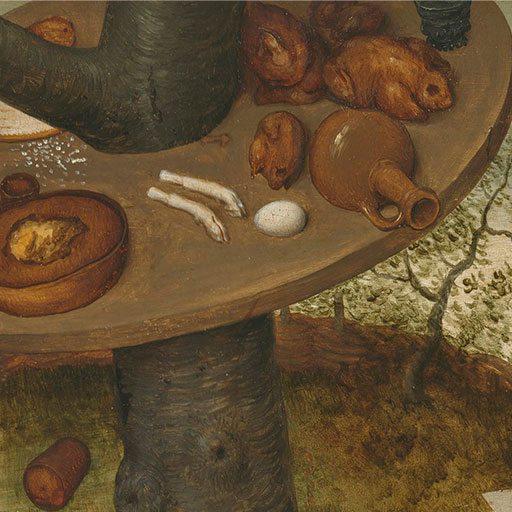

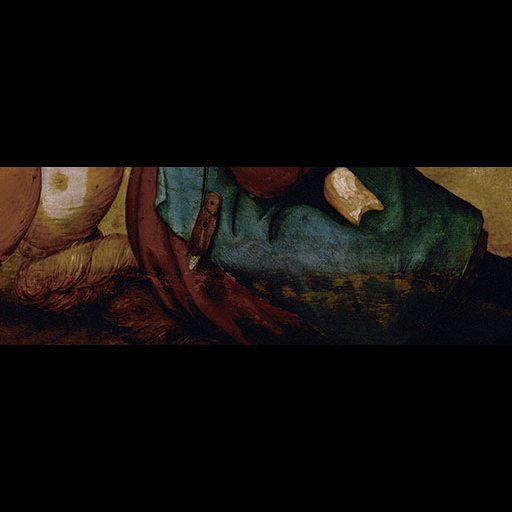

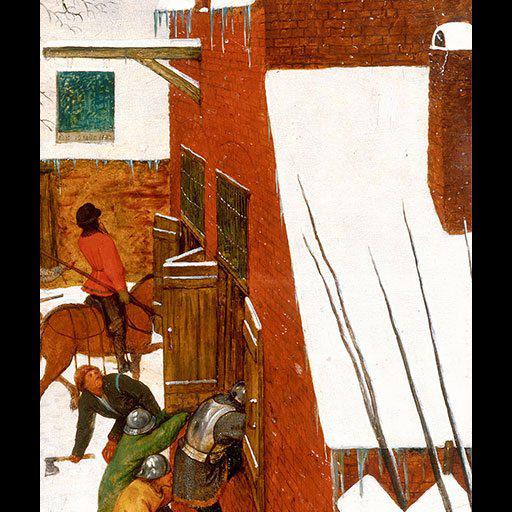

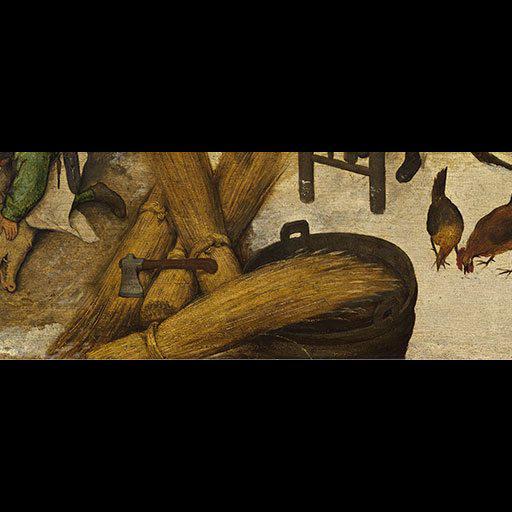

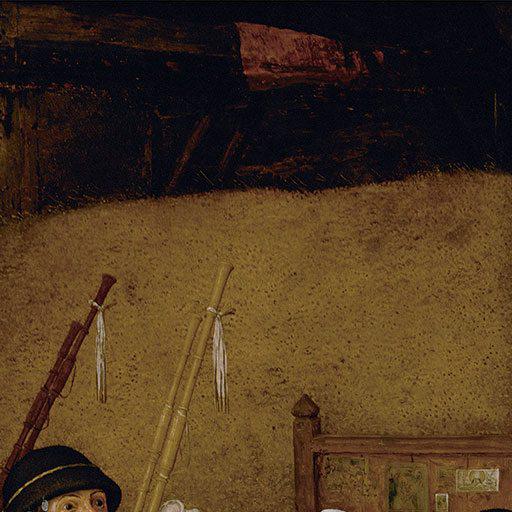

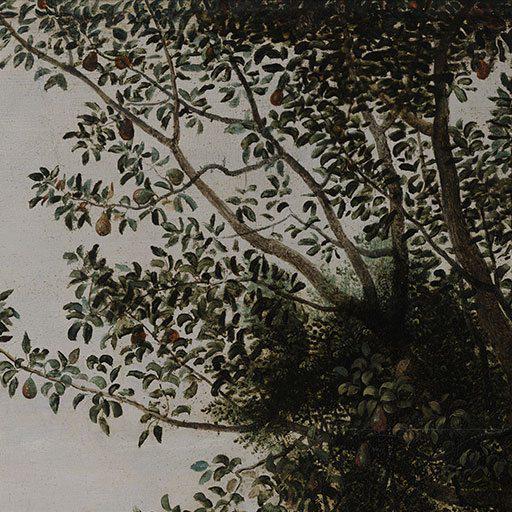

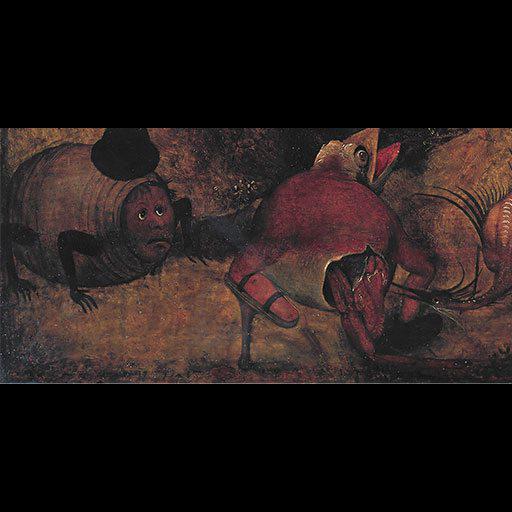

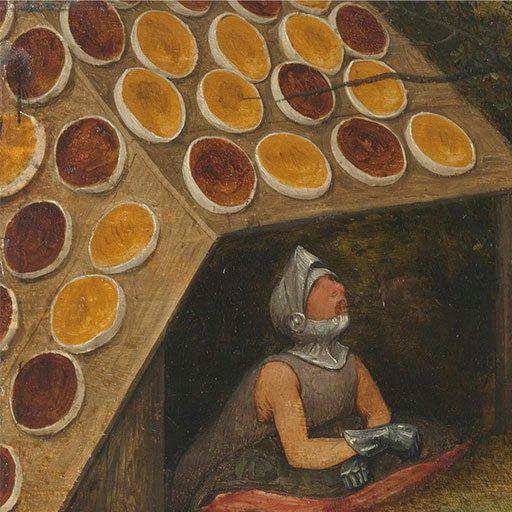

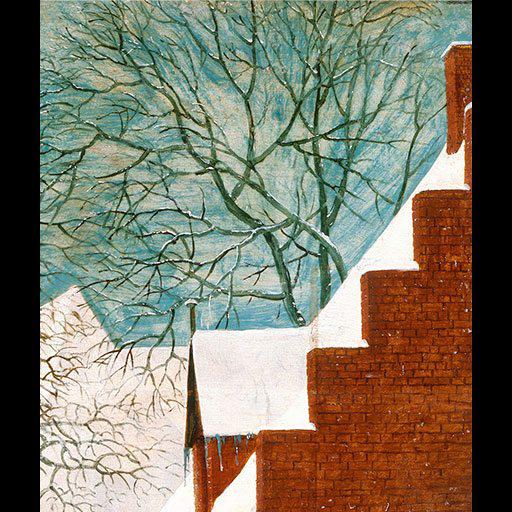

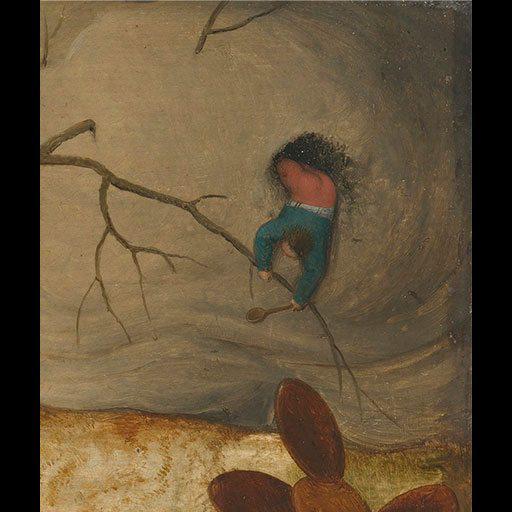

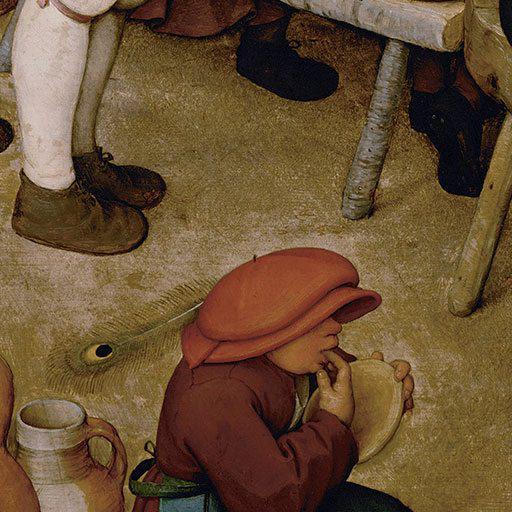

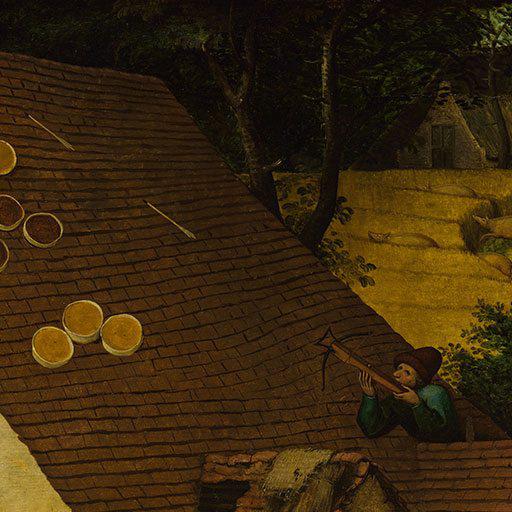

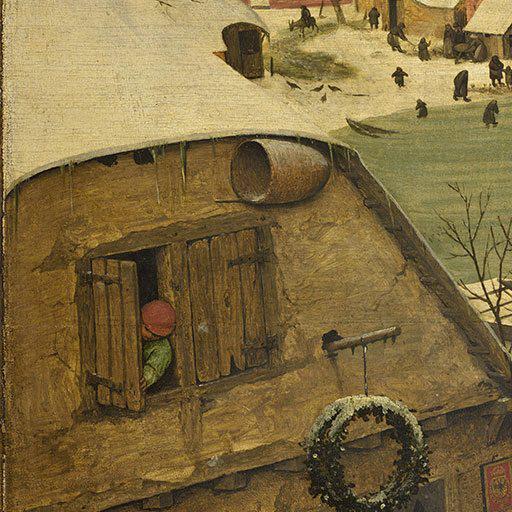

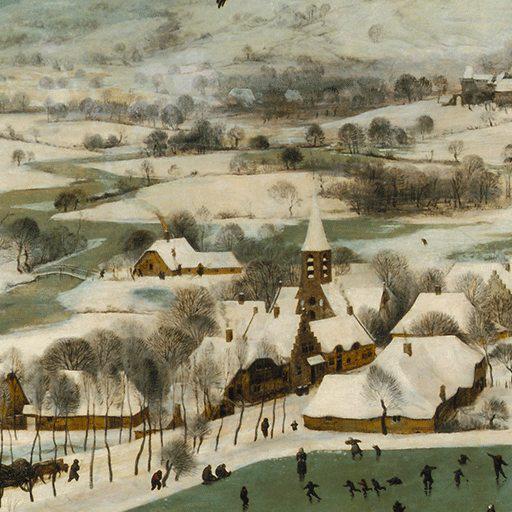

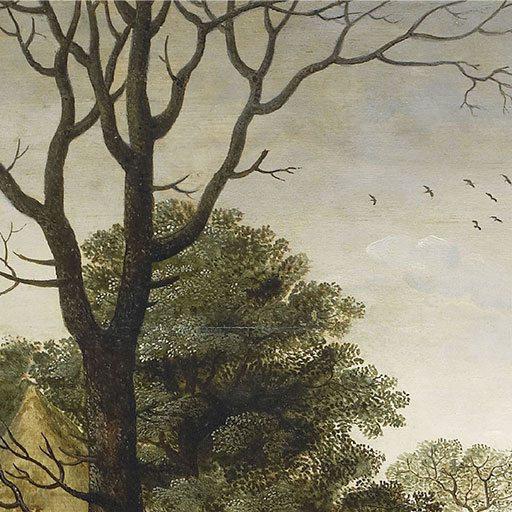

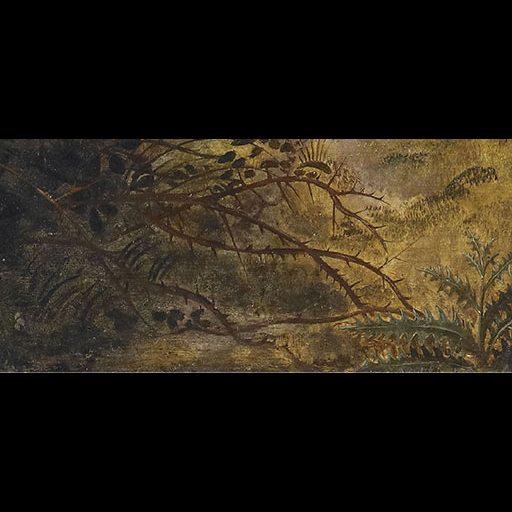

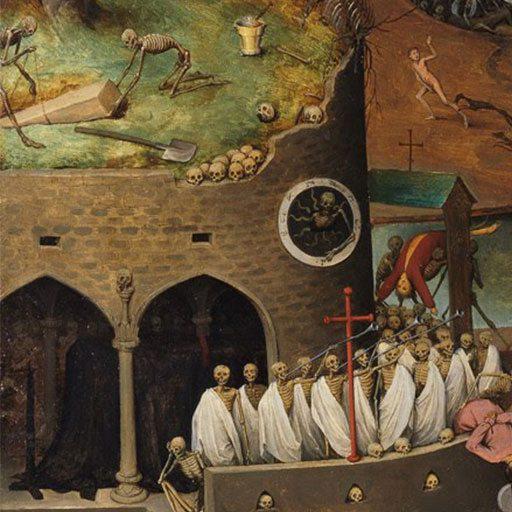

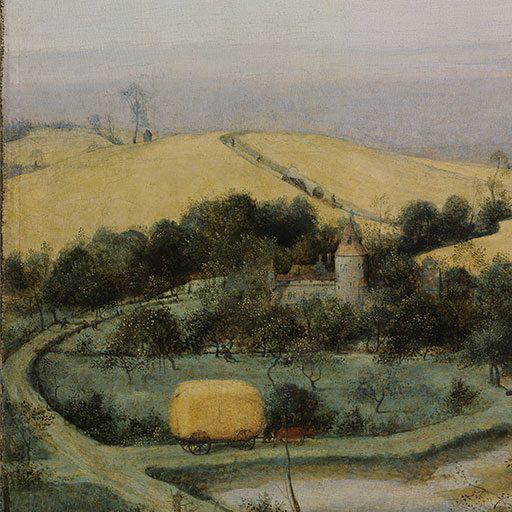

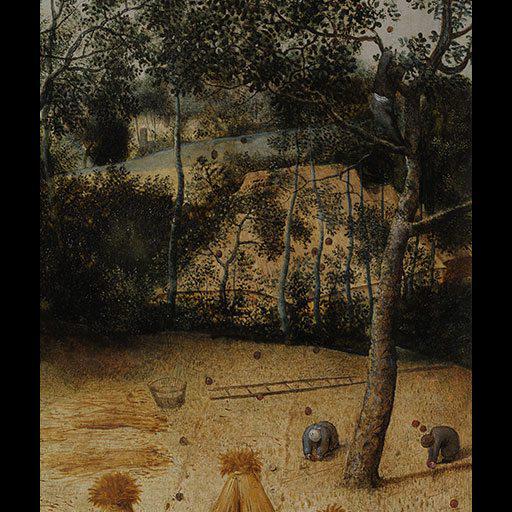

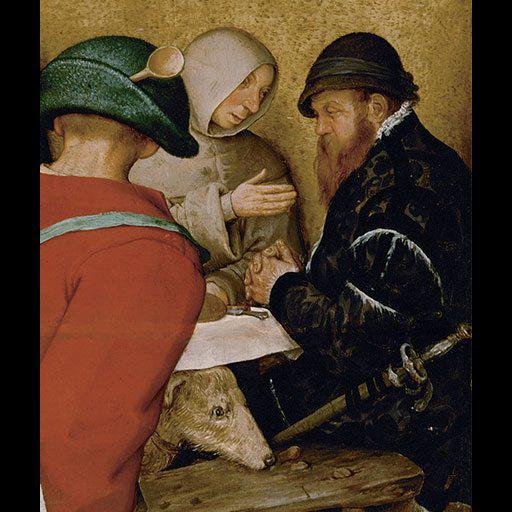

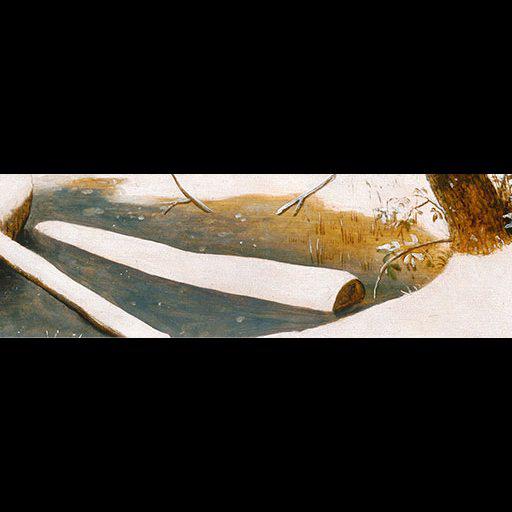

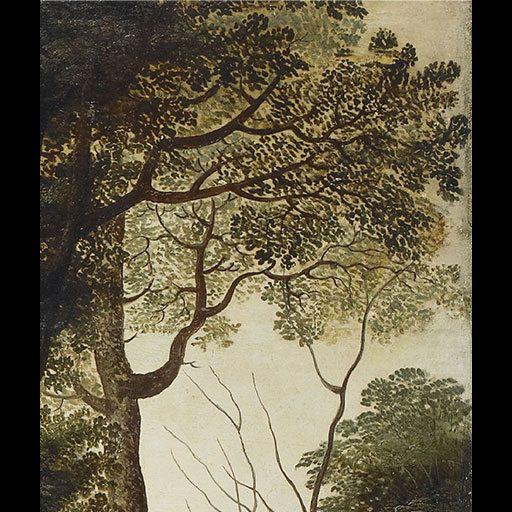

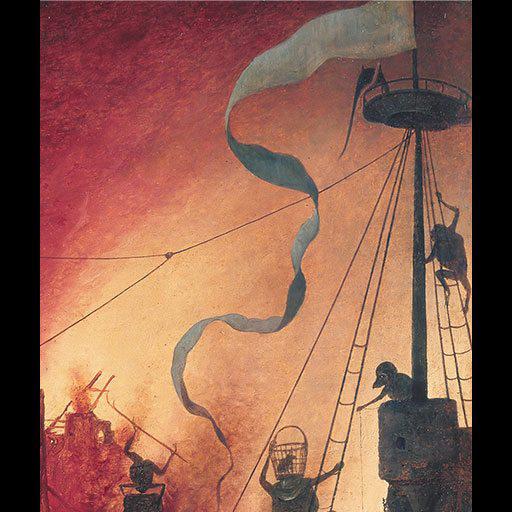

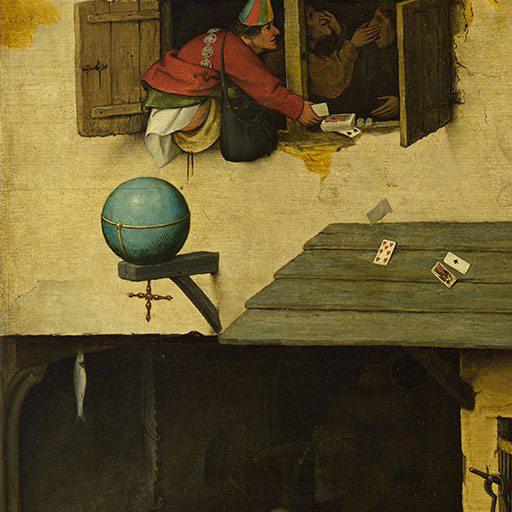

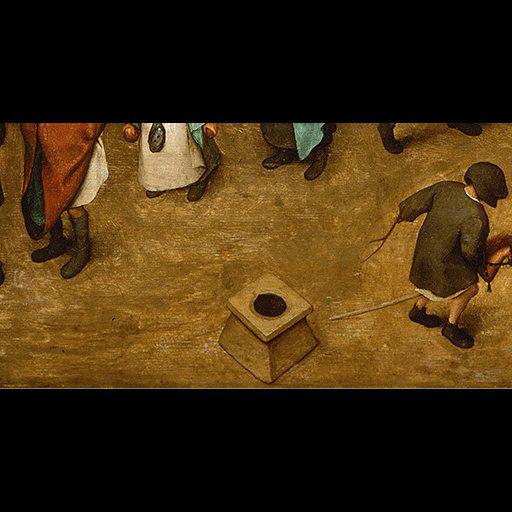

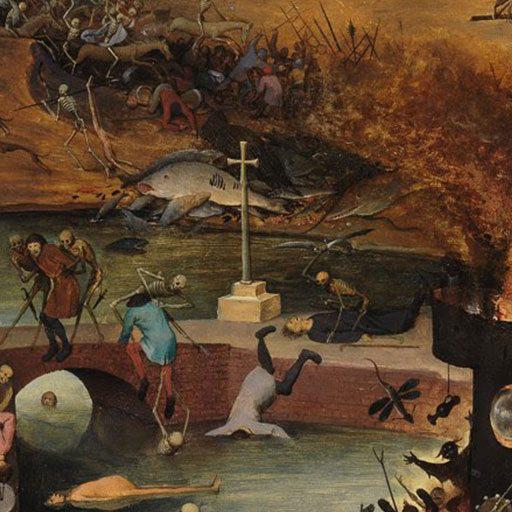

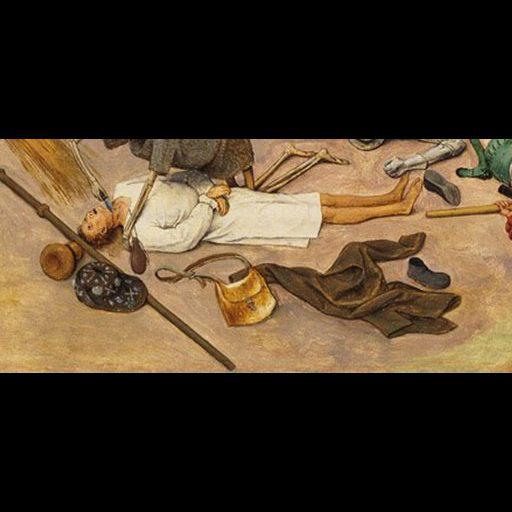

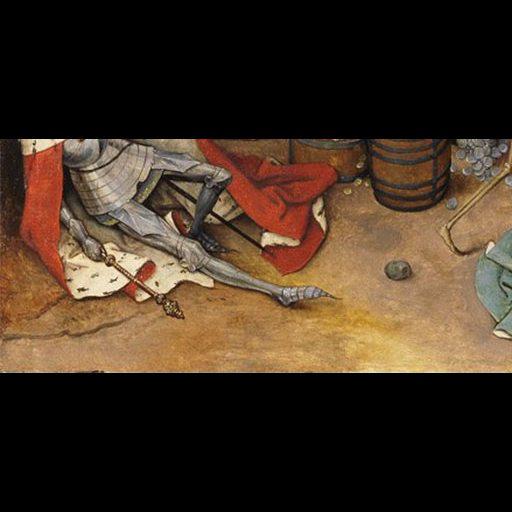

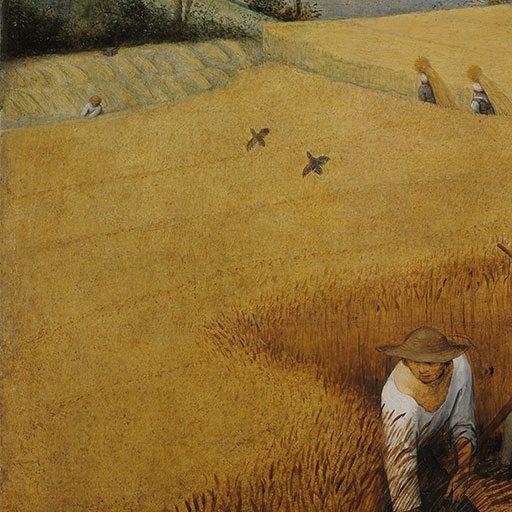

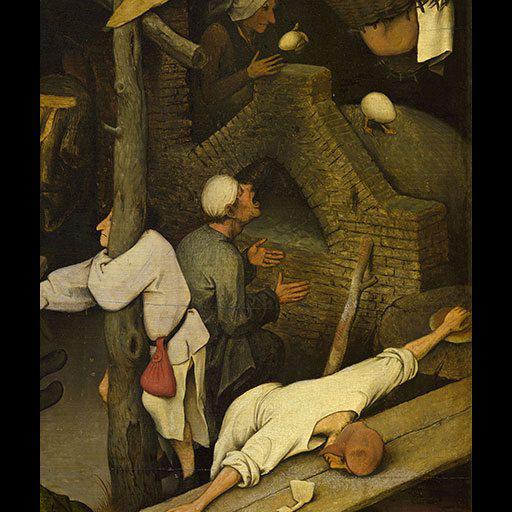

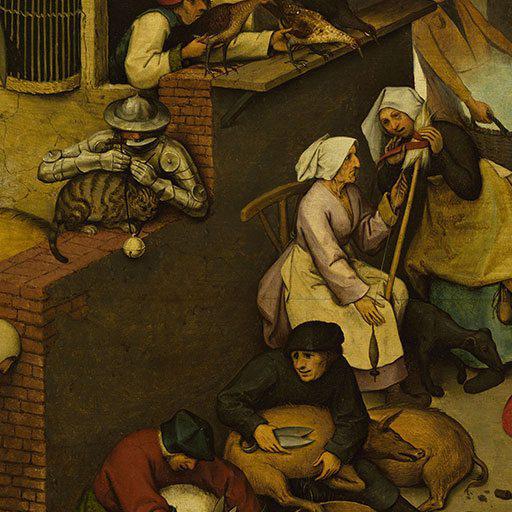

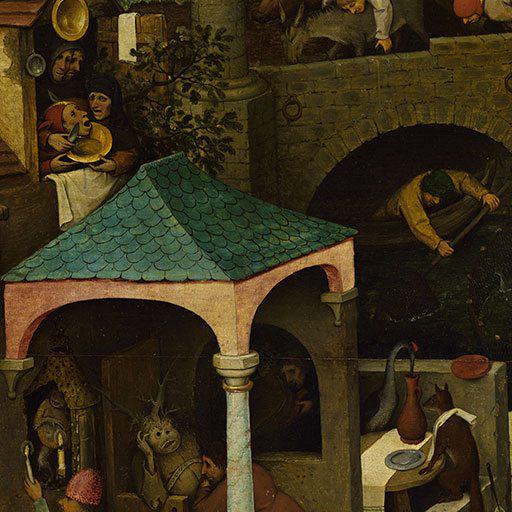

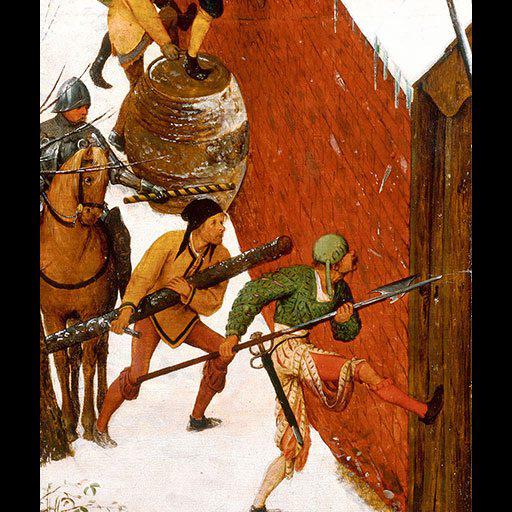

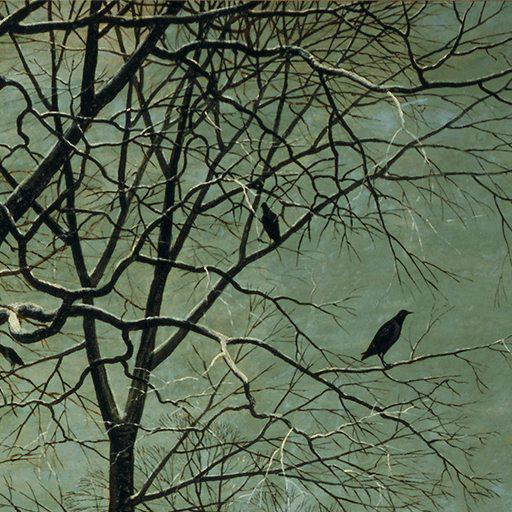

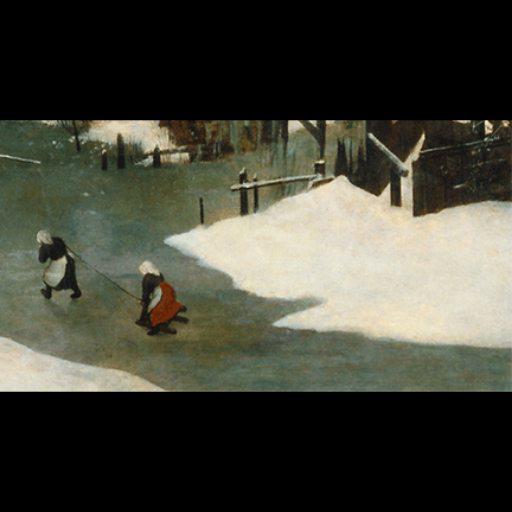

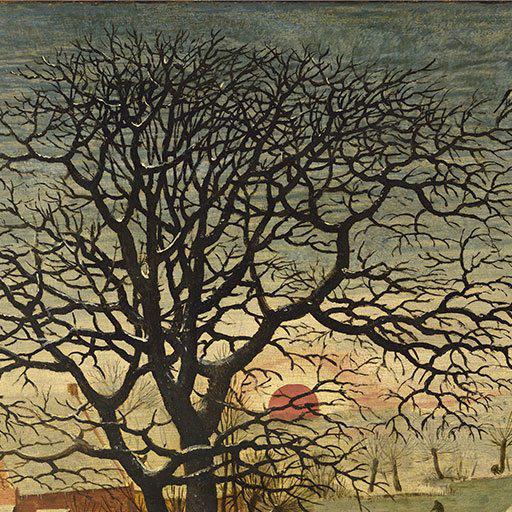

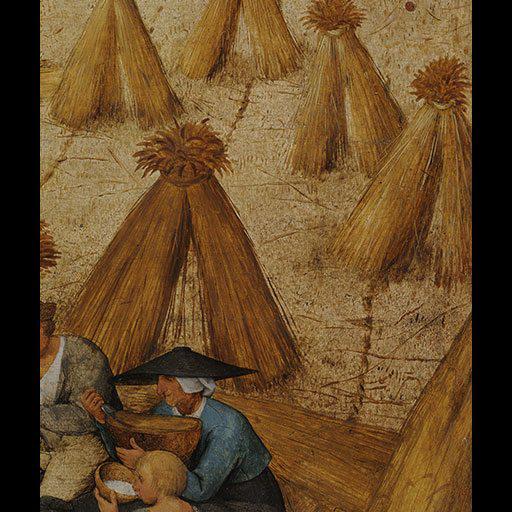

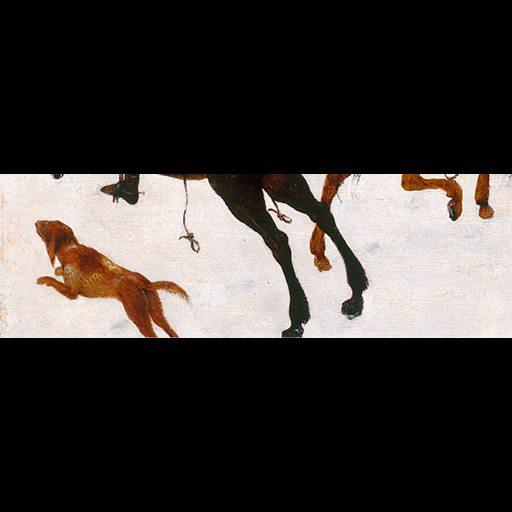

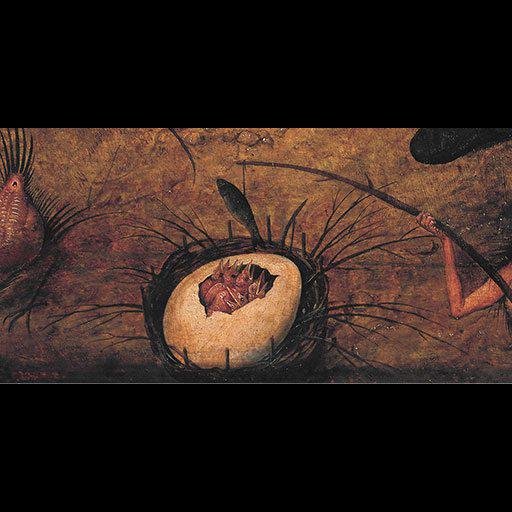

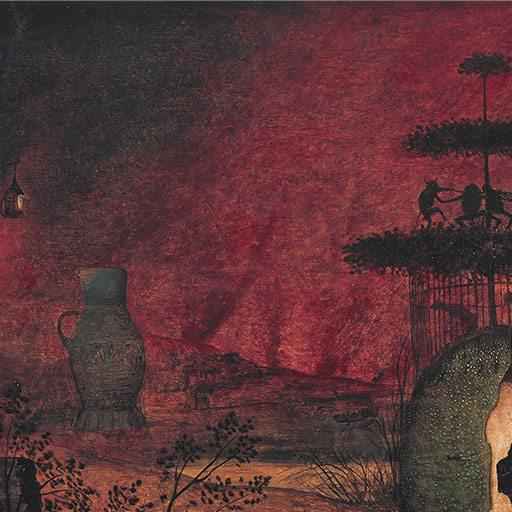

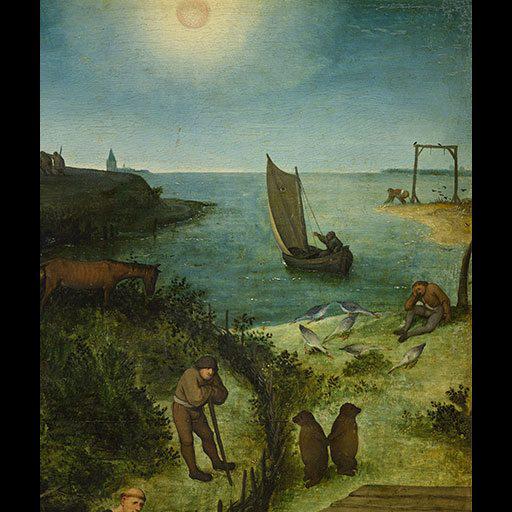

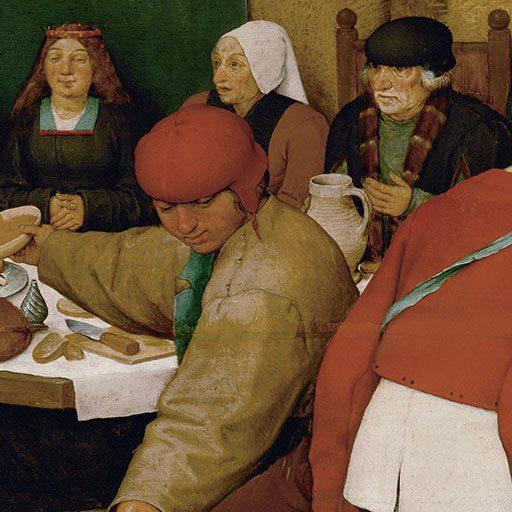

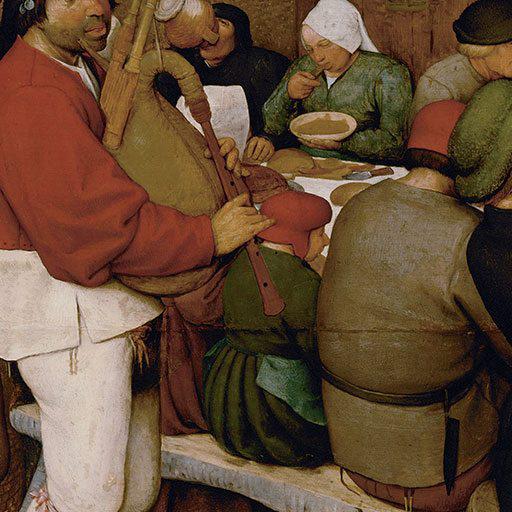

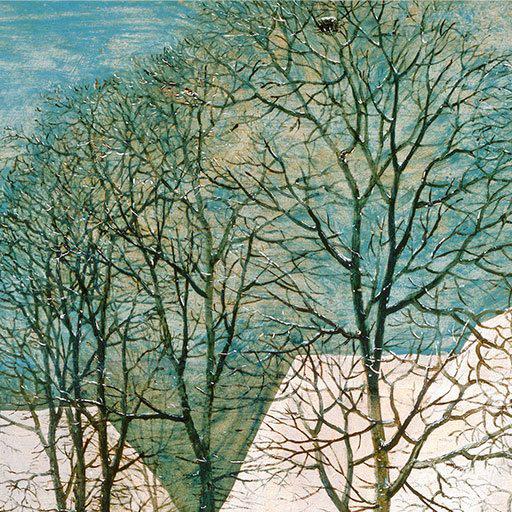

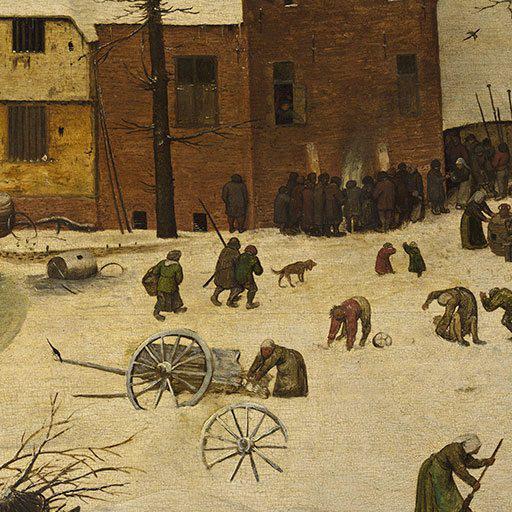

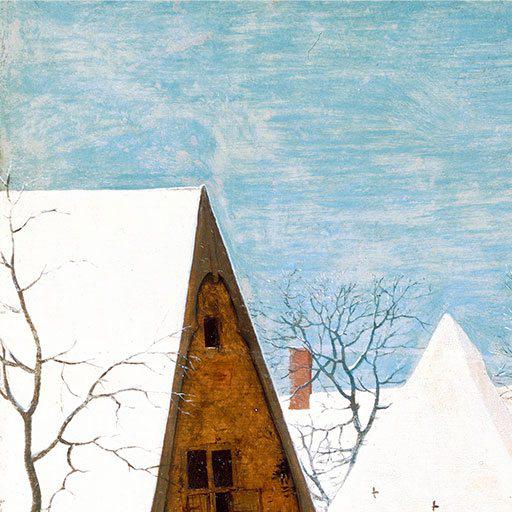

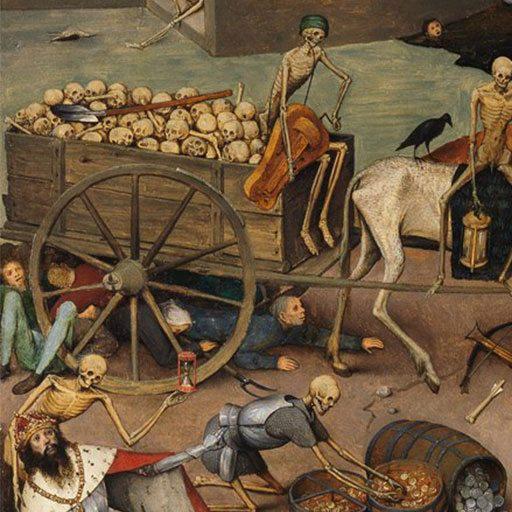

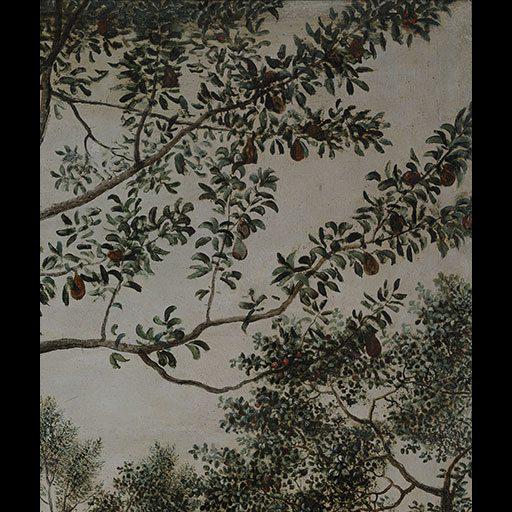

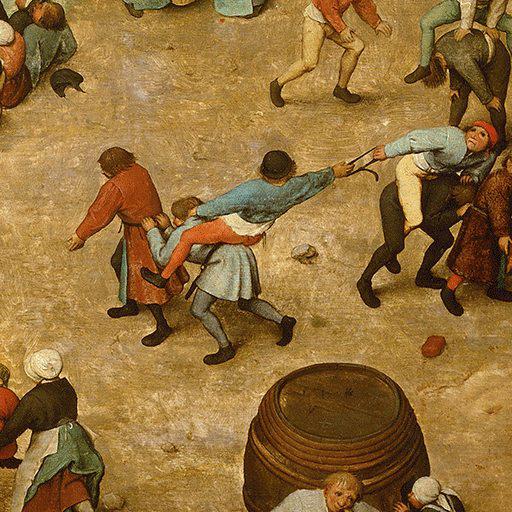

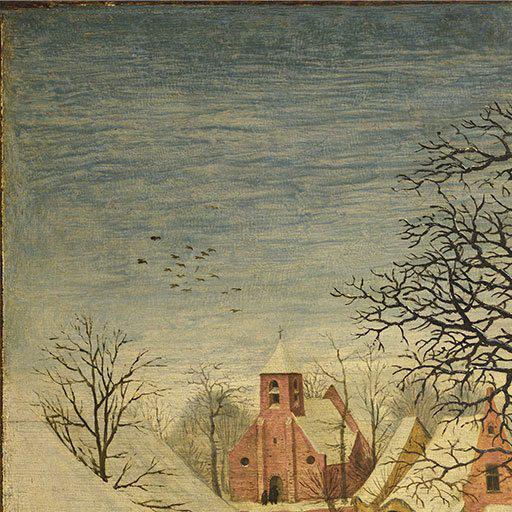

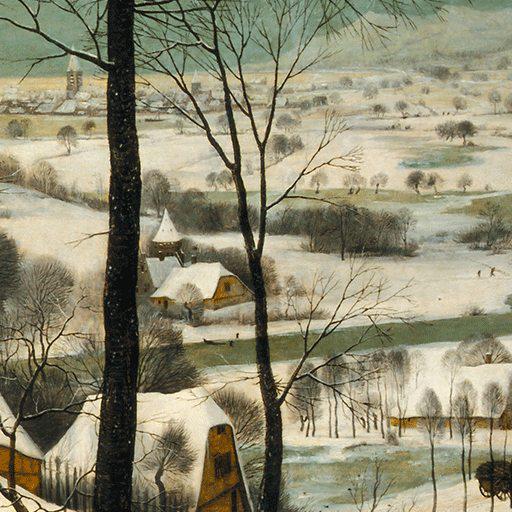

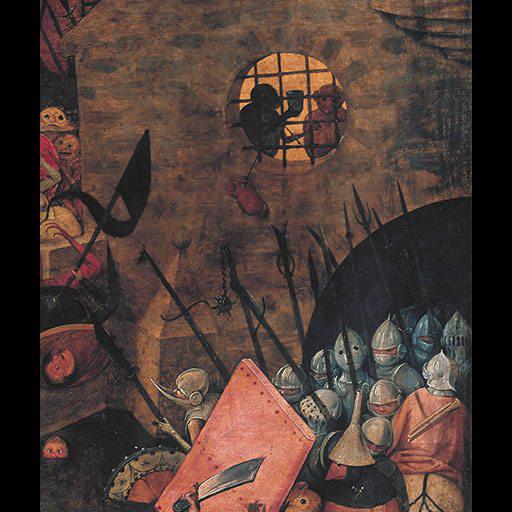

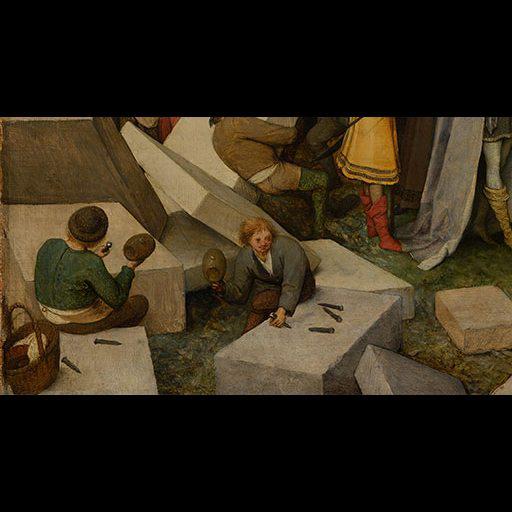

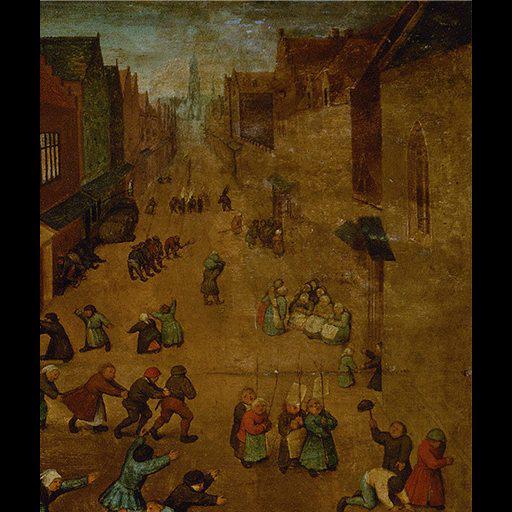

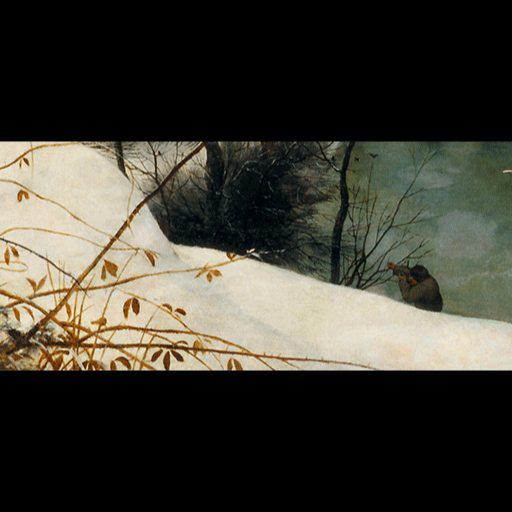

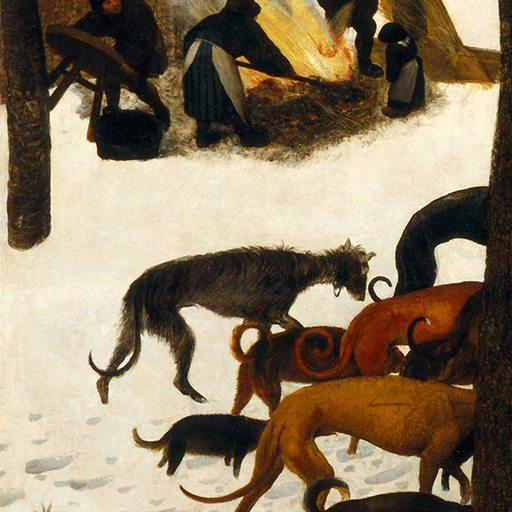

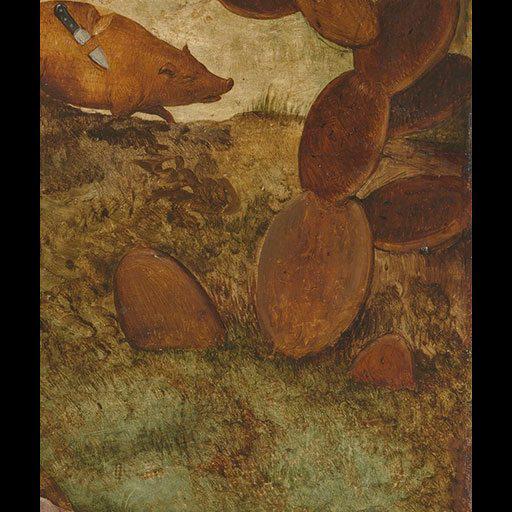

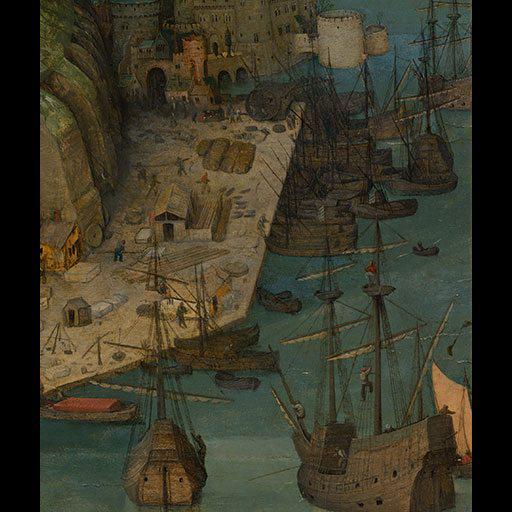

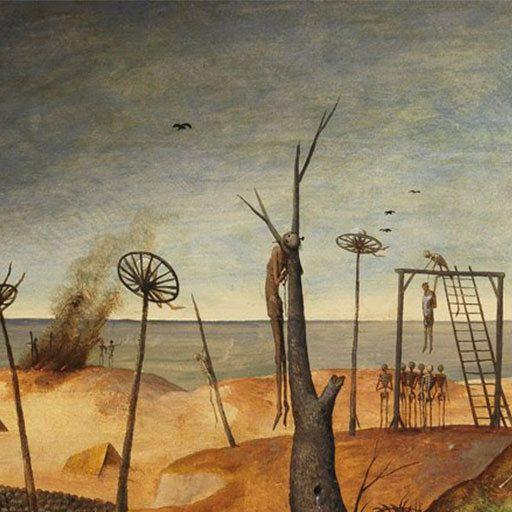

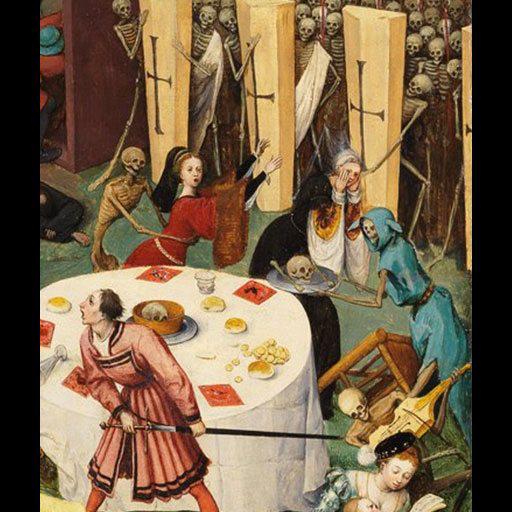

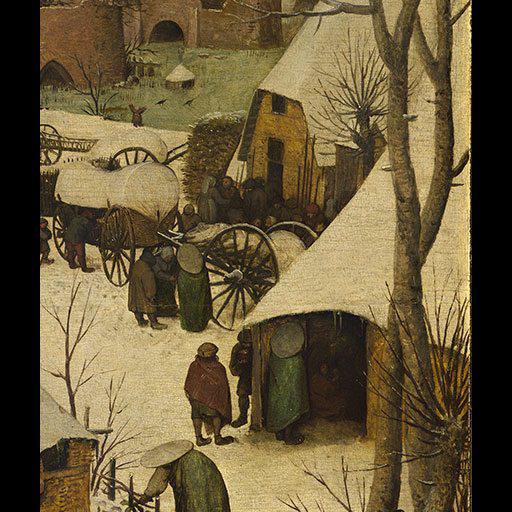

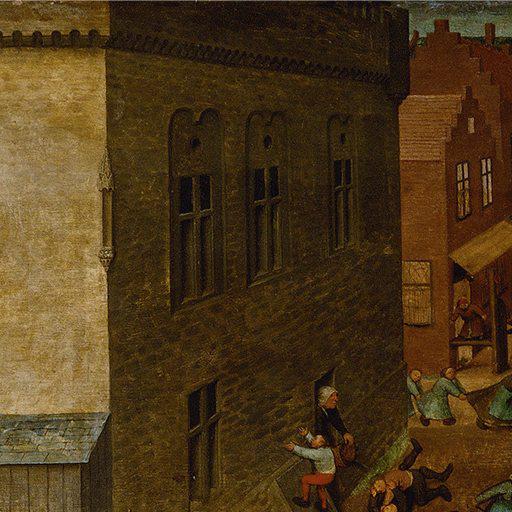

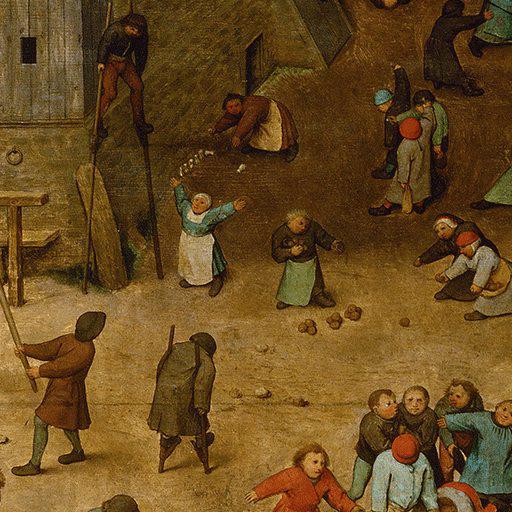

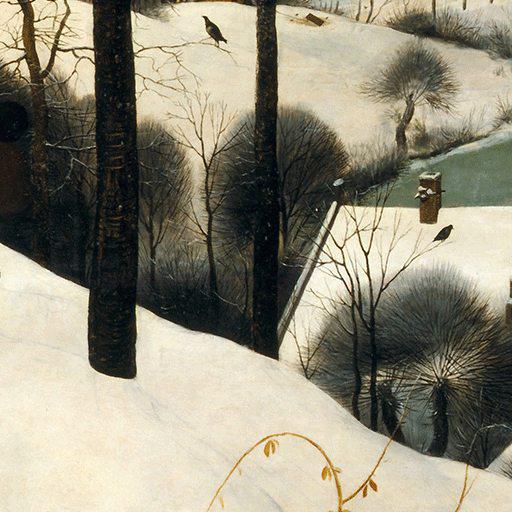

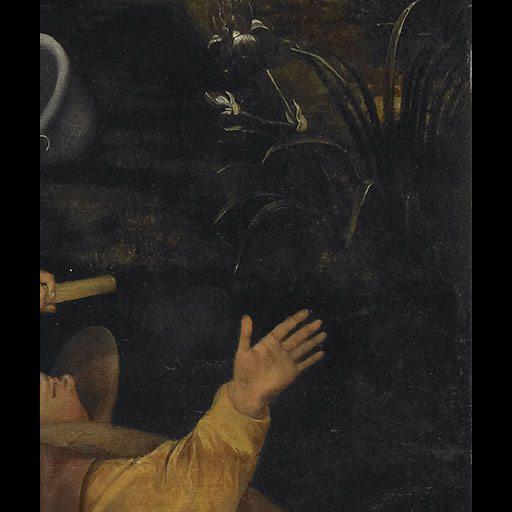

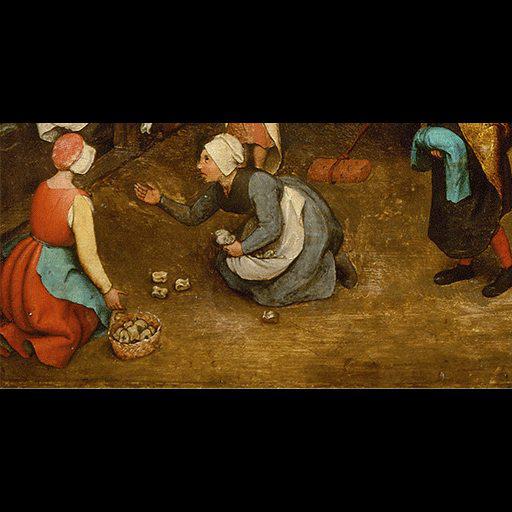

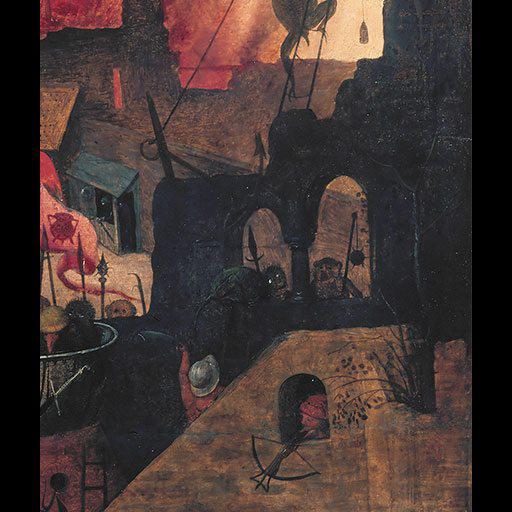

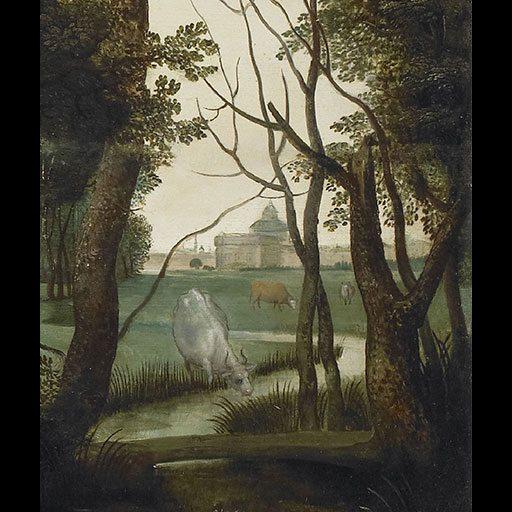

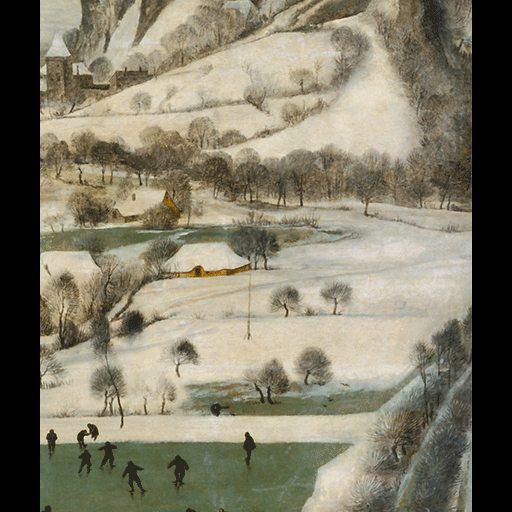

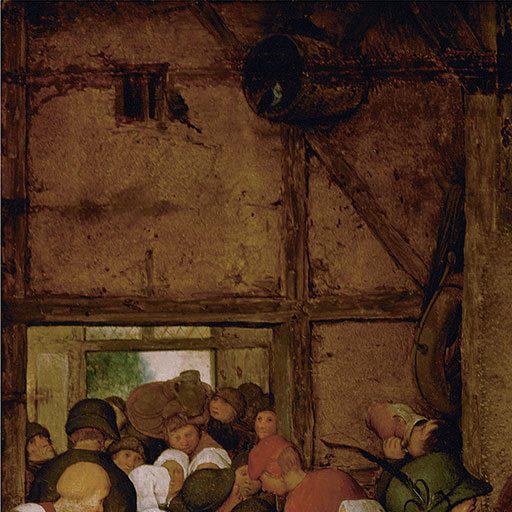

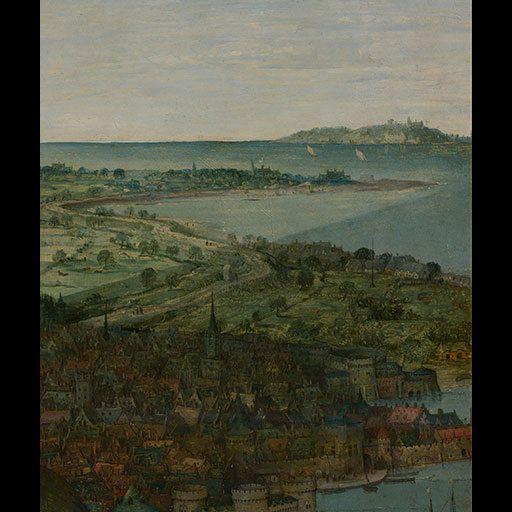

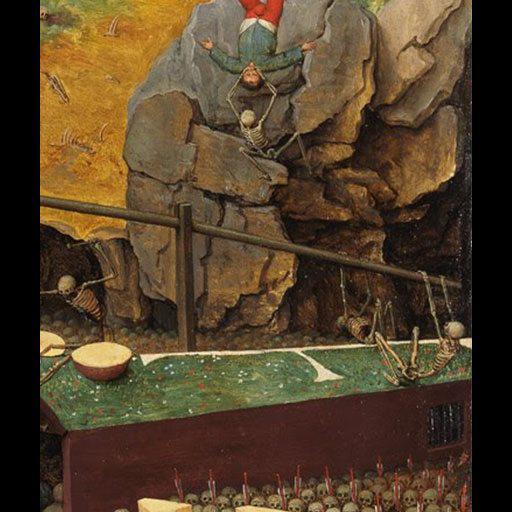

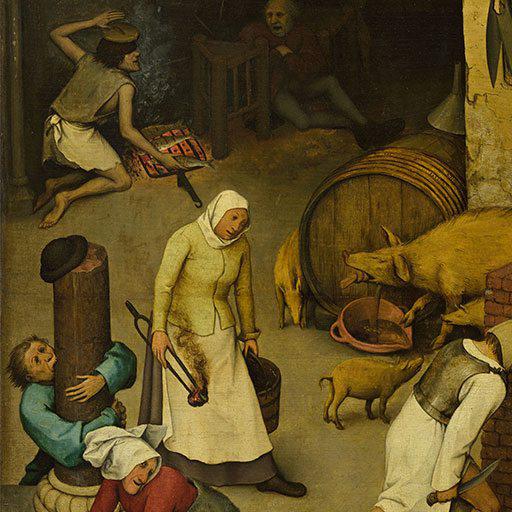

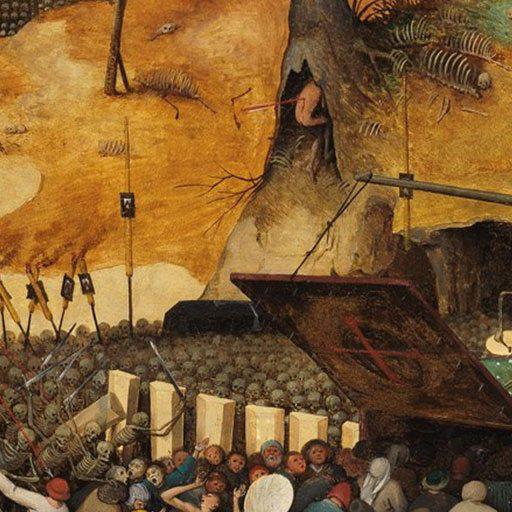

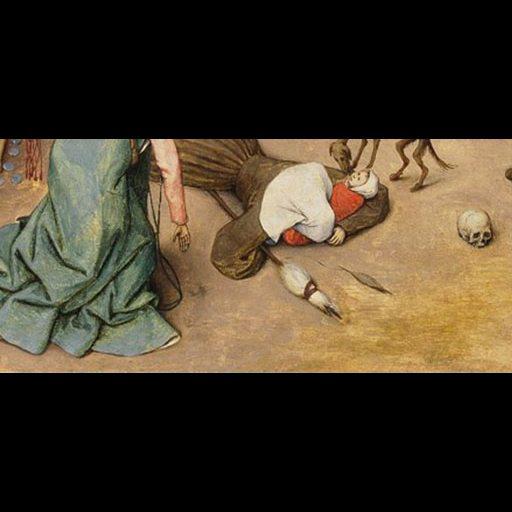

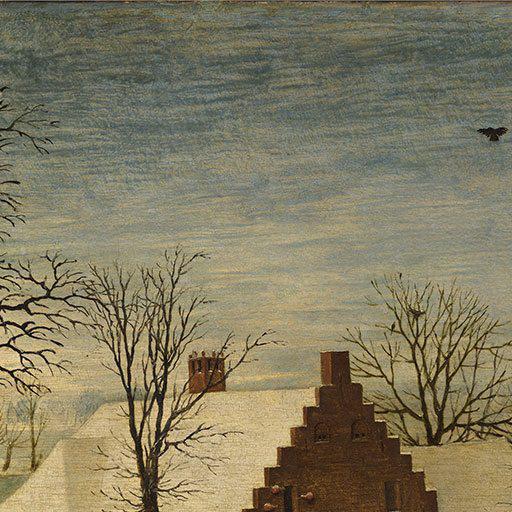

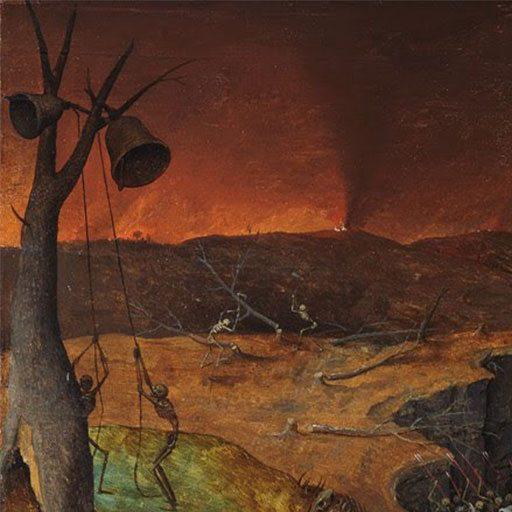

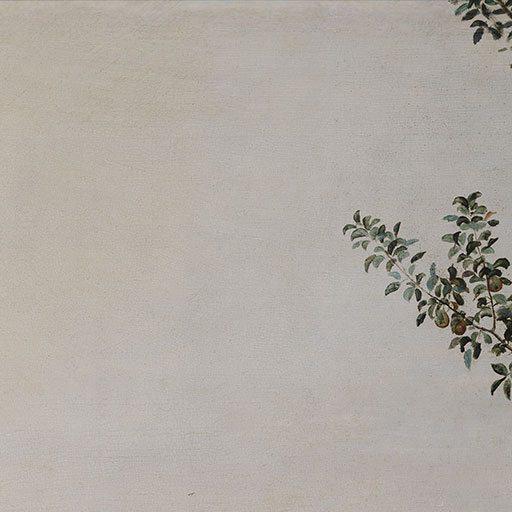

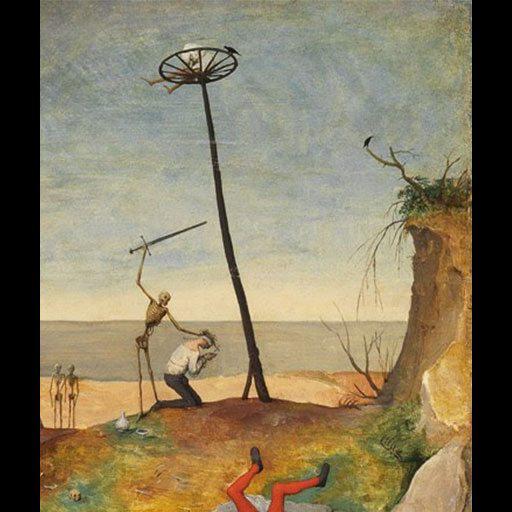

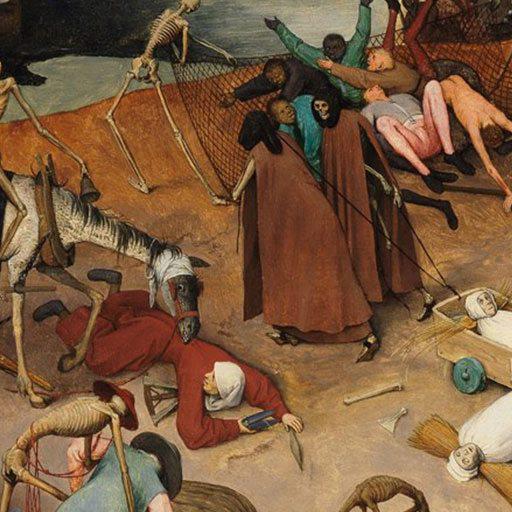

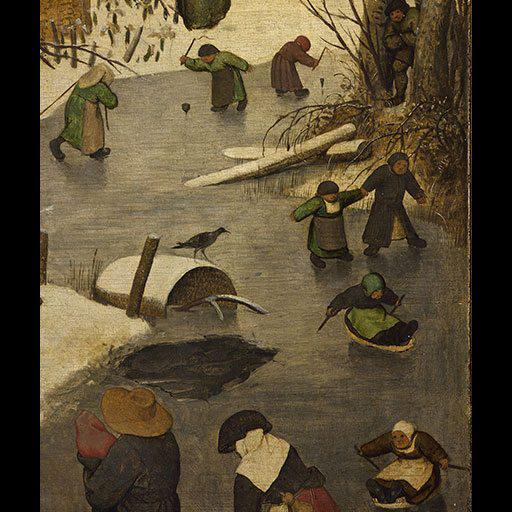

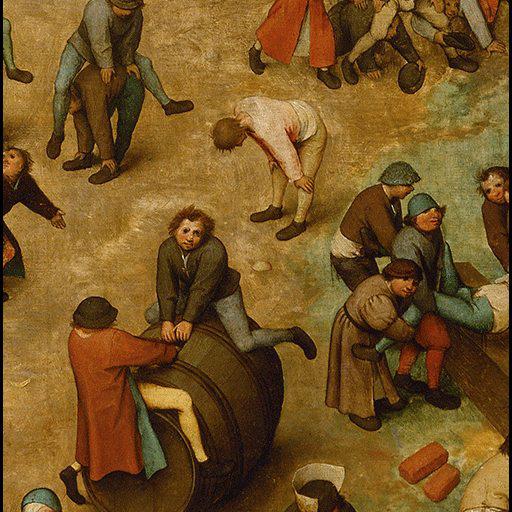

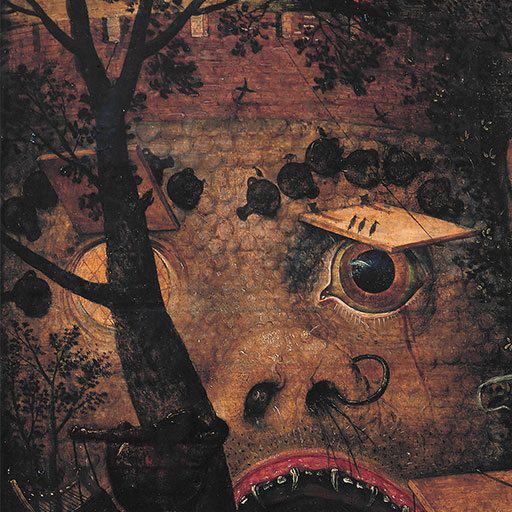

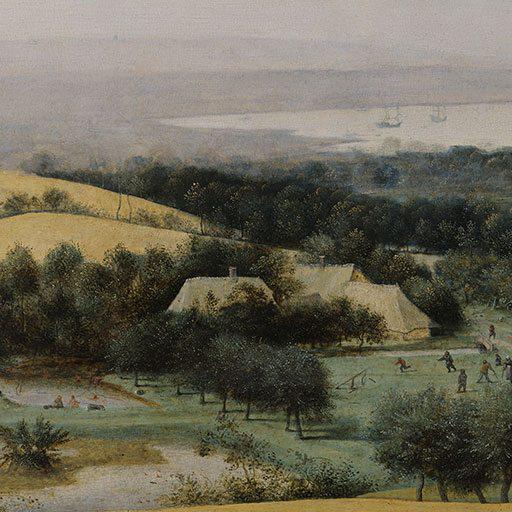

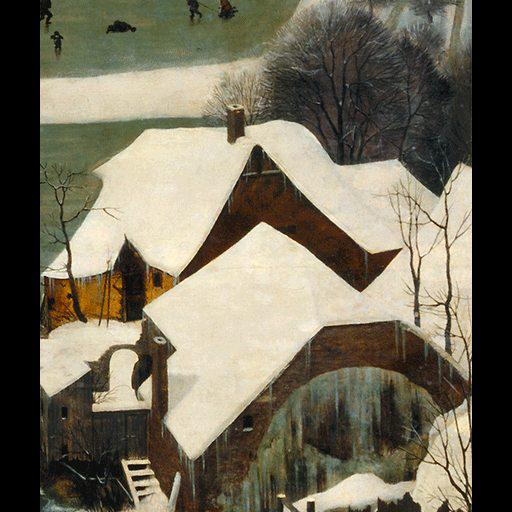

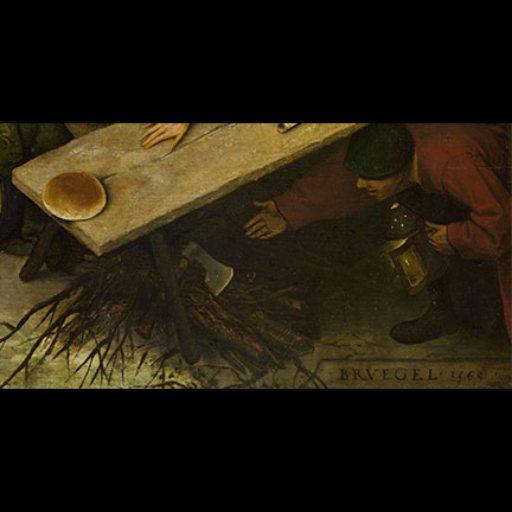

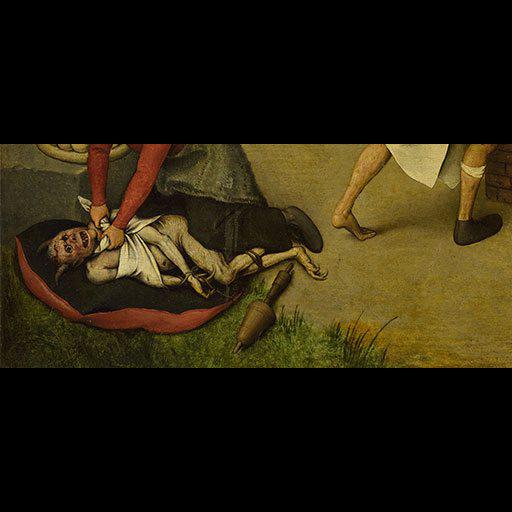

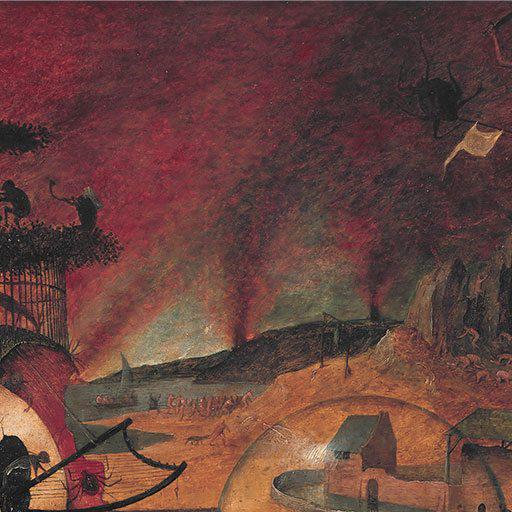

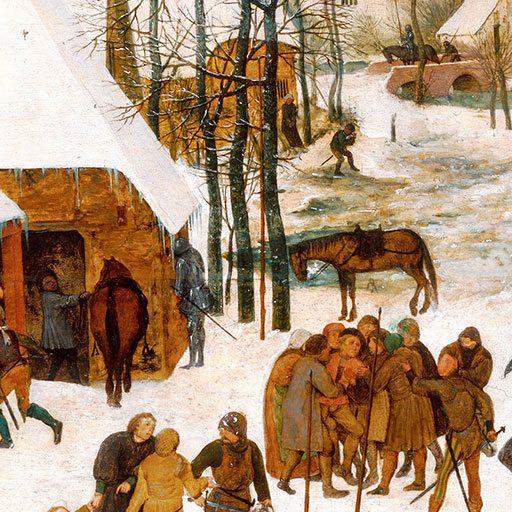

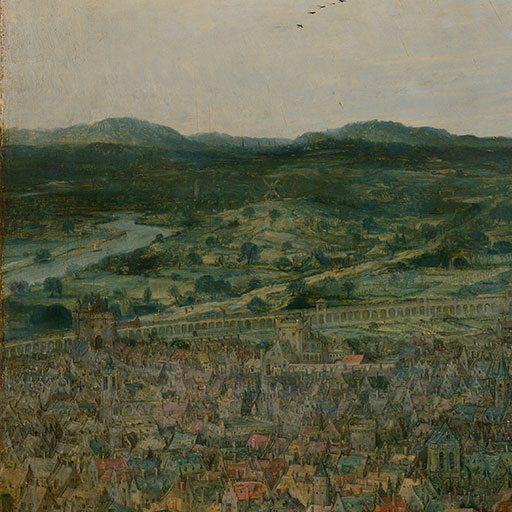

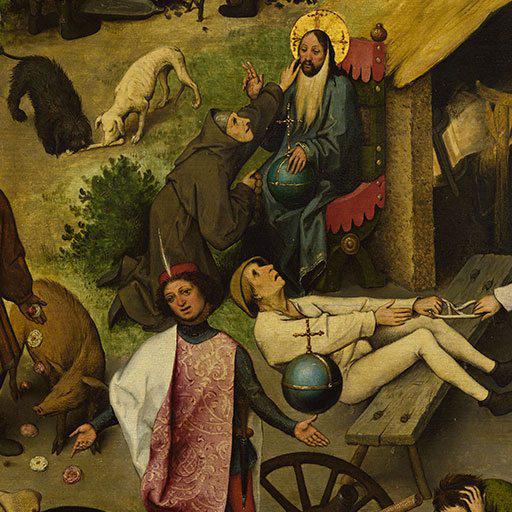

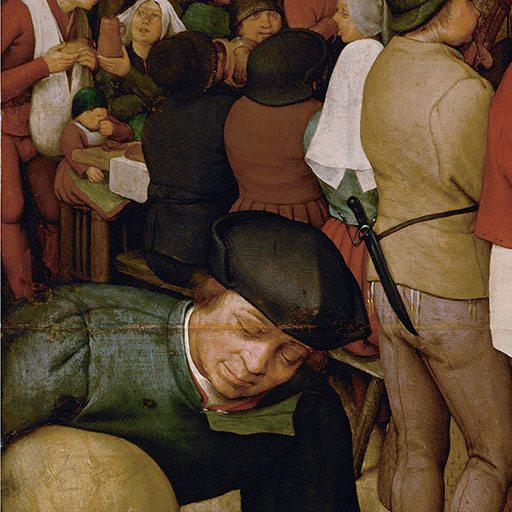

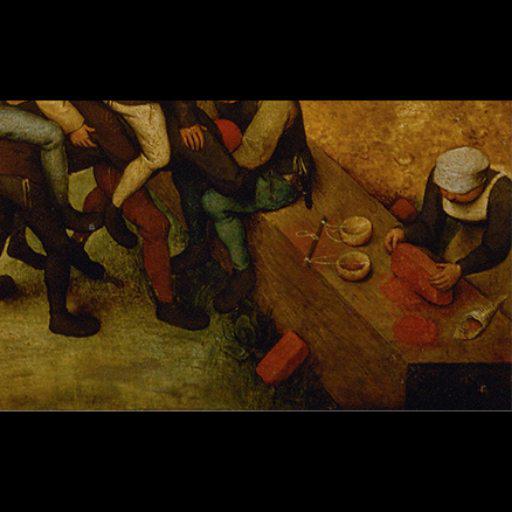

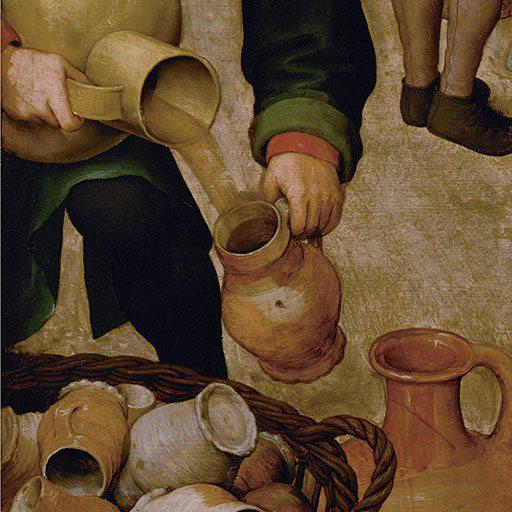

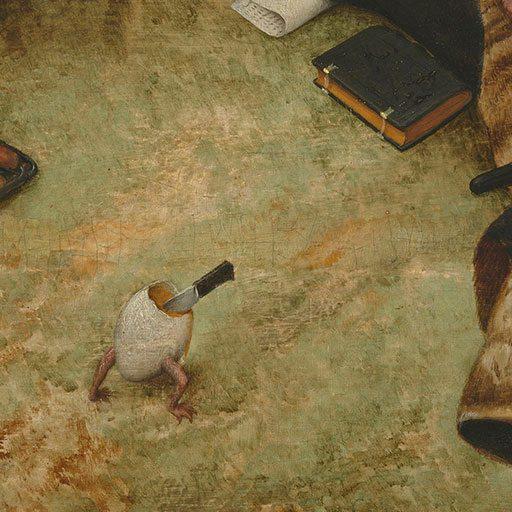

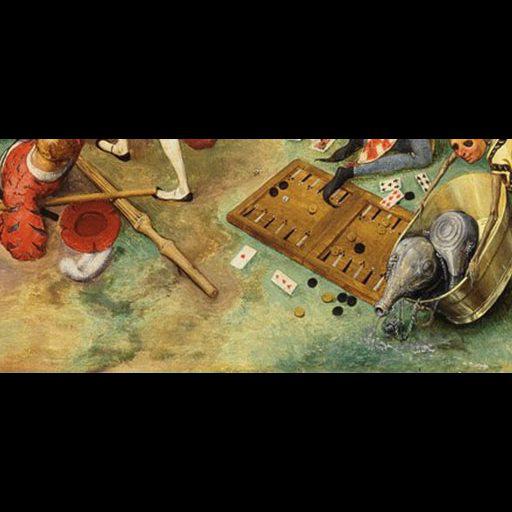

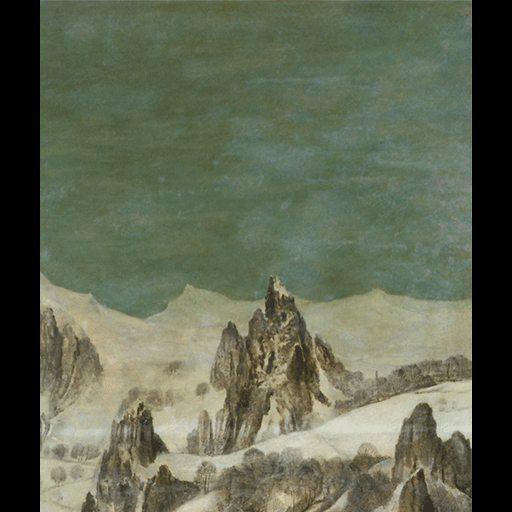

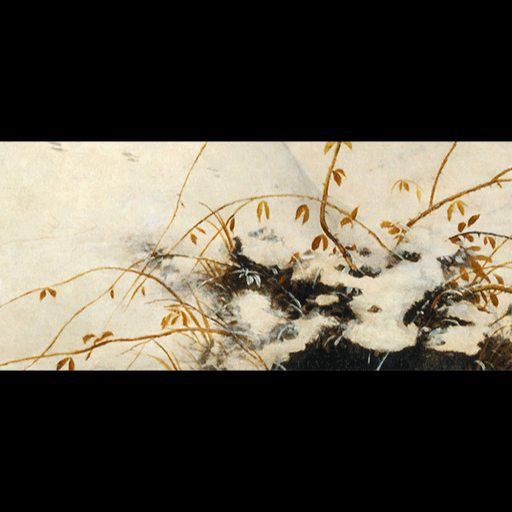

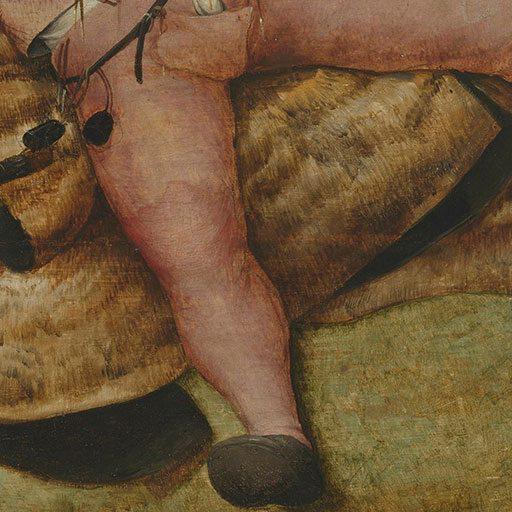

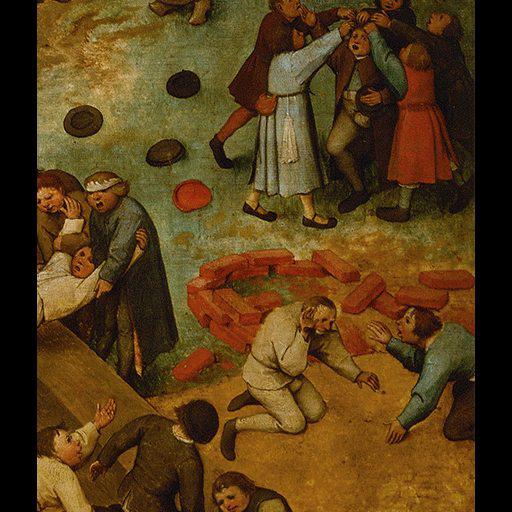

### painting made by bruegel V4 on Stable Diffusion

This version includes entire paintings, as well as close-ups.

This is the `<bruegel-style-artwork>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Using stabilityai/stable-diffusion-2-base

Example output:

Here is the new concept you will be able to use as a `style`:

|

distilbert-base-multilingual-cased | [

"pytorch",

"tf",

"onnx",

"safetensors",

"distilbert",

"fill-mask",

"multilingual",

"af",

"sq",

"ar",

"an",

"hy",

"ast",

"az",

"ba",

"eu",

"bar",

"be",

"bn",

"inc",

"bs",

"br",

"bg",

"my",

"ca",

"ceb",

"ce",

"zh",

"cv",

"hr",

"cs",

"da",

"nl",

"en",

"et",

"fi",

"fr",

"gl",

"ka",

"de",

"el",

"gu",

"ht",

"he",

"hi",

"hu",

"is",

"io",

"id",

"ga",

"it",

"ja",

"jv",

"kn",

"kk",

"ky",

"ko",

"la",

"lv",

"lt",

"roa",

"nds",

"lm",

"mk",

"mg",

"ms",

"ml",

"mr",

"mn",

"min",

"ne",

"new",

"nb",

"nn",

"oc",

"fa",

"pms",

"pl",

"pt",

"pa",

"ro",

"ru",

"sco",

"sr",

"scn",

"sk",

"sl",

"aze",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"th",

"ta",

"tt",

"te",

"tr",

"uk",

"ud",

"uz",

"vi",

"vo",

"war",

"cy",

"fry",

"pnb",

"yo",

"dataset:wikipedia",

"arxiv:1910.01108",

"arxiv:1910.09700",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"DistilBertForMaskedLM"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8,339,633 | 2022-12-17T18:11:58Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3-v2

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

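import gym

# `load_from_hub` is not defined in this card; it is assumed to be a helper that downloads the file and unpickles it into a dict.

model = load_from_hub(repo_id="jackson-lucas/q-Taxi-v3-v2", filename="q-learning.pkl")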

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

distilbert-base-uncased-distilled-squad | [

"pytorch",

"tf",

"tflite",

"coreml",

"safetensors",

"distilbert",

"question-answering",

"en",

"dataset:squad",

"arxiv:1910.01108",

"arxiv:1910.09700",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| question-answering | {

"architectures": [

"DistilBertForQuestionAnswering"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 100,097 | 2022-12-17T18:12:34Z | ---

license: openrail

---

Hypernetworks of the Musical Isotope girls: Kafu, Sekai, Rime, Coko, and Haru.

|

distilbert-base-uncased-finetuned-sst-2-english | [

"pytorch",

"tf",

"rust",

"safetensors",

"distilbert",

"text-classification",

"en",

"dataset:sst2",

"dataset:glue",

"arxiv:1910.01108",

"doi:10.57967/hf/0181",

"transformers",

"license:apache-2.0",

"model-index",

"has_space"

]

| text-classification | {

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3,060,704 | 2022-12-17T18:13:59Z | Autoregressive prompt augmenter for https://medium.com/@enryu9000/anifusion-sd-91a59431a6dd.

|

distilbert-base-uncased | [

"pytorch",

"tf",

"jax",

"rust",

"safetensors",

"distilbert",

"fill-mask",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1910.01108",

"transformers",

"exbert",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| fill-mask | {

"architectures": [

"DistilBertForMaskedLM"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 10,887,471 | 2022-12-17T18:16:48Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 244.26 +/- 14.26

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```

|

1503277708/namo | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-12-17T21:57:04Z | ---

tags:

- conversational

---

# Naruto DialoGPT Model |

AI-Lab-Makerere/en_lg | [

"pytorch",

"marian",

"text2text-generation",

"unk",

"dataset:Eric Peter/autonlp-data-EN-LUG",

"transformers",

"autonlp",

"co2_eq_emissions",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"MarianMTModel"

],

"model_type": "marian",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | 2022-12-18T04:10:39Z | ---

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-300m-vi-25p

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-vi-25p

This model was trained from scratch on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8293

- Wer: 0.4109

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
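
The `total_train_batch_size` above follows from the per-device batch size and the gradient accumulation steps. A small sketch of that arithmetic, assuming a single training device (the device count is not stated in this card, so `num_devices=1` is an assumption, and the function name is illustrative):

```python
def total_train_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# train_batch_size 8 with gradient_accumulation_steps 2, as listed above.
print(total_train_batch_size(8, 2))
```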

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.9542 | 1.31 | 400 | 1.4443 | 0.5703 |

| 1.276 | 2.62 | 800 | 1.4606 | 0.5736 |

| 1.1311 | 3.93 | 1200 | 1.4552 | 0.5186 |

| 0.9519 | 5.25 | 1600 | 1.4477 | 0.5300 |

| 0.8293 | 6.56 | 2000 | 1.4166 | 0.5097 |

| 0.7555 | 7.87 | 2400 | 1.4100 | 0.4906 |

| 0.6724 | 9.18 | 2800 | 1.4982 | 0.4880 |

| 0.6038 | 10.49 | 3200 | 1.4524 | 0.4945 |

| 0.5338 | 11.8 | 3600 | 1.4995 | 0.4798 |

| 0.4988 | 13.11 | 4000 | 1.6715 | 0.4653 |

| 0.461 | 14.43 | 4400 | 1.5699 | 0.4552 |

| 0.4154 | 15.74 | 4800 | 1.5762 | 0.4557 |

| 0.3822 | 17.05 | 5200 | 1.5978 | 0.4471 |

| 0.3466 | 18.36 | 5600 | 1.6579 | 0.4512 |

| 0.3226 | 19.67 | 6000 | 1.6825 | 0.4378 |

| 0.2885 | 20.98 | 6400 | 1.7376 | 0.4421 |

| 0.2788 | 22.29 | 6800 | 1.7150 | 0.4300 |

| 0.249 | 23.61 | 7200 | 1.7073 | 0.4263 |

| 0.2317 | 24.92 | 7600 | 1.7349 | 0.4200 |

| 0.2171 | 26.23 | 8000 | 1.7419 | 0.4186 |

| 0.1963 | 27.54 | 8400 | 1.8438 | 0.4144 |

| 0.1906 | 28.85 | 8800 | 1.8293 | 0.4109 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

AIDA-UPM/MSTSb_stsb-xlm-r-multilingual | [

"pytorch",

"xlm-roberta",

"sentence-transformers",

"feature-extraction",

"sentence-similarity",

"transformers"

]

| sentence-similarity | {

"architectures": [

"XLMRobertaModel"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | 2022-12-18T04:36:30Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 256.58 +/- 16.41

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```

|

AVSilva/bertimbau-large-fine-tuned-md | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"generated_from_trainer",

"license:mit",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

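import gym

# `load_from_hub` is not defined in this card; it is assumed to be a helper that downloads the file and unpickles it into a dict.

model = load_from_hub(repo_id="Patil/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")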

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

AdapterHub/roberta-base-pf-commonsense_qa | [

"roberta",

"en",

"dataset:commonsense_qa",

"arxiv:2104.08247",

"adapter-transformers",

"adapterhub:comsense/csqa"

]

| null | {

"architectures": null,

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 20 | null | ---

language:

- "vi"

tags:

- "vietnamese"

- "token-classification"

- "pos"

- "dependency-parsing"

datasets:

- "universal_dependencies"

license: "cc-by-sa-4.0"

pipeline_tag: "token-classification"

widget:

- text: "Hai cái đầu thì tốt hơn một"

---

# phobert-large-vietnamese-ud-goeswith

## Model Description

This is a PhoBERT model pre-trained on Vietnamese texts for POS-tagging and dependency-parsing (using `goeswith` for subwords), derived from [phobert-large](https://huggingface.co/vinai/phobert-large).

## How to Use

```py

class UDgoeswithViNLP(object):

  def __init__(self,bert):

    from transformers import AutoTokenizer,AutoModelForTokenClassification

    from ViNLP import word_tokenize

    self.tokenizer=AutoTokenizer.from_pretrained(bert)

    self.model=AutoModelForTokenClassification.from_pretrained(bert)

    self.vinlp=word_tokenize

  def __call__(self,text):

    import numpy,torch,ufal.chu_liu_edmonds

    t=self.vinlp(text)

    w=self.tokenizer(t,add_special_tokens=False)["input_ids"]

    z=[]

    for i,j in enumerate(t):

      if j.find("_")>0 and [k for k in w[i] if k==self.tokenizer.unk_token_id]!=[]:

        w[i]=self.tokenizer(j.replace("_"," "))["input_ids"][1:-1]