modelId (string, length 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, length 51 to 438k) |

|---|---|---|---|---|---|---|

Brendan/cse244b-hw2-roberta

|

[

"pytorch",

"roberta",

"text-classification",

"transformers"

] |

text-classification

|

{

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 28 | null |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/bert-base-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 64.48

name: Eval EM

- type: f1

value: 76.14

name: Eval F1

- type: exact_match

value: 48.89

name: Test EM

- type: f1

value: 59.89

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** BERT-Base-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [BERT-Base-uncased](https://huggingface.co/bert-base-uncased)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 8:39:10 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/bert-base-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.9254004955291748,

# 'start': 30,

# 'end': 68,

# 'answer': 'Machine Reading for Question Answering'

# }

```

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

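from transformers import TrainingArguments

args = TrainingArguments(
    "bert-base-mrqa",  # output_dir: where checkpoints are written
    save_strategy="epoch",  # save a checkpoint at the end of each epoch
    learning_rate=1e-5,
    num_train_epochs=1,
    weight_decay=0.01,
    per_device_train_batch_size=16,
)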

```
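
As a rough sanity check on these settings, the number of optimizer steps in the single epoch can be estimated from the training-set size and the batch size. This is only a sketch: `steps_per_epoch` is a hypothetical helper, not part of the training script, and it assumes one GPU (as stated under Model Details) and no gradient accumulation, which the arguments above do not set. If long contexts are split into several features during preprocessing, the real step count would be higher.

```python
import math


def steps_per_epoch(num_examples: int, per_device_batch_size: int,
                    num_devices: int = 1, grad_accum_steps: int = 1) -> int:
    """Estimate optimizer steps per epoch (hypothetical helper)."""
    effective_batch = per_device_batch_size * num_devices * grad_accum_steps
    # The last, partially filled batch still costs one step.
    return math.ceil(num_examples / effective_batch)


# 516,819 training examples with per_device_train_batch_size=16
print(steps_per_epoch(516_819, 16))  # 32302
```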

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, computed from the tokens that the predicted answer and the ground truth answer have in common.
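
The two metrics can be sketched in a few lines of Python. This is an illustration only, assuming SQuAD-style answer normalization (lowercasing, removing punctuation and English articles); the function names are hypothetical and the card does not say which evaluation script produced the reported scores.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and articles, collapse whitespace (assumed scheme)."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction: str, truth: str) -> float:
    """Token-level F1: harmonic mean of precision and recall over shared tokens."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0  # also covers empty answers in this simplified sketch
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


truth = "Machine Reading for Question Answering"
print(exact_match("the Machine Reading for Question Answering", truth))
print(f1_score("Machine Reading", truth))
```

A partial answer such as "Machine Reading" scores zero on exact match but still earns partial F1 credit, which is why F1 is always at least as high as EM in the table below.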

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models because it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa), which had already been trained on MRQA.

# Limitations and Bias

The model is based on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

Broadus20/DialoGPT-small-joshua

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 12 | 2023-02-17T20:46:18Z |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/bert-large-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 69.52

name: Eval EM

- type: f1

value: 80.50

name: Eval F1

- type: exact_match

value: 55.00

name: Test EM

- type: f1

value: 65.78

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** BERT-Large-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [BERT-Large-uncased](https://huggingface.co/bert-large-uncased)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 28:35:38 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/bert-large-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.864973783493042,

# 'start': 30,

# 'end': 68,

# 'answer': 'Machine Reading for Question Answering'

# }

```

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

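from transformers import TrainingArguments

args = TrainingArguments(
    "bert-large-mrqa",  # output_dir: where checkpoints are written
    save_strategy="epoch",  # save a checkpoint at the end of each epoch
    learning_rate=1e-5,
    num_train_epochs=1,
    weight_decay=0.01,
    per_device_train_batch_size=8,
)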

```

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, computed from the tokens that the predicted answer and the ground truth answer have in common.

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models because it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa), which had already been trained on MRQA.

# Limitations and Bias

The model is based on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.
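
The eval-versus-test delta mentioned above can be made concrete with the BERT-Large row of the performance table. The snippet is a small arithmetic sketch; the dictionary layout and the `score_gap` helper are assumptions made for illustration.

```python
# Scores copied from the BERT-Large row of the Model Family Performance table.
scores = {
    "eval": {"em": 69.52, "f1": 80.50},
    "test": {"em": 55.00, "f1": 65.78},
}


def score_gap(scores: dict) -> dict:
    """Eval minus test, per metric, rounded to two decimals (hypothetical helper)."""
    return {
        metric: round(scores["eval"][metric] - scores["test"][metric], 2)
        for metric in scores["eval"]
    }


print(score_gap(scores))
```

Both metrics drop by roughly 14.5 to 14.7 points on the test set, which is the gap the out-of-distribution bullet refers to.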

|

Brona/model1

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | 2023-02-17T20:52:30Z |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/bert-tiny-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 22.78

name: Eval EM

- type: f1

value: 32.42

name: Eval F1

- type: exact_match

value: 10.18

name: Test EM

- type: f1

value: 18.72

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** BERT-Tiny-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [BERT-Tiny-uncased](https://huggingface.co/google/bert_uncased_L-2_H-128_A-2)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 26:11 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/bert-tiny-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.134057879447937,

# 'start': 76,

# 'end': 80,

# 'answer': '2019'

# }

```

Yes, you read that correctly ... this model thinks MRQA is "2019". Look at its eval and test scores. A coin toss is more likely to get you a decent answer, haha.
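
Since the pipeline returns a `score` with every answer, one way to guard against answers like this is to abstain when the score is low. The sketch below is an assumption-laden illustration: `answer_or_abstain` is a hypothetical helper, the 0.5 threshold is arbitrary, and the score is the model's own confidence rather than a calibrated probability.

```python
from typing import Optional


def answer_or_abstain(result: dict, min_score: float = 0.5) -> Optional[str]:
    """Return the answer text, or None if the pipeline score is below min_score.

    `result` is expected to have the shape printed above:
    {'score': float, 'start': int, 'end': int, 'answer': str}.
    """
    if result["score"] < min_score:
        return None
    return result["answer"].strip()


# The BERT-Tiny output shown above.
tiny_result = {"score": 0.134057879447937, "start": 76, "end": 80, "answer": "2019"}
print(answer_or_abstain(tiny_result))  # None
```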

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

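from transformers import TrainingArguments

args = TrainingArguments(
    "bert-tiny-mrqa",  # output_dir: where checkpoints are written
    save_strategy="epoch",  # save a checkpoint at the end of each epoch
    learning_rate=1e-5,
    num_train_epochs=1,
    weight_decay=0.01,
    per_device_train_batch_size=16,
)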

```

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, computed from the tokens that the predicted answer and the ground truth answer have in common.

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models because it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa), which had already been trained on MRQA.

# Limitations and Bias

The model is based on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

BrunoNogueira/DialoGPT-kungfupanda

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 10 | 2023-02-17T20:56:59Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: Wav2vec2-large-arabic-no-diacs_V1

results: []

---


# Wav2vec2-large-arabic-no-diacs_V1

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2894

- Wer: 0.2470
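
For readers unfamiliar with the metric, `Wer` here is taken to be the usual word error rate: the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. The function below is a minimal illustrative sketch with a hypothetical name; the reported value was presumably computed with a library metric during training, not with this code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One substitution and one deletion against a four-word reference.
print(word_error_rate("a b c d", "a x c"))  # 0.5
```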

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
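
The relationship between these values can be checked with a little arithmetic. The sketch assumes a single device, which the card does not state but which is consistent with 8 x 4 = 32; the helper name is hypothetical.

```python
def effective_batch_size(per_device: int, grad_accum_steps: int,
                         num_devices: int = 1) -> int:
    """Examples contributing to one optimizer update (hypothetical helper)."""
    return per_device * grad_accum_steps * num_devices


total = effective_batch_size(8, 4)   # train_batch_size x gradient_accumulation_steps
examples_seen = total * 5000         # over all training_steps, counting repeats
warmup_fraction = 500 / 5000         # share of steps spent in linear warmup
print(total, examples_seen, warmup_fraction)  # 32 160000 0.1
```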

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.6563 | 0.83 | 1000 | 0.5231 | 0.5077 |

| 0.4532 | 1.66 | 2000 | 0.3642 | 0.3588 |

| 0.2687 | 2.5 | 3000 | 0.3245 | 0.2920 |

| 0.1846 | 3.33 | 4000 | 0.3050 | 0.2640 |

| 0.1587 | 4.16 | 5000 | 0.2894 | 0.2470 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Bryan190/Aguy190

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | 2023-02-17T20:58:10Z |

---

license: creativeml-openrail-m

language:

- en

tags:

- embedding

- textual inversion

- isometric

- isometric dreams

---

# Isometric Dreams TEXTUAL INVERSION HUB

It won't let me upload a zip file or the text files, so this is the WHOLE LOT.

# coffee is nice: https://ko-fi.com/DUSKFALLcrew

Yea we're low on funds and need to keep up with the insanity of making things :3

# Individual files are on Civitai

https://civitai.com/models/9064/isometric-ti-sets

https://civitai.com/models/9265/isometric-ti-sets-pt-2

https://civitai.com/models/9666/isometric-dreams-15-ti-set-3

https://civitai.com/models/9062/isometric-dreams-15-2000-ti

https://civitai.com/models/9060/isometric-ti-4000

https://civitai.com/models/9059/isometric-dreams-ti-4400

https://civitai.com/models/9058/isometricdreams-3900

https://civitai.com/models/8132/isometricdreams-300

|

Brykee/BrykeeBot

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | 2023-02-17T21:03:42Z |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/deberta-v3-xsmall-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 65.58

name: Eval EM

- type: f1

value: 77.17

name: Eval F1

- type: exact_match

value: 50.92

name: Test EM

- type: f1

value: 62.58

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** DeBERTa-v3-xsmall-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [DeBERTa-v3-xsmall](https://huggingface.co/microsoft/deberta-v3-xsmall)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 5:19:05 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/deberta-v3-xsmall-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.8907278776168823,

# 'start': 29,

# 'end': 68,

# 'answer': ' Machine Reading for Question Answering'

# }

```
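
Note that the returned span starts at offset 29 and the answer carries a leading space. If clean text and offsets are needed downstream, the span can be trimmed after the fact. The helper below is a hypothetical sketch, and the context is shortened to the first sentence fragment of the example above.

```python
from typing import Tuple


def trimmed_span(context: str, start: int, end: int) -> Tuple[str, int, int]:
    """Strip surrounding whitespace from context[start:end] and adjust offsets."""
    raw = context[start:end]
    left = len(raw) - len(raw.lstrip())
    right = len(raw) - len(raw.rstrip())
    return raw.strip(), start + left, end - right


# Shortened copy of the example context; offsets are unchanged by the truncation.
context = ("We present the results of the Machine Reading for Question "
           "Answering (MRQA) 2019 shared task")
print(trimmed_span(context, 29, 68))
# ('Machine Reading for Question Answering', 30, 68)
```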

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

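from transformers import TrainingArguments

args = TrainingArguments(
    "deberta-v3-xsmall-mrqa",  # output_dir: where checkpoints are written
    save_strategy="epoch",  # save a checkpoint at the end of each epoch
    learning_rate=1e-5,
    num_train_epochs=1,
    weight_decay=0.01,
    per_device_train_batch_size=16,
)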

```

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, computed from the tokens that the predicted answer and the ground truth answer have in common.

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models because it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa), which had already been trained on MRQA.

# Limitations and Bias

The model is based on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.
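The eval-versus-test gap mentioned above can be read straight off the Model Family Performance table. The snippet below is a minimal sketch of that arithmetic; the F1 values are copied from the table, while the helper name `generalization_gap` is only illustrative.

```python
# (Eval F1, Test F1) pairs copied from the Model Family Performance table.
scores = {
    "DeBERTa-v3-Large": (86.23, 75.22),
    "ELECTRA-Small": (69.38, 51.56),
    "RoBERTa-Large": (84.38, 72.88),
}


def generalization_gap(eval_f1, test_f1):
    """Return the eval-minus-test F1 gap in absolute points."""
    return round(eval_f1 - test_f1, 2)


gaps = {name: generalization_gap(*pair) for name, pair in scores.items()}
for name, gap in gaps.items():
    print(f"{name}: test F1 is {gap:.2f} points below eval F1")
```

In this small sample the smallest model shows the widest gap, which is in line with the caution about out-of-distribution questions.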

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

Bryson575x/riceboi

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | 2023-02-17T21:04:04Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 223.70 +/- 73.03

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

BumBelDumBel/TRUMP

|

[

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"transformers",

"generated_from_trainer",

"license:mit"

] |

text-generation

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 5 | null |

Access to model s-1-n-t-h/bart-summarizer is restricted and you are not in the authorized list. Visit https://huggingface.co/s-1-n-t-h/bart-summarizer to ask for access.

|

BumBelDumBel/ZORK-AI-TEST

|

[

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"transformers",

"generated_from_trainer",

"license:mit"

] |

text-generation

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 9 | null |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/deberta-v3-large-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 76.08

name: Eval EM

- type: f1

value: 86.23

name: Eval F1

- type: exact_match

value: 64.27

name: Test EM

- type: f1

value: 75.22

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** DeBERTa-v3-Large-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [DeBERTa-v3-Large](https://huggingface.co/microsoft/deberta-v3-large)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 38:36:13 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/deberta-v3-large-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.9785431623458862,

# 'start': 29,

# 'end': 68,

# 'answer': ' Machine Reading for Question Answering'

# }

```
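The `start` and `end` values in the output appear to be character offsets into `context`, with `end` exclusive. The sketch below checks this against the sample output above without loading the model: the `result` dictionary is copied from that output (score omitted) and the context is shortened to its first sentence, so nothing here is a fresh prediction.

```python
# First sentence of the context used above; the offsets are unchanged by the truncation.
context = (
    "We present the results of the Machine Reading for Question Answering "
    "(MRQA) 2019 shared task."
)

# Copied from the sample output above, not produced by running the model here.
result = {"start": 29, "end": 68, "answer": " Machine Reading for Question Answering"}

# Slicing the context with the offsets reproduces the answer string.
span = context[result["start"]:result["end"]]

# The span starts at the space before "Machine", so strip it for display.
clean_answer = span.strip()

print(repr(span))
print(repr(clean_answer))
```

Stripping whitespace before comparing or displaying answers avoids treating the leading space as part of the prediction.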

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

from transformers import TrainingArguments

args = TrainingArguments(

"deberta-v3-large-mrqa",

save_strategy="epoch",

learning_rate=1e-5,

num_train_epochs=1,

weight_decay=0.01,

per_device_train_batch_size=8,

)

```
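As a rough sanity check on these settings, the number of optimizer steps in the single epoch can be estimated from the stated training data size. This is a sketch under assumptions that the card does not confirm: one training feature per example (long contexts split into several features would increase the count), no gradient accumulation, and the final partial batch kept.

```python
import math

num_examples = 516_819        # training data size stated in Model Details
per_device_batch_size = 8     # per_device_train_batch_size above
num_devices = 1               # the card reports a single V100
num_epochs = 1                # num_train_epochs above

# Assumption: one feature per example and no gradient accumulation.
steps_per_epoch = math.ceil(num_examples / (per_device_batch_size * num_devices))
total_steps = steps_per_epoch * num_epochs

# Training time 38:36:13 from Model Details, converted to seconds.
training_seconds = 38 * 3600 + 36 * 60 + 13
seconds_per_step = training_seconds / total_steps

print(total_steps, round(seconds_per_step, 2))
```

Under these assumptions the run comes to roughly 64,600 steps at a little over two seconds per step.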

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, which measures the overlap between the predicted answer and the ground truth answer.
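To make the two metrics concrete, here is a minimal sketch of how they are commonly computed for extractive question answering. It assumes SQuAD-style answer normalization (lowercasing, dropping punctuation and English articles); the card does not state which evaluation script was used, so the function names and normalization rules are illustrative.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, truth):
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction, truth):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    if not pred_tokens or not true_tokens:
        return float(pred_tokens == true_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


truth = "Machine Reading for Question Answering"
too_long = "the Machine Reading for Question Answering (MRQA) 2019 shared task"

em_exact = exact_match(" Machine Reading for Question Answering", truth)
em_long = exact_match(too_long, truth)
f1_long = f1_score(too_long, truth)
print(em_exact, em_long, round(f1_long, 4))
```

A prediction that contains the answer plus extra words scores zero on EM but still earns partial credit on F1, which is why the F1 column in the table is consistently higher than the EM column.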

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models since it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa) that was already trained on MRQA.
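The time columns mix `H:MM:SS` and shorter values, so comparing rows is easier after converting everything to seconds. The helper below is a small sketch that assumes two-part values such as `26:11` mean minutes and seconds; the function name `to_seconds` is hypothetical.

```python
def to_seconds(duration):
    """Convert 'H:MM:SS' or 'MM:SS' into a number of seconds."""
    seconds = 0
    for part in duration.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


# Training times copied from the table above.
training_time = {
    "BERT-Tiny": "26:11",
    "RoBERTa-Base": "8:50:29",
    "DeBERTa-v3-Large": "38:36:13",
}

seconds = {name: to_seconds(value) for name, value in training_time.items()}
slowdown = seconds["DeBERTa-v3-Large"] / seconds["RoBERTa-Base"]

print(seconds)
print(f"DeBERTa-v3-Large took about {slowdown:.2f}x as long to train as RoBERTa-Base")
```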

# Limitations and Bias

The model was trained on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

BumBelDumBel/ZORK_AI_FANTASY

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | 2023-02-17T21:11:00Z |

---

license: apache-2.0

language:

- bn

Tags:

- paraphrase

tags:

- bangla

- semantics

- paraphrase

---

|

CALM/backup

|

[

"lean_albert",

"transformers"

] | null |

{

"architectures": [

"LeanAlbertForPretraining",

"LeanAlbertForTokenClassification",

"LeanAlbertForSequenceClassification"

],

"model_type": "lean_albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | 2023-02-17T21:20:52Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="Yelinz/FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
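The snippet above only shows that the loaded object is a dictionary with an `env_id` entry. As a hedged illustration of how such a model is typically used, the sketch below round-trips a stand-in dictionary through `pickle` and picks the greedy action for each state. The `qtable` key and its values are assumptions made up for this example; they are not taken from the actual `q-learning.pkl` file.

```python
import pickle

# Hypothetical stand-in for the unpickled model; only "env_id" is confirmed by the card.
model = {
    "env_id": "FrozenLake-v1",
    "qtable": [
        [0.0, 0.9, 0.1, 0.0],  # made-up action values for state 0
        [0.2, 0.0, 0.7, 0.1],  # made-up action values for state 1
    ],
}

# Round-trip through pickle in memory to mimic saving and loading the file.
restored = pickle.loads(pickle.dumps(model))


def greedy_action(qtable, state):
    """Return the index of the highest-valued action for a state."""
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


actions = [greedy_action(restored["qtable"], s) for s in range(len(restored["qtable"]))]
print(restored["env_id"], actions)
```

Unpickling can execute arbitrary code, so it is safer to load `.pkl` files only from repositories you trust.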

|

CAMeL-Lab/bert-base-arabic-camelbert-ca-ner

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 85 | null |

---

license: mit

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: gpt-neo-2.7B-imdb

results: []

---


# gpt-neo-2.7B-imdb

This model is a fine-tuned version of [EleutherAI/gpt-neo-2.7B](https://huggingface.co/EleutherAI/gpt-neo-2.7B) on the imdb dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0
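Two of the values above are linked: the total train batch size is the per-device batch size multiplied by the gradient accumulation steps and the number of devices. The sketch below reproduces that arithmetic and the shape of a linear schedule. It assumes a single device and no warmup, and `total_steps=1000` is a made-up figure used only to show the decay.

```python
train_batch_size = 2
gradient_accumulation_steps = 4
num_devices = 1  # assumption: not stated in the card

total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices


def linear_lr(step, total_steps, base_lr=5e-05, warmup_steps=0):
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * (step / max(1, warmup_steps))
    remaining = max(0, total_steps - step)
    return base_lr * (remaining / max(1, total_steps - warmup_steps))


total_steps = 1000  # hypothetical, for illustration only
schedule = [linear_lr(step, total_steps) for step in (0, 500, 1000)]
print(total_train_batch_size, schedule)
```

With these assumptions the learning rate starts at 5e-05, reaches half of that at the midpoint, and ends at zero.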

### Training results

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu117

- Datasets 2.9.0

- Tokenizers 0.13.2

|

CAMeL-Lab/bert-base-arabic-camelbert-ca-pos-egy

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 16,451 | null |

---

license: mit

---

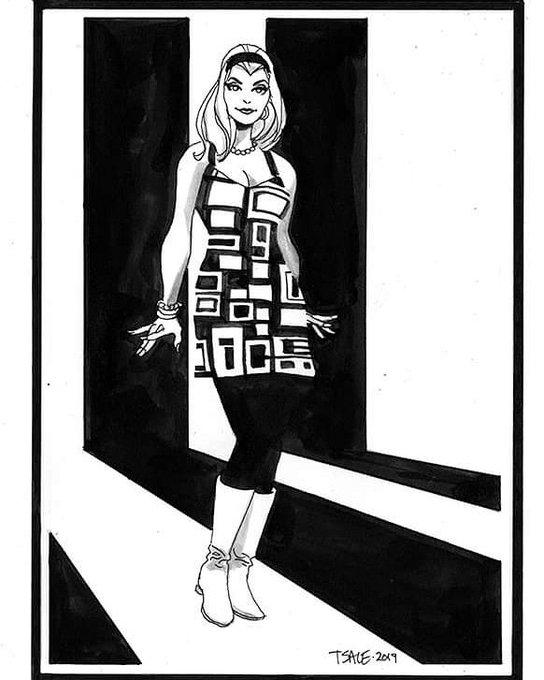

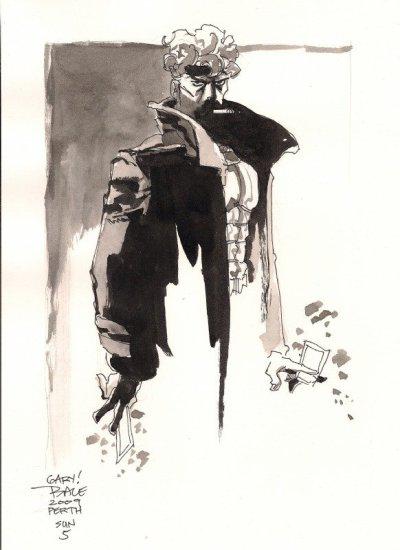

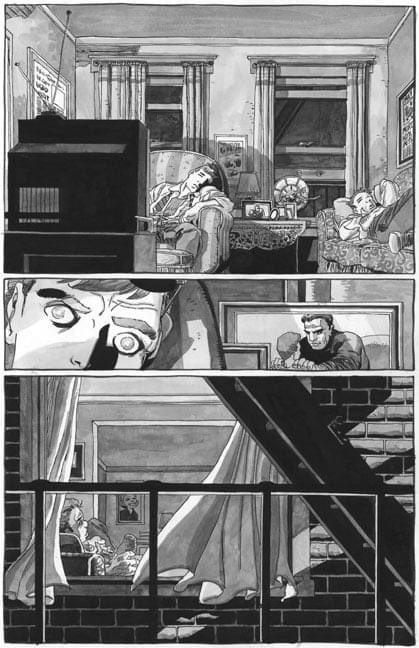

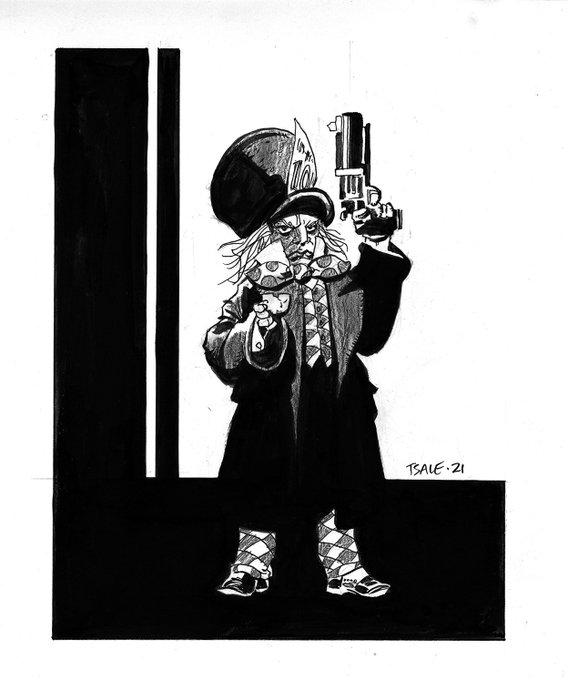

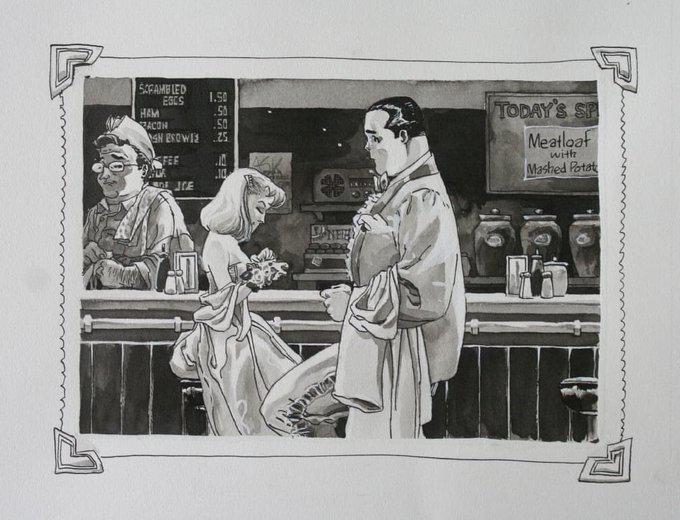

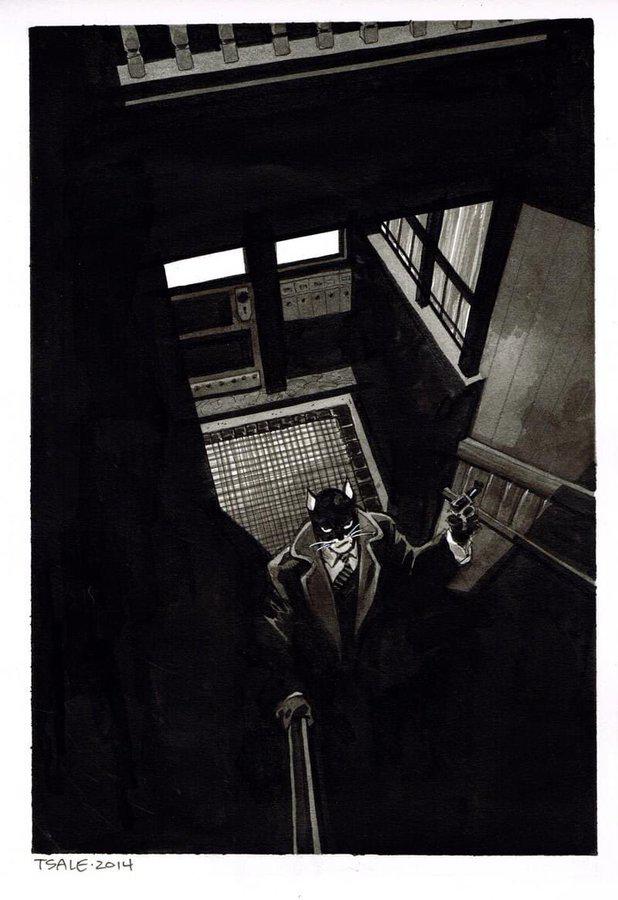

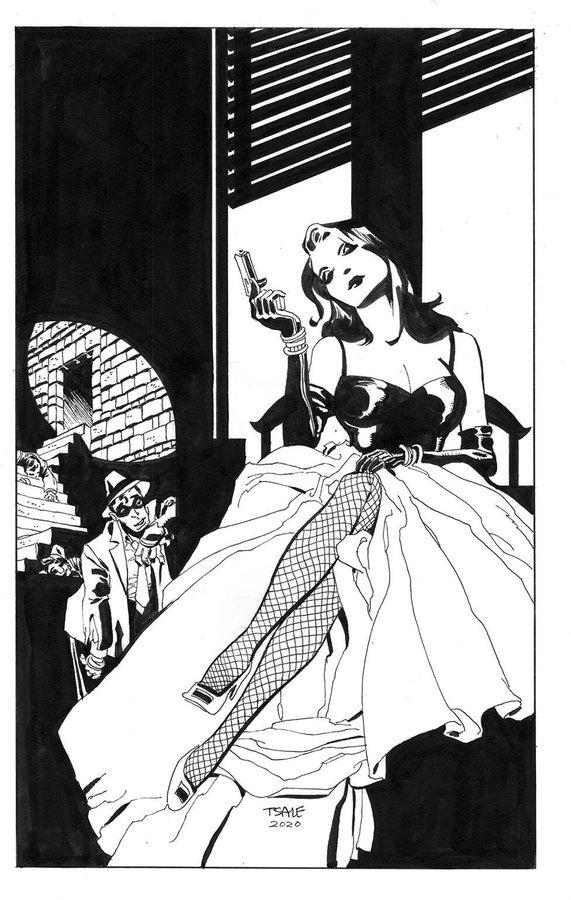

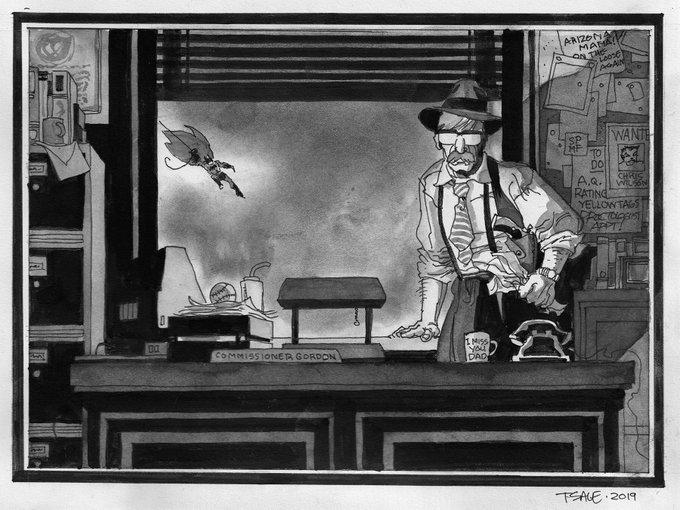

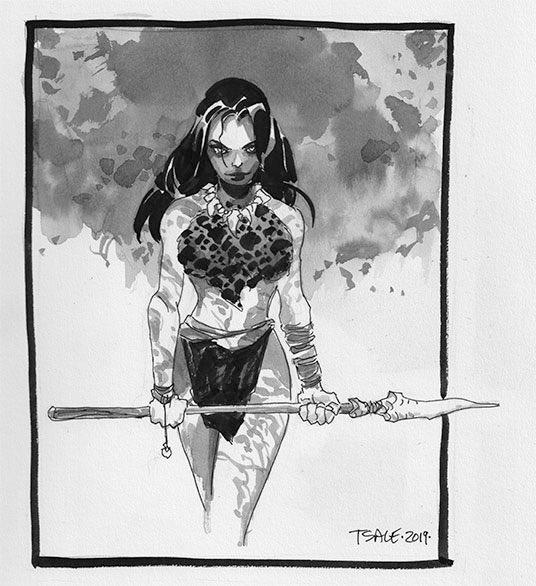

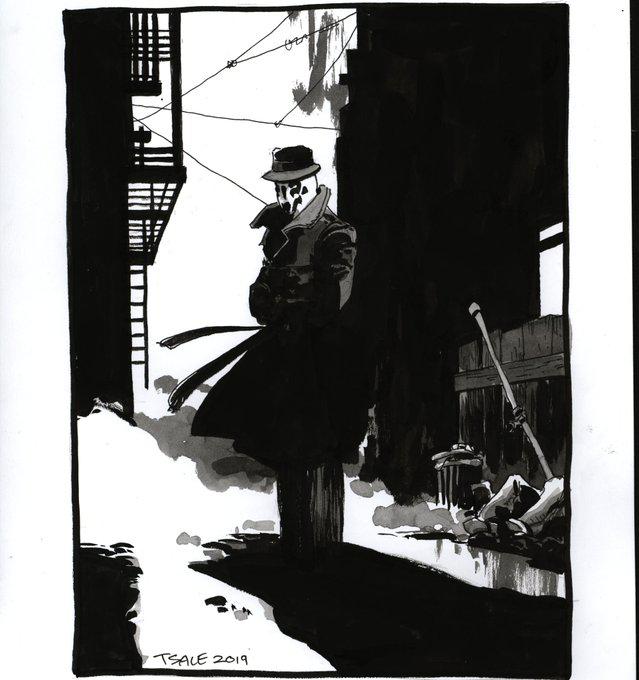

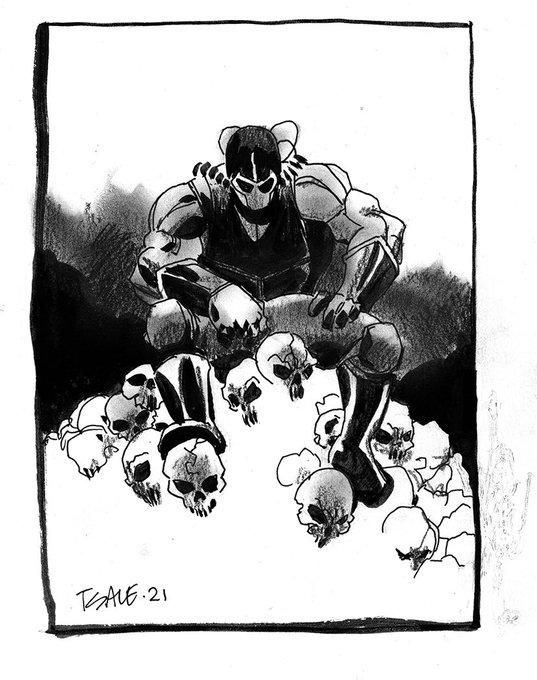

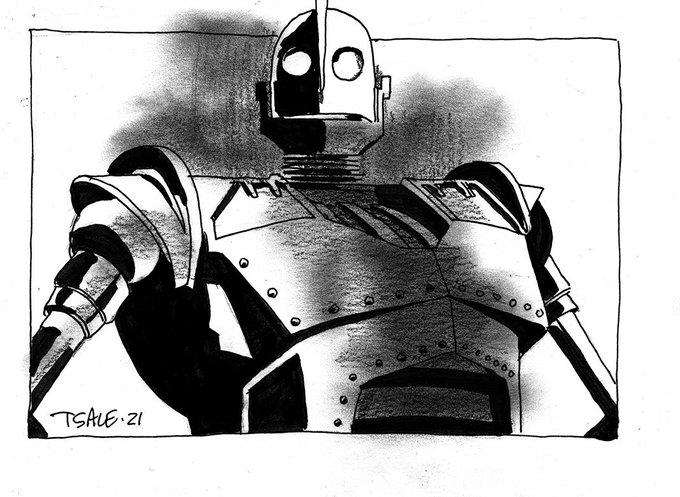

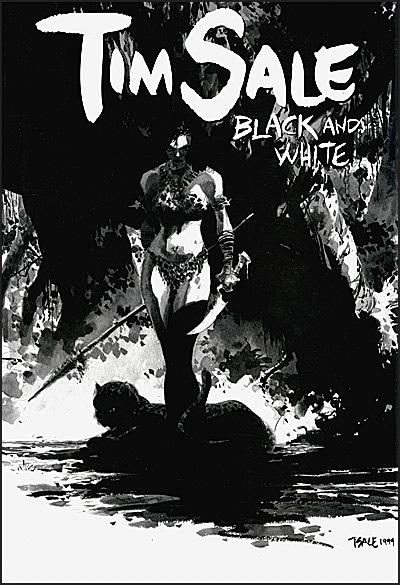

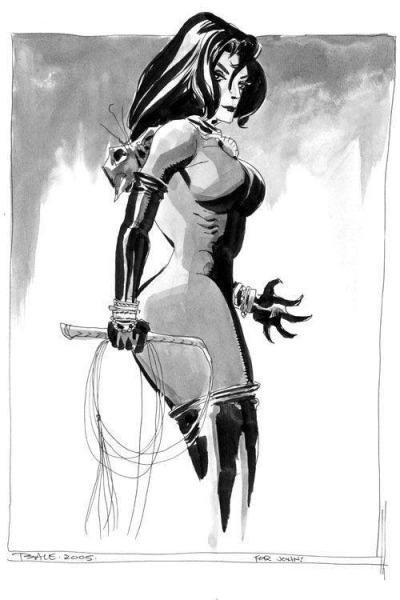

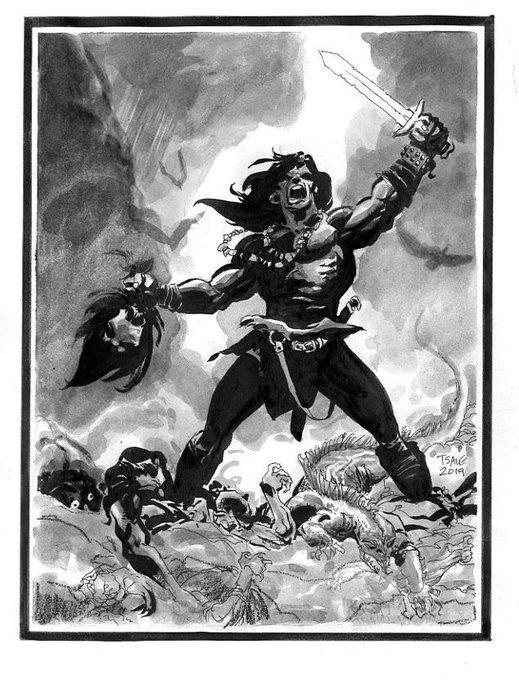

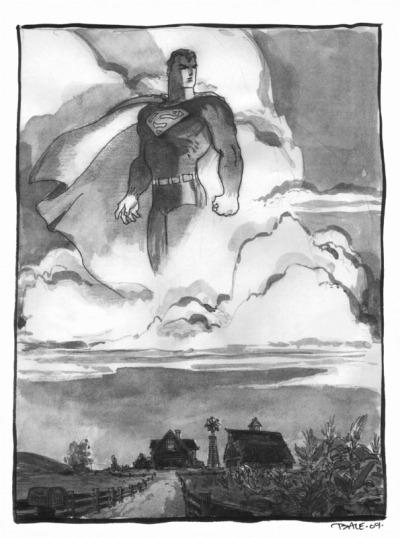

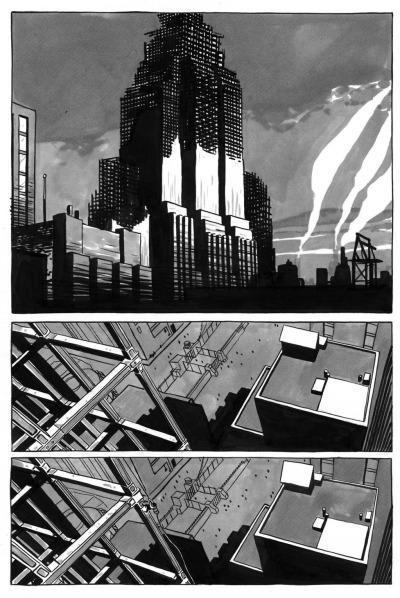

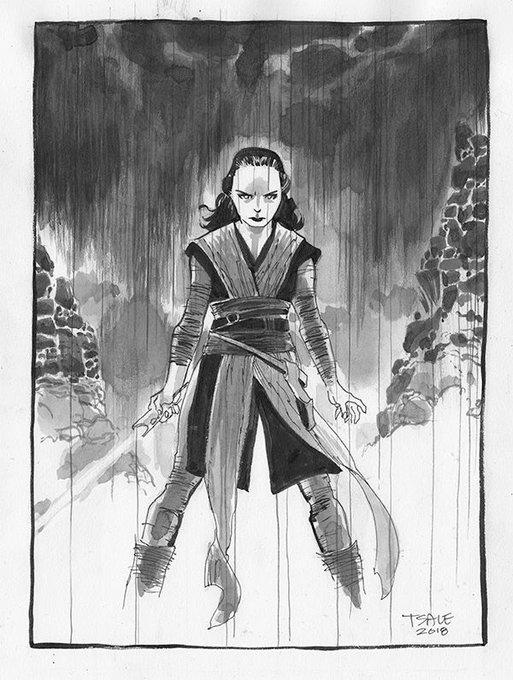

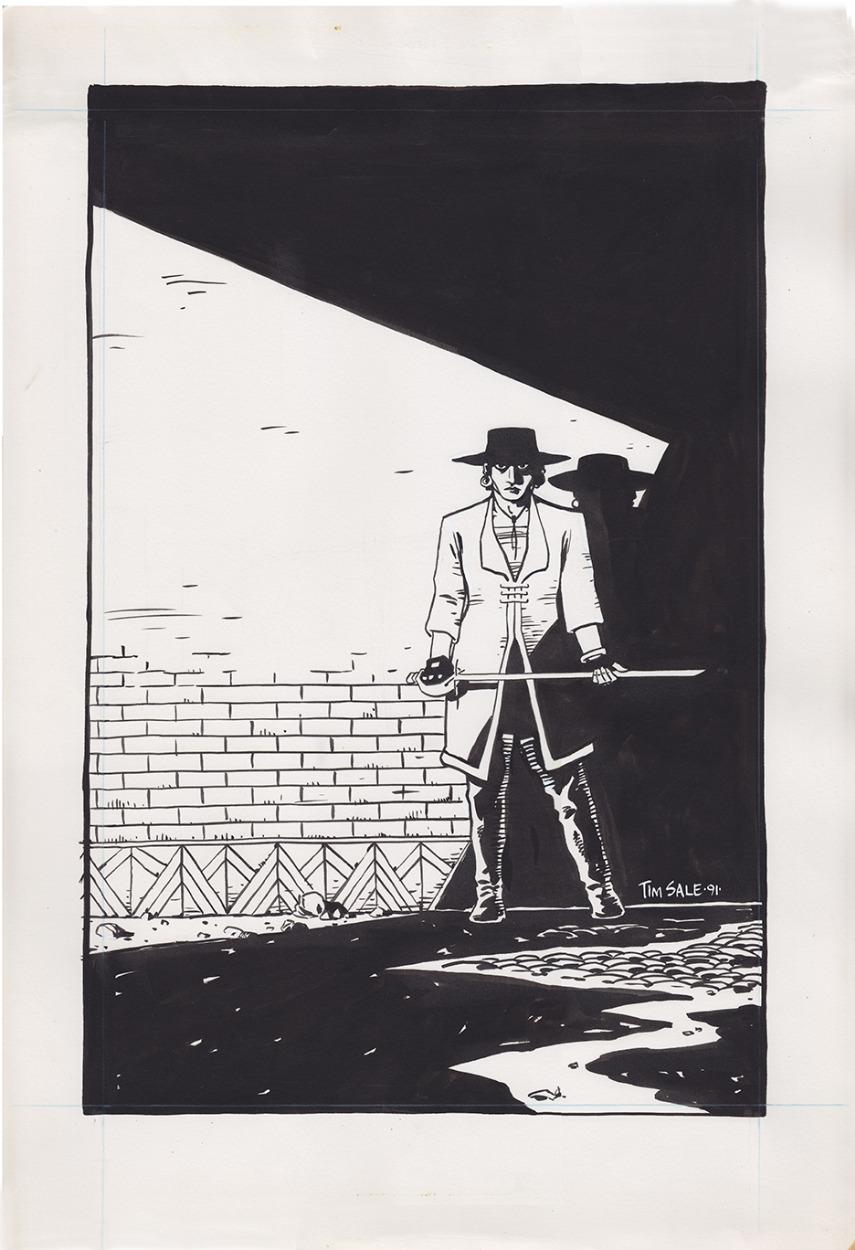

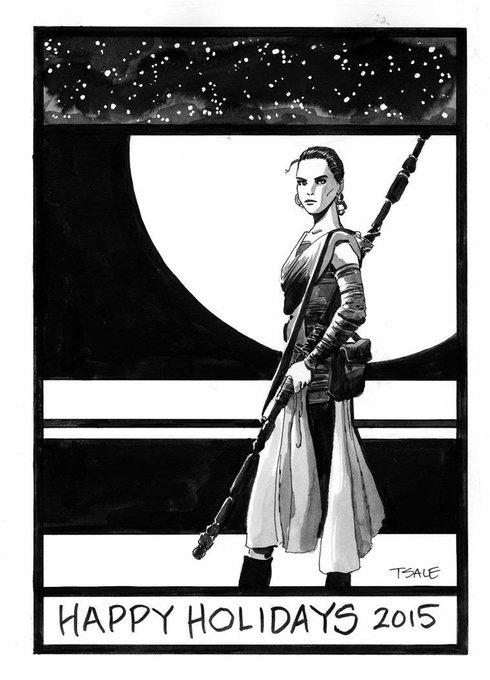

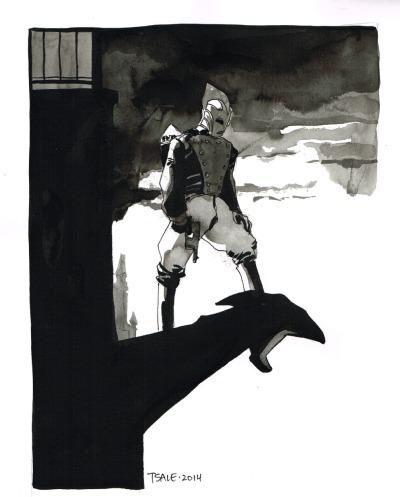

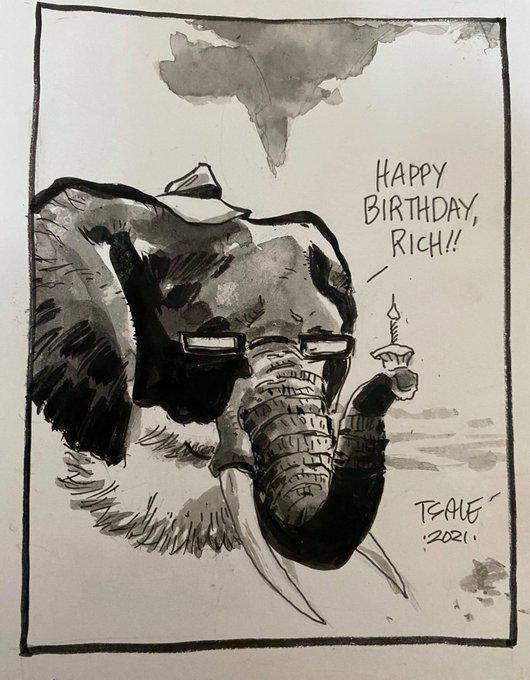

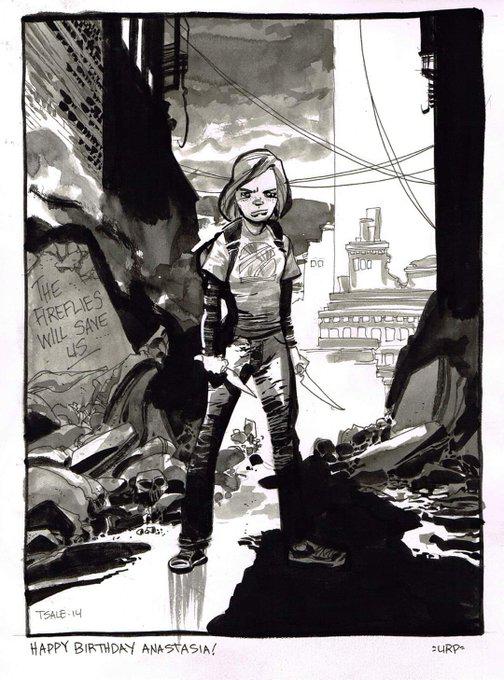

### tim-sale1 on Stable Diffusion

This is the `<cat-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

CAMeL-Lab/bert-base-arabic-camelbert-ca-pos-msa

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 71 | null |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 238.75 +/- 22.75

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CAMeL-Lab/bert-base-arabic-camelbert-ca

|

[

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

fill-mask

|

{

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 580 | null |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -1.37 +/- 0.34

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-pos-egy

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 32 | 2023-02-17T21:38:08Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SnowballTarget

library_name: ml-agents

---

# **ppo** Agent playing **SnowballTarget**

This is a trained model of a **ppo** agent playing **SnowballTarget** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-SnowballTarget

2. Step 1: Write your model_id: menoua/ML-Agents-SnowballTarget

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

CAMeL-Lab/bert-base-arabic-camelbert-da-pos-msa

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 27 | 2023-02-17T21:40:58Z |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/minilmv2-l6-h384-from-bert-large-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 59.31

name: Eval EM

- type: f1

value: 71.09

name: Eval F1

- type: exact_match

value: 41.78

name: Test EM

- type: f1

value: 53.30

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** MiniLMv2-L6-H384-from-BERT-Large-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [MiniLMv2-L6-H384-distilled-from-BERT-Large](https://huggingface.co/nreimers/MiniLMv2-L6-H384-distilled-from-BERT-Large)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 2:12:48 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/minilmv2-l6-h384-from-bert-large-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.30876627564430237,

# 'start': 30,

# 'end': 68,

# 'answer': 'Machine Reading for Question Answering'

# }

```

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

from transformers import TrainingArguments

args = TrainingArguments(

"minilmv2-l6-h384-from-bert-large-mrqa",

save_strategy="epoch",

learning_rate=1e-5,

num_train_epochs=1,

weight_decay=0.01,

per_device_train_batch_size=16,

)

```

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

Exact match (EM): The percentage of questions for which the model produces an exact match with the ground truth answer.

F1 score: The harmonic mean of precision and recall, which measures the overlap between the predicted answer and the ground truth answer.
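The scores reported for a whole dataset are averages over questions, and a question may have several acceptable answers. The sketch below shows one common convention, taking the best score over the reference answers for each question and then averaging as a percentage. It uses a simplified normalization (lowercasing and whitespace splitting only), and whether the card's numbers were aggregated exactly this way is an assumption.

```python
from collections import Counter


def token_f1(prediction, truth):
    """Token-level F1 with a simplified lowercase, whitespace tokenization."""
    pred, gold = prediction.lower().split(), truth.lower().split()
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def corpus_scores(predictions, references):
    """Average the best per-question EM and F1 over all reference answers."""
    em_total = f1_total = 0.0
    for pred, golds in zip(predictions, references):
        em_total += max(float(pred.lower().strip() == g.lower().strip()) for g in golds)
        f1_total += max(token_f1(pred, g) for g in golds)
    count = len(predictions)
    return 100 * em_total / count, 100 * f1_total / count


# Toy predictions and references, made up for illustration.
predictions = ["Machine Reading for Question Answering", "ten teams"]
references = [
    ["Machine Reading for Question Answering", "MRQA"],
    ["Ten teams submitted systems", "Ten"],
]

em, f1 = corpus_scores(predictions, references)
print(f"EM = {em:.2f}, F1 = {f1:.2f}")
```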

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models since it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa) that was already trained on MRQA.
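One way to read the table for a distilled model is to ask how much of the larger model's score it keeps relative to its size. The sketch below does this for the MiniLMv2-L6-H384-from-BERT-Large and BERT-Large rows, using the parameter counts and Eval F1 values from the table; the variable names are illustrative.

```python
# (number of parameters, Eval F1) copied from the table above.
rows = {
    "BERT-Large": (334_094_338, 80.50),
    "MiniLMv2-L6-H384-from-BERT-Large": (22_566_146, 71.09),
}

large_params, large_f1 = rows["BERT-Large"]
small_params, small_f1 = rows["MiniLMv2-L6-H384-from-BERT-Large"]

# Share of the larger model's parameters and Eval F1, both as percentages.
param_share = 100 * small_params / large_params
f1_retained = 100 * small_f1 / large_f1

print(f"{param_share:.2f}% of the parameters, {f1_retained:.2f}% of the Eval F1")
```

On these numbers the smaller model keeps most of the Eval F1 with a small fraction of the parameters, although the Test columns show a wider gap.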

# Limitations and Bias

The model was trained on a large and diverse dataset, but it may still have limitations and biases in certain areas. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

CAMeL-Lab/bert-base-arabic-camelbert-da

|

[

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

fill-mask

|

{

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 449 | 2023-02-17T21:42:19Z |

---

license: apache-2.0

datasets:

- mrqa

language:

- en

metrics:

- exact_match

- f1

model-index:

- name: VMware/minilmv2-l6-h384-from-roberta-large-mrqa

results:

- task:

type: Question-Answering

dataset:

type: mrqa

name: MRQA

metrics:

- type: exact_match

value: 59.27

name: Eval EM

- type: f1

value: 70.64

name: Eval F1

- type: exact_match

value: 42.95

name: Test EM

- type: f1

value: 54.03

name: Test F1

---

This model release is part of a joint research project with Howard University's Innovation Foundry/AIM-AHEAD Lab.

# Model Details

- **Model name:** MiniLMv2-L6-H384-from-RoBERTa-Large-MRQA

- **Model type:** Extractive Question Answering

- **Parent Model:** [MiniLMv2-L6-H384-distilled-from-RoBERTa-Large](https://huggingface.co/nreimers/MiniLMv2-L6-H384-distilled-from-RoBERTa-Large)

- **Training dataset:** [MRQA](https://huggingface.co/datasets/mrqa) (Machine Reading for Question Answering)

- **Training data size:** 516,819 examples

- **Training time:** 2:15:10 on 1 Nvidia V100 32GB GPU

- **Language:** English

- **Framework:** PyTorch

- **Model version:** 1.0

# Intended Use

This model is intended to provide accurate answers to questions based on context passages. It can be used for a variety of tasks, including question-answering for search engines, chatbots, customer service systems, and other applications that require natural language understanding.

# How to Use

```python

from transformers import pipeline

question_answerer = pipeline("question-answering", model='VMware/minilmv2-l6-h384-from-roberta-large-mrqa')

context = "We present the results of the Machine Reading for Question Answering (MRQA) 2019 shared task on evaluating the generalization capabilities of reading comprehension systems. In this task, we adapted and unified 18 distinct question answering datasets into the same format. Among them, six datasets were made available for training, six datasets were made available for development, and the final six were hidden for final evaluation. Ten teams submitted systems, which explored various ideas including data sampling, multi-task learning, adversarial training and ensembling. The best system achieved an average F1 score of 72.5 on the 12 held-out datasets, 10.7 absolute points higher than our initial baseline based on BERT."

question = "What is MRQA?"

result = question_answerer(question=question, context=context)

print(result)

# {

# 'score': 0.5269668698310852,

# 'start': 30,

# 'end': 68,

# 'answer': 'Machine Reading for Question Answering'

# }

```

# Training Details

The model was trained for 1 epoch on the MRQA training set.

## Training Hyperparameters

```python

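from transformers import TrainingArguments

# Output directory first, then the hyperparameters used for the single training epoch.
args = TrainingArguments(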

"minilmv2-l6-h384-from-roberta-large-mrqa",

save_strategy="epoch",

learning_rate=1e-5,

num_train_epochs=1,

weight_decay=0.01,

per_device_train_batch_size=16,

)

```
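As a rough sanity check on these settings, the number of optimizer steps and the average throughput can be estimated from the figures reported above. This is only a sketch: it assumes one training feature per example and no gradient accumulation, neither of which the card states. In practice, long contexts are usually split into several features, so the real step count is likely higher.

```python
import math

# Figures taken from the model card.
num_examples = 516_819           # training data size
batch_size = 16                  # per_device_train_batch_size on 1 GPU
hours, minutes, seconds = 2, 15, 10  # reported training time

# Assumption: one feature per example and no gradient accumulation.
steps = math.ceil(num_examples / batch_size)
train_seconds = hours * 3600 + minutes * 60 + seconds
steps_per_second = steps / train_seconds

print(f"estimated steps: {steps}")
print(f"training time:   {train_seconds} s")
print(f"throughput:      {steps_per_second:.2f} steps/s (lower bound under the assumption above)")
```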

# Evaluation Metrics

The model was evaluated using standard metrics for question-answering models, including:

- Exact match (EM): The percentage of questions for which the predicted answer exactly matches a ground-truth answer.

- F1 score: The harmonic mean of precision and recall, computed over the tokens shared by the predicted answer and the ground-truth answer.
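The two metrics can be sketched for a single prediction as follows. This follows the common SQuAD-style convention (lowercasing, stripping punctuation and English articles, then comparing tokens). The card does not say which evaluation script was actually used, so the normalization rules and the function names `normalize_answer`, `exact_match`, and `f1_score` are assumptions for illustration.

```python
import re
import string
from collections import Counter


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(ground_truth))


def f1_score(prediction: str, ground_truth: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(ground_truth).split()
    if not pred_tokens or not gold_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


gold = "Machine Reading for Question Answering"
print(exact_match("the Machine Reading for Question Answering", gold))
print(round(f1_score("Machine Reading", gold), 4))
```

The reported scores are these per-question values averaged over the dataset and expressed as percentages. When a question has several reference answers, the usual convention is to take the maximum over them.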

# Model Family Performance

| Parent Language Model | Number of Parameters | Training Time | Eval Time | Test Time | Eval EM | Eval F1 | Test EM | Test F1 |

|---|:-:|:-:|:-:|:-:|:-:|:-:|:-:|:-:|

| BERT-Tiny | 4,369,666 | 26:11 | 0:41 | 0:04 | 22.78 | 32.42 | 10.18 | 18.72 |

| BERT-Base | 108,893,186 | 8:39:10 | 18:42 | 2:13 | 64.48 | 76.14 | 48.89 | 59.89 |

| BERT-Large | 334,094,338 | 28:35:38 | 1:00:56 | 7:14 | 69.52 | 80.50 | 55.00 | 65.78 |

| DeBERTa-v3-Extra-Small | 70,682,882 | 5:19:05 | 11:29 | 1:16 | 65.58 | 77.17 | 50.92 | 62.58 |

| DeBERTa-v3-Base | 183,833,090 | 12:13:41 | 28:18 | 3:09 | 71.43 | 82.59 | 59.49 | 70.46 |

| DeBERTa-v3-Large | 434,014,210 | 38:36:13 | 1:25:47 | 9:33 | **76.08** | **86.23** | **64.27** | **75.22** |

| ELECTRA-Small | 13,483,522 | 2:16:36 | 3:55 | 0:27 | 57.63 | 69.38 | 38.68 | 51.56 |

| ELECTRA-Base | 108,893,186 | 8:40:57 | 18:41 | 2:12 | 68.78 | 80.16 | 54.70 | 65.80 |

| ELECTRA-Large | 334,094,338 | 28:31:59 | 1:00:40 | 7:13 | 74.15 | 84.96 | 62.35 | 73.28 |

| MiniLMv2-L6-H384-from-BERT-Large | 22,566,146 | 2:12:48 | 4:23 | 0:40 | 59.31 | 71.09 | 41.78 | 53.30 |

| MiniLMv2-L6-H768-from-BERT-Large | 66,365,954 | 4:42:59 | 10:01 | 1:10 | 64.27 | 75.84 | 49.05 | 59.82 |

| MiniLMv2-L6-H384-from-RoBERTa-Large | 30,147,842 | 2:15:10 | 4:19 | 0:30 | 59.27 | 70.64 | 42.95 | 54.03 |

| MiniLMv2-L12-H384-from-RoBERTa-Large | 40,794,626 | 4:14:22 | 8:27 | 0:58 | 64.58 | 76.23 | 51.28 | 62.83 |

| MiniLMv2-L6-H768-from-RoBERTa-Large | 81,529,346 | 4:39:02 | 9:34 | 1:06 | 65.80 | 77.17 | 51.72 | 63.27 |

| TinyRoBERTa | 81,529,346 | 4:27:06\* | 9:54 | 1:04 | 69.38 | 80.07 | 53.29 | 64.16 |

| RoBERTa-Base | 124,056,578 | 8:50:29 | 18:59 | 2:11 | 69.06 | 80.08 | 55.53 | 66.49 |

| RoBERTa-Large | 354,312,194 | 29:16:06 | 1:01:10 | 7:04 | 74.08 | 84.38 | 62.20 | 72.88 |

\* TinyRoBERTa's training time isn't directly comparable to the other models since it was distilled from [VMware/roberta-large-mrqa](https://huggingface.co/VMware/roberta-large-mrqa) that was already trained on MRQA.

# Limitations and Bias

The model was trained on a large and diverse dataset, but it may still have limitations and biases. Some limitations include:

- Language: The model is designed to work with English text only and may not perform as well on other languages.

- Domain-specific knowledge: The model has been trained on a general dataset and may not perform well on questions that require domain-specific knowledge.

- Out-of-distribution questions: The model may struggle with questions that are outside the scope of the MRQA dataset. This is best demonstrated by the delta between its scores on the eval vs test datasets.
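The eval-versus-test gap mentioned in the last item can be computed directly from the scores reported for this model in the performance table. This is a minimal sketch, and the variable names are illustrative only.

```python
# Scores for MiniLMv2-L6-H384-from-RoBERTa-Large, copied from the table above.
scores = {
    "eval": {"em": 59.27, "f1": 70.64},
    "test": {"em": 42.95, "f1": 54.03},
}

# Absolute drop in points when moving from the eval sets to the test sets.
gaps = {
    metric: round(scores["eval"][metric] - scores["test"][metric], 2)
    for metric in ("em", "f1")
}

for metric, gap in gaps.items():
    print(f"{metric.upper()} drop from eval to test: {gap:.2f} points")
```

Both metrics drop by roughly 16 points between the eval and test sets.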

In addition, the model may have some bias in terms of the data it was trained on. The dataset includes questions from a variety of sources, but it may not be representative of all populations or perspectives. As a result, the model may perform better or worse for certain types of questions or on certain types of texts.

|

CAMeL-Lab/bert-base-arabic-camelbert-mix-did-madar-corpus6

|

[

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] |

text-classification

|

{

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {