| modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |
|---|---|---|---|---|---|---|

Alessandro/model_name

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

language: en

thumbnail: http://www.huggingtweets.com/iwontsmthing1/1678830403864/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1605342956034064384/8CVvM3xW_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Хамовитий мопс</div>

<div style="text-align: center; font-size: 14px;">@iwontsmthing1</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Хамовитий мопс.

| Data | Хамовитий мопс |

| --- | --- |

| Tweets downloaded | 3247 |

| Retweets | 89 |

| Short tweets | 654 |

| Tweets kept | 2504 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3cze1uyx/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
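The counts in the table are consistent with a simple two-step filter. The check below is only a sketch: it assumes that retweets and short tweets are the only categories dropped, which the table suggests but the card does not state outright.

```python
# Counts copied from the training-data table above.
downloaded = 3247
retweets = 89
short_tweets = 654

# Assumption: retweets and short tweets are the only tweets filtered out.
kept = downloaded - retweets - short_tweets

print(f"kept {kept} of {downloaded} tweets ({kept / downloaded:.1%})")
```

The result matches the "Tweets kept" row of the table.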

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @iwontsmthing1's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/b6nhrz6u) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/b6nhrz6u/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/iwontsmthing1')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*


For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

AlexN/xls-r-300m-fr

|

[

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"fr",

"dataset:mozilla-foundation/common_voice_8_0",

"transformers",

"generated_from_trainer",

"hf-asr-leaderboard",

"mozilla-foundation/common_voice_8_0",

"robust-speech-event",

"model-index"

] |

automatic-speech-recognition

|

{

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 17 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: MultiLabel_V3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# MultiLabel_V3

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9683

- Accuracy: 0.7370

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.8572 | 0.1 | 100 | 1.1607 | 0.6466 |

| 0.8578 | 0.2 | 200 | 1.1956 | 0.6499 |

| 0.7362 | 0.3 | 300 | 1.1235 | 0.6885 |

| 0.8569 | 0.39 | 400 | 1.0460 | 0.6891 |

| 0.4851 | 0.49 | 500 | 1.1213 | 0.6891 |

| 0.7252 | 0.59 | 600 | 1.1512 | 0.6720 |

| 0.6333 | 0.69 | 700 | 1.1039 | 0.6913 |

| 0.6239 | 0.79 | 800 | 1.0636 | 0.7001 |

| 0.2768 | 0.89 | 900 | 1.0386 | 0.7073 |

| 0.4872 | 0.99 | 1000 | 1.0311 | 0.7062 |

| 0.3049 | 1.09 | 1100 | 1.0437 | 0.7155 |

| 0.1435 | 1.18 | 1200 | 1.0343 | 0.7222 |

| 0.2088 | 1.28 | 1300 | 1.0784 | 0.7194 |

| 0.4972 | 1.38 | 1400 | 1.1072 | 0.7166 |

| 0.3604 | 1.48 | 1500 | 1.0438 | 0.7150 |

| 0.2726 | 1.58 | 1600 | 1.0077 | 0.7293 |

| 0.3106 | 1.68 | 1700 | 1.0029 | 0.7326 |

| 0.3259 | 1.78 | 1800 | 0.9906 | 0.7310 |

| 0.3323 | 1.88 | 1900 | 0.9729 | 0.7359 |

| 0.2998 | 1.97 | 2000 | 0.9683 | 0.7370 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AlexN/xls-r-300m-pt

|

[

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"pt",

"dataset:mozilla-foundation/common_voice_8_0",

"transformers",

"robust-speech-event",

"mozilla-foundation/common_voice_8_0",

"generated_from_trainer",

"hf-asr-leaderboard",

"license:apache-2.0",

"model-index"

] |

automatic-speech-recognition

|

{

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 15 | null |

# Model Card for hestyle-controlnet

### Model Description

Scribble controlnet transferred Hestyle model.

- **Developed by:** Alethea.ai

- **Model type:** PyTorch Checkpoint

- **License:** [Will provide soon.]

- **Finetuned from model [optional]:** Hestyle

## Bias, Risks, and Limitations

[Will provide soon.]

### Recommendations

[Will provide soon.]

## Training Details

[Will provide soon.]

|

Alexander-Learn/bert-finetuned-ner-accelerate

|

[

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | 2023-03-14T22:07:00Z |

---

language:

- da

tags:

- generated_from_trainer

datasets:

- audiofolder

metrics:

- wer

model-index:

- name: Whisper Tiny Da - HollowVoice

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: audiofolder

type: audiofolder

config: default

split: train[-20%:]

args: default

metrics:

- name: Wer

type: wer

value: 86.49993452926542

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Tiny Da - HollowVoice

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the audiofolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5216

- Wer: 86.4999

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP
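The learning-rate hyperparameters above (base rate 1e-05, linear scheduler, 500 warmup steps, 4000 training steps) can be turned into a per-step value. The sketch below assumes the usual "linear warmup, then linear decay to zero" shape; the function name `linear_schedule_lr` is hypothetical and this is not the Trainer's own code.

```python
def linear_schedule_lr(step: int, base_lr: float = 1e-5,
                       warmup_steps: int = 500, total_steps: int = 4000) -> float:
    """Learning rate at a given optimizer step (assumed schedule shape)."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / warmup_steps
    # Linear decay from base_lr at the end of warmup down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 2250, 4000):
    print(step, linear_schedule_lr(step))
```

Under this assumption the rate peaks at step 500 and is halfway back to zero at step 2250.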

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0319 | 24.39 | 1000 | 0.5216 | 86.4999 |

| 0.0031 | 48.78 | 2000 | 0.5156 | 89.3545 |

| 0.0017 | 73.17 | 3000 | 0.5267 | 89.7342 |

| 0.0013 | 97.56 | 4000 | 0.5312 | 90.9781 |
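For readers unfamiliar with the Wer column, word error rate is the word-level edit distance between the reference and the predicted transcript, divided by the number of reference words. The sketch below is a dependency-free illustration, not the evaluation code used for this card. It returns a fraction, so it assumes the table values are that fraction multiplied by 100.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat", "the dog sat"))
```

Because insertions count as errors, the rate can exceed 1.0 (100%) when the hypothesis is much longer than the reference.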

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AlirezaBaneshi/testPersianQA

|

[

"pytorch",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

] |

question-answering

|

{

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | null |

---

license: creativeml-openrail-m

language:

- en

tags:

- LoRA

- Lycoris

- stable diffusion

- ffxiv

- final fantasy xiv

- meteion

---

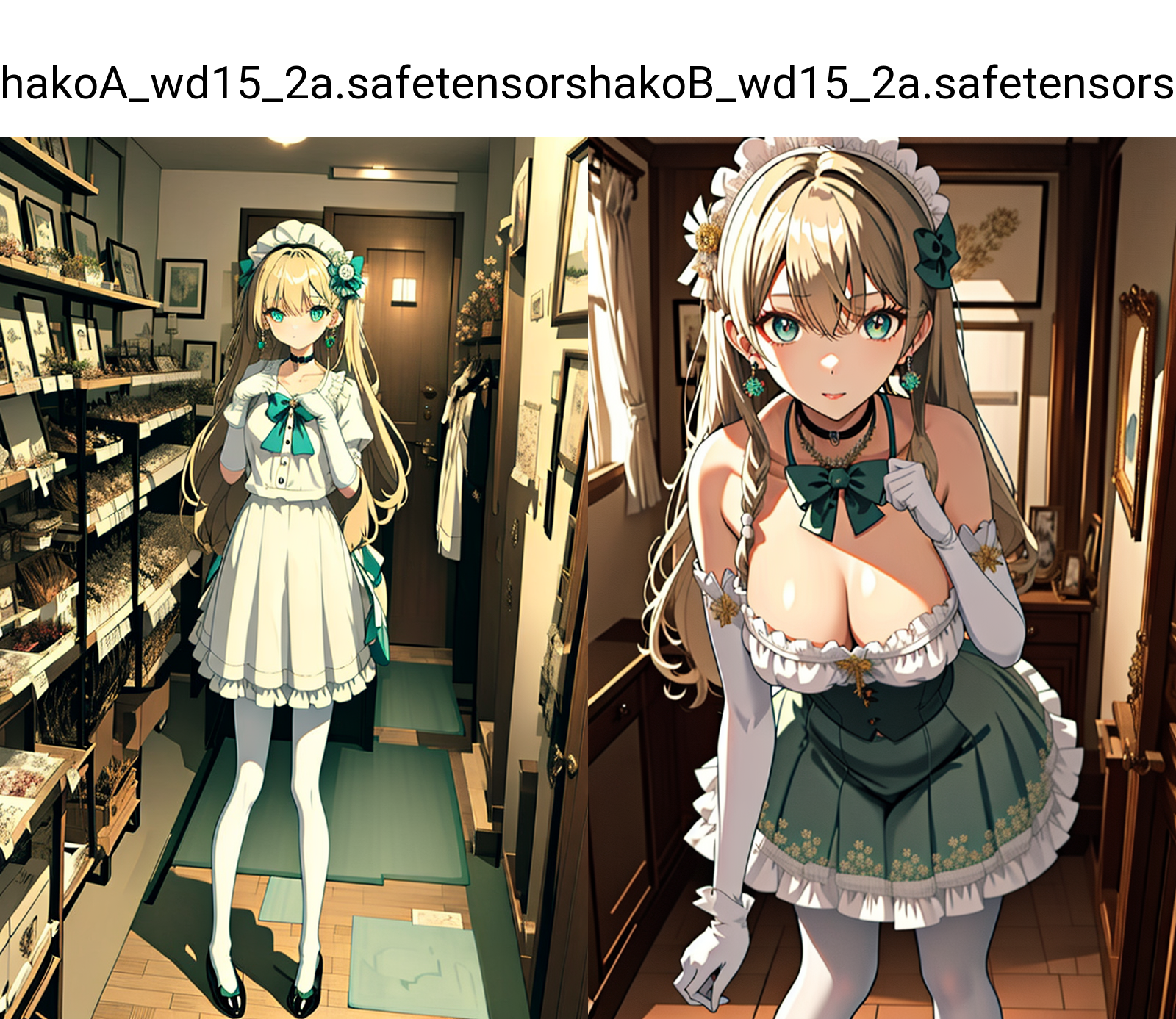

# 24 Cans of Monster: Meteion FFXIV Lycoris Model

Full previews are here at the moment: https://civitai.com/models/19689/24-cans-of-monster-meteion-ffxiv-endwalker-spoilers

I will be adding those in a folder in about 5 minutes though!

# WHAT GAME IS IT?

Final Fantasy XIV. Meteion is a character in the most recent expansion, 6.0 / Endwalker. This includes both her WORLD ENDING form and her birb form, as well as her existential crisis: she needs a Snickers and a can of technicolor goo.

# Wait THIS IS A LYCORIS UPDATE!

Yes you'll need this: https://github.com/KohakuBlueleaf/a1111-sd-webui-locon

# Support Us!

We stream a lot of our testing on twitch: https://www.twitch.tv/duskfallcrew

Any chance you can spare a coffee or three? https://ko-fi.com/DUSKFALLcrew

If you want a custom LoRA or model trained, an option will become available on the Patreon: https://www.patreon.com/earthndusk

# A Meme If you WILL:

This LoRA will end the world if you don't teach her the proper etiquette.

No, the meme is not in the dataset, this is just a meme we had laying around.

# Official Samples by Us using NyanMixAbsurdRes2:

|

Aliskin/xlm-roberta-base-finetuned-marc

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: unit4

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn to use this model and train your own, check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

Allybaby21/Allysai

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: mit

---

<h1 align="center">arabert-finetuned-caner</h1>

<p align="center">An ongoing project for the implementation of NLP methods in the field of Islamic studies.</p>

### Named Entity Recognition

Briefly:

* We had to prepare the CANERCorpus dataset, which is available at [huggingface](https://huggingface.co/datasets/caner/). The dataset was not in the BIO format, so the model couldn't learn anything from it. We used an HTML version of the dataset available on GitHub and extracted a Hugging Face format dataset with BIO tags from it.

* Fine-tuning started from a pre-trained model named "bert-base-arabertv02". After 3 epochs of training on the above-mentioned dataset (split 80% for training and 20% for validation), the model reached the following results: (evaluation was done using the compute metrics of the Python evaluate module. Note that precision is overall precision, recall is overall recall, and so on.)

* Trained model is available at [huggingface](https://huggingface.co/ArefSadeghian/arabert-finetuned-caner) and you can use it with the following code snippet:

```python

# In a notebook, install the dependency first with: !pip install transformers

from transformers import pipeline

model_checkpoint = "ArefSadeghian/arabert-finetuned-caner"

# Replace this with above latest checkpoint

token_classifier = pipeline(

"token-classification", model=model_checkpoint, aggregation_strategy="simple"

)

s = "حَدَّثَنَا عَبْد اللَّهِ، حَدَّثَنِي عُبَيْدُ اللَّهِ بْنُ عُمَرَ الْقَوَارِيرِيُّ، حَدَّثَنَا يُونُسُ بْنُ أَرْقَمَ، حَدَّثَنَا يَزِيدُ بْنُ أَبِي زِيَادٍ، عَنْ عَبْدِ الرَّحْمَنِ بْنِ أَبِي لَيْلَى، قَالَ شَهِدْتُ عَلِيًّا رَضِيَ اللَّهُ عَنْهُ فِي الرَّحَبَةِ يَنْشُدُ النَّاسَ أَنْشُدُ اللَّهَ مَنْ سَمِعَ رَسُولَ اللَّهِ صَلَّى اللَّهُ عَلَيْهِ وَسَلَّمَ يَقُولُ يَوْمَ غَدِيرِ خُمٍّ مَنْ كُنْتُ مَوْلَاهُ فَعَلِيٌّ مَوْلَاهُ لَمَّا قَامَ فَشَهِدَ قَالَ عَبْدُ الرَّحْمَنِ فَقَامَ اثْنَا عَشَرَ بَدْرِيًّا كَأَنِّي أَنْظُرُ إِلَى أَحَدِهِمْ فَقَالُوا نَشْهَدُ أَنَّا سَمِعْنَا رَسُولَ اللَّهِ صَلَّى اللَّهُ عَلَيْهِ وَسَلَّمَ يَقُولُ يَوْمَ غَدِيرِ خُمٍّ أَلَسْتُ أَوْلَى بِالْمُؤْمِنِينَ مِنْ أَنْفُسِهِمْ وَأَزْوَاجِي أُمَّهَاتُهُمْ فَقُلْنَا بَلَى يَا رَسُولَ اللَّهِ قَالَ فَمَنْ كُنْتُ مَوْلَاهُ فَعَلِيٌّ مَوْلَاهُ اللَّهُمَّ وَالِ مَنْ وَالَاهُ وَعَادِ مَنْ عَادَاهُ"

token_classifier(s)

```

* This model is deployed on a Huggingface space using Gradio. So you can use it online [here](https://huggingface.co/spaces/ArefSadeghian/ArefSadeghian-arabert-finetuned-caner)!
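To illustrate the BIO format mentioned in the first bullet: each token gets `B-<label>` if it begins an entity, `I-<label>` if it continues one, and `O` otherwise. The sketch below is an assumption-laden example. The helper `spans_to_bio`, the toy English sentence, and the `PER`/`LOC` labels are all hypothetical and are not the CANERCorpus label set or the conversion script used in this project.

```python
def spans_to_bio(tokens, spans):
    """Convert entity spans to BIO tags.

    spans is a list of (start, end_exclusive, label) over token indices.
    """
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"       # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"       # remaining tokens of the same entity
    return tags


# Hypothetical example sentence and labels.
tokens = ["Ali", "ibn", "Abi", "Talib", "visited", "Kufa"]
spans = [(0, 4, "PER"), (5, 6, "LOC")]

for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(f"{token}\t{tag}")
```

A token-classification model trained on such tags can then recover entity boundaries, which is what `aggregation_strategy="simple"` groups back together at inference time.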

|

Aloka/mbart50-ft-si-en

|

[

"pytorch",

"tensorboard",

"mbart",

"text2text-generation",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

] |

text2text-generation

|

{

"architectures": [

"MBartForConditionalGeneration"

],

"model_type": "mbart",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | null |

from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")
model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

# Let's chat for 5 lines
for step in range(5):
    # encode the new user input, add the eos_token and return a tensor in PyTorch
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response while limiting the total chat history to 1000 tokens
    chat_history_ids = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

    # pretty print the last output tokens from the bot
    print("DialoGPT: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

|

Alstractor/distilbert-base-uncased-finetuned-cola

|

[

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

|

{

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 40 | null |

# AMR prediction with LGBMClassifier models

This repository contains a Python script for predicting antimicrobial resistance (AMR) using the LGBMClassifier model. The script reads input datasets from a directory, applies feature extraction techniques to obtain k-mer features, trains and tests the models using cross-validation, and outputs the results in text files.

## Getting Started

These instructions will get you a copy of the project up and running on your local machine for development and testing purposes.

### Prerequisites

This script requires the following Python libraries:

- pandas
- scikit-learn
- numpy
- tqdm
- lightgbm
- hyperopt
- joblib
- bayesian-optimization
- skopt

### Installing

Clone the repository to your local machine and install the required libraries:

```bash

$ git clone https://github.com/username/repo.git

$ cd repo

$ pip install -r requirements.txt

```

### Usage

To use the script, execute the following command:


```bash

$ python main.py

```

## Code Structure

The main script consists of several sections:

1. Import necessary libraries
2. Set seed for reproducibility
3. Define function to get list of models to evaluate
4. Load list of selected samples
5. Call function to get list of models
6. Initialize KFold cross-validation
7. Iterate over values of k to read the corresponding k-mer feature dataset
8. Iterate over the models list
9. Write results to text file
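The seeding and KFold steps in the list above can be sketched without any dependencies. This is an illustration only: the script itself presumably relies on scikit-learn's `KFold`, and the function name `k_fold_indices`, the default of 5 splits, and the seed value are assumptions.

```python
import random


def k_fold_indices(n_samples: int, n_splits: int = 5, seed: int = 42):
    """Yield (train_indices, test_indices) pairs for shuffled k-fold CV."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    # Spread the remainder over the first folds so sizes differ by at most one.
    base, extra = divmod(n_samples, n_splits)
    start = 0
    for fold in range(n_splits):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


for fold, (train, test) in enumerate(k_fold_indices(10, n_splits=3)):
    print(f"fold {fold}: {len(train)} train, {len(test)} test")
```

Every sample lands in exactly one test fold, so each model is evaluated once on every sample.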

## Data Description

The input datasets are CSV files containing bacterial genomic sequences and their corresponding resistance profiles for selected antibiotics. The script reads these files from a directory and applies k-mer feature extraction techniques to obtain numerical feature vectors.
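As a rough illustration of k-mer feature extraction, the sketch below counts every overlapping substring of length k in a sequence and lays the counts out as a fixed-length vector. The function names, the `ACGT` alphabet, and the choice of raw counts (rather than, say, normalized frequencies) are assumptions; the repository's own extraction may differ.

```python
from collections import Counter
from itertools import product


def kmer_counts(sequence: str, k: int) -> Counter:
    """Count all overlapping k-mers in a sequence."""
    seq = sequence.upper()
    return Counter(seq[i:i + k] for i in range(len(seq) - k + 1))


def kmer_vector(sequence: str, k: int, alphabet: str = "ACGT") -> list[int]:
    """Counts for every possible k-mer over the alphabet, in a fixed order."""
    counts = kmer_counts(sequence, k)
    vocabulary = ("".join(p) for p in product(alphabet, repeat=k))
    return [counts[kmer] for kmer in vocabulary]


print(kmer_counts("ATGATG", 3))
print(kmer_vector("ATGATG", 2))
```

The vector length is `len(alphabet) ** k`, which is why the feature matrix grows quickly as k increases.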

## Models

The script uses two models for AMR prediction: Random Forest and LGBMClassifier.

## Output

The script outputs the results of each model to a text file in the specified output directory. The results include accuracy, precision, recall, F1 score, and area under the ROC curve.

## Authors

Gabriel Sousa - gabrieltxs

## License

This project is licensed under the MIT License - see the LICENSE.md file for details.


|

Amalq/distilroberta-base-finetuned-anxiety-depression

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 260.31 +/- 21.73

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

AmanPriyanshu/DistilBert-Sentiment-Analysis

|

[

"tf",

"distilbert",

"fill-mask",

"transformers",

"autotrain_compatible"

] |

fill-mask

|

{

"architectures": [

"DistilBertForMaskedLM"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 7 | null |

---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 1848.82 +/- 105.51

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

AmazonScience/qanlu

|

[

"pytorch",

"roberta",

"question-answering",

"en",

"dataset:atis",

"transformers",

"license:cc-by-4.0",

"autotrain_compatible",

"has_space"

] |

question-answering

|

{

"architectures": [

"RobertaForQuestionAnswering"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 494 | null |

---

language:

- en

- cy

pipeline_tag: translation

tags:

- translation

- marian

metrics:

- bleu

- cer

- wer

- wil

- wip

- chrf

widget:

- text: "The doctor will be late to attend to patients this morning."

example_title: "Example 1"

license: apache-2.0

model-index:

- name: "mt-dspec-health-en-cy"

results:

- task:

name: Translation

type: translation

metrics:

- name: SacreBLEU

type: bleu

value: 54.16

- name: CER

type: cer

value: 0.31

- name: WER

type: wer

value: 0.47

- name: WIL

type: wil

value: 0.67

- name: WIP

type: wip

value: 0.33

- name: SacreBLEU CHRF

type: chrf

value: 69.03

---

# mt-dspec-health-en-cy

A language translation model for translating between English and Welsh, specialised to the specific domain of Health and care.

This model was trained using a custom DVC pipeline employing [Marian NMT](https://marian-nmt.github.io/). The datasets were prepared from the following sources:

- [UK Government Legislation data](https://www.legislation.gov.uk)

- [OPUS-cy-en](https://opus.nlpl.eu/)

- [Cofnod Y Cynulliad](https://record.assembly.wales/)

- [Cofion Techiaith Cymru](https://cofion.techiaith.cymru)

The data was split into train, validation and test sets. The test set contains health-specific segments from TMX files selected at random from the [Cofion Techiaith Cymru](https://cofion.techiaith.cymru) website, which have been pre-classified as pertaining to the specific domain.

Having extracted the test set, the aggregation of remaining data was then split into 10 training and validation sets, and fed into 10 Marian training sessions.

A website demonstrating use of this model is available at http://cyfieithu.techiaith.cymru.

## Evaluation

Evaluation was done using the python libraries [SacreBLEU](https://github.com/mjpost/sacrebleu) and [torchmetrics](https://torchmetrics.readthedocs.io/en/stable/).

## Usage

Ensure you have the prerequisite python libraries installed:

```bash

pip install transformers sentencepiece

```

```python

import transformers

model_id = "techiaith/mt-dspec-health-en-cy"

tokenizer = transformers.AutoTokenizer.from_pretrained(model_id)

model = transformers.AutoModelForSeq2SeqLM.from_pretrained(model_id)

translate = transformers.pipeline("translation", model=model, tokenizer=tokenizer)

translated = translate("The doctor will be late to attend to patients this morning.")

# The pipeline returns a list with one dict per input text.
print(translated[0]["translation_text"])

```

|

Amba/wav2vec2-large-xls-r-300m-tr-colab

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

language:

- en

- cy

license: apache-2.0

pipeline_tag: translation

tags:

- translation

- marian

metrics:

- bleu

- cer

- chrf


- wer

- wil

- wip

widget:

- text: "The Curriculum and Assessment (Wales) Act 2021 (the Act) established the Curriculum for Wales and replaced the general curriculum used up until that point."

example_title: "Example 1"

model-index:

- name: mt-dspec-legislation-en-cy

results:

- task:

name: Translation

type: translation

metrics:

- type: bleu

value: 65.51

- type: cer

value: 0.28

- type: chrf

value: 74.69

- type: wer

value: 0.39

- type: wil

value: 0.54

- type: wip

value: 0.46

---

# mt-dspec-legislation-en-cy

A language translation model for translating between English and Welsh, specialised to the specific domain of Legislation.

This model was trained using a custom DVC pipeline employing [Marian NMT](https://marian-nmt.github.io/). The datasets were prepared from the following sources:

- [UK Government Legislation data](https://www.legislation.gov.uk)

- [OPUS-cy-en](https://opus.nlpl.eu/)

- [Cofnod Y Cynulliad](https://record.assembly.wales/)

- [Cofion Techiaith Cymru](https://cofion.techiaith.cymru)

The data was split into train, validation and test sets. The test set consists of legislation-specific segments selected at random from TMX files originating from the [Cofion Techiaith Cymru](https://cofion.techiaith.cymru) website, which have been pre-classified as pertaining to the specific domain, and from data files scraped from the UK Government Legislation website.

Having extracted the test set, the remaining data was aggregated and split into 10 training and validation sets, which were fed into 10 Marian training sessions.
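The card does not say how the 10 training and validation sets were formed. As a minimal sketch, assuming each set is an independent reproducible shuffle of the remaining data with a hypothetical 10% validation share, the split could look like this (`make_splits`, `val_fraction` and the sample segments are illustrative names, not part of the original pipeline):

```python
import random


def make_splits(segments, n_splits=10, val_fraction=0.1, seed=0):
    """Return n_splits (train, validation) pairs drawn from the same pool.

    Assumption: every split is an independent shuffle of the remaining data;
    the actual DVC pipeline may have split the data differently.
    """
    splits = []
    for i in range(n_splits):
        rng = random.Random(seed + i)  # a different, reproducible shuffle per split
        shuffled = segments[:]
        rng.shuffle(shuffled)
        n_val = max(1, int(len(shuffled) * val_fraction))
        # First n_val items form the validation set, the rest the training set.
        splits.append((shuffled[n_val:], shuffled[:n_val]))
    return splits


# Hypothetical (English, Welsh) segment pairs left after removing the test set.
remaining = [(f"en segment {i}", f"cy segment {i}") for i in range(100)]
splits = make_splits(remaining)
print(len(splits), len(splits[0][0]), len(splits[0][1]))
```

Each of the 10 pairs could then be passed to its own training session; the train and validation parts of a pair never overlap.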

## Evaluation

Evaluation scores were produced using the Python libraries [SacreBLEU](https://github.com/mjpost/sacrebleu) and [torchmetrics](https://torchmetrics.readthedocs.io/en/stable/).
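To make the error-rate metrics concrete, here is a plain-Python sketch of how word error rate (WER) and character error rate (CER) are commonly defined: the edit distance between hypothesis and reference, divided by the reference length. It illustrates the definitions only and is not the torchmetrics implementation; the two sentences are hypothetical.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edit distance over reference word count."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edit distance over reference length."""
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical reference and system output.
reference = "the act established the curriculum for wales"
hypothesis = "the act establishes the curriculum of wales"
print(wer(reference, hypothesis), cer(reference, hypothesis))
```

Lower is better for both metrics. CER is usually smaller than WER because a single wrong word often differs from the reference by only a few characters.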

## Usage

Ensure you have the prerequisite Python libraries installed:

```bash

pip install transformers sentencepiece

```

```python

import transformers

model_id = "techiaith/mt-dspec-legislation-en-cy"

tokenizer = transformers.AutoTokenizer.from_pretrained(model_id)

model = transformers.AutoModelForSeq2SeqLM.from_pretrained(model_id)

translate = transformers.pipeline("translation", model=model, tokenizer=tokenizer)

translated = translate(

"The Curriculum and Assessment (Wales) Act 2021 (the Act) "

"established the Curriculum for Wales and replaced the general "

"curriculum used up until that point."

)

print(translated[0]["translation_text"])

```

|

Andranik/TestQaV1

|

[

"pytorch",

"rust",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

] |

question-answering

|

{

"architectures": [

"RobertaForQuestionAnswering"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | null |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.67 +/- 18.31

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

AndrewNLP/redditDepressionPropensityClassifiers

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:

- name: DQPN_decay

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# (CleanRL) **DQPN_decay** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_decay agent playing CartPole-v1.

The model was trained by using [CleanRL](https://github.com/vwxyzjn/cleanrl) and the most up-to-date training code can be

found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_decay.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_decay]"

python -m cleanrl_utils.enjoy --exp-name DQPN_decay --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed1/raw/main/dqpn_decay.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed1/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed1/raw/main/poetry.lock

poetry install --all-extras

python dqpn_decay.py --exp-name DQPN_decay --seed 1 --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk

```

## Hyperparameters

```python

{'alg_type': 'dqpn_decay.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'end_policy_network_frequency': 200,

'env_id': 'CartPole-v1',

'evaluation_fraction': 0.7,

'exp_name': 'DQPN_decay',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_tau': 1.0,

'save_model': True,

'seed': 1,

'start_e': 1.0,

'start_policy_network_frequency': 10000,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```
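The exploration settings above (`start_e`, `end_e`, `exploration_fraction`, `total_timesteps`) are typically combined into a linear epsilon schedule. The sketch below follows the schedule commonly found in CleanRL's DQN-style scripts; whether `dqpn_decay.py` uses exactly this function is an assumption.

```python
def linear_schedule(start_e, end_e, duration, t):
    """Linearly anneal epsilon from start_e to end_e over `duration` steps."""
    slope = (end_e - start_e) / duration
    return max(slope * t + start_e, end_e)


total_timesteps = 500_000
exploration_fraction = 0.2
# Epsilon decays over the first 20% of training and then stays at end_e.
duration = int(exploration_fraction * total_timesteps)

for step in (0, 50_000, 100_000, 400_000):
    print(step, linear_schedule(1.0, 0.1, duration, step))
```

With these values the agent acts fully at random at step 0 and explores with probability 0.1 for the last 80% of training.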

|

Andrey1989/mbart-finetuned-en-to-kk

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:

- name: DQPN_decay

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# (CleanRL) **DQPN_decay** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_decay agent playing CartPole-v1.

The model was trained by using [CleanRL](https://github.com/vwxyzjn/cleanrl) and the most up-to-date training code can be

found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_decay.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_decay]"

python -m cleanrl_utils.enjoy --exp-name DQPN_decay --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed3/raw/main/dqpn_decay.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed3/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_decay-seed3/raw/main/poetry.lock

poetry install --all-extras

python dqpn_decay.py --exp-name DQPN_decay --seed 3 --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk

```

## Hyperparameters

```python

{'alg_type': 'dqpn_decay.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'end_policy_network_frequency': 200,

'env_id': 'CartPole-v1',

'evaluation_fraction': 0.7,

'exp_name': 'DQPN_decay',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_tau': 1.0,

'save_model': True,

'seed': 3,

'start_e': 1.0,

'start_policy_network_frequency': 10000,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

Andrey78/my_nlp_test_model

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

language: en

thumbnail: http://www.huggingtweets.com/barackobama-joebiden-realdonaldtrump/1678850778048/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/874276197357596672/kUuht00m_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1308769664240160770/AfgzWVE7_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1329647526807543809/2SGvnHYV_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Donald J. Trump & Joe Biden & Barack Obama</div>

<div style="text-align: center; font-size: 14px;">@barackobama-joebiden-realdonaldtrump</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Donald J. Trump & Joe Biden & Barack Obama.

| Data | Donald J. Trump | Joe Biden | Barack Obama |

| --- | --- | --- | --- |

| Tweets downloaded | 3173 | 3250 | 3250 |

| Retweets | 1077 | 661 | 321 |

| Short tweets | 519 | 26 | 19 |

| Tweets kept | 1577 | 2563 | 2910 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/br58nwn1/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
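The "Tweets kept" row appears to be the downloaded count minus retweets and short tweets. That reading is an assumption about the huggingtweets filtering step; the short sketch below simply checks it against the numbers in the table.

```python
# Counts copied from the training data table.
stats = {
    "Donald J. Trump": {"downloaded": 3173, "retweets": 1077, "short": 519},
    "Joe Biden": {"downloaded": 3250, "retweets": 661, "short": 26},
    "Barack Obama": {"downloaded": 3250, "retweets": 321, "short": 19},
}


def tweets_kept(row):
    """Tweets remaining once retweets and short tweets are removed."""
    return row["downloaded"] - row["retweets"] - row["short"]


kept = {user: tweets_kept(row) for user, row in stats.items()}
print(kept)
```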

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @barackobama-joebiden-realdonaldtrump's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/13j83o80) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/13j83o80/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/barackobama-joebiden-realdonaldtrump')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

Andrianos/bert-base-greek-punctuation-prediction-finetuned

|

[

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: mit

datasets:

- koliskos/fake_news

language:

- en

---

# Model Card for Model ID

This model detects whether a news story is fake or legitimate.

- **Developed by:** koliskos

- **Model type:** Text Classification

- **Language(s) (NLP):** English

- **License:** mit

- **Finetuned from model:** DistilBERT

- **Repository:** koliskos/fine_tuned_fake_news_classifier

## Uses

This model is meant to classify news articles as real or fake.

## Bias, Risks, and Limitations

This model may default to predicting "fake" for news stories that contain names appearing frequently in fake news articles. For example, a real news story about someone named Hillary may be labeled fake because, in the training data, the name Hillary is strongly associated with Hillary Clinton.

## Model Card Contact

spkolisko "at" wellesley.edu

|

AnonymousSub/AR_consert

|

[

"pytorch",

"bert",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 2 | null |

---

license: cc-by-nc-4.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: videomae-base-finetuned-RealLifeViolenceSituations-subset

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# videomae-base-finetuned-RealLifeViolenceSituations-subset

This model is a fine-tuned version of [MCG-NJU/videomae-base](https://huggingface.co/MCG-NJU/videomae-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1618

- Accuracy: 0.9533

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 800
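To show what these scheduler settings imply, the following sketch computes the learning rate for a linear schedule with warmup: a linear ramp to the peak rate over the first 10% of steps, then a linear decay to zero. It is an illustration of the schedule's shape under that assumption, not the Trainer's own code, and `linear_lr` is a hypothetical helper.

```python
def linear_lr(step, base_lr=5e-5, total_steps=800, warmup_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    warmup_steps = int(total_steps * warmup_ratio)  # 80 steps with the values above
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Decay: fall linearly from base_lr to 0 at the final step.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 40, 80, 440, 800):
    print(step, linear_lr(step))
```

The peak rate of 5e-05 is reached at step 80, halfway through the decay (step 440) the rate is back to 2.5e-05, and it reaches zero at step 800.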

### Training results

| Training Loss | Epoch | Step | Accuracy | Validation Loss |

|:-------------:|:-----:|:----:|:--------:|:---------------:|

| 0.1065 | 0.25 | 200 | 0.9598 | 0.1470 |

| 0.067 | 1.25 | 400 | 0.9625 | 0.1415 |

| 0.0058 | 2.25 | 600 | 0.9625 | 0.1415 |

| 0.0274 | 3.25 | 800 | 0.9625 | 0.1415 |

| 0.0274 | 1.0 | 801 | 0.9626 | 0.1411 |

### Framework versions

- Transformers 4.27.2

- Pytorch 1.13.1

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AnonymousSub/AR_rule_based_roberta_twostagetriplet_epochs_1_shard_1

|

[

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 6 | 2023-03-15T05:40:28Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-confluence

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-confluence

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AnonymousSub/AR_rule_based_roberta_twostagetriplet_epochs_1_shard_10

|

[

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 2 | null |

---

license: cc-by-4.0

tags:

- generated_from_trainer

model-index:

- name: xlm-roberta-clickbait-spoiling-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-clickbait-spoiling-2

This model is a fine-tuned version of [deepset/xlm-roberta-base-squad2](https://huggingface.co/deepset/xlm-roberta-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7918

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 400 | 2.7691 |

| 3.0496 | 2.0 | 800 | 2.7095 |

| 2.4457 | 3.0 | 1200 | 2.7918 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AnonymousSub/SR_declutr

|

[

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 6 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

model-index:

- name: my_awesome_model

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: test

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.93052

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2372

- Accuracy: 0.9305

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.2346 | 1.0 | 1563 | 0.1895 | 0.9280 |

| 0.1531 | 2.0 | 3126 | 0.2372 | 0.9305 |
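The step counts in the table follow from the dataset size and batch size. As a quick check, assuming the full 25,000-example IMDB training split, a single device and no gradient accumulation (none of which the card states explicitly):

```python
import math

train_examples = 25_000  # size of the IMDB training split
train_batch_size = 16
num_epochs = 2

# The last batch of an epoch is partial, so round up.
steps_per_epoch = math.ceil(train_examples / train_batch_size)
total_steps = steps_per_epoch * num_epochs
print(steps_per_epoch, total_steps)
```

This gives 1563 steps per epoch and 3126 steps in total, matching the Step column.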

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

AnonymousSub/SR_rule_based_bert_quadruplet_epochs_1_shard_1

|

[

"pytorch",

"bert",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | null |

---

license: mit

---

Pretrained models of our method **DirectMHP**

Title: *DirectMHP: Direct 2D Multi-Person Head Pose Estimation with Full-range Angles*

Paper link: https://arxiv.org/abs/2302.01110

Code link: https://github.com/hnuzhy/DirectMHP

# Multi-Person Head Pose Estimation Task (trained on CMU-HPE)

* DirectMHP-S --> [cmu_s_1280_e200_t40_lw010_best.pt](./cmu_s_1280_e200_t40_lw010_best.pt)

* DirectMHP-M --> [cmu_m_1280_e200_t40_lw010_best.pt](./cmu_m_1280_e200_t40_lw010_best.pt)

# Multi-Person Head Pose Estimation Task (trained on AGORA-HPE)

* DirectMHP-S --> [agora_s_1280_e300_t40_lw010_best.pt](./agora_s_1280_e300_t40_lw010_best.pt)

* DirectMHP-M --> [agora_m_1280_e300_t40_lw010_best.pt](./agora_m_1280_e300_t40_lw010_best.pt)

# Single HPE datasets with YOLOv5+COCO format

* Resorted images used in our DirectMHP: [300W_LP.zip](./300W_LP.zip), [AFLW2000.zip](./AFLW2000.zip) and [BIWI_test.zip](./BIWI_test.zip).

* Resorted corresponding json files: [train_300W_LP.json](./train_300W_LP.json), [val_AFLW2000.json](./val_AFLW2000.json) and [BIWI_test.json](./BIWI_test.json).

# Single HPE Task Pretrained on WiderFace and Finetuned on 300W-LP

* DirectMHP-S --> [300wlp_s_512_e50_finetune_best.pt](./300wlp_s_512_e50_finetune_best.pt)

* DirectMHP-M --> [300wlp_m_512_e50_finetune_best.pt](./300wlp_m_512_e50_finetune_best.pt)

# Single HPE SixDRepNet Re-trained on AGORA-HPE and CMU-HPE

* AGORA-HPE --> [SixDRepNet_AGORA_bs256_e100_epoch_last.pth](./SixDRepNet_AGORA_bs256_e100_epoch_last.pth)

* CMU-HPE --> [SixDRepNet_CMU_bs256_e100_epoch_last.pth](./SixDRepNet_CMU_bs256_e100_epoch_last.pth)

|

AnonymousSub/SR_rule_based_hier_quadruplet_epochs_1_shard_1

|

[

"pytorch",

"bert",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | 2023-03-15T06:30:59Z |

---

license: mit

language:

- en

---

# BERT-Tiny (uncased)

This is the smallest of the 24 smaller BERT models (English only, uncased, trained with WordPiece masking) released by [google-research/bert](https://github.com/google-research/bert).

These BERT models were released as TensorFlow checkpoints; this is the version converted to PyTorch.

More information can be found in [google-research/bert](https://github.com/google-research/bert) or [lyeoni/convert-tf-to-pytorch](https://github.com/lyeoni/convert-tf-to-pytorch).

## Evaluation

Here are the evaluation scores (F1/Accuracy) for the MRPC task.

|Model|MRPC|

|-|:-:|

|BERT-Tiny|81.22/68.38|

|BERT-Mini|81.43/69.36|

|BERT-Small|81.41/70.34|

|BERT-Medium|83.33/73.53|

|BERT-Base|85.62/78.19|
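For readers unfamiliar with the two numbers in each cell, the sketch below shows how F1 and accuracy are usually computed for a binary task such as MRPC. The labels and predictions are hypothetical toy values, not MRPC data, and `accuracy_and_f1` is an illustrative helper rather than the evaluation script used for the table.

```python
def accuracy_and_f1(labels, predictions, positive=1):
    """Accuracy and F1 (for the positive class) of binary predictions."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == positive and p == positive)
    fp = sum(1 for y, p in pairs if y != positive and p == positive)
    fn = sum(1 for y, p in pairs if y == positive and p != positive)
    accuracy = sum(1 for y, p in pairs if y == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# Toy example: 1 = paraphrase, 0 = not a paraphrase.
labels = [1, 1, 1, 0, 0, 1, 0, 1]
predictions = [1, 1, 0, 0, 1, 1, 1, 1]
accuracy, f1 = accuracy_and_f1(labels, predictions)
print(f"F1/Accuracy: {100 * f1:.2f}/{100 * accuracy:.2f}")
```

As in the table, F1 can sit well above accuracy when the positive class is frequent and the model favours it.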

### References

```

@article{turc2019,

title={Well-Read Students Learn Better: On the Importance of Pre-training Compact Models},

author={Turc, Iulia and Chang, Ming-Wei and Lee, Kenton and Toutanova, Kristina},

journal={arXiv preprint arXiv:1908.08962v2},

year={2019}

}

```

|

AnonymousSub/SR_rule_based_hier_triplet_epochs_1_shard_1

|

[

"pytorch",

"bert",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | 2023-03-15T06:33:28Z |

---

language:

- en

datasets:

- en_core_web_sm

thumbnail: >-

https://huggingface.co/giovannefeitosa/chatbot-about-pele/raw/main/images/pele.jpeg

tags:

- question-answering

- chatbot

- brazil

license: cc-by-nc-4.0

pipeline_tag: text2text-generation

library_name: sklearn

---

# Chatbot about Pele

This is a demo project.

> library_name: sklearn

|

AnonymousSub/SR_rule_based_roberta_hier_triplet_epochs_1_shard_10

|

[

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 6 | null |

---

license: mit

language:

- ko

---

# Kconvo-roberta: Korean conversation RoBERTa ([github](https://github.com/HeoTaksung/Domain-Robust-Retraining-of-Pretrained-Language-Model))

- There are many PLMs (Pretrained Language Models) for Korean, but most of them are trained on written language.

- Here, we introduce a PLM retrained on spoken (conversational) data for prediction tasks on Korean conversation data.

## Usage

```python

# Kconvo-roberta

from transformers import RobertaTokenizerFast, RobertaModel

tokenizer_roberta = RobertaTokenizerFast.from_pretrained("yeongjoon/Kconvo-roberta")

model_roberta = RobertaModel.from_pretrained("yeongjoon/Kconvo-roberta")

```

-----------------

## Domain Robust Retraining of Pretrained Language Model

- Kconvo-roberta uses [klue/roberta-base](https://huggingface.co/klue/roberta-base) as the base model and is additionally retrained on the conversation dataset.

- The retraining dataset was collected from the [National Institute of the Korean Language](https://corpus.korean.go.kr/request/corpusRegist.do) and [AI-Hub](https://www.aihub.or.kr/aihubdata/data/list.do?pageIndex=1&currMenu=115&topMenu=100&dataSetSn=&srchdataClCode=DATACL001&srchOrder=&SrchdataClCode=DATACL002&searchKeyword=&srchDataRealmCode=REALM002&srchDataTy=DATA003); the collected corpora are listed below.

```

- National Institute of the Korean Language

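* 온라인 대화 말뭉치 2021 (Online Conversation Corpus 2021)

* 일상 대화 말뭉치 2020 (Daily Conversation Corpus 2020)

* 구어 말뭉치 (Spoken Language Corpus)

* 메신저 말뭉치 (Messenger Corpus)

- AI-Hub

* 온라인 구어체 말뭉치 데이터 (Online Colloquial Corpus Data)

* 상담 음성 (Counseling Speech)

* 한국어 음성 (Korean Speech)

* 자유대화 음성(일반남여) (Free Conversation Speech, General Male/Female)

* 일상생활 및 구어체 한-영 번역 병렬 말뭉치 데이터 (Daily Life and Colloquial Korean-English Translation Parallel Corpus Data)

* 한국인 대화음성 (Korean Conversational Speech)

* 감성 대화 말뭉치 (Emotional Conversation Corpus)

* 주제별 텍스트 일상 대화 데이터 (Topic-based Text Daily Conversation Data)

* 용도별 목적대화 데이터 (Purpose-oriented Dialogue Data by Use)

* 한국어 SNS (Korean SNS)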

```

|

AnonymousSub/SR_rule_based_roberta_hier_triplet_epochs_1_shard_1_wikiqa_copy

|

[

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,