| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

CCMat/fgreeneruins-ruins

|

CCMat

| 2023-01-27T12:46:53Z | 6 | 1 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"landscape",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-20T16:37:03Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- landscape

widget:

- text: high quality photo of Venice in fgreeneruins ruins

---

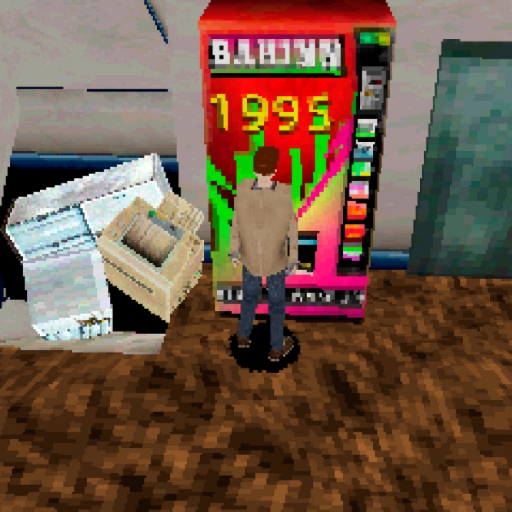

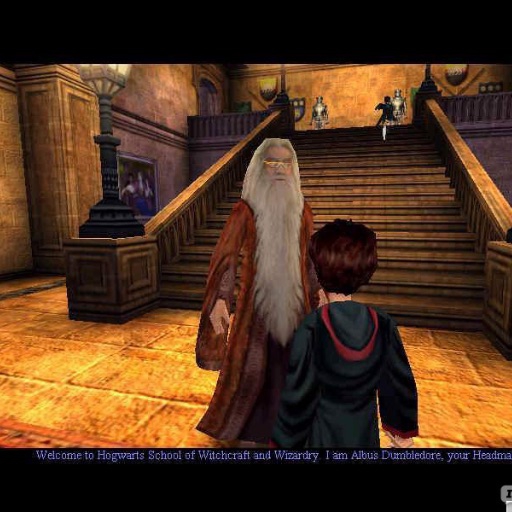

# DreamBooth model for the fgreeneruins concept trained on the CCMat/db-forest-ruins dataset.

This is a Stable Diffusion model fine-tuned on the fgreeneruins concept with DreamBooth. To use it, include the concept from its `instance_prompt` in your prompt: **a photo of fgreeneruins ruins**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `ruins` images for the landscape theme.<br>

Concept: **fgreeneruins**: forest ruins, greenery ruins<br>

Pretrained Model: [nitrosocke/elden-ring-diffusion](https://huggingface.co/nitrosocke/elden-ring-diffusion)<br>

Learning rate: 2e-6<br>

## Usage

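```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('CCMat/fgreeneruins-ruins')
# Stable Diffusion needs a text prompt; include the "fgreeneruins ruins" concept in it
image = pipeline("high quality photo of Venice in fgreeneruins ruins").images[0]
image
```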

## Samples

Prompt: "high quality photo of Venice in fgreeneruins ruins"

<br>

Prompt: "high quality photo of Rome in fgreeneruins ruins with the Colosseum in the background"

<br>

Prompt: "fgreeneruins ruins in London near the Tower Bridge, professional photograph"

<br>

Prompt: "photo of Paris in fgreeneruins ruins, elden ring style"

Prompt: "fgreeneruins ruins in Saint Petersburg, Sovietwave"

|

CCMat/fforiver-river-mdj

|

CCMat

| 2023-01-27T12:46:36Z | 6 | 1 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"landscape",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-17T18:12:52Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- landscape

widget:

- text: Fallout concept of fforiver river in front of the Great Pyramid of Giza

---

# DreamBooth model for the fforiver concept trained on the CCMat/forest-river dataset.

This is a Stable Diffusion model fine-tuned on the fforiver concept with DreamBooth. To use it, include the concept from its `instance_prompt` in your prompt: **a photo of fforiver river**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `river` images for the landscape theme.

Pretrained Model: [prompthero/openjourney](https://huggingface.co/prompthero/openjourney)

## Usage

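```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('CCMat/fforiver-river-mdj')
# Stable Diffusion needs a text prompt; include the "fforiver river" concept in it
image = pipeline("high quality photo of fforiver river along the Colosseum in Rome").images[0]
image
```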

## Samples

Prompt: "high quality photo of fforiver river along the Colosseum in Rome"

<br>

Prompt: "Fallout concept of fforiver river in front of Chichén Itzá in Mexico, sun rays, unreal engine 5"

<br>

|

Rajan/donut-base-sroie_300

|

Rajan

| 2023-01-27T12:37:52Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vision-encoder-decoder",

"image-text-to-text",

"generated_from_trainer",

"dataset:imagefolder",

"license:mit",

"endpoints_compatible",

"region:us"

] |

image-text-to-text

| 2023-01-27T12:20:38Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:
- name: donut-base-sroie_300
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# donut-base-sroie_300

This model is a fine-tuned version of [naver-clova-ix/donut-base](https://huggingface.co/naver-clova-ix/donut-base) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP
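The `linear` scheduler listed above decays the learning rate from 2e-05 to 0 over the run. The card lists no warmup, so the sketch below assumes zero warmup steps, which is the default in Hugging Face Transformers' `TrainingArguments`. The dataset size is not stated either, so `num_examples` is a hypothetical input, and the sketch assumes no gradient accumulation. It illustrates the schedule's shape and is not the training code used for this model.

```python
import math


def total_optimizer_steps(num_examples: int, batch_size: int = 4,
                          num_epochs: int = 3) -> int:
    """Optimizer steps for the whole run, assuming no gradient accumulation."""
    return math.ceil(num_examples / batch_size) * num_epochs


def linear_lr(step: int, total_steps: int, base_lr: float = 2e-05,
              warmup_steps: int = 0) -> float:
    """Learning rate at `step`: optional linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Ramp from 0 up to base_lr during warmup (no warmup is listed on the card).
        return base_lr * step / max(1, warmup_steps)
    # Decay from base_lr at the end of warmup down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    # Hypothetical dataset size: the card does not say how many images were used.
    steps = total_optimizer_steps(num_examples=100)
    for s in (0, steps // 2, steps):
        print(f"step {s:>3}: lr = {linear_lr(s, steps):.2e}")
```

With the batch size of 4 and 3 epochs above, the learning rate reaches half its initial value halfway through training and 0 at the last step.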

### Training results

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1

- Datasets 2.8.0

- Tokenizers 0.13.2

|

jirkoru/TemporalRegressionV2

|

jirkoru

| 2023-01-27T12:37:46Z | 0 | 0 |

sklearn

|

[

"sklearn",

"skops",

"tabular-classification",

"region:us"

] |

tabular-classification

| 2023-01-27T12:37:05Z |

---

library_name: sklearn

tags:

- sklearn

- skops

- tabular-classification

model_file: model.pkl

widget:
  structuredData:
    angel_n_rounds:
    - 0.0
    - 0.0
    - 0.0
    pre_seed_n_rounds:
    - 0.0
    - 0.0
    - 0.0
    seed_funding:
    - 1250000.0
    - 800000.0
    - 8000000.0
    seed_n_rounds:
    - 1.0
    - 3.0
    - 1.0
    time_first_funding:
    - 1270.0
    - 1856.0
    - 689.0
    time_till_series_a:
    - 1455.0
    - 1667.0
    - 1559.0

---

# Model description

[More Information Needed]

## Intended uses & limitations

[More Information Needed]

## Training Procedure

### Hyperparameters

The model was trained with the hyperparameters below.

<details>

<summary> Click to expand </summary>

| Hyperparameter | Value |
|---|---|
| memory | |
| steps | [('transformation', ColumnTransformer(transformers=[('min_max_scaler', MinMaxScaler(),<br /> ['time_first_funding', 'seed_funding',<br /> 'time_till_series_a'])])), ('model', LogisticRegression(penalty='none', random_state=0))] |
| verbose | False |
| transformation | ColumnTransformer(transformers=[('min_max_scaler', MinMaxScaler(),<br /> ['time_first_funding', 'seed_funding',<br /> 'time_till_series_a'])]) |
| model | LogisticRegression(penalty='none', random_state=0) |
| transformation__n_jobs | |
| transformation__remainder | drop |
| transformation__sparse_threshold | 0.3 |
| transformation__transformer_weights | |
| transformation__transformers | [('min_max_scaler', MinMaxScaler(), ['time_first_funding', 'seed_funding', 'time_till_series_a'])] |
| transformation__verbose | False |
| transformation__verbose_feature_names_out | True |
| transformation__min_max_scaler | MinMaxScaler() |
| transformation__min_max_scaler__clip | False |
| transformation__min_max_scaler__copy | True |
| transformation__min_max_scaler__feature_range | (0, 1) |
| model__C | 1.0 |
| model__class_weight | |
| model__dual | False |
| model__fit_intercept | True |
| model__intercept_scaling | 1 |
| model__l1_ratio | |
| model__max_iter | 100 |
| model__multi_class | auto |
| model__n_jobs | |
| model__penalty | none |
| model__random_state | 0 |
| model__solver | lbfgs |
| model__tol | 0.0001 |
| model__verbose | 0 |
| model__warm_start | False |

</details>
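The hyperparameter table above describes a two-step scikit-learn `Pipeline`. Below is a minimal sketch that rebuilds it, assuming scikit-learn 1.2 or later and pandas. It passes `penalty=None` because newer scikit-learn releases replaced the string `'none'` shown in the table with `None`. The `ColumnTransformer` uses `remainder='drop'`, so only the three listed columns reach the classifier. The widget's `angel_n_rounds`, `pre_seed_n_rounds` and `seed_n_rounds` columns are discarded. The sketch builds an untrained pipeline; it does not load the published `model.pkl`.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

# Columns scaled by the ColumnTransformer, in the order listed in the card.
SCALED_COLUMNS = ["time_first_funding", "seed_funding", "time_till_series_a"]


def build_pipeline() -> Pipeline:
    """Rebuild the (untrained) estimator described by the hyperparameter table."""
    transformation = ColumnTransformer(
        transformers=[("min_max_scaler", MinMaxScaler(), SCALED_COLUMNS)],
        remainder="drop",  # every other input column is discarded
    )
    # penalty=None is the scikit-learn >= 1.2 spelling of penalty='none'.
    model = LogisticRegression(penalty=None, random_state=0)
    return Pipeline(steps=[("transformation", transformation), ("model", model)])


# The three example rows from the card's widget metadata.
widget_rows = pd.DataFrame({
    "angel_n_rounds": [0.0, 0.0, 0.0],
    "pre_seed_n_rounds": [0.0, 0.0, 0.0],
    "seed_funding": [1250000.0, 800000.0, 8000000.0],
    "seed_n_rounds": [1.0, 3.0, 1.0],
    "time_first_funding": [1270.0, 1856.0, 689.0],
    "time_till_series_a": [1455.0, 1667.0, 1559.0],
})

if __name__ == "__main__":
    pipe = build_pipeline()
    # Fit only the scaling step: each kept column is mapped to (x - min) / (max - min).
    scaled = pipe.named_steps["transformation"].fit_transform(widget_rows)
    print(scaled.shape)  # only the three SCALED_COLUMNS survive
    print(scaled[:, 1])  # seed_funding after min-max scaling
```

For example, `seed_funding` spans 800000 to 8000000. The first row's 1250000 therefore scales to (1250000 - 800000) / 7200000 = 0.0625.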

### Model Plot

The model plot is below.

<pre>Pipeline(steps=[('transformation', ColumnTransformer(transformers=[('min_max_scaler', MinMaxScaler(), ['time_first_funding', 'seed_funding', 'time_till_series_a'])])), ('model', LogisticRegression(penalty='none', random_state=0))])</pre>

## Evaluation Results

[More Information Needed]

# How to Get Started with the Model

[More Information Needed]

# Model Card Authors

This model card was written by the following authors:

[More Information Needed]

# Model Card Contact

You can contact the model card authors through the following channels:

[More Information Needed]

# Citation

Below you can find information related to citation.

**BibTeX:**

```

[More Information Needed]

```

# Model card authors

jirko

# Model description

Just the temporal regression model with a reduced set of input features.

|

Antiraedus/LeDude-dog

|

Antiraedus

| 2023-01-27T12:36:51Z | 3 | 1 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"animal",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-31T02:55:08Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- animal

widget:

- text: a photo of LeDude dog in the Acropolis

---

# DreamBooth model for the LeDude concept trained by Antiraedus on the Antiraedus/Dude dataset.

This is a Stable Diffusion model fine-tuned with DreamBooth on the LeDude concept, my 10-year-old Australian Silky Terrier.

To use it, include the concept from its `instance_prompt` in your prompt: **a photo of LeDude dog**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `dog` images for the animal theme.

## Original

## Example

## Usage

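```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('Antiraedus/LeDude-dog')
# Stable Diffusion needs a text prompt; include the "LeDude dog" concept in it
image = pipeline("a photo of LeDude dog in the Acropolis").images[0]
image
```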

|

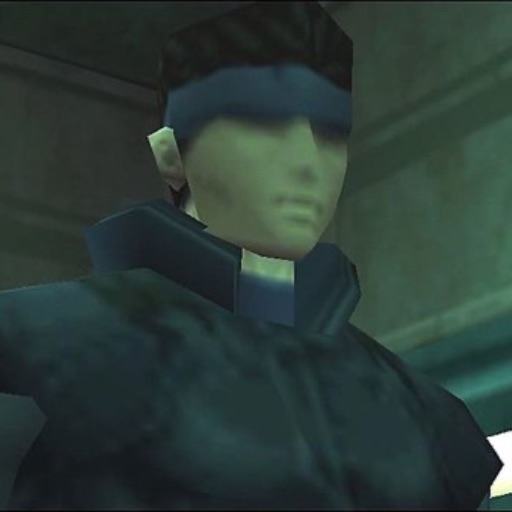

sd-dreambooth-library/retro3d

|

sd-dreambooth-library

| 2023-01-27T12:35:55Z | 13 | 31 |

diffusers

|

[

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-13T11:49:23Z |

---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

widget:
- text: trsldamrl Donald Trump
  example_title: Retro3d Donald Trump
- text: trsldamrl keanu reeves
  example_title: Retro3d Keanu Reeves
- text: trsldamrl wizard castle
  example_title: Retro3d wizard castle

---

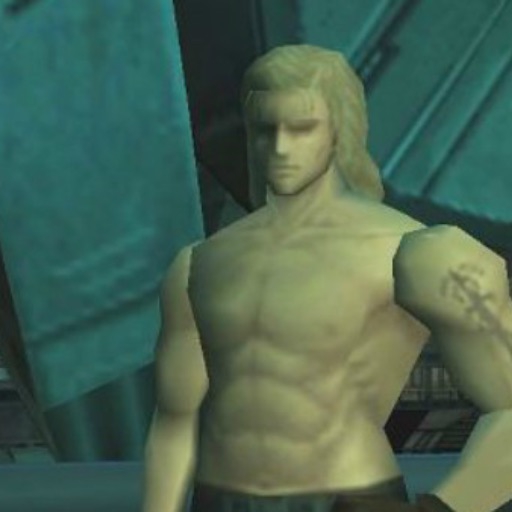

### retro3d DreamBooth model trained by abesmon with the [Hugging Face Dreambooth Training Space](https://colab.research.google.com/drive/15cxJE2SBYJ0bZwoGzkdOSvqGtgz_Rvhk?usp=sharing) on the v2-1-512 base model

You can run your new concept via `diffusers` with the [Colab Notebook for Inference](https://colab.research.google.com/drive/1FQkg1LBk99Ujpwn4fBZzGgEcuXz6-52-?usp=sharing). Don't forget to use the concept prompts!

Concept name: **trsldamrl** (include it in your prompt)

### Trained with:

|

plasmo/naturitize-sd2-1-768px

|

plasmo

| 2023-01-27T12:34:10Z | 5 | 10 |

diffusers

|

[

"diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-23T20:31:30Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: "naturitize "

---

### Jak's **Naturitize** Image Pack (SD2.1) for Stable Diffusion

**naturitize-sd2.1-768px v.1.0**

*THIS IS FOR Stable Diffusion VERSION 2.1*

You MUST also place the **naturitize-SD2.1-768px.yaml** file in the same directory as your model file (it will be uploaded here for your convenience).

Besides being trained from SD2.1, this model also lets you mix and match embeddings in your images!
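The `.yaml` requirement above exists because front ends such as the AUTOMATIC1111 web UI look for a config file next to the checkpoint. That file has the checkpoint's base name with a `.yaml` suffix. Here is a small sketch of that check. It assumes the checkpoint is saved as `naturitize-SD2.1-768px.ckpt`; the card names only the `.yaml` file. The models folder is also hypothetical.

```python
from pathlib import Path


def config_path_for(checkpoint: Path) -> Path:
    """Config expected next to a checkpoint: same folder, same base name, .yaml suffix."""
    return checkpoint.with_suffix(".yaml")


def has_matching_config(checkpoint: Path) -> bool:
    """True if the matching .yaml file exists in the checkpoint's directory."""
    return config_path_for(checkpoint).is_file()


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever your UI keeps its models.
    ckpt = Path("models/Stable-diffusion/naturitize-SD2.1-768px.ckpt")
    print(config_path_for(ckpt), has_matching_config(ckpt))
```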

--------------------

Another Jak Texture Pack release is here to help you make your earthy creations!

Trained using 112 (768px) training images, 8000 training steps, 500 Text_Encoder_steps.

Prompt: put "**naturitize**" at the beginning of your prompt, followed by a word. *No major prompt-crafting needed*.

Thanks to /u/Jak_TheAI_Artist and /u/okamiueru for creating training images!

Sample pictures of this concept:

|

plasmo/wooditize-sd2-1-768px

|

plasmo

| 2023-01-27T12:33:44Z | 9 | 8 |

diffusers

|

[

"diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-20T21:22:01Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: "wooditize "

---

### Jak's **WOODitize** Image Pack (SD2.1) for Stable Diffusion

**wooditize-sd2.1-768px v.1.0**

*THIS IS FOR Stable Diffusion VERSION 2.1*

You MUST also place the **wooditize-SD2.1-768px.yaml** file in the same directory as your model file (it will be uploaded here for your convenience).

Besides being trained from SD2.1, this model also lets you mix and match embeddings in your images!

--------------------

Another Jak Texture Pack Release is here to help create WOOD cutouts and dioramas!

Trained using 111 (768px) training images, 8000 training steps, 500 Text_Encoder_steps.

Prompt: put "**wooditize**" at the beginning of your prompt, followed by a word. *No major prompt-crafting needed*.

Thanks to /u/Jak_TheAI_Artist for creating training images!

Sample pictures of this concept:


|

plasmo/clayitization-sd2-1-768px

|

plasmo

| 2023-01-27T12:33:33Z | 8 | 18 |

diffusers

|

[

"diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-19T10:54:13Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: "clayitization "

---

### Jak's **Clayitization** Image Pack (SD2.1) for Stable Diffusion

**clayitization-sd2.1-768px v.1.0**

*THIS IS FOR Stable Diffusion VERSION 2.1*

You MUST also place the **clayitization-SD2.1-768px.yaml** file in the same directory as your model file (it will be uploaded here for your convenience).

Besides being trained from SD2.1, this model also lets you mix and match embeddings in your images!

--------------------

From the makers of [Woolitize](https://huggingface.co/plasmo/woolitize-768sd1-5), another versatile Jak Texture Pack is available to help unleash your Clay-itivity!

Trained using 100 (768px) training images, 8000 training steps, 500 Text_Encoder_steps.

Prompt: put "**clayitization**" at the beginning of your prompt, followed by a word. *No major prompt-crafting needed*.

Thanks to /u/Jak_TheAI_Artist for creating training images!

Tips:

- use fewer prompt words for a rawer clay look (e.g., "clayitization, brad pitt" produced the image below)

- use a square aspect ratio for portraits and a rectangular one for dioramas

- add "3d, octane render, intricate details" for more realistic details in the clay

- use 768 resolution or larger images for best results

Sample pictures of this concept:

prompt: Clayitization, cat, mdjrny-ppc (embedding) *this adds the Midjourney-papercut embedding*

prompt: Clayitization, brad pitt, inkpunk768 (embedding) *this adds the Inkpunk768 embedding*

|

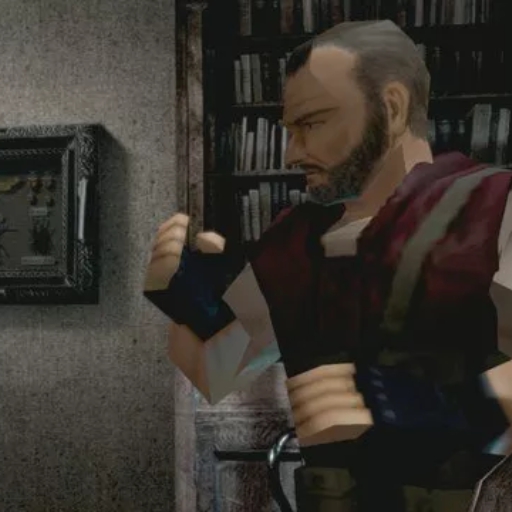

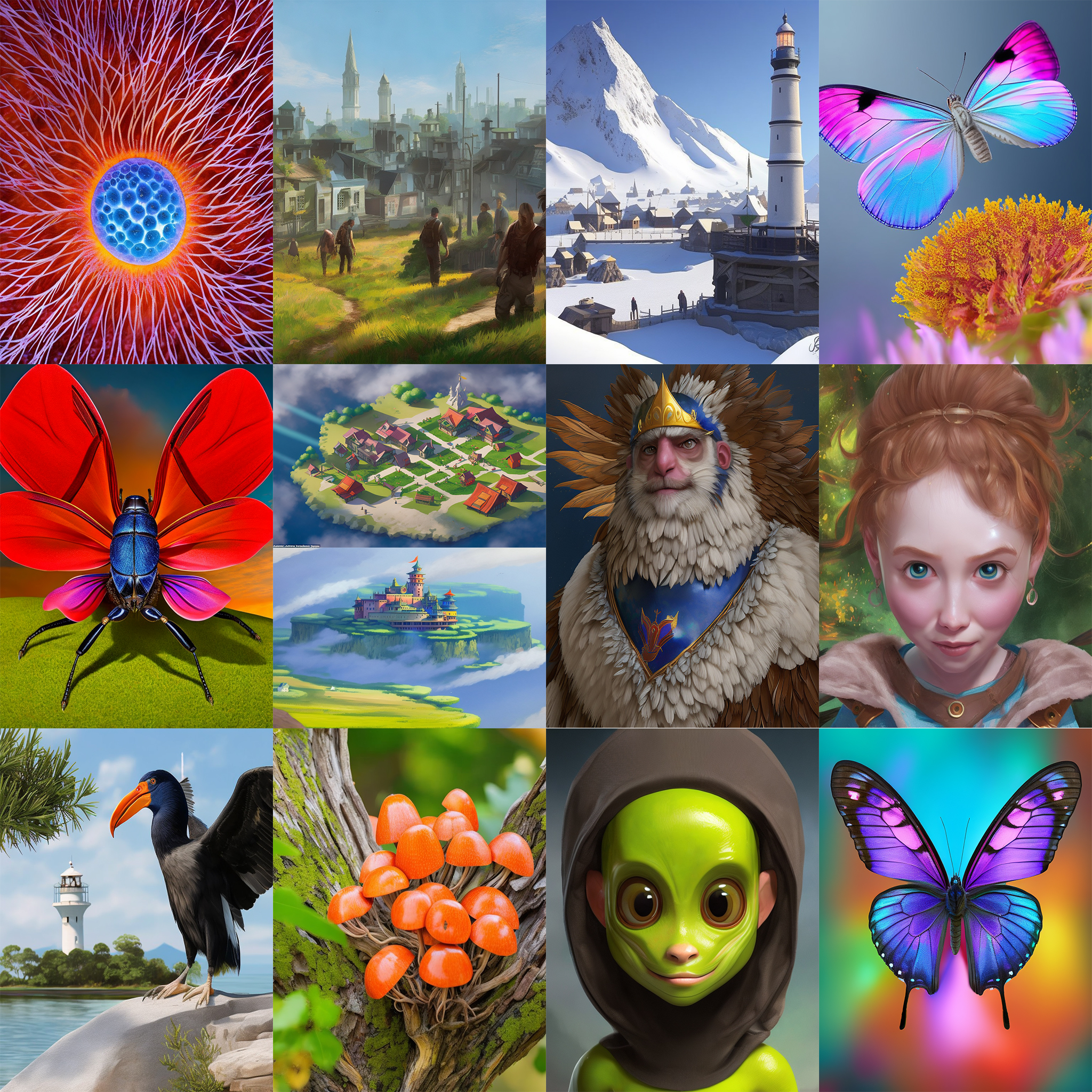

ManglerFTW/CharHelper

|

ManglerFTW

| 2023-01-27T12:04:11Z | 152 | 38 |

diffusers

|

[

"diffusers",

"stable-diffusion",

"text-to-image",

"doi:10.57967/hf/0217",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-17T20:44:25Z |

---

license: creativeml-openrail-m

tags:

- stable-diffusion

- text-to-image

---

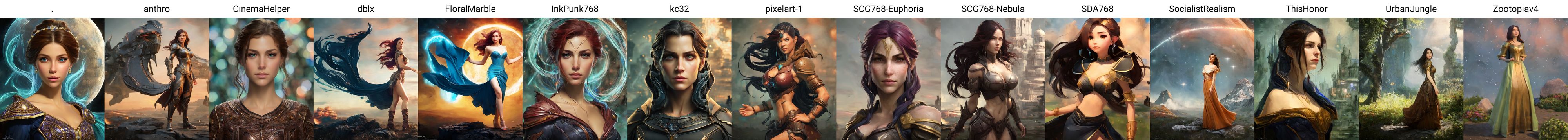

<b>Introduction:</b>

This model was trained on a digital painting style, mainly with characters and portraits. Its main objective is to serve as a tool to help with character design ideas.

Its base is Stable Diffusion V2.1, and it is trained on 768x768 images. To use it, you will need to place the .yaml file in the same directory as your model.

<b>V4:</b>

<br /><br />

File name: CharHelperV4.safetensors<br />

CharHelper V4 is a merge of CharHelper V3 and a newly trained model. This update is to provide a base for future updates. <b>All older keywords from CharHelper V3 will still work.</b>

Training subjects on this model are Aliens, Zombies, Birds, Cute styling, Lighthouses, and Macro Photography. Mix and match the styles and keywords to push the model further.

## Usage

<b>Set the VAE to Auto in settings. If you use a VAE based on an SD v1.5 model, you may not get the best results.</b>

<br />

This model has multiple keywords that can be mixed and matched to achieve a multitude of different styles. Keywords aren't strictly necessary, but they can help with styling.

<b>Keywords:</b>

<b>Character Styles:</b>

CHV3CZombie, CHV3CAlien, CHV3CBird

<b>Scenery/Styles:</b>

CHV3SLighthouse, CHV3SCute, CHV3SMacro

<b>V3 Keywords:</b>

<b>Character Styles:</b>

CHV3CKnight, CHV3CWizard, CHV3CBarb, CHV3MTroll, CHV3MDeath, CHV3CRogue, CHV3CCyberpunk, CHV3CSamurai, CHV3CRobot

<b>Scenery/Landscapes:</b>

CHV3SWorld, CHV3SSciFi

<b>WIPs (needs fine-tuning, but try it out):</b>

CHV3MDragon, CHV3CVehicle

## Examples

<b>Aliens!</b>

CHV3CAlien, a portrait of a man in a cute alien creature costume inside a spaceship, a digital rendering, by Arthur Pan, predator, ultra detailed content, face, cockroach, avp, face shown, close-up shot, hastur, very detailed<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 8, Seed: 1751637417, Size: 768x768, Model hash: 0eb3318b, ENSD: 3<br /><br />
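Each example ends with a generation-parameters line that appears to follow the format written by the AUTOMATIC1111 web UI. It lists `Steps`, `Sampler`, `CFG scale`, `Seed`, `Size` as width x height, `Model hash` and `ENSD`. To reproduce an example in another tool, parsing that line into typed fields can help. This is a minimal sketch that assumes the simple comma-separated `Key: value` layout used on this card. It is not the web UI's own parser, and values that contain commas or quotes are not handled.

```python
def parse_generation_params(line: str) -> dict:
    """Parse 'Key: value, Key: value, ...' into a dict with typed values."""
    # Drop trailing HTML line breaks copied from the card.
    line = line.replace("<br />", "").strip()
    params = {}
    for part in line.split(", "):
        key, _, value = part.partition(": ")
        params[key.strip()] = value.strip()
    # Convert the numeric fields; everything else stays a string.
    for key in ("Steps", "Seed", "ENSD"):
        if key in params:
            params[key] = int(params[key])
    if "CFG scale" in params:
        params["CFG scale"] = float(params["CFG scale"])
    if "Size" in params:
        width, height = params["Size"].split("x")
        params["Size"] = (int(width), int(height))
    return params


if __name__ == "__main__":
    example = ("Steps: 10, Sampler: DPM++ SDE, CFG scale: 8, Seed: 1751637417, "
               "Size: 768x768, Model hash: 0eb3318b, ENSD: 3<br /><br />")
    print(parse_generation_params(example))
```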


<b>Psychedelic Falcons!</b>

A portrait of an anthropomorphic falcon in knight's armor made of (crystal stars) with big eyes surrounded by glowing aura, colorful sparkle feathers, highly detailed intricated concept art, trending on artstation, 8k, anime style eyes, concept art, cinematic, art award, flat shading, inked lines, artwork by wlop and loish<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 11, Seed: 2894490509, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />

<b>Macro Mushrooms!</b>

CHV3SMacro, a nighttime macro photograph of a glowing mushroom with vibrant bioluminescent caps growing on tree bark, flat lighting, under saturated, by Anna Haifisch, pexels, fine art, steampunk forest background, mobile wallpaper, roofed forest, trio, 4k vertical wallpaper, mid fall, high detail, cinematic, focus stacking, smooth, sharp focus, soft pastel colors, Professional, masterpiece, commissioned<br /><br />

Negative prompt: amateur, ((b&w)), ((close-up)), (((duplicate))), (((deformed))), blurry, (((bad proportions))), gross proportions, ugly, tiling, poorly drawn, mutation, mutated, disfigured, deformed, out of frame, blurry, bad art, text, logo, signature, watermark<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 7.5, Seed: 3958069384, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />


<b>Zombies!</b>

(CHV3CZombie:1.5), (a medium range portrait of elon musk dressed as a (rotting zombie:1.2)), Professional, masterpiece, commissioned, Artwork by Shigeru Miyamoto, attractive face, facial expression, professional hands, professional anatomy, 2 arms and 2 legs<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 9, Seed: 28710867, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />
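The bracket markup in these prompts appears to follow the AUTOMATIC1111 web UI's emphasis syntax. Each pair of round brackets multiplies a phrase's attention by 1.1, square brackets divide it by 1.1, and `(text:1.5)` sets the factor explicitly. Nested brackets multiply, so `((extra limbs))` ends up near 1.21. The phrase `(rotting zombie:1.2)`, inside another pair of brackets, ends up near 1.32. The sketch below computes those weights. It is loosely modeled on the web UI's approach and simplified: it handles no backslash escapes and does not merge chunks that share a weight.

```python
import re

ROUND = 1.1        # "(text)" multiplies attention by 1.1
SQUARE = 1 / 1.1   # "[text]" divides attention by 1.1

# Tokens: "(", "[", ":<number>)" (explicit weight), ")", "]", plain text, stray ":".
TOKEN = re.compile(r"\(|\[|:\s*([+-]?[.\d]+)\s*\)|\)|\]|[^()\[\]:]+|:")


def prompt_weights(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) chunks using ()/[]/(text:w) emphasis."""
    chunks: list[list] = []      # [text, weight] pairs, scaled in place
    round_open: list[int] = []   # chunk index where each "(" started
    square_open: list[int] = []  # chunk index where each "[" started

    def scale_from(start: int, factor: float) -> None:
        # Multiply every chunk added since the matching opening bracket.
        for chunk in chunks[start:]:
            chunk[1] *= factor

    for match in TOKEN.finditer(prompt):
        token, explicit = match.group(0), match.group(1)
        if token == "(":
            round_open.append(len(chunks))
        elif token == "[":
            square_open.append(len(chunks))
        elif explicit is not None and round_open:
            scale_from(round_open.pop(), float(explicit))
        elif token == ")" and round_open:
            scale_from(round_open.pop(), ROUND)
        elif token == "]" and square_open:
            scale_from(square_open.pop(), SQUARE)
        else:
            chunks.append([token, 1.0])
    # Unclosed brackets still apply their default multiplier.
    for start in round_open:
        scale_from(start, ROUND)
    for start in square_open:
        scale_from(start, SQUARE)
    return [(text, round(weight, 4)) for text, weight in chunks]


if __name__ == "__main__":
    print(prompt_weights("(CHV3CZombie:1.5), (a portrait of a (rotting zombie:1.2))"))
```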

<b>Lighthouses!</b>

CHV3SLighthouse, a painting of a lighthouse on a small island, polycount contest winner, cliffside town, gold, house background, highlands, tileable, artbook artwork, paid art assets, farm, crisp clean shapes, featured art, mountains, captain, dominant pose, serene landscape, warm color scheme art rendition, low detail, bay, painting, lowres, birds, cgsociety<br /><br />

Negative prompt: 3d, 3d render, b&w, bad anatomy, bad anatomy, bad anatomy, bad art, bad art, bad proportions, blurry, blurry, blurry, body out of frame, canvas frame, cartoon, cloned face, close up, cross-eye, deformed, deformed, deformed, disfigured, disfigured, disfigured, duplicate, extra arms, extra arms, extra fingers, extra legs, extra legs, extra limbs, extra limbs, extra limbs, extra limbs, fused fingers, gross proportions, long neck, malformed limbs, missing arms, missing legs, morbid, mutated, mutated hands, mutated hands, mutation, mutation, mutilated, out of frame, out of frame, out of frame, Photoshop, poorly drawn face, poorly drawn face, poorly drawn feet, poorly drawn hands, poorly drawn hands, tiling, too many fingers<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 7, Seed: 1984075962, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />

<b>Cute Creatures!</b>

CHV3SCute, CHV3CRogue, a cute cartoon fox in a rogue costume in a nordic marketplace in valhalla, concept art, deviantart contest winner, glowing flowers, dofus, epic fantasty card game art, digital art render, dmt art, cute pictoplasma, atom, award winning concept art, at sunrise, engineered, gardening, glowing and epic, awesome, neuroscience<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 9, Seed: 3708829983, Size: 768x768, Model hash: 0eb3318b, ENSD: 3<br /><br />

<b>Cool Landscapes!</b>

Studio ghibli's, castle in the sky, Professional, masterpiece, commissioned, CHV3SWorld, CHV3SLighthouse, CHV3SSciFi, pastel color palette<br /><br />

Negative prompt: 3d, 3d render, b&w, bad anatomy, bad anatomy, bad anatomy, bad art, bad art, bad proportions, blurry, blurry, blurry, body out of frame, canvas frame, cartoon, cloned face, close up, cross-eye, deformed, deformed, deformed, disfigured, disfigured, disfigured, duplicate, extra arms, extra arms, extra fingers, extra legs, extra legs, extra limbs, extra limbs, extra limbs, extra limbs, fused fingers, gross proportions, long neck, malformed limbs, missing arms, missing legs, morbid, mutated, mutated hands, mutated hands, mutation, mutation, mutilated, out of frame, out of frame, out of frame, Photoshop, poorly drawn face, poorly drawn face, poorly drawn feet, poorly drawn hands, poorly drawn hands, tiling, too many fingers, over-saturated<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 8, Seed: 2325208488, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />


<b>Even more psychedelic birds!</b>

10mm focal length, a portrait of a cute style cat-bird that is standing in the snow, made of (crystal stars) with big eyes surrounded by glowing aura, colorful sparkle feathers, highly detailed intricated concept art, trending on artstation, 8k, anime style eyes, concept art, cinematic, art award, flat shading, inked lines, artwork by wlop and loish, by Hans Werner Schmidt, flickr, arabesque, chile, green and orange theme, tim hildebrant, jasmine, h1024, gray, hummingbirds, loosely cropped, hd—h1024, green and gold, at home, diana levin, a beautiful mine, 2019<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 8, Seed: 1247149957, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />


<b>All the V3 Keywords still work nicely!</b>

(waste up):1.3 portrait of an attractive person dressed in a CHV3CCyberpunk.astronaut costume, forest in the background, smooth, sharp focus, Professional, masterpiece, commissioned, professionally drawn face, flat shading, trending on artstation, professional hands, professional anatomy, 2 arms and 2 legs, Artwork by Leonardo Davinci, and Frank Frazetta<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, amateur, ((b&w)), ((close-up)), (((duplicate))), (((deformed))), blurry, (((bad proportions))), gross proportions, ugly, tiling, poorly drawn, mutation, mutated, disfigured, deformed, out of frame, blurry, bad art, text, logo, signature, watermark, (fire)<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 7, Seed: 2298273614, Size: 768x896, Model hash: 0eb3318b, ENSD: 3<br /><br />

<b>V3:</b>

<br /><br />

The file name is CharHelperV3.ckpt or CharHelperV3.safetensors<br />

Completely retrained from the beginning using a fundamentally different process from CharHelper V1 and V2. This new model is much more diverse in range and can produce some amazing results.

It was also trained on multiple subjects and styles, including buildings, vehicles, and landscapes.

## Usage

<b>Set the VAE to Auto in settings. If you use a VAE based on an SD v1.5 model, you may not get the best results.</b>

<br />

This model has multiple keywords that can be mixed and matched to achieve a wide range of styles. Keywords aren't required, but they can help with styling.

Keywords:

Character Styles:

CHV3CKnight, CHV3CWizard, CHV3CBarb, CHV3MTroll, CHV3MDeath, CHV3CRogue, CHV3CCyberpunk, CHV3CSamurai, CHV3CRobot

Scenery/Landscapes:

CHV3SWorld, CHV3SSciFi

WIPs (need fine-tuning, but try them out):

CHV3MDragon, CHV3CVehicle
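Because keywords are ordinary comma-separated prompt tokens, mixing them is plain string assembly. Below is a hypothetical helper that places the chosen keywords in front of a subject. It reports keywords outside the lists above instead of rejecting them, because the examples in this card also use tokens such as CHV3SCute and CHV3SLighthouse that the lists do not include.

```python
# Keywords as listed in this card's Usage section
CHARACTER_STYLES = ["CHV3CKnight", "CHV3CWizard", "CHV3CBarb", "CHV3MTroll", "CHV3MDeath",
                    "CHV3CRogue", "CHV3CCyberpunk", "CHV3CSamurai", "CHV3CRobot"]
SCENERY = ["CHV3SWorld", "CHV3SSciFi"]
WORK_IN_PROGRESS = ["CHV3MDragon", "CHV3CVehicle"]
DOCUMENTED = set(CHARACTER_STYLES + SCENERY + WORK_IN_PROGRESS)


def build_prompt(subject: str, keywords: list[str]) -> tuple[str, list[str]]:
    """Put the keywords in front of the subject; also return any not in the lists above."""
    undocumented = [k for k in keywords if k not in DOCUMENTED]
    return ", ".join([*keywords, subject]), undocumented


prompt, extra = build_prompt("a cute cartoon fox in a rogue costume", ["CHV3SCute", "CHV3CRogue"])
print(prompt)
print("not in the keyword lists:", extra)
```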

**Mix & Match Styles:**


<b>Mix & Match "CHV3CCyberpunk.grim reaper"</b>

A realistic detail of a mid-range, full-torso, waist-up character portrait of a (CHV3CCyberpunk.grim reaper) costume with beautiful artistic scenery in the background, trending on artstation, 8k, hyper detailed, artstation, concept art, hyper realism, ultra-real, digital painting, cinematic, art award, highly detailed, attractive face, professional hands, professional anatomy, (2 arms, 2 hands)<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 1840075390, Size: 768x896, Model hash: cba4df56, ENSD: 3
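The mix-and-match form joins a style keyword and a free-text subject with a `.` inside parentheses, as in `(CHV3CCyberpunk.grim reaper)`. The sketch below uses hypothetical helper names and rebuilds the opening of the example prompt above so that any keyword and subject can be swapped in.

```python
def mix_and_match(keyword: str, subject: str) -> str:
    """Join a style keyword and a subject with '.', wrapped in parentheses."""
    return f"({keyword}.{subject})"


def portrait_prompt(keyword: str, subject: str) -> str:
    # Opening of the "Mix & Match" example prompt, with the mixed term swapped in
    return (
        "A realistic detail of a mid-range, full-torso, waist-up character portrait "
        f"of a {mix_and_match(keyword, subject)} costume with beautiful artistic "
        "scenery in the background"
    )


print(portrait_prompt("CHV3CCyberpunk", "grim reaper"))
print(portrait_prompt("CHV3CSamurai", "astronaut"))
```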

**Works with embeddings:**

<b>Mix & Match "." at the beginning with embedding keywords</b>

., CHV3CWizard, modelshoot style mid-range character detail of a beautiful young adult woman wearing an intricate sorceress gown (casting magical spells under the starry night sky), 23 years old, magical energy, trending on artstation, 8k, hyper detailed, artstation, hyper realism, ultra-real, commissioned professional digital painting, cinematic, art award, highly detailed, attractive face, professional anatomy, (2 professional arms, 2 professional hands), artwork by Leonardo Davinci<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 40, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 2891848182, Size: 768x896, Model hash: cba4df56, ENSD: 3

## Character Examples

<b>Magical Sorceress</b>

., CHV3CWizard, CHV3CBarb, modelshoot style mid-range close-up of a beautiful young adult woman wearing an intricate sorceress gown casting magical spells under the starry night sky, magical energy, trending on artstation, 8k, hyper detailed, artstation, hyper realism, ultra-real, commissioned professional digital painting, cinematic, art award, highly detailed, attractive face, professional anatomy, (2 professional arms, 2 professional hands), artwork by Leonardo Davinci<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 3460729168, Size: 768x896, Model hash: cba4df56, ENSD: 3


<b>Female Death Troll</b>

a (mid-range) portrait of an ugly green-skinned female Death Troll in a Samurai outfit in a dark spooky forest, cinematic, high detail, artwork by wlop, and loish, Professional, masterpiece, commissioned, (attractive face), facial expression, 4k, polycount contest winner, trending on artstation, professional hands, professional anatomy, 2 arms and 2 legs, CHV3CSamurai, CHV3MTroll, CHV3MDeath, Artwork by Leonardo Davinci, Frank Frazetta, Loish and Wlop<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, ((disfigured)), ((bad art)), ((deformed)),((extra limbs)), ((extra barrel)),((close up)),((b&w)), weird colors, blurry, (((duplicate))), ((morbid)), ((mutilated)), [out of frame], extra fingers, mutated hands, ((poorly drawn hands)), ((poorly drawn face)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (((tripod))), (((tube))), Photoshop, ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (((umbrella)))<br /><br />

Steps: 10, Sampler: DPM++ SDE, CFG scale: 9, Seed: 1999542482, Size: 768x896, Model hash: cba4df56, ENSD: 3


<b>Astronaut</b>

A realistic detail of a character portrait of a person in a(n) (CHV3CCyberpunk.astronaut) costume with beautiful scenery in the background, trending on artstation, 8k, hyper detailed, artstation, full body frame, complete body, concept art, hyper realism, ultra real, watercolor, cinematic, art award, highly detailed, attractive face, facial expression, professional hands, professional anatomy, 2 arms and 2 legs<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, ((disfigured)), ((bad art)), ((deformed)),((extra limbs)), ((extra barrel)),((close up)),((b&w)), weird colors, blurry, (((duplicate))), ((morbid)), ((mutilated)), [out of frame], extra fingers, mutated hands, ((poorly drawn hands)), ((poorly drawn face)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (((tripod))), (((tube))), Photoshop, ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (((umbrella)))<br /><br />

Steps: 40, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 1369534527, Size: 768x896, Model hash: cba4df56, ENSD: 3


<b>Cyberpunk Grim Reaper</b>

A realistic detail of a (mid-range) full torso character portrait of a(n) (CHV3CCyberpunk.grim reaper) costume with artistic scenery in the background, trending on artstation, 8k, hyper detailed, artstation, concept art, hyper realism, ultra-real, digital oil painting, cinematic, art award, highly detailed, attractive face, facial expression, professional hands, professional anatomy, 2 arms<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, amateur, ((extra limbs)), ((extra barrel)), ((b&w)), close-up, (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon<br /><br />

Steps: 10, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 1823979933, Size: 768x896, Model hash: cba4df56, ENSD: 3

<b>Beautiful Sorceress</b>

., CHV3CWizard, a close-up:.4 of a beautiful woman wearing an intricate sorceress gown casting magical spells under the starry night sky, magical energy, trending on artstation, 8k, hyper detailed, artstation, concept art, hyper realism, ultra-real, digital painting, cinematic, art award, highly detailed, attractive face, professional hands, professional anatomy, (2 arms, 2 hands)<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 10, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 785469078, Size: 768x896, Model hash: cba4df56, ENSD: 3


<b>It does well with some animals</b>

mid-range modelshoot style detail, (extremely detailed 8k wallpaper), A detailed portrait of an anthropomorphic furry tiger in a suit and tie, by justin gerard and greg rutkowski, digital art, realistic painting, dnd, character design, trending on artstation, Smoose2, CHV3CBarb<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, 3d, 3d render, b&w, bad anatomy, bad anatomy, bad anatomy, bad art, bad art, bad proportions, blurry, blurry, blurry, body out of frame, canvas frame, cartoon, cloned face, close up, cross-eye, deformed, deformed, deformed, disfigured, disfigured, disfigured, duplicate, extra arms, extra arms, extra fingers, extra legs, extra legs, extra limbs, extra limbs, extra limbs, extra limbs, fused fingers, gross proportions, long neck, malformed limbs, missing arms, missing legs, morbid, mutated, mutated hands, mutated hands, mutation, mutation, mutilated, out of frame, out of frame, out of frame, Photoshop, poorly drawn face, poorly drawn face, poorly drawn feet, poorly drawn hands, poorly drawn hands, tiling, too many fingers<br /><br />

Steps: 30, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 1989203255, Size: 768x896, Model hash: cba4df56, ENSD: 3

<br /><br />

## Other Examples

Check out CHV3SSciFi, CHV3SWorld, and CHV3CVehicle for non-character images.<br />


<b>Church in CHV3MDeath Styling</b>

a ((((toon)))) style detail of a ((fantasy, ((((cartoon)))) gothic church with beautiful landscaping in a dense forest, in the style of CHV3SWorld and CHV3MDeath)) [ :, ((thick black ink outlines)), ((((penned lines, flat shading, doodled lines)))), anime style illustration, dofus style, stylized, digital painting, high detail, professional, masterpiece, Artwork by studio ghibli and Shigeru Miyamoto:.15]<br /><br />

Negative prompt: NegLowRes-2400, NegMutation-500, disfigured, distorted face, mutated, malformed, poorly drawn, ((odd proportions)), noise, blur, missing limbs, ((ugly)), text, logo, over-exposed, over-saturated, over-exposed, ((over-saturated))<br /><br />

Steps: 35, Sampler: Euler a, CFG scale: 13.5, Seed: 631476138, Size: 1024x768, Model hash: cba4df56, Denoising strength: 0.7, ENSD: 3, First pass size: 768x768

<b>A group of people looking at a spaceship</b>

CHV3CVehicle, an artistic detail of a man standing on top of a lush green field with a giant spaceship in the sky, by Christopher Balaskas, retrofuturism, retro spaceships parked outside, beeple and jeremiah ketner, shipfleet on the horizon, palace floating in the sky, lucasfilm jesper ejsing, of a family leaving a spaceship, highly detailed fantasy art, bonestell, stålenhag, trending on artstation, 8k, hyper detailed, artstation, hyper realism, ultra-real, commissioned professional digital painting, cinematic, art award, highly detailed, attractive face, professional anatomy, (2 professional arms, 2 professional hands), artwork by Leonardo Davinci<br /><br />

Negative prompt: amateur, ((extra limbs)), ((extra barrel)), ((b&w)), ((close-up)), (((duplicate))), ((mutilated)), extra fingers, mutated hands, (((deformed))), blurry, (((bad proportions))), ((extra limbs)), cloned face, out of frame, extra limbs, gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (tripod), (tube), ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, crossed eyes, dead eyes, body out of frame, blurry, bad art, bad anatomy, (umbrella), weapon, sword, dagger, katana, cropped head<br /><br />

Steps: 40, Sampler: DPM++ SDE Karras, CFG scale: 9, Seed: 2722466703, Size: 768x896, Model hash: cba4df56, ENSD: 3

<br /><br /><br />

<b>V2:</b>

Trained for an additional 5000 steps. Results are much more stable, a major improvement over V1. Don't forget to add the YAML file to your models directory.

The V2 checkpoint filename is CharHelper_v2_SDv2_1_768_step_8500.ckpt

## Usage

This model tends to prefer the higher CFG scale range: values of 7-15 give good results. Images come out well at 768x768 resolution and above.
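The CFG scale is the classifier-free guidance weight. At each denoising step the sampler combines an unconditional noise prediction with a prompt-conditioned one and extrapolates along their difference, so higher values follow the prompt more strongly. Here is a minimal sketch of that combination on plain lists (a real pipeline applies it to latent tensors; the function name is hypothetical), evaluated at both ends of the card's recommended 7-15 range:

```python
def apply_cfg(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# scale=1 returns the conditional prediction; larger scales push further along (cond - uncond)
for scale in (1.0, 7.0, 15.0):
    print(scale, apply_cfg([0.0, 0.5], [0.2, 0.5], scale))
```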

A good prompt to start with is:

(a cyberpunk rogue), charhelper, ((close up)) portrait, digital painting, artwork by leonardo davinci, high detail, professional, masterpiece, anime, stylized, face, facial expression, inkpunk, professional anatomy, professional hands, anatomically correct, colorful

Negative:

((bad hands)), disfigured, distorted face, mutated, malformed, bad anatomy, mutated feet, bad feet, poorly drawn, ((odd proportions)), noise, blur, missing fingers, missing limbs, long torso, ((ugly)), text, logo, over-exposed, over-saturated, ((bad anatomy)), over-exposed, ((over-saturated)), (((weapon))), long neck, black & white, ((glowing eyes))

Replace the subject in the leading parentheses with your own. You can also replace "((close up))" with "((mid range))". These worked best for me, but I'm excited to see what everyone else can do with it.
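That substitution can be written as a template. The sketch below uses hypothetical names. `subject` fills the leading parentheses, and `framing` is "close up" or "mid range" as the card suggests. With the defaults, it reproduces the starter prompt above verbatim.

```python
STARTER_TEMPLATE = (
    "({subject}), charhelper, (({framing})) portrait, digital painting, "
    "artwork by leonardo davinci, high detail, professional, masterpiece, anime, "
    "stylized, face, facial expression, inkpunk, professional anatomy, "
    "professional hands, anatomically correct, colorful"
)


def starter_prompt(subject: str = "a cyberpunk rogue", framing: str = "close up") -> str:
    """Fill the V2 starter prompt with a subject and a framing."""
    return STARTER_TEMPLATE.format(subject=subject, framing=framing)


print(starter_prompt("a rugged samurai man", framing="mid range"))
```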

## Examples

Below are some examples of images generated using this model:

**A Woman with Los Muertos Skull Facepaint:**

**Rugged Samurai Man:**

**Space Girl:**

**Raver Girl with HeadPhones:**

**CyberPunk Rogue:**

**Toon Animal:**

**Female Astronaut:**

**Japanese Samurai:**

**Bell Head:**

**Native American Chief:**

**CyberPunk Buddha:**

**Alien Boy:**

**Los Muertos Facepaint 2:**

**Robot Fighter:**

**Video Game Elf Character:**

<b>V1:</b>

Trained for 3500 steps on SD v2.1 using TheLastBen's Fast Dreambooth.

Usage:

Use CharHelper in the prompt to bring out the style. Other prompt terms that work well are 'Character Art', 'Close-up/Mid-range Character portrait', 'Digital Painting', 'Digital Illustration', 'Stylized', and 'anime'.

The model still needs work on anatomy, and full-body images may need inpainting to fix faces, but there are plans to fine-tune it further to improve results.

|

neurator/mnunit1

|

neurator

| 2023-01-27T12:01:34Z | 5 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-01-27T12:01:10Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 282.77 +/- 15.37

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

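```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# load_from_hub downloads the file and returns its local path.
# The checkpoint filename is not stated in this card; take it from the repo's file list.
checkpoint = load_from_hub(repo_id="neurator/mnunit1", filename="...")
model = PPO.load(checkpoint)
```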
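The `mean_reward` value in the metadata (282.77 +/- 15.37) is a mean and standard deviation of returns over evaluation episodes. stable-baselines3's `evaluate_policy` computes these with `np.mean` and `np.std`, which is the population standard deviation. Below is a dependency-free sketch of the same arithmetic. The function name is hypothetical, and the sample returns are illustrative only, not this agent's results.

```python
import statistics


def summarize_rewards(episode_rewards: list[float]) -> str:
    """Format per-episode returns as 'mean +/- std' (population std, ddof=0)."""
    mean = statistics.fmean(episode_rewards)
    std = statistics.pstdev(episode_rewards)
    return f"{mean:.2f} +/- {std:.2f}"


# Illustrative returns only, not results from this agent
print(summarize_rewards([250.0, 270.0, 290.0]))
```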

|

Uswa04/q-FrozenLake-v1-4x4-noSlippery

|

Uswa04

| 2023-01-27T11:57:49Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-01-27T11:57:41Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

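```python
import pickle

import gym
from huggingface_hub import hf_hub_download

# Download the pickled model dict and load it
path = hf_hub_download(repo_id="Uswa04/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")
with open(path, "rb") as f:
    model = pickle.load(f)

# This agent was trained on the non-slippery map, so pass is_slippery=False
env = gym.make(model["env_id"], is_slippery=False)
```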
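A tabular Q-learning agent acts greedily on its Q-table and learns it with the update Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)). The sketch below assumes the table is a list of per-state rows; this card does not document how the pickled model stores it, and the function names are hypothetical. Terminal transitions are handled implicitly, because terminal rows are never updated and stay at zero.

```python
def greedy_action(qtable: list[list[float]], state: int) -> int:
    """Index of the highest Q-value in this state's row (first one on ties, like numpy.argmax)."""
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


def q_learning_update(qtable, state, action, reward, next_state, alpha, gamma):
    """One tabular Q-learning step, updating qtable in place."""
    td_target = reward + gamma * max(qtable[next_state])
    qtable[state][action] += alpha * (td_target - qtable[state][action])


# Toy 2-state, 4-action table (FrozenLake actions: 0=left, 1=down, 2=right, 3=up)
q = [[0.0] * 4 for _ in range(2)]
q_learning_update(q, state=0, action=2, reward=1.0, next_state=1, alpha=0.5, gamma=0.9)
print(q[0], greedy_action(q, 0))
```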

|

danishfayaznajar09/firstRL_PPO

|

danishfayaznajar09

| 2023-01-27T11:56:45Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-01-27T11:56:19Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 259.95 +/- 16.20

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

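```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# load_from_hub downloads the file and returns its local path.
# The checkpoint filename is not stated in this card; take it from the repo's file list.
checkpoint = load_from_hub(repo_id="danishfayaznajar09/firstRL_PPO", filename="...")
model = PPO.load(checkpoint)
```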

|

vumichien/StarTrek-starship

|

vumichien

| 2023-01-27T11:53:28Z | 7 | 8 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"science",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-15T10:21:00Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- science

widget:

- text: A painting of StarTrek starship, Michelangelo style

---

# DreamBooth model for the StarTrek concept trained by vumichien on the vumichien/spaceship_star_trek dataset.

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/1_dlgd3k5ZecT17cJOrg2NdA.jpeg" alt="StarTrek starship">

This is a Stable Diffusion model fine-tuned on the StarTrek concept with DreamBooth. It can be used by modifying the `instance_prompt`: **StarTrek starship**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `starship` images for the science theme.

## Examples

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Leonardo%20Da%20Vinci%20style.png" alt="StarTrek starship - Leonardo Da Vinci style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Leonardo Da Vinci style

</figcaption>

</figure>

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Michelangelo%20style.png" alt="StarTrek starship - Michelangelo style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Michelangelo style

</figcaption>

</figure>

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Botero%20style.png" alt="StarTrek starship - Botero style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Botero style

</figcaption>

</figure>

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Pierre-Auguste%20Renoir%20style.png" alt="StarTrek starship - Pierre-Auguste Renoir style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Pierre-Auguste Renoir style

</figcaption>

</figure>

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Vincent%20Van%20Gogh%20style.png" alt="StarTrek starship - Vincent Van Gogh style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Vincent Van Gogh style

</figcaption>

</figure>

<figure>

<img src="https://huggingface.co/vumichien/StarTrek-starship/resolve/main/Rembrandt%20style.png" alt="StarTrek starship - Rembrandt style">

<figcaption>Text prompt used for generation: A painting of StarTrek starship, Rembrandt style

</figcaption>

</figure>
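All six captions above follow one template, `A painting of StarTrek starship, <artist> style`. The sketch below regenerates those prompt strings (the helper name is hypothetical). Each string could then be passed to the pipeline from the Usage section, e.g. `pipeline(prompt).images[0]`.

```python
# Artist names taken from the six example captions above
STYLES = ("Leonardo Da Vinci", "Michelangelo", "Botero",
          "Pierre-Auguste Renoir", "Vincent Van Gogh", "Rembrandt")


def style_prompts(concept: str = "StarTrek starship", styles=STYLES) -> list[str]:
    """Build one 'A painting of <concept>, <artist> style' prompt per artist."""
    return [f"A painting of {concept}, {style} style" for style in styles]


for prompt in style_prompts():
    print(prompt)
```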

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('vumichien/StarTrek-starship')

image = pipeline("A painting of StarTrek starship, Michelangelo style").images[0]

image

```

|

Thabet/sssimba-cat

|

Thabet

| 2023-01-27T11:51:16Z | 3 | 0 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"animal",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-06T11:51:26Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- animal

widget:

- text: a photo of sssimba cat in the Acropolis

---

# DreamBooth model for the sssimba concept trained by Thabet on the Thabet/Simba_dataset dataset.

This is a Stable Diffusion model fine-tuned on the sssimba concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of sssimba cat**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `cat` images for the animal theme.

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('Thabet/sssimba-cat')

image = pipeline("a photo of sssimba cat in the Acropolis").images[0]

image

```
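DreamBooth binds the new concept to a rare identifier token (here `sssimba`) placed before its class noun (`cat`). New prompts therefore tend to work best when they keep that pair together and change only the surrounding context. The helper below is hypothetical. With the context from the widget example, it reproduces that prompt.

```python
def concept_prompt(context: str = "", identifier: str = "sssimba", class_noun: str = "cat") -> str:
    """Keep the '<identifier> <class noun>' pair intact and append the new context."""
    return f"a photo of {identifier} {class_noun} {context}".strip()


print(concept_prompt("in the Acropolis"))
print(concept_prompt("sleeping on a pile of books"))
```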

|

chenglu/xiaocaicai-dog-heywhale

|

chenglu

| 2023-01-27T11:50:19Z | 10 | 1 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"animal",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-14T07:35:34Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- animal

widget:

- text: illustration of a xiaocaicai dog sitting on top of the deck of a battle ship

traveling through the open sea with a lot of ships surrounding it

---

# DreamBooth model for the xiaocaicai concept trained by chenglu.

This is a Stable Diffusion model fine-tuned on the xiaocaicai concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of xiaocaicai dog**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `dog` images for the animal theme, created for the Hugging Face DreamBooth Hackathon by the HF CN Community in collaboration with HeyWhale.

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('chenglu/xiaocaicai-dog-heywhale')

image = pipeline("a photo of xiaocaicai dog").images[0]

image

```

## Some examples

Prompt: oil painting of a xiaocaicai dog wearing sunglasses by van gogh and by andy warhol

Prompt: a black and white photograph of xiaocaicai dog wearing sunglasses by annie lebovitz, highly-detailed

|

chenglu/caicai-dog-heywhale

|

chenglu

| 2023-01-27T11:50:04Z | 5 | 2 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"animal",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-13T05:39:28Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- animal

widget:

- text: caicai dog sitting on top of the deck of a battle ship traveling through the

open sea with a lot of ships surrounding it

---

# DreamBooth model for the caicai concept trained by chenglu.

This is a Stable Diffusion model fine-tuned on the caicai concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of caicai dog**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `dog` images for the animal theme, created for the Hugging Face DreamBooth Hackathon by the HF CN Community in collaboration with HeyWhale.

Thanks to @hhhxynh in the HF China community.

## Usage

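```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('chenglu/caicai-dog-heywhale')

# The pipeline requires a text prompt; include the concept token "caicai dog".
image = pipeline("a photo of caicai dog").images[0]

image  # displays the generated PIL image in a notebook
```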

|

chenglu/taolu-road-heywhale

|

chenglu

| 2023-01-27T11:49:32Z | 4 | 2 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"landscape",

"heywhale",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-11T01:28:51Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- landscape

- heywhale

widget:

- text: A Godzilla sleep on the taolu road, with a ps5 in it's hand

---

# DreamBooth model for the taolu concept trained by chenglu.

This is a Stable Diffusion model fine-tuned on the taolu concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of taolu road**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `road` images for the landscape theme. It was created for the Hugging Face DreamBooth Hackathon by the Hugging Face China Community, in collaboration with the HeyWhale platform.

## Usage

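```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('chenglu/taolu-road-heywhale')

# The pipeline requires a text prompt; include the concept token "taolu road".
image = pipeline("a photo of taolu road").images[0]

image  # displays the generated PIL image in a notebook
```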

|

GeorgeBredis/space-nebulas

|

GeorgeBredis

| 2023-01-27T11:48:31Z | 3 | 3 |

diffusers

|

[

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"science",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-01-11T14:32:49Z |

---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- science

widget:

- text: a photo of corgi in space nebulas

---

# DreamBooth model for the space concept trained by GeorgeBredis on the GeorgeBredis/dreambooth-hackathon-images dataset.

This is a Stable Diffusion model fine-tuned on the space concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of space nebulas**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `nebulas` images for the science theme.

## Usage

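```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('GeorgeBredis/space-nebulas')

# The pipeline requires a text prompt; include the concept token "space nebulas".
image = pipeline("a photo of space nebulas").images[0]

image  # displays the generated PIL image in a notebook
```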

|

nlp04/kobart_4_5.6e-5_datav2_min30_lp5.0_temperature1.0

|

nlp04

| 2023-01-27T11:47:51Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-01-27T09:56:12Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: kobart_4_5.6e-5_datav2_min30_lp5.0_temperature1.0

results: []

---


# kobart_4_5.6e-5_datav2_min30_lp5.0_temperature1.0

This model is a fine-tuned version of [gogamza/kobart-base-v2](https://huggingface.co/gogamza/kobart-base-v2) on an unspecified dataset (the auto-generated card records the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 2.9891

- Rouge1: 35.4597

- Rouge2: 12.0824

- Rougel: 23.0161

- Bleu1: 29.793

- Bleu2: 16.882

- Bleu3: 9.6468

- Bleu4: 5.3654

- Gen Len: 50.6014

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 4

- eval_batch_size: 128

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0
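With `lr_scheduler_type: linear` and `lr_scheduler_warmup_ratio: 0.1`, the learning rate rises linearly from 0 to the peak of 5.6e-05 over the first 10% of optimizer steps and then falls linearly back to 0 by the last step. The sketch below re-implements that shape under the assumption that it matches the Transformers `get_linear_schedule_with_warmup` schedule, with the warmup length rounded up from the ratio. The function name `linear_warmup_lr` is hypothetical. The card does not report the total number of training steps, so the 1,000-step total in the example is illustrative only.

```python
import math


def linear_warmup_lr(step: int, total_steps: int, base_lr: float = 5.6e-5,
                     warmup_ratio: float = 0.1) -> float:
    """Learning rate at `step`: linear warmup from 0, then linear decay to 0.

    Assumed to mirror the Transformers linear schedule with a warmup ratio;
    this is an illustrative sketch, not the training code behind this card.
    """
    # Warmup length derived from the ratio, rounded up to a whole step.
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 towards base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: fall linearly from base_lr to 0 at total_steps (never negative).
    remaining = max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / remaining)


if __name__ == "__main__":
    # Hypothetical total of 1,000 steps; the real run's step count is not stated.
    for s in (0, 50, 100, 550, 1000):
        print(s, linear_warmup_lr(s, total_steps=1000))
```

The ratio-based warmup scales with the length of the run. Changing `num_epochs` therefore changes the warmup length without any edit to the warmup setting itself.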

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Bleu1 | Bleu2 | Bleu3 | Bleu4 | Gen Len |
|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:-------:|:-------:|:-------:|:------:|:-------:|
| 2.3968 | 0.47 | 5000 | 2.9096 | 32.7469 | 10.9679 | 21.4954 | 27.0594 | 15.1133 | 8.4503 | 4.564 | 48.5501 |
| 2.2338 | 0.94 | 10000 | 2.8002 | 33.2148 | 11.5121 | 22.7066 | 26.4886 | 15.0125 | 8.5792 | 4.8523 | 41.1049 |
| 1.9652 | 1.42 | 15000 | 2.7699 | 34.4269 | 11.8551 | 22.8478 | 28.2628 | 16.0909 | 9.0427 | 4.9254 | 46.9744 |
| 2.001 | 1.89 | 20000 | 2.7201 | 34.157 | 11.8683 | 22.6775 | 28.3593 | 16.1361 | 9.221 | 4.8616 | 46.979 |
| 1.6433 | 2.36 | 25000 | 2.7901 | 33.6354 | 11.5761 | 22.6878 | 27.6475 | 15.6571 | 8.8372 | 4.8672 | 43.9953 |
| 1.6204 | 2.83 | 30000 | 2.7724 | 34.9611 | 12.1606 | 23.0246 | 29.1014 | 16.6689 | 9.3661 | 5.1916 | 48.8811 |
| 1.2955 | 3.3 | 35000 | 2.8970 | 35.896 | 12.7037 | 23.3781 | 29.9701 | 17.3963 | 10.2978 | 5.9339 | 49.5921 |
| 1.3501 | 3.78 | 40000 | 2.8854 | 35.2981 | 12.1133 | 23.1845 | 29.483 | 16.7795 | 9.4124 | 5.2042 | 48.5897 |
| 1.0865 | 4.25 | 45000 | 2.9912 | 35.581 | 12.5145 | 23.2262 | 29.9364 | 17.2064 | 10.0427 | 5.62 | 48.31 |
| 1.052 | 4.72 | 50000 | 2.9891 | 35.4597 | 12.0824 | 23.0161 | 29.793 | 16.882 | 9.6468 | 5.3654 | 50.6014 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

emre/mybankconcept

|

emre

| 2023-01-27T11:45:23Z | 27 | 1 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"Bank",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-11-28T22:18:40Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

- Bank

---

### MyBankConcept Dreambooth model trained by emre

I fine-tuned the model on 30 photos of GarantiBBVA offices obtained from Google.

If you would like your designs to resemble the GarantiBBVA office style, this is the model you're looking for.

Try: https://huggingface.co/spaces/emre/garanti-mybankconcept-img-gen


|

alexrods/course-distilroberta-base-mrpc-glue

|

alexrods

| 2023-01-27T11:44:08Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-01-27T11:13:20Z |

---

license: apache-2.0

tags:

- text-classification

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

- f1

model-index:

- name: course-distilroberta-base-mrpc-glue

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: mrpc

split: validation

args: mrpc

metrics:

- name: Accuracy

type: accuracy

value: 0.8235294117647058

- name: F1

type: f1

value: 0.8779661016949152

---


# course-distilroberta-base-mrpc-glue

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the MRPC (Microsoft Research Paraphrase Corpus) task of the GLUE benchmark.

It achieves the following results on the evaluation set:

- Loss: 1.0204

- Accuracy: 0.8235

- F1: 0.8780
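In GLUE MRPC, label `1` marks a sentence pair whose two sentences are paraphrases of each other. F1 is normally computed with that class as the positive label. The sketch below shows how the two reported numbers are derived from a model's predictions. `accuracy_and_f1` is a hypothetical helper written for illustration; it is not the evaluation code that produced this card.

```python
from typing import Sequence, Tuple


def accuracy_and_f1(y_true: Sequence[int], y_pred: Sequence[int],
                    positive: int = 1) -> Tuple[float, float]:
    """Return (accuracy, F1) for binary labels, treating `positive` as the positive class."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    # Confusion-matrix counts for the positive (paraphrase) class.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Accuracy: share of pairs whose predicted label matches the gold label.
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # F1 is the harmonic mean of precision and recall, i.e. 2TP / (2TP + FP + FN).
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


if __name__ == "__main__":
    gold = [1, 1, 0, 0, 1]  # toy labels, not MRPC data
    pred = [1, 0, 0, 1, 1]
    print(accuracy_and_f1(gold, pred))
```

F1 ignores true negatives. On a dataset like MRPC, where most pairs are paraphrases, F1 can therefore sit above accuracy, as it does in the results reported here.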

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08