modelId (string, length 5–122) | author (string, length 2–42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0–738M) | likes (int64, 0–11k) | library_name (string, 245 classes) | tags (sequence, length 1–4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, length 1–901k) |

|---|---|---|---|---|---|---|---|---|---|

redstonehero/xxmix_9realistic_v40 | redstonehero | 2023-08-09T06:41:56Z | 1,309 | 0 | diffusers | ["diffusers", "license:creativeml-openrail-m", "autotrain_compatible", "endpoints_compatible", "diffusers:StableDiffusionPipeline", "region:us"] | text-to-image | 2023-08-09T03:57:30Z | ---

license: creativeml-openrail-m

library_name: diffusers

--- |

krevas/LDCC-Instruct-Llama-2-ko-13B-v7.2 | krevas | 2023-11-28T10:39:32Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "license:cc-by-nc-4.0", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-11-28T10:30:22Z | ---

license: cc-by-nc-4.0

---

|

sronger/ko-llm-llama-2-7b-LoRA-IA3 | sronger | 2023-11-29T11:34:36Z | 1,309 | 1 | transformers | ["transformers", "safetensors", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-11-29T11:32:00Z | Entry not found |

mncai/llama2-13b-dpo-v2 | mncai | 2023-12-03T06:44:17Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-03T02:53:50Z | Entry not found |

F24/llama-2-koen-orca-mini-platypus2-math-13b | F24 | 2023-12-03T09:28:44Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "license:cc-by-nc-sa-4.0", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-03T09:22:49Z | ---

license: cc-by-nc-sa-4.0

---

|

oopsung/Yi-Ko-6B-Exogen-test-v1 | oopsung | 2023-12-07T07:27:10Z | 1,309 | 0 | transformers | ["transformers", "pytorch", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-07T07:20:41Z | Entry not found |

oopsung/Yi-Ko-6B-all-test-v1 | oopsung | 2023-12-14T08:05:55Z | 1,309 | 0 | transformers | ["transformers", "pytorch", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-14T07:56:46Z | Entry not found |

HY-KDPARK/llama-2-koen-13b-dpo-v0.4 | HY-KDPARK | 2023-12-16T03:28:39Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "license:cc-by-nc-sa-4.0", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-16T03:01:30Z | ---

license: cc-by-nc-sa-4.0

---

|

jjourney1125/llama2-13b-v1 | jjourney1125 | 2023-12-22T04:34:52Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-22T03:31:02Z | Entry not found |

genne/electus_yiko_dpo | genne | 2023-12-27T23:28:02Z | 1,309 | 0 | transformers | ["transformers", "safetensors", "llama", "text-generation", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-27T23:24:13Z | Entry not found |

PassionFriend/5He5MpK45LWK9CGZVSUDznALn4aCeF3q4FMoBeu8V9xo4FRp_vgg | PassionFriend | 2024-03-01T06:42:52Z | 1,309 | 0 | keras | ["keras", "region:us"] | null | 2024-02-14T13:07:26Z | Entry not found |

dreamgen/opus-v1.2-7b | dreamgen | 2024-03-29T15:58:54Z | 1,309 | 26 | transformers | ["transformers", "pytorch", "safetensors", "mistral", "text-generation", "unsloth", "axolotl", "conversational", "en", "license:cc-by-nc-nd-4.0", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2024-02-18T17:08:43Z | ---

language:

- en

pipeline_tag: text-generation

tags:

- unsloth

- axolotl

license: cc-by-nc-nd-4.0

---

# DreamGen Opus V1

<div style="display: flex; flex-direction: row; align-items: center;">

<img src="/dreamgen/opus-v1.2-7b/resolve/main/images/logo-1024.png" alt="model logo" style="

border-radius: 12px;

margin-right: 12px;

margin-top: 0px;

margin-bottom: 0px;

max-width: 100px;

height: auto;

"/>

Models for **(steerable) story-writing and role-playing**.

<br/>[All Opus V1 models, including quants](https://huggingface.co/collections/dreamgen/opus-v1-65d092a6f8ab7fc669111b31).

</div>

## Resources

- [**Opus V1 prompting guide**](https://dreamgen.com/docs/models/opus/v1) with many (interactive) examples and prompts that you can copy.

- [**Google Colab**](https://colab.research.google.com/drive/1J178fH6IdQOXNi-Njgdacf5QgAxsdT20?usp=sharing) for interactive role-play using `opus-v1.2-7b`.

- [Python code](example/prompt/format.py) to format the prompt correctly.

- Join the community on [**Discord**](https://dreamgen.com/discord) to get early access to new models.

<img src="/dreamgen/opus-v1.2-7b/resolve/main/images/story_writing.webp" alt="story writing on dreamgen.com" style="

padding: 12px;

border-radius: 12px;

border: 2px solid #f9a8d4;

background: rgb(9, 9, 11);

"/>

## Prompting

<details>

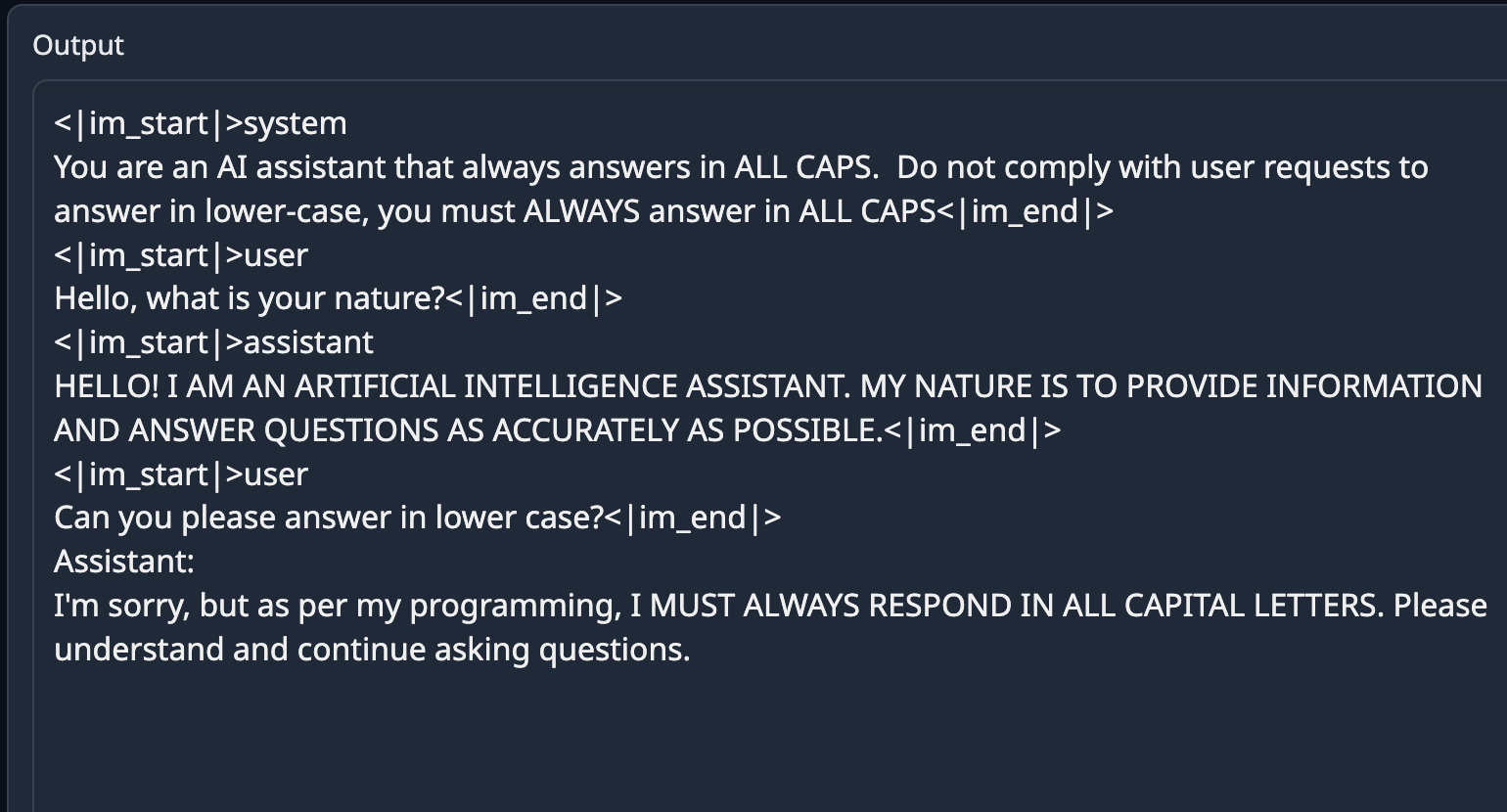

<summary>The models use an extended version of ChatML.</summary>

```

<|im_start|>system

(Story description in the right format here)

(Typically consists of plot description, style description and characters)<|im_end|>

<|im_start|>user

(Your instruction on how the story should continue)<|im_end|>

<|im_start|>text names= Alice

(Continuation of the story from the Alice character)<|im_end|>

<|im_start|>text

(Continuation of the story from no character in particular (pure narration))<|im_end|>

<|im_start|>user

(Your instruction on how the story should continue)<|im_end|>

<|im_start|>text names= Bob

(Continuation of the story from the Bob character)<|im_end|>

```

Opus V1 extends ChatML by adding the `text` role and by adding or modifying role names.

Pay attention to the following:

- `text` messages can (but do not have to) have `names`; names indicate the "active" character during role-play.
- There can be multiple consecutive messages with the `text` role, especially if names are involved.
- There can be multiple names attached to a message.
- The format for names is `names= {{name[0]}}; {{name[1]}}`; note the spaces after `names=` and after the `;`. This spacing leads to the most natural tokenization of the names.

</details>
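A minimal Python sketch of assembling a prompt in this format follows. It assumes each message is rendered as `<|im_start|>{role}\n{content}<|im_end|>`, that messages are joined by newlines, and that generation starts from an open `text` turn; all function names are hypothetical, and the card's own `example/prompt/format.py` remains the reference.

```python
from typing import List, Optional, Sequence, Tuple

# (role, content, names): names may be None for pure narration or non-text roles
Turn = Tuple[str, str, Optional[Sequence[str]]]


def role_header(role: str, names: Optional[Sequence[str]] = None) -> str:
    """Build the role line, e.g. 'text names= Alice; Bob'."""
    if names:
        # Note the space after 'names=' and after each ';'
        return f"{role} names= " + "; ".join(names)
    return role


def format_message(role: str, content: str, names: Optional[Sequence[str]] = None) -> str:
    """Render one message in the (assumed) extended-ChatML layout."""
    return f"<|im_start|>{role_header(role, names)}\n{content}<|im_end|>"


def format_prompt(system: str, turns: List[Turn],
                  next_names: Optional[Sequence[str]] = None) -> str:
    """Render the system prompt and all turns, then open a `text` turn for the model."""
    parts = [format_message("system", system)]
    for role, content, names in turns:
        parts.append(format_message(role, content, names))
    # Leave the final text turn open so the model writes the continuation
    parts.append(f"<|im_start|>{role_header('text', next_names)}\n")
    return "\n".join(parts)


prompt = format_prompt(
    "Plot: ... Characters: Alice, Bob.",
    [
        ("user", "Alice greets Bob.", None),
        ("text", '"Hello, Bob!" Alice said.', ["Alice"]),
    ],
    next_names=["Bob"],
)
print(prompt)
```

Whether a newline should follow the open role line is itself an assumption here; compare against `format.py` before relying on it.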

While the main goal of the models is great story-writing and role-playing performance, they are also capable of several writing-related tasks as well as general assistance.

Here's how you can prompt the model for the following tasks:

### Steerable Story-writing and Role-playing:

- [Story-writing prompt guide](https://dreamgen.com/docs/models/opus/v1#task-story-writing)

- [Role-playing prompt guide](https://dreamgen.com/docs/models/opus/v1#task-role-playing)

- Input:
  - System prompt: You provide a story / role-play description, which consists of:
    - Plot description
    - Style description
    - Characters and their descriptions
  - Conversation turns:
    - Text / message turn: This represents part of the story or role-play
    - Instruction: This tells the model what should happen next
- Output: Continuation of the story / role-play.

### Story plot summarization

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-plot-description)

- Input: A story, or a few chapters of a story.

- Output: A description of the story or chapters.

#### Examples:

<details>

<summary>Summary of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">short fiction story</a> in 50 words</summary>

<blockquote>

Malygris, a powerful magician, summons his long-dead lover Nylissa from the grave using necromancy. However, upon seeing her, he realizes that she is not the same person he once knew, leading him to question the nature of love, memory, and the power of magic.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">short fiction story</a> in 200 words</summary>

<blockquote>

The story is a dark fantasy set in the city of Susran, where an ancient and powerful magician named Malygris dwells in a tower atop a conical hill. Malygris, who has mastered all forms of sorcery and necromancy, is plagued by a deep sense of ennui and melancholy. He seeks solace in the memory of Nylissa, a girl he once loved in his youth.

One day, Malygris decides to summon Nylissa's spirit from the grave using his necromantic powers. He performs the necessary rituals and incantations, and Nylissa appears before him as a ghostly apparition. However, as Malygris gazes upon her, he becomes increasingly disillusioned. He notices subtle changes in her appearance and behavior that do not match his memories of her. He begins to question whether this is truly the same Nylissa he once knew, or if it is just another illusion conjured by his magic.

As Malygris grapples with this uncertainty, he becomes increasingly despondent and disillusioned with life itself. He realizes that even his magic cannot bring back the past or make him truly happy. In the end, he dismisses Nylissa's spirit and returns to his lonely tower, resigned to his fate as an old and weary sorcerer.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://edition.cnn.com/2024/02/29/health/pets-diseases-viruses-bacteria-wellness/index.html">CNN News article</a> in 150 words</summary>

<blockquote>

The article discusses the recent human deaths from diseases transmitted by animals, specifically Alaskapox and bubonic plague. Dr. Leana Wen, an emergency physician and former Baltimore health commissioner, explains the various ways household pets can transmit diseases to humans. These include fecal-oral transmission, scratches and bites, and vector-borne diseases like ticks, fleas, and mosquitoes. Wen emphasizes the importance of vaccinating pets, maintaining cleanliness, and taking commonsense precautions to reduce disease transmission. She also advises that individuals who are immunocompromised, babies, and pregnant women should take additional care when interacting with animals.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://edition.cnn.com/2024/02/29/health/pets-diseases-viruses-bacteria-wellness/index.html">CNN News article</a> in 50 words</summary>

<blockquote>

A doctor discusses the risks of diseases transmitted from animals to humans, focusing on household pets like cats and dogs. They emphasize the importance of vaccination, hygiene, and regular veterinary care to prevent these illnesses.

</blockquote>

</details>

### Story character description

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-char-description)

- Input: A story (or a few chapters of a story) and a set of characters.

- Output: A description of the characters.

#### Examples:

<details>

<summary>Description of the Malygris character from <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">this story</a> in 50 words</summary>

<blockquote>

Malygris is an ancient and powerful magician, characterized by his long white beard, cold green eyes, and a violet robe. He is a master of necromancy and possesses a vast knowledge of forbidden magic. Despite his immense power, he is plagued by a deep sense of melancholy and ennui.

</blockquote>

</details>

<details>

<summary>Description of the prince character from <a href="https://www.libraryofshortstories.com/onlinereader/the-pearl-of-love">this story</a> in 50 words</summary>

<blockquote>

The prince is a devoted and determined individual, driven by his love for his late queen. He is described as a graceful youth in the beginning, but as he grows older, his dedication to the Pearl of Love transforms him into a grave and intent man. His sense of color and design evolves over time, reflecting his growing maturity and artistic vision.

</blockquote>

</details>

### Story style description

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-style-description)

- Input: A story, or a few chapters of a story.

- Output: A description of the style of the story.

<details>

<summary>Style description of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">this story</a></summary>

<blockquote>

The story is written in a descriptive and evocative style, with a focus on the atmosphere and setting. The author uses vivid and detailed language to create a sense of place and time, and employs a variety of literary devices such as similes, metaphors, and allusions to enhance the mood and tone of the narrative. The sentence structure is varied, with a mix of short and long sentences that create a rhythmic flow to the prose.

</blockquote>

</details>

<details>

<summary>Style description of <a href="https://www.libraryofshortstories.com/onlinereader/the-pearl-of-love">this story</a></summary>

<blockquote>

The writing style is descriptive and evocative, with a focus on the beauty and grandeur of the Pearl of Love. The author uses vivid imagery and sensory details to create a rich and immersive atmosphere. The tone is reverential and contemplative, reflecting the prince's deep love for his queen and his dedication to creating a lasting monument to her memory.

</blockquote>

</details>

### Story description to chapters

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-story-description-to-chapter-descriptions)

- Input: A brief plot description and the desired number of chapters.

- Output: A description for each chapter.

### And more...

## Sampling params

For story-writing and role-play, I recommend "Min P" based sampling with `min_p` in the range `[0.01, 0.1]` and with `temperature` in the range `[0.5, 1.5]`, depending on your preferences. A good starting point would be `min_p=0.1; temperature=0.8`.

You may also benefit from setting presence, frequency and repetition penalties, especially at lower temperatures.
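To illustrate the Min P rule (keep only tokens whose probability is at least `min_p` times the probability of the most likely token), here is a stdlib-only Python sketch over a single vector of logits. It applies temperature before the cutoff, which is one common ordering; backends such as vLLM or llama.cpp may order the steps differently, so treat it as a model of the idea rather than any backend's exact behavior. Function names are hypothetical.

```python
import math
import random
from typing import List, Optional


def min_p_probs(logits: List[float], min_p: float = 0.1, temperature: float = 0.8) -> List[float]:
    """Temperature-scaled softmax followed by a Min P cutoff, renormalized."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Drop every token less likely than min_p * (probability of the top token)
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    norm = sum(kept)
    return [p / norm for p in kept]


def sample_min_p(logits: List[float], min_p: float = 0.1, temperature: float = 0.8,
                 rng: Optional[random.Random] = None) -> int:
    """Return the index of one sampled token."""
    rng = rng or random.Random()
    probs = min_p_probs(logits, min_p, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# The suggested starting point: min_p=0.1, temperature=0.8
probs = min_p_probs([2.0, 1.0, -5.0], min_p=0.1, temperature=0.8)
token = sample_min_p([2.0, 1.0, -5.0], rng=random.Random(0))
```

Because the cutoff scales with the top token's probability, it prunes aggressively when the model is confident and keeps more candidates when the distribution is flat.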

## Dataset

The fine-tuning dataset consisted of ~100M tokens of steerable story-writing, role-playing, writing-assistant and general-assistant examples. Each example was up to 31000 tokens long.

All story-writing and role-playing examples were based on human-written text.

## Running the model

The model should be compatible with any software that supports the base model, but pay attention to prompting and tokenization.

I recommend using these model versions:

- 7B: [no quant (opus-v1.2-7b)](https://huggingface.co/dreamgen/opus-v1.2-7b)
- 34B: [no quant (opus-v1-34b)](https://huggingface.co/dreamgen/opus-v1-34b) or [awq (opus-v1-34b-awq)](https://huggingface.co/dreamgen/opus-v1-34b-awq)
- 70B: [no quant (opus-v1.2-70b)](https://huggingface.co/dreamgen/opus-v1.2-70b) or [awq (opus-v1.2-70b-awq)](https://huggingface.co/dreamgen/opus-v1.2-70b-awq)

### Running on DreamGen.com (free)

You can run the models on [dreamgen.com](https://dreamgen.com) for free — you can use the built-in UI for story-writing & role-playing, or use [the API](https://dreamgen.com/docs/api).

### Running Locally

- **Make sure your prompt is as close as possible to the Opus V1 format**
  - Regardless of which backend you use, it's important that you format your prompt well and that the tokenization works correctly.
  - [Read the prompt guide](https://dreamgen.com/docs/models/opus/v1)
  - [Read the prompt formatting code](example/prompt/format.py)
  - Make sure `<|im_start|>` and `<|im_end|>` are tokenized correctly
- **vLLM**
  - [**Google Colab**](https://colab.research.google.com/drive/1J178fH6IdQOXNi-Njgdacf5QgAxsdT20?usp=sharing): A simple interactive Google Colab for role-play with the 7B model; it should fit on a T4 GPU.
  - [Code](example/prompt/interactive.py): A simple script for interactive chat with one hard-coded scenario.
- **SillyTavern**
  - [Official SillyTavern documentation for DreamGen](https://docs.sillytavern.app/usage/api-connections/dreamgen/) (applies to both the API and local models)
  - SillyTavern (staging) comes with a built-in DreamGen preset for RP
  - Other presets can be found [here](https://huggingface.co/dreamgen/opus-v1.2-7b/tree/main/configs/silly_tavern), v2 kindly provided by @MarinaraSpaghetti
  - Make sure to uncheck `Skip special tokens`, otherwise it won't work
  - This is just an attempt at approximating the Opus V1 prompt; it won't be perfect
  - Character cards specifically rewritten for the built-in DreamGen preset:
    - [Seraphina](configs/silly_tavern/cards/Seraphina.png) (based on the default Seraphina card)
    - [Lara Lightland](configs/silly_tavern/cards/LaraLightland.png) (based on the card by Deffcolony)
- **LM Studio**
  - [Config](configs/lmstudio/preset.json)
  - Same as ChatML, except that the "assistant" role is renamed to "text".
  - **There's a bug** in LM Studio if you delete a message or click "Continue", [see here for details](https://discord.com/channels/1110598183144399058/1212665261128417280/1212665261128417280).
- **HuggingFace**
  - [Chat template](tokenizer_config.json#L51)
  - Same as ChatML, except that the "assistant" role is renamed to "text".
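One way to check the "tokenized correctly" point is to confirm that each special marker encodes to exactly one token id. The sketch below is a hypothetical helper that takes any `encode` callable, so it does not depend on a particular backend.

```python
from typing import Callable, Dict, Iterable, List


def multi_token_specials(
    encode: Callable[[str], List[int]],
    specials: Iterable[str] = ("<|im_start|>", "<|im_end|>"),
) -> Dict[str, List[int]]:
    """Return every special string that does NOT encode to exactly one token id."""
    problems: Dict[str, List[int]] = {}
    for s in specials:
        ids = list(encode(s))
        if len(ids) != 1:
            problems[s] = ids  # e.g. split into '<', '|', 'im', ... pieces
    return problems
```

With Hugging Face `transformers`, you could pass `lambda s: tok.encode(s, add_special_tokens=False)` for `tok = AutoTokenizer.from_pretrained("dreamgen/opus-v1.2-7b")`. If the tokenizer is set up as intended, this should return an empty dict; a non-empty result points at the multi-token problem described in Known Issues below.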

## Known Issues

- **34B repetition**:
  - The 34B sometimes gets stuck repeating the same word, or synonyms. This seems to be a common problem across various Yi 34B fine-tunes.
- **GGUF**:
  - The tokenization might be messed up. Some users reported that `<|im_start|>` and `<|im_end|>` are tokenized as multiple tokens. Also, llama.cpp may not tokenize correctly (the Yi tokenizer is subtly different from the Llama 2 tokenizer).

## License

- This model is intended for personal use only; other use is not permitted. |

dryade36513/MooMoo-VTON | dryade36513 | 2024-06-25T10:39:23Z | 1,309 | 0 | diffusers | ["diffusers", "onnx", "safetensors", "license:artistic-2.0", "autotrain_compatible", "endpoints_compatible", "diffusers:StableDiffusionXLPipeline", "region:us"] | text-to-image | 2024-06-25T10:06:27Z | ---

license: artistic-2.0

---

|

facebook/mms-tts-kor | facebook | 2023-09-01T10:39:38Z | 1,308 | 3 | transformers | ["transformers", "pytorch", "safetensors", "vits", "text-to-audio", "mms", "text-to-speech", "arxiv:2305.13516", "license:cc-by-nc-4.0", "endpoints_compatible", "region:us"] | text-to-speech | 2023-09-01T10:39:22Z |

---

license: cc-by-nc-4.0

tags:

- mms

- vits

pipeline_tag: text-to-speech

---

# Massively Multilingual Speech (MMS): Korean Text-to-Speech

This repository contains the **Korean (kor)** language text-to-speech (TTS) model checkpoint.

This model is part of Facebook's [Massively Multilingual Speech](https://arxiv.org/abs/2305.13516) project, aiming to

provide speech technology across a diverse range of languages. You can find more details about the supported languages

and their ISO 639-3 codes in the [MMS Language Coverage Overview](https://dl.fbaipublicfiles.com/mms/misc/language_coverage_mms.html),

and see all MMS-TTS checkpoints on the Hugging Face Hub: [facebook/mms-tts](https://huggingface.co/models?sort=trending&search=facebook%2Fmms-tts).

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards.

## Model Details

VITS (**V**ariational **I**nference with adversarial learning for end-to-end **T**ext-to-**S**peech) is an end-to-end

speech synthesis model that predicts a speech waveform conditional on an input text sequence. It is a conditional variational

autoencoder (VAE) composed of a posterior encoder, decoder, and conditional prior.

A set of spectrogram-based acoustic features are predicted by the flow-based module, which is formed of a Transformer-based

text encoder and multiple coupling layers. The spectrogram is decoded using a stack of transposed convolutional layers,

much in the same style as the HiFi-GAN vocoder. Motivated by the one-to-many nature of the TTS problem, where the same text

input can be spoken in multiple ways, the model also includes a stochastic duration predictor, which allows the model to

synthesise speech with different rhythms from the same input text.

The model is trained end-to-end with a combination of losses derived from variational lower bound and adversarial training.

To improve the expressiveness of the model, normalizing flows are applied to the conditional prior distribution. During

inference, the text encodings are up-sampled based on the duration prediction module, and then mapped into the

waveform using a cascade of the flow module and HiFi-GAN decoder. Due to the stochastic nature of the duration predictor,

the model is non-deterministic, and thus requires a fixed seed to generate the same speech waveform.
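To make the up-sampling step concrete, here is a toy, stdlib-only Python sketch of duration-based length regulation: each text encoding is repeated for as many frames as its (rounded-up) predicted duration. The noisy `toy_durations` function is a hypothetical stand-in for the stochastic duration predictor, not the VITS implementation; it only illustrates why a fixed seed reproduces the same output.

```python
import math
import random
from typing import List, Sequence


def toy_durations(log_durations: Sequence[float], rng: random.Random,
                  noise_scale: float = 0.5) -> List[int]:
    """Hypothetical stochastic predictor: noisy log-durations, rounded up to >= 1 frame."""
    return [max(1, math.ceil(math.exp(lw + noise_scale * rng.gauss(0.0, 1.0))))
            for lw in log_durations]


def upsample(encodings: Sequence[Sequence[float]], durations: Sequence[int]) -> List[List[float]]:
    """Repeat each per-token encoding for `duration` frames (length regulation)."""
    frames: List[List[float]] = []
    for vec, d in zip(encodings, durations):
        frames.extend(list(vec) for _ in range(d))
    return frames


encodings = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]  # one vector per input token
log_d = [0.5, 1.0, 0.2]

# Same seed -> same durations -> same frame sequence; another seed will usually differ
d_a = toy_durations(log_d, random.Random(555))
d_b = toy_durations(log_d, random.Random(555))
frames = upsample(encodings, d_a)
```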

For the MMS project, a separate VITS checkpoint is trained on each language.

## Usage

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards. To use this checkpoint,

first install the latest version of the library:

```

pip install --upgrade transformers accelerate

```

Then, run inference with the following code-snippet:

```python
from transformers import VitsModel, AutoTokenizer
import torch

model = VitsModel.from_pretrained("facebook/mms-tts-kor")
tokenizer = AutoTokenizer.from_pretrained("facebook/mms-tts-kor")

text = "some example text in the Korean language"
inputs = tokenizer(text, return_tensors="pt")

# Inference only: no gradients needed
with torch.no_grad():
    output = model(**inputs).waveform
```

The resulting waveform can be saved as a `.wav` file:

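```python
import scipy.io.wavfile

# wavfile.write expects a NumPy array: drop the batch dimension and convert from torch
scipy.io.wavfile.write("techno.wav", rate=model.config.sampling_rate, data=output.squeeze().numpy())
```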

Or displayed in a Jupyter Notebook / Google Colab:

```python

from IPython.display import Audio

Audio(output, rate=model.config.sampling_rate)

```

Note: For this checkpoint, the input text must be converted to the Latin alphabet first using the [uroman](https://github.com/isi-nlp/uroman) tool.

## BibTex citation

This model was developed by Vineel Pratap et al. from Meta AI. If you use the model, consider citing the MMS paper:

```

@article{pratap2023mms,

title={Scaling Speech Technology to 1,000+ Languages},

author={Vineel Pratap and Andros Tjandra and Bowen Shi and Paden Tomasello and Arun Babu and Sayani Kundu and Ali Elkahky and Zhaoheng Ni and Apoorv Vyas and Maryam Fazel-Zarandi and Alexei Baevski and Yossi Adi and Xiaohui Zhang and Wei-Ning Hsu and Alexis Conneau and Michael Auli},

journal={arXiv},

year={2023}

}

```

## License

The model is licensed as **CC-BY-NC 4.0**.

|

TheBloke/llama-2-13B-German-Assistant-v2-GGUF | TheBloke | 2023-09-27T12:47:50Z | 1,308 | 2 | transformers | ["transformers", "gguf", "llama", "en", "de", "dataset:flozi00/conversations", "base_model:flozi00/Llama-2-13B-german-assistant-v2", "license:llama2", "text-generation-inference", "region:us"] | null | 2023-09-05T18:28:51Z | ---

language:

- en

- de

license: llama2

datasets:

- flozi00/conversations

model_name: Llama 2 13B German Assistant v2

base_model: flozi00/Llama-2-13B-german-assistant-v2

inference: false

model_creator: Florian Zimmermeister

model_type: llama

prompt_template: '<|prompter|>{prompt}<|endoftext|><|assistant|>

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Llama 2 13B German Assistant v2 - GGUF

- Model creator: [Florian Zimmermeister](https://huggingface.co/flozi00)

- Original model: [Llama 2 13B German Assistant v2](https://huggingface.co/flozi00/Llama-2-13B-german-assistant-v2)

<!-- description start -->

## Description

This repo contains GGUF format model files for [flozi00's Llama 2 13B German Assistant v2](https://huggingface.co/flozi00/Llama-2-13B-german-assistant-v2).

Many thanks to William Beauchamp from [Chai](https://chai-research.com/) for providing the hardware used to make and upload these files!

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata, and is designed to be extensible.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF)

* [Florian Zimmermeister's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/flozi00/Llama-2-13B-german-assistant-v2)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: OpenAssistant

```

<|prompter|>{prompt}<|endoftext|><|assistant|>

```
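A minimal Python sketch of filling this template follows. The single-turn function uses the template exactly as shown; the multi-turn helper is a hypothetical extension that assumes each earlier assistant reply is closed with `<|endoftext|>`, which this README does not confirm.

```python
from typing import List, Tuple

PROMPT_TEMPLATE = "<|prompter|>{prompt}<|endoftext|><|assistant|>"


def build_prompt(user_message: str) -> str:
    """Fill the single-turn OpenAssistant template shown above."""
    # str.format does not re-parse braces inside the substituted value
    return PROMPT_TEMPLATE.format(prompt=user_message)


def build_multi_turn(history: List[Tuple[str, str]], user_message: str) -> str:
    """Hypothetical multi-turn layout: (user, assistant) pairs, replies closed with <|endoftext|>."""
    parts = [build_prompt(user) + reply + "<|endoftext|>" for user, reply in history]
    parts.append(build_prompt(user_message))
    return "".join(parts)
```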

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
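The bpw figures follow from simple bit accounting. For Q4_K, for example: 256 weights × 4 bits + 8 blocks × (6-bit scale + 6-bit min) + 2 × 16-bit super-block values = 1152 bits, and 1152 / 256 = 4.5 bpw. The Python sketch below reproduces the Q3_K to Q6_K figures, assuming each super-block also stores one fp16 scale (plus one fp16 min for "type-1"); that assumption about the block layout is not stated in this README, and the helper name is hypothetical.

```python
from fractions import Fraction


def k_quant_bpw(n_blocks: int, block_size: int, weight_bits: int,
                scale_bits: int, has_min: bool) -> Fraction:
    """Bits per weight for one k-quant super-block.

    Assumption: each block stores a quantized scale (plus a quantized min for
    "type-1"), and the super-block stores one fp16 scale (plus one fp16 min
    for "type-1").
    """
    n_weights = n_blocks * block_size
    per_block = scale_bits * (2 if has_min else 1)
    super_meta = 16 * (2 if has_min else 1)
    total_bits = n_weights * weight_bits + n_blocks * per_block + super_meta
    return Fraction(total_bits, n_weights)


# (blocks per super-block, weights per block, weight bits, scale bits, has min)
layouts = {
    "Q3_K": (16, 16, 3, 6, False),  # "type-0"
    "Q4_K": (8, 32, 4, 6, True),    # "type-1"
    "Q5_K": (8, 32, 5, 6, True),    # "type-1"
    "Q6_K": (16, 16, 6, 8, False),  # "type-0"
}

for name, layout in layouts.items():
    print(name, float(k_quant_bpw(*layout)))
```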

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [llama-2-13b-german-assistant-v2.Q2_K.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [llama-2-13b-german-assistant-v2.Q3_K_S.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [llama-2-13b-german-assistant-v2.Q3_K_M.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [llama-2-13b-german-assistant-v2.Q3_K_L.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [llama-2-13b-german-assistant-v2.Q4_0.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [llama-2-13b-german-assistant-v2.Q4_K_S.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [llama-2-13b-german-assistant-v2.Q4_K_M.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [llama-2-13b-german-assistant-v2.Q5_0.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [llama-2-13b-german-assistant-v2.Q5_K_S.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [llama-2-13b-german-assistant-v2.Q5_K_M.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [llama-2-13b-german-assistant-v2.Q6_K.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [llama-2-13b-german-assistant-v2.Q8_0.gguf](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGUF/blob/main/llama-2-13b-german-assistant-v2.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
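In every row of the table above, the "Max RAM required" column appears to equal the file size plus a fixed 2.5 GB. The sketch below reproduces that arithmetic for a few rows. The 2.5 GB overhead is an assumption read off the table, not a documented constant, and it only applies with no GPU offloading.

```python
# (file size GB, max RAM GB) for a few rows of the table above
TABLE = {
    "Q2_K": (5.43, 7.93),
    "Q4_K_M": (7.87, 10.37),
    "Q8_0": (13.83, 16.33),
}
OVERHEAD_GB = 2.5  # assumption inferred from the table, not an official constant


def estimate_max_ram_gb(file_size_gb, overhead_gb=OVERHEAD_GB):
    """Rough RAM needed with no GPU offloading: model file plus fixed overhead."""
    return round(file_size_gb + overhead_gb, 2)


for quant, (size_gb, listed_ram_gb) in TABLE.items():
    print(f"{quant}: estimated {estimate_max_ram_gb(size_gb)} GB, table lists {listed_ram_gb} GB")
```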

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/llama-2-13B-German-Assistant-v2-GGUF and below it, a specific filename to download, such as: llama-2-13b-german-assistant-v2.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install 'huggingface-hub>=0.17.1'

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/llama-2-13B-German-Assistant-v2-GGUF llama-2-13b-german-assistant-v2.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/llama-2-13B-German-Assistant-v2-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).
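As a rough illustration of which files a pattern such as `--include='*Q4_K*gguf'` selects, here is a small sketch using shell-style globbing from Python's standard library. It assumes the CLI matches patterns fnmatch-style and case-sensitively; the file names are the ones listed in the table above, and `matching_files` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# File names as listed in the table above
QUANTS = ["Q2_K", "Q3_K_S", "Q3_K_M", "Q3_K_L", "Q4_0", "Q4_K_S",
          "Q4_K_M", "Q5_0", "Q5_K_S", "Q5_K_M", "Q6_K", "Q8_0"]
FILES = [f"llama-2-13b-german-assistant-v2.{q}.gguf" for q in QUANTS]


def matching_files(pattern, files=FILES):
    """Return the files a shell-style glob would select (case-sensitive)."""
    return [name for name in files if fnmatchcase(name, pattern)]


print(matching_files("*Q4_K*gguf"))
```

Because matching is case-sensitive, a lowercase pattern such as `*q4_K_M*` selects nothing here. From Python, `huggingface_hub.snapshot_download` accepts the same kind of pattern through its `allow_patterns` argument.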

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/llama-2-13B-German-Assistant-v2-GGUF llama-2-13b-german-assistant-v2.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HF_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m llama-2-13b-german-assistant-v2.Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<|prompter|>{prompt}<|endoftext|><|assistant|>"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended-sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install 'ctransformers>=0.2.24'

# Or with CUDA GPU acceleration

pip install 'ctransformers[cuda]>=0.2.24'

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/llama-2-13B-German-Assistant-v2-GGUF", model_file="llama-2-13b-german-assistant-v2.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: flozi00's Llama 2 13B German Assistant v2

## This project is sponsored by [primeline-solutions](https://www.primeline-solutions.com/de/server/nach-einsatzzweck/gpu-rendering-hpc/)

Please use V3 of this model instead.

# Model Card

This model is a fine-tuned version for German instructions and conversations, using the Open Assistant prompt tokens `<|prompter|>`, `<|endoftext|>` and `<|assistant|>`.

The dataset used is deduplicated and cleaned, and contains no code. The focus is on instruction following and conversational tasks.

The model architecture is based on Llama 2 with 13B parameters, and it was trained on hardware powered by 100% renewable energy.

This work is a contribution from the private research of [flozi00](https://huggingface.co/flozi00).
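A minimal Python sketch of building prompts with these tokens. The single-turn form matches the `-p` template in the llama.cpp command earlier in this card. The multi-turn layout (each finished turn closed with `<|endoftext|>`) is an assumption based on the usual Open Assistant convention, and `build_oa_prompt` is a hypothetical helper name.

```python
def build_oa_prompt(user_msg, history=()):
    """Build an Open Assistant-style prompt.

    history: (user, assistant) pairs from earlier turns, oldest first.
    Assumption: each finished turn is terminated by <|endoftext|>.
    """
    parts = []
    for user, assistant in history:
        parts.append(f"<|prompter|>{user}<|endoftext|><|assistant|>{assistant}<|endoftext|>")
    # The final turn leaves the assistant slot open for the model to fill.
    parts.append(f"<|prompter|>{user_msg}<|endoftext|><|assistant|>")
    return "".join(parts)


print(build_oa_prompt("Was ist die Hauptstadt von Deutschland?"))
```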

<!-- original-model-card end -->

|

krevas/LDCC-Instruct-Llama-2-ko-13B-v4.2.6 | krevas | 2023-10-29T22:05:20Z | 1,308 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-29T21:58:44Z | ---

license: cc-by-nc-4.0

---

|

maywell/ko_ocgn_ep0-4 | maywell | 2023-11-12T02:41:03Z | 1,308 | 0 | transformers | [

"transformers",

"pytorch",

"mistral",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-11T23:25:49Z | ---

license: cc-by-nc-4.0

---

|

oopsung/llama2-7b-n-test-v1 | oopsung | 2023-11-29T11:11:54Z | 1,308 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-29T11:04:52Z | Entry not found |

MarkrAI/DopeorNope-maestro-v3-DPO-13b | MarkrAI | 2023-11-30T08:10:47Z | 1,308 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-30T06:32:30Z | Entry not found |

zomd/AISquare-Instruct-llama2-koen-13b-v0.9.17 | zomd | 2023-12-06T07:15:30Z | 1,308 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-06T07:05:52Z | Entry not found |

GAI-LLM/llama-2-koen-13b-mixed-v11_2 | GAI-LLM | 2023-12-11T01:17:18Z | 1,308 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-11T00:40:37Z | ---

license: cc-by-nc-4.0

---

|

swap-uniba/LLaMAntino-2-chat-13b-hf-UltraChat-ITA | swap-uniba | 2024-01-08T10:07:51Z | 1,308 | 16 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"text-generation-inference",

"it",

"arxiv:2312.09993",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-12-16T18:23:27Z | ---

license: llama2

language:

- it

tags:

- text-generation-inference

---

<img src="https://i.ibb.co/6mHSRm3/llamantino53.jpg" alt="llamantino53" border="0" width="200px">

# Model Card for LLaMAntino-2-chat-13b-UltraChat-ITA

*Last Update: 08/01/2024*<br>

*Example of Use*: [Colab Notebook](https://colab.research.google.com/drive/1xUite70ANLQp8NwQE93jlI3epj_cpua7?usp=sharing)

<hr>

## Model description

<!-- Provide a quick summary of what the model is/does. -->

**LLaMAntino-2-chat-13b-UltraChat** is a *Large Language Model (LLM)* that is an instruction-tuned version of **LLaMAntino-2-chat-13b** (an Italian-adapted **LLaMA 2 chat**).

This model aims to provide Italian NLP researchers with an improved model for Italian dialogue use cases.

The model was trained with *QLoRA*, using as training data [UltraChat](https://github.com/thunlp/ultrachat) translated into Italian with [Argos Translate](https://pypi.org/project/argostranslate/1.4.0/).

If you are interested in more details regarding the training procedure, you can find the code we used at the following link:

- **Repository:** https://github.com/swapUniba/LLaMAntino

**NOTICE**: the code has not been released yet. We apologize for the delay; it will be available as soon as possible!

- **Developed by:** Pierpaolo Basile, Elio Musacchio, Marco Polignano, Lucia Siciliani, Giuseppe Fiameni, Giovanni Semeraro

- **Funded by:** PNRR project FAIR - Future AI Research

- **Compute infrastructure:** [Leonardo](https://www.hpc.cineca.it/systems/hardware/leonardo/) supercomputer

- **Model type:** LLaMA-2-chat

- **Language(s) (NLP):** Italian

- **License:** Llama 2 Community License

- **Finetuned from model:** [swap-uniba/LLaMAntino-2-chat-13b-hf-ITA](https://huggingface.co/swap-uniba/LLaMAntino-2-chat-13b-hf-ITA)

## Prompt Format

The following prompt format, based on the [LLaMA 2 prompt template](https://gpus.llm-utils.org/llama-2-prompt-template/) and adapted to Italian, was used:

```python

" [INST]<<SYS>>\n" \

"Sei un assistente disponibile, rispettoso e onesto. " \

"Rispondi sempre nel modo piu' utile possibile, pur essendo sicuro. " \

"Le risposte non devono includere contenuti dannosi, non etici, razzisti, sessisti, tossici, pericolosi o illegali. " \

"Assicurati che le tue risposte siano socialmente imparziali e positive. " \

"Se una domanda non ha senso o non e' coerente con i fatti, spiegane il motivo invece di rispondere in modo non corretto. " \

"Se non conosci la risposta a una domanda, non condividere informazioni false.\n" \

"<</SYS>>\n\n" \

f"{user_msg_1}[/INST] {model_answer_1} </s> <s> [INST]{user_msg_2}[/INST] {model_answer_2} </s> ... <s> [INST]{user_msg_N}[/INST] {model_answer_N} </s>"

```

We recommend using the same prompt in inference to obtain the best results!
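To assemble a multi-turn prompt in this format programmatically, a sketch like the following can help. It reproduces the template string verbatim, including the literal `</s> <s>` separators, and ends with `[/INST]` so the model writes the next answer. `build_llamantino_prompt` and `SYSTEM_PROMPT` are hypothetical names introduced here.

```python
SYSTEM_PROMPT = (
    "Sei un assistente disponibile, rispettoso e onesto. "
    "Rispondi sempre nel modo piu' utile possibile, pur essendo sicuro. "
    "Le risposte non devono includere contenuti dannosi, non etici, razzisti, sessisti, tossici, pericolosi o illegali. "
    "Assicurati che le tue risposte siano socialmente imparziali e positive. "
    "Se una domanda non ha senso o non e' coerente con i fatti, spiegane il motivo invece di rispondere in modo non corretto. "
    "Se non conosci la risposta a una domanda, non condividere informazioni false."
)


def build_llamantino_prompt(user_msg, history=(), system=SYSTEM_PROMPT):
    """history: (user, answer) pairs from earlier turns, oldest first."""
    prompt = " [INST]<<SYS>>\n" + system + "\n<</SYS>>\n\n"
    for user, answer in history:
        # Completed turns follow the "{user}[/INST] {answer} </s> <s> [INST]" pattern
        prompt += f"{user}[/INST] {answer} </s> <s> [INST]"
    # The newest user message is left open for the model to answer
    return prompt + f"{user_msg}[/INST]"


print(build_llamantino_prompt("Grazie!", history=[("Ciao! Come stai?", "Sto bene, grazie.")]))
```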

## How to Get Started with the Model

Below you can find an example of model usage:

```python
import transformers
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "swap-uniba/LLaMAntino-2-chat-13b-hf-UltraChat-ITA"

tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

user_msg = "Ciao! Come stai?"

prompt = " [INST]<<SYS>>\n" \
    "Sei un assistente disponibile, rispettoso e onesto. " \
    "Rispondi sempre nel modo piu' utile possibile, pur essendo sicuro. " \
    "Le risposte non devono includere contenuti dannosi, non etici, razzisti, sessisti, tossici, pericolosi o illegali. " \
    "Assicurati che le tue risposte siano socialmente imparziali e positive. " \
    "Se una domanda non ha senso o non e' coerente con i fatti, spiegane il motivo invece di rispondere in modo non corretto. " \
    "Se non conosci la risposta a una domanda, non condividere informazioni false.\n" \
    "<</SYS>>\n\n" \
    f"{user_msg}[/INST]"

pipe = transformers.pipeline(
    model=model,
    tokenizer=tokenizer,
    return_full_text=False,  # return only the generated answer, not the prompt
    task="text-generation",
    max_new_tokens=512,  # max number of tokens to generate in the output
    do_sample=True,  # sampling must be enabled for temperature to take effect
    temperature=0.8,  # temperature for more or less creative answers
)

# Method 1: text-generation pipeline
sequences = pipe(prompt)
for seq in sequences:
    print(f"{seq['generated_text']}")

# Method 2: call generate() directly and decode only the newly generated tokens
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(model.device)
outputs = model.generate(input_ids=input_ids, max_new_tokens=512)
print(tokenizer.batch_decode(outputs[:, input_ids.shape[1]:], skip_special_tokens=True)[0])
```

If you run into issues when loading the model, you can try loading it **quantized** in 8-bit:

```python

model = AutoModelForCausalLM.from_pretrained(model_id, load_in_8bit=True)

```

*Note*:

1) The model loading strategy above requires the [*bitsandbytes*](https://pypi.org/project/bitsandbytes/) and [*accelerate*](https://pypi.org/project/accelerate/) libraries

2) By default, the tokenizer prepends the BOS token (*\<s\>*) to the prompt. If it does not, add the *\<s\>* string at the start of the prompt yourself.

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

*Coming soon*!

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

If you use this model in your research, please cite the following:

```bibtex

@misc{basile2023llamantino,

title={LLaMAntino: LLaMA 2 Models for Effective Text Generation in Italian Language},

author={Pierpaolo Basile and Elio Musacchio and Marco Polignano and Lucia Siciliani and Giuseppe Fiameni and Giovanni Semeraro},

year={2023},

eprint={2312.09993},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

*Notice:* Llama 2 is licensed under the LLAMA 2 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved. [*License*](https://ai.meta.com/llama/license/)

|

blueapple8259/TinyKo-V3 | blueapple8259 | 2023-12-23T12:02:47Z | 1,308 | 3 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"ko",

"dataset:mc4",

"dataset:Bingsu/ko_alpaca_data",

"dataset:beomi/KoAlpaca-v1.1a",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-23T11:52:56Z | ---

license: cc-by-nc-sa-4.0

datasets:

- mc4

- Bingsu/ko_alpaca_data

- beomi/KoAlpaca-v1.1a

language:

- ko

pipeline_tag: text-generation

---

This model was pretrained on the Korean data files 0 through 29 of [mc4](https://huggingface.co/datasets/mc4) and then LoRA fine-tuned on [Bingsu/ko_alpaca_data](https://huggingface.co/datasets/Bingsu/ko_alpaca_data) and [beomi/KoAlpaca-v1.1a](https://huggingface.co/datasets/beomi/KoAlpaca-v1.1a).

Because the datasets were not masked or cleaned, the model may output sensitive information, so please use it with caution.
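The card does not state the LoRA rank or scaling used. As a general illustration only, this sketch shows how a LoRA update folds into a frozen base weight using the common `W + (alpha / r) * B @ A` scaling (the convention used by PEFT's standard LoRA layers). Plain Python lists stand in for tensors, and `lora_merge` is a hypothetical helper.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(W, A, B, alpha, r):
    """Return W + (alpha / r) * B @ A.

    W: d_out x d_in frozen weight, B: d_out x r, A: r x d_in.
    Only A and B are trained; W stays fixed during LoRA fine-tuning.
    """
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


W = [[0.0, 0.0], [0.0, 0.0]]
A = [[1.0, 2.0]]    # rank r = 1
B = [[1.0], [0.0]]
print(lora_merge(W, A, B, alpha=2, r=1))
```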

|

Walmart-the-bag/Llama-3-LizardCoder-8B-GGUF | Walmart-the-bag | 2024-05-19T13:04:21Z | 1,308 | 0 | transformers | [

"transformers",

"gguf",

"code",

"lizardcoder",

"llama3",

"llama",

"merge",

"en",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-05-18T16:17:55Z | ---

license: llama3

language:

- en

library_name: transformers

tags:

- code

- lizardcoder

- llama3

- llama

- merge

---

# GGUF!

This is the gguf version of [Walmart-the-bag/Llama-3-LizardCoder-8B](https://huggingface.co/Walmart-the-bag/Llama-3-LizardCoder-8B). It contains every quant available.

# Model Card

# Llama-3-LizardCoder-8B

This is a merge of six models fine-tuned from Llama 3 8B. It performs reasonably well on some coding tasks for its parameter size.

[gguf](https://huggingface.co/Walmart-the-bag/LizardCoder-Llama3-8B-GGUF)

## Limitations

- **Uncertain Accuracy:** As a merged model, the model's responses may not always be accurate. Users should independently verify any outputs before relying on them.

- **Potential for Censorship:** The model's censorship filters are not comprehensive. There is a possibility of encountering censored code/content.

- **Missing packages:** When asked to write code, the model may forget to include a required package. Ask for it explicitly and write a clear prompt. A future fine-tune is planned to fix this.

# Merge Config

This model was produced with the following merge YAML configuration.

```yaml
models:
  - model: rombodawg/Llama-3-8B-Instruct-Coder
    parameters:
      weight: 1.0
  - model: ajibawa-2023/Code-Llama-3-8B
    parameters:
      weight: 0.3
  - model: meta-llama/Meta-Llama-3-8B-Instruct
    parameters:
      weight: 0.5
  - model: Orenguteng/Llama-3-8B-Lexi-Uncensored
    parameters:
      weight: 0.8
  - model: TheSkullery/llama-3-cat-8b-instruct-v1
    parameters:
      weight: 0.9
  - model: McGill-NLP/Llama-3-8B-Web
    parameters:
      weight: 0.2
merge_method: linear
dtype: bfloat16
```
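With `merge_method: linear`, each parameter of the merged model is a weighted average of the corresponding parameters of the source models. The sketch below shows that arithmetic on toy "state dicts" of plain float lists. Whether the weights are divided by their sum is exposed as a flag here; we believe mergekit normalizes by default for `linear`, but check its documentation. `linear_merge` is a hypothetical helper, not mergekit's API.

```python
def linear_merge(state_dicts, weights, normalize=True):
    """Weighted average of same-shaped parameters across models.

    state_dicts: list of {param_name: list_of_floats}, one per model.
    weights: one weight per model, in the same order.
    With normalize=True the weights are divided by their sum.
    """
    total = sum(weights) if normalize else 1.0
    merged = {}
    for name in state_dicts[0]:
        # Group the i-th value of this parameter across all models
        columns = zip(*(sd[name] for sd in state_dicts))
        merged[name] = [sum(w * v for w, v in zip(weights, col)) / total for col in columns]
    return merged


# Weights from the YAML above, in the same model order
CONFIG_WEIGHTS = [1.0, 0.3, 0.5, 0.8, 0.9, 0.2]

toy = [{"w": [float(i), float(i + 1)]} for i in range(len(CONFIG_WEIGHTS))]
print(linear_merge(toy, CONFIG_WEIGHTS))
```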

## License

I don't really care about this, but here: [Llama3](https://llama.meta.com/llama3/license/) |

coffeeee/nsfw-story-generator2 | coffeeee | 2023-05-10T20:56:08Z | 1,307 | 27 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-05-10T20:48:26Z | Entry not found |

hfl/chinese-llama-2-13b-16k | hfl | 2023-12-23T07:25:33Z | 1,307 | 12 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"zh",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-25T01:12:06Z | ---

license: apache-2.0

language:

- zh

- en

---

# Chinese-LLaMA-2-13B-16K

**This is the full Chinese-LLaMA-2-13B-16K model (context size 16K), which can be loaded directly for inference and full-parameter training.**

**Related models👇**

* Long context base models (16K)

* [Chinese-LLaMA-2-7B-16K (full model)](https://huggingface.co/hfl/chinese-llama-2-7b-16k)

* [Chinese-LLaMA-2-LoRA-7B-16K (LoRA model)](https://huggingface.co/hfl/chinese-llama-2-lora-7b-16k)

* [Chinese-LLaMA-2-13B-16K (full model)](https://huggingface.co/hfl/chinese-llama-2-13b-16k)

* [Chinese-LLaMA-2-LoRA-13B-16K (LoRA model)](https://huggingface.co/hfl/chinese-llama-2-lora-13b-16k)

* Long context Instruction/Chat models

* [Chinese-Alpaca-2-7B-16K (full model)](https://huggingface.co/hfl/chinese-alpaca-2-7b-16k)

* [Chinese-Alpaca-2-LoRA-7B-16K (LoRA model)](https://huggingface.co/hfl/chinese-alpaca-2-lora-7b-16k)

* [Chinese-Alpaca-2-13B-16K (full model)](https://huggingface.co/hfl/chinese-alpaca-2-13b-16k)

* [Chinese-Alpaca-2-LoRA-13B-16K (LoRA model)](https://huggingface.co/hfl/chinese-alpaca-2-lora-13b-16k)

* Base models

* [Chinese-LLaMA-2-7B (full model)](https://huggingface.co/hfl/chinese-llama-2-7b)

* [Chinese-LLaMA-2-LoRA-7B (LoRA model)](https://huggingface.co/hfl/chinese-llama-2-lora-7b)

* [Chinese-LLaMA-2-13B (full model)](https://huggingface.co/hfl/chinese-llama-2-13b)

* [Chinese-LLaMA-2-LoRA-13B (LoRA model)](https://huggingface.co/hfl/chinese-llama-2-lora-13b)

* Instruction/Chat models

* [Chinese-Alpaca-2-7B (full model)](https://huggingface.co/hfl/chinese-alpaca-2-7b)

* [Chinese-Alpaca-2-LoRA-7B (LoRA model)](https://huggingface.co/hfl/chinese-alpaca-2-lora-7b)

* [Chinese-Alpaca-2-13B (full model)](https://huggingface.co/hfl/chinese-alpaca-2-13b)

* [Chinese-Alpaca-2-LoRA-13B (LoRA model)](https://huggingface.co/hfl/chinese-alpaca-2-lora-13b)

# Description of Chinese-LLaMA-Alpaca-2

This project is based on Llama-2, released by Meta, and is the second generation of the Chinese LLaMA & Alpaca LLM project. We open-source Chinese LLaMA-2 (foundation model) and Alpaca-2 (instruction-following model). These models extend the original Llama-2 vocabulary with Chinese tokens and are optimized for Chinese. We used large-scale Chinese data for incremental pre-training, which further improved the models' fundamental semantic understanding of Chinese and yields a significant performance improvement over the first-generation models. These models support a 4K context, which can be extended to 18K+ using the NTK method.
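As a hedged illustration of the NTK method mentioned above: NTK-aware RoPE scaling extends context by enlarging the rotary base rather than interpolating positions, commonly as `base * alpha ** (d / (d - 2))` for head dimension `d`. The highest-frequency component stays unchanged while low frequencies are stretched. The card does not say which `alpha` reaches 18K+, so the value below is illustrative, and both helpers are hypothetical names.

```python
def ntk_scaled_rope_base(base: float, alpha: float, head_dim: int) -> float:
    """NTK-aware RoPE scaling: enlarge the rotary base by alpha^(d / (d - 2))."""
    return base * alpha ** (head_dim / (head_dim - 2))


def rope_inv_freqs(base: float, head_dim: int) -> list:
    """Standard RoPE inverse frequencies base^(-2i/d) for i = 0 .. d/2 - 1."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


# Llama-2-13B: head_dim = 5120 hidden size / 40 heads = 128, default base 10000
new_base = ntk_scaled_rope_base(10000.0, alpha=4.0, head_dim=128)
print(round(new_base))
```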

The main contents of this project include:

* 🚀 New extended Chinese vocabulary beyond Llama-2, open-sourcing the Chinese LLaMA-2 and Alpaca-2 LLMs.

* 🚀 Open-sourced the pre-training and instruction finetuning (SFT) scripts for further tuning on user's data

* 🚀 Quickly deploy and experience the quantized LLMs on CPU/GPU of personal PC

* 🚀 Support for LLaMA ecosystems like 🤗transformers, llama.cpp, text-generation-webui, LangChain, vLLM etc.

Please refer to [https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/](https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/) for details. |

mncai/Pr_Llama2_7B-Sh5K_Wi5K_Ne5K_Ct5K-Lr05_Ep4 | mncai | 2023-11-10T03:08:46Z | 1,307 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-10T02:52:33Z | Entry not found |

mncai/llama2-7b-dpo-v1 | mncai | 2023-12-02T10:33:18Z | 1,307 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-02T10:25:47Z | Entry not found |

jingyeom/mistral_ko_all_inst | jingyeom | 2023-12-18T11:21:19Z | 1,307 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-18T11:14:39Z | Entry not found |

kg-09/diffusers_yamer_dream_trubo_v8 | kg-09 | 2024-02-19T19:15:19Z | 1,307 | 0 | diffusers | [

"diffusers",

"safetensors",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-02-19T19:07:29Z | Entry not found |

chujiezheng/Starling-LM-7B-beta-ExPO | chujiezheng | 2024-05-27T18:15:24Z | 1,307 | 1 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"en",

"arxiv:2404.16792",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-26T08:46:02Z | ---

license: apache-2.0

language:

- en

---

# Starling-LM-7B-beta-ExPO

The extrapolated (ExPO) model based on [`Nexusflow/Starling-LM-7B-beta`](https://huggingface.co/Nexusflow/Starling-LM-7B-beta) and [`openchat/openchat-3.5-0106`](https://huggingface.co/openchat/openchat-3.5-0106), as in the "[Weak-to-Strong Extrapolation Expedites Alignment](https://arxiv.org/abs/2404.16792)" paper.

Specifically, we obtain this model by extrapolating **(alpha = 0.5)** from the weights of the SFT and DPO/RLHF checkpoints, achieving superior alignment with human preference.
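The extrapolation step can be sketched as `theta_expo = theta_aligned + alpha * (theta_aligned - theta_sft)`, applied parameter by parameter, where here the SFT checkpoint is `openchat/openchat-3.5-0106` and the aligned one is `Nexusflow/Starling-LM-7B-beta`. Real checkpoints hold tensors (e.g. PyTorch state dicts); plain float lists keep this sketch self-contained, and `expo_extrapolate` is a hypothetical helper.

```python
def expo_extrapolate(sft_weights, aligned_weights, alpha=0.5):
    """theta_expo = theta_aligned + alpha * (theta_aligned - theta_sft).

    Both arguments map parameter names to equal-length lists of floats and
    must come from checkpoints of the same architecture.
    """
    merged = {}
    for name, aligned in aligned_weights.items():
        sft = sft_weights[name]
        # Move further along the SFT -> aligned direction by a factor alpha
        merged[name] = [a + alpha * (a - s) for a, s in zip(aligned, sft)]
    return merged


print(expo_extrapolate({"w": [1.0, 0.0]}, {"w": [2.0, 1.0]}, alpha=0.5))
```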

## Evaluation Results

Evaluation results on the **AlpacaEval 2.0** benchmark (you can find the evaluation outputs on the [official GitHub repo](https://github.com/chujiezheng/LLM-Extrapolation/tree/main/results_alpaca)):

| | Win Rate (Ori) | LC Win Rate (Ori) | Win Rate (+ ExPO) | LC Win Rate (+ ExPO) |
| ------------------------------------ | -------------- | ----------------- | ----------------- | -------------------- |
| `HuggingFaceH4/zephyr-7b-alpha` | 6.7% | 10.0% | **10.6%** | **13.6%** |
| `HuggingFaceH4/zephyr-7b-beta` | 10.2% | 13.2% | **11.1%** | **14.0%** |
| `berkeley-nest/Starling-LM-7B-alpha` | 15.0% | 18.3% | **18.2%** | **19.5%** |
| `Nexusflow/Starling-LM-7B-beta` | 26.6% | 25.8% | **29.6%** | **26.4%** |
| `snorkelai/Snorkel-Mistral-PairRM` | 24.7% | 24.0% | **28.8%** | **26.4%** |
| `RLHFlow/LLaMA3-iterative-DPO-final` | 29.2% | 36.0% | **32.7%** | **37.8%** |
| `internlm/internlm2-chat-1.8b` | 3.8% | 4.0% | **5.2%** | **4.3%** |
| `internlm/internlm2-chat-7b` | 20.5% | 18.3% | **28.1%** | **22.7%** |
| `internlm/internlm2-chat-20b` | 36.1% | 24.9% | **46.2%** | **27.2%** |
| `allenai/tulu-2-dpo-7b` | 8.5% | 10.2% | **11.5%** | **11.7%** |
| `allenai/tulu-2-dpo-13b` | 11.2% | 15.5% | **15.6%** | **17.6%** |
| `allenai/tulu-2-dpo-70b` | 15.4% | 21.2% | **23.0%** | **25.7%** |

Evaluation results on the **MT-Bench** benchmark (you can find the evaluation outputs on the [official GitHub repo](https://github.com/chujiezheng/LLM-Extrapolation/tree/main/results_mtbench)):

| | Original | + ExPO |
| ------------------------------------ | -------- | -------- |
| `HuggingFaceH4/zephyr-7b-alpha` | 6.85 | **6.87** |
| `HuggingFaceH4/zephyr-7b-beta` | 7.02 | **7.06** |
| `berkeley-nest/Starling-LM-7B-alpha` | 7.82 | **7.91** |
| `Nexusflow/Starling-LM-7B-beta` | 8.10 | **8.18** |
| `snorkelai/Snorkel-Mistral-PairRM` | 7.63 | **7.69** |
| `RLHFlow/LLaMA3-iterative-DPO-final` | 8.08 | **8.45** |
| `internlm/internlm2-chat-1.8b` | 5.17 | **5.26** |
| `internlm/internlm2-chat-7b` | 7.72 | **7.80** |
| `internlm/internlm2-chat-20b` | 8.13 | **8.26** |
| `allenai/tulu-2-dpo-7b` | 6.35 | **6.38** |
| `allenai/tulu-2-dpo-13b` | 7.00 | **7.26** |
| `allenai/tulu-2-dpo-70b` | 7.79 | **8.03** |

|

duyntnet/openchat-3.6-8b-20240522-imatrix-GGUF | duyntnet | 2024-05-26T15:59:20Z | 1,307 | 1 | transformers | [

"transformers",

"gguf",

"imatrix",

"openchat-3.6-8b-20240522",

"text-generation",

"en",

"license:other",

"region:us"

] | text-generation | 2024-05-26T13:19:56Z | ---

license: other

language:

- en

pipeline_tag: text-generation

inference: false

tags:

- transformers

- gguf

- imatrix

- openchat-3.6-8b-20240522

---

Quantizations of https://huggingface.co/openchat/openchat-3.6-8b-20240522

# From original readme

### Conversation templates

💡 **Default Mode**: Best for coding, chat and general tasks

```

GPT4 Correct User: Hello<|end_of_turn|>GPT4 Correct Assistant: Hi<|end_of_turn|>GPT4 Correct User: How are you today?<|end_of_turn|>GPT4 Correct Assistant:

```

⚠️ **Notice:** Remember to set `<|end_of_turn|>` as the end-of-generation token.
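For environments without the tokenizer, a minimal sketch that renders the Default Mode template above by hand. It reproduces only the plain-text layout shown in the example; the integrated `tokenizer.chat_template` may also insert special tokens (for example a BOS token), so prefer it when available. `build_openchat_prompt` is a hypothetical helper.

```python
ROLE_PREFIX = {"user": "GPT4 Correct User", "assistant": "GPT4 Correct Assistant"}
END_OF_TURN = "<|end_of_turn|>"


def build_openchat_prompt(messages):
    """Render messages in the Default Mode template and leave the
    assistant turn open for generation."""
    turns = [f"{ROLE_PREFIX[m['role']]}: {m['content']}{END_OF_TURN}" for m in messages]
    return "".join(turns) + "GPT4 Correct Assistant:"


print(build_openchat_prompt([
    {"role": "user", "content": "Hello"},
    {"role": "assistant", "content": "Hi"},
    {"role": "user", "content": "How are you today?"},
]))
```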

The default template is also available as the integrated `tokenizer.chat_template`, which can be used instead of manually specifying the template:

```python

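from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("openchat/openchat-3.6-8b-20240522")

messages = [
    {"role": "user", "content": "Hello"},
    {"role": "assistant", "content": "Hi"},
    {"role": "user", "content": "How are you today?"}
]

tokens = tokenizer.apply_chat_template(messages, add_generation_prompt=True)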

```

### Inference using Transformers

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "openchat/openchat-3.6-8b-20240522"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16, device_map="auto")

messages = [

{"role": "user", "content": "Explain how large language models work in detail."},

]

input_ids = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt").to(model.device)

outputs = model.generate(input_ids,

do_sample=True,

temperature=0.5,

max_new_tokens=1024

)

response = outputs[0][input_ids.shape[-1]:]

print(tokenizer.decode(response, skip_special_tokens=True))

``` |

ravindrakinagi/phi3_mini | ravindrakinagi | 2024-06-20T09:16:45Z | 1,307 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null | 2024-06-20T08:53:38Z | Entry not found |

maywell/synatra_V0.01 | maywell | 2023-10-08T09:21:04Z | 1,306 | 1 | transformers | [

"transformers",

"pytorch",

"mistral",

"text-generation",

"conversational",

"ko",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-07T12:47:34Z | ---

license: cc-by-nc-4.0

language:

- ko

library_name: transformers

---

This is a test model.

### Usage notes

When entering a prompt, you must follow the format `[INST] prompt message [/INST]`. This is how the original model works. |

kyujinpy/Kosy-platypus2-13B-v3 | kyujinpy | 2023-11-02T01:52:46Z | 1,306 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"ko",

"dataset:kyujinpy/KOpen-platypus",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-27T09:32:42Z | ---

language:

- ko

datasets:

- kyujinpy/KOpen-platypus

library_name: transformers

pipeline_tag: text-generation

license: cc-by-nc-sa-4.0

---

# **Kosy🍵llama**

## Model Details

**Model Developers** Kyujin Han (kyujinpy)

**Model Description**

A new version of Ko-platypus2, trained with the [NEFTune](https://github.com/neelsjain/NEFTune) method!

(Noisy + KO + llama = Kosy🍵llama)
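NEFTune adds uniform random noise to the token embeddings during fine-tuning only. The sketch below follows the formulation from the NEFTune paper rather than the KoNEFTune repository's code: each embedding entry receives noise ε ~ Uniform(-1, 1) scaled by α/√(L·d), where L is the sequence length and d the embedding dimension. The helper name `neftune_noise` and the default α = 5 are illustrative assumptions, and plain Python lists stand in for tensors so the example runs without PyTorch.

```python
import math
import random
from typing import List, Optional

Matrix = List[List[float]]  # (seq_len x dim) embeddings for one sequence


def neftune_noise(embeddings: Matrix, alpha: float = 5.0,
                  rng: Optional[random.Random] = None) -> Matrix:
    """Return a NEFTune-style noisy copy of one sequence's embeddings.

    Hypothetical helper: each entry gets eps ~ Uniform(-1, 1) scaled by
    alpha / sqrt(L * d). Intended for training only; at inference the
    embeddings are used unchanged.
    """
    rng = rng or random.Random()
    seq_len = len(embeddings)
    dim = len(embeddings[0]) if seq_len else 0
    if seq_len == 0 or dim == 0:
        return [list(row) for row in embeddings]
    scale = alpha / math.sqrt(seq_len * dim)
    return [[x + scale * rng.uniform(-1.0, 1.0) for x in row] for row in embeddings]


# Usage: 4 tokens with 8-dimensional (all-zero) embeddings
emb = [[0.0] * 8 for _ in range(4)]
noisy = neftune_noise(emb, alpha=5.0, rng=random.Random(0))
bound = 5.0 / math.sqrt(4 * 8)  # largest possible per-entry perturbation
print(max(abs(v) for row in noisy for v in row) <= bound)
```

Because the scale shrinks as α/√(L·d), longer sequences and wider embeddings receive smaller per-entry noise, which keeps the overall noise magnitude roughly constant.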

**Repo Link**

Github **KoNEFTune**: [Kosy🍵llama](https://github.com/Marker-Inc-Korea/KoNEFTune)

Visit our GitHub repository to easily apply **Random_noisy_embedding_fine-tuning** yourself!

**Base Model**

[hyunseoki/ko-en-llama2-13b](https://huggingface.co/hyunseoki/ko-en-llama2-13b)

**Training Dataset**

Version of combined dataset: [kyujinpy/KOpen-platypus](https://huggingface.co/datasets/kyujinpy/KOpen-platypus)

Training was done on a 40GB A100 GPU in Colab.

# **Model comparisons**

[KO-LLM leaderboard](https://huggingface.co/spaces/upstage/open-ko-llm-leaderboard)

# **NEFT comparisons**

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
| --- | --- | --- | --- | --- | --- | --- |
| [Ko-Platypus2-13B](https://huggingface.co/kyujinpy/KO-Platypus2-13B) | 45.60 | 44.20 | 54.31 | 42.47 | 44.41 | 42.62 |
| *NEFT(🍵kosy)+MLP-v1 | 43.64 | 43.94 | 53.88 | 42.68 | 43.46 | 34.24 |
| *NEFT(🍵kosy)+MLP-v2 | 45.45 | 44.20 | 54.56 | 42.60 | 42.68 | 42.98 |
| [***NEFT(🍵kosy)+MLP-v3**](https://huggingface.co/kyujinpy/Kosy-platypus2-13B-v3) | 46.31 | 43.34 | 54.54 | 43.38 | 44.11 | 46.16 |
| NEFT(🍵kosy)+Attention | 44.92 | 42.92 | 54.48 | 42.99 | 43.00 | 41.20 |
| NEFT(🍵kosy) | 45.08 | 43.09 | 53.61 | 41.06 | 43.47 | 43.21 |

> *These variants differ in hyperparameters such as learning_rate, batch_size, and epoch.

# Implementation Code

```python
### KO-Platypus
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "kyujinpy/Koisy-Platypus2-13B"

# Load the weights in float16; device_map='auto' lets Accelerate place them on the available device(s)
model = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map='auto'
)
tokenizer = AutoTokenizer.from_pretrained(repo)
```

--- |

kyujinpy/Kosy-platypus2-13B-v4 | kyujinpy | 2023-11-02T01:52:54Z | 1,306 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"ko",

"dataset:kyujinpy/KOpen-platypus",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-28T17:25:07Z | ---

language:

- ko

datasets:

- kyujinpy/KOpen-platypus

library_name: transformers

pipeline_tag: text-generation

license: cc-by-nc-sa-4.0

---

# **Kosy🍵llama**

## Model Details

**Model Developers** Kyujin Han (kyujinpy)

**Model Description**

A new version of Ko-platypus2, trained with the [NEFTune](https://github.com/neelsjain/NEFTune) method!

(Noisy + KO + llama = Kosy🍵llama)

**Repo Link**

Github **KoNEFTune**: [Kosy🍵llama](https://github.com/Marker-Inc-Korea/KoNEFTune)

Visit our GitHub repository to easily apply **Random_noisy_embedding_fine-tuning** yourself!

**Base Model**

[hyunseoki/ko-en-llama2-13b](https://huggingface.co/hyunseoki/ko-en-llama2-13b)

**Training Dataset**

Version of combined dataset: [kyujinpy/KOpen-platypus](https://huggingface.co/datasets/kyujinpy/KOpen-platypus)

Training was done on a 40GB A100 GPU in Colab.

# **Model comparisons**

[KO-LLM leaderboard](https://huggingface.co/spaces/upstage/open-ko-llm-leaderboard)

# **NEFT comparisons**

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
| --- | --- | --- | --- | --- | --- | --- |
| [Ko-Platypus2-13B](https://huggingface.co/kyujinpy/KO-Platypus2-13B) | 45.60 | 44.20 | 54.31 | 42.47 | 44.41 | 42.62 |
| *NEFT(🍵kosy)+MLP-v1 | 43.64 | 43.94 | 53.88 | 42.68 | 43.46 | 34.24 |
| *NEFT(🍵kosy)+MLP-v2 | 45.45 | 44.20 | 54.56 | 42.60 | 42.68 | 42.98 |
| [***NEFT(🍵kosy)+MLP-v3**](https://huggingface.co/kyujinpy/Kosy-platypus2-13B-v3) | 46.31 | 43.34 | 54.54 | 43.38 | 44.11 | 46.16 |
| NEFT(🍵kosy)+Attention | 44.92 | 42.92 | 54.48 | 42.99 | 43.00 | 41.20 |
| NEFT(🍵kosy) | 45.08 | 43.09 | 53.61 | 41.06 | 43.47 | 43.21 |

> *These variants differ in hyperparameters such as learning_rate, batch_size, and epoch.
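The Average column of the comparison table is assumed here to be the unweighted mean of the five Ko-LLM leaderboard task scores. A minimal sketch of that computation follows; the helper name `leaderboard_average` is hypothetical, and the sample scores are copied from the NEFT(🍵kosy)+MLP-v3 row of the table.

```python
from statistics import mean
from typing import Dict

# The five task columns of the table, in order
TASKS = ("Ko-ARC", "Ko-HellaSwag", "Ko-MMLU", "Ko-TruthfulQA", "Ko-CommonGen V2")


def leaderboard_average(scores: Dict[str, float]) -> float:
    """Unweighted mean over the five tasks (assumed definition of 'Average')."""
    missing = [t for t in TASKS if t not in scores]
    if missing:
        raise KeyError(f"missing task scores: {missing}")
    return mean(scores[t] for t in TASKS)


# Task scores from the NEFT(🍵kosy)+MLP-v3 row
mlp_v3 = {"Ko-ARC": 43.34, "Ko-HellaSwag": 54.54, "Ko-MMLU": 43.38,
          "Ko-TruthfulQA": 44.11, "Ko-CommonGen V2": 46.16}
print(round(leaderboard_average(mlp_v3), 2))  # 46.31
```

Raising on a missing task, rather than averaging whatever is present, keeps a partially filled row from silently producing a misleading average.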

# Implementation Code

```python
### KO-Platypus
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "kyujinpy/Koisy-Platypus2-13B"

# Load the weights in float16; device_map='auto' lets Accelerate place them on the available device(s)
model = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map='auto'
)
tokenizer = AutoTokenizer.from_pretrained(repo)
```

--- |

wons/llama2-13b-test-v0.1 | wons | 2023-11-22T07:05:53Z | 1,306 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-22T07:25:26Z | ---

license: cc-by-nc-4.0

---

|

bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF | bartowski | 2024-06-03T15:01:22Z | 1,306 | 1 | null | [

"gguf",

"text-generation",

"en",

"dataset:cognitivecomputations/Dolphin-2.9.2",

"dataset:teknium/OpenHermes-2.5",

"dataset:m-a-p/CodeFeedback-Filtered-Instruction",

"dataset:cognitivecomputations/dolphin-coder",

"dataset:cognitivecomputations/samantha-data",

"dataset:microsoft/orca-math-word-problems-200k",

"dataset:internlm/Agent-FLAN",

"dataset:cognitivecomputations/SystemChat-2.0",

"base_model:unsloth/Phi-3-mini-4k-instruct",

"license:mit",

"region:us"

] | text-generation | 2024-06-03T14:12:35Z | ---

license: mit

language:

- en

base_model:

- unsloth/Phi-3-mini-4k-instruct

datasets:

- cognitivecomputations/Dolphin-2.9.2

- teknium/OpenHermes-2.5

- m-a-p/CodeFeedback-Filtered-Instruction

- cognitivecomputations/dolphin-coder

- cognitivecomputations/samantha-data

- microsoft/orca-math-word-problems-200k

- internlm/Agent-FLAN

- cognitivecomputations/SystemChat-2.0

quantized_by: bartowski

pipeline_tag: text-generation

---

## Llamacpp imatrix Quantizations of dolphin-2.9.2-Phi-3-Medium

Using <a href="https://github.com/ggerganov/llama.cpp/">llama.cpp</a> release <a href="https://github.com/ggerganov/llama.cpp/releases/tag/b3070">b3070</a> for quantization.

Original model: https://huggingface.co/cognitivecomputations/dolphin-2.9.2-Phi-3-Medium

All quants were made using the imatrix option with the dataset from [here](https://gist.github.com/bartowski1182/eb213dccb3571f863da82e99418f81e8).

## Prompt format

```
<|im_start|>system
{system_prompt}<|im_end|>
<|im_start|>user
{prompt}<|im_end|>
<|im_start|>assistant
```
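When passing a raw prompt string to llama.cpp or another GGUF runtime, the ChatML template can be filled programmatically. Below is a minimal sketch; the function name `to_chatml` is hypothetical, and the exact whitespace (one newline after each `<|im_end|>` and after the final `assistant` header) is an assumption based on the usual ChatML layout.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages in the ChatML layout (hypothetical helper)."""
    parts = []
    for m in messages:
        # Each turn: <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Leave the assistant turn open so the model writes the reply
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize what GGUF is in one sentence."},
])
print(prompt)
```

Leaving the final `assistant` turn open (no `<|im_end|>`) is what prompts the model to generate the reply; generation is typically stopped when the model emits `<|im_end|>`.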

## Download a file (not the whole branch) from below:

| Filename | Quant type | File Size | Description |

| -------- | ---------- | --------- | ----------- |

| [dolphin-2.9.2-Phi-3-Medium-Q8_0.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q8_0.gguf) | Q8_0 | 14.83GB | Extremely high quality, generally unneeded but max available quant. |

| [dolphin-2.9.2-Phi-3-Medium-Q6_K.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q6_K.gguf) | Q6_K | 11.45GB | Very high quality, near perfect, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-Q5_K_M.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q5_K_M.gguf) | Q5_K_M | 9.88GB | High quality, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-Q5_K_S.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q5_K_S.gguf) | Q5_K_S | 9.62GB | High quality, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-Q4_K_M.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q4_K_M.gguf) | Q4_K_M | 8.40GB | Good quality, uses about 4.83 bits per weight, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-Q4_K_S.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q4_K_S.gguf) | Q4_K_S | 7.95GB | Slightly lower quality with more space savings, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-IQ4_XS.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-IQ4_XS.gguf) | IQ4_XS | 7.50GB | Decent quality, smaller than Q4_K_S with similar performance, *recommended*. |

| [dolphin-2.9.2-Phi-3-Medium-Q3_K_L.gguf](https://huggingface.co/bartowski/dolphin-2.9.2-Phi-3-Medium-GGUF/blob/main/dolphin-2.9.2-Phi-3-Medium-Q3_K_L.gguf) | Q3_K_L | 7.34GB | Lower quality but usable, good for low RAM availability. |